Probabilistic Forecast of Visibility at Gimpo, Incheon, and Jeju International Airports Using Weighted Model Averaging

Abstract

1. Introduction

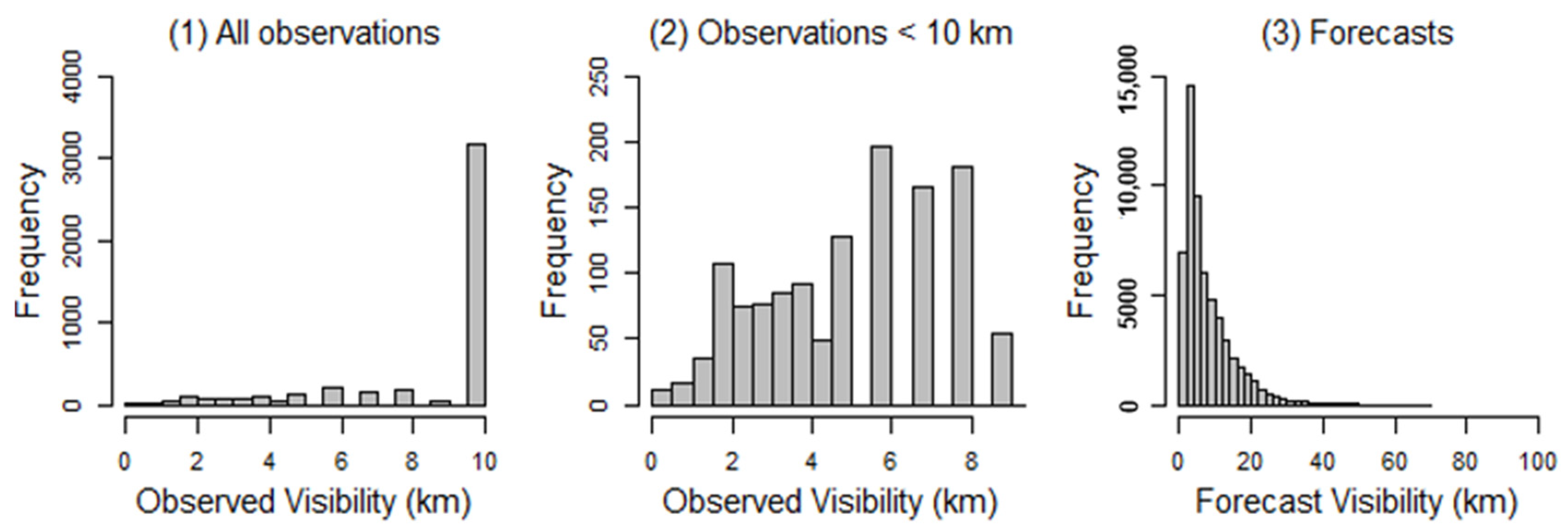

2. Data

3. Materials and Methods

3.1. Weighted Model Averaging

3.2. Scoring Rules

4. Results

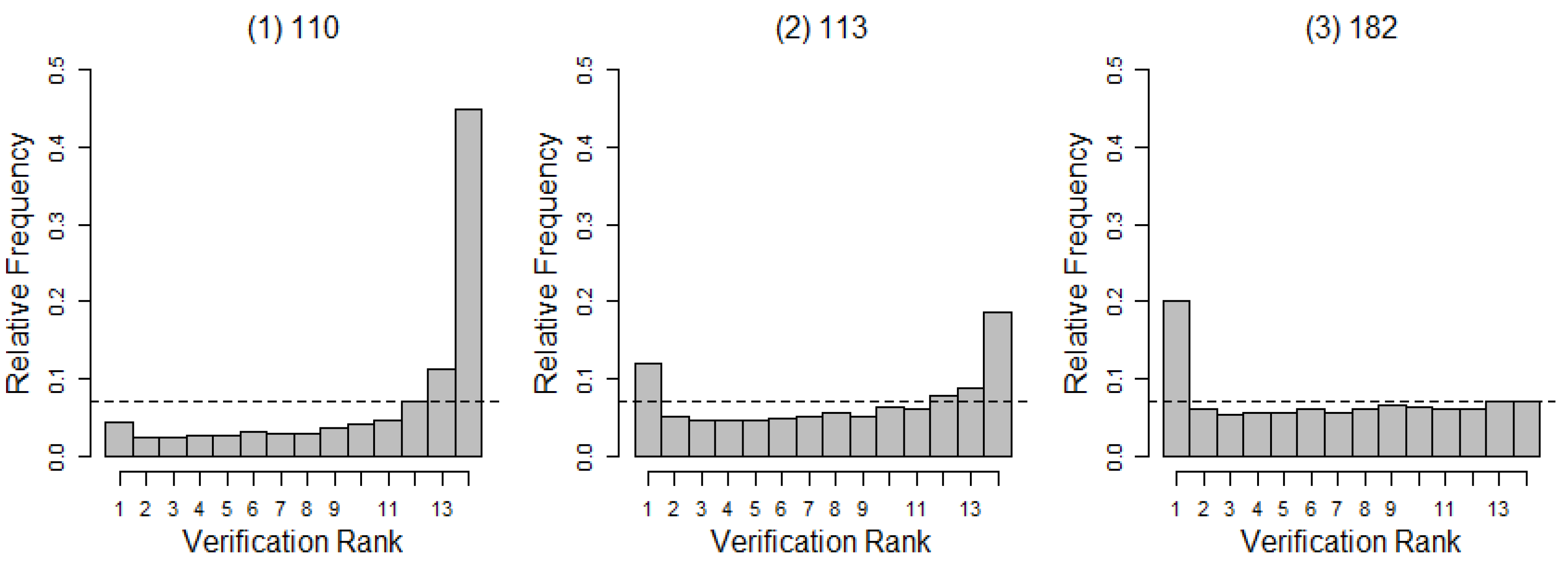

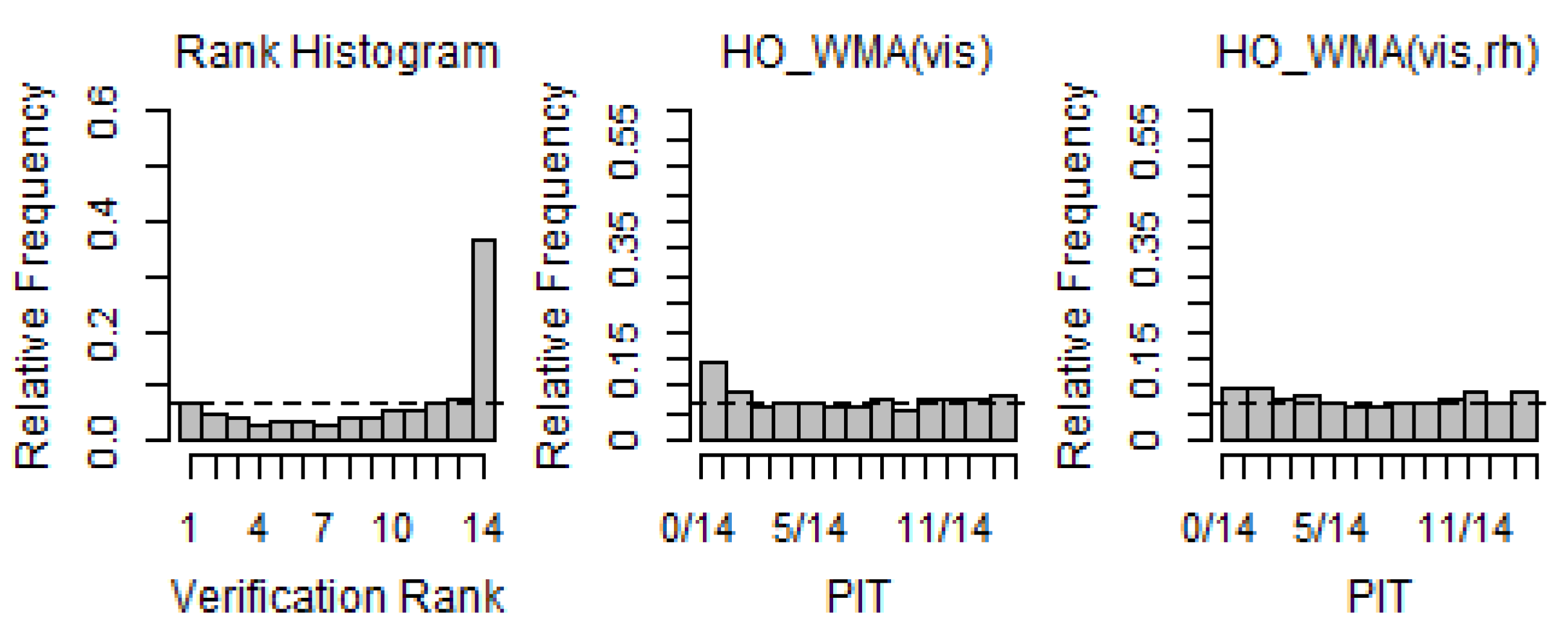

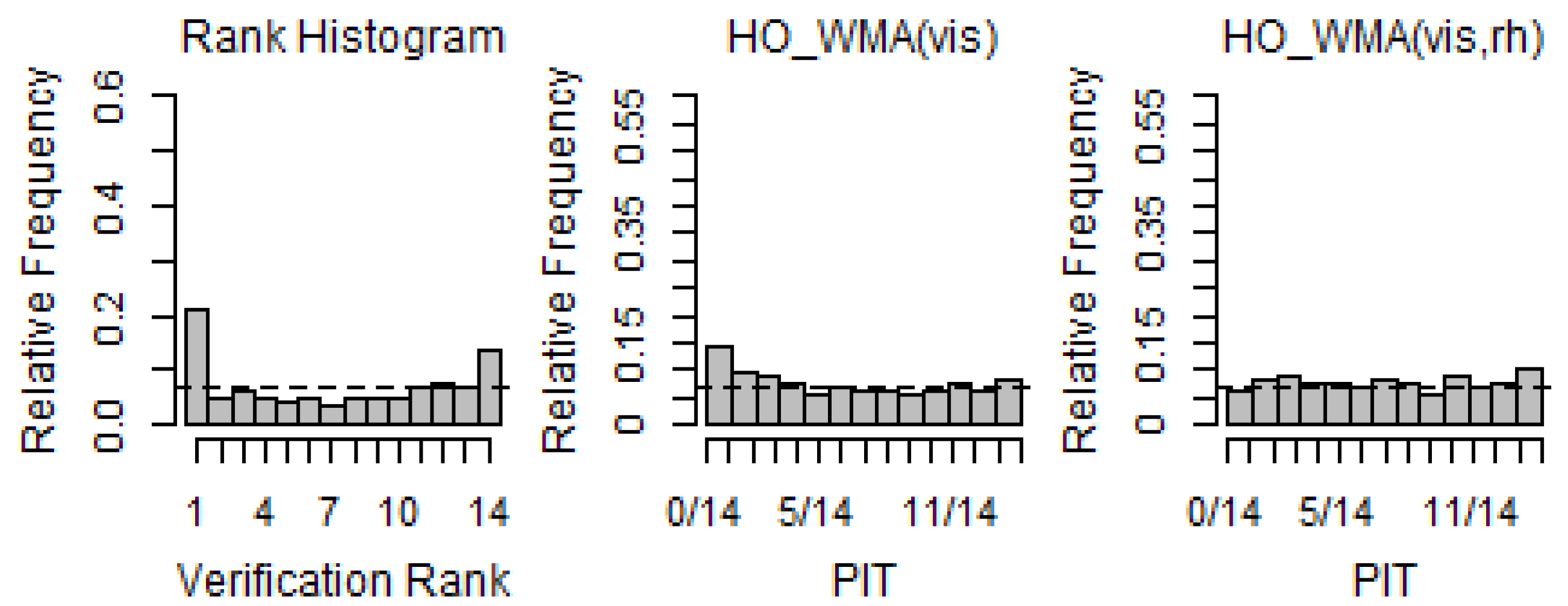

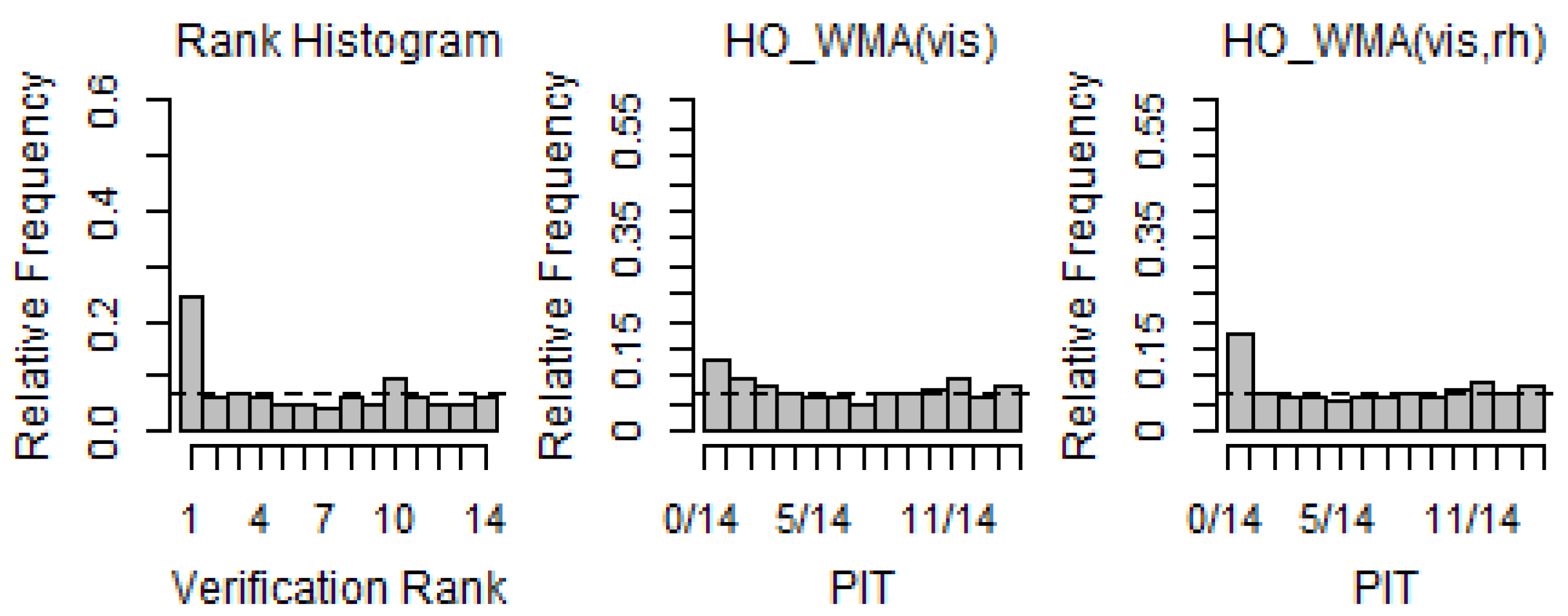

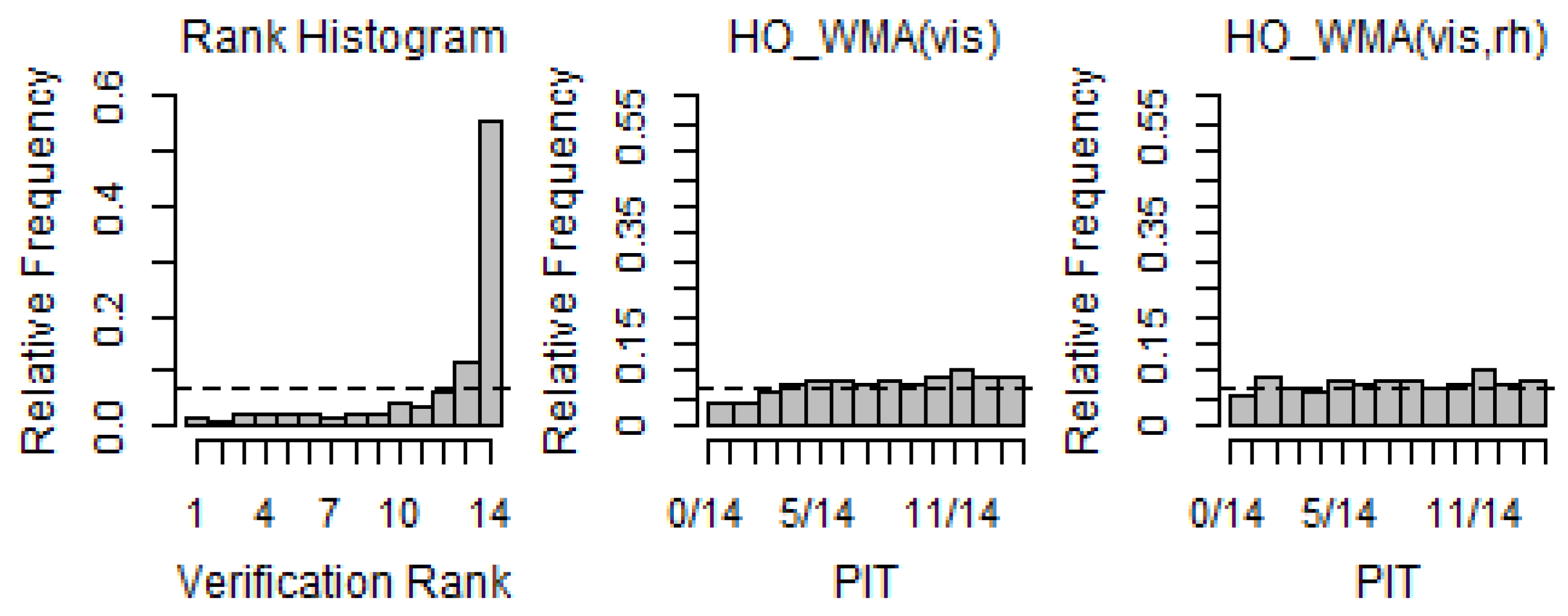

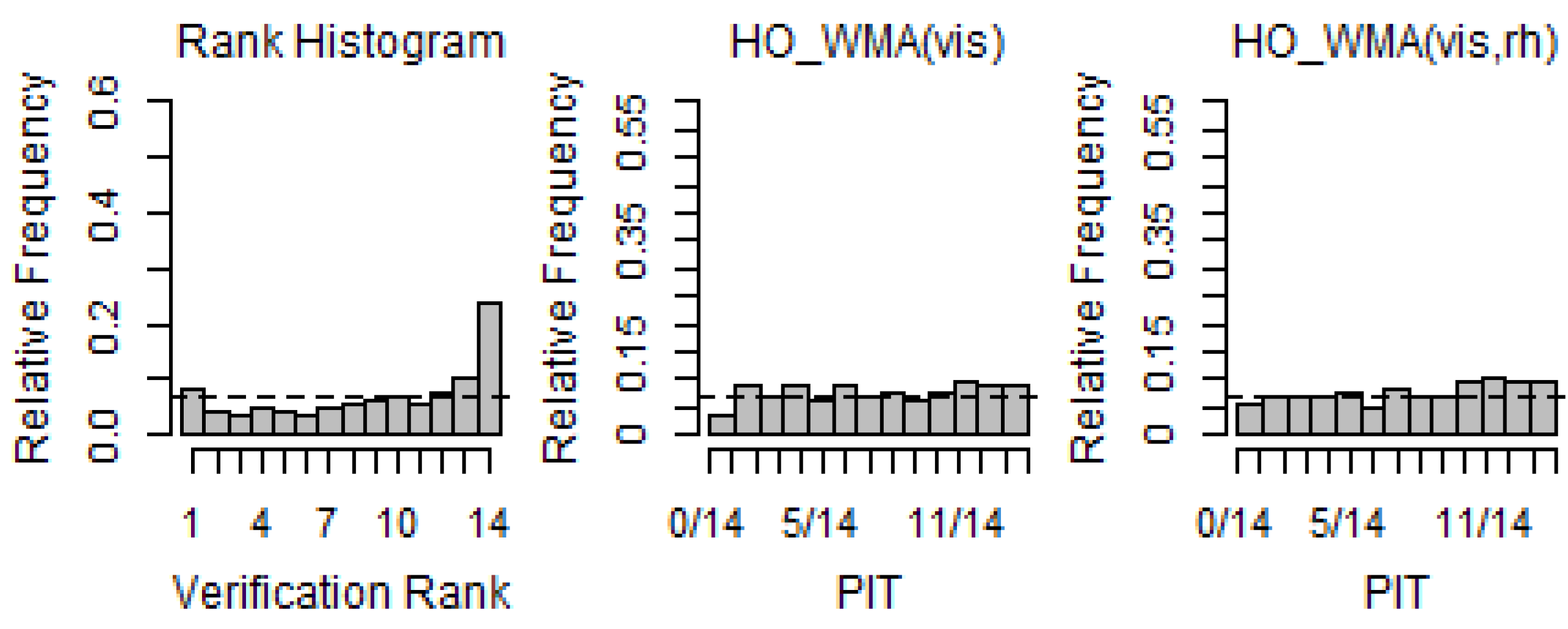

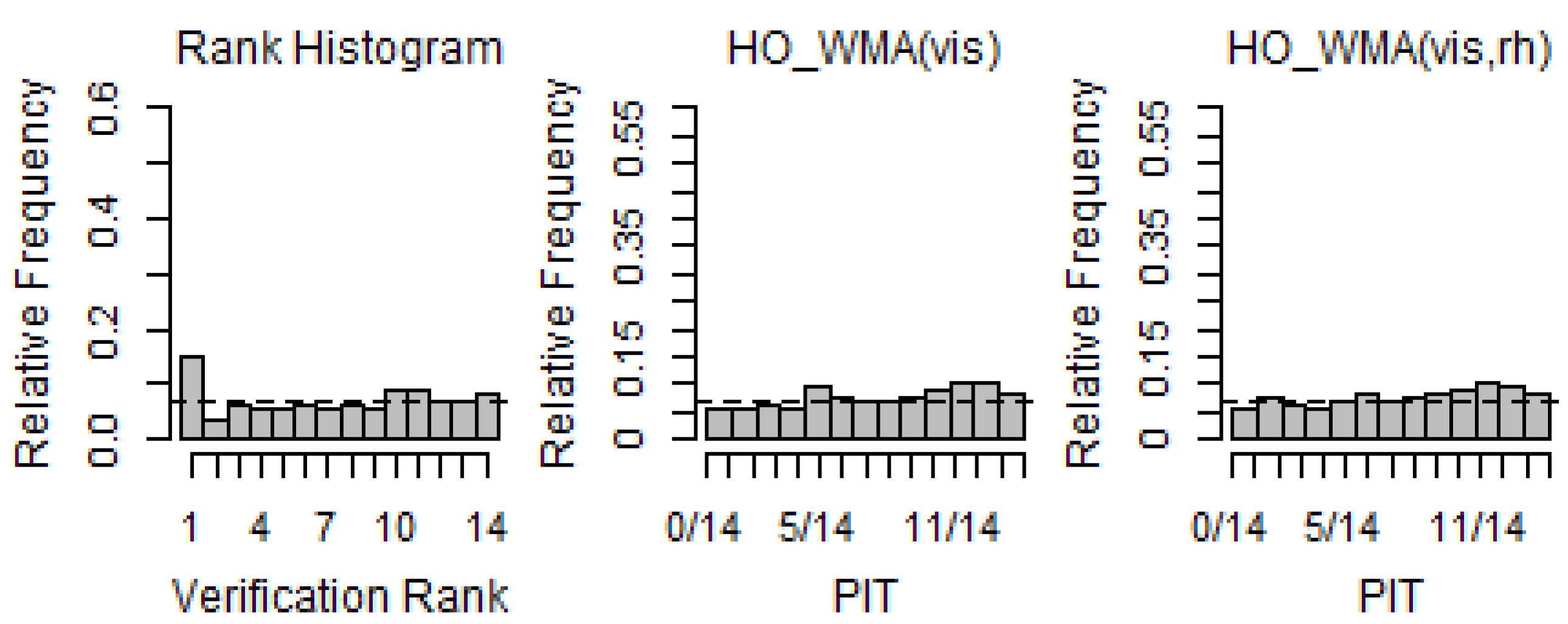

4.1. Reliability Analysis

4.2. Prediction Skill

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Ensemble Prediction System | Limited-Area ENsemble Prediction System (LENS) with 13 ensemble members | | |
|---|---|---|---|
| Data period | 1 December 2018–30 June 2019 | | |
| UTC | 00 UTC | | |
| Projection time | 4 h to 24 h | | |
| Station | Station | Latitude (°N) | Longitude (°E) |
| | Gimpo Int. Airport (110) | 37.5 | 126.4 |
| | Incheon Int. Airport (113) | 37.4 | 126.7 |
| | Jeju Int. Airport (182) | 33.5 | 126.5 |
| Predictand | Visibility (km) | | |
| Predictors | Visibility, relative humidity, and precipitation forecasts generated using LENS | | |

| Year | | 2018 | 2019 | | | | | |
|---|---|---|---|---|---|---|---|---|
| Month | | 12 | 1 | 2 | 3 | 4 | 5 | 6 |
| Dataset | 2018–2019 DJF | Training | Test | | | | | |
| | 2019 MAM | Training | Test | | | | | |

| Station | 110 | 113 | 182 |
|---|---|---|---|
| Reliability index | 0.837 | 0.375 | 0.261 |

| Station | MAE | CRPS | BS |
|---|---|---|---|
| 110 (Gimpo) | 3.248 | 2.651 | 0.422 |
| 113 (Incheon) | 2.135 | 1.655 | 0.281 |
| 182 (Jeju) | 1.004 | 0.885 | 0.213 |

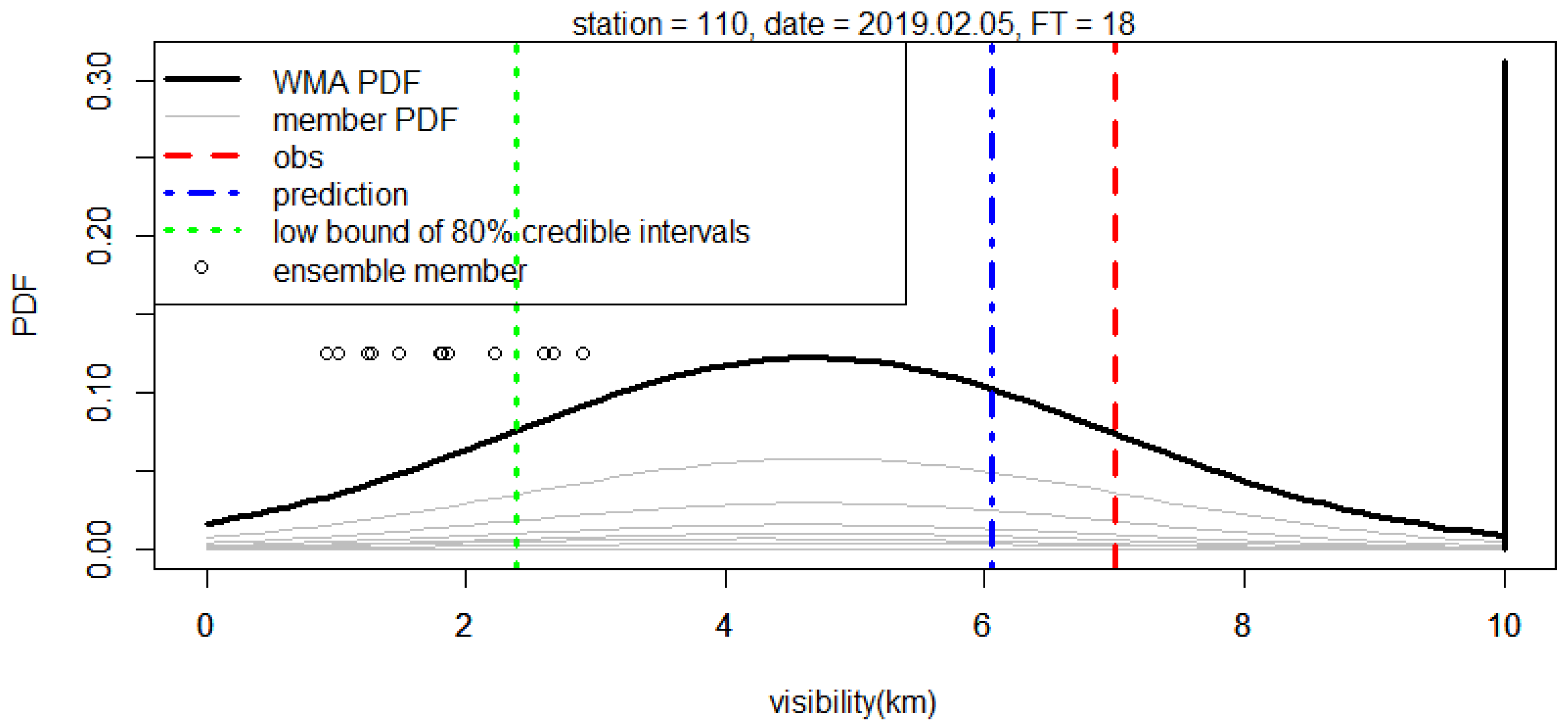

| Station 110 on 5 February 2019 (FT 18) | | | | | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| WMA | mvi0 | mvi1 | mvi2 | mvi3 | mvi4 | mvi5 | mvi6 | mvi7 | mvi8 | mvi9 | mvi10 | mvi11 | mvi12 |
| Member forecast | 1.477 | 1.275 | 1.086 | 2.67 | 1.244 | 1.864 | 1.793 | 2.599 | 0.92 | 2.226 | 1.012 | 2.094 | 2.227 |
| WMA weight | 0 | 0.131 | 0.416 | 0 | 0 | 0 | 0.093 | 0 | 0.052 | 0 | 0.027 | 0 | 0.28 |
| Member P(y = 10) | 0.333 | 0.333 | 0.217 | 0.435 | 0.395 | 0.424 | 0.411 | 0.427 | 0.313 | 0.519 | 0.285 | 0.494 | 0.414 |
| WMA P(y = 10) | 0.312 | | | | | | | | | | | | |
| WMA median | 6.055 | | | | | | | | | | | | |
| WMA lower bound | 2.387 | | | | | | | | | | | | |
| Observation | 7 | | | | | | | | | | | | |
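
The WMA row for P(y = 10) in the table above can be checked by hand. The sketch below assumes that the WMA probability is the weight-weighted sum of the member probabilities; this mixture form is inferred from the tabulated numbers rather than quoted from the method description, and the function name `mixture_probability` is purely illustrative. Under that assumption the 13 member values reproduce the tabulated 0.312.

```python
# Values copied from the table for station 110, 5 February 2019 (FT 18),
# ordered mvi0 ... mvi12.
weights = [0, 0.131, 0.416, 0, 0, 0, 0.093, 0, 0.052, 0, 0.027, 0, 0.28]
member_p10 = [0.333, 0.333, 0.217, 0.435, 0.395, 0.424, 0.411,
              0.427, 0.313, 0.519, 0.285, 0.494, 0.414]


def mixture_probability(weights, probs):
    """Weighted sum of member probabilities (assumed mixture form)."""
    if len(weights) != len(probs):
        raise ValueError("weights and probs must have the same length")
    return sum(w * p for w, p in zip(weights, probs))


wma_p10 = mixture_probability(weights, member_p10)

# The tabulated weights are rounded to three decimals, so they sum to
# 0.999 rather than exactly 1.
print(round(sum(weights), 3))
print(round(wma_p10, 3))
```

Only six of the 13 members carry non-zero weight in this case, so the combined probability is driven mainly by mvi2 (weight 0.416) and mvi12 (weight 0.28).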

(a) 2018–2019 December, January, and February (DJF)

| Station | MAE (Ensemble) | MAE (WMA) | CRPS (Ensemble) | CRPS (WMA) | BS, y = 10 (Ensemble) | BS, y = 10 (WMA) |
|---|---|---|---|---|---|---|
| 110 | 2.842 | 1.610 | 3.914 | 2.806 | 0.355 | 0.211 |
| 113 | 2.263 | 1.854 | 3.502 | 3.272 | 0.302 | 0.255 |
| 182 | 0.967 | 0.942 | 0.901 | 0.776 | 0.223 | 0.196 |

(b) 2019 March, April, and May (MAM)

| Station | MAE (Ensemble) | MAE (WMA) | CRPS (Ensemble) | CRPS (WMA) | BS, y = 10 (Ensemble) | BS, y = 10 (WMA) |
|---|---|---|---|---|---|---|
| 110 | 3.843 | 0.847 | 3.248 | 0.677 | 0.489 | 0.156 |
| 113 | 2.048 | 1.272 | 1.607 | 0.909 | 0.274 | 0.181 |
| 182 | 0.744 | 0.592 | 0.643 | 0.548 | 0.165 | 0.138 |

| | | 2018–2019 DJF | | | 2019 MAM | | |
|---|---|---|---|---|---|---|---|
| Score | Method | 110 | 113 | 182 | 110 | 113 | 182 |
| MAE | Ensemble | 2.842 | 2.263 | 0.967 | 3.843 | 2.049 | 0.744 |
| | WMA (vis) | 1.610 | 1.854 | 0.942 | 0.847 | 1.272 | 0.592 |
| | WMA (vis, rh) | 1.267 | 1.300 | 0.936 | 0.715 | 1.213 | 0.593 |
| CRPS | Ensemble | 2.342 | 1.882 | 0.901 | 3.248 | 1.607 | 0.643 |
| | WMA (vis) | 1.191 | 1.434 | 0.776 | 0.677 | 0.909 | 0.548 |
| | WMA (vis, rh) | 0.898 | 0.901 | 0.786 | 0.545 | 0.855 | 0.459 |
| BS | Ensemble | 0.355 | 0.302 | 0.223 | 0.490 | 0.274 | 0.165 |
| | WMA (vis) | 0.211 | 0.255 | 0.196 | 0.156 | 0.181 | 0.138 |
| | WMA (vis, rh) | 0.160 | 0.144 | 0.196 | 0.119 | 0.166 | 0.114 |
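
Because lower MAE, CRPS, and BS values indicate better forecasts, the gain from post-processing is easiest to read as a relative reduction against the raw ensemble. The sketch below does that arithmetic for the 2019 MAM MAE rows of the table above. The helper name `percent_reduction` and the dictionary layout are illustrative choices and do not come from the paper.

```python
# 2019 MAM MAE values copied from the table above, keyed by station number.
mae_ensemble = {110: 3.843, 113: 2.049, 182: 0.744}
mae_wma_vis = {110: 0.847, 113: 1.272, 182: 0.592}
mae_wma_vis_rh = {110: 0.715, 113: 1.213, 182: 0.593}


def percent_reduction(reference, candidate):
    """Relative reduction of a negatively oriented score, in percent."""
    return 100.0 * (reference - candidate) / reference


# Reduction in MAE relative to the raw ensemble for each WMA variant.
reduction_vis = {
    station: percent_reduction(mae_ensemble[station], mae_wma_vis[station])
    for station in mae_ensemble
}
reduction_vis_rh = {
    station: percent_reduction(mae_ensemble[station], mae_wma_vis_rh[station])
    for station in mae_ensemble
}

for station in mae_ensemble:
    print(station,
          round(reduction_vis[station], 1),
          round(reduction_vis_rh[station], 1))
```

The same helper applies unchanged to the CRPS and BS rows, since all three scores are negatively oriented.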

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Choi, H.-W.; Han, K.; Kim, C. Probabilistic Forecast of Visibility at Gimpo, Incheon, and Jeju International Airports Using Weighted Model Averaging. Atmosphere 2022, 13, 1969. https://doi.org/10.3390/atmos13121969
