Estimating FAO Blaney-Criddle b-Factor Using Soft Computing Models

Abstract

1. Introduction

2. Materials and Methods

2.1. FAO Blaney-Criddle b-Factor

2.2. Soft Computing Models

2.2.1. Random Forest (RF)

2.2.2. M5 Model Tree (M5)

2.2.3. Support Vector Regression (SVR)

2.2.4. Random Tree (RT)

2.3. Weka Machine Learning Tool

2.4. Tuning Hyper-Parameters

2.5. Data Used

| Statistical Values | Ud (training) | n/N (training) | RHmin (training) | b (training) | Ud (testing) | n/N (testing) | RHmin (testing) | b (testing) |
|---|---|---|---|---|---|---|---|---|
| Maximum | 10.00 | 1.00 | 100.00 | 2.63 | 10.00 | 1.00 | 100.00 | 2.63 |
| Minimum | 0.00 | 0.00 | 0.00 | 0.38 | 0.00 | 0.00 | 0.00 | 0.38 |
| Average | 5.01 | 0.51 | 49.68 | 1.19 | 5.01 | 0.50 | 49.72 | 1.18 |
| Standard Deviation | 3.42 | 0.34 | 34.06 | 0.47 | 3.42 | 0.34 | 34.07 | 0.46 |
| Kurtosis | −1.27 | −1.28 | −1.26 | −0.09 | −1.27 | −1.27 | −1.26 | 0.10 |
| Skewness | −0.01 | −0.05 | 0.01 | 0.62 | −0.01 | 0.01 | 0.01 | 0.67 |
| Correlation Coefficient (r) | 0.27 | 0.57 | −0.74 | 1.00 | 0.26 | 0.58 | −0.74 | 1.00 |
| Number of data | 186 | | | | 216 | | | |

2.6. Statistical Model Performance Indices

3. Results and Discussion

3.1. Results of Tuning Hyper-Parameters

3.1.1. Random Forest (RF)

3.1.2. M5 Model Tree (M5)

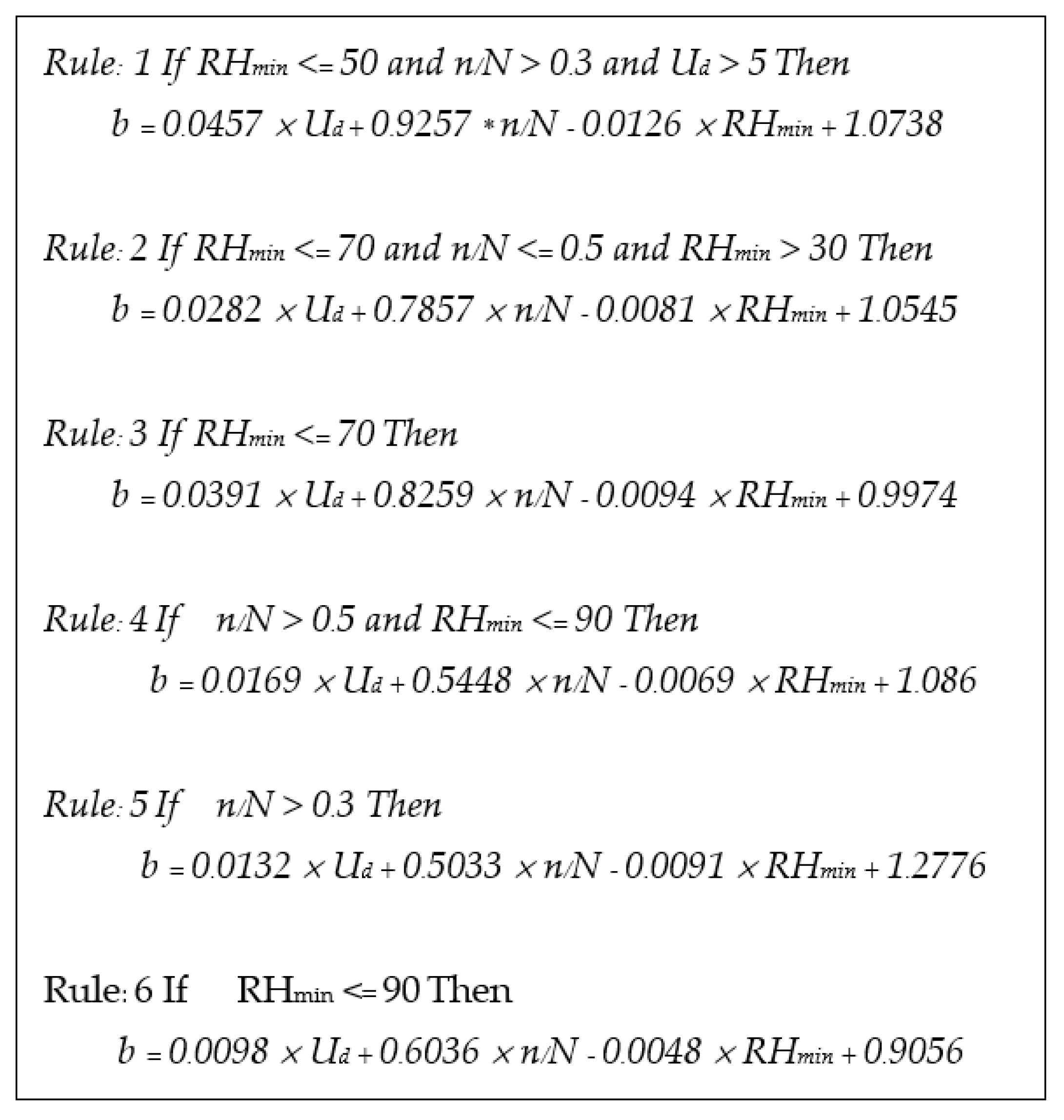

3.1.3. Support Vector Regression (SVR)

3.1.4. Random Tree (RT)

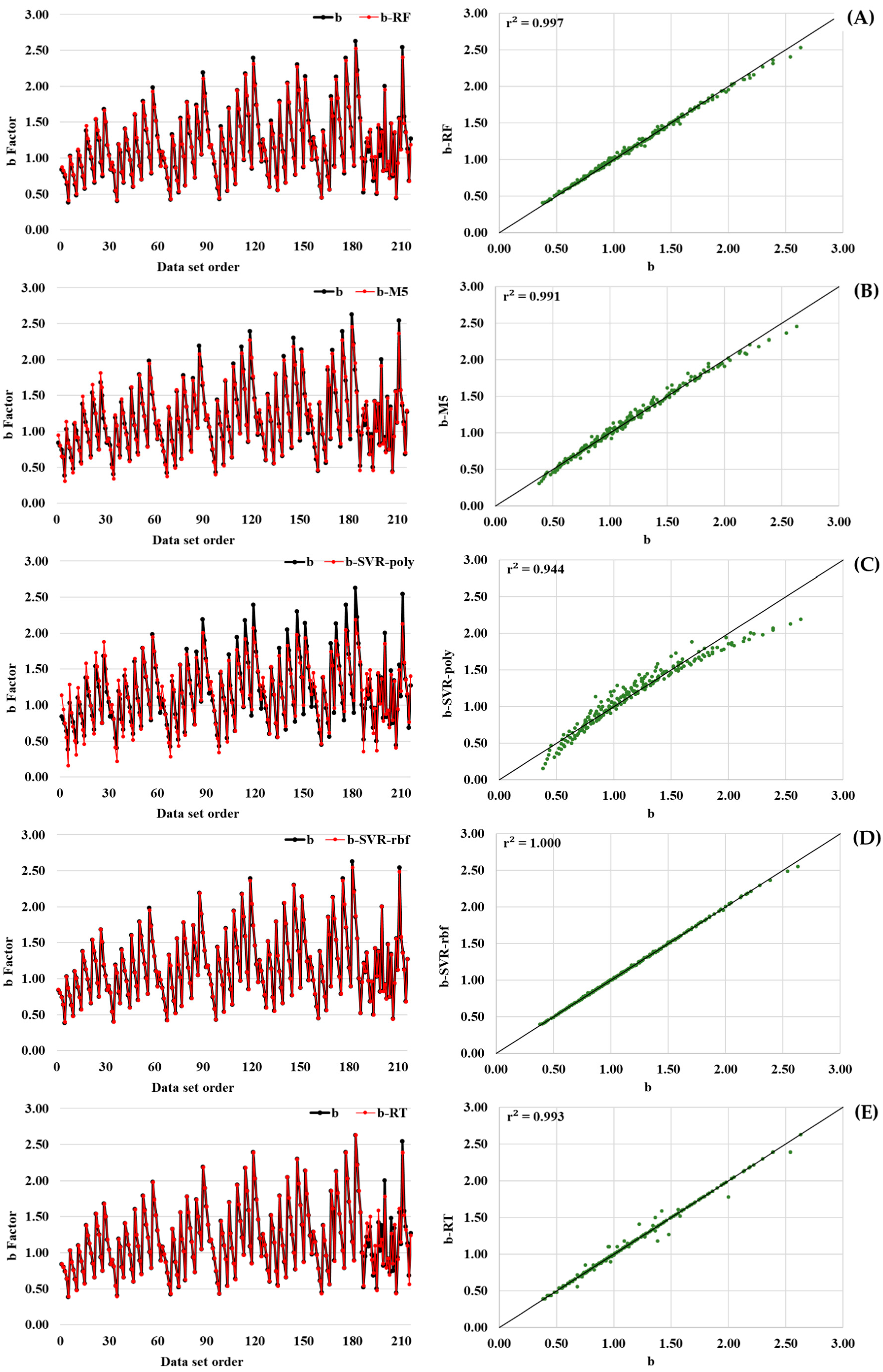

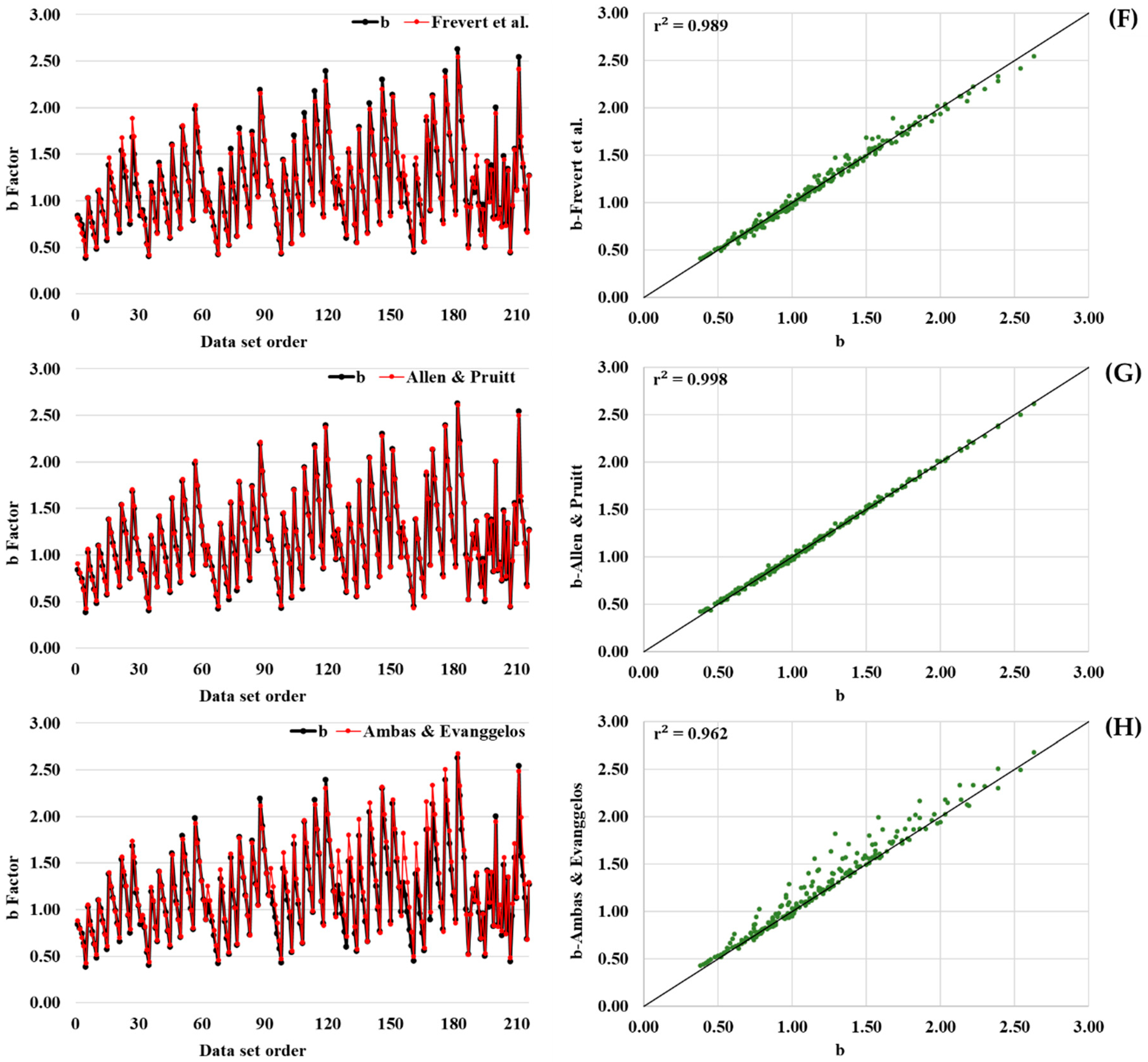

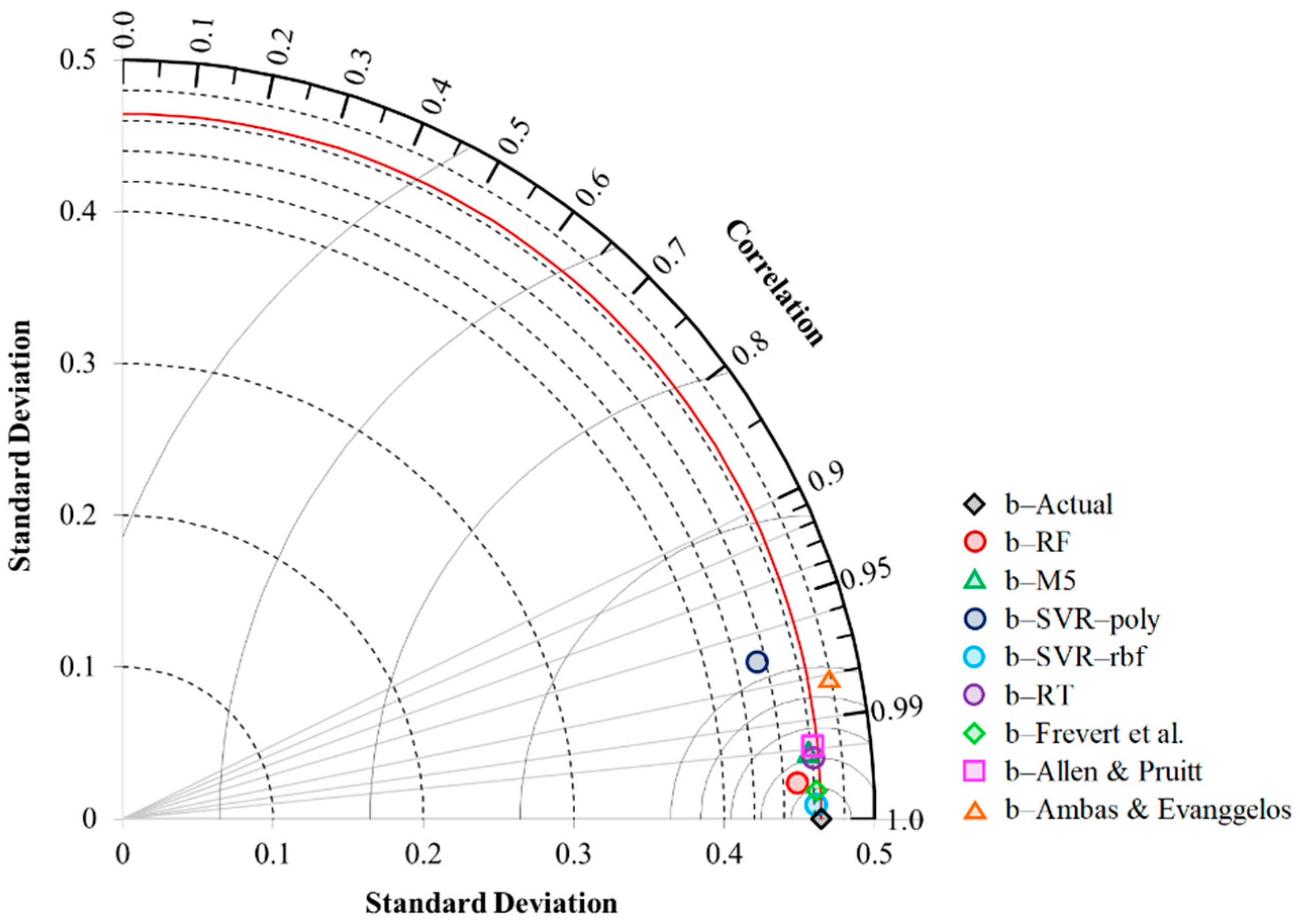

3.2. Models’ Performance Comparison

3.3. Models’ Applicability for Estimating Monthly Reference Evapotranspiration (ETo)

4. Conclusions

- (1) Among the five soft computing models, SVR-rbf gave the highest performance in reference evapotranspiration (ETo) estimation, followed by M5, RF, SVR-poly, and RT.

- (2) New explicit equations for estimating the FAO Blaney-Criddle b-factor were proposed using the M5 model, in the form of a rule set of six linear equations.

- (3) Compared with the RBF network [25], SVR-rbf performed slightly worse but outperformed the three previous regression equations.

- (4) The soft computing models outperformed the regression-based models in b-factor estimation, giving lower values of MARE (%), MXARE (%), NE > 2%, and DEV (%) and a higher value of r2.

- (5) The applicability test for monthly reference evapotranspiration (ETo) showed that the soft computing models outperformed the regression-based models, with a lower percentage of yearly difference. All three regression-based models underestimated ETo, while all six soft computing models slightly overestimated it.

- (6) This work supports a more accurate and convenient evaluation of reference crop evapotranspiration with a temperature-based approach. This in turn improves the accuracy of agricultural water demand estimates, which are necessary data for water resources planning and management.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Xiong, Y.; Luo, Y.; Wang, Y.; Seydou, T.; Xu, J.; Jiao, X.; Fipps, G. Forecasting daily reference evapotranspiration using the Blaney-Criddle model and temperature forecasts. Arch. Agron. Soil Sci. 2015, 62, 790–805.

2. Tabari, H.; Grismer, M.E.; Trajkovic, S. Comparative analysis of 31 reference evapotranspiration methods under humid conditions. Irrig. Sci. 2013, 31, 107–117.

3. Mobilia, M.; Longobardi, A. Prediction of potential and actual evapotranspiration fluxes using six meteorological data-based approaches for a range of climate and land cover types. ISPRS Int. J. Geo Inf. 2021, 10, 192.

4. Hafeez, M.; Chatha, Z.A.; Khan, A.A.; Bakhsh, A.; Basit, A.; Tahira, F. Estimating reference evapotranspiration by Hargreaves and Blaney-Criddle methods in humid subtropical conditions. Curr. Res. Agric. Sci. 2020, 7, 15–22.

5. Fooladmand, H. Evaluation of Blaney-Criddle equation for estimating evapotranspiration in south of Iran. Afr. J. Agric. Res. 2011, 6, 3103–3109.

6. Jhajharia, D.; Ali, M.; Barma, D.; Durbude, D.G.; Kumar, R. Assessing Reference Evapotranspiration by Temperature-based Methods for Humid Regions of Assam. J. Indian Water Resour. Soc. 2009, 29, 1–8.

7. Mehdi, H.M.; Morteza, H. Calibration of Blaney-Criddle equation for estimating reference evapotranspiration in semiarid and arid regions. Disaster Adv. 2014, 7, 12–24.

8. Pandey, P.K.; Dabral, P.P.; Pandey, V. Evaluation of reference evapotranspiration methods for the northeastern region of India. Int. Soil Water Conserv. Res. 2016, 4, 52–63.

9. Rahimikhoob, A.; Hosseinzadeh, M. Assessment of Blaney-Criddle Equation for Calculating Reference Evapotranspiration with NOAA/AVHRR Data. Water Resour. Manag. 2014, 28, 3365–3375.

10. Zhang, L.; Cong, Z. Calculation of reference evapotranspiration based on FAO-Blaney-Criddle method in Hetao Irrigation district. Trans. Chin. Soc. Agric. Eng. 2016, 32, 95–101.

11. Abd El-wahed, M.; Ali, T. Estimating reference evapotranspiration using modified Blaney-Criddle equation in arid region. Bothalia J. 2015, 44, 183–195.

12. El-Nashar, W.Y.; Hussien, E.A. Estimating the potential evapo-transpiration and crop coefficient from climatic data in Middle Delta of Egypt. Alex. Eng. J. 2013, 52, 35–42.

13. Ramanathan, K.C.; Saravanan, S.; Adityakrishna, K.; Srinivas, T.; Selokar, A. Reference Evapotranspiration Assessment Techniques for Estimating Crop Water Requirement. Int. J. Eng. Technol. 2019, 8, 1094–1100.

14. Tzimopoulos, C.; Mpallas, L.; Papaevangelou, G. Estimation of Evapotranspiration Using Fuzzy Systems and Comparison With the Blaney-Criddle Method. J. Environ. Sci. Technol. 2008, 1, 181–186.

15. Schwalm, C.R.; Huntinzger, D.N.; Michalak, A.M.; Fisher, J.B.; Kimball, J.S.; Mueller, B.; Zhang, Y. Sensitivity of inferred climate model skill to evaluation decisions: A case study using CMIP5 evapotranspiration. Environ. Res. Lett. 2013, 8, 24028.

16. Ferreira, L.B.; da Cunha, F.F.; de Oliveira, R.A.; Fernandes Filho, E.I. Estimation of reference evapotranspiration in Brazil with limited meteorological data using ANN and SVM–A new approach. J. Hydrol. 2019, 572, 556–570.

17. Yu, H.; Wen, X.; Li, B.; Yang, Z.; Wu, M.; Ma, Y. Uncertainty analysis of artificial intelligence modeling daily reference evapotranspiration in the northwest end of China. Comput. Electron. Agric. 2020, 176, 105653.

18. Shabani, S.; Samadianfard, S.; Sattari, M.T.; Mosavi, A.; Shamshirband, S.; Kmet, T.; Várkonyi-Kóczy, A.R. Modeling pan evaporation using Gaussian process regression K-nearest neighbors random forest and support vector machines; comparative analysis. Atmosphere 2020, 11, 66.

19. Mohammadi, B.; Mehdizadeh, S. Modeling daily reference evapotranspiration via a novel approach based on support vector regression coupled with whale optimization algorithm. Agric. Water Manag. 2020, 237, 106145.

20. Granata, F.; Di Nunno, F. Forecasting evapotranspiration in different climates using ensembles of recurrent neural networks. Agric. Water Manag. 2021, 255, 107040.

21. Doorenbos, J.; Pruitt, W.O. Guidelines for Predicting Crop Water Requirements; XF2006236315; FAO: Rome, Italy, 1977; 24p.

22. Allen, R.; Pruitt, W. Rational Use of the FAO Blaney-Criddle Formula. J. Irrig. Drain. Eng. 1986, 112, 139–155.

23. Allen, R.G.; Jensen, M.E.; Wright, J.L.; Burman, R.D. Operational Estimates of Reference Evapotranspiration. Agron. J. 1989, 81, 650–662.

24. Jensen, M.E.; Burman, R.D.; Allen, R.G. Evapotranspiration and Irrigation Water Requirements: A Manual; American Society of Civil Engineers, Committee on Irrigation Water Requirements: New York, NY, USA, 1990.

25. Trajkovic, S.; Stankovic, M.; Todorovic, B. Estimation of FAO Blaney-Criddle b factor by RBF network. J. Irrig. Drain. Eng. 2000, 126, 268–270.

26. Frevert, D.K.; Hill, R.W.; Braaten, B.C. Estimation of FAO evapotranspiration coefficients. J. Irrig. Drain. Eng. 1983, 109, 265–270.

27. Allen, R.G.; Pruitt, W.O. FAO-24 reference evapotranspiration factors. J. Irrig. Drain. Eng. 1991, 117, 758–773.

28. Ambas, V.; Evanggelos, B. The Estimation of b Factor of the FAO24 Blaney—Cridlle Method with the Use of Weighted Least Squares. 2010. Available online: https://ui.adsabs.harvard.edu/abs/2010EGUGA..1213424V/abstract (accessed on 31 July 2022).

29. Solomatine, D.; See, L.M.; Abrahart, R.J. Data-Driven Modelling: Concepts, Approaches and Experiences. In Practical Hydroinformatics: Computational Intelligence and Technological Developments in Water Applications; Springer: Berlin/Heidelberg, Germany, 2008; pp. 17–30.

30. Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

31. Akay, H. Spatial modeling of snow avalanche susceptibility using hybrid and ensemble machine learning techniques. Catena 2021, 206, 105524.

32. Breiman, L. Random Forests—Random Features; Statistics Department, University of California: Berkeley, CA, USA, 1999.

33. Quinlan, J.R. Learning with continuous classes. In Proceedings of the 5th Australian Joint Conference on Artificial Intelligence, Hobart, TAS, Australia, 16–18 November 1992; pp. 343–348.

34. Vapnik, V.N. The Nature of Statistical Learning Theory; Springer: Berlin/Heidelberg, Germany, 1995.

35. Xie, W.; Li, X.; Jian, W.; Yang, Y.; Liu, H.; Robledo, L.F.; Nie, W. A Novel Hybrid Method for Landslide Susceptibility Mapping-Based GeoDetector and Machine Learning Cluster: A Case of Xiaojin County, China. ISPRS Int. J. Geo-Inf. 2021, 10, 93.

36. Xie, W.; Nie, W.; Saffari, P.; Robledo, L.F.; Descote, P.; Jian, W. Landslide hazard assessment based on Bayesian optimization–support vector machine in Nanping City, China. Nat. Hazards 2021, 109, 931–948.

| Hyper-Parameter | RF Value | RF Sensitive | M5 Value | M5 Sensitive | SVR-poly Value | SVR-poly Sensitive | SVR-rbf Value | SVR-rbf Sensitive | RT Value | RT Sensitive |
|---|---|---|---|---|---|---|---|---|---|---|
| numIteration | 300 | yes | - | - | - | - | - | - | - | - |
| batchSize | 100 | no | 100 | no | - | - | - | - | 100 | no |
| numExecutionSlots | 1 | no | - | - | - | - | - | - | - | - |
| minNumInstances | - | - | 4 | yes | - | - | - | - | - | - |
| numDecimalPlaces | - | - | 4 | no | - | - | - | - | 2 | no |
| buildRegressionTree | - | - | FALSE | yes | - | - | - | - | - | - |
| complexity | - | - | - | - | 0.8 | yes | 1.0 | yes | - | - |
| exponent | - | - | - | - | 1.0 | yes | - | - | - | - |
| gamma | - | - | - | - | - | - | 1.0 | yes | - | - |
| minNum | - | - | - | - | - | - | - | - | 1.0 | yes |
| numFolds | - | - | - | - | - | - | - | - | 0 | yes |
| minVarianceProp | - | - | - | - | - | - | - | - | 0.001 | yes |
| RRSE | 12.14 | | 11.46 | | 24.21 | | 2.37 | | 24.23 | |
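The RRSE row above is the score used to compare the tuned models. As a minimal sketch, assuming RRSE is the usual root relative squared error (the model's squared error relative to that of always predicting the mean, expressed in percent), it could be computed as follows. The function name and the sample values are hypothetical and are not data from this study.

```python
import math


def rrse_percent(actual, predicted):
    """Root relative squared error (%), relative to predicting the mean of `actual`."""
    mean_actual = sum(actual) / len(actual)
    # Squared error of the model under evaluation.
    sse_model = sum((p - a) ** 2 for a, p in zip(actual, predicted))
    # Squared error of the naive predictor that always returns the mean.
    sse_mean = sum((a - mean_actual) ** 2 for a in actual)
    return 100.0 * math.sqrt(sse_model / sse_mean)


# Hypothetical b values, invented for illustration only.
b_actual = [0.80, 1.00, 1.20, 1.40]
b_predicted = [0.82, 0.98, 1.21, 1.39]

print(f"RRSE = {rrse_percent(b_actual, b_predicted):.2f} %")
```

Under this definition a value of 100% means the model does no better than predicting the mean, so lower values are better.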

| Statistical Indices | RF | M5 | SVR-poly | SVR-rbf | RT | Frevert et al. (1983) | Allen & Pruitt (1991) | Ambas & Evanggelos (2010) | RBF Network |
|---|---|---|---|---|---|---|---|---|---|
| MARE (%) | 1.81 | 2.96 | 7.52 | 0.49 | 1.19 | 3.07 | 1.69 | 5.99 | 0.34 |
| MXARE (%) | 8.1 | 19.2 | 58.7 | 5.0 | 17.6 | 14.4 | 11.8 | 41.1 | 1.8 |
| NE > 2% | 80 | 116 | 171 | 7 | 25 | 126 | 64 | 141 | 0 |
| DEV (%) | 1.62 | 2.97 | 8.00 | 0.55 | 3.16 | 2.72 | 1.68 | 7.22 | 0.31 |
| r2 | 0.997 | 0.991 | 0.944 | 1.000 | 0.993 | 0.989 | 0.998 | 0.962 | 1.000 |

RF, M5, SVR-poly, SVR-rbf, and RT are from the present study; the remaining columns are from previous studies.
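The indices tabulated above can be sketched in a few lines. The definitions used here are assumptions, since they are not spelled out in this part of the text: ARE is taken as the absolute relative error in percent, MARE as its mean, MXARE as its maximum, NE as the number of samples with ARE above 2%, DEV as the sample standard deviation of ARE, and r2 as the squared Pearson correlation. The function name and the sample values are hypothetical.

```python
import math


def performance_indices(reference, estimated, threshold=2.0):
    """Return MARE, MXARE, NE, DEV and r2 under the assumed definitions."""
    n = len(reference)
    # Absolute relative error of each sample, in percent.
    are = [abs(e - r) / r * 100.0 for r, e in zip(reference, estimated)]
    mare = sum(are) / n
    # Sample standard deviation of ARE (an assumption; a population form is also plausible).
    dev = math.sqrt(sum((x - mare) ** 2 for x in are) / (n - 1))
    mean_r = sum(reference) / n
    mean_e = sum(estimated) / n
    cov = sum((r - mean_r) * (e - mean_e) for r, e in zip(reference, estimated))
    var_r = sum((r - mean_r) ** 2 for r in reference)
    var_e = sum((e - mean_e) ** 2 for e in estimated)
    return {
        "MARE": mare,
        "MXARE": max(are),
        "NE": sum(1 for x in are if x > threshold),
        "DEV": dev,
        "r2": cov ** 2 / (var_r * var_e),
    }


# Hypothetical b values, invented for illustration only.
b_reference = [0.80, 1.00, 1.20, 1.40]
b_estimated = [0.83, 0.99, 1.21, 1.39]

indices = performance_indices(b_reference, b_estimated)
for name, value in indices.items():
    print(name, round(value, 3))
```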

Climatological variables by month:

| Months | T (°C) | RHmin (%) | U2 (m/s) | n/N | P | A |
|---|---|---|---|---|---|---|
| Feb. | 1.8 | 65 | 1.40 | 0.276 | 0.240 | −1.407 |
| Mar. | 8.3 | 50 | 1.89 | 0.366 | 0.270 | −1.561 |
| Apr. | 10.5 | 50 | 1.65 | 0.390 | 0.300 | −1.585 |
| May | 12.7 | 61 | 1.60 | 0.311 | 0.330 | −1.459 |
| Jun. | 20.6 | 45 | 0.77 | 0.636 | 0.347 | −1.853 |
| Jul. | 21.4 | 55 | 1.17 | 0.535 | 0.337 | −1.709 |
| Aug. | 19.6 | 56 | 1.00 | 0.510 | 0.310 | −1.679 |
| Sep. | 17.9 | 43 | 1.25 | 0.626 | 0.280 | −1.851 |
| Oct. | 11.6 | 55 | 1.44 | 0.323 | 0.250 | −1.497 |
| Nov. | 7.8 | 63 | 1.34 | 0.238 | 0.220 | −1.377 |
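The A column above appears consistent with the FAO-24 relation a = 0.0043·RHmin − n/N − 1.41. The sketch below assumes that relation, which is not stated in this part of the text, and checks it against three rows of the table. The function name is hypothetical.

```python
def blaney_criddle_a(rh_min_percent, sunshine_ratio):
    """FAO-24 Blaney-Criddle a-factor (assumed form): 0.0043*RHmin - n/N - 1.41."""
    return 0.0043 * rh_min_percent - sunshine_ratio - 1.41


# (RHmin %, n/N, tabulated A) copied from the table above.
rows = {
    "Feb.": (65, 0.276, -1.407),
    "Jun.": (45, 0.636, -1.853),
    "Nov.": (63, 0.238, -1.377),
}

# Largest gap between the computed and tabulated values over the sampled rows.
max_gap = max(abs(blaney_criddle_a(rh, ratio) - a_tab) for rh, ratio, a_tab in rows.values())

for month, (rh, ratio, a_tab) in rows.items():
    print(month, f"computed={blaney_criddle_a(rh, ratio):.4f}", f"tabulated={a_tab}")
```

The computed values agree with the tabulated ones to within rounding at the third decimal place.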

Estimated b-factor by month and method:

| Months | Frevert et al. (1983) | Allen & Pruitt (1991) | Ambas & Evanggelos (2010) | Table Interpolation [25] | RBF [25] | RF | M5 | SVR-poly | SVR-rbf | RT |
|---|---|---|---|---|---|---|---|---|---|---|
| Feb. | 0.779 | 0.788 | 0.803 | 0.821 | 0.823 | 0.846 | 0.844 | 0.823 | 0.823 | 0.823 |
| Mar. | 0.965 | 0.977 | 0.989 | 1.011 | 1.012 | 1.002 | 1.008 | 1.012 | 1.012 | 1.012 |
| Apr. | 0.975 | 0.981 | 0.993 | 1.016 | 1.017 | 1.000 | 1.000 | 1.017 | 1.022 | 1.020 |
| May | 0.836 | 0.846 | 0.860 | 0.886 | 0.884 | 0.888 | 0.909 | 0.883 | 0.884 | 0.884 |
| Jun. | 1.175 | 1.136 | 1.149 | 1.162 | 1.165 | 1.174 | 1.141 | 1.174 | 1.165 | 1.165 |
| Jul. | 1.030 | 1.015 | 1.025 | 1.047 | 1.053 | 1.047 | 1.088 | 1.052 | 1.054 | 1.053 |
| Aug. | 0.998 | 0.982 | 0.994 | 1.017 | 1.022 | 1.035 | 1.038 | 1.021 | 1.022 | 1.020 |
| Sep. | 1.203 | 1.179 | 1.185 | 1.199 | 1.202 | 1.202 | 1.197 | 1.202 | 1.202 | 1.202 |
| Oct. | 0.881 | 0.889 | 0.903 | 0.928 | 0.930 | 0.940 | 0.900 | 0.929 | 0.930 | 0.930 |
| Nov. | 0.764 | 0.775 | 0.791 | 0.813 | 0.811 | 0.831 | 0.795 | 0.812 | 0.818 | 0.811 |
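To show how a b value turns into a monthly ETo, the sketch below assumes the standard FAO-24 Blaney-Criddle form ETo = a + b·p·(0.46T + 8.13) in mm/day, multiplied by the number of days in the month. That form and the month lengths (28, 31, and 30 days) are assumptions, not taken from this part of the text. The inputs are T, P, and A from the climatological table and the Table Interpolation [25] column above. The function name is hypothetical.

```python
def monthly_eto(t_mean, p, a, b, days):
    """Monthly ETo (mm/month) from the assumed FAO-24 Blaney-Criddle form."""
    f = p * (0.46 * t_mean + 8.13)  # Blaney-Criddle f factor, mm/day
    return (a + b * f) * days       # daily ETo scaled to the month


# T (°C), P, A from the climatological table; b from Table Interpolation [25].
# Month lengths are an assumption (non-leap year).
months = {
    "Feb.": dict(t_mean=1.8, p=0.240, a=-1.407, b=0.821, days=28),
    "Mar.": dict(t_mean=8.3, p=0.270, a=-1.561, b=1.011, days=31),
    "Jun.": dict(t_mean=20.6, p=0.347, a=-1.853, b=1.162, days=30),
}

eto = {month: monthly_eto(**inputs) for month, inputs in months.items()}
for month, value in eto.items():
    print(month, f"{value:.2f} mm/month")
```

Under these assumptions the three results match the Table Interpolation [25] column of the ETo table (10.0, 52.7, and 157.4 mm/month) to within rounding.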

Difference of b-factor by month and method:

| Months | Frevert et al. (1983) | Allen & Pruitt (1991) | Ambas & Evanggelos (2010) | Table Interpolation [25] | RBF [25] | RF | M5 | SVR-poly | SVR-rbf | RT |
|---|---|---|---|---|---|---|---|---|---|---|
| Feb. | −0.042 | −0.033 | −0.018 | 0.000 | 0.002 | 0.025 | 0.023 | 0.002 | 0.002 | 0.002 |
| Mar. | −0.046 | −0.034 | −0.022 | 0.000 | 0.001 | −0.009 | −0.003 | 0.001 | 0.001 | 0.001 |
| Apr. | −0.041 | −0.035 | −0.023 | 0.000 | 0.001 | −0.016 | −0.016 | 0.001 | 0.006 | 0.004 |
| May | −0.050 | −0.040 | −0.026 | 0.000 | −0.002 | 0.002 | 0.023 | −0.003 | −0.002 | −0.002 |
| Jun. | 0.013 | −0.026 | −0.013 | 0.000 | 0.003 | 0.012 | −0.021 | 0.012 | 0.003 | 0.003 |
| Jul. | −0.017 | −0.032 | −0.022 | 0.000 | 0.006 | 0.000 | 0.041 | 0.005 | 0.007 | 0.006 |
| Aug. | −0.019 | −0.035 | −0.023 | 0.000 | 0.005 | 0.018 | 0.021 | 0.004 | 0.005 | 0.003 |
| Sep. | 0.004 | −0.020 | −0.014 | 0.000 | 0.003 | 0.003 | −0.002 | 0.003 | 0.003 | 0.003 |
| Oct. | −0.047 | −0.039 | −0.025 | 0.000 | 0.002 | 0.012 | −0.028 | 0.001 | 0.002 | 0.002 |
| Nov. | −0.049 | −0.038 | −0.022 | 0.000 | −0.002 | 0.018 | −0.018 | −0.001 | 0.005 | −0.002 |

ETo (mm/month) by month and method:

| Months | Frevert et al. (1983) | Allen & Pruitt (1991) | Ambas & Evanggelos (2010) | Table Interpolation [25] | RBF [25] | RF | M5 | SVR-poly | SVR-rbf | RT |
|---|---|---|---|---|---|---|---|---|---|---|
| Feb. | 7.5 | 8.1 | 8.9 | 10.0 | 10.2 | 11.5 | 11.4 | 10.2 | 10.2 | 10.2 |
| Mar. | 48.1 | 49.3 | 50.6 | 52.7 | 52.8 | 51.8 | 52.4 | 52.8 | 52.8 | 52.8 |
| Apr. | 66.1 | 66.9 | 68.3 | 71.0 | 71.1 | 69.1 | 69.1 | 71.1 | 71.7 | 71.4 |
| May | 74.3 | 75.7 | 77.7 | 81.4 | 81.1 | 81.7 | 84.7 | 81.0 | 81.1 | 81.1 |
| Jun. | 159.8 | 152.7 | 155.0 | 157.4 | 157.9 | 159.6 | 153.5 | 159.6 | 157.9 | 157.9 |
| Jul. | 140.4 | 137.6 | 139.6 | 143.6 | 144.8 | 143.6 | 151.3 | 144.6 | 145.0 | 144.8 |
| Aug. | 112.3 | 109.7 | 111.8 | 115.5 | 116.3 | 118.5 | 119.0 | 116.2 | 116.3 | 116.0 |
| Sep. | 109.8 | 106.5 | 107.3 | 109.3 | 109.7 | 109.7 | 109.0 | 109.7 | 109.7 | 109.7 |
| Oct. | 45.6 | 46.4 | 47.9 | 50.5 | 50.7 | 51.7 | 47.5 | 50.6 | 50.7 | 50.7 |
| Nov. | 17.8 | 18.6 | 19.9 | 21.6 | 21.4 | 23.0 | 20.2 | 21.5 | 22.0 | 21.4 |
| Yearly | 781.7 | 771.5 | 786.9 | 813.0 | 816.0 | 820.2 | 818.2 | 817.1 | 817.3 | 816.0 |
| Yearly Difference (%) | −3.9 | −5.1 | −3.2 | 0.0 | 0.4 | 0.9 | 0.6 | 0.5 | 0.5 | 0.4 |
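The Yearly Difference (%) row can be checked from the Yearly row. The sketch below assumes the difference is taken relative to the Table Interpolation [25] total of 813.0 mm, which is an inference from the 0.0 entry in that column rather than a stated definition. The function name is hypothetical.

```python
def percent_difference(value, reference):
    """Signed difference of `value` from `reference`, in percent of `reference`."""
    return (value - reference) / reference * 100.0


# Yearly ETo totals (mm) copied from the table above.
yearly_eto = {
    "Frevert et al. (1983)": 781.7,
    "Allen & Pruitt (1991)": 771.5,
    "Ambas & Evanggelos (2010)": 786.9,
    "RBF [25]": 816.0,
    "RF": 820.2,
    "M5": 818.2,
    "SVR-poly": 817.1,
    "SVR-rbf": 817.3,
    "RT": 816.0,
}
reference_total = 813.0  # Table Interpolation [25], assumed to be the baseline

differences = {name: percent_difference(total, reference_total) for name, total in yearly_eto.items()}
for name, diff in differences.items():
    print(f"{name}: {diff:+.2f} %")
```

The results agree with the tabulated row to within about 0.1 percentage point; small gaps, such as for Frevert et al. (1983), likely arise because the published row was computed from unrounded totals.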

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Thongkao, S.; Ditthakit, P.; Pinthong, S.; Salaeh, N.; Elkhrachy, I.; Linh, N.T.T.; Pham, Q.B. Estimating FAO Blaney-Criddle b-Factor Using Soft Computing Models. Atmosphere 2022, 13, 1536. https://doi.org/10.3390/atmos13101536
