Deterministic and Probabilistic Evaluation of Sub-Seasonal Precipitation Forecasts at Various Spatiotemporal Scales over China during the Boreal Summer Monsoon

Abstract

1. Introduction

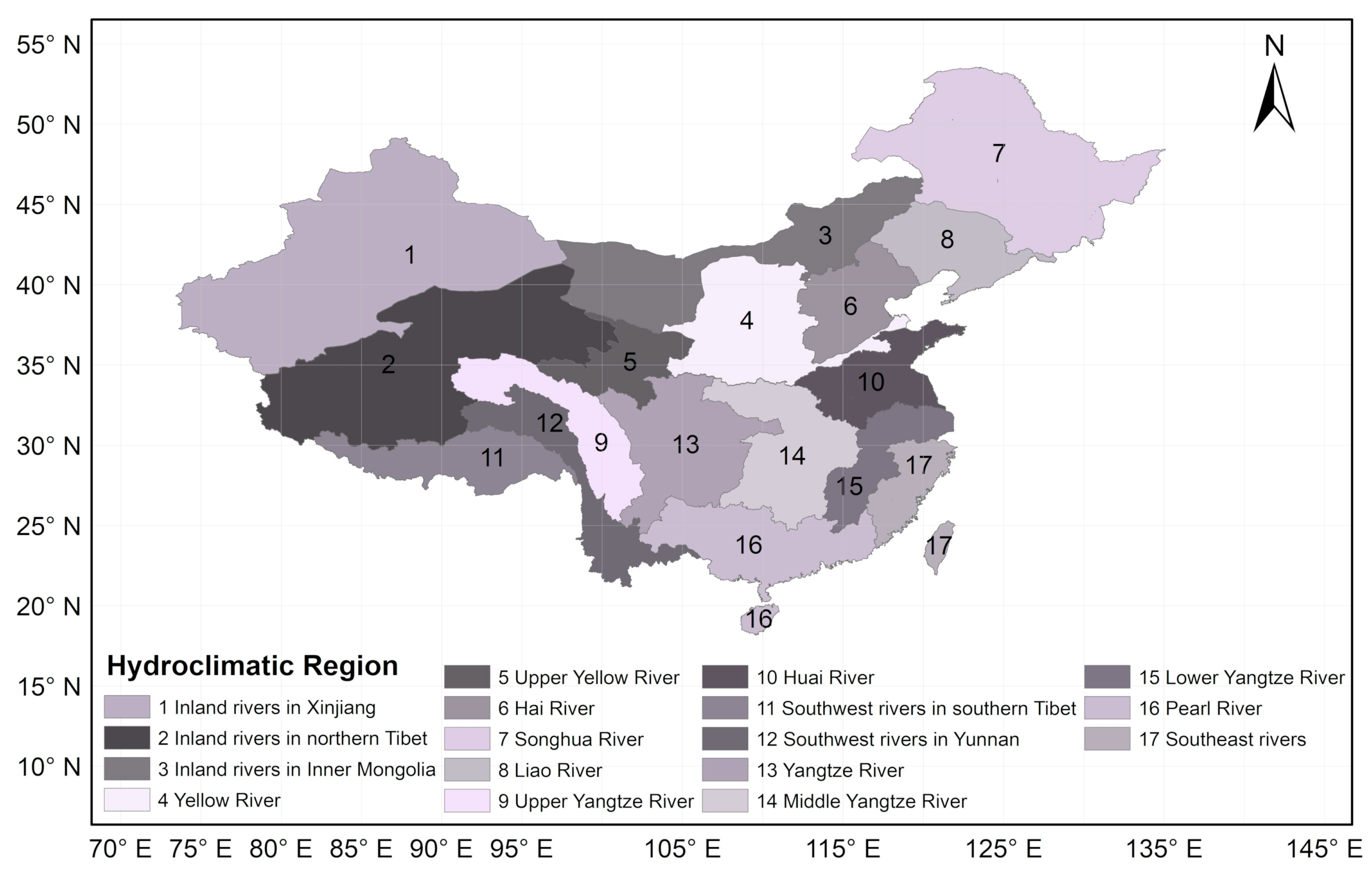

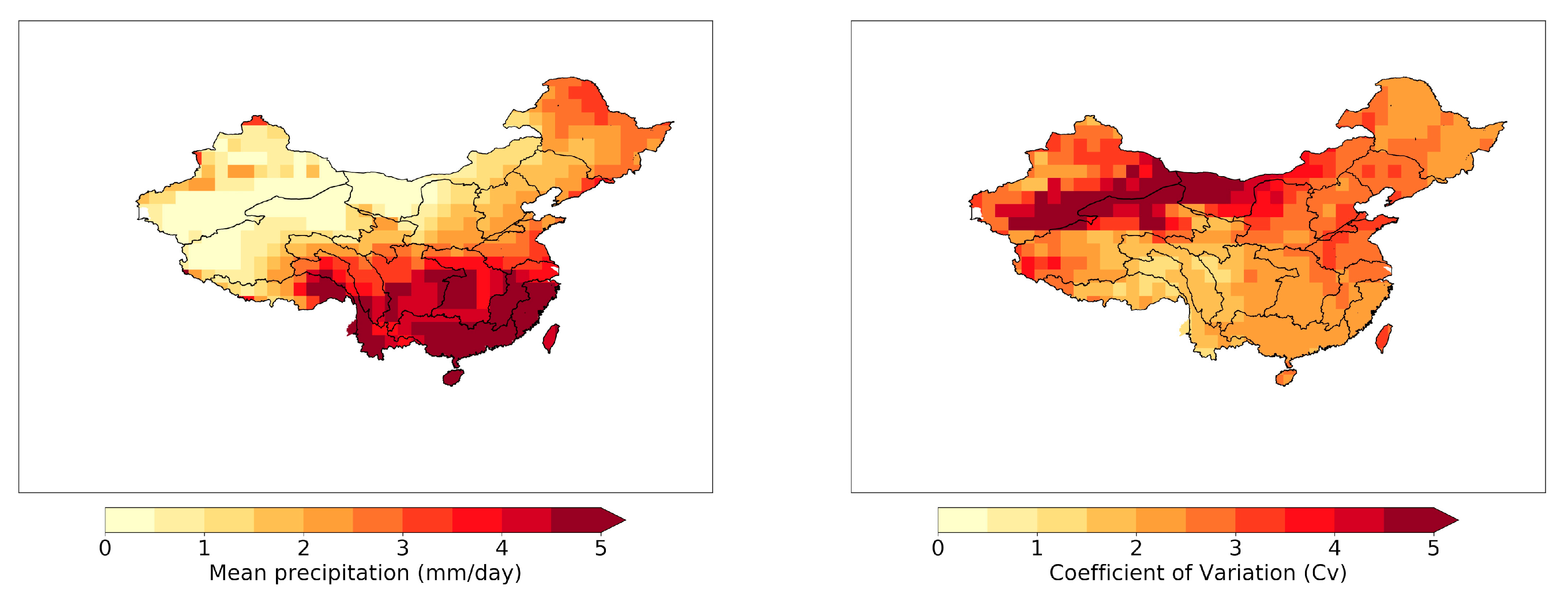

2. Data and Methodology

2.1. GCM Models and Reference Dataset

2.2. Evaluation Strategy and Skill Metrics

2.2.1. Deterministic Metrics

2.2.2. Probabilistic Metrics

2.3. Sources of Sub-Seasonal Predictability

3. Results

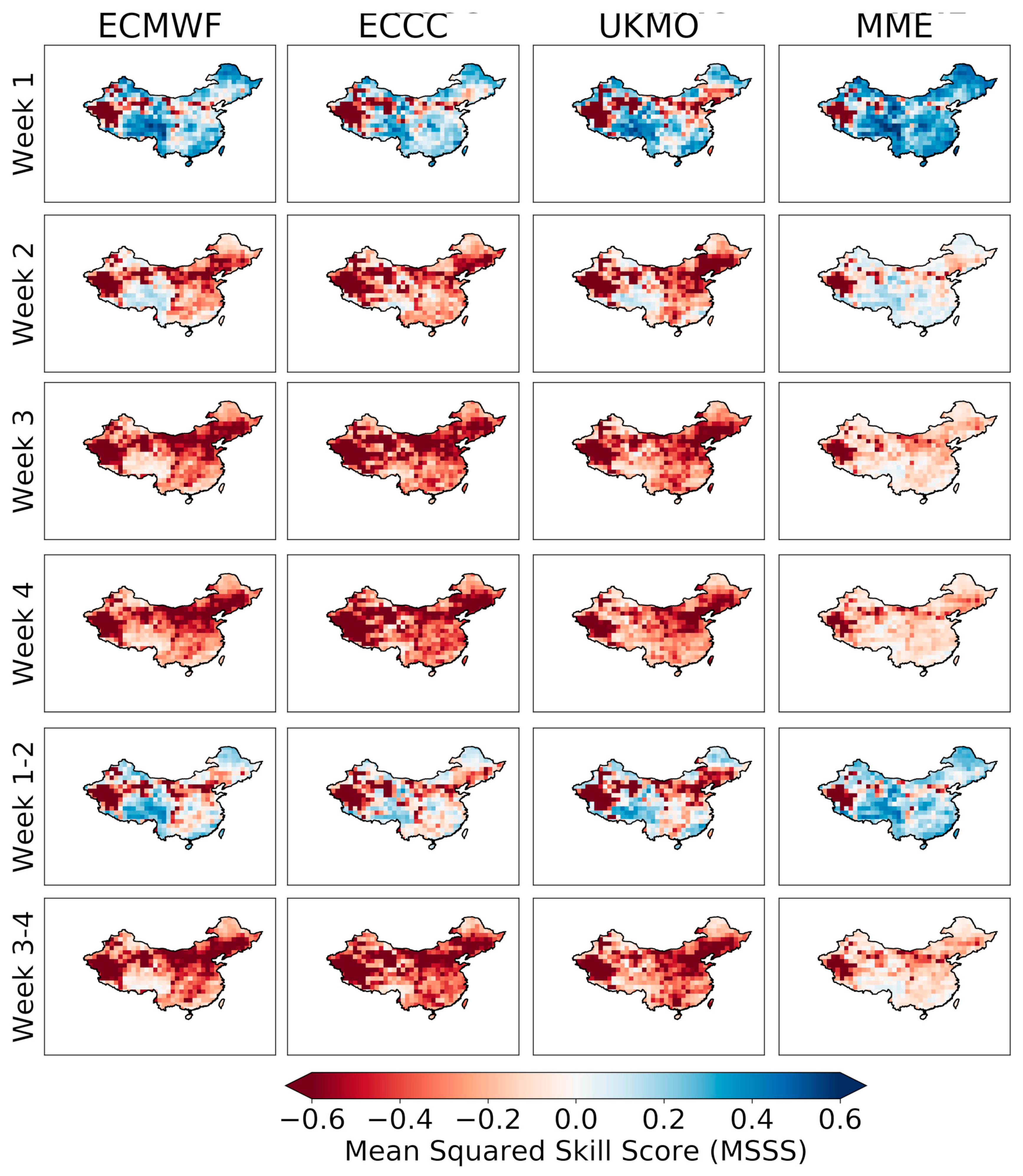

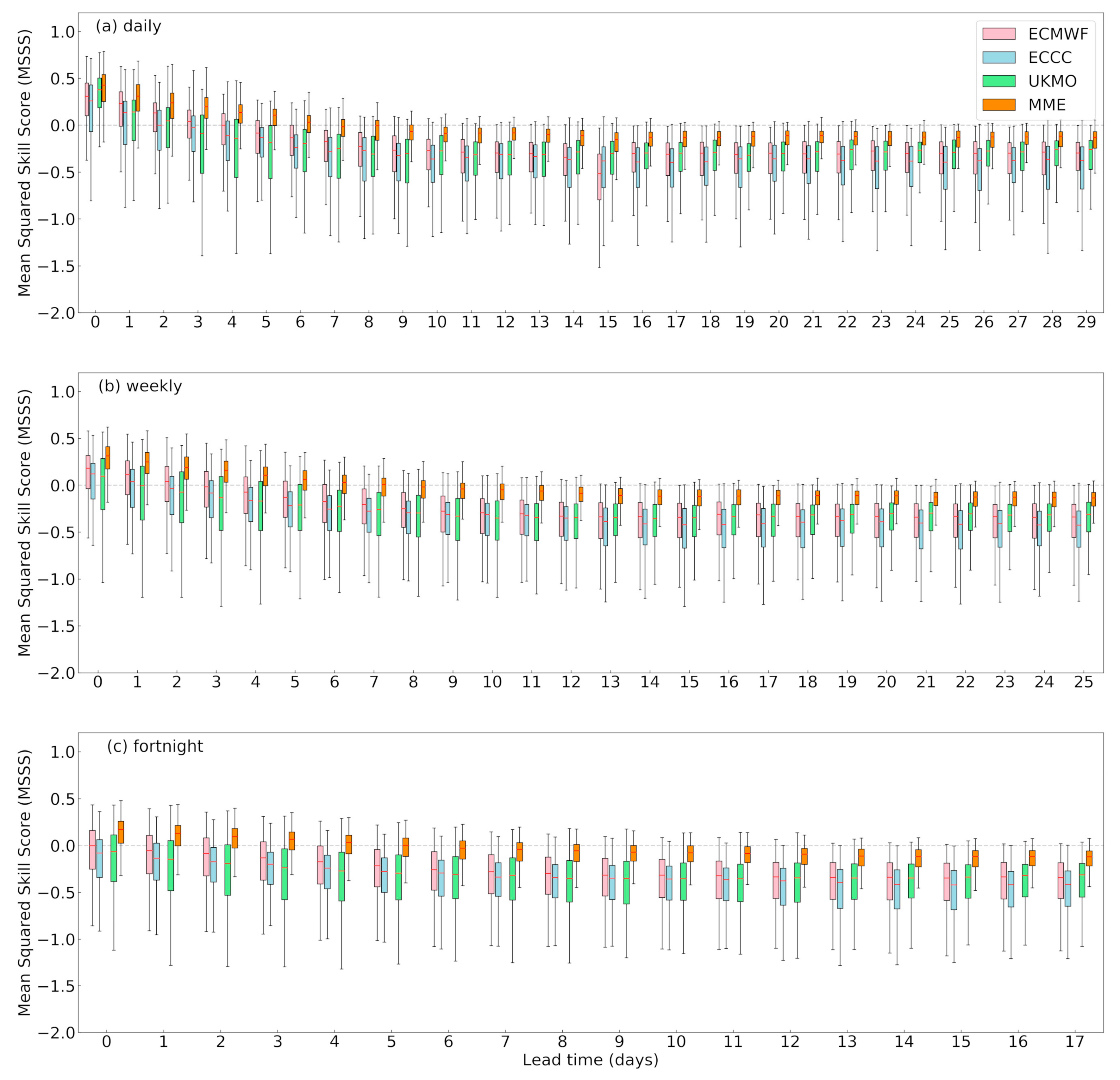

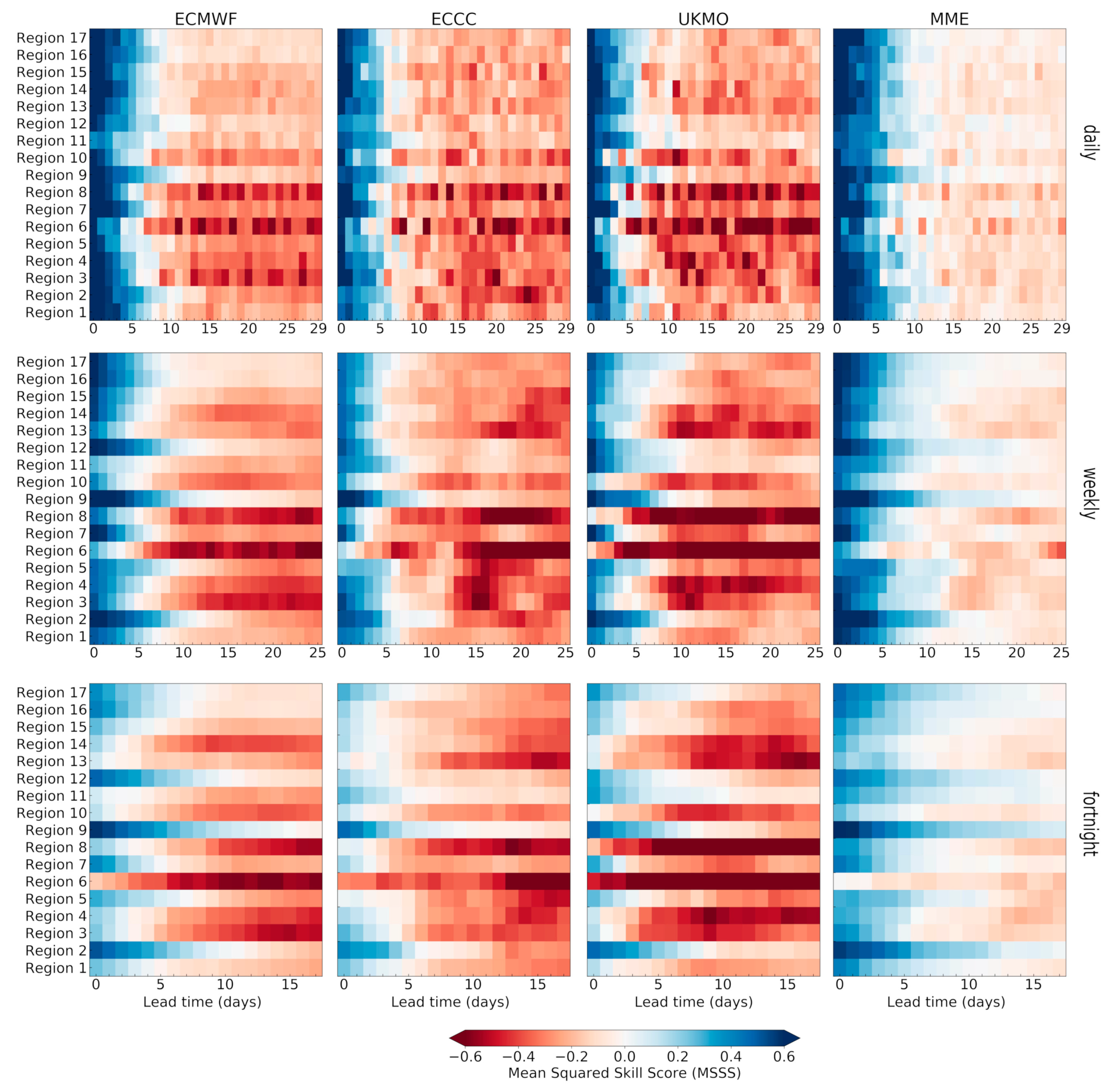

3.1. Deterministic Evaluation

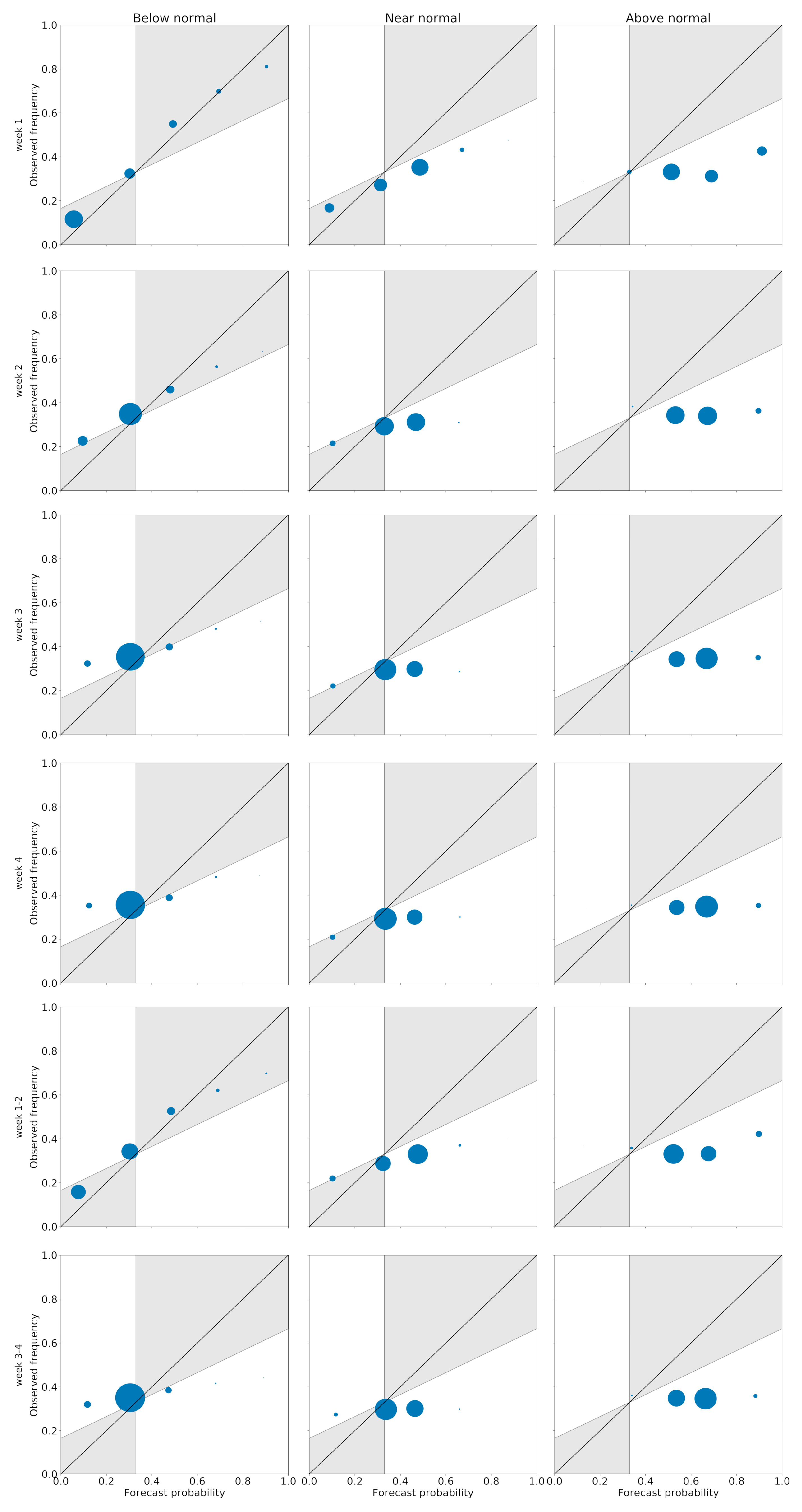

3.2. Probabilistic Evaluation

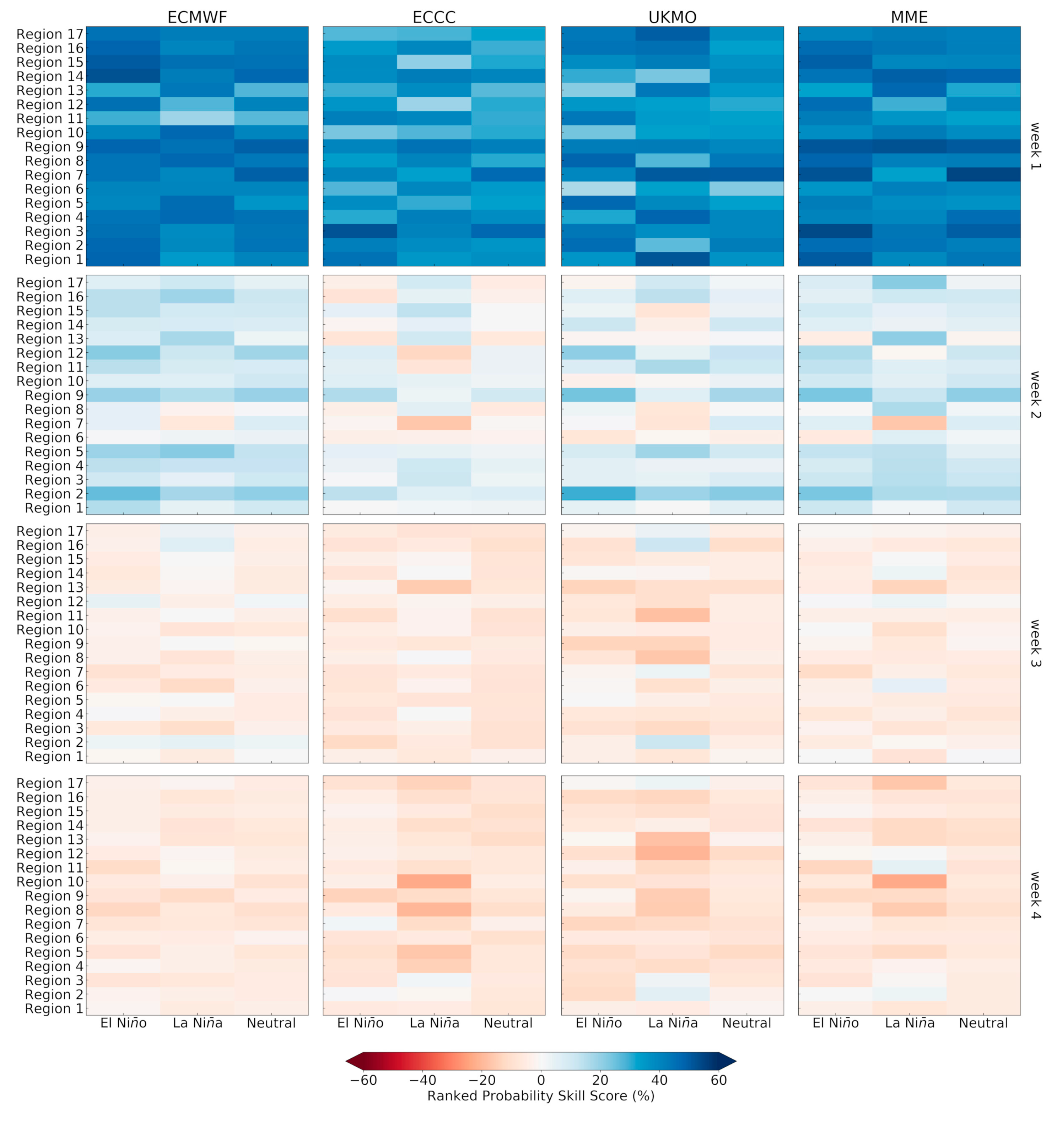

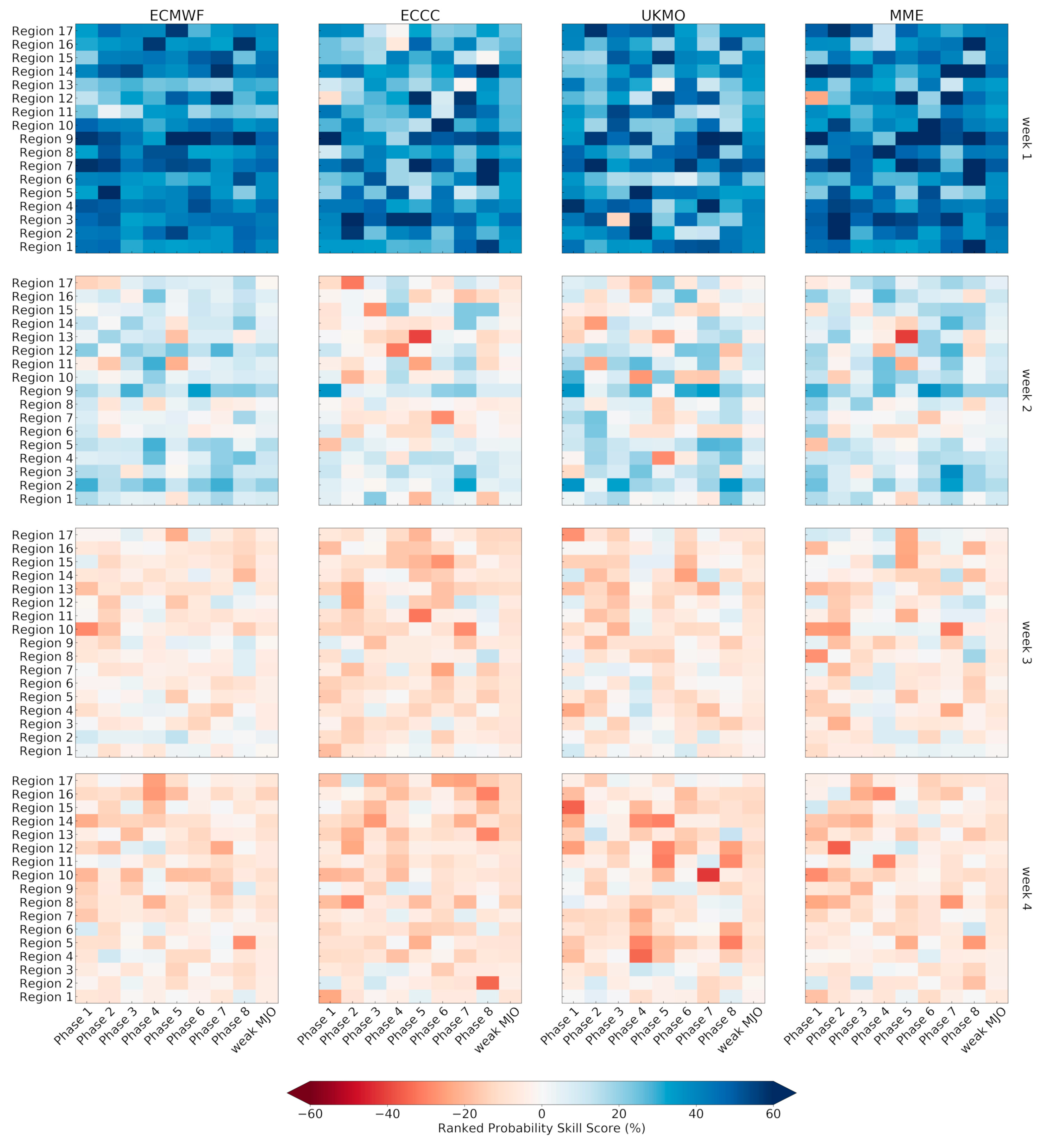

3.3. The Impact of ENSO and MJO on Sub-Seasonal Predictability

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| S2S Model | Time Range (Days) | Spatial Resolution | Hindcast Frequency | Hindcast Period | Ensemble Size | Ocean Coupling |
|---|---|---|---|---|---|---|
| ECMWF * | 46 | Tco639/Tco319, L91 | 2/week | Past 20 years | 11 | Yes |
| UKMO * | 60 | N216, L85 | 4/month | 1993–2017 | 7 | Yes |
| ECCC * | 32 | 0.45° × 0.45°, L40 | Weekly | 1998–2018 | 4 | No |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, Y.; Wu, Z.; He, H.; Lu, G. Deterministic and Probabilistic Evaluation of Sub-Seasonal Precipitation Forecasts at Various Spatiotemporal Scales over China during the Boreal Summer Monsoon. Atmosphere 2021, 12, 1049. https://doi.org/10.3390/atmos12081049
