Abstract

The Cloud–Aerosol Lidar with Orthogonal Polarization (CALIOP), on board the Cloud–Aerosol Lidar and Infrared Pathfinder Satellite Observations (CALIPSO) platform, is an elastic backscatter lidar that has been providing vertical profiles of the spatial, optical, and microphysical properties of clouds and aerosols since June 2006. Distinguishing between feature types (i.e., clouds vs. aerosols) and subtypes (e.g., ice clouds vs. water clouds and dust aerosols vs. smoke) in the CALIOP measurements is currently accomplished using layer-integrated measurements acquired by co-polarized (parallel) and cross-polarized (perpendicular) 532 nm channels and a single 1064 nm channel. Newly developed deep machine learning (DML) semantic segmentation methods can combine observations from multiple channels with texture information to recognize patterns in data. Instead of focusing on a limited set of layer-integrated values, our new DML feature classification technique uses the full scope of range-resolved information available in the CALIOP attenuated backscatter profiles. In this paper, SegNet, a fast and efficient convolutional neural network (CNN), is used to distinguish aerosol subtypes directly from the CALIOP profiles. The DML method is a 2D range bin-to-range bin aerosol subtype classification algorithm. We compare our new DML results to the classifications generated by CALIOP’s 1D layer-to-layer operational retrieval algorithm. These two methods, which take distinctly different approaches to aerosol classification, agree in over 60% of the comparisons. Higher levels of agreement are found in homogeneous scenes containing only a single aerosol type (e.g., marine or stratospheric aerosols). Disagreement between the two techniques increases in regions containing mixtures of different aerosol types. 
The multi-dimensional texture information leveraged by the DML method shows advantages in differentiating between aerosol types based on their classification scores, as well as in distinguishing vertical distributions of aerosol types within individual layers. However, untangling mixtures of aerosol subtypes is still challenging for both the DML and operational algorithms.

1. Introduction

The Cloud–Aerosol Lidar and Infrared Pathfinder Satellite Observations (CALIPSO) mission, launched in April 2006, is a collaboration between the National Aeronautics and Space Administration (NASA) and the Centre National d’Études Spatiales (CNES) [1]. The primary instrument onboard CALIPSO is the Cloud–Aerosol Lidar with Orthogonal Polarization (CALIOP), which has now collected more than 14 years of altitude-resolved backscatter measurements (total backscatter at 1064 nm, and backscatter at 532 nm measured separately in polarization planes oriented parallel and perpendicular to the polarization plane of the outgoing laser pulse). The calibrated lidar signals are analyzed to detect atmospheric layer boundaries, classify layers by type (i.e., cloud vs. aerosol) and subtype, and retrieve their optical properties [2]. This unprecedented global record of the vertical distributions of clouds and aerosols in the Earth’s atmosphere is continually released for public use through NASA’s Atmospheric Sciences Data Center (ASDC) at the Langley Research Center.

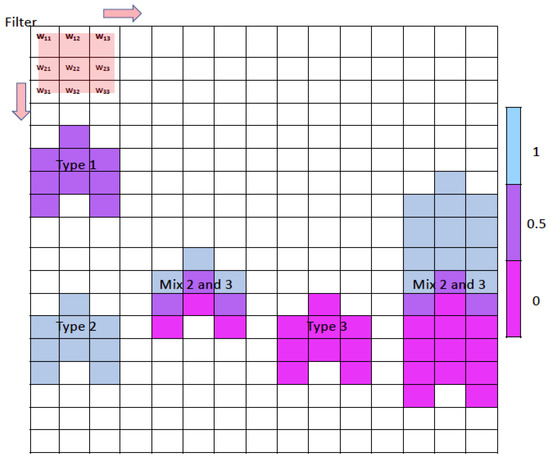

At present, the CALIOP operational scene classification algorithms (COSCAs) rely on geospatial information and spectrally resolved layer-integrated properties [3,4,5]. Because the layer detection scheme averages data both horizontally and vertically, detailed intra-layer spatial information is not exploited, leading to uncertainties in the type and subtype assignments. Uncertainties can also arise from errors in estimating the attenuation corrections that are required for secondary layers lying beneath other layers (e.g., boundary layer aerosols beneath cirrus clouds). Many of the shortcomings in the current scheme can potentially be resolved by jointly considering both spectral and textural information [6]. For any sensor, the scope of the spectral information available depends on the instrument’s optical design (e.g., the number of spectral channels, polarization sensitivity, etc.). Spectral differences in scattering behaviors provide clues to layer type. Unlike spectral information, textural information depends on the instrument’s spatial resolution and signal-to-noise ratio (SNR). Additional classification clues can be gleaned from the differing spatial structures exhibited by the scattering from different feature types. An example illustrating the importance of texture information is given below in Figure 1, which shows the schematic of a 2D image consisting of an array of pixels. A set of filters measuring 3 × 3 (weights: $w_{i,j}$; biases: $b_{i,j}$) move across the image. Depending on the type of filter applied, convolutions of the filter with the image pixels can retrieve the mean value of any feature within the 3 × 3 “super pixel” (i.e., $w_{i,j} = 1$ and $b_{i,j} = 0$, up to a normalization factor of 1/9), the horizontal (or vertical) gradient of these features (i.e., $w_{1,j} = 1$, $w_{2,j} = 0$, $w_{3,j} = -1$ and $b_{i,j} = 0$), or the boundaries of the features within the super pixel (i.e., $w_{i,j} = 1$ except $w_{2,2} = 0$, and $b_{i,j} = 0$). 
The fine structural details provided by this texture information can distinguish between different feature types that are classified as the same type according to the layer integrated values used by the COSCAs. For example, the COSCA layers shown in the far right of Figure 1 comprise multiple feature types. The COSCAs identify these layers as being a single type, based on integrated values calculated over the layer vertical extent. However, when CNN texture information is considered, the vertical transitions between different types can be identified within a single COSCA layer. This texture information, therefore, allows us to better exploit lidar profile measurements for clustering and classification. By simultaneously evaluating multiple information sources, convolutional neural networks can identify complex spatial relationships that convey significantly more information than can be derived from simple statistical measurements such as means, standard deviations, and covariances [7].
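The filter operations described above can be illustrated with a minimal sketch. The 4 × 4 image values and the helper name `convolve3x3` are hypothetical and chosen only for illustration; the mean filter is written with the 1/9 normalization included, and, as is usual in CNNs, the filter is applied without flipping (a cross-correlation).

```python
# Minimal sketch: slide a 3x3 filter over a 2D image (no padding, stride 1).
# As in most CNN libraries, the kernel is not flipped (cross-correlation).
def convolve3x3(image, weights, bias=0.0):
    rows, cols = len(image), len(image[0])
    out = []
    for r in range(rows - 2):
        out_row = []
        for c in range(cols - 2):
            acc = bias
            for i in range(3):
                for j in range(3):
                    acc += weights[i][j] * image[r + i][c + j]
            out_row.append(acc)
        out.append(out_row)
    return out

# Hypothetical 4x4 "image": strong signal in the top two rows, weak below.
image = [
    [4.0, 4.0, 4.0, 4.0],
    [4.0, 4.0, 4.0, 4.0],
    [1.0, 1.0, 1.0, 1.0],
    [1.0, 1.0, 1.0, 1.0],
]

# All-ones weights scaled by 1/9 return the mean of each 3x3 super pixel.
mean_filter = [[1.0 / 9.0] * 3 for _ in range(3)]
# Rows of +1, 0, -1 return the gradient across the rows of the image.
row_gradient_filter = [[1.0] * 3, [0.0] * 3, [-1.0] * 3]

mean_map = convolve3x3(image, mean_filter)
gradient_map = convolve3x3(image, row_gradient_filter)
```

In this toy case the mean map smooths the transition between the two halves of the image, while the gradient map responds to the change in signal across rows, which is the kind of texture cue that a layer-integrated value discards.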

Figure 1.

Schematic of the role of texture information in classification. Vertically adjacent colored grid cells represent layers identified by the COSCAs. The colors of the grid cells indicate feature types, i.e., feature type 1 is shown in purple, type 2 in blue, and type 3 in magenta. In this example, some COSCA layers (e.g., on the far right) are seen to comprise a mix of different feature types.

While the COSCAs currently use coarsely resolved spatial and spectral information, these algorithms would undoubtedly benefit from the inclusion of additional signal characterizations already available in the data. Optically thick smoke layers provide a striking example. The differential absorption of smoke particles at 532 and 1064 nm results in a constant increase in the attenuated backscatter intensity ratio (i.e., color ratio) with increasing layer penetration depth [3]. However, the current generation of COSCAs does not use this intra-layer vertical texture information; instead, the cloud-aerosol discrimination (CAD) algorithm uses the layer mean color ratio, which effectively obscures the characteristic structure of the internal changes within smoke layers. Fortunately, deep machine learning (DML) can help exploit intra-layer vertical texture information, and as this paper will demonstrate, can help improve CALIOP aerosol subtyping.
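To make the smoke example concrete, the sketch below contrasts a layer-mean color ratio with a simple vertical trend estimate. The depth and color ratio values are invented for illustration, and the least-squares slope is only one possible way to quantify intra-layer structure; it is not the method used by the COSCAs or by the CNN.

```python
def layer_mean(values):
    """Arithmetic mean of the values within one layer."""
    return sum(values) / len(values)

def linear_slope(x, y):
    """Ordinary least-squares slope of y against x."""
    x_bar, y_bar = layer_mean(x), layer_mean(y)
    num = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    den = sum((xi - x_bar) ** 2 for xi in x)
    return num / den

# Hypothetical profiles: penetration depth into the layer (km) and color ratio.
depth_km = [0.0, 0.5, 1.0, 1.5, 2.0]
smoke_like = [0.4, 0.5, 0.6, 0.7, 0.8]    # color ratio rises with depth
uniform_like = [0.6, 0.6, 0.6, 0.6, 0.6]  # no vertical structure

smoke_mean = layer_mean(smoke_like)
uniform_mean = layer_mean(uniform_like)
smoke_slope = linear_slope(depth_km, smoke_like)
uniform_slope = linear_slope(depth_km, uniform_like)
```

Both hypothetical layers have the same mean color ratio, so a layer-mean test cannot separate them, whereas the vertical slope differs clearly between the two.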

During the recent rapid evolution of deep machine learning (DML) methods, the 2014 development of fully convolutional neural networks (CNNs) has allowed semantic segmentation algorithms to achieve operational maturity [8]. Since then, CNNs have been widely applied in different domains, including satellite remote sensing [9,10,11]. When applied to semantic segmentation tasks, CNNs can take full advantage of combined spectral and texture information for object recognition and classification. Several different semantic segmentation CNN architectures are available, including fully convolutional networks (FCNs) [8], U-Net [12], and SegNet, a deep encoder–decoder architecture for multi-class semantic segmentation developed by the Computer Vision and Robotics Group at Cambridge University, UK [13,14]. All of these neural network architectures include entangled encoder and decoder processes. The encoder typically uses some well-known building blocks, such as convolutional layers, down pooling, and rectified linear units (ReLU), to ignore fine textures within a feature while extracting coarser descriptive information. The decoder process semantically projects the discriminative features at a lower resolution (e.g., see Figure 2). The learning that results from the encoder process is then transferred back onto the pixel space at the original (higher) resolution, with each pixel being assigned to a class membership through the decoder. The decoder also uses some of the same building blocks used in the encoding process. Down pooling is used to increase the field of view (i.e., the number of pixels considered) and at the same time reduce the feature map resolution. This works best for classification, where the end goal is to find the presence of a particular class irrespective of the spatial location of the object. 
Thus, pooling is introduced after each convolution layer to enable the succeeding block to extract more abstract, class-salient features from the pooled features. The 3-dimensional convolutional layers convolve multiple 2-dimensional filters with the original image to derive different aspects of the neighborhood texture information within super-pixels, which are perceptually more meaningful. The convolutional process captures and retains low-level geometric information.
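As a small illustration of down pooling, the sketch below applies a 2 × 2 max pooling step (stride 2) to a hypothetical 4 × 4 feature map. The function name and the input values are assumptions made for this example only.

```python
def max_pool_2x2(feature_map):
    """2x2 max pooling with stride 2: halves the resolution in each dimension."""
    rows, cols = len(feature_map), len(feature_map[0])
    return [
        [
            max(
                feature_map[r][c], feature_map[r][c + 1],
                feature_map[r + 1][c], feature_map[r + 1][c + 1],
            )
            for c in range(0, cols - 1, 2)
        ]
        for r in range(0, rows - 1, 2)
    ]

# Hypothetical 4x4 feature map produced by a convolution layer.
feature_map = [
    [1, 3, 2, 0],
    [5, 2, 1, 1],
    [0, 0, 4, 2],
    [1, 2, 3, 6],
]

pooled = max_pool_2x2(feature_map)  # 2x2 map: [[5, 2], [2, 6]]
```

Each pooled value summarizes a 2 × 2 neighborhood, so a 3 × 3 filter applied after pooling effectively sees a 6 × 6 region of the original map, at the cost of losing the exact position of the maximum within each block.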

Figure 2.

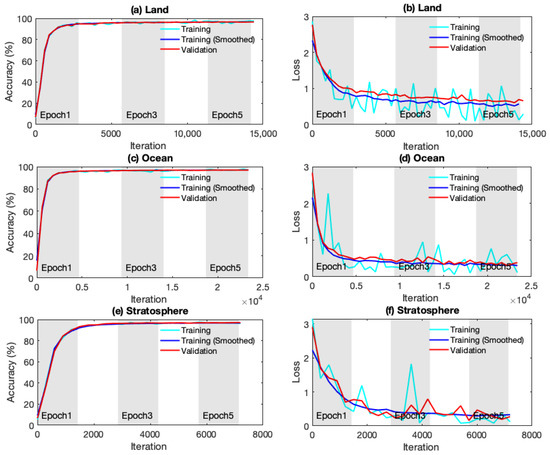

Training (cyan/blue) and validation (red) accuracy (left column) and loss (right column) against iteration or epoch over tropospheric land (a,b), tropospheric ocean (c,d), and in the stratosphere (e,f).

Because semantic segmentation neural networks incorporate neighborhood texture information, they offer new opportunities for improving atmospheric feature classification using 2-D lidar imagery. In this work, we have created a SegNet-like architecture using the routines provided in the MATLAB computer vision and deep learning toolboxes [15,16]. We subsequently used the trained CNN to demonstrate the ability of semantic segmentation to improve the classifications currently being delivered by the COSCAs. This study, thus, provides an initial investigation of feature classification within a 2-D framework that explicitly includes spatial texture information, and investigates the role of spatial texture information in the classification of features present in lidar backscatter signals. In Section 2, we briefly review the architecture of the MATLAB-SegNet model and describe the CALIPSO aerosol subtyping scheme incorporated in the COSCAs. Section 3 describes the preparation of data sampling and training. Section 4 compares test results derived from our trained network with the corresponding COSCA results. In the last section, conclusions and perspectives are given.

2. Model Description

2.1. The Semantic Segmentation CNN

Semantic segmentation CNN architectures are characterized by sets of paired encoder and decoder processes. The goal of the encoders is to produce a low-dimensional representation of the inputs, while the decoder reconstructs the original input from the low-dimensional representation, mapping it back to the original high-resolution pixel space in order to learn the most representative structures contained within the data [13,14]. This sparse mapping is then convolved with trainable, multi-channel filters to capture low-level geometric information and generate an original resolution image in which each pixel is fully classified. The convolution operation can be thought of as operating a spatial “polarimeter” (instead of angularly filtering, convolutional kernels spatially filter the light) that extracts the fine resolution texture information used in the classification.

In this work, we choose the memory-efficient SegNet architecture to test the impact of 2-dimensional texture information on the classification. The MATLAB code, which uses the MATLAB computer vision and deep learning toolboxes, is conceptually identical to the Python version of SegNet shown in Figure 2 of [14]. The architecture includes five levels of paired encoders and decoders. The first two encoder levels each consist of two convolution + batch normalization + ReLU blocks (applied in that order within each block), followed by a max pooling step. The three remaining encoder levels are similar to the first two, except that three blocks are used rather than two. The configuration of the decoders is similar to that of the encoders, but with up-sampling replacing the max pooling step. The final layer of the network (i.e., occurring after the five encoder–decoder levels) applies a softmax function. We choose cross-entropy as our loss function to assign one of the CALIOP aerosol subtypes to each pixel in the input dataset. A class membership score is also assigned according to the calculated probabilities of belonging to each class. The final classification is determined by the highest probability (i.e., class membership score).
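The final softmax and cross-entropy steps can be sketched for a single pixel as follows. The three class names are a subset chosen for illustration and the raw network outputs (`logits`) are hypothetical; the sketch is not the MATLAB implementation used in this work.

```python
import math

def softmax(logits):
    """Convert raw network outputs into probabilities that sum to 1."""
    shift = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(v - shift) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(probabilities, true_index):
    """Cross-entropy loss for one pixel with a known true class."""
    return -math.log(probabilities[true_index])

class_names = ["marine", "dust", "elevated smoke"]  # illustrative subset
logits = [2.0, 1.0, 0.1]                            # hypothetical outputs

scores = softmax(logits)
best = max(range(len(scores)), key=scores.__getitem__)
label = class_names[best]          # final classification for this pixel
membership_score = scores[best]    # class membership score of that label
loss = cross_entropy(scores, true_index=0)
```

The label is the class with the highest probability, and the same probability serves as the class membership score; the scores of the remaining classes are retained and can be inspected when the winning score is low.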

2.2. CALIOP Aerosol Subtyping Algorithm

The CALIOP level 2 algorithm first identifies atmospheric layers using a threshold-based detection technique, using modeled molecular signals as a baseline [17]. After layers have been detected, the CALIOP CAD algorithm distinguishes clouds from aerosols using latitude, altitude, the 532 nm attenuated backscatter coefficients and volume depolarization ratios, and the attenuated total backscatter color ratios [3]. The version 4 COSCAs then identify seven tropospheric aerosol subtypes (marine, dust, polluted continental/smoke, clean continental, polluted dust, elevated smoke, dusty marine) and three stratospheric aerosol subtypes (polar stratospheric aerosol, volcanic ash, and sulfate/other classes).

The CALIOP aerosol subtyping scheme, described fully in [4], is a multi-path decision tree that determines aerosol subtypes based on a set of scalar values, which includes the local tropopause altitude and surface type, season, 532 nm integrated attenuated backscatter (γ′532) information, estimated particulate depolarization ratio (δp), and layer top and base altitudes. Differences in the lidar measured quantities (γ′532 and δp) arise from underlying differences in aerosol optical properties, such as the refractive indices and particle size distributions between subtypes [18]. Our SegNet classifier takes a vastly different approach. The inputs are 2-dimensional arrays (images) of height-varying 532 nm attenuated backscatter coefficients (β′532), volume depolarization ratios (δv), and attenuated total backscatter color ratios (χ′). The raw 2-D measurement data are normalized before being fed into training and testing processes. Layer top and base altitudes are separately specified as locations within the images. Unlike the COSCA aerosol subtyping module, we also include color ratios to exploit their potential utility in identifying smoke and other differentially absorbing aerosols (i.e., aerosols having very different extinction coefficients at 532 and 1064 nm).

3. Data Preparation and Training

3.1. Data Sample

Our primary training and validation dataset consists of three full months of CALIOP version 4.10 level 1B and level 2 vertical feature mask data (1435 orbits, both daytime and nighttime) acquired from July through September 2008. The training/validation split is 90:10. To ensure adequate representation of relatively rare injections of stratospheric aerosols, we augmented our initial training, validation, and testing dataset with additional data from several volcanic eruptions and high-altitude smoke events chosen from [4], Table 1, with additional cases from the Calbuco eruption (April 2015), the Sarychev eruption (June 2009), and two North American smoke events (July–August 2007 and July 2014). Half of the augmented volcanic eruption and smoke samples were randomly chosen and added to the training and validation dataset, and the other half were used for testing. Our test dataset consists of all data acquired from July through September 2010, plus half of the stratospheric volcanic and smoke cases mentioned above. Our ground truth for the training dataset (i.e., the correct feature classification map) was synthesized from the high-confidence classifications reported in the CALIPSO version 4 (V4) data products and augmented by expert judgement, as required, to correct obvious COSCA errors. Our inputs for the training dataset are the CALIOP signals (β′532, δv, and χ′) averaged horizontally to a 5 km (15 shots) along-track resolution in order to pair with the ground truth. The signals are then normalized to the 0–1 range for each channel individually and resized to uniform dimensions of 400 (altitude pixels) × 400 (along-track pixels) × 3 (channels) for training, validation, and testing.
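The horizontal averaging and per-channel normalization can be sketched as below. This is a simplified illustration: the function names are hypothetical, the profiles contain only two altitude bins, and min-max scaling over a single image is an assumption, since the text specifies only that each channel is normalized to the 0–1 range. The resizing step is omitted.

```python
def average_shots(profiles, shots_per_bin=15):
    """Average consecutive single-shot profiles into coarser along-track bins."""
    averaged = []
    for start in range(0, len(profiles) - shots_per_bin + 1, shots_per_bin):
        block = profiles[start:start + shots_per_bin]
        n_alt = len(block[0])
        averaged.append(
            [sum(p[k] for p in block) / shots_per_bin for k in range(n_alt)]
        )
    return averaged

def min_max_normalize(channel):
    """Scale one channel to the 0-1 range (assumed min-max scaling)."""
    flat = [v for row in channel for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        return [[0.0 for _ in row] for row in channel]
    return [[(v - lo) / (hi - lo) for v in row] for row in channel]

# Hypothetical input: 30 single-shot profiles with 2 altitude bins each.
single_shots = [[1.0, 3.0]] * 15 + [[2.0, 5.0]] * 15

averaged = average_shots(single_shots)   # two 15-shot (5 km) profiles
normalized = min_max_normalize(averaged)
```

Each of the three input channels would be processed in the same way before being stacked into a three-channel image.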

Table 1.

Confusion matrix (for July, August, and September 2010 plus stratospheric volcanic eruption and smoke cases) showing the mapping of aerosol subtypes from the CNN (columns) to the COSCAs (rows). The most frequently identified class in each row is highlighted in purple, and the second most frequently identified class is highlighted in blue.

3.2. Model Setup and Training

For the encoder, we chose the pretrained VGG16 [19] SegNet model weights and biases as initial training values. The initial learning rate was set to 0.001. We used a stochastic gradient descent with momentum (SGDM) optimizer [20], with the momentum set to 0.9. The maximum number of epochs was set to 5 and the mini-batch size was set to 4. To avoid overfitting, we applied data augmentation to the normalized lidar signals, with the augmentation parameters drawn randomly from a continuous uniform distribution.
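For readers unfamiliar with the optimizer, the sketch below shows one common formulation of the SGDM update, using the learning rate (0.001) and momentum (0.9) quoted above. The toy loss function and the function name `sgdm_step` are assumptions for illustration; the toolbox implementation may differ in detail.

```python
def sgdm_step(weights, gradients, velocity, learning_rate=0.001, momentum=0.9):
    """One SGDM update: the velocity blends the previous step with the new gradient."""
    new_velocity = [
        momentum * v - learning_rate * g for v, g in zip(velocity, gradients)
    ]
    new_weights = [w + v for w, v in zip(weights, new_velocity)]
    return new_weights, new_velocity

# Toy problem (hypothetical): minimize f(w) = w**2, whose gradient is 2 * w.
weights, velocity = [1.0], [0.0]
for _ in range(2):
    gradients = [2.0 * w for w in weights]
    weights, velocity = sgdm_step(weights, gradients, velocity)
```

After two steps the single weight has moved from 1.0 toward the minimum at 0, with the second step larger than the first because the velocity accumulates.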

Training was performed separately for data acquired in the stratosphere and for data acquired over land surfaces and oceans in the troposphere. Source locations can provide substantial clues about the formation regime of aerosols, and thus separate training over disjoint geographic locations provides an independent index for classifying aerosol subtypes.

Because the natural occurrence frequency of some aerosol subtypes is small compared to others, inputs to the CNN loss function are weighted to account for population size differences. The weight coefficients of the classes are calculated using the inverse of the population sample sizes. These weights prevent large biases in recognizing classes with small sample sizes, as large or small sample sizes now have equal chances to minimize the loss [21]. The weighting coefficients for different classes over land, ocean, and stratospheric atmosphere areas range between 0 and 1.
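A minimal sketch of inverse-frequency class weighting is given below. The pixel counts are invented, and normalizing the weights so that they sum to 1 is an assumption made here to keep them within the 0–1 range; the exact normalization used in this work is not specified.

```python
def inverse_frequency_weights(pixel_counts):
    """Weight each class by the inverse of its sample size, normalized to sum to 1."""
    inverse = {name: 1.0 / count for name, count in pixel_counts.items()}
    total = sum(inverse.values())
    return {name: value / total for name, value in inverse.items()}

# Hypothetical numbers of training pixels per class.
pixel_counts = {"marine": 8000, "dust": 4000, "volcanic ash": 1000}

class_weights = inverse_frequency_weights(pixel_counts)
```

With these counts the rare volcanic ash class receives the largest weight (8/11), so misclassifying an ash pixel contributes more to the weighted loss than misclassifying a marine pixel.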

Figure 2 illustrates the training and validation accuracy and loss values for processes over three different geographic regions. As expected, training and validation classification accuracy increases and loss function values decrease as the number of iterations increases. In all cases, the model converges to stable accuracies greater than 90% after ~3000 iterations. Once the loss values have stabilized, additional iterations do not further improve classification accuracy. After training, the final weight coefficients and biases were saved for use in the testing and validation phases. Validation accuracies are similar to training accuracies, while validation loss values are slightly higher than the training values. The convergence of both loss and accuracy in the training and validation datasets shown in Figure 2 suggests that the chosen neural network model and hyperparameter settings are suitable for our aerosol classification task.

4. Test and Validation

Validating the CALIOP aerosol typing results is a challenging task, which to date has been attempted by relatively few researchers [22,23,24,25,26,27,28]. Previous studies have approached this task by determining how well certain combinations of the CALIOP extrinsic data, together with relevant ancillary information, can be mapped into separate, sometimes different families of aerosol types, defined by other researchers and based on other measurements and class definition criteria. Because the aerosol class definitions, instrument capabilities (e.g., CALIOP vs. AERONET), and spatial and temporal coverages are often quite different, these studies can fail to reach satisfactory, dispositive conclusions. Consequently, in this paper we have chosen not to reproduce previous validation studies by substituting our CNN algorithm for the COSCAs. We focus instead on documenting the improvements made in the spatial coherence and contiguousness seen within individual layers and quantifying the increased spatial resolution at which the current CALIOP aerosol types are identified. Future comparisons to altitude-resolved aerosol typing datasets (e.g., derived from Raman or high spectral resolution lidars) should shed additional light on the performance gains that can be realized by adopting machine learning algorithms for CALIOP aerosol typing.

4.1. Case Studies

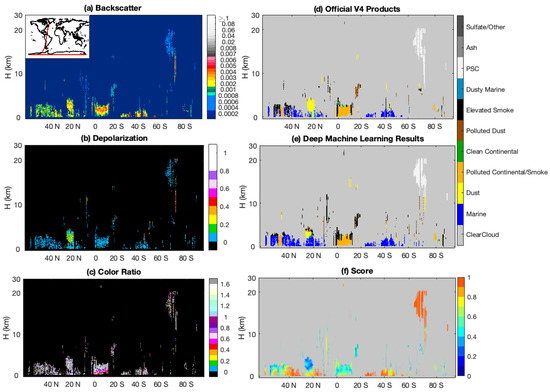

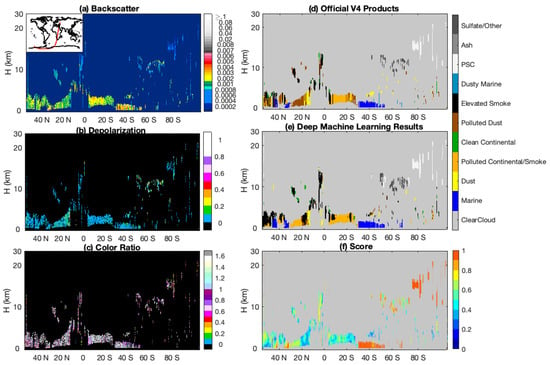

For an initial demonstration of the performance of our trained CNN, we use the nighttime portion of an orbit from 17 August 2010, beginning at 05:56:13 UTC. Figure 3a–c shows, respectively, the β′532, δv, and χ′ measurements that comprise the algorithm inputs. Figure 3d shows the aerosol subtypes assigned in the CALIOP V4 data products, while Figure 3e shows the subtypes assigned by our DML method. The results derived by the two methods are seen to be quite similar. The agreement between the V4 data products and the CNN in clouds and clear skies is ~98%, while the agreement within aerosol layers falls to 73%. This disparity in aerosol subtyping is expected. While the V4 COSCAs assign only a single subtype to all pixels within a layer, the CNN is not similarly constrained; it can make multiple subtype assignments within a layer. The added flexibility of the CNN is especially noticeable below ~5 km at ~20° N, where the COSCAs identify a Saharan dust layer transported over the Caribbean Sea that extends from ~4 km to the surface. The CNN classifications in this region show a more physically plausible transition from pure dust in the upper portion of the layer to an intermediate dusty marine mix and finally to a surface-attached marine aerosol in the marine boundary layer. This transitional typing is possible because the textural information encoded in the lower altitudes of the aerosol layer helps sway the subtyping decision. Other obvious differences between the two methods are cross-classifications between the polluted continental/smoke and elevated smoke classes and between the elevated smoke and polluted dust classes. Both of these are reasonable because these subtypes are frequently found in close proximity to one another and because mixtures of smoke and dust can frequently be misclassified as one of the dominant aerosol classes. 
We also notice that the COSCA products can exhibit abrupt classification discontinuities, where an otherwise unexpected aerosol class is identified within an extended horizontal plume of another class. An example of this behavior occurs around 42° N in Figure 3, where a column of smoke is embedded in a large area of marine aerosols. While this abrupt change may indicate aerosol mixing, a more likely explanation is intermittent misclassification. In contrast, the CNN’s use of texture information tends to remove these discontinuous classes, thus enabling the CNN to predict a plume of aerosol of the same species (marine) in this same region.

Figure 3.

CALIOP measurements and aerosol classification results for nighttime data acquired on 17 August 2010, starting from 05:56:13 UTC. (a–c) The 532 nm backscatter intensity, volume depolarization, and attenuated total backscatter color ratio measurements, respectively. The CALIPSO ground track is overlaid in the upper-left corner of panel (a). (d) The aerosol classifications reported by the CALIOP operational scene classification algorithms. (e) The classifications determined by the trained convolutional neural network. (f) The CNN classification scores.

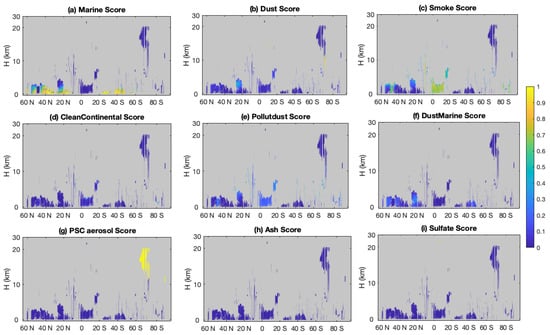

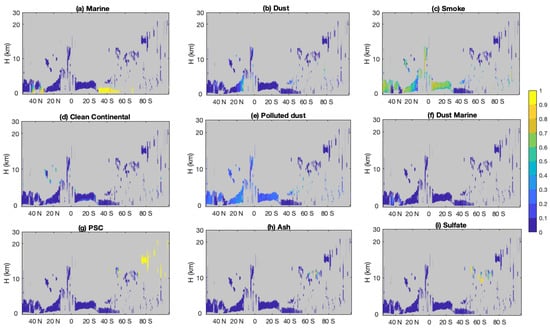

The COSCAs provide only a binary assessment of aerosol classification confidence; any classification is judged as either “confident” or “not confident”. In contrast, the CNN algorithm returns floating point classification scores between 0 and 1 associated with the final categorical label (i.e., the assigned aerosol type; see Figure 3f), as well as the classification scores (probabilities) associated with belonging to each of the other aerosol subtypes (Figure 4). As shown in Figure 3f and Figure 4a,g, the polar stratospheric aerosols and marine aerosols are identified with high confidence scores, while other aerosol types have low confidence scores, possibly related to mixtures of aerosol types being present or to unintended overlap in the class definitions. The smoke occurring between 10° S and 20° S should be confidently classified based on the increase in color ratio with increasing penetration depth into the layer. However, low confidence scores appear here due to classification confusion between the elevated smoke and smoke regimes. If we combine the probabilities of these two regimes, as shown in Figure 4c, the scores increase, indicating higher confidence that smoke is the correct classification. Low classification scores also appear for dust around 20° N. In the upper part of this plume, dust is confused with smoke and polluted dust (Figure 4b,c,e), while in the lower part, dust is confused with dusty marine and marine types (Figure 4a,b,f). According to these scores, the CNN method suggests that aerosols may be mixing away from their local sources in this area: Saharan dust could be transported across the Atlantic Ocean, mixing with smoke transported from the Amazon basin to the south and with marine aerosols lofted from underlying layers.
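Combining the scores of the two smoke classes amounts to summing their probabilities for each pixel, as in the sketch below. The per-pixel scores and the merged class name are hypothetical and serve only to illustrate the arithmetic.

```python
def merge_classes(scores, members, merged_name):
    """Sum the probabilities of the listed classes into one merged class."""
    merged = {name: p for name, p in scores.items() if name not in members}
    merged[merged_name] = sum(scores[name] for name in members)
    return merged

# Hypothetical softmax scores for one pixel inside a smoke plume.
pixel_scores = {
    "dust": 0.20,
    "polluted continental/smoke": 0.35,
    "elevated smoke": 0.40,
    "polluted dust": 0.05,
}

merged_scores = merge_classes(
    pixel_scores,
    members=["polluted continental/smoke", "elevated smoke"],
    merged_name="smoke (combined)",
)
```

In this example neither smoke class alone exceeds 0.40, yet the combined smoke score is 0.75, which mirrors the increase in confidence seen when the two smoke regimes are merged.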

Figure 4.

CNN classification scores assigned to all aerosol classes ((a): marine aerosols; (b): dust; (c): smoke; (d): clean continental; (e): polluted dust; (f): dusty marine aerosols; (g): PSC aerosols; (h): ash; (i): sulfate or other stratospheric aerosols) for nighttime data acquired on 17 August 2010, starting at 05:56:13 UTC, as shown in Figure 3. Note that smoke and elevated smoke are combined in panel (c).

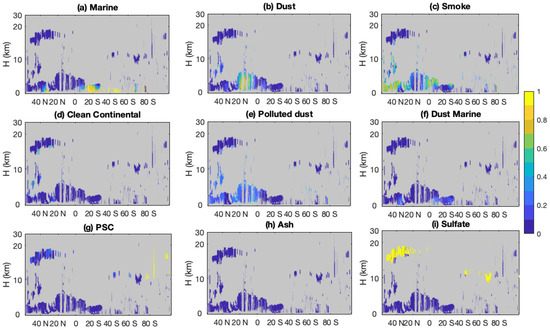

Another case, for the nighttime portion of an orbit from 19 June 2011 starting at 23:53:12 UTC, is shown in Figure 5 and Figure 6. The agreement between the V4 COSCAs and the CNN in clouds and clear skies is ~96%, while the agreement within aerosol layers falls to 60%; both values are lower than those for the case shown in Figure 3. The disagreement is mainly due to different classifications of the dust, polluted dust, and polluted continental/smoke classes in the troposphere. Between 0° and 20° S, over central Africa where fires frequently occur during this season, the COSCAs and the CNN agree for most of the layers classified as polluted continental/smoke. There is slight disagreement beyond 20° S near the Namib Desert, where the COSCAs show a mixture of dust and smoke. The CNN classifies these aerosols as smoke, perhaps because the training dataset contains relatively few samples of mixtures of dust and smoke in this region. The fact that smoke can have elevated depolarization ratios also presents challenges for both algorithms in separately identifying dust, smoke, and mixtures of the two.

Figure 5.

The same information is shown as in Figure 3 except for nighttime data acquired on 19 June 2011, starting at 23:53:12 UTC. (a–c) The 532 nm backscatter intensity, volume depolarization, and attenuated total backscatter color ratio measurements, respectively. The CALIPSO ground track is overlaid in the upper-left corner of panel (a). (d) The aerosol classifications reported by the CALIOP operational scene classification algorithms. (e) The classifications determined by the trained convolutional neural network. (f) The CNN classification scores.

Figure 6.

CNN classification scores assigned to all aerosol classes ((a): marine aerosols; (b): dust; (c): smoke; (d): clean continental; (e): polluted dust; (f): dusty marine aerosols; (g): PSC aerosols; (h): ash; (i): sulfate or other stratospheric aerosols) for nighttime data acquired on 19 June 2011, starting at 23:53:12 UTC, as shown in Figure 5.

Over the northern hemisphere, smoke is detected beyond 40° N by both algorithms, although the color ratios do not show the typical transition pattern of smoke. Between 20° N and 40° N, the COSCAs classify the plume as a mixture of smoke and dust. The CNN classifies it as polluted continental/smoke, but assigns low classification scores to the features (Figure 5f). These low scores (Figure 6b,c,e) may indicate a possible mixing of smoke and dust. Again, this is a complicated case due to the varying mixture of smoke and dust. The lack of well-defined boundaries separating dust, smoke, and mixtures of the two types, combined with noise in the lidar backscatter signals, can lead the CNN to seemingly erratic and erroneous classifications. Additional in situ measurements in these regions could provide the ground truth information needed to better define the class boundaries for these three subtypes, and hence improve future CNN training.

For marine aerosols, the two algorithms generally agree on the classification. An exception occurs beyond 60° S, where the COSCAs classify the features as dusty marine while the CNN classifies them as marine, most likely because of the CNN’s use of texture information and the smaller number of dusty marine samples in the training dataset for this region. In the stratosphere, volcanic ash layers from the June 2011 Puyehue-Cordón Caulle eruption [29] are detected above 10 km from 40° S–70° S. The COSCAs and the CNN agree on the ash classification for much of the plume. They also agree on PSC and sulfate/other classifications over the southern hemisphere.

A third case, for the nighttime portion of an orbit from 14 July 2011 starting at 00:42:02 UTC, is shown in Figure 7 and Figure 8. The agreement between the V4 COSCAs and the CNN in clouds and clear skies is ~96%, while the agreement within aerosol layers is 81%. The disagreement is mainly due to confusion in classifying dusty marine and marine + smoke mixtures in the troposphere. Around 40° N and 20° S, the aerosols near the ocean seem to be a mix of smoke and marine classes. The COSCAs classify these layers as dusty marine, while the CNN classifies them as pure marine. Because we did not define a marine + smoke class in either algorithm, neither method can correctly identify the aerosol type in this case. Again, it is worth noting that accurate identification of arbitrary mixtures of aerosol types remains an exceedingly difficult problem, irrespective of the algorithm(s) applied to the task.

Figure 7.

The information shown above is the same as in Figure 3 except for nighttime data acquired on 14 July 2011, starting from 00:42:02 UTC. (a–c) The 532 nm backscatter intensity, volume depolarization, and attenuated total backscatter color ratio measurements, respectively. The CALIPSO ground track is overlaid in the upper-left corner of panel (a). (d) The aerosol classifications reported by the CALIOP operational scene classification algorithms. (e) The classifications determined by the trained convolutional neural network. (f) The CNN classification scores.

Figure 8.

CNN classification scores assigned to all aerosol classes for nighttime data acquired on 14 July 2011, starting at 00:42:02 UTC, as shown in Figure 7: (a) marine aerosols; (b) dust; (c) smoke; (d) clean continental; (e) polluted dust; (f) dusty marine aerosols; (g) PSC aerosols; (h) ash; (i) sulfate or other stratospheric aerosols.

4.2. Statistical Comparisons

Independent characterization of the CNN was conducted using unexamined data from the months of July, August, and September 2010. Aerosol subtypes assigned by our CNN are compared to the assignments reported in the V4 data products in the confusion matrix shown in Table 1. Each cell reports an occurrence frequency (as a percentage) that maps aerosol subtypes from the CNN (columns) to the COSCAs (rows). These frequencies are normalized so that the sum of each row is 100%. As shown in Table 1, we again see that the agreement between the two methods in aerosol-free conditions is ~98%, while the overall agreement within aerosol layers is ~62%. For stratospheric aerosols, the two methods agree in ~87% of all cases. In the troposphere, agreement is higher over oceans (~62%) than over land (~57%). This difference is expected. Over land, numerous sources of natural and anthropogenic aerosols are frequently mixed during transport, resulting in stratified layers containing different subtypes. While these subtypes can be correctly identified by the CNN, the COSCAs are constrained to classify each layer as homogeneous. Globally, marine and dust are the most frequently occurring aerosol subtypes [4], and the agreement between the two methods for these subtypes is reasonably high at ~78% and ~64%, respectively. Relatively good agreement is also seen for clean continental (~67%), polluted continental/smoke (~75%), and all the stratospheric aerosol types.
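The row normalization described above can be sketched as follows. This is an illustrative example only: the function names, the three-class subset, and the sample counts are hypothetical and do not reproduce Table 1.

```python
from collections import Counter
from typing import Dict, List, Tuple


def row_normalized_confusion(
    pairs: List[Tuple[str, str]], classes: List[str]
) -> Dict[str, Dict[str, float]]:
    """Confusion matrix in percent from (reference, predicted) label pairs.

    Rows are reference labels, columns are predicted labels, and every
    non-empty row sums to 100.
    """
    counts = Counter(pairs)
    table: Dict[str, Dict[str, float]] = {}
    for ref in classes:
        row_total = sum(counts[(ref, pred)] for pred in classes)
        table[ref] = {
            pred: (100.0 * counts[(ref, pred)] / row_total if row_total else 0.0)
            for pred in classes
        }
    return table


def overall_agreement(pairs: List[Tuple[str, str]]) -> float:
    """Percentage of samples on which the two labels coincide."""
    return 100.0 * sum(ref == pred for ref, pred in pairs) / len(pairs)


# Made-up samples: (reference label, predicted label).
classes = ["marine", "dusty marine", "dust"]
samples = (
    [("marine", "marine")] * 8
    + [("marine", "dusty marine")] * 2
    + [("dusty marine", "marine")] * 2
    + [("dusty marine", "dusty marine")] * 2
    + [("dusty marine", "dust")] * 1
    + [("dust", "dust")] * 5
)

table = row_normalized_confusion(samples, classes)
overall = overall_agreement(samples)
```

Note that the overall agreement is weighted by the number of samples in each class, so it generally differs from the unweighted mean of the diagonal entries of the row-normalized table.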

Subtyping mismatches between the two methods may simply indicate the lack of crisp separation between the CALIOP aerosol classes. Alternatively, mismatches may highlight those areas where either the COSCAs or the CNN (or both) have wrongly classified the aerosol. For example, while the agreement for marine aerosols is high (~78%), a substantial portion (~20%) of the COSCA-identified marine aerosols are classified as dusty marine by the CNN. In other words, ~97% of the marine class identified by the V4 COSCAs is classified as marine or dusty marine by the CNN. Similarly, while the agreement for the dusty marine class is low (~37%), ~43% of the COSCA dusty marine samples were identified as marine by the CNN and another ~9% as pure dust. Together, these cases account for ~90% of the COSCA-identified dusty marine aerosols. We attribute these subtyping differences to the incorporation of textural information by the CNN algorithm. More importantly, the CNN has the ability to identify multiple subtypes within a single layer.

While the agreement for dust cases is relatively high (~64%), we were initially surprised that it was not a good deal higher, as dust layers are readily identified by their high values of δp. However, upon reflection, it becomes clear that this lower-than-expected agreement arises from a fundamental difference in the depolarization ratios used by the two methods: the COSCAs use the particulate depolarization ratio, δp, whereas the CNN uses the volume depolarization ratio, δv. While δp is an intrinsic aerosol property, δv is an extrinsic property that depends on the aerosol concentration within a scattering volume. For low concentrations of dust, δv will be uniformly lower than δp. Because the COSCAs use layer-integrated properties, they can derive reliable estimates of δp from δv using an estimate of the overlying attenuation due to clouds and aerosols [4]. The disparity in dust classifications arises because, unlike the COSCAs, the current CNN architecture does not incorporate an in-line extinction solver that operates on a pixel-by-pixel basis to propagate attenuation corrections into underlying but unclassified pixels or signals. Devising such an intricate companion algorithm is a highly worthy goal. However, it is not immediately clear how it can be accomplished and integrated into the existing CNN framework. (One complication is that adding in-line attenuation corrections will introduce potentially large changes into the texture of the underlying image from one CNN iteration to the next.) This task, thus, lies in the realm of future research that is well beyond the scope of our current investigation.
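To illustrate why δv falls below δp at low aerosol concentrations, the sketch below mixes molecular and particulate backscatter in a single scattering volume, with each depolarization ratio defined as perpendicular over parallel backscatter. The backscatter ratio `scattering_ratio` (total over molecular backscatter) and the molecular depolarization ratio `delta_m` are symbols introduced here for illustration, and the default `delta_m` is an assumed value rather than one quoted in this paper. The sketch ignores attenuation and treats the backscatter ratio as known.

```python
def volume_depolarization(
    delta_p: float, scattering_ratio: float, delta_m: float = 0.0036
) -> float:
    """Volume depolarization ratio of a molecule + particle mixture.

    delta_p          particulate depolarization ratio (perpendicular / parallel)
    scattering_ratio (molecular + particulate) / molecular total backscatter, >= 1
    delta_m          molecular depolarization ratio (assumed illustrative value)
    """
    if scattering_ratio < 1.0:
        raise ValueError("scattering_ratio must be >= 1")
    beta_m = 1.0                       # molecular backscatter, arbitrary units
    beta_p = scattering_ratio - 1.0    # particulate backscatter in the same units
    # Split each total backscatter into perpendicular and parallel components.
    perpendicular = beta_m * delta_m / (1.0 + delta_m) + beta_p * delta_p / (1.0 + delta_p)
    parallel = beta_m / (1.0 + delta_m) + beta_p / (1.0 + delta_p)
    return perpendicular / parallel


# A dust-like particulate depolarization ratio at increasing aerosol loading.
delta_p_dust = 0.30
thin = volume_depolarization(delta_p_dust, scattering_ratio=1.2)
moderate = volume_depolarization(delta_p_dust, scattering_ratio=3.0)
dense = volume_depolarization(delta_p_dust, scattering_ratio=10.0)
```

With these inputs the volume depolarization ratio rises from roughly 0.04 for the thin layer toward the particulate value as the loading increases, so a classifier that sees only δv receives a much weaker dust signature in tenuous layers.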

Approximately 9% of the V4 COSCA dust layers are classified as polluted dust by the CNN and 10% are classified as smoke. This confusion of classifications is likely due to adjacent source locations that may lead to mixing of different aerosol types during transport, as explained in Section 4.3. Because the COSCAs cannot identify mixed types within a single layer, the algorithms are forced to choose between different discrete options. This suboptimal partitioning could bias the characterization of the truth dataset.

For the polluted continental/smoke class, agreement between the V4 COSCAs and the CNN is 74.7%. An additional 9.9% of the COSCA-classified polluted continental/smoke aerosols are classified as elevated smoke by the CNN, bringing the overlap for the two smoke classes to ~85%. However, when classifying layers as elevated smoke, the two methods agree only 54.6% of the time. The agreement for the clean continental subtype is 67%. The poorest agreement is found for the polluted dust class at only 12.4%. This class is defined as a mixture of smoke and dust, with characteristic properties derived from a cluster analysis of AERONET data [30]. Particularly over Africa, where dust and smoke sources are geographically close, local meteorology can generate arbitrary mixtures of the two types that are not well-represented by the idealized model properties. This lack of specificity in the class definition makes it difficult to identify reliable exemplars for use in the training dataset. Consequently, as seen in Table 1, the CNN has difficulty distinguishing CALIOP’s polluted dust class from the dust, clean continental, and smoke classes. In addition to the difficulties introduced by an overly broad CALIOP class definition, some fraction of this classification confusion could be due to the CNN’s reliance on δv rather than δp as a discriminator for non-spherical particles.

In the stratosphere, 97% of polar stratospheric aerosols are correctly identified by the CNN when compared to the COSCA classifications. While volcanic ash is correctly identified more than 56% of the time, this type is also frequently misclassified as sulfate (28.8%) or high-altitude smoke (8.7%). This is not entirely unexpected, as stratospheric sulfate and ash layers frequently originate from the same sources (i.e., volcanic eruptions), with differences in layer subtyping likely being related to the age of the volcanic plumes. When classifying sulfates, the COSCAs and the CNN agree 78% of the time, with most of the differences being classified as smoke by the CNN. The differences in the sulfate/other subtype could also arise from the COSCA class definition, which assigns all stratospheric features for which the 532 nm integrated attenuated backscatter is less than 0.001 sr−1 to the sulfate/other class. The CNN, on the other hand, utilizes texture information even when classifying these very weakly scattering stratospheric layers. The overall agreement between the two methods in the stratosphere is ~87%.
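The threshold rule mentioned above for weakly scattering stratospheric layers can be sketched as a simple integral test. This is an illustration under stated assumptions, not the operational code: the trapezoidal integration, the function names, and the sample profile are hypothetical, and the operational computation of the integrated attenuated backscatter may include steps that are not described here. Only the 0.001 sr−1 threshold comes from the text.

```python
from typing import Sequence

IAB_THRESHOLD_SR = 0.001  # sr^-1, the threshold quoted in the text


def integrated_attenuated_backscatter(
    altitude_km: Sequence[float], beta_att: Sequence[float]
) -> float:
    """Trapezoidal integral of attenuated backscatter (km^-1 sr^-1)
    over altitude (km) between layer base and top; result in sr^-1."""
    if len(altitude_km) != len(beta_att) or len(altitude_km) < 2:
        raise ValueError("need two equally long profiles with at least 2 bins")
    total = 0.0
    for i in range(len(altitude_km) - 1):
        dz = abs(altitude_km[i + 1] - altitude_km[i])
        total += 0.5 * (beta_att[i] + beta_att[i + 1]) * dz
    return total


def is_weak_stratospheric_layer(
    altitude_km: Sequence[float], beta_att: Sequence[float]
) -> bool:
    """True if the layer falls below the threshold (sulfate/other by the rule above)."""
    return integrated_attenuated_backscatter(altitude_km, beta_att) < IAB_THRESHOLD_SR


# Made-up layer profiles between 18 and 19.5 km.
altitudes = [18.0, 18.5, 19.0, 19.5]
weak_layer = [0.0002, 0.0006, 0.0006, 0.0002]
strong_layer = [3.0 * value for value in weak_layer]

weak_iab = integrated_attenuated_backscatter(altitudes, weak_layer)
strong_iab = integrated_attenuated_backscatter(altitudes, strong_layer)
```

Because a rule of this kind depends on a single layer-integrated number, it assigns the same subtype to every range bin in the layer, whereas a pixel-wise classifier can still respond to structure within it.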

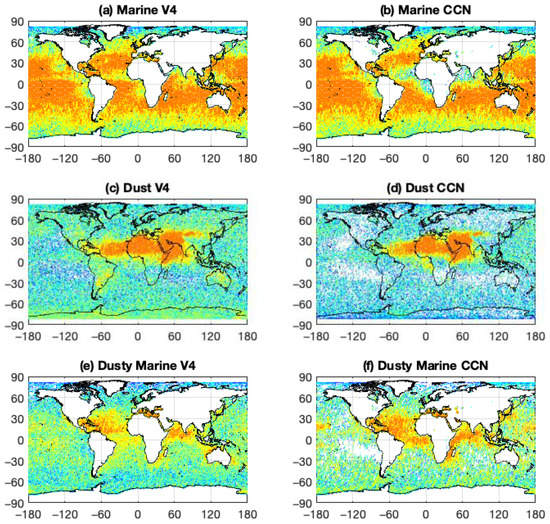

4.3. Geographical Comparisons

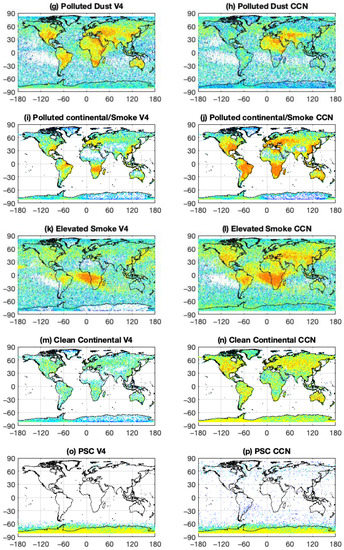

Geographical distributions of aerosol subtypes identified by the V4 COSCAs (left column) and the CNN (right column) for July, August, and September 2010, plus stratospheric volcanic eruption and smoke cases, are compared in Figure 9. In general, the different tropospheric aerosol types identified by the V4 COSCAs and the CNN are located in similar geographic regions, which are reasonable according to their source locations. As expected, marine aerosols are found in high concentrations over the oceans (Figure 9a,b). Dust is identified predominantly over the Saharan and Taklamakan Deserts and surrounding areas (Figure 9c,d), with the CNN results showing relatively lower occurrence frequencies in regions far from natural dust sources (e.g., over the Southern Oceans and Antarctic). Dusty marine aerosols are located over oceans adjacent to various dust sources (e.g., in the Gulf of Mexico, around Africa, over the Mediterranean, etc.; see Figure 9e,f). While the geographical distribution patterns for dusty marine are generally similar for both methods, the occurrence frequency is typically higher in the CNN results. The higher frequency could come from the bias of ground truth information for dusty marine type in the training dataset, which may also include a small fraction of polluted continental/smoke and marine mixture classes. Polluted dust is pervasive over continental land masses everywhere (Figure 9g,h) in the COSCA results but is largely confined to African and Asian desert regions in the CNN results. As with the dusty marine class, the geographic distribution patterns identified by the COSCAs and the CNN are similar for the polluted continental/smoke class (Figure 9i,j), but the CNN occurrence frequencies are substantially higher because the polluted continental/smoke truth information used in the training may contain a mixture of smoke and polluted dust classes. 
This is especially noticeable in biomass burning regions (e.g., southern Africa and the Amazon regions of South America) and regions with high human population densities (e.g., Europe, East Asia, and the United States). The elevated smoke (Figure 9k,l) and clean continental classes (Figure 9m,n) show the same behaviors evidenced by the dusty marine and polluted continental/smoke classes; that is, the geographic distributions identified by the two classification algorithms are similar, but in both cases the CNN identifies larger occurrence frequencies. As seen in Table 1, the more extensive identification of elevated smoke by the CNN frequently comes at the expense of aerosols identified as polluted dust by the COSCAs.

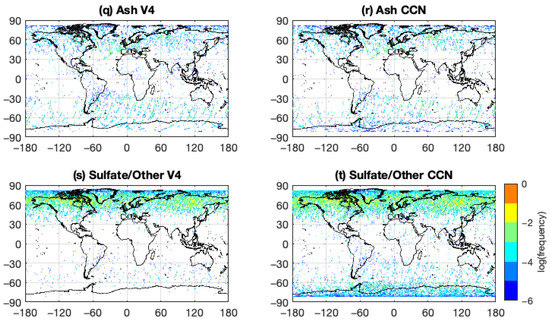

Figure 9.

Geographical distributions of aerosol subtypes identified by the V4 COSCAs (left column: (a) marine aerosols; (c) dust; (e) dusty marine; (g) polluted dust; (i) polluted continental or smoke; (k) elevated smoke; (m) clean continental aerosols; (o) PSC aerosols; (q) ash; (s) sulfate or other stratospheric aerosols) and the CNN (right column: (b) marine aerosols; (d) dust; (f) dusty marine; (h) polluted dust; (j) polluted continental or smoke; (l) elevated smoke; (n) clean continental aerosols; (p) PSC aerosols; (r) ash; (t) sulfate or other stratospheric aerosols) for the same data used in Table 1.

With the exception of polar stratospheric aerosols (Figure 9o,p), the COSCAs and the CNN largely agree on the spatial distributions and occurrence frequencies for the stratospheric aerosol classes. In the COSCAs, the possible identification of polar stratospheric aerosols is restricted according to season and latitude. Because the CNN is not similarly restricted, it occasionally identifies “polar” aerosols as occurring in the mid-latitudes. Volcanic ash (Figure 9q,r) is identified only rarely by both classifiers. Sulfates (Figure 9s,t) are found predominantly in high northern latitudes, consistent with the locations of volcanic eruptions occurring during the chosen study period. The CNN shows a mild propensity for misclassifying some polar stratospheric aerosols as the sulfate/other class.
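The occurrence-frequency maps compared in this section can be thought of as per-grid-cell ratios. The sketch below assumes one plausible normalization, namely the number of samples of a given subtype divided by the number of all classified aerosol samples falling in the same latitude/longitude cell. The normalization actually used for Figure 9 is not specified in the text, and the grid size, function names, and sample points here are hypothetical.

```python
import math
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Cell = Tuple[int, int]


def grid_cell(lat: float, lon: float, cell_deg: float) -> Cell:
    """Index of the lat/lon cell containing a point (origin at 90 S, 180 W)."""
    return (
        math.floor((lat + 90.0) / cell_deg),
        math.floor((lon + 180.0) / cell_deg),
    )


def occurrence_frequency(
    samples: Iterable[Tuple[float, float, str]], subtype: str, cell_deg: float = 5.0
) -> Dict[Cell, float]:
    """Fraction of samples in each cell that carry the requested subtype."""
    totals: Dict[Cell, int] = defaultdict(int)
    hits: Dict[Cell, int] = defaultdict(int)
    for lat, lon, label in samples:
        cell = grid_cell(lat, lon, cell_deg)
        totals[cell] += 1
        if label == subtype:
            hits[cell] += 1
    return {cell: hits[cell] / totals[cell] for cell in totals}


# Made-up samples: (latitude, longitude, subtype label).
samples = [
    (20.1, -10.2, "dust"),
    (21.0, -11.0, "dust"),
    (22.5, -12.0, "polluted dust"),
    (-35.0, 20.0, "marine"),
]

dust_map = occurrence_frequency(samples, "dust")
```

Running the same function on the labels from each classifier would give two maps on a common grid that can be compared cell by cell.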

5. Conclusions

Using SegNet, a convolutional neural network (CNN) architecture that implements deep machine learning (DML) methods, we have used the vertical and horizontal texture information intrinsic to space-based lidar observations to classify aerosol subtypes. The CNN method takes advantage of additional independent information that is available in the lidar profiles but not currently being used by the CALIOP operational scene classification algorithms (COSCAs). Our study shows that when using a 1435 orbit training set (i.e., three full months of measurements), the CNN classifier will identify the same aerosol subtypes as the COSCAs in ~62% of the tropospheric cases and ~87% of the stratospheric cases. Given that the CALIOP aerosol class definitions are not discrete, but instead specify mixture continuums (e.g., marine to dusty marine to dust classes) and contain altitude-dependent types (e.g., polluted continental/smoke vs. elevated smoke classes), we find this to be a reasonably good level of agreement. In fact, one might argue that the COSCA classifications, which are taken as truth in this experiment, are sometimes erroneous, simply because the COSCAs are constrained to identify each layer as wholly homogeneous. Because the pixel-by-pixel CNN classifications can identify multiple aerosol subtypes occurring within the predetermined layer boundaries, in these cases the CNN aerosol subtypes may be more accurate. We note, however, that for this iteration of our CNN classifier, we did not attempt to estimate particulate depolarization ratios, but instead used the directly measured volume depolarization ratio, which can cause underestimates in the CNN classifications for dusts and other irregularly shaped, depolarizing aerosol types.

In this paper, we have shown the application of a DML method for aerosol subtype classifications from lidar profile data. The CNN algorithm we have implemented exploits the texture information already embedded in the space-based lidar measurements. Because aerosols can exist in elaborate mixtures of many different types, the goal of this new approach is to deliver a more realistic accounting of the different aerosol types within individual layers. In the future, CNN training sets could be augmented at single-shot resolution with more accurate in situ and remotely sensed field measurements to improve classification skill. These additional training resources should prove particularly beneficial when assessing space-based lidar measurements of aerosol plumes detected in transition zones between major aerosol source regions, where mixing currently poses significant challenges to the methods now being used. A three-dimensional semantic segmentation CNN model with a single profile convolutional kernel that includes geographic and altitudinal information (instead of training separately over land, over ocean, and in the stratosphere) should further enhance the classification accuracy.

Author Contributions

Conceptualization, S.Z.; Data curation, S.Z.; Formal analysis, S.Z.; Investigation, S.Z.; Methodology, S.Z., M.O. and J.T.; Resources, J.T., J.Y., S.R. and B.G.; Supervision, S.Z., A.O., M.V., C.T., P.L., Y.H. and D.W.; Validation, S.Z.; Visualization, S.Z.; Writing—original draft, S.Z.; Writing—review & editing, S.Z., A.O., M.O., J.T. and J.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Winker, D.; Pelon, J.; Coakley, J.A.; Ackerman, S.A.; Charlson, R.J.; Colarco, P.R.; Flamant, P.; Fu, Q.; Hoff, R.M.; Kittaka, C.; et al. The CALIPSO Mission. Bull. Am. Meteorol. Soc. 2010, 91, 1211–1230.

2. Winker, D.; Vaughan, M.A.; Omar, A.; Hu, Y.; Powell, K.A.; Liu, Z.; Hunt, W.H.; Young, S.A. Overview of the CALIPSO Mission and CALIOP Data Processing Algorithms. J. Atmos. Ocean. Technol. 2009, 26, 2310–2323.

3. Liu, Z.; Kar, J.; Zeng, S.; Tackett, J.; Vaughan, M.A.; Avery, M.; Pelon, J.; Getzewich, B.; Lee, K.-P.; Magill, B.; et al. Discriminating between clouds and aerosols in the CALIOP version 4.1 data products. Atmos. Meas. Tech. 2019, 12, 703–734.

4. Kim, M.-H.; Omar, A.H.; Tackett, J.L.; Vaughan, M.A.; Winker, D.; Trepte, C.R.; Hu, Y.; Liu, Z.; Poole, L.R.; Pitts, M.C.; et al. The CALIPSO version 4 automated aerosol classification and lidar ratio selection algorithm. Atmos. Meas. Tech. 2018, 11, 6107–6135.

5. Avery, M.; Ryan, R.A.; Getzewich, B.J.; Vaughan, M.A.; Winker, D.M.; Hu, Y.; Garnier, A.; Pelon, J.; Verhappen, C.A. CALIOP V4 cloud thermodynamic phase assignment and the impact of near-nadir viewing angles. Atmos. Meas. Tech. 2020, 13, 4539–4563.

6. Geethu Chandran, A.; Christy, J. A survey of cloud detection techniques for satellite images. Int. Res. J. Eng. Technol. 2015, 02, 2485–2490.

7. Tian, B.; Shaikh, M.A.; Azimi-Sadjadi, M.R.; Haar, T.H.V.; Reinke, D.L. A study of cloud classification with neural networks using spectral and textural features. IEEE Trans. Neural Netw. 1999, 10, 138–151.

8. Long, J.; Shelhamer, E.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. arXiv 2014, arXiv:1411.4038.

9. Yan, Z.; Yan, M.; Sun, H.; Fu, K.; Hong, J.; Sun, J.; Zhang, Y.; Sun, X. Cloud and cloud shadow detection using multilevel feature fused segmentation network. IEEE Geosci. Remote Sens. Lett. 2018, 15, 1600–1604.

10. Zhang, X.; Wang, T.; Chen, G.; Tan, X.; Zhu, K. Convective Clouds Extraction from Himawari–8 Satellite Images Based on Double-Stream Fully Convolutional Networks. IEEE Geosci. Remote Sens. Lett. 2019, 17, 553–557.

11. Ball, J.E.; Anderson, D.T.; Chan, C.S. Comprehensive survey of deep learning in remote sensing: Theories, tools, and challenges for the community. J. Appl. Remote Sens. 2017, 11, 042609.

12. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; Springer: Cham, Switzerland, 2015; pp. 234–241.

13. Badrinarayanan, V.; Handa, A.; Cipolla, R. SegNet: A deep convolutional encoder-decoder architecture for robust semantic pixel-wise labelling. arXiv 2015, arXiv:1505.07293.

14. Kendall, A.; Badrinarayanan, V.; Cipolla, R. Bayesian SegNet: Model Uncertainty in Deep Convolutional Encoder-Decoder Architectures for Scene Understanding. arXiv 2015, arXiv:1511.02680.

15. The MathWorks, Inc. MATLAB and Deep Learning Toolbox Release 2019; The MathWorks, Inc.: Natick, MA, USA, 2019; Available online: https://www.mathworks.com/help/deeplearning/index.html (accessed on 17 September 2019).

16. The MathWorks, Inc. MATLAB and Computer Vision Release 2019; The MathWorks, Inc.: Natick, MA, USA, 2019; Available online: https://www.mathworks.com/help/vision/index.html (accessed on 17 September 2019).

17. Vaughan, M.A.; Powell, K.A.; Winker, D.M.; Hostetler, C.A.; Kuehn, R.E.; Hunt, W.H.; Getzewich, B.J.; Young, S.A.; Liu, Z.; McGill, M.J. Fully Automated Detection of Cloud and Aerosol Layers in the CALIPSO Lidar Measurements. J. Atmos. Ocean. Technol. 2009, 26, 2034–2050.

18. Omar, A.H.; Won, J.; Winker, D.; Yoon, S.; Dubovik, O.; McCormick, M.P. Development of global aerosol models using cluster analysis of Aerosol Robotic Network (AERONET) measurements. J. Geophys. Res. Space Phys. 2005, 110, 110.

19. Simonyan, K.; Zisserman, A. Two-stream convolutional networks for action recognition in videos. NIPS. arXiv 2014, arXiv:1406.2199.

20. Cui, Y.; Jia, M.; Lin, T.-Y.; Song, Y.; Belongie, S. Class-Balanced Loss Based on Effective Number of Samples. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 9260–9269.

21. Qian, N. On the momentum term in gradient descent learning algorithms. Neural Netw. 1999, 12, 145–151.

22. Burton, S.; Ferrare, R.A.; Vaughan, M.A.; Omar, A.H.; Rogers, R.R.; Hostetler, C.A.; Hair, J.W. Aerosol classification from airborne HSRL and comparisons with the CALIPSO vertical feature mask. Atmos. Meas. Tech. 2013, 6, 1397–1412.

23. Kanitz, T.; Ansmann, A.; Foth, A.; Seifert, P.; Wandinger, U.; Engelmann, R.; Baars, H.; Althausen, D.; Casiccia, C.; Zamorano, F. Surface matters: Limitations of CALIPSO V3 aerosol typing in coastal regions. Atmos. Meas. Tech. 2014, 7, 2061–2072.

24. Kim, M.-H.; Kim, S.-W.; Yoon, S.-C.; Omar, A.H. Comparison of aerosol optical depth between CALIOP and MODIS-Aqua for CALIOP aerosol subtypes over the ocean. J. Geophys. Res. Atmos. 2013, 118, 13–241.

25. Mielonen, T.; Arola, A.; Komppula, M.; Kukkonen, J.; Koskinen, J.; De Leeuw, G.; Lehtinen, K.E.J. Comparison of CALIOP level 2 aerosol subtypes to aerosol types derived from AERONET inversion data. Geophys. Res. Lett. 2009, 36.

26. Papagiannopoulos, N.; Mona, L.; Alados-Arboledas, L.; Amiridis, V.; Baars, H.; Binietoglou, I.; Bortoli, D.; D’Amico, G.; Giunta, A.; Guerrero-Rascado, J.L.; et al. CALIPSO climatological products: Evaluation and suggestions from EARLINET. Atmos. Chem. Phys. Discuss. 2016, 16, 2341–2357.

27. Rogers, R.R.; Vaughan, M.A.; Hostetler, C.A.; Burton, S.; Ferrare, R.A.; Young, S.A.; Hair, J.W.; Obland, M.D.; Harper, D.B.; Cook, A.L.; et al. Looking through the haze: Evaluating the CALIPSO level 2 aerosol optical depth using airborne high spectral resolution lidar data. Atmos. Meas. Tech. 2014, 7, 4317–4340.

28. Tesche, M.; Wandinger, U.; Ansmann, A.; Althausen, D.; Muller, D.; Omar, A.H. Ground-based validation of CALIPSO observations of dust and smoke in the Cape Verde region. J. Geophys. Res. Atmos. 2013, 118, 2889–2902.

29. Bignami, C.; Corradini, S.; Merucci, L.; De Michele, M.; Raucoules, D.; De Astis, G.; Stramondo, S.; Piedra, J. Multisensor Satellite Monitoring of the 2011 Puyehue-Cordon Caulle Eruption. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 2786–2796.

30. Omar, A.H.; Winker, D.M.; Vaughan, M.A.; Hu, Y.; Trepte, C.R.; Ferrare, R.A.; Lee, K.-P.; Hostetler, C.A.; Kittaka, C.; Rogers, R.R.; et al. The CALIPSO Automated Aerosol Classification and Lidar Ratio Selection Algorithm. J. Atmos. Ocean. Technol. 2009, 26, 1994–2014.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).