A Statistical Calibration Framework for Improving Non-Reference Method Particulate Matter Reporting: A Focus on Community Air Monitoring Settings

Abstract

1. Introduction

2. Methods

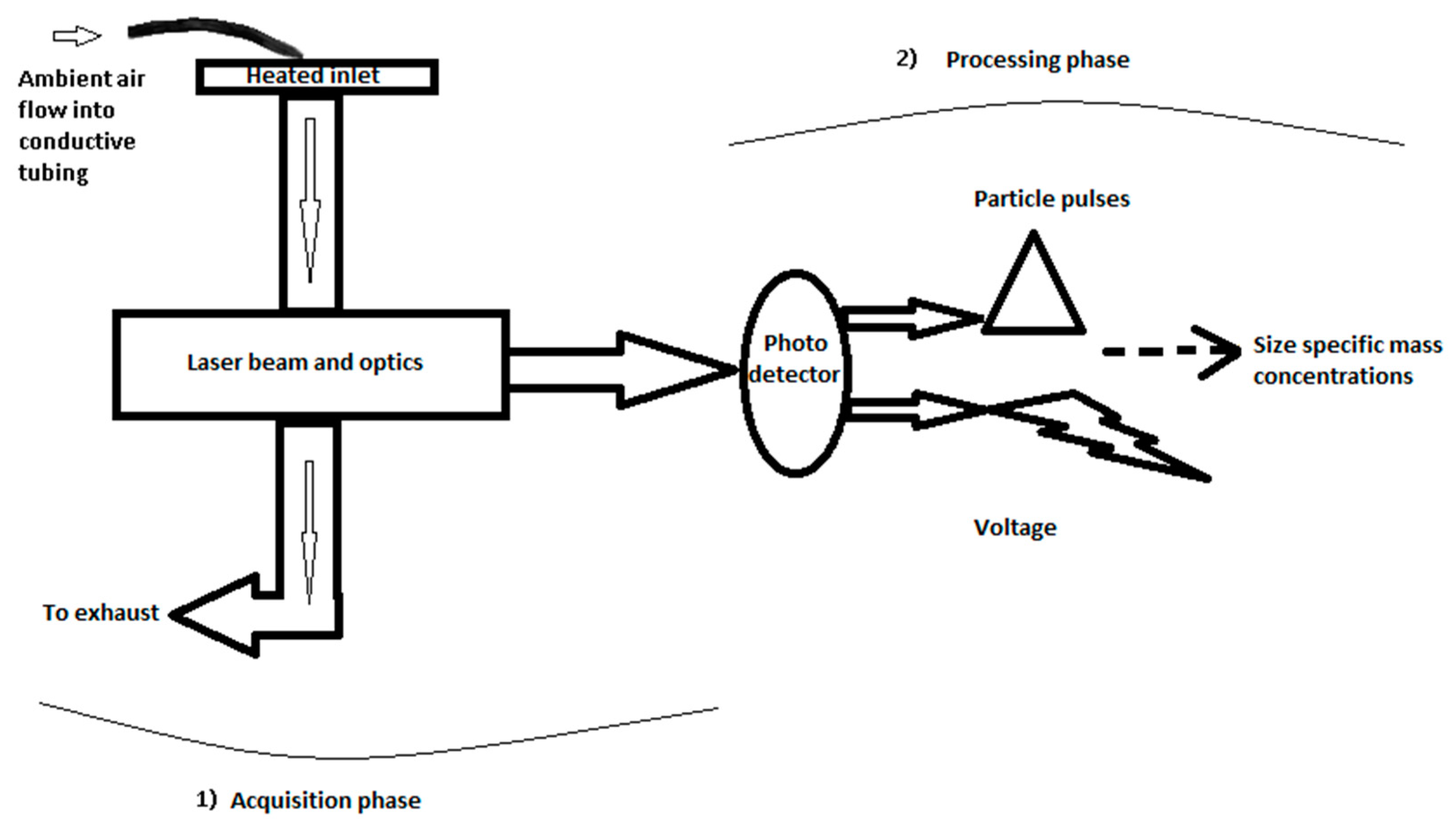

2.1. Non-Reference Method (NRM) Monitoring Equipment

2.2. Data Collection, Management, and Preparation

2.3. Statistical Analyses

2.4. Calibration Model

3. Results

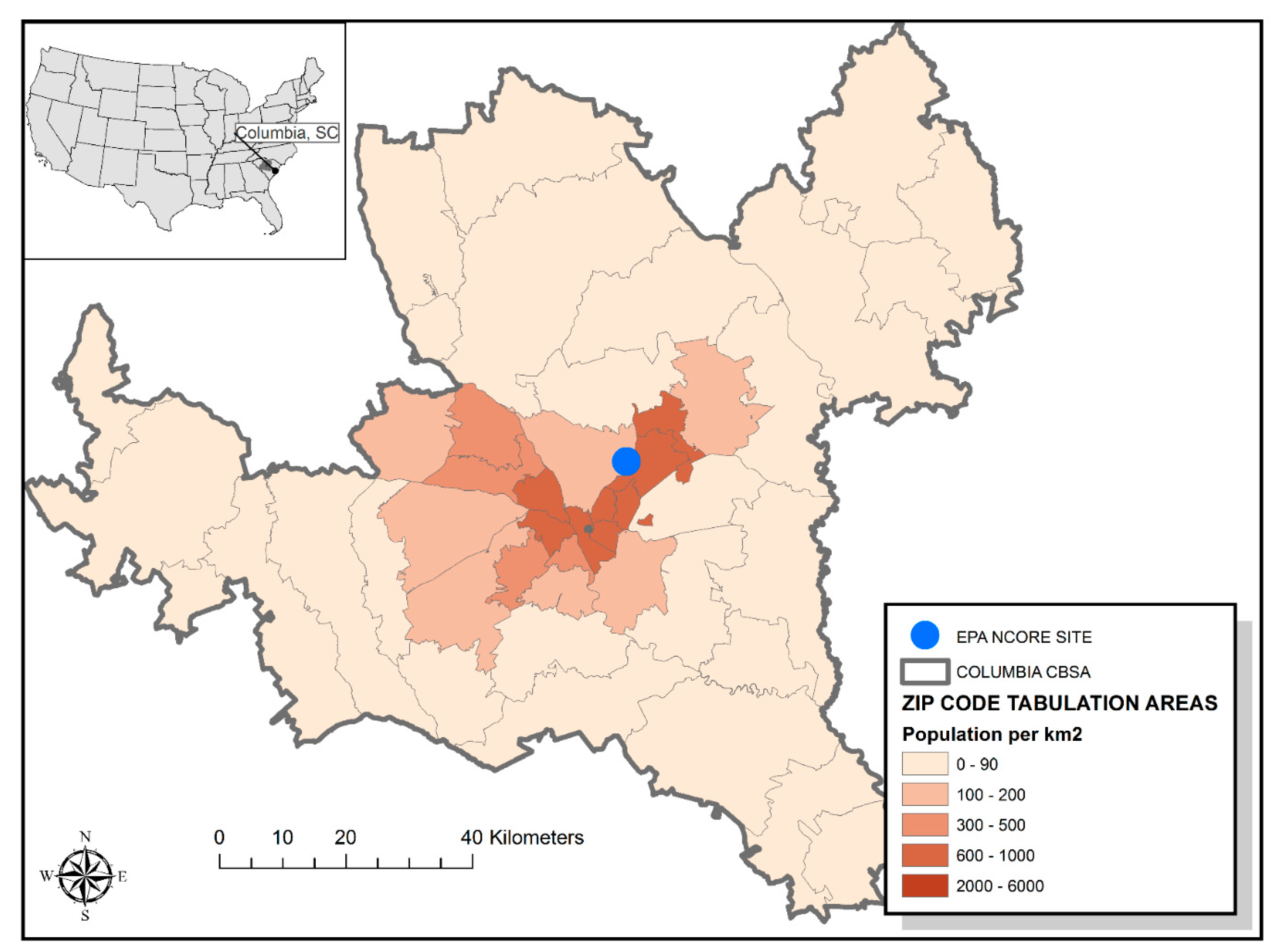

3.1. Co-Location Study

Ambient Conditions during Monitoring Period

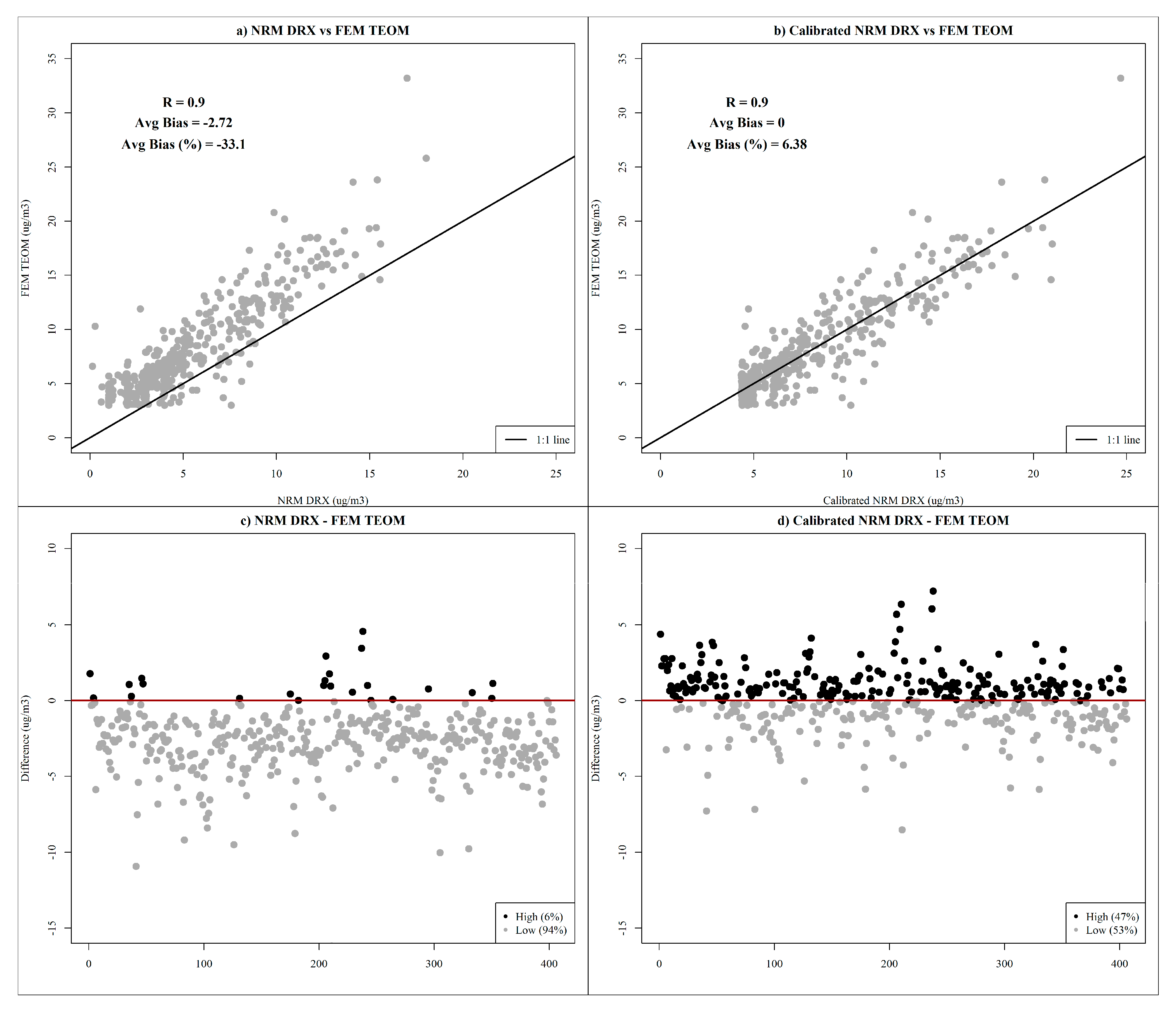

3.2. PM Data Summary and Evaluation

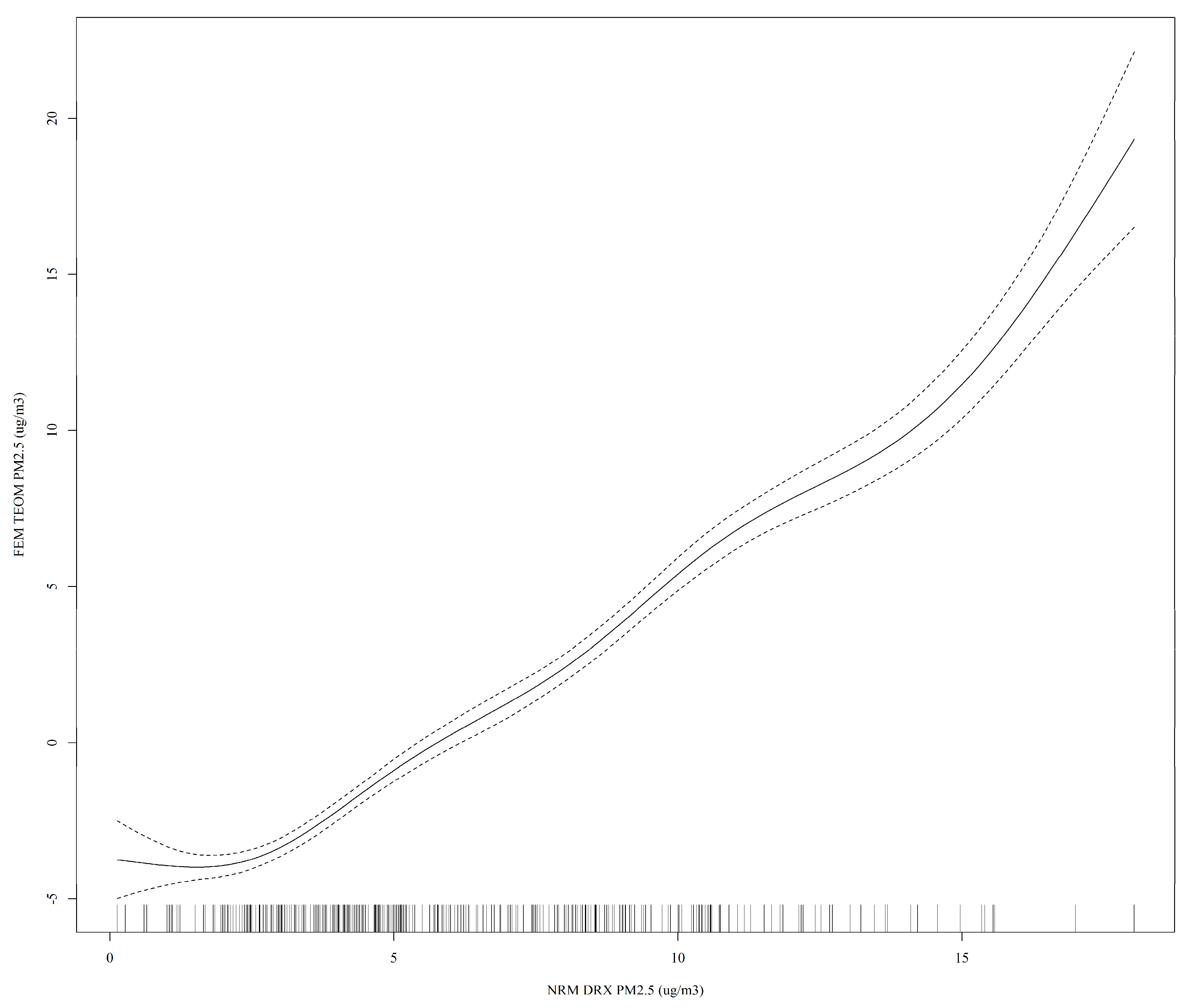

3.3. Statistical Calibration Model

4. Discussion

5. Limitations

6. Future Directions

7. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Variable | Instrument | Approach | Averaging Period | n | Mean | SD | Min | Max |
|---|---|---|---|---|---|---|---|---|
| PM2.5 (µg/m³) | DustTrak DRX | Non-Reference | 1 h | 406 | 5.6 | 3.5 | 0.1 | 18 |
| PM2.5 (µg/m³) | Thermo Model 1405F TEOM | Federal Equivalent | 1 h | 406 | 8.3 | 4.6 | 3 | 33.2 |
| PM2.5 (µg/m³) | DustTrak DRX | Non-Reference | 24 h | 7 | 5 | 2.7 | 1.9 | 8.8 |
| PM2.5 (µg/m³) | Thermo Model 1405F TEOM | Federal Equivalent | 24 h | 7 | 7.1 | 3.7 | 3 | 12.7 |
| PM2.5 (µg/m³) | Gravimetric | Federal Reference | 24 h | 7 | 7 | 3.5 | 3.4 | 12.6 |
| PM10 (µg/m³) | Low Volume | Federal Reference | 24 h | 7 | 11.2 | 3.9 | 4.5 | 15.9 |
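
As a rough reading aid for the summary table above, the sketch below compares each 24 h mean PM2.5 value with the gravimetric federal reference mean, as a ratio and as a difference. It is only descriptive arithmetic on the tabulated means and is not the calibration model of Section 3.3; the dictionary keys and the helper name `compare_to_reference` are illustrative assumptions, not names from the study.

```python
# 24 h mean PM2.5 concentrations (µg/m3), copied from the summary table above.
# The labels are illustrative shorthand, not identifiers used by the authors.
mean_24h = {
    "DustTrak DRX (non-reference)": 5.0,
    "TEOM 1405F (federal equivalent)": 7.1,
    "Gravimetric (federal reference)": 7.0,
}
reference = mean_24h["Gravimetric (federal reference)"]


def compare_to_reference(value, ref):
    """Return (ratio, bias) of one mean relative to the reference mean."""
    return value / ref, value - ref


# Hypothetical helper applied to every instrument, including the reference itself.
comparison = {
    name: compare_to_reference(value, reference)
    for name, value in mean_24h.items()
}

for name, (ratio, bias) in comparison.items():
    print(f"{name}: ratio = {ratio:.2f}, bias = {bias:+.1f} µg/m3")
```

With only seven 24 h samples, these ratios describe this co-location period only and should not be read as general correction factors.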

| Instrument | Designation | Measured Sample Flow | Total Flow | Difference (Total minus Sample) |
|---|---|---|---|---|
| DustTrak DRX Aerosol Monitor 8533 | Non-reference monitor (NRM) | 2.0 L/min | 3.0 L/min | Sheath flow rate: 1 L/min |
| Thermo Model 1405F tapered element oscillating microbalance (TEOM) continuous PM monitor | U.S. EPA PM2.5 equivalent monitor | 3.0 L/min | 16.67 L/min | Bypass flow rate: 13.67 L/min |
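
The flow figures in the instrument table above can be checked with simple arithmetic: for each instrument, the measured sample flow plus the sheath or bypass flow should equal the total flow. The sketch below performs that check and also reports the fraction of the total flow that is actually measured. The dictionary layout and the function names `flows_balance` and `sample_fraction` are assumptions made for illustration.

```python
# Flow rates in L/min, copied from the instrument table above.
# "diverted" is the sheath flow (DustTrak) or the bypass flow (TEOM).
flows = {
    "DustTrak DRX 8533": {"sample": 2.0, "total": 3.0, "diverted": 1.0},
    "Thermo 1405F TEOM": {"sample": 3.0, "total": 16.67, "diverted": 13.67},
}


def flows_balance(flow, tol=1e-6):
    """True if sample flow plus diverted flow equals total flow within tol."""
    return abs(flow["sample"] + flow["diverted"] - flow["total"]) <= tol


def sample_fraction(flow):
    """Fraction of the total inlet flow that reaches the measurement stage."""
    return flow["sample"] / flow["total"]


for name, flow in flows.items():
    print(
        f"{name}: balanced = {flows_balance(flow)}, "
        f"sample fraction = {sample_fraction(flow):.2f}"
    )
```

A tolerance is used in the balance check because the tabulated values are rounded and floating-point sums are not exact.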

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Commodore, S.; Metcalf, A.; Post, C.; Watts, K.; Reynolds, S.; Pearce, J. A Statistical Calibration Framework for Improving Non-Reference Method Particulate Matter Reporting: A Focus on Community Air Monitoring Settings. Atmosphere 2020, 11, 807. https://doi.org/10.3390/atmos11080807