Abstract

Motivated by the success of four-dimensional variational (4D-Var) data assimilation and by the advantages of ensemble methods (e.g., ensemble Kalman filters and particle filters) in numerical weather prediction systems, we introduce the implicit equal-weights particle filter scheme into the weak constraint 4D-Var framework, which avoids filter degeneracy through implicit sampling in high-dimensional situations. The new variational particle smoother (varPS) method has been tested and explored using the Lorenz96 model with dimensions 40, 100, 250, and 400. The results show that the new varPS method does not suffer from the curse of dimensionality by construction and that its root mean square error (RMSE) is comparable with that of the ensemble 4D-Var method. As a combination of the implicit equal-weights particle filter and weak constraint 4D-Var, the new method improves the RMSE compared with the implicit equal-weights particle filter and the LETKF (local ensemble transform Kalman filter) and enlarges the ensemble spread compared with the ensemble 4D-Var scheme. To overcome the difficulty of applying the implicit equal-weights particle filter in real geophysical applications, the posterior error covariance matrix is estimated using a limited ensemble and can be calculated in parallel. In general, the new varPS performs slightly better in ensemble quality (the balance between the RMSE and ensemble spread) than the ensemble 4D-Var and has the potential to be applied to real geophysical systems.

1. Introduction

The particle filter (PF) is a sequential Monte Carlo method, which uses Monte Carlo sampling particles to estimate and represent the posterior probability density functions (PDFs). The advantage of PFs over variational methods and the EnKF is that PFs estimate the posteriors without linear and Gaussian assumptions. PFs have been successfully applied in low-dimensional systems, e.g., [1,2]. A PF uses a set of weighted particles to estimate the posterior PDF of the model state. The weight of each particle is proportional to the likelihood of the observations, i.e., the conditional PDF of the observations given the model state. However, for a high-dimensional model with a large number of independent observations, typically only one particle gets a weight close to one, while the others have weights close to zero, which is called filter degeneracy or the collapse of the filter. To prevent filter degeneracy, the PF requires the number of particles to scale exponentially with the number of independent observations [3,4]. In practical NWP applications, the number of ensemble members is usually on the order of 10–100 [5] because of limited computational resources, which makes degeneracy inevitable for standard PFs.
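The degeneracy described above can be illustrated with a small numerical experiment. The following is a minimal sketch, not the setup used in this study: it assumes an identity observation operator, unit observation error variance, and particles drawn from a standard normal prior, and all function names are hypothetical. It reports the largest normalized weight and the effective sample size (ESS) as the number of independent observations grows.

```python
import numpy as np

def normalized_weights(particles, obs, obs_var=1.0):
    """Importance weights proportional to the Gaussian likelihood p(y | x_i)."""
    # Assumption: every state variable is observed directly (identity operator).
    misfit = obs[None, :] - particles
    log_w = -0.5 * np.sum(misfit**2, axis=1) / obs_var
    log_w -= log_w.max()              # shift to avoid underflow in exp
    w = np.exp(log_w)
    return w / w.sum()

def effective_sample_size(w):
    """ESS = 1 / sum(w_i^2); equals the particle number for equal weights."""
    return 1.0 / np.sum(w**2)

rng = np.random.default_rng(0)
n_particles = 50
ess = {}
for dim in (1, 10, 100, 400):
    truth = np.zeros(dim)
    obs = truth + rng.standard_normal(dim)
    particles = rng.standard_normal((n_particles, dim))   # prior draws
    w = normalized_weights(particles, obs)
    ess[dim] = effective_sample_size(w)
    print(f"dim={dim:4d}  max weight={w.max():.3f}  ESS={ess[dim]:.1f}")
```

With a fixed number of particles, the ESS drops towards one as the dimension increases, which is the collapse that equal-weights schemes are designed to avoid.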

One potential method to avoid filter degeneracy is to draw samples from a proposal transition density rather than the original transition density [6]. There are few restrictions on the proposal transition density: its support must be at least as large as that of the original density, i.e., the proposal density must be non-zero wherever the original density is non-zero. Doucet et al. [6] proposed an optimal proposal density which minimizes the variance of the weights. In the weighted ensemble Kalman filter (WEKF) [7], the stochastic EnKF [8] is used as the proposal density. Morzfeld et al. [9] discussed the behavior of the WEKF in high-dimensional systems, and suggested localization to make the WEKF effective. Chen et al. [10] extended the localization approach to the WEKF, providing better performance in nonlinear systems than the local particle filter (LPF). Like the EnKF, 4D-Var can also be used as a proposal density, by using the particle filter in a 4D-Var framework. Morzfeld et al. [11] proposed a variational particle smoother method and introduced localization to eliminate particle collapse. The equivalent-weights particle filter (EWPF) allows the proposal density to depend on all particles at the previous time and gives equal weights to most particles to avoid degeneracy [12,13,14,15]. Combining the ideas of implicit sampling and equivalent weights, Zhu et al. [16] proposed the implicit equal-weights particle filter (IEWPF), in which there is no need for parameter tuning. To remedy the bias in the IEWPF of Zhu et al. [16], Skauvold et al. [17] proposed a two-stage IEWPF method. Other techniques to eliminate filter degeneracy have been reviewed by van Leeuwen et al. [18], including transformation, localization, and hybridization.

The IEWPF [16] is an indirect method based on the ideas of implicit sampling and proposal transition density [19]. The basic idea of implicit sampling is to locate the regions of high probability and to draw samples in these regions. This scheme uses a proposal transition density in which each particle is drawn implicitly from a slightly different proposal density by introducing a factor in front of the covariance of the proposal transition density. For each particle, this factor depends on the proposal density of all particles, and is calculated to fulfill the equal-weights property.

The most successful data assimilation (DA) methods implemented in operational numerical weather prediction (NWP) centers around the world are variational methods (e.g., 3D-Var and 4D-Var [20]) and the ensemble Kalman filter (EnKF) and its variants [8,21,22,23]. Variational methods search for the peak of the posterior PDF by minimizing a cost function; the result cannot be guaranteed to be the global optimum because the search may stop at a local mode. It is also hard to estimate the uncertainties in variational methods. EnKF methods estimate the mean and covariance of the posterior PDF, implicitly assuming that the model is linear and that the posterior PDF is Gaussian. Under those assumptions, the estimation of the posterior PDF is simplified: the mean value is close to the peak and the covariance becomes much easier to calculate. These implicit assumptions of the variational and EnKF methods are unlikely to be satisfied in most real geophysical systems. Neither of these two DA methods can describe nonlinear and non-Gaussian posterior PDFs accurately, and it is still unclear what they describe for multi-modal posterior PDFs. Therefore, variational and EnKF methods are not fully adequate for increasingly complex and strongly nonlinear models.

The success of 4D-Var data assimilation in operational NWP centers is due to the following four aspects [24]. (1) Variational methods are able to handle the increasing quantity of asynchronous satellite observations effectively and are consistent with the model dynamics. (2) Variational methods can accommodate weak nonlinearities of the model and observation operators. (3) Variational methods avoid localization and perform the data assimilation process in a global way. (4) Variational methods allow additional terms in the cost function, e.g., variational bias correction, variational quality control, digital filter initialization, weak constraint term, etc. If it is assumed that the dynamical model is perfect, the objective is to seek the best initial condition, which minimizes the errors in the background and observations during a time window. This is the so-called strong constraint 4D-Var. When the perfect model assumption is relaxed by allowing for a Gaussian additive model error in the dynamical model, the problem becomes fully four-dimensional. The most appropriate initial condition and model error are found by minimizing the errors in the initial state, the observations, and the model during a time window. This is the weak constraint 4D-Var.

The new algorithm is designed as a compromise between weak constraint 4D-Var and the IEWPF, and inherits the merits of both. Efforts have been made to introduce a particle filter scheme in a 4D-Var framework. Morzfeld et al. [11] apply a localized particle smoother in the strong constraint 4D-Var, which prevents the collapse of the variational particle smoother and yields results that are comparable with those of the ensemble version of the 4D-Var method. The particle smoother has a natural connection to the weak constraint 4D-Var formulation, and implicit sampling by Monte Carlo methods can be easily applied in the 4D-Var data assimilation system through the proposal transition density. The major obstacle for the implementation of the IEWPF in a real dynamical system is the calculation of the covariance of the proposal density (P matrix). To implement the IEWPF in the weak constraint 4D-Var framework, the key point is the expression of the P matrix. As shown in Morzfeld et al. [11], the P matrix has a connection with the Hessian matrix of the weak constraint 4D-Var cost function. In addition, the weak constraint 4D-Var gives an effective way of calculating the Hessian matrix of its cost function. To avoid explicit estimation of the P matrix, we estimate the random part of each updated model state using ensembles. We introduce implicit sampling and the proposal transition density in the weak constraint 4D-Var framework, using a scale factor to slightly adjust the covariance of the proposal density (P matrix) so that the equal-weights property is fulfilled.

2. Implicit Equal-Weights Variational Particle Smoother

In this section, we describe the basic idea of the new algorithm, referred to from now on as the implicit equal-weights variational particle smoother (IEWVPS). Two key points, the selection of the scale factor and the estimation of the P matrix, are introduced in detail in the subsections.

2.1. The Basic Idea

Before introducing the IEWVPS algorithm, some assumptions must be made. As in Zhu et al. [16], it is assumed that the observation errors have a Gaussian distribution and that the observations are spatially and temporally independent. The observation operator is linear and the model errors are Gaussian. The dynamical model system is Markovian, which means that the model state at time t depends only on the model state at the previous time step.

According to Bayes’ theorem, the posterior PDF of model state given the observations during the time window can be written as:

Applying the idea of a proposal density, one can multiply the numerator and the denominator of Equation (1) by the same factor, the proposal transition density, leading to:

The support of the proposal transition density should be equal to or larger than that of the original transition density. Instead of drawing samples from the original transition density, we can now draw samples from the proposal transition density, so that the posterior PDF is expressed as:

where the sum runs over all particles and the weight of particle i is:

As it is assumed that the observation error and model error are Gaussian, the numerator of Equation (4) could be expressed in terms of the cost function of weak constraint 4D-Var of particle i:

where the cost function of weak constraint 4D-Var of particle i is:

where . is the initial background state and Q is the model error covariance.
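For orientation, the structure of a weak constraint 4D-Var cost function can be sketched in code. The following is a minimal illustration of the standard textbook form (background, model error, and observation terms), not a reproduction of Equation (6); the function name, argument layout, and the toy linear model are assumptions made for this example.

```python
import numpy as np

def weak_constraint_cost(traj, xb, obs, model, H, B_inv, Q_inv, R_inv):
    """Standard weak constraint 4D-Var cost for a trajectory x_0..x_n.

    traj  : array (n+1, nx), candidate states x_0..x_n
    obs   : dict {time index k: observation vector y_k}
    model : callable advancing the state by one step
    """
    d0 = traj[0] - xb
    cost = 0.5 * d0 @ B_inv @ d0                    # background term
    for k in range(1, traj.shape[0]):
        eta = traj[k] - model(traj[k - 1])          # model error at step k
        cost += 0.5 * eta @ Q_inv @ eta
    for k, y in obs.items():
        innov = y - H @ traj[k]                     # observation misfit
        cost += 0.5 * innov @ R_inv @ innov
    return cost

def toy_model(x):
    """Hypothetical linear model used only for this example."""
    return 0.9 * x

nx = 2
H = np.eye(nx)
eye = np.eye(nx)
xb = np.array([1.0, 2.0])
traj = np.array([xb, toy_model(xb), toy_model(toy_model(xb))])
obs = {2: H @ traj[2]}
cost_perfect = weak_constraint_cost(traj, xb, obs, toy_model, H, eye, eye, eye)

traj_perturbed = traj.copy()
traj_perturbed[1] += np.array([1.0, 0.0])           # inject a model error
cost_perturbed = weak_constraint_cost(traj_perturbed, xb, obs, toy_model, H, eye, eye, eye)
print(cost_perfect, cost_perturbed)
```

A trajectory that starts at the background, follows the model exactly, and matches the observations has zero cost; deviating from the model at one step is penalized through the model error term, which is what distinguishes the weak constraint from the strong constraint formulation.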

Morzfeld et al. [11] described the connection between the variational particle smoother and the ensemble 4D-Var. According to this connection, the numerator of Equation (4) could be written as:

where A is a constant and the remaining quantities are the minimizer of the cost function and the minimum of the cost function. P is the inverse of the Hessian of the cost function and can be estimated through the Gauss–Newton approximation of the Hessian, which requires the second derivative of the cost function, computed with the tangent linear and adjoint models of the dynamical model.

In this study, the proposal transition density can now be chosen as Gaussian, with the minimizer of the cost function as its mean and P as its covariance, so that:

By implementing the implicit equal-weights particle filter (IEWPF) scheme of Zhu et al. [16] in the weak constraint 4D-Var framework, the updated model state of particle i is computed as:

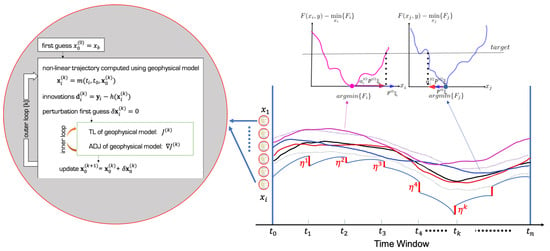

where the random vector is drawn from the implicit proposal density, and the scale factor is a scalar chosen by setting the weights of all particles to the same target weight [16]. In the original formulation of Zhu et al. [16], the scale factor has an analytical solution in terms of the Lambert W function [25]. Pseudo-code for the IEWVPS is provided in Algorithm 1 and a schematic diagram of the IEWVPS is shown in Figure 1. In the implementation of the IEWVPS, the weak constraint 4D-Var can be implemented in fully nonlinear or incremental form.

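| Algorithm 1 The implicit equal-weights variational particle smoother (IEWVPS). |

| (1) Run ensemble weak constraint 4D-Var and save the minimizer and the minimum of the cost function for each particle. |

| (2) Sample the random vector from the implicit proposal density. |

| (3) Select the scale factor judiciously to ensure that all particle weights are equal. |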

| (4) Calculate the random part according to Section 2.3. |

| (5) Update the model state using Equation (9). |

| (6) Go to the next assimilation window and repeat steps (1) to (5). |
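Steps (2) to (5) of Algorithm 1 can be sketched as follows. This is a minimal illustration that assumes Equation (9) has the IEWPF form of Zhu et al. [16], i.e., the analysis plus the square root of the scale factor times a square root of P applied to a standard normal vector. The toy covariance, the stand-in analyses, and the placeholder scale factors are hypothetical; a real implementation would take the analyses from weak constraint 4D-Var and solve for the scale factors as described in Section 2.2.

```python
import numpy as np

def iewvps_update(x_analysis, p_sqrt, alpha, xi):
    """Deterministic 4D-Var analysis plus scaled random part (assumed form of Eq. (9))."""
    return x_analysis + np.sqrt(alpha) * (p_sqrt @ xi)

rng = np.random.default_rng(1)
nx, n_particles = 4, 3

P = np.diag([2.0, 1.0, 1.0, 0.5])                    # toy proposal covariance
p_sqrt = np.linalg.cholesky(P)                       # satisfies p_sqrt @ p_sqrt.T == P

analyses = rng.standard_normal((n_particles, nx))    # stand-in for step (1)
alphas = np.ones(n_particles)                        # placeholder for step (3)

updated = np.empty_like(analyses)
for i in range(n_particles):
    xi = rng.standard_normal(nx)                     # step (2): draw the random vector
    updated[i] = iewvps_update(analyses[i], p_sqrt, alphas[i], xi)  # steps (4)-(5)

print(updated)
```

The split into a deterministic part (the analysis) and a random part (the scaled square-root term) is what allows the random part to be estimated separately from a limited ensemble.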

Figure 1.

Schematic diagram of the implicit equal-weights variational particle smoother (IEWVPS).

2.2. The Scale Factor

In the IEWPF method, the scale factor is an important parameter, which is selected by setting all particle weights to the target weight. In this section, we extend the calculation of the scale factor in Zhu et al. [16] to the 4D model state.

As mentioned above, we draw samples implicitly from a Gaussian proposal density instead of the original proposal density. These two PDFs are related by:

where the additional factor denotes the absolute value of the determinant of the Jacobian matrix of the transformation from the random vector to the model state. This transformation is defined by Equation (9). The weight of particle i is given by:

Neglecting and taking of both sides gives:

According to Zhu et al. [16], the Jacobian determinant can be reduced to:

Using and , the becomes:

Setting the weights of all particles to the target weight is equivalent to setting the right-hand side of Equation (14) to a constant C:

and leads to:

where the constant term has been absorbed into C. Although Equations (15) and (16) have analytical solutions, they are solved by the Newton iterative method for practical reasons. As discussed in Zhu et al. [16] and Skauvold et al. [17], there exist two branches of the solution for the scale factor. Sampling in different branches yields different performances. In this study, as in Zhu et al. [16], each branch is chosen randomly with a probability of 50%.
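The Newton iteration and the two-branch structure can be illustrated on a scalar equation. The sketch below assumes an equal-weights condition of the illustrative form (α − 1)γ − N_x ln α = c, similar to the large-dimension limit discussed for the IEWPF [16]; this form and the symbols γ, N_x, and c are assumptions made for the example and are not a reproduction of Equations (15) and (16). The function is convex in α with its minimum at α = N_x/γ, so one root lies on each side of that point.

```python
import math
import random

def f(alpha, gamma, nx, c):
    """Residual of the assumed equal-weights condition."""
    return (alpha - 1.0) * gamma - nx * math.log(alpha) - c

def solve_alpha(gamma, nx, c, branch, tol=1e-12, max_iter=100):
    """Newton iteration for one root; branch is 'lower' or 'upper'."""
    alpha_min = nx / gamma                      # location of the minimum of f
    if f(alpha_min, gamma, nx, c) > 0.0:
        raise ValueError("no real solution for this target constant")
    factor = 0.5 if branch == "lower" else 2.0
    alpha = alpha_min * factor
    while f(alpha, gamma, nx, c) <= 0.0:        # move outward until f > 0
        alpha *= factor
    # Starting where f > 0, Newton on a convex function converges monotonically.
    for _ in range(max_iter):
        step = f(alpha, gamma, nx, c) / (gamma - nx / alpha)
        alpha -= step
        if abs(step) < tol * alpha:
            break
    return alpha

gamma, nx, c = 40.0, 40, 2.0
alpha_lower = solve_alpha(gamma, nx, c, "lower")
alpha_upper = solve_alpha(gamma, nx, c, "upper")

# Random 50%/50% choice between the two branches.
rng = random.Random(0)
alpha = alpha_lower if rng.random() < 0.5 else alpha_upper
print(alpha_lower, alpha_upper, alpha)
```

The lower root shrinks the random part and the upper root inflates it, which is why the choice of branch affects the ensemble spread.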

2.3. The Expression of the P Matrix

To implement the new algorithm or the IEWPF, one major problem is the calculation of the covariance of the proposal density (P matrix). In the IEWPF, P is given explicitly, which is difficult to calculate in real geophysical systems. In our method and in the method of Morzfeld et al. [11], P has a connection with the Hessian matrix of the cost function of 4D-Var, which is also difficult to calculate explicitly in real high-dimensional problems. In this study, we estimate the P matrix implicitly using a limited ensemble.

Given the model state at one time step, the state at the next time step is given by:

where the model operator denotes forward integration of the dynamical model, and the additive term represents Gaussian model error with mean zero and covariance Q. Following the notation in El-Said [26], we define the four-dimensional model state and model error as follows:

z and p are linked by the 4D model operator:

where the 4D model operator is:

With this formulation, the cost function can be written as:

Here the background term uses the 4D background state. The Hessian of the cost function is as follows:

The operators L and H are written as:

where M is a linearisation of the forward model and H is a linearisation of the observation operator. Therefore, P in Equation (9) is related to S by:

Extracting out of parentheses, we get:

Performing a singular value decomposition (SVD) of : , we can estimate directly:

In this formulation, the matrices involved are expressed explicitly, which is feasible only for low-dimensional problems. The dimension of the 4D model state is the product of the model dimension and the number of time levels in the assimilation window, and P is a square matrix of that dimension. When the model dimension is high, or the assimilation window is long, it is difficult to express P and the related matrices explicitly. Since only the random part of Equation (9) is needed, we do not need to calculate the whole Hessian of the cost function; the required quantities can be estimated using the tangent linear and adjoint models.

Define the ensemble of as , where is the particle number. Let , where is the ensemble mean of . Instead of performing SVD of , we perform SVD of . We now get :
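The idea of replacing an explicit matrix decomposition by an SVD of ensemble anomalies can be sketched generically. The following is a minimal illustration, assuming a generic ensemble stored column-wise and the usual normalization by the square root of the ensemble size minus one; it is not the exact quantity used in this study, and the function and variable names are hypothetical.

```python
import numpy as np

def ensemble_sqrt(ensemble):
    """Return U, s such that (U * s) @ (U * s).T is the sample covariance.

    ensemble : array (n_state, n_members), one column per member
    """
    n_members = ensemble.shape[1]
    anomalies = ensemble - ensemble.mean(axis=1, keepdims=True)
    anomalies = anomalies / np.sqrt(n_members - 1)
    # Thin SVD: the cost scales with the ensemble size, not with n_state**2.
    U, s, _ = np.linalg.svd(anomalies, full_matrices=False)
    return U, s

rng = np.random.default_rng(2)
ens = rng.standard_normal((200, 20))        # 200 state variables, 20 members
U, s = ensemble_sqrt(ens)

xi = rng.standard_normal(s.size)            # random vector in the ensemble subspace
random_part = U @ (s * xi)                  # square-root product without forming the covariance
print(random_part.shape)
```

Because only products with the thin factors are needed, the full covariance matrix is never stored, and the anomalies of different members can be computed independently.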

3. Numerical Experiments

In this section, the new scheme is tested on the Lorenz96 model [27], which is given by:

$$\frac{dx_j}{dt} = (x_{j+1} - x_{j-2})\,x_{j-1} - x_j + F, \qquad j = 1, \ldots, N_x,$$

where the indices wrap around, so that $x_{-1} = x_{N_x-1}$, $x_0 = x_{N_x}$, and $x_{N_x+1} = x_1$, and $N_x$ is the model dimension. F is often set to 8 for chaotic behavior. One feature of the Lorenz96 model is that it is a chaotic system, as are the atmospheric and oceanic systems. Another is that the model dimension can be easily extended. Thus, the performance of the new scheme can be tested from low- to high-dimensional problems. The performance of the IEWVPS is compared with ensemble 4D-Var (En4DVar), which is a Monte Carlo method [28]. The En4DVar is an ensemble of weak constraint 4D-Var analysis cycles taking account of observations, boundary, forcing, and model error sources. With a sufficiently large ensemble size, it has the ability to handle non-Gaussian PDFs [24]. In our study, only the initial conditions and observations are perturbed according to their pre-specified error covariance matrices to account for the uncertainties. There is no information exchange between ensemble members; thus, the model state of each ensemble member (or particle) depends only on itself. However, in the IEWVPS, the model state of each particle depends on all particles. In this study, the weak constraint 4D-Var scheme is from El-Said [26]. The results are also compared with the IEWPF and LETKF methods.
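A minimal sketch of the Lorenz96 model is given below for readers who want to reproduce the test bed. It uses the standard form of the model with cyclic indices; the fourth-order Runge-Kutta scheme and the time step of 0.05 are common choices assumed here and are not taken from this study.

```python
import numpy as np

def lorenz96_tendency(x, forcing=8.0):
    """dx_j/dt = (x_{j+1} - x_{j-2}) * x_{j-1} - x_j + F with cyclic indices."""
    return (np.roll(x, -1) - np.roll(x, 2)) * np.roll(x, 1) - x + forcing

def rk4_step(x, dt=0.05, forcing=8.0):
    """One fourth-order Runge-Kutta step (dt is an assumed value)."""
    k1 = lorenz96_tendency(x, forcing)
    k2 = lorenz96_tendency(x + 0.5 * dt * k1, forcing)
    k3 = lorenz96_tendency(x + 0.5 * dt * k2, forcing)
    k4 = lorenz96_tendency(x + dt * k3, forcing)
    return x + dt * (k1 + 2.0 * k2 + 2.0 * k3 + k4) / 6.0

# Spin up from a slightly perturbed equilibrium; the dimension is easy to change.
n_dim = 40
state = np.full(n_dim, 8.0)
state[n_dim // 2] += 0.01
for _ in range(50):
    state = rk4_step(state)
print(state[:5])
```

The uniform state with every variable equal to F is an unstable equilibrium, so a small perturbation is enough to start the chaotic evolution.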

3.1. Comparison on Different Model Dimensions

In this study, the new scheme has been tested with 40, 100, 250, and 400 dimensions, representing low- to high-dimensional problems. All IEWVPS experiments use 50 particles (except the 400-dimensional experiments, which use 20 particles) and the same assimilation window (10 model steps). The truth and perturbed observations are generated as follows. First, the model is integrated for a spin-up period of 50 steps, and the final spin-up state is used as the true initial condition. Then, the model is integrated for 200 assimilation windows to generate the truth. Perturbed observations are sampled from the truth every fifth step. The IEWVPS and En4DVar are smoother methods, which assimilate all observations within a 10-step assimilation window at once. In contrast, the IEWPF and LETKF are filter methods, which assimilate observations only at the analysis time step. Despite this difference, the observation frequency is the same in all experiments, which means that the total number and the positions of the observations are identical in the smoother and filter experiments. An adaptive covariance localization has been used in the LETKF. In these experiments, the model error covariance matrix Q and the background error covariance matrix B are specified as tridiagonal matrices, while the observation error covariance matrix R is a diagonal matrix:

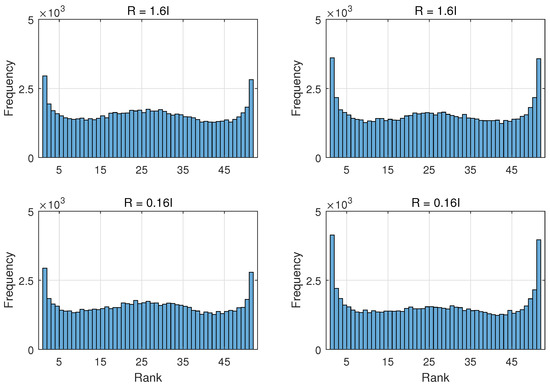

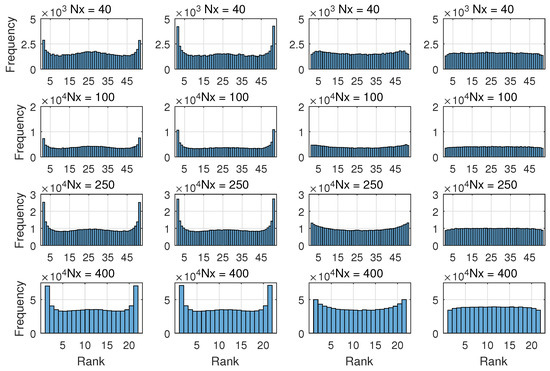

It should be noted that the error variance in this study is larger than in Zhu et al. [16] and Skauvold et al. [17]. Although the RMSE is smaller with the smaller error variance than with the larger one, as expected, the ratio of RMSE to ensemble spread does not change much, as shown in Table 1. Figure 2 compares rank histograms of one run for different error variances. A similar shape is found for both settings. Thus, in what follows, we set the variances of B, Q, and R to 2.0, 1.0, and 1.6, respectively.

Table 1.

The root mean square error (RMSE), spread and their ratio for different error variance.

Figure 2.

The rank histograms calculated from posterior particles generated by the IEWVPS (left panel) and En4DVar (right panel) for the two error variance settings (top and bottom panels). Ranks are aggregated over all steady-state time steps and all state elements.

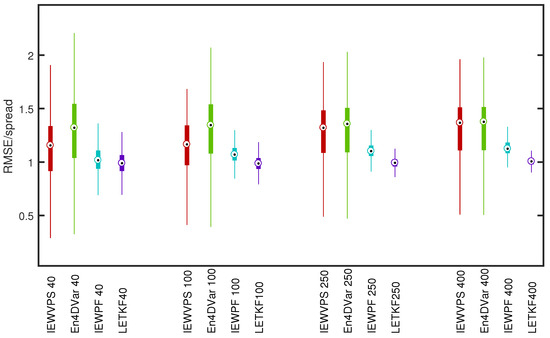

The ratio of the RMSE to the ensemble spread, averaged over 2000 model time steps, has been used to evaluate the performance for different dimensions. A ratio close to 1 indicates that the RMSE and ensemble spread are matched. Figure 3 illustrates the averaged ratio of the RMSE to ensemble spread as a function of model dimension. The mean ratio is listed in Table 2. In general, the ratio of the RMSE to ensemble spread increases as the model dimension increases. In the IEWVPS experiments, the ratio is closer to 1.0 than in the En4DVar experiments but larger than in the IEWPF and LETKF experiments. Although the LETKF and IEWPF provide the best performance in terms of the ratio, the RMSE is not smallest in the LETKF and IEWPF experiments.
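The RMSE-to-spread diagnostic can be computed as in the following minimal sketch. It assumes one common definition (RMSE of the ensemble mean against the truth, and spread as the square root of the mean unbiased ensemble variance); the exact definitions used in this study may differ, and the function name is hypothetical.

```python
import numpy as np

def rmse_and_spread(ensemble, truth):
    """Return RMSE of the ensemble mean, ensemble spread, and their ratio.

    ensemble : array (n_members, n_state)
    truth    : array (n_state,)
    """
    mean = ensemble.mean(axis=0)
    rmse = np.sqrt(np.mean((mean - truth) ** 2))
    spread = np.sqrt(np.mean(ensemble.var(axis=0, ddof=1)))
    return rmse, spread, rmse / spread

# Tiny worked example: two members, two state variables.
ensemble = np.array([[0.0, 0.0],
                     [2.0, 2.0]])
truth = np.array([2.0, 2.0])
rmse, spread, ratio = rmse_and_spread(ensemble, truth)
print(rmse, spread, ratio)
```

A ratio above 1 means the ensemble is under-dispersive (the spread underestimates the error), while a ratio below 1 means it is over-dispersive.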

Figure 3.

The ratio of the averaged posterior RMSE to ensemble spread for the different model dimensions. Red for the IEWVPS experiments; green for the En4DVar experiments; cyan for the IEWPF experiments; and purple for the LETKF experiments. The edges of the bar represent the minimum and maximum values, the filled box in the bar represents 99.3% of the data, and the circle in the bar represents the median value.

Table 2.

The mean RMSE, ensemble spread, and the ratio of RMSE to ensemble spread.

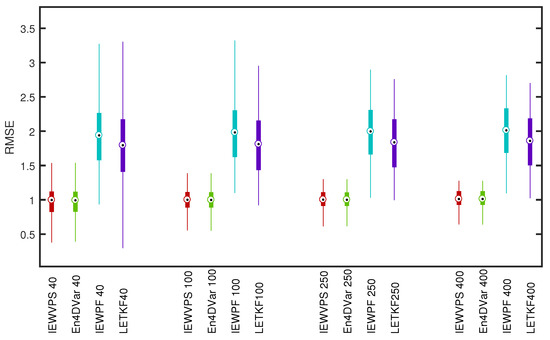

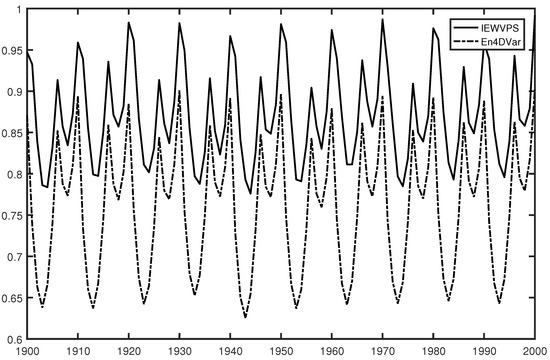

Since the deterministic part in Equation (9) is provided by weak constraint 4D-Var, the mean RMSE in the IEWVPS experiments is similar to that in the En4DVar experiments, as shown in Figure 4. Compared with the IEWPF and LETKF experiments, a substantial improvement is seen in the IEWVPS experiments using the same observation frequency: the mean RMSE is reduced from ∼2.0 to ∼1.0 (Figure 4 and Table 2). With the additional random part introduced in Equation (9), the IEWVPS increases the ensemble spread relative to the En4DVar (Figure 5). This means that the IEWVPS can maintain the same level of RMSE as the En4DVar and increase the ensemble spread at the same time. Figure 6 compares the rank histograms of one run for different model sizes. A rank histogram is generated by ranking the truth or observation within the set of ensemble members sorted in ascending order, over a period of time. It can be used to evaluate the reliability of the ensemble forecast qualitatively and to diagnose the ensemble spread. Usually, a uniform histogram is desirable, which means that the ensemble spread matches the RMSE. A tilted shape indicates that a systematic bias exists, a U-shaped histogram indicates too little spread, and a humped histogram indicates that the ensemble spread is too large [29]. As shown in Figure 6, U-shaped rank histograms occur when the model dimension is larger than 100 in the IEWVPS and En4DVar experiments, indicating that the ensemble spread is smaller than the RMSE.
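The construction of a rank histogram can be sketched in a few lines. This is a minimal illustration in which the rank of the truth is the number of ensemble members below it; ties between the truth and members are ignored, and the function name and array layout are assumptions made for the example.

```python
import numpy as np

def rank_histogram(ensemble, truth):
    """Count how often the truth falls in each of the n_members + 1 rank bins.

    ensemble : array (n_cases, n_members), one row per time step and state element
    truth    : array (n_cases,)
    """
    ranks = np.sum(ensemble < truth[:, None], axis=1)   # members below the truth
    return np.bincount(ranks, minlength=ensemble.shape[1] + 1)

# Small hand-checkable example with three members.
members = np.array([[1.0, 2.0, 3.0],
                    [1.0, 2.0, 3.0],
                    [1.0, 2.0, 3.0]])
truth = np.array([0.5, 2.5, 10.0])
counts = rank_histogram(members, truth)
print(counts)

# A statistically consistent ensemble gives a roughly flat histogram.
rng = np.random.default_rng(3)
flat_counts = rank_histogram(rng.standard_normal((5000, 9)), rng.standard_normal(5000))
print(flat_counts)
```

If the members were drawn with too small a variance relative to the truth, the outermost bins would fill up and the histogram would take the U shape discussed above.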

Figure 4.

The averaged RMSE for the different model dimensions. Red for the IEWVPS experiments; green for the En4DVar experiments; cyan for the IEWPF experiments; and purple for the LETKF experiments. The edges of the bar represent the minimum and maximum values, the filled box in the bar represents 99.3% of the data, and the circle in the bar represents the median value.

Figure 5.

The ensemble spread over the last 100 model steps. The model dimension is .

Figure 6.

The rank histograms calculated from posterior particles generated by the IEWVPS (first column), En4DVar (second column), IEWPF (third column), and LETKF (last column). Ranks are aggregated over all steady-state time steps and all state elements.

3.2. Influence of Ensemble Size

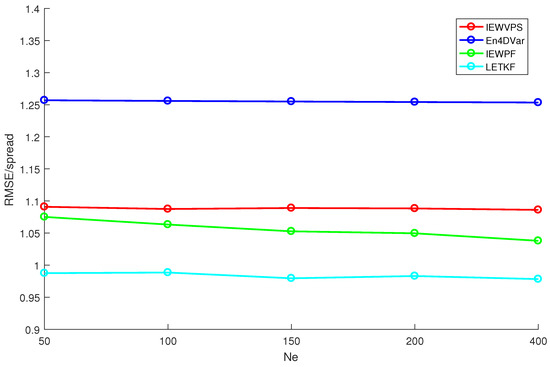

In the standard PF approach, the number of particles must increase exponentially with the number of independent observations to prevent collapse [3]. To test the performance for different ensemble sizes, we set the model dimension to 100 and increase the ensemble size from 50 to 100, 150, 200, and 400. Results are shown in Figure 7. In all experiments, the ratio of RMSE to spread is not sensitive to the ensemble size. The LETKF and IEWPF outperform the IEWVPS and En4DVar in maintaining the balance between the RMSE and ensemble spread. The RMSE is also not sensitive to the ensemble size with the background and observation error covariance matrices specified in Section 3.1 (not shown). Although there is no significant improvement in the IEWVPS or other experiments when the ensemble size is increased, the results show that a small ensemble (fewer than 100 members) yields results comparable to those of a large ensemble. In a real geophysical system, the ensemble size is usually 50–100. Thus, the ensemble size needed to ensure good performance of the IEWVPS (fewer than 100 members) is affordable for real geophysical applications. Generally speaking, even with a small ensemble size, the new approach performs well and does not degenerate.

Figure 7.

Mean RMSE/spread as a function of ensemble size. Red for the IEWVPS experiments; blue for the En4DVar experiments; green for the IEWPF experiments; cyan for the LETKF experiments. The model dimension is 100.

3.3. Deterministic Observation Experiments

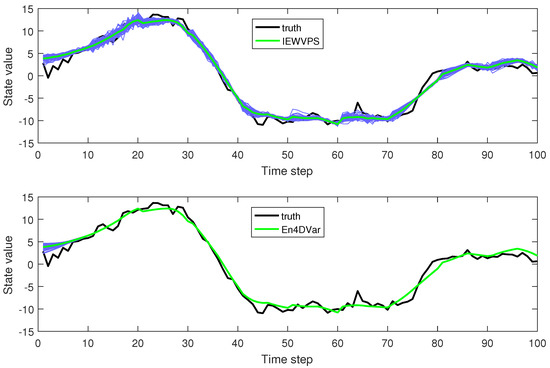

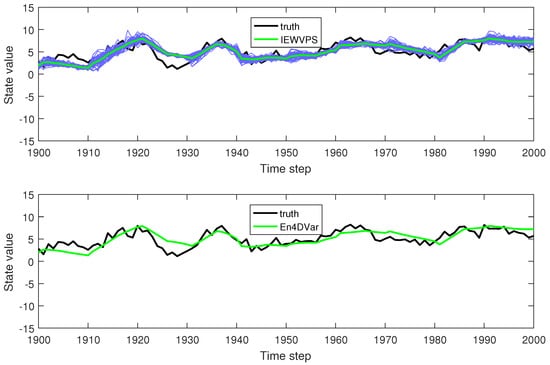

In the above experiments, to describe the uncertainties, perturbed observations are assimilated, that is, the observation ensemble size is the same as the number of particles. In atmospheric or oceanic data assimilation, we often do not add noise to the observations, that is, in variational methods and in the EnKF, only one set of observations is used. We do experiments in this way to see how the IEWVPS maintains the capability of describing uncertainty. The results of the first 100 and last 100 model steps are shown in Figure 8 and Figure 9. When the observations are deterministic, the En4DVar experiment collapses after one assimilation window (10 model steps), while the IEWVPS experiment does not collapse.

Figure 8.

Trajectories of the No. 50 variable during the first 100 model steps. The black line represents truth, blue lines represent the evolution of each particle, and the green line represents the ensemble mean. Experiments are implemented with and .

Figure 9.

Trajectories of the No. 50 variable during the last 100 model steps. The black line represents truth, blue lines represent the evolution of each particle, and the green line represents the ensemble mean. Experiments are implemented with and .

4. Discussions and Conclusions

In this study, we use the weak constraint 4D-Var as proposal density in a standard IEWPF framework [16]. To ensure good performance in the IEWPF, a relaxing term, which forces the model state towards the future observation [13,16], must be included. There is no need for the relaxing scheme in this new approach, since the deterministic component is provided by the analysis of weak constraint 4D-Var, which guarantees the accuracy.

This new approach is tested on the Lorenz96 model with different model dimensions. As shown in Section 3, it is applicable to both low and high-dimensional problems. A comparison with the En4DVar reveals that the ensemble spread is larger in the IEWVPS experiments than in the En4DVar experiments while the RMSE is on the same level. If observations are considered to be deterministic, as is usually done in 4D-Var and for the EnKF, the ensemble 4D-Var collapses quickly after one assimilation window, whereas the IEWVPS performs well and does not degenerate. Compared with IEWPF and LETKF experiments, the RMSE in the IEWVPS is much smaller using the same observation frequency. With the pre-specified error covariance, the RMSE of the IEWVPS is about 0.93, while the RMSE of the IEWPF and LETKF is about 1.9. Not only is the RMSE reduced in the IEWVPS experiment, the ensemble spread (∼0.8) is also reduced. As a result, the ensemble spread is smaller than RMSE in both IEWVPS and En4DVar experiments, although perturbed observations are used.

As pointed out by Snyder et al. [3], the number of particles must increase exponentially with the number of independent observations to prevent filter degeneracy. We test the performance on the 100-dimensional Lorenz96 model with different ensemble sizes. The results show that, even with a small ensemble size (less than 100), the IEWVPS can perform well and yield results comparable to those of a large ensemble. In real atmospheric or oceanic applications, the ensemble size is usually less than 100. Thus, the curse of dimensionality is not expected to hinder the application of the IEWVPS to real atmosphere or ocean systems.

Our method is implemented in the standard IEWPF framework. As proven by Skauvold et al. [17], the gap in the IEWPF will lead to systematic bias in the predictions. This systematic bias can be eliminated by using a two-stage IEWPF method [17]. U-shaped rank histograms are seen for both low- and high-dimensional Lorenz96 models, indicating that the ensemble spread is smaller than the RMSE. One possible reason for the U-shaped rank histograms is the systematic underestimation of variance. Another possible reason is the estimation of the P matrix. To avoid direct calculation of the Hessian matrix and its inverse, we directly estimate the random part using a limited ensemble. The P matrix is specific to each particle, but, to reduce computational cost, we assume that it is the same for all particles. These factors lead to a smaller ensemble spread. However, the direct estimation using a limited ensemble has the advantage that it can be implemented in parallel. Because of this parallelism, the estimation is computationally cheap compared with the cost of ensemble 4D-Var. Thus, the new approach has the potential to be applied to practical geophysical systems, since ensemble 4D-Var itself can also be implemented in parallel.

To ensure good performance, other techniques could be tested and used, such as posterior inflation [30] and kernel density distribution mapping (KDDM, McGinnis et al. [31]). We plan to implement the IEWVPS in the Regional Ocean Modeling System (ROMS) or the Weather Research and Forecasting Model (WRF) in the near future.

Author Contributions

Conceptualization, P.W.; methodology, P.W., Y.C., M.Z., and W.Z.; software, P.W. and M.Z.; validation, P.W. and M.Z.; formal analysis, P.W.; data curation, P.W.; writing—original draft preparation, P.W. and M.Z.; writing—review and editing, M.Z. and W.Z.; visualization, P.W. and M.Z.; supervision, M.Z. and W.Z.; project administration, W.Z.; funding acquisition, M.Z. and Y.C. P.W. and M.Z. are co-first authors of the article. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Key R&D Program of China (2018YFC1406202), the National Natural Science Foundation of China (NSFC, Grant No. 41675097, 41830964), and the Hunan Provincial Innovation Foundation For Postgraduate (No. CX2017B034).

Acknowledgments

The weak constraint 4D-Var code with the Lorenz96 model used in this study was provided by El-Said and can be downloaded from https://github.com/draelsaid/AES-4DVAR-Suite.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| 4D-Var | 4-dimensional variational |

| ADJ | adjoint model |

| DA | data assimilation |

| EnKF | ensemble Kalman filter |

| En4DVar | ensemble 4D-Var |

| IEWPF | implicit Equal-Weights Particle Filter |

| IEWVPS | implicit Equal-Weights Variational Particle Smoother |

| KDDM | kernel density distribution mapping |

| NWP | numerical weather prediction |

| PDF | probability density function |

| PF | particle filter |

| RMSE | Root Mean Squared Error |

| ROMS | Regional Ocean Modeling System |

| TL | tangent linear model |

| varPS | variational particle smoother |

| WEKF | weighted ensemble Kalman filter |

References

- Kuznetsov, L.; Ide, K.; Jones, C.K. A Method For Assimilation Of Lagrangian Data. Mon. Wea. Rev. 2003, 131, 2247–2260.

- Spiller, E.T.; Budhiraja, A.; Ide, K.; Jones, C.K. Modified Particle Filter Methods For Assimilating Lagrangian Data Into A Point-Vortex Model. Phys. D 2008, 237, 1498–1506.

- Snyder, C.; Bengtsson, T.; Bickel, P.; Anderson, J. Obstacles to high-dimensional particle filtering. Mon. Wea. Rev. 2008, 136, 4629–4640.

- Snyder, C.; Bengtsson, T.; Morzfeld, M. Performance bounds for particle filters using the optimal proposal. Mon. Wea. Rev. 2015, 143, 4750–4761.

- van Leeuwen, P.J. Particle filtering in geophysical systems. Mon. Wea. Rev. 2009, 137, 4089–4114.

- Doucet, A.; de Freitas, N.; Gordon, N. Sequential Monte Carlo Methods in Practice; Springer: Berlin, Germany, 2001.

- Papadakis, N.; Mémin, É.; Cuzol, A.; Gengembre, N. Data Assimilation With The Weighted Ensemble Kalman Filter. Tellus A 2010, 62, 673–697.

- Burgers, G.; van Leeuwen, P.J.; Evensen, G. Analysis scheme in the ensemble Kalman filter. Mon. Wea. Rev. 1998, 126, 1719–1724.

- Morzfeld, M.; Hodyss, D.; Snyder, C. What the collapse of the ensemble Kalman filter tells us about particle filters. Tellus A Dyn. Meteorol. Oceanogr. 2017, 69, 1283809.

- Chen, Y.; Zhang, W.; Zhu, M. A Localized Weighted Ensemble Kalman Filter for High-Dimensional Systems. Q. J. R. Meteorol. Soc. 2019.

- Morzfeld, M.; Hodyss, D.; Poterjoy, J. Variational particle smoothers and their localization. Q. J. R. Meteorol. Soc. 2018, 144, 806–825.

- van Leeuwen, P.J. Nonlinear data assimilation in geosciences: An extremely efficient particle filter. Q. J. R. Meteorol. Soc. 2010, 136, 1991–1999.

- Ades, M.; van Leeuwen, P.J. An exploration of the equivalent weights particle filter. Q. J. R. Meteorol. Soc. 2013, 139, 820–840.

- Ades, M.; van Leeuwen, P.J. The effect of the equivalent-weights particle filter on dynamical balance in a primitive equation model. Mon. Wea. Rev. 2015, 143, 581–596.

- Browne, P.A.; van Leeuwen, P.J. Twin experiments with the equivalent weights particle filter and HadCM3. Q. J. R. Meteorol. Soc. 2015, 141, 3399–3414.

- Zhu, M.; van Leeuwen, P.J.; Amezcua, J. Implicit equal-weights particle filter. Q. J. R. Meteorol. Soc. 2016, 142, 1904–1919.

- Skauvold, J.; Eidsvik, J.; van Leeuwen, P.J.; Amezcua, J. A revised implicit equal-weights particle filter. Q. J. R. Meteorol. Soc. 2019, 145, 1490–1502.

- van Leeuwen, P.J.; Künsch, H.R.; Nerger, L.; Potthast, R.; Reich, S. Particle filters for high-dimensional geoscience applications: A review. Q. J. R. Meteorol. Soc. 2019, 145, 2335–2365.

- Chorin, A.J.; Tu, X. Implicit sampling for particle filters. Proc. Natl. Acad. Sci. USA 2009, 106, 17249–17254.

- Talagrand, O.; Courtier, P. Variational assimilation of meteorological observations with the adjoint vorticity equation. I: Theory. Q. J. R. Meteorol. Soc. 1987, 113, 1311–1328.

- Evensen, G. Sequential data assimilation with a nonlinear quasi-geostrophic model using Monte Carlo methods to forecast error statistics. J. Geophys. Res. Ocean. 1994, 99, 10143–10162.

- Anderson, J.L. An ensemble adjustment Kalman filter for data assimilation. Mon. Wea. Rev. 2001, 129, 2884–2903.

- Hunt, B.R.; Kostelich, E.J.; Szunyogh, I. Efficient data assimilation for spatiotemporal chaos: A local ensemble transform Kalman filter. Phys. D 2007, 230, 112–126.

- Bonavita, M.; Trémolet, Y.; Holm, E.E.A. A strategy for data assimilation. ECMWF Tech. Memo. 2017, 2017.

- Weisstein, E.W. Lambert W-function. From MathWorld—A Wolfram Web Resource. 2002. Available online: http://mathworld.wolfram.com/LambertW-Function.html (accessed on 12 February 2020).

- El-Said, A. Conditioning of the Weak-Constraint Variational Data Assimilation Problem for Numerical Weather Prediction. Ph.D. Thesis, University of Reading, Reading, UK, 2015.

- Lorenz, E.N. Predictability: A problem partly solved. In Proceedings of the Seminar on Predictability, Reading, UK, 4–8 September 1995; pp. 1–18.

- Bonavita, M.; Isaksen, L.; Hólm, E. On the use of EDA background error variances in the ECMWF 4D-Var. Q. J. R. Meteorol. Soc. 2012, 138, 1540–1559.

- Hamill, T.M. Interpretation of Rank Histograms for Verifying Ensemble Forecasts. Mon. Wea. Rev. 2001, 129, 550–560.

- Anderson, J.L. An adaptive covariance inflation error correction algorithm for ensemble filters. Tellus A 2007, 59, 210–224.

- McGinnis, S.; Nychka, D.; Mearns, L.O. A New Distribution Mapping Technique For Climate Model Bias Correction. In Machine Learning and Data Mining Approaches to Climate Science; Springer: Berlin, Germany, 2015; pp. 91–99.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).