Comparative Analysis of Deep Learning Models for Predicting Causative Regulatory Variants

Abstract

1. Introduction

2. Results

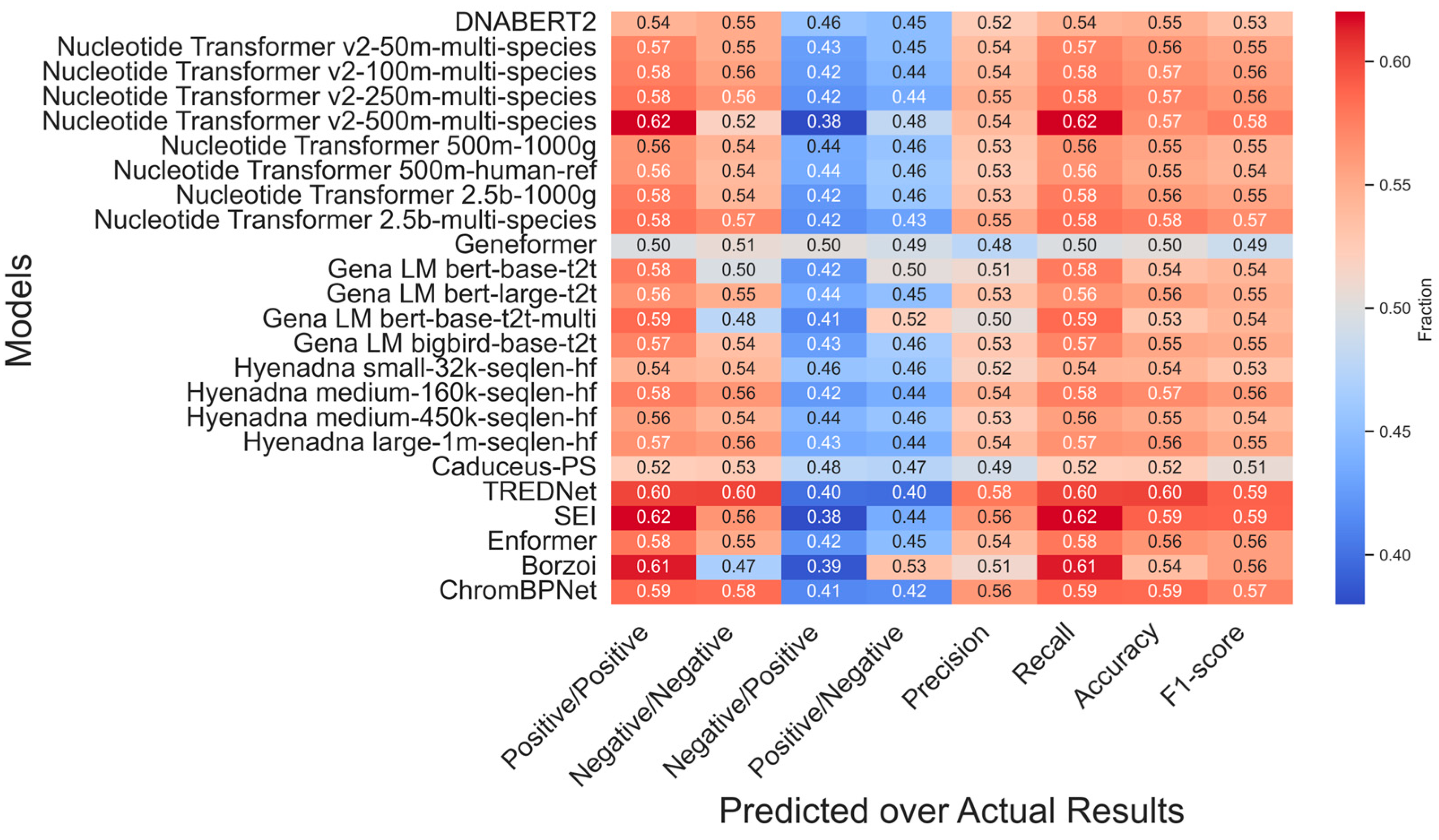

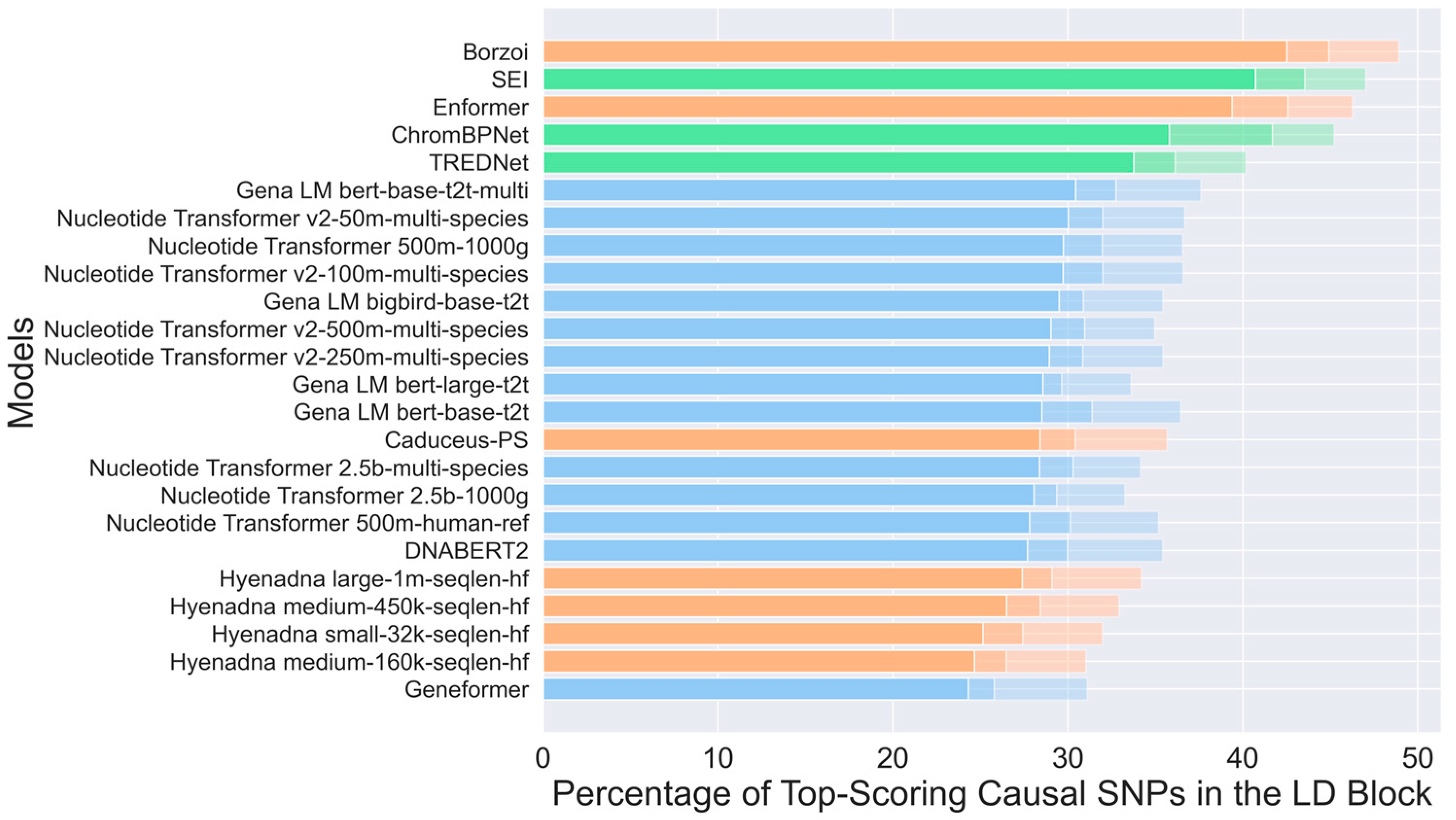

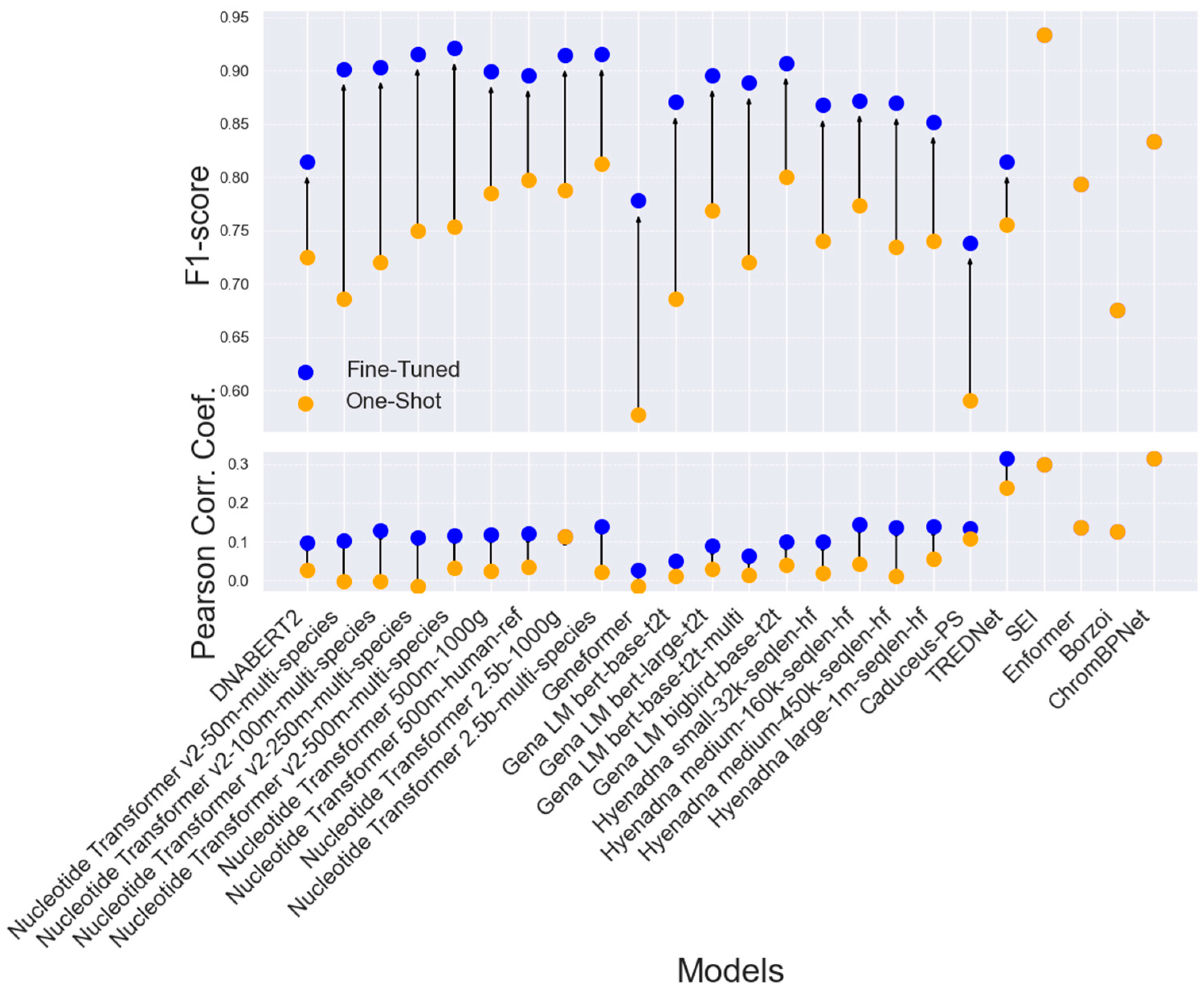

2.1. Comparison of Enhancer Variant Prediction Models

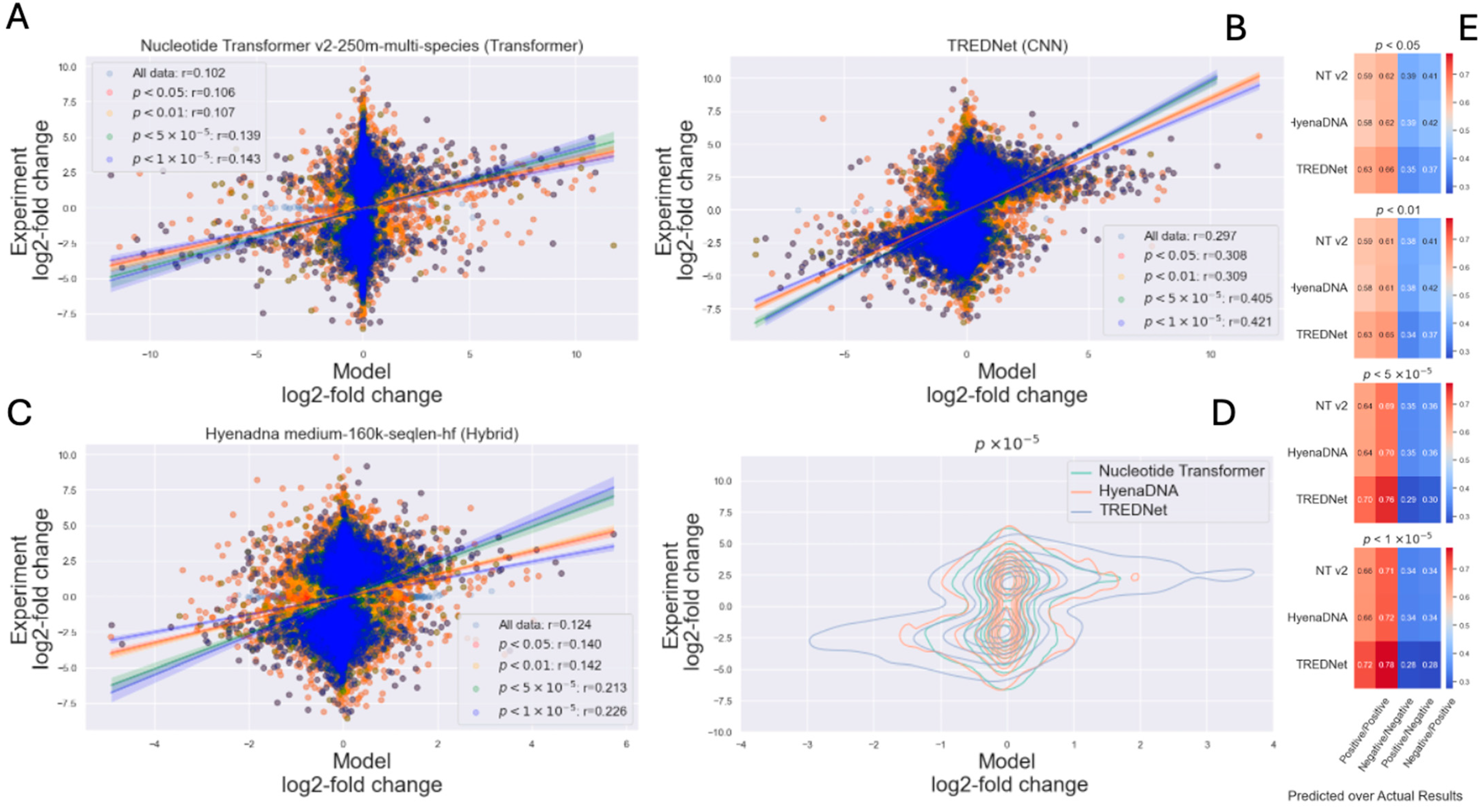

2.2. Impact of Certainty in Experimental Results

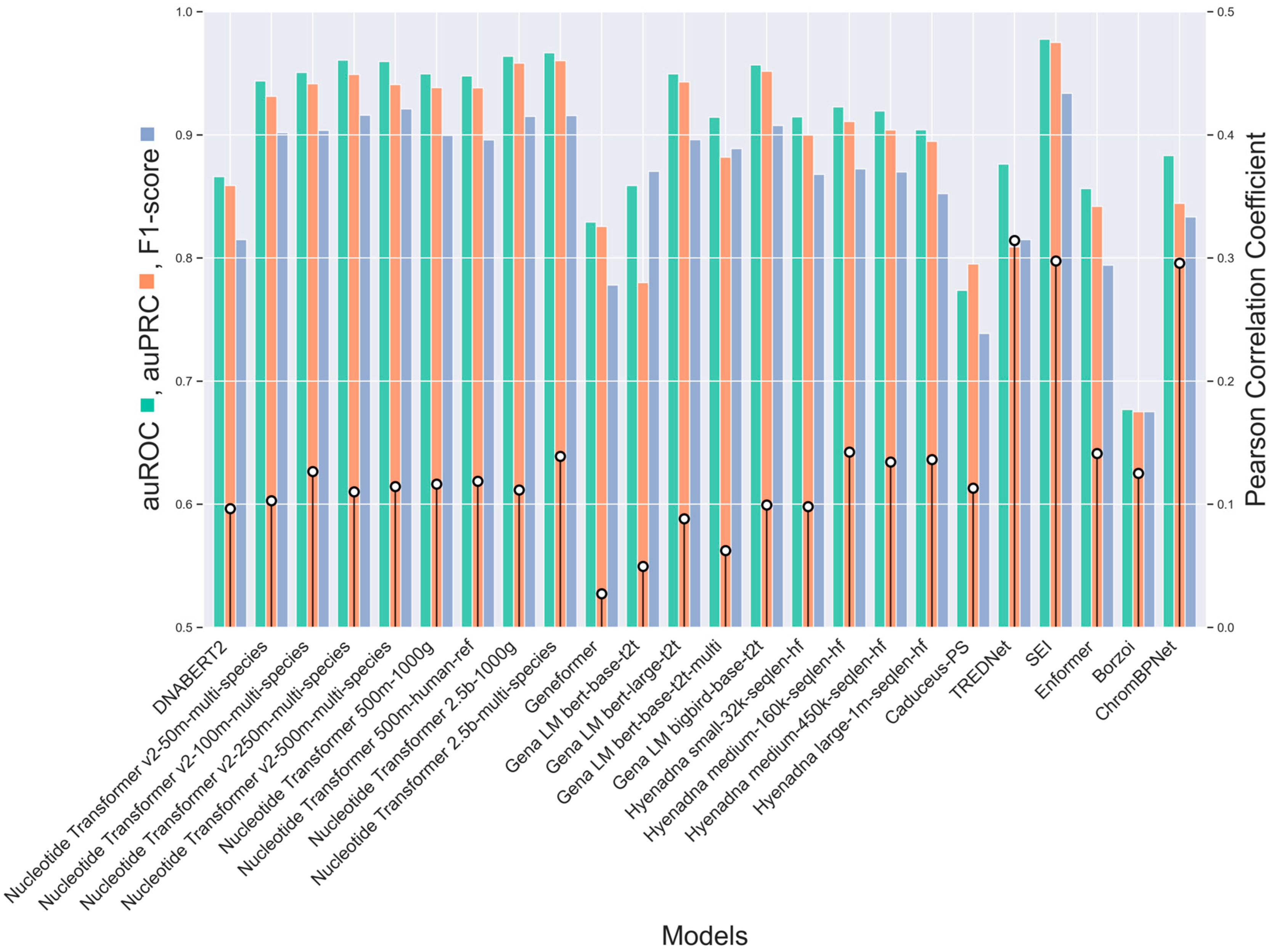

2.3. Enhancer Detection Models for Variant Effect Assessment

3. Discussion

3.1. CNN-, Transformer-, and Hybrid-Based Models

3.2. Impact of Experimental Data Quality and Model Generalization

4. Materials and Methods

4.1. Data Pre-Processing

4.2. Model Adaptation and Fine-Tuning

4.3. Validation and Post-Processing

4.4. Deep Learning Models

4.5. Training Data

4.6. Validation Data

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Uffelmann, E.; Huang, Q.Q.; Munung, N.S.; De Vries, J.; Okada, Y.; Martin, A.R.; Martin, H.C.; Lappalainen, T.; Posthuma, D. Genome-wide association studies. Nat. Rev. Methods Primers 2021, 1, 59.

- Visscher, P.M.; Wray, N.R.; Zhang, Q.; Sklar, P.; McCarthy, M.I.; Brown, M.A.; Yang, J. 10 Years of GWAS Discovery: Biology, Function, and Translation. Am. J. Hum. Genet. 2017, 101, 5–22.

- Knight, J.C. Approaches for establishing the function of regulatory genetic variants involved in disease. Genome Med. 2014, 6, 92.

- Albert, F.W.; Kruglyak, L. The role of regulatory variation in complex traits and disease. Nat. Rev. Genet. 2015, 16, 197–212.

- Ong, C.-T.; Corces, V.G. Enhancer function: New insights into the regulation of tissue-specific gene expression. Nat. Rev. Genet. 2011, 12, 283–293.

- Heintzman, N.D.; Stuart, R.K.; Hon, G.; Fu, Y.; Ching, C.W.; Hawkins, R.D.; Barrera, L.O.; Van Calcar, S.; Qu, C.; Ching, K.A.; et al. Distinct and predictive chromatin signatures of transcriptional promoters and enhancers in the human genome. Nat. Genet. 2007, 39, 311–318.

- Creyghton, M.P.; Cheng, A.W.; Welstead, G.G.; Kooistra, T.; Carey, B.W.; Steine, E.J.; Hanna, J.; Lodato, M.A.; Frampton, G.M.; Sharp, P.A.; et al. Histone H3K27ac separates active from poised enhancers and predicts developmental state. Proc. Natl. Acad. Sci. USA 2010, 107, 21931–21936.

- Thurman, R.E.; Rynes, E.; Humbert, R.; Vierstra, J.; Maurano, M.T.; Haugen, E.; Sheffield, N.C.; Stergachis, A.B.; Wang, H.; Vernot, B.; et al. The accessible chromatin landscape of the human genome. Nature 2012, 489, 75–82.

- Hnisz, D.; Abraham, B.J.; Lee, T.I.; Lau, A.; Saint-André, V.; Sigova, A.A.; Hoke, H.A.; Young, R.A. Super-Enhancers in the Control of Cell Identity and Disease. Cell 2013, 155, 934–947.

- Maurano, M.T.; Humbert, R.; Rynes, E.; Thurman, R.E.; Haugen, E.; Wang, H.; Reynolds, A.P.; Sandstrom, R.; Qu, H.; Brody, J.; et al. Systematic Localization of Common Disease-Associated Variation in Regulatory DNA. Science 2012, 337, 1190–1195.

- Farh, K.K.-H.; Marson, A.; Zhu, J.; Kleinewietfeld, M.; Housley, W.J.; Beik, S.; Shoresh, N.; Whitton, H.; Ryan, R.J.H.; Shishkin, A.A.; et al. Genetic and epigenetic fine mapping of causal autoimmune disease variants. Nature 2015, 518, 337–343.

- Catarino, R.R.; Stark, A. Assessing sufficiency and necessity of enhancer activities for gene expression and the mechanisms of transcription activation. Genes Dev. 2018, 32, 202–223.

- Melnikov, A.; Murugan, A.; Zhang, X.; Tesileanu, T.; Wang, L.; Rogov, P.; Feizi, S.; Gnirke, A.; Callan, C.G.; Kinney, J.B.; et al. Systematic dissection and optimization of inducible enhancers in human cells using a massively parallel reporter assay. Nat. Biotechnol. 2012, 30, 271–277.

- Tewhey, R.; Kotliar, D.; Park, D.S.; Liu, B.; Winnicki, S.; Reilly, S.K.; Andersen, K.G.; Mikkelsen, T.S.; Lander, E.S.; Schaffner, S.F.; et al. Direct Identification of Hundreds of Expression-Modulating Variants using a Multiplexed Reporter Assay. Cell 2016, 165, 1519–1529.

- Foutadakis, S.; Bourika, V.; Styliara, I.; Koufargyris, P.; Safarika, A.; Karakike, E. Machine learning tools for deciphering the regulatory logic of enhancers in health and disease. Front. Genet. 2025, 16, 1603687.

- Zhou, J.; Troyanskaya, O.G. Predicting effects of noncoding variants with deep learning–based sequence model. Nat. Methods 2015, 12, 931–934.

- Chen, K.M.; Wong, A.K.; Troyanskaya, O.G.; Zhou, J. A sequence-based global map of regulatory activity for deciphering human genetics. Nat. Genet. 2022, 54, 940–949.

- Hudaiberdiev, S.; Taylor, D.L.; Song, W.; Narisu, N.; Bhuiyan, R.M.; Taylor, H.J.; Tang, X.; Yan, T.; Swift, A.J.; Bonnycastle, L.L.; et al. Modeling islet enhancers using deep learning identifies candidate causal variants at loci associated with T2D and glycemic traits. Proc. Natl. Acad. Sci. USA 2023, 120, e2206612120.

- Pampari, A.; Shcherbina, A.; Kvon, E.Z.; Kosicki, M.; Nair, S.; Kundu, S.; Kathiria, A.S.; Risca, V.I.; Kuningas, K.; Alasoo, K.; et al. ChromBPNet: Bias factorized, base-resolution deep learning models of chromatin accessibility reveal cis-regulatory sequence syntax, transcription factor footprints and regulatory variants. bioRxiv 2025, 2024.12.25.630221.

- Ji, Y.; Zhou, Z.; Liu, H.; Davuluri, R.V. DNABERT: Pre-trained Bidirectional Encoder Representations from Transformers model for DNA-language in genome. Bioinformatics 2021, 37, 2112–2120.

- Zhou, Z.; Ji, Y.; Li, W.; Dutta, P.; Davuluri, R.; Liu, H. DNABERT-2: Efficient Foundation Model and Benchmark For Multi-Species Genome. arXiv 2023.

- Dalla-Torre, H.; Gonzalez, L.; Mendoza Revilla, J.; Lopez Carranza, N.; Henryk Grywaczewski, A.; Oteri, F.; Dallago, C.; Trop, E.; De Almeida, B.P.; Sirelkhatim, H.; et al. The Nucleotide Transformer: Building and Evaluating Robust Foundation Models for Human Genomics. bioRxiv 2023.

- De Almeida, B.P.; Dalla-Torre, H.; Richard, G.; Blum, C.; Hexemer, L.; Gelard, M.; Mendoza-Revilla, J.; Tang, Z.; Marin, F.I.; Emms, D.M.; et al. Annotating the genome at single-nucleotide resolution with DNA foundation models. bioRxiv 2024.

- Schiff, Y.; Kao, C.-H.; Gokaslan, A.; Dao, T.; Gu, A.; Kuleshov, V. Caduceus: Bi-Directional Equivariant Long-Range DNA Sequence Modeling. arXiv 2024.

- Avsec, Ž.; Agarwal, V.; Visentin, D.; Ledsam, J.R.; Grabska-Barwinska, A.; Taylor, K.R.; Assael, Y.; Jumper, J.; Kohli, P.; Kelley, D.R. Effective gene expression prediction from sequence by integrating long-range interactions. Nat. Methods 2021, 18, 1196–1203.

- Theodoris, C.V.; Xiao, L.; Chopra, A.; Chaffin, M.D.; Al Sayed, Z.R.; Hill, M.C.; Mantineo, H.; Brydon, E.M.; Zeng, Z.; Liu, X.S.; et al. Transfer learning enables predictions in network biology. Nature 2023, 618, 616–624.

- Consens, M.E.; Dufault, C.; Wainberg, M.; Forster, D.; Karimzadeh, M.; Goodarzi, H.; Theis, F.J.; Moses, A.; Wang, B. To Transformers and Beyond: Large Language Models for the Genome. arXiv 2023.

- Alharbi, W.S.; Rashid, M. A review of deep learning applications in human genomics using next-generation sequencing data. Hum. Genom. 2022, 16, 26.

- Yue, T.; Wang, Y.; Zhang, L.; Gu, C.; Xue, H.; Wang, W.; Lyu, Q.; Dun, Y. Deep Learning for Genomics: From Early Neural Nets to Modern Large Language Models. Int. J. Mol. Sci. 2023, 24, 15858.

- Patel, A.; Singhal, A.; Wang, A.; Pampari, A.; Kasowski, M.; Kundaje, A. DART-Eval: A Comprehensive DNA Language Model Evaluation Benchmark on Regulatory DNA. arXiv 2024.

- Wu, C.; Huang, J. Enhancer selectivity across cell types delineates three functionally distinct enhancer-promoter regulation patterns. BMC Genom. 2024, 25, 483.

- Fishman, V.; Kuratov, Y.; Shmelev, A.; Petrov, M.; Penzar, D.; Shepelin, D.; Chekanov, N.; Kardymon, O.; Burtsev, M. GENA-LM: A Family of Open-Source Foundational DNA Language Models for Long Sequences. bioRxiv 2023.

- Nguyen, E.; Poli, M.; Faizi, M.; Thomas, A.; Birch-Sykes, C.; Wornow, M.; Patel, A.; Rabideau, C.; Massaroli, S.; Bengio, Y.; et al. HyenaDNA: Long-Range Genomic Sequence Modeling at Single Nucleotide Resolution. arXiv 2023.

- Linder, J.; Srivastava, D.; Yuan, H.; Agarwal, V.; Kelley, D.R. Predicting RNA-seq coverage from DNA sequence as a unifying model of gene regulation. bioRxiv 2023.

- Van Arensbergen, J.; Pagie, L.; FitzPatrick, V.D.; De Haas, M.; Baltissen, M.P.; Comoglio, F.; Van Der Weide, R.H.; Teunissen, H.; Võsa, U.; Franke, L.; et al. High-throughput identification of human SNPs affecting regulatory element activity. Nat. Genet. 2019, 51, 1160–1169.

- Kircher, M.; Xiong, C.; Martin, B.; Schubach, M.; Inoue, F.; Bell, R.J.A.; Costello, J.F.; Shendure, J.; Ahituv, N. Saturation mutagenesis of twenty disease-associated regulatory elements at single base-pair resolution. Nat. Commun. 2019, 10, 3583.

- Ulirsch, J.C.; Nandakumar, S.K.; Wang, L.; Giani, F.C.; Zhang, X.; Rogov, P.; Melnikov, A.; McDonel, P.; Do, R.; Mikkelsen, T.S.; et al. Systematic Functional Dissection of Common Genetic Variation Affecting Red Blood Cell Traits. Cell 2016, 165, 1530–1545.

- Vockley, C.M.; Guo, C.; Majoros, W.H.; Nodzenski, M.; Scholtens, D.M.; Hayes, M.G.; Lowe, W.L.; Reddy, T.E. Massively parallel quantification of the regulatory effects of noncoding genetic variation in a human cohort. Genome Res. 2015, 25, 1206–1214.

- Weiss, C.V.; Harshman, L.; Inoue, F.; Fraser, H.B.; Petrov, D.A.; Ahituv, N.; Gokhman, D. The cis-regulatory effects of modern human-specific variants. eLife 2021, 10, e63713.

- Birnbaum, R.Y.; Patwardhan, R.P.; Kim, M.J.; Findlay, G.M.; Martin, B.; Zhao, J.; Bell, R.J.A.; Smith, R.P.; Ku, A.A.; Shendure, J.; et al. Systematic Dissection of Coding Exons at Single Nucleotide Resolution Supports an Additional Role in Cell-Specific Transcriptional Regulation. PLoS Genet. 2014, 10, e1004592.

| Models | K562 (19,321 SNPs) | HepG2 (16,255 SNPs) | NPC (14,042 SNPs) | HeLa (5241 SNPs) |
|---|---|---|---|---|
| DNABERT-2 | 0.086 (0.039) | 0.096 (0.191) | −0.004 (0.007) | 0.120 (0.140) |
| NT v2-50m-ms | 0.147 (0.058) | 0.103 (0.036) | 0.020 (0.003) | 0.074 (0.047) |
| NT v2-100m-ms | 0.152 (0.065) | 0.127 (0.050) | 0.016 (0.003) | 0.042 (0.027) |
| NT v2-250m-ms | 0.166 (0.064) | 0.110 (0.020) | 0.023 (0.003) | 0.030 (0.018) |
| NT v2-500m-ms | 0.199 (0.036) | 0.114 (0.007) | 0.028 (0.003) | 0.036 (0.011) |
| NT 500m-1000g | 0.123 (0.084) | 0.116 (0.023) | 0.001 (0.002) | 0.022 (0.018) |
| NT 500m-h-ref | 0.149 (0.139) | 0.119 (0.038) | 0.013 (0.001) | 0.067 (0.043) |
| NT 2.5b-1000g | 0.147 (0.052) | 0.112 (0.021) | 0.005 (0.003) | 0.087 (0.105) |
| NT 2.5b-m-s | 0.153 (0.055) | 0.139 (0.027) | 0.027 (0.001) | 0.066 (0.027) |
| Geneformer | 0.005 (0.198) | 0.027 (0.160) | −0.002 (0.138) | −0.007 (0.057) |
| GENA-LM base | 0.077 (0.042) | 0.050 (0.033) | 0.024 (0.002) | 0.029 (0.227) |
| GENA-LM large | 0.117 (0.044) | 0.088 (0.036) | 0.030 (0.002) | 0.069 (0.216) |
| GENA-LM b-multi | 0.077 (0.051) | 0.062 (0.024) | 0.010 (0.001) | 0.026 (0.061) |
| GENA-LM bigbird | 0.136 (0.055) | 0.099 (0.029) | 0.021 (0.002) | 0.045 (0.015) |
| HyenaDNA 32 k | 0.084 (0.071) | 0.098 (0.061) | 0.002 (0.002) | 0.031 (0.013) |
| HyenaDNA 160 k | 0.148 (0.053) | 0.142 (0.040) | −0.007 (0.003) | 0.046 (0.018) |
| HyenaDNA 450 k | 0.077 (0.074) | 0.134 (0.050) | −0.007 (0.003) | 0.055 (0.015) |
| HyenaDNA 1 mf | 0.118 (0.056) | 0.136 (0.059) | 0.001 (0.003) | 0.046 (0.013) |
| Caduceus | 0.133 (0.030) | 0.108 (0.021) | 0.023 (0.004) | 0.020 (0.022) |
| ChromBPNet | 0.287 (0.020) | 0.295 (0.021) | 0.080 (0.001) | 0.289 (0.023) |
| TREDNet | 0.315 (0.025) | 0.314 (0.018) | 0.075 (0.003) | 0.076 (0.063) |
| SEI | 0.297 (0.022) | 0.298 (0.029) | 0.073 (0.003) | 0.280 (0.008) |
| Enformer | 0.059 (0.059) | 0.141 (0.110) | 0.008 (0.080) | 0.245 (0.027) |
| Borzoi | 0.033 (0.016) | 0.125 (0.025) | 0.023 (0.004) | −0.066 (0.005) |

| Models | Adaptation Strategy |
|---|---|
| DNABERT-2 [20,21] | Fine-tuned directly with Hugging Face’s ‘Trainer’ on 1 kb genomic sequences tokenized with the pre-trained BPE tokenizer. Model input length was restricted to 512 tokens. Adapted for binary classification (enhancer vs. control) across biosamples. Its ALiBi positional encoding and Flash Attention enabled efficient fine-tuning without modification to the input format. |
| Nucleotide Transformer [22,23] | Adapted using Hugging Face’s ‘Trainer’ with 6-mer tokenization on 1 kb enhancer/control sequences. Tokenized sequences were limited to 512 tokens. No architectural changes were required, and the model’s multi-species pretraining proved robust for human sequence classification. |
| Geneformer [26] | Originally trained on single-cell transcriptomic data using gene-level inputs, Geneformer was adapted for genomic language modeling by tokenizing 1 kb enhancer/control sequences with the pretrained vocabulary and tokenizer. Tokens mapped sequence chunks to “gene-like” units, enabling use of the Transformer for DNA sequence classification. Fine-tuning was performed using Hugging Face’s ‘Trainer’, with input sequences truncated or padded to 512 tokens. Despite domain shift, the model generalized well. |
| GENA-LM [32] | Fine-tuned on 1 kb genomic sequences using each checkpoint’s native tokenizer; the BigBird variant additionally uses sparse attention. Hugging Face-compatible tokenization pipelines were used for the BERT-base, BERT-large, and BigBird variants. Hugging Face’s ‘Trainer’ enabled flexible training for binary classification. Input sequences were processed to fit within the model’s maximum length. |
| Enformer [25] | As the model was designed for very long sequences (up to 200 kb), adaptation involved truncating input to 1 kb and padding as needed. Preprocessing followed the authors’ GitHub pipeline with minimal changes [25]. No fine-tuning was performed; predictions were extracted directly from the pre-trained model for enhancer/control sequences. |
| HyenaDNA [33] | Used pre-trained weights and the inference pipeline from the official GitHub repository. Inputs were standardized to 1 kb and padded to reach 32,768 tokens, as required by the model’s architecture. The model was used in inference-only mode to evaluate predictions without fine-tuning. |
| Borzoi [34] | Applied directly to 1 kb input sequences using the GitHub-published inference code. Adaptation involved formatting enhancer/control sequences to match input requirements (one-hot or token-based). The model was not fine-tuned but used as-is for classification via regression output interpretation. |
| Caduceus [24] | Adopted the PS version of Caduceus from the Hugging Face platform and appended a classification layer to predict the probability that a 1 kb input sequence functions as an enhancer. |
| ChromBPNet [19] | Employed the official ChromBPNet model available on GitHub and used its evaluation API to compute variant effect scores based on the 1 kb input sequence. |
| SEI [17] | Used without fine-tuning. The 1 kb input sequences were one-hot encoded and passed through the SEI inference pipeline, which includes dilated convolutional layers for multi-scale pattern detection. Outputs were extracted from the final regulatory activity scores across 40+ cell types. |
| TREDNet [18] | Adapted for enhancer classification and SNP prioritization using the published pipeline. The model was inference-only; sequences were one-hot encoded and input into the TREDNet CNN. Saturation mutagenesis was used to evaluate variant effects post-classification. |
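
The one-hot encoding and saturation mutagenesis steps named in the table can be sketched as follows. This is a minimal illustration, not the pipeline used by SEI or TREDNet: `one_hot`, `saturation_mutagenesis`, and `toy_gc_score` are hypothetical names, and the GC-content scorer is only a placeholder for a trained model's prediction function.

```python
from typing import Callable, Dict, List, Tuple

BASES = "ACGT"  # column order of the one-hot matrix (an assumption of this sketch)


def one_hot(seq: str) -> List[List[int]]:
    """Encode a DNA string as a len(seq) x 4 matrix; non-ACGT bases become all zeros."""
    return [[1 if base == b else 0 for b in BASES] for base in seq.upper()]


def saturation_mutagenesis(
    seq: str, score_fn: Callable[[List[List[int]]], float]
) -> Dict[Tuple[int, str, str], float]:
    """Score every single-nucleotide substitution relative to the reference sequence.

    Returns a mapping (position, ref_base, alt_base) -> alt score minus ref score.
    """
    seq = seq.upper()
    ref_score = score_fn(one_hot(seq))
    effects: Dict[Tuple[int, str, str], float] = {}
    for pos, ref_base in enumerate(seq):
        for alt in BASES:
            if alt == ref_base:
                continue  # skip the reference allele itself
            mutated = seq[:pos] + alt + seq[pos + 1:]
            effects[(pos, ref_base, alt)] = score_fn(one_hot(mutated)) - ref_score
    return effects


def toy_gc_score(encoded: List[List[int]]) -> float:
    """Placeholder scorer (GC fraction); a real analysis would call a trained model here."""
    if not encoded:
        return 0.0
    gc = sum(row[1] + row[2] for row in encoded)  # columns 1 and 2 are C and G
    return gc / len(encoded)


if __name__ == "__main__":
    for key, delta in sorted(saturation_mutagenesis("ACGT", toy_gc_score).items()):
        print(key, round(delta, 3))
```

Each position yields three substitution scores, so a 1 kb input requires 3000 forward passes plus one for the reference; batching those calls is the usual optimization when `score_fn` wraps a neural network.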

| Model | Architecture | Training Details | Applications |
|---|---|---|---|
| DNABERT-2 | Transformer-based with ALiBi and Flash Attention | Pre-trained using Byte Pair Encoding (BPE) tokenization; compact representation of sequences; optimized for computational efficiency and scalability. | Transformer-based model pre-trained with BPE tokenization (the original DNABERT used k-mers); well suited for sequence classification, motif discovery, and variant effect prediction across species, with strong performance on short-range motif detection. |
| Nucleotide Transformer (8 models, 2 versions) | Transformer-based (50 M–2.5 B parameters) | Pretrained on multi-species genomes with 6-mer tokenization; trained on 300B tokens using Adam optimizer with warmup and decay schedules; supports long-range genomic dependencies. | Large-scale Transformer trained on human and multi-species genomes; supports comparative genomics, functional annotation, and variant effect prediction; versions range from smaller models for standard GPUs to billion-parameter models for large-scale training, and from species-specific to multi-species training. |
| Geneformer | Transformer-based with rank-based analysis | Pretrained on single-cell transcriptome datasets using masked gene prediction; optimized for noise resilience and stability in single-cell data. | Transformer optimized for single-cell data; captures gene network dynamics and enables single-cell state classification; available in versions pre-trained on different single-cell datasets. |
| GENA-LM (4 models) | BERT-base, BERT-large, BigBird variants | Pretrained on genomic sequences using sparse attention mechanisms (BigBird); supports long-range sequence modeling and local feature detection; memory augmentation techniques applied for scalability. | Open-source family of foundational DNA language models for long genomic sequences; available in BERT-base, BERT-large, and BigBird variants. |
| Enformer | Convolution + Transformer hybrid | Pretrained on DNA sequences up to 200,000 bp using convolutional blocks for spatial reduction and Transformer layers for long-range interactions; predicts over 5000 epigenetic features. | Attention-based architecture capable of modeling >100 kb genomic context; excels at gene expression prediction and regulatory element analysis; distributed in full and lightweight implementations. |
| HyenaDNA (4 models) | Runtime-scalable models built on Hyena long-convolution operators | Pretrained on human reference genome with extended sequence lengths (32,000–1,000,000 bp); optimized for runtime scalability using AWS HealthOmics and SageMaker infrastructure. | Hybrid convolutional/state-space model for long-range genomic interaction analysis; computationally efficient for long sequences, with model size scalable to hardware constraints. |
| Caduceus | Bidirectional, reverse-complement-equivariant long-range sequence model built on Mamba state-space blocks | Pretrained on large-scale human genomic sequences in a self-supervised manner using masked language modeling. | General-purpose foundation model for regulatory genomics; trained on large-scale datasets; offers different checkpoints for diverse regulatory genomics tasks. |
| ChromBPNet | CNN with dilated convolutions and residual connections | Trained on chromatin accessibility data (e.g., ATAC-seq or DNase-seq). | CNN-based model designed for predicting chromatin accessibility; base-resolution DNase/ATAC signal prediction; relatively lightweight and accessible for most hardware setups. |
| Borzoi | Multi-layer regulatory model | Trained to predict RNA-seq coverage directly from DNA sequences; integrates multiple layers of regulatory predictions; fine-tuned for tissue-specific applications. | Hybrid CNN/attention model for cell- and tissue-specific RNA-seq coverage prediction and cis-regulatory pattern detection; released in different model sizes to balance accuracy with resource use. |
| SEI | Residual dilated convolutional layers | Trained on one-hot encoded sequences (4096 bp) using residual paths for multi-scale pattern detection; incorporates global integration layers for long-range dependencies; balances computational efficiency with expressiveness. | CNN-based sequence-to-function model for variant effect prediction on cis-regulatory activity across chromatin profiles; robust across cell types without fine-tuning; available in multiple pre-trained versions. |
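
To make the 6-mer tokenization and the 512-token input limit concrete, the sketch below splits a sequence into non-overlapping 6-mers and pads the result. It is a simplified illustration under stated assumptions, not the Nucleotide Transformer tokenizer itself: `kmer_tokenize`, `pad_or_truncate`, and the `<pad>` symbol are hypothetical, leftover bases are emitted as single-nucleotide tokens, and special tokens and ambiguous bases such as N are ignored.

```python
from typing import List


def kmer_tokenize(seq: str, k: int = 6) -> List[str]:
    """Split a sequence into non-overlapping k-mers.

    Bases left over after the last full k-mer are emitted one per token
    (an assumption of this sketch).
    """
    seq = seq.upper()
    n_full = len(seq) // k
    tokens = [seq[i * k:(i + 1) * k] for i in range(n_full)]
    tokens.extend(seq[n_full * k:])  # extend() on a string adds one token per base
    return tokens


def pad_or_truncate(tokens: List[str], max_len: int = 512, pad_token: str = "<pad>") -> List[str]:
    """Force a token list to exactly max_len entries by truncating or right-padding."""
    tokens = tokens[:max_len]
    return tokens + [pad_token] * (max_len - len(tokens))


if __name__ == "__main__":
    one_kb = "ACGT" * 250  # synthetic 1 kb sequence for illustration only
    tokens = kmer_tokenize(one_kb)
    print(len(tokens), len(pad_or_truncate(tokens)))
```

Under these assumptions a 1 kb sequence yields 166 full 6-mers plus 4 single-base tokens, well below a 512-token limit, so the remaining positions are padding.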

| Dataset [ref] | Cell Type | Assay Type | No. of Variants/SNPs | Key Findings |
|---|---|---|---|---|
| Dataset 1 [35] | K562 | SuRE reporter assay | 19,237 raQTLs | Mean allelic fold-change: 4.0-fold. Most raQTLs are cell-type-specific. |
| Dataset 2 [35] | HepG2 | SuRE reporter assay | 14,183 raQTLs | Mean allelic fold-change: 7.8-fold. Limited overlap with K562 raQTLs, indicating cell-type specificity. |
| Dataset 3 [36] | HepG2 | Luciferase reporter | 1 enhancer (SORT1, ~600 bp, incl. rs12740374) | SNP rs12740374 creates a C/EBP binding site affecting SORT1 expression. Flipped and deletion data excluded. |
| Dataset 4 [37] | K562 | MPRA | 2756 variants | 84 significant SNPs with functional effects, enriched in open chromatin shared with human erythroid progenitors (HEPs). |
| Dataset 5 [38] | HepG2 | MPRA | 284 SNPs | SNPs in high-LD regions from eQTL analyses. Provides allele-specific regulatory effects relevant to liver gene regulation. |
| Dataset 6 [39] | NPC | lentiMPRA | 14,042 SNVs | Variants fixed or nearly fixed in modern humans (absent in archaic humans). 1791 with regulatory activity; 407 show differential expression. Focus on NPC data. |
| Dataset 7 [40] | HeLa | MPRA coding exon | 1962 SNPs (SORL1 exon 17) | ~12% of mutations alter enhancer activity by ≥1.2-fold; ~1% by ≥2-fold. |
| Dataset 8 [40] | HeLa | MPRA coding exon | 1614 SNPs (TRAF3IP2 exon 2) | Same as above: functional testing of coding exon enhancer activity. |
| Dataset 9 [40] | HeLa | MPRA coding exon | 1665 SNPs (PPARG exon 6) | Same as above: functional testing of coding exon enhancer activity. |
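
The fold-change figures in the table can be read with a small worked example. The sketch below assumes a symmetric definition (larger allele activity divided by the smaller) and uses invented activity values; the cited studies may define and normalize allelic fold-change differently, and `allelic_fold_change` and `passes_threshold` are hypothetical helper names.

```python
def allelic_fold_change(ref_activity: float, alt_activity: float) -> float:
    """Symmetric fold-change between two alleles: larger activity over smaller.

    Assumes strictly positive reporter activities (e.g. normalized expression).
    """
    if ref_activity <= 0 or alt_activity <= 0:
        raise ValueError("activities must be positive")
    return max(ref_activity, alt_activity) / min(ref_activity, alt_activity)


def passes_threshold(ref_activity: float, alt_activity: float, threshold: float = 1.2) -> bool:
    """Flag a variant whose allelic fold-change meets or exceeds the threshold."""
    return allelic_fold_change(ref_activity, alt_activity) >= threshold


if __name__ == "__main__":
    # Invented activities for illustration; not values from any dataset in the table.
    for ref, alt in [(1.0, 2.5), (3.0, 2.0), (1.0, 1.1)]:
        print(ref, alt, allelic_fold_change(ref, alt), passes_threshold(ref, alt))
```

A symmetric ratio treats activating and repressing variants alike, which matches thresholds stated as "≥1.2-fold" without a direction; a signed log2 ratio would be the natural alternative when direction matters.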

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Manzo, G.; Borkowski, K.; Ovcharenko, I. Comparative Analysis of Deep Learning Models for Predicting Causative Regulatory Variants. Genes 2025, 16, 1223. https://doi.org/10.3390/genes16101223
