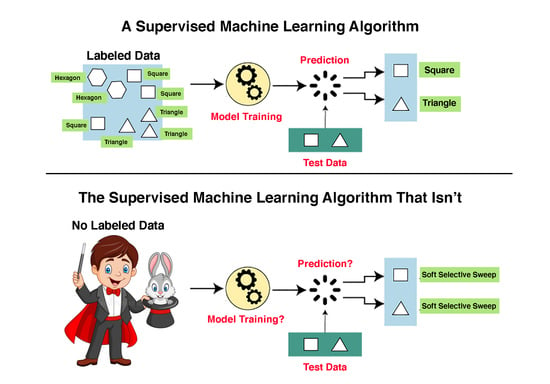

2. Principles and Limitations of Machine Learning

The field of machine learning is concerned with the development and application of computer algorithms that improve with experience [

9]. Machine learning methods are roughly classified into two primary categories: supervised and unsupervised machine learning. The main task of both machine learning categories is to predict the assignment of an input object (usually a vector of character states) to a label, where labels are the targets of the prediction task.

Unsupervised machine learning methods are designed to identify patterns in the data without training, while supervised machine learning methods, which include support vector machines (SVMs), require a training dataset. Unsupervised machine learning algorithms require only unlabeled input data and the desired number of different labels to assign as input. For example, we may segment the human genome into equal-size segments of length 100,000 nucleotides, collect data on each segment, such as nucleotide composition, mean length of mononucleotide runs, and distance from the centromere, and ask the algorithm to assign each segment to one of five labels or one of 216 labels. We note that none of the labels in unsupervised machine learning can be named a priori, only a posteriori.

Supervised machine learning (SML) algorithms aim to solve two types of problem: (1) the classification problem, whose target is a qualitative variable, e.g., determining whether a gene is expressed or not expressed under certain conditions, and (2) the regression problem, whose target is to predict a numerical value, e.g., the level of gene expression of a gene under certain conditions. In the following, we will only deal with the classification problem.

Classification SML algorithms are predicated on the existence of a large set of labeled examples. SML algorithms are trained on labeled examples and are then used to make predictions on unlabeled examples. The set of labeled examples is called the “training dataset”. Classification SML algorithms use what they have learned from the training dataset to make predictions or decisions on a “test dataset”, i.e., a set of unlabeled examples. For example, if we wish to determine whether or not a gene is expressed, we need a “training dataset” of genes for which we have solid empirical evidence on their expression status. We can then unleash the machine learning algorithm on a collection of genes for which expression data is lacking.

The immense success that SML techniques have had in the world of e-commerce [

10], as well as in some areas of medicine [

11,

12], genetics [

13], genomics [

14], and biochemistry [

15] may have prompted Schrider and Kern to develop so-called supervised machine learning methodologies [

3] to address evolutionary questions, particularly those aimed to clarify the relative importance of selection and random genetic drift during the evolution of genomes [

4]. Such studies have been ballyhooed as “sophisticated”, “cutting-edge”, “robust”, and “valuable”, and it has been argued that they “make a strong case for the idea that machine learning methods could be useful for addressing diverse questions in molecular evolution” [

16]. The method has since been applied to various other organisms besides humans (e.g., [

17,

18,

19]).

Which type of machine learning should we use? In their influential review of machine learning application in genetics and genomics, Libbrecht and Noble [

10] put forward some clear and simple rules on the use of supervised and unsupervised learning. In particular, they note that when a labeled training set is not available, one can only perform unsupervised learning. Interestingly, however, when labels are available, it is not always the case that the supervised machine learning approach is a good idea. This is because every supervised learning method rests upon the assumption that the distribution responsible for generating the training dataset is the same as the distribution responsible for generating the test dataset. This assumption is only met if a single labeled dataset is randomly subdivided into a training set and a testing set.

SML works well when the training data are sufficiently detailed and comprehensive to represent the truth. When the data deviate from this optimum, so will the SML results. When the training dataset does not exist, as is the case in evolutionary studies where the past is unknown, it is impossible to use SML [

10], and unsupervised methods should be preferred, though with extreme caution.

6. Other Questionable Practices

The “mortal sin” of using a supervised machine learning algorithm with no training dataset should be enough to invalidate Schrider and Kern’s results and conclusion. However, on top of this delictum, Schrider and Kern piled a large number of sampling transgressions, logical missteps, internal inconsistencies, and corruption of statistical practices, which we present henceforth.

Cherry-Picking Population Data. To characterize selection in global human populations, Schrider and Kern [

4] had first to choose which populations to use. Because populations with a large variation in demographic and historical parameters due to processes such as extreme bottlenecks, a history of migrations, and complex admixture or gene flow patterns would introduce substantial uncertainties in their subsequent simulations, they decided to exclude such populations from their sample. But have they?

Schrider and Kern [

4] claimed to have selected six populations that experienced the minimal influence of admixture or migration: They included Gambians in Western Divisions in The Gambia (GWD) and Yoruba in Ibadan, Nigeria (YRI) from West Africa; Luhya in Webuye, Kenya (LWK) from East Africa; Japanese in Tokyo, Japan (JPT) from Asia; Utah residents with Northern and Western European Ancestry (CEU) from Europe; and Peruvians from Lima, Peru (PEL) from the Americas. They motivated their choice by the homogeneity of populations over various numbers of splits (

K) in the ADMIXTURE analysis [

22] carried out by Auton et al. ([

23], Extended Figure 5):

“We see that for most values of K, each of these populations appears to correspond primarily to a single ancestral population rather than displaying multiple clusters of ancestry”. An examination of the ADMIXTURE results of Auton et al. shows that Schrider and Kern [

4] frequently violated their own selection criteria. For example, CEU, a population of unclear origins that shows multiple splits over various

K values, was included, while the more homogeneous British (GBR) and Finnish (FIN) populations were excluded from their analysis. All the three African populations included in the study yield larger and more numerous splits compared to the Esans (ESN), the most homogeneous African population, which was excluded from their study. Finally, the Chinese (CDX) population was excluded despite its genetic homogeneity.

Schrider and Kern [

4] admitted to one exception by including the cross-continentally admixed PEL population because “among the highly admixed American samples it appears to exhibit the smallest amount of possible mixed ancestry (for most values of

K)”, so they “retained this population to have some representation from the Americas”. Oddly, this “exception” clause was not applied to South Asian populations, which represent a considerable fraction of human global genetic diversity.

The choice of populations is further questionable on several grounds. First, Schrider and Kern’s [

4] inference of populations with “single ancestral population rather than displaying multiple clusters of ancestry” is doubtful since ADMIXTURE is unreliable when used to determine whether groups are pure representatives of one ancestral source and was certainly not designed to test whether two populations have mixed. Even groups that appear to be extremely homogeneous are known to have contributions from ancestrally related groups [

24]. Second, applying ADMIXTURE analysis with an arbitrary number of splits yields inconsistent splits across the population panel. The appearance of “heterogeneity” is driven by populations with distinct combinations of allele frequencies rather than true homogeneity. Finally, considering the inconsistency between the selection criteria outlined by Schrider and Kern [

4] and their sample population set, it is likely that these criteria were applied

post hoc to match populations already included in the dbPSHP database [

25], which the authors later employed to study selection. dbPSHP has no data for the most homogeneous populations in Auton et al. (GBR, FIN, ESN, and CDX) but has data for four of the six populations analyzed by Schrider and Kern [

4]: YRI, LWK, CEU, and JPT. Excepting Gujarati Indians in Houston, Texas (GIH), dbPSHP excludes all South Asians.

To summarize, Schrider and Kern [

4] did not follow their own criteria for selecting populations with the least complex demographic histories. Instead, Schrider and Kern [

4] employed a type of observational bias, called the “streetlight effect” [

26], by only searching for preconceived answers where it is easiest to find them (i.e., in populations for which selection data were readily available).

Estimating the Parameters of the Demographic Model. To detect sweeps, Schrider and Kern [

4] applied a maximum likelihood approach that considers simulated genomic patterns using a variety of population genetic summary statistics and classifies genomic windows as being: (1) the target of a completed hard sweep (hard), (2) closely linked to a hard sweep (hard-linked), (3) a completed soft sweep (soft), (4) closely linked to a soft sweep (soft-linked), or (5) to have evolved neutrally (neutral). Since no training dataset was available for exploratory evolutionary studies, Schrider and Kern [

4] developed a demographic model that disgorged a number of summary statistics for genomic regions that have experienced simulated hard sweeps, simulated soft sweeps, or have evolved under simulated neutrality.

We emphasize again that unlike in normal SML approaches, where training and testing of the classifier are two separate operations, in Schrider and Kern [

4] the classifier was never trained. Notwithstanding this glaring insufficiency, the authors use the terms “training” eleven times in their article. For instance, they claim that “training examples for the hard class experienced a hard sweep in the center of the central sub-window” despite the fact that the “training” data were not actual data as required for SML applications, but rather simulated “data” created in, as we will demonstrate, an extremely problematic manner. The use of terms such as “machine learning” and “classifier” is, thus, entirely inappropriate. In the next section, we show that even as far as building their faux “classifier” and estimating the variables on which it was based, the authors made some very questionable decisions.

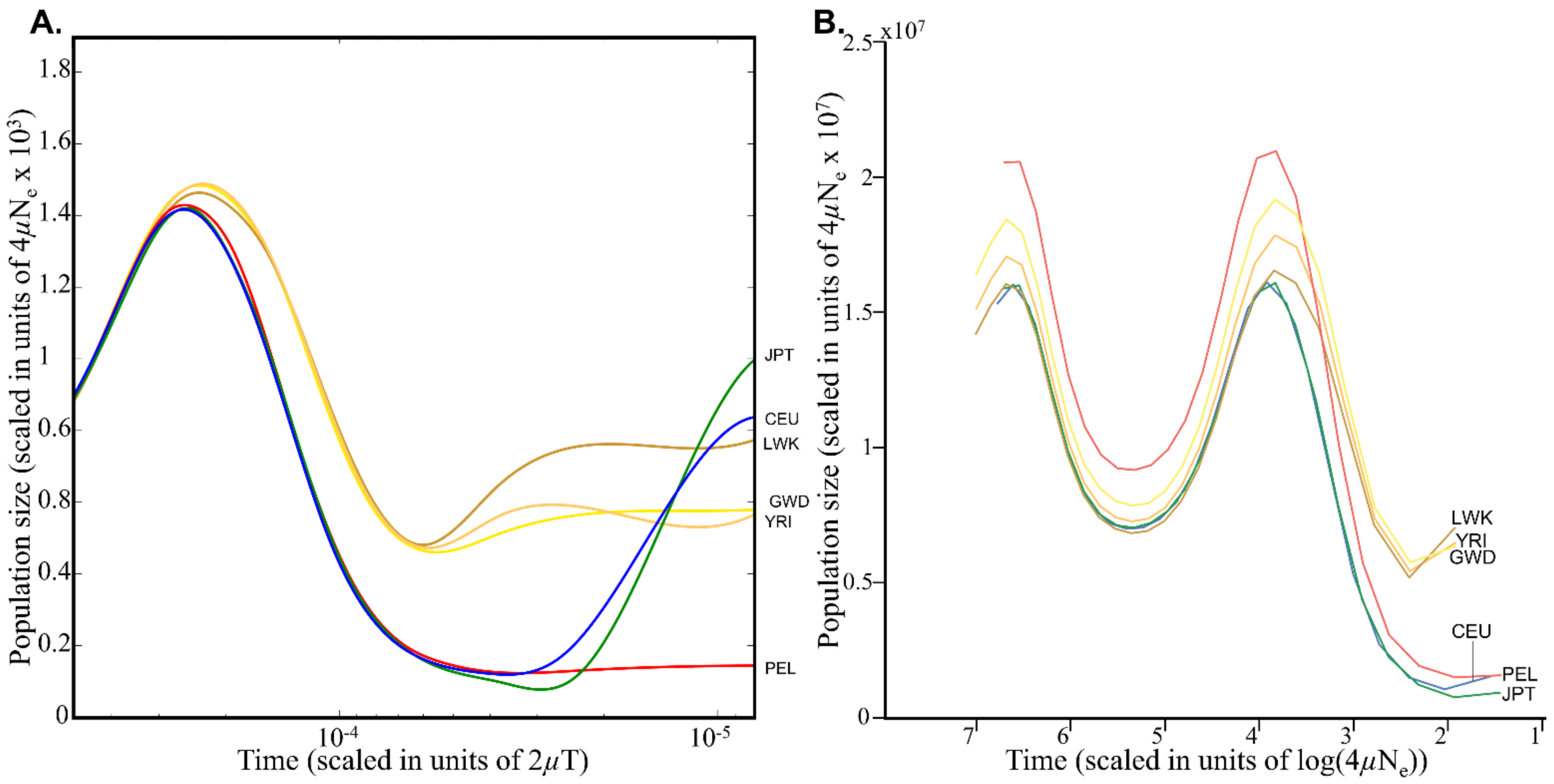

Estimating Demographic History from Genomic Data. Before selection can be simulated on the genomic data, Schrider and Kern [

4] had to reliably capture population-size changes over discrete time intervals for their coalescent simulation tool

discoal to work properly [

27]. For that, they turned to the demographic model calculated by Auton et al. [

23] using the Pairwise Sequentially Markovian Coalescent (PSMC) model [

28]. PSMC employs coalescent methodology to reconstruct changes in the effective population-size history over time under neutrality. Schrider and Kern extracted 26 discrete points per population from Auton et al.’s extended Figure 5 (

Figure 1A), scaled them by the mean population mutation rate

θ of the population under study (which they determined, as we show in the next section) and by the present-day effective population size

N0 (10,000), and included them in their simulation (the -

en parameter) (

Figure 1B). This simple procedure, which should have resulted in a similar demographic model to the one used by Auton et al., has instead resulted in inflated population sizes by a factor of up to 10

4 and a complete distortion of Auton et al.’s demographic model. We note that PSMC’s output cannot always be reliably interpreted as plots of population-size changes, particularly if the population is admixed, in which case peaks on the demographic plot might correspond to periods of increased population structure rather than increased population size. Remarkably, Schrider and Kern [

4] noted that “these models may not accurately capture the demographic histories of the populations we examined.” However, they claimed that their Soft/Hard Inference through Classification or S/HIC methods [

27] is robust to “demographic misspecification”, and hence did not expect this factor “to severely impact” their analysis.

Estimating The Population Mutation Rate (θ). The population mutation rate (

θ) is a fundamental parameter in evolutionary biology as it measures the average genomic mutation rate of the entire population or, stated differently, it describes the amount of selectively neutral diversity in a population [

29]. It is also necessary to estimate the effective population size (

Ne).

θ is calculated as 2

pNeμtot, where

p = 1 or 2 for haploids and diploids, respectively,

Ne is the effective population size, and

μtot is the mutation rate at the locus of interest. Schrider and Kern [

4] assumed that the present-day grid of

θ ranged from 10 to 250 and was calculated from 4

NeμL. Here,

μ is the mutation rate per nucleotide, which Schrider and Kern [

4] set as 1.2 × 10

−8 per base pair per generation as was calculated by Kong et al. [

30] (based on Icelandic trios for all their populations), and

L is the length of the segment.

Confusingly, multiple

L values were used: 100,000, the only one reported in the paper; 200,000, which was used in the code (Table S5 in [

4]); 2,200,000, which was used for their Table S1 (D. R. Schrider, personal communication); and 1,100,000, the “correct” value for this analysis which was never reported (D. R. Schrider, personal communication). In their paper, Schrider and Kern [

4] neither disclosed the range of

L values nor explained them. Using the latter value of

L,

Ne = 1500 for Africans and

Ne = 4250 for non-Africans from [

23],

θ should have a theoretical range of 79 and 224, similarly to the proposed grid. Unfortunately, neither these nor the stated grid values of

θ (10–250) were used. In practice, Schrider and Kern [

4] employed

θ values that ranged from 40 to 2200 (their Table S5,

-Pt parameter) since they chose “as the final values of

θ that for which the sum of the percent deviations of the simulated from the observed means of each statistic was minimized.” By inflating the range of

θ, Schrider and Kern [

4] have artificially modified the genomic mutation rate providing more opportunities for “selection” to act. As we shall next show, this choice also had the effect of bloating the effective population size.

Estimating the Effective Population Size (Ne). Deriving

Ne from the two extreme values of

θ (40 and 2200) and the published

L value (

L = 100,000) yields a remarkable

Ne range of 8333 <

Ne < 458,333, i.e., values similar to those of

Plasmodium falciparum (210,000 <

Ne < 300,000) and

Drosophila melanogaster (

Ne = 1,150,000) [

31]. Even using the “correct”

L value (

L = 1,100,000) (D. R. Schrider, personal communication) results in an extreme range for

Ne 757 <

Ne < 41,666, compared to the

Ne calculated by Auton et al. (1500 <

Ne < 4250) [

23] (

Figure 1A), which Schrider and Kern (2017) supposedly relied on for their demographic model and the well-established range for humans (1000 <

Ne < 10,000) (e.g., [

32,

33,

34,

35]). This

Ne is also higher than in most apes (7 <

Ne < 20,000), excepting Central chimpanzee (25,000 <

Ne < 100,000) [

36]. By inflating

Ne, Schrider and Kern [

4] have biased all their subsequent calculations.

Estimation of the Population Recombination Rate (ρ). To understand the magnitude of the bias in Schrider and Kern’s [

4] analyses, let us consider their simulation of the population recombination rate,

ρ = 4

Ner, where

r is the crossover rate per base pair. The distribution of

ρ was empirically found to be “unambiguously unimodal”, with a mean of 10

−4 ([

37], Figure 3). Further,

ρ is normally distributed with 80% of its values in the range of 10

−4.5 to 10

−3.5 ([

37], Figure 3). In contradistinction, Schrider and Kern [

4] supposedly derived

ρ from an exponential distribution with a mean of 1 × 10

−8, although their Table S5 (-

Pre parameter) indicates, again, that different values of

ρ were used (Table S5, -

Pre parameter). According to that table, the mean of the exponential distribution ranged from 183 to 1008.

Simulating Genomic Sequences. Deciding on the demographic model parameters for each population, Schrider and Kern [

4] have next generated genomic sequences using several approaches that we have attempted to replicate. Replication is the “cornerstone of science” and its most fundamental principle; the inability to replicate published studies has been termed the “replicability crisis” [

38]. We found that Schrider and Kern’s [

4] study is part of this crisis.

The Methods section in Schrider and Kern [

4] renders replication impossible. First, the authors employed various computational tools, only some of which are mentioned in the paper. Second, they provided the code for only one of the tools (Table S5 in [

4]). And finally, their description of the simulation is partial at times, erroneous at other times, and inconsistent with the code they have provided.

To name a few examples, Schrider and Kern [

4] wrote that “we used the program

discoal [

27] to simulate large chromosomal regions, subdivided into 11 sub-windows”. However,

discoal simulates very short genomic regions (in a setting of 200,000 bp,

discoal simulated regions varied in size from 0–2600 bp with a mean of 934 bp) and the subdivision into windows is part of a different package, called S/HIC (which, however, is no longer available where it was supposed to be deposited,

https://github.com/kern-lab/, accessed on 31 March 2021). To understand whether these numbers are reasonable, we downloaded the CEU data from the 1000 Genomes Phase 3 (v5) [

23]. We sampled 200,000 regions from all autosomal chromosomes 1000 times and counted the number of SNPs. The number of SNPs ranged from 176 to 6277, with a mean of 2195 and a median of 2134. It was never 0, as in the

discoal simulations. In other words, the number of SNPs in the simulated

discoal sequences is less than half than in reality.

In another place in the article, the authors wrote, “we simulated additional test sets of 1000 genomic windows 1.1 Mb in length with varying arrangements of selected sites”, without telling the reader which simulation tool they used.

Another typical paragraph reads:

“The simulation program discoal requires some of these parameters to be scaled by the present-day effective population size; we did this by taking the mean value of θ and dividing by 4 uL, where u was set to 1.2 × 10−8 [30]. The full command lines we used to generate 1.1 Mb regions (to be subdivided into 11 windows each 100 kb in length) for each population are shown in Supplementary Table S5, Supplementary Material online. We also simulated 1000 test examples for each population in the same manner as for the training data”. This section describes the use of at least three tools with only discoal referenced explicitly. It remains unclear how many regions were simulated since parts of the manuscript mention 100 kb and other parts mention 1.1 Mb. Adding to the confusion is that the code (their Table S5) simulates 200 kb regions but employs parameters calculated for 1.1 Mb regions.

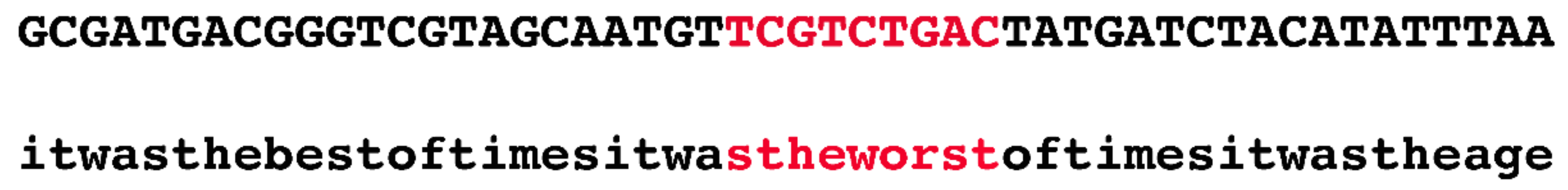

Bypassing Training Data by Importing Random Annotations. Since Schrider and Kern [

4] lacked a true training dataset based on actual genomic data with factual annotation, they simulated their own dataset and generated their own annotation for the simulated dataset.

Innovatively, the authors devised the following quick fix to this seemingly intractable problem. They randomly selected 1.1 Mb regions from the human genome and used public datasets, such as phastCons, to annotate them. In the next stage, they generated a random sequence and copied the annotation of the real sequence onto the simulated one. For example, if the authors selected the region chr1:55000000–56100000 and if there was a conserved phastCons element at chr1:55000000–55000009, then the first ten bp of the simulated region would be marked as negatively selected (i.e., evolving in a neutral fashion). At no time were actual nucleotides from the human genome considered.

Figure 2 illustrates, in an exaggerated fashion, the ridiculousness of this method.

Classifying the Simulated Sequences into Five Classes. Schrider and Kern [

4] applied their SML classifier to the simulated data so that their classifier will assign the sequences to one of the five classes (experienced a hard selective sweep, linked to a hard selective sweep, experienced a soft selective sweep, linked to a soft selective sweep, and evolved neutrally, which presumably includes regions evolving under strict neutrality as well as sequences affected by purifying selection).

The authors are very unclear about how the classifier’s decisions were made. For instance, they write: “For our classifications we simply took the class that S/HIC’s classifier inferred to be the most likely one, but we also used S/HIC’s posterior class membership probability estimates in order to experiment with different confidence thresholds”. We interpret this statement to mean that irrespective of the class inferred by the S/HIC classifier, the authors chose any threshold they fancied to make the final class determination.

Schrider and Kern [

4] argued that soft sweeps are the dominant mode of adaptation in humans. Analyzing the six human populations, they identified 1927 distinct selective sweeps patterns, 1776 (92.2%) of which were classified as being a soft sweep using their S/HIC method. An examination of Table S2 in Schrider and Kern [

4] shows that the classification is biased towards “soft sweep” irrespective of the cutoff, which varies from 0.2 to 0.9 per population. In all those cases, S/HIC “classified” 72.22–99.15% of the segments as “softly selected” with a median of 93.04%. No doubt, this resulted in 73.1% of these sweeps being deemed “novel” as they do not exist in dbPSHP. To get these newsworthy results, it was essential for the authors to preselect populations that are included in dbPSHP, regardless of their heterogeneity. The “classifications” to “hard sweep” ranged from 0.85–27.78%, with a median of 6.96%. These are remarkable figures because hard sweeps are extremely rare in human populations (e.g., [

39]). We also note that the exact balance between hard and soft sweeps depends on the yet undetermined distribution of mutational target sizes [

40]. We also note that on theoretical grounds [

20,

21], the number of soft selective sweeps is expected to be much lower than that of hard selective sweeps.

There are other problems with the S/HIC classifications, one of which is that “adjacent windows are especially likely to receive identical annotations [

3]. To overcome this difficulty, a secret (i.e., unpublished) “permutation algorithm” was implemented that considered the lengths of a run of consecutive windows assigned to each class per population. The run-length distribution was then obtained from the simulated data. By the end of this procedure, the windows are thoroughly reshuffled, which addresses the concern that adjacent windows have similar annotation but not the concern that certain features and classifications are inflated due to the over-classification in the adjacent windows in the first place.

To summarize, Schrider and Kern’s classification of genomic regions into classes required not only an SML classifier but also a human intervention in the form of setting subjective thresholds that ranged widely in value. These thresholds, in turn, generated preconceived results that lack any scientific merit.

Identifying Genetic-Element Enrichment in Selective Sweeps. To complete their study that rests entirely on imaginary sequences onto which random annotations were thrust upon, Schrider and Kern [

4] ask which “biological pathways” show “a strong enrichment” in genomic regions that were deemed to have been subject to selective sweeps. Here, it was expected that the authors would test the enrichment of actual biological features, such as certain metabolic pathways, certain gene families, or genes expressed in certain tissues. Alas, this is not the case. With the exception of the vague category “coding sequences”, which includes open reading frames for which we have no evidence of translation, let alone function, all other features that were found to be enriched in one class or another have nothing to do with real data. For example, the transcription factor binding sites were taken from the ENCODE project [

41], which, as we all know, cannot be used to identify biological functions [

42]. Another so-called functional dataset is COSMIC, which is a set of somatic mutations that have been observed in cancer cells [

43]. Admittedly, a minuscule minority of these mutations may play some role in tumor suppression or progression; however, the vast majority of mutations in cancer cells are incidental and have neither a causative role in cancer nor in the progression of the disease. The closest Schrider and Kern [

4] came to using real biological functions were the inferred functional categories from Gene Ontology [

44], with a strong emphasis on “inferred” rather than “known”.

One such example of functional futility involves Schrider and Kern’s [

4] “dramatic enrichment” of sweeps in genes that encode proteins that interact with one another. As far as “gene networks” are concerned, however, the term “interaction” is defined very broadly. To illustrate the problematics of using interactions as a validation method, we performed the following experiment. We selected 100 random protein-coding genes that had a HUGO (Human Genome Organization)/Gene Nomenclature Committee (HGNC) symbols [

45] and used GeneMania [

46] to identify genetic interactions among those genes. Of the hundred random sequences, only 17 had no “genetic interaction” with another gene. The remaining genes exhibited “genetic interactions” and “physical interactions” although none of these “interacting pairs shared a single biochemical pathway. By showing that random genes in our negative control exhibit extensive “genetic interactions”, we have demonstrated that Schrider and Kern’s [

4] “interacting gene networks” is a meaningless concept and that the “dramatic enrichment” is biologically insignificant. Here, it may be worth mentioning Schrider and Kern’s [

4] statistical choices. First, in all their calculations, they used one-tailed statistical tests, which are more likely to reject null hypotheses than two-tailed tests. Second, they did not employ any type of control.

Finally, we would like to mention a common failure to most data-driven studies—lack of any follow-up. Schrider and Kern’s [

4] study contains some results that

prima facie seem remarkable. For example, of the 19 annotated features, the CEU population (Utah residents with Northern and Western European Ancestry) showed no significant enrichment for any annotation except “enhancers lost in humans since splitting with rhesus.” By contrast, all the other populations showed significant enrichment of more than 50% of the annotated features (Table S3 in [

4]). The authors offer neither an explanation for this difference nor a reason why such a result is reasonable. Similarly, ClinVar pathogenic SNPs were significantly enriched only in PEL (Peruvians from Lima, Peru) despite the fact that this population has fewer ClinVar pathogenic mutations than YRI, CEU, and CHB (Figure S14 in [

47]). As in all other cases, the authors are mum on these findings.