Artificial Intelligence and Integrated Genotype–Phenotype Identification

Abstract

1. Introduction

2. Genomics

3. Phenomics

4. Conclusions

Supplementary Materials

Funding

Conflicts of Interest

References

- Turing, A.M. Computing machinery and intelligence. Mind 1950, 59, 433–460. [Google Scholar] [CrossRef]

- Buchanan, B.G. A (very) brief history of artificial intelligence. AI Magazine 2005, 26, 53. [Google Scholar]

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436. [Google Scholar] [CrossRef] [PubMed]

- McCarthy, J. Programs with Common Sense; RLE and MIT Computation Center: Cambridge, MA, USA, 1960. [Google Scholar]

- Minsky, M. Steps toward Artificial Intelligence. Proc. IRE 1961, 49, 8–30. [Google Scholar] [CrossRef]

- Buchanan, B.G.; Shortliffe, E.H. Rule-Based Expert Systems: The MYCIN Experiments of the Stanford Heuristic Programming Project; Addison-Wesley: Reading, MA, USA, 1984; ISBN 9780201101720. [Google Scholar]

- Rosenblatt, F. The Perceptron, a Perceiving and Recognizing Automaton Project Para; Cornell Aeronautical Laboratory: Buffalo, NY, USA, 1957. [Google Scholar]

- Rumelhart, D.E.; McClelland, J.L.; PDP Research Group. Parallel Distributed Processing; MIT Press: Cambridge, MA, USA, 1987; Volume 1. [Google Scholar]

- Silver, D.; Huang, A.; Maddison, C.J.; Guez, A.; Sifre, L.; van den Driessche, G.; Schrittwieser, J.; Antonoglou, I.; Panneershelvam, V.; Lanctot, M.; et al. Mastering the game of Go with deep neural networks and tree search. Nature 2016, 529, 484. [Google Scholar] [CrossRef] [PubMed]

- Domingos, P. A Few Useful Things to Know about Machine Learning. Commun. ACM 2012, 55, 78–87. [Google Scholar] [CrossRef]

- Hinton, G. Deep Learning—A Technology with the Potential to Transform Health Care. JAMA 2018, 320, 1101–1102. [Google Scholar] [CrossRef] [PubMed]

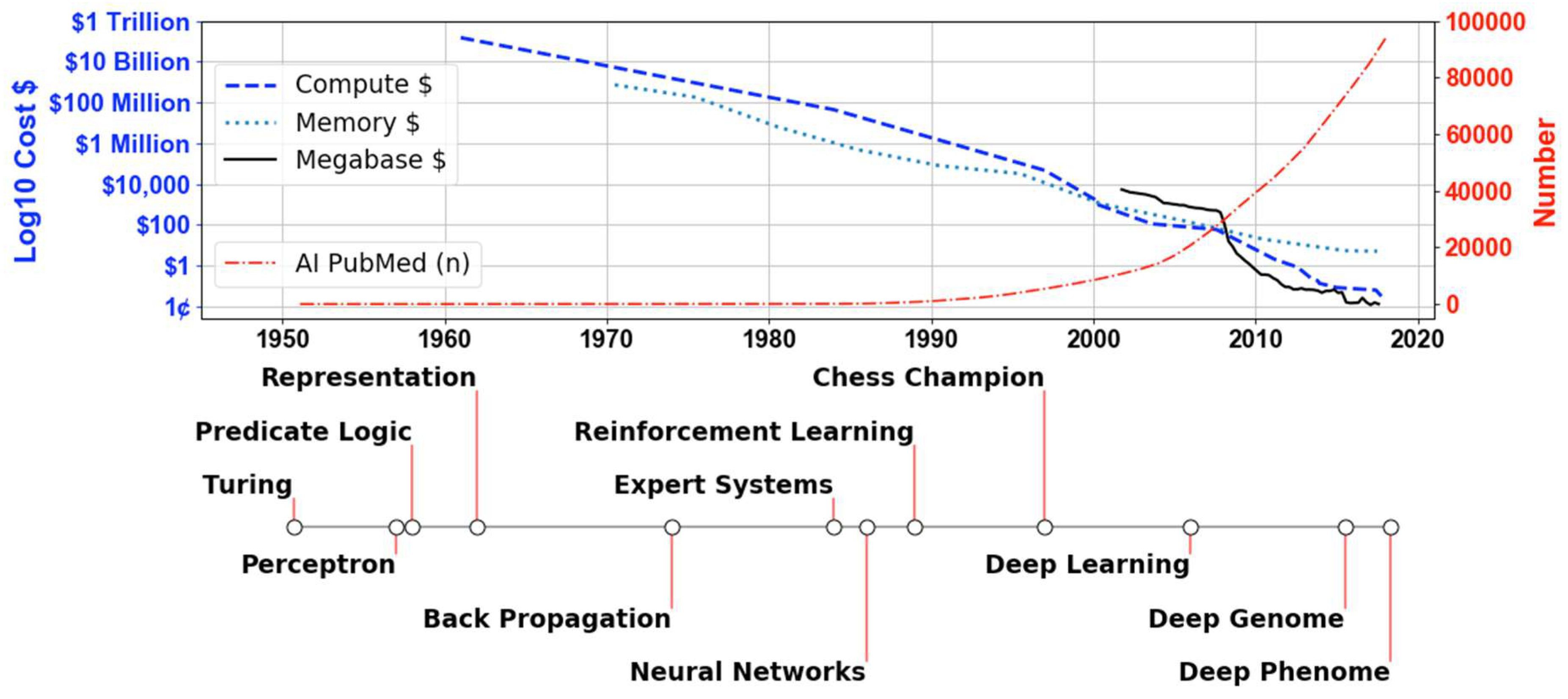

- FLOPS. Available online: https://en.wikipedia.org/wiki/FLOPS (accessed on 7 December 2018).

- Mearian, L. CW@50: Data Storage Goes from $1M to 2 Cents per Gigabyte. Available online: https://www.computerworld.com/article/3182207/data-storage/cw50-data-storage-goes-from-1m-to-2-cents-per-gigabyte.html (accessed on 7 December 2018).

- Wetterstrand, K.A. DNA Sequencing Costs: Data from the NHGRI Genome Sequencing Program (GSP). Available online: https://www.genome.gov/sequencingcostsdata/ (accessed on 7 December 2018).

- Camacho, D.M.; Collins, K.M.; Powers, R.K.; Costello, J.C.; Collins, J.J. Next-Generation Machine Learning for Biological Networks. Cell 2018, 173, 1581–1592. [Google Scholar] [CrossRef] [PubMed]

- Pirih, N.; Kunej, T. Toward a Taxonomy for Multi-Omics Science? Terminology Development for Whole Genome Study Approaches by Omics Technology and Hierarchy. OMICS 2017, 21, 1–16. [Google Scholar] [CrossRef]

- Stephens, Z.D.; Lee, S.Y.; Faghri, F.; Campbell, R.H.; Zhai, C.; Efron, M.J.; Iyer, R.; Schatz, M.C.; Sinha, S.; Robinson, G.E. Big Data: Astronomical or Genomical? PLoS Biol. 2015, 13, e1002195. [Google Scholar] [CrossRef]

- Frey, L.J. Data Integration Strategies for Predictive Analytics in Precision Medicine. Per. Med. 2018, 15, 543–551. [Google Scholar] [CrossRef] [PubMed]

- Kitano, H. Artificial intelligence to win the Nobel Prize and beyond: Creating the engine for scientific discovery. AI magazine 2016, 37, 39–49. [Google Scholar] [CrossRef]

- Alipanahi, B.; Delong, A.; Weirauch, M.T.; Frey, B.J. Predicting the sequence specificities of DNA- and RNA-binding proteins by deep learning. Nat. Biotechnol. 2015, 33, 831–838. [Google Scholar] [CrossRef] [PubMed]

- Rajkomar, A.; Oren, E.; Chen, K.; Dai, A.M.; Hajaj, N.; Hardt, M.; Liu, P.J.; Liu, X.; Marcus, J.; Sun, M. Scalable and accurate deep learning with electronic health records. NPJ Digital Medicine 2018, 1, 18. [Google Scholar] [CrossRef]

- Frey, L.J.; Bernstam, E.V.; Denny, J.C. Precision medicine informatics. J. Am. Med. Inform. Assoc. 2016, 23, 668–670. [Google Scholar] [CrossRef] [PubMed]

- Collins, F.S.; Varmus, H. A New Initiative on Precision Medicine. N. Engl. J. Med. 2015, 372, 793–795. [Google Scholar] [CrossRef] [PubMed]

- National Research Council; Division on Earth and Life Studies; Board on Life Sciences; Committee on a Framework for Developing a New Taxonomy of Disease. Toward Precision Medicine: Building a Knowledge Network for Biomedical Research and a New Taxonomy of Disease; National Academies Press: Washington, DC, USA, 2011; ISBN 9780309222259. [Google Scholar]

- Baader, F.; Calvanese, D.; McGuinness, D.; Patel-Schneider, P.; Nardi, D. The Description Logic Handbook: Theory, Implementation and Applications; Cambridge University Press: Cambridge, UK, 2003; ISBN 9780521781763. [Google Scholar]

- Mitchell, T.; Cohen, W.; Hruschka, E.; Talukdar, P.; Yang, B.; Betteridge, J.; Carlson, A.; Dalvi, B.; Gardner, M.; Kisiel, B. Never-ending Learning. Commun. ACM 2018, 61, 103–115. [Google Scholar] [CrossRef]

- Nickel, M.; Murphy, K.; Tresp, V.; Gabrilovich, E. A Review of Relational Machine Learning for Knowledge Graphs. Proc. IEEE 2016, 104, 11–33. [Google Scholar] [CrossRef]

- The Gene Ontology Handbook; Methods in Molecular Biology; Dessimoz, C., Škunca, N., Eds.; Humana Press: New York, NY, USA, 2017; ISBN 9781493937417. [Google Scholar]

- Köhler, S.; Vasilevsky, N.A.; Engelstad, M.; Foster, E.; McMurry, J.; Aymé, S.; Baynam, G.; Bello, S.M.; Boerkoel, C.F.; Boycott, K.M.; et al. The human phenotype ontology in 2017. Nucleic Acids Res. 2016, 45, D865–D876. [Google Scholar] [CrossRef]

- Frey, L.J.; Lenert, L.; Lopez-Campos, G. EHR Big Data Deep Phenotyping: Contribution of the IMIA Genomic Medicine Working Group. Yearb. Med. Inform. 2014, 9, 206–211. [Google Scholar]

- Bejnordi, B.E.; Veta, M.; van Diest, P.J.; van Ginneken, B.; Karssemeijer, N.; Litjens, G.; van der Laak, J.A.W.M.; Hermsen, M.; Manson, Q.F.; Balkenhol, M.; et al. Diagnostic Assessment of Deep Learning Algorithms for Detection of Lymph Node Metastases in Women with Breast Cancer. JAMA 2017, 318, 2199–2210. [Google Scholar] [CrossRef] [PubMed]

- Jiang, Y.; Oron, T.R.; Clark, W.T.; Bankapur, A.R.; D’Andrea, D.; Lepore, R.; Funk, C.S.; Kahanda, I.; Verspoor, K.M.; Ben-Hur, A. An expanded evaluation of protein function prediction methods shows an improvement in accuracy. Genome Biol. 2016, 17, 184. [Google Scholar] [CrossRef] [PubMed]

© 2018 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Frey, L.J. Artificial Intelligence and Integrated Genotype–Phenotype Identification. Genes 2019, 10, 18. https://doi.org/10.3390/genes10010018
