Unmanned Aircraft System (UAS) Technology and Applications in Agriculture

Abstract

1. Introduction

2. Areas of Use

2.1. Field Mapping

2.2. Plant Stress Detection

2.3. Biomass and Field Nutrient Estimation

2.4. Weed Management

2.5. Counting

2.6. Chemical Spraying

2.7. Miscellaneous

3. Platforms and Peripherals

3.1. Platforms

3.2. Imaging

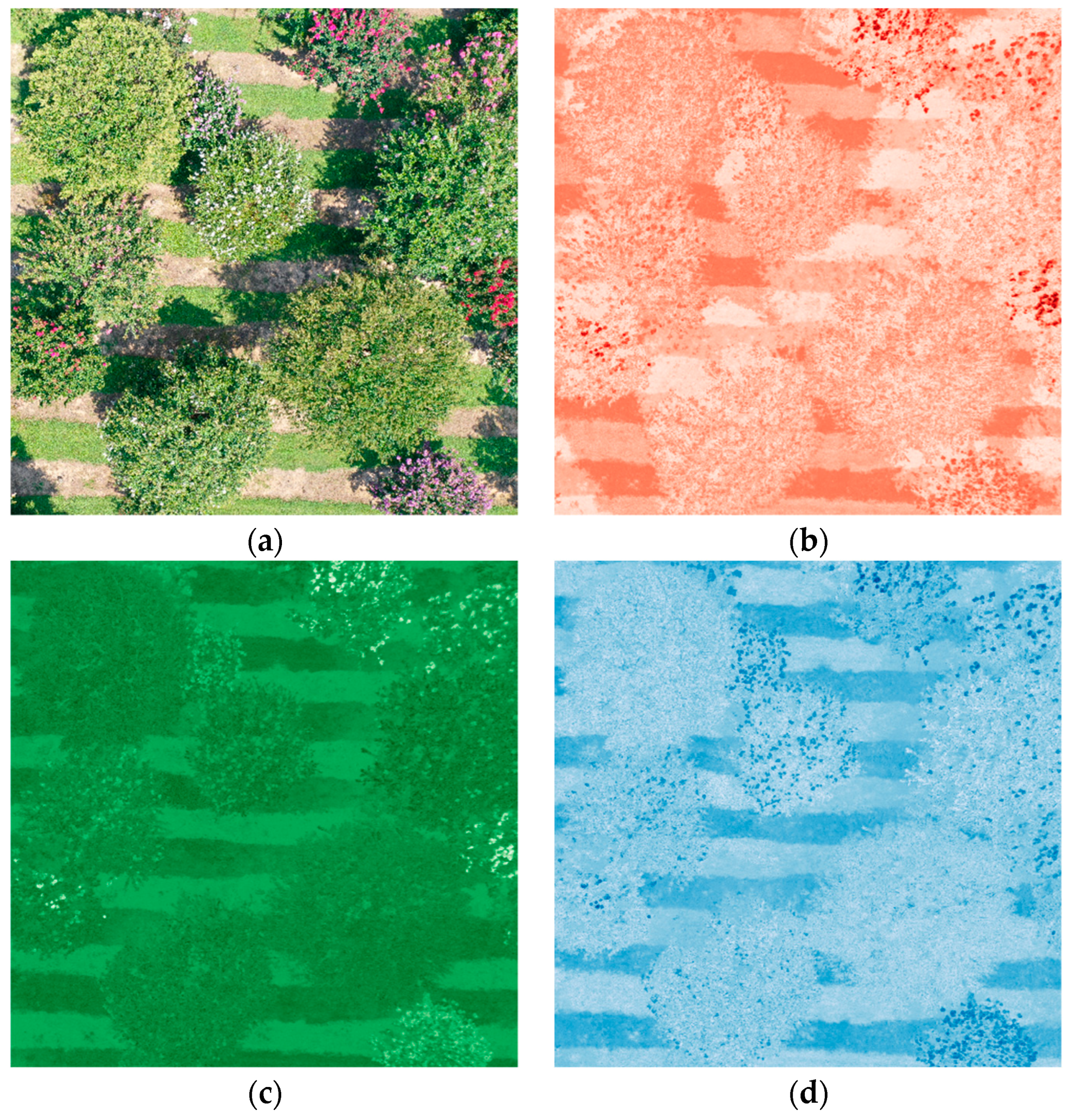

3.2.1. RGB Cameras

3.2.2. Multispectral and NIR Cameras

3.2.3. Hyperspectral Cameras

3.2.4. Thermal Cameras

3.2.5. Depth Sensors

3.3. Spraying Equipment

3.4. Gripping Tools

3.5. Geospatial Technology

4. Data Processing

4.1. Vegetation

4.2. 3D Point Clouds

4.3. Data Processing

4.3.1. Image Processing

4.3.2. Data Processing

4.3.3. Multi-Sensor Processing

4.3.4. Autonomy

5. Considerations

5.1. Legal

5.2. Economic

5.3. Integration and Usability

6. Discussion

6.1. Advantages and Limitations

6.2. Future Areas of Research

Author Contributions

Funding

Conflicts of Interest

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Hassler, S.C.; Baysal-Gurel, F. Unmanned Aircraft System (UAS) Technology and Applications in Agriculture. Agronomy 2019, 9, 618. https://doi.org/10.3390/agronomy9100618
