1. Introduction

Banana, as one of the world’s most important economic crops, is widely cultivated and highly appreciated for its nutritional value [1]. In 2020, China’s banana output reached 11.113 million tons, accounting for a significant share of global production [2,3]. The development and intelligent management of the banana industry have thus become critically important [4,5]. However, accurate estimation of banana plants’ phenotypic parameters remains a major challenge in agriculture [6]. Since plant growth status directly affects yield, leaf count serves as an important indicator of the growth stage of banana plants [7,8]. At present, leaf counting in banana plantations relies primarily on manual observation, leading to low efficiency and high labor intensity [9,10]. Moreover, in the complex environment of banana orchards, leaves often occlude one another and can be easily confused with morphologically similar weeds [11]. Research on banana leaf recognition and counting remains scarce, which in turn restricts the advancement of intelligent plantation management. Therefore, developing a high-precision method for banana leaf recognition and counting is of great practical significance for realizing smart management of banana orchards.

With the rapid advancement of deep learning in recent years, deep learning-based plant leaf counting methods can be broadly divided into three main approaches: regression methods, which directly predict the total number of leaves per plant; detection methods, which first detect each leaf or leaf tip and then perform counting; and segmentation methods, which carry out instance segmentation for every leaf in the image and subsequently tally the leaf count [10,12].

Regression-based approaches predict the number of leaves directly from an image without explicitly detecting each leaf. Ref. [7] introduced a deep learning-based automated leaf counting method that treats leaf counting as a direct regression problem, requiring only the total leaf count per potted plant as annotation. This method offers low annotation cost and easy implementation, but its performance degrades significantly when the number of targets to be predicted is large [13]. To overcome this limitation, ref. [14] proposed a multi-scale regression model that counts leaves at different resolutions and then fuses the predictions from each scale to obtain the final count. This approach not only adapts to leaves of varying sizes and shapes, but also maintains high counting accuracy even when center-point annotations are missing.

Detection-based methods first localize each leaf and then count them. Ref. [15] proposed a flexible deep network, Pheno-Deep Counter, capable of adapting to different plant species and accurately predicting leaf counts across diverse plant images. Ref. [16] developed an end-to-end detection algorithm that employs the YOLOv3 model to detect potted rice leaf tips for total leaf count estimation, demonstrating strong counting performance. Ref. [17] utilized a ResNet50 backbone as a feature extractor and trained the network end-to-end to predict Arabidopsis leaf numbers. Ref. [14] introduced a hybrid detection–regression approach in which the model first identifies leaf center points and then aggregates them to obtain the final count; this strategy achieved excellent performance on a large dataset with an average precision (AP) of 0.94. Ref. [18] improved the CenterNet model for leaf detection and counting in greenhouse-grown cucumber, eggplant, and other crops, effectively detecting leaves of varying sizes and shapes. Ref. [19] applied a two-stage deep learning framework to drone-acquired RGB images (first detecting maize leaves and then counting them), demonstrating effectiveness in real-world conditions. Ref. [20] proposed a dual-stream deep learning framework that segments plants and counts leaves of different sizes from 2D images, achieving strong counting performance. Ref. [21] designed a network based on the encoder–decoder EffUnet++ architecture, employing an EfficientNet-B4 encoder along with redesigned skip connections and residual modules to precisely capture key features and thereby achieve high-precision leaf counting.

Segmentation-based methods first perform semantic or instance segmentation to delineate each leaf or plant region and then count leaves by tallying segmented regions or analyzing connected components. Ref. [20] proposed a dual-stream deep network framework in which one stream conducts multi-scale semantic segmentation of the plant, while the other stream performs regression counting using the segmentation masks as auxiliary inputs. Ref. [22] designed a leaf-counting model that combines SegNet with a custom CNN: leaf pixels are first segmented by SegNet, and the resulting masks are stacked with the original image as input to the counting network. Ref. [23] applied an instance segmentation approach to handle leaves in complex backgrounds, generating individual leaf masks and introducing a local refinement mechanism based on Gaussian low-pass and high-boost filtering to enhance segmentation quality. Other studies have explored attention mechanisms [24] and recurrent structures [25] for per-leaf segmentation and counting. Ref. [26] employed U-Net for leaf segmentation and then used a fine-tuned AlexNet to regress the leaf count from the segmentation results. These works demonstrate that segmentation-based leaf-counting methods can substantially reduce background interference and perform particularly well in scenarios with overlapping or heavily occluded leaves.

Each of these leaf-counting approaches has its limitations. First, although regression-based methods can handle leaves of varying sizes and shapes and remain effective without center-point annotations, they perform poorly under heavy leaf occlusion because direct regression cannot precisely capture the features of occluded leaves [27]. Moreover, such models typically require large amounts of training data to achieve high accuracy. Second, detection-based methods can deliver high-precision leaf localization and counting [14,19], but they incur substantial computational costs, especially when processing large or high-resolution images, and are prone to false positives or duplicate detections in scenes with overlapping leaves or many plant species, which limits their applicability in complex environments. Finally, segmentation-based methods offer fine, pixel-level results but demand costly, precise annotations; they also struggle with under- or over-segmentation in cases of dense overlap or dramatic leaf shape variation, leading to reduced counting accuracy, and they suffer from slower inference speeds and greater computational overhead [22].

Despite the progress made by existing leaf-counting methods, challenges remain under complex conditions such as occlusion, illumination variation, and species diversity. To address the task of banana leaf detection and counting in real orchard environments, this study proposes an enhanced YOLOv8n-MDSD model that delivers both high robustness and accuracy. We designed the C2f_MLCA (Mixed Local Channel Attention) module and integrated it into the backbone; this module combines local attention and channel attention to fuse and weight features across different scales and channels, thereby focusing on salient information and improving key feature extraction [28]. In the neck, we developed a C2f_DWR module, which leverages dilated convolutions coupled with wavelet transforms to enhance convolutional feature-extraction efficiency and effectiveness, capturing multi-scale contextual information for more precise object recognition [29]. To mitigate occlusion effects, the SEAM attention mechanism is introduced, using multi-view feature fusion and consistency regularization to concentrate the model’s focus on occluded regions [30]. In the head, we adopt a Dynamic Head module augmented with self-attention, which integrates scale-awareness, spatial-awareness, and task-awareness to accurately capture object spatial information and reduce small-object omissions [31]. For banana leaf counting, we design a robust counting pipeline that applies automatic thresholding and interquartile range (IQR)-based noise and outlier removal to refine detections [32], followed by HDBSCAN clustering to identify and distinguish leaves belonging to individual banana plants [33]. Finally, the improved algorithm is deployed on an embedded device and validated through field tests in an actual orchard.

In recent years, for agricultural applications, researchers have increasingly deployed lightweight deep learning models on edge devices to meet the requirements of real-time performance and low energy consumption. For example, ref. [34] proposed Faster-YOLO-AP, a lightweight apple detection algorithm based on an improved YOLOv8, which integrates the PDWConv module and DWSConv downsampling technique to enable efficient real-time detection on orchard robots deployed at the edge. Ref. [35] introduced Insect-YOLO, a lightweight pest detection algorithm deployed on an agricultural IoT monitoring platform, achieving an average processing time of approximately 17.0 ms per image, with detection results showing an R² value of 0.99 compared to manual counting. In addition, lightweight detection models have been developed for rice diseases [36] and blueberry ripeness assessment [37], both of which have been efficiently deployed on edge devices such as NVIDIA Jetson, successfully replacing traditional manual inspection. Therefore, applying lightweight models and edge computing to banana leaf recognition and counting can not only enable automated and high-precision leaf detection, but also significantly improve plantation management efficiency, providing a feasible technical solution for the construction of smart banana orchards.

The main contributions of this study are as follows: (1) To address the challenges of reduced target separability in complex banana orchard environments caused by leaf occlusion and overlap, background interference, and illumination variations, we designed an effective multi-scale feature enhancement mechanism that integrates spatial-depth dual-dimensional feature modeling with adaptive clustering inference. (2) We propose YOLOv8n-MDSD, a leaf detection model tailored for complex banana orchard conditions, which incorporates a lightweight local channel attention module (MLCA), a multi-rate depthwise separable dilated convolution module (DWR), and a self-ensemble attention module (SEAM), while introducing a task-aware dynamic detection head (DyHead). (3) We design a robust banana leaf counting method that combines an interquartile range (IQR)-based outlier removal strategy with hierarchical density-based clustering (HDBSCAN) to achieve instance separation and counting of occluded leaves. (4) Finally, the proposed algorithm is deployed on an edge device and validated through field experiments in banana orchards.

2. Materials and Methods

2.1. Image Acquisition and Preprocessing

In this study, banana plant images were collected in October 2023 from a banana orchard at South China Agricultural University. The data were acquired under clear weather and sufficient natural illumination using an iPhone 12 (Apple Inc., Cupertino, CA, USA) and a SONY 5100 digital camera (Sony Corporation, Tokyo, Japan). All images were stored in JPG format, with resolutions of 4032 × 3024 pixels and 6024 × 4000 pixels, respectively, resulting in a total of 466 original images. To preserve image information while reducing computational cost, the images were proportionally resized to 1080 × 810 pixels and 1280 × 720 pixels according to their original resolutions. All images were manually annotated using the LabelImg tool in YOLO format.

A five-fold cross-validation strategy was adopted in the experimental design: the entire dataset was randomly divided into five mutually exclusive subsets, with one subset used as the validation set and the remaining four as the training set in each iteration, thereby ensuring a comprehensive evaluation of model stability and generalization by averaging the performance across five folds. After splitting the dataset into training and validation sets, data augmentation was applied exclusively to the training set to enhance model robustness under varying imaging conditions. The augmentation techniques included rotation, flipping, brightness adjustment, salt-and-pepper noise, and Gaussian noise, with each image randomly subjected to two augmentation operations. The augmented training set size was thereby expanded, while the validation set was preserved as the original images without any augmentation to ensure the independence and objectivity of evaluation. Examples of the original and augmented images are shown in Figure 1a,b.
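The splitting protocol described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' code: the function name `five_fold_splits`, the fixed seed, and the use of integer image indices are hypothetical; only the total of 466 images and the five-fold design come from the text.

```python
import random

def five_fold_splits(n_images, k=5, seed=0):
    """Yield (train_ids, val_ids) for k mutually exclusive folds."""
    ids = list(range(n_images))
    random.Random(seed).shuffle(ids)        # random partition (the seed is an assumption)
    folds = [ids[i::k] for i in range(k)]   # k disjoint subsets of near-equal size
    for i in range(k):
        val_ids = folds[i]
        train_ids = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train_ids, val_ids

splits = list(five_fold_splits(466))
for train_ids, val_ids in splits:
    # Augmentation would be applied to train_ids only; val_ids stay as original images.
    assert not set(train_ids) & set(val_ids)
```

Splitting before augmenting matters here: if augmented copies were generated first, variants of the same photograph could land in both the training and validation folds and inflate the validation scores.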

2.2. Problem Analysis and Motivation

In the complex natural environment of the banana plantation, the task of detecting and counting banana leaves faces the following major challenges [38]. First, large variations in leaf scale and severe occlusion and overlap make it difficult to distinguish individual instances using only local texture or edge information, resulting in reduced detection and counting accuracy. Second, the leaf color closely resembles that of background weeds, causing foreground–background confusion under natural background interference and impairing precise leaf localization and recognition [39]. Moreover, drastic variations in natural illumination lead to fluctuations in leaf texture, color, and morphology with changing capture conditions [40], inducing feature drift and exacerbating instability in detection results. Finally, the absence of depth and spatial position information for occluded leaves makes 2D-image-based detection prone to false positives and false negatives, thereby undermining subsequent counting accuracy.

However, in the complex and dynamic banana plantation environment, existing feature extraction modules struggle to balance local detail enhancement with global contextual modeling. Traditional convolutions, constrained by a fixed receptive field, cannot adapt to the structural variations of leaves at different scales. The detection head’s limited sensitivity to scale, spatial position, and semantic tasks further undermines its adaptability to complex environmental changes. Moreover, a single two-dimensional feature space is insufficient to fully represent the spatial hierarchical relationships of leaves in occluded scenarios.

Therefore, this study proposes a spatial-depth-collaborative inference strategy with integrated multi-scale feature enhancement. To address the inability of existing detection feature extraction modules to simultaneously enhance local details and model global context, we design and introduce a Mixed Local Channel Attention (MLCA) module into the backbone network. MLCA employs local average pooling and global average pooling branches to extract fine-grained details and holistic contextual features [41], respectively, and fuses them to amplify responses in critical local regions while suppressing background interference. We also adopt a Self-Ensembling Attention Mechanism (SEAM), which uses multi-scale patch partitioning and a multi-branch self-ensembling fusion strategy, together with feature-consistency regularization, to strengthen feature representations in unobstructed regions and mitigate occlusion noise. These two modules jointly overcome the shortcomings of traditional detection feature extractors in local detail representation, global context modeling, and feature consistency under occluded conditions.

To address the limited receptive field of conventional convolutions and their inability to capture structural variations of leaves at different scales [42], we enhance the standard C2f module by introducing multi-rate depthwise separable dilated convolutions (DWR_Conv) during feature extraction. Unlike traditional single-dilation-rate convolutions or static multi-scale fusion methods, our approach employs a two-step residualized feature extraction strategy (regional residualization followed by semantic residualization) to further expand the receptive field. This design significantly improves the model’s contextual modeling ability and scale adaptability for leaves of varying sizes.

To address the detection head’s limited adaptability to complex environmental variations, we adopt a task-aware dynamic detection head (DyHead) [43]. Unlike traditional detection heads that rely on fixed feature-fusion pathways, DyHead employs a tri-level self-attention mechanism (scale-aware, spatial-aware, and task-aware) to dynamically adjust the feature-fusion strategy across different feature levels and spatial locations, thereby adapting to targets of varying sizes, occlusion relationships, and task semantic changes.

To address the inability of a single 2D feature space to fully represent the spatial hierarchy of leaves under occlusion, this study proposes a detection-guided spatial-depth positional modeling method. Building on the detected leaf center points and integrating depth data from a RealSense sensor, we construct a dual-dimensional spatial-depth feature representation. Depth data robustness is enhanced via automatic thresholding and an interquartile range (IQR)-based outlier removal strategy. Finally, HDBSCAN clustering is applied to separate occluded leaf instances, enabling high-precision banana leaf counting. Compared to conventional counting approaches based solely on image segmentation or density estimation, our detection-driven spatial-depth-collaborative inference fully exploits the complementarity of spatial and depth information, effectively suppressing background interference and accurately modeling the hierarchical relationships of leaves in occluded scenarios.

2.3. YOLOv8n-MDSD Network

This study proposes YOLOv8n-MDSD, a banana plant leaf detection model developed by enhancing the YOLOv8n architecture. First, the C2f module in the backbone’s feature extraction stage is replaced with the C2f_MLCA module to strengthen the capture of multi-scale features and contextual information. Second, in the neck, the C2f modules are improved and the SEAM attention mechanism is integrated to boost the efficiency of multi-scale information fusion and improve occlusion detection performance in complex scenarios. Finally, we introduce a novel dynamic detection head, DyHead, which unifies scale-aware, spatial-aware, and task-aware mechanisms into a single framework, significantly enhancing the model’s adaptability to diverse targets. The overall YOLOv8n-MDSD architecture is illustrated in Figure 2.

2.3.1. C2f_MLCA Module

In the backbone network, we design the C2f_MLCA module. The MLCA module combines local attention and channel attention mechanisms to enhance the capture of salient features by performing feature fusion and weighting across multiple scales and channels. To maintain computational efficiency, the MLCA module is deliberately kept lightweight, avoiding excessive overhead. This design allows for performance improvements without significantly increasing model complexity. By strengthening the network’s ability to capture multi-scale features and contextual information, the MLCA module improves feature extraction under challenging conditions where leaf and background colors are similar.

After the standard convolution in the C2f module, hybrid enhancement is achieved by combining local and global feature attention. Figure 3 illustrates the module architecture. Given an input feature map, the MLCA module first obtains local features via Local Average Pooling (LAP) (Equation (1)) and global features via Global Average Pooling (GAP) (Equation (2)). Each is then passed through a one-dimensional convolution (Conv1D) followed by a Sigmoid activation to yield local (Equation (3)) and global (Equation (4)) attention weights. After upsampling to the original spatial dimensions, the local and global attention weights are fused to produce the hybrid attention (Equation (5)). Finally, the input feature map is enhanced by channel-wise weighting with the hybrid attention to obtain the output feature map (Equation (6)), where the fusion is governed by a ratio factor and ⊙ denotes element-wise multiplication.
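To make the two pooling branches concrete, the sketch below computes local and global average pooling on a single toy channel. It covers only the pooling steps; the Conv1D, Sigmoid, and fusion stages are omitted. The function names and the 2 × 2 pooling grid are assumptions chosen for illustration.

```python
def global_avg_pool(fmap):
    """Mean of all values in one channel (H x W nested list)."""
    h, w = len(fmap), len(fmap[0])
    return sum(sum(row) for row in fmap) / (h * w)

def local_avg_pool(fmap, k):
    """Average over a k x k grid of non-overlapping patches (H and W divisible by k)."""
    h, w = len(fmap), len(fmap[0])
    ph, pw = h // k, w // k
    return [[sum(fmap[r][c]
                 for r in range(i * ph, (i + 1) * ph)
                 for c in range(j * pw, (j + 1) * pw)) / (ph * pw)
             for j in range(k)]
            for i in range(k)]

channel = [[1.0, 2.0, 3.0, 4.0],
           [5.0, 6.0, 7.0, 8.0],
           [9.0, 10.0, 11.0, 12.0],
           [13.0, 14.0, 15.0, 16.0]]
local = local_avg_pool(channel, k=2)   # 2 x 2 map of patch means
glob = global_avg_pool(channel)        # one scalar summarising the whole channel
```

The local branch keeps a coarse spatial layout (one mean per patch), whereas the global branch collapses the channel to a single value. This is why the two are complementary for detail and context.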

2.3.2. C2f_DWR Module

We further design the C2f_DWR module, whose architecture is shown in Figure 4. The DWR_Conv (Dilated Wavelet Residual Convolution) combines dilated convolution with wavelet transform to improve both the efficiency and effectiveness of feature extraction in convolutional neural networks (CNNs). Dilated convolution increases the spacing between kernel elements to expand the receptive field without adding computational complexity, enabling the network to capture contextual information over a larger area without increasing the number of parameters. Wavelet transform, on the other hand, effectively captures the relationship between local details and global patterns, helping the network to process complex image features such as edges, textures, and shapes. By integrating these two operations, the C2f_DWR module achieves more refined feature extraction and richer feature representation.

Specifically, given an input feature map $F$, we first perform regional residualization. Depthwise separable dilated convolutions with different dilation rates are employed in parallel to process the input features and extract multi-scale regional features, as shown in Equation (7), which applies a depthwise separable convolution at each dilation rate to produce the regional residual feature. Subsequently, semantic residualization is performed, where a depthwise separable convolution with a fixed small dilation rate (small receptive field; typically 1 or 2) is used to further refine the regional residual feature for semantic residual extraction. This primarily serves to supplement morphological details and suppress noise, as shown in Equation (8). Finally, the semantic residual feature is added element-wise to the input feature $F$ (residual connection), completing the residual fusion and yielding the output feature of the C2f_DWR module (Equation (9)).
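The claim that dilation enlarges the receptive field without adding parameters can be checked with a short calculation. The sketch below uses the standard relation for the span of a dilated kernel, $k + (k-1)(d-1)$; the dilation rates 1, 3, and 5, the 3 × 3 kernel, and the 64 channels are illustrative assumptions, not values taken from the text.

```python
def effective_kernel_size(kernel_size, dilation):
    """Span covered by a dilated kernel along one axis: k + (k - 1) * (d - 1)."""
    return kernel_size + (kernel_size - 1) * (dilation - 1)

def depthwise_param_count(kernel_size, channels):
    """Weights in a depthwise k x k convolution (bias ignored); independent of dilation."""
    return kernel_size * kernel_size * channels

for d in (1, 3, 5):  # illustrative dilation rates only
    print(d, effective_kernel_size(3, d), depthwise_param_count(3, 64))
```

Each parallel branch therefore sees a different spatial extent at the same parameter cost, which is what allows the regional features to be multi-scale.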

2.3.3. SEAM Module

The Self-Ensemble Attention Mechanism (SEAM) is introduced to address issues such as mutual occlusion among banana leaves. By leveraging multi-view feature fusion and consistency regularization, SEAM enhances the model’s robustness and generalization, making it particularly well suited for handling occlusions and multi-scale feature integration. Its key advantages include the following: (1) Multi-view feature fusion: Input images are subjected to various transformations (e.g., rotation, scaling), producing feature maps from different perspectives that are then fused to provide a more comprehensive representation of each target. (2) Consistency regularization: During training, a consistency loss is imposed to ensure that predictions for the same target remain stable across different views, thereby improving both stability and robustness. SEAM effectively manages occlusion relationships among overlapping objects and, through its multi-scale feature fusion capability, strengthens the model’s ability to detect targets of varying sizes.

The architecture of the SEAM module is shown in Figure 5. On the left side of Figure 5 is the overall SEAM framework, which comprises three CSMM modules operating on patches of sizes 6, 7, and 8. Each CSMM module begins by applying average pooling to the input features in order to capture local contextual information. A subsequent channel expansion operation then transforms the feature dimensionality. Finally, the multi-scale feature maps are fused via element-wise multiplication, enabling interaction across scales and further amplifying the response of salient features.

The right side of Figure 5 details the internal structure of the CSMM module. Within each scale-specific branch, the input patch is first encoded by a Patch Embedding module. The resulting features then pass through GELU activation and Batch Normalization to standardize their distribution. Next, a Depthwise Convolution extracts local spatial features, and a Pointwise Convolution fuses information across channels, thereby capturing the interdependencies between spatial and channel dimensions. In this way, SEAM not only reweights salient features at multiple spatial scales, but also leverages an inter-channel attention mechanism to further filter critical information, achieving finer-grained feature discrimination.

2.3.4. DyHead Detection Head

The complexity of localization and classification tasks in object detection has driven a proliferation of diverse approaches. Traditional object detection research has focused on improving the performance of individual detection heads but has not offered a unified perspective, which is the gap that Dynamic Head (DyHead) addresses. To enhance model performance, researchers have optimized detection heads along three dimensions: object scale, spatial location, and task requirements. In this study, we adopt the DyHead architecture (Figure 6), proposed by Microsoft Research in 2021, which provides a dynamic detection design capable of adjusting its output structure via a dynamic mechanism. Specifically, DyHead dynamically adapts its outputs based on the contextual information in the input feature map, rather than relying on a fixed head configuration. This dynamic adjustment allows DyHead to flexibly accommodate the diversity of objects across different scenes, thereby improving overall detection performance.

In the DyHead architecture used in this study, the input four-dimensional tensor $F \in \mathbb{R}^{L \times H \times W \times C}$ is reinterpreted as a three-dimensional tensor $F \in \mathbb{R}^{L \times S \times C}$, where $L$ denotes the feature level, $S$ the spatial position (i.e., flattened $H \times W$ locations), and $C$ the number of channels. DyHead employs an independent design philosophy by applying three separate attention functions to a given feature tensor $F$, as expressed in Equation (10). Here, $\pi_L$, $\pi_S$, and $\pi_C$ denote the attention functions applied independently along the $L$, $S$, and $C$ dimensions, respectively.
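For reference, the sequential composition of the three attentions, as formulated in the original DyHead paper, can be written as below. The name $W(\cdot)$ for the overall attention function follows that paper rather than the present text; $\pi_L$, $\pi_S$, and $\pi_C$ are the attention functions along the level, spatial, and channel dimensions, and $F$ is the feature tensor.

```latex
W(F) = \pi_C\Big(\pi_S\big(\pi_L(F)\cdot F\big)\cdot F\Big)\cdot F
```

Applying the attentions one dimension at a time avoids learning a single attention over the full $L \times S \times C$ tensor, which would be far more expensive.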

Scale-aware attention focuses on feature levels by dynamically adjusting the weights of different scale features and integrating semantic correlations, thereby enhancing the model’s ability to perceive objects across scales. Spatial-aware attention employs deformable convolutions to learn a sparse attention map over spatial positions, fusing information from multiple feature levels at each location to improve the model’s adaptability to variations in object shape and position. Task-aware attention concentrates on channel selection, dynamically enabling or disabling feature channels to support different tasks and strengthening the representation capacity for task-specific features. By incorporating these three attention mechanisms—scale-aware, spatial-aware, and task-aware—DyHead comprehensively enhances the model’s perception of object features.

2.4. Banana Plant Leaf Counting Method

To address challenges such as noise interference, irregular leaf morphology, and occlusions, we propose a highly robust counting approach. This method integrates deep learning-based object detection, outlier detection techniques, and the HDBSCAN clustering algorithm to group leaves belonging to the same plant. By overcoming the effects of leaf occlusion and non-uniform depth distribution in complex environments, it enables accurate counting of banana leaves in real-world plantation settings.

2.4.1. Image Processing

In this study, both RGB and depth images were synchronously acquired using a RealSense camera and spatially aligned. Leaf detection of banana plants was performed solely on the RGB images, while the depth data were incorporated in the subsequent counting stage to improve overall accuracy. Specifically, the improved YOLOv8n-MDSD model was employed to detect banana leaves in the RGB images, and the pixel coordinates of the center point of each bounding box were extracted. The corresponding depth values were then obtained from the aligned depth images according to these coordinates, thereby effectively integrating the two-dimensional detection results with three-dimensional spatial information. This approach enables the discrimination of spatial distances between leaves and the camera, allowing for the differentiation of targets at different depth levels.
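The center-point depth lookup described above can be sketched as follows. This is a simplified illustration that assumes the depth image is already aligned to the RGB frame and stored as a row-major grid in metres; the function names, the `(x1, y1, x2, y2)` box format, and the toy values are hypothetical.

```python
def box_center(box):
    """Pixel center (u, v) of an (x1, y1, x2, y2) bounding box."""
    x1, y1, x2, y2 = box
    return int((x1 + x2) / 2), int((y1 + y2) / 2)

def centers_with_depth(boxes, depth_map):
    """Attach the aligned depth value to each box center; depth_map is indexed [v][u]."""
    points = []
    for box in boxes:
        u, v = box_center(box)
        points.append((u, v, depth_map[v][u]))
    return points

depth_map = [[0.0] * 6 for _ in range(4)]   # toy 4 x 6 aligned depth image
depth_map[1][2] = 1.25
depth_map[3][4] = 2.10
boxes = [(1, 0, 3, 2), (3, 2, 5, 4)]         # hypothetical leaf detections
points = centers_with_depth(boxes, depth_map)
```

Each detection thus becomes a `(u, v, depth)` triple, which is the representation the later outlier-removal and clustering stages operate on.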

In practical applications, edge computing devices typically perform leaf counting only for banana plants located close to the camera, while distant plants and background leaves often introduce interference to the counting results. By leveraging depth information, foreground leaves belonging to the target plants can be accurately identified, and background leaves can be excluded, thereby substantially reducing miscounts and double counts. This process significantly enhances the reliability and accuracy of banana leaf counting.

2.4.2. Outlier Detection Method

To enhance the robustness and accuracy of the depth data, this study combines a dynamic thresholding approach with the interquartile range (IQR) method to perform adaptive filtering on the depth values at the object centroids, thereby removing outliers.

The dynamic thresholding method automatically adapts to varying data distributions by computing the mean and standard deviation of the depth values at all detected centroids and then dynamically adjusting the acceptance bounds to avoid the limitations of a fixed threshold. The dynamic threshold is defined in Equation (11), where $\mu$ is the mean depth of the detected centroids, $\sigma$ is their standard deviation, and $k$ is a scalar coefficient. This criterion is used to flag and exclude extreme outliers.

The IQR method is a classic and widely used statistical technique for outlier detection. It is defined in Equation (12), where $Q_1$ is the first quartile (the value at the 25th percentile of the ordered dataset) and $Q_3$ is the third quartile (the value at the 75th percentile). The IQR represents the spread of the middle 50% of the data.
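The two filtering stages can be sketched together as below. This is an illustration under assumptions rather than the authors' implementation: the acceptance band of mean ± k·std, the value k = 2, and the conventional 1.5 × IQR fences are not stated in the text, and the depth values are invented.

```python
import statistics

def dynamic_threshold_filter(depths, k=2.0):
    """Keep depths within mean +/- k * std (the form and k are assumptions)."""
    mu = statistics.mean(depths)
    sigma = statistics.pstdev(depths)
    return [d for d in depths if abs(d - mu) <= k * sigma]

def iqr_filter(depths, factor=1.5):
    """Keep depths within [Q1 - factor * IQR, Q3 + factor * IQR]."""
    q1, _, q3 = statistics.quantiles(depths, n=4, method="inclusive")
    iqr = q3 - q1
    return [d for d in depths if q1 - factor * iqr <= d <= q3 + factor * iqr]

depths = [1.0, 1.1, 1.2, 1.1, 1.0, 1.3, 6.0]   # toy centroid depths; 6.0 mimics a background hit
kept = iqr_filter(dynamic_threshold_filter(depths))
```

The mean-and-deviation test removes gross outliers first, so the quartiles used by the second stage are computed on data that is already closer to the foreground plant.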

2.4.3. HDBSCAN Clustering

HDBSCAN is a density-based clustering algorithm well suited for spatial data, especially when the data contain noise or irregular shapes. Unlike traditional clustering methods such as K-Means, HDBSCAN does not require a predefined number of clusters; instead, it adapts the clustering structure based on changes in data density. Banana plant leaves often exhibit irregular shapes and distributions, and HDBSCAN can effectively handle these non-standard morphologies without assuming spherical or regularly shaped clusters. By avoiding the need to specify the number of clusters in advance, HDBSCAN performs adaptive clustering across regions of varying density in complex environments—such as overlapping leaves or cluttered backgrounds—and is thus particularly well suited for datasets with noise or complex distributions.

In HDBSCAN clustering, the core distance of a point $x$, i.e., the distance from $x$ to its $k$-th nearest neighbor, is defined in Equation (13), where $k$ represents the minimum number of neighboring points required to designate $x$ as a core point. The core distance quantifies local density: a smaller core distance implies a higher density around $x$. The mutual reachability distance between two points $x$ and $p$ is then defined as the maximum of the core distances of $x$ and $p$ and their Euclidean distance, as shown in Equation (14). For the parameter settings, the sparsity and heterogeneity of leaf depth distribution in banana orchard scenarios were comprehensively considered. Ultimately, a minimum sample count of 2 and a minimum cluster unit size of 2 were chosen. This configuration reliably groups leaves from the same plant into the same cluster based on the three-dimensional spatial density distribution of their detected centroids.
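The two distances that HDBSCAN builds on can be illustrated directly. The sketch below is not a clustering implementation. It only evaluates the core distance and the mutual reachability distance on toy `(u, v, depth)` centroids. Excluding the point itself when ranking neighbors is a convention chosen here (library implementations may count it), and the coordinates are invented.

```python
import math

def core_distance(points, i, k):
    """Distance from points[i] to its k-th nearest neighbour (the point itself excluded)."""
    dists = sorted(math.dist(points[i], p) for j, p in enumerate(points) if j != i)
    return dists[k - 1]

def mutual_reachability(points, i, j, k):
    """max(core_k(x_i), core_k(x_j), d(x_i, x_j))."""
    return max(core_distance(points, i, k),
               core_distance(points, j, k),
               math.dist(points[i], points[j]))

# Toy centroids: three close together and one isolated point.
pts = [(0.0, 0.0, 1.0), (1.0, 0.0, 1.0), (0.0, 1.0, 1.0), (10.0, 10.0, 3.0)]
d01 = mutual_reachability(pts, 0, 1, k=2)   # both points lie in the dense group
d03 = mutual_reachability(pts, 0, 3, k=2)   # the second point is isolated
```

Points in the dense group stay close under this metric, while the isolated point is pushed far from everything. This is how sparse background detections end up as noise or in separate clusters.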

2.5. Experimental Environment

The experiments in this study were conducted on Ubuntu 20.04.6 LTS with 125 GB of RAM and an NVIDIA GeForce RTX 3090 GPU. All scripts were executed under Python 3.9 using the PyTorch 2.0.1 deep learning framework, with training accelerated by NVIDIA CUDA 11.8.

During model training, we employed the stochastic gradient descent (SGD) optimizer. Owing to its inherent randomness, SGD can reduce the likelihood of the model becoming trapped in poor local minima. The training hyperparameters were configured as follows: 300 epochs, a batch size of 16, an initial learning rate of 0.01, and a weight decay of 0.0005, while all other parameters were kept at the default settings of the original YOLOv8 model. To enhance the model’s robustness and increase data diversity, we used mosaic data augmentation, which randomly combines four images, each subjected to various augmentation operations, into a single mosaic, thereby improving the model’s generalization ability. Mosaic augmentation was disabled during the final 10 epochs of training so that the model could be refined on images without mosaic composition.
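For readers who wish to reproduce a comparable setup, the stated hyperparameters could be expressed as follows. This is a sketch that assumes the Ultralytics implementation of YOLOv8, which the text does not explicitly name. The dictionary keys are Ultralytics `train()` argument names, while the two file names are hypothetical placeholders for the model definition and the dataset description.

```python
# Hyperparameters stated in the text, keyed by Ultralytics `train()` argument names.
TRAIN_ARGS = {
    "epochs": 300,
    "batch": 16,
    "optimizer": "SGD",
    "lr0": 0.01,             # initial learning rate
    "weight_decay": 0.0005,
    "mosaic": 1.0,           # mosaic probability (the Ultralytics default)
    "close_mosaic": 10,      # turn mosaic off for the final 10 epochs
}


def train(model_cfg="yolov8n.yaml", data_cfg="banana_leaf.yaml"):
    """Launch training; both file names are hypothetical placeholders."""
    # Imported lazily so the configuration can be inspected without the package.
    from ultralytics import YOLO

    model = YOLO(model_cfg)
    return model.train(data=data_cfg, **TRAIN_ARGS)
```

Calling `train()` requires the `ultralytics` package and a dataset file. The modified YOLOv8n-MDSD architecture would need its own model definition in place of `yolov8n.yaml`.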

2.6. Evaluation Metrics

To compare the performance of different models, we employed recall (R), precision (P), average precision (AP), mean average precision (mAP), computational complexity, and parameter count as quantitative evaluation metrics for banana plant leaf detection. The corresponding formulas are given in Equations (15)–(18), where $TP$ denotes the number of true positive predictions, $FP$ denotes the number of false positive predictions, and $FN$ denotes the number of false negative predictions. In this study, the average AP over IoU thresholds from 0.5 to 0.95 with a step size of 0.05 is denoted as mAP50-95, and the AP at an IoU threshold of 0.5 is denoted as mAP50.
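The standard definitions behind these metrics can be illustrated with a short sketch. The function names and the example counts are hypothetical. The computation of AP itself (the area under the precision-recall curve) is omitted, so `mean_ap` simply averages AP values that are assumed to be already available for each IoU threshold.

```python
def precision(tp, fp):
    """P = TP / (TP + FP); returns 0.0 when there are no predictions."""
    return tp / (tp + fp) if tp + fp else 0.0


def recall(tp, fn):
    """R = TP / (TP + FN); returns 0.0 when there are no ground-truth objects."""
    return tp / (tp + fn) if tp + fn else 0.0


def iou_thresholds(start=0.5, stop=0.95, step=0.05):
    """IoU thresholds 0.50, 0.55, ..., 0.95 used for mAP50-95."""
    n = round((stop - start) / step) + 1
    return [round(start + i * step, 2) for i in range(n)]


def mean_ap(ap_per_threshold):
    """Average of precomputed AP values, one per IoU threshold."""
    return sum(ap_per_threshold) / len(ap_per_threshold)


# Hypothetical detection counts at a single IoU threshold.
p = precision(tp=80, fp=20)
r = recall(tp=80, fn=10)
thresholds = iou_thresholds()
```

With these counts, 80 of 100 predictions are correct and 80 of 90 ground-truth leaves are found. `thresholds` contains the ten IoU levels over which AP is averaged to obtain mAP50-95.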

4. Discussion

In this study, we propose a banana leaf detection and counting method tailored for complex plantation environments, integrating a multi-scale feature enhancement and spatial-depth-collaborative reasoning strategy to achieve accurate leaf recognition and counting. Several optimization modules were incorporated into the YOLOv8n baseline model, including the SEAM module, C2f_MLCA, C2f_DWR, and the DyHead dynamic detection head. These enhancements effectively improve the model’s feature extraction capability and robustness under challenging field conditions. Specifically, the SEAM module focuses on occlusion-prone regions, alleviating detection difficulties caused by overlapping leaves; the C2f_DWR module, through the introduction of dilated convolution and wavelet transform, strengthens the ability to capture multi-scale contextual information; the C2f_MLCA module enhances attention to key features in the backbone; and the DyHead module facilitates multi-dimensional feature interaction across scale, spatial, and task-aware dimensions in the detection head, thereby improving detection performance for small and occluded objects. In addition, a robust leaf counting strategy—combining dynamic thresholding, the interquartile range (IQR) method, and the HDBSCAN clustering algorithm—enables accurate estimation of leaf counts even under irregular morphology and severe occlusion, effectively mitigating the impact of field complexity on counting accuracy.

Compared with existing YOLO-based improvements, the distinctiveness of this work lies in the synergistic integration of multiple modules with a robust counting strategy: not only does the detection network achieve enhanced adaptability to small-scale and occluded targets, but the introduction of clustering and statistical methods in the post-processing stage also significantly improves the accuracy and robustness of leaf counting. This end-to-end improvement demonstrates superior generalization and applicability in real banana plantation scenarios. Unlike prior studies that relied solely on single-module enhancements, our approach exhibits more stable performance under complex field conditions.

Despite the favorable performance of YOLOv8n-MDSD across multiple scenarios, several limitations remain. First, the dataset used in this study is relatively limited in size and covers only a single crop (banana), leaving its generalization across different crops, seasons, and geographic regions to be further verified. Second, detection accuracy still decreases under extreme lighting conditions (overly bright or dark) or in cases of severe leaf overlap; moreover, the depth measurement precision of the RealSense sensor is constrained in complex field environments, which may affect the accuracy of leaf counting. Third, the dataset distribution exhibits some imbalance, with limited samples from certain growth stages, potentially leading to overfitting in specific scenarios. Furthermore, as the images were captured from random single-view angles, some leaves may be occluded by pseudostems or other leaves and thus excluded from the camera’s field of view, highlighting a fundamental limitation of single-view methods. Future work could incorporate multi-view image acquisition and fusion to systematically evaluate model stability and robustness under partial occlusion, thereby further improving its practical value. Finally, long-term inference on resource-constrained embedded devices (e.g., Jetson Orin NX) may be affected by thermal issues and computational bottlenecks, reducing stability and detection accuracy.

Future research can be advanced in several directions: (1) expanding data collection across different years and locations to validate the model’s generalization under diverse environments and cultivation conditions; (2) conducting transfer learning and evaluation on other crops to assess method generalizability; (3) collaborating with farmers to implement real-time deployment tests, further verifying the model’s applicability and practical value in agricultural production; (4) integrating the model with crop management decision-support tools to provide actionable insights for precision agriculture; (5) exploring richer data augmentation strategies and multimodal inputs (e.g., point cloud and hyperspectral data) to enhance robustness under extreme conditions; and (6) further optimizing the network architecture and parameter size to achieve improved efficiency and stability on edge devices while maintaining high accuracy.

5. Conclusions

In this study, we propose the YOLOv8n-MDSD model, which achieves high-precision detection and effectively addresses leaf occlusion by integrating multiple optimization modules. Compared with the original model, mAP50 and mAP50-95 increase by 0.68% and 2.08%, reaching 89.32% and 65.38%, respectively. When compared with other mainstream models, the YOLOv8n-MDSD model delivers the best overall performance.

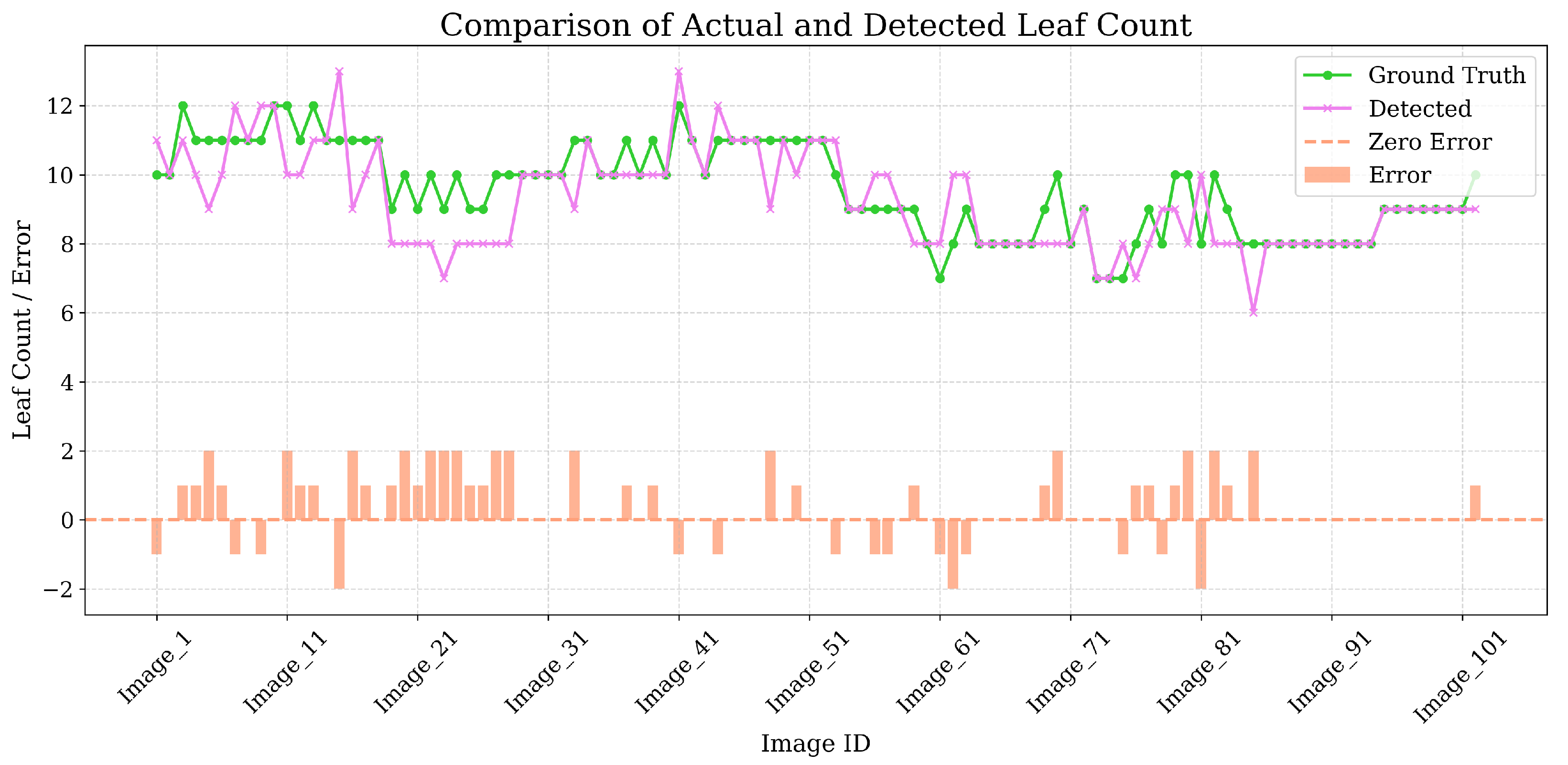

By leveraging the IQR method alongside the alignment of RGB and depth images, we introduce a banana leaf counting approach that first removes anomalous depth values encountered during acquisition and replaces them with the median value within the local neighborhood. Experimental results demonstrate that this method achieves high counting accuracy under real-world conditions, with an MAE of 0.67 and an RMSE of 1.01. The errors primarily arise from missed detections of heavily occluded leaves and occasional false positives. Overall, the proposed method exhibits robust accuracy and stability, effectively fulfilling the task of counting banana leaves.
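For reference, the two counting-error metrics quoted above follow their usual definitions, which can be sketched as below. The per-plant counts are hypothetical and are not data from this study.

```python
from math import sqrt


def mae(pred, true):
    """Mean absolute error between predicted and manually counted leaves."""
    return sum(abs(p - t) for p, t in zip(pred, true)) / len(true)


def rmse(pred, true):
    """Root mean squared error; penalises large miscounts more than MAE."""
    return sqrt(sum((p - t) ** 2 for p, t in zip(pred, true)) / len(true))


# Hypothetical per-plant leaf counts: model output vs. manual ground truth.
predicted = [8, 10, 7, 9, 12]
manual = [8, 9, 7, 11, 12]

count_mae = mae(predicted, manual)
count_rmse = rmse(predicted, manual)
```

Because RMSE squares each error before averaging, a single plant miscounted by two leaves raises it more than two plants miscounted by one leaf each. This is why the RMSE reported in the text is larger than the MAE.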

We deployed the YOLOv8n-MDSD model on an NVIDIA Jetson Orin NX embedded platform mounted on a Scout Mini wheeled chassis and conducted in-field tests within a banana plantation. The experiments evaluated the model’s performance and suitability in real-world conditions and validated the accuracy of the leaf-counting method. The results indicate that, particularly for plants located near the device, the leaf-count error remains within 1–2 leaves, with 100% count accuracy achieved in most cases. These findings demonstrate the algorithm’s adaptability and stability in complex scenarios, enabling fully automated and precise counting of banana leaves.

Future work will focus on enhancing the model’s accuracy in detecting and counting occluded leaves under complex conditions. On one hand, we will optimize the YOLOv8n-MDSD network architecture and introduce multimodal fusion techniques to strengthen robustness in challenging scenarios; on the other hand, we will investigate lightweight model designs and inference-acceleration schemes for embedded platforms to improve the system’s real-time performance and energy efficiency. Furthermore, the algorithm will be extended to different growth stages and diverse climatic conditions to establish a more intelligent banana plantation monitoring system. Finally, we will explore the intrinsic relationships between leaf count, plant health, and yield to provide technical support for precision management and efficient development of the banana industry.