Comparative Analysis of Base-Width-Based Annotation Box Ratios for Vine Trunk and Support Post Detection Performance in Agricultural Autonomous Navigation Environments

Abstract

1. Introduction

2. Materials and Methods

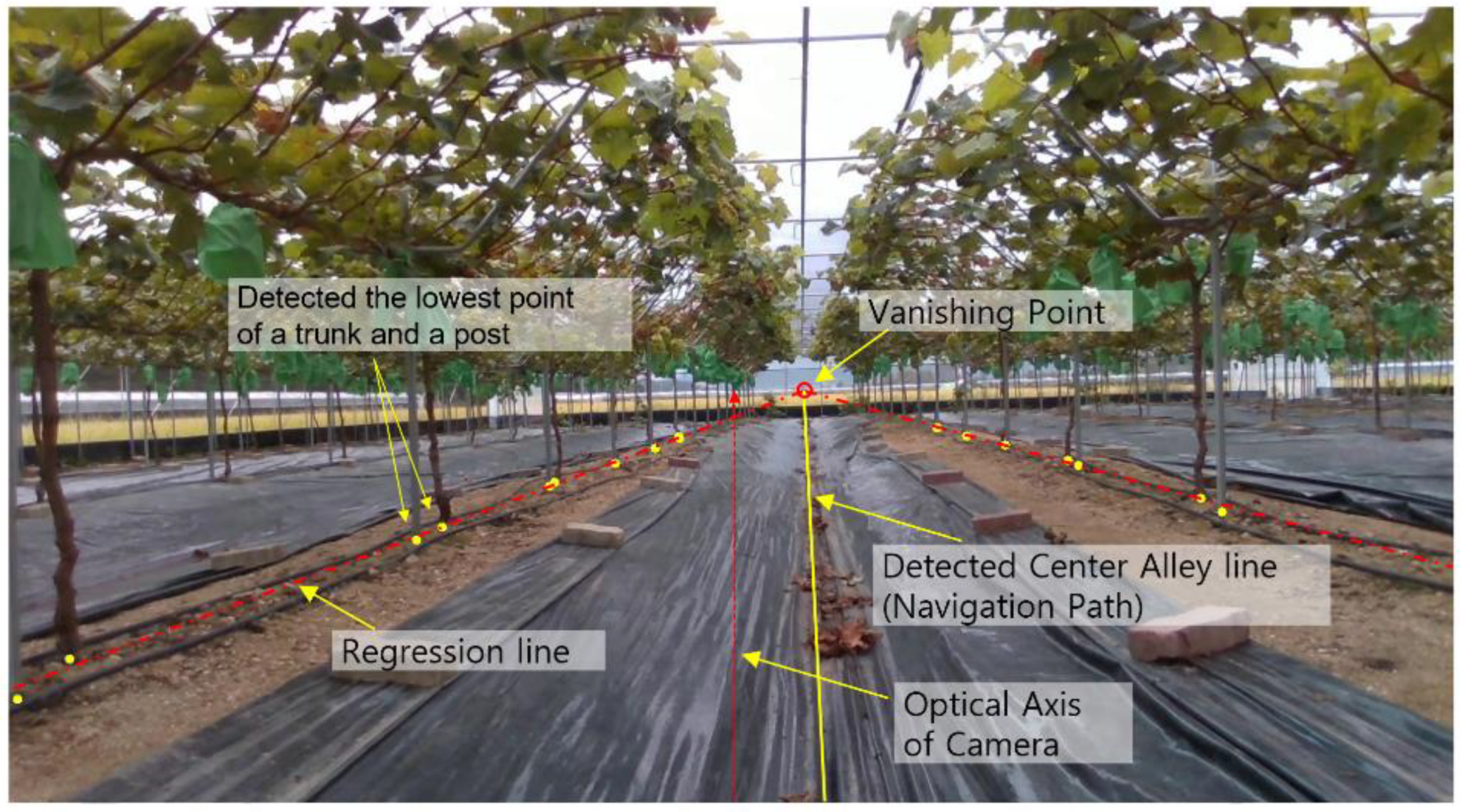

2.1. Greenhouse Vineyard Environment

2.2. Annotation Methodology

| Algorithm 1. StandardizedBoundingBoxGeneration |
|---|
| Require: pre_annotation_dataset // Dataset extracted from Pre-annotation Phase |
| Require: aspect_ratios // Predefined list of aspect ratios (aW × bW) |
| 1: function StandardizedBoundingBoxGen(pre_annotation_dataset, aspect_ratios) |
| 2: object_base_endpoints ← LoadPreAnnotationData(pre_annotation_dataset) |
| 3: objects_with_width ← CalculateBaseWidth(object_base_endpoints) |
| 4: standardized_objects ← ApplyAspectRatios(objects_with_width, aspect_ratios) |
| 5: positioned_boxes ← PositionBoundingBoxes(standardized_objects) |
| 6: standard_format_annotations ← ConvertToStandardFormat(positioned_boxes) |
| 7: verified_annotations ← VerifyBoundingBoxes(standard_format_annotations) |
| 8: final_annotation_dataset ← GenerateFinalDataset(verified_annotations) |
| 9: return final_annotation_dataset |
| 10: end function |
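The core steps of Algorithm 1 (base width, ratio application, positioning) can be sketched in Python as follows. This is a minimal illustration, not the authors' implementation. The `BaseEndpoints` and `Box` types and all function names are hypothetical. Two details are assumptions made for the example because Algorithm 1 names the steps without specifying them: the base width W is taken as the Euclidean distance between the two endpoints, and the box is centred horizontally on the base midpoint with its bottom edge on the base line (image y increasing downward). Format conversion and verification (steps 6 and 7) are omitted.

```python
from dataclasses import dataclass
import math


@dataclass(frozen=True)
class BaseEndpoints:
    """Two points marking the ends of an object's base, in pixels (hypothetical type)."""
    x1: float
    y1: float
    x2: float
    y2: float


@dataclass(frozen=True)
class Box:
    """Axis-aligned box as (x_min, y_min, x_max, y_max), in pixels (hypothetical type)."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def base_width(p: BaseEndpoints) -> float:
    """Step 3. ASSUMPTION: W is the Euclidean distance between the endpoints."""
    return math.hypot(p.x2 - p.x1, p.y2 - p.y1)


def standardized_box(p: BaseEndpoints, a: float, b: float) -> Box:
    """Steps 4-5: build a box of width a*W and height b*W.

    ASSUMPTION: the box is centred horizontally on the base midpoint and its
    bottom edge lies at the midpoint's y coordinate (y grows downward).
    """
    w = base_width(p)
    cx = (p.x1 + p.x2) / 2.0
    base_y = (p.y1 + p.y2) / 2.0
    half_width = a * w / 2.0
    return Box(cx - half_width, base_y - b * w, cx + half_width, base_y)


def generate(dataset, aspect_ratios):
    """Return {(a, b): [Box, ...]}, one list of boxes per aspect ratio."""
    return {
        (a, b): [standardized_box(p, a, b) for p in dataset]
        for a, b in aspect_ratios
    }


# A 20-pixel-wide base with the 1.5W x 3.0W ratio gives a 30 x 60 pixel box.
boxes = generate([BaseEndpoints(100, 400, 120, 400)], [(1.5, 3.0)])
print(boxes[(1.5, 3.0)][0])
```

Keeping the ratio list as an explicit argument means one set of base annotations can yield every candidate box configuration without re-annotating.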

2.3. Deep Learning Model Configuration

2.4. Dataset Preparation

2.5. Evaluation Metrics

2.6. Experimental Procedure

3. Results

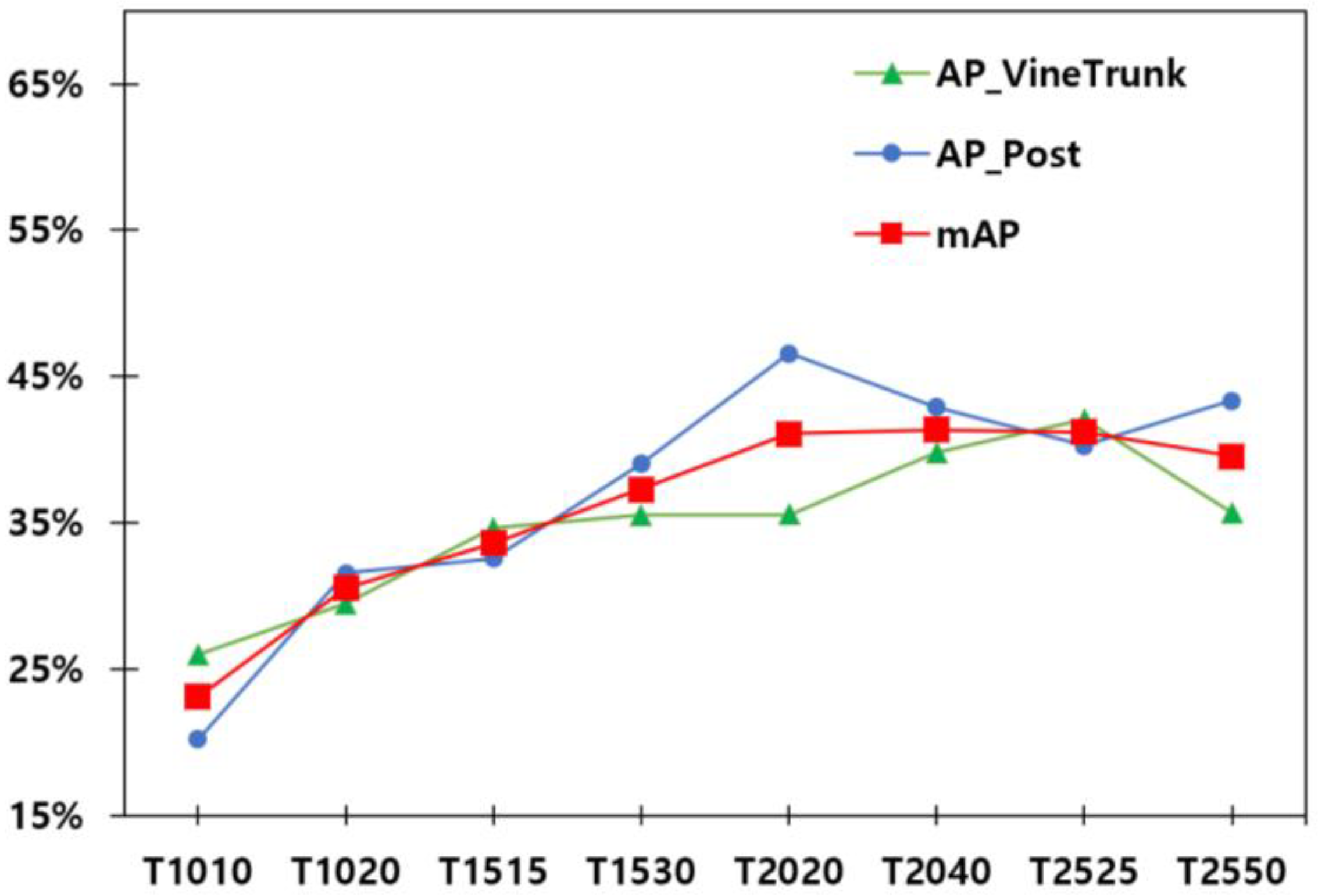

3.1. Performance Comparison Based on Bounding Box Configuration

3.2. Optimal Configuration Analysis

3.3. Practical Implementation Results

4. Discussion

4.1. Optimal Bounding Box Configuration Analysis

4.2. Effectiveness of Base-Width-Based Annotation Method

4.3. Practical Implementation Considerations

4.4. Comparison with Traditional Methods

4.5. Limitations and Future Research

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Type | Width | Height |
|---|---|---|
| T1010 | 1.0 × W | 1.0 × W |
| T1020 | 1.0 × W | 2.0 × W |
| T1515 | 1.5 × W | 1.5 × W |
| T1530 | 1.5 × W | 3.0 × W |
| T2020 | 2.0 × W | 2.0 × W |
| T2040 | 2.0 × W | 4.0 × W |
| T2525 | 2.5 × W | 2.5 × W |
| T2550 | 2.5 × W | 5.0 × W |
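The type codes in the table above appear to encode the two multipliers directly: the first two digits are the width multiplier times ten and the last two are the height multiplier times ten. A small sketch of that reading follows; the function names are hypothetical, and the encoding rule is inferred from the table rows rather than stated in the text.

```python
from __future__ import annotations


def parse_type_code(code: str) -> tuple[float, float]:
    """Decode a code such as 'T1530' into (width multiplier, height multiplier).

    ASSUMPTION: the digits are the multipliers times ten, as the table suggests.
    """
    if len(code) != 5 or code[0] != "T" or not code[1:].isdigit():
        raise ValueError(f"unexpected type code: {code!r}")
    return int(code[1:3]) / 10, int(code[3:5]) / 10


def box_size(code: str, w: float) -> tuple[float, float]:
    """Box (width, height) in pixels for a base width w in pixels."""
    a, b = parse_type_code(code)
    return a * w, b * w


print(parse_type_code("T1530"))  # (1.5, 3.0)
print(box_size("T2040", 20.0))   # (40.0, 80.0)
```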

| Class | Type | Accuracy | Precision | Recall | F1 Score | AP |
|---|---|---|---|---|---|---|
| Posts #0 | T1010 | 0.53 | 0.42 | 0.40 | 0.41 | 0.20 |
| Posts #0 | T1020 | 0.62 | 0.53 | 0.49 | 0.51 | 0.32 |
| Posts #0 | T1515 | 0.61 | 0.52 | 0.51 | 0.52 | 0.33 |
| Posts #0 | T1530 | 0.67 | 0.59 | 0.57 | 0.58 | 0.39 |
| Posts #0 | T2020 | 0.69 | 0.60 | 0.62 | 0.61 | 0.47 |
| Posts #0 | T2040 | 0.67 | 0.55 | 0.58 | 0.57 | 0.43 |
| Posts #0 | T2525 | 0.65 | 0.56 | 0.57 | 0.57 | 0.40 |
| Posts #0 | T2550 | 0.69 | 0.59 | 0.60 | 0.60 | 0.43 |
| Vine trunks #1 | T1010 | 0.62 | 0.50 | 0.44 | 0.47 | 0.26 |
| Vine trunks #1 | T1020 | 0.64 | 0.53 | 0.51 | 0.52 | 0.30 |
| Vine trunks #1 | T1515 | 0.66 | 0.56 | 0.54 | 0.55 | 0.35 |
| Vine trunks #1 | T1530 | 0.67 | 0.57 | 0.54 | 0.55 | 0.36 |
| Vine trunks #1 | T2020 | 0.69 | 0.58 | 0.57 | 0.58 | 0.36 |
| Vine trunks #1 | T2040 | 0.71 | 0.59 | 0.58 | 0.58 | 0.40 |
| Vine trunks #1 | T2525 | 0.70 | 0.61 | 0.56 | 0.58 | 0.42 |
| Vine trunks #1 | T2550 | 0.70 | 0.58 | 0.57 | 0.57 | 0.36 |
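The F1 column in the table above is consistent with the standard definition of F1 as the harmonic mean of precision and recall. The sketch below recomputes it for a few rows. It assumes that standard definition, since the metric definitions themselves are not reproduced here, and the function name is hypothetical. Because the table reports values rounded to two decimals, recomputed scores can differ from the printed ones by up to about 0.01.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; returns 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (class, type, precision, recall, reported F1), copied from the table above.
rows = [
    ("Posts", "T2020", 0.60, 0.62, 0.61),
    ("Posts", "T2040", 0.55, 0.58, 0.57),
    ("Vine trunks", "T2040", 0.59, 0.58, 0.58),
]

for cls, box_type, p, r, reported in rows:
    recomputed = f1_score(p, r)
    # Inputs are already rounded, so allow a small tolerance.
    print(f"{cls} {box_type}: recomputed {recomputed:.3f}, reported {reported:.2f}")
```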

| Type | AP_VineTrunk | AP_Post | mAP |
|---|---|---|---|
| T1010 | 26.01% | 20.21% | 23.11% |
| T1020 | 29.51% | 31.57% | 30.54% |
| T1515 | 34.61% | 32.57% | 33.59% |
| T1530 | 35.52% | 39.03% | 37.28% |
| T2020 | 35.57% | 46.59% | 41.08% |
| T2040 | 39.81% | 42.91% | 41.36% |
| T2525 | 42.08% | 40.29% | 41.19% |
| T2550 | 35.72% | 43.36% | 39.54% |
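The mAP column above matches the unweighted mean of the two per-class AP values. A short sketch that reproduces the column and ranks the configurations is given below; the variable and function names are hypothetical, and the AP figures are copied from the table.

```python
# Per-class AP in percent, copied from the table: (AP_VineTrunk, AP_Post).
ap_percent = {
    "T1010": (26.01, 20.21),
    "T1020": (29.51, 31.57),
    "T1515": (34.61, 32.57),
    "T1530": (35.52, 39.03),
    "T2020": (35.57, 46.59),
    "T2040": (39.81, 42.91),
    "T2525": (42.08, 40.29),
    "T2550": (35.72, 43.36),
}


def mean_ap(ap_vine_trunk: float, ap_post: float) -> float:
    """Unweighted mean of the two class APs (two-class mAP)."""
    return (ap_vine_trunk + ap_post) / 2


m_ap = {box_type: mean_ap(*aps) for box_type, aps in ap_percent.items()}

# Rank configurations from highest to lowest mAP.
ranking = sorted(m_ap, key=m_ap.get, reverse=True)
best = ranking[0]
print(best, f"{m_ap[best]:.2f}%")
```

On these values the highest mAP belongs to T2040, with T2525 and T2020 within 0.3 percentage points of it, so the ranking among the top three is narrow. The per-class columns also disagree on the best type: T2525 leads for vine trunks and T2020 for posts.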

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lyu, H.-K.; Yun, S.; Park, S. Comparative Analysis of Base-Width-Based Annotation Box Ratios for Vine Trunk and Support Post Detection Performance in Agricultural Autonomous Navigation Environments. Agronomy 2025, 15, 2107. https://doi.org/10.3390/agronomy15092107
