Deployment of CES-YOLO: An Optimized YOLO-Based Model for Blueberry Ripeness Detection on Edge Devices

Abstract

1. Introduction

- (1)

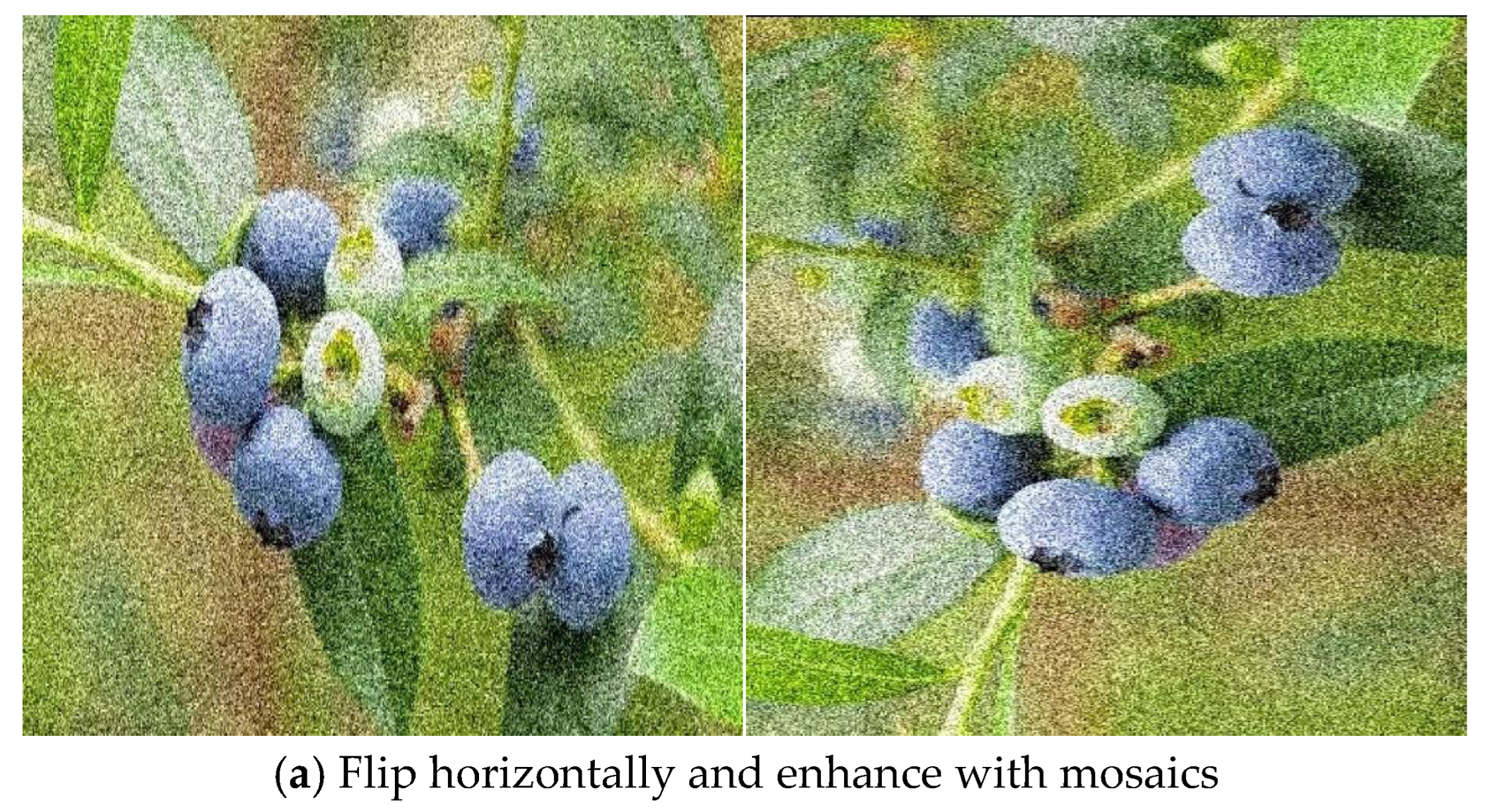

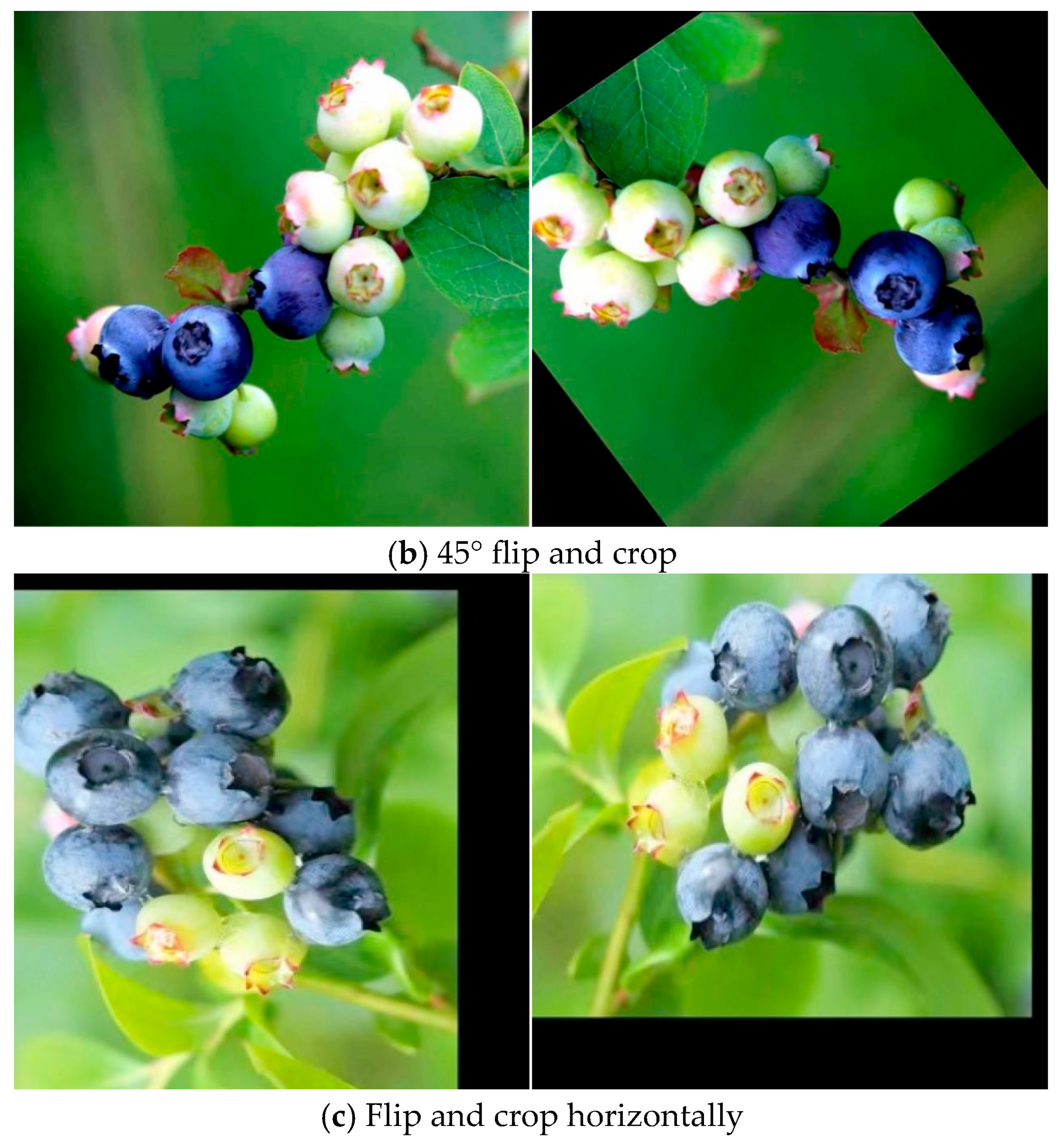

- Construction of a task-adapted blueberry dataset: Based on a publicly available dataset from a published study, this work applied data augmentation to enhance the sample diversity, improving the suitability for detection tasks in orchard environments.

- (2)

- Development of the CES-YOLO model: Building upon YOLOv11, CES-YOLO introduces three main improvements: (i) replacing the original C3k2 modules with lightweight C3k2_Ghost modules, to reduce parameters and computational cost; (ii) integrating an Efficient Multi-scale Attention (EMA) mechanism to enhance semantic feature representation across scales; and (iii) designing a customized detection head, SEAM (Semantic Enhancement Attention Module), to improve multi-level feature fusion and robustness, especially for small or scale-variant targets. These enhancements jointly improve detection accuracy and model efficiency, making CES-YOLO suitable for deployment on resource-limited edge devices.

- (3)

- Deployment on edge devices: To validate its practical applicability, the CES-YOLO model was deployed on the NVIDIA Jetson Nano platform, achieving efficient real-time detection performance under constrained computing resources, and demonstrating its potential for intelligent orchard applications.
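
To make improvement (i) more concrete, the sketch below counts convolution weights for a standard convolution and for a Ghost-style convolution in the sense of GhostNet, where only part of the output channels come from a full convolution and the rest from a cheap depthwise operation. It is an illustration under stated assumptions, not the C3k2_Ghost module itself: the function names, the channel sizes, the ratio of 2, and the 5 × 5 cheap kernel are hypothetical choices, and bias and normalization parameters are ignored.

```python
def conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weight count of a standard k x k convolution (bias ignored)."""
    return c_in * c_out * k * k


def ghost_conv_params(c_in: int, c_out: int, k: int,
                      ratio: int = 2, cheap_k: int = 5) -> int:
    """Weight count of a Ghost-style convolution (bias ignored).

    ASSUMPTION: `ratio` and `cheap_k` are illustrative defaults,
    not values reported for CES-YOLO.
    """
    if c_out % ratio != 0:
        raise ValueError("c_out must be divisible by ratio")
    # Intrinsic feature maps come from an ordinary k x k convolution.
    intrinsic = c_out // ratio
    primary = conv_params(c_in, intrinsic, k)
    # The remaining maps come from a depthwise convolution:
    # one cheap_k x cheap_k filter per generated ghost map.
    cheap = intrinsic * (ratio - 1) * cheap_k * cheap_k
    return primary + cheap


standard = conv_params(64, 128, 3)
ghost = ghost_conv_params(64, 128, 3)
print(standard, ghost, round(standard / ghost, 2))
```

For this hypothetical 64-to-128-channel layer with a 3 × 3 kernel, the count drops from 73,728 to 38,464 weights, roughly a 1.92-fold reduction for that single layer.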

2. Materials and Methods

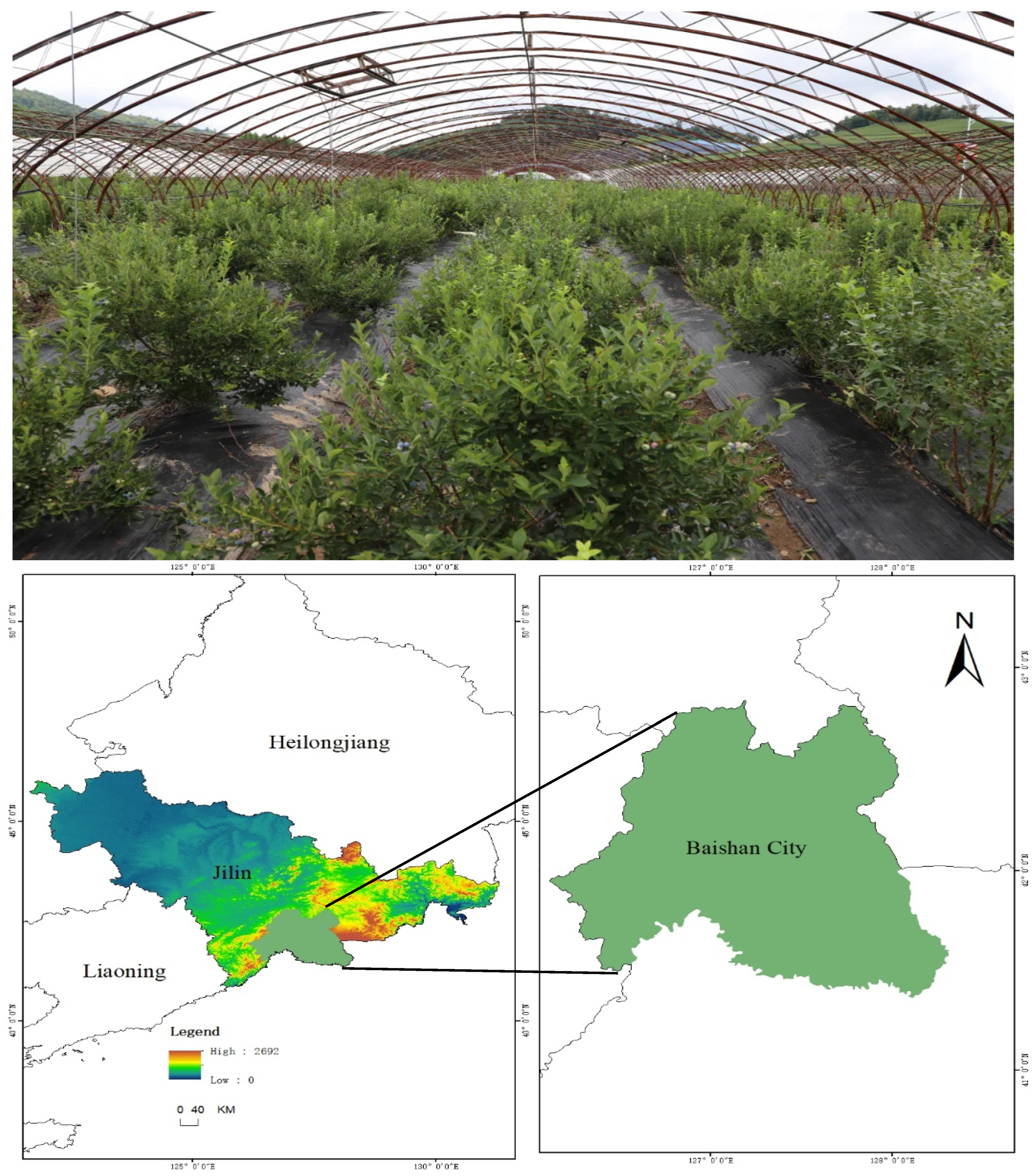

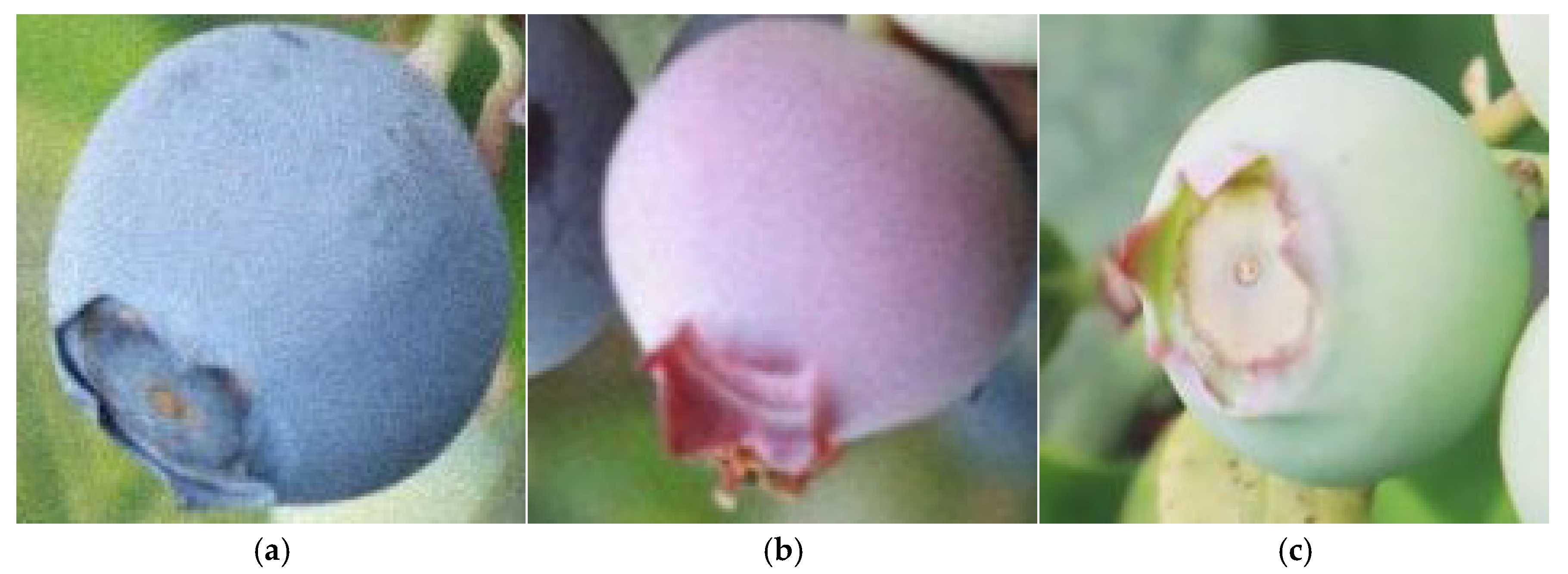

2.1. Dataset Construction

2.2. Dataset Production

2.3. Model Selection and Enhancement

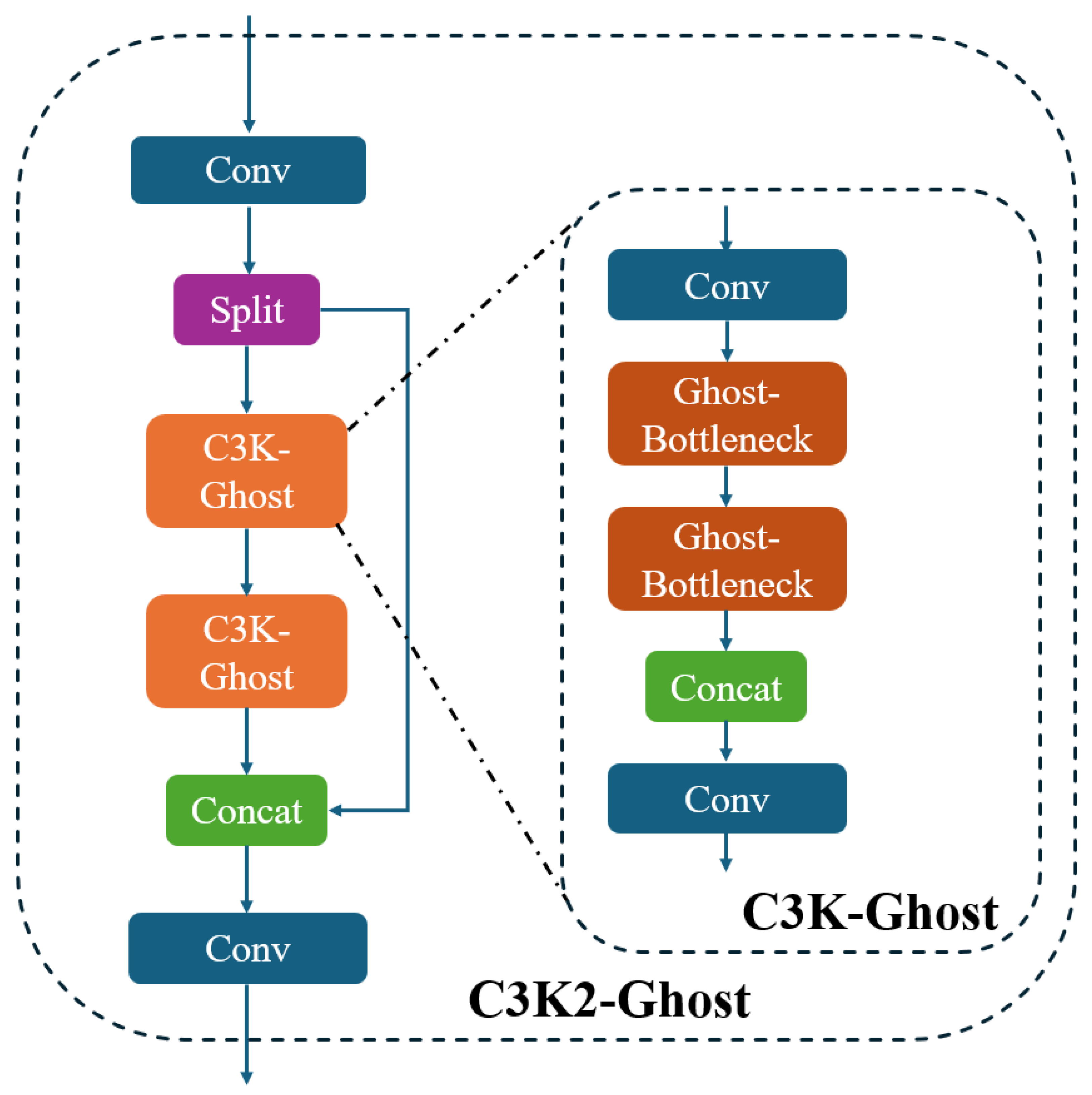

2.3.1. C3k2_Ghost

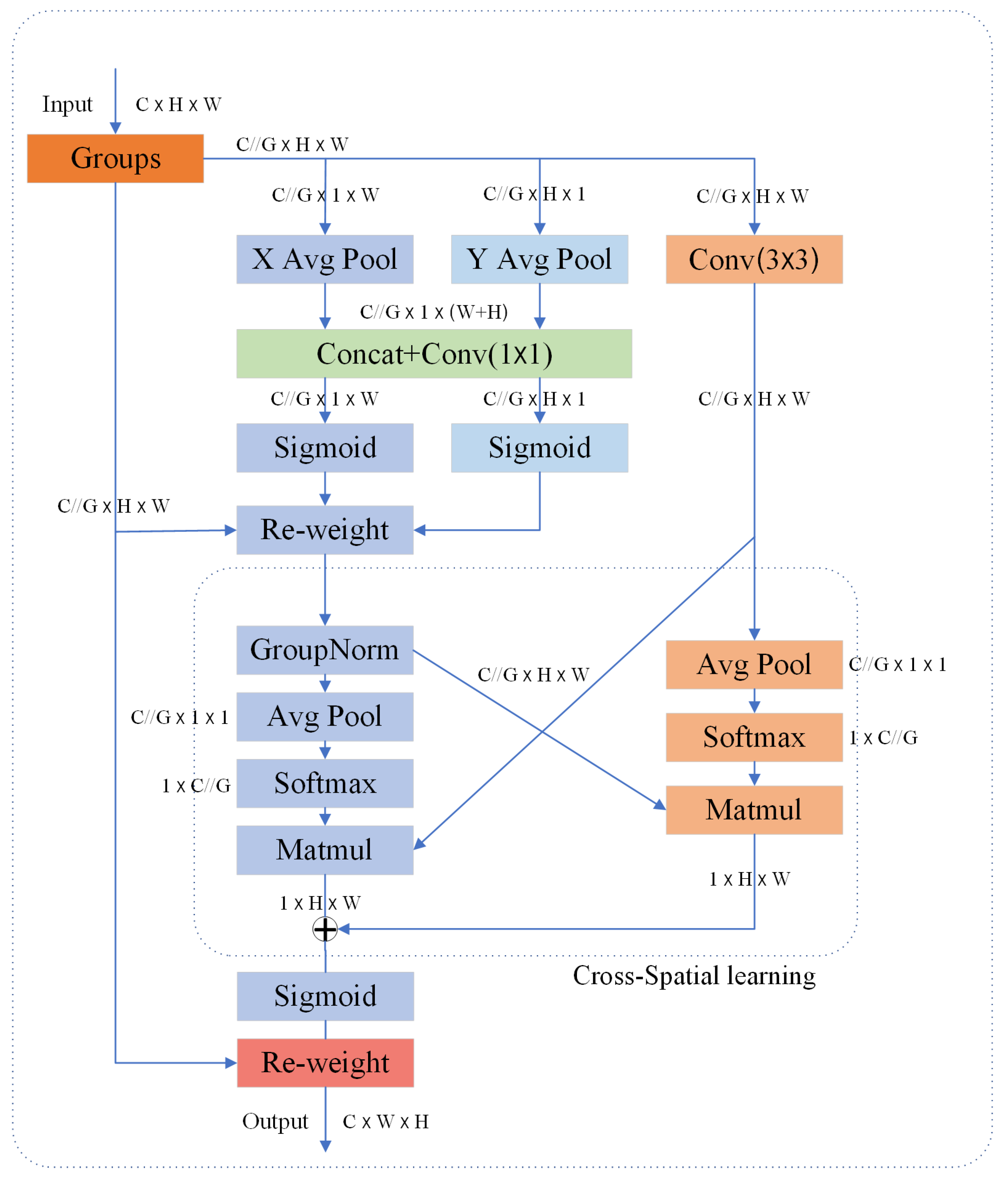

2.3.2. Efficient Multi-Scale Attention

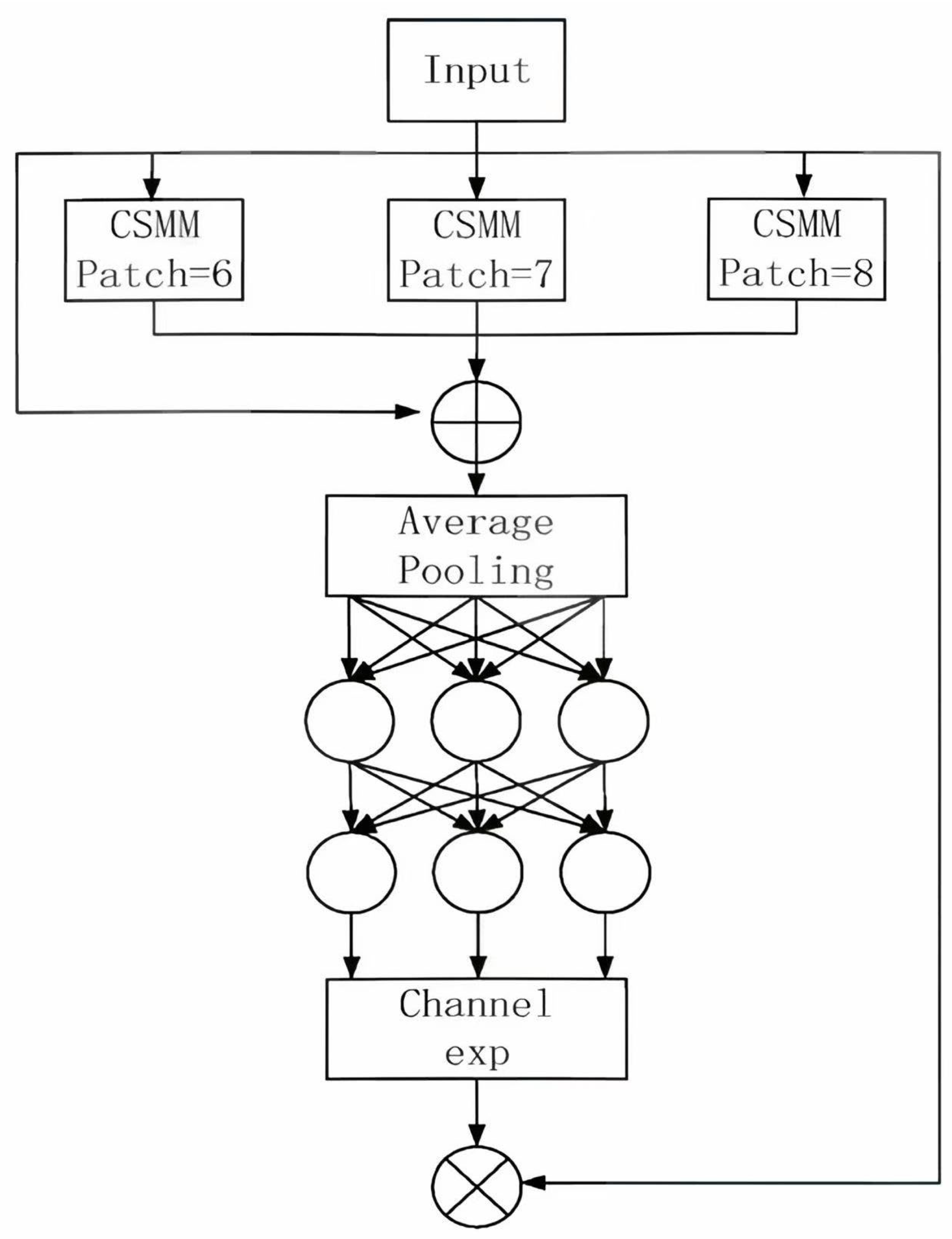

2.3.3. SEAMHead

2.4. Experimental Environment

2.5. Evaluation Criteria

3. Experimental Part

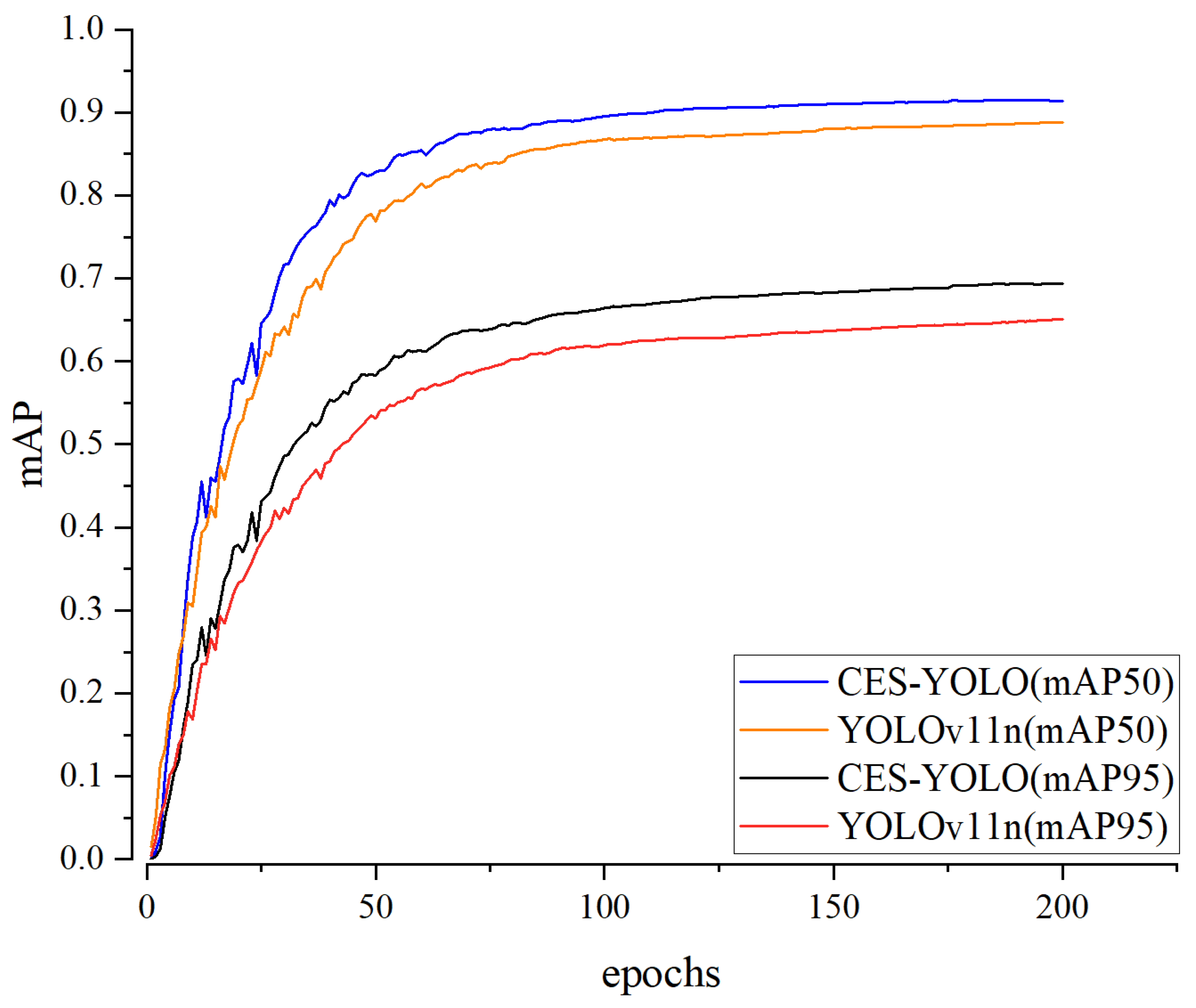

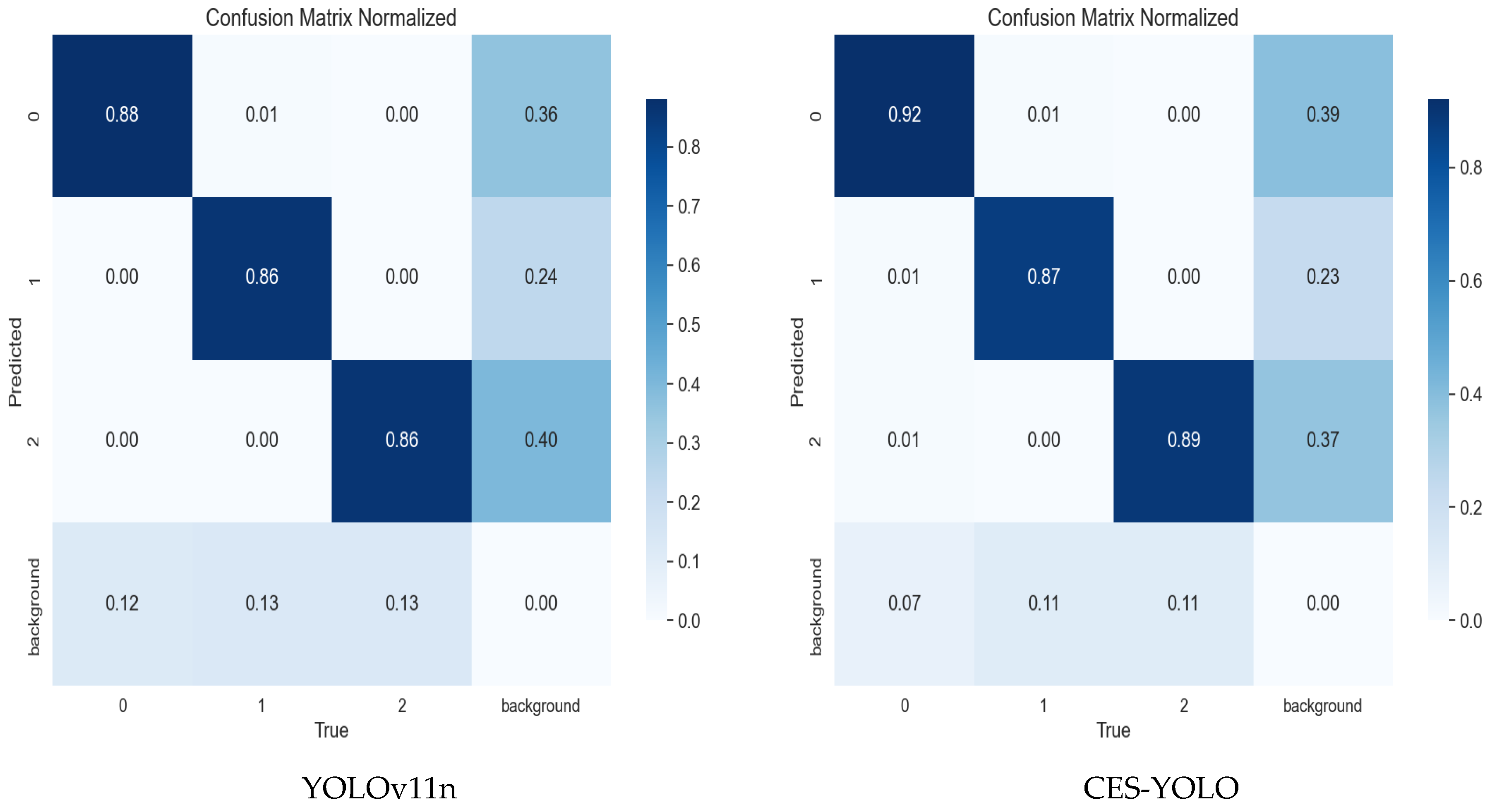

3.1. Before and After the Experiment

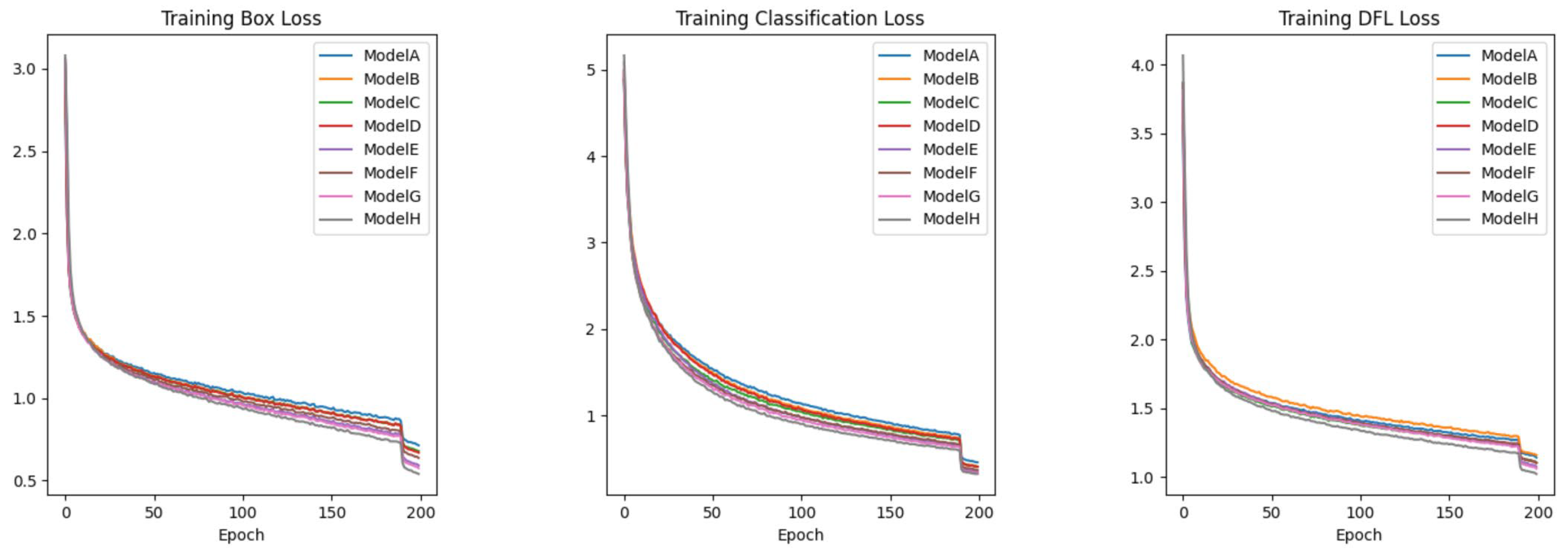

3.2. Ablation Experiment

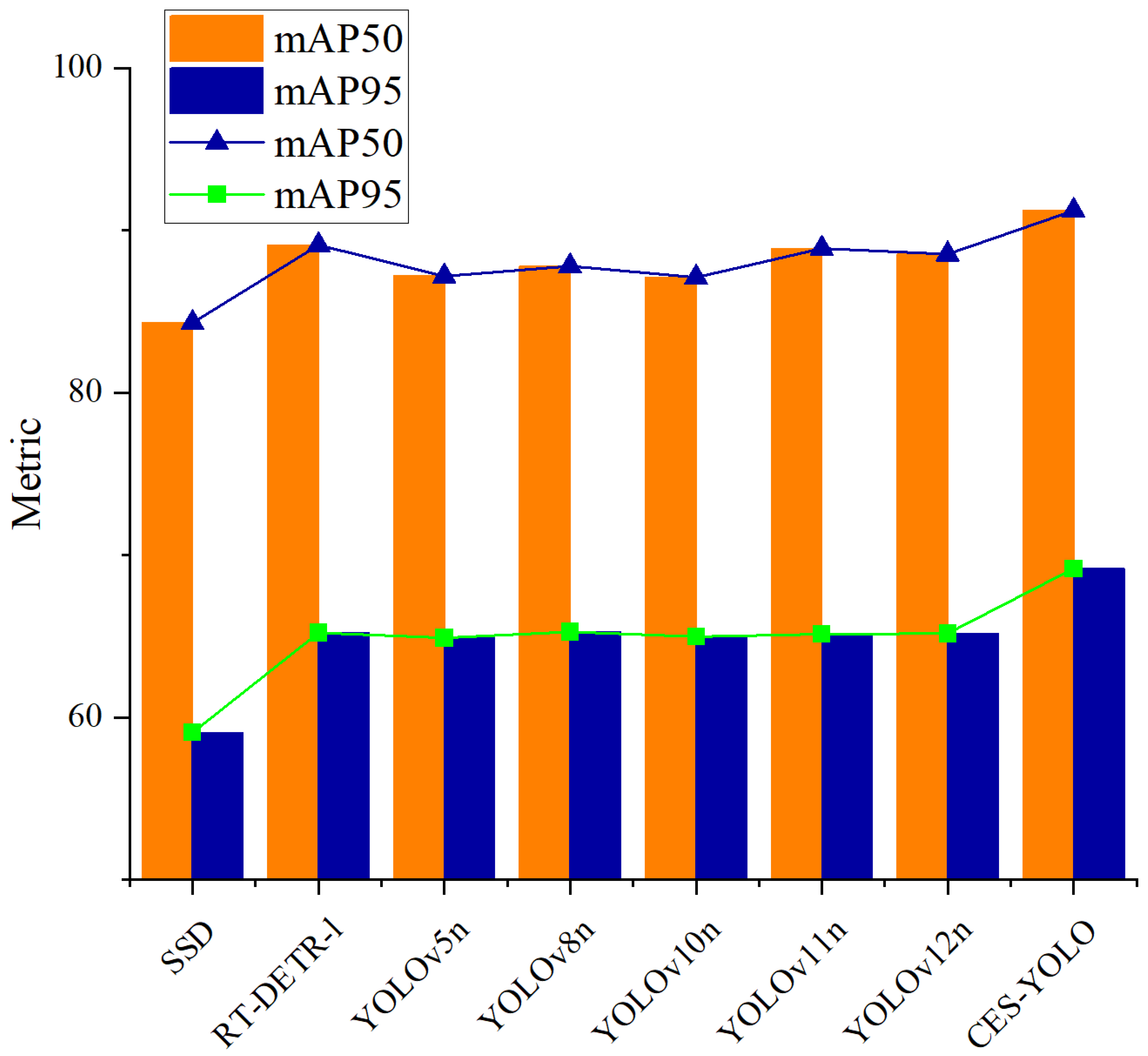

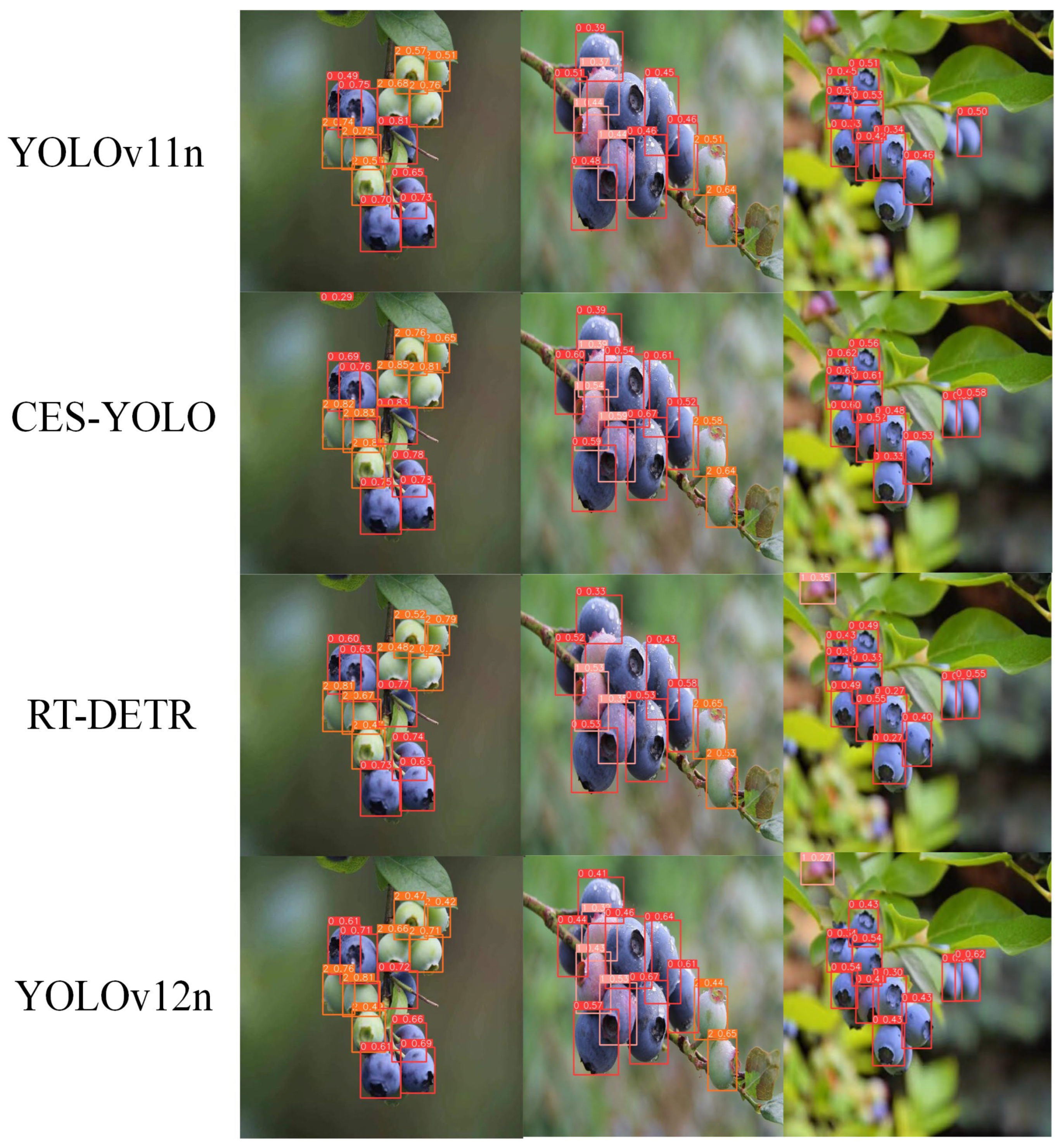

3.3. Model Comparison Experiment

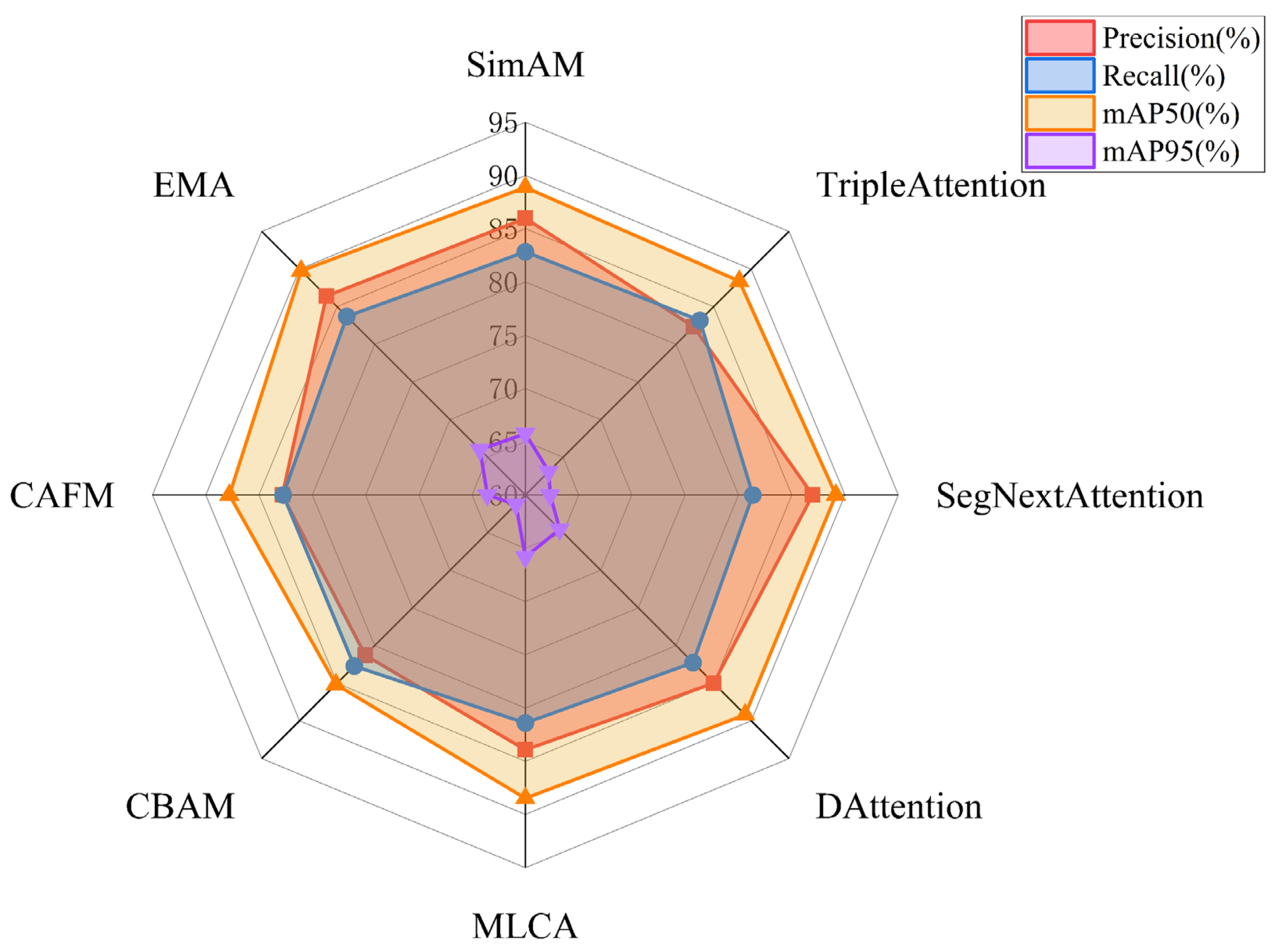

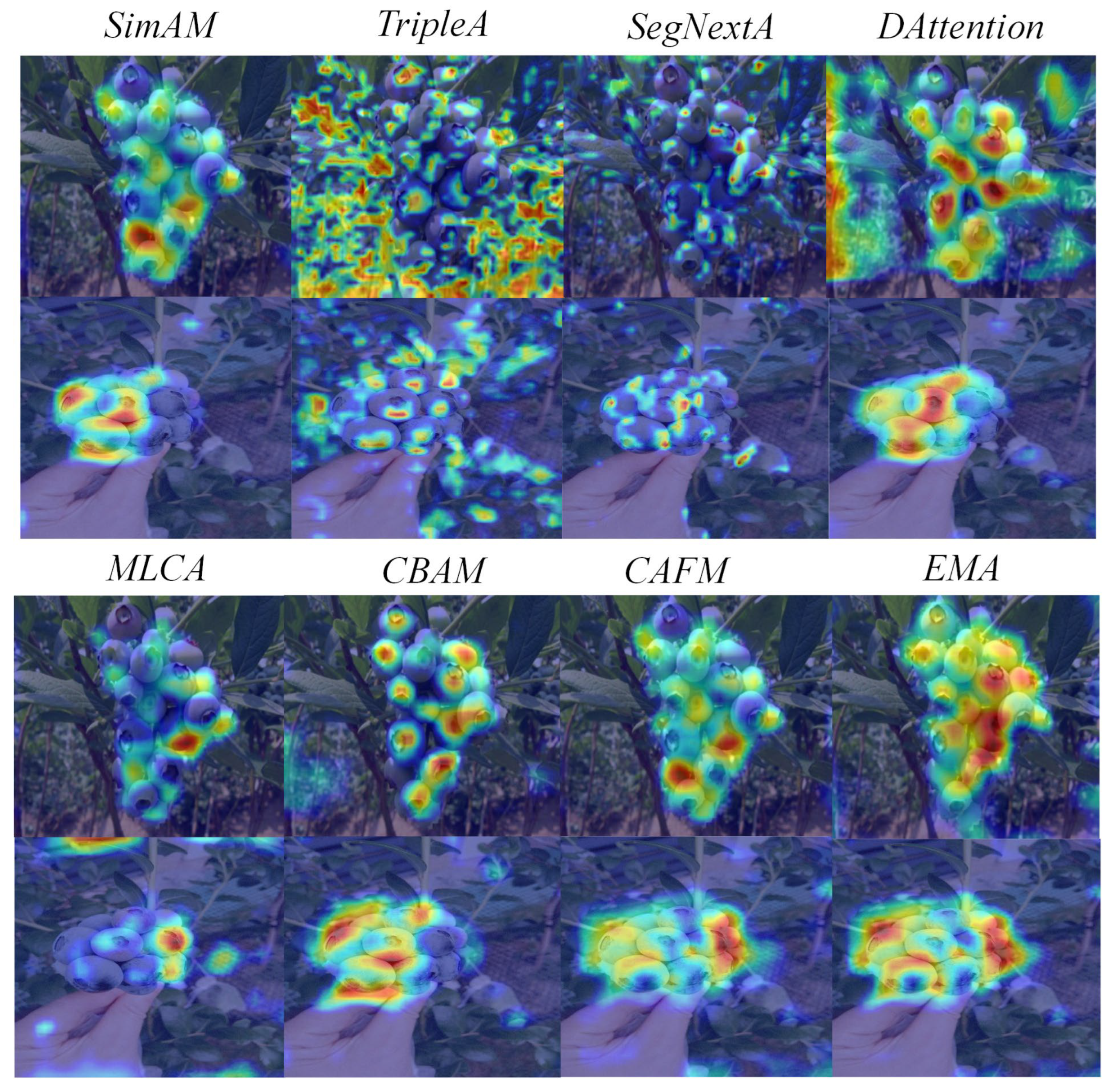

3.4. Contrastive Experiment on Attention Mechanisms

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Parameters | Setup |
|---|---|
| Epochs | 200 |
| Batch Size | 16 |
| Optimizer | SGD |
| Initial Learning Rate | 0.01 |
| Final Learning Rate | 0.01 |
| Momentum | 0.937 |
| Weight Decay | 5 × 10⁻⁴ |
| Close Mosaic | Last 10 epochs |
| Image Size | 640 |
| Workers | 8 |
| Mosaic | 1.0 |
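
For readers who want to reproduce a comparable training setup, the settings in the table above could be expressed as arguments to the Ultralytics `train()` call, as sketched here. Several points are assumptions rather than facts from the paper: the dataset file `blueberry.yaml` is a hypothetical name, `yolo11n.pt` is the stock YOLOv11n baseline rather than the CES-YOLO architecture, and the table's "Final Learning Rate" is mapped to `lrf`, which Ultralytics interprets as a fraction of `lr0`.

```python
# Hyperparameters from the table, keyed by Ultralytics train() argument names.
TRAIN_ARGS = {
    "epochs": 200,
    "batch": 16,
    "optimizer": "SGD",
    "lr0": 0.01,           # initial learning rate
    "lrf": 0.01,           # ASSUMPTION: table's "Final Learning Rate" maps to lrf
    "momentum": 0.937,
    "weight_decay": 5e-4,
    "close_mosaic": 10,    # disable mosaic for the last 10 epochs
    "imgsz": 640,
    "workers": 8,
    "mosaic": 1.0,
}


def train(data_yaml: str = "blueberry.yaml", weights: str = "yolo11n.pt"):
    """Train a baseline model with the settings above.

    ASSUMPTION: `data_yaml` and `weights` are placeholders; the paper's
    CES-YOLO model definition is not reproduced here.
    """
    # Imported lazily so TRAIN_ARGS can be inspected without the package.
    from ultralytics import YOLO

    model = YOLO(weights)
    return model.train(data=data_yaml, **TRAIN_ARGS)
```

Calling `train()` requires the `ultralytics` package and a dataset description file in its expected YAML format.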

| Model Code | C3k2_Ghost | EMA Attention | SEAM Head | Precision (%) | Recall (%) | mAP50 (%) | mAP95 (%) | Parameters (M) | FLOPs (G) |
|---|---|---|---|---|---|---|---|---|---|
| Model-A | × | × | × | 84.63 | 83.15 | 88.88 | 65.13 | 2.6 | 6.2 |
| Model-B | √ | × | × | 84.57 | 83.99 | 89.49 | 65.92 | 2.2 | 5.5 |
| Model-C | × | √ | × | 86.40 | 83.71 | 89.75 | 66.03 | 2.6 | 6.2 |
| Model-D | × | × | √ | 86.96 | 82.23 | 89.04 | 65.38 | 2.5 | 5.9 |
| Model-E | √ | √ | × | 88.52 | 81.73 | 90.06 | 67.13 | 2.2 | 5.5 |
| Model-F | × | √ | √ | 87.06 | 84.05 | 89.92 | 66.64 | 2.5 | 5.9 |
| Model-G | √ | × | √ | 89.12 | 84.78 | 91.04 | 68.97 | 2.1 | 5.0 |
| Model-H | √ | √ | √ | 89.21 | 85.23 | 91.22 | 69.18 | 2.1 | 5.0 |
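
The net effect of combining all three modules can be read off the ablation table by comparing Model-A (no modifications) with Model-H (all three enabled). The short sketch below does that arithmetic using only the values in those two rows; the dictionary keys and helper names are illustrative.

```python
# Rows copied from the ablation table (Model-A = baseline, Model-H = all modules).
MODEL_A = {"precision": 84.63, "recall": 83.15, "map50": 88.88,
           "map95": 65.13, "params_m": 2.6, "flops_g": 6.2}
MODEL_H = {"precision": 89.21, "recall": 85.23, "map50": 91.22,
           "map95": 69.18, "params_m": 2.1, "flops_g": 5.0}


def gain(key: str) -> float:
    """Absolute change in percentage points from Model-A to Model-H."""
    return MODEL_H[key] - MODEL_A[key]


def reduction_pct(key: str) -> float:
    """Relative reduction (in %) from Model-A to Model-H."""
    return 100.0 * (MODEL_A[key] - MODEL_H[key]) / MODEL_A[key]


for metric in ("precision", "recall", "map50", "map95"):
    print(f"{metric}: {gain(metric):+.2f} points")
for cost in ("params_m", "flops_g"):
    print(f"{cost}: {reduction_pct(cost):.1f}% lower")
```

With these values, Model-H gains 4.58 points in precision, 2.08 in recall, 2.34 in mAP50, and 4.05 in mAP95, while using about 19.2% fewer parameters and 19.4% fewer FLOPs than Model-A.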

| Models | Precision (%) | Recall (%) | mAP50 (%) | mAP95 (%) | Parameters (M) | FLOPs (G) |
|---|---|---|---|---|---|---|
| SSD (ResNet-50) | 81.72 | 80.67 | 84.30 | 59.07 | 46.7 | 15.1 |
| RT-DETR-l | 84.09 | 84.17 | 89.10 | 65.22 | 32 | 103.5 |
| YOLOv5n | 81.82 | 82.55 | 87.17 | 64.91 | 2.5 | 7.1 |
| YOLOv8n | 84.77 | 82.22 | 87.80 | 65.27 | 3.0 | 8.2 |
| YOLOv10n | 83.58 | 81.90 | 87.10 | 64.98 | 2.7 | 8.3 |
| YOLOv11n | 84.63 | 83.15 | 88.88 | 65.13 | 2.6 | 6.6 |
| YOLOv12n | 84.66 | 82.95 | 88.53 | 65.17 | 2.5 | 6.0 |
| CES-YOLO | 89.21 | 85.23 | 91.22 | 69.18 | 2.7 | 6.5 |

| Attention Method | Precision (%) | Recall (%) | mAP50 (%) | mAP95 (%) |
|---|---|---|---|---|
| SimAM | 85.97 | 82.83 | 88.87 | 65.77 |
| TripleAttention | 82.34 | 83.18 | 88.40 | 63.12 |
| SegNextAttention | 86.97 | 81.35 | 89.15 | 62.34 |
| DAttention | 84.98 | 82.25 | 89.16 | 64.57 |
| MLCA | 83.92 | 81.41 | 88.47 | 65.82 |
| CBAM | 81.25 | 82.71 | 85.14 | 61.27 |
| CAFM | 82.81 | 82.73 | 87.78 | 63.56 |
| EMA | 86.40 | 83.71 | 89.75 | 66.03 |

| Models | SSD | RT-DETR-l | YOLOv5n | YOLOv8n | YOLOv10n | YOLOv11n | YOLOv12n | CES-YOLO |
|---|---|---|---|---|---|---|---|---|
| FPS | 12.2 | 8.9 | 17.5 | 18.3 | 19.7 | 19.1 | 18.9 | 20.3 |
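
Frame rates are easier to compare against a real-time budget when converted to per-frame latency. The following sketch performs that conversion for the FPS values in the table above; the helper name `latency_ms` is illustrative, and the conversion assumes each frame is processed sequentially with no batching or pipelining.

```python
# FPS values copied from the table above.
FPS = {
    "SSD": 12.2, "RT-DETR-l": 8.9, "YOLOv5n": 17.5, "YOLOv8n": 18.3,
    "YOLOv10n": 19.7, "YOLOv11n": 19.1, "YOLOv12n": 18.9, "CES-YOLO": 20.3,
}


def latency_ms(fps: float) -> float:
    """Average time per frame in milliseconds, assuming sequential processing."""
    return 1000.0 / fps


fastest = max(FPS, key=FPS.get)
for name, fps in sorted(FPS.items(), key=lambda item: item[1], reverse=True):
    print(f"{name:10s} {fps:5.1f} FPS  {latency_ms(fps):6.1f} ms/frame")
```

Under that assumption, CES-YOLO at 20.3 FPS corresponds to roughly 49.3 ms per frame, compared with about 52.4 ms for YOLOv11n at 19.1 FPS.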

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yuan, J.; Fan, J.; Sun, Z.; Liu, H.; Yan, W.; Li, D.; Liu, H.; Wang, J.; Huang, D. Deployment of CES-YOLO: An Optimized YOLO-Based Model for Blueberry Ripeness Detection on Edge Devices. Agronomy 2025, 15, 1948. https://doi.org/10.3390/agronomy15081948