YOLOv8n-FDE: An Efficient and Lightweight Model for Tomato Maturity Detection

Abstract

1. Introduction

- (1) A novel feature extraction module, C3-FNet, and a PSLD head were designed to achieve model lightweighting while improving detection accuracy;

- (2) The PIoUv2 loss function was introduced, and the optimal hyperparameter values were determined experimentally according to different research datasets;

- (3) The detection performance of the model was verified by analyzing its effectiveness in specific scenarios such as similar maturity, small distant objects, fruit stacking, and high-brightness conditions;

- (4) Compared to the baseline, the improved model reduced the number of parameters, computational complexity, and model size by 46%, 21%, and 60%, respectively, while improving detection performance by 1.8 percentage points, achieving a balance between lightweight design and detection accuracy.

2. Materials and Methods

2.1. Image Acquisition

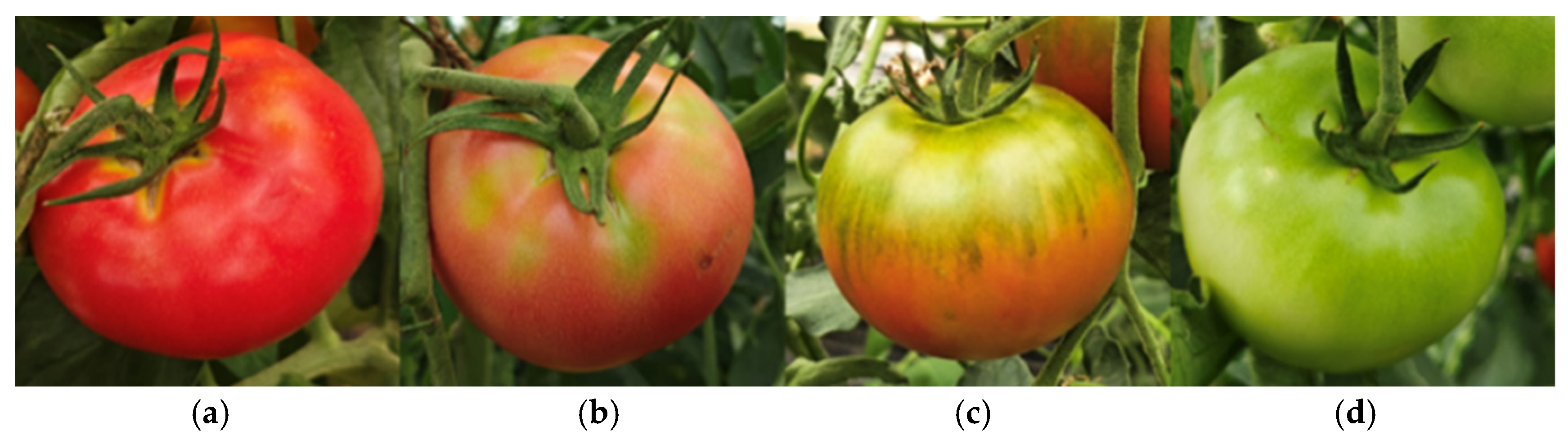

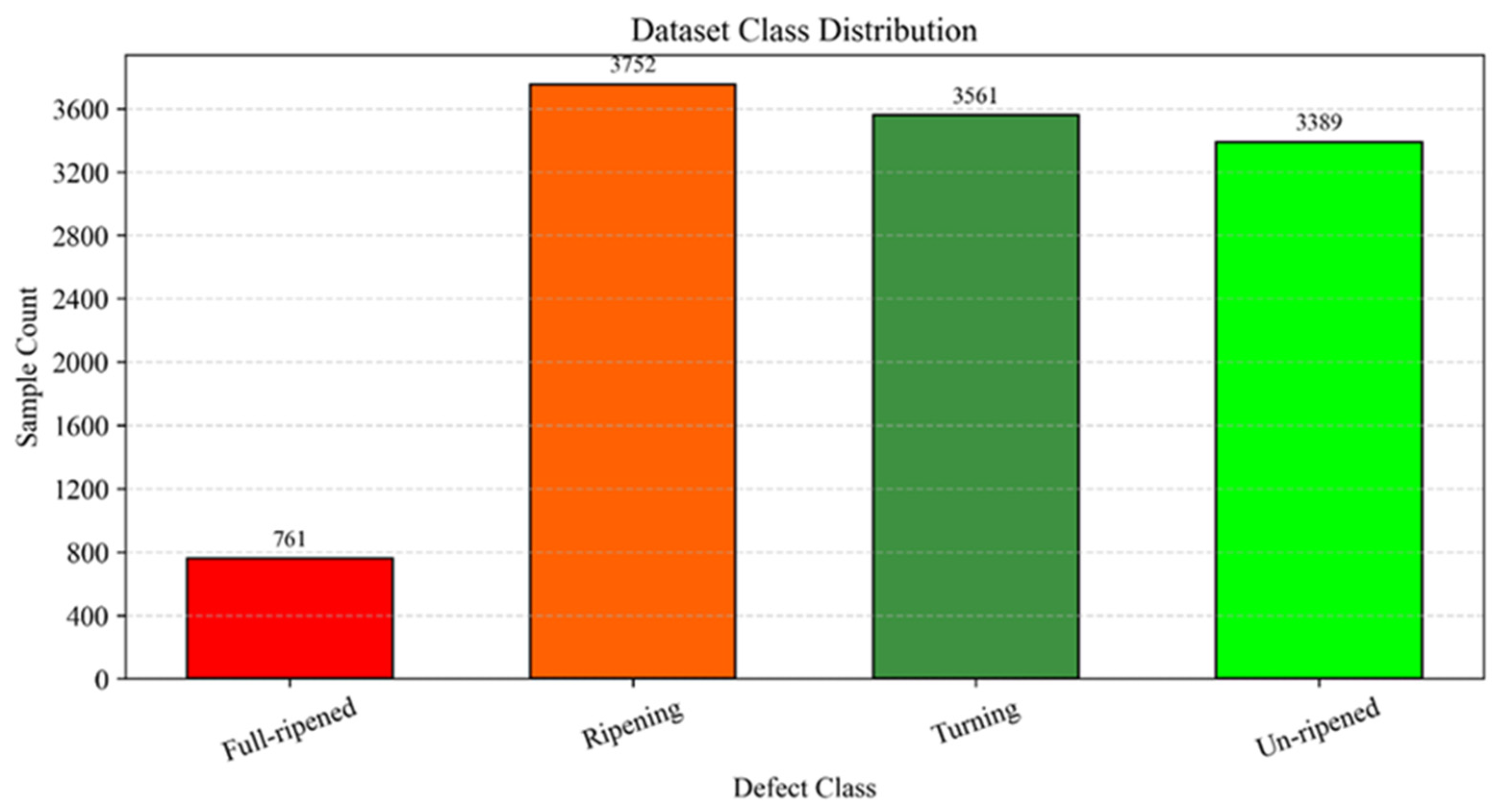

2.2. Dataset Construction

2.3. Baseline Model Selection

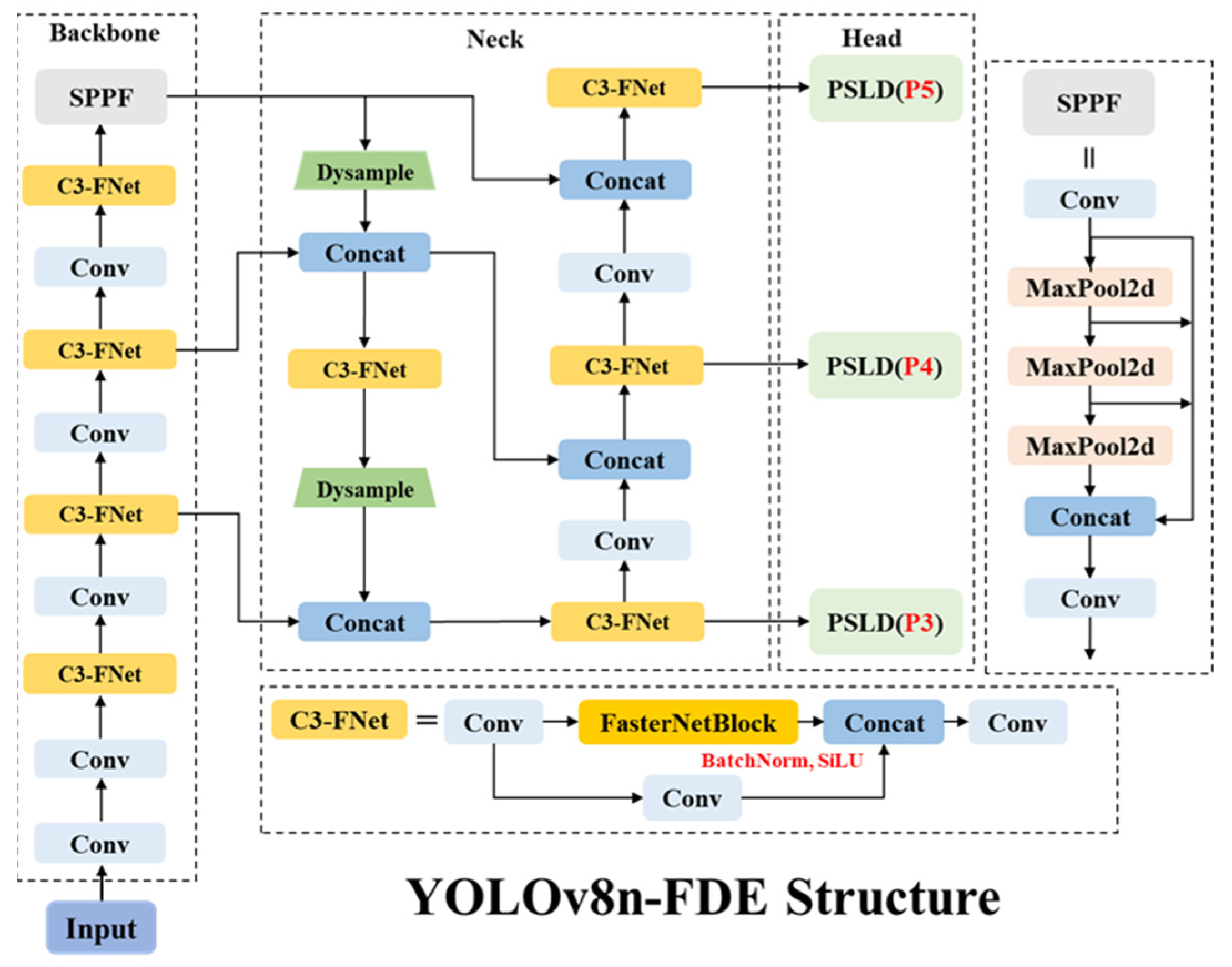

2.4. YOLOv8n-FDE Model

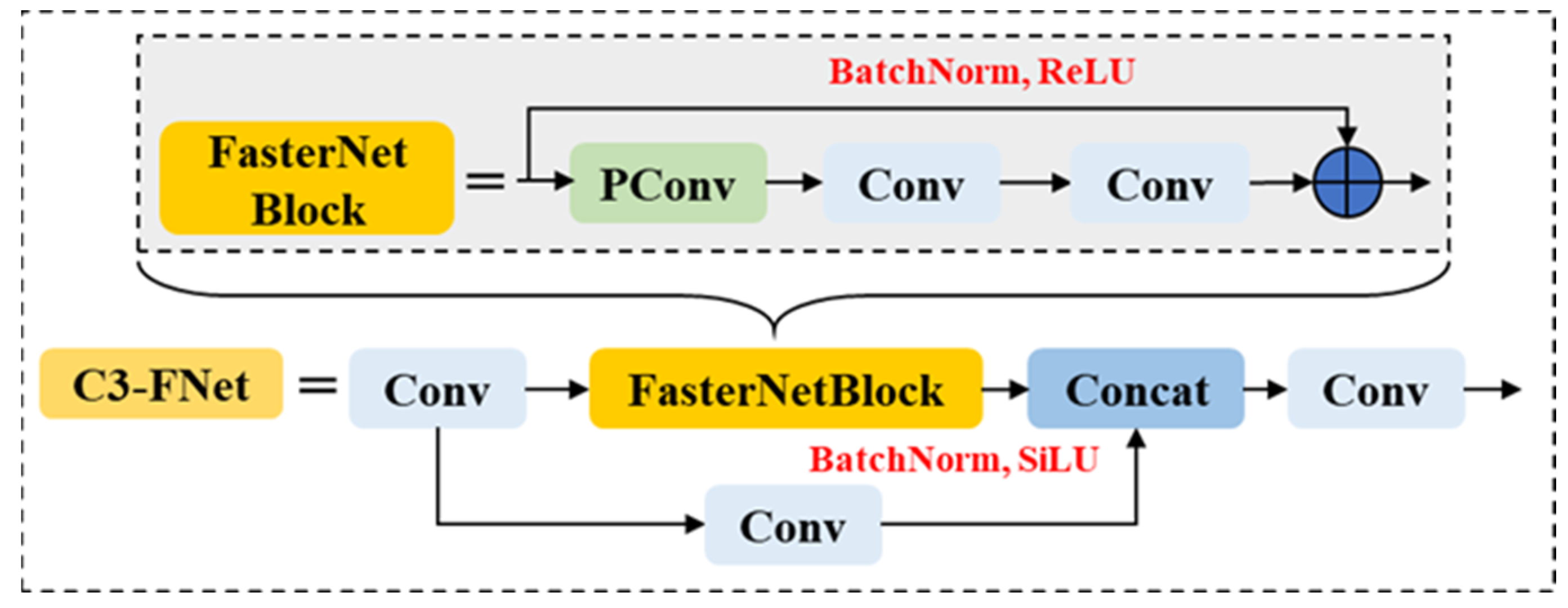

2.4.1. C3-FNet Feature Extraction and Fusion Module

2.4.2. Dysample Upsampling

2.4.3. Parameter-Sharing Lightweight Detection (PSLD) Head

2.4.4. PIoUv2 Loss Function

3. Experimental Setup

3.1. Experimental Parameters and Evaluation Metrics

3.2. Experimental Results and Analysis

3.2.1. Comparison of Upsampling Module Performance

3.2.2. Comparison of Detection Heads

3.2.3. Analysis of PIoUv2 Hyperparameter Settings

3.2.4. Ablation Experiments

3.2.5. Experimental Comparison of Different Models

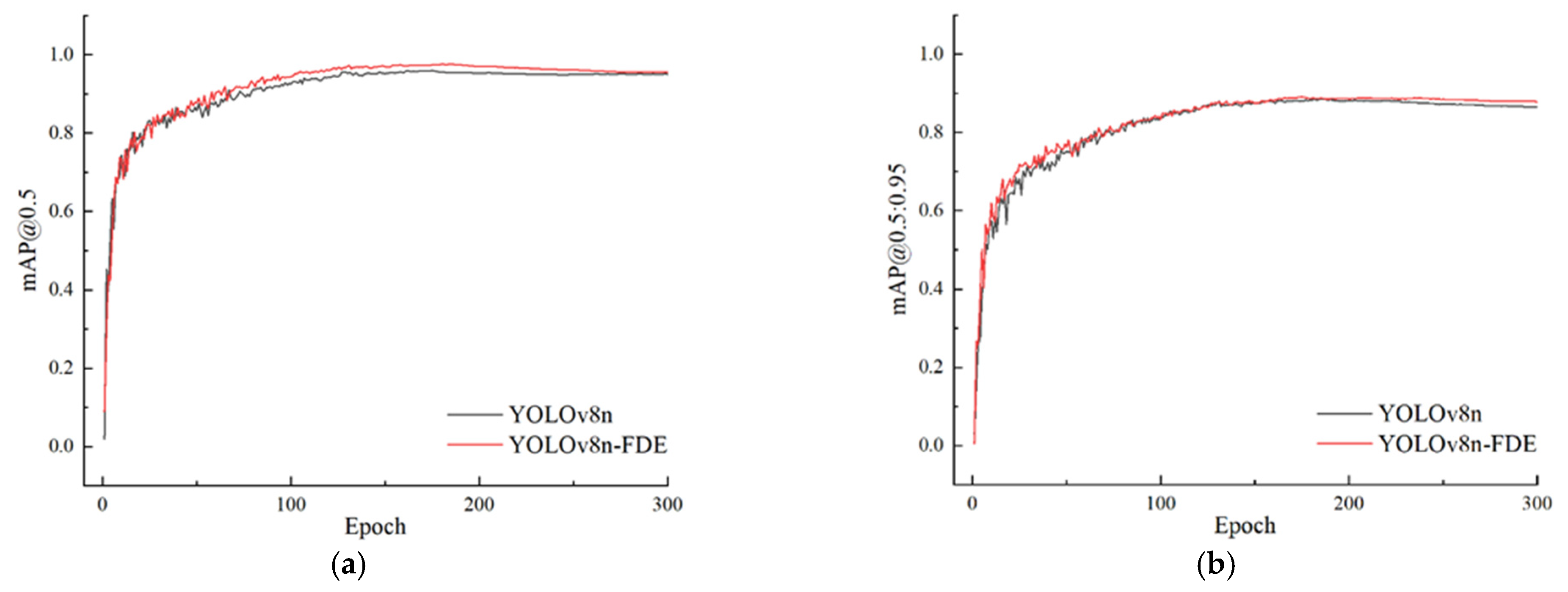

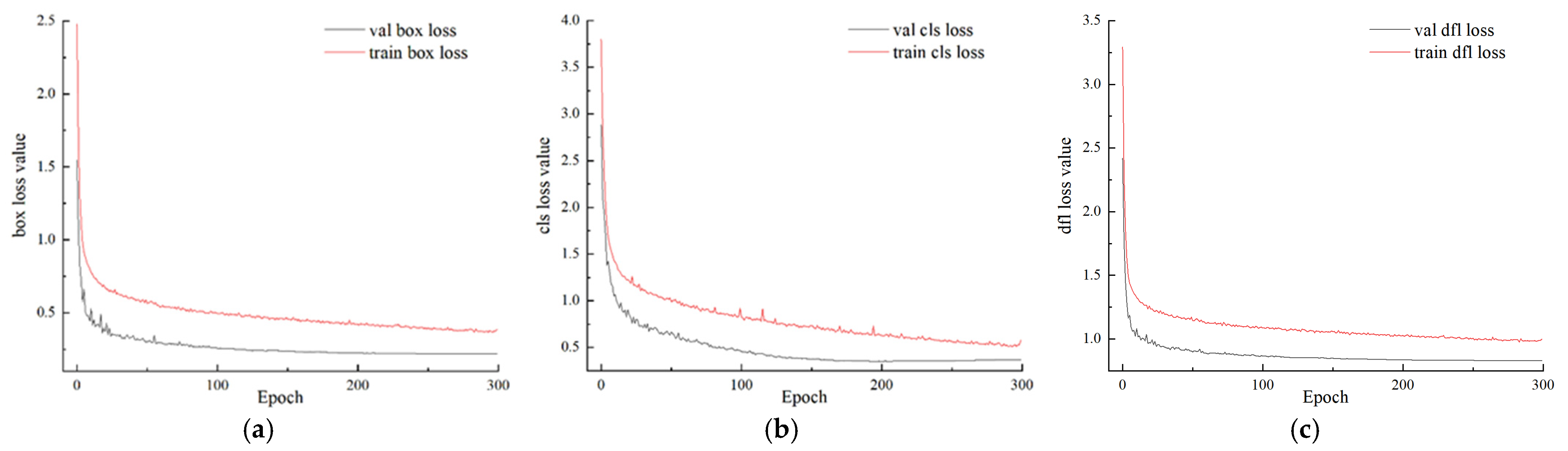

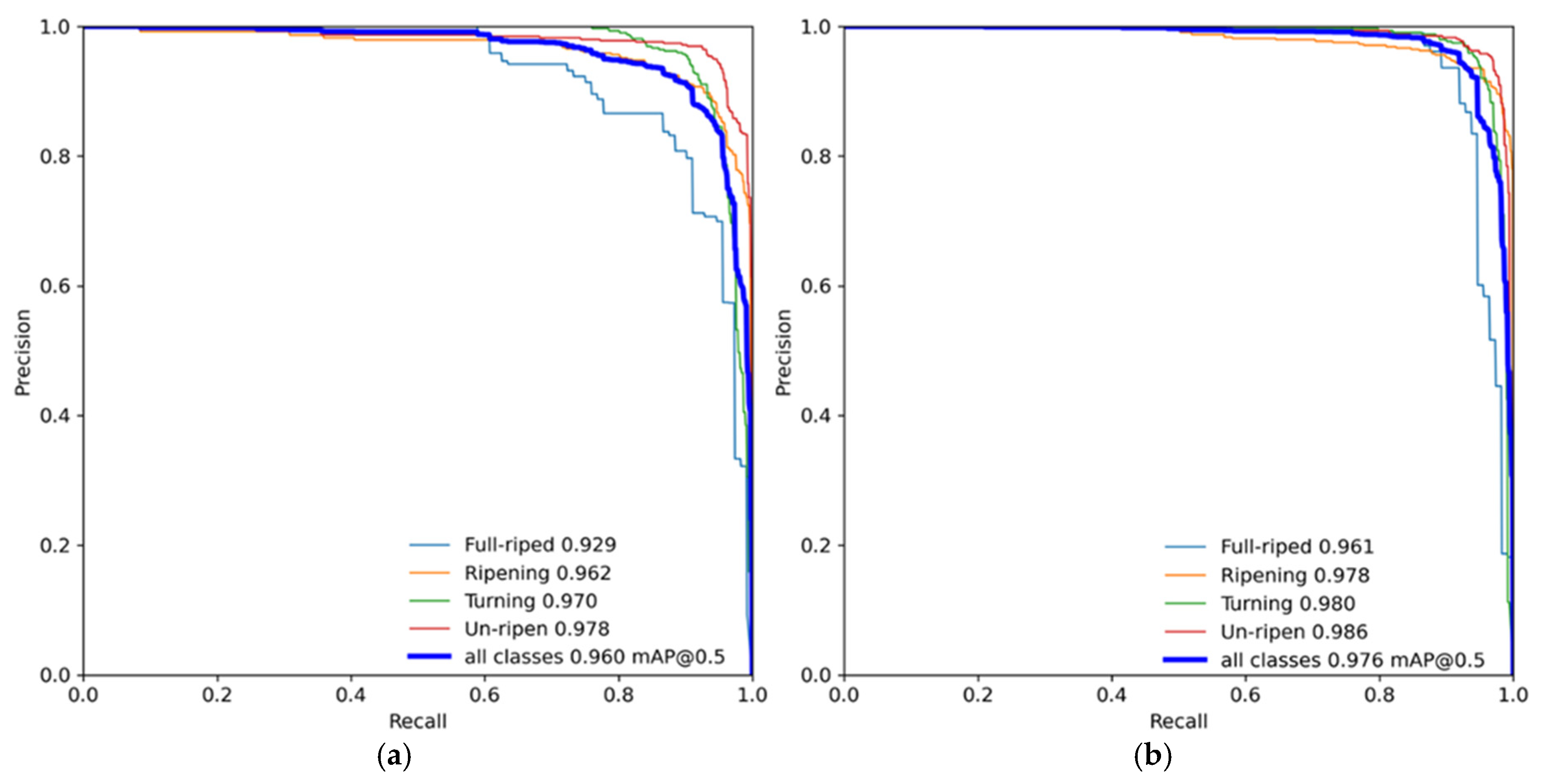

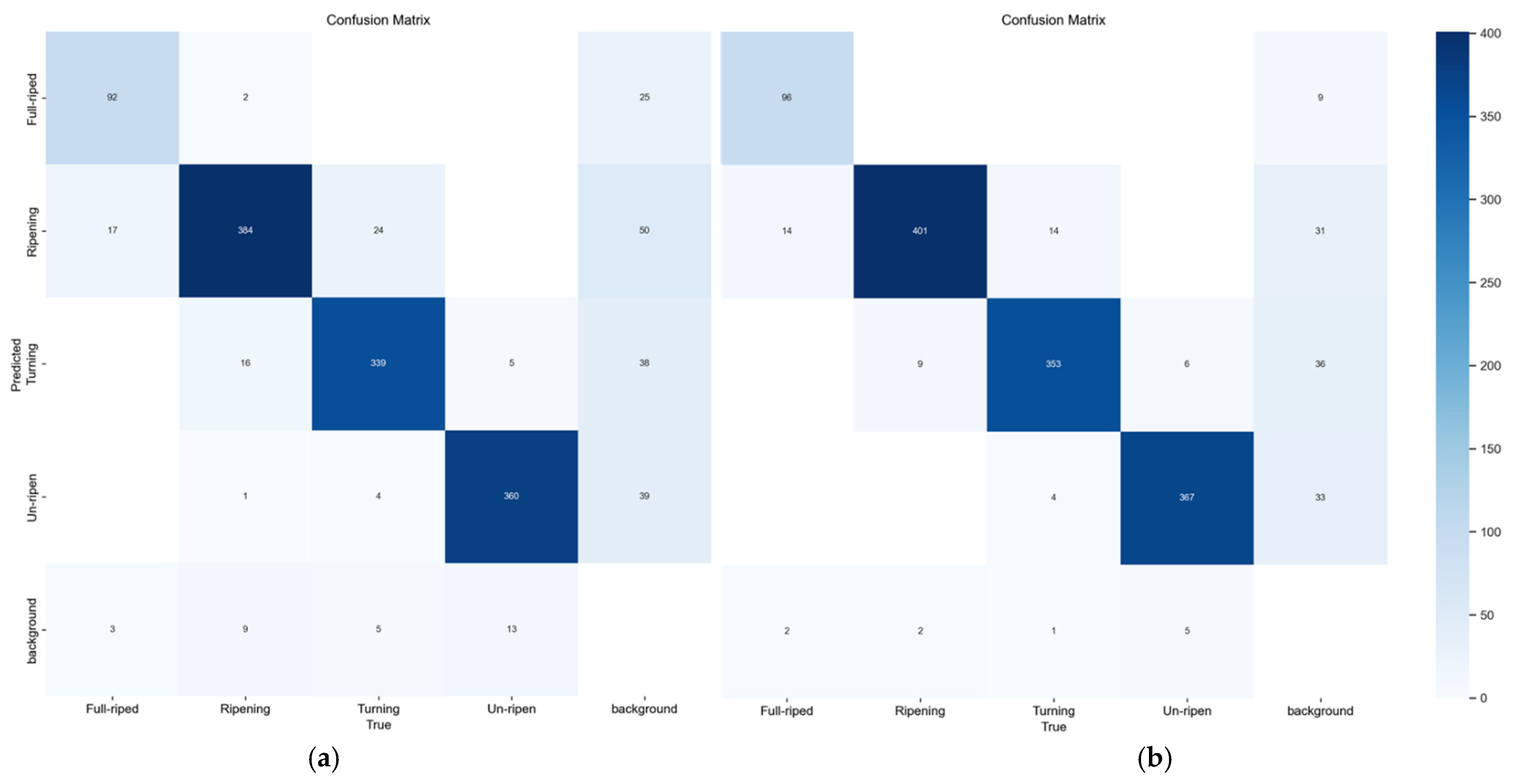

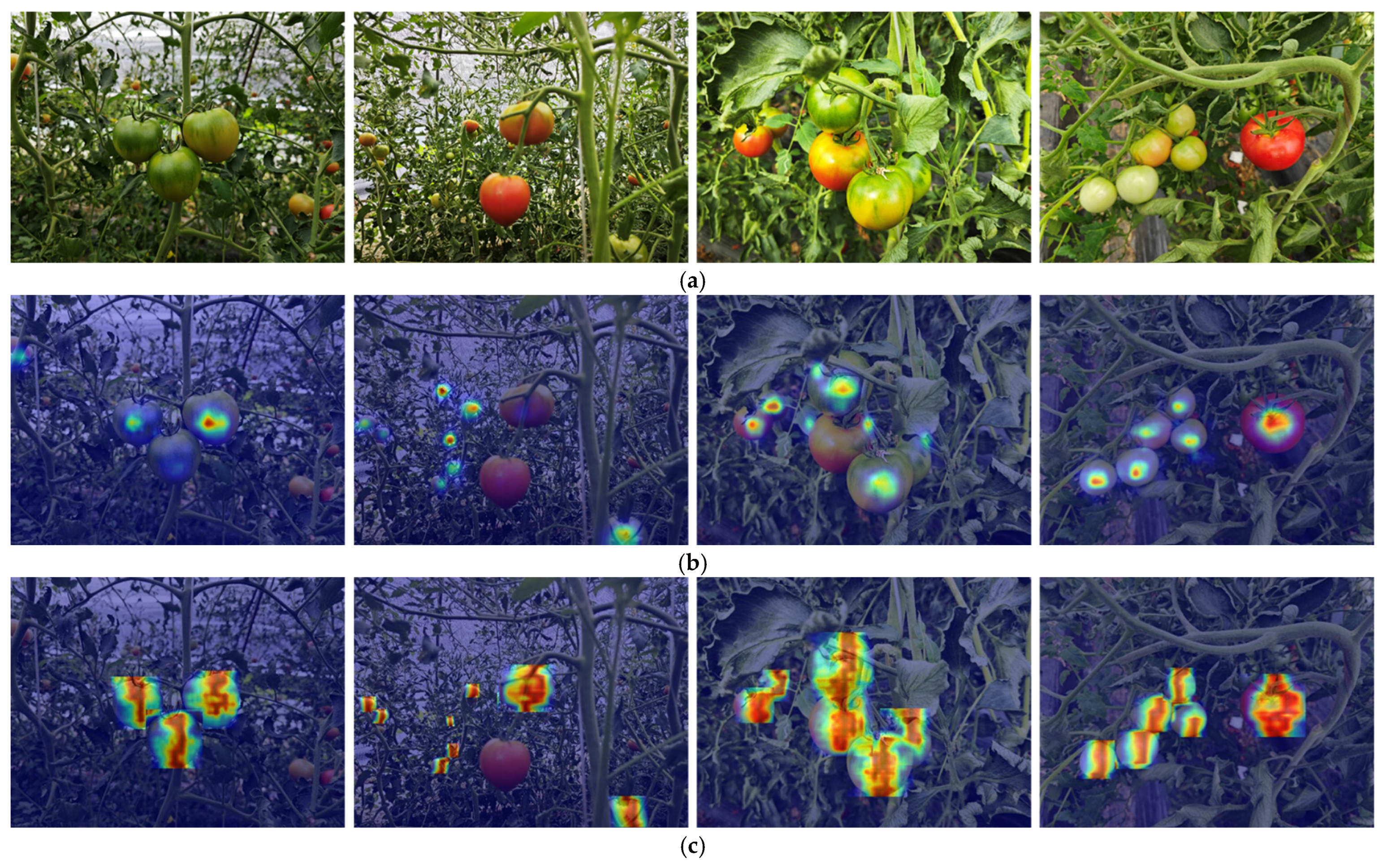

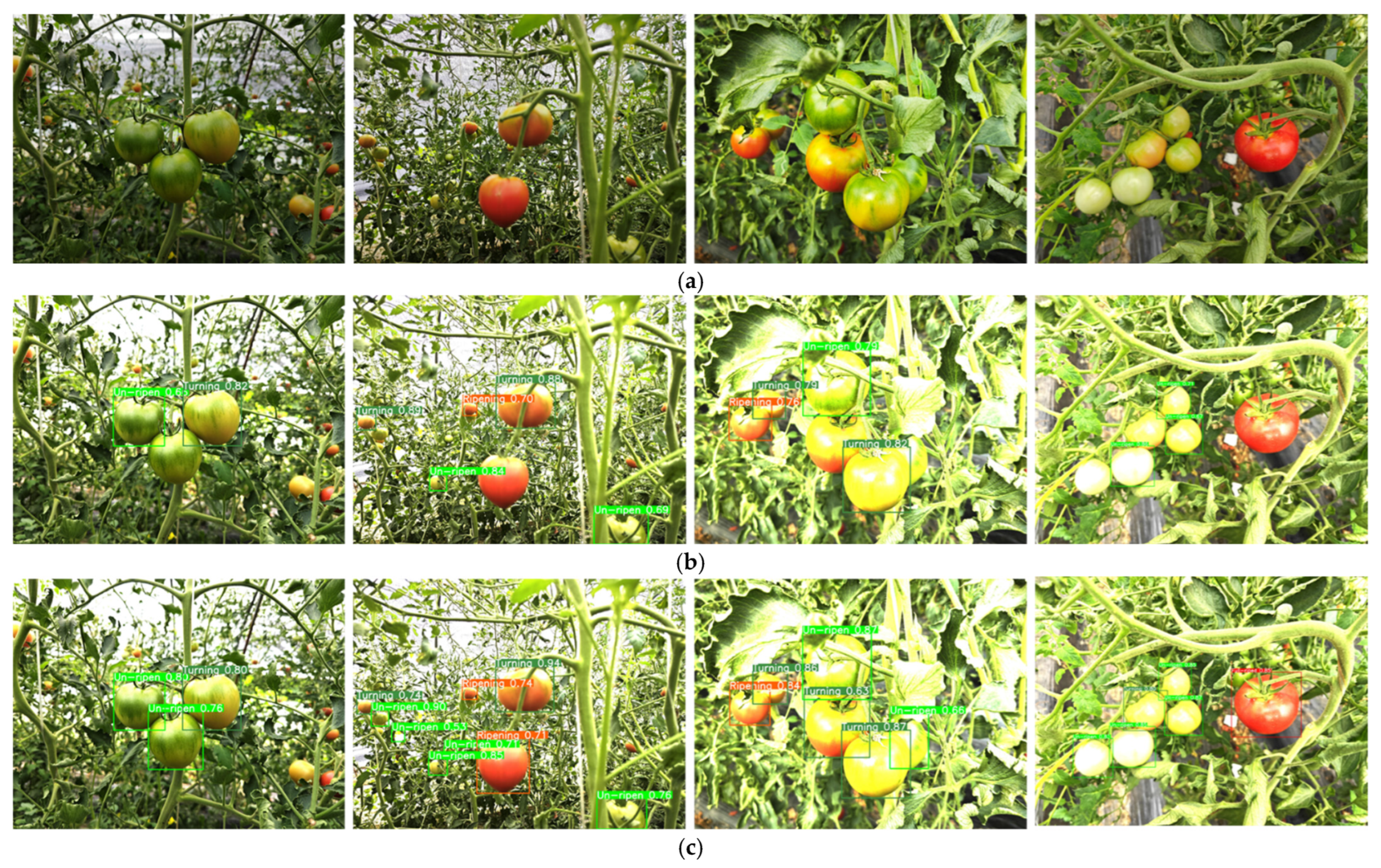

3.2.6. Comparison of Model Performance Before and After Improvement

4. Conclusions

5. Discussion

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Name | Detailed Specifications |
|---|---|
| CPU | i5-13600KF |
| GPU | NVIDIA RTX A2000 |
| RAM | 32 GB |
| VRAM | 6 GB |
| Operating system | Windows 10 |
| Programming language | Python 3.9 |
| Deep learning framework | PyTorch |

| Models | Parameters/M | Computation/GFLOPs | Model Size/MB | mAP@0.5/% |
|---|---|---|---|---|
| YOLOv8n | 3.05 | 8.2 | 5.99 | 96.0 |
| YOLOv8n-CARAFE | 3.20 | 8.8 | 6.23 | 95.9 |
| YOLOv8n-DySample | 3.01 | 8.1 | 5.98 | 96.9 |

| Models | Parameters/M | Computation/GFLOPs | Model Size/MB | mAP@0.5/% |
|---|---|---|---|---|
| YOLOv8n | 3.05 | 8.2 | 5.99 | 96.0 |
| YOLOv8n-Dyhead | 3.63 | 10.5 | 6.90 | 96.0 |
| YOLOv8n-PSLD | 2.36 | 6.5 | 4.72 | 96.3 |

| No. | PIoUv2 Hyperparameter | mAP@0.5/% |
|---|---|---|
| 1 | λ = 1.1 | 96.4 |
| 2 | λ = 1.3 | 97.4 |
| 3 | λ = 1.5 | 97.0 |
| 4 | λ = 1.7 | 97.6 |
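
To make the role of λ concrete, the following is a minimal pure-Python sketch of a PIoUv2-style loss for a single pair of boxes, written from the formulation in the Powerful-IoU reference (Liu et al., 2024) as best understood here: a penalty P built from edge distances normalised by the target size, the loss L_PIoU = (1 − IoU) + (1 − e^(−P²)), and a focusing factor 3·(λq)·e^(−(λq)²) with q = e^(−P). The function names `iou_xyxy` and `piou_v2_loss` and the `(x1, y1, x2, y2)` box layout are illustrative assumptions, not the authors' training code.

```python
import math


def iou_xyxy(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def piou_v2_loss(pred, target, lam):
    """PIoUv2-style loss for one predicted box and one target box.

    `lam` is the hyperparameter λ that scales the focusing factor.
    """
    w_gt = target[2] - target[0]
    h_gt = target[3] - target[1]
    # Absolute distances between corresponding box edges.
    dw1, dw2 = abs(pred[0] - target[0]), abs(pred[2] - target[2])
    dh1, dh2 = abs(pred[1] - target[1]), abs(pred[3] - target[3])
    # Penalty P: mean edge distance, normalised by the target width/height.
    p = ((dw1 + dw2) / w_gt + (dh1 + dh2) / h_gt) / 4.0
    # PIoU (v1) loss: IoU loss plus a bounded penalty term.
    l_piou = (1.0 - iou_xyxy(pred, target)) + (1.0 - math.exp(-p * p))
    # Non-monotonic focusing factor u(λq) = 3·(λq)·exp(-(λq)^2), with q = exp(-P).
    x = lam * math.exp(-p)
    return 3.0 * x * math.exp(-x * x) * l_piou


if __name__ == "__main__":
    target = (0.0, 0.0, 10.0, 10.0)
    pred = (2.0, 0.0, 12.0, 10.0)  # hypothetical prediction, shifted 2 px right
    for lam in (1.1, 1.3, 1.5, 1.7):  # the λ values listed in the table
        print(f"lambda={lam}: loss={piou_v2_loss(pred, target, lam):.4f}")
```

Because the focusing factor depends on λq, changing λ shifts which anchor quality receives the largest weight, which is why the value is tuned per dataset.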

| Models | Parameters/M | Computation/GFLOPs | Model Size/MB | mAP@0.5/% |
|---|---|---|---|---|
| YOLOv8n | 3.05 | 8.2 | 5.99 | 96.0 |
| YOLOv8n-DySample | 3.01 | 8.1 | 5.98 | 96.9 |
| YOLOv8n-PSLD | 2.36 | 6.5 | 4.72 | 96.3 |
| YOLOv8n-C3_FNet | 2.19 | 6.1 | 4.44 | 96.5 |
| YOLOv8n-DySample-PSLD | 2.37 | 6.5 | 4.74 | 96.8 |
| YOLOv8n-C3_FNet-DySample-PSLD | 1.56 | 4.5 | 3.20 | 97.4 |
| YOLOv8n-C3_FNet-DySample-PSLD-PIoUv2 | 1.56 | 4.5 | 3.20 | 97.6 |
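
The relative savings implied by the ablation rows can be recomputed directly from the table values. The short sketch below does this for the first row (YOLOv8n) and the last row (the full model); the dictionary keys and the helper name `relative_reduction` are illustrative assumptions, and the numbers are copied from the table above.

```python
def relative_reduction(baseline, improved):
    """Percentage by which `improved` is smaller than `baseline`."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - improved) / baseline * 100.0


# First and last rows of the ablation table.
baseline = {"params_m": 3.05, "gflops": 8.2, "size_mb": 5.99, "map50": 96.0}
final = {"params_m": 1.56, "gflops": 4.5, "size_mb": 3.20, "map50": 97.6}

if __name__ == "__main__":
    for key in ("params_m", "gflops", "size_mb"):
        pct = relative_reduction(baseline[key], final[key])
        print(f"{key}: {pct:.1f}% lower than the baseline")
    gain = final["map50"] - baseline["map50"]
    print(f"mAP@0.5 gain: {gain:.1f} percentage points")
```

Reporting the accuracy change in percentage points rather than as a relative percentage keeps it directly comparable with the mAP column.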

| Models | Parameters/M | Computation/GFLOPs | Model Size/MB | mAP@0.5/% | mAP@0.5:0.95/% | Detection Time/ms |
|---|---|---|---|---|---|---|
| EfficientDet | 27.3 | 58.2 | 15.0 | 87.2 | 72.3 | 30.5 |
| Faster R-CNN | 137.75 | 403.8 | 107.8 | 62.3 | 53.8 | 71.1 |
| YOLOv5s | 2.36 | 6.5 | 4.72 | 94.2 | 78.3 | 38.8 |
| YOLOv7 | 36.51 | 103.4 | 71.5 | 93.1 | 79.1 | 45.3 |
| YOLOv8n | 3.01 | 8.1 | 5.98 | 96.0 | 88.7 | 23.6 |
| YOLOv10n | 2.37 | 6.5 | 5.50 | 95.1 | 86.3 | 20.8 |
| YOLO11n | 1.56 | 4.5 | 5.23 | 93.2 | 87.4 | 18.3 |
| YOLOv8n-FDE | 1.56 | 4.5 | 3.20 | 97.6 | 89.2 | 16.1 |
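
Detection times are easier to relate to real-time requirements when expressed as throughput. The sketch below converts the times in the table to frames per second, assuming each value is the latency for one image processed on its own; that assumption and the helper name `fps_from_latency_ms` are not stated in the source.

```python
def fps_from_latency_ms(latency_ms):
    """Frames per second implied by a per-image latency in milliseconds."""
    if latency_ms <= 0:
        raise ValueError("latency must be positive")
    return 1000.0 / latency_ms


# Detection times (ms) copied from the comparison table.
detect_time_ms = {
    "EfficientDet": 30.5,
    "Faster R-CNN": 71.1,
    "YOLOv5s": 38.8,
    "YOLOv7": 45.3,
    "YOLOv8n": 23.6,
    "YOLOv10n": 20.8,
    "YOLO11n": 18.3,
    "YOLOv8n-FDE": 16.1,
}

if __name__ == "__main__":
    # List the models from fastest to slowest.
    for name, ms in sorted(detect_time_ms.items(), key=lambda kv: kv[1]):
        print(f"{name}: {ms} ms per image, about {fps_from_latency_ms(ms):.1f} FPS")
```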


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gao, X.; Ding, J.; Bie, M.; Yu, H.; Shen, Y.; Zhang, R.; Xi, X. YOLOv8n-FDE: An Efficient and Lightweight Model for Tomato Maturity Detection. Agronomy 2025, 15, 1899. https://doi.org/10.3390/agronomy15081899
