Abstract

In agricultural pest detection, the small size of pests poses a critical obstacle to detection accuracy. To address this problem, we propose a Lightweight Cross-Level Feature Aggregation Network (LCFANet), which comprises three key components: a deep feature extraction network, a deep feature fusion network, and a multi-scale object detection head. Within the feature extraction and fusion networks, we introduce the Dual Temporal Feature Aggregation C3k2 (DTFA-C3k2) module, which uses a spatiotemporal fusion mechanism to integrate multi-receptive-field features while preserving fine-grained texture and structural details across scales, improving detection performance for objects with large scale variations. We also introduce the Aggregated Downsampling Convolution (ADown-Conv) module, a dual-path compression unit that efficiently reduces spatial dimensions while enhancing feature representation. For feature fusion, we design a Cross-Level Hierarchical Feature Pyramid (CLHFP), which employs bidirectional integration: backward pyramid construction for deep-to-shallow fusion and forward pyramid construction for feature refinement. The detection head incorporates a Multi-Scale Adaptive Spatial Fusion (MSASF) module that adaptively fuses features across scales to improve accuracy for objects of varying sizes. Furthermore, we introduce the MPDINIoU loss function, which combines InnerIoU and MPDIoU to optimize bounding box regression. The LCFANet-n model has parameters and a computational cost of GFLOPs, enabling lightweight deployment. Extensive experiments on the public dataset demonstrate that the LCFANet-n model achieves a precision of , recall of , mAP50 of , and mAP50-95 of , reaching state-of-the-art (SOTA) performance in small-sized pest detection while maintaining a lightweight architecture.

1. Introduction

Agriculture is the foundation of human production and livelihoods and a fundamental guarantee of the normal functioning of society. Ensuring the yield and quality of crops such as grains, fruits, and vegetables has always been a priority. Plant diseases and insect pests are among the primary factors that impede normal crop growth and reduce yields. In severe cases, they can even harm the economic development of nations and regions and disrupt people's livelihoods [1]. Timely detection of plant diseases and insect pests, coupled with rapid response and intervention, is therefore crucial. Because agricultural infrastructure remains relatively underdeveloped in most countries and regions, manual inspection is still the primary method for detecting plant diseases and insect pests during high-risk seasons. However, because pests are often concealed, mobile, and morphologically similar, this approach frequently leads to missed detections or misidentification, resulting in inappropriate control measures [2]. Such errors not only waste pesticide resources through misuse but also fail to prevent further agricultural economic losses.

In response to the inherent limitations of manual inspection for pest and disease control, and driven by the widespread adoption of computer vision and artificial intelligence across many domains, a growing number of studies use deep learning to detect crop diseases and insect pests [3]. Convolutional Neural Networks (CNNs), owing to their strong capability to extract both pixel-level and semantic features from images, have been widely applied to the classification and detection of plant diseases and insect pests [4].

Deep learning-based identification and detection of insects in images is a key focus of agricultural pest control research [3]. YOLO [5,6,7] redefined object detection by treating it as a regression problem, offering a groundbreaking approach to real-time detection. Recent work in agricultural image analysis has produced many detection frameworks derived from the YOLO architecture [8,9,10]. The CSF-YOLO framework developed by Wang et al. [11] enhances YOLOv8-n [12] with a FasterNet backbone to achieve lightweight assessment of grape leafhopper damage, supporting real-time vineyard surveillance. Huang et al. [13] proposed YOLO-YSTs, a wild pest detection method based on YOLOv10-n [14], which was validated on a single-species pest dataset and achieved a mean average precision of . Feng et al. [15] proposed LCDDN-YOLO, a lightweight cotton disease detection model based on an improved YOLOv8-n. By introducing the BiFPN network and the PDS-C2f module, the model improves detection accuracy while remaining lightweight.

R-CNN [16,17,18] pioneered a two-stage framework that combines selective-search region proposals with CNN-based feature extraction. Mu et al. [19] proposed TFEMRNet, a pest detection method for edge devices based on an improved Mask R-CNN [20] model; by incorporating a multi-attention mechanism, it achieved a precision of in pest detection tasks. Li et al. [21] proposed LLA-RCNN, which partially lightweights the Faster R-CNN [17] model by incorporating MobileNetV3 [22] and enables accurate detection of pests on leaf surfaces. Guan et al. [23] proposed GC-Faster RCNN, an agricultural pest detection model based on Faster R-CNN and a hybrid attention mechanism, achieving a improvement in average precision on the Insect25 dataset. Although these models identify insects accurately, their reliance on the two-stage detection principle of R-CNN poses a significant barrier to further lightweight optimization.

Transformer-based detectors use self-attention to model global contextual relationships, relaxing the local inductive bias of convolutions to improve scale adaptability [24,25,26]. Liu et al. [27] proposed an end-to-end transformer-based pest detection method that achieved mAP in leaf-surface pest detection tasks by incorporating FRC and RPSA mechanisms. To achieve accurate pest detection in cistanche, Zhang et al. [28] proposed a transformer-based target identification module; enhanced by a bridge attention mechanism and a loss function, the network achieved average precision, demonstrating excellent results in complex agricultural scenes. TinySegFormer [29] addresses edge-computing limitations in agricultural pest detection by hybridizing transformer and CNN architectures, attaining a segmentation precision at 65 FPS and demonstrating real-time applicability for leaf pest analysis. However, these studies rely on near-field images of pests on leaf surfaces, which artificially magnify pest features and misrepresent actual field conditions, where pests occupy a much smaller fraction of the image.

Our analysis of existing research indicates that current object detection methods face significant challenges in accurately identifying small agricultural pests, primarily because of the following limitations:

- (1) Small pests often have low resolution and high morphological similarity, which leads to weak feature extraction and misclassification. Standard CNNs struggle to balance the large receptive fields and fine-grained details required for small pests, resulting in poor localization [30].

- (2) To improve small-object detection accuracy, current research predominantly scales up network parameters. However, more parameters significantly slow down inference, and the real-time constraints of agricultural drones and edge devices make such models impractical for field deployment.

To overcome these challenges in small pest imagery, we present the Lightweight Cross-Level Feature Aggregation Network (LCFANet). The framework integrates three core mechanisms: a deep feature extraction network that progressively converts RGB inputs from basic spatial details to advanced semantic information through hierarchical mapping, yielding four distinct feature maps; a hierarchical feature pyramid that uses top–down context propagation with lateral detail retention and adaptive feature refinement for cross-scale fusion; and a scale-aware detection head with trainable spatial weighting that achieves scale-invariant object recognition across all pyramid levels simultaneously. The integrated architecture remains computationally efficient while addressing the characteristic challenges of small pest targets. The main contributions of this work are as follows:

- (1) We propose LCFANet for small pest object detection in insect trapping devices. Extensive evaluations on the public Pest24 dataset and our private dataset demonstrate that it accurately classifies pests with similar features and reliably detects small objects amid debris, achieving SOTA detection performance.

- (2) We propose a Dual Temporal Feature Aggregation C3k2 (DTFA-C3k2) architecture, a Cross-Level Hierarchical Feature Pyramid (CLHFP), and a Multi-Scale Adaptive Spatial Fusion (MSASF) module in LCFANet. Through spatial-channel fusion, hierarchical feature interaction, and adaptive multi-scale fusion, these components preserve the critical fine-grained details and structural hierarchies of small pests, significantly improving detection precision in agricultural scenarios.

- (3) To keep the model lightweight, we incorporate the Aggregated Downsampling Convolution (ADown-Conv) module into both the feature extraction and fusion stages of LCFANet. Its lightweight design and structural flexibility make ADown-Conv an effective downsampling solution for real-time object detection models.

The remainder of this paper is organized as follows: Section 2 describes the provenance of the pest datasets and the architectural design of LCFANet. Section 3 evaluates LCFANet on small insect object detection through generalization, comparative, and ablation experiments. Section 4 discusses the proposed approach critically. Finally, the conclusion summarizes the key findings and contributions of this paper.

2. Materials and Methods

2.1. Construction of Agricultural Pest Image Datasets

To validate the effectiveness and generalization capability of the proposed pest detection model, we used two datasets: a private dataset and the public Pest24 dataset [31]. Both were collected with pest traps.

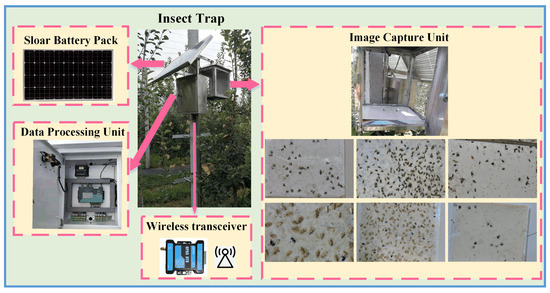

The image acquisition setup and sample images of our private dataset are shown in Figure 1. We deployed self-powered pest traps with image-capture capabilities in an apple orchard in Lingbao City, Henan Province, China. The traps used adhesive boards coated with different lures to capture three pest species, Grapholita molesta, Anarsia lineatella, and Cydia pomonella, while images of the boards were captured systematically. Of the 1246 images obtained, 945 were validated as effective samples: valid images contain the target pests to be detected, whereas invalid images contain no target pests. The 945 valid images comprise 321 Grapholita molesta, 307 Anarsia lineatella, and 317 Cydia pomonella trap images, so the three categories are essentially balanced. The average size of the small target pests is 48 pixels. We annotated the images in YOLO format using LabelImg, so each image has a corresponding TXT file containing the coordinates of its object bounding boxes.

Figure 1.

The proprietary pest trap for dataset acquisition.
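The YOLO-format TXT files described above store one object per line as a class index followed by the box centre, width, and height, each normalised by the image size. A minimal sketch, assuming that standard layout (the helpers `yolo_line_to_pixels` and `read_label_file` are hypothetical names, not part of LabelImg), converts such lines into pixel corner coordinates:

```python
def yolo_line_to_pixels(line, img_w, img_h):
    """Convert one YOLO label line to (class_id, x1, y1, x2, y2) in pixels.

    Assumes the standard YOLO layout "class x_center y_center width height",
    with the four box values normalised to [0, 1] by the image size.
    """
    parts = line.split()
    if len(parts) != 5:
        raise ValueError(f"expected 5 fields, got {len(parts)}: {line!r}")
    cls = int(parts[0])
    xc, yc, w, h = (float(v) for v in parts[1:])
    x1, x2 = (xc - w / 2) * img_w, (xc + w / 2) * img_w
    y1, y2 = (yc - h / 2) * img_h, (yc + h / 2) * img_h
    return cls, x1, y1, x2, y2


def read_label_file(path, img_w, img_h):
    """Parse every non-empty line of a YOLO TXT label file."""
    with open(path, encoding="utf-8") as f:
        return [yolo_line_to_pixels(ln, img_w, img_h) for ln in f if ln.strip()]


# Hypothetical line: a 48 x 48 pixel box centred in a 1000 x 800 image.
print(yolo_line_to_pixels("2 0.5 0.5 0.048 0.06", 1000, 800))
```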

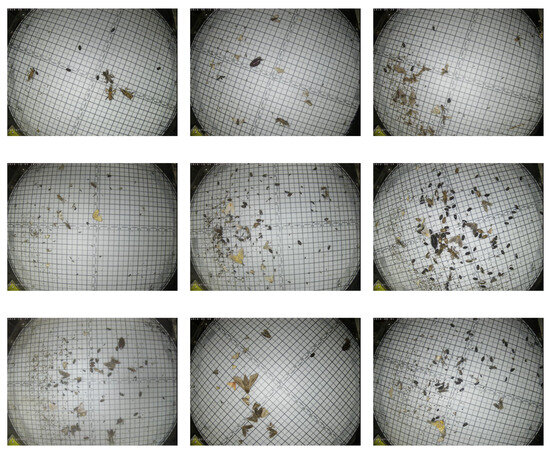

The Pest24 dataset consists of pest trap images with a resolution of , as illustrated in Figure 2. Among these images, the average size of small-target pests is 25 pixels. This dataset includes images of 24 pest categories, such as Rice planthopper, Meadow borer, and Holotrichia oblita, all annotated in YOLO format.

Figure 2.

Examples of the Pest24 [31] dataset.

As shown in Figures 1 and 2, the pests in both our private dataset and Pest24 are notably small, which matches the characteristics of pest images in field scenarios. We therefore used both datasets in this study and divided each into training, validation, and test sets at a ratio of 6:2:2.
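The 6:2:2 division can be sketched as a seeded random split. This is an illustrative sketch only: the function `split_dataset`, the seed, and the rounding rule are assumptions, and the text does not state whether the split was stratified by pest class.

```python
import random


def split_dataset(items, ratios=(0.6, 0.2, 0.2), seed=0):
    """Shuffle items and split them into train/val/test by the given ratios."""
    if abs(sum(ratios) - 1.0) > 1e-9:
        raise ValueError("ratios must sum to 1")
    items = list(items)
    random.Random(seed).shuffle(items)  # fixed seed -> reproducible split
    n_train = round(len(items) * ratios[0])
    n_val = round(len(items) * ratios[1])
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]  # the remainder goes to the test set
    return train, val, test


# 945 hypothetical image IDs, matching the number of valid private images.
train, val, test = split_dataset(range(945))
print(len(train), len(val), len(test))  # prints 567 189 189
```

A fixed seed keeps the split reproducible across runs; a per-class (stratified) split would be a natural variant if class balance in each subset matters.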

2.2. Construction of the Lightweight Cross-Level Feature Aggregation Network

In practical agricultural scenarios, small target pests pose significant challenges to object detection models because of their small pixel area and subtle inter-species differences. At the same time, because timely pest detection is crucial for taking emergency measures and minimizing crop yield loss, lightweight edge deployment of pest detection models is equally important. The research priority is therefore to improve the accuracy of small, multi-target pest detection while keeping the model lightweight. To this end, we developed the Lightweight Cross-Level Feature Aggregation Network (LCFANet), an enhanced framework derived from the YOLOv11 [32] architecture and designed specifically for detecting small objects.

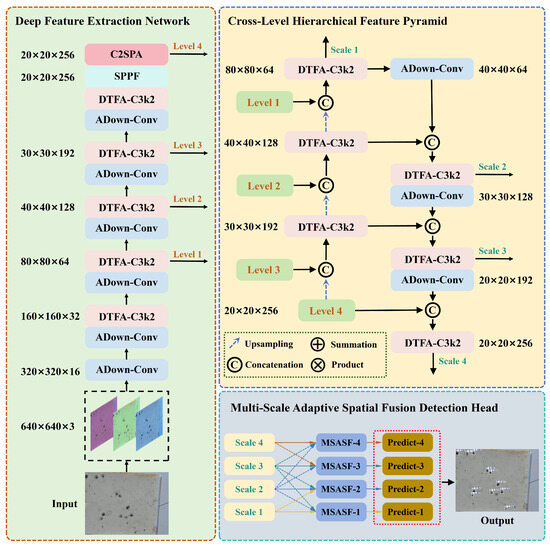

As depicted in Figure 3, the LCFANet framework integrates three core modules designed for small pest detection. The deep feature extraction network processes RGB inputs through convolutional operations, progressively transforming shallow spatial patterns (e.g., colors, edges, and textures) into high-level semantic representations and generating four hierarchical feature maps at resolutions of , , , and . These multi-scale features are then fed into a hierarchical feature pyramid that fuses features across resolutions through top–down contextual propagation, lateral connections that preserve detail, and adaptive feature recalibration. Finally, the scale-aware detection head uses spatial fusion with learnable weights to detect objects at all four pyramid levels simultaneously while maintaining scale invariance. The following sections describe each module in detail.

Figure 3.

The structure of LCFANet.

2.2.1. Deep Feature Extraction Network

LCFANet generates four hierarchically structured feature maps through its image feature extraction backbone. These multi-level representations comprehensively integrate both shallow spatial patterns and deep semantic attributes of targets, establishing the foundation for robust small-scale target detection.

To counteract feature deterioration caused by network depth escalation while enhancing feature utilization efficiency, we propose the C3k2 module with Dual Temporal Feature Aggregation [33] capability (DTFA-C3k2). This architecture synergistically combines multi-receptive-field features through spatial-temporal fusion mechanisms, preserving critical fine-grained textures and structural details across scales, thereby improving detection accuracy for objects with significant scale variations.

Because deepening the network increases computational complexity, we introduce the lightweight Aggregated Downsampling Convolution (ADown-Conv) [34] module. ADown-Conv offers a favorable balance between parameter efficiency and learning capacity, improving computational efficiency while maintaining precision, which facilitates deployment in resource-constrained scenarios.

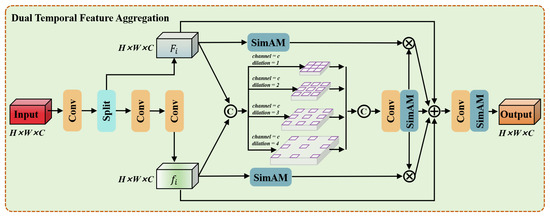

A. DTFA-C3k2 Module

In YOLOv11, the C3k2 block is the core feature extraction module. It integrates two cascaded bottleneck submodules that learn and propagate features through streamlined convolutional operations and element-wise addition. However, as the layers of LCFANet deepen, the bottleneck modules inevitably lose low-level details, causing progressive feature degradation that particularly limits detection accuracy for small pest targets.

To mitigate this drawback, we propose a DTFA-C3k2 architecture comprising two serially connected DTFA-C3k modules, as depicted in Figure 4. The DTFA-C3k module processes input features through a structured pipeline: an initial convolution adjusts the channel dimensions before the features are split into two branches. The primary branch applies two sequential convolutions to generate the refined feature , while the parallel branch preserves the split feature . Both outputs have identical spatial and channel dimensions (), forming bi-temporal feature layers that preserve spatial details while enabling multi-scale representation learning. The feature map transformation is as follows:

where denotes input features. By concatenating the input feature pair along the channel dimension, we construct a hierarchical perception feature extraction framework. The architecture employs four parallel dilated grouped convolution modules with kernels and different dilation rates , where c denotes the input channel count. The graded dilation rates establish multi-level receptive fields: branches with small dilation rates focus on local texture details, while branches with large dilation rates capture wide-range contextual semantics, forming complementary feature representations. Grouped convolution reduces computational complexity, while the channel-wise independence of the groups preserves the spatial topological structure and avoids the information loss caused by conventional downsampling. The calculation is as follows:
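Because the kernel size and dilation rates are not reproduced in the text above, the following sketch uses hypothetical values (3×3 kernels, dilation rates 1 to 4, 64 channels, 4 groups) to illustrate the standard convolution arithmetic the paragraph relies on: a k×k kernel with dilation d spans k + (k − 1)(d − 1) pixels per side, padding of d(k − 1)/2 keeps the resolution unchanged at stride 1, and splitting channels into g groups divides the weight count by g. All function names are illustrative.

```python
def effective_kernel(k, d):
    """Side length of the window covered by a k x k kernel with dilation d."""
    return k + (k - 1) * (d - 1)


def same_padding(k, d):
    """Padding that keeps H and W unchanged for a stride-1 dilated conv (odd k)."""
    return d * (k - 1) // 2


def conv_out_size(n, k, d, p, s=1):
    """Output length along one axis (standard convolution arithmetic)."""
    return (n + 2 * p - d * (k - 1) - 1) // s + 1


def conv_weights(k, c_in, c_out, groups=1):
    """Number of weights in a k x k (grouped) convolution, bias ignored."""
    if c_in % groups or c_out % groups:
        raise ValueError("channel counts must be divisible by groups")
    return k * k * (c_in // groups) * c_out


# Hypothetical configuration, not the paper's values.
k, channels, groups, size = 3, 64, 4, 40
for d in (1, 2, 3, 4):
    pad = same_padding(k, d)
    side = conv_out_size(size, k, d, pad)
    print(
        f"d={d}: window {effective_kernel(k, d)}x{effective_kernel(k, d)}, "
        f"output {side}x{side}, "
        f"weights {conv_weights(k, channels, channels, groups)} "
        f"(dense: {conv_weights(k, channels, channels)})"
    )
```

Under these assumptions every branch keeps the 40×40 resolution while its window grows from 3×3 to 9×9, and each grouped branch uses a quarter of the weights of a dense 3×3 convolution.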

Figure 4.

Structural diagram of DTFA-C3k.

The fused multi-scale features then pass through a convolution that reduces the channel dimension, followed by the SimAM [35] attention mechanism for adaptive feature enhancement. Using parameter-free attention computed from the energy function , SimAM dynamically amplifies responses in regions with significant variations. The entire pipeline maintains the original spatial resolution while balancing multi-scale feature synergy and computational efficiency, providing a robust feature representation for pest detection in complex scenes. After extracting the commonality features from the dual temporal features , we multiply these commonality features by the original temporal features to quantify their similarity. This operation can be expressed as follows:

Finally, we integrate the components through element-wise summation, followed by convolutional operations coupled with the SimAM attention mechanism:

Through these dimension-consistent transformations, the DTFA-C3k architecture optimizes feature utilization, which is particularly important for small pest targets that require both low-level texture and high-level semantic information to be retained.
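SimAM is a published, parameter-free attention mechanism whose closed-form energy fits in a few lines. The sketch below applies it to a single channel stored as a 2-D Python list; the function name `simam_channel` is hypothetical, λ = 1e-4 follows the default in the original SimAM code, and how SimAM is wired into DTFA-C3k is shown only in Figure 4, so the surrounding fusion steps are not reproduced here.

```python
import math


def simam_channel(channel, lam=1e-4):
    """SimAM re-weighting of one channel given as a 2-D list of floats.

    Each activation x is multiplied by sigmoid(1 / e*), where
    1 / e* = (x - mu)^2 / (4 * (var + lam)) + 0.5 and var uses M - 1 in the
    denominator (M = number of activations), as in the SimAM formulation.
    """
    values = [v for row in channel for v in row]
    if len(values) < 2:
        raise ValueError("SimAM needs at least two activations per channel")
    mu = sum(values) / len(values)
    var = sum((v - mu) ** 2 for v in values) / (len(values) - 1)
    out = []
    for row in channel:
        new_row = []
        for x in row:
            inv_energy = (x - mu) ** 2 / (4 * (var + lam)) + 0.5
            gate = 1.0 / (1.0 + math.exp(-inv_energy))  # sigmoid
            new_row.append(x * gate)
        out.append(new_row)
    return out


# The outlier (5.0) receives a larger gate than the uniform background (1.0).
print(simam_channel([[1.0, 1.0], [1.0, 5.0]]))
```

Activations far from the channel mean have lower minimal energy and therefore a larger gate, which is how SimAM emphasises regions with significant variation without adding learnable parameters.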

B. ADown-Conv Module

In LCFANet, combining the deep feature extraction network, the Cross-Level Hierarchical Feature Pyramid (CLHFP), and the scale-aware detection head increases model depth, parameter count, and computational complexity. To keep the model lightweight, we strategically incorporate ADown-Conv [34] modules into both the deep feature extraction network and the CLHFP of LCFANet.

The ADown-Conv module is a dual-path compression unit designed to reduce spatial dimensions efficiently while enhancing feature representation. It comprises two complementary processing streams: the Context Preservation Path, which processes half of the channels through convolution, and the Salient Feature Path, which extracts key features via max pooling and point-wise convolution. The outputs of both paths are concatenated along the channel dimension. As shown in Algorithm 1, ADown-Conv processes the input through these parallel pooling-convolution paths to produce enriched output features .

Algorithm 1. ADown-Conv Forward Propagation.
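Since the body of Algorithm 1 is not reproduced here, the shape flow of the block can be sketched under an explicit assumption: that ADown-Conv follows the ADown layout of YOLOv9 (2×2 average pooling with stride 1, a channel split, a 3×3 stride-2 convolution on one half, and 3×3 stride-2 max pooling followed by a 1×1 convolution on the other). The sketch only tracks (channels, height, width) shapes; `out_size` and `adown_shapes` are hypothetical helper names.

```python
def out_size(n, k, s, p=0):
    """Output length along one axis for a conv/pool window (floor mode)."""
    return (n + 2 * p - k) // s + 1


def adown_shapes(c_in, h, w, c_out):
    """Trace (C, H, W) through an ADown-style block; YOLOv9 layout assumed."""
    if c_in % 2 or c_out % 2:
        raise ValueError("channel counts must be even to split into two paths")
    # 2x2 average pooling with stride 1 (light smoothing, shrinks H and W by 1).
    h, w = out_size(h, 2, 1), out_size(w, 2, 1)
    # Channel split: each path receives c_in // 2 channels, emits c_out // 2.
    half_out = c_out // 2
    # Context Preservation Path: 3x3 conv, stride 2, padding 1.
    ctx = (half_out, out_size(h, 3, 2, 1), out_size(w, 3, 2, 1))
    # Salient Feature Path: 3x3 max pool (stride 2, padding 1), then 1x1 conv.
    sal = (half_out, out_size(h, 3, 2, 1), out_size(w, 3, 2, 1))
    if ctx[1:] != sal[1:]:
        raise RuntimeError("paths disagree spatially; cannot concatenate")
    # Concatenate both paths along the channel axis.
    return (ctx[0] + sal[0], ctx[1], ctx[2])


print(adown_shapes(64, 80, 80, 128))  # prints (128, 40, 40)
```

The stride-1 pooling shrinks the map by one pixel, and the padded stride-2 operators on both paths then land on the same halved resolution, so the two halves can be concatenated directly.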

ADown-Conv decomposes the standard convolution into parallel sub-operations, which reduces the parameters as follows:

This yields parameter reduction compared to conventional approaches. The FLOPs complexity is optimized as follows:

which keeps the computational cost below that of standard strided convolutions.
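The parameter and FLOP expressions are not reproduced in this text, so the savings can only be illustrated under an assumption. Taking the YOLOv9 ADown layout as a stand-in for ADown-Conv (a 3×3 stride-2 convolution on half of the channels, pooling plus a 1×1 convolution on the other half) and ignoring batch-normalisation parameters, the sketch compares convolution weights and multiply-accumulate operations (MACs) with a dense 3×3 stride-2 convolution. The function names and the 64-to-128-channel example are hypothetical.

```python
def dense_down_cost(c_in, c_out, h_out, w_out):
    """(weights, MACs) of a plain 3x3 stride-2 convolution, bias/BN ignored."""
    weights = 3 * 3 * c_in * c_out
    return weights, weights * h_out * w_out


def adown_cost(c_in, c_out, h_out, w_out):
    """(weights, MACs) of an ADown-style block; YOLOv9 layout assumed.

    Half the input channels pass through a 3x3 stride-2 conv, the other half
    through max pooling and a 1x1 conv. Pooling has no weights, and its
    comparisons/averages are not counted as MACs here.
    """
    ci, co = c_in // 2, c_out // 2
    weights = 3 * 3 * ci * co + 1 * 1 * ci * co
    return weights, weights * h_out * w_out


dense = dense_down_cost(64, 128, 40, 40)
adown = adown_cost(64, 128, 40, 40)
print(dense, adown, f"weight ratio = {adown[0] / dense[0]:.3f}")
```

Under this assumption the weight count falls to (9 + 1)/36 = 10/36 ≈ 27.8% of the dense convolution, and the MACs scale by the same factor because both paths produce the same output resolution.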

In summary, the lightweight design and structural flexibility of ADown-Conv make it an effective downsampling solution for real-time object detection models. Integrating ADown-Conv into LCFANet significantly reduces the parameter count while maintaining object detection accuracy.

2.2.2. Cross-Level Hierarchical Feature Pyramid

The deep feature extraction network processes input RGB images hierarchically through multi-scale learning, generating four levels of feature maps (Levels 1–4) that progress from shallow pixel patterns to deep semantic representations. Level 1 features mainly retain low-level visual cues such as edges and textures, while Level 4 features emphasize high-level semantics such as contextual relationships and object part configurations.

We then construct a Cross-Level Hierarchical Feature Pyramid (CLHFP), which integrates features bidirectionally through backward pyramid construction for deep-to-shallow fusion and forward pyramid construction for feature refinement, as depicted in Figure 3. The backward pyramid starts from the deep Level 4 features, which are upsampled, concatenated with the Level 3 features, and processed by DTFA-C3k2 to generate outputs. This process repeats with Level 2 and Level 1, ultimately producing the Scale 1 features through progressive upsampling and fusion. The forward pyramid then downsamples Scale 1 through ADown-Conv to , concatenates the result with the backward pyramid's residual features, and refines it with DTFA-C3k2 to create Scale 2 of . The same pattern continues through the subsequent scales: the downsampled Scale 2 features merge with the backward pyramid's components to form Scale 3 of , and Scale 3 is combined with the original Level 4 features to form Scale 4 of . The complete multi-scale representation is then fed into the scale-aware detection head for object recognition.
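The data flow of the backward and forward pyramids can be traced at the level of tensor shapes. In this sketch each feature map is a (channels, height, width) tuple, DTFA-C3k2 is treated as a resolution-preserving block with a chosen output width, ADown-Conv halves the resolution, and upsampling doubles it. It also assumes that Scale 3 is downsampled with ADown-Conv before meeting Level 4, as the pattern implies. The input size, the strides of Levels 1–4, the channel widths, and all function names are hypothetical placeholders, since the actual resolutions are not given in this text.

```python
def up(f):
    """2x upsampling: channels unchanged, height and width doubled."""
    c, h, w = f
    return (c, 2 * h, 2 * w)


def adown(f, c_out):
    """ADown-Conv downsampling: height and width halved, c_out channels."""
    _, h, w = f
    return (c_out, h // 2, w // 2)


def cat(a, b):
    """Channel concatenation; both maps must share the same spatial size."""
    if a[1:] != b[1:]:
        raise ValueError(f"spatial mismatch: {a} vs {b}")
    return (a[0] + b[0], a[1], a[2])


def dtfa_c3k2(f, c_out):
    """DTFA-C3k2 modelled as a resolution-preserving block with c_out channels."""
    return (c_out, f[1], f[2])


def clhfp(levels, widths):
    """Shape trace of the backward (deep-to-shallow) and forward pyramids."""
    l1, l2, l3, l4 = levels
    w1, w2, w3, w4 = widths
    # Backward pyramid: start from Level 4, upsample, fuse with shallower levels.
    b3 = dtfa_c3k2(cat(up(l4), l3), w3)
    b2 = dtfa_c3k2(cat(up(b3), l2), w2)
    s1 = dtfa_c3k2(cat(up(b2), l1), w1)
    # Forward pyramid: downsample with ADown-Conv, fuse with backward outputs.
    s2 = dtfa_c3k2(cat(adown(s1, w1), b2), w2)
    s3 = dtfa_c3k2(cat(adown(s2, w2), b3), w3)
    # Scale 4 fuses with the original Level 4 features.
    s4 = dtfa_c3k2(cat(adown(s3, w3), l4), w4)
    return s1, s2, s3, s4


# Hypothetical 640 x 640 input with Levels 1-4 at strides 4, 8, 16, 32.
LEVELS = [(64, 160, 160), (128, 80, 80), (256, 40, 40), (256, 20, 20)]
WIDTHS = (64, 128, 256, 256)
for name, shape in zip(("Scale 1", "Scale 2", "Scale 3", "Scale 4"), clhfp(LEVELS, WIDTHS)):
    print(name, shape)
```

The spatial check in `cat` makes the design constraint explicit: every backward-pyramid output must have the same resolution as the forward-pyramid map it is fused with, so Scales 1–4 end up aligned with Levels 1–4.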

The CLHFP architecture advances small pest detection through three principal contributions. First, it propagates features bidirectionally across resolutions: the backward pyramid enriches shallow features with deep semantics through successive upsampling and concatenation, while the forward pyramid consolidates features through ADown-Conv-driven hierarchical downsampling. Second, the DTFA-C3k2 modules at each fusion stage recalibrate features adaptively, suppressing redundant activations and improving feature utilization. Third, the four geometrically progressive feature maps form a multi-granularity representation hierarchy that preserves both high-frequency texture details and contextual semantics. Together, these properties improve detection sensitivity for small targets.

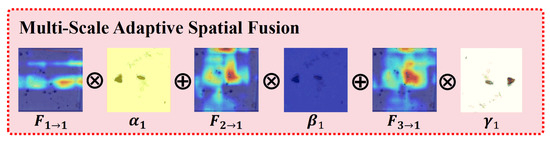

2.2.3. Multi-Scale Adaptive Spatial Fusion Detection Head

CLHFP addresses varying-scale feature fusion challenges. While feature pyramids remain fundamental in modern detection frameworks, they exhibit inherent limitations in one-stage detectors (e.g., YOLO, SSD) due to inter-scale feature conflicts. Conventional implementations employ heuristic-based feature assignment: high-level features (with a lower resolution but broader receptive fields) detect large objects, while low-level features (higher resolution with detailed textures) handle small targets. However, this rigid allocation creates conflicting gradient signals when processing images containing mixed-scale objects: regions designated as positive samples at one pyramid level are simultaneously treated as background at adjacent levels. The conflict becomes particularly pronounced in our architecture’s four-scale feature outputs, where overlapping receptive fields across pyramid levels amplify contradictory feature responses. This inter-layer discord disrupts gradient computation during backpropagation, ultimately compromising model convergence and detection accuracy.

To address multi-scale feature conflicts in the feature pyramids of single-shot detectors, the Adaptively Spatial Feature Fusion (ASFF) method was proposed [36]. It enhances scale-invariant feature representation through learnable spatial weighting, adaptively combining features from different pyramid levels according to their discriminative power at each spatial location. ASFF dynamically suppresses conflicting signals while amplifying patterns that agree across scales, effectively resolving the gradient interference caused by heuristic feature assignment in conventional pyramid architectures.

In this section, we present a Multi-Scale Adaptive Spatial Fusion (MSASF) module designed specifically for the four-scale feature maps generated by CLHFP, as illustrated in Figure 3. The MSASF module at level i () fuses features of different scales through resolution-adaptive resampling and spatially weighted integration. It combines the feature map with two adjacent-scale features via dual-path alignment. (1) For upsampling, a convolutional layer first compresses the channel dimension to match the channel count of , and bilinear interpolation then matches the resolution. (2) Downsampling uses scale-specific operators: a max-pooling layer (stride = 1) for scaling ratios, a stride-2 convolution for ratios, and a max-pooling layer (stride = 4) for ratios, each adjusting the channel dimension and spatial resolution simultaneously. Crucially, the module learns spatial attention maps through depth-wise separable convolutions and sigmoid activation, enabling pixel-wise adaptive fusion weights across scales. This mechanism dynamically balances the contributions of different pyramid levels ( to resolutions) while resolving the channel-depth disparities (64 to 256 channels) among LCFANet's hierarchical features. Through this resolution-aware blending, the fused output retains discriminative power for both high-frequency textures and contextual semantics.

Next, we introduce the adaptive fusion strategy, as illustrated in Figure 5. For MSASF-1 to MSASF-4, the fusion formulas are as follows:

where corresponds to the output of MSASF-i, and represents the feature map transferred from Scale j to MSASF-i through resizing. , and denote the spatial adaptive weights for at three different scales to . , , and are obtained as follows:

where the parameters , , and are derived through the softmax normalization of their corresponding control parameters , , and . Specifically in the MSASF-1 module, these control parameters are generated via dedicated convolutional layers that process the input feature maps , , and , respectively, forming learnable weight scalars that undergo optimization through standard backpropagation during network training.

Figure 5.

Adaptive fusion strategy of MSASF module, as exemplified by MSASF-1.
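The weighted fusion described for MSASF follows the ASFF pattern: control maps are normalised with a softmax at every pixel so that the three fusion weights are non-negative and sum to 1, and the output is the weighted sum of the resized inputs. The sketch below illustrates that pattern for one module with three inputs. Single-channel 2-D lists stand in for feature maps (in the network the same weights are shared across channels), the control maps are given directly rather than produced by convolutions, and the function names are hypothetical.

```python
import math


def softmax3(a, b, c):
    """Softmax over three control values; the returned weights sum to 1."""
    m = max(a, b, c)  # subtract the max for numerical stability
    ea, eb, ec = math.exp(a - m), math.exp(b - m), math.exp(c - m)
    total = ea + eb + ec
    return ea / total, eb / total, ec / total


def adaptive_fuse(x1, x2, x3, lam1, lam2, lam3):
    """Pixel-wise fusion y = alpha*x1 + beta*x2 + gamma*x3.

    x1..x3 are already-resized maps of equal size (2-D lists); lam1..lam3 are
    control maps of the same size from which alpha, beta, gamma are derived.
    """
    fused = []
    for i, row in enumerate(x1):
        out_row = []
        for j in range(len(row)):
            alpha, beta, gamma = softmax3(lam1[i][j], lam2[i][j], lam3[i][j])
            out_row.append(alpha * x1[i][j] + beta * x2[i][j] + gamma * x3[i][j])
        fused.append(out_row)
    return fused


# Equal controls average the inputs; a large control at one pixel selects x1 there.
y = adaptive_fuse([[3.0, 3.0]], [[0.0, 0.0]], [[0.0, 0.0]],
                  [[0.0, 10.0]], [[0.0, 0.0]], [[0.0, 0.0]])
print(y)
```

Because the weights vary per pixel, one location can rely almost entirely on a single scale while a neighbouring location blends all three, which is how conflicting signals from other pyramid levels are suppressed locally.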

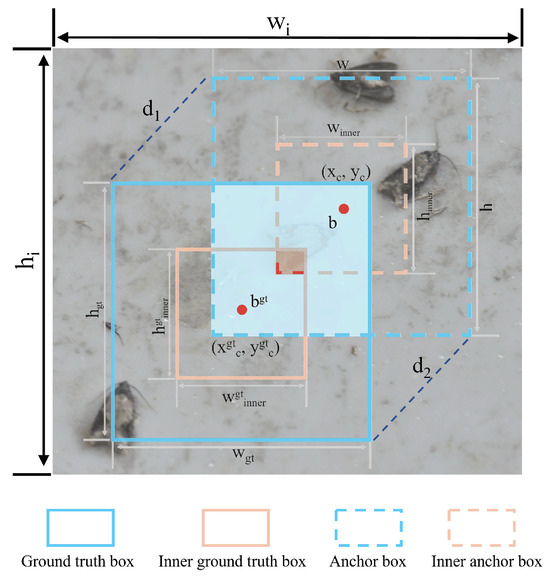

2.2.4. MPDINIoU Loss Function

Improving localization accuracy for small objects is a key objective in loss function design. Conventional localization losses, such as the IoU and CIoU losses, do not specifically account for tiny targets. To address this problem, we build on InnerIoU [37] and MPDIoU [38] and propose the MPDINIoU loss function. As depicted in Figure 6, MPDINIoU holistically integrates spatial overlap, centroid displacement, and dimensional differences, with particular emphasis on the intersection between regions. This improves LCFANet's ability to detect very small objects in trap images.

Figure 6.

The diagram of MPDINIoU.

MPDINIoU dynamically scales the auxiliary detection region through an adaptive scaling , which facilitates reliable small-target detection. The scaling coefficient is empirically constrained to the interval . Values greater than 1 produce an expanded auxiliary box that extends beyond the ground-truth boundary, enlarging the effective optimization space for low-IoU instances. Conversely, values below 1 produce a contracted auxiliary box, which amplifies the gradient magnitude during backpropagation by yielding a larger loss than the conventional IoU. Localization precision is further refined by spatial deviation terms derived from the coordinate offsets (denoted as , ) between corresponding vertices of the primary and auxiliary bounding boxes. MPDINIoU is defined as follows:

where denote the boundary coordinates and the center point of the inner anchor box, while correspond to the inner ground truth box.

The input image has dimensions , where and denote its height and width, respectively. The ground-truth box is defined by its top-left vertex and bottom-right vertex , and the predicted box by its top-left point and bottom-right point . The MPDINIoU loss function is as follows:
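Because the MPDINIoU equations are not reproduced in this text, the following is only a sketch under stated assumptions: the auxiliary (inner) boxes are the predicted and ground-truth boxes scaled about their centres by `ratio`, as in InnerIoU; the corner distances d1 and d2 are measured between the top-left and bottom-right corners of the original predicted and ground-truth boxes and normalised by w² + h² of the image, as in MPDIoU; and the two are combined as L = 1 − IoU_inner + d1²/(w² + h²) + d2²/(w² + h²). The function name `mpdiniou_loss` and this exact combination are assumptions, not the authors' definition.

```python
def _inner_box(box, ratio):
    """Scale an (x1, y1, x2, y2) box about its centre by `ratio` (InnerIoU-style)."""
    x1, y1, x2, y2 = box
    xc, yc = (x1 + x2) / 2, (y1 + y2) / 2
    half_w, half_h = (x2 - x1) * ratio / 2, (y2 - y1) * ratio / 2
    return xc - half_w, yc - half_h, xc + half_w, yc + half_h


def mpdiniou_loss(pred, gt, img_w, img_h, ratio=1.0):
    """Hypothetical MPDINIoU loss: 1 - IoU_inner + (d1^2 + d2^2) / (w^2 + h^2)."""
    px1, py1, px2, py2 = _inner_box(pred, ratio)
    gx1, gy1, gx2, gy2 = _inner_box(gt, ratio)
    inter_w = max(0.0, min(px2, gx2) - max(px1, gx1))
    inter_h = max(0.0, min(py2, gy2) - max(py1, gy1))
    inter = inter_w * inter_h
    union = (px2 - px1) * (py2 - py1) + (gx2 - gx1) * (gy2 - gy1) - inter
    iou_inner = inter / union if union > 0 else 0.0
    # MPDIoU-style penalties on the original (unscaled) corner points.
    d1_sq = (pred[0] - gt[0]) ** 2 + (pred[1] - gt[1]) ** 2  # top-left corners
    d2_sq = (pred[2] - gt[2]) ** 2 + (pred[3] - gt[3]) ** 2  # bottom-right corners
    norm = img_w ** 2 + img_h ** 2
    return 1.0 - iou_inner + (d1_sq + d2_sq) / norm


pred_box, gt_box = (0.0, 0.0, 10.0, 10.0), (5.0, 0.0, 15.0, 10.0)
for r in (0.5, 1.0, 1.5):
    print(r, round(mpdiniou_loss(pred_box, gt_box, 100, 100, ratio=r), 4))
```

In this example a prediction shifted by half a box width has an inner IoU of 0 with ratio = 0.5 but 0.5 with ratio = 1.5, so the loss falls from about 1.0025 to about 0.5025, consistent with the statement that ratios above 1 widen the optimization space for low-IoU cases.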

3. Results

3.1. Model Training Parameters and Evaluation Metrics

This section presents comparative experiments, ablation studies, and generalization experiments. All experiments were conducted on an IW4210-8G server with eight NVIDIA GeForce RTX 2080 Ti GPUs running Ubuntu 16.04 LTS.

To assess the effectiveness of LCFANet in small pest detection, we compared it extensively with leading real-time detection methods. The evaluation uses four key metrics: precision, recall, mAP50 (mean average precision at an IoU threshold of 0.5), and mAP50-95 (mean average precision averaged over IoU thresholds from 0.5 to 0.95 in increments of 0.05). The central objective was to analyze systematically the balance between detection accuracy and computational resource requirements and to demonstrate the performance of LCFANet in practical agricultural pest detection. The metrics are calculated as follows:

$$P = \frac{TP}{TP + FP}$$

where TP denotes the number of positive instances the model correctly predicts as positive, and FP denotes the number of instances the model incorrectly predicts as positive.

$$R = \frac{TP}{TP + FN}$$

where FN denotes the number of actual positive instances the model fails to detect.

$$mAP = \frac{1}{n}\sum_{i=1}^{n} AP_i$$

where n is the total number of object classes and AP_i is the average precision of the i-th class, i.e., the area under that class's precision–recall curve, obtained by integrating precision over all recall levels:

$$AP_i = \int_{0}^{1} P_i(R)\,dR$$

where P_i(R) is the precision of class i at recall R.
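The per-class AP defined above (the area under the precision–recall curve) has to be approximated from a finite, confidence-ranked list of detections. The sketch below uses all-point interpolation, in which the precision envelope is made monotonically non-increasing before rectangle areas are summed; this is one common convention, the exact interpolation used in the experiments is not stated here, and the function names are hypothetical. Matching detections to ground truth at a given IoU threshold is assumed to have been done already, so each detection simply carries a TP/FP flag.

```python
def precision_recall(tp, fp, fn):
    """Precision and recall from raw counts (0.0 when a denominator is 0)."""
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    return p, r


def average_precision(scores, is_tp, num_gt):
    """AP for one class via all-point interpolation of the P-R curve."""
    if num_gt <= 0:
        raise ValueError("num_gt must be positive")
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for i in order:  # sweep the confidence threshold from high to low
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    mrec = [0.0] + recalls + [1.0]
    mpre = [0.0] + precisions + [0.0]
    for k in range(len(mpre) - 1, 0, -1):  # build the precision envelope
        mpre[k - 1] = max(mpre[k - 1], mpre[k])
    return sum((mrec[k + 1] - mrec[k]) * mpre[k + 1] for k in range(len(mrec) - 1))


def mean_ap(per_class_ap):
    """mAP: unweighted mean of the per-class AP values."""
    return sum(per_class_ap) / len(per_class_ap)


# mAP50-95 repeats the matching and AP computation at each of these thresholds.
IOU_THRESHOLDS = [round(0.5 + 0.05 * t, 2) for t in range(10)]

ap = average_precision([0.9, 0.8, 0.7], [True, False, True], num_gt=2)
print(precision_recall(tp=2, fp=1, fn=0), ap)
```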

Params is the total number of trainable parameters and quantifies model size. FLOPs counts the floating-point operations required for one inference pass and indicates hardware resource demands.

3.2. Detection Performance and Generalization Experiments of LCFANet

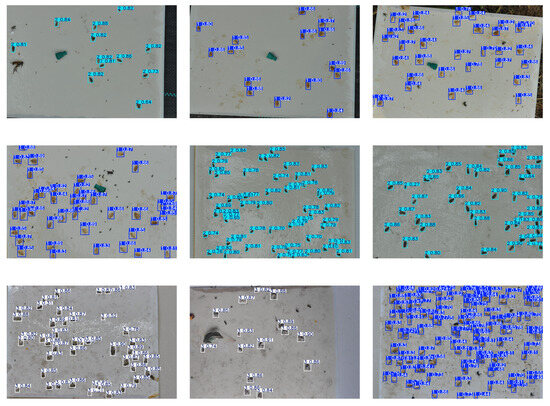

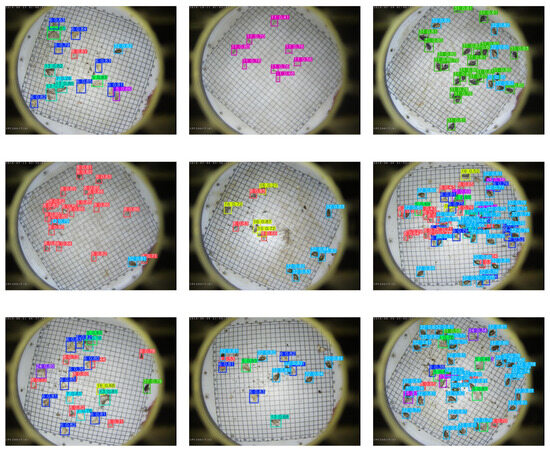

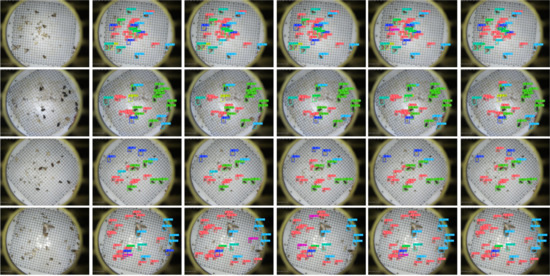

Built on YOLOv11, LCFANet has five variants with increasing parameter counts and computational costs (GFLOPs). Because model lightweighting is a primary focus of this study, we selected LCFANet-n, the variant with the fewest parameters and the lowest GFLOPs. We trained LCFANet-n separately on our private dataset and on Pest24; representative test results are shown in Figures 7 and 8. The detection results of LCFANet-n are presented in Table 1, and the detection performance for the three pest species in our private dataset is shown in Table 2.

Figure 7. Test examples on our private dataset.

Figure 8. Test examples on the Pest24 [31] dataset.

Table 1. Detection performance of LCFANet-n on the test datasets.

Table 2. Detection performance for the three pest species on our private dataset.

3.3. Comparison Experiments

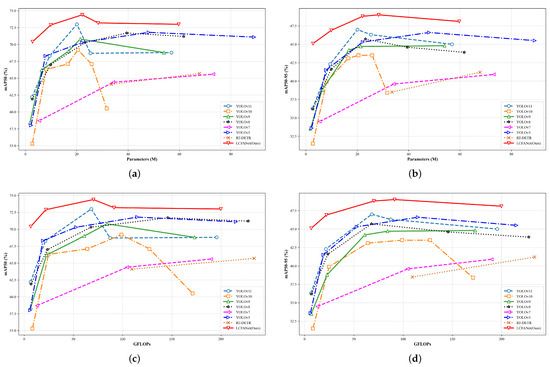

To validate the performance of LCFANet, we conducted two groups of comparative experiments: one benchmarking against SOTA generic object detection models, and the other against SOTA lightweight agricultural pest image detection models. For the generic detectors, our benchmark included YOLOv5 through YOLOv11, SSD [39], Faster R-CNN, and RT-DETR [40]. The variants of each model family were ordered by ascending parameter count and compared with the LCFANet variant of equivalent computational scale. These comparison experiments were conducted on the Pest24 dataset, and the results are presented in Figure 9 and Table 3. We further compared LCFANet on the Pest24 dataset against 4 state-of-the-art lightweight pest detection models, as shown in Table 4. Selected detection examples of LCFANet-n are shown in Figure 10.

Figure 9. Detection performance comparison between LCFANet and other SOTA baseline algorithms: (a) mAP50 versus number of parameters; (b) mAP50-95 versus number of parameters; (c) mAP50 versus GFLOPs; (d) mAP50-95 versus GFLOPs.

Table 3. Performance comparison between LCFANet and other SOTA baseline algorithms.

Table 4. Performance comparison between LCFANet and other SOTA lightweight pest image detection algorithms.

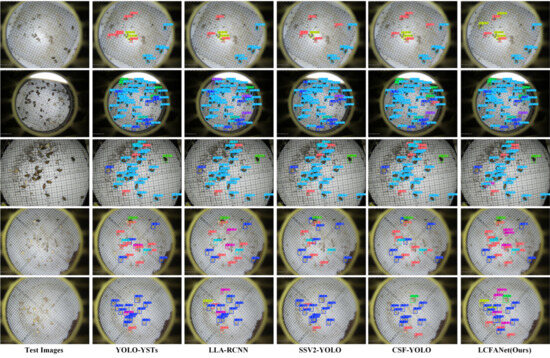

Figure 10. Detection result examples of LCFANet and other SOTA lightweight pest image detection algorithms.

3.4. Ablation Experiments

To achieve accurate detection of small pest targets, LCFANet introduces the DTFA-C3k2 module, CLHFP, and the MSASF module, together with the proposed MPDINIoU loss function. To make the model lightweight, it also incorporates the ADown-Conv module. Ablation studies were performed to verify the efficacy of each of these components, with results detailed in Table 5.
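This section names the MPDINIoU loss but does not restate its formula. As an illustration only, the sketch below combines the two ingredients using the rule the Inner-IoU paper [37] proposes for extending an existing IoU loss, $L_{Inner\text{-}X} = L_X + IoU - IoU^{inner}$, applied to MPDIoU [38]. This yields $L = 1 - IoU^{inner} + d_1^2/(w^2+h^2) + d_2^2/(w^2+h^2)$, where $d_1$ and $d_2$ are the distances between the top-left and bottom-right corners of the predicted and ground-truth boxes, and $w, h$ are the input image width and height. Whether LCFANet's MPDINIoU follows exactly this form, and which auxiliary-box `ratio` it uses, are assumptions; the function names are hypothetical.

```python
def box_iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def inner_box(box, ratio):
    """Inner-IoU auxiliary box: same centre, width and height scaled by `ratio`."""
    cx, cy = (box[0] + box[2]) / 2, (box[1] + box[3]) / 2
    half_w = (box[2] - box[0]) * ratio / 2
    half_h = (box[3] - box[1]) * ratio / 2
    return (cx - half_w, cy - half_h, cx + half_w, cy + half_h)

def inner_mpdiou_loss(pred, gt, img_w, img_h, ratio):
    """Hypothetical MPDINIoU sketch: L_MPDIoU + IoU - IoU_inner, which simplifies
    to 1 - IoU_inner + d1^2 / (w^2 + h^2) + d2^2 / (w^2 + h^2)."""
    iou_inner = box_iou(inner_box(pred, ratio), inner_box(gt, ratio))
    norm = img_w ** 2 + img_h ** 2                        # squared image diagonal
    d1 = (pred[0] - gt[0]) ** 2 + (pred[1] - gt[1]) ** 2  # top-left corners
    d2 = (pred[2] - gt[2]) ** 2 + (pred[3] - gt[3]) ** 2  # bottom-right corners
    return 1.0 - iou_inner + d1 / norm + d2 / norm
```

With `ratio = 1` the auxiliary boxes coincide with the original boxes and the loss reduces to plain MPDIoU. The Inner-IoU authors report that smaller auxiliary boxes (ratio below 1) speed up convergence for high-IoU samples, while larger ones suit low-IoU samples.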

Table 5. Results of the ablation experiments.

The ablation experiments were carried out on LCFANet-n in two parts. The first part examines the individual effect of each innovation on model parameters, computational cost, and average precision, comparing each against YOLOv11-n without any of the innovations. The second part analyzes their combined effects by adding components incrementally and comparing results with and without each added module. In both parts of Table 5, improvements are highlighted in red and degradations are marked in green.
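The color convention in Table 5 depends on each metric's direction: a higher mAP is an improvement, whereas for Params and GFLOPs a lower value is. The sketch below labels each delta accordingly. The metric keys and the numbers in the example are hypothetical placeholders, not values from Table 5.

```python
# True if a larger value is better for the metric (keys are hypothetical).
HIGHER_IS_BETTER = {"params_m": False, "gflops": False, "map50": True, "map50_95": True}

def label_deltas(baseline, variant):
    """Return {metric: (delta, label)} following Table 5's color convention."""
    result = {}
    for metric, higher_better in HIGHER_IS_BETTER.items():
        delta = variant[metric] - baseline[metric]
        if delta == 0:
            label = "unchanged"
        elif (delta > 0) == higher_better:
            label = "improvement (red)"
        else:
            label = "degradation (green)"
        result[metric] = (delta, label)
    return result

# Placeholder numbers purely to exercise the function.
base = {"params_m": 10, "gflops": 20, "map50": 70, "map50_95": 40}
new = {"params_m": 12, "gflops": 18, "map50": 72, "map50_95": 40}
for metric, (delta, label) in label_deltas(base, new).items():
    print(f"{metric}: {delta:+} {label}")
```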

4. Discussion

Timely pest detection and intervention are prerequisites for effective pest control and for reducing agricultural losses. Accordingly, a growing number of studies apply deep learning to images captured by pest traps or mobile devices to identify pest species and counts and thereby guide control measures. Wang et al. [11] improved the YOLOv8 network to propose the CSF-YOLO detection model. Feng et al. [15] developed the LCDDN-YOLO model based on YOLOv8. Huang et al. [13] introduced YOLO-YSTs, a modified YOLOv10n that improves detection accuracy while remaining lightweight enough for deployment. These studies indicate that customized adaptations of high-performing generic detection models are a viable way to address the challenges of pest imagery, such as small target sizes, debris clutter, overlapping pests, and inter-species similarity.

We therefore selected YOLOv11, the best-performing of the generic models at the time of this study, and built LCFANet on it to address the challenges of pest trap images: abundant debris, overlapping pests, small pest targets, multiple pest species, and similar visual features across species. The model improves pest detection accuracy through the proposed DTFA-C3k2 module, CLHFP, MSASF module, and MPDINIoU loss function, and achieves lightweight deployment through the ADown-Conv module.

4.1. Experimental Results’ Discussion

Section 3 presented three groups of experiments: detection performance and generalization experiments, comparison experiments, and ablation experiments. We discuss each in turn below.

In the detection performance and generalization experiments, as illustrated in Figure 7 and Table 2, LCFANet-n precisely detects the three small-target pest species in trap images. On a test set of 189 images containing 2985 annotated instances, LCFANet-n achieves a precision of 92.4%, a recall of 91.7%, an mAP50 of 86.8%, and an mAP50-95 of 54.7%. On the Pest24 dataset, which contains 24 pest categories, LCFANet-n also shows robust detection performance, as shown in Figure 8. Evaluated on a test set of 5075 images with 38,666 instances, LCFANet-n achieves a precision of , a recall of , an mAP50 of , and an mAP50-95 of . The results on both the public and private datasets indicate that LCFANet-n performs well on small-sized, multi-category agricultural pest detection and generalizes robustly.

In the comparison experiments, we evaluated the models on both our private dataset and the public Pest24 dataset, and LCFANet generalized well across both. Figure 9 plots detection accuracy (mAP50 and mAP50-95, vertical axes) against model Params and GFLOPs (horizontal axes); LCFANet consistently outperforms the baseline models at every model scale. Table 3 details the params, GFLOPs, precision, recall, mAP50, and mAP50-95 of all evaluated models. The lightweight LCFANet-n variant is competitively efficient, with parameters ( higher than YOLOv9-t, the most parameter-efficient baseline) and GFLOPs ( higher than YOLOv5-n, the lowest-computation baseline), while improving mAP50 by and mAP50-95 by over YOLOv11-n, the best-performing lightweight baseline. LCFANet maintains this advantage across all model variants; in particular, LCFANet-m attains SOTA detection accuracy with an mAP50 of and an mAP50-95 of , effectively balancing computational demand and detection accuracy in small-pest detection scenarios.
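The claim that LCFANet outperforms the baselines at every model scale in Figure 9 can be framed as a Pareto-dominance question: a model lies on the accuracy–cost frontier if no other model is at least as cheap and at least as accurate while being strictly better in one of the two. The sketch below computes that frontier, along with the relative overhead used in the text (e.g., "higher than YOLOv9-t"). The model names and numbers are toy placeholders, not entries from Table 3.

```python
from typing import List, Tuple

def pareto_front(models: List[Tuple[str, float, float]]) -> List[str]:
    """Names of models not dominated in (cost: lower is better, accuracy: higher is better)."""
    front = []
    for name, cost, acc in models:
        dominated = any(
            c <= cost and a >= acc and (c < cost or a > acc)
            for other, c, a in models
            if other != name
        )
        if not dominated:
            front.append(name)
    return front

def relative_increase(value: float, reference: float) -> float:
    """Percentage by which `value` exceeds `reference`."""
    return 100.0 * (value - reference) / reference

# Toy (name, cost such as params in millions, accuracy such as mAP50) triples.
toy = [("A", 2.0, 0.80), ("B", 3.0, 0.78), ("C", 4.0, 0.85)]
print(pareto_front(toy))
print(f"{relative_increase(2.5, 2.0):.1f}%")
```

Here "B" is dominated by "A", which is both cheaper and more accurate. "C" remains on the frontier because no model beats it on accuracy.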

The comparison against 4 state-of-the-art lightweight pest detection models on the Pest24 dataset (Table 4) shows that LCFANet-n achieves the best lightweight design while outperforming all 4 models in detection accuracy. Specifically, it improves mAP50 by over LLA-RCNN, the strongest competitor on that metric, and mAP50-95 by over CSF-YOLO, confirming its balance of computational efficiency and accuracy for small-pest detection. Among the compared models, LCFANet-n achieves the highest precision, recall, and mAP, reaching SOTA performance in agricultural small-pest image detection.

Figure 10 shows selected detection examples of LCFANet-n and the comparison models, in which LCFANet-n performs best. For small targets, LCFANet-n detects them accurately, whereas the other models miss some. For targets with similar visual features, LCFANet-n correctly distinguishes the pest species, whereas the other models misclassify them. For partially occluded targets, LCFANet-n detects them accurately, whereas the other models fail to detect them. These results further confirm that LCFANet-n excels at small-pest image detection: it precisely classifies targets with similar visual features, such as shape, size, color, and texture, and it handles complex scenarios such as occlusion effectively.

The ablation results in Table 5 show that the DTFA-C3k2 module, CLHFP, and MSASF module each improve model accuracy, confirming their benefit in integrating shallow pixel-level features with deep semantic features and in improving feature utilization. CLHFP yields the largest gain, increasing mAP50 by and mAP50-95 by . However, these modules also increase the parameter count and computational cost. To offset this, we incorporated the lightweight ADown-Conv module, which reduced the parameters by approximately and the computational cost by approximately . Although lightweighting initially caused a slight decline in accuracy, adopting the MPDINIoU loss function further improved detection performance. These ablation studies confirm that the proposed modules improve both accuracy and model efficiency.
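The parameter savings from a dual-path downsampling module can be estimated by counting convolution weights. The internals of ADown-Conv are not restated in this section, so as a stand-in the sketch uses the ADown block from YOLOv9 [34]. That block applies 2×2 average pooling (stride 1), splits the channels in half, and sends one half through a 3×3 stride-2 convolution and the other through a 3×3 stride-2 max-pool followed by a 1×1 convolution, each branch producing half the output channels. The sketch compares this block with a plain 3×3 stride-2 convolution. Treating ADown-Conv as equivalent to YOLOv9's ADown is an assumption; bias and BatchNorm parameters are ignored, and the function names are hypothetical.

```python
def conv_weights(c_in, c_out, k):
    """Weights of a k x k convolution (bias and BatchNorm ignored)."""
    return c_in * c_out * k * k

def strided_conv_weights(c1, c2):
    """Plain 3x3 stride-2 downsampling convolution."""
    return conv_weights(c1, c2, 3)

def adown_weights(c1, c2):
    """YOLOv9-style ADown: input channels split in half; branch 1 is a 3x3
    stride-2 conv, branch 2 is a 3x3 stride-2 max-pool then a 1x1 conv.
    Pooling layers have no weights, so only the two convolutions count."""
    half_in, half_out = c1 // 2, c2 // 2
    return conv_weights(half_in, half_out, 3) + conv_weights(half_in, half_out, 1)

for c in (64, 128, 256):
    plain, adown = strided_conv_weights(c, c), adown_weights(c, c)
    print(c, plain, adown, f"{100 * (1 - adown / plain):.1f}% fewer weights")
```

Because each branch sees only half the input channels and produces half the output channels, the weight count drops from $9c_1c_2$ to $2.5c_1c_2$ (about 72% fewer) regardless of width. The whole-model reduction depends on how many layers are replaced.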

4.2. Limitations and Future Works

Although the proposed method achieves good accuracy with a lightweight design for small-target pest detection, some limitations remain. First, the growth rate and trend of pest populations are key indicators of infestation severity, but our current method only detects pest categories and counts in single images and cannot analyze population dynamics. In future work, we will study temporal patterns across pest trap images to build statistical models that, combined with ecological patterns, enable precise monitoring and management of pest outbreaks. Second, current small-target pest detection relies on supervised deep learning, which requires substantial training samples to reach high accuracy. In practical agricultural scenarios, however, some pest species occur infrequently, which makes sample collection difficult, yet monitoring these rare pests remains essential. We will therefore explore semi-supervised and unsupervised deep learning approaches for accurate detection of pest species with limited training samples.

5. Conclusions

Developing high-precision, computationally efficient models for detecting small agricultural pests remains a critical research focus. This paper presents LCFANet, a lightweight deep learning framework for small-target agricultural pest detection that addresses three key challenges: accurately identifying small targets under complex imaging conditions, precisely classifying visually similar species, and robustly detecting partially occluded specimens. Its key contributions are as follows:

- (1) The proposed DTFA-C3k2 module handles scale variations through the spatiotemporal fusion of multi-receptive-field features, preserving cross-scale fine-grained textures and structural details to enhance detection accuracy.

- (2) The CLHFP architecture systematically reorganizes multi-scale feature representations from the backbone network, overcoming challenges posed by target scale diversity and complex background interference.

- (3) The MSASF module implements learnable adaptive fusion of the four-scale features from CLHFP, significantly improving detection performance.

- (4) The ADown-Conv modules in both the feature extraction and fusion networks achieve model compression, reducing parameters by and computational costs by while maintaining detection accuracy.

Extensive experiments on both our proprietary dataset and the public Pest24 benchmark demonstrate LCFANet’s superior performance. Compared with existing pest detectors, our model achieves state-of-the-art results, with improvements of and in mAP50 and mAP50-95, respectively, while maintaining an efficient architecture ( parameters, GFLOPs). These advancements make LCFANet a strong candidate for real-time agricultural pest monitoring applications.

Author Contributions

Conceptualization, S.H. and Y.T. (Yunong Tian); methodology, S.H. and Y.T. (Yunong Tian); validation, Z.L.; investigation, Y.T. (Yong Tan); resources, S.H. and Y.T. (Yunong Tian); data curation, Z.L.; writing—original draft preparation, S.H. and Y.T. (Yong Tan); writing—review and editing, Z.L.; supervision, Z.L. and S.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded in part by the National Natural Science Foundation of China (Grant No. 62206275), the Science and Technology Research Program of Chongqing Municipal Education Commission (Grant Nos. KJZD-K202401406, KJQN202101446, KJQN20240143), and the Natural Science Foundation of Chongqing (Grant No. cstc2021jcyj-msxmX0510).

Data Availability Statement

The data used in this study are available upon request from the corresponding author via email.

Acknowledgments

The authors acknowledge the Chinese Academy of Sciences for data support.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Chen, Z.; Luo, Y.; Wang, L.; Sun, D.; Wang, Y.; Zhou, J.; Luo, B.; Liu, H.; Yan, R.; Wang, L. Advancements in Life Tables Applied to Integrated Pest Management with an Emphasis on Two-Sex Life Tables. Insects 2025, 16, 261.

- Guo, B.; Wang, J.; Guo, M.; Chen, M.; Chen, Y.; Miao, Y. Overview of Pest Detection and Recognition Algorithms. Electronics 2024, 13, 3008.

- Wang, S.; Xu, D.; Liang, H.; Bai, Y.; Li, X.; Zhou, J.; Su, C.; Wei, W. Advances in Deep Learning Applications for Plant Disease and Pest Detection: A Review. Remote Sens. 2025, 17, 698.

- Paymode, A.S.; Malode, V.B. Transfer Learning for Multi-Crop Leaf Disease Image Classification Using Convolutional Neural Network VGG. Artif. Intell. Agric. 2022, 6, 23–33.

- Khan, D.; Waqas, M.; Tahir, M.; Islam, S.U.; Amin, M.; Ishtiaq, A.; Jan, L. Revolutionizing Real-Time Object Detection: YOLO and MobileNet SSD Integration. J. Comput. Biomed. Inform. 2023, 6, 41–49.

- Hussain, M.; Khanam, R. In-Depth Review of YOLOv1 to YOLOv10 Variants for Enhanced Photovoltaic Defect Detection. Solar 2024, 4, 351–386.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Badgujar, C.M.; Poulose, A.; Gan, H. Agricultural object detection with You Only Look Once (YOLO) Algorithm: A bibliometric and systematic literature review. Comput. Electron. Agric. 2024, 223, 109090.

- Tian, Y.; Wang, S.; Li, E.; Yang, G.; Liang, Z.; Tan, M. MD-YOLO: Multi-scale Dense YOLO for small target pest detection. Comput. Electron. Agric. 2023, 213, 108233.

- Xu, W.; Xu, T.; Thomasson, J.A.; Chen, W.; Karthikeyan, R.; Tian, G.; Shi, Y.; Ji, C.; Su, Q. A lightweight SSV2-YOLO based model for detection of sugarcane aphids in unstructured natural environments. Comput. Electron. Agric. 2023, 211, 107961.

- Wang, C.; Wang, L.; Ma, G.; Zhu, L. CSF-YOLO: A Lightweight Model for Detecting Grape Leafhopper Damage Levels. Agronomy 2025, 15, 741.

- Jocher, G.; Chaurasia, A.; Qiu, J. Ultralytics YOLOv8. 2023. Available online: https://github.com/ultralytics/ultralytics (accessed on 24 October 2024).

- Huang, Y.; Liu, Z.; Zhao, H.; Tang, C.; Liu, B.; Li, Z.; Wan, F.; Qian, W.; Qiao, X. YOLO-YSTs: An Improved YOLOv10n-Based Method for Real-Time Field Pest Detection. Agronomy 2025, 15, 575.

- Wang, A.; Chen, H.; Liu, L. YOLOv10: Real-time end-to-end object detection. arXiv 2024, arXiv:2405.14458.

- Feng, H.; Chen, X.; Duan, Z. LCDDN-YOLO: Lightweight Cotton Disease Detection in Natural Environment, Based on Improved YOLOv8. Agriculture 2025, 15, 421.

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. arXiv 2015, arXiv:1506.01497.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Mu, J.; Sun, L.; Ma, B.; Liu, R.; Liu, S.; Hu, X.; Zhang, H.; Wang, J. TFEMRNet: A Two-Stage Multi-Feature Fusion Model for Efficient Small Pest Detection on Edge Platforms. AgriEngineering 2024, 6, 4688–4703.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017.

- Li, K.-R.; Duan, L.-J.; Deng, Y.-J.; Liu, J.-L.; Long, C.-F.; Zhu, X.-H. Pest Detection Based on Lightweight Locality-Aware Faster R-CNN. Agronomy 2024, 14, 2303.

- Howard, A.; Sandler, M.; Chu, G.; Chen, L.C.; Chen, B.; Tan, M.; Wang, W.; Zhu, Y.; Pang, R.; Vasudevan, V.; et al. Searching for MobileNetV3. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1314–1324.

- Guan, B.; Wu, Y.; Zhu, J.; Kong, J.; Dong, W. GC-Faster RCNN: The Object Detection Algorithm for Agricultural Pests Based on Improved Hybrid Attention Mechanism. Plants 2025, 14, 1106.

- Koay, H.V.; Chuah, J.H.; Chow, C.; Chang, Y. Detecting and recognizing driver distraction through various data modality using machine learning: A review, recent advances, simplified framework and open challenges (2014–2021). Eng. Appl. Artif. Intell. 2022, 115, 105309.

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end object detection with transformers. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020.

- Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable DETR: Deformable Transformers for End-to-End Object Detection. arXiv 2021, arXiv:2010.04159.

- Liu, H.; Zhan, Y.; Sun, J.; Mao, Q.; Wu, T. A transformer-based model with feature compensation and local information enhancement for end-to-end pest detection. Comput. Electron. Agric. 2017, 138, 200–209.

- Zhang, H.; Gong, Z.; Hu, C.; Chen, C.; Wang, Z.; Yu, B.; Suo, J.; Jiang, C.; Lv, C. A Transformer-Based Detection Network for Precision Cistanche Pest and Disease Management in Smart Agriculture. Plants 2025, 14, 499.

- Zhang, Y.; Lv, C. TinySegformer: A lightweight visual segmentation model for real-time agricultural pest detection. Comput. Electron. Agric. 2024, 218, 180740.

- Bottou, L.; Curtis, F.E.; Fang, L.; Nocedal, J. Optimization Methods for Large-Scale Machine Learning. SIAM Rev. 2018, 60, 223–311.

- Wang, Q.; Zhang, S.; Dong, S.; Zhang, G.; Yang, J.; Li, R.; Wang, H. Pest24: A large-scale very small object data set of agricultural pests for multi-target detection. Comput. Electron. Agric. 2020, 175, 105585.

- Khanam, R.; Hussain, M. YOLOv11: An Overview of the Key Architectural Enhancements. arXiv 2024, arXiv:2410.17725.

- Zhang, Z.; Bao, L.; Xiang, S.; Xie, G.; Gao, R. B2CNet: A Progressive Change Boundary-to-Center Refinement Network for Multitemporal Remote Sensing Images Change Detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 11322–11338.

- Wang, C.Y.; Yeh, I.H.; Liao, H.Y.M. YOLOv9: Learning what you want to learn using programmable gradient information. arXiv 2024, arXiv:2402.13616.

- Yang, L.; Zhang, R.Y.; Li, L.; Xie, X. SimAM: A Simple, Parameter-Free Attention Module for Convolutional Neural Networks. In Proceedings of the International Conference on Machine Learning, Virtual, 18–24 July 2021.

- Liu, S.; Huang, D.; Wang, Y. Learning spatial fusion for single-shot object detection. arXiv 2019, arXiv:1911.09516.

- Zhang, H.; Xu, C.; Zhang, S. Inner-IoU: More Effective Intersection over Union Loss with Auxiliary Bounding Box. arXiv 2023, arXiv:2311.02877.

- Ma, S.; Xu, Y. MPDIoU: A Loss for Efficient and Accurate Bounding Box Regression. arXiv 2023, arXiv:2307.07662.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. arXiv 2015, arXiv:1512.02325.

- Lv, W.; Zhao, Y.; Xu, S.; Wei, J.; Wang, G.; Cui, C.; Du, Y.; Dang, Q.; Liu, Y. DETRs Beat YOLOs on Real-time Object Detection. arXiv 2023, arXiv:2304.08069.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).