An Automated Image Segmentation, Annotation, and Training Framework of Plant Leaves by Joining the SAM and the YOLOv8 Models

Abstract

1. Introduction

2. Related Work

2.1. Applications of Visual Object Detection and Image Segmentation

2.2. Visual Object Detection Models

2.3. Image Segmentation Methods

2.4. Visual Object Annotation and Its Efficiency and Cost

3. Materials and Methods

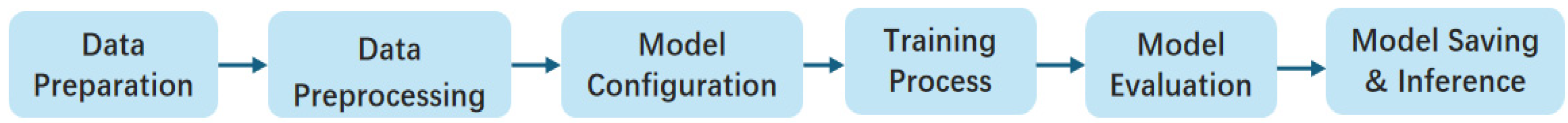

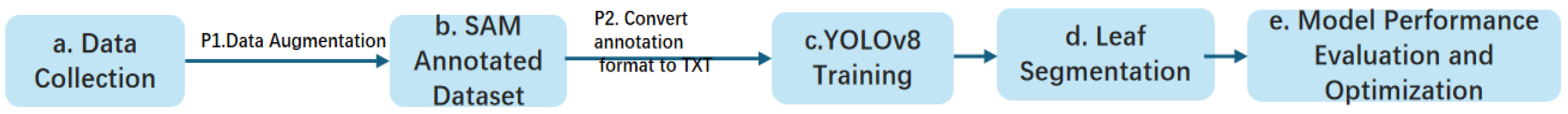

3.1. Framework Overview

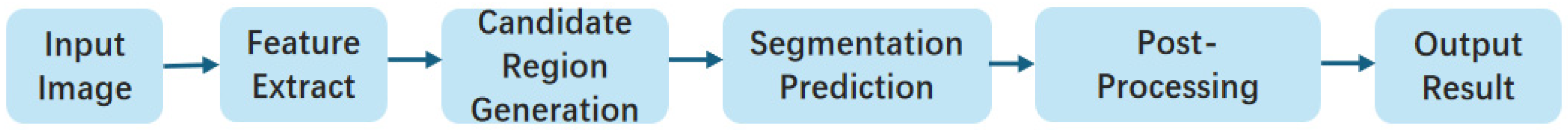

3.2. Model Integration

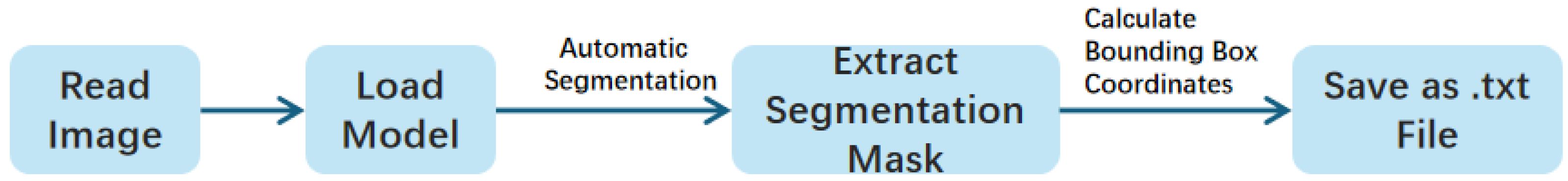

Automatic Conversion from SAM’s Output Labels to the VOC Format
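The heading above names a conversion from SAM's segmentation output to Pascal VOC annotations. The following is a minimal sketch of that idea, not the authors' implementation. It makes three assumptions:

- Each SAM mask is a 2-D boolean grid, such as the `segmentation` array that `SamAutomaticMaskGenerator.generate` in the `segment_anything` package returns for each mask.
- Every mask receives one hypothetical class name, `leaf`.
- Boxes are written as 0-based, inclusive pixel indices.

The helper names `mask_to_voc_box` and `build_voc_annotation` are hypothetical. Only the standard library is used.

```python
import xml.etree.ElementTree as ET


def mask_to_voc_box(mask):
    """Return (xmin, ymin, xmax, ymax) of the True pixels in a 2-D boolean mask.

    Coordinates are 0-based, inclusive pixel indices; returns None for an empty mask.
    """
    rows = [r for r, row in enumerate(mask) if any(row)]
    if not rows:
        return None
    cols = [c for row in mask for c, v in enumerate(row) if v]
    return min(cols), min(rows), max(cols), max(rows)


def build_voc_annotation(filename, width, height, masks, label="leaf", depth=3):
    """Build a Pascal VOC <annotation> element with one <object> per non-empty mask."""
    ann = ET.Element("annotation")
    ET.SubElement(ann, "filename").text = filename
    size = ET.SubElement(ann, "size")
    for tag, value in (("width", width), ("height", height), ("depth", depth)):
        ET.SubElement(size, tag).text = str(value)
    for mask in masks:
        box = mask_to_voc_box(mask)
        if box is None:
            continue  # skip masks with no foreground pixels
        obj = ET.SubElement(ann, "object")
        ET.SubElement(obj, "name").text = label  # hypothetical single class name
        ET.SubElement(obj, "difficult").text = "0"
        bndbox = ET.SubElement(obj, "bndbox")
        for tag, value in zip(("xmin", "ymin", "xmax", "ymax"), box):
            ET.SubElement(bndbox, tag).text = str(value)
    return ann


# Toy 4x6 mask whose leaf pixels occupy rows 1-2 and columns 2-4.
toy_mask = [[False] * 6 for _ in range(4)]
for r in (1, 2):
    for c in (2, 3, 4):
        toy_mask[r][c] = True

xml_text = ET.tostring(
    build_voc_annotation("leaf_001.jpg", 6, 4, [toy_mask]), encoding="unicode"
)
print(xml_text)
```

Each record from SAM's automatic mask generator also carries a `bbox` in XYWH format. That box could be converted to VOC corner form as `(x, y, x + w, y + h)` instead of rescanning the mask. Shift the coordinates by 1 if a downstream tool expects the original 1-based VOC convention.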

3.3. Automatic Learning Using the YOLOv8 Framework and the Converted Labels
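Training YOLOv8 on VOC annotations requires a further conversion. Ultralytics YOLO detection labels are plain-text files with one `class x_center y_center width height` line per object, and all four values are normalized by the image size. The following is a minimal sketch of converting one VOC XML annotation into such lines. It assumes the caller supplies the class-name-to-index mapping and that `xmax`/`ymax` are treated as box edges. The function names are hypothetical, and the paper may perform this step differently.

```python
import xml.etree.ElementTree as ET


def voc_box_to_yolo(box, img_w, img_h, class_id):
    """Convert a VOC (xmin, ymin, xmax, ymax) pixel box to one YOLO label line."""
    xmin, ymin, xmax, ymax = box
    x_c = (xmin + xmax) / 2 / img_w  # box centre, normalized by image width
    y_c = (ymin + ymax) / 2 / img_h  # box centre, normalized by image height
    w = (xmax - xmin) / img_w
    h = (ymax - ymin) / img_h
    return f"{class_id} {x_c:.6f} {y_c:.6f} {w:.6f} {h:.6f}"


def voc_xml_to_yolo_lines(xml_text, class_ids):
    """Parse a VOC annotation string and return one YOLO line per <object>."""
    root = ET.fromstring(xml_text)
    img_w = float(root.find("size/width").text)
    img_h = float(root.find("size/height").text)
    lines = []
    for obj in root.iter("object"):
        class_id = class_ids[obj.find("name").text]
        bndbox = obj.find("bndbox")
        box = [float(bndbox.find(tag).text) for tag in ("xmin", "ymin", "xmax", "ymax")]
        lines.append(voc_box_to_yolo(box, img_w, img_h, class_id))
    return lines


sample_xml = (
    "<annotation><size><width>640</width><height>480</height><depth>3</depth></size>"
    "<object><name>leaf</name><bndbox><xmin>100</xmin><ymin>50</ymin>"
    "<xmax>300</xmax><ymax>250</ymax></bndbox></object></annotation>"
)
yolo_lines = voc_xml_to_yolo_lines(sample_xml, {"leaf": 0})
print("\n".join(yolo_lines))
```

Ultralytics expects these lines as one `.txt` file per image, placed in a `labels/` directory that mirrors `images/`. Training can then be launched through the Ultralytics Python API, for example `YOLO("yolov8n.pt").train(data="leaves.yaml", epochs=100, imgsz=640)`. Here `leaves.yaml` is a hypothetical dataset configuration, and the hyperparameters are placeholders rather than the paper's settings.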

3.4. Performance Evaluation Indicators and Methods
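The results tables report precision, recall, F1 score, MSE, RMSE, and PSNR. Their standard definitions are given below for reference. This section does not state whether the paper counts $TP$, $FP$, and $FN$ per object or per pixel, or which peak value $\mathrm{MAX}$ it uses for PSNR, so those choices are assumptions.

- $TP$, $FP$, and $FN$ are true-positive, false-positive, and false-negative counts.
- $y_i$ and $\hat{y}_i$ are the reference and predicted values of pixel $i$ over $N$ pixels.
- $\mathrm{MAX}$ is the largest possible pixel value: 1 for normalized images, 255 for 8-bit images.

```latex
P = \frac{TP}{TP + FP}, \qquad
R = \frac{TP}{TP + FN}, \qquad
F_1 = \frac{2PR}{P + R}

\mathrm{MSE} = \frac{1}{N}\sum_{i=1}^{N}\left(y_i - \hat{y}_i\right)^2, \qquad
\mathrm{RMSE} = \sqrt{\mathrm{MSE}}, \qquad
\mathrm{PSNR} = 10\log_{10}\frac{\mathrm{MAX}^2}{\mathrm{MSE}}
```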

4. Results

4.1. Experimental Datasets

4.2. Computing Environments

4.3. Segmentation Results of the SAM Model on Plant Leaf Scene Images

4.4. Performance of Automatic Conversion from Segmentation Results to Annotated Data

4.5. Training Performance of the YOLOv8 Model

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Segmentation Model | Average GPU Single-Image Segmentation Time (s) | Average CPU Single-Image Segmentation Time (s) | GPU Acceleration Ratio | CPU Acceleration Ratio |
|---|---|---|---|---|
| SAM | 96.7 | 98.4 | | |
| SAM + YOLOv8 | 0.03 | 0.3 | 3223.33 | 328 |
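The acceleration ratios in this table match the SAM-only time divided by the SAM + YOLOv8 time on the same device. That reading is inferred from the numbers rather than stated in the table. The following is a short arithmetic check:

```python
# Average single-image segmentation times in seconds, copied from the table above.
sam_only = {"GPU": 96.7, "CPU": 98.4}
sam_plus_yolov8 = {"GPU": 0.03, "CPU": 0.3}

# Acceleration ratio: SAM-only time divided by SAM + YOLOv8 time on the same device.
ratios = {
    device: round(sam_only[device] / sam_plus_yolov8[device], 2) for device in sam_only
}
print(ratios)
```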

| Plant Leaf Type | Precision (%) | Recall (%) | F1 Score (%) | MSE | RMSE | PSNR (dB) |
|---|---|---|---|---|---|---|
| Wool | 87 | 89 | 87.9 | 0.003 | 0.055 | 38.2 |
| Mulberry leaf peony | 86 | 84 | 84.9 | 0.005 | 0.071 | 35.8 |
| Lilac | 87 | 90 | 88.5 | 0.002 | 0.045 | 40.1 |
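Two columns of this table derive from others. The F1 score is the harmonic mean of precision and recall, and RMSE is the square root of MSE. The following is a minimal check on the lilac row, assuming these standard definitions:

```python
import math


def f1_score(precision, recall):
    """Harmonic mean of precision and recall; the output uses the same scale as the inputs."""
    return 2 * precision * recall / (precision + recall)


# Lilac row of the table: precision 87 %, recall 90 %, MSE 0.002.
lilac_f1 = f1_score(87, 90)
lilac_rmse = math.sqrt(0.002)
print(f"F1 = {lilac_f1:.1f} %, RMSE = {lilac_rmse:.3f}")
```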

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhao, L.; Olivier, K.; Chen, L. An Automated Image Segmentation, Annotation, and Training Framework of Plant Leaves by Joining the SAM and the YOLOv8 Models. Agronomy 2025, 15, 1081. https://doi.org/10.3390/agronomy15051081

Chicago/Turabian Style: Zhao, Lumiao, Kubwimana Olivier, and Liping Chen. 2025. "An Automated Image Segmentation, Annotation, and Training Framework of Plant Leaves by Joining the SAM and the YOLOv8 Models" Agronomy 15, no. 5: 1081. https://doi.org/10.3390/agronomy15051081