Abstract

Current image registration models suffer from low feature point matching accuracy, high memory consumption, and high computational complexity in heterogeneous image registration, especially in complex environments. In orchards, large differences in lighting and occlusion by leaves can lead to inaccurate feature extraction during heterogeneous image registration. To address these issues, this study proposes the AD-ResSug model for heterogeneous image registration. First, a VGG16 network was included as the encoder in the feature point extraction module, and positional encoding was embedded into the network so that the model could better capture the spatial relationships between feature points. Residual structures were added to the feature point encoder to mitigate the vanishing gradient problem and to make the architecture more flexible and scalable. Then, the Sinkhorn AutoDiff algorithm was used to iteratively solve the optimal transport problem and obtain the optimal matching between feature points. Finally, network pruning and compression were applied to reduce the number of parameters and the computation cost while maintaining the model’s performance. The AD-ResSug model was evaluated using indicators such as peak signal-to-noise ratio and root mean square error, as well as registration efficiency. Experimental results and quantitative comparisons on color and ToF images captured with heterogeneous cameras in natural apple orchards show that the proposed method achieves robust and efficient registration.

1. Introduction

Heterogeneous image registration aligns images captured with different imaging devices or at different times so that information from these images can be used together. Because the spatial resolution of single-sensor, single-band imaging is limited, single-sensor images have limited capacity for target interpretation and cannot represent the target object accurately and comprehensively. Heterogeneous image registration technology provides a way to address these problems. Registration between Time-of-Flight (ToF) and color cameras often involves significant geometric differences. Different sensors follow different imaging principles, because image data are collected by a wide variety of methods and means. Similar objects therefore usually show markedly different grayscale and texture characteristics [1], and there may be a high degree of complementarity and redundancy between different images. Heterogeneous image registration and fusion techniques can thus be used to obtain richer and clearer target information from the same scene. Accurate registration is the prerequisite: once heterogeneous images are registered, the advantages of the various sensors can be combined to provide multi-dimensional imaging results for the same scene, improving target information perception and the representational capacity of fused images [2].

1.1. Related Works

The literature describes two kinds of methods traditionally used for image registration. The first is based on template matching and includes grayscale or region-based image registration methods. The second is feature-matching-based image registration [3].

Representative template-matching-based image registration methods include pixel comparison-based and filter-based image registration algorithms. These methods significantly improve adaptability to image-matching noise and further improve accuracy. However, their main problem is that they cannot meet the speed and accuracy requirements simultaneously.

Image registration methods based on feature point matching can be divided into manual extraction and deep learning extraction. Representative manual feature matching algorithms include Scale Invariant Feature Transform (SIFT) [4], Speeded-Up Robust Features (SURF) [5], and Oriented FAST and Rotated BRIEF (ORB) [6]. These methods handle rotation and scale consistency in image matching well but do not provide effective solutions for heterogeneous images with noticeable noise; moreover, they have high computational complexity and slow running speed. Deep learning-based registration methods can be divided into single-link network models and end-to-end systems according to the structure of the network [7]. Commonly used networks include Siamese neural networks [8], graph attention networks [9], generative adversarial networks [10], and graph neural networks [11]. These models have demonstrated good registration efficiency and accuracy by learning image features together with the corresponding similarity measures. However, they are computationally complex, and their generalization ability needs to be improved.

Wang et al. [12] proposed MatchFormer, which uses an interleaved attention mechanism, for low-texture scenes. Xie et al. [13] proposed DeepMatcher to address difficulties in local feature matching, including insufficient matching accuracy between images with significant changes in appearance and limited robustness. Sarlin et al. [14] proposed the SuperGlue model for complex environments featuring significant changes in viewpoint and lighting. However, its training process is long, and it is hard to ensure robustness and uniqueness under challenges such as weak texture or changes in viewpoint or lighting. Lindenberger et al. [15] proposed LightGlue, which introduces rotary encoding as a form of positional encoding to capture the relative positions of feature points and combines it with an adaptive inference mechanism and an early point dropout strategy, significantly improving inference speed while maintaining high accuracy. Viniavskyi et al. [16] proposed OpenGlue, an open-source image-matching model based on graph neural networks, adapting the graph neural network design to extend registration to large-scale datasets and complex scenes.

The above algorithms and models are commonly used to process homologous or unimodal images, but their registration performance when used with heterogeneous or multimodal images has been poor. With the significant development of feature matching methods, it should become possible to achieve the automatic and effective extraction of feature differences between image pairs, further improving matching accuracy and registration efficiency, which will be the focus of future research.

1.2. Highlights

In real apple orchards, heterogeneous image registration is affected by scene-specific conditions, such as the differing imaging conditions of Time-of-Flight (ToF) and color cameras. The AD-ResSug model is proposed to address heterogeneous image registration in apple orchards. The specific contributions are as follows:

- (1) In the feature point extraction module, we adopt the Visual Geometry Group (VGG) network to process the input image. By stacking multiple convolutional and pooling layers, it gradually extracts features of the image at different levels.

- (2) A residual module is added to the feature point encoder, which allows the Multi-Layer Perceptron (MLP) to fit complex function mappings more effectively. Position encoding is embedded into the network to help the model better understand the spatial relationships between feature points.

- (3) We use the Sinkhorn AutoDiff algorithm for adaptive inference and to solve the optimal transport problem. Model pruning and network parameter compression are applied to the feature point matching network module to reduce computational complexity.

2. Materials and Methods

2.1. Materials and Datasets

This experiment used two publicly available apple datasets: the papple dataset [17] and the kfuji dataset [18]. To ensure the richness of the image dataset and facilitate model training, operations such as cropping were performed on the obtained images. The image dataset was divided in a 6:2:2 ratio into the training, validation, and testing sets required for the experiment, and the data were augmented through operations such as mirroring and rotation to avoid overfitting and enrich the dataset, thereby helping to improve the model’s generalization performance. In addition, to ensure that the proposed AD-ResSug model is applicable to practical work, we used three self-built datasets for the application task test. These datasets were collected in three apple growth periods: the early stage of rapid fruit enlargement (mid-June), the fruit swelling period (late July), and the maturation period (early September). They were captured with a heterogeneous camera in a natural apple orchard in Gansu, China [19], and consist of ToF and color images. The heterogeneous image datasets used in this experiment are listed in Table 1.

Table 1.

Heterogeneous image dataset.
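The 6:2:2 split and the mirroring and rotation augmentation described in Section 2.1 can be sketched as follows. This is a minimal illustration rather than the authors' pipeline: the function names, the random seed, and the exact set of transforms are assumptions.

```python
import numpy as np

def split_indices(n_samples, ratios=(0.6, 0.2, 0.2), seed=0):
    """Shuffle sample indices and split them into train/validation/test parts."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_samples)
    n_train = int(round(ratios[0] * n_samples))
    n_val = int(round(ratios[1] * n_samples))
    return order[:n_train], order[n_train:n_train + n_val], order[n_train + n_val:]

def augment(image):
    """Return the image plus mirrored and rotated copies (assumed transform set)."""
    return [
        image,
        np.fliplr(image),      # horizontal mirror
        np.flipud(image),      # vertical mirror
        np.rot90(image, k=1),  # 90 degree rotation
        np.rot90(image, k=2),  # 180 degree rotation
    ]

train_idx, val_idx, test_idx = split_indices(100)
print(len(train_idx), len(val_idx), len(test_idx))  # 60 20 20
```

For registration data, the same transform would need to be applied to both images of a color/ToF pair so that their correspondence is preserved.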

2.2. Model

2.2.1. Overall Framework of the Model

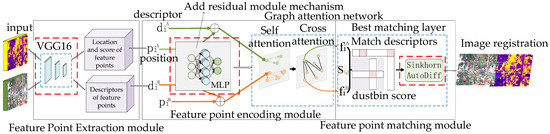

Based on the original SuperGlue model, an orchard heterogeneous image registration model named AD-ResSug is proposed, integrating an automatic differentiation algorithm and a residual mechanism. The model framework includes three functional modules: feature point extraction, feature point encoding, and feature point matching. The structure of the AD-ResSug model is shown in Figure 1.

Figure 1.

AD-ResSug model structure.

The feature point extraction module obtains feature points and generates their corresponding descriptors. VGG16 [20] is added as a shared encoder to capture image feature points and reduce image size. The feature point encoding module processes the position encoding of visual descriptors and introduces an MLP network with a residual structure [21]. Its purpose is to lift low-dimensional features to a higher dimension while effectively integrating visual appearance information with feature point position information. The final feature point matching module predicts matching scores through the matching layer, which integrates an automatic differentiation algorithm [22], to obtain the best match. Figure 2 shows the AD-ResSug framework.

Figure 2.

AD-ResSug model framework.

2.2.2. Modules

- (1) Feature point extraction module

The key to feature point extraction lies in higher-precision feature point detection, which can accurately detect more feature points and extract their information to obtain richer positional information [23]. Its principle is to extract feature points and corresponding descriptors from images through self-supervised deep learning networks [24]. The core of the algorithm is to use deep learning to extract corner points from images as candidate feature points and then output stable feature points and their descriptors [25]. Because corner points are usually located in high-contrast areas such as edge intersections, feature points selected from these areas remain more stable under different lighting conditions and viewing angles and carry rich local information, providing better feature description capabilities. This process avoids traditional manual annotation and is trained in a self-supervised manner [26].

This study uses VGG16 [20] to process the extracted feature points. Its primary function is to obtain critical information, output the locations and descriptors, and provide effective input for subsequent image-matching tasks.

Figure 3 gives the details of the feature point detection network.

Figure 3.

Feature point detection network.
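To illustrate how stacked convolutional and pooling layers extract features while reducing image size, the following is a minimal NumPy sketch of a VGG-style block (3x3 convolutions with ReLU followed by 2x2 max pooling). The channel widths, the random weights, and the toy input are assumptions for illustration only; they are not the VGG16 configuration or trained weights used in this study.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def conv3x3_relu(x, weight, bias):
    """3x3 convolution (zero padding 1, stride 1) followed by ReLU.

    x: (C_in, H, W), weight: (C_out, C_in, 3, 3), bias: (C_out,)
    """
    padded = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    # windows has shape (C_in, H, W, 3, 3): one 3x3 patch per output pixel
    windows = sliding_window_view(padded, (3, 3), axis=(1, 2))
    out = np.einsum("chwij,ocij->ohw", windows, weight) + bias[:, None, None]
    return np.maximum(out, 0.0)

def max_pool2x2(x):
    """2x2 max pooling with stride 2 (H and W are assumed to be even)."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).max(axis=(2, 4))

def vgg_block(x, params):
    """Apply each (weight, bias) convolution in turn, then halve the resolution."""
    for weight, bias in params:
        x = conv3x3_relu(x, weight, bias)
    return max_pool2x2(x)

rng = np.random.default_rng(0)
image = rng.random((1, 32, 32))  # single-channel toy input
block1 = [(rng.normal(0, 0.1, (8, 1, 3, 3)), np.zeros(8)),
          (rng.normal(0, 0.1, (8, 8, 3, 3)), np.zeros(8))]
block2 = [(rng.normal(0, 0.1, (16, 8, 3, 3)), np.zeros(16)),
          (rng.normal(0, 0.1, (16, 16, 3, 3)), np.zeros(16))]
feat = vgg_block(vgg_block(image, block1), block2)
print(feat.shape)  # (16, 8, 8)
```

Each block doubles the number of channels in this toy setup and halves the spatial resolution, which is the general pattern by which deeper layers capture higher-level features over larger image regions.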

- (2)

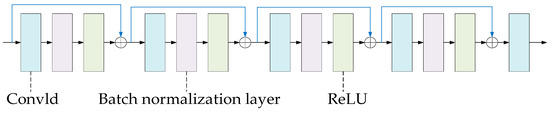

- Feature point Encoding Module

The feature point encoding module receives a set of key point positions and the corresponding descriptors [27]. This study uses an MLP with an added residual structure as the encoder for feature points. The residual structure mitigates vanishing gradients and improves the accuracy of feature point detection. The residual MLP encodes the position information into a high-dimensional vector, the position encoding, which contains the spatial position information of the key points. Embedding the key point positions into this high-dimensional space enables the model to capture the spatial relationships between key points more efficiently.

Combining the position information of feature points with their descriptors yields more discriminative feature representations, so the positions of the feature points are fused with the descriptors here. The MLP transforms low-dimensional features into a high-dimensional space through an embedding layer, which fuses the visual appearance of the image with the feature point position information. This encoding enables the subsequent attention mechanisms to jointly consider the similarity of features in appearance and location.

The feature point encoder is a one-dimensional convolutional neural network. To establish a clear order between the layers of the network, the Sequential class is used to stack multiple neural network layers in sequence. Sequential is a container class with a linear structure: data passed to Sequential go through each layer in the order in which the layers were added, and the output of the last layer is the model’s output. The feature point encoder is defined as a neural network model with five convolutional layers. The parameter settings for each layer of the feature point encoder convolutional network are listed in Table 2.

Table 2.

Parameters of each convolutional layer of the feature point encoder.
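The idea of a Sequential container stacking five one-dimensional convolutional layers can be sketched in plain NumPy as below. The class names, the kernel size of 1, and the channel widths (3, 32, 64, 128, 256, 256) are assumptions chosen for illustration; the actual settings are those listed in Table 2.

```python
import numpy as np

class PointwiseConv1d:
    """1D convolution with kernel size 1: the same linear map for every keypoint."""
    def __init__(self, in_ch, out_ch, rng):
        self.weight = rng.normal(0.0, np.sqrt(2.0 / in_ch), (out_ch, in_ch))
        self.bias = np.zeros((out_ch, 1))

    def __call__(self, x):  # x: (in_ch, num_keypoints)
        return self.weight @ x + self.bias

class ReLU:
    def __call__(self, x):
        return np.maximum(x, 0.0)

class Sequential:
    """Linear container: data pass through the layers in the order they were added."""
    def __init__(self, *layers):
        self.layers = list(layers)

    def __call__(self, x):
        for layer in self.layers:
            x = layer(x)
        return x

def build_keypoint_encoder(channels, rng):
    """Stack len(channels) - 1 convolutions with ReLU between them."""
    layers = []
    for i in range(len(channels) - 1):
        layers.append(PointwiseConv1d(channels[i], channels[i + 1], rng))
        if i < len(channels) - 2:  # no activation after the last layer
            layers.append(ReLU())
    return Sequential(*layers)

rng = np.random.default_rng(0)
# Assumed widths: the input is (x, y, score) per keypoint; five convolutions in total.
encoder = build_keypoint_encoder([3, 32, 64, 128, 256, 256], rng)
kpts_with_scores = rng.random((3, 10))  # 10 keypoints
print(encoder(kpts_with_scores).shape)  # (256, 10)
```

Because every layer acts on each keypoint independently, reordering the keypoints simply reorders the encoder output, which is a useful property for unordered point sets.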

The primary purpose of adding residual structures to neural networks is to enable the network to learn features better. Based on this method, we redesigned the feature point encoder in the network model to enable the network to pass information layer by layer through learning residual mappings, thereby simplifying the learning process. This study improves the MLP encoder structure based on the SuperGlue model, using four residual blocks to provide flexible network design choices. The number and size of hidden layers can be adjusted according to actual needs to adapt to different tasks and datasets. MLP can capture complex patterns in input data as a powerful feature extractor. Adding residual structures can further enrich feature representations and help the network converge faster during training. A structure diagram of the feature point encoder with residual structure added is shown in Figure 4.

Figure 4.

Structure diagram of feature point encoder with added residual structure.
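A residual block computes y = x + F(x), so the identity path lets information and gradients bypass the learned mapping F. The sketch below chains four such blocks, matching the number stated above, and then fuses the resulting position encoding with the descriptors by addition. The layer widths, the additive fusion, and all names (`residual_block`, `encode_keypoints`, `lift`) are assumptions for illustration, not the exact design in Figure 4.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def residual_block(x, w1, b1, w2, b2):
    """y = x + F(x), where F is a two-layer pointwise MLP. x: (channels, num_keypoints)."""
    hidden = relu(w1 @ x + b1)
    return x + (w2 @ hidden + b2)

def encode_keypoints(desc, pos_embedding, blocks):
    """Refine the position embedding with residual blocks, then add it to the descriptors."""
    x = pos_embedding
    for params in blocks:
        x = residual_block(x, *params)
    return desc + x

rng = np.random.default_rng(0)
dim, hidden, n = 16, 32, 5
lift = rng.normal(0, 0.5, (dim, 3))  # lifts (x, y, score) to `dim` channels
blocks = [(rng.normal(0, 0.1, (hidden, dim)), np.zeros((hidden, 1)),
           rng.normal(0, 0.1, (dim, hidden)), np.zeros((dim, 1)))
          for _ in range(4)]          # four residual blocks
kpts = rng.random((3, n))
desc = rng.random((dim, n))
fused = encode_keypoints(desc, lift @ kpts, blocks)
print(fused.shape)  # (16, 5)
```

If all weights inside F are zero, each block reduces to the identity, which is why residual networks can start from a simple mapping and learn only the correction to it.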

Descriptors are updated by using the MLP to compute the increments delta0 and delta1 for the encoded descriptors desc0 and desc1. The descriptors are first passed through multiple fully connected layers of the MLP to extract their hidden representations. A dot product operation on desc0 and desc1, followed by the ReLU function, gives the attention weights used for self-attention or cross-attention between descriptors. The increment delta0 or delta1 is then obtained by multiplying the attention weights with the descriptors and summing the results. Finally, the increment delta0 or delta1 is added to the original descriptor desc0 or desc1 to update it. A flowchart of incremental computation using a neural network is shown in Figure 5.

Figure 5.

Neural network calculation incremental flowchart.

In Figure 5, ① represents self-attention and ② represents cross-attention.

The flowchart describes the descriptor update algorithm, which captures important change information between descriptors by calculating the increment between them.
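The descriptor update described above (dot product, ReLU, weighted sum, then addition of the increment) can be sketched as follows. The learned MLP projections are omitted, and the row normalization of the weights is an assumption added to keep the increments bounded; a softmax normalization is the more common choice in attention layers. The function names are hypothetical.

```python
import numpy as np

def attention_delta(query_desc, source_desc, eps=1e-8):
    """Increment for each query descriptor.

    query_desc: (D, M), source_desc: (D, N) -> delta: (D, M)
    """
    scores = query_desc.T @ source_desc   # (M, N) dot products
    weights = np.maximum(scores, 0.0)     # ReLU, as named in the text
    # Assumption: normalize each row so the weights sum to (almost) one.
    weights = weights / (weights.sum(axis=1, keepdims=True) + eps)
    return source_desc @ weights.T        # weighted sum of source descriptors

def update_descriptors(desc0, desc1, cross):
    """Self-attention (cross=False) or cross-attention (cross=True) update."""
    src0, src1 = (desc1, desc0) if cross else (desc0, desc1)
    delta0 = attention_delta(desc0, src0)
    delta1 = attention_delta(desc1, src1)
    return desc0 + delta0, desc1 + delta1

rng = np.random.default_rng(0)
desc0 = rng.random((8, 4))  # 4 keypoints in image 0
desc1 = rng.random((8, 6))  # 6 keypoints in image 1
desc0, desc1 = update_descriptors(desc0, desc1, cross=False)  # self-attention step
desc0, desc1 = update_descriptors(desc0, desc1, cross=True)   # cross-attention step
print(desc0.shape, desc1.shape)  # (8, 4) (8, 6)
```

Alternating the two calls mirrors the ① self-attention and ② cross-attention paths: the first aggregates context within one image, the second aggregates context from the other image.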

- (3) Feature point matching module

In the feature point matching module of AD-ResSug, the automatic differentiation technique AutoDiff [22] is used to compute gradients automatically, efficiently, and accurately. The optimal matching layer creates a score matrix and augments it with a dustbin. The Sinkhorn AutoDiff algorithm is then used to obtain the optimal partial assignment.

This network model takes the feature points and descriptors of two images as inputs and outputs the matching relationships between these feature points. The process essentially determines the local correspondence between two sets of data points. Solving the problem with the AutoDiff-based algorithm improves matching accuracy and alleviates difficulties encountered in traditional methods, such as multiple feature points in the source image being incorrectly matched to the same feature point in the target image, especially in natural heterogeneous images.

2.2.3. Improvement in Sinkhorn AutoDiff Algorithm

This study improves the Sinkhorn algorithm in the feature point matching module by introducing automatic differentiation techniques to calculate gradients, which enables direct backpropagation of Sinkhorn distances during the optimization process.

In Sinkhorn AutoDiff, automatic differentiation is used to calculate gradients in order to update model parameters during the optimization process.
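The Sinkhorn iterations on a dustbin-augmented score matrix can be sketched in the log domain as below. This NumPy version shows only the forward pass. Every step is a differentiable operation, so in an automatic differentiation framework gradients can flow back through the unrolled loop, which is the property this section relies on. The dustbin score, the iteration count, and the marginals (mass 1 per keypoint, with each dustbin able to absorb the whole other set) are assumptions in the style of SuperGlue.

```python
import numpy as np

def logsumexp(x, axis):
    """Numerically stable log(sum(exp(x))) along one axis."""
    m = np.max(x, axis=axis, keepdims=True)
    return np.squeeze(m, axis=axis) + np.log(np.sum(np.exp(x - m), axis=axis))

def sinkhorn_with_dustbin(scores, dustbin_score=1.0, iters=100):
    """scores: (M, N) similarity matrix -> (M+1, N+1) soft partial assignment."""
    m, n = scores.shape
    # Augment with one dustbin row and column for unmatched keypoints.
    aug = np.full((m + 1, n + 1), dustbin_score, dtype=float)
    aug[:m, :n] = scores
    # Target marginals in log space.
    log_a = np.log(np.concatenate([np.ones(m), [n]]))
    log_b = np.log(np.concatenate([np.ones(n), [m]]))
    u = np.zeros(m + 1)
    v = np.zeros(n + 1)
    for _ in range(iters):
        u = log_a - logsumexp(aug + v[None, :], axis=1)  # fit row sums
        v = log_b - logsumexp(aug + u[:, None], axis=0)  # fit column sums
    return np.exp(aug + u[:, None] + v[None, :])

scores = np.array([[4.0, 0.0, 0.0],
                   [0.0, 4.0, 0.0]])
assignment = sinkhorn_with_dustbin(scores)
print(assignment.round(3))
```

In the example, keypoints 0 and 1 of the first image are assigned mainly to keypoints 0 and 1 of the second image, while the third keypoint of the second image, which has no good partner, sends most of its mass to the dustbin row.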

2.3. Algorithm

The input contains two sets of feature points, kpts0 and kpts1, two sets of descriptors, desc0 and desc1, and a dictionary, data. The dictionary holds key-value pairs such as file names, matched feature point pairs, feature point score sets, descriptor dimensions, iteration counts, and matching thresholds. The output includes matches0 and matches1, the indices of matched feature points; matchingscores0 and matchingscores1, the matching scores; loss, the loss value; and skiptrain, which indicates whether to skip training. The heterogeneous image registration algorithm based on AD-ResSug is shown in Algorithm 1.

| Algorithm 1: Image registration algorithm based on AD-ResSug | |
| --- | --- |
| Input: kpts0, kpts1, desc0, desc1, data | |
| Output: matches0, matches1, matchingscores0, matchingscores1, loss, skiptrain | |
| 1. | Begin |
| 2. | Input feature points and descriptors |
| 3. | Normalize feature points |
| 4. | Encode descriptors |
| 5. | Perform feature propagation |
| 6. | Perform graph neural network computation |
| 7. | Calculate the optimal transport score |
| 8. | Obtain matching results based on the score threshold |
| 9. | Loss value calculation |
| 10. | End |
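Steps 3 and 8 of Algorithm 1 can be illustrated with a short sketch: keypoints are normalized relative to the image size, and matches are read from the assignment matrix by keeping mutually best pairs whose score exceeds a threshold. The normalization convention, the mutual check, and the threshold value of 0.2 are assumptions for illustration; only the output names follow Algorithm 1.

```python
import numpy as np

def normalize_keypoints(kpts, height, width):
    """Centre (x, y) keypoints on the image and scale by half the longer side."""
    center = np.array([width / 2.0, height / 2.0])
    scale = max(height, width) / 2.0
    return (kpts - center) / scale

def extract_matches(assignment, threshold=0.2):
    """assignment: (M+1, N+1) soft assignment including the dustbin row and column."""
    inner = assignment[:-1, :-1]
    m, n = inner.shape
    best1_for0 = inner.argmax(axis=1)  # best partner in image 1 for each keypoint 0
    best0_for1 = inner.argmax(axis=0)  # best partner in image 0 for each keypoint 1
    score0 = inner.max(axis=1)
    mutual = best0_for1[best1_for0] == np.arange(m)
    valid0 = mutual & (score0 > threshold)
    matches0 = np.where(valid0, best1_for0, -1)  # -1 marks "unmatched"
    matchingscores0 = np.where(valid0, score0, 0.0)
    idx0 = np.nonzero(valid0)[0]
    matches1 = np.full(n, -1)
    matchingscores1 = np.zeros(n)
    matches1[best1_for0[idx0]] = idx0
    matchingscores1[best1_for0[idx0]] = score0[idx0]
    return matches0, matches1, matchingscores0, matchingscores1

assignment = np.array([[0.8, 0.1, 0.1],
                       [0.1, 0.1, 0.8],   # keypoint 1 of image 0 goes to the dustbin
                       [0.1, 0.8, 0.1]])  # dustbin row
matches0, matches1, _, _ = extract_matches(assignment)
print(matches0.tolist(), matches1.tolist())  # [0, -1] [0, -1]
```

The mutual check prevents several keypoints in one image from being assigned to the same keypoint in the other image, which is the failure case mentioned for traditional methods.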

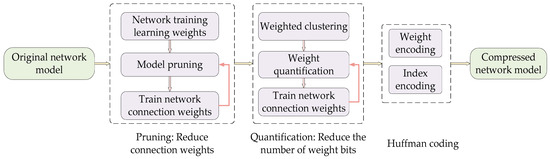

We apply network pruning and compression to reduce the number of parameters and the amount of computation while maintaining the model’s performance. Connections in the AD-ResSug model whose weights fall below a threshold are removed, so the model is compressed by cutting connections with small weight values. The specific steps of network pruning are shown in Figure 6.

Figure 6.

Network pruning and model compression design flowchart.
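Threshold-based pruning of small weights can be sketched as follows. The function names and the quantile-based way of choosing a threshold are assumptions for illustration; the actual procedure is the one shown in Figure 6.

```python
import numpy as np

def prune_by_magnitude(weights, threshold):
    """Zero out connections whose absolute weight is below the threshold."""
    mask = np.abs(weights) >= threshold
    return np.where(mask, weights, 0.0), mask

def sparsity(mask):
    """Fraction of connections that were removed."""
    return 1.0 - float(mask.mean())

def threshold_for_sparsity(weights, target_sparsity):
    """Pick the threshold that removes roughly the requested fraction of weights."""
    return float(np.quantile(np.abs(weights), target_sparsity))

weights = np.array([[0.50, -0.01],
                    [0.03, -0.80]])
pruned, mask = prune_by_magnitude(weights, threshold=0.05)
print(pruned.tolist())  # [[0.5, 0.0], [0.0, -0.8]]
print(sparsity(mask))   # 0.5
```

In practice, pruning is usually followed by fine-tuning with the mask held fixed so that the remaining weights can compensate for the removed connections.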

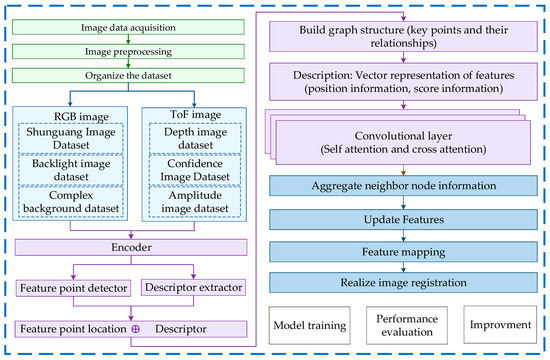

We made the above improvements to the model and designed an experimental plan to verify its performance. The experimental process includes image dataset acquisition, model improvement, model training, and performance evaluation. This study’s framework, shown in Figure 7, is an image registration system based on image data processing and feature extraction. The system starts with image data acquisition, goes through multiple processing steps, and finally achieves image registration, followed by model training, performance evaluation, and improvement.

Figure 7.

This study’s framework.

3. Results

3.1. Comparative Experiment

3.1.1. Test on Public Datasets

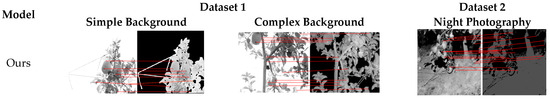

This study conducts comparative experiments against seven models: SIFT, SURF, ORB, LoFTR [28], MatchFormer, SuperGlue, and LightGlue. Heterogeneous image registration results are evaluated through a combination of visual analysis and quantitative indicators, which gives a fuller picture of the performance of each registration algorithm and provides useful information for further research and applications. The experimental results of our model on Dataset 1 and Dataset 2 are shown in Figure 8. The average values of each indicator on Dataset 1 for each registration algorithm are shown in Table 3.

Figure 8.

Experimental results on Dataset 1 and Dataset 2.

Table 3.

Average value of each indicator on Dataset 1 for common registration algorithms.

3.1.2. Application Task Test on Self-Built Datasets

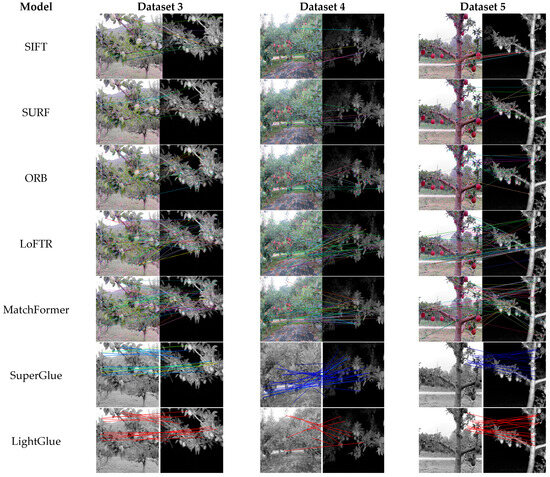

To verify that the proposed AD-ResSug model is applicable in practice, we used the self-built datasets for the application task test. An experimental comparison of common image registration models on Dataset 3, Dataset 4, and Dataset 5 is shown in Figure 9.

Figure 9.

Experimental comparison of common image registration models on Datasets 3~5. Note: The color of the lines in the registration result image depends on the predicted confidence of the feature points. Red indicates high reliability, blue indicates low reliability, and lines of other colors indicate reliability between red and blue.

3.2. Comprehensive Evaluation Experiment of Model Performance

During the model testing and comparison phase, two public image datasets were selected for experimentation and comparison. Table 4 presents the evaluation results, reported as average performance metrics over 30 pairs of images. AUC@5 is the probability of a correct match in the first five recommendations for each image. Prec is the proportion of correct matches among all recommendations. MScore is the overall rating of the model. Together, these indicators help evaluate the performance and accuracy of the model.

Table 4.

Comparison table of results from experiments on different datasets.
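As an illustration of how match-level indicators of this kind can be computed, the sketch below evaluates predicted matches against ground-truth correspondences. Prec follows the definition given above (correct matches among all predicted matches). The matching score shown here, correct matches divided by the total number of keypoints, is an assumption borrowed from common practice and may differ from the MScore reported in Table 4. The ground-truth array is hypothetical.

```python
import numpy as np

def match_metrics(matches0, gt_matches0):
    """matches0, gt_matches0: index of the partner keypoint, or -1 if unmatched."""
    matches0 = np.asarray(matches0)
    gt_matches0 = np.asarray(gt_matches0)
    predicted = matches0 >= 0
    correct = predicted & (matches0 == gt_matches0)
    n_pred = int(predicted.sum())
    precision = float(correct.sum()) / n_pred if n_pred > 0 else 0.0
    matching_score = float(correct.sum()) / len(matches0)  # assumed definition
    return precision, matching_score

pred = [0, 2, -1, 3]  # keypoint 2 was left unmatched
gt = [0, 1, 2, 3]     # hypothetical ground truth
precision, matching_score = match_metrics(pred, gt)
print(round(precision, 3), matching_score)  # 0.667 0.5
```

Averaging these values over all image pairs gives a per-dataset figure comparable to the averages over 30 pairs described above.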

3.3. Ablation Experiment

3.3.1. Feature Point Detection Ablation Experiment

The original SuperPoint is used for feature point extraction, while SuperGlue is used for feature point matching. On this basis, the improved AD-ResSug performs similarly to SuperPoint in feature point extraction and improves image registration efficiency to some extent. It also has advantages over traditional feature extraction algorithms. A qualitative comparison between the improved algorithm in this study and other common feature extraction algorithms is shown in Table 5.

Table 5.

Qualitative comparison with other common feature extraction algorithms.

3.3.2. Improved Model Ablation Experiment

Ablation experiments were conducted on the AD-ResSug model to evaluate the actual effectiveness of each improved module. The comparison results are shown in Table 6. The SuperGlue model was used as the baseline network for evaluating overall performance. The improved feature point encoding module, the network pruning operation, and the position encoding module were compared in terms of the number of extracted feature points, the registration performance, and the time required for the registration task.

Table 6.

Comparison table of ablation experiment results.

AD stands for AutoDiff, that is, using the automatic differentiation algorithm to achieve optimal assignment; Res denotes the addition of a residual structure; NP denotes network pruning; AD-ResSug denotes adding positional encoding and the residual module mechanism at the same time.

3.4. Model Performance Analysis

For the model performance analysis, five deep learning registration models were compared in terms of parameter count (Params) and runtime (Runtime). The results are shown in Table 7.

Table 7.

Model performance analysis table.

4. Discussion

The performance analysis results show that LoFTR has the highest number of parameters, while LightGlue has the lowest. AD-ResSug has the second-lowest parameter count, after LightGlue. LoFTR also has the longest running time, while AD-ResSug has the shortest. Overall, the AD-ResSug model compares favorably in terms of performance.

4.1. Comparative Algorithm Analysis

4.1.1. Discussion of Test on Public Datasets

In Table 3, the final matching results on Dataset 1 show that traditional manual feature extraction and matching methods identify fewer feature points, have lower registration accuracy, and are far less efficient than deep learning-based feature extraction methods. On the common evaluation indicators, the improved model achieves further gains in several key indicators, such as root mean square error, peak signal-to-noise ratio, and relative global accuracy error.

4.1.2. Discussion of Application Task Test

To verify that the proposed AD-ResSug model is applicable to our work, we conducted the application task test. The average values of various indicators tested on Dataset 5 using commonly used registration algorithms are shown in Table 8.

Table 8.

The average values of various indicators tested on Dataset 5 using commonly used registration algorithms.

Table 8 shows the test results of eight models on Dataset 5, which evaluate several commonly used registration models on a self-built dataset. A higher PSNR value indicates better quality of the corresponding image; when the value is below 20 dB, the image quality is very poor. Our model has a PSNR of over 30 dB and produces good image quality. The closer the SSIM value is to 1, the smaller the difference between the two images. Our model has the smallest RMSE value, indicating that its prediction of feature points is more accurate. Our model also has the highest UQI, which means that its image quality has an advantage over the other models.
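For reference, RMSE and PSNR between a reference image and a registered image can be computed as in the sketch below, using the standard definitions for 8-bit images. The function names and the toy images are illustrative, and SSIM and UQI are omitted.

```python
import numpy as np

def rmse(reference, registered):
    """Root mean square error between two images of the same shape."""
    diff = reference.astype(float) - registered.astype(float)
    return float(np.sqrt(np.mean(diff ** 2)))

def psnr(reference, registered, max_value=255.0):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    diff = reference.astype(float) - registered.astype(float)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(max_value ** 2 / mse))

reference = np.full((4, 4), 100, dtype=np.uint8)
registered = np.full((4, 4), 110, dtype=np.uint8)
print(rmse(reference, registered))            # 10.0
print(round(psnr(reference, registered), 2))  # 28.13
```

The conversion to float before subtraction matters for 8-bit images, because unsigned integer subtraction would otherwise wrap around.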

Figure 9 shows a comparative experiment with eight image registration models on Datasets 3~5. The results indicate that traditional registration algorithms such as SIFT, SURF, and ORB have low feature point detection accuracy and produce more feature point matching errors when processing heterogeneous images. Transformer-based models such as LoFTR and MatchFormer improve performance in low-texture scenes and use local feature matching to handle scenes with significant perspective changes more effectively. They can detect more feature points, but their registration accuracy is low. LightGlue is a matching algorithm based on the SuperGlue model, which generates a matching cost matrix through graph neural networks and solves for the optimal matching matrix to obtain the globally optimal matching result. These models have higher accuracy than the previous ones. This study improves on SuperGlue by using residual structures in the feature point encoding module and optimizing the Sinkhorn algorithm in the feature matching module. The AutoDiff technique computes gradients automatically, accurately, and efficiently, allowing the model to detect more feature points. As a result, higher matching accuracy is achieved without sacrificing registration efficiency compared with the seven other models.

4.2. Discussion of Ablation Experiment

This study conducts ablation experiments on both the feature extraction module and the overall model. In Table 5 and Table 6, the feature extraction experiment mainly compares the performance of traditional feature extraction algorithms with that of deep learning-based algorithms. The results show that deep learning-based feature extraction algorithms are much more efficient than traditional algorithms, and that our improved feature extraction algorithm performs somewhat better than previous models such as LoFTR and MatchFormer. The ablation experiment on the overall model mainly tested the influence of different modules on overall performance. The results show that adding a residual mechanism to the feature point encoding module led to a larger number of extracted key points, and that applying automatic differentiation to the Sinkhorn algorithm improved performance in solving the optimal transport problem.

During our experimental testing, we found that most current image registration models have low accuracy in detecting feature points in ToF depth maps. This is because ToF depth maps have low resolution and are more susceptible to external noise when collecting ToF image datasets. Therefore, we believe that the feature point detection module can be further improved and optimized to enhance the model’s feature point recognition ability and prediction accuracy.

4.3. Summary of Experiment’s Results

The experimental results show that, compared with other methods, AD-ResSug achieves significant performance improvements in feature matching and image registration tasks and can effectively handle the relationships between feature points. Optimizing the matching process through global contextual information effectively improves the accuracy and robustness of heterogeneous image matching and performs well in complex scenes. The model maintains high matching accuracy even in the presence of occlusion and repeated textures. Table 9 summarizes the advantages and disadvantages of the AD-ResSug heterogeneous image registration model and the seven comparative models in practical applications.

Table 9.

Analysis of the advantages and disadvantages of the model.

The discussion in Table 9 is supported by the results of the comparative experiments. It suggests that the AD-ResSug model overcomes the low accuracy in heterogeneous image registration exhibited by earlier models. However, the AD-ResSug model is currently applicable only to specific types of datasets: it performs well on color and ToF heterogeneous images, but its accuracy may be low on other types of heterogeneous image datasets. Subsequent research can improve this aspect so that the model can handle more types of heterogeneous image registration tasks.

Overall, the AD-ResSug model was compared with relevant models proposed in recent years on both publicly available and self-built datasets. The experimental results show that our model achieves significant performance improvements in processing images of complex orchard environments and in heterogeneous image processing. It is practical for processing agricultural images and is of value for promoting agricultural modernization.

5. Conclusions

To address the complex scenes of orchards and the insufficient accuracy of existing image registration models in heterogeneous image registration, a specialized model, AD-ResSug, is proposed to handle heterogeneous image registration tasks in orchards.

- (1) In the feature point extraction module, we adopt the Visual Geometry Group (VGG) network to process the input image. By stacking multiple convolutional and pooling layers, the network gradually extracts features at different levels. Compared with other methods, AD-ResSug shows significant performance improvements in feature matching and image registration and effectively handles the relationships between feature points. The results on public datasets in Table 3 show that the AD-ResSug model improves on several key indicators, such as root mean square error, peak signal-to-noise ratio, and relative global accuracy error.

- (2) In the feature point matching module, the Sinkhorn AutoDiff algorithm is used together with model pruning and network parameter compression to reduce computational complexity. The ablation results in Tables 5 and 6 show that optimizing the matching process with global contextual information effectively improves the accuracy and robustness of heterogeneous image matching and performs well in complex scenes. Adding a residual mechanism to the feature point encoding module increased the number of key points ultimately extracted. Applying automatic differentiation to the Sinkhorn algorithm improved performance in solving the optimal transport problem. The model maintains high matching accuracy even in the presence of occlusion and repeated textures.

- (3) More importantly, the AD-ResSug model is well suited to heterogeneous registration, especially of color and ToF images. The results on the self-built datasets in Table 8 suggest that the AD-ResSug model overcomes the low accuracy that other models exhibit in heterogeneous image registration, which indicates that it is better suited to practical application scenarios.
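The optimal transport step summarized in point (2) can be illustrated with a minimal sketch of log-domain Sinkhorn iterations. This is not the authors' implementation: the function names `logsumexp` and `sinkhorn_log`, the uniform marginals, and the fixed iteration count are assumptions made for illustration, and plain Python is used in place of a deep learning framework. In a framework with automatic differentiation, the same unrolled loop would allow gradients to flow through the iterations.

```python
import math


def logsumexp(values):
    """Numerically stable log(sum(exp(v))) over a list of floats."""
    peak = max(values)
    return peak + math.log(sum(math.exp(v - peak) for v in values))


def sinkhorn_log(scores, n_iters=50):
    """Turn an m x n matching-score matrix into a soft assignment matrix.

    Assumption of this sketch: uniform marginals, so each row of the
    result sums to 1/m and each column sums to 1/n after convergence.
    """
    m, n = len(scores), len(scores[0])
    log_mu = -math.log(m)  # log of the target row mass
    log_nu = -math.log(n)  # log of the target column mass
    u = [0.0] * m          # row scaling factors (log domain)
    v = [0.0] * n          # column scaling factors (log domain)

    for _ in range(n_iters):
        # Rescale rows so that every row carries mass 1/m.
        u = [log_mu - logsumexp([scores[i][j] + v[j] for j in range(n)])
             for i in range(m)]
        # Rescale columns so that every column carries mass 1/n.
        v = [log_nu - logsumexp([scores[i][j] + u[i] for i in range(m)])
             for j in range(n)]

    # Recover the assignment matrix from the scores and scaling factors.
    return [[math.exp(scores[i][j] + u[i] + v[j]) for j in range(n)]
            for i in range(m)]
```

Calling `sinkhorn_log` on a small score matrix with a dominant diagonal returns a matrix whose largest entry in each row lies on the diagonal, which corresponds to matching feature point i in one image to feature point i in the other.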

Overall, the AD-ResSug model was compared with relevant models proposed in recent years on both publicly available and self-built datasets. The results show that our model achieves significant performance improvements on images of complex orchard environments and in heterogeneous image processing. It is practical for processing agricultural images and is of value for promoting agricultural modernization.

This study still has some limitations. The introduction of positional encoding may increase the complexity of the model, and network pruning and compression may discard some information when processing complex tasks. Future work will focus on further optimizing the model's performance and improving registration efficiency and accuracy, in particular by studying how to integrate positional encoding more closely with the structure of the attention graph neural network to enhance the model's handling of spatial information. Another direction is to enable the model to handle more types of heterogeneous image registration tasks.
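The information loss attributed to pruning can be made concrete with a sketch of magnitude-based pruning, one common pruning strategy. The pruning criterion used in AD-ResSug is not specified here, so the criterion itself, the function name `magnitude_prune`, and the `sparsity` parameter are assumptions for illustration only.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the smallest-magnitude fraction `sparsity` of a weight list.

    Hypothetical helper: it illustrates magnitude pruning in general,
    not the specific pruning procedure of the AD-ResSug model.
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must lie in [0, 1]")

    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute weight.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(order[:n_prune])

    # Pruned weights become zero; whatever they encoded is discarded.
    return [0.0 if i in pruned else w for i, w in enumerate(weights)]
```

Small weights contribute little individually, but removing many of them at once can change the output on inputs that depend on fine detail, which is one way pruning may lose information on complex tasks.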

Author Contributions

D.H. designed, wrote the paper and analyzed the experiments. L.L. collected data, reviewed and edited the paper. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the National Natural Science Foundation of China [grant number 32460440] and Gansu Provincial University Teacher Innovation Fund Project [grant number 2023A-051].

Data Availability Statement

The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

Conflicts of Interest

The authors declare no conflicts of interest.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).