1. Introduction

Rice is an important crop that supports the livelihood and sustenance of over half of the world’s population [1,2]. In China, rice is widely cultivated, covering over 30.07 million hectares in 2020, playing a pivotal role in ensuring global food security [3,4]. However, the quality and yield of rice are easily affected by various diseases [5]. The fluctuations in rice yield and quality not only affect the stability of the agricultural economy but also have a direct impact on food security [6]. Therefore, the early recognition and control of rice leaf diseases are crucial for rice production.

Early rice disease monitoring was primarily conducted manually, requiring farmers to determine the type of diseases based on experience or expert consultation [7,8]. This method cannot meet the requirements of modern agricultural development. Furthermore, it is susceptible to human subjectivity, which can lead to the misdiagnosis of diseases. To eliminate diseases, farmers often overuse chemical pesticides, leading to environmental pollution [9]. Therefore, establishing an efficient detection approach for rice diseases is essential to ensure accurate diagnosis and timely warning, thereby supporting green and sustainable agricultural production.

Over the past few years, computer vision has been widely applied in agriculture, offering robust technical support for the automated identification of crop diseases. Early computer vision approaches primarily constructed disease recognition models by integrating handcrafted features (e.g., color, texture, and shape) with machine learning algorithms. Snehaprava et al. [10] adopted an adaptive thresholding segmentation strategy based on the Otsu method to segment rice disease regions according to Saturation (S) and Hue (H). Subsequently, they constructed a disease recognition model using LS-SVM, achieving recognition accuracies of 91.3% and 98.87% on two datasets, respectively. Bharanidharan et al. [11] extracted 14 statistical features from thermal images of rice leaves and designed a feature transformation method based on the LOA to enhance the adaptability of features in machine learning algorithms. The enhanced features were fed into the KNN algorithm to construct a classifier, achieving a recognition accuracy of over 90%. Kumar et al. [12] employed the Discrete Wavelet Transform (DWT) to extract three types of image features: color, shape, and texture. These features were subsequently input into an AdaBoost-SVM classifier, achieving an accuracy of 98.8% in identifying three rice diseases. Although machine learning methods have shown considerable effectiveness in crop disease detection, their applicability to large-scale datasets remains limited. The preprocessing stage is complex, requiring manual extraction of disease-specific features. Furthermore, the feature selection process is inherently subjective, which may result in the omission of critical features and restrict the model’s generalization ability.

Nowadays, various deep learning methods have been proposed for crop disease identification [13,14,15,16]. Among these methods, object detection is a deep learning approach that simultaneously classifies and localizes diseases in an image. Currently, two main approaches are commonly employed for plant disease detection: Transformer-based methods and YOLO-based methods. The Transformer architecture possesses excellent capabilities in global dependency modeling and contextual information integration, enabling it to demonstrate significant advantages in complex crop disease detection tasks. Yang et al. [17] proposed a Transformer-based rice disease detection model, DHLC-DETR, which enhances the DETR backbone by integrating Res2Net and a high-density hierarchical hybrid sampling mechanism to improve multi-scale feature representation. The Hungarian algorithm is further employed to optimize prediction matching. Experimental results demonstrate that DHLC-DETR achieves a 17.3% improvement in mAP, with an average detection accuracy of 97.44%. Hanxiang et al. [18] proposed an end-to-end detection model, PD-TR, which enhances the DINO framework by integrating BatchFormerV2, the LAMB optimizer, and the CIoU loss function. The model effectively detects multiple crop diseases on a large-scale dataset, achieving a maximum mAP of 56.3%. Similarly, Li et al. [19] proposed PL-DINO, a Transformer-based crop leaf disease detection model that incorporates CBAM to enhance feature extraction and employs EQL to mitigate class imbalance. PL-DINO demonstrates superior performance to Faster R-CNN and YOLOv7 in detecting leaf diseases under natural conditions. Transformer-based plant disease detection methods excel at modeling global dependencies and capturing contextual features, thereby achieving high accuracy in disease recognition [20]. However, in rice leaf disease detection tasks under complex field environments, their high computational cost, slower inference speed, and reliance on large-scale training data significantly limit their deployment in practical scenarios, particularly on resource-constrained edge devices. In contrast, YOLO-based detection approaches can improve detection speed while maintaining reasonable accuracy, which renders them more appropriate for deployment in agriculture.

YOLO-based methods employ a fully convolutional design that performs classification and localization directly on feature maps, thereby enabling faster inference and simpler optimization. In the field of crop disease detection, researchers have progressively optimized and improved the network structure of YOLO to address diverse challenges across application scenarios, thereby improving detection performance and generalization. To detect rice blast in field environments, Cao et al. [21] designed a lightweight C2F-Pyramid module to improve the computational efficiency of YOLOv8x. To reduce the misdetection of small disease targets, they incorporated a CBAM module to enhance the network’s ability to capture multi-scale features from both spatial and channel dimensions, and added an additional detection head to supplement small target information. The network ultimately achieved an mAP of 84.3%, surpassing the baseline by 6.1%. To address the challenges of small lesion areas and high density in peanut leaf spot disease, Zhang et al. [22] proposed a lightweight detection method, ESM-YOLOv11. By incorporating EMA, SLIM-NECK, and MPDIoU to improve the structure and loss function of YOLOv11, this method reduced the number of parameters by 3.87% while increasing the mAP to 96.90%. Meng et al. [23] enhanced the feature extraction capability of YOLOv8n by introducing multi-scale variable kernel convolution and a selective kernel (SK) attention module. In addition, they optimized the loss function using MPDIoU to improve the model’s localization ability for occluded targets. The improved model ultimately achieved an mAP of 89.24%. Gao et al. [24] proposed a lightweight CB module to enhance YOLOv5s, integrating StarNet and Shape-NWD to improve backbone features and bounding box computation for efficient wheat Fusarium head blight detection. The method achieved an mAP of 90.51% and was successfully deployed on an embedded platform. YOLO-based methods have demonstrated strong performance not only in single-disease detection tasks but also in multi-disease detection scenarios. To address the challenge of detecting disease regions on different parts of peppers, Zheng et al. [25] proposed MSPB-YOLO, a YOLOv8-based detection algorithm. By incorporating the RVB-EMA module, the RepGFPN structure, and the DIOU loss function, the algorithm enhances the network’s feature extraction capability and optimizes the training process, enabling efficient localization of disease regions across multiple parts, with an mAP@0.5 of 96.4%. Pan et al. [26] proposed SSD-YOLO, a rice disease detection method that integrates SENet, DySample, and ShapeIoU into the YOLOv8 framework. SSD-YOLO achieved detection accuracies of 87.52%, 99.48%, and 98.99% for three diseases, outperforming the baseline by 11.11%, 1.73%, and 3.81%. To address the challenges of varying target scales and complex backgrounds in field scenarios, Gan et al. [27] introduced a lightweight efficient detection head (LEDH) and multi-scale spatial pyramid pooling (MSPPF) into YOLOv8n. These improvements effectively enhanced the network’s ability to capture intricate details of rice leaf diseases, achieving a 4.4% increase in mAP compared with the original YOLOv8n. To deploy the model on embedded mobile devices, Wang et al. [28] introduced the structural re-parameterization module RepGhost and GhostConv to lighten YOLOv8. They also incorporated CBAM into the backbone and reduced the number of parameters by 33.2%. The resulting model maintains high accuracy with fewer parameters and can be widely applied to different crops.

The above research indicates that deep learning-based methods have achieved remarkable progress in crop disease detection. However, in real field environments, rice leaf disease detection is still influenced by multiple factors, such as complex backgrounds, diverse disease types with varying visual characteristics, and sample imbalance. Therefore, achieving reliable detection accuracy while ensuring efficient deployment on mobile devices remains a critical challenge. To solve these issues, this study proposes EAG-YOLOv11n, a rice leaf disease detection method based on YOLOv11n. It incorporates EMA [29] into the shallow C3K2 modules of the backbone and neck to enhance the network’s attention to the feature details of various diseases. Additionally, a global local complementary attention module (GLC-PSA) is designed to suppress background interference by integrating global and local information. At the same time, ATFL [30] is employed to enhance the network’s learning on challenging samples. The main contributions of this work are summarized as follows:

- (1)

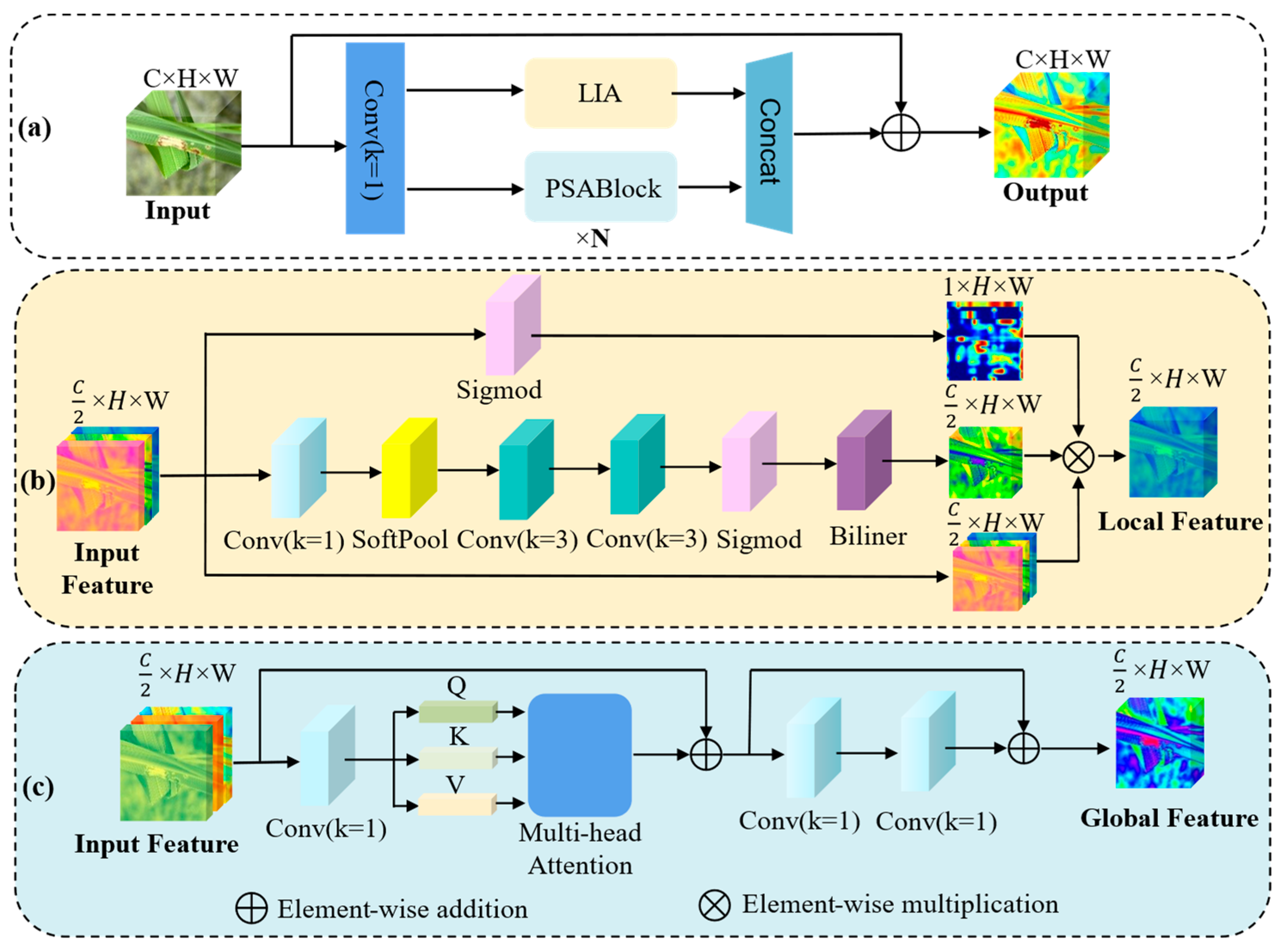

A global local complementary attention module (GLC-PSA) is proposed and integrated into the backbone of YOLOv11n. This module enhances the perception of lesion regions while effectively suppressing background interference.

- (2)

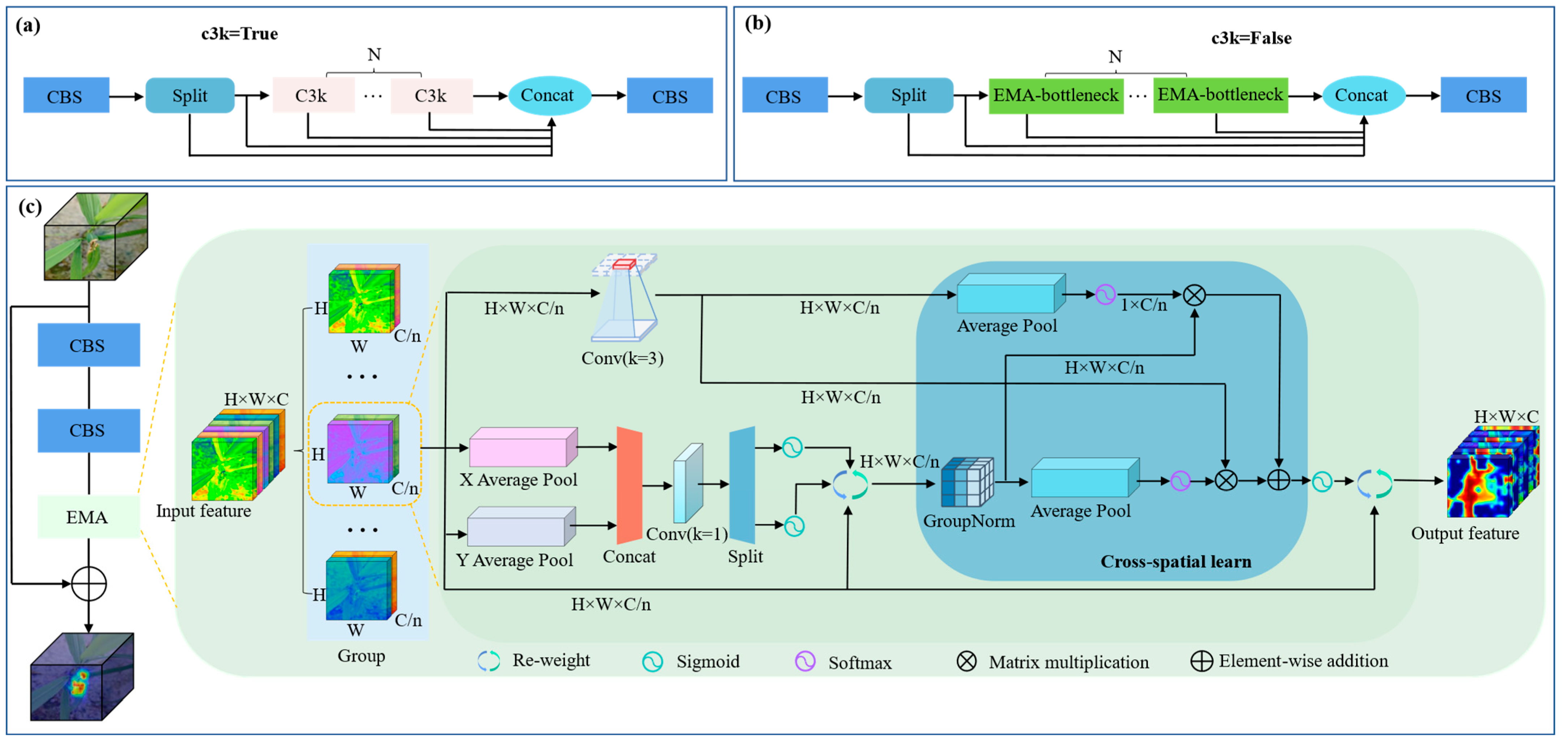

An EMA-C3K2 module is constructed and strategically deployed into the shallow C3K2 layers of the backbone and neck. By introducing Efficient Multi-Scale Attention (EMA), this module effectively improves the network’s sensitivity to multi-scale disease feature.

- (3)

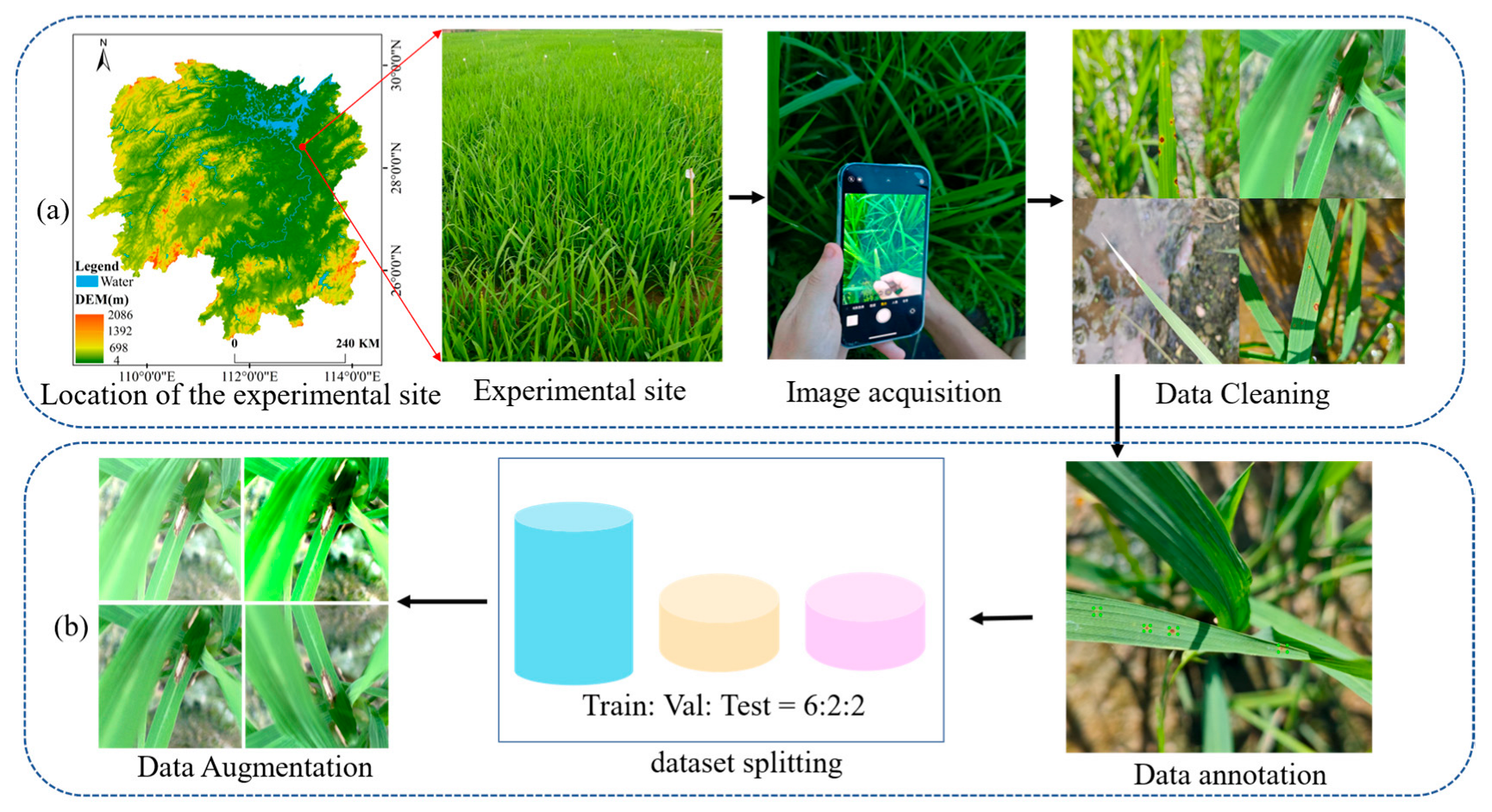

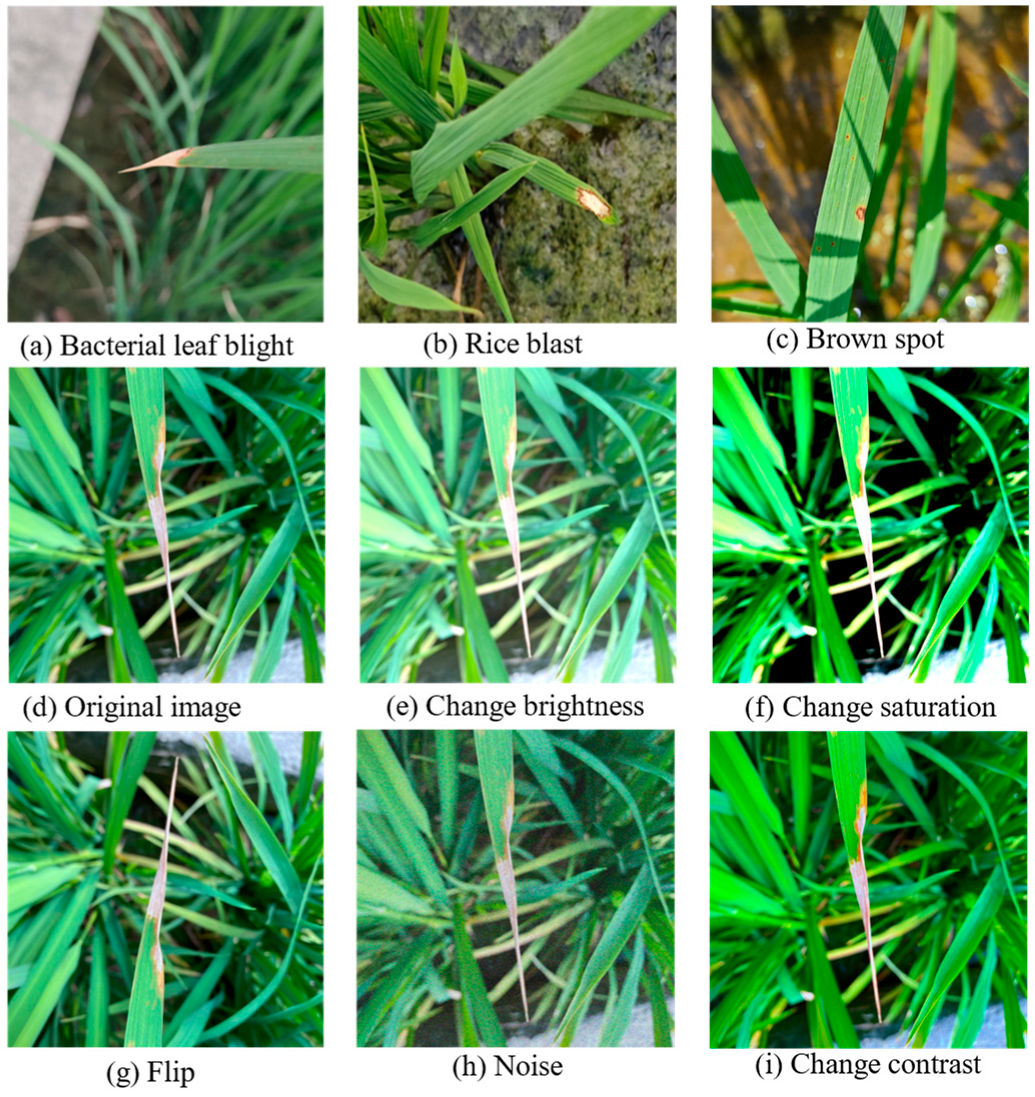

An Adaptive Threshold Focal Loss (ATFL) is introduced to optimize the network’s learning on hard-to-detect samples. In addition, a rice leaf disease dataset RLD-3C is constructed to validate the model’s effectiveness.
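To make the idea of focusing training on hard samples concrete, the sketch below implements the standard binary focal loss that focal-loss variants build on. It is not the ATFL formulation of [30]; the function name `focal_loss` and the defaults `gamma=2.0` and `alpha=0.25` are illustrative assumptions.

```python
import math


def focal_loss(p: float, y: int, gamma: float = 2.0, alpha: float = 0.25) -> float:
    """Standard binary focal loss for a single prediction (not ATFL itself).

    p: predicted probability of the positive class, in (0, 1)
    y: ground-truth label, 1 (positive) or 0 (negative)
    """
    eps = 1e-12                                   # guard against log(0)
    p = min(max(p, eps), 1.0 - eps)
    p_t = p if y == 1 else 1.0 - p                # probability given to the true class
    alpha_t = alpha if y == 1 else 1.0 - alpha    # class-balancing weight
    # The factor (1 - p_t) ** gamma shrinks the loss of well-classified samples,
    # so hard samples dominate the total loss.
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


easy = focal_loss(0.95, 1)   # confident, correct prediction
hard = focal_loss(0.30, 1)   # low-confidence prediction on a positive sample
print(f"easy sample: {easy:.5f}, hard sample: {hard:.5f}")
```

With `gamma=0` and `alpha=1` the expression reduces to ordinary cross-entropy on a positive sample, which is a convenient sanity check for any implementation.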

3. Results

3.1. Experimental Design

The experiments in this study were conducted in the following hardware environment: NVIDIA GeForce RTX 2080Ti GPUs, an Intel Xeon E5-2680v3 CPU, and 128 GB RAM. The software environment includes Python 3.8.20, PyTorch 1.8.1, and CUDA 11.1. During training, SGD is adopted to update the model parameters. The initial learning rate, momentum factor, and weight decay coefficient are 0.1, 0.937, and 0.0005, respectively. To ensure sufficient iterations for capturing key features and mitigating underfitting, the model is trained for a total of 200 epochs with a batch size of 32. For consistency in training, the resolution of the input images is set to 640 × 640. To ensure consistency and comparability of results, all subsequent experiments are conducted under this experimental environment.
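The training settings above can be collected in one place for reproducibility, as in the minimal sketch below. The class name `TrainConfig`, the helper `iterations_per_epoch`, and the field names are assumptions chosen for illustration (they loosely follow common YOLO training argument names); the section does not state which training framework or configuration file was used.

```python
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class TrainConfig:
    """Hyperparameters quoted in Section 3.1; field names are hypothetical."""
    optimizer: str = "SGD"
    lr0: float = 0.1              # initial learning rate
    momentum: float = 0.937       # SGD momentum factor
    weight_decay: float = 0.0005  # weight decay coefficient
    epochs: int = 200
    batch: int = 32               # batch size
    imgsz: int = 640              # inputs are resized to 640 x 640


def iterations_per_epoch(num_images: int, batch: int) -> int:
    """Number of optimizer steps per epoch (ceiling division)."""
    return -(-num_images // batch)


cfg = TrainConfig()
print(asdict(cfg))
```

For a hypothetical training set of 1000 images, `iterations_per_epoch(1000, cfg.batch)` gives the number of updates per epoch; the actual size of RLD-3C is not stated in this section.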

3.2. Comparison of Different Models

To assess the effectiveness of EAG-YOLOv11n for rice disease detection, a comprehensive comparison was performed with the Transformer-based model RT-DETR-L [34] and six representative lightweight YOLO models: YOLOv7tiny [35], YOLOv8n [36], YOLOv9n [37], YOLOv10n [38], YOLOv11n [32], and YOLOv12n [39]. The experimental results are summarized in Table 4. Overall, EAG-YOLOv11n outperforms other models across multiple evaluation metrics. It achieves the highest recall of 82.5%, mAP@50 of 87.3%, and mAP@50–95 of 52.0%, demonstrating the superiority of EAG-YOLOv11n in the current task.
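Both mAP@50 and mAP@50–95 rest on the intersection over union (IoU) between a predicted box and a ground-truth box. The sketch below shows the IoU computation and the ten thresholds (0.50 to 0.95 in steps of 0.05) conventionally averaged for mAP@50–95. The names `iou`, `THRESHOLDS`, and `matched_thresholds` are illustrative, and the boxes are made-up values rather than samples from RLD-3C.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), with x2 > x1 and y2 > y1


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# IoU thresholds conventionally averaged for mAP@50-95.
THRESHOLDS: List[float] = [round(0.50 + 0.05 * i, 2) for i in range(10)]


def matched_thresholds(pred: Box, gt: Box) -> List[float]:
    """Thresholds at which this prediction would count as a true positive."""
    value = iou(pred, gt)
    return [t for t in THRESHOLDS if value >= t]


pred, gt = (0.0, 0.0, 10.0, 10.0), (0.0, 0.0, 9.0, 8.0)  # hypothetical boxes
print(iou(pred, gt), matched_thresholds(pred, gt))
```

A prediction that passes the 0.50 threshold but fails the stricter ones contributes to mAP@50 while lowering mAP@50–95, which is why the two metrics can diverge for small lesions with blurred boundaries.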

Among the comparative models, RT-DETR-L achieves a high precision of 90.6% and a relatively high recall of 80.0%, reflecting strong classification confidence in detecting rice leaf disease targets. However, its mAP@50 of 83.1% and mAP@50–95 of 45.7% remain comparatively low, indicating weaker localization accuracy across IoU thresholds. This is likely because the lesions are generally small and fine-grained, with blurred boundaries. The global modeling mechanism of the Transformer tends to average local features, which weakens the model’s ability to accurately localize small targets. YOLOv7tiny achieves 89.5% precision but suffers from a sharp drop in recall to 76.6%, indicating a higher rate of missed detections, particularly for small disease regions. YOLOv8n offers a better trade-off between precision at 87.5% and recall at 82.4%, achieving a higher mAP@50–95 of 46.8% compared with YOLOv5n and YOLOv7tiny. YOLOv9tiny achieves the highest mAP@50–95 of 50.2% and an mAP@50 of 86.2% among the comparative models with a small number of parameters. Although its recall of 79.6% is slightly lower than that of YOLOv8n, it demonstrates notable advantages in precision and overall detection accuracy. YOLOv10n exhibits limited capacity, resulting in lower detection accuracy, with only 78.6% mAP@50 and 39.5% mAP@50–95, which restricts its practical applicability. YOLOv11n demonstrates balanced performance, achieving 87.3% precision, 79.9% recall, and 49.1% mAP@50–95 with a low computational cost of 6.3 GFLOPs. In contrast, YOLOv12n attains the highest precision at 90.7% but exhibits a comparatively lower recall of 77.0%. Although it has fewer parameters and lower computational cost than YOLOv11n, it still exhibits notable missed detections in rice leaf disease detection.
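The precision and recall trade-offs discussed above can be condensed into a single number with the F1-score, the harmonic mean of precision and recall. The sketch below applies it to the precision and recall values quoted in this paragraph; the helper name `f1_score` is illustrative, and the resulting F1 values are derived here rather than reported by the authors.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (both in the same unit, e.g. %)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision %, recall %) as quoted in the text above.
reported = {
    "RT-DETR-L": (90.6, 80.0),
    "YOLOv7tiny": (89.5, 76.6),
    "YOLOv8n": (87.5, 82.4),
    "YOLOv11n": (87.3, 79.9),
    "YOLOv12n": (90.7, 77.0),
}

for name, (p, r) in reported.items():
    print(f"{name:12s} P={p:5.1f}  R={r:5.1f}  F1={f1_score(p, r):5.1f}")
```

Because the harmonic mean penalizes imbalance, a model with very high precision but low recall (such as YOLOv12n here) ends up with a lower F1 than a more balanced model such as YOLOv8n.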

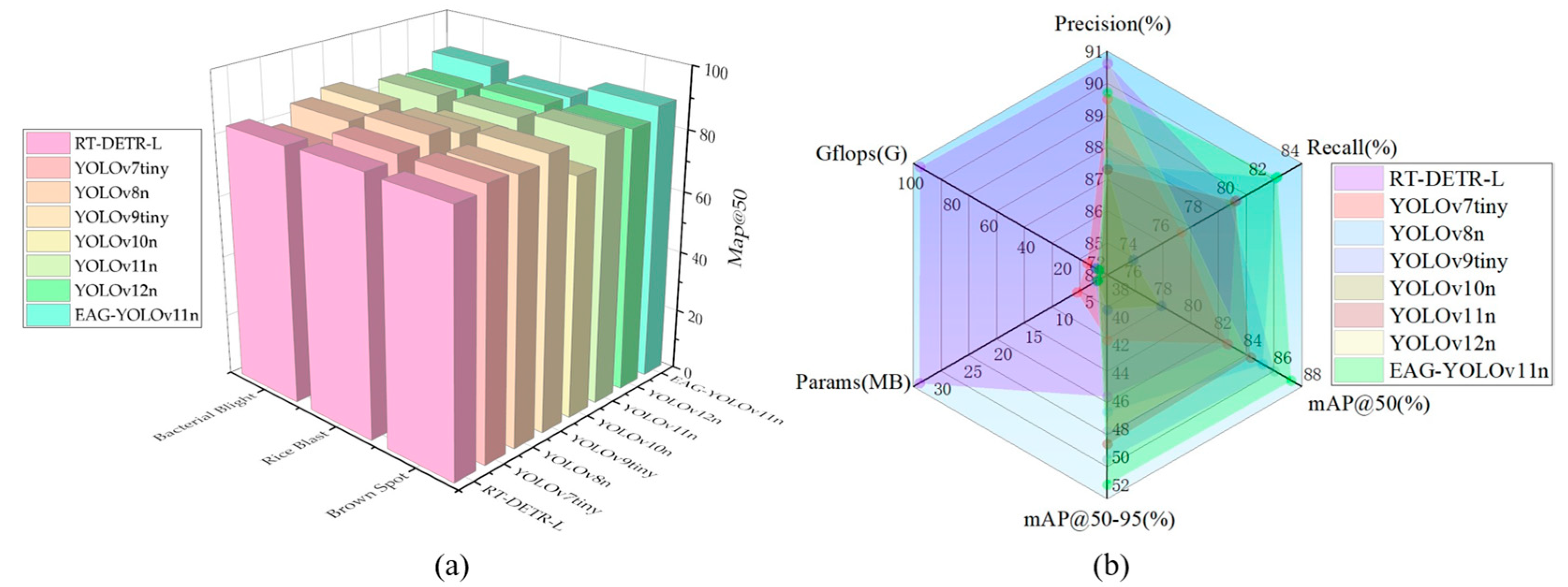

Meanwhile, EAG-YOLOv11n maintains a lightweight design while substantially enhancing detection performance. Its recall improves to 82.5%, representing a 2.6% increase over YOLOv11n, effectively reducing missed detections of small and challenging disease targets. Additionally, mAP@50 and mAP@50–95 rise to 87.3% and 52.0%, respectively, achieving the highest overall accuracy among all compared models while keeping the number of parameters nearly unchanged. To clearly demonstrate the performance of EAG-YOLOv11n on three different diseases, we employed the mAP@50 metric to conduct a comparative evaluation of various models. As shown in Figure 6a, due to the relatively small number of Rice Blast samples, most models achieve lower detection accuracy for this disease compared with the other two. In contrast, EAG-YOLOv11n exhibits a significantly higher mAP@50 for Brown Spot and Bacterial Blight than the compared models; although its mAP@50 for Rice Blast is not the highest, it still surpasses that of YOLOv11n. These results indicate that the proposed module offers a distinct advantage in modeling features across different rice diseases. In addition, a radar chart is employed to visualize the evaluation metrics of each model, as shown in Figure 6b. The parameter count and GFLOPs of RT-DETR-L are considerably larger than those of the YOLO-based methods. Among the YOLO-based models, except for YOLOv7tiny, which exhibits a substantially higher number of parameters and FLOPs than its counterparts, the remaining models consistently keep their parameter sizes within approximately 2–3M and their FLOPs below 10G. Specifically, EAG-YOLOv11n requires only 0.3G more FLOPs than YOLOv11n, with almost no increase in parameter size. As illustrated in the radar chart, EAG-YOLOv11n achieves broader coverage across the four evaluation metrics while maintaining low parameter size and computational cost, thereby demonstrating an effective trade-off between model performance and complexity.

3.3. Ablation Experiment

To further assess the impact of each module on the model’s performance, a series of ablation experiments were performed on the RLD-3C dataset. As presented in Table 5, the integration of the EMA-C3K2 module results in a precision of 89.1% and a mAP@50 of 86.1%, representing a notable improvement over the baseline. This indicates that embedding EMA into the shallow C3K2 layer enhances feature extraction, particularly for local textures and small-scale lesions. Similarly, the incorporation of the GLC-PSA module achieves balanced improvements, increasing recall to 80.5% and mAP@50 to 86.0%, which confirms its effectiveness in strengthening local feature representation while suppressing background interference. In contrast, the adoption of ATFL decreases precision but increases recall to 83.3%. This result indicates that ATFL enables the model to capture more challenging samples, thereby reducing missed detections and achieving the highest mAP@50 of 86.3% among single-module variants. When combining modules, complementary improvements are observed: EMA-C3K2 with GLC-PSA improves both recall and overall accuracy, while EMA-C3K2 with ATFL enhances precision and recall simultaneously.

With all three modules integrated, the model attains its best overall performance, with precision, recall, mAP@50, and mAP@50–95 reaching 89.7%, 82.5%, 87.3%, and 52.0%, respectively. Compared with the baseline, these improvements are obtained with nearly no increase in parameters (2.58M compared to 2.58M) and only marginal growth in model size (5.27 MB compared to 5.25 MB) and computational cost (6.6 compared to 6.3 GFLOPs), demonstrating that the proposed design attains superior accuracy while remaining lightweight. These results indicate that each module provides a significant contribution, and their combined integration achieves the highest performance improvement.
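The cost of the three modules can be expressed as a relative overhead over the baseline, using only the parameter count, model size, and GFLOPs quoted above. The following sketch does this arithmetic; the dictionary keys and the helper `relative_change` are illustrative names, not part of the original work.

```python
def relative_change(new: float, old: float) -> float:
    """Relative change of `new` with respect to `old`, in percent."""
    return (new - old) / old * 100.0


# Values quoted in the ablation discussion (baseline YOLOv11n vs. full model).
baseline = {"params_M": 2.58, "size_MB": 5.25, "gflops": 6.3}
full_model = {"params_M": 2.58, "size_MB": 5.27, "gflops": 6.6}

overhead = {key: relative_change(full_model[key], baseline[key]) for key in baseline}

for key, value in overhead.items():
    print(f"{key:9s}: {value:+.2f}%")
```

Under these figures the computational cost grows by a little under 5%, the model file by well under 1%, and the parameter count is unchanged at the reported precision.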

3.4. EAG-YOLOv11n Detection Results Analysis

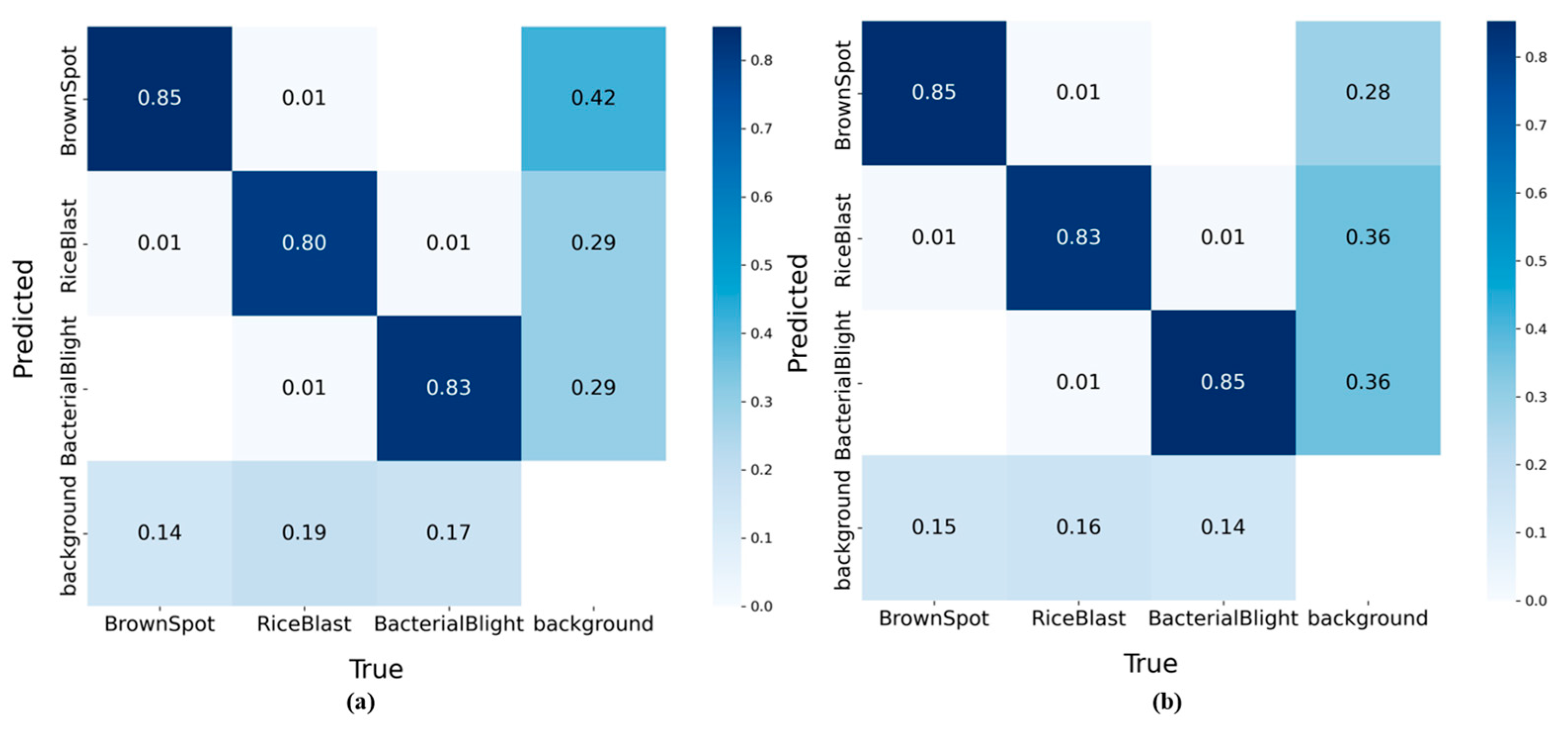

To provide a clear assessment of EAG-YOLOv11n’s discriminative capability across three rice diseases and backgrounds, a confusion matrix is introduced, as shown in Figure 7. For rice blast, the classification accuracy improves from 80% to 83%, with the proportion of instances misclassified as background reduced by 3%, indicating that the model achieves higher accuracy in capturing the key characteristics of this disease. Similarly, the classification accuracy for bacterial blight increases from 83% to 85%, with a 3% reduction in background misclassification. This result further demonstrates the enhanced feature extraction capability of the model. Although the classification accuracy for brown spot remains unchanged, the reduced background confusion demonstrates stronger robustness in suppressing interference from non-target regions. Overall, the background confusion rates for all categories decrease to varying degrees, indicating that EAG-YOLOv11n can not only learn the distinctive characteristics of different diseases but also effectively reduce background interference.
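The per-class accuracies and background confusion rates read from a normalized confusion matrix can be derived from raw counts as sketched below. The layout (rows as predicted class, columns as true class, normalization by column) is an assumption about how Figure 7 was produced, and the counts are hypothetical placeholders, not the values behind the figure.

```python
from typing import List


def normalize_columns(matrix: List[List[float]]) -> List[List[float]]:
    """Divide each entry by its column sum so every true class sums to 1."""
    n = len(matrix)
    col_sums = [sum(matrix[r][c] for r in range(n)) for c in range(n)]
    return [
        [matrix[r][c] / col_sums[c] if col_sums[c] else 0.0 for c in range(n)]
        for r in range(n)
    ]


labels = ["rice blast", "brown spot", "bacterial blight", "background"]

# Hypothetical counts: counts[predicted][true].
counts = [
    [40, 2, 1, 6],
    [3, 45, 0, 9],
    [1, 0, 42, 3],
    [6, 3, 7, 0],   # last row: true lesions predicted as background (missed)
]

norm = normalize_columns(counts)
for c, name in enumerate(labels[:-1]):
    print(f"{name:17s} accuracy={norm[c][c]:.2f}  missed as background={norm[-1][c]:.2f}")
```

In this layout the diagonal entry of a column is the class accuracy, and the bottom entry of the same column is the share of that class lost to the background, the two quantities compared in the paragraph above.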

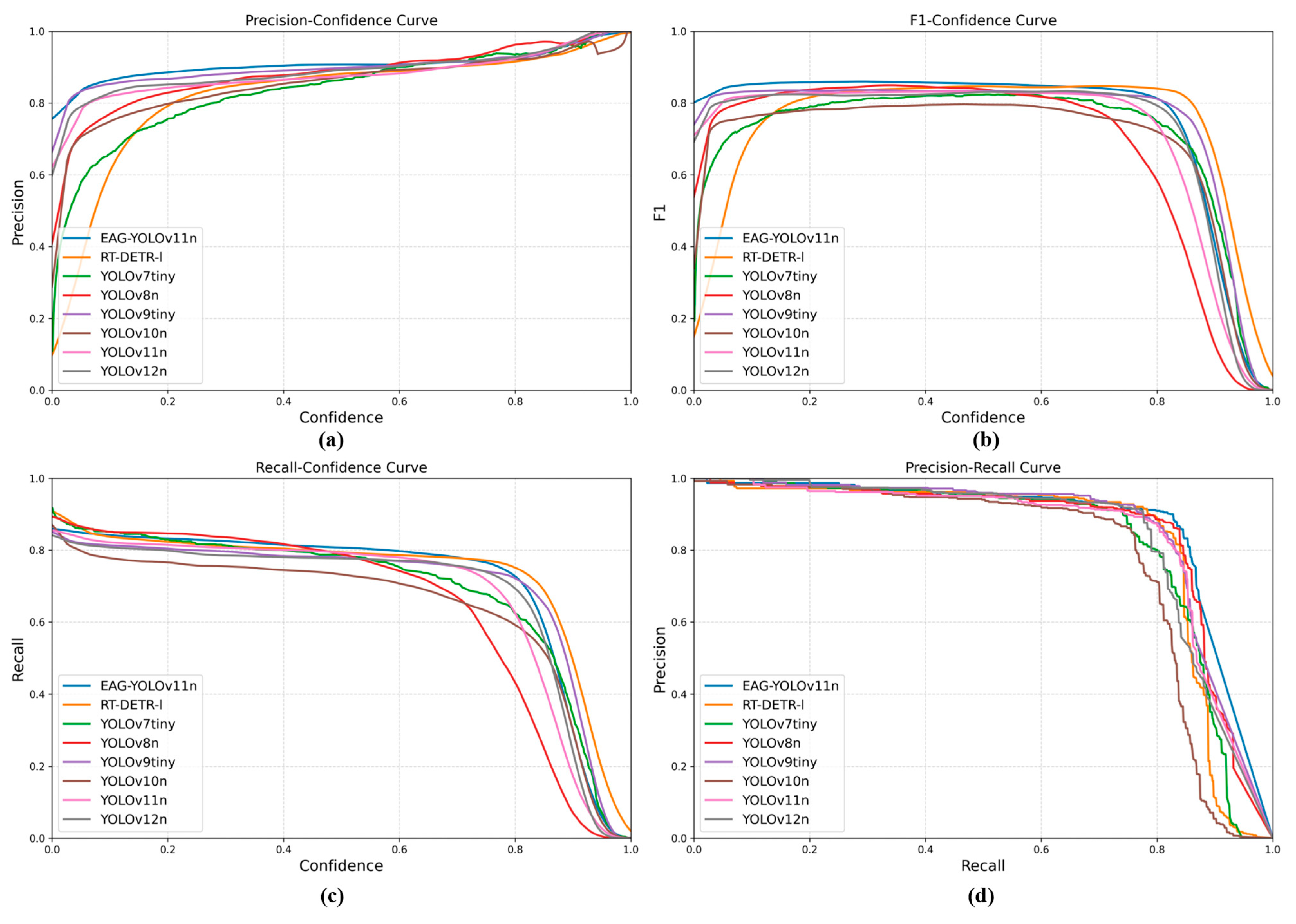

To further evaluate the performance of EAG-YOLOv11n, the F1-Confidence, P-Confidence, R-Confidence, and Precision–Recall curves were compared with those of seven representative models. As illustrated in Figure 8a,b, EAG-YOLOv11n maintains higher and more stable precision and F1-scores across the entire confidence range. Its precision in the low-confidence region is significantly higher than that of other models, indicating stronger robustness against false positives; meanwhile, the curve remains smooth in the high-confidence region, reflecting the stability and consistency of the prediction results. As shown in Figure 8c, EAG-YOLOv11n exhibits a relatively gentle decline in recall within the medium-to-high confidence range, indicating stable detection performance across different confidence thresholds. Figure 8d further demonstrates that the precision curve of EAG-YOLOv11n remains above those of other models throughout the recall range. When recall exceeds 0.8, its precision decreases most gradually, indicating superior stability and generalization in balancing precision and recall for the detection of diverse rice leaf diseases under complex backgrounds.
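The area under a Precision–Recall curve such as the one in Figure 8d is what the average precision (AP) of a class measures. The sketch below uses all-point interpolation, one common convention; the evaluation tool used in the paper may interpolate differently, and the function name and sample points are illustrative.

```python
from typing import Sequence


def average_precision(recalls: Sequence[float], precisions: Sequence[float]) -> float:
    """All-point interpolated AP; inputs must be sorted by increasing recall."""
    # Add sentinel points at recall 0 and recall 1.
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]
    # Build the precision envelope: make precision non-increasing in recall.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    # Sum the rectangles under the envelope wherever recall increases.
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


# Made-up operating points, not values read from Figure 8d.
ap = average_precision([0.5, 1.0], [1.0, 0.5])
print(f"AP = {ap:.2f}")
```

A curve that stays high as recall grows encloses more area, so a slow precision decline beyond a recall of 0.8 translates directly into a higher AP and, averaged over classes, a higher mAP.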

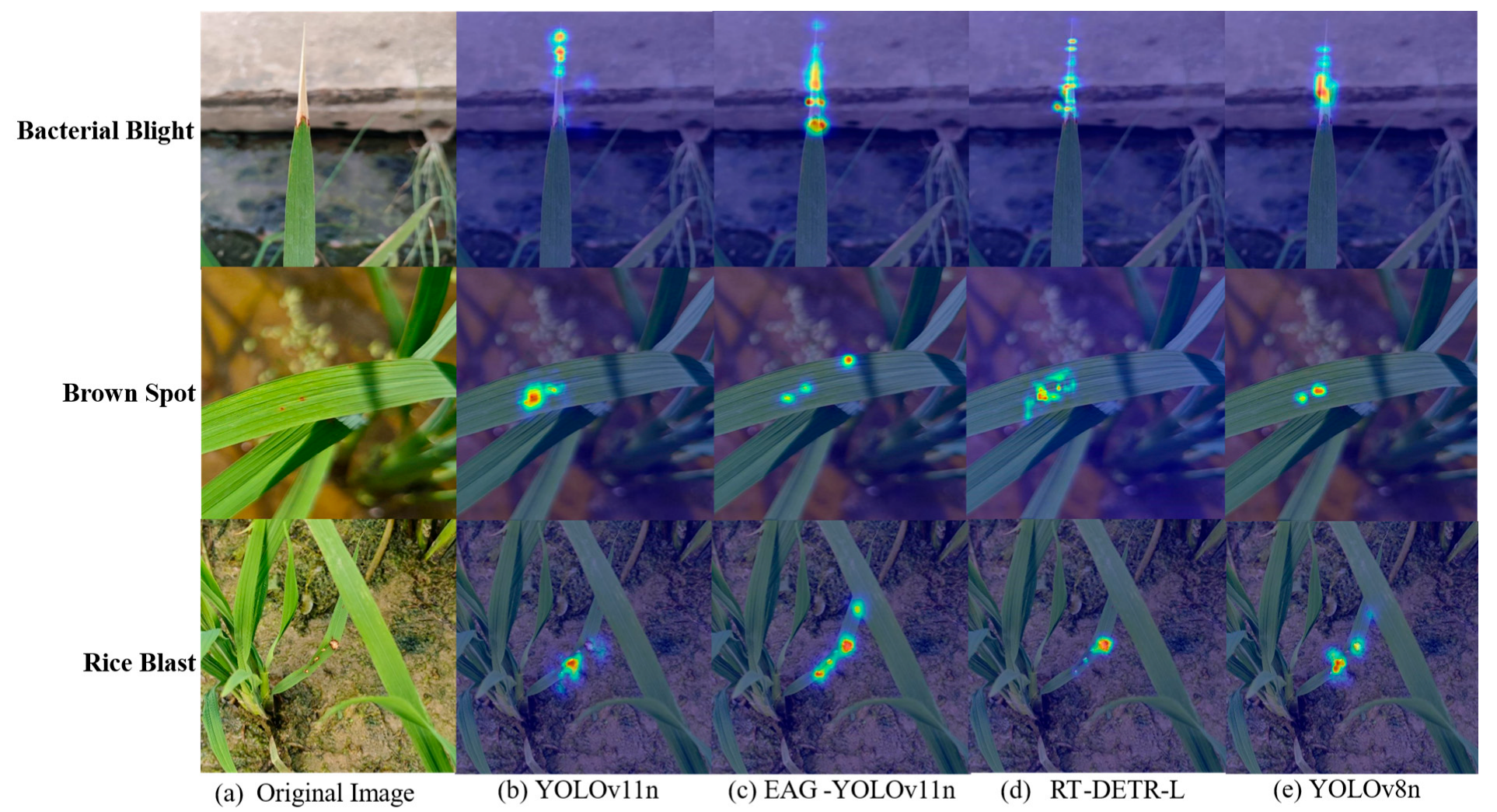

To clearly demonstrate the performance of EAG-YOLOv11n, heatmap-based visual analysis was conducted on the experimental results. Three representative disease samples were selected, and their original images along with the corresponding heatmaps from four representative models are shown in Figure 9. For bacterial blight, YOLOv11n was able to localize lesion areas but was still affected by background interference. In contrast, EAG-YOLOv11n, RT-DETR-L, and YOLOv8n achieved more precise localization of the lesion areas. For brown spot, YOLOv11n, RT-DETR-L, and YOLOv8n effectively perceive the prominent lesion regions but exhibit missed detections for small targets. In contrast, EAG-YOLOv11n not only localizes the obvious lesion areas but also successfully identifies small diseased regions. Similarly, for rice blast under complex backgrounds, EAG-YOLOv11n successfully localized all lesion areas, demonstrating enhanced background suppression and improved robustness.

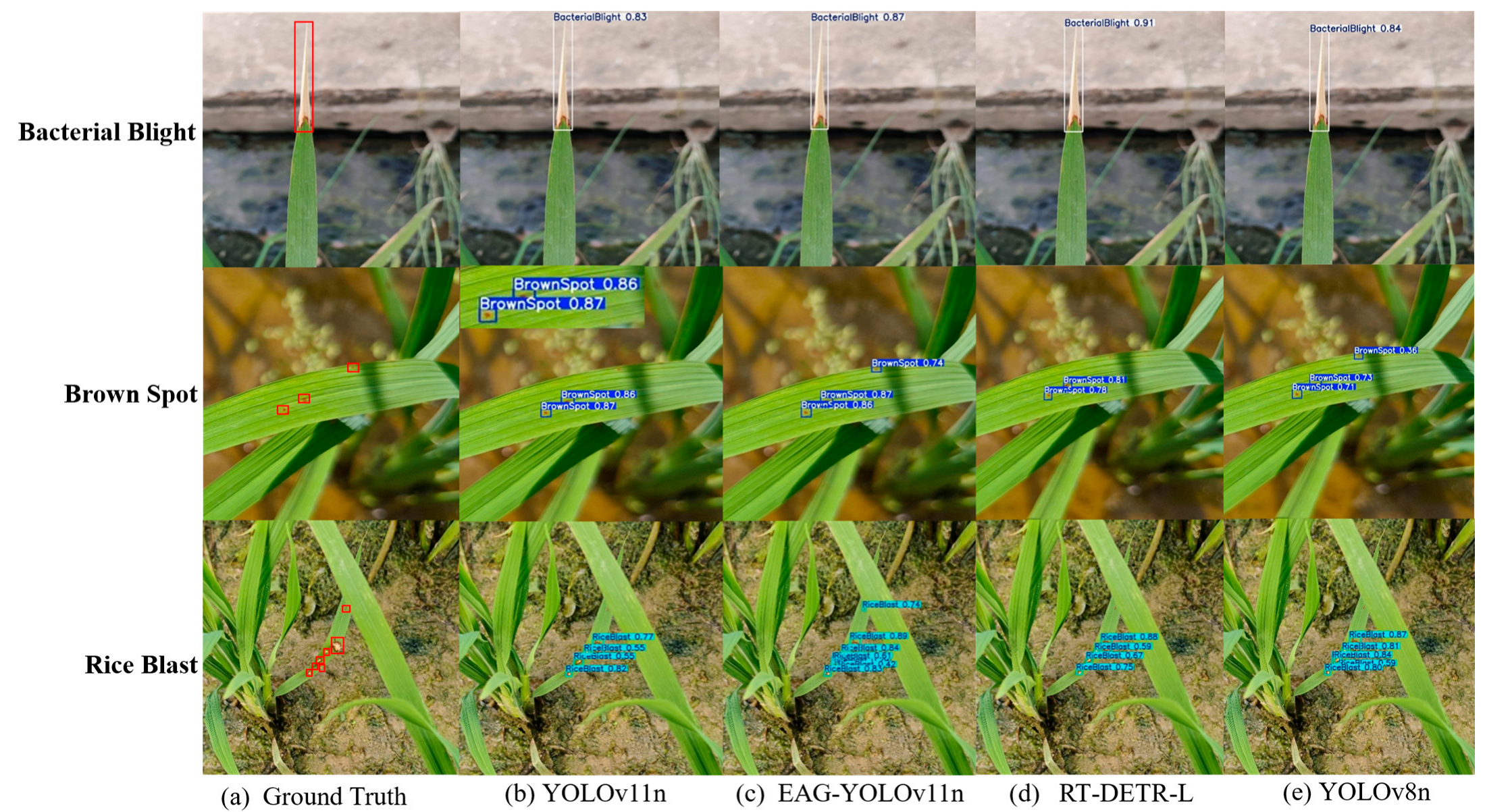

To more intuitively demonstrate the effectiveness of EAG-YOLOv11n on the RLD-3C dataset, three disease images with different detection difficulties were selected to validate EAG-YOLOv11n, YOLOv11n, RT-DETR-L, and YOLOv8n. The results are shown in Figure 10. For disease regions with clearly defined bacterial blight areas, all four models accurately performed localization. The confidence score of RT-DETR-L reached 91%, which is higher than that of EAG-YOLOv11n, indicating that RT-DETR-L exhibits better classification performance, especially for simpler tasks. For the detection of complex brown spot disease, RT-DETR-L and YOLOv11n exhibited missed detections. Although YOLOv8n successfully detected all diseased regions, its confidence scores were significantly lower than those of EAG-YOLOv11n. In the rice blast detection task, EAG-YOLOv11n successfully detected all diseased regions, achieving higher confidence scores than the three comparison models. Overall, EAG-YOLOv11n exhibited superior detection accuracy and robustness across multiple disease types and complex scenarios, confirming its effectiveness.

3.5. Five-Fold Cross-Validation Experiment

To evaluate the robustness and generalization capability of EAG-YOLOv11n, a five-fold cross-validation was performed on RLD-3C, and the results are shown in Table 6. Compared with YOLOv11n, EAG-YOLOv11n achieved higher precision of 89.96 ± 1.24%, recall of 81.64 ± 0.92%, mAP@50 of 87.28 ± 0.30%, and mAP@50–95 of 51.92 ± 0.45%. Except for precision, whose standard deviation exceeds 1%, the standard deviations of all other metrics remain relatively small. These experimental results indicate that EAG-YOLOv11n maintains consistent performance across different folds, demonstrating its stability and reliability in disease detection tasks.
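The cross-validation protocol and the "mean ± standard deviation" summaries can be sketched as follows. The split function, the seed, and the per-fold scores are hypothetical illustrations; the actual fold assignment used for RLD-3C is not described here, and whether the reported deviations are sample or population standard deviations is not stated (the sketch uses the sample version).

```python
import random
import statistics
from typing import Iterator, List, Sequence, Tuple


def k_fold_indices(n: int, k: int = 5, seed: int = 0) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train_indices, val_indices) for each of k shuffled folds."""
    indices = list(range(n))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]   # k nearly equal parts
    for i in range(k):
        val = folds[i]
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train, val


def mean_std(values: Sequence[float]) -> Tuple[float, float]:
    """Mean and sample standard deviation of per-fold scores."""
    return statistics.mean(values), statistics.stdev(values)


# Hypothetical per-fold scores for illustration only (not the paper's results).
fold_scores = [80.0, 81.0, 82.0, 83.0, 84.0]
mean, std = mean_std(fold_scores)
print(f"{mean:.2f} ± {std:.2f}")
```

Each image is used for validation exactly once across the five folds, so a small standard deviation indicates that the result does not hinge on one favorable split.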

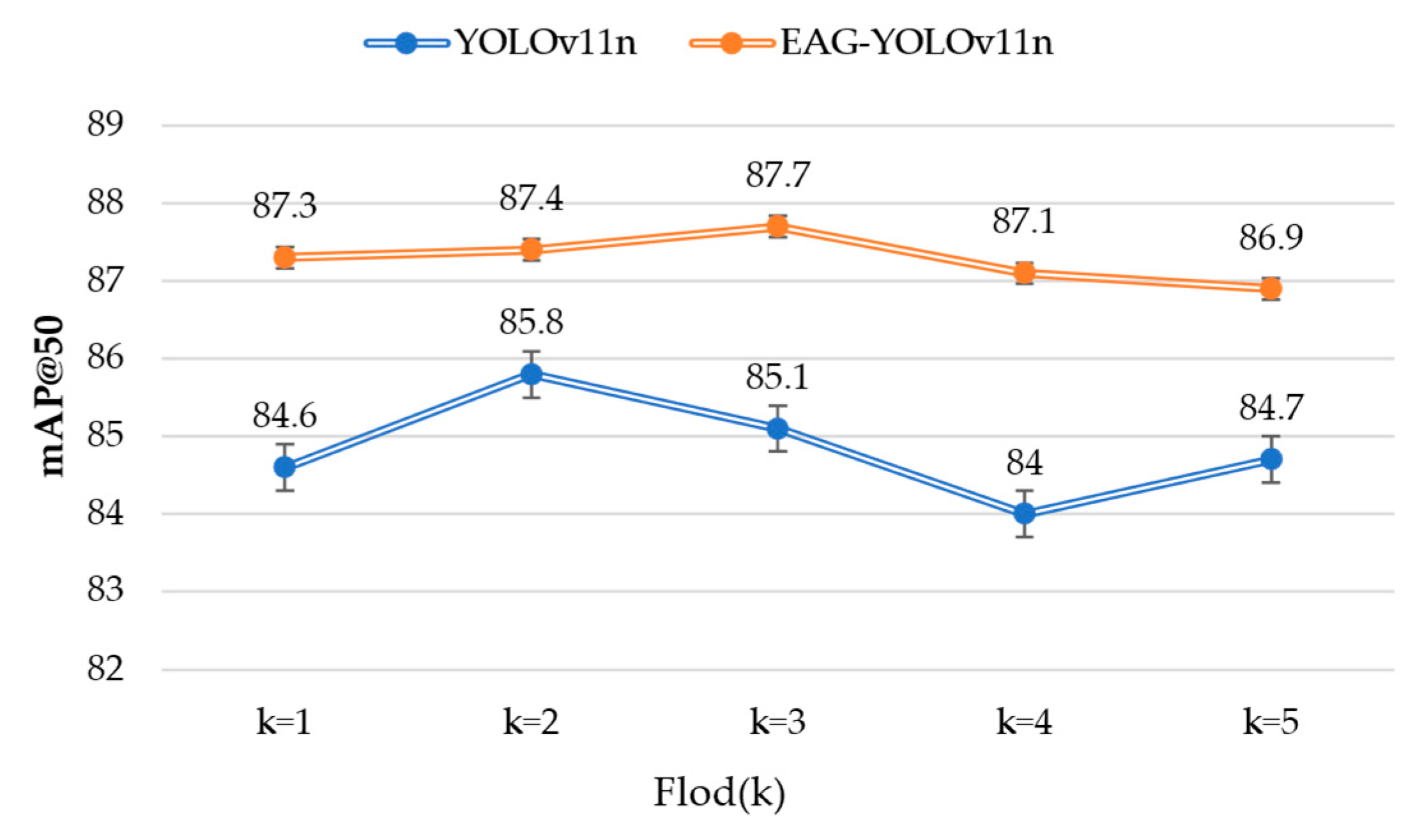

In addition, the mAP@50 results of EAG-YOLOv11n and YOLOv11n across different cross-validation folds are presented in Figure 11. The random partitioning of the dataset introduces variations in model performance: the mAP@50 of EAG-YOLOv11n varies within the range of 86.9% to 87.7%, whereas that of YOLOv11n ranges from 84.0% to 85.8%. Nevertheless, the mAP@50 of EAG-YOLOv11n consistently exceeds that of YOLOv11n, thereby further validating the effectiveness of the proposed enhancements.

4. Discussion

4.1. Key Contributions and Advantages

This study tackles several key challenges in rice leaf disease detection under field conditions, including background interference, large variations in lesion scale, sample imbalance, and the balance between model performance and complexity. To address these issues, an EMA module is integrated into the shallow C3K2 layers, enhancing the network’s sensitivity to shallow features such as color and texture. A Global–Local Complementary PSA (GLC-PSA) attention module is designed to effectively suppress background interference by combining global and local contextual information. In addition, ATFL is introduced to improve the network’s learning on challenging or underrepresented samples. Finally, a dedicated dataset RLD-3C containing three types of rice leaf diseases is constructed, providing a benchmark to assess the effectiveness and practical applicability of EAG-YOLOv11n.

Compared with mainstream lightweight YOLO models, EAG-YOLOv11n demonstrates significant advantages in both performance and model complexity. It achieves a recall of 82.5%, mAP@50 of 87.3%, and mAP@50–95 of 52.0%, effectively reducing missed detections of small lesions. By comparison, YOLOv5n achieves a recall of 80.6% and a mAP@50–95 of 43.9%, reflecting relatively lower overall performance. Although YOLOv7tiny attains a high precision of 89.5%, its recall is only 76.6% and its mAP@50–95 is 41.7%, indicating a higher rate of missed detections for small and challenging lesions. In comparison with YOLOv8n, EAG-YOLOv11n improves precision, mAP@50, and mAP@50–95 by 2.2%, 1.9%, and 5.2%, respectively, demonstrating its superior robustness in accurately detecting lesions across different scales and complex scenarios. Compared with YOLOv9tiny, EAG-YOLOv11n increases the number of parameters by only 0.71M while reducing GFLOPs by 0.5G. Its recall, mAP@50, and mAP@50–95 improve by 2.9%, 1.1%, and 1.8%, respectively, demonstrating a better balance between detection performance and model complexity. YOLOv10n exhibits limited overall detection capability, with a recall of only 73.6% and mAP@50–95 of 39.5%. Although YOLOv12n achieves a high precision of 90.7%, its recall is only 77.0%, and its mAP@50 and mAP@50–95 reach 84.4% and 48.2%, respectively. Compared with RT-DETR-L, EAG-YOLOv11n achieves higher detection accuracy and maintains a better balance between precision and model complexity.

In addition to outperforming mainstream YOLO models, EAG-YOLOv11n also demonstrates competitive advantages compared with other recently improved YOLO-based architectures designed for specific crops. For example, ESM-YOLOv11 [22] integrates EMA attention, Slim-neck, and the MPDIoU loss to improve YOLOv11 for detecting a single dense peanut leaf disease, achieving 2.48M parameters, 5.80 GFLOPs, and an mAP of 96.90%. These results demonstrate strong performance; however, the model is primarily validated in tightly controlled conditions characterized by a single disease category and spatially clustered lesions, which may affect its direct transferability to multi-class field environments. By contrast, EAG-YOLOv11n is designed to enhance lesion perception in more complex field conditions involving three rice leaf diseases with irregular lesion distributions and background interference, while maintaining a lightweight structure with 2.58M parameters and 6.6 GFLOPs. Similarly, Pyramid-YOLOv8 [21] employs a multi-attention fusion mechanism and a C2F-Pyramid module within the YOLOv8x framework to detect rice blast, achieving an mAP of 84.3%. Nevertheless, this performance is obtained at the cost of a substantially larger model size with 42.0M parameters and a computational complexity of 196.2 GFLOPs, which may limit its applicability to real-time scenarios on resource-constrained devices. In comparison, EAG-YOLOv11n achieves a comparable mAP@50 of 87.3% with less than 1/15 of the parameters and approximately 1/30 of the GFLOPs, indicating better suitability for deployment in mobile or edge-based agricultural monitoring systems.
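The "less than 1/15" and "approximately 1/30" figures follow directly from the parameter counts and GFLOPs quoted above, as the short check below shows. The dictionary layout and variable names are illustrative; the numbers are those given in the text.

```python
# Parameter counts (millions) and computational cost (GFLOPs) quoted in the text.
params_m = {"EAG-YOLOv11n": 2.58, "Pyramid-YOLOv8": 42.0}
gflops = {"EAG-YOLOv11n": 6.6, "Pyramid-YOLOv8": 196.2}

param_ratio = params_m["EAG-YOLOv11n"] / params_m["Pyramid-YOLOv8"]
flops_ratio = gflops["EAG-YOLOv11n"] / gflops["Pyramid-YOLOv8"]

# Express each ratio as "1/x" to match the wording used in the discussion.
print(f"parameters: 1/{1 / param_ratio:.1f} of Pyramid-YOLOv8")
print(f"GFLOPs:     1/{1 / flops_ratio:.1f} of Pyramid-YOLOv8")
```

Note that the two models were evaluated on different datasets, so the ratio describes model cost only and does not by itself rank their accuracy.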

In summary, EAG-YOLOv11n achieves high detection accuracy while maintaining a lightweight structure and strong adaptability for real-world deployment. The model can automatically and accurately identify three major rice leaf diseases (bacterial leaf blight, leaf blast, and brown spot) from field images, supporting early warning, precision spraying, and smart agricultural monitoring through integration with drones, mobile devices, and IoT platforms. Although chemical pesticide application remains a common practice in rice disease management, early and accurate detection is essential for optimizing pesticide use, reducing unnecessary chemical inputs, and minimizing environmental impact. Therefore, the proposed model provides not only a reliable visual detection framework but also a practical and sustainable solution that can be readily applied in intelligent and precision agriculture.

4.2. Future Improvement for EAG-YOLOv11n

Although this study has made progress in rice leaf disease detection, several limitations remain to be addressed. To begin with, the performance of the model is highly dependent on the scale and quality of the dataset, which restricts its applicability in scenarios with limited samples or non-uniform data distribution. In addition, the RLD-3C dataset used in this study was entirely collected from the experimental fields of the Hunan Academy of Agricultural Sciences between May and late July 2025, resulting in a relatively homogeneous data source. Variations in rice growth environments (e.g., farm locations), collection periods (e.g., different seasons), and imaging devices may cause shifts in feature distributions, thereby limiting the model’s generalization capability under diverse environmental conditions. Furthermore, the current framework relies solely on visible light imagery, which is susceptible to illumination fluctuations and background noise, potentially leading to misclassification in complex field scenarios. Finally, the current model is limited to identifying diseased areas without distinguishing the developmental stages of diseases or offering further diagnostic insights. In practical applications, different disease stages have varying impacts on crop growth, and thus mere detection remains inadequate.

To alleviate the influence of dataset limitations on model performance, future work will employ strategies such as data augmentation, semi-supervised learning, and diffusion-based data generation to mitigate the challenges of insufficient training samples and imbalanced data distribution, thereby enhancing the model’s adaptability and robustness in real-world scenarios. In addition, domain adaptation techniques will be employed, and diverse multi-source, multi-scene datasets will be constructed to enhance the model’s generalization across different crops, environments, and acquisition conditions. Given that visible light imagery offers limited spectral information, resulting in reduced feature discrimination under complex illumination and background variations, future work will explore multimodal fusion approaches. The integration of hyperspectral imaging is expected to provide richer physiological and biochemical cues (e.g., chlorophyll variations), thus improving disease characterization accuracy and model generalization. To further enhance diagnostic capability, future research will collect and analyze image data over time for dynamic monitoring of disease progression. In addition, future work can integrate large language models to interpret detection results and perform knowledge reasoning, providing farmers with targeted management and control recommendations and enhancing the model’s applicability in agricultural production. Finally, future research will focus on the lightweight optimization of the model to improve inference speed and hardware adaptability. This improvement will facilitate the deployment of EAG-YOLOv11n on field monitoring cameras and farmers’ smartphones, enabling the construction of an integrated agricultural system. The system will combine remote intelligent real-time monitoring with on-site image recognition, which is expected to significantly enhance the timeliness and practicality of disease detection and provide more efficient technical support for the precise prevention and control of rice diseases.