1. Introduction

Multi-span greenhouses, recognized for their high space-use efficiency and precise environmental controllability, have emerged as a dominant configuration of facility agriculture [1]. Concurrent progress in artificial intelligence and robotics has accelerated the adoption of mobile robots in greenhouse operations, advancing the sector from conventional automation toward intelligent production [2]. As facility agriculture scales and advances, the use of multi-span systems in vegetable, floriculture, and seedling production continues to grow. However, the large footprints of single-structure systems, compressed operational cycles, and stringent quality uniformity requirements make it increasingly difficult for manual inspection, crop protection, and intralogistics to meet the integrated demands for cost, efficiency, and traceability [3]. Autonomous mobile robots equipped with perception, localization, and planning capabilities thus represent a critical pathway toward enhancing both the efficiency and quality of greenhouse production.

Agricultural robotics has become increasingly integrated with core perception technologies such as Simultaneous Localization and Mapping (SLAM), inertial navigation, and machine vision. Alongside advances in path planning and decision-making, these technologies have converged into closed-loop architectures that encompass perception, localization, mapping, planning, and control. Such integration has emerged as the mainstream paradigm enabling stable autonomous operation of mobile robots across diverse scenarios and ground conditions and represents an inevitable trajectory in the evolution of next-generation agricultural machinery [4].

With the deep integration of artificial intelligence and robotics, agricultural mobile robots are taking on an increasing range of tasks, including harvesting [5,6,7], material handling [8,9], crop protection [10,11], and monitoring [12,13]. However, greenhouse environments present unique challenges for robotic localization and navigation due to complex lighting conditions, limited availability of Global Navigation Satellite System (GNSS) signals, and highly repetitive structural patterns [14].

To improve robustness in such environments, research has progressed in three principal directions: multi-sensor fusion [15], LiDAR-based SLAM [16], and semantic mapping [17]. Kutyrev et al. [18] developed an autonomous robotic platform for apple orchards that integrates inertial and GNSS navigation with LiDAR-based obstacle avoidance, which can traverse orchard rows and support concurrent operations such as spraying and mowing. Tsiakas et al. [19] proposed a camera-LiDAR fusion method that couples range sensing with semantic segmentation to identify rails and crop rows in greenhouse environments, thereby enabling autonomous row traversal and automated inspection. Gupta et al. [20] employed a hybrid U-Net model to enhance weed detection and achieved multi-class weed identification through semantic segmentation, thereby improving weed control technologies. Saati et al. [21] presented an improved two-stage path-planning algorithm that achieved superior navigation performance in dynamic, crowded indoor environments.

Although significant advances have been made in prior research, studies on a unified, integrated navigation scheme that simultaneously addresses the distinct characteristics of the intralogistics and cultivation areas in multi-span greenhouses remain limited. In multi-span greenhouses, the intralogistics area is characterized by open space, and autonomous navigation performance is governed by the accuracy of localization and mapping, the quality of path planning, and the effectiveness of motion control and obstacle avoidance strategies. However, glass curtain walls create strong specular reflections and sparse usable features, complicating both LiDAR and vision-based perception. In contrast, the cultivation area offers explicit path constraints via pre-installed rails. Yet, the interleaving of corridors and rails, combined with frequent entry, exit, and rail-switching operations, places stringent demands on track recognition, lateral switching, and stable inter-row traversal. Given the multiplicity of operating scenarios in greenhouses, navigation must switch modes according to subtask objectives. The central challenge for practical deployment is to realize a unified, robust localization–navigation framework that performs consistently across the heterogeneous intralogistics and cultivation environments.

Existing works typically focus either on LiDAR-SLAM optimization in logistics-like open spaces or on vision-based servoing for navigation along predefined rails in cultivation zones. Few have addressed the integration of these approaches into a cross-region framework capable of handling the heterogeneous conditions of intralogistics and cultivation areas. Moreover, the challenge of seamlessly switching between open-space navigation and rail-constrained traversal remains underexplored.

Research on robotic control further highlights this gap. Classical approaches, including kinematic modeling, proportional-integral-derivative (PID) control, and pure-pursuit trajectory tracking, have demonstrated effectiveness in structured field environments. More advanced methods, such as adaptive control, model predictive control (MPC), and reinforcement learning, have been introduced to cope with uncertainties in dynamic operating conditions. However, the unique characteristics of greenhouses, such as glass-induced reflections, narrow passages, and repetitive layouts, restrict the direct transfer of these methods from open-field applications.

To systematically evaluate robotic navigation and control strategies for greenhouse operations, synthesis criteria can be classified into three categories: technical (e.g., mapping accuracy, localization error, computational efficiency), functional (e.g., navigation robustness, rail-switching success rate, obstacle avoidance capability), and operational (e.g., labor savings, adaptability to heterogeneous layouts, ease of system integration). Together, these criteria define the practical requirements for deployment and provide a benchmark for assessing the effectiveness of novel approaches.

Motivated by these challenges, this study develops an integrated localization-navigation framework tailored to multi-span glass greenhouses, combining algorithmic innovations with a versatile robotic chassis. The main contributions are as follows:

- (1) We proposed an integrated cross-region localization-navigation framework that accounted for the distinct characteristics of both the intralogistics and cultivation areas, establishing a coordinated aisle-rail workflow and control strategy.

- (2) In the intralogistics area, we introduced a series of multi-layer optimizations into the LiDAR-Inertial Odometry via Smoothing and Mapping (LIO-SAM) framework, designed to suppress glass-induced reflections and mitigate feature sparsity, thereby enhancing mapping accuracy and strengthening localization robustness. When combined with A-Star global planning and the Dynamic Window Approach (DWA) for local obstacle avoidance, this approach ensured reliable autonomous navigation.

- (3) In the cultivation area, we developed a visual-servo mechanism that coupled YOLOv8n track-center detection with pure pursuit control, enabling cross-ridge rail switching without whole-vehicle steering while ensuring stable inter-row traversal.

- (4) We completed platform integration and field validation, which confirmed improvements in localization accuracy, rail-switching efficiency, and navigation robustness, and further demonstrated the integrated platform’s potential for extension to dynamic obstacle avoidance and multi-robot coordination.

2. Materials and Methods

2.1. Representative Operating Scenarios in Multi-Span Greenhouses

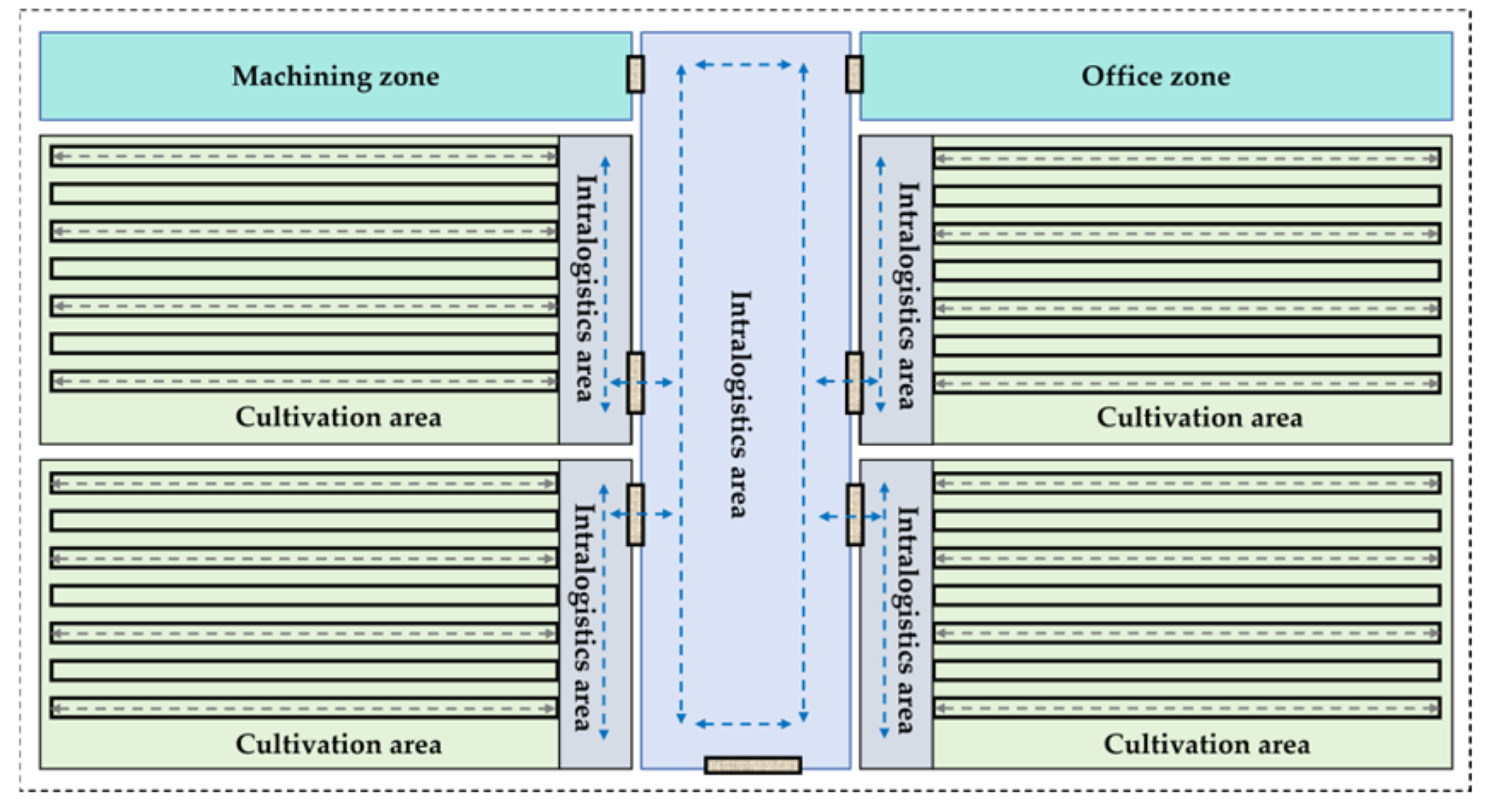

The experimental site is the Nijiawan Greenhouse in Suzhou, Jiangsu Province, China (120.62° E, 31.32° N). As shown in Figure 1, contemporary multi-span greenhouses are organized into two core functional areas that collectively support high-efficiency facility-agriculture workflows. The intralogistics area, typically aligned with glass curtain walls, serves as the hub for material flow and operational scheduling, covering tasks such as the transfer of production inputs and harvested products, as well as non-cultivation activities like transport, loading, unloading, and sorting. The cultivation area forms the main spatial component, equipped with crop-production installations and work aisles. This area is coupled with integrated environmental control systems that maintain optimal microclimatic conditions, ensuring stable production and high quality. Together, these two partitions are complementary and synergistic, forming a complete operational system that enables continuous and standardized greenhouse production.

2.2. Structural Optimization and System Integration

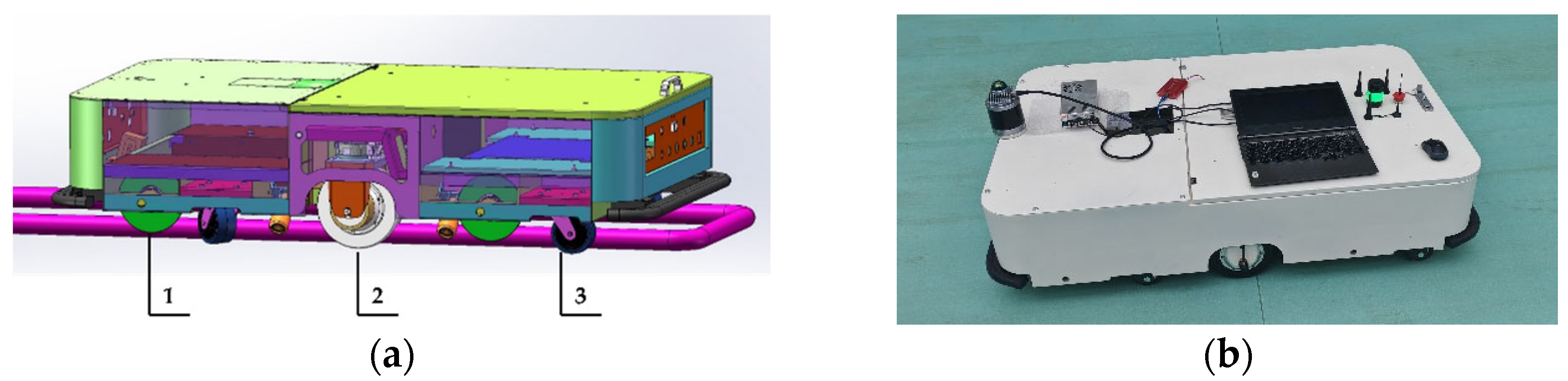

To meet the operational demands of mixed rail and ground surfaces in multi-span greenhouses, we have developed a dual-mode drive chassis with aisle-rail coordination for mobile robots, as shown in Figure 2. The chassis features a composite wheelset architecture that integrates an inner rail drive wheel, an outer ground drive wheel, and a top profile-tracking steering-suspension module. Each component is powered by an independent motor, enabling multimodal motion capabilities, including longitudinal travel, directional turning, and lateral rail-switching. Rail support wheel sets are mounted at the front and rear, while ground-mounted caster wheel assemblies provide additional support, ensuring stable load distribution and postural stability in both rail-running and ground-driving modes. Overall, the chassis maintains reliable ground maneuverability and leverages rail-imposed geometric constraints to enhance localization accuracy and trajectory tracking, providing a robust hardware platform for continuous cross-region operation in greenhouse environments.

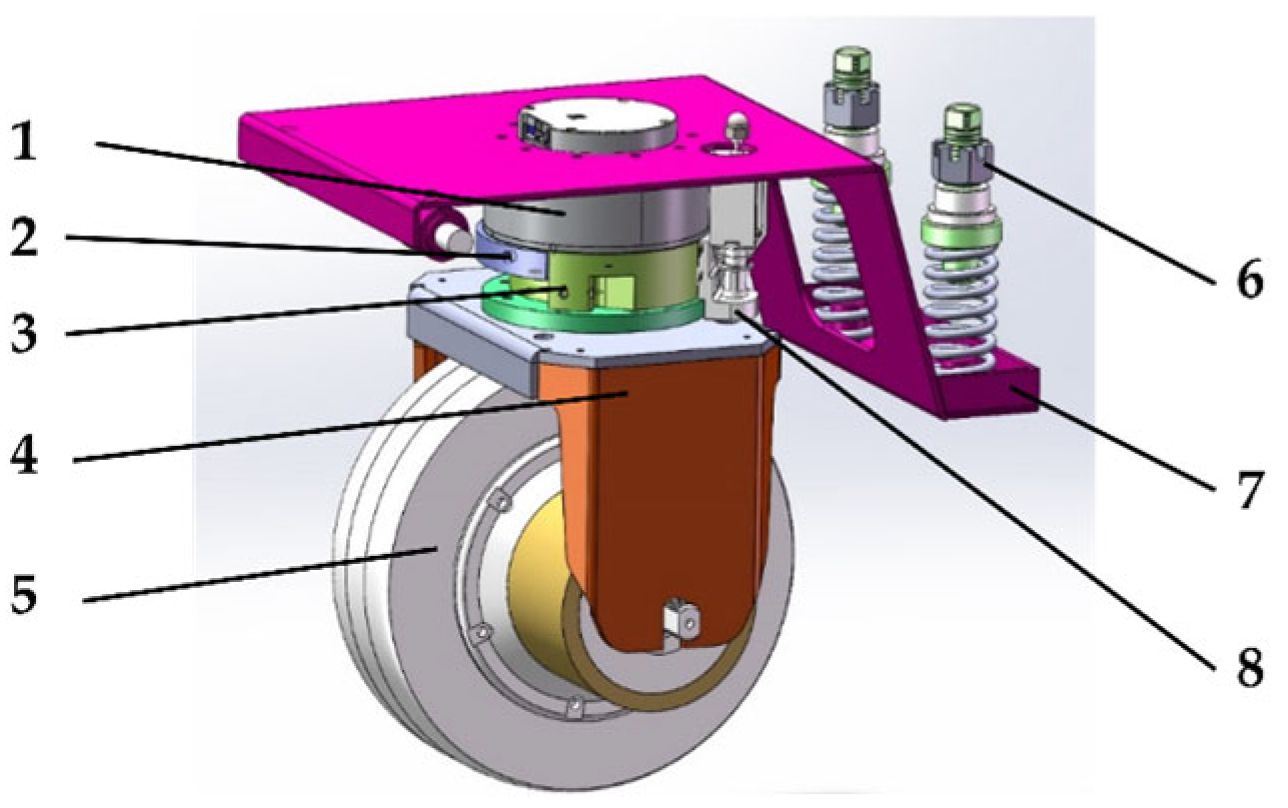

In greenhouse environments, mobile robots were subjected to impact loads when traversing dynamic terrains such as rail entry and exit, which induced postural disturbances and compromised tire-ground adhesion. To alleviate these effects, the drive-wheel connection plate was redesigned with integrated elastic elements, including springs and dampers, forming an energy-storing and shock-buffering linkage structure that absorbed impacts and attenuated vibrations during terrain transitions. Furthermore, to accommodate the lateral maneuverability required for inter-row operations, the chassis wheelset was equipped with a steering motor and a dedicated linkage mechanism, resulting in a wheel-rotation module that enabled efficient reorientation and lateral displacement on inter-row tracks, thereby reducing dependence on whole-vehicle steering during rail-switching maneuvers. Collectively, these enhancements markedly improved the robot’s stability and traversability under critical conditions, including cross-track transitions and inter-row lateral maneuvers. The design of the enhanced profile-tracking steering-suspension module is illustrated in Figure 3.

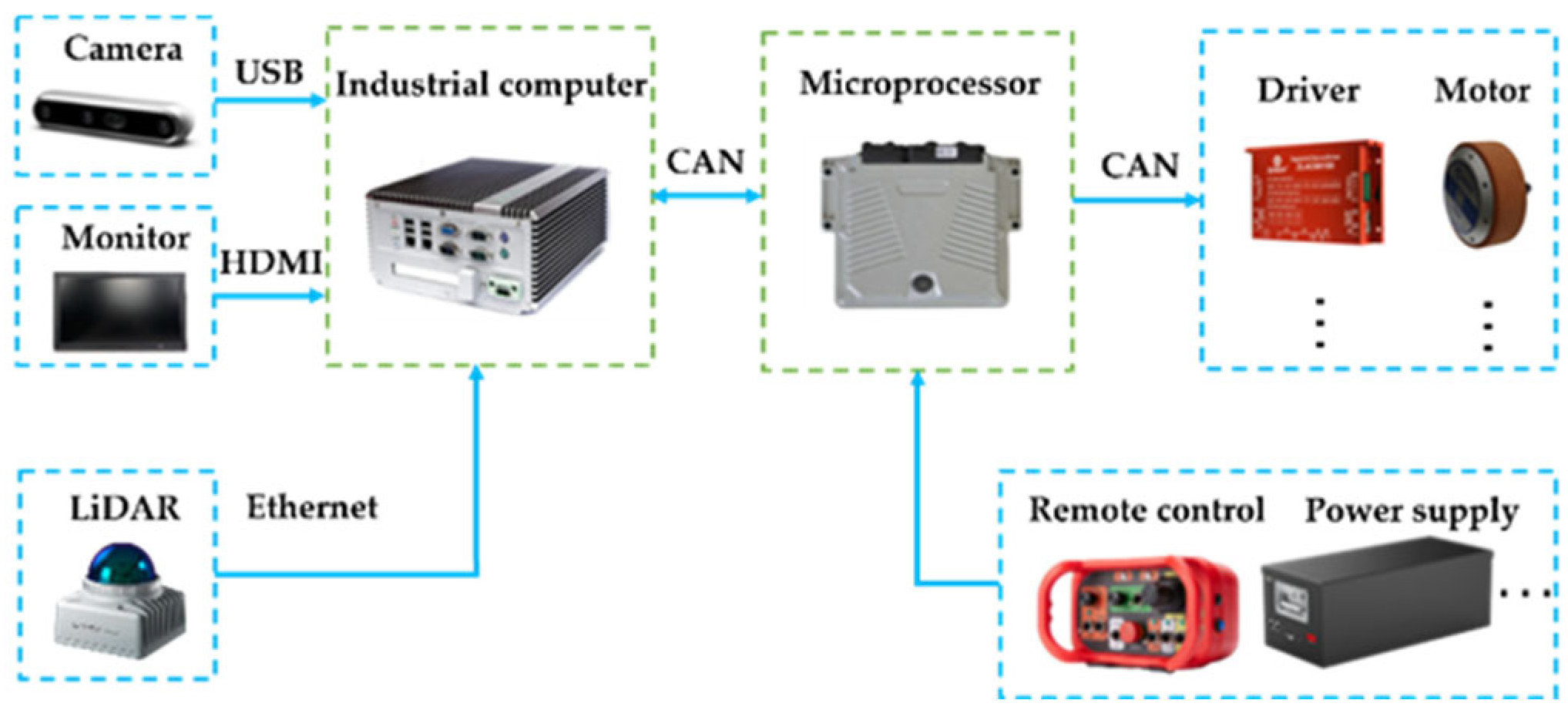

Considering overall performance and economy, this study utilizes a solid-state LiDAR (model Mid-360; Livox, Shenzhen, China) as the primary perception unit for map construction in greenhouse environments. Compared to mechanically scanned LiDARs, this sensor affords low cost, a compact footprint, and high responsiveness, while also supporting Ethernet-based direct interfacing with an Industrial Personal Computer (IPC) to enable time-synchronized acquisition and output of point-cloud data and measurements from the integrated IMU. In parallel, rail identification is carried out using a D415 depth camera (model RealSense D415; Intel Corporation, Santa Clara, CA, USA) to capture structured visual (RGB-D) information. Sensor streams are processed on the IPC to generate target waypoints and trajectory commands, which are transmitted to the low-level controller. At the chassis level, a suspension-drive mechanism is employed to handle mixed rail or ground conditions. A geared DC motor drives longitudinal motion, while a servo motor is used for steering and postural adjustments. The IPC functions as the core control unit, managing real-time multi-sensor data acquisition and timestamp synchronization, environment mapping, rail detection algorithms, motion planning, command dissemination, and system-state monitoring. The overall control architecture is shown in Figure 4.

This study further summarizes the key performance indicators of the robot under real greenhouse operating conditions. The computing platform, an IPC (Intel Core i7-10700, 2.9 GHz, 8 cores, 16 GB RAM), enables real-time multi-sensor fusion and SLAM processing, while integration with an NVIDIA GTX 1660 Super GPU (6 GB VRAM) extends the system’s capability to advanced visual perception and deep-learning inference. The sensing suite is energy-efficient, with power consumption of approximately 7 W for the LiDAR and 2 W for the depth camera. The chassis incorporates dual 800 W hub servo motors and dual 350 W two-stage reduction steering motors, supporting a maximum payload capacity of 300 kg. In greenhouse environments with mixed rail–ground navigation, the robot sustains continuous operation for approximately 2.5–3.0 h.

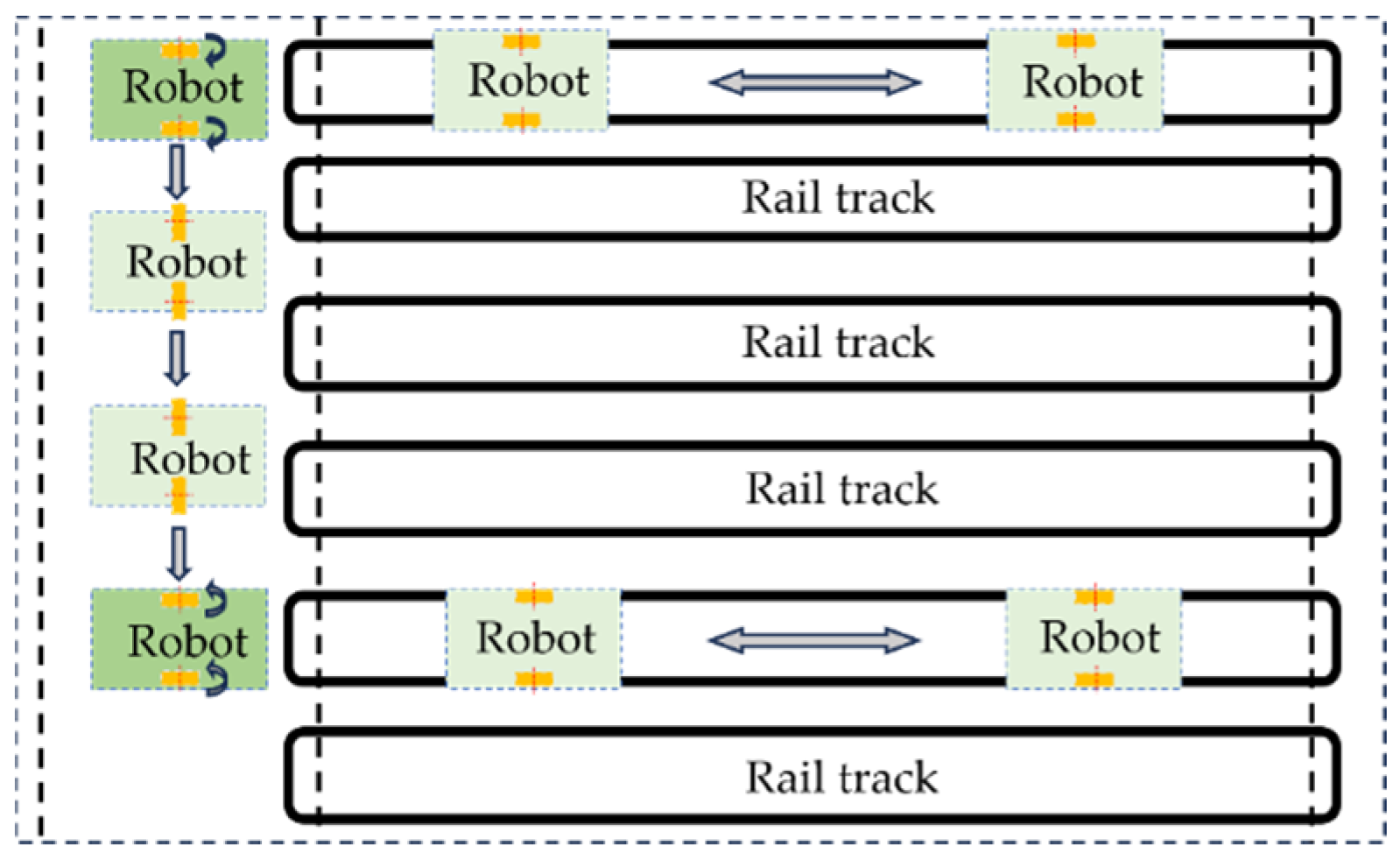

In the intralogistics area, the mobile robot must navigate dynamic obstacles, such as logistics equipment and personnel. Upon arrival at the target ridge end in the cultivation area, visual recognition is used for rail alignment. During on-rail operation, the robot follows a straight-line trajectory along the prescribed path. When departing the rail for row switching, a dedicated rotation motor actuates the profile-tracking wheelset to perform lateral rail switching, allowing inter-row or cross-ridge maneuvers while maintaining a nearly constant vehicle orientation. After completing the cross-ridge operation, the wheelset reorients to its nominal state, and the robot resumes longitudinal travel along the rail. This operational strategy enhances the adaptability and ensures continuity of tasks in complex greenhouse environments. The layout of the multi-span greenhouse is shown in Figure 5.

2.3. Global Mapping in the Intralogistics Area via Optimized LIO-SAM

To address the challenges posed by glass-induced reflections and sparse feature availability in the intralogistics area of multi-span greenhouses, this study adopts a fusion scheme integrating a solid-state LiDAR with an IMU and introduces targeted optimizations to the LIO-SAM algorithm [22] to improve mapping accuracy and robustness. The original LIO-SAM framework was developed for mechanically rotating LiDARs, where point clouds are sequentially organized by scan lines and acquisition order. Feature extraction and motion-distortion correction for edge and planar points rely on the assumption of local geometric continuity among adjacent points within the same scan line. In contrast, the solid-state LiDAR employs an optical phased array for non-repetitive scanning, generating point clouds without scan-line indices. Consequently, the spatiotemporal adjacency of points cannot be directly mapped to the scan-line order of mechanical LiDARs. Directly applying such data to the unmodified LIO-SAM framework would result in projection-model mismatches and failures in feature extraction.

To address this, we utilize the official driver SDK to republish the raw point cloud in a custom message format, adding precise timestamps and intensity values to each point. This enables subsequent motion de-skewing and temporal resampling. The functionality is implemented in a driver node that performs real-time data acquisition and format conversion. In the front-end preprocessing module, the received custom data are temporally resampled and mapped onto a virtual scanline lattice, where each point is assigned equivalent ring identifiers and column indices. In this way, prior to entering the feature-extraction stage of LIO-SAM, we restore its reliance on scan sequencing and local geometric continuity. Importantly, this adaptation is confined to data ingestion and feature preprocessing, with the back-end optimization and the overall LIO-SAM architecture remaining unmodified. This preserves the real-time performance and modularity of the system while enhancing the applicability of solid-state LiDAR.
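As a minimal illustration of the virtual scan-line mapping described above, the following Python sketch bins a single point by elevation into a ring identifier and by azimuth into a column index. It covers only the geometric binning, not the temporal resampling. The function name `assign_ring_and_column`, the ring and column counts, and the vertical field-of-view limits are illustrative assumptions and are not the parameters used in this study.

```python
import math


def assign_ring_and_column(point, n_rings=16, n_cols=1800,
                           fov_down_deg=-7.0, fov_up_deg=52.0):
    """Map one (x, y, z) point onto a virtual ring/column lattice.

    All default values are assumptions chosen for illustration.
    Returns (ring, col), or None if the point lies outside the vertical FOV.
    """
    x, y, z = point
    # Elevation above the sensor's horizontal plane, in degrees.
    elev = math.degrees(math.atan2(z, math.hypot(x, y)))
    if not fov_down_deg <= elev <= fov_up_deg:
        return None
    # Azimuth wrapped to [0, 360) degrees.
    azim = math.degrees(math.atan2(y, x)) % 360.0
    ring_f = (elev - fov_down_deg) / (fov_up_deg - fov_down_deg) * n_rings
    ring = min(int(ring_f), n_rings - 1)
    col = min(int(azim / 360.0 * n_cols), n_cols - 1)
    return ring, col
```

Points sharing a ring identifier can then be ordered by column index, which approximates the within-scan-line adjacency that the LIO-SAM feature extractor expects.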

To address the decline in mapping accuracy observed in the intralogistics area, we introduce the following enhancements to LIO-SAM within a solid-state LiDAR-IMU fusion framework:

- (1) Preprocessing optimization. An intensity-threshold filter is applied to suppress specular artifacts caused by high-reflectance glass façades, improving point-cloud geometric consistency and feature reliability. Given the low-speed operation in greenhouses, the weight of the IMU preintegration factor is moderately reduced to attenuate high-frequency jitter and slow drift in the estimated trajectory.

- (2) Strengthened feature extraction. An adaptive curvature-thresholding strategy based on distribution quantiles is adopted, designating low-curvature points as planar candidates and high-curvature points as edge candidates. In feature-sparse environments, such as long corridors or open spaces, the thresholds are relaxed to ensure sufficient constraints are included in registration and back-end optimization, maintaining stable performance across diverse scene densities.

- (3) Corridor-prior constraint factor. To address trajectory drift from lateral geometric under-constraint in symmetric corridors, we introduce a handcrafted prior within the factor-graph optimization. Specifically, a weak directional-consistency constraint aligned with the odometry-inferred principal direction is applied, supplemented by a lateral-position regularization term. This suppresses drift caused by structural symmetry and enhances stability and trajectory accuracy in degenerate environments.

- (4) Upgraded mapping and loop closure. The Scan Context module is enhanced by enlarging the search radius and refining the matching criterion, thereby reducing the false-match rate and improving loop-closure robustness. In addition, keyframe intervals are shortened and the local-map cache is extended to improve temporal continuity and spatial coverage, compensating for vertical feature discontinuities due to the narrow vertical field of view of solid-state LiDAR.

- (5) Lightweight implementation and post-processing. To satisfy real-time requirements on embedded platforms, we apply 5:1 keyframe downsampling, which preserves acceptable accuracy given the substantial inter-frame overlap at low robot speeds. Following map construction, interactive_slam is employed for operator-in-the-loop local corrections to remove residual specular artifacts and distortions from glass reflections. The optimized core parameters are presented in Table 1.
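The preprocessing and feature-extraction steps in items (1) and (2) can be sketched as follows. This is a simplified illustration under stated assumptions, not the implementation used in this study: the intensity band limits, the quantile levels, the relaxation step, and all function names are hypothetical, and the direction of the intensity threshold (here a band that rejects both very weak and very strong returns) is likewise assumed.

```python
def filter_by_intensity(points, min_intensity=10.0, max_intensity=150.0):
    """Keep (x, y, z, intensity) points whose intensity lies in an assumed band."""
    return [p for p in points if min_intensity <= p[3] <= max_intensity]


def quantile(values, q):
    """Linear-interpolation quantile of a non-empty sequence, 0 <= q <= 1."""
    s = sorted(values)
    pos = q * (len(s) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(s) - 1)
    return s[lo] + (s[hi] - s[lo]) * (pos - lo)


def classify_by_curvature(curvatures, planar_q=0.3, edge_q=0.9,
                          sparse=False, relax=0.1):
    """Label points by curvature quantiles.

    Points at or below the planar quantile become planar candidates and
    points at or above the edge quantile become edge candidates. When
    `sparse` is True, both quantile levels are relaxed by `relax` so that
    more candidates are admitted (an assumed relaxation rule).
    Returns two lists of point indices: (planar, edge).
    """
    if sparse:
        planar_q = min(planar_q + relax, 1.0)
        edge_q = max(edge_q - relax, 0.0)
    lo = quantile(curvatures, planar_q)
    hi = quantile(curvatures, edge_q)
    planar = [i for i, c in enumerate(curvatures) if c <= lo]
    edge = [i for i, c in enumerate(curvatures) if c >= hi]
    return planar, edge
```

Because the thresholds follow the curvature distribution of each scan rather than fixed values, the number of candidates scales with the scan itself, which is the property the adaptive strategy relies on in feature-sparse corridors.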

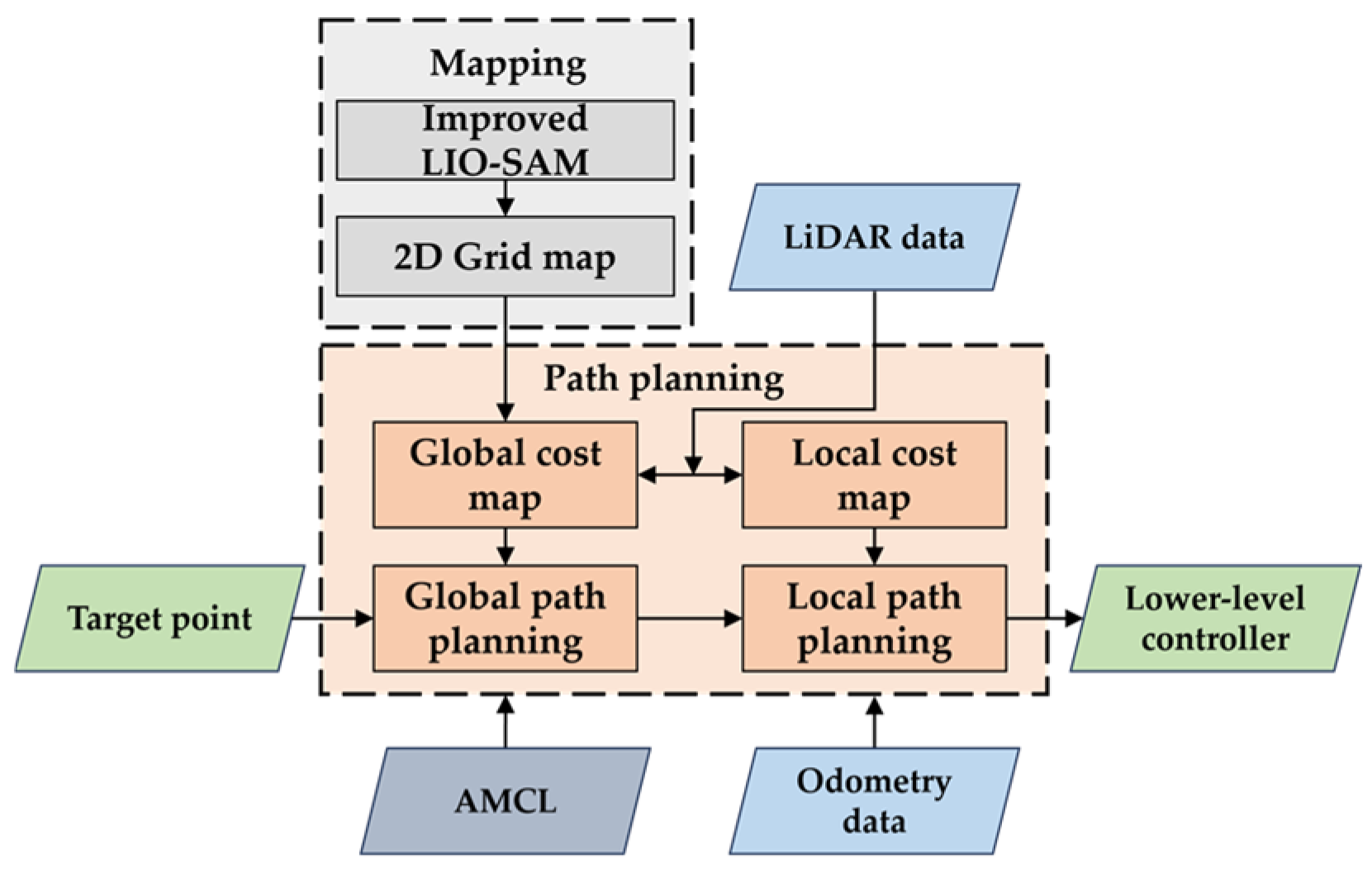

2.4. Path Planning for Mobile Robots in the Intralogistics Area

The robot’s path planning and navigation are implemented using the ROS Navigation framework, built on the move_base package. This framework integrates external sensor data with an occupancy-grid representation, offering extensible navigation through plugin-based global and local planners, along with layered costmaps. Adaptive Monte Carlo Localization (AMCL) fuses odometry and LiDAR scans to estimate the robot’s real-time pose within a known map. The resulting localization plays a critical role in updating the global costmap and establishing the initial condition for path planning. Upon receiving a target pose, the A-Star planner generates a connected, cost-optimal global path on the global costmap. The DWA performs real-time optimization of the local trajectory, while LiDAR and IMU observations continuously update the local costmap, improving obstacle avoidance and trajectory tracking in dynamic and confined environments. The resulting path is translated into chassis-executable linear and angular velocity setpoints, which are transmitted via the Controller Area Network (CAN) bus to the low-level controller, enabling closed-loop actuation of the drive and steering subsystems, as shown in Figure 6.
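To make the global-planning step concrete, the following sketch runs A-Star on a small binary occupancy grid. It is a simplified stand-in and not the move_base planner plugin itself: it assumes unit step costs, 4-connectivity, and a binary free/occupied grid, whereas the costmap used in practice carries graded cell costs.

```python
import heapq


def astar(grid, start, goal):
    """A-Star on a 4-connected occupancy grid (0 = free, 1 = occupied).

    Returns the list of (row, col) cells from start to goal, or None if
    the goal is unreachable.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance: admissible for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]
    g_cost = {start: 0}
    parent = {start: None}
    closed = set()

    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell in closed:
            continue  # stale heap entry
        closed.add(cell)
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if not (0 <= nr < rows and 0 <= nc < cols) or grid[nr][nc]:
                continue
            ng = g + 1
            if ng < g_cost.get((nr, nc), float("inf")):
                g_cost[(nr, nc)] = ng
                parent[(nr, nc)] = cell
                heapq.heappush(open_heap, (ng + h((nr, nc)), ng, (nr, nc)))
    return None
```

For example, on the grid `[[0, 0, 0], [1, 1, 0], [0, 0, 0]]` the planner must detour around the occupied middle row to travel from the top-left to the bottom-left cell.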

2.5. Design of Visual-Servo Inter-Row Rail Switching in the Cultivation Area

To enable inter-row traversal and rail switching in the cultivation area, a rail detection and pure pursuit control strategy based on YOLOv8n was developed. The detection system was implemented using the Ultralytics YOLOv8n framework, whose modular Backbone–Neck–Head architecture provides an effective balance between detection accuracy and inference efficiency [

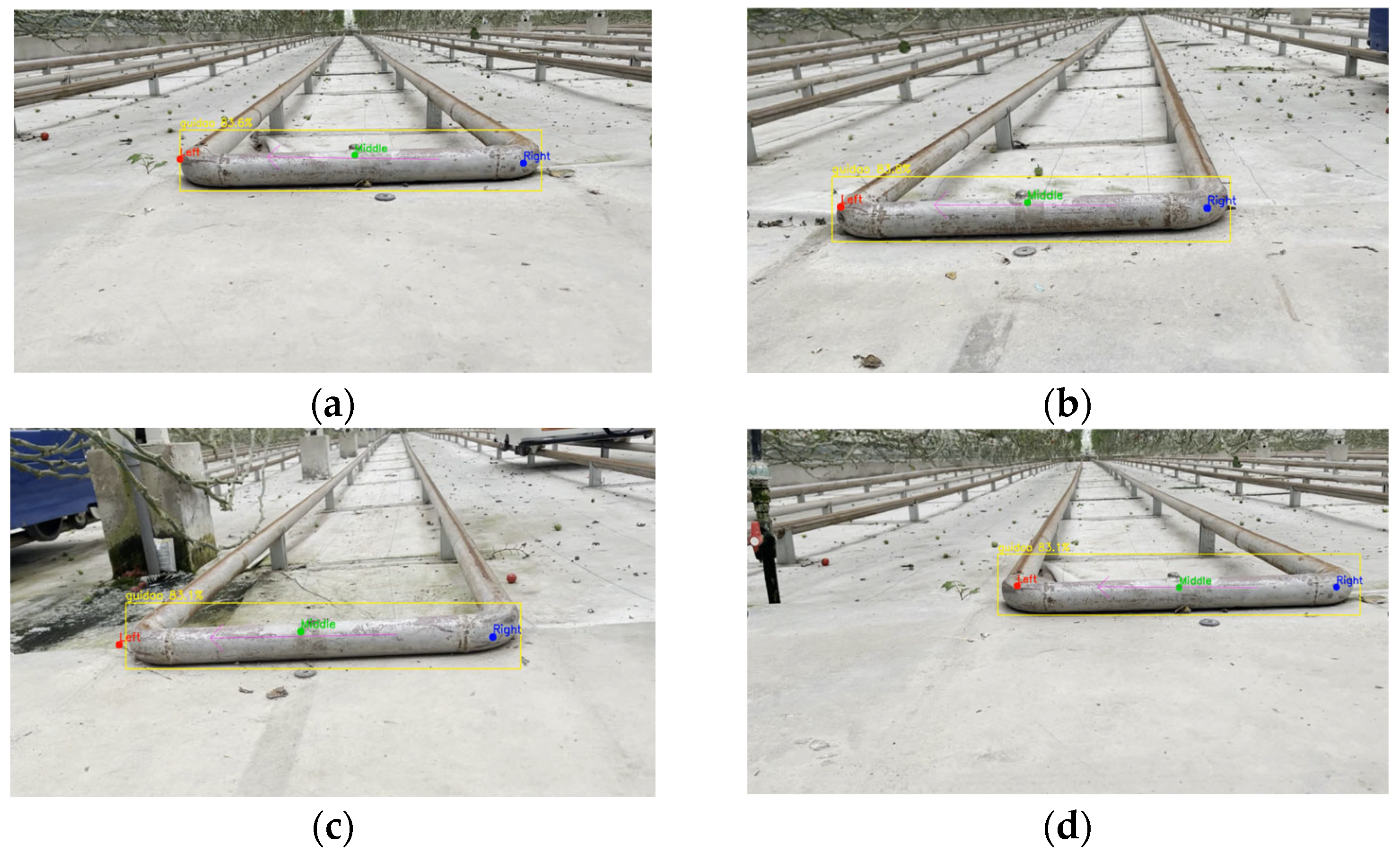

23]. For dataset construction, images were acquired using a depth camera across multiple time periods and under complex, interference-prone greenhouse conditions, followed by manual annotation with LabelImg software(version 1.8.6). Considering the spatial distribution of rails in planar images, the dataset was categorized into “Left,” “Middle,” and “Right” classes to distinguish among different rails in multi-rail environments and avoid misclassification.

To enhance dataset diversity and improve model generalization, deliberate variations in acquisition conditions were introduced. Specifically, the camera was positioned at an operational height of approximately 0.6 m, with images captured under different inclination angles. The dataset also incorporated diverse illumination conditions, including natural daylight, partial shading, and artificial supplementary lighting, as well as artifacts such as rail-surface reflections, soil residues, foliage occlusion, and background clutter. This variability was intended to strengthen the model’s robustness against environmental disturbances in greenhouse scenarios. A dataset of 1200 images was constructed and divided into training, validation, and test sets at a 7:2:1 ratio. Images were resized to 640 × 640 pixels via bilinear interpolation. Training hyperparameters were determined through grid search, including a batch size of 4, an SGD optimizer (learning rate 0.00251, momentum 0.938, weight decay 0.0006), and 300 training epochs. The resulting model achieved a recall of 98.5%, a precision of 98.6%, an F1-score of 98.5% (the harmonic mean of precision and recall), an mAP@50 of 98.2%, and an mAP@50-95 of 73.9%. These results collectively indicate the high accuracy and robustness of the proposed detection system in rail recognition tasks.
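As a quick consistency check on the figures above, the short sketch below derives the subset sizes implied by a 7:2:1 split of 1200 images and recomputes the F1-score from the reported precision and recall. The helper names are hypothetical, and the exact subset sizes assume the ratio was applied without rounding adjustments, which the text does not state.

```python
def split_counts(total, ratios=(7, 2, 1)):
    """Subset sizes for a ratio split; any remainder goes to the first subset."""
    unit = total // sum(ratios)
    counts = [r * unit for r in ratios]
    counts[0] += total - sum(counts)
    return tuple(counts)


def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2.0 * precision * recall / (precision + recall)


train, val, test = split_counts(1200)   # 840, 240, 120 under the stated assumption
f1 = f1_score(0.986, 0.985)             # about 0.9855 from the rounded inputs
```

The recomputed value agrees with the reported 98.5% to within the rounding of the published precision and recall.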

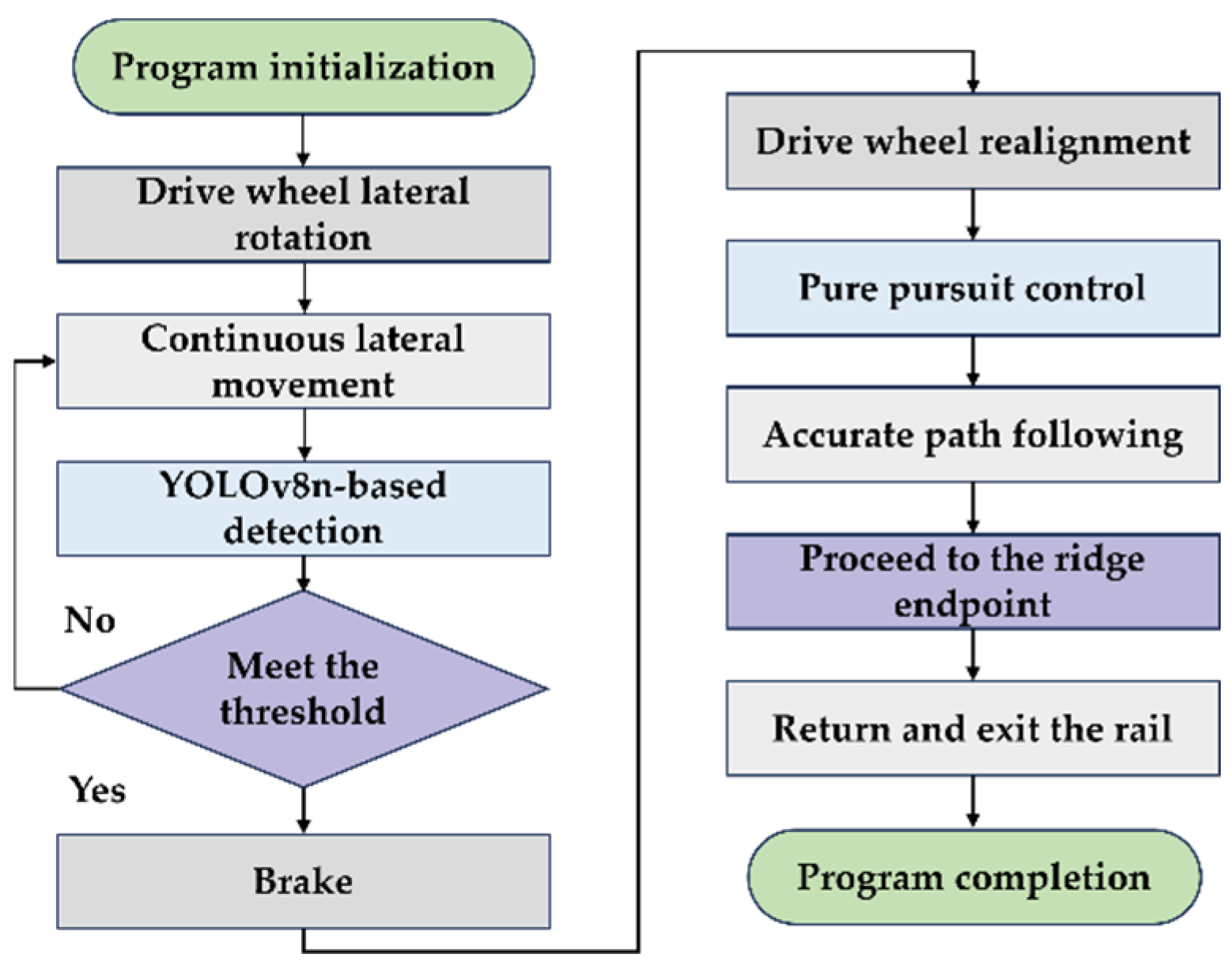

To address the interleaved configuration of inter-row rails and walking aisles in multi-span greenhouses, we implement an aisle-rail coordinated workflow. In terms of hardware, a steering-motor-actuated wheelset rotation mechanism is mounted above the drive wheel, allowing a 90° reorientation to realize lateral motion. This allows the robot to directly insert into adjacent rails without reorienting the vehicle body, thereby improving cross-ridge efficiency and operational continuity, as shown in Figure 7. The rail-entry sequence proceeds as follows. First, the robot aligns parallel to the target rail in the intralogistics aisle, and the host IPC issues a CAN command to rotate the steering motor, switching the wheelset to a lateral configuration. Next, the drive wheels perform lateral translation while maintaining the vehicle’s attitude, positioning the chassis at the desired row. A depth camera, coupled with YOLOv8n, performs real-time rail detection and extracts the rail-center coordinates. Once the detection is within a predefined field-of-view threshold, the system brakes and restores the wheelset to its longitudinal configuration. Finally, using the rail center at the braking moment and the fitted rail direction as references, a pure pursuit controller calculates pose and heading deviations, generates target linear and angular velocities, and closes the loop with the drive motor to achieve precise rail entry. Upon reaching the end of the rail, the robot halts and returns to the aisle, completing one inter-row operational cycle, as shown in Figure 8.
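The final step of the rail-entry sequence can be illustrated with the standard pure pursuit law, in which the commanded curvature is 2y / L² for a look-ahead point at lateral offset y and distance L in the robot frame. The sketch below is a generic form of that law under assumed conventions (x forward, y to the left, metres); the function name is hypothetical, and how the look-ahead point is derived from the detected rail center is not specified here.

```python
def pure_pursuit_cmd(target_x, target_y, linear_speed):
    """Return (linear, angular) velocity toward a look-ahead point.

    The target is expressed in the robot frame (x forward, y left), which
    is an assumed convention. Curvature = 2 * y / L^2, angular = v * curvature.
    """
    ld_sq = target_x ** 2 + target_y ** 2
    if ld_sq < 1e-9:
        # Look-ahead point coincides with the robot: command a stop.
        return 0.0, 0.0
    curvature = 2.0 * target_y / ld_sq
    return linear_speed, linear_speed * curvature
```

A target straight ahead yields zero angular velocity, while a target offset to the left yields a positive (counter-clockwise) turn rate that grows as the look-ahead distance shrinks.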

During the lateral translation phase, the onboard camera continuously captures rail images, and a YOLOv8n-based object detector is employed for real-time identification and localization of the rail center point, as shown in Figure 9.
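One plausible way to turn detections into the rail center and fitted rail direction is sketched below: the center of each bounding box is taken as a rail-center sample, and the rail heading is estimated as the principal axis of the accumulated samples (an orthogonal least-squares line fit). Both helper names are hypothetical, the choice of fitting method is an assumption, and the conversion from pixel and depth values to a ground-plane frame is not shown.

```python
import math


def bbox_center(x1, y1, x2, y2):
    """Center of an axis-aligned bounding box given its corner coordinates."""
    return 0.5 * (x1 + x2), 0.5 * (y1 + y2)


def fit_rail_heading(centers):
    """Heading (radians, in (-pi/2, pi/2]) of the best-fit line through samples.

    Uses the principal-axis formula theta = 0.5 * atan2(2*Sxy, Sxx - Syy),
    which also handles lines parallel to the y axis.
    """
    n = len(centers)
    if n < 2:
        raise ValueError("need at least two rail-center samples")
    mx = sum(p[0] for p in centers) / n
    my = sum(p[1] for p in centers) / n
    sxx = sum((p[0] - mx) ** 2 for p in centers)
    syy = sum((p[1] - my) ** 2 for p in centers)
    sxy = sum((p[0] - mx) * (p[1] - my) for p in centers)
    return 0.5 * math.atan2(2.0 * sxy, sxx - syy)
```

With only a few samples the fitted heading becomes sensitive to individual detection errors, so the estimate is most reliable when enough rail-center samples have been collected during the lateral translation.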

The proposed mechanism combines deep vision-based detection with dynamic motion control. By maintaining high-accuracy rail recognition and precise entry localization, it significantly improves the flexibility and efficiency of cross-ridge rail-switching maneuvers, offering a practical and engineering-ready solution for autonomous operation in complex greenhouse environments.

4. Discussion

In maps constructed with the optimized LIO-SAM pipeline, the geometric boundaries between crop rows are sharply delineated with no appreciable positional distortion (Figure 10). The maps also exhibit extended spatial coverage, capturing the principal structural elements and spatial organization of the multi-span greenhouse. In highly repetitive greenhouse structures, the optimized LIO-SAM demonstrates enhanced robustness and environmental adaptability. Compared with 2D mapping methods, 3D LiDAR SLAM point-cloud maps offer higher spatial resolution and richer detail, enabling precise estimates of inter-row spacing, trellis height, obstacle volumes, and clearances. These properties yield a more reliable environmental representation to support path planning, obstacle avoidance, and operational decision-making in complex agricultural tasks.

At higher speeds, larger inter-sample pose variation combined with bias-estimation latency leads to short-term localization drift (Table 3). Moreover, actuator-wheel interactions amplify residual orientation errors, further reducing navigation accuracy. Despite the increase in error at higher speed, the system maintains high localization reliability in greenhouse environments, and its accuracy remains sufficient for low-speed operational tasks.

The degradation in localization accuracy arises primarily from the shorter dwell time of the rail endpoint within the camera’s field of view at higher speeds, which induces YOLOv8n detection latency or misses (Table 4). The associated reduction in detected rail-center samples undermines straight-line fitting of the rail heading, thereby inflating localization error. Furthermore, the combined effect of longitudinal inertia and lateral translation during execution amplifies lateral deviation, ultimately reducing entry accuracy.

Overall, the proposed vision-servo rail-switching strategy achieves high success rates and efficiency under low-speed conditions, making it well suited for autonomous greenhouse tasks such as harvesting, inspection, and crop protection. At higher speeds, however, its performance is limited by compounded detection latency, localization drift, and execution-induced deviations, emphasizing the need for further improvements in perception fidelity and control design. Nevertheless, the method substantially enhances inter-row traversal and cross-rail maneuverability, providing a strong foundation for greenhouse automation and operational efficiency.

Future research will focus on improving perception accuracy in high-speed scenarios, where multi-modal sensing approaches (e.g., LiDAR, stereo vision, and depth cameras) may help mitigate detection delays and localization drift. The integration of advanced control strategies, such as adaptive and model predictive control, could further improve maneuverability under dynamic uncertainties. Extending the framework to cooperative multi-robot systems would enable task allocation, coordinated navigation, and information sharing, enhancing scalability for large-scale greenhouse operations. In addition, long-term field trials across different crop types, seasonal conditions, and greenhouse layouts will be essential to validate robustness and generalizability. These directions point toward advancing the intelligence, adaptability, and efficiency of autonomous greenhouse operations.

5. Conclusions

This study addressed the challenges of autonomous operation in multi-span glass greenhouses by proposing an integrated localization-navigation framework that accommodates the structural complexity and operational demands of both intralogistics and cultivation areas. At the hardware level, the drive and steering wheelsets were optimized to enable a high-efficiency lateral rail-switching mechanism, and a Controller Area Network (CAN)-based distributed control architecture was incorporated to support reliable operation across heterogeneous greenhouse scenarios.

To overcome the susceptibility of solid-state LiDAR to reflective artifacts in glass environments, we introduced a series of LIO-SAM optimizations, including reflection-intensity filtering, adaptive feature-extraction thresholds, and enhanced loop-closure detection. These refinements reduced the mean relative mapping error by 15%. Within the navigation stack, an A-Star global planner and DWA local obstacle avoidance were integrated with AMCL-based pose correction, achieving a lateral localization error of 6.01 cm and a heading error of 2.56° at 0.4 m/s, thereby meeting the requirements for autonomous mobility in intralogistics operations.

In the cultivation area, where interlaced rails and internal corridors complicate navigation, a coordinated scheme coupling lateral wheelset steering with YOLOv8n-based rail detection was developed to achieve cross-ridge switching without reorienting the entire vehicle. At speeds below 0.4 m/s, the maximum lateral error was limited to 12 cm and the heading error to 6°. With pure-pursuit-based visual-servo control, rail-switching efficiency improved by 25.2% at 0.2 m/s. Furthermore, the markerless design reduces manual maintenance costs, underscoring its practical engineering value.

Through platform-level integration and field validation, the proposed system demonstrated strong performance in localization accuracy, rail-switching efficiency, and navigation robustness. Future work will explore dynamic obstacle avoidance, cooperative multi-robot path planning, and broader deployment in large-scale greenhouses, thereby advancing the intelligence, adaptability, and efficiency of facility-agriculture operations.