1. Introduction

Autonomous ground robots are emerging as an important complementary solution to existing Remote Sensing (RS) infrastructure. These mobile platforms are capable of carrying advanced sensors at close proximity to crops and soil, enabling high-frequency, high-precision measurements that are less constrained by weather conditions and flight regulations than aerial platforms, but more constrained by terrain complexity and soil conditions. Their ability to operate autonomously and repeatedly in predefined patterns makes them particularly well suited for integration into ongoing data acquisition workflows in precision agriculture.

Remote sensing enables the monitoring of key crop parameters such as chlorophyll content, soil moisture, growth dynamics, and the occurrence of diseases and pests. Multispectral, hyperspectral, thermal, and radar sensors are used to record data across different electromagnetic spectrum ranges. Analysis of light reflection in specific spectral bands allows for the identification of plant stress conditions—including nutrient deficiencies, water stress, and infections—enabling early-stage corrective actions.

This review article focuses on the operational integration of remote sensing technologies with autonomous robotic platforms, emphasizing how such systems work in tandem to improve data quality, temporal resolution, and agronomic responsiveness.

Practical applications of such integrated systems include early weed detection, soil moisture and compaction mapping, detection of nutrient deficiencies, crop growth monitoring, and site-specific application of inputs such as fertilizers or pesticides. Robotic platforms are particularly well suited to repetitive tasks in row crops and orchards, where consistent and high-resolution measurements are essential for effective intervention. For example, repeated multispectral scanning via robot-mounted sensors can help identify stress patterns within fields before they are visible to the human eye or detectable by overhead systems.

From a systems engineering perspective, integrating RS tools with robotics presents both opportunities and challenges. Sensor selection, payload balancing, terrain-adaptive navigation, data synchronization, and real-time communication with farm management systems are all critical factors influencing operational success. Moreover, the integration of Artificial Intelligence (AI) and Machine Learning (ML) algorithms into robotic systems enables on-board data interpretation, real-time decision support, and autonomous task execution, marking a shift toward intelligent, adaptive agronomic systems.

Despite the clear benefits of autonomous robots as data acquisition platforms in precision agriculture, the scientific literature lacks comprehensive evaluations of their integration within existing remote sensing frameworks and their operational impact on agronomic decision-making. Most previous studies have focused on either remote sensing technology (satellite and Unmanned Aerial Vehicle (UAV)) or robotic systems in isolation, without a systematic assessment of how these mobile platforms bridge data acquisition gaps or enhance precision agriculture models.

This article addresses these gaps by reviewing recent advances in the integration of remote sensing and robotics in precision agriculture. We evaluate available sensor technologies for robotic deployment, examine real-world applications in crop and soil monitoring, and discuss the system-level challenges, opportunities, and limitations associated with implementing these integrated platforms. By focusing on workflow-level integration and operational use cases, this review aims to inform future research and development directions in automated, data-driven agriculture.

2. Methods (Scoping/Systematic Review)

A structured scoping review was conducted to systematically capture and critically appraise the integration of remote sensing (RS) technologies and autonomous robotics in precision agriculture and orchard management. The methodological framework followed the scoping review model of Arksey and O’Malley [1], as further advanced by Tricco et al. [2], and aligned with the PRISMA 2020 reporting guidelines to ensure transparency, reproducibility, and comprehensive coverage (Page et al. [3]). The protocol was defined a priori, focusing specifically on workflows that link perception to action, i.e., studies in which RS sensors deployed on autonomous platforms directly inform or trigger agronomic operations within the same decision–execution loop.

Searches were conducted in the Web of Science Core Collection, Scopus, IEEE Xplore, ACM Digital Library, and PubMed. The structured review corpus covered publications from January 2010 to July 2025, reflecting the period of rapid growth in autonomous robotics and their integration with remote sensing in agriculture. Earlier foundational works published prior to 2010 were included selectively where they introduced core methods, indices, or models that remain in current use (e.g., classical radiative transfer models, vegetation indices, or soil physics formulations). The search strategy combined controlled vocabulary with free-text terms encompassing sensing modalities, robotic platforms, agricultural tasks, and workflow integration. Representative query strings included the following:

“remote sensing” AND robot* AND “precision agriculture” OR orchard*;

multispectral OR hyperspectral OR LiDAR OR radar AND robot* AND weed OR disease OR spray* OR irrigation;

“edge computing” OR “on-board computing” AND agriculture AND robot;

“perception-decision-execution” OR “sense-plan-act” AND farm*.

Studies were included if they met at least one of the following criteria:

Peer-reviewed journal articles or full conference papers reporting field or operational trials in agriculture or orchards;

Research integrating RS with an autonomous or highly automated ground platform and explicitly linking measurements to decisions or actuation;

Comparative evaluations of sensors or platforms addressing the perception → decision → execution loop.

Exclusion criteria were the following:

Purely laboratory or greenhouse-only studies without field transfer;

Simulation-only research without empirical validation;

Studies focused exclusively on aerial platforms (UAVs, satellites) without ground-level robotic integration;

Articles lacking methodological transparency or without sufficient detail for appraisal.

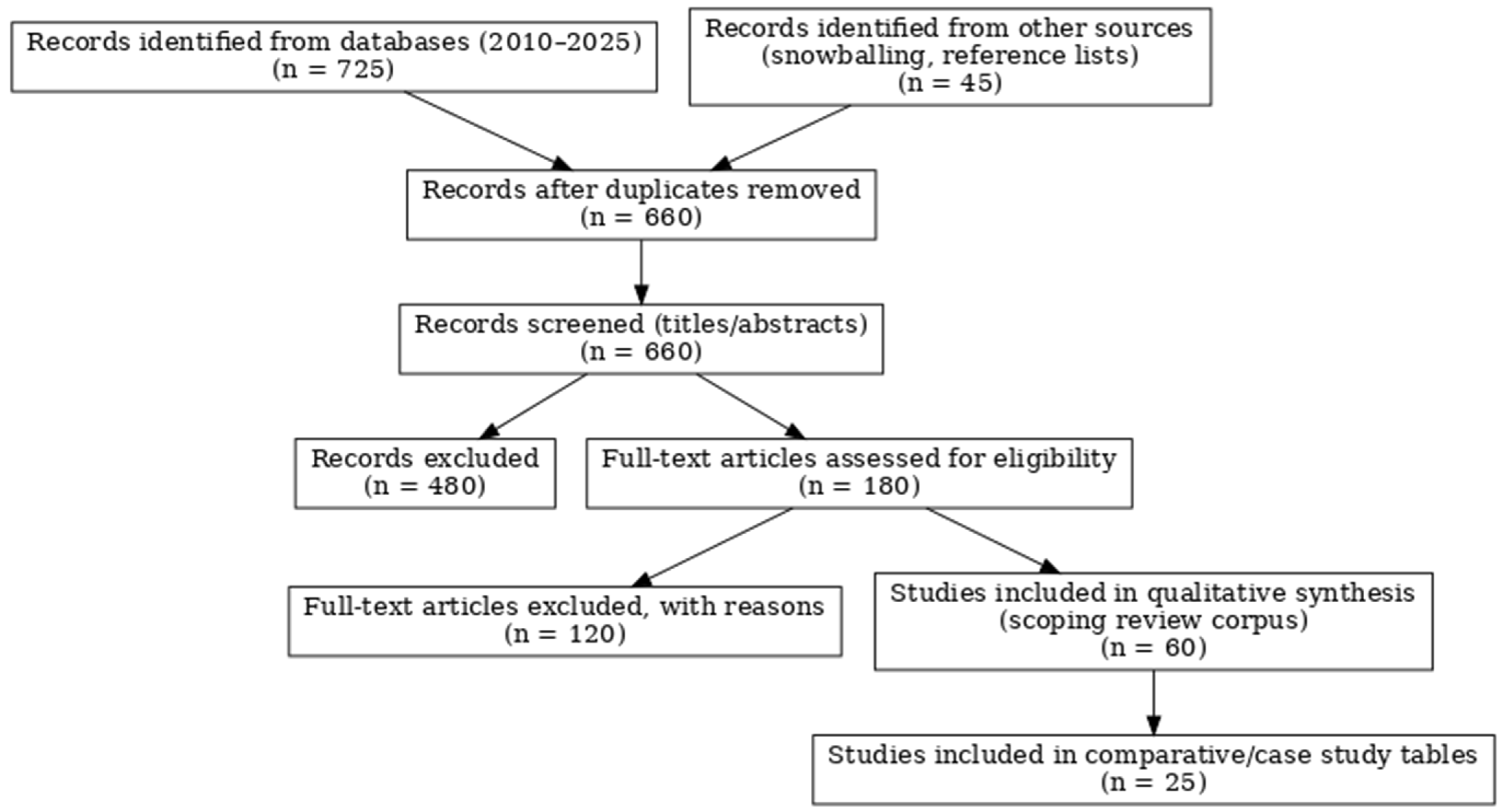

Two reviewers independently screened titles and abstracts; disagreements were resolved by discussion and consensus. Full texts were assessed against the eligibility criteria. Duplicate records across databases were removed. The selection process is documented in a PRISMA flow diagram detailing identification, screening, eligibility, and inclusion, with numerical counts and reasons for exclusion (Figure 1). Additionally, reference lists of all included articles and relevant recent reviews were snowballed to identify further eligible studies.

For each eligible study, the following data items were extracted:

Crop type and production setting (field vs. orchard);

Platform category (satellite, UAV, autonomous ground robot, stationary proximal);

Sensor modalities and spectral ranges;

Calibration and preprocessing procedures;

Navigation and localization methods;

Communication and data transmission links;

On-board vs. off-board computing architectures;

Perception and decision-making algorithms;

Actuation mechanisms and agronomic interventions;

Latency and throughput of decision pipelines;

Experimental scale, duration, and context (research plot vs. commercial farm);

Reported performance metrics (e.g., detection accuracy, spraying precision, coverage rate);

Cost considerations, adoption challenges, and governance aspects.

Given heterogeneity across applications and metrics, findings were synthesized narratively with structured cross-comparison, rather than meta-analysis. Patterns and bottlenecks along the acquisition → preprocessing/fusion → decision → execution workflow were emphasized. Where comparable performance metrics existed, ranges were reported (e.g., detection accuracies, precision rates), consistent with scoping review practice.

Although formal risk-of-bias scoring is not required in scoping reviews, reporting completeness, calibration transparency, dataset availability, and external validity were assessed using a checklist adapted from technology evaluation frameworks. Specific attention was paid to standardization of in situ references, generalizability across sites and crops, and safety considerations in autonomous operation. This appraisal informed the discussion of evidence robustness and research priorities, but was not applied as an exclusion criterion.

3. Remote Sensing in Precision Agriculture

3.1. Crop Condition Monitoring (RS Baseline)

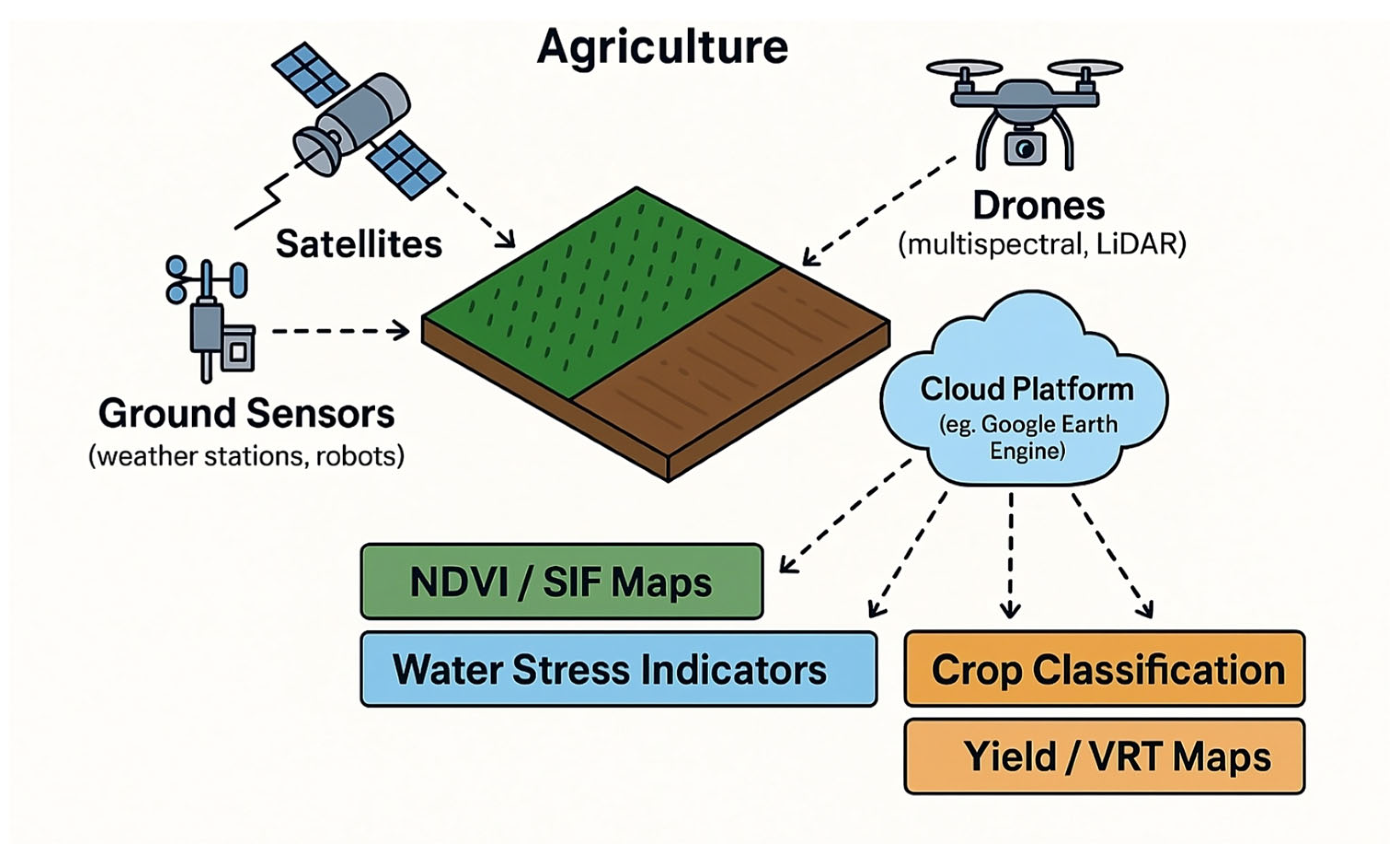

Remote sensing has become a cornerstone of precision agriculture, enabling timely crop monitoring, yield forecasting, and pre-symptomatic stress detection across local to global scales (see Figure 2). Since the 1970s, satellite Earth observation has provided consistent, synoptic coverage that underpins operational crop condition assessment and early warning systems. Recent advances in satellite constellations, UAVs, and proximal (ground-based) sensing have improved spatial and temporal resolution and broadened the scope of measurable agronomic variables (Fritz et al. [4]; Nakalembe et al. [5]).

Contemporary crop monitoring commonly targets crop-type mapping, vegetation condition assessment, and yield estimation, with a growing emphasis on identifying management practices (e.g., irrigation, tillage, multi-cropping). Cloud platforms such as Google Earth Engine, Amazon Web Services (AWS), and Microsoft AI for Earth facilitate scalable processing and integration of Earth Observation (EO) with meteorological, soil, and topographic data, enabling more comprehensive monitoring frameworks (Defourny et al. [6]). Beyond multispectral imagery, hyperspectral data are increasingly used for varietal discrimination and biochemical traits, airborne LiDAR for canopy structure in perennial systems, and Synthetic Aperture Radar (SAR) for all-weather mapping and crop-sequence analysis in cloud-prone regions (Bellón et al. [7]).

Widely used metrics, including the Normalized Difference Vegetation Index (NDVI), Leaf Area Index (LAI), Solar-Induced Fluorescence (SIF), and canopy cover/biomass proxies, capture key morphological and physiological attributes that relate to water and nutrient status, pest and disease pressure, and overall growth dynamics (Mohammed et al. [8]; Bieganowski et al. [9]). However, the limited availability of standardized in situ datasets, frequent reliance on expert-driven model calibration, and the predominance of small-area, single-sensor studies constrain transferability and inflate uncertainty in regional and global applications (Panek-Chwastyk et al. [10]; Dąbrowska-Zielińska et al. [11]; Łągiewska et al. [12]). Initiatives such as GEOGLAM/JECAM aim to harmonize field protocols and improve cross-site model calibration, though data-sharing and privacy constraints continue to limit full openness. The Institute of Geodesy and Cartography contributes by integrating EO with field observations to strengthen validation and streamline monitoring workflows (Panek-Chwastyk et al. [13,14]).

In summary, RS provides the baseline information layer for crop condition assessment, while persistent gaps relate to standardized ground truth, cross-sensor fusion, and uncertainty propagation. Below, we classify sensor families and analytical methods. Section 4 examines how these RS streams are operationalized on robotic platforms within farm workflows.

3.2. Sensor Taxonomy and Capabilities

Current methods emphasize the use of high-resolution imagery for identifying management practices such as irrigation, tillage, and crop rotations. Airborne Light Detection and Ranging (LiDAR) and hyperspectral data are increasingly used for tree structure analysis and varietal classification, while SAR sensors support mapping in cloud-prone areas. Current sensor trends include Very High Resolution (VHR) optical for agroforestry and field structure, high-frequency acquisitions for phenology-dependent practices, hyperspectral for varietal and biochemical traits, airborne LiDAR for 3D canopy and tillage structure, and C-/L-band SAR for rotation and multi-cropping in cloud-prone regions. Below, we classify sensor families and their capabilities (optical, thermal, LiDAR, SAR, proximal plant/soil, and weather) as the foundation for the analytical pipelines discussed in Section 3.3 and Section 3.5 (Bellón et al. [7]).

3.2.1. Optical Sensors (RGB, Multispectral, Hyperspectral)

The development stage of cultivated plants can be assessed based on their biogeophysical properties, which are treated as indicators of vegetation condition. For this purpose, various multispectral vegetation indices (VIs) and other quantitative metrics have been developed to reflect the morphological, physiological, and biogeophysical characteristics of crops. Among the most commonly used indicators are the Normalized Difference Vegetation Index (NDVI), the Leaf Area Index (LAI), the Vegetation Health Index (VHI), Aboveground Biomass (AGB), Leaf Chlorophyll Content (LCC), Leaf Equivalent Water Thickness (LEWT), Crop Canopy Cover (CCC), solar-induced chlorophyll fluorescence (SIF), crop water deficit, and Net Primary Productivity (NPP). These metrics are widely applied for evaluating crop growth status and for assessing the influence of agroclimatic conditions, pests and diseases, water stress, and management practices, thereby supporting early warning systems and informed agricultural decision-making (Mohammed et al. [8]; Wu et al. [15]).

Multispectral sensing scales well but saturates in dense canopies and is sensitive to soil background/illumination; hyperspectral sensing improves biochemical sensitivity at higher data and calibration costs.
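As a minimal illustration of how such an index is derived, the sketch below computes the NDVI from near-infrared and red reflectance using its standard normalized-difference form. The sample reflectances and the 0.4 flagging threshold are hypothetical values chosen for illustration only; they are not taken from the reviewed studies.

```python
def ndvi(nir: float, red: float) -> float:
    """NDVI = (NIR - Red) / (NIR + Red), with reflectances on a 0-1 scale."""
    denom = nir + red
    if denom == 0:
        return float("nan")  # index is undefined when both bands are zero
    return (nir - red) / denom


# Hypothetical (NIR, red) reflectance pairs sampled along one crop row.
pixels = [(0.50, 0.08), (0.45, 0.10), (0.30, 0.20), (0.25, 0.22)]
values = [ndvi(nir, red) for nir, red in pixels]

# Illustrative threshold (assumption): flag samples with low NDVI for inspection.
LOW_NDVI = 0.4
flagged = [i for i, v in enumerate(values) if v < LOW_NDVI]
print(flagged)
```

In dense canopies the index flattens as NIR reflectance saturates, which is why a fixed threshold such as the one above rarely transfers across crops or growth stages.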

3.2.2. Thermal Infrared

Measuring the plant surface temperature allows the calculation of water stress indices such as the Crop Water Stress Index (CWSI), which are useful in irrigation systems. In vegetable crops, thermal imagery can quickly detect canopy temperature anomalies that may precede visible discoloration or wilting (Zhu et al. [16]).

Thermal sensors play a key role in agricultural remote sensing, enabling the assessment of the physiological condition of plants through the analysis of their surface temperature (Dąbrowska-Zielińska [17]; Dąbrowska-Zielińska et al. [11]; Souza et al. [18]; Torres-Quezada et al. [19]).

The application of thermal sensors in agriculture requires precise calibration under variable atmospheric conditions to minimize the influence of external factors such as solar radiation, air humidity, or ambient temperature. Integrating thermal sensors with other sensing technologies (e.g., multispectral or hyperspectral) enhances analytical accuracy and provides a more comprehensive assessment of crop health (Noguera et al. [20]).

Atmospheric humidity and temperature are particularly relevant in the context of thermal imaging, which is used for detecting plant water stress and physiological anomalies. High water vapor content in the atmosphere leads to absorption of radiation in the near- and mid-infrared range, which can distort surface temperature readings of leaves and soil, especially when using passive thermal sensors. Moreover, ambient temperature fluctuations and the heating of the sensor platform’s structural elements can affect thermal detector stability, necessitating the use of compensation algorithms and precise calibration procedures (Messina & Modica [21]).

Thermal indicators are powerful for water stress but require careful atmospheric correction and micrometeorological context to avoid false positives.
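The CWSI mentioned above is commonly computed in its empirical form from the canopy temperature and two reference temperatures: a fully transpiring ("wet") and a non-transpiring ("dry") baseline. The sketch below assumes that form; how the baselines are obtained (reference surfaces, energy-balance models, or empirical baselines) is left open, and the example temperatures are hypothetical.

```python
def cwsi(t_canopy: float, t_wet: float, t_dry: float) -> float:
    """Empirical Crop Water Stress Index.

    CWSI = (T_canopy - T_wet) / (T_dry - T_wet), clipped to [0, 1].
    0 means a fully transpiring canopy, 1 means no transpiration.
    All temperatures must share the same unit (e.g., degrees Celsius).
    """
    span = t_dry - t_wet
    if span <= 0:
        raise ValueError("t_dry must be greater than t_wet")
    raw = (t_canopy - t_wet) / span
    return min(1.0, max(0.0, raw))


# Hypothetical readings: canopy 28 C, wet reference 24 C, dry reference 34 C.
example = cwsi(28.0, 24.0, 34.0)
print(round(example, 2))
```

Because both baselines shift with air temperature, humidity, and radiation, they need to be re-estimated for each acquisition; reusing stale baselines is one route to the false positives noted above.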

3.2.3. LiDAR

LiDAR (Light Detection and Ranging) technology plays a key role in modern remote sensing methods, enabling three-dimensional modeling of vegetation structure, including trees and fruits in orchards. LiDAR systems use laser pulses to precisely measure the distance between the sensor and the surface of an object, allowing for the generation of detailed point clouds that reconstruct the plant architecture. The application of this technology in orchard management enables accurate analysis of tree canopy structure, identification of growth parameters, and assessment of biomass and yield potential (Dong and Chen [22]; Wang and Menenti [23]). Advanced point cloud processing algorithms enable canopy segmentation and identification of zones with varying leaf and fruit density, which is essential for the management of agronomic practices (Sandonís-Pozo et al. [24]). LiDAR technology is also used for precise estimation of fruit count and size without the need for manual measurement. The 3D models generated from point clouds allow for the determination of fruit volume and distribution within the tree canopy, enabling more accurate yield prediction and quality assessment (Murcia et al. [25]). Despite the high effectiveness of LiDAR systems, their implementation in agriculture and orchard production faces certain limitations, including high equipment costs and the complexity of data analysis; moreover, processing large datasets requires advanced computing infrastructure and efficient artificial intelligence algorithms (Rivera [26]).

Quantitative use depends on accurate co-registration with optics and robust point cloud segmentation; processing loads remain non-trivial.
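The ranging principle described above can be sketched for a pulsed time-of-flight sensor: the range is half the round-trip travel time multiplied by the speed of light, and each return becomes a point once the beam angles are known. The angle convention (azimuth in the horizontal plane, elevation above it) and the function names are assumptions of this sketch; real scanners also apply calibration and pose corrections that are omitted here.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def tof_range_m(round_trip_s: float) -> float:
    """Range from a pulse's round-trip time: the pulse travels out and back."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def polar_to_xyz(range_m: float, azimuth_deg: float, elevation_deg: float):
    """Convert one return to sensor-frame Cartesian coordinates (assumed convention)."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)
    return (horizontal * math.cos(az), horizontal * math.sin(az), range_m * math.sin(el))


# A 20 ns round trip corresponds to a target roughly 3 m away.
r = tof_range_m(20e-9)
point = polar_to_xyz(r, azimuth_deg=90.0, elevation_deg=0.0)
print(round(r, 3), tuple(round(c, 3) for c in point))
```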

3.2.4. Radar SAR (C/L-Band)

The use of Synthetic Aperture Radar (SAR) data enables the detection of changes in land cover structure, including the analysis of crop rotation and the identification of multi-cropping systems, even under conditions of limited optical data availability due to persistent cloud cover (Bellón et al. [7]).

Backscatter is confounded by surface roughness and canopy structure; speckle and polarization choices (VV/VH) materially affect retrievals.
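Two routine operations behind these caveats can be sketched briefly: averaging backscatter over neighbouring samples to suppress speckle, and expressing the VH/VV relationship as a difference in decibels. The moving-average window and the sample values are hypothetical; operational processing uses dedicated speckle filters and calibrated products rather than this simplified one-dimensional version.

```python
import math


def to_db(sigma0_linear: float) -> float:
    """Convert linear backscatter (power units) to decibels."""
    return 10.0 * math.log10(sigma0_linear)


def boxcar_mean(values, window=3):
    """Moving average in linear power units; edges use the available samples.

    Averaging is done before the dB conversion, because averaging
    dB values would bias the result.
    """
    half = window // 2
    out = []
    for i in range(len(values)):
        lo, hi = max(0, i - half), min(len(values), i + half + 1)
        out.append(sum(values[lo:hi]) / (hi - lo))
    return out


def cross_ratio_db(vh_linear: float, vv_linear: float) -> float:
    """VH/VV ratio expressed in dB (difference of the two dB values)."""
    return to_db(vh_linear) - to_db(vv_linear)


# Hypothetical linear backscatter samples along one transect.
smoothed = boxcar_mean([1.0, 2.0, 3.0], window=3)
ratio = cross_ratio_db(0.01, 0.1)
print(smoothed, round(ratio, 1))
```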

3.2.5. Proximal Plant and Soil Sensors

The main categories of sensors used in precision agriculture include soil sensors, plant sensors, and environmental sensors. Soil sensors, such as moisture sensors, enable the optimization of irrigation and contribute to water savings. Nutrient sensors allow for precise fertilization, reducing input losses and minimizing environmental impact. Soil health sensors monitor parameters such as pH, electrical conductivity, and organic matter content, providing valuable information about soil fertility. Moisture sensors typically operate by measuring the soil's electrical conductivity or dielectric constant, both of which vary with moisture content.

With advances in technology, the miniaturization and improved energy efficiency of sensors have facilitated their widespread deployment, supporting the development of sustainable and high-efficiency agricultural production systems (Songara & Patel [27]).

Volumetric soil moisture sensors measure the volume of water per unit volume of soil. They are characterized by high accuracy and reliability, making them valuable tools in agriculture, environmental monitoring, and scientific research. Their operating principle is based on the measurement of the soil’s dielectric constant, which reflects its ability to store electric charge in an electric field (Souza et al. [18]). Because water has a much higher dielectric constant than dry soil, the bulk dielectric constant rises as the soil wets. By comparing the measured dielectric constant of the soil with a reference value for completely dry soil, the sensor can accurately calculate the volumetric water content.
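One way to make the dielectric-to-moisture conversion concrete is the empirical polynomial of Topp et al. (1980), which is widely used as a default calibration for mineral soils. It is swapped in here as an illustrative assumption; the reviewed sources do not specify which calibration a given sensor applies, and soil-specific calibrations often differ.

```python
def topp_vwc(permittivity: float) -> float:
    """Volumetric water content (m3/m3) from apparent dielectric permittivity.

    Topp et al. (1980) empirical polynomial, used as an assumed calibration:
    theta = -5.3e-2 + 2.92e-2*e - 5.5e-4*e**2 + 4.3e-6*e**3
    """
    e = permittivity
    return -5.3e-2 + 2.92e-2 * e - 5.5e-4 * e**2 + 4.3e-6 * e**3


# Hypothetical readings: a dry soil (permittivity ~3) and a moist soil (~20).
dry = topp_vwc(3.0)
moist = topp_vwc(20.0)
print(round(dry, 3), round(moist, 3))
```

The steep rise between the two readings reflects the large contrast between the permittivity of water and that of the dry soil matrix.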

In precision agriculture, these sensors are essential for monitoring irrigation, preventing water deficits, and minimizing water loss. This supports effective resource management and promotes sustainable agricultural production.

Tensiometers are specialized instruments used to measure the matric potential (tension) of soil, which reflects the force with which the soil retains water. They are widely employed in agriculture, horticulture, and environmental research to monitor soil moisture and optimize irrigation practices. Tensiometers provide critical information about the soil’s water-holding capacity, enabling farmers and researchers to make informed decisions regarding irrigation timing and water resource management.

The operating principle of a tensiometer is based on capillary action. The device consists of a porous ceramic tip or tube placed in the soil, connected to a transparent, hermetically sealed tube equipped with a manometer or data logger. The ceramic element is filled with water and buried at a specific depth in the soil. As the soil dries, water moves from the tensiometer into the surrounding soil through capillary forces (Young [28]). The capillary above the ceramic tip is connected to a manometer, which measures the pressure generated by the water loss. The resulting negative pressure is directly correlated with the soil’s matric potential. Increasing tension values reflect drier soil conditions and can signal the onset of irrigation need, but tensiometers are only reliable up to approximately −80 kPa and therefore do not capture severe drought conditions, particularly in sandy soils where readings may plateau or become unreliable. Conversely, as soil moisture increases, water is drawn back into the tensiometer and the measured tension decreases.
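The interpretation rules in this paragraph can be summarized as a small decision function. The 80 kPa reliability limit follows the text; the 40 kPa irrigation trigger is a hypothetical value, since appropriate thresholds depend on crop and soil type.

```python
RELIABLE_LIMIT_KPA = 80.0  # from the text: readings are reliable up to about -80 kPa


def interpret_tension(tension_kpa: float, trigger_kpa: float = 40.0) -> str:
    """Classify a tensiometer reading given as a positive tension magnitude in kPa.

    trigger_kpa is an illustrative irrigation threshold (assumption).
    """
    if tension_kpa < 0:
        raise ValueError("pass tension as a positive magnitude")
    if tension_kpa >= RELIABLE_LIMIT_KPA:
        return "out_of_range"  # beyond the instrument's reliable range
    if tension_kpa >= trigger_kpa:
        return "irrigate"
    return "ok"


# Hypothetical readings over a drying period.
states = [interpret_tension(t) for t in (12.0, 45.0, 85.0)]
print(states)
```

Treating out-of-range readings as a separate state, rather than as an extreme "irrigate" signal, keeps an unreliable value from driving an automated decision.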

Plant health sensors are specialized devices designed for monitoring and assessing the condition of crops under field conditions. They play a crucial role in precision agriculture by providing real-time information on various physiological and biochemical parameters of plants. As a result, farmers can make informed decisions to optimize crop growth, prevent diseases, and maximize yields (Bhagat et al. [29]).

Monitoring key plant health indicators enables early detection of potential threats, allowing for targeted interventions and supporting sustainable agricultural practices. The main types of plant health sensors, outlined in Table 1, include the following:

Disease detection sensors—these analyze physiological changes in plants or detect the presence of pathogens, enabling the identification of infections at an early stage.

Pest monitoring sensors—these detect the presence of insects and other pests, supporting the implementation of targeted crop protection strategies.

Plant stress sensors—these measure plant responses to various stress factors, such as leaf temperature or chlorophyll fluorescence, allowing for the assessment of crop condition and the level of both abiotic and biotic stress.

Table 1. Data collected by weather and environmental sensors.


| Sensor Names | Types | Applications | Advantages |
|---|---|---|---|
| Atmospheric Pressure Sensors | Mercury Barometers | Weather forecasting: meteorological monitoring | Provide real-time data for predicting atmospheric conditions |
| Atmospheric Pressure Sensors | Aneroid Barometers | Environmental monitoring: climate studies | Assist in the analysis of long-term climatic changes |
| Atmospheric Pressure Sensors | Digital Barometers | Aviation and aerospace: altitude measurements | High measurement precision used in aerial navigation |
| Wind Speed and Direction Sensors | Cup Anemometers | Renewable energy: wind farm site assessment | Simple and durable design, widely used in the wind energy industry |
| Wind Speed and Direction Sensors | Vane Anemometers | Environmental monitoring: wind direction | Accurate wind direction measurement used in meteorology and agriculture |
| Wind Speed and Direction Sensors | Ultrasonic Anemometers | Meteorological stations: scientific research | High accuracy, no moving parts, low maintenance requirements |
| Precipitation Sensors | Tipping Bucket Rain Gauges | Hydrology: rainfall monitoring | Simple construction enables rainfall intensity analysis |
| Precipitation Sensors | Weighing Rain Gauges | Agriculture: irrigation management | Accurate rainfall mass measurement for precise hydrological analysis |
| Precipitation Sensors | Optical Rain Gauges | Flood warning systems: rainfall detection | Non-contact measurement, ideal for urban monitoring |
| Solar Radiation Sensors | Pyranometers | Renewable energy: monitoring solar panel efficiency | Direct measurement of solar irradiance |
| Solar Radiation Sensors | Pyrheliometers | Climate research: solar constant measurement | Precise determination of direct solar radiation |
| Solar Radiation Sensors | Spectroradiometers | Agricultural research: crop health assessment | Detailed spectral analysis enables plant stress detection |
| Evapotranspiration (ET) Sensors | Eddy Covariance Systems | Environmental monitoring: ecosystem studies | Direct measurement of turbulent fluxes, used in natural ecosystems |
| Evapotranspiration (ET) Sensors | Water Tank Sensors | Water management: irrigation optimization | Enable efficient water resource management |
| Intelligent Gas Sensors | Electrochemical Sensors | Air quality monitoring: pollutant detection | Provide real-time data on gas concentrations |
| Intelligent Gas Sensors | Metal Oxide Sensors (MOSs) | Industrial safety: gas leak detection | Detection of hazardous gases in industrial environments |
| Intelligent Gas Sensors | NDIR (Nondispersive Infrared) Sensors | Climate research: greenhouse gas measurements | High sensitivity and selectivity in greenhouse gas detection |

The use of plant health sensors in precision agriculture contributes to efficient resource management, reduced use of plant protection products, and improved yield quality and quantity.

Weather and environmental sensors monitor climatic conditions that directly influence plant growth and health. Temperature and humidity sensors provide data on the microclimate at the field level, enabling precise management of local atmospheric conditions. Rain gauges measure precipitation, supporting informed decision-making regarding water management and irrigation. Anemometers assess wind speed and direction, which is critical for planning the application of crop protection products and minimizing chemical drift.

The integration of smart sensors into precision agriculture allows for real-time monitoring of soil and crop conditions, accounting for spatial variability and enabling data-driven decision-making. Efficient resource management contributes to cost optimization and enhances agricultural productivity. The data collected by these sensors, summarized in Table 1, are analyzed using data analytics methods and artificial intelligence algorithms, facilitating predictive modeling and precise interventions that support sustainable and environmentally friendly agricultural practices (Das et al. [30]). Ultimately, the use of smart sensors in precision agriculture promotes yield enhancement, resource conservation, and long-term agricultural sustainability in the context of growing global food demands.

Proximal sensors offer high fidelity at point scale, but representative field-level inference requires dense sampling designs and standardized QC/metadata; without this, transferability to operational mapping remains limited. Having outlined sensor families and their trade-offs, Section 3.4 details analytical pipelines (indices, radiative transfer, ML) and their implications for fusion and uncertainty.

3.3. Spectral Indices and Biophysical Variables

Vegetation indices and their related biophysical variables provide compact, interpretable signals of crop status derived from optical measurements. Indices such as the NDVI, the SAVI, the EVI, the REI, as well as the LAI and SIF are widely used to assess crop condition, detect water stress, and identify plant diseases; their interpretation requires careful consideration of crop system specificity, weather conditions, and the spectral characteristics of the applied sensors (Xue and Su [31]).
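For orientation, two of the indices named above can be written out in their commonly published forms. The sketch assumes surface reflectance inputs on a 0 to 1 scale, the usual soil adjustment factor L = 0.5 for the SAVI, and the standard EVI coefficients (gain 2.5, C1 = 6, C2 = 7.5, L = 1); the sample reflectances are hypothetical.

```python
def savi(nir: float, red: float, soil_factor: float = 0.5) -> float:
    """Soil-Adjusted Vegetation Index: (1 + L)(NIR - Red) / (NIR + Red + L)."""
    return (1.0 + soil_factor) * (nir - red) / (nir + red + soil_factor)


def evi(nir: float, red: float, blue: float) -> float:
    """Enhanced Vegetation Index: 2.5 (NIR - Red) / (NIR + 6 Red - 7.5 Blue + 1)."""
    return 2.5 * (nir - red) / (nir + 6.0 * red - 7.5 * blue + 1.0)


# Hypothetical reflectances for a moderately vegetated pixel.
nir, red, blue = 0.50, 0.10, 0.05
savi_value = savi(nir, red)
evi_value = evi(nir, red, blue)
print(round(savi_value, 3), round(evi_value, 3))
```

The additive terms in both denominators are what reduce sensitivity to soil background (SAVI) and to atmospheric and canopy-background effects (EVI) relative to a plain normalized difference.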

The LAI remains a key parameter linking remote sensing tools with practical crop production management. Its use enables not only the remote diagnosis of crop conditions but also the strategic assessment of productive potential and informed decision-making regarding resource management toward integrated and sustainable agricultural development. An integrated approach combining multispectral, hyperspectral, LiDAR, and thermal data supports a comprehensive assessment of plant conditions; a key challenge remains the calibration of LAI estimation models across diverse growing conditions and management systems (Singh et al. [32]).

Among more diagnostic indices, the PRI (531/570 nm) reflects xanthophyll cycle efficiency and is sensitive to short-term changes in photosynthetic performance, while SIF captures solar-induced chlorophyll fluorescence, in which changes in red/far-red emission indicate weakening of photosynthesis. SIF measurements combined with thermal and chlorophyll indices enable the detection of diseases at very early stages of infection; e.g., infections of olive trees by Xylella fastidiosa were identified with >80% accuracy using a combination of SIF, thermography, and chlorophyll indices (Mohammed et al. [8]; Zarco-Tejada et al. [33]). In practice, specialized indices (RDVI, MTVI, PSSR) are tailored to crop/stress types, and the diagnostic relevance of particular indices evolves with disease progression (Abdulridha et al. [34]).

Empirical evidence supports the utility of moisture-sensitive indices in biomass assessment: in the GrasSat project, the NDVI and NDII were used to forecast fresh biomass yields in grasslands; the NDII correlated at r = 0.79 and the NDVI at r = 0.71, with the best performance when soil moisture and habitat type were included in the model (Dąbrowska-Zielińska et al. [11]).
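The kind of comparison reported here can be sketched as follows: compute the NDII from NIR and shortwave-infrared reflectance, then measure its linear association with biomass using Pearson's r. The data below are invented solely to show the calculation and do not reproduce the GrasSat values; the NDII is written in its usual normalized-difference form.

```python
import math


def ndii(nir: float, swir: float) -> float:
    """Normalized Difference Infrared Index: (NIR - SWIR) / (NIR + SWIR)."""
    return (nir - swir) / (nir + swir)


def pearson_r(x, y) -> float:
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (spread_x * spread_y)


# Invented plot-level samples: (NIR, SWIR) reflectance and fresh biomass in t/ha.
reflectances = [(0.40, 0.30), (0.45, 0.25), (0.50, 0.22), (0.55, 0.18)]
biomass_t_ha = [4.0, 6.5, 7.0, 9.5]

index_values = [ndii(nir, swir) for nir, swir in reflectances]
r_value = pearson_r(index_values, biomass_t_ha)
print(round(r_value, 2))
```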

Practical limitations and interpretation caveats.

Index-based products can saturate in dense canopies and are sensitive to soil background and illumination geometry, which complicates cross-site transfer; red-edge indices often improve sensitivity at high biomass levels, while Solar-Induced Fluorescence (SIF) and the Photochemical Reflectance Index (PRI) provide earlier functional signals of stress (Xue & Su [31]). In operational settings, limited, non-standardized in situ references and uneven ground-truth quality inflate uncertainty and hinder model transferability across crops and regions (Łągiewska et al. [12]). A concise summary of the indices and their primary biophysical sensitivities, typical pitfalls, and recommended use cases is presented in Table 2.

To convert these signals into robust, actionable products, rigorous preprocessing and analytical pipelines are required (radiometric/atmospheric correction, cross-sensor calibration, radiative transfer modeling such as PROSAIL, machine learning workflows, and explicit uncertainty mapping), which we detail in Section 3.4.

3.4. Analytical Pipelines and Data Fusion

3.4.1. Preprocessing and Cross-Sensor Calibration

Effective use of multi-platform data requires rigorous radiometric, atmospheric, spatial, and spectral calibration before any analysis. Operational workflows routinely apply Sen2Cor (for Sentinel-2) and ENVI-based atmospheric correction (e.g., FLAASH/QUAC in ENVI version 5.5), georectification with Ground Control Points/Digital Elevation Models (GCPs/DEMs), and standardized index computation (e.g., NDVI, NDRE, MCARI) to ensure comparability across sensors and dates (Moravec et al. [35]). These steps are essential when blending satellite, UAV, and robot-borne imagery into one analytical stream. Temporal synchronization and spectral harmonization are equally important, because platforms often differ in revisit, band placement, and ground sampling distance; aligning robot-mounted sensors to the analysis Coordinate Reference System (CRS) via GCP/DEM-aided registration enables within-row variability analysis, particularly in orchards with dense canopies (Barbedo [36]). When this preprocessing pipeline is followed, data fusion with machine learning or Bayesian models can produce robust soil and vegetation parameter maps that support targeted management and early stress detection (Sishodia et al. [37]).
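The standardized index computation mentioned above can be illustrated with a minimal sketch. It assumes that the inputs are already surface reflectances (i.e., radiometric and atmospheric correction has been applied) and uses the standard normalized-difference definitions of NDVI and NDRE; the function names and the sample reflectance values are illustrative assumptions, not part of any cited workflow.

```python
from typing import Optional


def normalized_difference(a: float, b: float) -> Optional[float]:
    """Return (a - b) / (a + b), or None when the denominator is zero."""
    denom = a + b
    if denom == 0:
        return None  # undefined for no-data or fully dark pixels
    return (a - b) / denom


def ndvi(nir: float, red: float) -> Optional[float]:
    """NDVI = (NIR - Red) / (NIR + Red)."""
    return normalized_difference(nir, red)


def ndre(nir: float, red_edge: float) -> Optional[float]:
    """NDRE = (NIR - RedEdge) / (NIR + RedEdge)."""
    return normalized_difference(nir, red_edge)


# Hypothetical reflectances for one pixel seen by two sensors.
# The same function is applied to both, so any remaining differences
# come from the sensors and not from the index definition.
satellite_pixel = {"nir": 0.45, "red": 0.05, "red_edge": 0.25}
robot_pixel = {"nir": 0.50, "red": 0.10, "red_edge": 0.30}

for name, px in (("satellite", satellite_pixel), ("robot", robot_pixel)):
    print(name, ndvi(px["nir"], px["red"]), ndre(px["nir"], px["red_edge"]))
```

Using one shared index function for every platform is the design point here: cross-sensor differences in band placement still require spectral harmonization, which this sketch does not attempt.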

3.4.2. Modeling Approaches: Physical Models and Machine Learning

Two complementary families of methods convert reflectance and ancillary inputs into agronomic information. First, radiative transfer models such as PROSAIL (PROSPECT + SAIL) link leaf/canopy biochemical and structural traits to measured reflectance across multi/hyperspectral ranges, enabling retrieval of the LAI, chlorophyll levels, and leaf water content, and supporting precise vegetation mapping when coupled with airborne or proximal hyperspectral data (Croft et al. [38]). Second, machine learning and deep learning pipelines, ranging from support vector machines (SVMs) and random forests to Convolutional Neural Network (CNN) architectures such as U-Net and You Only Look Once (YOLO), handle classification and segmentation tasks (e.g., weed detection, fruit localization, disease symptom mapping), while unsupervised methods (PCA, k-means, t-SNE) reveal latent patterns in time series (dos Santos Ferreira et al. [39]). In mobile sensing contexts, edge computing evaluates these models on the robot in near real time, enabling rapid responses and adaptation of agronomic practices to local conditions (Shahbazi et al. [40]). In practice, physical models offer interpretability and physics-based generalization, whereas ML/DL excel with complex, heterogeneous data but depend on domain-matched training sets and cross-sensor harmonization to transfer across crops and regions (Boota et al. [41]).

3.4.3. Multi-Source Fusion and Operational Integration (GISs/DSSs/FMISs)

Agricultural datasets originate from satellites, UAVs, ground robots, weather stations, and in situ probes, and must be combined in GIS/DSS/FMIS environments to become actionable. GISs support spatial modeling of environmental parameters, creation of variability maps and management zones, and forecasting of temporal change; coupling EO with crop models (APSIM, DSSAT) and PROSAIL strengthens predictive and diagnostic value (Boota et al. [

41]; Nawar [

42]). At the farm management layer, sensor and imagery streams are aggregated to generate yield variability maps, delineate zones of differing productivity, and recommend variable rates for fertilizers and plant protection products; at larger scales, digital platforms (e.g., FAO/Copernicus Agriculture) increasingly combine EO + IoT + AI, supporting remote crop management, water resource planning, and automated risk assessment—foundations for higher degrees of automation (Fritz et al. [

4]). In orchard systems, edge-IoT networks process data locally in low-connectivity areas and interface with autonomous vehicles that execute spraying or harvesting based on sensor-driven prescriptions; tighter links to digital twin representations are emerging, in which a virtual orchard is continuously updated by imagery and sensor feeds (Maheswari et al. [

43]).

3.4.4. Spatial Interpolation and Uncertainty—Closing the Loop to Decisions

Calibration/validation against reference field measurements remains essential to judge model accuracy and operational reliability. Spatial interpolation methods such as Inverse Distance Weighting (IDW) and kriging provide continuous surfaces. Kriging is a geostatistical method that additionally yields an explicit uncertainty map derived from the modeled semivariogram, whereas IDW is deterministic: it uses distance-decaying weights with empirically tuned parameters and is assessed with standard accuracy metrics (R², RMSE) (Pereira et al. [44]). In production workflows, uncertainty flags should propagate from preprocessing (atmospheric residuals, BRDF, co-registration error) through modeling and fusion, so that downstream GIS/DSS layers (e.g., VRT maps) carry confidence information alongside recommendations. The limiting factor for transferability is often the lack of standardized in situ protocols and sparse ground truth, which inflates uncertainty across crops and regions. This issue is addressed by harmonized field procedures and data-sharing initiatives (GEOGLAM/JECAM) and can be further reduced by dedicated in situ campaigns (Łągiewska et al. [12]; Panek-Chwastyk et al. [14]). Finally, moving from batch analytics to low-latency pipelines requires edge devices (embedded GPUs/ML accelerators) for fast segmentation and stress detection on board the robot, with heavier tasks offloaded to the cloud; FMIS integration then translates insights into timely actions (e.g., VRT, micro-irrigation), shortening the perception-to-execution path (Wu et al. [15]).
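As a hedged illustration of the interpolation and accuracy assessment described above, the following sketch implements plain IDW and a leave-one-out RMSE check. The sample coordinates, values, and the default power of 2 are assumptions chosen for the example; kriging is not shown because it requires a fitted semivariogram.

```python
import math
from typing import List, Tuple

Sample = Tuple[float, float, float]  # (x, y, measured value)


def idw(x: float, y: float, samples: List[Sample], power: float = 2.0) -> float:
    """Inverse Distance Weighting estimate at (x, y)."""
    num = 0.0
    den = 0.0
    for xi, yi, vi in samples:
        d = math.hypot(x - xi, y - yi)
        if d == 0.0:
            return vi  # exactly on a sample point: return its value
        w = 1.0 / d ** power  # weight decays with distance
        num += w * vi
        den += w
    return num / den


def loo_rmse(samples: List[Sample], power: float = 2.0) -> float:
    """Leave-one-out RMSE: predict each sample from all the others."""
    squared_error = 0.0
    for i, (xi, yi, vi) in enumerate(samples):
        others = samples[:i] + samples[i + 1:]
        squared_error += (idw(xi, yi, others, power) - vi) ** 2
    return math.sqrt(squared_error / len(samples))


# Hypothetical soil-moisture samples (x [m], y [m], volumetric %).
points = [(0.0, 0.0, 18.0), (10.0, 0.0, 22.0), (0.0, 10.0, 20.0), (10.0, 10.0, 26.0)]
print(idw(5.0, 5.0, points))  # equidistant from all four samples -> their mean, 21.5
print(loo_rmse(points))       # the power parameter can be tuned to minimize this
```

Leave-one-out error is one simple way to tune the IDW power empirically; unlike kriging variance, it gives a single global accuracy figure rather than a per-cell uncertainty surface.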

Section 3.5 compares acquisition platforms and quantifies coverage–resolution–latency trade-offs.

3.5. Acquisition Platforms: Satellites, UAVs, and Ground Robots—A Comparative View

Two primary approaches to the acquisition of remote sensing data in precision agriculture are distinguished in the literature: aerial (satellite and UAV-based) and ground-based (mobile platforms and stationary sensors) (Table 3). Each of these methods presents specific advantages and limitations that must be carefully considered during the planning of research activities and implementation strategies (Rouault et al. [45]).

In practice, the most effective solutions are hybrid systems that integrate aerial data—used for general crop condition assessment—with high-accuracy ground-based measurements, which enable localized adjustments to agronomic interventions.

Mobile remote sensing systems offer high-spatial-resolution data acquisition, overcoming limitations of satellite imagery spatial scales (Sentinel-2: 10–20 m; Landsat-8: ~30 m) and high costs of very-high-resolution satellites (WorldView-3: 31 cm), which restrict detailed crop-level analysis (Zarco-Tejada et al. [33]). UAVs provide centimeter-level resolution but are limited by flight duration, regulatory constraints, weather sensitivity, and operational costs, impeding large-area coverage (Huete et al. [47]). Ground-based mobile systems provide continuous, highly precise measurements at the millimeter scale that are largely unaffected by atmospheric conditions, allowing stable and repeatable data capture (Navarro-Hellín et al. [48]). Integration of robotic sensing platforms with farm management systems facilitates real-time data transmission and decision support, reducing the need for the extensive postprocessing typical in satellite and UAV imagery workflows. This integration supports real-time agronomic adaptations through variable-rate technology (VRT) and enhances detection sensitivity for early plant stress indicators via multispectral, hyperspectral, and thermal sensors, outperforming coarser-resolution satellite data in capturing subtle spectral variations (Zarco-Tejada et al. [33]).
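The practical meaning of these ground sampling distances (GSDs) can be shown with a short worked calculation. The sketch below counts how many pixels cover a square plot; the 10 m plot size is an assumption chosen for illustration, while the GSD values are the ones quoted above.

```python
def pixels_per_plot(plot_side_m: float, gsd_m: float) -> float:
    """Number of pixels covering a square plot with the given side length."""
    return (plot_side_m / gsd_m) ** 2


# GSD values quoted in the text; the plot side is a hypothetical example.
PLOT_SIDE_M = 10.0
for platform, gsd in (("Sentinel-2", 10.0), ("Landsat-8", 30.0), ("WorldView-3", 0.31)):
    print(f"{platform}: {pixels_per_plot(PLOT_SIDE_M, gsd):.2f} pixels per plot")
```

At 10 m GSD the whole plot is a single pixel, and at 30 m it is only a fraction of one, so within-plot variability cannot be resolved; at 31 cm roughly a thousand pixels cover the same area.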

In agricultural practice, complex mobile device systems integrating various sensors are being developed. For example, the RoMu4o project introduces a multifunctional orchard robot equipped with a six-degree-of-freedom robotic arm and a hyperspectral spectrometer for direct analysis of collected leaves. Another example is the RobHortic robot—a remotely operated ground vehicle for vegetable crop inspection, equipped with a set of cameras: RGB, multispectral, and hyperspectral (400–1000 nm) directed at the plants (Cubero et al. [

49]). This synergistic approach underpins modern precision agriculture, enabling dynamic crop monitoring and predictive management.

4. Integration of RS and Robotics in Agricultural Practice

The integration of remote sensing (RS) technologies with autonomous robotic platforms is revolutionizing precision agriculture by enabling high-resolution, real-time monitoring and management of crop and soil conditions. This chapter presents key applications of such integration, including spatial variability analysis of soil and plant parameters, nitrogen resource mapping for precision fertilization, and the fusion of in situ data with Geographic Information Systems (GISs) and yield mapping systems to optimize agricultural practices.

4.1. Spatial Variability Analysis of Soil and Plant Parameters

Field measurements consistently demonstrate substantial spatial heterogeneity in soil chemical and physical properties, such as pH, total and potentially mineralizable nitrogen content (N-min), availability of phosphorus, potassium, and magnesium, cation exchange capacity (CEC), moisture content, and clay fraction. Capturing this variability through dense sampling grids is essential for accurate characterization of local edaphic conditions and serves as a critical reference for calibrating remote sensing-based diagnostic models (Green & Ampt [

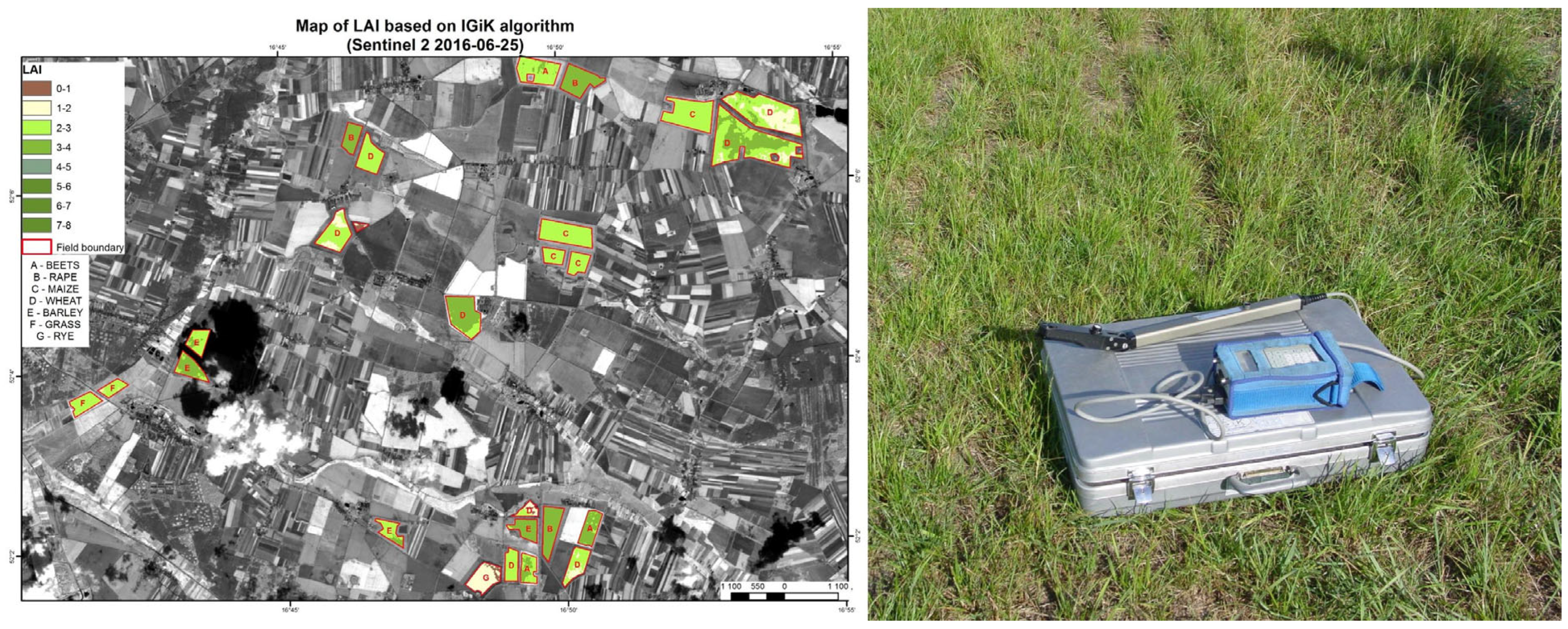

50]). Ground-based autonomous robots equipped with multisensor arrays—including visible to near-infrared (VIS-NIR) spectrometers, soil moisture probes, and electrochemical sensors—enable continuous, real-time acquisition of soil properties at fine spatial scales. Simultaneously, aboveground plant parameters, such as the leaf area index (LAI) (

Figure 3), pigment-based vegetation indices (e.g., SIPI, MCARI), chlorophyll content, and water stress indices (e.g., WBI, MSI) can be recorded and spatially correlated with soil data to provide an integrated soil–plant health assessment (Ünal et al. [

51]).

This high-resolution insight into field heterogeneity directly supports nitrogen mapping and precision fertilization practices. In situ measurements of total nitrogen and N-min, collected by mobile autonomous robots equipped with proximal sensing technologies (NIR spectrometry, EC probes, optical sensors), enable dynamic, adaptive nitrogen mapping (Vakilian & Massah [

52]). Integration of robotic sensor data with remote sensing-derived indices (e.g., NDRE, REIP, GNDVI) ensures plant- and field-scale insight into nitrogen dynamics (Lucas Guillén [

53]).

Advanced spatial interpolation and data analysis methods, including regression, PCA, spatial segmentation, and anomaly detection, are used to interpret nitrogen status and support variable-rate technology (VRT) fertilization. Multi-temporal data allows the generation of long-term nitrogen reference maps, improving fertilization efficiency and environmental sustainability.

4.2. Integration with Geographic Information Systems and Yield Mapping

The fusion of in situ data acquired by autonomous mobile platforms with Geographic Information Systems (GISs) facilitates advanced spatial modeling and comprehensive analysis of agrochemical and crop physiological variability. Soil parameters—such as pH, electrical conductivity, mineral nitrogen content, cation exchange capacity, and texture—alongside crop morphological and physiological indicators (e.g., spectral indices, leaf area, water stress) are georeferenced using precise GNSS positioning.

This integrated data supports the generation of spatial variability maps that can be cross-referenced with yield maps collected by combine harvesters equipped with yield monitoring systems or with remote sensing-derived biomass estimates. Correlation and regression analyses, including multivariate and geostatistical approaches (conditional kriging, co-kriging), identify key factors influencing productivity at fine spatial scales (Hengl et al. [

54]).

Temporal analysis of yield data over multiple seasons reveals persistent zones of high or low productivity, enabling the establishment of operational management zones tailored for optimized input application, including fertilization, liming, and irrigation. This approach enhances agronomic decision-making, optimizing resource use efficiency and promoting sustainable agricultural production while preserving environmental quality.
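One common way to operationalize this multi-season analysis is sketched below, under stated assumptions: yields are first normalized by the field mean of each season, and each grid cell is then classified by its mean relative yield and its temporal coefficient of variation (CV). The 0.15 CV threshold, the zone labels, and the sample data are illustrative choices, not values from the cited studies.

```python
from statistics import mean, pstdev
from typing import List


def classify_zones(yields_by_season: List[List[float]], cv_threshold: float = 0.15) -> List[str]:
    """Classify grid cells from multi-season yields (same cell order each season)."""
    # Normalize each season by its field mean so that wet and dry years are comparable.
    relative = []
    for season in yields_by_season:
        season_mean = mean(season)
        relative.append([value / season_mean for value in season])

    zones = []
    for cell in range(len(yields_by_season[0])):
        series = [season[cell] for season in relative]
        mu = mean(series)
        cv = pstdev(series) / mu  # temporal stability of this cell
        if cv > cv_threshold:
            zones.append("unstable")
        elif mu >= 1.0:
            zones.append("stable_high")
        else:
            zones.append("stable_low")
    return zones


# Hypothetical yields (t/ha) for four cells over two seasons.
seasons = [
    [8.0, 4.0, 9.0, 3.0],
    [8.0, 4.0, 3.0, 9.0],
]
print(classify_zones(seasons))
# ['stable_high', 'stable_low', 'unstable', 'unstable']
```

Cells labeled as stable are natural candidates for fixed management zones, while unstable cells may call for in-season sensing before inputs are prescribed.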

Precision spraying robots integrate RTK-guided actuation with multisensor perception to enable site-specific crop protection and minimize off-target deposition. A detailed case study is provided in Section 5.3 (Precision Spraying), where we discuss implementation details and performance within the overall workflow.

5. Robotic Systems: Case Studies and Comparative Analysis

5.1. Weed Detection and Elimination: Digital FarmHand; FarmWise Vulcan

Autonomous robots equipped with multispectral sensors and LiDAR can identify weeds with high precision, enabling their mechanical removal without the use of herbicides. This capability is enhanced through the application of neural networks, such as YOLOv5. An illustrative example is the Digital FarmHand robot developed by the University of Sydney, which utilizes advanced object detection algorithms to distinguish weeds from crops and mechanically eliminate them, thereby reducing the reliance on chemical herbicides and contributing to more sustainable agricultural practices. While promising, reported performance largely comes from pilot studies, and large-scale field validation is still scarce (“Digital Farmhand boosts food security in the Pacific—The University of Sydney,” n.d. [

55]).

Another example of an advanced agricultural robot is the FarmWise Vulcan, designed for precision weeding and combining state-of-the-art AI technology with operational efficiency and versatility. Equipped with a sophisticated vision system and AI algorithms, the Vulcan can identify and remove weeds with millimeter-level accuracy while safeguarding crops. The robot is capable of weeding over 25 acres per day, operating at speeds ranging from 1.5 to 4 miles per hour, which enables efficient and large-scale field maintenance. These figures are manufacturer-reported, and independent peer-reviewed validation studies remain limited (“FarmWise®|Feeding Our World and Our Future,” n.d. [56]).

5.2. Real-Time Soil Analysis

Autonomous robots can be equipped with electrochemical sensors for measuring pH and near-infrared (NIR) spectrometers for analyzing nitrogen and phosphorus contents in the soil. Sensors and IoT devices collect real-time data on soil chemical composition, moisture, and the availability of macro- and micronutrients. Additionally, unmanned aerial vehicles (UAVs) conduct aerial surveys to detect changes in soil health over large areas, enabling early identification of potential problems. Robotic soil sampling systems automate the collection of samples at various depths, improving the accuracy of soil analysis and reducing labor costs. These systems also allow for real-time soil analysis, enabling farmers to adjust fertilization and irrigation practices dynamically.

The integration of robotic systems in agriculture leads to greater efficiency, precision crop management, and cost savings through optimized input use. The data collected are used to generate nutrient distribution maps that support fertilization optimization. These systems integrate with GPS data, allowing for georeferenced soil mapping. An example of such a solution is the Smartcore robot developed by Rogo Ag, which collects precise soil samples for laboratory analysis, assisting farmers in nutrient management decision-making (“Soil Sampling Services,” n.d. [

57]).

5.3. Precision Spraying

Autonomous row-crop robots increasingly pair centimeter-level localization (GNSS/RTK) with vision-based plant/weed detection to deliver site-specific crop protection. Instead of broadcast spraying, controllers actuate individual nozzles or micro-dosing mechanisms along pre-mapped plant/row coordinates, reducing off-target deposition and enabling direct use of RS-derived prescription maps. A representative implementation is the FarmDroid FD20, which uses seed-position tracking and RTK to micro-spray on- and inter-row targets; manufacturer data indicate substantial input savings relative to blanket application. Quantitative performance is summarized in the robot comparison in Table 4.
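The nozzle-level actuation logic described above can be sketched as follows. This is a simplified illustration and not the control scheme of any specific robot: it assumes a local field frame in meters, a boom perpendicular to a travel direction along the x axis, and a square tolerance window around each nozzle. The function name, nozzle offsets, and the 3 cm tolerance are hypothetical.

```python
from typing import List, Sequence, Tuple


def nozzles_to_fire(
    boom_x: float,
    boom_y: float,
    nozzle_offsets: Sequence[float],
    targets: Sequence[Tuple[float, float]],
    tolerance: float = 0.03,
) -> List[bool]:
    """Return one on/off flag per nozzle for the current boom position.

    boom_x, boom_y: RTK-derived position of the boom center (m, local frame).
    nozzle_offsets: lateral offset of each nozzle from the boom center (m).
    targets: pre-mapped (x, y) coordinates of plants or weeds to treat.
    """
    flags = []
    for offset in nozzle_offsets:
        nozzle_y = boom_y + offset
        hit = any(
            abs(tx - boom_x) <= tolerance and abs(ty - nozzle_y) <= tolerance
            for tx, ty in targets
        )
        flags.append(hit)
    return flags


# Three nozzles spaced 25 cm apart and two mapped targets (hypothetical values).
offsets = [-0.25, 0.0, 0.25]
mapped_targets = [(1.00, 0.26), (2.00, 0.00)]

print(nozzles_to_fire(1.01, 0.0, offsets, mapped_targets))  # [False, False, True]
print(nozzles_to_fire(1.50, 0.0, offsets, mapped_targets))  # [False, False, False]
print(nozzles_to_fire(2.00, 0.0, offsets, mapped_targets))  # [False, True, False]
```

A real controller would also compensate for heading, valve latency, and forward speed; those terms are omitted to keep the targeting principle visible.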

AgAR is an autonomous agricultural robot developed by COMING Computer Engineering in collaboration with the Faculty of Mechanical Engineering in Niš, Serbia. Equipped with advanced optical sensors, stereoscopic cameras, and GPS/RTK systems, AgAR accurately monitors crop conditions by analyzing plant health, soil quality, and the presence of pests.

Leveraging artificial intelligence and a LiDAR system, the robot supports farmers in making rapid decisions related to fertilization, irrigation, and crop protection, thus enhancing agricultural efficiency and promoting sustainable resource management. Its modular design enables adaptation to various terrain conditions and user-specific requirements.

Autonomous robots can be equipped with acoustic sensors that detect insect activity based on sound analysis. One notable example is the “WhisperSense” project, which employs a network of acoustic sensors to monitor insect-generated sounds in agricultural environments. This system analyzes acoustic signals in real time, enabling the localization and identification of pests even under low-light conditions where traditional vision-based methods may fail (Bagde [

58]).

6. Workflow Challenges in Integrating RS and Autonomous Robots (Perception–Decision–Execution)

6.1. Perception Layer: Acquisition, Calibration, Environment

Accurate multi-platform fusion begins with rigorous radiometric, atmospheric, spatial, spectral, and temporal harmonization. In practice, radiometric/atmospheric corrections (e.g., Sen2Cor, FLAASH/QUAC) and precise georectification with GCPs/DEMs precede standardized index computation (NDVI, NDRE, MCARI) to ensure cross-sensor/date comparability (Xue & Su [

31]; Sishodia et al. [

37]; Colomina & Molina [

59]).

Cloud cover and solar illumination are among the most critical environmental variables influencing the quality of passive imaging, which includes both multispectral and hyperspectral observations. Uneven lighting conditions can cause substantial variability in spectral reflectance values, leading to distortions in vegetation indices such as the NDVI, SAVI, or EVI, and consequently to misinterpretations of crop physiological status (Song et al. [

60]). During periods of fluctuating cloudiness and low solar angles, the use of atmospheric correction methods, data normalization techniques, or active sensors—such as LiDAR or SAR—that operate independently of illumination conditions becomes essential (Feng et al. [

61]).

Wind exerts its influence mainly as a source of mechanical instability for UAV platforms and ground-based mobile robots. Atmospheric turbulence can cause micro-movements of the camera, image blur, and loss of sharpness, which reduce the accuracy of both geometric and spectral measurements (Eltner et al. [

62]). To mitigate these effects, gimbal stabilizers, high-frequency inertial sensors, and image motion compensation algorithms based on photogrammetric techniques and temporal frame alignment are employed.

Terrain topography affects the geometry of recorded data and the spatial variability of reflected radiation intensity. Phenomena such as topographic shadows, differences in exposure, and variability in illumination angles introduce distortions in vegetation indices, particularly in mountainous or steeply sloped areas (Colombo [63]). In such conditions, the application of digital elevation models (DEMs) for topographic correction, along with the integration of data from various platforms (satellite, aerial, and ground-based), is recommended to ensure a consistent and accurate representation of crop conditions.
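As one concrete instance of DEM-based topographic correction, the sketch below applies the classical cosine correction, in which reflectance is scaled by the ratio of the cosine of the solar zenith angle to the cosine of the local illumination angle computed from DEM-derived slope and aspect. The source does not specify which correction method is used, so this choice is an assumption; the cutoff `min_cos_i` is a hypothetical safeguard against the well-known overcorrection of this method on poorly illuminated slopes.

```python
import math
from typing import Optional


def illumination_cos(sun_zenith_deg: float, sun_azimuth_deg: float,
                     slope_deg: float, aspect_deg: float) -> float:
    """Cosine of the local solar incidence angle on a tilted surface."""
    z = math.radians(sun_zenith_deg)
    s = math.radians(slope_deg)
    rel_az = math.radians(sun_azimuth_deg - aspect_deg)
    return math.cos(z) * math.cos(s) + math.sin(z) * math.sin(s) * math.cos(rel_az)


def cosine_correction(reflectance: float, sun_zenith_deg: float, sun_azimuth_deg: float,
                      slope_deg: float, aspect_deg: float,
                      min_cos_i: float = 0.1) -> Optional[float]:
    """Normalize reflectance on a slope to its flat-terrain equivalent.

    Returns None when illumination is too weak for a stable correction.
    """
    cos_i = illumination_cos(sun_zenith_deg, sun_azimuth_deg, slope_deg, aspect_deg)
    if cos_i < min_cos_i:
        return None  # shadowed or grazing illumination: flag instead of correcting
    return reflectance * math.cos(math.radians(sun_zenith_deg)) / cos_i


# Hypothetical pixel: sun at 40 degrees zenith and 150 degrees azimuth, 20 degree slope.
print(cosine_correction(0.30, 40.0, 150.0, 20.0, 150.0))  # sun-facing slope: value is lowered
print(cosine_correction(0.30, 40.0, 150.0, 20.0, 330.0))  # slope facing away: value is raised
```

On flat terrain the correction leaves reflectance unchanged, which is a quick sanity check when wiring slope and aspect rasters into a pipeline.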

Topography also affects the mobility of autonomous robotic systems, which may encounter difficulties in maintaining a stable trajectory in terrain with variable relief. Terrain thus remains a constraint for ground robots despite their relative advantages over aerial sensing platforms. Steep slopes, uneven surfaces, and physical obstacles increase the risk of localization errors, thereby disrupting uniform coverage of the measurement area. Therefore, it becomes necessary to implement navigation systems supported by exteroceptive sensors and trajectory deviation correction algorithms, which improve data recording accuracy even under challenging field conditions (Colombo [63]).

Sudden weather changes, such as heat waves, frosts, or temporary light deficiencies, may induce physiological stress in plants, the effects of which are often complex and dependent on both the phenological stage and crop genotype. For example, in Central Europe in 2016, wheat growth conditions fell significantly below average, primarily due to insufficient photosynthetically active radiation (PAR), which reduced the plants’ assimilation potential (“FAO Knowledge Repository”). Thermal stress is particularly detrimental during flowering and grain filling stages, where temperatures exceeding 32 °C can lead to substantial yield reductions (Barlow et al. [

64]). Winter crops, despite their greater cold tolerance, are vulnerable to cold snaps during the stem elongation phase, which frequently results in frost damage and degradation of grain quality parameters (Xiao et al. [

65]).

Currently applied remote sensing solutions in orchard management face several significant limitations. Many sensors, particularly hyperspectral ones, generate massive amounts of data, considerably increasing both computational and storage requirements (Khanal et al. [

46]). At the same time, advanced sensors (e.g., hyperspectral, LiDAR) are expensive, and access to high-spatial-resolution satellite imagery is often constrained by cost or minimum order area. Additionally, the frequency of satellite overpasses and the occurrence of favorable weather conditions (e.g., cloud-free days) are limited, which weakens the continuity of monitoring.

Another challenge is the complexity of remote sensing data processing. Multidimensional image analysis requires specialized software and high computing power, especially when using machine learning algorithms. The enormous data volumes pose problems related to storage, transmission, and refinement (e.g., noise reduction, atmospheric corrections). Furthermore, high investment costs and the need for staff training slow the adoption of innovative remote sensing systems (“Smart Farming Using AI, IoT, and Remote Sensing” [

66]).

Orchard-specific terrain conditions—such as dense and irregular tree canopies, strong lighting contrasts, and heterogeneous backgrounds (soil, grass)—further complicate accurate image segmentation. Research indicates difficulties in maintaining drones at a safe distance from branches and in detecting small elements (e.g., fruits, leaves, pests) within tree canopies (Popescu et al. [

67]).

An additional limitation is the lack of unified data standards, which hinders comparability between different platforms and sensors. In the case of orchard crops, species and age heterogeneity cause substantial spectral reflectance variability, making generalization of results difficult. Seasonality also poses a problem—the presence of leaves and fruits at specific times of the year means that some data may be outdated or inadequate for a given diagnostic purpose. Moreover, many solutions remain in experimental phases, with no access to commercially available tools adapted to horticultural practice, which limits adoption at the farm level. All these factors hinder large-scale early detection of diseases and the assessment of fruit condition.

6.2. Algorithmic Layer: Physics-Based vs. ML/DL, Data Needs, Transferability

Robust pipelines combine physics-based models with machine learning. Coupling EO with crop models (APSIM, DSSAT) and radiative transfer models (e.g., PROSAIL) enhances predictive/diagnostic value: PROSAIL links leaf/canopy traits to reflectance and supports retrieval of the LAI and chlorophyll and leaf water content (Croft et al. [

38]).

In parallel, ML/DL pipelines (SVM/RF and CNN architectures such as U-Net and YOLO) handle weed detection, fruit localization, and disease symptom mapping, while unsupervised methods (PCA, k-means, t-SNE) reveal latent patterns (dos Santos Ferreira et al. [39]). Yet complex datasets require precise calibration and validation: comparison with reference field measurements is essential for accuracy, with IDW/kriging used for mapping and, in the case of kriging, uncertainty estimation (Pereira et al. [44]). Transferability remains limited without standardized in situ references; dense proximal observations collected by robots help reduce domain shift across crops/regions.

6.3. Compute and Communications: Edge, Cloud, Interoperability

To keep the latency to action low, preliminary inference runs on the edge (embedded GPUs/ML accelerators) for fast segmentation and stress detection, while the cloud handles model training, long-term analytics, multi-farm aggregation, and FMIS integration (Shahbazi et al. [40]; Maheswari et al. [43]). This division of labor shortens the perception-to-action loop and scales across fields (Wu et al. [15]). Hybrid communications combine local processing with 4G/5G/IoT uplinks and farm Wi-Fi/Ethernet, and ISOXML/ADAPT-compliant exports allow robot outputs to be ingested cleanly by FMISs (Getahun et al. [68]).

Without edge prioritization and standards-compliant outputs, perception-to-prescription pipelines stall on bandwidth or break at the FMIS boundary; open formats and disciplined metadata are therefore prerequisites for scaling beyond pilots (Botta et al. [

41]).

6.4. Decision and Execution: GISs/DSSs/FMISs, VRT, Uncertainty

GISs/DSSs support spatial modeling, variability maps, management zones, and temporal forecasts; coupling RS with PROSAIL/APSIM/DSSAT improves diagnostics and context for decision-making (Mulla [69]). Imagery/sensor feeds then produce prescriptions for variable-rate technology (VRT); low-latency perception-to-execution links enable site-specific spraying with major input reductions, e.g., RTK-guided micro-spraying with nozzle-level actuation (see Section 5.3) (Zarco-Tejada et al. [33]; FarmDroid FD20 spec [70]).

Uncertainty awareness must be explicit: kriging yields both estimates and an uncertainty surface, whereas IDW yields estimates only and needs cross-validation to quantify error; Quality Control (QC) flags from preprocessing (atmosphere/BRDF/registration) should propagate through modeling/fusion so that prescriptions carry confidence alongside rates (Hengl et al. [54]).
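A minimal sketch of how QC flags might travel with a prescription is given below. The flag names, bit values, and record layout are hypothetical and do not correspond to any FMIS or ISOXML schema; the point is only that flags from different processing stages are merged per cell and exported next to the rate.

```python
from enum import IntFlag
from typing import Dict, List, Sequence


class QC(IntFlag):
    """Hypothetical per-cell quality flags that can be combined bitwise."""
    OK = 0
    ATMOSPHERIC_RESIDUAL = 1
    BRDF = 2
    COREGISTRATION = 4


def merge_flags(*layers: Sequence[QC]) -> List[QC]:
    """Combine flags from several processing stages, cell by cell."""
    merged = []
    for cell_flags in zip(*layers):
        combined = QC.OK
        for flag in cell_flags:
            combined |= flag  # a cell keeps every issue raised upstream
        merged.append(combined)
    return merged


def attach_confidence(rates: Sequence[float], flags: Sequence[QC]) -> List[Dict]:
    """Pair each prescribed rate with its flags and a simple confidence marker."""
    return [
        {"rate": rate, "flags": flag, "confident": flag == QC.OK}
        for rate, flag in zip(rates, flags)
    ]


# Two cells: the second one was flagged in preprocessing and again in fusion.
preprocessing = [QC.OK, QC.BRDF]
fusion = [QC.OK, QC.COREGISTRATION]
prescription = attach_confidence([120.0, 80.0], merge_flags(preprocessing, fusion))
for cell in prescription:
    print(cell)
```

Downstream, a DSS could fall back to a uniform rate or request re-acquisition for cells where `confident` is false, rather than acting on a low-quality estimate.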

6.5. Interoperability, Governance, Scalability

Automation depends on standards and data governance. ISOXML/ADAPT-compliant outputs and consistent metadata lower transaction costs and enable FMIS/DSS consumption; edge/cloud partitioning plus open formats are prerequisites for operational scale. At larger scales, digital agriculture platforms (FAO/Copernicus) demonstrate orchestration of RS + IoT + AI for risk assessment and resource planning—patterns that on farms translate into interoperable, auditable actuation (Botta et al. [

41]).

7. AI- and IoT-Driven Automation for RS–Robot Integration

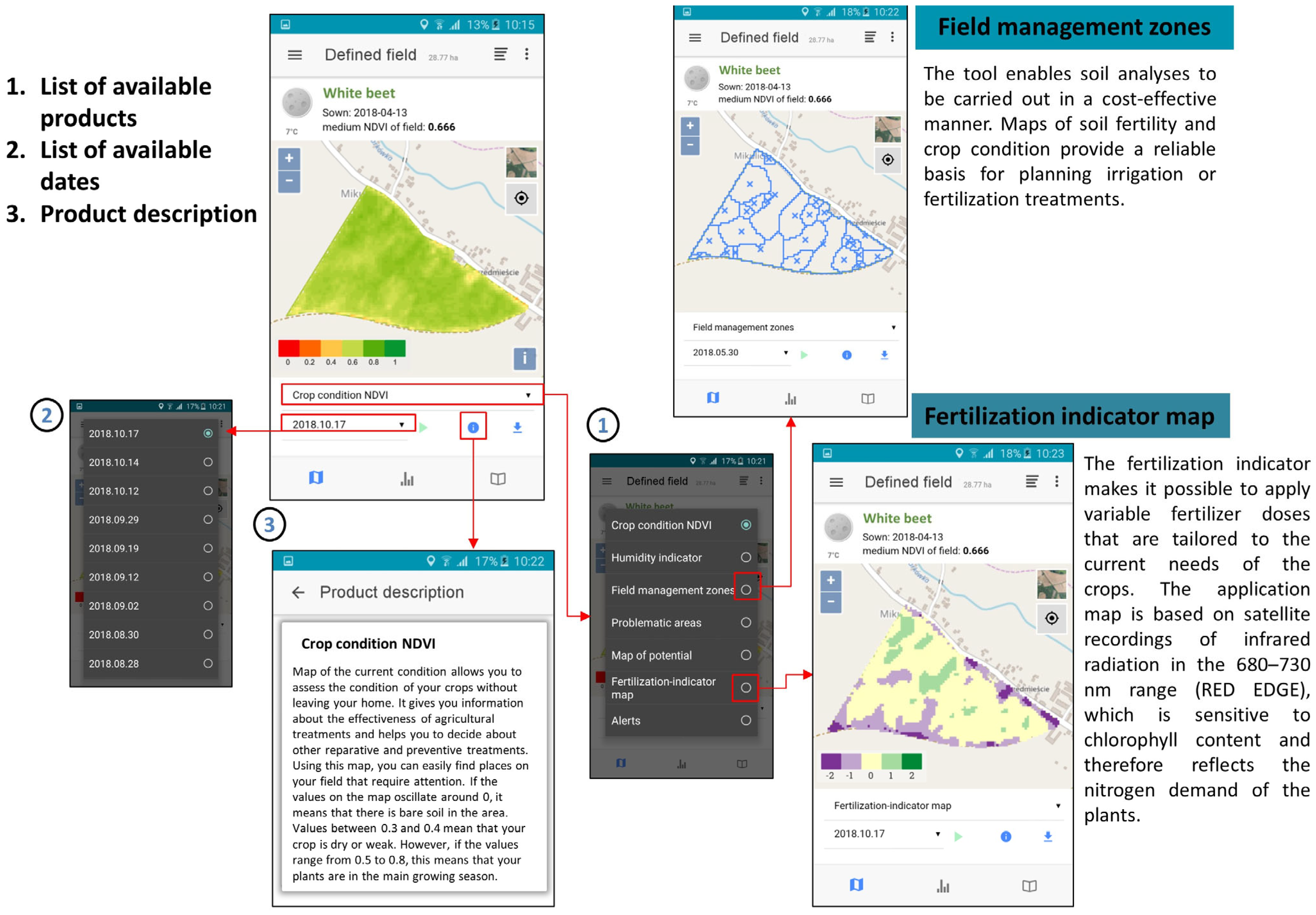

The integration of remote sensing with the Internet of Things (IoT) and automation is becoming a key trend in modern orchard management. Orchards are increasingly equipped with sensor networks comprising field devices—such as weather stations, soil moisture and pH sensors, and cameras monitoring microclimate and plant health—which continuously measure key parameters (air and soil temperature, humidity, chemical composition) and indicators of plant condition. These data streams enable dynamic control of irrigation, fertilization, and crop protection systems (“Smart Farming Using AI, IoT, and Remote Sensing” [

66]). For example, the combined analysis of soil moisture data and vegetation imagery allows for automatic adjustment of irrigation schedules based on the real-time needs of the orchard (Fuentes-Peñailillo et al. [

71]), thereby reducing both over- and under-irrigation and fertilizer overapplication (

Figure 4).

Integration also occurs at the farm management level. Sensor and imagery data are fed into Geographic Information Systems (GISs) and Decision Support Systems (DSSs), enabling comprehensive monitoring. These systems aggregate data in real time and support agronomic decision-making, such as by generating yield variability maps, delineating zones of differing productivity, and recommending variable rates of fertilizers or plant protection products. On a global scale, initiatives like digital agricultural platforms (e.g., FAO and the Copernicus Agriculture project) increasingly combine remote sensing data with IoT and artificial intelligence. These platforms support remote crop management, water resource planning, and automated risk assessment, laying the foundation for full automation of agricultural operations.

In orchard systems, the role of so-called edge IoT is growing—wireless sensor networks capable of local data processing without the need for constant connection to a central server, enabling operation in areas with limited connectivity. These systems can also interface with autonomous vehicles, such as spray robots or fruit harvesters, which execute tasks based on sensor data. The implementation of systems that couple remote sensing imagery with predictive machine control is a key development path in the context of Agriculture 4.0. In the future, tighter integration with “digital twin” platforms is anticipated, in which the physical orchard has a continuously updated virtual representation, based on sensor and imagery-derived data.

Future Development Paths Using Artificial Intelligence

In the future, advanced artificial intelligence (AI) and machine learning (ML) techniques will become increasingly important. In the analysis of satellite and aerial imagery, deep learning architectures are prevailing. Convolutional neural networks (CNNs), such as YOLO, Faster R-CNN, and U-Net, enable highly accurate detection and segmentation of orchard elements (e.g., trees, fruits, stress symptoms), with significantly greater effectiveness than traditional algorithms (Maheswari et al. [

43]). For example, semantic image segmentation allows the generation of detailed maps of ripe fruit distribution or disease spread within an orchard. The application of hybrid architectures—combining CNNs analyzing RGB images with networks processing NIR bands—further enhances the detectability of specific vegetation features. A notable drawback of these methods is the need for large, carefully annotated training datasets and considerable computational resources. However, these challenges indicate the development direction—such as the use of transfer learning techniques or generative models for automated data augmentation.

In the area of prediction and expert systems, the role of prognostic models is growing. Recurrent neural networks (RNNs), including LSTM and GRU, as well as ensemble algorithms (e.g., random forests, gradient boosting), are increasingly used to integrate remote sensing data (e.g., time series of vegetation indices) with meteorological and soil parameters. These models enable the forecasting of yield volumes, flowering dates, or disease risks well in advance. In parallel, unsupervised methods such as PCA or t-SNE, and clustering algorithms (e.g., k-means), are used to identify latent patterns in agricultural datasets. This supports automatic anomaly detection—for example, areas of water stress or weak plant growth that may not be visible using traditional techniques. Expert systems and fuzzy logic solutions are also being developed for autonomous control of irrigation or crop protection, relying on decision rules and domain-specific knowledge. A notable example includes intelligent irrigation systems that control pumps in real time based on soil moisture sensors and weather forecasts (Hoque et al. [

72]).
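The kind of rule-based irrigation control mentioned above can be sketched in a few lines. All thresholds and the linear dose rule are illustrative assumptions rather than parameters from the cited system; a deployed controller would calibrate them per soil type and crop.

```python
def irrigation_minutes(
    soil_moisture_pct: float,
    forecast_rain_mm: float,
    target_pct: float = 30.0,
    minutes_per_pct: float = 4.0,
    rain_skip_mm: float = 5.0,
) -> float:
    """Return the pump run time in minutes for one control cycle.

    Rule 1: skip irrigation if enough rain is forecast.
    Rule 2: otherwise irrigate in proportion to the moisture deficit.
    """
    if forecast_rain_mm >= rain_skip_mm:
        return 0.0
    deficit = target_pct - soil_moisture_pct
    if deficit <= 0:
        return 0.0
    return deficit * minutes_per_pct


print(irrigation_minutes(22.0, 0.0))   # dry soil, no rain forecast -> 32.0
print(irrigation_minutes(22.0, 10.0))  # rain expected -> 0.0
print(irrigation_minutes(35.0, 0.0))   # soil already above target -> 0.0
```

Explicit rules like these are easy to audit, which is one reason expert-system and fuzzy-logic controllers remain attractive alongside learned models.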

Among ongoing research initiatives, several projects integrating remote sensing, robotics, and AI are worth highlighting. Demonstrator platforms such as Smart Agri Hubs and the Copernicus for Agriculture program combine automated satellite image analysis with local sensor networks and AI algorithms to build digital twins of farms. Demonstration sites of precision farms are also being established, where autonomous robots collect visual, soil, and weather data, and AI systems make intervention decisions. Globally, initiatives are growing that leverage Earth observation satellites and AI in agriculture (e.g., Google Earth Engine for Agriculture, AI for Earth), indicating a trend toward the full digitization and automation of orchard production. Future research will likely focus on further development of deep learning algorithms (including reinforcement and transfer learning) tailored to orchard-specific challenges, optimization of real-time processing (edge computing), and the integration of data from novel sources—such as UAV/LiDAR hybrid drones or networked environmental sensors.

A promising direction is the application of reinforcement learning in the control of autonomous robots performing data acquisition and agrotechnical operations based on visual and sensor data. Hybrid models are also being developed, combining statistical approaches (e.g., multiple regression) with machine learning techniques to improve both prediction accuracy and model interpretability. In the temporal analysis of satellite data, transformer networks and attention-based architectures are gaining importance due to their superior performance with irregular time series. Furthermore, a critical development pathway involves the creation of open labeled orchard datasets, which will enable the training of AI models under diverse soil and climatic conditions.

Taken together, IoT, RS, and robots can implement closed-loop management (sense → decide → act) at sub-meter scales. The most robust architectures combine edge inference for low latency, cloud analytics for fleet-level learning, FMIS interoperability for prescriptions, and digital twins for continuous scenario testing and auditability.

8. Discussion

8.1. Analytical Benefits

The use of remote sensing offers measurable efficiency and environmental benefits. Firstly, it enables more effective use of resources: soil, water, and fertilizers. High-resolution imagery allows for detailed mapping of within-field variability (e.g., soil moisture, nutrient levels, disease occurrence), which facilitates site-specific application of inputs such as fertilizers or irrigation. Machine learning and the Internet of Things (IoT) support the precise control of agricultural machinery, significantly reducing losses and chemical runoff (Songara & Patel [27]). This reduces production costs while maintaining or even increasing yields; studies indicate that the implementation of remote sensing technologies can lead to higher yields with lower input costs, thus improving production efficiency (Barbedo et al. [36]).

Secondly, remote sensing optimizes crop protection. Multispectral imagery enables the generation of maps showing weed distribution, localized plant stress, or early symptoms of diseases and pests. Image-based detection systems facilitate site-specific spraying (e.g., nozzle groups controlled on the basis of processed images), which leads to a significant reduction in pesticide and herbicide use. For instance, a precision spraying system with real-time sensors reduced the application of plant protection products in soybean and maize crops by more than half, while maintaining previous yield levels. Detailed monitoring of crop conditions also reduces preventive treatments, as actions can be limited to areas where intervention is actually needed (Zanin et al. [73]).

Thirdly, the quality of decision-making data is significantly improved. The availability of continuous, frequent measurements (e.g., from satellites, UAVs, or ground-based platforms) provides farmers with near-real-time access to multispectral images and predictive vegetation indices. These data are integrated in cloud-based environments and Farm Management Information Systems (FMISs), which offer real-time reporting and visualization tools (Getahun et al. [68]). This enables faster decision-making and improved planning of agronomic operations. At the same time, the environmental footprint is reduced: automation and individualized treatment applications help limit groundwater contamination and greenhouse gas emissions.

8.2. Workflow Bottlenecks (Perception–Decision–Execution)

Cloud cover and illumination asymmetries bias passive optical indices (NDVI/SAVI/EVI), while slope-induced shadows and BRDF effects require DEM-based topographic correction or fallback to active sensors (LiDAR/SAR) (Song et al. [60]; Feng et al. [61]). Thermal retrievals are confounded by atmospheric humidity and temperature, demanding compensation and careful calibration (Messina & Modica [21]). Wind-induced platform motion degrades geometry/spectra and is mitigated through gimbals, high-rate IMUs, and motion compensation. At the ground level, topography also affects robot mobility: slopes and roughness inflate localization error and risk uneven coverage, so DEM-aware routing, SLAM/LiDAR mapping, and deviation correction are required to sustain data fidelity (Colombo [63]; Li et al. [74]).
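One common form of DEM-based topographic correction is the cosine correction, which rescales a reflectance value by the ratio of the cosine of the solar zenith angle to the cosine of the local illumination angle derived from slope and aspect. The sketch below assumes this simple method purely for illustration; the cited studies may use different corrections, and the function names and the `min_cos_i` cutoff are hypothetical.

```python
import math


def cos_incidence(sun_zenith_deg, sun_azimuth_deg, slope_deg, aspect_deg):
    """Cosine of the local solar incidence angle on a tilted surface."""
    sz = math.radians(sun_zenith_deg)
    sl = math.radians(slope_deg)
    d_az = math.radians(sun_azimuth_deg - aspect_deg)
    return math.cos(sz) * math.cos(sl) + math.sin(sz) * math.sin(sl) * math.cos(d_az)


def cosine_correct(reflectance, sun_zenith_deg, sun_azimuth_deg,
                   slope_deg, aspect_deg, min_cos_i=0.1):
    """Cosine topographic correction of a single reflectance value.

    Returns None where the surface is so weakly lit that the correction
    would blow up; such pixels are candidates for active-sensor fallback.
    """
    cos_i = cos_incidence(sun_zenith_deg, sun_azimuth_deg, slope_deg, aspect_deg)
    if cos_i < min_cos_i:
        return None
    return reflectance * math.cos(math.radians(sun_zenith_deg)) / cos_i


if __name__ == "__main__":
    # Same surface reflectance observed on a sun-facing and a shaded slope.
    print(cosine_correct(0.30, 40.0, 150.0, 20.0, 150.0))
    print(cosine_correct(0.30, 40.0, 150.0, 20.0, 330.0))
```

The cosine method is known to overcorrect weakly illuminated slopes, which is why the sketch masks pixels below a cutoff instead of correcting them.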

Hyperspectral modules provide fine biochemical sensitivity but generate massive data volumes and higher processing costs; practical pipelines need on-device preprocessing, dimensionality reduction, and edge–cloud partitioning to keep latency to action low (Dong & Chen [22]; Wang & Menenti [23]). On the algorithmic side, physics-based retrievals (e.g., PROSAIL) offer interpretability but require well-specified priors, whereas ML/DL excels on heterogeneous streams yet depends on domain-matched training data and standardized in situ references to generalize across crops/regions. Robotic proximal sensing helps densify ground truth and reduce domain shift (Croft et al. [38]).

8.3. Methodological Transparency and Transferability

Current crop monitoring methods face numerous limitations, particularly regarding access to in situ data and reliance on expert-driven analyses, which can reduce the precision of forecasts and result in outputs with a high degree of uncertainty. The need to collect new ground-truth data for each study location poses a significant challenge for model parameterization and the assessment of model accuracy. This limitation negatively affects the feasibility of global yield forecasting and necessitates close collaboration with national and local institutions (Łągiewska et al. [75]). An additional challenge lies in the limited availability of in situ data beyond national borders, which significantly hampers model calibration and the execution of global-scale analyses. To address these issues, GEOGLAM has implemented an in situ data coordination strategy aimed at standardizing data and enhancing the use of partner resources, in alignment with the principles of GEOSS. Despite ongoing initiatives such as JECAM, which promote best practices in data sharing, full openness of in situ datasets remains unlikely (Panek-Chwastyk et al. [10]; Dąbrowska-Zielińska et al. [11]).

As outlined in the Methods (Scoping/Systematic Review) Section, a structured scoping review aligned with PRISMA-ScR and reported under PRISMA 2020 guidelines was adopted to improve transparency and reproducibility (Tricco et al. [2]; Page et al. [3]). The review focused on studies that connect perception to action, that is, systems employing RS sensors on autonomous platforms to inform or trigger agronomic operations in the same workflow.

The complexity of remote sensing data analysis also necessitates precise calibration and validation. Comparison with reference field measurements is essential for assessing model accuracy and reliability. The use of data quality standards and spatial interpolation methods (e.g., kriging and inverse distance weighting, IDW) enables accurate mapping of environmental conditions and, in the case of kriging, provides explicit uncertainty surfaces for decision-making (Pereira et al. [44]).
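To make the interpolation step concrete, the following minimal sketch implements inverse distance weighting for point samples such as soil moisture readings. The function name, the sample layout, and the default power of 2 are assumptions for illustration. Unlike kriging, this estimator yields no variance estimate, so it produces a map but not an uncertainty surface.

```python
import math


def idw(samples, x, y, power=2.0):
    """Inverse distance weighted estimate at (x, y).

    samples: list of (x, y, value) tuples in a projected coordinate system.
    """
    if not samples:
        raise ValueError("at least one sample is required")
    weighted_sum = 0.0
    weight_total = 0.0
    for sx, sy, value in samples:
        distance = math.hypot(x - sx, y - sy)
        if distance == 0.0:
            return value  # query coincides with a sample location
        weight = 1.0 / distance ** power
        weighted_sum += weight * value
        weight_total += weight
    return weighted_sum / weight_total


if __name__ == "__main__":
    moisture = [(0.0, 0.0, 10.0), (10.0, 0.0, 30.0)]  # hypothetical readings (%)
    print(idw(moisture, 5.0, 0.0))
    print(idw(moisture, 2.0, 0.0))
```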

In the context of mobile remote sensing, autonomous robots equipped with optical, hyperspectral, thermal, LiDAR, and soil sensors enable acquisition of ultra-high-resolution data unattainable for satellite or UAV systems; on-board edge computing allows rapid analysis and supports adaptation of agronomic practices to local conditions. Such proximal measurements densify ground truth and can improve cross-site transferability when fused with satellite/UAV time series under harmonized preprocessing (Sen2Cor/FLAASH, GCP/DEM registration) and standardized indices (NDVI, NDRE, MCARI) (Shahbazi et al. [40]; Zhao et al. [76]).

8.4. Adoption, Economics, and Governance

Economics shape feasibility. Large farms can justify integrated sensing and actuation stacks; smallholders often cannot. Robotics-as-a-Service (RaaS) offers a path to access without ownership, but profitability, risk-sharing, and coverage remain open questions; policy incentives and clear data-governance rules (farmer control of data) are prerequisites for trust and uptake. Sustainability benefits (reduced chemicals/water, lower emissions) are tangible, yet life-cycle impacts (batteries, e-waste) and equity of access must be addressed to avoid widening the digital divide.

8.5. Research Priorities

When designing an autonomous robot for horticultural and vegetable applications, it is essential to incorporate multi-modal sensors enabling complementary plant observation. The primary sensor should be a high-resolution RGB camera (spectral range 400–700 nm) for general field imaging. Additionally, the robot should be equipped with a multispectral camera covering at least standard visible and near-infrared bands (e.g., red and NIR), enabling the calculation of vegetation indices (e.g., NDVI, NDRE) that assess plant health. To evaluate moisture conditions and thermal stress, a thermal infrared camera (spectral range ~8–12 µm) is recommended. Measuring plant surface temperature allows the calculation of water stress indices such as the Crop Water Stress Index (CWSI), which are useful in irrigation systems.
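The indices named above reduce to short formulas. The sketch below uses the standard normalized-difference definitions of NDVI and NDRE, and the reference-temperature form of CWSI, which assumes that wet and dry reference surface temperatures are available. Function and parameter names are illustrative assumptions.

```python
import math


def normalized_difference(a: float, b: float) -> float:
    """(a - b) / (a + b); returns NaN when the denominator is zero."""
    total = a + b
    return (a - b) / total if total != 0 else math.nan


def ndvi(nir: float, red: float) -> float:
    """Normalized Difference Vegetation Index from NIR and red reflectance."""
    return normalized_difference(nir, red)


def ndre(nir: float, red_edge: float) -> float:
    """Normalized Difference Red Edge index from NIR and red-edge reflectance."""
    return normalized_difference(nir, red_edge)


def cwsi(canopy_temp: float, wet_ref_temp: float, dry_ref_temp: float) -> float:
    """Crop Water Stress Index in [0, 1] from canopy and reference temperatures.

    0 corresponds to a fully transpiring canopy, 1 to a non-transpiring one.
    """
    if dry_ref_temp <= wet_ref_temp:
        raise ValueError("dry reference must be warmer than wet reference")
    index = (canopy_temp - wet_ref_temp) / (dry_ref_temp - wet_ref_temp)
    return min(1.0, max(0.0, index))


if __name__ == "__main__":
    print(ndvi(0.5, 0.1), ndre(0.5, 0.3), cwsi(30.0, 25.0, 35.0))
```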

Hyperspectral sensors provide high-value data by recording hundreds of narrow bands across the visible and near-infrared spectrum. Hyperspectral sensing (e.g., 400–1000 nm) enables the detection of subtle spectral changes associated with diseases and nutrient deficiencies that are often invisible to standard cameras (Terentev et al. [77]). Hyperspectral modules (e.g., push-broom type), whether mounted on UAVs or short-range ground robots, offer comprehensive spectral characterization for tasks such as fruit ripening assessment in orchards. However, hyperspectral acquisition produces large volumes of data, and processing typically requires dimensionality reduction through advanced mathematical models or machine learning algorithms.
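As a deliberately simple stand-in for the model-based dimensionality reduction mentioned above, the sketch below averages adjacent bands (spectral binning). It is not PCA or a learned embedding, only the cheapest reduction a robot could apply on board before transmission. The function name and the bin size are assumptions.

```python
def bin_spectrum(spectrum, bin_size):
    """Average consecutive groups of `bin_size` bands.

    A trailing group shorter than `bin_size` is averaged over the bands
    it actually contains.
    """
    if bin_size < 1:
        raise ValueError("bin_size must be at least 1")
    binned = []
    for start in range(0, len(spectrum), bin_size):
        chunk = spectrum[start:start + bin_size]
        binned.append(sum(chunk) / len(chunk))
    return binned


if __name__ == "__main__":
    pixel = [0.05 + 0.001 * band for band in range(200)]  # synthetic 200-band spectrum
    reduced = bin_spectrum(pixel, 10)
    print(len(pixel), "->", len(reduced))
```

Binning trades spectral resolution for volume, so narrow absorption features can be smoothed away; the bin size should be chosen with the target features in mind.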

The robot should also be equipped with a 3D LiDAR system to accurately reconstruct the spatial structure of crops. LiDAR data enable measurements of plant height and density, canopy shape, and tree dimensions (Terentev et al. [77]). In orchards, this allows for the automatic generation of tree maps and tracking of growth, while also supporting robot navigation between rows. LiDAR has been described as crucial for orchard navigation, as it provides a complete three-dimensional representation of the environment, including ground topography and canopy distribution (Li et al. [74]). In vegetable cultivation, LiDAR may support measurement of stem length and of the spatial distribution of plants in rows, although it is less critical than in tall orchards. Additionally, ultrasonic sensors or mechanically controlled infrared barriers may be used for direct stem density measurement, which is particularly useful for assessing plant coverage in vegetable crops.
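A minimal sketch of how plant or canopy height might be derived from a LiDAR point cloud follows: points are binned into a horizontal grid and the height per cell is taken as the difference between the highest and lowest return. This assumes each cell contains at least one ground return and that the terrain is flat within a cell; the function name and the cell size are illustrative.

```python
def canopy_height_grid(points, cell_size=0.5):
    """Per-cell canopy height from (x, y, z) points given in metres.

    Returns a dict mapping (column, row) grid indices to max(z) - min(z).
    """
    if cell_size <= 0:
        raise ValueError("cell_size must be positive")
    z_min = {}
    z_max = {}
    for x, y, z in points:
        key = (int(x // cell_size), int(y // cell_size))
        z_min[key] = min(z, z_min.get(key, z))
        z_max[key] = max(z, z_max.get(key, z))
    return {key: z_max[key] - z_min[key] for key in z_min}


if __name__ == "__main__":
    cloud = [
        (0.1, 0.1, 0.0), (0.2, 0.3, 2.5), (0.4, 0.2, 1.0),  # cell with a tree
        (1.2, 0.1, 0.1), (1.3, 0.2, 0.1),                   # bare-ground cell
    ]
    print(canopy_height_grid(cloud, cell_size=1.0))
```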

The acquisition system must ensure georeferencing of all data: each image or LiDAR point must be linked to the robot’s GPS/inertial navigation system. The speed and sampling frequency should be selected to ensure complete coverage of the operational area (e.g., multiple row passes). For data synchronization, time calibration algorithms and atmospheric correction (for satellite or UAV imagery), as well as in-field camera calibration, are recommended.
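One way to link each image or scan to the navigation solution is to interpolate the robot track at the sensor timestamp. The sketch below assumes a time-sorted track in projected coordinates (easting and northing in metres) and a shared clock between the camera and the navigation unit; all names are hypothetical.

```python
from bisect import bisect_left


def interpolate_position(track, timestamp):
    """Linearly interpolate (easting, northing) at `timestamp`.

    track: list of (time_s, easting_m, northing_m), sorted by time.
    """
    times = [fix[0] for fix in track]
    if not track or timestamp < times[0] or timestamp > times[-1]:
        raise ValueError("timestamp outside the navigation track")
    i = bisect_left(times, timestamp)
    if times[i] == timestamp:
        return track[i][1], track[i][2]
    t0, e0, n0 = track[i - 1]
    t1, e1, n1 = track[i]
    fraction = (timestamp - t0) / (t1 - t0)
    return e0 + fraction * (e1 - e0), n0 + fraction * (n1 - n0)


if __name__ == "__main__":
    track = [(0.0, 100.0, 200.0), (1.0, 101.0, 200.0), (2.0, 102.0, 201.0)]
    print(interpolate_position(track, 0.5))
    print(interpolate_position(track, 1.5))
```

Linear interpolation is adequate only when the fix rate is high relative to the robot's speed and turning rate; otherwise the inertial data should be used to propagate the pose between fixes.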

Data processing should be implemented on multiple levels. Edge computing devices (e.g., embedded GPUs or dedicated ML accelerators) are recommended for preliminary image analysis and rapid classification (e.g., vegetation/soil segmentation, disease symptom detection). On board the robot, deep learning algorithms such as convolutional neural networks (CNNs) can be applied for weed detection or stress recognition, enabling immediate response (e.g., site-specific spraying). Typical algorithm choices include CNNs for image analysis, and random forests or gradient boosting for parameter regression (e.g., biomass estimation based on vegetation indices). More computationally demanding tasks (model training on large datasets, long-term analysis) should be executed in the cloud or in dedicated computing centers. Cloud architecture enables data aggregation from multiple robots and farms, as well as integration with FMIS software for decision support (e.g., yield forecasts, plant protection recommendations). Virtual platforms also enable the sharing and comparison of historical datasets, improving the quality of analysis.
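As a lightweight illustration of on-board vegetation/soil segmentation, the sketch below thresholds the Excess Green index (ExG = 2g − r − b on chromatic coordinates). This is a classical colour-index baseline, not the CNN approach recommended above, and is shown only because it runs without any model or library. The threshold value and function names are assumptions.

```python
def excess_green(r: float, g: float, b: float) -> float:
    """Excess Green index on chromatic coordinates: (2G - R - B) / (R + G + B)."""
    total = r + g + b
    if total == 0:
        return 0.0  # black pixel: treat as non-vegetation
    return (2 * g - r - b) / total


def vegetation_fraction(pixels, threshold=0.1) -> float:
    """Share of (r, g, b) pixels whose ExG exceeds `threshold`."""
    pixels = list(pixels)
    if not pixels:
        return 0.0
    vegetated = sum(1 for r, g, b in pixels if excess_green(r, g, b) > threshold)
    return vegetated / len(pixels)


if __name__ == "__main__":
    patch = [(50, 200, 50), (120, 100, 80), (40, 160, 40), (90, 90, 90)]
    print(vegetation_fraction(patch))
```

On an edge device, a per-patch vegetation fraction of this kind could serve as a cheap pre-filter that decides which image tiles are worth passing to a heavier classifier.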

In terms of communication, the robot should combine both local and remote approaches. Critical operational information (e.g., disease alerts, driving trajectory) should be processed locally, while large datasets should be transmitted to backend systems. It is recommended to equip the robot with wireless communication modules (e.g., 4G/5G or dedicated IoT networks) for cloud transmission, as well as ports for connectivity to the farm’s local network (Wi-Fi, Ethernet). It is also essential to adhere to interoperability standards (e.g., ISOXML/ADAPT) to ensure that data collected by the robot can be seamlessly integrated with existing farm management systems.

Finally, it is necessary to account for differences between orchard and vegetable production systems. In orchards, the robot operates in irregular, tree-dense terrain, making 3D sensors (LiDAR) and wide-angle cameras a priority for mapping tall canopies and navigating between tree rows (Li et al. [