Banana Bunch Weight Estimation and Stalk Central Point Localization in Banana Orchards Based on RGB-D Images

Abstract

1. Introduction

- (1) We present a method, based on a neural network and structured-light technology, for detecting and localizing banana bunches and stalks in orchards, providing location information for banana-harvesting robots.

- (2) We propose a banana bunch weight estimation model in which the size and weight of banana bunches are estimated from RGB-D image data. Such intelligent monitoring of banana growth information can help farmers make harvest decisions.
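Contribution (1) depends on turning a 2D detection into a 3D position. The sketch below shows one common way to do this. It assumes a pinhole camera model, a depth map already aligned to the color image, and a stalk bounding box produced by the detector. The names `Intrinsics`, `deproject` and `stalk_center_3d`, the use of the box centre as the stalk central point, and the median-depth window are all illustrative assumptions, not the authors' implementation.

```python
import numpy as np
from dataclasses import dataclass


@dataclass
class Intrinsics:
    """Pinhole intrinsics of the depth-aligned color image, in pixels (hypothetical values)."""
    fx: float
    fy: float
    cx: float
    cy: float


def deproject(u, v, z, k):
    """Back-project pixel (u, v) with depth z into camera coordinates (same unit as z)."""
    x = (u - k.cx) * z / k.fx
    y = (v - k.cy) * z / k.fy
    return np.array([x, y, z])


def stalk_center_3d(depth, box, k, half_win=5):
    """Hypothetical helper: 3D point at the centre of a detected stalk box.

    depth: HxW depth map aligned to the color image (0 = no measurement).
    box:   (x1, y1, x2, y2) stalk bounding box in pixels.
    """
    x1, y1, x2, y2 = box
    u, v = (x1 + x2) / 2.0, (y1 + y2) / 2.0
    ui, vi = int(round(u)), int(round(v))
    # Median of valid depths in a small window, to suppress holes and noise in the depth map.
    patch = depth[max(vi - half_win, 0):vi + half_win + 1,
                  max(ui - half_win, 0):ui + half_win + 1]
    valid = patch[patch > 0]
    if valid.size == 0:
        return None  # no usable depth around the stalk centre
    return deproject(u, v, float(np.median(valid)), k)
```

For example, with `Intrinsics(600.0, 600.0, 320.0, 240.0)` and a flat depth map of 1000 mm, a box centred on pixel (320, 240) maps to the point (0, 0, 1000) on the optical axis. Taking a median over a window rather than a single pixel is a design choice that tolerates missing structured-light returns on thin stalks.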

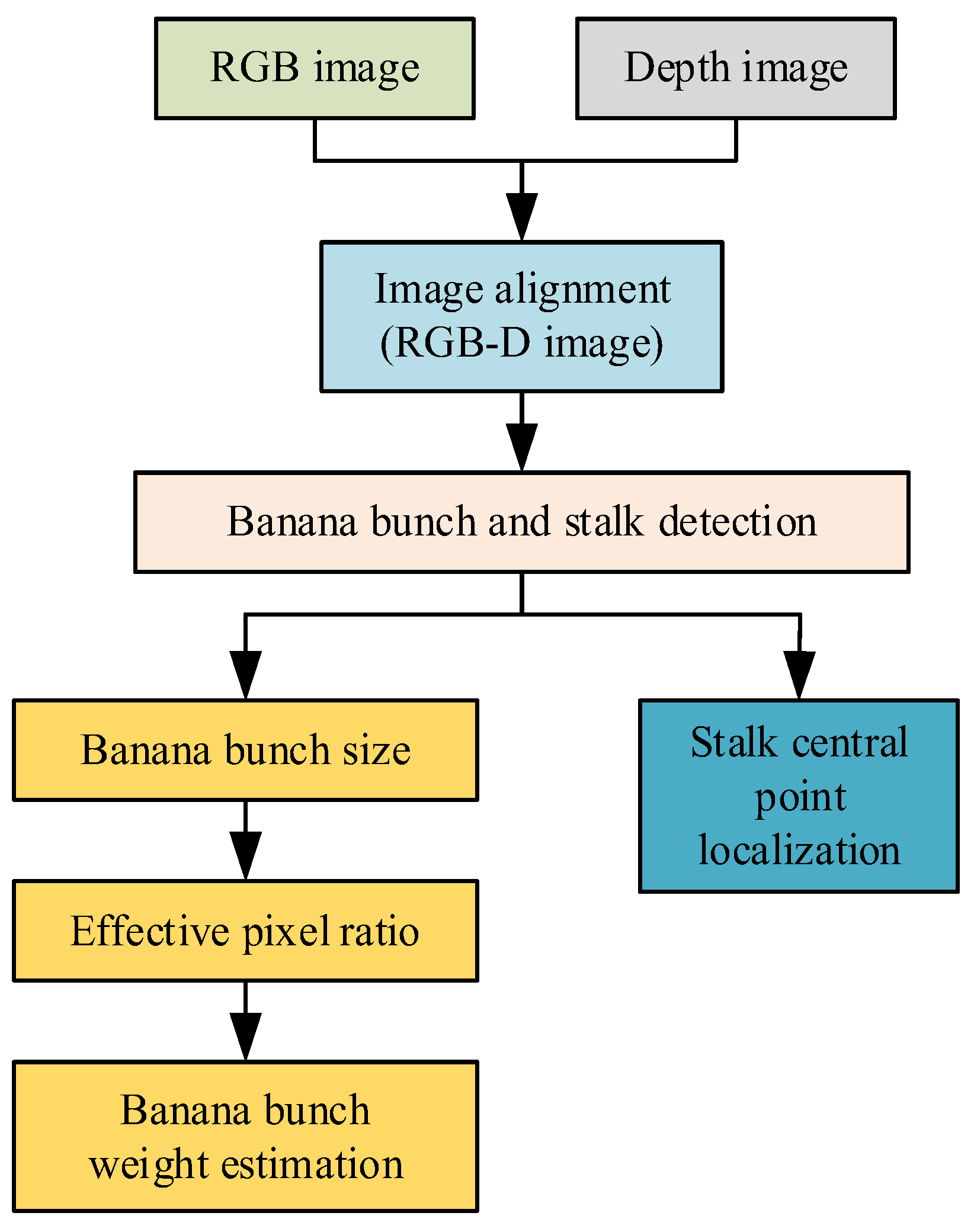

2. Materials and Methods

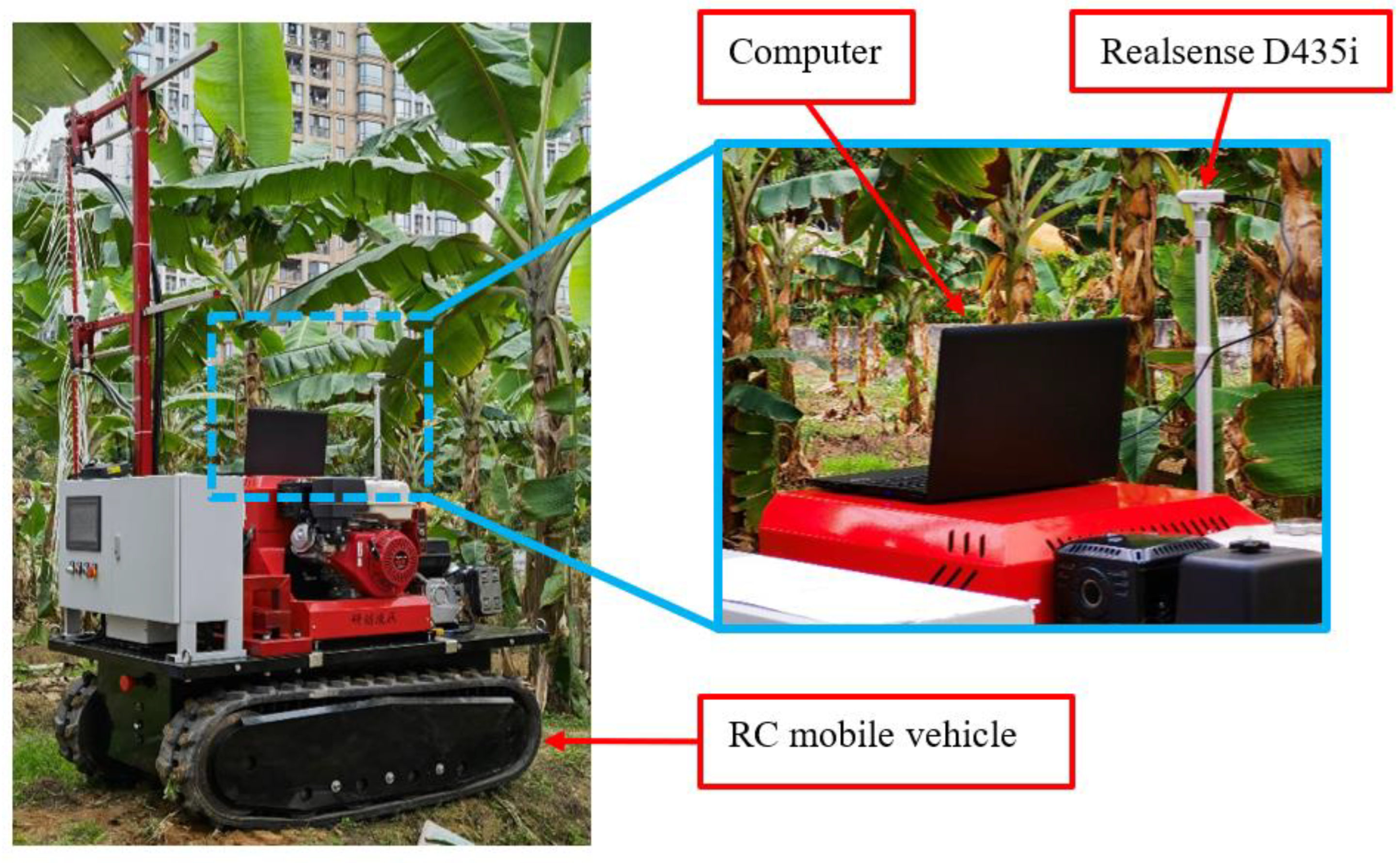

2.1. Sensor System

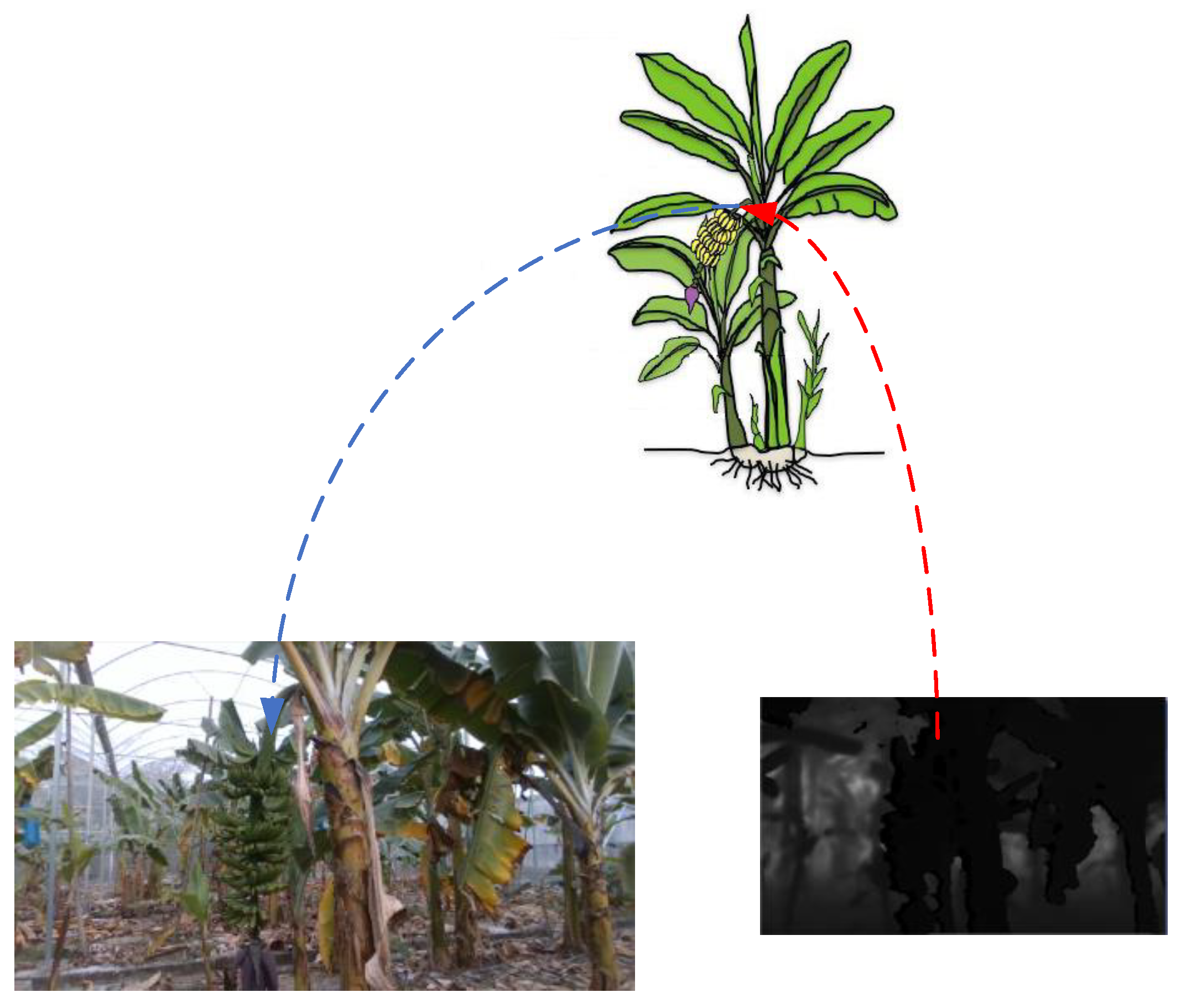

2.2. Image Alignment

2.3. Detection Method

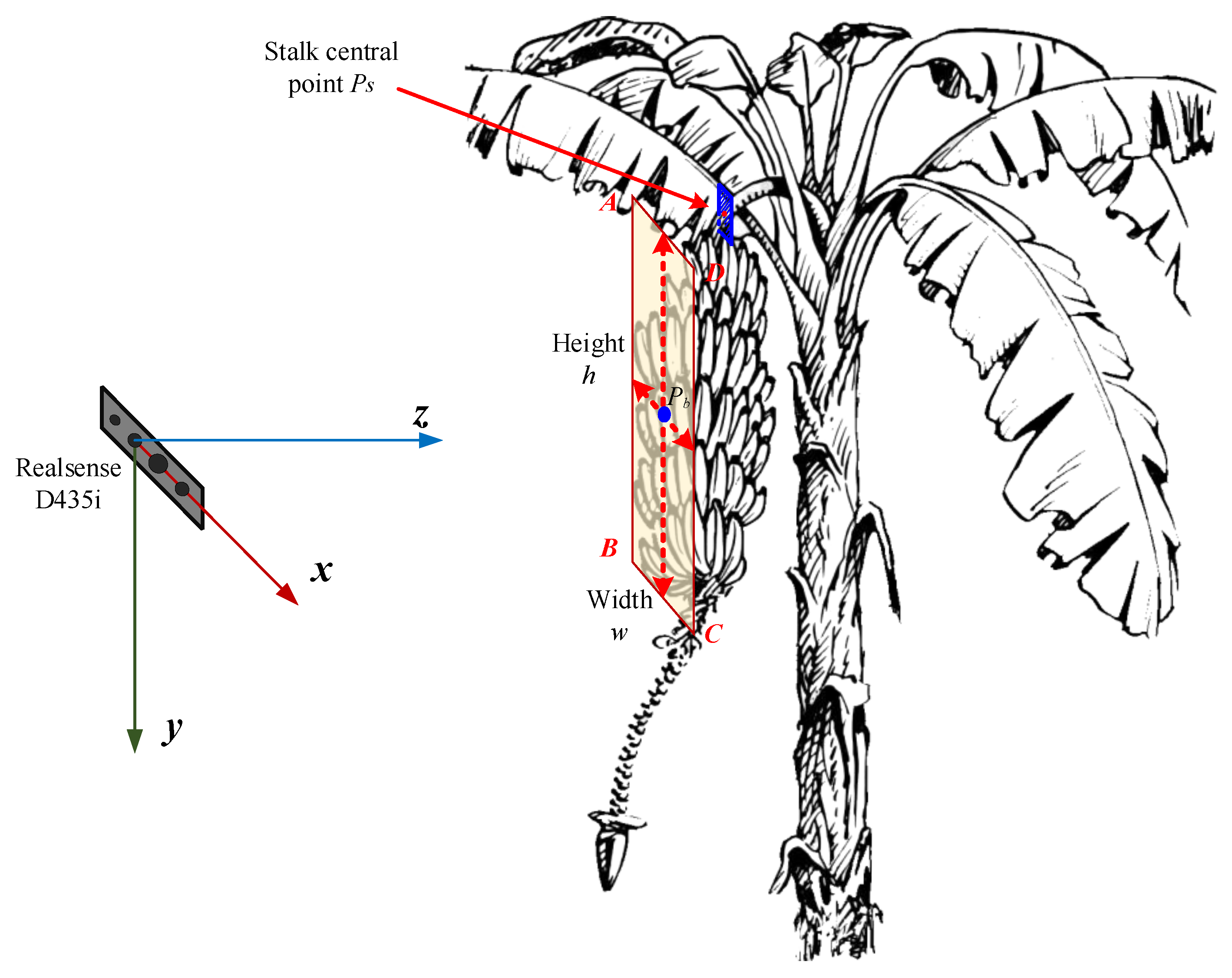

2.4. The Localization of the Banana Stalk Central Point and the Bunch Size Acquisition

2.5. Banana Bunch Weight Estimation

2.5.1. Effective Pixel Ratio

2.5.2. Analysis of Banana Bunch Weight Parameters

3. Experimental Results and Discussion

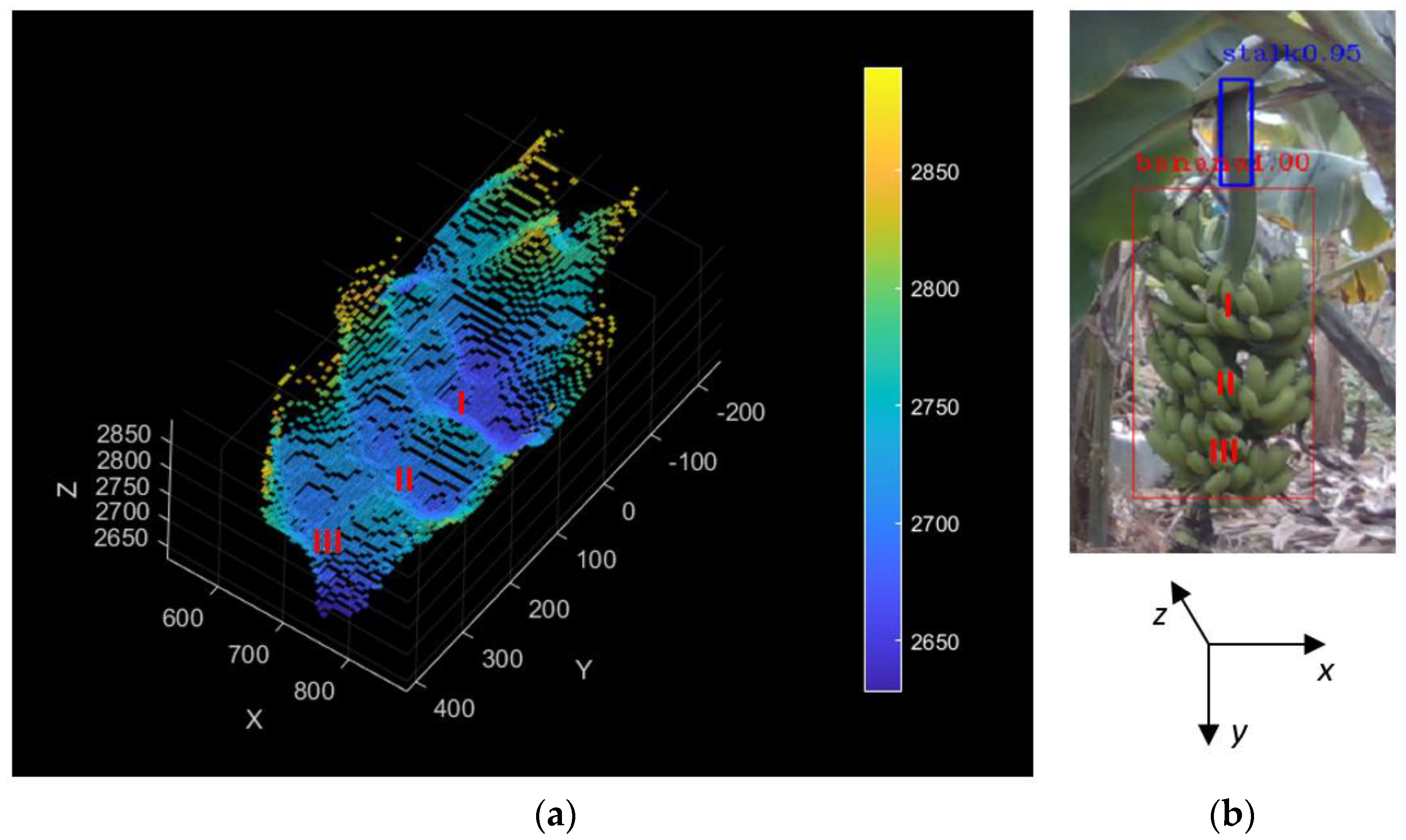

3.1. Results of the Banana Stalk Central Point Localization

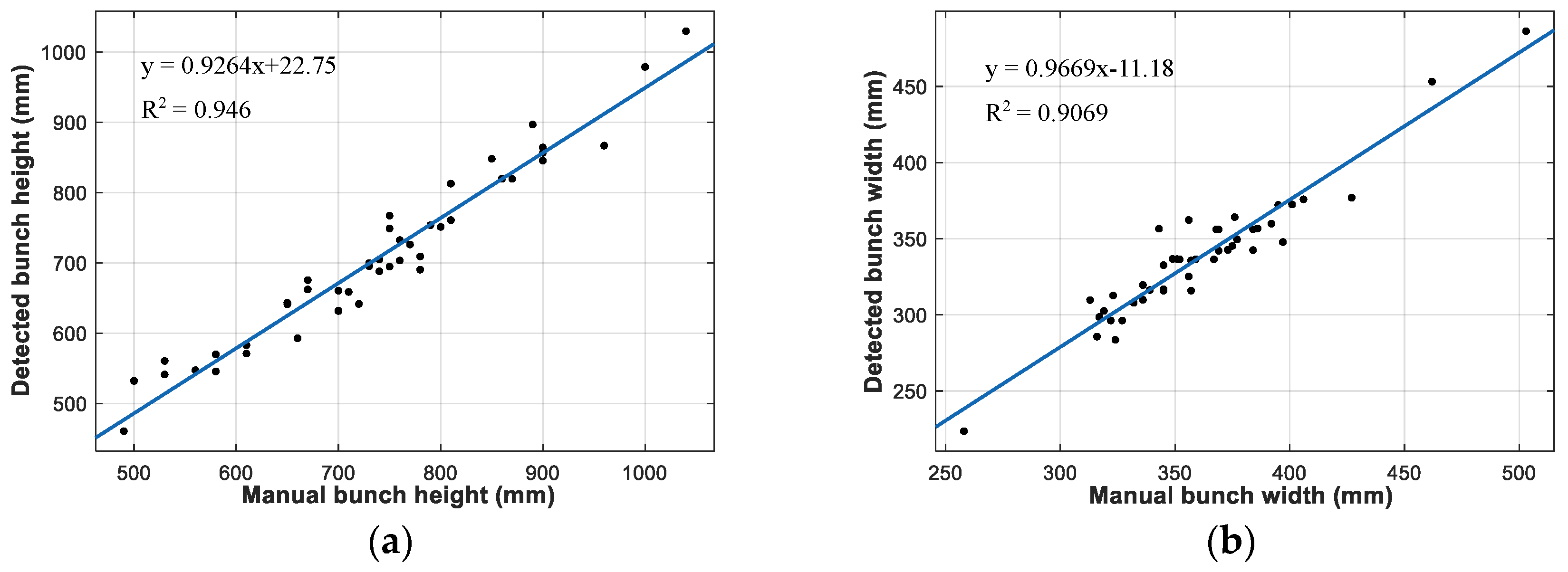

3.2. Results of the Bunch Size Estimation

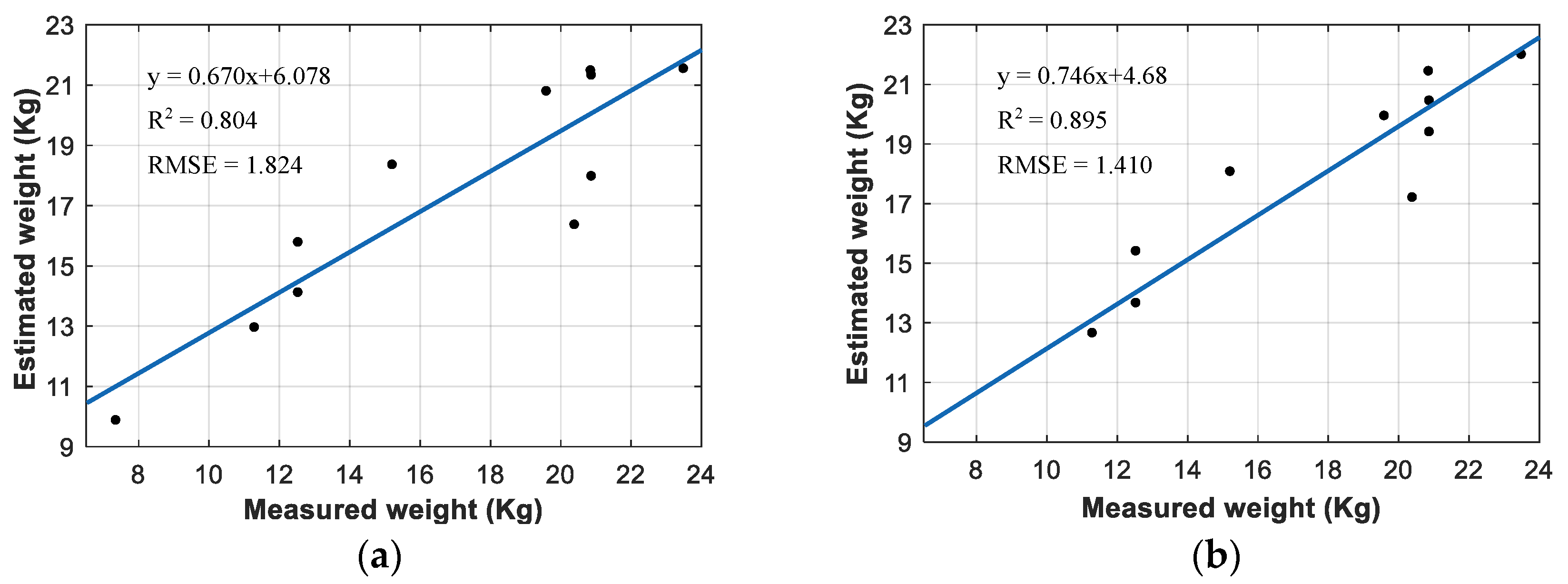

3.3. Results of the Bunch Weight Estimation

4. Conclusions

- (1) A method based on depth cameras and deep learning algorithms was developed to detect and locate banana bunches and stalks in the natural environment. The average localization error was 22.875 mm, demonstrating that the method can provide location information for intelligent banana harvesting.

- (2) A machine vision method was presented to obtain the size information of banana bunches. The R² values of the predicted height and width against the measured values were 0.946 and 0.9069, respectively. The size estimates can be used for bunch weight estimation and are useful for intelligent management during the banana growth period.

- (3) A weight estimation model for banana bunches was established, and an effective pixel ratio was proposed to characterize fruit morphology and obtain more accurate three-dimensional information about banana bunches. Experimental comparison showed that the weight estimation model based on the parameters h + Vp is suitable for estimating the weight of banana bunches in orchards. The R² of the banana bunch weight estimation model was 0.8947 and the RMSE was 1.4102 kg.
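The conclusions report three kinds of figures of merit: a mean localization error in mm, R² values, and an RMSE in kg. The sketch below shows one standard way to compute each. Whether the authors measured localization error as 3D Euclidean distance, and computed R² as 1 − SS_res/SS_tot rather than from a line fitted to predicted versus measured values, is an assumption here.

```python
import numpy as np


def mean_localization_error(pred_xyz, true_xyz):
    """Mean Euclidean distance between predicted and measured 3D points (e.g. in mm)."""
    pred = np.asarray(pred_xyz, dtype=float)
    true = np.asarray(true_xyz, dtype=float)
    return float(np.mean(np.linalg.norm(pred - true, axis=1)))


def r2_score(y_true, y_pred):
    """Coefficient of determination, 1 - SS_res / SS_tot."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    return float(1.0 - ss_res / ss_tot)


def rmse(y_true, y_pred):
    """Root-mean-square error, in the units of y (e.g. kg for bunch weight)."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))
```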

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


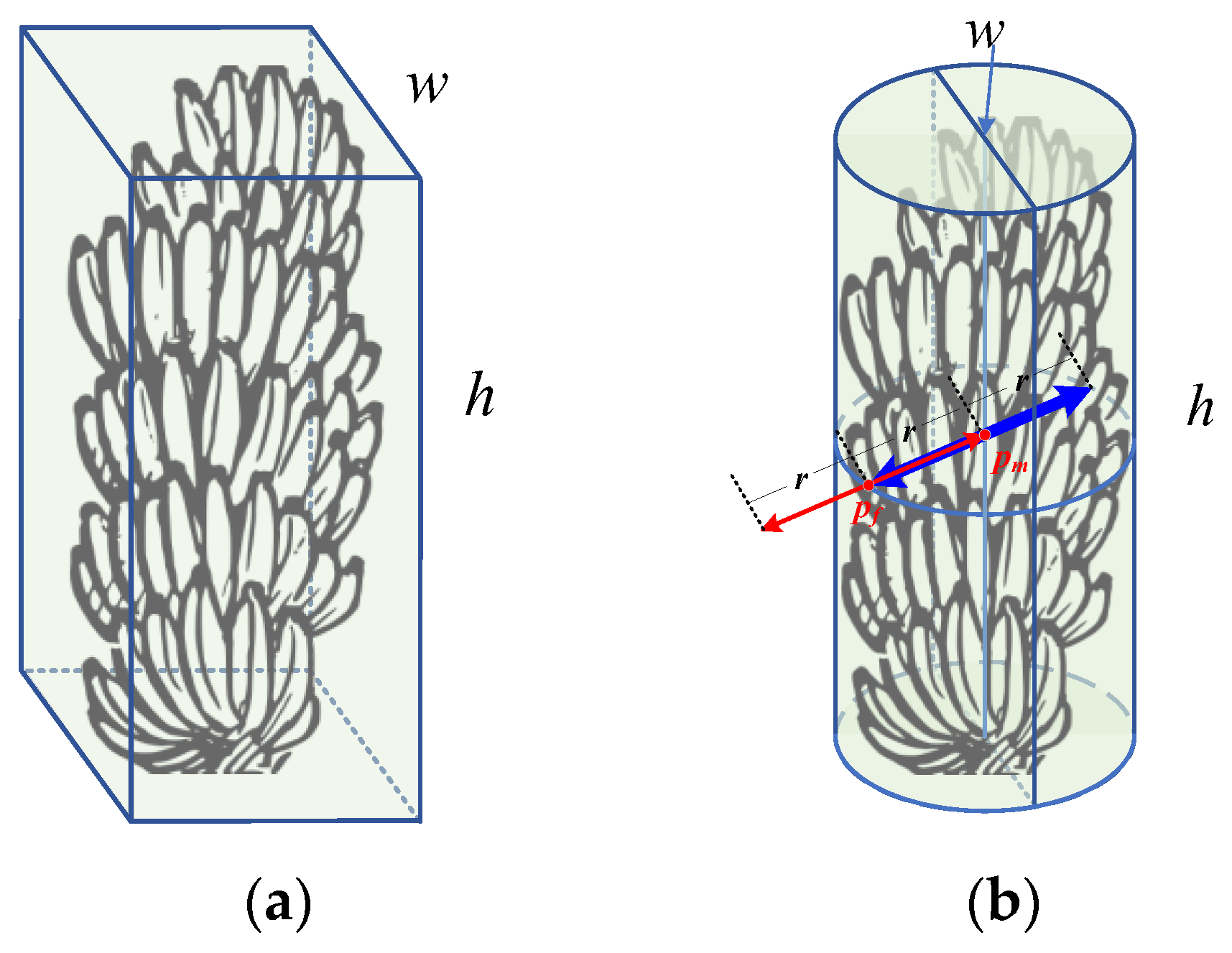

| Parameter | Explanation |
|---|---|
| w | Width of the banana bunch |
| h | Height of the banana bunch |
| P | Effective pixel ratio |
| S | Area of the front rectangle of the cuboid (w × h) |
| V | Volume of the cuboid (w² × h) |
| Sc | Curved surface area of the half cylinder (0.5π × w × h) |
| Vc | Volume of the cylinder |
| Sp | Estimated area of the banana bunch in the field of view |
| Vp | Estimated volume of the banana bunch |
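The geometric proxies in the parameter table follow directly from the measured width w and height h. A minimal sketch, for illustration only: `bunch_shape_features` is a hypothetical name, Sp and Vp are omitted because they depend on the effective pixel ratio P (Section 2.5.1), whose definition is not reproduced here, and the Vc formula is an assumption (a full cylinder of diameter w and height h, consistent with the half-cylinder definition of Sc).

```python
import math


def bunch_shape_features(w, h):
    """Geometric proxies from the parameter table for bunch width w and height h."""
    return {
        "S": w * h,                   # front rectangle of the cuboid
        "V": w ** 2 * h,              # cuboid with a w x w cross-section
        "Sc": 0.5 * math.pi * w * h,  # curved surface of a half cylinder of diameter w
        # Assumption: full cylinder of diameter w and height h, i.e. pi * (w / 2)**2 * h.
        "Vc": 0.25 * math.pi * w ** 2 * h,
    }
```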

| No. | Variable | Regression Equation | R² | RMSE (kg) |
|---|---|---|---|---|
| 1 | w | $W_{\text{banana}} = 0.0345w + 4.201$ | 0.141 | 3.501 |
| 2 | h | $W_{\text{banana}} = 0.0269h - 2.393$ | 0.787 | 1.745 |
| 3 | S | $W_{\text{banana}} = 7.181 \times 10^{-5} S + 1.722$ | 0.682 | 2.128 |
| 4 | Sp | $W_{\text{banana}} = 4.921 \times 10^{-5} S_p + 9.439$ | 0.697 | 2.081 |
| 5 | V | $W_{\text{banana}} = 8.137 \times 10^{-8} V + 8.615$ | 0.512 | 2.637 |
| 6 | Vp | $W_{\text{banana}} = 2.471 \times 10^{-7} V_p + 7.245$ | 0.534 | 2.577 |
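Each row of the single-variable table is a simple linear model, W = slope × x + intercept. The lookup sketch below evaluates any of them and picks out, from the reported R² values, h as the strongest single predictor. The coefficients are copied from the table; the dictionary and function names are illustrative, and inputs must be in the same units the authors used for w, h, S, Sp, V and Vp, which this excerpt does not state.

```python
# (slope, intercept, R2, RMSE in kg) for W_banana = slope * x + intercept, copied from the table.
SINGLE_VAR_MODELS = {
    "w":  (0.0345,    4.201, 0.141, 3.501),
    "h":  (0.0269,   -2.393, 0.787, 1.745),
    "S":  (7.181e-5,  1.722, 0.682, 2.128),
    "Sp": (4.921e-5,  9.439, 0.697, 2.081),
    "V":  (8.137e-8,  8.615, 0.512, 2.637),
    "Vp": (2.471e-7,  7.245, 0.534, 2.577),
}


def predict_weight(variable, x):
    """Bunch weight (kg) predicted by the single-variable model for `variable`."""
    slope, intercept, _, _ = SINGLE_VAR_MODELS[variable]
    return slope * x + intercept


def best_single_predictor():
    """Variable whose model has the highest reported R2."""
    return max(SINGLE_VAR_MODELS, key=lambda name: SINGLE_VAR_MODELS[name][2])
```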

| No. | Variable | Regression Equation | R² | RMSE (kg) |
|---|---|---|---|---|
| 1 | h + S | $W_{\text{banana}} = -2.584 + 0.0224h + 1.459 \times 10^{-5} S$ | 0.811 | 1.661 |
| 2 | h + V | $W_{\text{banana}} = -2.11 + 0.0235h + 1.699 \times 10^{-8} V$ | 0.813 | 1.653 |
| 3 | h + Sp | $W_{\text{banana}} = -2.163 + 0.0219h + 1.081 \times 10^{-5} S_p$ | 0.812 | 1.658 |
| 4 | h + Vp | $W_{\text{banana}} = -2.301 + 0.0233h + 5.331 \times 10^{-8} V_p$ | 0.814 | 1.647 |
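The two-variable rows all have the form W = b0 + b1·h + b2·x. The sketch below evaluates the selected h + Vp model and shows how coefficients of this form can be obtained by ordinary least squares. That the authors fitted the models with plain OLS is an assumption, and `predict_h_vp` and `fit_two_var` are illustrative names. Because Vp is many orders of magnitude larger than h (its coefficient is 5.331 × 10⁻⁸), the columns are rescaled before solving to keep the problem well conditioned.

```python
import numpy as np

# Published h + Vp model (row 4): W = b0 + b1 * h + b2 * Vp
H_VP_COEFFS = (-2.301, 0.0233, 5.331e-8)


def predict_h_vp(h, vp, coeffs=H_VP_COEFFS):
    """Bunch weight (kg) from height h and volume estimate Vp, in the authors' units."""
    b0, b1, b2 = coeffs
    return b0 + b1 * np.asarray(h, dtype=float) + b2 * np.asarray(vp, dtype=float)


def fit_two_var(x1, x2, y):
    """Ordinary least squares for y = b0 + b1*x1 + b2*x2; returns (b0, b1, b2)."""
    x1 = np.asarray(x1, dtype=float)
    x2 = np.asarray(x2, dtype=float)
    y = np.asarray(y, dtype=float)
    X = np.column_stack([np.ones_like(x1), x1, x2])  # design matrix with intercept column
    scale = np.abs(X).max(axis=0)                   # per-column scaling for conditioning
    scale[scale == 0] = 1.0                         # avoid dividing by an all-zero column
    coeffs_scaled, *_ = np.linalg.lstsq(X / scale, y, rcond=None)
    return tuple(float(c) for c in coeffs_scaled / scale)  # undo the scaling
```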

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhou, L.; Yang, Z.; Deng, F.; Zhang, J.; Xiao, Q.; Fu, L.; Duan, J. Banana Bunch Weight Estimation and Stalk Central Point Localization in Banana Orchards Based on RGB-D Images. Agronomy 2024, 14, 1123. https://doi.org/10.3390/agronomy14061123
