Abstract

With the continuous growth of the global population and the increasing demand for crop yield, enhancing crop productivity has emerged as a crucial research objective on a global scale. Weeds, one of the primary biotic factors affecting crop yield, account for approximately 13.2% of annual food loss. In recent years, Unmanned Aerial Vehicle (UAV) technology has developed rapidly, and its maturity has led to widespread use in improving crop productivity and reducing management costs. Concurrently, deep learning has become a prominent tool in image recognition. Convolutional Neural Networks (CNNs) have achieved remarkable results in various domains, including agricultural tasks such as weed detection, pest identification, plant/fruit counting, and maturity grading. This study provides an overview of the development of UAV platforms, their classification and respective advantages and disadvantages, and the types and characteristics of data collected by common vision sensors used in agriculture, and discusses the application of deep learning to weed detection. The manuscript presents current advances in UAV technology and CNNs for weed management tasks while emphasizing existing limitations and future trends, to assist researchers applying deep learning techniques to weed management.

1. Introduction

With the continuous growth of the global population and the increasing demand for crop yield, improving crop yield has become an important global research target. During crop growth, light, soil fertility, moisture, pests and diseases, and weed invasion all affect yield. By combining advanced computer information technology with agricultural production, precision agriculture and smart agriculture have become important mainstays of sustainable agricultural production. Solutions for crop disease and pest detection, crop classification, crop yield prediction, and weed detection have been proposed to increase crop yield [1]. In actual production, weeds are an important cause of yield reduction. Weeds are natural competitors of crops throughout the growth process: they compete with crops for water and nutrients, block sunlight, spread diseases and pests, and occupy growing space, which impairs crop growth and thus reduces yield [2]. The annual food loss due to weeds is approximately 13.2% [3], so timely and effective weed management is key to improving crop yields and agricultural productivity.

Methods and drawbacks of traditional weed management. In response to this problem, people have long studied how to control weeds and increase crop yields. Traditional weed control in crop cultivation has included manual, mechanical, crop rotation, and chemical methods. Manual weeding is very effective and inexpensive for small areas, but for large areas it is very time-consuming and inefficient [4]. Among these methods, herbicides are commonly used in weed management. Chemical weed management is effective in actual farmland management, but its adverse effects on the environment and human health cannot be ignored [5,6]. In addition, because weed species are diverse, a single weeding method often cannot cope with every situation. Weed detection is therefore an important direction in modern agriculture. Existing weed detection technology is based on processing farmland image data, so the accuracy of the image data is of great significance to the development of this technology. However, manual data collection is not only inefficient and limited in coverage, but its inherent errors also lead to inaccurate data, which degrades weed detection performance. Consequently, more efficient and intelligent methods for weed management are urgently needed, and this has become a prominent research focus.

In recent years, with the continued development of agricultural and computer technology, agriculture has been advancing towards the era of smart agriculture 4.0, and the scope of application has become more and more extensive. For example, plant disease and insect detection [7], crop yield prediction [8], crop type identification [9], and water stress, crop growth, and phenological period detection are all realized through computer vision technology [10,11,12]. As weeds are an important factor affecting crop yield, researchers have also begun to study how to manage them with modern technology, and weed detection methods suited to modern agriculture have emerged. Modern weed detection technology mostly relies on computer vision. Distinguishing weeds from crops algorithmically makes it possible to control weeds more effectively and reduce the negative impact on the environment [13]. Efficient remote sensing technology can provide accurate and timely information on crop and weed status [14]. Researchers have applied various machine learning techniques to satellite-based remote sensing data and achieved remarkable weed detection results [15,16]. However, low temporal and spatial resolution degrades image quality, and weather conditions also affect image acquisition. These shortcomings reduce algorithm performance and lead to weed detection errors. The traditional approach to weed detection in aerial images is based on classical machine learning algorithms such as Support Vector Machines (SVMs), Random Forests (RFs), Extreme Gradient Boosting (XGB), and k-means [17,18,19]. These techniques rely on features extracted manually from images, using methods such as the Gray-Level Co-occurrence Matrix (GLCM), Scale-Invariant Feature Transform (SIFT), and Local Binary Pattern (LBP) to describe texture, region, and color. Such manual feature extraction is time-consuming and inefficient. Therefore, deep learning provides new opportunities for fast image feature extraction [14].
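To make the notion of a hand-crafted texture feature concrete, the following is a minimal sketch of a gray-level co-occurrence matrix and three statistics commonly derived from it. It is an illustration only and is not taken from any of the cited studies: the function names `glcm` and `glcm_features`, the 8-level quantization, the single horizontal offset, and the assumption of 8-bit input intensities are all choices made here for brevity.

```python
import numpy as np

def glcm(gray, levels=8, dx=1, dy=0):
    """Normalised co-occurrence matrix for one non-negative pixel offset.

    Assumes 8-bit intensities (0..255); `levels`, `dx`, `dy` are illustrative defaults.
    """
    gray = np.asarray(gray, dtype=np.float64)
    # Quantise intensities into a small number of gray levels.
    q = np.clip((gray / 256.0 * levels).astype(int), 0, levels - 1)
    h, w = q.shape
    ref = q[0:h - dy, 0:w - dx]   # reference pixels
    nbr = q[dy:h, dx:w]           # neighbours at offset (dy, dx)
    m = np.zeros((levels, levels), dtype=np.float64)
    # Count how often level i is followed by level j at the given offset.
    np.add.at(m, (ref.ravel(), nbr.ravel()), 1.0)
    return m / m.sum()

def glcm_features(p):
    """Contrast, homogeneity and energy of a normalised co-occurrence matrix."""
    i, j = np.indices(p.shape)
    return {
        "contrast": float(np.sum(p * (i - j) ** 2)),
        "homogeneity": float(np.sum(p / (1.0 + np.abs(i - j)))),
        "energy": float(np.sum(p ** 2)),
    }
```

A uniform patch yields zero contrast and unit energy, whereas a finely alternating pattern yields high contrast. Each such statistic has to be chosen and tuned by hand, which is the cost that learned features avoid.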

The rapid development of UAV technology provides new possibilities for weed management in farmland. UAVs are flexible, fast, and efficient, can be used across a wide range of terrains and environments, and can cover large areas of farmland quickly. In addition, drones can be equipped with high-resolution cameras and sensors to obtain detailed images and data on farmland. Deep learning, on the other hand, is a technique loosely inspired by the way the human brain works: it learns from large amounts of data to identify and classify objects. It has achieved remarkable results in many fields, including image recognition, speech recognition, and natural language processing. Therefore, applying UAV and deep learning technology to farmland weed management can enable automatic identification, classification, and mapping of weeds for efficient and intelligent weed control. Common remote sensing platforms are divided by operating height into space, aerial, and ground remote sensing platforms; UAVs belong to the aerial category. Compared with other remote sensing platforms, UAVs have obvious advantages (Table 1), such as high flexibility, strong availability, low cost, accurate positioning, and real-time image acquisition. Unlike space remote sensing platforms, they are not costly and their data are available on demand; unlike ground remote sensing platforms, they do not affect the crop during data collection. Therefore, drones are widely used for various tasks in agriculture.

Table 1.

Advantages and disadvantages of the existing remote-sensing platforms.

The use of drones and deep learning in weed management is a technical focal point that can improve efficiency and sustainability and promote technological innovation and agricultural modernization. Studying the problems and challenges encountered in practical application helps to further optimize technical schemes. In weed management, the quantity and quality of collected data, the accuracy of data processing, the effectiveness of data analysis, and applicability in real-world conditions are all technical obstacles that remain to be overcome. Reference [20] explored how drones equipped with advanced sensors and cameras can effectively detect and assess weed infestations, and how artificial intelligence can be applied to high-resolution farmland images captured by drones for weed detection and classification; it contributes significantly to understanding the concepts of weed detection and classification. Reference [21] discussed the application of remote sensing technology to weed detection in paddy fields, compared traditional detection methods with automatic detection using remote sensing platforms, and introduced weed management methods, common paddy weed species, and the algorithms commonly used for weed and crop classification. It improves researchers' understanding of the challenges posed by weeds in rice fields and highlights the advantages and limitations of UAV remote sensing for automatic weed detection. Reference [22] paid special attention to precise weed control using UAV and sensor technology and discussed a plant identification method that combines UAV image acquisition with machine learning for further processing, offering a unique perspective on applying these technologies to sustainable vegetable management. However, none of them specifically investigated the application of deep learning algorithms to tasks such as weed detection, weed mapping, and weed classification.

In this review, we mainly survey the application of UAV technology and deep learning technology in weed management and the results achieved. To our knowledge, this is the first review focusing on convolutional neural network-based analysis of drone images for weed management, including field tasks such as precise weed localization and precise pesticide spraying enabled by combining drone technology and deep learning. The rest of this article is arranged as follows. Section 2 discusses the development status of the UAV platform and the research status of UAV technology in weed management. Section 3 discusses the research progress of deep learning techniques in weed management and introduces each deep learning algorithm. Section 4 reviews advanced technologies based on drones and deep learning techniques applied in weed management. Sections 5 and 6 present the discussion and the summary, respectively.

2. UAV Platform and Application

An unmanned aerial vehicle (UAV) is an unmanned aircraft operated by radio remote control equipment and an onboard program control device. Its first applications can be traced back to the early 20th century, mainly in the military field [23], where it was used for enemy reconnaissance and as a target aircraft for training. With the continuous progress of modern science and technology, UAV platforms have developed rapidly: remarkable progress has been made at the technical level, and the range of applications keeps expanding. In agriculture, drones can be used for crop phenology prediction, crop yield estimation, field monitoring, pest monitoring, precision spraying, fertilization, seeding, remote sensing monitoring, plant protection, and other tasks [24,25,26,27], effectively improving the efficiency of agricultural production. This section gives a brief introduction to the various types of UAVs and their applications in agriculture.

2.1. Types of UAV Platforms

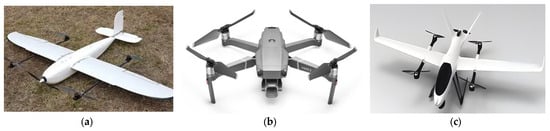

Most early UAV platforms adopted a fixed-wing design and were operated remotely through ground control stations. They were characterized by long endurance, large payload, high speed, and strong wind resistance. Subsequently, two new kinds of platform appeared: multi-rotor UAVs and vertical takeoff and landing (VTOL) UAVs. These take off and land vertically and place low demands on the site, which expanded the application range of UAVs. UAV platforms used in agriculture can therefore be divided into three categories: fixed-wing UAVs, multi-rotor UAVs, and VTOL UAVs (Table 2) [7].

Table 2.

Advantages and disadvantages of UAV platforms.

2.1.1. Fixed-Wing UAV

Fixed-wing UAVs maintain flight through the lift generated by their wings, which gives them high flight efficiency and endurance. These characteristics make them well suited to automatic cruise, field detection, phenological period detection, and similar agricultural tasks. Yang et al. [24] used a fixed-wing UAV (MTD-100, CHN) to collect data on rice fields. It was equipped with inertial measurement units, GPS sensors, and flight control units that can be programmed to fly automatic cruise lines, and with multi-spectral and RGB cameras that captured rice images on different dates to identify the stages of rice growth. The flight path of the drone can also be defined by setting the takeoff and landing positions. In [28], Raeva et al. used the senseFly eBee fixed-wing drone to collect corn and barley data. The drone flew the mission along a preset route created in eMotion v.2; the route is planned by setting a takeoff location, a start waypoint, and a landing location or home waypoint. However, although fixed-wing UAVs can achieve automatic cruise, they place high demands on the takeoff and landing site and lack flexibility in flight operations, so they are rarely used in agriculture.
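The idea of planning a route from a takeoff point, survey waypoints, and a landing point can be illustrated with a simple back-and-forth ("lawnmower") pattern over a rectangular field. The sketch below is hypothetical and does not reproduce eMotion or any other mission planner: the function `plan_survey_route`, its parameters, and the use of local coordinates in meters are assumptions made for illustration.

```python
def plan_survey_route(takeoff, landing, field_origin, width_m, height_m, line_spacing_m):
    """Return an ordered list of (x, y) waypoints in local meters.

    The route starts at `takeoff`, sweeps the rectangle in parallel flight
    lines separated by `line_spacing_m`, and ends at `landing`.
    All names and the rectangular field model are illustrative assumptions.
    """
    x0, y0 = field_origin
    route = [takeoff]
    n_lines = int(width_m // line_spacing_m) + 1
    for k in range(n_lines):
        x = x0 + k * line_spacing_m
        if k % 2 == 0:
            # Even lines are flown from the near edge to the far edge.
            route.extend([(x, y0), (x, y0 + height_m)])
        else:
            # Odd lines are flown back, so consecutive lines join at the same edge.
            route.extend([(x, y0 + height_m), (x, y0)])
    route.append(landing)
    return route

# Example: a 20 m x 50 m plot with flight lines every 10 m.
route = plan_survey_route((0, 0), (0, 0), (10, 10), 20, 50, 10)
```

In practice the line spacing would be derived from the flight altitude, the camera footprint, and the required side overlap between adjacent image strips.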

2.1.2. Multi-Rotor UAV

A multi-rotor UAV, also known as a multi-axis UAV, is an unmanned rotorcraft with three or more rotor axes. It generates lift by turning the rotor on each axis with an electric motor. Changing the relative speed of the different rotors changes the thrust produced on each axis, which controls the motion trajectory of the UAV. Using low-altitude multi-rotor technology, drones can quickly gather real-time farmland information. In [29], Xu et al. built a multi-rotor UAV platform for managing farmland crop information. The development of drone technology and the miniaturization of sensors have changed the way remote sensing is used, extending this earth science discipline to other fields, such as precision agriculture [30]. The multi-rotor UAV is currently the most widely used UAV in agriculture [31]. It can take off and land vertically and hover, and it is highly maneuverable, which makes it suitable mainly for low-altitude, low-speed agricultural tasks. It can handle most agricultural data collection work, but its small payload cannot meet tasks with high data requirements.
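How differential rotor speeds translate into motion can be sketched with a simple motor mixer for a four-rotor airframe in an "X" layout. This is an illustrative assumption rather than the control law of any particular flight controller: the function `mix_quad_x`, the motor naming, the normalized command range, and the sign conventions (positive roll speeds up the left motors, positive pitch speeds up the front motors, and the two diagonal pairs spin in opposite directions) are all chosen here for the example.

```python
def mix_quad_x(thrust, roll, pitch, yaw):
    """Map normalised commands to four motor outputs in [0, 1].

    Sign conventions are assumptions for this sketch:
      roll  > 0 speeds up the left motors,
      pitch > 0 speeds up the front motors,
      yaw   > 0 speeds up the front-right / rear-left diagonal pair.
    """
    raw = {
        "front_left": thrust + roll + pitch - yaw,
        "front_right": thrust - roll + pitch + yaw,
        "rear_right": thrust - roll - pitch - yaw,
        "rear_left": thrust + roll - pitch + yaw,
    }
    # Clamp each output to the valid command range.
    return {name: min(1.0, max(0.0, value)) for name, value in raw.items()}

hover = mix_quad_x(0.5, 0.0, 0.0, 0.0)       # all four motors equal
roll_cmd = mix_quad_x(0.5, 0.1, 0.0, 0.0)    # left motors faster than right
```

Because each attitude term is added to two motors and subtracted from the other two, the total thrust stays constant (as long as no output is clamped) while the thrust imbalance produces the rotation.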

2.1.3. Vertical Takeoff and Landing UAV

Compared with multi-rotor UAVs and fixed-wing UAVs, vertical takeoff and landing UAVs (VTOL UAVs) have the advantages of good aerodynamic efficiency, high cruise speed, long flight time, and low requirements on the flatness and area of the landing site. VTOL UAVs also have problems, such as strong aerodynamic interference from tilting propellers, high structural strength requirements, and complex power system matching across flight states. A complete and reliable safety assessment of VTOL UAVs is not yet possible, which hinders their healthy development [30]. Reviews of VTOL UAV research and its application prospects in precision agriculture classify VTOL UAVs by aerodynamic layout and takeoff mode, for example into tilt-rotor, tilt-wing, and tail-sitter designs. In [32], a senseFly eBee X drone with a Red, Green, and Blue (RGB) S.O.D.A. camera and a BirdsEyeView Aerobotics FireFLY6 Pro VTOL drone with a MicaSense RedEdge-MX camera were used to collect images of hops at the maturation stage (24 July), and the hop canopy area was calculated from the images. Compared with fixed-wing and multi-rotor UAVs, VTOL UAVs are less used in agriculture. In Table 2, we focus on the UAVs commonly used in agriculture in the existing literature, among which multi-rotor UAVs are the type most commonly used by researchers for image acquisition. The common types of drones are shown in Figure 1.

Figure 1.

Examples of different types of drones. (a) Fixed-wing UAV, (b) Multi-rotor UAV, (c) Vertical Takeoff and Landing UAV.

2.1.4. Brief Summary

In short, each type of UAV has its advantages and disadvantages and suits different applications and scenarios. Fixed-wing UAVs are fast and have long endurance, so they can be used for long cruises, but they need a runway for takeoff and landing and are complicated to operate. Multi-rotor UAVs are the most widely used in the agricultural sector. Originally designed for defense and military purposes, they can take off and land vertically, are easy to operate, and are suitable for small spaces and complex environments, but their flight speed is low and their endurance is short. VTOL UAVs integrate the advantages of the two: they achieve fast flight and long range while also taking off and landing vertically and remaining simple to operate. However, because takeoff and landing are carried out in multi-rotor mode, the additional hardware increases weight and reduces flight performance, and these UAVs cannot carry more complex vision sensors.

2.2. Drone Platform Vision Sensor

Sensors are basic components of a UAV, which collects various kinds of data through them. Air pressure sensors measure atmospheric pressure to infer altitude; GPS modules determine the position of the UAV for navigation and track planning; and vision sensors, including cameras, infrared cameras, and other optical sensors, collect images, videos, and other data and are widely used in aerial photography, monitoring, survey, and other tasks. Drones can be equipped with different types of vision sensors, including RGB, hyperspectral, multi-spectral, infrared, and thermal imaging sensors. Because multi-rotor UAVs have a small payload, fixed-wing and VTOL UAVs are more suitable for carrying more complex cameras. In this section, we highlight the vision sensors most commonly used in the literature for UAV data acquisition. Three common types of sensors are shown in Figure 2.
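As an example of how a pressure reading is turned into an altitude estimate, the sketch below applies the widely used international barometric formula for the lower atmosphere. The function name `pressure_to_altitude_m` and the default sea-level reference of 1013.25 hPa are assumptions for illustration; a real autopilot would typically calibrate the reference pressure at takeoff and fuse the result with GPS and inertial data.

```python
def pressure_to_altitude_m(pressure_hpa, sea_level_hpa=1013.25):
    """Approximate altitude in meters from static pressure in hPa.

    Uses the standard-atmosphere barometric formula; the constants 44330 and
    5.255 follow from a sea-level temperature of 15 degrees C and a constant
    lapse rate, so the result is only an estimate under those assumptions.
    """
    return 44330.0 * (1.0 - (pressure_hpa / sea_level_hpa) ** (1.0 / 5.255))

# Height above the takeoff point: use the pressure measured on the ground
# as the reference instead of the standard sea-level value.
ground_hpa = 1005.0
relative_height = pressure_to_altitude_m(1000.0, sea_level_hpa=ground_hpa)
```

Near the ground, a pressure drop of roughly 1 hPa corresponds to a height gain of about 8 m, which is why barometers are useful for holding a constant survey altitude.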

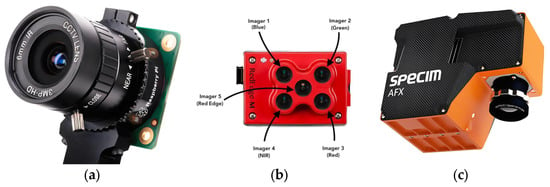

Figure 2.

Examples of different vision sensors. (a) RGB sensors, (b) Multi-spectral sensors, (c) Hyperspectral sensors.

2.2.1. RGB Sensor

RGB sensors are among the most widely used vision sensors on drones for agricultural tasks. An RGB sensor detects the color of an object and converts the signal into digital form, and it offers high precision, high speed, low power consumption, low price, and high spatial resolution. These sensors are used to calculate vegetation indices, such as the Green-Red Vegetation Index (GRVI), greenness index (GI), and excess green index (ExG), with acceptable or high accuracy [33]. In addition, RGB sensors are increasingly used in object recognition, phenology, crop yield estimation, and weed mapping. In [25], Ashapure et al. used a DJI Phantom 2 UAV equipped with a built-in 3-axis gimbal-stabilized RGB sensor with an image size of 4384 × 3288 pixels to collect data from an experimental cotton field. Based on the multi-temporal remote sensing data, they developed a machine learning framework for cotton yield estimation. In [34], Ge et al. established a rice breeding yield estimation model using drone-based RGB images. A DJI Phantom 4 Pro UAV (DJI Technology Co., Ltd., Shenzhen, China) equipped with a 1-inch CMOS 20-megapixel camera was used to collect rice data. When phenological data were combined with RGB images, RF models showed greater accuracy. RGB sensors are making progress not only in crop yield estimation but also in weed mapping. In [17], the authors discussed a method for automatically mapping weeds in oat fields using drone images, collecting the data with a senseFly eBee Plus RTK drone equipped with a senseFly S.O.D.A. RGB camera. In [35], Rozenberg et al. discussed the use of low-cost drones to map giant smutgrass in bahiagrass pastures in Florida. A DJI Phantom 4 drone equipped with an RGB camera was used for data acquisition. The study found that, with supervised classification, the giant smutgrass cover was mapped successfully, with a correlation of 0.91 with the ground-truth cover. Tasks of this kind do not place high demands on the imagery: images collected with the UAV-mounted RGB sensor have high spatial resolution and good clarity. However, a large amount of image data must be collected for model training and later testing, and image selection and processing have a crucial influence on model accuracy.
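The RGB vegetation indices mentioned above are simple per-pixel formulas. The sketch below computes the excess green index, ExG = 2g - r - b on chromatic coordinates normalized so that r + g + b = 1, and GRVI = (G - R) / (G + R), and then derives a vegetation mask and the ground area it covers. The function names, the ExG threshold of 0.1, and the ground sampling distance used in the example are illustrative assumptions and are not values reported by the cited studies.

```python
import numpy as np

def excess_green(rgb):
    """ExG = 2g - r - b, computed on channels normalised by R + G + B."""
    rgb = np.asarray(rgb, dtype=np.float64)
    total = rgb.sum(axis=-1)
    total = np.where(total == 0, 1.0, total)   # avoid division by zero on black pixels
    r, g, b = (rgb[..., k] / total for k in range(3))
    return 2.0 * g - r - b

def grvi(rgb):
    """Green-Red Vegetation Index, (G - R) / (G + R)."""
    rgb = np.asarray(rgb, dtype=np.float64)
    r, g = rgb[..., 0], rgb[..., 1]
    denom = np.where(g + r == 0, 1.0, g + r)
    return (g - r) / denom

def vegetation_mask(rgb, threshold=0.1):
    """Boolean mask of likely vegetation pixels; the threshold is an assumed value."""
    return excess_green(rgb) > threshold

def covered_area_m2(mask, gsd_m):
    """Ground area of the mask, given the ground sampling distance in m per pixel."""
    return float(np.count_nonzero(mask)) * gsd_m ** 2

# Two-pixel example: one pure green pixel and one gray (soil-like) pixel.
image = np.array([[[0, 255, 0], [100, 100, 100]]])
mask = vegetation_mask(image)
area = covered_area_m2(mask, gsd_m=0.02)
```

Such indices separate vegetation from soil but not crops from weeds, which is why they usually serve as a preprocessing step before a classifier.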

2.2.2. Hyperspectral Sensor

A hyperspectral sensor is a device that combines spectroscopy and imaging to obtain continuous, narrow-band image data with high spectral resolution. Hyperspectral imaging is at the frontier of remote sensing earth observation. It is an optical remote sensing technology developed from imaging spectroscopy that enables combined observation of spatial, spectral, and radiometric information. Its main feature is that it obtains the continuous spectrum of an object while imaging it spatially. UAV-based hyperspectral imaging is a relatively new remote sensing technology. Banerjee et al. [36] addressed the challenges of sensor calibration, data acquisition, radiometric correction for illumination changes, mosaicking, and geometric correction for UAV hyperspectral imaging in highly heterogeneous environments such as swamps. In recent years, airborne hyperspectral sensors have proven to be useful analytical tools in agriculture due to their high spectral resolution. In [37], UAV hyperspectral reflectance data were used to compare the performance of several wavelength selection methods based on partial least squares (PLS) regression for distinguishing two irrigation systems commonly used in olive groves. UAV remote sensing is widely used in precision agriculture because of its flexibility and higher spatial and spectral resolution. In [38], hyperspectral canopy images of winter wheat at the flowering and filling stages were acquired by low-altitude UAV, and the yield of winter wheat was predicted by machine learning.

2.2.3. Multi-Spectral Sensor

A multi-spectral sensor acquires information in multiple spectral bands simultaneously. Compared with a traditional single-band sensor, it records information at several wavelengths at the same time, so the spectral characteristics of the target can be understood and analyzed more comprehensively. During sensor measurements, the downwash airflow of the UAV disturbs the crop canopy. To address this problem, Yao et al. [39] used computational fluid dynamics (CFD) numerical simulation and a three-dimensional airflow field tester to study a method of monitoring crop growth with a UAV-based multi-spectral sensor. This method improves the accuracy and stability of the measurements of the UAV-borne dual-band crop growth sensor. Stempliuk et al. [40] explored the benefits of applying SIFT point-based mutual information (MI) to the registration of agricultural multi-spectral remote sensing images, evaluated on a self-developed public image database acquired by a fixed-wing UAV equipped with a multi-spectral sensor. The multi-spectral sensor operates within a range of parameters suitable for real-world crop inspection.
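Mutual information is attractive for registering multi-spectral bands because it measures statistical dependence rather than equality of intensities, so it remains meaningful when the same scene looks different in each band. The sketch below shows only the similarity measure itself, estimated from a joint histogram. It is not the SIFT point-based procedure of [40]; the function name `mutual_information` and the choice of 32 histogram bins are assumptions made for illustration.

```python
import numpy as np

def mutual_information(a, b, bins=32):
    """Mutual information (in nats) between two equally sized images.

    Estimated from the joint intensity histogram; `bins` is an assumed setting.
    """
    hist, _, _ = np.histogram2d(np.ravel(a), np.ravel(b), bins=bins)
    pxy = hist / hist.sum()                    # joint distribution
    px = pxy.sum(axis=1, keepdims=True)        # marginal of a, shape (bins, 1)
    py = pxy.sum(axis=0, keepdims=True)        # marginal of b, shape (1, bins)
    nz = pxy > 0                               # skip empty cells to avoid log(0)
    return float(np.sum(pxy[nz] * np.log(pxy[nz] / (px @ py)[nz])))

rng = np.random.default_rng(0)
band_a = rng.random((64, 64))
unrelated = rng.random((64, 64))
aligned_score = mutual_information(band_a, 1.0 - band_a)   # inverted but aligned
unrelated_score = mutual_information(band_a, unrelated)
```

In a registration loop, one band would be transformed under candidate shifts or warps and the transformation maximizing this score would be kept; the inverted-band example illustrates that the score stays high even when intensities are reversed.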

2.2.4. Brief Summary

RGB sensors are mainly used for color imaging: they capture the red, green, and blue channels, provide rich color information, and are often used where high-quality color imaging is required, such as judging crop growth conditions. The nutritional status of crops can also be judged by analyzing color information. Multi-spectral sensors capture visible, near-infrared, and other spectral bands and are often used in agriculture to monitor the crop growing environment and soil conditions, as well as to distinguish and identify different ground objects. Hyperspectral sensors capture continuous spectral information at finer spectral intervals and provide detailed spectral signatures, making the identification and classification of ground objects more accurate. In an experiment, the appropriate sensor should be selected according to the experimental site, the research object, and the research method.

3. Application of Deep Learning to Weed Management

Machine learning is a core research area of artificial intelligence and one of the most active branches of computer science in recent years [41]. At present, machine learning is not only widely used in many fields of computer science but has also become an important supporting technology for some interdisciplinary fields. Machine learning has gone through two waves: shallow learning and deep learning. Deep learning is a newer field of machine learning research that uses multi-layer artificial neural networks to perform tasks in computer vision, natural language processing, and other areas. It obtains multi-level feature representations through nonlinear modules and, compared with traditional methods such as Support Vector Machines and Random Forests, can extract deep, abstract features without manual intervention [42]. Deep learning involves a variety of neural networks; common ones include the Convolutional Neural Network (CNN), Recurrent Neural Network (RNN), Generative Adversarial Network (GAN), and Graph Neural Network (GNN) [43,44]. This section focuses on convolutional neural networks and their applications.

3.1. Convolutional Neural Network

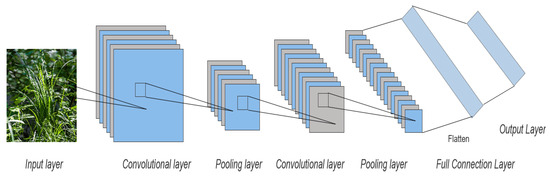

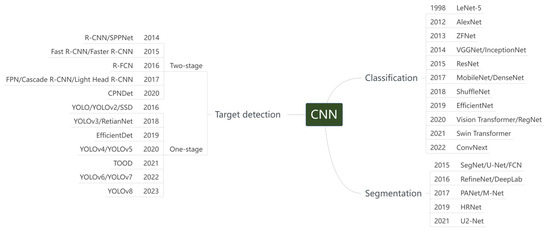

With the continuous development of deep learning, it has been applied more and more widely in various fields. CNN is one of its representative algorithms and has been increasingly applied to image feature extraction, where it has made great progress. Unlike a traditional shallow neural network, a CNN has a multi-layer structure, and each layer consists of a number of convolution kernels with different receptive field sizes. A convolutional layer is connected to the previous layer through local connections and weight sharing, which greatly reduces the complexity of the network and the number of weights. A CNN has two main processes. The first is feature extraction, which consists of alternating convolutional and pooling layers. The convolutional layer abstracts color, edge, and other image features from the output of the preceding layer. The pooling layer reduces the dimensionality of the data: it divides the feature map into regions and keeps a summary statistic of each, so only the most important feature information is retained, which helps to improve the generalization ability of the model [45]. The second is classification, which comprises the fully connected layer and the classification layer. In the fully connected layer, each node is connected to all nodes of the previous layer; the layer integrates the extracted features, converts the two-dimensional feature maps into a one-dimensional vector, and associates the learned feature representation with labels in preparation for the subsequent classification task. The classification layer completes the classification function of the model. It is usually composed of several fully connected layers, each of which applies linear and nonlinear transformations to its input and gradually abstracts a higher-level feature representation. The output of the classification layer is usually a probability distribution over the categories, and the model updates its parameters by comparing this distribution with the true label so as to minimize the classification error. Figure 3 shows the basic structure of a convolutional neural network. According to their application targets and task properties, convolutional neural networks can be divided into networks for object detection, image classification, and image segmentation. As research deepens, various types of networks are continually developed and optimized to improve performance on different tasks. As a result, many variants of pre-trained neural networks are common in research, and in this section we focus on the network models used in agriculture for these three kinds of tasks. Figure 4 summarizes the development history of the network algorithms commonly used in convolutional neural networks.
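The two processes described above, feature extraction followed by classification, can be traced in a few lines of NumPy. The following is a deliberately minimal forward pass with one convolutional layer, ReLU, 2 × 2 max pooling, flattening, one fully connected layer, and a softmax output, together with the cross-entropy loss that training would minimize. It is a teaching sketch with random, untrained weights; the function names, layer sizes, and single-channel input are assumptions, and real models are built with a deep learning framework.

```python
import numpy as np

def conv2d(image, kernel):
    """Valid 2-D convolution (cross-correlation, as in most CNN libraries)."""
    kh, kw = kernel.shape
    h, w = image.shape
    out = np.zeros((h - kh + 1, w - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            # The same kernel weights are reused at every position (weight sharing).
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def max_pool(x, size=2):
    """Non-overlapping max pooling; trailing rows/columns that do not fit are dropped."""
    h, w = x.shape
    h, w = h - h % size, w - w % size
    blocks = x[:h, :w].reshape(h // size, size, w // size, size)
    return blocks.max(axis=(1, 3))

def softmax(z):
    e = np.exp(z - np.max(z))    # subtract the maximum for numerical stability
    return e / e.sum()

def forward(image, kernels, weights, bias):
    """Conv -> ReLU -> pool for each kernel, then flatten -> dense -> softmax."""
    maps = [max_pool(np.maximum(conv2d(image, k), 0.0)) for k in kernels]
    features = np.concatenate([m.ravel() for m in maps])
    return softmax(weights @ features + bias)

def cross_entropy(probs, label):
    """Loss compared against the true class index during training."""
    return -float(np.log(probs[label] + 1e-12))

rng = np.random.default_rng(0)
image = rng.random((8, 8))                                   # single-channel patch
kernels = [rng.standard_normal((3, 3)) for _ in range(2)]    # two 3 x 3 filters
weights = rng.standard_normal((3, 18))                       # 3 classes, 2 * 3 * 3 features
probs = forward(image, kernels, weights, np.zeros(3))
loss = cross_entropy(probs, label=1)
```

With an 8 × 8 input, each 3 × 3 kernel produces a 6 × 6 feature map, pooling reduces it to 3 × 3, and the two maps are flattened into an 18-element vector, which shows how pooling and flattening shrink the representation before classification.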

Figure 3.

The basic convolutional neural network structure diagram.

Figure 4.

The development history of various network algorithms commonly used in convolutional neural networks.

3.2. Application of Convolutional Neural Networks

3.2.1. Object Detection

Object detection is an important task in computer vision that aims to find regions of interest in an image or video, determine their location and size, and classify them to identify the objects. An object detection algorithm mainly includes three steps: feature extraction, bounding box regression, and object classification. Traditional object detection algorithms usually use hand-designed features, such as SIFT and HOG. Deep learning-based object detection algorithms automatically learn and extract hierarchical image features through a CNN. This end-to-end training can better exploit large-scale data sets for feature learning and better capture the texture and detail of targets, which improves detection performance [14]. With continued research, a series of CNN-based object detection algorithms has been developed. Their main technical routes fall into two categories. The first is the two-stage object detection algorithm, which was developed earlier: it uses a neural network to generate a series of candidate regions and then classifies each candidate region and refines its position. Because processing is divided into two stages, computation is relatively slow. Representative algorithms include R-CNN, Fast R-CNN, and Faster R-CNN. The second is the one-stage object detection algorithm, which was developed later. It predicts object bounding boxes and categories directly from the input image, which greatly improves computation speed, and its accuracy has also improved significantly. Representative algorithms are the YOLO series and SSD. This section focuses on the application of object detection algorithms in weed management.
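Both families of detectors produce many overlapping candidate boxes, so two small building blocks recur in most pipelines: intersection over union (IoU), which measures how much two boxes overlap, and non-maximum suppression (NMS), which keeps the highest-scoring box among overlapping ones. The sketch below is a generic NumPy version assuming boxes in (x1, y1, x2, y2) format and an IoU threshold of 0.5; it is not the exact post-processing of any detector named above.

```python
import numpy as np

def iou(box, boxes):
    """IoU between one box and an array of boxes, all as (x1, y1, x2, y2)."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter)

def nms(boxes, scores, iou_threshold=0.5):
    """Greedy non-maximum suppression; returns indices of the kept boxes."""
    boxes = np.asarray(boxes, dtype=np.float64)
    order = np.argsort(scores)[::-1]           # highest score first
    keep = []
    while order.size > 0:
        best = order[0]
        keep.append(int(best))
        rest = order[1:]
        if rest.size == 0:
            break
        # Drop every remaining box that overlaps the kept box too strongly.
        overlaps = iou(boxes[best], boxes[rest])
        order = rest[overlaps <= iou_threshold]
    return keep

# Two overlapping detections of one weed plus a separate detection elsewhere.
boxes = [[0, 0, 10, 10], [1, 1, 11, 11], [20, 20, 30, 30]]
scores = [0.9, 0.8, 0.7]
kept = nms(boxes, scores)
```

The same IoU measure is also what detection benchmarks use to decide whether a predicted box counts as a correct match when computing metrics such as mAP.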

In [46], Chen et al. discussed the challenges of weed detection in sesame fields and proposed an improved model, YOLO-sesame. The model is based on the YOLO algorithm and combines an attention mechanism, local importance pooling, and an adaptive spatial feature fusion structure. The YOLO-sesame model achieved F1 scores of 0.91 and 0.92, a mAP of 96.16%, and a detection speed of 36.8 frames per second. To assess the accuracy and effectiveness of the YOLOv4-Tiny model for weed detection, the researchers in [47] processed the images with several YOLO (You Only Look Once) variants. The precision, recall, and F1 score of YOLOv4-Tiny were 0.89, 0.96, and 0.92, respectively, exceeding those of the other models, and the study concluded that YOLOv4-Tiny is a promising weed detection model [47]. Punithavathi et al. [48] proposed a new computer vision and deep learning-based weed detection and classification (CVDL-WDC) model for precision agriculture. The model includes two processes: object detection based on multi-scale Fast R-CNN and weed classification based on an optimal Extreme Learning Machine (ELM). The best results were obtained at a training/test data ratio of 80:20, at which CVDL-WDC achieved an accuracy of 98.33% and an F-score of 98.34%. Guo et al. [49] proposed a vision-based network named WeedNet-R for weed identification and localization in sugar beet fields. By adding a context module to the neck of RetinaNet and combining context information from multiple feature maps, WeedNet-R expands the effective receptive field of the network and addresses the problems of monotonous detection scenes and low detection accuracy. WeedNet-R outperforms other object detection algorithms in detection accuracy while maintaining detection speed and without significantly increasing the number of model parameters.
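The F1 score reported in these studies is the harmonic mean of precision and recall. As a quick consistency check, the Python sketch below recomputes it from the first two values reported above for YOLOv4-Tiny (0.89 and 0.96), read here as precision and recall. The function name is an assumption for illustration.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall; defined as 0 when both are 0."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Values reported for YOLOv4-Tiny in [47].
f1 = f1_score(0.89, 0.96)
print(round(f1, 2))  # 0.92, matching the reported F1 score
```

Because the harmonic mean is dominated by the smaller of the two values, a model cannot reach a high F1 score by trading away either precision or recall.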

3.2.2. Image Segmentation

Image segmentation is another fundamental problem in computer vision. It divides a digital image into multiple non-overlapping regions or objects according to their features and is a key step in image processing and analysis. Traditional image segmentation algorithms include threshold-based, region-based, and edge-based segmentation. CNN-based image segmentation recasts the task as pixel-wise classification: the CNN extracts features from the image and classifies each pixel, so it can automatically learn complex image features and handle a wide variety of objects. Building on the Fully Convolutional Network (FCN) as the base architecture, researchers have developed a variety of high-performance segmentation algorithms, such as U-Net, DeepLab, and Mask R-CNN. This section focuses on the application of CNN-based image segmentation in weed management.
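Because segmentation assigns a class to every pixel, its quality is often summarized per class by the intersection over union (IoU) between the predicted and annotated label maps. The Python sketch below illustrates this on a tiny 3 × 3 example. The label coding (0 = soil, 1 = crop, 2 = weed), the function name, and the example arrays are assumptions made for illustration only.

```python
def class_iou(predicted, annotated, class_id):
    """Pixel-wise IoU for one class over two equally sized label maps."""
    intersection = 0
    union = 0
    for pred_row, true_row in zip(predicted, annotated):
        for pred_label, true_label in zip(pred_row, true_row):
            in_pred = pred_label == class_id
            in_true = true_label == class_id
            intersection += in_pred and in_true
            union += in_pred or in_true
    # A class absent from both maps has no defined IoU.
    return intersection / union if union else float("nan")


# Hypothetical label maps: 0 = soil, 1 = crop, 2 = weed.
predicted = [[0, 2, 2],
             [0, 1, 2],
             [0, 1, 1]]
annotated = [[0, 2, 2],
             [0, 1, 1],
             [0, 0, 1]]

weed_iou = class_iou(predicted, annotated, class_id=2)  # 2 / 3
crop_iou = class_iou(predicted, annotated, class_id=1)  # 2 / 4
soil_iou = class_iou(predicted, annotated, class_id=0)  # 3 / 4
mean_iou = (weed_iou + crop_iou + soil_iou) / 3
```

Averaging the per-class values gives the mean IoU (mIoU) that several of the studies summarized in this review report.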

Alom et al. [50] proposed a recurrent U-Net model and a recurrent residual U-Net model, named RU-Net and R2U-Net, respectively, which combine the strengths of U-Net, residual networks, and recurrent convolutional neural networks. At present, farmers typically remove weeds by hand where possible or spray herbicides over the whole field. Kulkarni et al. [51] combined convolutional neural networks, image processing, and the Internet of Things to detect weeds in crops. CNNs have achieved state-of-the-art performance in many application areas. Khan et al. [52] proposed a semantic segmentation method based on a Cascaded Encoder-Decoder Network (CED-Net) to distinguish weeds from crops. Fawakherji et al. [53] proposed a set of deep learning-based methods that enable robots to perform accurate weed/crop classification efficiently from RGB or RGB + NIR (near-infrared) images; the first step is an encoder-decoder segmentation network for “plant-type nonspecific” segmentation between vegetation and soil. Using autonomous robots for weeding is a promising alternative, but it requires precise detection and identification of crops and weeds before effective action can be taken. Champ et al. [54] trained and evaluated an instance segmentation convolutional neural network designed to segment and identify each plant specimen visible in images produced by agricultural robots. Moazzam et al. [55] developed VGG-Beet, a convolutional neural network that is based on the general-purpose VGG16 (Visual Geometry Group) CNN model and has 11 convolutional layers. U-Net++, a state-of-the-art CNN that has rarely been used in precision agriculture, was applied to the semantic segmentation of weed images in [56]; the U-Net++ model achieved a weed IoU of 65%, compared with 56% for the U-Net model.

3.2.3. Image Classification

Image classification assigns input images to predefined categories automatically. It is one of the most important families of machine learning algorithms, a research hotspot in image processing, and widely used in agriculture. Traditional image classification algorithms, such as support vector machines (SVM), decision trees (DT), random forests (RF), and K-nearest neighbors (KNN), rely on prior data processing and image dimensionality reduction to improve model accuracy and efficiency. With the rapid development of CNNs, CNN-based image classification models are constantly being updated [57]. Commonly used models include ConvNeXt, GoogLeNet, AlexNet, and ResNet, all of which perform well on this task. This section focuses on the application of image classification algorithms in weed management.

To address the time-consuming and cumbersome labeling of data for deep learning models, the authors of [58] proposed a semi-supervised learning method that combines labeled and unlabeled data. They introduced a new ConvNeXt-based deep neural network architecture for weed classification and combined it with consistency regularization to improve performance. The experimental results show that the accuracy and F1 score of this model, 92.33 and 90.37, respectively, are better than those of the other models. Olsen et al. [59] used the benchmark deep learning models Inception-v3 and ResNet-50 to establish a baseline for classification performance on the dataset. Abdulsalam et al. [60] proposed an alternative approach that combines a pre-trained network (in this case, ResNet-50) with a YOLO v2 object detector for field weed detection and classification. Effective weed control also requires accurate knowledge of the species and growth stage of the weeds. Vypirailenko et al. [61] applied transfer learning to dense convolutional neural networks to identify weeds and determine their growth stages. P et al. [62] established a new CNN-based crop/weed classification model with three main stages: preprocessing, feature extraction, and classification. The input image is first preprocessed with contrast enhancement. The extracted features and the RGB image (five channels in total) are then classified by an “optimized convolutional neural network (CNN)”, whose weights and activation function are optimized with the HW-SLA model to improve classification. Makanapura et al. [63] aimed to classify seedlings of crop and weed species and implemented the classification framework with three deep convolutional neural network architectures: ResNet50V2, MobileNetV2, and EfficientNetB0. Tao et al. [64] studied a CNN-SVM hybrid classifier for weed identification in winter rape fields; the proposed VGG-SVM model has higher classification accuracy, stronger robustness, and real-time performance (Table 3).

Table 3.

Comparison of different applications based on convolutional neural networks.

3.2.4. Brief Summary

Applying a CNN involves the following steps: data preprocessing, feature extraction, classifier design and training, model evaluation, and optimization. CNNs have a strong feature extraction ability and can accurately extract weed features from complex image backgrounds. The classifier design is flexible, and different classification algorithms can be selected according to actual needs. Because CNNs generalize well, weed detection can be carried out under different environmental and lighting conditions. However, several challenges remain in practice:

- There are many kinds of weeds and different kinds of weeds have great differences in morphology and color, which brings difficulties to feature extraction and classifier design;

- In practical application, due to the influence of lighting, shadow, occlusion, and other factors, the quality of weed images is often poor, which will affect the accuracy of model recognition;

- The training of convolutional neural networks requires a large amount of labeled data, which increases the difficulty and cost of data collection and labeling.

4. Application of Drones and Deep Learning Techniques to Weed Management

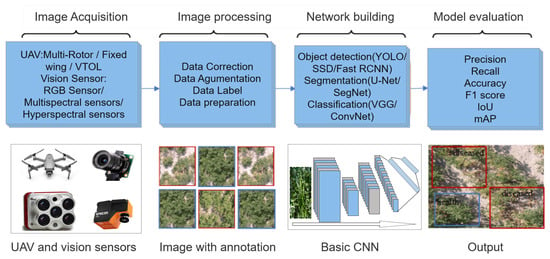

Drone technology and deep learning are two active topics in agricultural science and technology; both continue to develop and are widely applied to agricultural tasks. In weed management, deep learning can be used to identify different types of weeds, process farmland data, and locate weeds or distinguish them from crops quickly and accurately, which ultimately enables precise weeding. As a result, the use of deep learning in weed management is increasing, and researchers have devoted considerable effort to combining deep learning algorithms with other techniques to make weed management more efficient. Some studies combine deep learning with satellite remote sensing [67], but satellite images have low spatial and temporal resolution and lack fine detail, which limits the accuracy of deep learning algorithms and makes them better suited to the analysis of large objects. Low-altitude drone platforms can collect more detailed information about weed type and status than satellite images can, and drones are flexible, efficient, and accurate. Accordingly, UAV applications in weed management focus mainly on weed data collection [68], field monitoring [69], assisted weed mapping [70], weed crop row detection [71], and herbicide spraying. Equipped with various sensors, a UAV can perform real-time farmland monitoring, data collection, auxiliary mapping, and crop row detection, providing more accurate data to support farmland management. Compared with traditional spraying methods, UAV spraying can greatly improve the efficiency and effectiveness of application while reducing pesticide use and environmental pollution.

In recent years, UAV-based remote sensing and deep learning have changed how agricultural operations are carried out and provide strong technical support for intelligent weed management in farmland. Researchers have run numerous experiments on how to combine these two technologies, and the experimental data show that the efficiency, precision, and environmental friendliness of weed management have improved greatly. This section focuses on the application of drone-based and deep learning-based technologies to weed management in farmland. Figure 5 shows the workflow of combining UAV and convolutional neural network technologies.

Figure 5.

The workflow of combining UAV and Convolutional neural network technologies.

4.1. Weed Detection and Classification

Intelligent technology is increasingly used in farmland weed management. Weed detection and classification is an important part of this work and is of great significance for improving yield and reducing pesticide use. The process includes image acquisition, image processing, feature extraction, selection of a detection and classification algorithm, model training, and evaluation of the detection and classification results. Commonly used algorithms and models currently include the YOLO series, Vision Transformers, VGG16, U-Net, and ResNet101. This section focuses on the application of convolutional neural networks in weed detection and classification.

A CNN-based object detection model was trained and evaluated on low-altitude unmanned aerial vehicle (UAV) images for late-season weed detection in soybean fields [72]. UAVs make it possible to acquire spatial data at the desired spatiotemporal resolution, so the resulting input data meet the high standards required for weed management. Reedha et al. [73] aimed to show that attention-based deep networks are a promising approach to identifying weeds and crops with unmanned aerial systems. With a small amount of labeled training data, the ViT model outperformed the state-of-the-art CNN-based models EfficientNet and ResNet, reaching a maximum accuracy of 99.8%. To be effective in drone-based systems, weed detection systems must meet stringent requirements for power consumption, overall detection performance, real-time capability, weight, and size. Shahi et al. [74] proposed a taxonomy to collect and categorize existing work on crop disease detection with UAV images. Genze et al. [75] proposed DeBlurWeedSeg, a combined deblurring and segmentation model for weed and crop segmentation in motion-blurred images. This is of high practical relevance because a lower error rate in weed and crop segmentation allows for better weed control, for example when robots are used for mechanical weeding.

Soybean is a major cash crop, and weed growth reduces soybean production, so weed detection in soybean fields has been studied in depth. Li et al. [76] proposed YOLOv7-FWeed, a weed detection model based on an improved YOLOv7 that adopts F-ReLU as the activation function of the convolutional module and adds a MaxPool multihead self-attention (M-MHSA) module to improve the accuracy of weed identification. Continuous monitoring of soybean leaf area and dry matter mass showed that a reduced herbicide dose could control weed growth effectively without affecting soybean growth. Alessandro et al. [77] focused on the use of drones and deep learning techniques in agriculture. They acquired images with a DJI Phantom 3 Professional drone and used a gray-level co-occurrence matrix (GLCM) to capture the texture information of the images. The image segments were classified using convolutional neural networks (ConvNets) and support vector machines (SVMs), and the results show that the method has high classification accuracy and sensitivity. Xu et al. [78] proposed a new method for weed detection in soybean fields using UAV images, which combines a visible color index with an instance segmentation method based on an encoder-decoder structure. The segmentation results of different color indices were compared, and the proposed model was compared with classical models. The G/R index performed best, with an overall accuracy (aAcc) of 0.978, a mean IoU (mIoU) of 0.939, and a mean accuracy (mAcc) of 0.972. The ResNet101_v backbone outperformed the U-Net and ResNet101 backbones in aAcc, mIoU, and mAcc.
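As a rough illustration of what a visible color index such as G/R measures, the following Python sketch computes the green-to-red ratio for each pixel and thresholds it into a simple vegetation mask. The threshold of 1.0, the function names, the example pixel values, and the thresholding step itself are assumptions made for illustration. They are not taken from [78], which combines the index with a segmentation network rather than a fixed threshold.

```python
def green_red_index(pixel):
    """G/R ratio of one (R, G, B) pixel; a red value of 0 is treated as 1."""
    red, green, _blue = pixel
    return green / max(red, 1)


def vegetation_mask(image, threshold=1.0):
    """Mark pixels whose G/R ratio exceeds the (assumed) threshold."""
    return [[green_red_index(pixel) > threshold for pixel in row]
            for row in image]


# Hypothetical 2 x 2 RGB patch: left column greenish, right column soil-like.
image = [[(100, 150, 50), (120, 90, 60)],
         [(60, 120, 40), (200, 180, 150)]]

mask = vegetation_mask(image)
# mask == [[True, False], [True, False]]
```

Green vegetation reflects more green than red light, so its G/R ratio tends to exceed 1, whereas bare soil tends to fall below it; a fixed threshold is sensitive to lighting, which is one reason such indices are usually paired with a learned model.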

Weed management in wheat fields is another major research direction. Wheat is one of the most widely planted food crops in the world and is of great significance for global food security. Because weeds are one of the main factors that reduce wheat yield, researchers have studied weed detection in wheat fields in depth. Zhao et al. [79] proposed a deep learning method to accurately detect small and overlapping wheat ears in drone images. The method, known as OSWSDet, integrates the orientation of the wheat stalk into the YOLO framework by introducing a circular smooth label (CSL) and a micro-scale detection layer. These changes improve the detection of small wheat ears and reduce detection errors. The experimental results show an average precision of 90.5%. Zhou et al. [80] proposed a method based on deep neural networks to segment wheat and weeds in images. The authors evaluated the segmentation network using quantitative criteria such as overall accuracy, precision, recall, and intersection over union (IoU). They compared different image classification and semantic segmentation networks and found that the improved U-Net performed best for wheat segmentation, with an overall accuracy, precision, recall, and IoU of 95.76%, 92.02%, 89.81%, and 88.98%, respectively. Jonas et al. [81] used drones to collect high-resolution image data from nine wheat fields with different management and climatic conditions. They found that object-based image analysis achieved accuracies of 0.88 and 0.72 on ground-based and drone-based images, respectively. The study highlights the importance of high ground resolution for weed detection and suggests that future research should focus on developing ground-based, site-specific weed management systems for wheat.

Weed detection and classification have also been studied in other crops. Bishwa et al. [82] used a UAV to collect images of morning glory and cotton, generated synthetic images from them, and trained a Mask R-CNN model on these images, demonstrating that synthetic images are effective for training weed detection models. Cai et al. [83] proposed a weed segmentation network to identify weeds in pineapple fields from images collected by a drone platform. An ECA module was inserted into the model to improve weed detection performance without significantly changing the model size. Wang et al. [84] collected RGB and 5-band image data of three weed species and three crop species and applied transfer learning with a pre-trained AlexNet network to learn efficiently and accurately from small samples. The results show a classification accuracy of 96.02% for the six small-sample classes. In recent years, new weed detection techniques have been developed to improve the speed and accuracy of weed detection, easing the tension between promoting soil health and achieving adequate weed control. Table 4 summarizes recent advances in weed classification and detection.

4.2. Weed Mapping

Weed mapping is an agricultural technique that identifies and counts weeds by analyzing remote sensing images of farmland so that weeding can be targeted precisely. It helps farmers manage their fields and improve crop yield and quality. Commonly used weed mapping methods include those based on machine learning, image processing, and drones, and combining these techniques can make weed mapping more efficient. In particular, drones provide high-resolution images and videos that serve as training data for deep learning models that detect weeds in farmland. The combination of the two technologies offers farmers an effective weed management method, and it is expected to be applied more widely in agriculture as the technology continues to develop. This section focuses on the achievements of combining UAV technology and deep learning in weed mapping.

In weed mapping, one of the most important tasks is to map the weed distribution, which provides accurate geographic information and decision support for subsequent weed management. Lam et al. [85] proposed an open-source workflow for automated weed mapping using commercial unmanned aerial vehicles (UAVs). Because UAVs can acquire spatial data at the desired spatiotemporal resolution, the resulting input data meet the high standards required for weed management. Gasparovic et al. [17] tested four independent classification algorithms for creating weed maps, which combined automatic and manual methods with object-based and pixel-based classification and were applied to two subsets. Rozenberg et al. [86] used a simple UAV to survey 11 commercial fields of dry onion (Allium cepa L.) to examine late-season weed classification and investigate weed spatial patterns. Camargo et al. [87] proposed a drone-based weed and crop classification algorithm built on a deep residual convolutional neural network (ResNet-18).

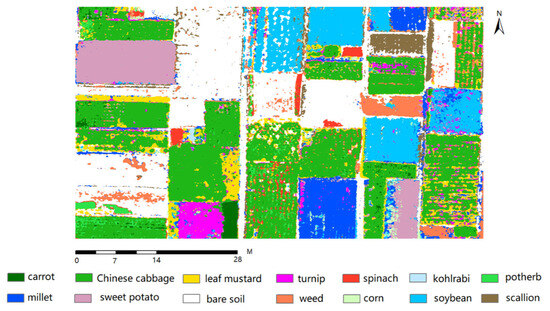

Future work should integrate the mapping process on the drone platform, guide autonomous mapping by the drone, and ensure that the model can be transferred to other crop fields. Mohidem et al. [88] reviewed the current status and future trends of UAV applications for weed detection in crop fields. Integrated weed management combined with drones has made weed monitoring more efficient and environmentally friendly. Torres-Sanchez et al. [89] explored the potential of UAV-based approaches for broadleaf and grass weed detection in wide-row herbaceous crops such as sunflower and cotton. Zou et al. [90] studied a field weed density evaluation method based on UAV imaging and an improved U-Net and proposed a method for calculating and mapping weed density in the field. The method can effectively evaluate weed density from drone images and thus provides key information for precise weeding. In [91], Khoshboresh-Masouleh et al. developed DeepMultiFuse, a lightweight, end-to-end trainable deep learning approach based on guided features, which improves weed segmentation performance on multi-spectral drone images for identifying weeds in sugar beet fields. Deep learning (DL) algorithms that are properly trained to handle the real-world complexity of agricultural drone data can provide an effective alternative to standard computer vision (CV) methods for accurate weed identification. Gallo et al. [92] used unmanned aerial systems to collect more than 3000 RGB images of chicory cultivation at different stages of crop and weed growth, annotated with 12,113 bounding boxes for the target weed (Mercurialis annua). Huang et al. [93] compared deep learning with object-based image analysis (OBIA) for weed mapping in UAV images captured on four different dates over two rice fields. Gao et al. [94] developed a deep convolutional network that, when trained on field images only, can make predictions on both field images and aerial UAV images for weed segmentation and mapping. The experimental results show that the network can classify weeds in field and aerial images with satisfactory results. Figure 6 shows an example of a vegetable map generated from the ARCNN and multi-temporal UAV RGB datasets. Table 4 summarizes recent advances in weed mapping.
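One simple way to turn a segmentation result into a weed density map of the kind discussed above is to divide the label map into grid cells and compute the fraction of weed pixels in each cell. The Python sketch below illustrates this idea. The density definition (weed pixels divided by all pixels in the cell), the cell size, the label coding, and the function name are assumptions made for illustration and are not the procedure used in [90] or any other cited study.

```python
def weed_density_grid(label_map, cell_size, weed_label=1):
    """Fraction of weed pixels in each cell_size x cell_size block."""
    n_rows, n_cols = len(label_map), len(label_map[0])
    grid = []
    for top in range(0, n_rows, cell_size):
        grid_row = []
        for left in range(0, n_cols, cell_size):
            # Collect the pixels of this cell; edge cells may be smaller.
            block = [label_map[r][c]
                     for r in range(top, min(top + cell_size, n_rows))
                     for c in range(left, min(left + cell_size, n_cols))]
            weed_pixels = sum(label == weed_label for label in block)
            grid_row.append(weed_pixels / len(block))
        grid.append(grid_row)
    return grid


# Hypothetical 4 x 4 segmentation output: 1 = weed, 0 = everything else.
label_map = [[1, 1, 0, 0],
             [1, 0, 0, 0],
             [0, 0, 0, 1],
             [0, 0, 1, 1]]

density = weed_density_grid(label_map, cell_size=2)
# density == [[0.75, 0.0], [0.0, 0.75]]
```

If each cell is georeferenced, a grid like this can be overlaid on the field to show where weeding effort should be concentrated.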

Figure 6.

Vegetable map generated from the proposed ARCNN and multi-temporal UAV RGB datasets [95].

Table 4.

Comparison of different UAV-based and convolutional neural network applications in weed management.


| Ref. | Crop | Task | Image Type | Location | Dataset | Network | Improvement | UAV Type | F1 Score | Precision | Recall | Accuracy | Other Metrics |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| [73] | Beet/Parsley/Spinach/Weed | Classification | RGB | Loire Region, France | 19,265 | Vision Transformers | Transfer learning | Pilgrim UAV | 97.1% | 98.5% | 95.7% | | |
| [75] | Sorghum/Weed | Segmentation | RGB | Sorghum field, Southern Germany | 1300 | DeBlurWeedSeg | Deblurring | DJI Mavic 2 Pro | | | | | DS: 0.9048 |
| [76] | Soybean/Weed | Detection | RGB | Heihe City, Heilongjiang Province, China | 3000 | YOLOv7-FWeed | M-MHSA | DJI Mavic 3M | 93.07% | 94.96% | 91.25% | | mAP: 96.6% |
| [77] | Soybean/Weed | Classification | RGB | Campo Grande, Brazil | 15,336 | ConvNets | SLIC Superpixel | DJI Phantom 3 Professional | 99.5% | | | | |
| [78] | Soybean/Weed | Segmentation | RGB | Madison, Wisconsin, USA | 1181 | Self-built | ResNet101_v/DSASPP | DJI Phantom 4 V2 Pro | 90.5% | | | | |
| [79] | Wheat spike/Weed | Detection | RGB | Rugao City, Jiangsu Province, China | 7000 | OSWSDet | YOLOv5 | DJI Matrice 210 | 96% | 90% | | | AP: 90.5% |
| [82] | Cotton/Weed | Detection | RGB | Texas, USA | 1230 | Mask R-CNN | GAN/transfer learning | Hylio AG-110 | | | | | mAPm: 0.8; mAPb: 0.83 |
| [83] | Pineapple/Weed | Identification | RGB | Zhangjiang, Guangdong Province, China | 2176 | PSPNet | ECA module | DJI Mavic 2 | 87.79% | | | | mPA: 88.6%; mIoU: 80% |
| [84] | Ecological Irrigation Area | Classification | RGB/Multispectral | Zhuozhou farm, Beijing City, China | 3266 | Self-constructed CNN | MAML/transfer learning | DJI M100; DJI Phantom 3 Pro | | | | 96.02% | |
| [85] | Grassland | Mapping | RGB | Salmorth, Germany | 6773 | VGG16 | OBIA | DJI Phantom 3 Pro; DJI Phantom 4 Pro | 78.7% | 71.43% | 87.50% | 92.1% | |
| [87] | Winter Wheat/Weed | Mapping | RGB | Brunswick, Germany | 16,500 | DCNN | ResNet-18 | Hexa-copter system | 93.5% | 92.67% | 94% | | |
| [90] | Weed | Segmentation | RGB | Beijing, China | 100 | U-Net | Dilation convolution | DJI Mavic 2 | 84.29% | 80.85% | 98.84% | | IoU: 93.40% |
| [91] | Sugar beet/Weed | Segmentation | Multispectral | Rheinbach, Germany; Eschikon, Switzerland | | DeepMultiFuse | | Inspire-2 | 97.3% | | | | mIoU: 97.1% |
| [92] | Beet/Chicory/Weed | Detection | RGB | Central Belgium | 3373 | YOLOv7 | | DJI Phantom 4 Pro | 61.3% | 54.8% | | | mAP: 56.6% |

4.3. Drone Spray

Beyond the weed detection, classification, and mapping applications described above, UAV and convolutional neural network technology can also be applied to UAV spraying. Spraying pesticides from drones to remove weeds is fast, covers the application area evenly and accurately, reduces pesticide residues, avoids pollution of water resources, and greatly improves weeding efficiency. Drones are now regarded as a modern spraying technology that helps achieve efficient spraying. In autonomous drone-based spraying systems, the ability to identify the area to be sprayed (crops and orchards) accurately becomes even more important. When UAV pesticide spraying is combined with a convolutional neural network, weed images captured by the drone are fed into the network model for real-time weed detection. Based on the identification results, the drone can spray pesticide precisely onto weeds and avoid unnecessary damage to crops, and the flight trajectory and spray volume of the drone can be controlled at the same time.

Researchers have carried out numerous studies to improve UAV spraying. Basso et al. [96] used the Normalized Difference Vegetation Index (NDVI) to detect the exact locations where chemicals are needed; using this information, the automatic spray control system performs timely applications as the drone navigates over the crop. Liu et al. [97] summarized the trends in this field over the past few years. Using UAVs in agriculture can reduce both the time needed for spraying pesticides and fertilizers and the associated hazards. Devi et al. [98] briefly reviewed the implementation of UAVs for crop monitoring and pesticide spraying. Field spraying of post-emergence herbicides is an effective way to reduce herbicide input and weed control costs. Yu et al. [99] explored the feasibility of detecting crabgrass species (Digitaria spp.) with deep convolutional neural network (DCNN) image classifiers such as AlexNet, GoogLeNet, and VGGNet. Yan et al. [100] compared aerial and ground spraying in Hainan Province in terms of the control effect on thrips on cowpeas and operator exposure. When the UAV spray volume was 22.5, 30, and 37.5 L/ha, the control effect on thrips on the first day was 69.79%, 80.15%, and 80.58%, respectively. Wu et al. [101] reviewed weed detection from two perspectives, traditional image processing methods and deep learning-based methods; they summarized recent weed detection methods, analyzed the advantages and disadvantages of existing methods, and introduced several related plant leaf and weed datasets and weeding machines.

Because ordinary drones and sprayer drones differ in target and operation, not every obstacle detection and collision avoidance method is suitable for sprayer drones. In this regard, Ahmed et al. [27] reviewed the most relevant developments in all branches of the agricultural sprayer drone obstacle avoidance scenario, including the structural details of the drone sprayer. They enumerated the most relevant open challenges in current drone sprayer solutions, paving the way for future researchers to define a roadmap for designing a new generation of affordable autonomous sprayer drones. Gomez et al. [102] proved their effectiveness through experimental results. Precise post-emergence herbicide spraying, matched to the weed control spectrum, can greatly reduce herbicide input. Jin et al. [103] studied deep learning for detecting the weed control spectrum of turfgrass herbicides; this method can be used in the automatic spot-spraying systems of machine vision-based smart sprayers. UAV variable rate spraying offers a precise and adaptable alternative strategy. Hanif et al. [104] discussed research trends in semi-automatic methods and the application of precise spraying platforms on specific land.

Drones are being used for weed control in wheat fields, and studies have shown that their application is more efficient and standardized than that of traditional knapsack sprayers. However, challenges remain, such as low spray volume and droplet density, which affect weed control effectiveness. Different herbicides, application rates, and spraying rates were tested, and the results showed significant effects on weed management. Drone spraying outperformed manual spraying, some herbicides clearly inhibited weed growth, and drone spraying was also shown to increase wheat yields. Further research is needed to optimize drone spraying for weed control [105]. Chen et al. [106] summarized the current research status and progress of UAV application technology and discussed the atomization characteristics of UAV application systems, focusing on pesticide spray application. Biglia et al. [107] summarized the combined application of big data analysis and supporting technologies for UAV aerial spraying in scientific research, such as UAV technology, precision agricultural spraying technology, drift reduction technology, and swarm UAV collaborative technology, which can inspire international innovation. The focus of the experimental research is to investigate the effects of different UAV spray system configurations on canopy spray deposition and coverage. Table 4 summarizes recent advances in drone spraying.
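As a rough illustration of how an NDVI map could drive a spray decision of the kind described for Basso et al. [96], the sketch below computes NDVI = (NIR − Red)/(NIR + Red) per pixel and flags pixels above a threshold. The function names, the threshold of 0.4, and the toy reflectance values are assumptions made for illustration; they are not taken from [96].

```python
import numpy as np

def ndvi(nir, red, eps=1e-9):
    """Per-pixel NDVI = (NIR - Red) / (NIR + Red); eps avoids division by zero."""
    nir = np.asarray(nir, dtype=float)
    red = np.asarray(red, dtype=float)
    return (nir - red) / (nir + red + eps)

def spray_mask(nir, red, threshold=0.4):
    """Boolean mask of pixels whose NDVI exceeds the (assumed) threshold."""
    return ndvi(nir, red) > threshold

# Toy 2 x 2 reflectance bands (hypothetical values in [0, 1]).
nir = np.array([[0.60, 0.30], [0.50, 0.20]])
red = np.array([[0.10, 0.25], [0.40, 0.18]])
mask = spray_mask(nir, red)
```

In a real system the threshold would have to be calibrated per crop, sensor, and growth stage, and the mask would be georeferenced before being passed to the spray controller.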

4.4. Brief Summary

The combined use of drones and image processing technology may help to effectively control the different weed species that compete with crops, with associated environmental benefits. The application of UAVs in weed management mainly focuses on weed data collection, field monitoring, auxiliary weed mapping, weed crop row detection, and herbicide spraying. Compared with satellite imagery, low-altitude drone platforms can gather more detailed information about weed type and status. In weed management, deep learning techniques can be used to identify different types of weeds, and this section has focused on the application of convolutional neural networks. For unmanned aerial systems, attention-based deep networks are a promising approach. To run on drone-based platforms, weed detection systems must meet stringent requirements on power consumption, overall detection performance, real-time capability, weight, and size. Drone-based remote sensing and deep learning have changed the agricultural business model. Weed mapping is an agricultural technique that identifies and counts weeds by analyzing remote sensing images of farmland; it can help farmers better manage their fields and improve the yield and quality of their crops. With the advent of UAVs, spatial data can be acquired at the desired spatiotemporal resolution. DeepMultiFuse was developed to improve weed segmentation performance using multi-spectral drone images and provides a feasible solution for agricultural weed detection. UAV variable rate spraying offers a precise and adaptable alternative strategy: UAV spraying is better than manual spraying, and some herbicides clearly inhibit weed growth. Table 4 shows a comparison of different UAV-based and convolutional neural network applications in weed management.
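To make the link between weed mapping and variable rate spraying more concrete, the following minimal sketch converts a binary weed mask (for example, the output of a segmentation network) into a coarse grid of weed coverage and marks the cells to treat. The function name `coverage_grid`, the cell size, and the 10% coverage threshold are hypothetical choices, not values from the reviewed studies.

```python
import numpy as np

def coverage_grid(weed_mask, cell):
    """Fraction of weed pixels in each non-overlapping cell x cell block."""
    mask = np.asarray(weed_mask, dtype=float)
    rows, cols = mask.shape[0] // cell, mask.shape[1] // cell
    # Crop so the mask divides evenly, then average inside each block.
    cropped = mask[: rows * cell, : cols * cell]
    return cropped.reshape(rows, cell, cols, cell).mean(axis=(1, 3))

# Toy 4 x 4 mask: True marks a pixel classified as weed.
weed_mask = np.zeros((4, 4), dtype=bool)
weed_mask[0:2, 0:2] = True   # dense patch in the top-left cell
weed_mask[3, 3] = True       # single weed pixel in the bottom-right cell

coverage = coverage_grid(weed_mask, cell=2)
spray_cells = coverage > 0.10   # assumed treatment threshold
```

Aggregating to grid cells trades spatial detail for a map whose resolution can be matched to the swath width of the sprayer.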

5. Conclusions

In the previous section, we reviewed the application of deep learning methods combined with drone technology to weed management in farmland. In this section, we begin with an analysis of the methods under investigation and then highlight what we consider to be limitations in this area.

5.1. Data Acquisition

Data are the basis of deep learning algorithms, which learn the characteristics and regularities of data automatically by training on large amounts of it, so as to predict and classify unseen data and perform other tasks. The core of deep learning is the neural network, whose inputs and outputs are data. In general, the larger the amount of data, the better the training effect of the deep learning algorithm. For data collection, researchers frequently use existing general-purpose datasets, such as the COCO dataset, the ImageNet dataset, and Kaggle competition datasets. However, practical weed management requires high recognition accuracy from neural network models, and general-purpose datasets cannot meet the training requirements. This problem can only be solved by collecting images with drones and building dedicated datasets. During data acquisition, however, the flexibility of the UAV and the resolution and quality of the images cannot be guaranteed, so attention should be paid to the hardware used.

Genze et al. [75] used a DJI Mavic 2 Pro equipped with a 20 MP Hasselblad camera to acquire RGB images at an altitude of 5 m, using two different drone settings, Hover and Capture and Capture at Equal Distance; this approach not only expands the range of data acquisition but also ensures clear weed outlines. Rasmussen et al. [108] used a DJI Phantom 3 equipped with a Sony 12.4-megapixel RGB camera with a 1/2.3" CMOS sensor and GNSS, flying without ground reference at an altitude of 40 m. The DJI Phantom 4 multirotor drone collected high-resolution weed data from plots of winter wheat and spring barley. The ground sampling distance (GSD) was 17 mm pixel⁻¹, the frontal overlap of images along the flight direction was 80%, and the lateral overlap was 70%. Orthomosaics were generated by the commercial photogrammetry software Pix4Dmapper (Pix4D, Lausanne, Switzerland, https://pix4d.com/). Eide et al. [109] used two DJI M600 Pro drones as data collection equipment, equipped with a Zenmuse XT2 RGB/thermal camera and a MicaSense RedEdge-MX dual camera system, respectively. To obtain images with better resolution, data were collected at 8 m and 10 m [110]. The authors consider the selection of suitable drones and vision sensors and the planning of flight paths to be very important for data quality. It can be seen from the above literature that UAVs designed and produced by Shenzhen DJI Technology Co., Ltd. (Shenzhen, China) currently appear frequently in researchers' experiments. With suitable hardware, images that meet the experimental requirements can be collected and model accuracy can be maximized.
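The relationship between flight altitude, camera geometry, GSD, and image overlap can be sketched with a short calculation. The example below assumes a nadir-pointing camera and uses nominal parameters for a 1/2.3" sensor (6.17 mm sensor width, 3.61 mm focal length, 4000 × 3000 pixel images); these camera values, the function names, and the choice to fly with the long image side across the flight direction are assumptions for illustration and are not given in the cited studies.

```python
def ground_sampling_distance(sensor_width_mm, focal_length_mm,
                             altitude_m, image_width_px):
    """GSD in metres per pixel for a nadir-pointing camera."""
    return (sensor_width_mm * altitude_m) / (focal_length_mm * image_width_px)

def spacing(footprint_m, overlap):
    """Distance between consecutive images (or flight lines) for a given overlap."""
    return footprint_m * (1.0 - overlap)

# Assumed nominal camera parameters for a 1/2.3" sensor (not from the source).
SENSOR_WIDTH_MM = 6.17
FOCAL_LENGTH_MM = 3.61
IMAGE_WIDTH_PX, IMAGE_HEIGHT_PX = 4000, 3000

gsd = ground_sampling_distance(SENSOR_WIDTH_MM, FOCAL_LENGTH_MM,
                               40.0, IMAGE_WIDTH_PX)
footprint_across = gsd * IMAGE_WIDTH_PX    # metres, across the flight direction
footprint_along = gsd * IMAGE_HEIGHT_PX    # metres, along the flight direction

shot_spacing = spacing(footprint_along, 0.80)    # 80% frontal overlap
line_spacing = spacing(footprint_across, 0.70)   # 70% lateral overlap
print(f"GSD = {gsd * 1000:.1f} mm/pixel")
```

Under these assumptions, a 40 m flight gives a GSD of about 17 mm per pixel, in line with the value reported above, with images roughly every 10 m along a flight line and flight lines roughly 20 m apart.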

5.2. Dataset

This section introduces some of the publicly available weed datasets; different datasets can be used for different tasks. Table 5 summarizes the main contents of each dataset, including its name, size, URL, and recommendations for its use.

Table 5. Partially available public image datasets.

Weed25 [111]: This dataset contains 14,035 images of 25 different weed species from 14 families. Each weed species is represented by roughly 500–550 images. Both monocot and dicot weed images are included. The dataset is available at https://pan.baidu.com/s/1rnUoDm7IxxmX1n1LmtXNXw (accessed on 25 October 2023); the password is rn5h.

Annotated food crops and weed images: The dataset consists of 1118 images (.jpg files) with 7853 manual annotations (.xml files). The images are digital RGB images. The annotations fall into two main classes: food crops (411 annotations) and weeds (7442 annotations). An Intel RealSense D435 camera and a Sony W800 digital camera were used to acquire the images under field conditions. The dataset is available at http://creativecommons.org/licenses/by/4.0/ (accessed on 11 June 2020).

Crop line dataset: Images were acquired at an altitude of 20 m by a DJI Phantom 3 Pro drone with an embedded 36-megapixel (MP) RGB camera. The dataset includes 673 weed samples and 4861 crop samples [71]. The dataset is available at doi:10.3390/rs10111690 (accessed on 17 July 2023).

CoFly-WeedDB: The CoFly-WeedDB contains 201 RGB images taken by the attached camera of a DJI Phantom Pro 4 over a cotton field in Larissa, Greece, during the first stages of plant growth. This dataset can be employed to train and evaluate AI-based methods for weed detection, object segmentation and counting, image analysis, etc. The dataset contains four categories: Purslane, Johnson grass, Field bindweed, and Background [112]. The dataset is available at https://zenodo.org/records/6697343#.YrQpwHhByV4 (accessed on 5 September 2022).

Multiclass Weeds Dataset: The Multiclass Weeds Dataset for Image Segmentation comprises two species of weeds: Soliva sessilis (Field Burrweed) and Thlaspi arvense L. (Field Pennycress). Weed images were acquired during the early growth stage under field conditions in a brinjal farm located in Gorakhpur, Uttar Pradesh, India. Images were captured using various smartphone cameras and stored as RGB JPEG files. The captured images were labeled with the labelme tool to generate segmentation masks, and the dataset was then augmented; the final dataset contains 7872 augmented images and corresponding masks [113]. The dataset is available at https://figshare.com/s/934923139007f467c47e (accessed on 15 November 2023).

CWD30: This dataset comprises over 219,770 high-resolution images of 20 weed species and 10 crop species, encompassing various growth stages, multiple viewing angles, and environmental conditions. The dataset is available at https://arxiv.org/abs/2305.10084 (accessed on 17 May 2023).

Weed dataset: This dataset includes a corn weed dataset, a lettuce weed dataset, and a radish weed dataset. The corn weed dataset was collected in the natural environment of a corn seedling field and contains 1200 images each of bluegrass, Cirsium setosum, sedge, Chenopodium album, and corn. The lettuce weed dataset was collected at a vegetable base in Shandong Province, China, and contains 500 lettuce seedling images and 300 weed images acquired from a height of 30 cm. The radish weed dataset was taken from a public dataset and contains 200 radish seedling images and 200 associated weed images. The dataset is available at https://gitee.com/Monster7/weed-datase/ (accessed on 26 February 2020) (Table 5).

5.3. Vision Sensor

Unmanned aerial vehicles equipped with hyperspectral, multi-spectral, and RGB sensors are important tools for weed detection and species classification in agricultural remote sensing. Hyperspectral images with different spatial, temporal, and spectral resolutions are collected by UAV, and important spectral bands are identified by discriminant analysis. Multi-spectral images collected by drones at higher spatial resolution have been shown to successfully detect and distinguish between weeds and crops. Different types of data suit different scenes. For example, in [89], recognition relied on images with high spatial resolution, which UAV-based RGB images can provide. Hyperspectral data contain the reflectance spectrum of each pixel at different wavelengths, including reflection intensity and the shape of the spectral curve. In [114], weeds and sorghum were similar in color, shape, and size and therefore difficult to distinguish, so six bands were used to detect the differences between them and improve identification accuracy. Multi-spectral data contain spectral information in multiple bands, reflecting properties such as chlorophyll content and water content, and are used in weed detection in China. Using image fusion to increase the available information can effectively improve data quality and the accuracy of the trained model. In [115], multi-spectral data and RGB images were fused and fed into a deep convolutional neural network as training data to identify resistant weeds. In summary, spectral imaging captures light across the electromagnetic spectrum: multi-spectral imaging captures a small number of spectral bands, whereas hyperspectral imaging collects the complete spectrum at each pixel [37,67,116,117,118].
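One simple way to fuse RGB and multi-spectral imagery before feeding it to a convolutional network is to stack the co-registered bands along the channel axis. The sketch below illustrates this channel-level fusion under stated assumptions; it is not the fusion method of [115], and the function names, the per-band min-max scaling, and the five-band multi-spectral input are hypothetical.

```python
import numpy as np

def min_max_scale(band):
    """Scale one band to [0, 1]; constant bands become all zeros."""
    band = np.asarray(band, dtype=np.float32)
    span = band.max() - band.min()
    if span == 0:
        return np.zeros_like(band)
    return (band - band.min()) / span

def fuse_rgb_multispectral(rgb, multispectral):
    """Stack co-registered RGB (H, W, 3) and multi-spectral (H, W, B) bands."""
    if rgb.shape[:2] != multispectral.shape[:2]:
        raise ValueError("images must be co-registered to the same height and width")
    bands = [rgb[..., i] for i in range(rgb.shape[-1])]
    bands += [multispectral[..., i] for i in range(multispectral.shape[-1])]
    # Scale each band separately so sensors with different ranges are comparable.
    return np.stack([min_max_scale(b) for b in bands], axis=-1)

rng = np.random.default_rng(0)
rgb = rng.integers(0, 256, size=(8, 8, 3))   # toy 8-bit RGB tile
ms = rng.random((8, 8, 5))                   # five hypothetical spectral bands
fused = fuse_rgb_multispectral(rgb, ms)      # array of shape (8, 8, 8)
```

A network consuming `fused` needs a first convolutional layer with eight input channels, and the two sensors must be spatially registered beforehand, which is often the harder part in practice.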

5.4. Algorithm Selection

An appropriate algorithm should be selected for each research object. Common tasks that combine drones and deep learning in weed management are currently divided into weed identification, weed classification, weed mapping, weed detection, and drone-based spraying. For each task, a suitable algorithm should be selected for training according to the research object, research scene, and data collection method, so as to maximize accuracy.

For weed identification and classification tasks, commonly used convolutional neural networks include LeNet, AlexNet [119], ConvNets [77], VGG [120], GoogLeNet, ResNet [121], DenseNet, and MobileNet. In [121], researchers used time-series drone images to identify S. alterniflora; their ResNet50 model achieved a recognition accuracy of 97.61%, a recall of 95.22%, and an F1 score of 96.40%. Qiao et al. [122] proposed a novel CNN-based network, MnNet, which combines AlexNet local response normalization (LRN), GoogLeNet, and the continuous convolutions of the initial VGG models. It achieved high accuracy on a self-built Mikania micrantha Kunth dataset and is therefore well suited to identifying M. micrantha in the wild.
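The metrics reported for ResNet50 in [121] can be cross-checked against the definition of the F1 score as the harmonic mean of precision and recall. The short sketch below assumes that the reported "recognition accuracy" plays the role of precision; that reading is an assumption, as is the function name.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (both in the same units)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# Values reported for ResNet50 in [121], in percent; treating the
# "recognition accuracy" as precision is an assumption.
f1 = f1_score(97.61, 95.22)
print(round(f1, 2))
```

Under this assumption the computed value agrees with the reported F1 score of 96.40% to two decimal places.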