Locality Preserved Selective Projection Learning for Rice Variety Identification Based on Leaf Hyperspectral Characteristics

Abstract

1. Introduction

2. Materials

2.1. Experimental Site

2.2. Experimental Design

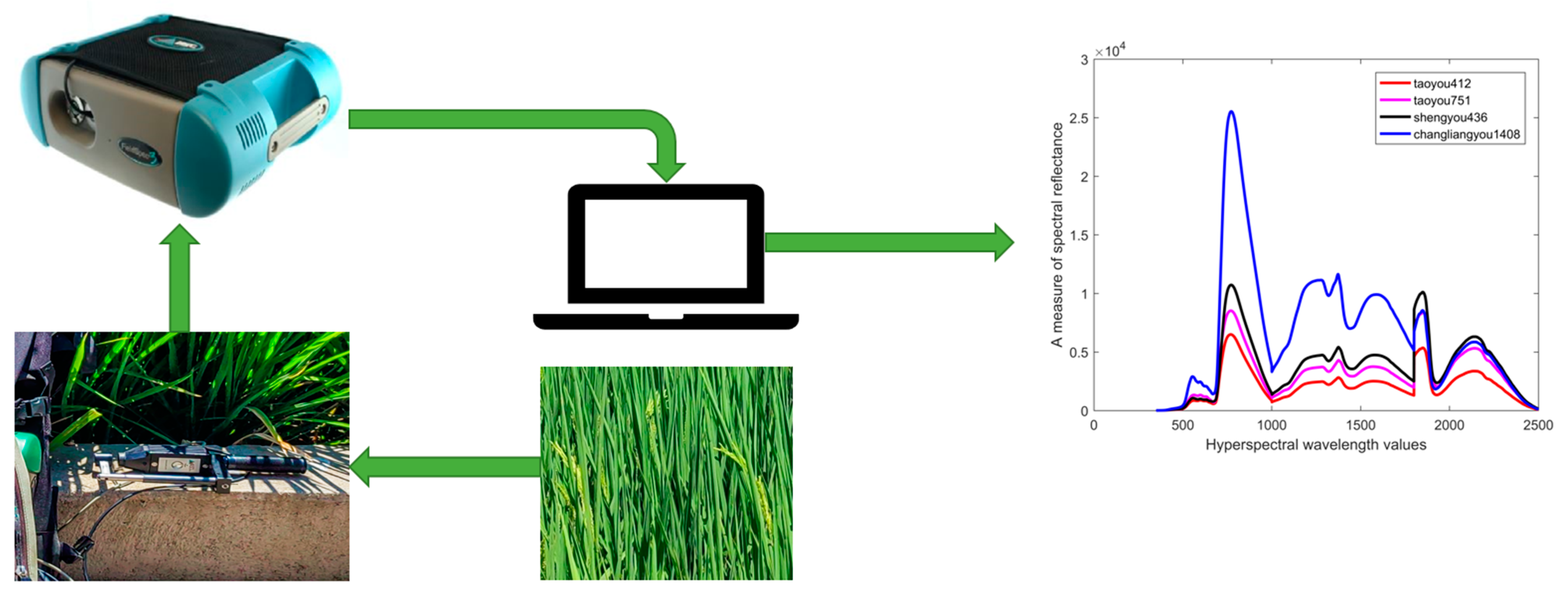

2.3. Data Acquisition

3. Methods

3.1. Locality Preserved Selective Projection Learning (LPSPL)

Algorithm 1: LPSPL method

Input: Rice hyperspectral data, the number of iterations

Output: Low-dimensional features and projection

Step 1: Initialize the projection to the identity matrix.

Step 2: For t = 1:

Step 3: Project the samples onto the low-dimensional subspace to obtain for classification.

3.2. LPSPL for Rice Variety Identification

3.3. Support Vector Machine Classifier

3.4. Control Methods and Evaluation Indicators

3.4.1. Control Methods

3.4.2. Evaluation Indicators

4. Experimental Results

4.1. Datasets

4.2. Experiment Setup

4.3. Feature Selection Based on LPSPL

4.4. Comparison of the Classification Accuracy with Other Methods

4.5. Comparison of the F1-Score with Other Methods

4.6. Comparison of the Kappa Coefficients with Other Methods

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Indicator | Early Rice | Late Rice |
|---|---|---|
| Available nitrogen | 83.24 mg/kg | 90.83 mg/kg |
| Available phosphorus | 41.29 mg/kg | 44.42 mg/kg |
| Effective potassium | 90 mg/kg | 110 mg/kg |
| pH value | 4.8 | 5.4 |
| Organic matter | 23.43 g/kg | 25.22 g/kg |
| Total nitrogen | 10.67 g/kg | 10.33 g/kg |
| Total phosphorus | 1.40 g/kg | 1.42 g/kg |
| Total potassium | 0.56 g/kg | 0.59 g/kg |

| Truth | Predicted: Positive Example | Predicted: Negative Example |
|---|---|---|
| Positive example | TP | FN |
| Negative example | FP | TN |
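
The confusion-matrix entries above (TP, FN, FP, TN) feed the evaluation indicators compared in Sections 4.4 to 4.6: accuracy, F1-score, and the Kappa coefficient. The following is a minimal sketch using the standard textbook definitions of these three quantities for the binary case; it assumes the paper uses the same definitions, which the text here does not confirm. The function name `binary_metrics` and the example counts are hypothetical.

```python
def binary_metrics(tp, fn, fp, tn):
    """Accuracy, F1-score and Cohen's kappa from binary confusion-matrix counts.

    Standard definitions; assumed (not confirmed) to match the paper's.
    """
    total = tp + fn + fp + tn
    accuracy = (tp + tn) / total

    # Precision and recall, guarding against empty denominators.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)

    # Cohen's kappa: observed agreement corrected for chance agreement.
    p_observed = accuracy
    p_expected = ((tp + fn) * (tp + fp) + (fp + tn) * (fn + tn)) / total ** 2
    kappa = ((p_observed - p_expected) / (1 - p_expected)
             if p_expected != 1 else 0.0)

    return {"accuracy": accuracy, "f1": f1, "kappa": kappa}


# Hypothetical counts, chosen only to illustrate the arithmetic.
metrics = binary_metrics(tp=40, fn=10, fp=5, tn=45)
print(metrics)
```

For a ten-variety problem such as the one in this paper, one common approach is to compute these quantities per class in a one-vs-rest fashion and then average them; whether the authors did so is an assumption.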

| Dataset | Early Rice Dataset | Late Rice Dataset |
|---|---|---|
| Index of the selected bands | 120, 121, 123, 124, 135, 136, 137, 138, 139, 140, 221, 223, 224, 227, 229, 268, 273, 275, 276, 274, 277, 278, 321, 322, 323, 325, 324, 326, 329, 328. | 97, 98, 113, 114, 179, 182, 183, 191, 192, 195, 202, 246, 247, 250, 251, 252, 253, 254, 255, 256, 257, 258, 259, 260, 262, 261, 266, 317, 319, 320. |

Prediction set accuracy (%) at training set proportions of 30% and 50%.

| Species Category | Baseline (30%) | PCA (30%) | LPP (30%) | t-SNE (30%) | LPSPL (30%) | Baseline (50%) | PCA (50%) | LPP (50%) | t-SNE (50%) | LPSPL (50%) |
|---|---|---|---|---|---|---|---|---|---|---|
| Z1 | 36.19 | 34.29 | 38.10 | 38.10 | 37.14 | 48.00 | 56.00 | 54.67 | 55.33 | 65.33 |
| Z2 | 41.90 | 42.86 | 37.14 | 48.57 | 44.76 | 61.33 | 72.00 | 50.67 | 56.00 | 58.67 |
| Z3 | 37.14 | 43.81 | 47.62 | 42.38 | 43.81 | 60.00 | 61.33 | 69.33 | 60.00 | 68.00 |
| Z4 | 36.19 | 39.05 | 38.10 | 41.90 | 35.24 | 53.33 | 54.67 | 60.00 | 48.00 | 58.67 |
| Z5 | 50.48 | 44.76 | 49.52 | 45.71 | 42.86 | 65.33 | 60.00 | 68.00 | 56.00 | 66.67 |
| Z6 | 39.05 | 44.76 | 32.86 | 26.19 | 46.67 | 47.33 | 62.00 | 50.67 | 48.67 | 56.00 |
| Z7 | 48.57 | 44.29 | 34.29 | 33.81 | 44.29 | 51.33 | 58.00 | 50.67 | 59.33 | 49.33 |
| Z8 | 45.71 | 47.62 | 40.00 | 47.62 | 39.05 | 66.67 | 56.00 | 54.67 | 64.00 | 68.00 |
| Z9 | 53.33 | 57.14 | 54.29 | 49.52 | 63.81 | 62.67 | 70.67 | 65.33 | 57.33 | 73.33 |
| Z10 | 51.43 | 50.48 | 60.95 | 50.47 | 64.76 | 74.67 | 68.00 | 72.00 | 73.33 | 76.00 |
| OA | 44.00 | 44.90 | 43.29 | 42.43 | 46.24 | 59.07 | 61.87 | 59.60 | 57.80 | 64.00 |
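
As a worked check on the table above, the OA row appears to agree, to within rounding, with the unweighted mean of the ten per-variety accuracies. This is an observation from the reported numbers, not a definition stated in the text. The sketch below recomputes it for the Baseline and LPSPL columns at a 30% training proportion; the variable and function names are hypothetical.

```python
# Per-variety accuracies (Z1..Z10) copied from the 30% training-proportion columns.
baseline_30 = [36.19, 41.90, 37.14, 36.19, 50.48, 39.05, 48.57, 45.71, 53.33, 51.43]
lpspl_30 = [37.14, 44.76, 43.81, 35.24, 42.86, 46.67, 44.29, 39.05, 63.81, 64.76]


def mean_accuracy(per_class):
    """Unweighted mean of per-class accuracies (assumed reading of the OA row)."""
    return sum(per_class) / len(per_class)


oa_baseline = mean_accuracy(baseline_30)
oa_lpspl = mean_accuracy(lpspl_30)

# Reported OA values are 44.00 (Baseline) and 46.24 (LPSPL).
print(f"Baseline: {oa_baseline:.2f}, LPSPL: {oa_lpspl:.2f}")
```

The small residual differences come from the per-variety values themselves being rounded to two decimals.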

Prediction set accuracy (%) at training set proportions of 70% and 90%.

| Species Category | Baseline (70%) | PCA (70%) | LPP (70%) | t-SNE (70%) | LPSPL (70%) | Baseline (90%) | PCA (90%) | LPP (90%) | t-SNE (90%) | LPSPL (90%) |
|---|---|---|---|---|---|---|---|---|---|---|
| Z1 | 66.67 | 71.11 | 75.56 | 70.00 | 84.44 | 93.33 | 100.00 | 80.00 | 83.33 | 100.00 |
| Z2 | 77.78 | 73.33 | 84.44 | 75.56 | 84.44 | 93.33 | 80.00 | 93.33 | 86.67 | 100.00 |
| Z3 | 66.67 | 68.89 | 71.11 | 64.44 | 77.78 | 100.00 | 93.33 | 100.00 | 93.33 | 100.00 |
| Z4 | 75.56 | 77.78 | 71.11 | 77.78 | 84.44 | 93.33 | 86.67 | 93.33 | 100.00 | 86.67 |
| Z5 | 73.33 | 82.22 | 75.56 | 75.56 | 71.11 | 86.67 | 100.00 | 86.67 | 100.00 | 100.00 |
| Z6 | 73.33 | 73.33 | 62.22 | 70.00 | 77.78 | 96.67 | 83.33 | 96.67 | 90.00 | 96.67 |
| Z7 | 65.56 | 77.78 | 70.00 | 78.89 | 73.33 | 86.67 | 86.67 | 90.00 | 96.67 | 83.33 |
| Z8 | 68.89 | 68.89 | 77.78 | 88.89 | 71.11 | 73.33 | 100.00 | 100.00 | 93.33 | 100.00 |
| Z9 | 75.56 | 88.89 | 80.00 | 77.78 | 93.33 | 100.00 | 100.00 | 100.00 | 100.00 | 93.33 |
| Z10 | 86.67 | 88.89 | 73.33 | 82.22 | 91.11 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 |
| OA | 73.00 | 78.78 | 74.11 | 76.11 | 80.89 | 92.33 | 93.00 | 94.00 | 94.33 | 96.00 |

Prediction set accuracy (%) at training set proportions of 30% and 50%.

| Species Category | Baseline (30%) | PCA (30%) | LPP (30%) | t-SNE (30%) | LPSPL (30%) | Baseline (50%) | PCA (50%) | LPP (50%) | t-SNE (50%) | LPSPL (50%) |
|---|---|---|---|---|---|---|---|---|---|---|
| W1 | 46.67 | 49.52 | 55.24 | 51.43 | 50.48 | 68.00 | 66.67 | 64.00 | 64.00 | 76.00 |
| W2 | 50.48 | 55.24 | 54.29 | 41.91 | 66.67 | 61.33 | 72.00 | 71.33 | 60.00 | 77.33 |
| W3 | 40.00 | 40.95 | 33.33 | 41.91 | 45.71 | 61.33 | 57.33 | 58.67 | 56.00 | 53.33 |
| W4 | 41.90 | 55.24 | 50.48 | 49.52 | 54.29 | 64.00 | 68.00 | 72.00 | 72.00 | 78.67 |
| W5 | 47.62 | 47.62 | 52.38 | 43.81 | 52.38 | 61.33 | 60.00 | 65.33 | 53.33 | 66.67 |
| W6 | 48.57 | 39.05 | 35.24 | 41.91 | 40.00 | 50.67 | 61.33 | 58.67 | 62.67 | 60.00 |
| W7 | 66.67 | 56.19 | 58.10 | 63.81 | 67.62 | 84.00 | 76.00 | 76.00 | 78.67 | 80.00 |
| W8 | 40.95 | 41.90 | 40.00 | 48.57 | 47.62 | 65.33 | 62.67 | 66.67 | 64.00 | 70.67 |
| W9 | 57.14 | 65.71 | 64.76 | 50.47 | 78.10 | 65.33 | 64.00 | 76.00 | 69.33 | 85.33 |
| W10 | 48.57 | 49.52 | 60.95 | 69.52 | 61.90 | 57.33 | 78.67 | 73.33 | 77.33 | 66.67 |
| OA | 48.86 | 50.10 | 50.48 | 50.29 | 56.48 | 63.87 | 66.67 | 68.20 | 65.73 | 71.47 |

Prediction set accuracy (%) at training set proportions of 70% and 90%.

| Species Category | Baseline (70%) | PCA (70%) | LPP (70%) | t-SNE (70%) | LPSPL (70%) | Baseline (90%) | PCA (90%) | LPP (90%) | t-SNE (90%) | LPSPL (90%) |
|---|---|---|---|---|---|---|---|---|---|---|
| W1 | 77.78 | 73.33 | 82.22 | 86.67 | 88.89 | 100.00 | 86.67 | 100.00 | 93.33 | 86.67 |
| W2 | 84.44 | 82.22 | 80.00 | 84.44 | 88.89 | 100.00 | 100.00 | 100.00 | 93.33 | 100.00 |
| W3 | 75.56 | 84.44 | 77.78 | 80.00 | 75.56 | 100.00 | 93.33 | 100.00 | 93.33 | 100.00 |
| W4 | 64.44 | 88.89 | 84.44 | 86.67 | 86.67 | 86.67 | 100.00 | 93.33 | 100.00 | 100.00 |
| W5 | 84.44 | 75.56 | 80.00 | 71.11 | 88.89 | 100.00 | 93.33 | 100.00 | 93.33 | 100.00 |
| W6 | 57.78 | 77.78 | 77.78 | 71.11 | 75.56 | 86.67 | 100.00 | 93.33 | 83.33 | 100.00 |
| W7 | 80.00 | 80.00 | 80.00 | 88.89 | 88.89 | 93.33 | 93.33 | 93.33 | 100.00 | 100.00 |
| W8 | 73.33 | 73.33 | 77.78 | 75.56 | 86.67 | 93.33 | 93.33 | 93.33 | 100.00 | 100.00 |
| W9 | 84.44 | 86.67 | 93.33 | 86.67 | 97.78 | 80.00 | 100.00 | 100.00 | 100.00 | 100.00 |
| W10 | 80.00 | 93.33 | 84.44 | 66.67 | 75.56 | 93.33 | 100.00 | 93.33 | 93.33 | 93.33 |
| OA | 76.22 | 81.56 | 81.78 | 79.78 | 85.33 | 93.33 | 96.00 | 96.67 | 95.00 | 98.00 |

| Method | Early Rice Training Set Proportion 0.3 | Early Rice 0.5 | Early Rice 0.7 | Early Rice 0.9 | Late Rice Training Set Proportion 0.3 | Late Rice 0.5 | Late Rice 0.7 | Late Rice 0.9 |
|---|---|---|---|---|---|---|---|---|
| Baseline | 0.3378 | 0.5452 | 0.7 | 0.9148 | 0.4318 | 0.5985 | 0.7358 | 0.9259 |
| PCA | 0.3879 | 0.5763 | 0.7457 | 0.9222 | 0.4455 | 0.6297 | 0.7951 | 0.9556 |
| LPP | 0.3699 | 0.5511 | 0.7124 | 0.9333 | 0.4498 | 0.6467 | 0.7996 | 0.963 |
| t-SNE | 0.4369 | 0.5831 | 0.7637 | 0.9395 | 0.5223 | 0.6602 | 0.7948 | 0.9447 |
| LPSPL | 0.4027 | 0.6 | 0.7877 | 0.9556 | 0.5164 | 0.683 | 0.8371 | 0.9778 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Long, C.-F.; Wen, Z.-D.; Deng, Y.-J.; Hu, T.; Liu, J.-L.; Zhu, X.-H. Locality Preserved Selective Projection Learning for Rice Variety Identification Based on Leaf Hyperspectral Characteristics. Agronomy 2023, 13, 2401. https://doi.org/10.3390/agronomy13092401
