Improving Nitrogen Status Diagnosis and Recommendation of Maize Using UAV Remote Sensing Data

Abstract

1. Introduction

2. Materials and Methods

2.1. Experimental Design and Field Management

2.2. Ground Data Collection and Analysis

2.2.1. Plant Sampling and Measurement

2.2.2. Calculation of the Nitrogen Nutrition Index
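As a minimal sketch of the calculation this subsection names, the snippet below assumes the widely used definition of the nitrogen nutrition index: measured plant N concentration divided by a critical N concentration taken from a dilution curve of the form Nc = a · W^(−b), where W is aboveground biomass. The function names and the coefficients `a` and `b` are illustrative assumptions, not values taken from this article; the coefficients of the dilution curve actually used in the study should be substituted.

```python
def critical_n_concentration(biomass_t_ha, a, b):
    """Critical N concentration from a power-law dilution curve, Nc = a * W**(-b).

    a and b are curve coefficients supplied by the caller (hypothetical
    placeholders here, not values reported in this article).
    """
    if biomass_t_ha <= 0:
        raise ValueError("biomass must be positive")
    return a * biomass_t_ha ** (-b)


def nitrogen_nutrition_index(actual_n, biomass_t_ha, a, b):
    """NNI = measured plant N concentration / critical N concentration.

    actual_n must be in the same units as the dilution curve output.
    """
    return actual_n / critical_n_concentration(biomass_t_ha, a, b)


# Illustrative call with made-up inputs (a=3.0, b=0.5 are NOT fitted values).
example_nni = nitrogen_nutrition_index(actual_n=1.8, biomass_t_ha=4.0, a=3.0, b=0.5)
```

Under this definition, an NNI close to 1 is generally read as near-optimal N status, values below 1 as deficiency, and values above 1 as surplus; the hybrid-specific thresholds are the subject of the next subsection.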

2.2.3. Determination of NNI Threshold Values

2.2.4. N Recommendation Strategy

2.3. UAV Remote Sensing Data Collection and Analysis

2.3.1. UAV Image Acquisition and Processing

2.3.2. Multispectral Image Processing and Vegetation Index

2.3.3. RGB Image Data Processing

2.4. Statistical Analysis and Model Development

3. Results

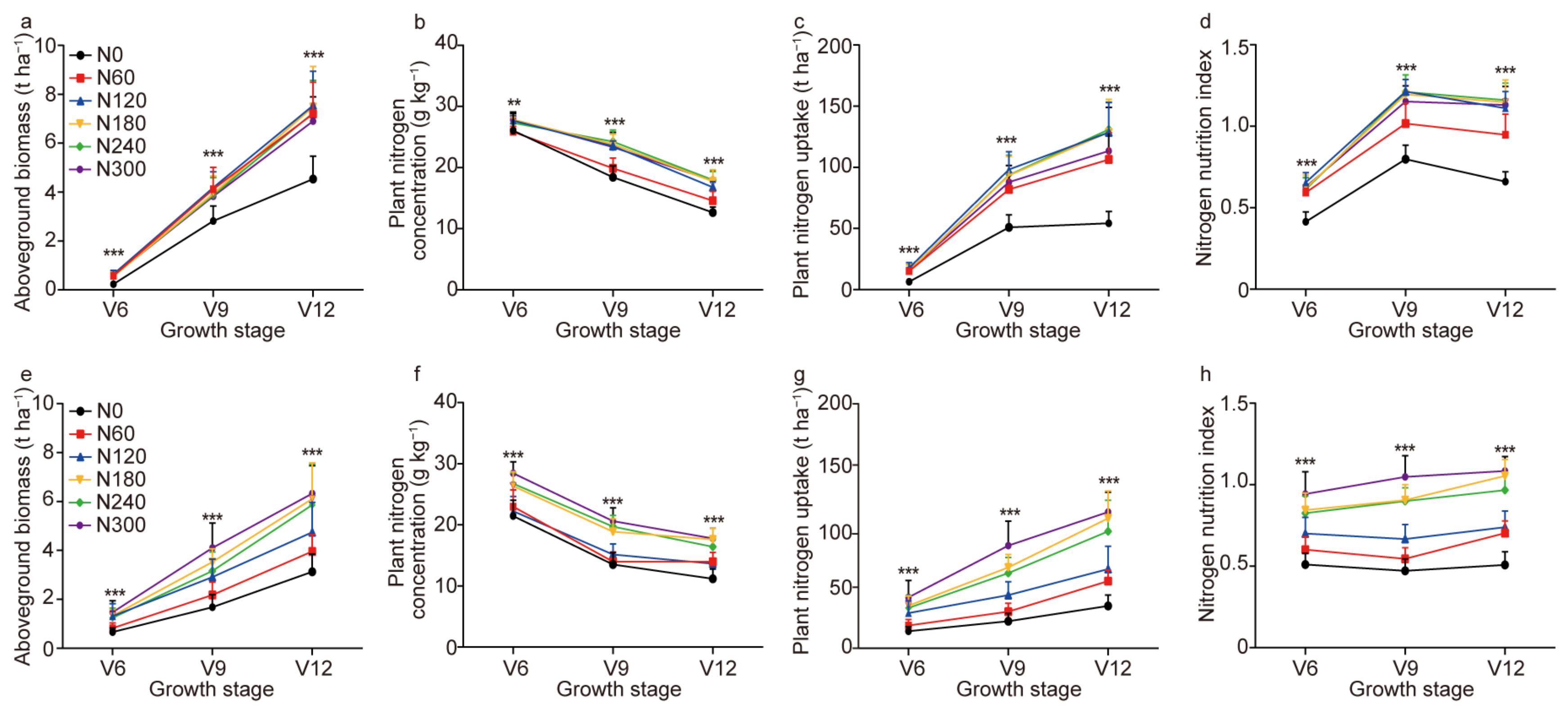

3.1. Variation in Maize Nitrogen Status Indicators and Yield

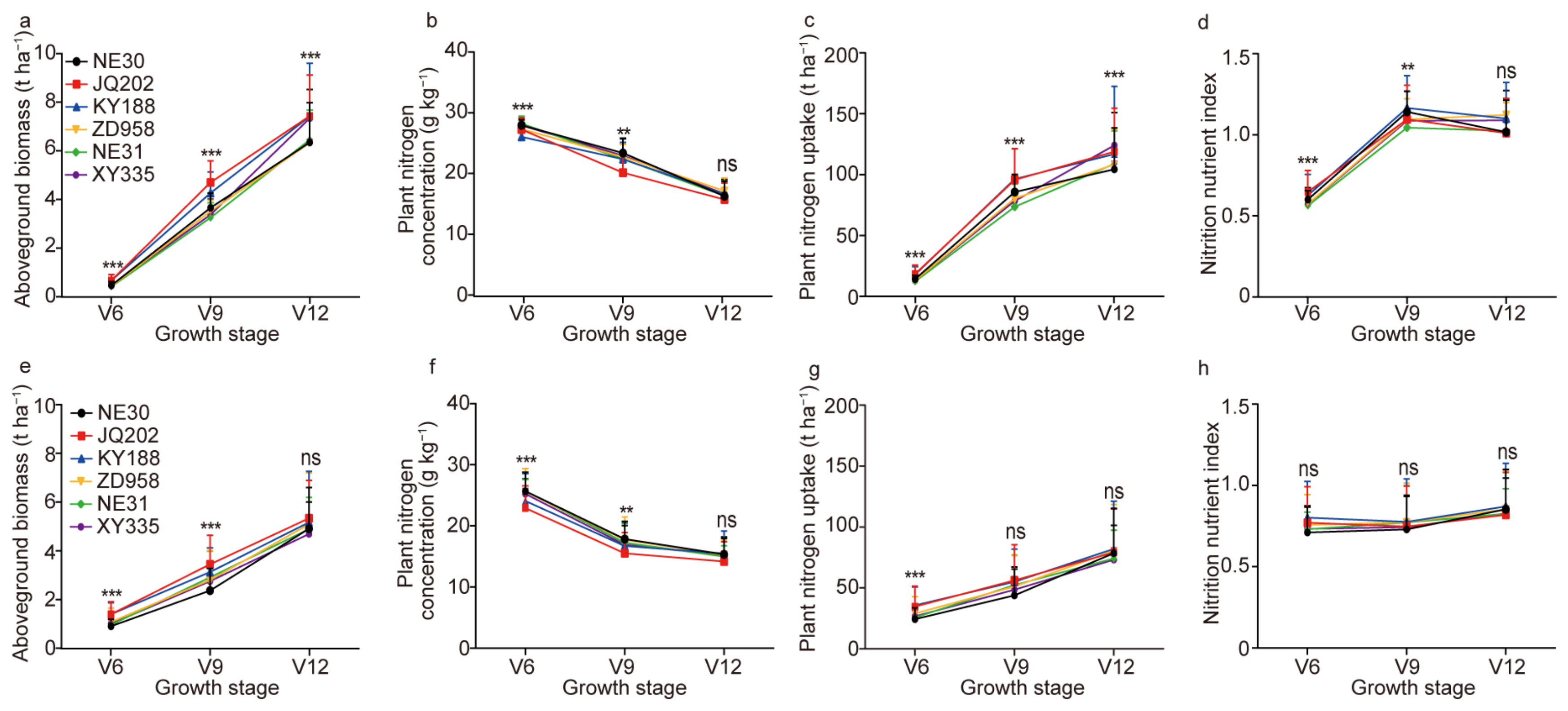

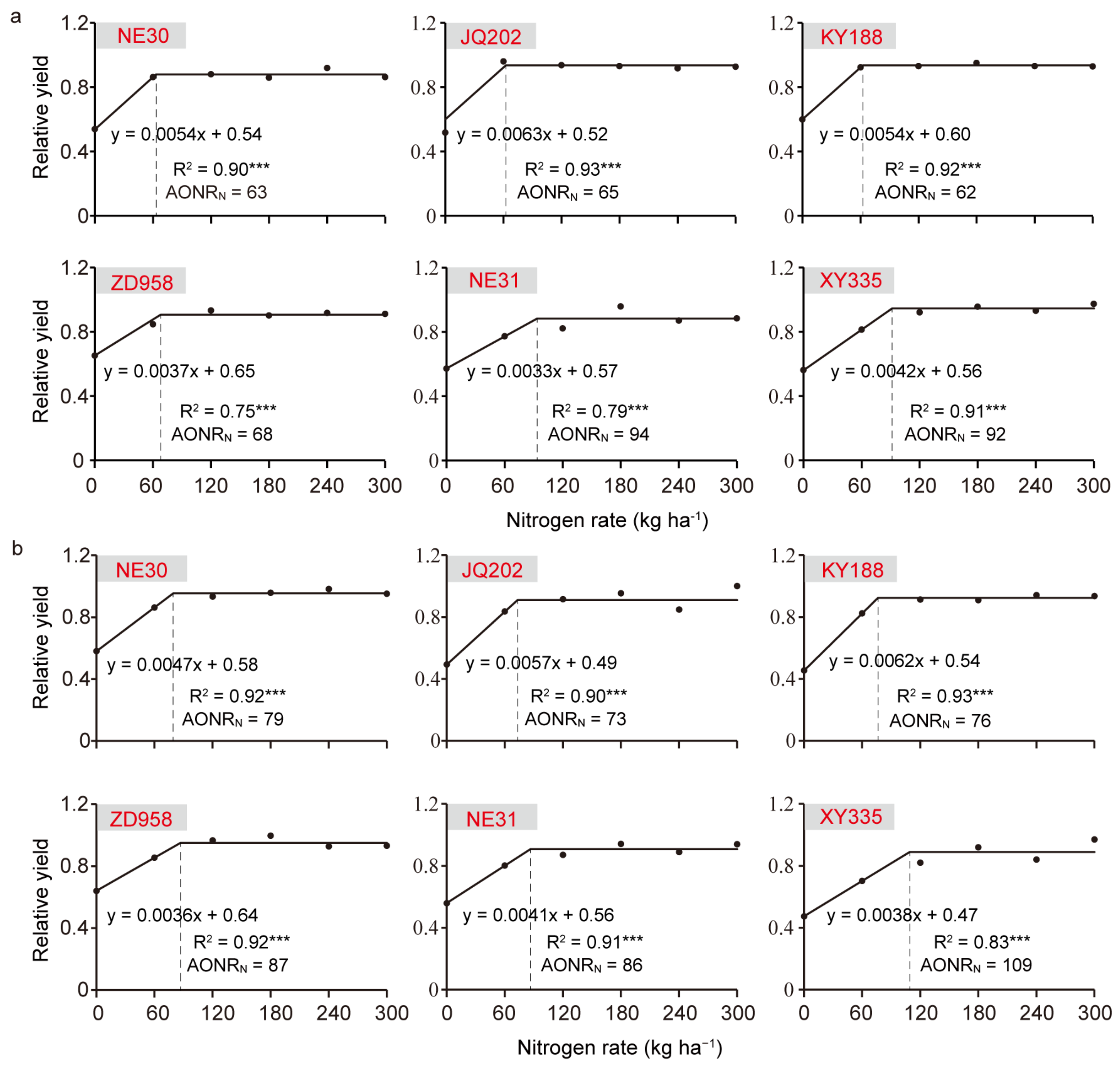

3.2. NNI Threshold Values for Different Hybrids

3.3. Performance of the Random Forest Model for Estimating N Status Indicators

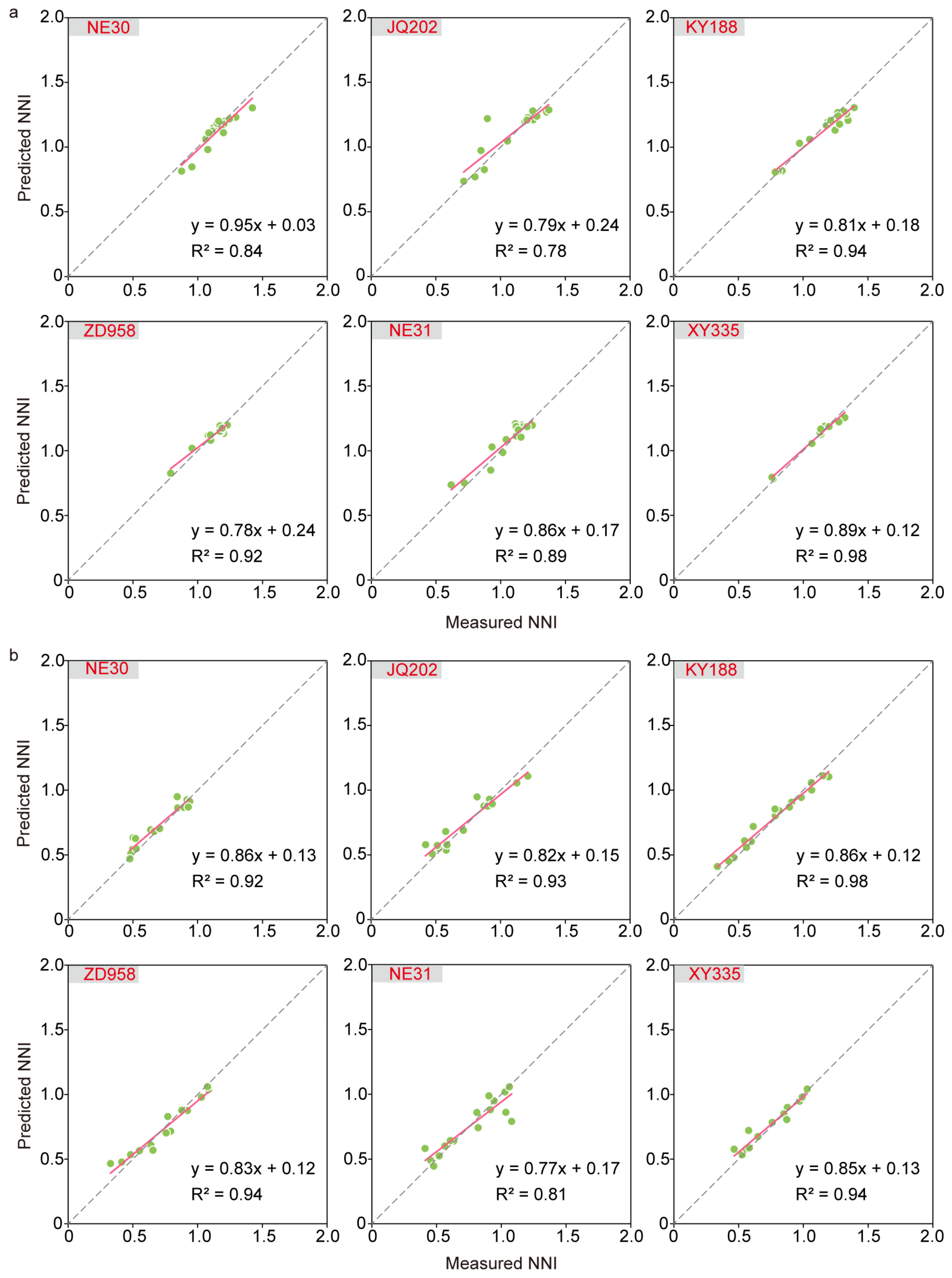

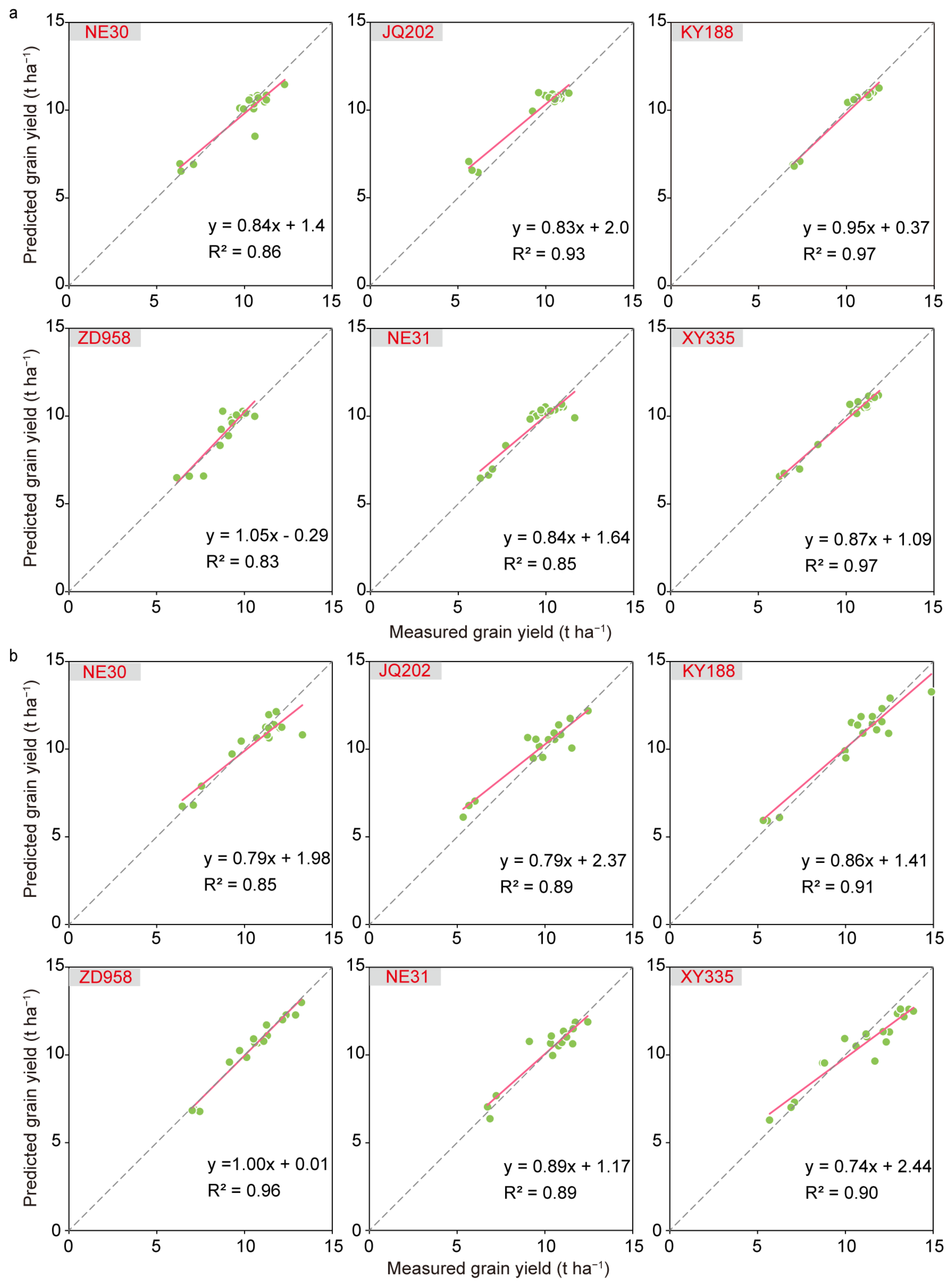

3.4. Performance of the RF Model for Yield Prediction

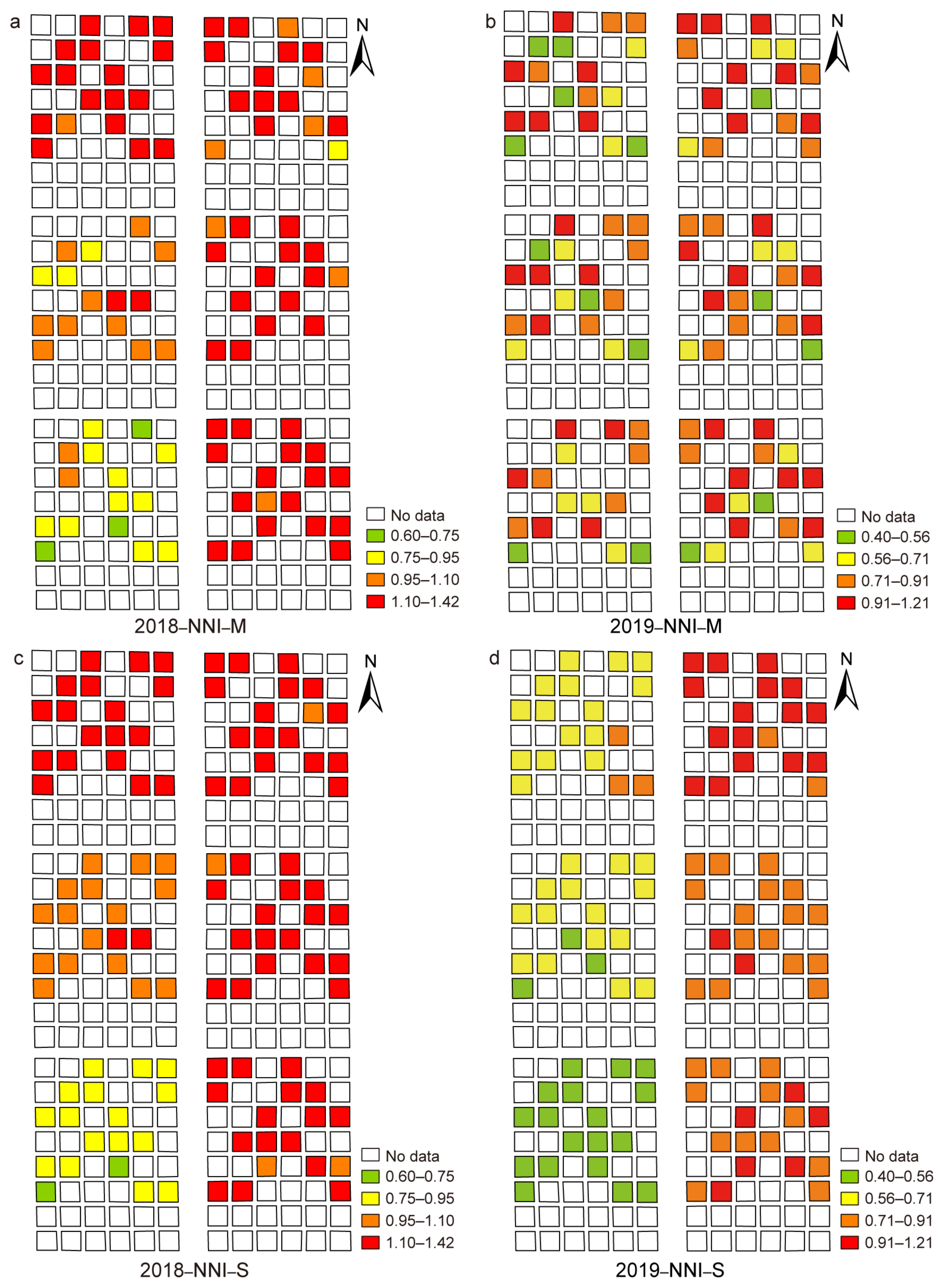

3.5. Diagnosis of N Nutrition Status at the Field Scale

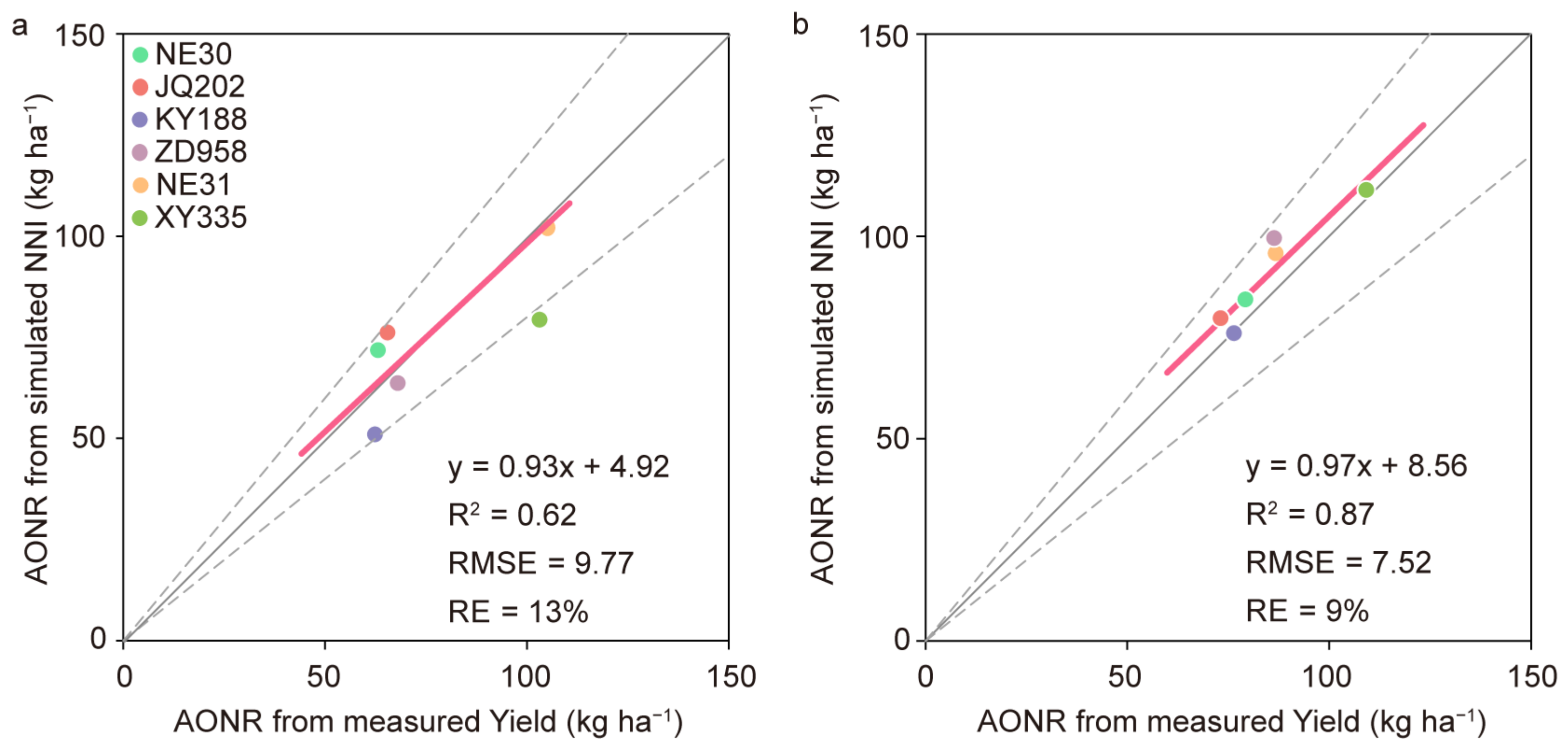

3.6. Evaluation of the AONR Determination Based on the RF Model Estimation of NNI and Yield

4. Discussion

4.1. Hybrid Differences in NNI Threshold Values

4.2. UAV Remote Sensing Data Fusion Using Machine Learning for N Status Diagnosis

4.3. Implications for Maize Management and Breeding

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Trait | Sensor Type | RMSE (2018) | R² (2018) | RE (2018) | RMSE (2019) | R² (2019) | RE (2019) |
|---|---|---|---|---|---|---|---|
| AGB | MS | 0.11 | 0.63 | 9.6 | 0.70 | 0.5 | 26.4 |
| AGB | MS+GLCM | 0.10 | 0.63 | 9.5 | 0.68 | 0.52 | 25.8 |
| AGB | MS+GC+GLCM | 0.07 | 0.85 | 6.2 | 0.49 | 0.79 | 17.1 |
| PNC | MS | 1.91 | 0.64 | 8.8 | 2.09 | 0.72 | 12.8 |
| PNC | MS+GLCM | 1.95 | 0.63 | 8.9 | 2.11 | 0.70 | 12.9 |
| PNC | MS+GC+GLCM | 1.24 | 0.81 | 5.6 | 1.38 | 0.85 | 8.1 |
| PNU | MS | 14.11 | 0.55 | 16.5 | 12.63 | 0.72 | 28.1 |
| PNU | MS+GLCM | 14.26 | 0.54 | 16.7 | 12.58 | 0.72 | 28 |
| PNU | MS+GC+GLCM | 9.88 | 0.78 | 11.6 | 9.00 | 0.87 | 17.7 |
| NNI | MS | 0.11 | 0.63 | 9.6 | 0.11 | 0.80 | 16.3 |
| NNI | MS+GLCM | 0.10 | 0.63 | 9.5 | 0.11 | 0.79 | 16.4 |
| NNI | MS+GC+GLCM | 0.10 | 0.64 | 9.4 | 0.11 | 0.79 | 16.4 |
| Yield | MS | 0.81 | 0.78 | 8.59 | 1.08 | 0.73 | 10.84 |
| Yield | MS+GLCM | 0.82 | 0.78 | 8.63 | 1.08 | 0.73 | 10.83 |
| Yield | MS+GC+GLCM | 0.82 | 0.77 | 8.62 | 1.08 | 0.73 | 10.83 |
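The three accuracy statistics reported in the table (RMSE, R2, and RE) could be computed along the following lines. This is a sketch under stated assumptions rather than the authors' procedure: R2 is taken as the coefficient of determination (1 − SS_res/SS_tot), and RE as RMSE expressed as a percentage of the mean observed value. The article's exact definitions are not reproduced in this section, and the function name is hypothetical.

```python
from math import sqrt


def regression_metrics(observed, predicted):
    """Return (rmse, r2, re_percent) for paired observed and predicted values.

    Assumed definitions (not confirmed by the article):
      rmse       = sqrt(mean squared error)
      r2         = 1 - SS_res / SS_tot
      re_percent = 100 * rmse / mean(observed)
    """
    if len(observed) != len(predicted) or len(observed) < 2:
        raise ValueError("need two equal-length sequences with at least 2 values")
    n = len(observed)
    mean_obs = sum(observed) / n
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    if ss_tot == 0 or mean_obs == 0:
        raise ValueError("observed values must vary and have a non-zero mean")
    rmse = sqrt(ss_res / n)
    r2 = 1.0 - ss_res / ss_tot
    re_percent = 100.0 * rmse / mean_obs
    return rmse, r2, re_percent


# Made-up numbers purely to exercise the function (not data from the study).
rmse, r2, re_percent = regression_metrics([1.0, 2.0, 3.0, 4.0], [1.1, 1.9, 3.2, 3.8])
```

Because RE is scaled by the observed mean, it allows traits with different units and magnitudes (for example PNU versus NNI) to be compared on one scale, which RMSE alone does not.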

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liang, J.; Ren, W.; Liu, X.; Zha, H.; Wu, X.; He, C.; Sun, J.; Zhu, M.; Mi, G.; Chen, F.; Miao, Y.; Pan, Q. Improving Nitrogen Status Diagnosis and Recommendation of Maize Using UAV Remote Sensing Data. Agronomy 2023, 13, 1994. https://doi.org/10.3390/agronomy13081994