Recognition of Edible Fungi Fruit Body Diseases Based on Improved ShuffleNetV2

Abstract

1. Introduction

2. Materials and Methods

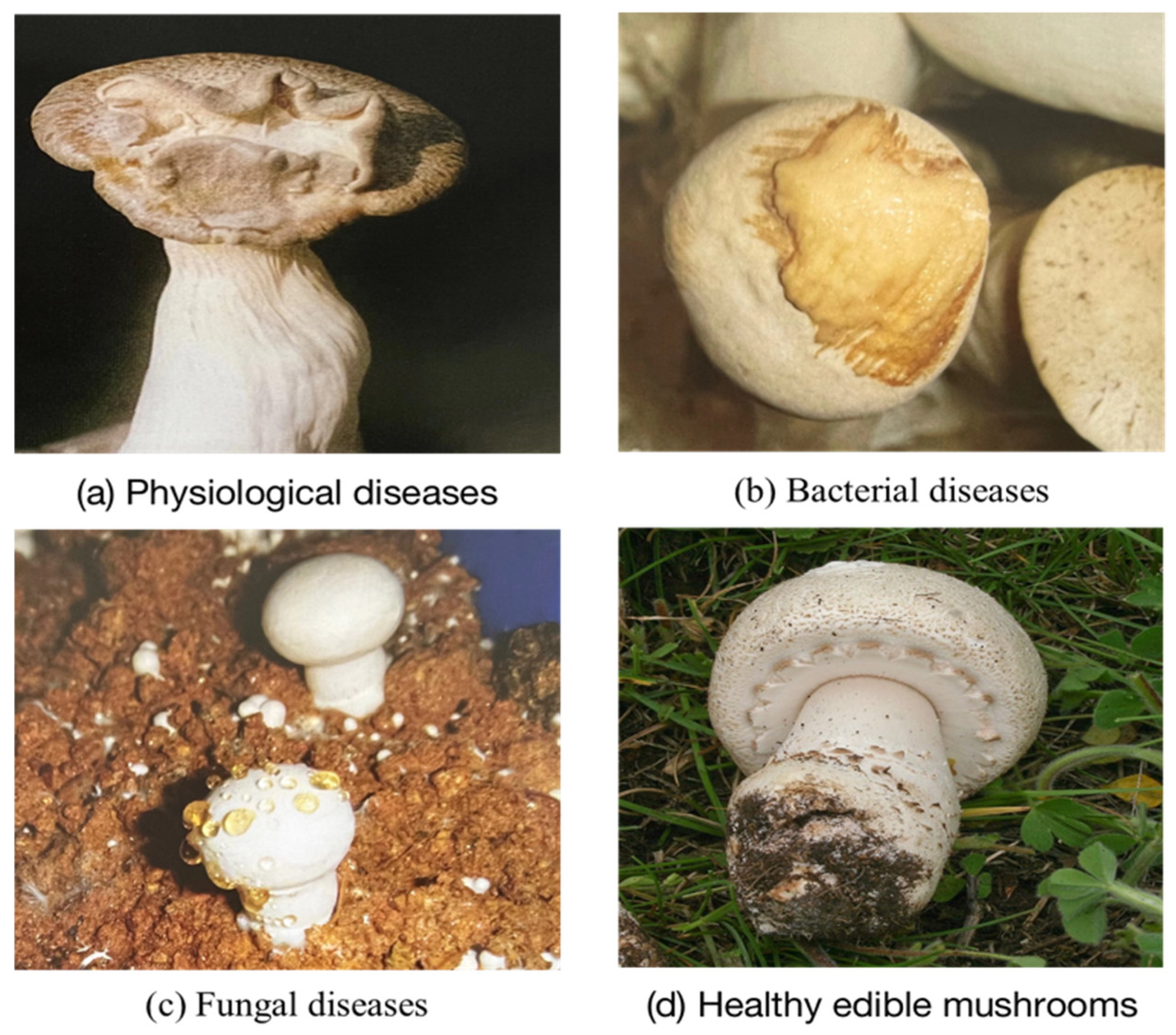

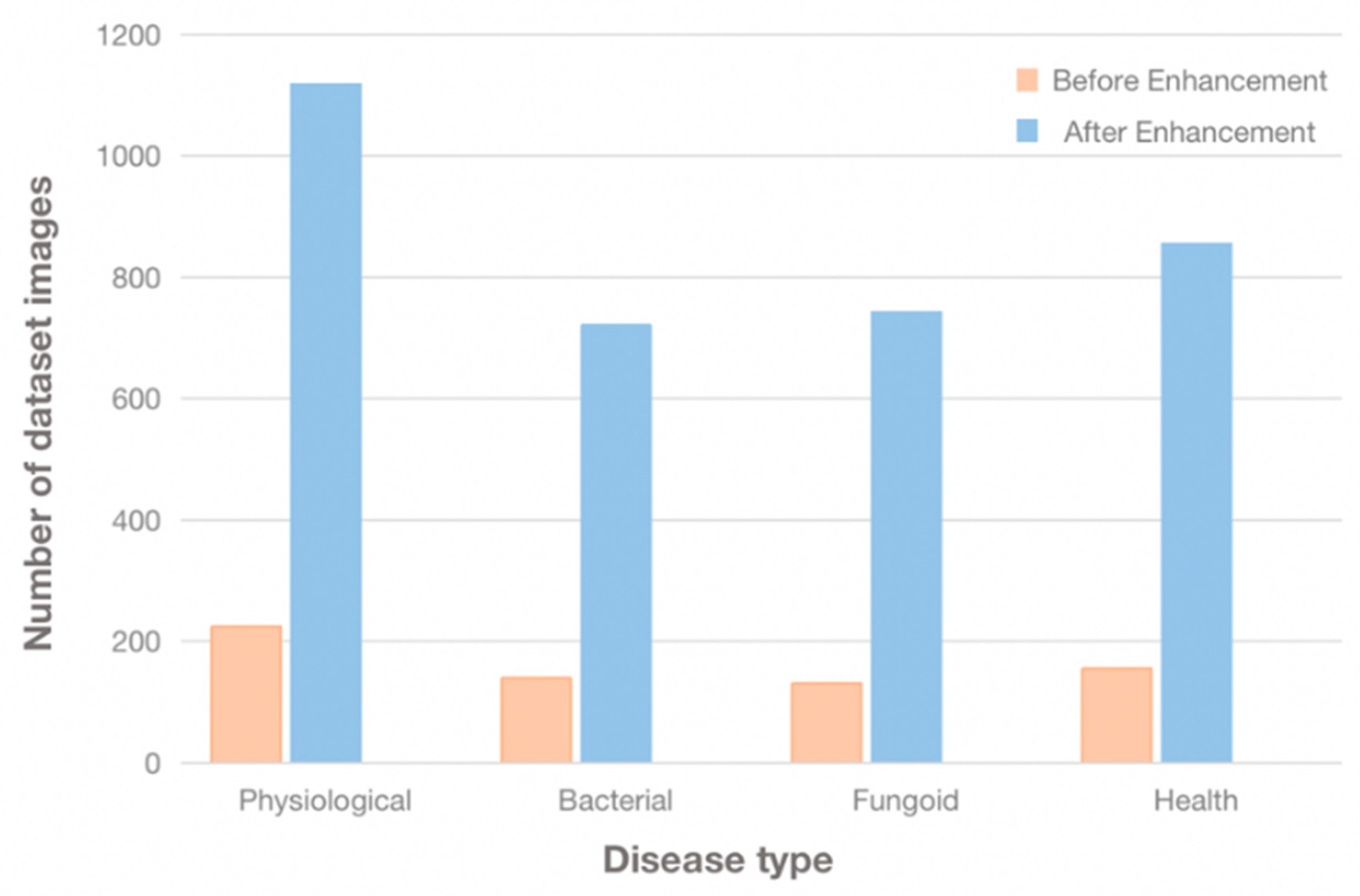

2.1. Construction of the Dataset

2.2. Data Preprocessing

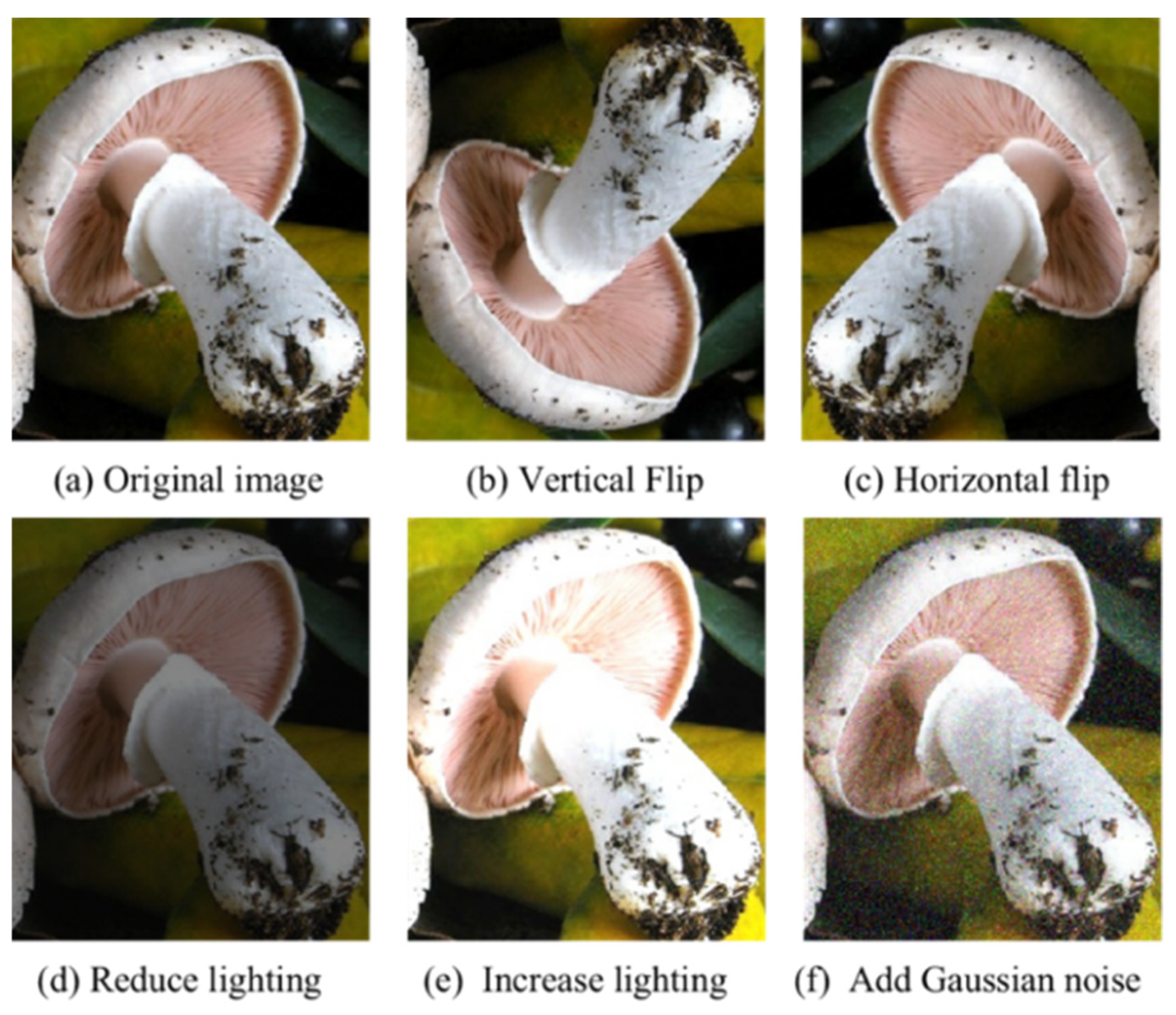

2.2.1. Data Augmentation

2.2.2. Dataset Partitioning

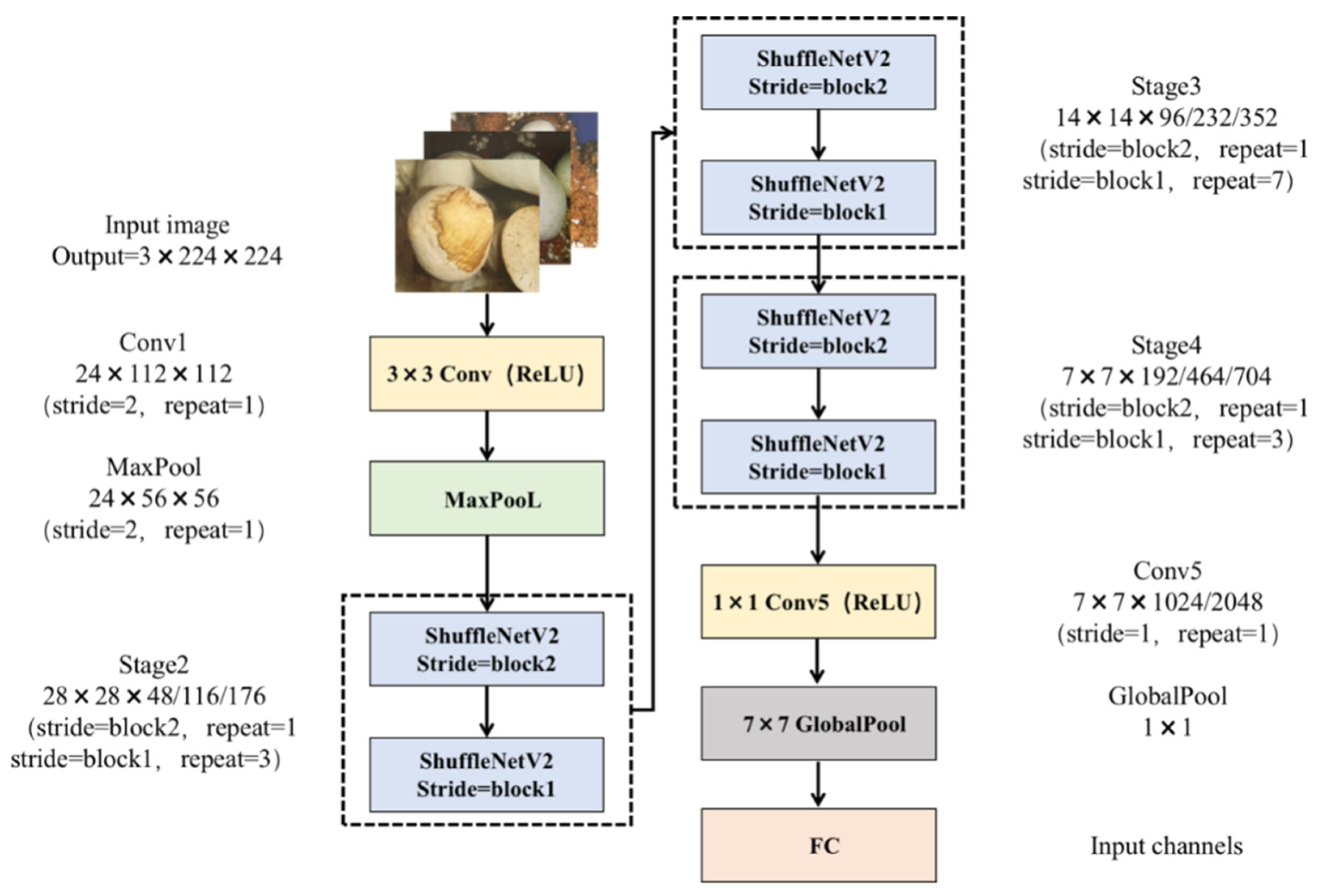

3. Recognition Model of Fruit Body Diseases of Edible Fungi

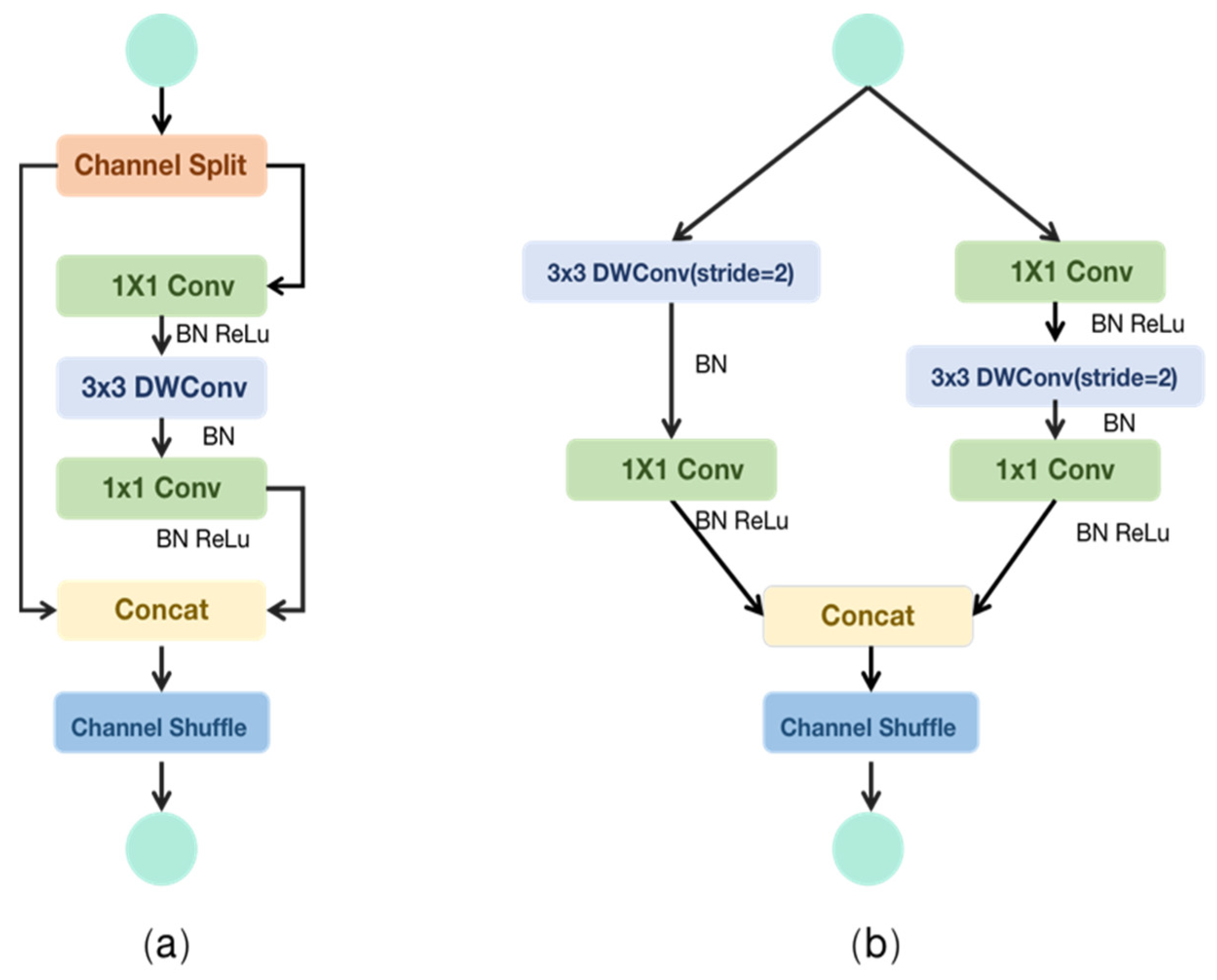

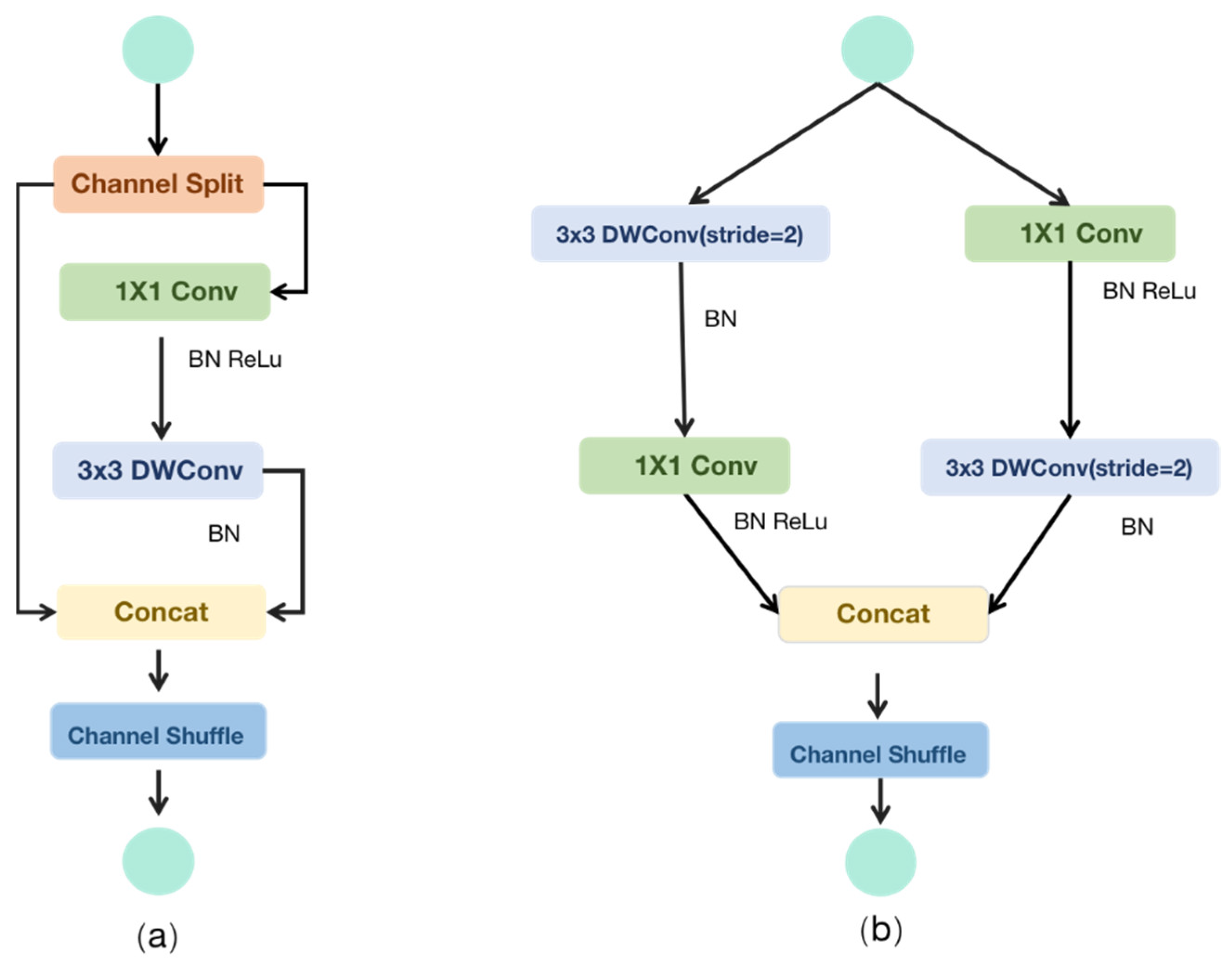

3.1. ShuffleNetV2 Model

3.2. Model Improvement

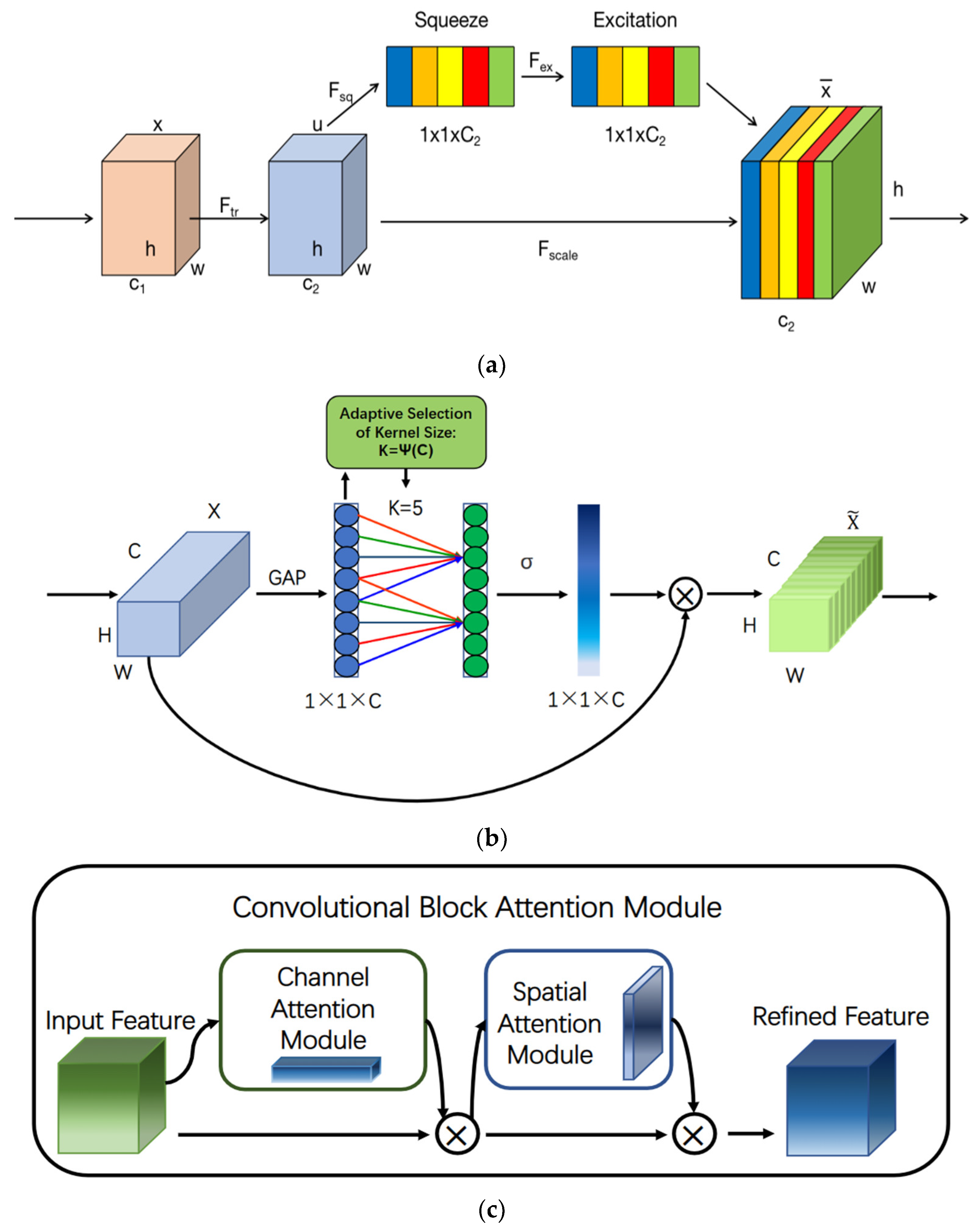

3.2.1. Attention Mechanism

3.2.2. Simplify Model Structure

3.3. Experimental Environment and Hyperparameters

3.4. Evaluation Index

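Accuracy represents the proportion of samples that the model predicts correctly among all samples. The calculation formula is

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{1}$$

where TP, TN, FP, and FN are the numbers of true positive, true negative, false positive, and false negative samples, respectively.

Precision represents the proportion of correctly predicted positive samples among all samples that the model predicts as positive. The calculation formula is

$$\text{Precision} = \frac{TP}{TP + FP} \tag{2}$$

Recall represents the proportion of actual positive samples that the model predicts correctly. The calculation formula is

$$\text{Recall} = \frac{TP}{TP + FN} \tag{3}$$

Macro-F1 represents the harmonic mean of precision and recall, each averaged over all classes, and it reflects the overall performance of the model. The calculation formula is

$$\text{Macro-F1} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{4}$$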
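The four metrics can be computed from a multi-class confusion matrix. The following is a minimal sketch, assuming that precision and recall are first macro-averaged over the four classes (one class against the rest) and that Macro-F1 is then the harmonic mean of those two averages, as in Equation (4). The function names `macro_metrics` and `harmonic_f1` are hypothetical and not taken from the paper.

```python
from typing import List, Tuple


def harmonic_f1(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall, as in Equation (4)."""
    return 2 * precision * recall / (precision + recall) if precision + recall else 0.0


def macro_metrics(cm: List[List[int]]) -> Tuple[float, float, float, float]:
    """Hypothetical helper (sketch, assumes macro averaging).

    cm[i][j] counts samples whose true class is i and predicted class is j.
    Returns (accuracy, macro precision, macro recall, Macro-F1) as fractions.
    """
    k = len(cm)
    total = sum(sum(row) for row in cm)
    accuracy = sum(cm[i][i] for i in range(k)) / total  # diagonal = correct predictions

    precisions, recalls = [], []
    for c in range(k):
        tp = cm[c][c]
        fp = sum(cm[r][c] for r in range(k)) - tp  # predicted as c, truly another class
        fn = sum(cm[c]) - tp                        # truly c, predicted as another class
        precisions.append(tp / (tp + fp) if tp + fp else 0.0)
        recalls.append(tp / (tp + fn) if tp + fn else 0.0)

    p = sum(precisions) / k
    r = sum(recalls) / k
    return accuracy, p, r, harmonic_f1(p, r)
```

For example, `harmonic_f1(96.43, 96.07)` gives about 96.25, matching the Macro-F1 reported for ShuffleNetV2-Lite+SE in the result tables. Note that some libraries, such as scikit-learn's `f1_score` with `average="macro"`, instead average the per-class F1 scores, which can give a slightly different value.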

4. Experimental Results and Analysis

4.1. Model Performance Evaluation with Different Attention Mechanisms

4.2. Ablation Experiments for the ShuffleNetV2-Lite+SE Model

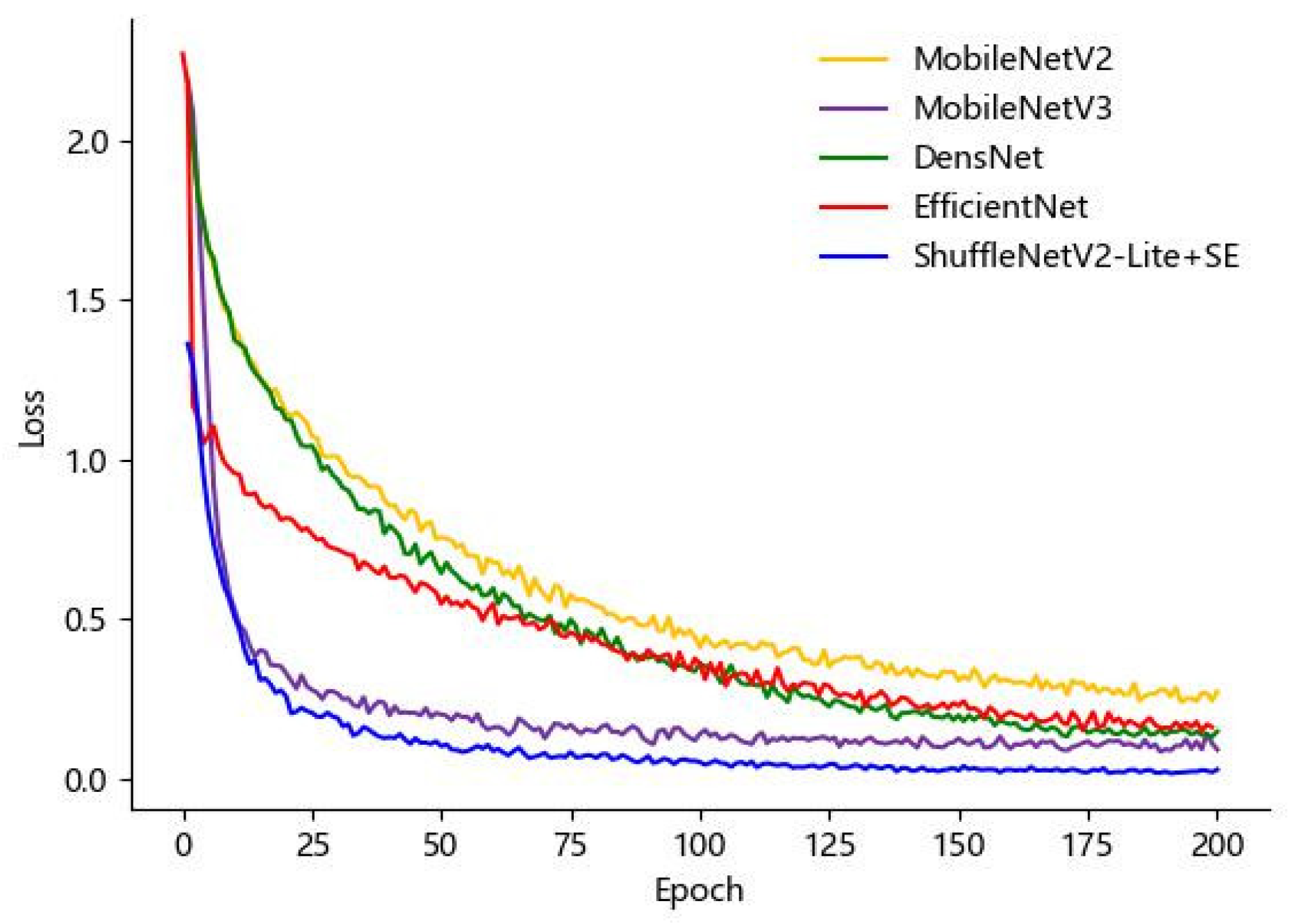

4.3. Performance Comparison of ShuffleNetV2-Lite+SE with Other Models

5. Conclusions

- (1) The constructed edible fungi fruit body disease dataset contains images of three disease types and one healthy condition, 649 images in total. After data augmentation, the dataset contains 3439 images.

- (2) The CBAM, ECA, and SE modules are each embedded into ShuffleNetV2 to enhance the information interaction between channels and improve the model's performance. Experimental results indicate that the recognition accuracy of the ShuffleNetV2+SE model on the test set is 2.43 percentage points higher than that of the original model. Compared with the ShuffleNetV2+CBAM and ShuffleNetV2+ECA models, the ShuffleNetV2+SE model improves recognition accuracy by 0.86 and 0.62 percentage points, reduces the number of model parameters by 0.1 MB and 0.5 MB, and reduces the average time per epoch by 10 s and 14 s, respectively.

- (3)

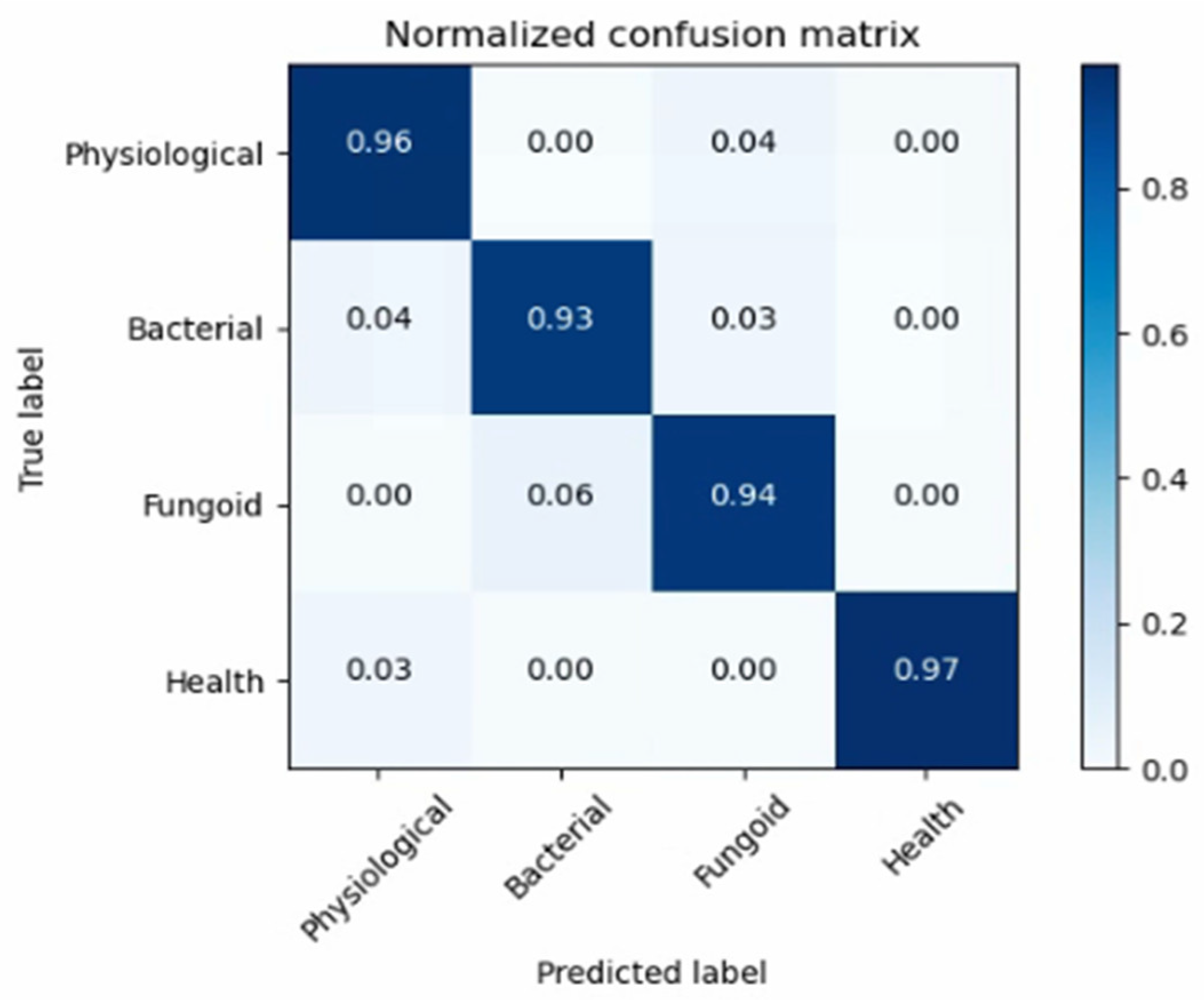

- The attention SE module is introduced into the ShuffleNetV2 model, and the structure of the model is optimized to obtain the ShuffleNetV2+SE model, the optimized ShuffleNet-Lite model, and the ShufflenetV2-Lite+SE model, respectively. Experimental results indicate that the accuracy, precision, recall, and the Macro-F1 value of the ShuffleNetV2-Lite+SE model reach 96.19%, 96.43%, 96.07%, and 96.24%, respectively, which is higher than those of other models. Compared with the ShuffleNetV2+SE model with the highest accuracy, our model reduces the number of model parameters by 11.11%, and the average iteration time by 12.77%, so it has lower model complexity than other models.

- (4) The ShuffleNetV2-Lite+SE model is compared with representative lightweight convolutional neural networks, including MobileNetV2, MobileNetV3, DenseNet, and EfficientNet. Compared with the best-performing of these, MobileNetV3, the ShuffleNetV2-Lite+SE model improves accuracy by 4.47 percentage points, reduces the number of parameters by 68.63%, and reduces the average time per epoch by 31.67%. The experimental results show that the ShuffleNetV2-Lite+SE model achieves higher accuracy and lower model complexity than these models and compares favorably with existing related research. It is therefore suitable for deployment on mobile terminal devices to support real-time, accurate recognition of diseases in edible fungi fruit bodies.
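Conclusion (2) attributes the benefit of the SE module to stronger information interaction between channels. The dependency-free Python sketch below shows the standard squeeze-and-excitation computation: global average pooling per channel (squeeze), a two-layer bottleneck with ReLU and sigmoid (excitation), and per-channel rescaling of the feature map. It is an illustration under stated assumptions, not the authors' implementation: feature maps are nested lists rather than tensors, the bias terms of the fully connected layers are omitted, the weights are supplied by the caller, and the names `se_block` and `matvec` are hypothetical. The reduction ratio and insertion points used in ShuffleNetV2 are not specified in this section.

```python
import math
from typing import List

# Sketch only: hypothetical helper names, not the paper's code.
Matrix = List[List[float]]
FeatureMap = List[Matrix]  # C channels, each an H x W grid


def matvec(w: Matrix, v: List[float]) -> List[float]:
    """Matrix-vector product for a bias-free fully connected layer."""
    return [sum(wi * vi for wi, vi in zip(row, v)) for row in w]


def se_block(x: FeatureMap, w1: Matrix, w2: Matrix) -> FeatureMap:
    """Squeeze-and-Excitation on a C x H x W feature map (assumed layout).

    w1: (C // r) x C reduction weights; w2: C x (C // r) expansion weights.
    """
    # Squeeze: global average pooling gives one descriptor per channel.
    z = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in x]
    # Excitation: FC -> ReLU -> FC -> sigmoid yields per-channel weights in (0, 1).
    hidden = [max(0.0, h) for h in matvec(w1, z)]
    s = [1.0 / (1.0 + math.exp(-a)) for a in matvec(w2, hidden)]
    # Scale: every spatial position of channel c is multiplied by s[c].
    return [[[v * s[c] for v in row] for row in ch] for c, ch in enumerate(x)]
```

In a trained network, `w1` and `w2` are learned, and the reduction ratio r (hidden size C // r) trades the parameter count of the attention branch against its capacity, which is one reason different attention modules add different amounts of parameters and time per epoch.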

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Condition | Training Set (images) | Test Set (images) |
|---|---|---|
| Physiological | 895 | 224 |
| Bacterial | 576 | 145 |
| Fungoid | 594 | 149 |
| Healthy | 684 | 172 |
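The table shows a split of roughly 4:1 within every class (for example, 895 training and 224 test images for the physiological class), and the column totals (2749 + 690) equal the 3439 augmented images. A minimal stratified-split sketch follows, assuming each class is shuffled independently with a fixed seed and a fixed test ratio; `stratified_split` and its parameters are hypothetical, and because of rounding it will not necessarily reproduce the exact per-class counts above.

```python
import random
from typing import Dict, List, Tuple

Split = Dict[str, List[str]]


def stratified_split(samples: Split, test_ratio: float = 0.2,
                     seed: int = 0) -> Tuple[Split, Split]:
    """Hypothetical helper: hold out test_ratio of each class for testing."""
    rng = random.Random(seed)  # private generator keeps the split reproducible
    train: Split = {}
    test: Split = {}
    for label, items in samples.items():
        items = items[:]  # copy so the caller's list is not reordered
        rng.shuffle(items)
        n_test = round(len(items) * test_ratio)
        test[label] = items[:n_test]
        train[label] = items[n_test:]
    return train, test
```

One design choice worth considering: if the split is drawn from the augmented pool, augmented copies of the same original photograph can appear in both sets, so splitting the original images first and augmenting afterwards gives a stricter test.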

| Component | Specification |
|---|---|
| OS | Windows 10 |
| CPU | Intel Xeon Gold 6246R @ 3.40 GHz, 32 cores |
| Memory | 128 GB |
| Deep learning framework | PyTorch-GPU 1.12.1 |
| GPU | NVIDIA Quadro RTX 8000 |
| CUDA version | CUDA Toolkit 11.3 |
| PyTorch version | PyTorch 0.9.1 |

| Hyperparameter | Value |
|---|---|
| Epochs | 200 |
| Batch size | 64 |
| Learning rate | 0.01 |
| Optimizer | Adam |
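The hyperparameters above, combined with the training-set size from the dataset table (895 + 576 + 594 + 684 = 2749 images), fix how many optimizer steps training takes. The sketch below collects them in a hypothetical `TrainConfig` object and assumes the last partial batch of each epoch is kept rather than dropped.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    """Hypothetical config object; values copied from the hyperparameter table."""
    epochs: int = 200
    batch_size: int = 64
    learning_rate: float = 0.01
    optimizer: str = "Adam"
    train_size: int = 2749  # assumed: sum of the training-set column

    @property
    def steps_per_epoch(self) -> int:
        # Assumes the final partial batch is kept (no drop_last).
        return math.ceil(self.train_size / self.batch_size)

    @property
    def total_steps(self) -> int:
        return self.steps_per_epoch * self.epochs
```

With the default values, `TrainConfig().steps_per_epoch` is 43 and `total_steps` is 8600. In PyTorch these settings would typically map to `torch.optim.Adam(model.parameters(), lr=cfg.learning_rate)` and a `DataLoader` with `batch_size=cfg.batch_size`.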

| Model | Accuracy (%) | Params (MB) | Time/Epoch (s) |
|---|---|---|---|
| ShuffleNetV2 | 91.34 | 1.4 | 45 |
| ShuffleNetV2+CBAM | 92.91 | 1.9 | 57 |
| ShuffleNetV2+ECA | 93.15 | 2.3 | 61 |
| ShuffleNetV2+SE | 93.77 | 1.8 | 47 |
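Conclusion (2) quotes absolute differences between the attention variants. The sketch below recomputes them from the table above; the `RESULTS` dictionary simply copies the table, and `differences` is a hypothetical helper. Because accuracy is already a percentage, differences between accuracies are expressed in percentage points.

```python
from typing import Tuple

# Copied from the attention-mechanism table:
# model -> (accuracy %, params MB, time per epoch s).
RESULTS = {
    "ShuffleNetV2": (91.34, 1.4, 45),
    "ShuffleNetV2+CBAM": (92.91, 1.9, 57),
    "ShuffleNetV2+ECA": (93.15, 2.3, 61),
    "ShuffleNetV2+SE": (93.77, 1.8, 47),
}


def differences(model: str, baseline: str) -> Tuple[float, float, int]:
    """Hypothetical helper: accuracy gain (percentage points), parameters saved
    (MB), and time per epoch saved (s) of `model` relative to `baseline`.
    Negative savings mean `model` is larger or slower than `baseline`."""
    acc_m, params_m, time_m = RESULTS[model]
    acc_b, params_b, time_b = RESULTS[baseline]
    # Round to the table's two-decimal precision to hide floating-point noise.
    return round(acc_m - acc_b, 2), round(params_b - params_m, 2), time_b - time_m
```

For instance, `differences("ShuffleNetV2+SE", "ShuffleNetV2+CBAM")` returns `(0.86, 0.1, 10)`, and the comparison against `"ShuffleNetV2+ECA"` returns `(0.62, 0.5, 14)`.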

| Model | Accuracy (%) | Precision (%) | Recall (%) | Macro-F1 (%) | Params (MB) | Time/Epoch (s) |
|---|---|---|---|---|---|---|
| ShuffleNetV2 | 91.34 | 91.54 | 92.21 | 90.88 | 1.4 | 45 |
| ShuffleNetV2+SE | 93.77 | 93.84 | 93.59 | 93.71 | 1.8 | 47 |
| ShuffleNetV2-Lite | 93.88 | 93.17 | 94.70 | 93.93 | 1.2 | 40 |
| ShuffleNetV2-Lite+SE | 96.19 | 96.43 | 96.07 | 96.25 | 1.6 | 41 |

| Model | Accuracy (%) | Precision (%) | Recall (%) | Macro-F1 (%) | Params (MB) | Time/Epoch (s) |
|---|---|---|---|---|---|---|
| MobileNetV2 | 85.72 | 85.33 | 85.93 | 85.63 | 3.5 | 66 |
| MobileNetV3 | 91.72 | 91.38 | 91.40 | 91.39 | 5.1 | 60 |
| DenseNet | 88.50 | 88.58 | 87.04 | 87.80 | 7.8 | 62 |
| EfficientNet | 89.29 | 89.46 | 89.98 | 89.84 | 5.3 | 71 |
| ShuffleNetV2-Lite+SE | 96.19 | 96.43 | 96.07 | 96.25 | 1.6 | 41 |
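The relative reductions quoted in conclusions (3) and (4) follow from the ablation table and the comparison table above as (baseline − model) / baseline × 100. The short sketch below recomputes them; `relative_reduction` and the `CLAIMS` dictionary are hypothetical names, and the values are copied from the tables.

```python
# Hypothetical helper; a sketch for checking the reported percentages.
def relative_reduction(baseline: float, model: float) -> float:
    """Percentage by which `model` is smaller than `baseline`."""
    return (baseline - model) / baseline * 100


# (baseline value, ShuffleNetV2-Lite+SE value, percentage reported in the conclusions)
CLAIMS = {
    "params vs ShuffleNetV2+SE (MB)": (1.8, 1.6, 11.11),
    "time/epoch vs ShuffleNetV2+SE (s)": (47, 41, 12.77),
    "params vs MobileNetV3 (MB)": (5.1, 1.6, 68.63),
    "time/epoch vs MobileNetV3 (s)": (60, 41, 31.67),
}

if __name__ == "__main__":
    for name, (base, ours, reported) in CLAIMS.items():
        print(f"{name}: {relative_reduction(base, ours):.2f}% (reported {reported}%)")
```

All four recomputed values, rounded to two decimals, agree with the reported ones.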

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Xu, X.; Zhang, Y.; Cao, H.; Yang, D.; Zhou, L.; Yu, H. Recognition of Edible Fungi Fruit Body Diseases Based on Improved ShuffleNetV2. Agronomy 2023, 13, 1530. https://doi.org/10.3390/agronomy13061530

Chicago/Turabian Style: Xu, Xingmei, Yuqi Zhang, Hongcheng Cao, Dawei Yang, Lei Zhou, and Helong Yu. 2023. "Recognition of Edible Fungi Fruit Body Diseases Based on Improved ShuffleNetV2" Agronomy 13, no. 6: 1530. https://doi.org/10.3390/agronomy13061530
