Peanut Defect Identification Based on Multispectral Image and Deep Learning

Abstract

1. Introduction

2. Materials and Methods

2.1. Peanut Defects Dataset Preparation

2.1.1. Multispectral Characteristic Wavelength

2.1.2. Acquisition System

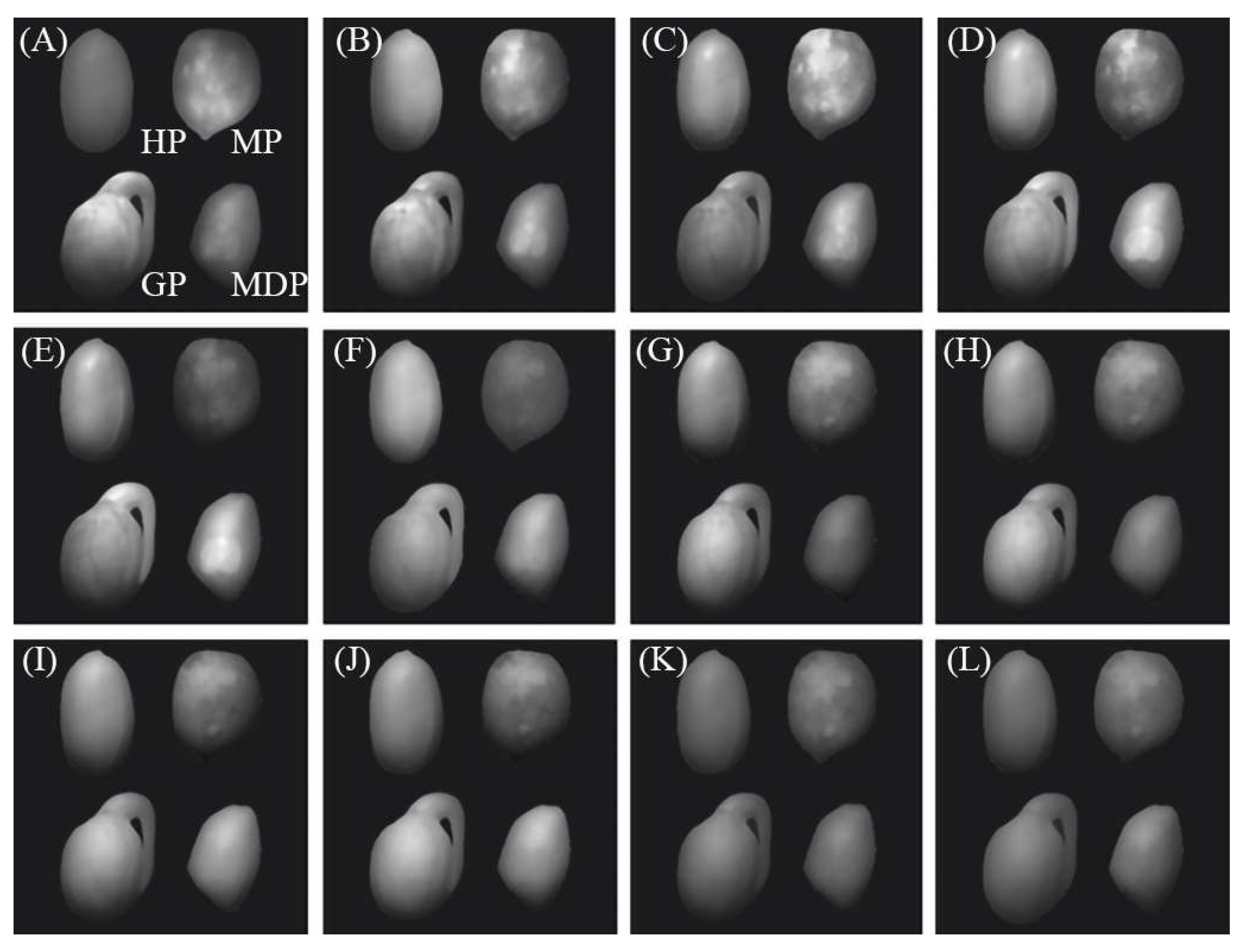

2.1.3. Dataset Acquisition and Labeling

2.2. Target Identification Model

2.2.1. Network Structure

2.2.2. The Texture Extraction Mechanism Based on Pooling Operation

2.2.3. Texture Attention Module

- Texture-based channel attention module

- Spatial attention module based on texture

2.2.4. Texture Enhancement Module

2.2.5. Model Evaluation

3. Results

3.1. Training Platform and Parameter Settings

3.2. Model Training and Testing

3.3. Ablation Experiments

4. Conclusions

- (1) To make peanut defects easier to identify in images, we experimentally determined the characteristic wavelengths of several defect types, including mold, mechanical damage, and embryo, and used light at these wavelengths as the illumination source. We also designed a uniform light source system based on a symmetrical integrating-sphere structure to reduce illumination artifacts such as light spots and shadows.

- (2) At the algorithm level, we introduced an object recognition algorithm to automate the classification and localization of peanut defects, providing the guidance information required by downstream sorting equipment. Because conventional convolutional networks are weak at distinguishing healthy peanuts from moldy or mechanically damaged ones, we designed a texture attention mechanism and a texture enhancement module. The experimental results show that the proposed scheme achieves a maximum mAP of 99.97%. The code and dataset are available at https://github.com/HyperSystemAndImageProc/Multi-target-Identification-of-Peanut-Defects (accessed on 29 July 2022).

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Network | Class | IoU (%) | P (%) | R (%) | AP (%) |
|---|---|---|---|---|---|
| Original | HP | 84.03 ± 0.05 | 92.25 ± 0.20 | 90.20 ± 0.32 | 99.73 ± 0.11 |
| Original | MP | 84.06 ± 0.07 | 92.25 ± 0.10 | 90.23 ± 0.24 | 99.84 ± 0.16 |
| Original | MDP | 82.05 ± 0.14 | 90.06 ± 0.40 | 89.05 ± 0.14 | 99.78 ± 0.09 |
| Original | GP | 74.49 ± 0.21 | 82.58 ± 0.16 | 80.58 ± 0.17 | 99.80 ± 0.12 |
| Ours | HP | 84.82 ± 0.41 | 91.06 ± 0.35 | 92.27 ± 0.15 | 99.95 ± 0.14 |
| Ours | MP | 83.49 ± 0.11 | 90.75 ± 0.19 | 91.05 ± 0.18 | 99.95 ± 0.04 |
| Ours | MDP | 83.60 ± 0.09 | 91.40 ± 0.04 | 90.52 ± 0.08 | 99.97 ± 0.07 |
| Ours | GP | 80.23 ± 0.13 | 87.73 ± 0.09 | 88.03 ± 0.08 | 99.99 ± 0.09 |
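
The IoU, P, and R columns above follow the usual object-detection definitions, which can be sketched as below. This is a minimal illustration, not the authors' evaluation code: the `(x_min, y_min, x_max, y_max)` box format and the function names `box_iou` and `precision_recall` are assumptions made for the example, and the rule used to decide which detections count as true positives is not specified in this section.

```python
from typing import Sequence, Tuple

# Assumed box format: (x_min, y_min, x_max, y_max) in pixels.
Box = Sequence[float]


def box_iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    # Width and height of the overlap region (zero if the boxes are disjoint).
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall(tp: int, fp: int, fn: int) -> Tuple[float, float]:
    """Precision = TP / (TP + FP); recall = TP / (TP + FN)."""
    precision = tp / (tp + fp) if (tp + fp) > 0 else 0.0
    recall = tp / (tp + fn) if (tp + fn) > 0 else 0.0
    return precision, recall
```

For example, two 2 × 2 boxes offset by one pixel in each direction overlap in a 1 × 1 region and cover 7 square pixels in total, so `box_iou((0, 0, 2, 2), (1, 1, 3, 3))` evaluates to 1/7.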

| Backbone Network | mIoU (%) | mP (%) | mR (%) | mAP (%) |
|---|---|---|---|---|
| VGG16 | 78.88 ± 0.14 | 86.82 ± 0.35 | 85.03 ± 0.17 | 99.50 ± 0.14 |
| ResNet50 | 80.23 ± 0.51 | 89.56 ± 0.71 | 85.56 ± 0.21 | 99.81 ± 0.20 |
| ResNet101 | 73.61 ± 0.28 | 81.81 ± 0.24 | 79.54 ± 0.14 | 99.44 ± 0.09 |
| VGG16 + TAM | 80.31 ± 0.67 | 88.64 ± 0.37 | 86.47 ± 0.82 | 99.84 ± 0.17 |
| ResNet50 + TAM | 80.89 ± 0.31 | 89.15 ± 0.35 | 86.97 ± 0.19 | 99.93 ± 0.15 |
| ResNet101 + TAM | 76.84 ± 0.18 | 86.81 ± 0.17 | 81.95 ± 0.23 | 99.53 ± 0.11 |
| VGG16 + TEM | 81.89 ± 0.34 | 89.35 ± 0.15 | 88.77 ± 0.27 | 99.96 ± 0.09 |
| ResNet50 + TEM | 80.07 ± 0.41 | 88.08 ± 0.29 | 86.37 ± 0.29 | 99.85 ± 0.12 |
| ResNet101 + TEM | 81.39 ± 0.37 | 88.89 ± 0.26 | 88.01 ± 0.41 | 99.89 ± 0.23 |
| VGG16 + TAM + TEM | 82.34 ± 0.49 | 89.45 ± 0.20 | 89.67 ± 0.53 | 99.97 ± 0.12 |
| ResNet50 + TAM + TEM | 82.10 ± 0.28 | 89.79 ± 0.43 | 88.56 ± 0.47 | 99.95 ± 0.23 |
| ResNet101 + TAM + TEM | 80.66 ± 0.27 | 90.00 ± 0.47 | 86.11 ± 0.41 | 99.67 ± 0.16 |
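
The mAP column is the mean of the per-class AP values. A minimal sketch of that computation is given below. It assumes the all-point interpolated form of AP (area under the precision-recall envelope); this section does not state which AP protocol was used, so that choice, along with the function names `average_precision` and `mean_average_precision` and the `(confidence, is_true_positive)` input format, is hypothetical.

```python
from typing import Dict, List, Tuple


def average_precision(detections: List[Tuple[float, bool]], num_gt: int) -> float:
    """All-point interpolated AP for one class.

    detections: (confidence, is_true_positive) for every detection of the class.
    num_gt: number of ground-truth objects of the class.
    """
    if num_gt == 0 or not detections:
        return 0.0
    # Rank detections from most to least confident.
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    precisions: List[float] = []
    recalls: List[float] = []
    tp = 0
    for rank, (_, is_tp) in enumerate(ranked, start=1):
        tp += int(is_tp)
        precisions.append(tp / rank)
        recalls.append(tp / num_gt)
    # Precision envelope: each value becomes the maximum precision at any
    # equal or higher recall.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sum the area under the stepwise precision-recall curve.
    ap = 0.0
    prev_recall = 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_average_precision(per_class_ap: Dict[str, float]) -> float:
    """Unweighted mean of the per-class AP values."""
    if not per_class_ap:
        return 0.0
    return sum(per_class_ap.values()) / len(per_class_ap)
```

As a consistency check on the reported numbers, averaging the four per-class AP values listed for "Ours" (99.95, 99.95, 99.97, 99.99) gives 99.965, in line with the maximum mAP of 99.97% quoted in the conclusions.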

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, Y.; Ding, Z.; Song, J.; Ge, Z.; Deng, Z.; Liu, Z.; Wang, J.; Bian, L.; Yang, C. Peanut Defect Identification Based on Multispectral Image and Deep Learning. Agronomy 2023, 13, 1158. https://doi.org/10.3390/agronomy13041158
