A Novel Operational Rice Mapping Method Based on Multi-Source Satellite Images and Object-Oriented Classification

Abstract

1. Introduction

- (1) To propose a method with operational potential for rice mapping, designed around the characteristics of remote sensing data availability in cloudy and rainy areas;

- (2) To obtain high-quality rice parcel boundaries to serve as analysis units, and to establish and evaluate a method for optimizing the parameters of the automatic multi-scale segmentation procedure;

- (3) To evaluate the performance of the proposed method and compare it with conventional methods based solely on multi-temporal optical images or multi-temporal SAR images. In addition, a “random pick” strategy is used to generate a SAR image series covering the key growth stages of rice, in order to test the adaptability of the novel method in an operational scenario.

2. Materials and Methods

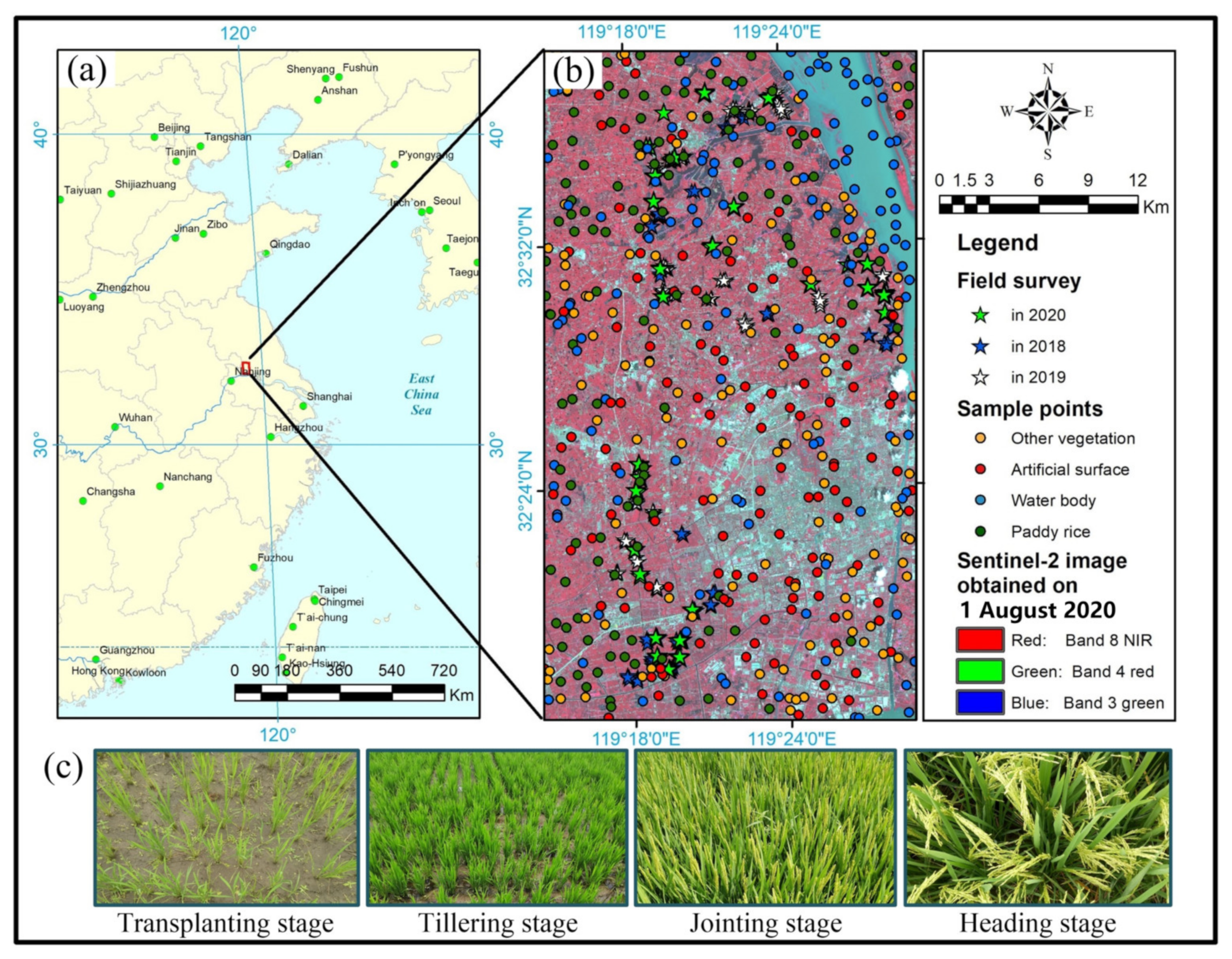

2.1. Study Area

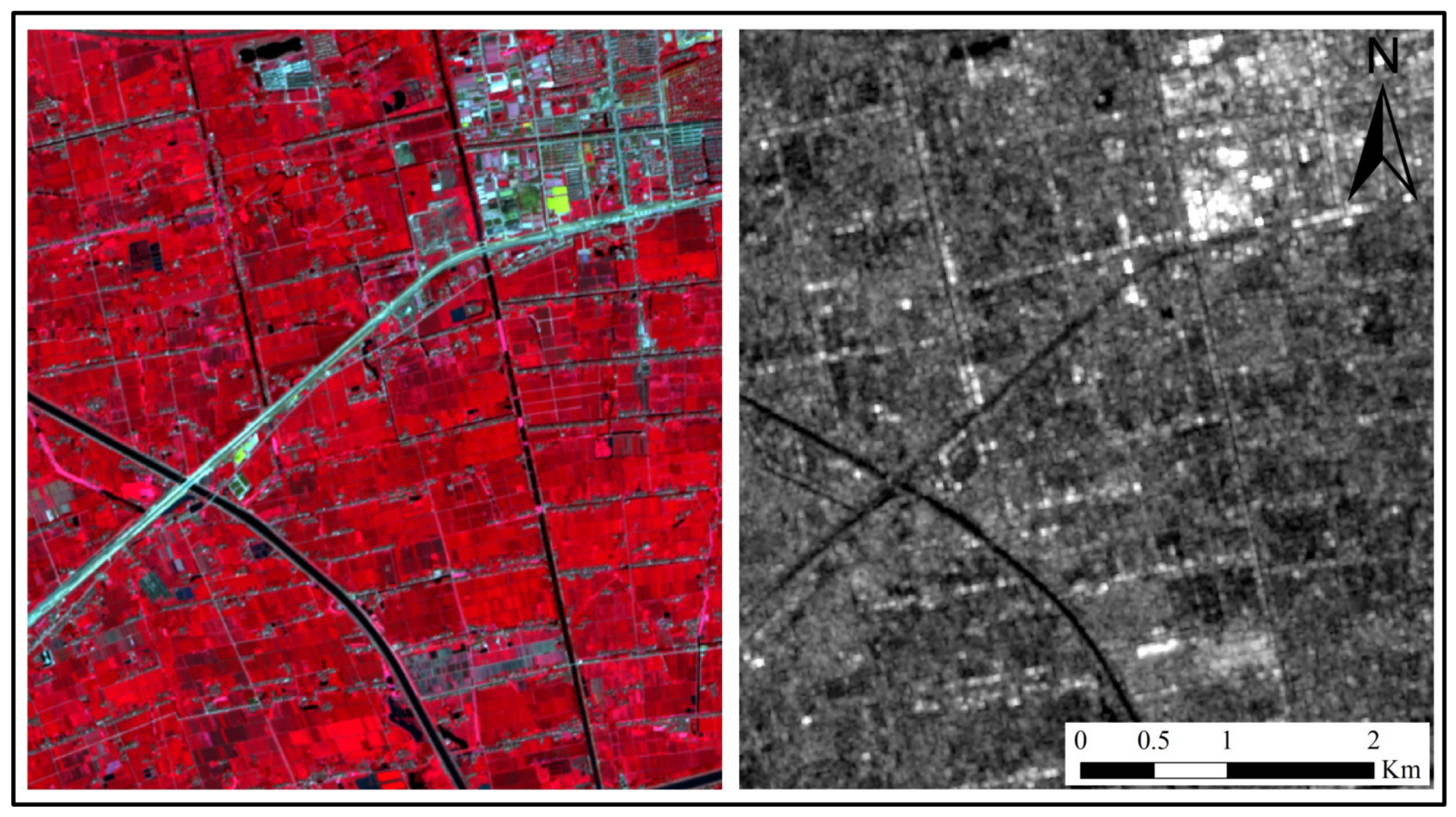

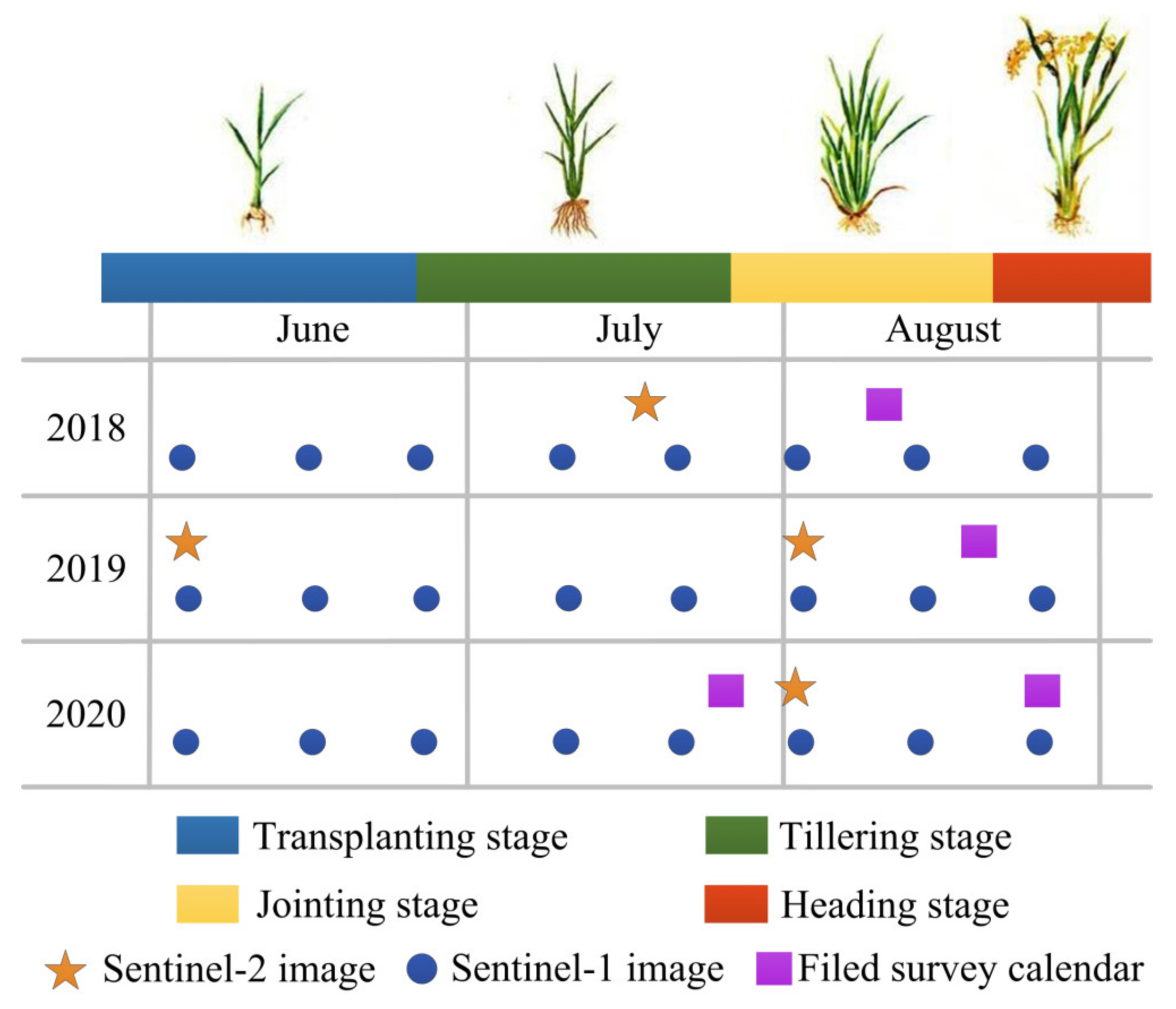

2.2. Remote Sensing and Field Survey Data

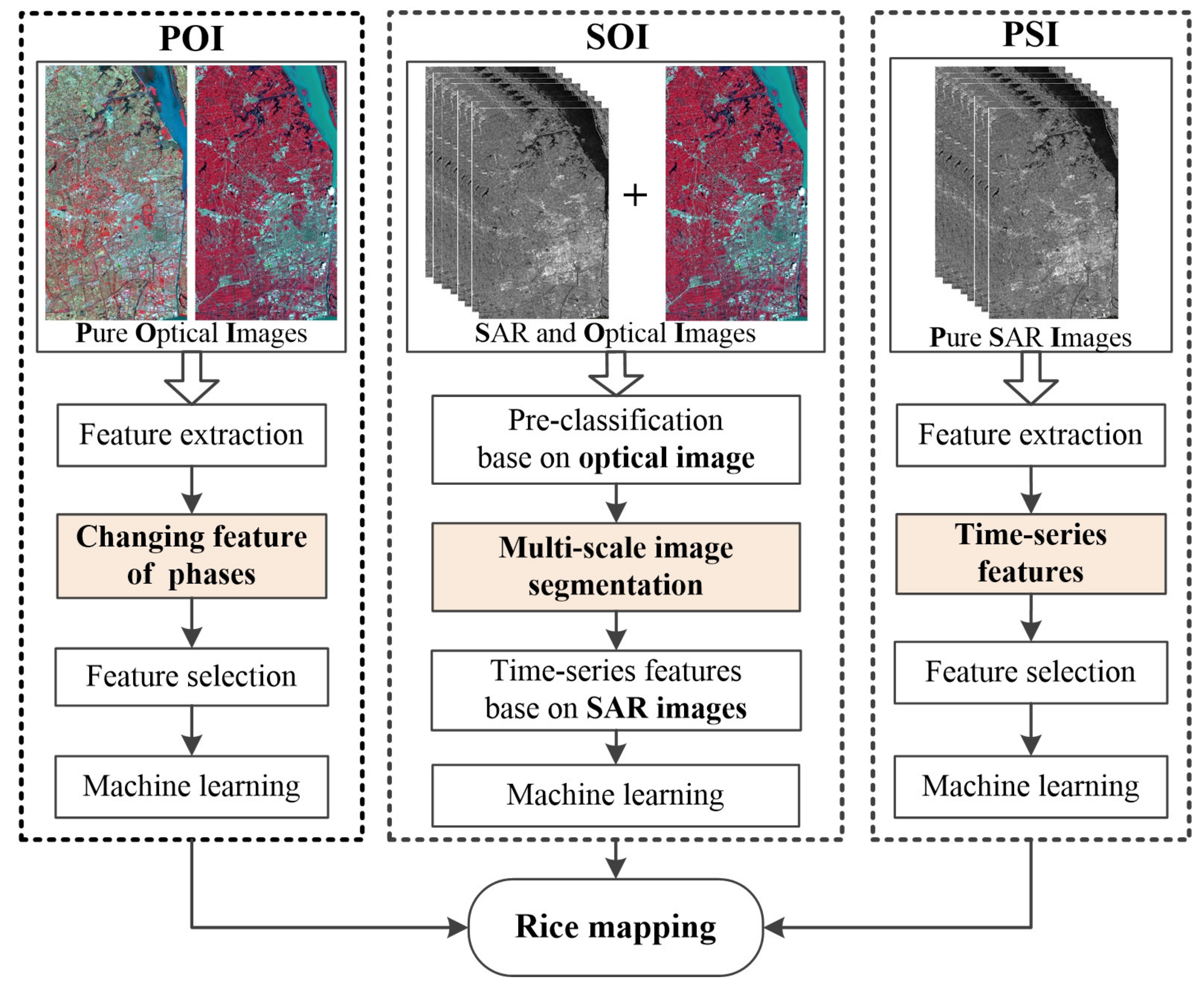

2.3. Methods for Rice Mapping

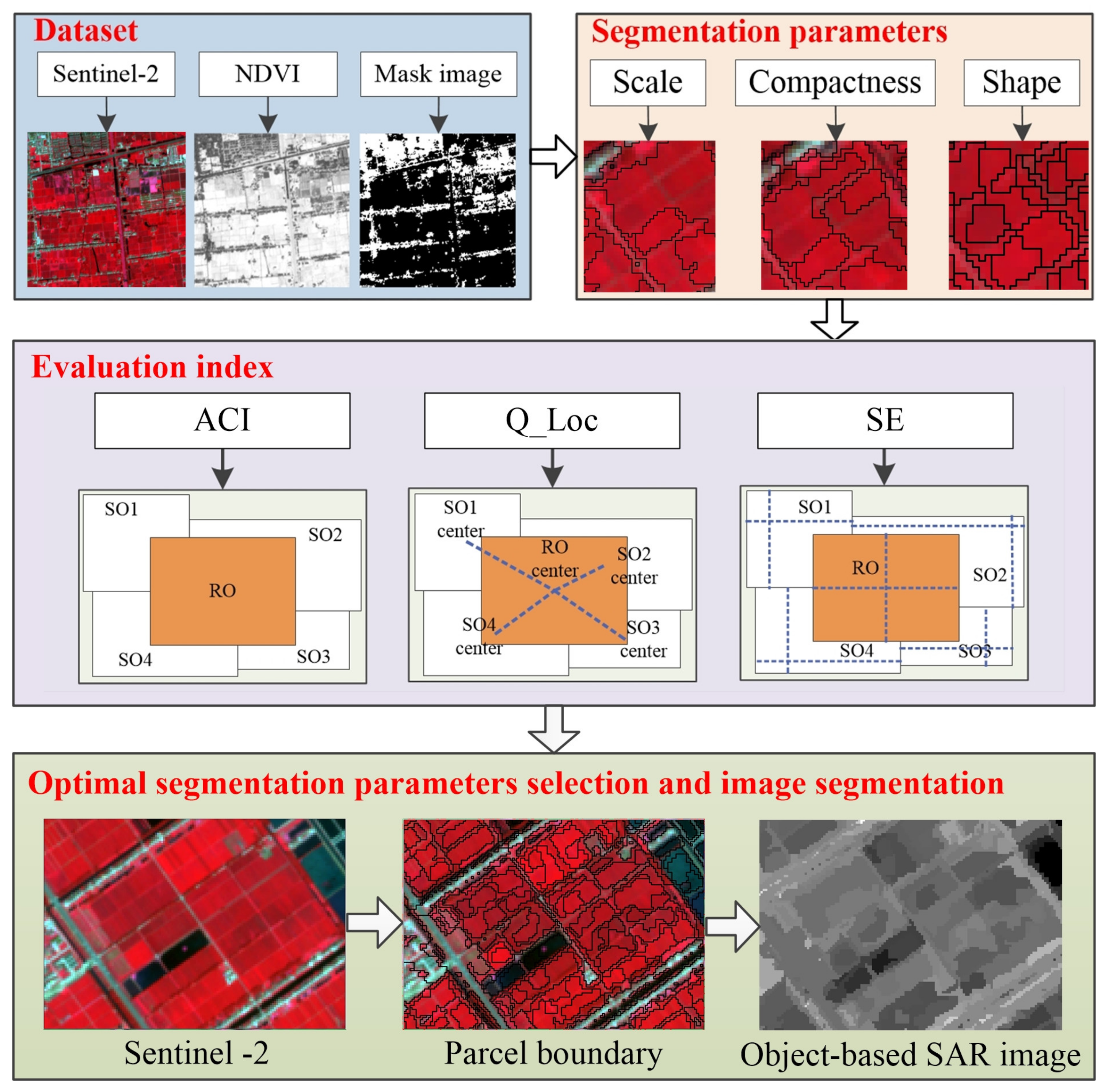

2.3.1. Rice Mapping Method by Fusing SAR and Optical Images (SOI)

- (1) Preliminary classification based on a single-phase optical image

- (2) Optical image segmentation and object-based feature extraction from SAR images

- (3) Rice mapping and evaluation of the method’s adaptability to the operational scenario
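Step (2) attaches SAR information to objects delineated on the optical image. A minimal sketch of that object-level aggregation is shown below. It assumes that each object's SAR feature is the mean of the pixel values falling inside it; the function name `object_means`, the nested-list raster format and the toy values are illustrative choices, not the authors' implementation.

```python
from collections import defaultdict


def object_means(labels, sar_band):
    """Average a SAR raster within each segment of a label image.

    labels: 2-D list of integer object IDs, one per pixel (hypothetical input
            format, e.g. the output of segmenting the optical image).
    sar_band: 2-D list of SAR values (e.g. VH backscatter in dB) on the same grid.
    Returns {object_id: mean value}.
    """
    if len(labels) != len(sar_band) or any(
        len(lr) != len(sr) for lr, sr in zip(labels, sar_band)
    ):
        raise ValueError("label image and SAR raster must share the same grid")

    sums = defaultdict(float)
    counts = defaultdict(int)
    for label_row, value_row in zip(labels, sar_band):
        for obj_id, value in zip(label_row, value_row):
            sums[obj_id] += value
            counts[obj_id] += 1
    return {obj_id: sums[obj_id] / counts[obj_id] for obj_id in sums}


# Toy example: two parcels (IDs 1 and 2) on a 2 x 4 grid.
labels = [[1, 1, 2, 2],
          [1, 1, 2, 2]]
vh_db = [[-20.0, -22.0, -14.0, -16.0],
         [-21.0, -21.0, -15.0, -15.0]]
print(object_means(labels, vh_db))  # {1: -21.0, 2: -15.0}
```

Other per-object statistics (for example, the standard deviation, or the mean of a texture band) could be accumulated in the same pass; the mean is simply the most basic choice.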

2.3.2. Rice Mapping Methods Based on Pure Optical Images (POI) and Pure SAR Images (PSI) for Comparison

2.4. Feature Selection for Rice Mapping

- (1) Candidate optical and SAR features

- (2) Selection of features for preliminary classification

- (3) Selection of temporal change optical features

- (4) Selection of SAR features

2.5. Multi-Scale Image Segmentation

2.6. Classification Method and Evaluation

3. Results

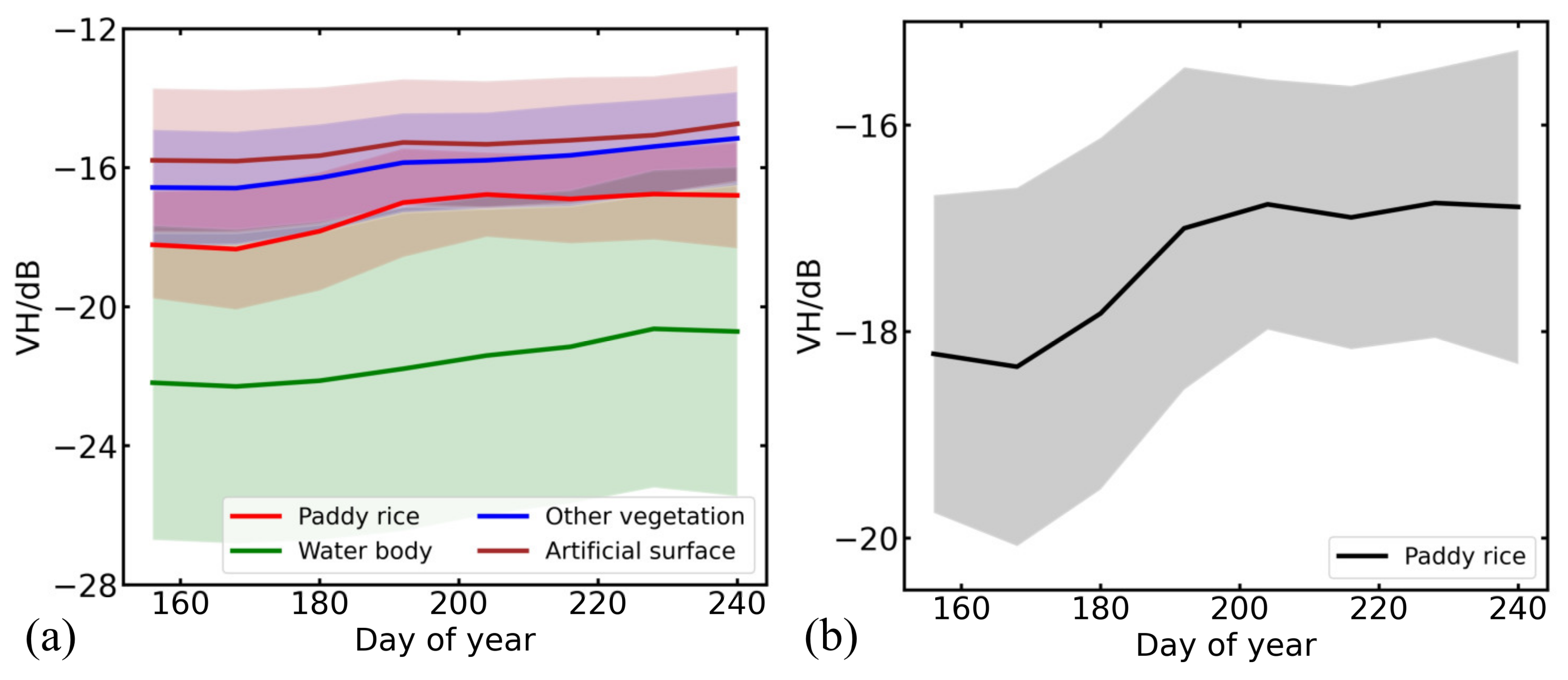

3.1. Optimal Features for Rice Mapping

3.2. Rice Parcel Boundaries Yielded by Multi-Scale Segmentation

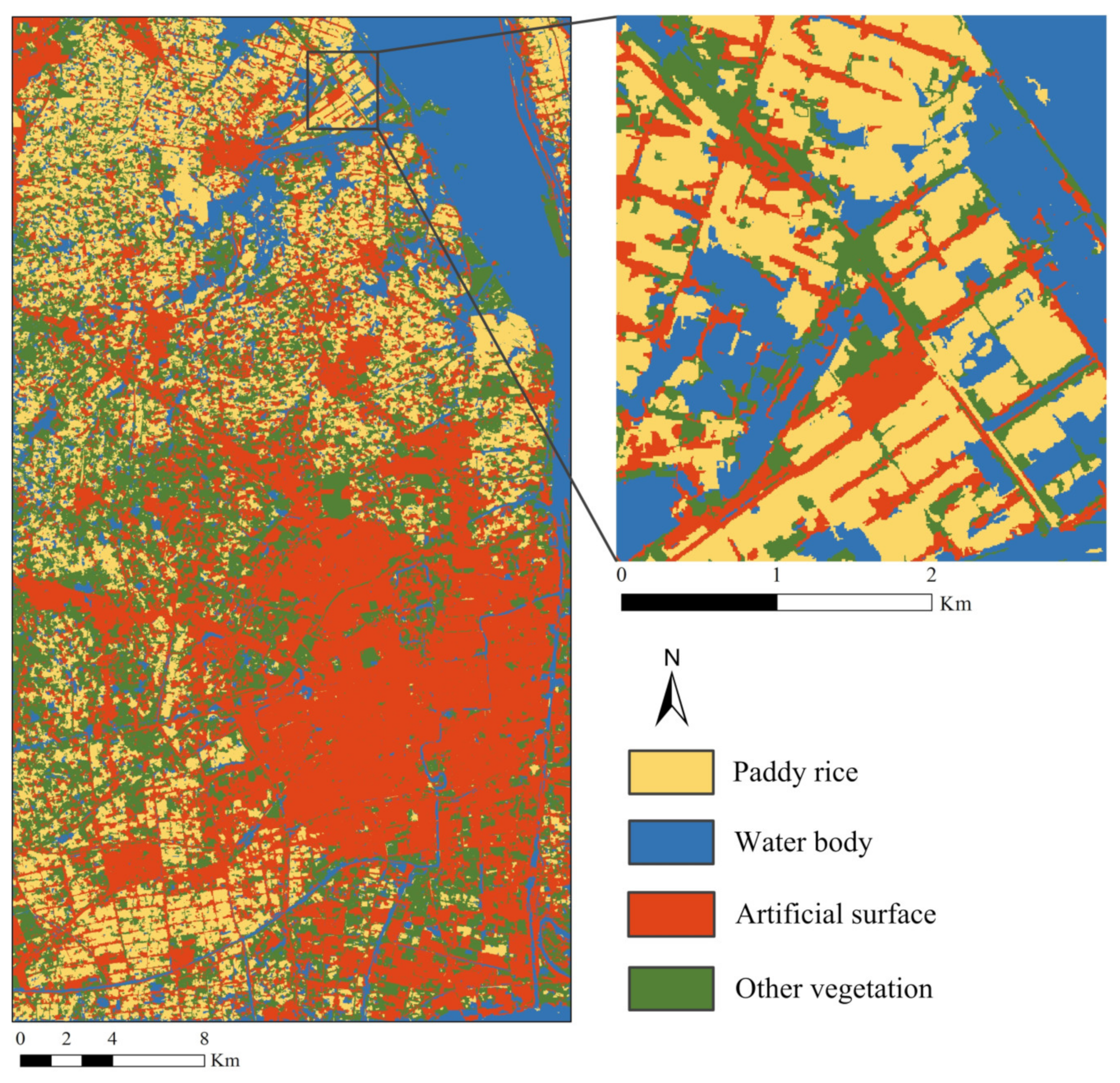

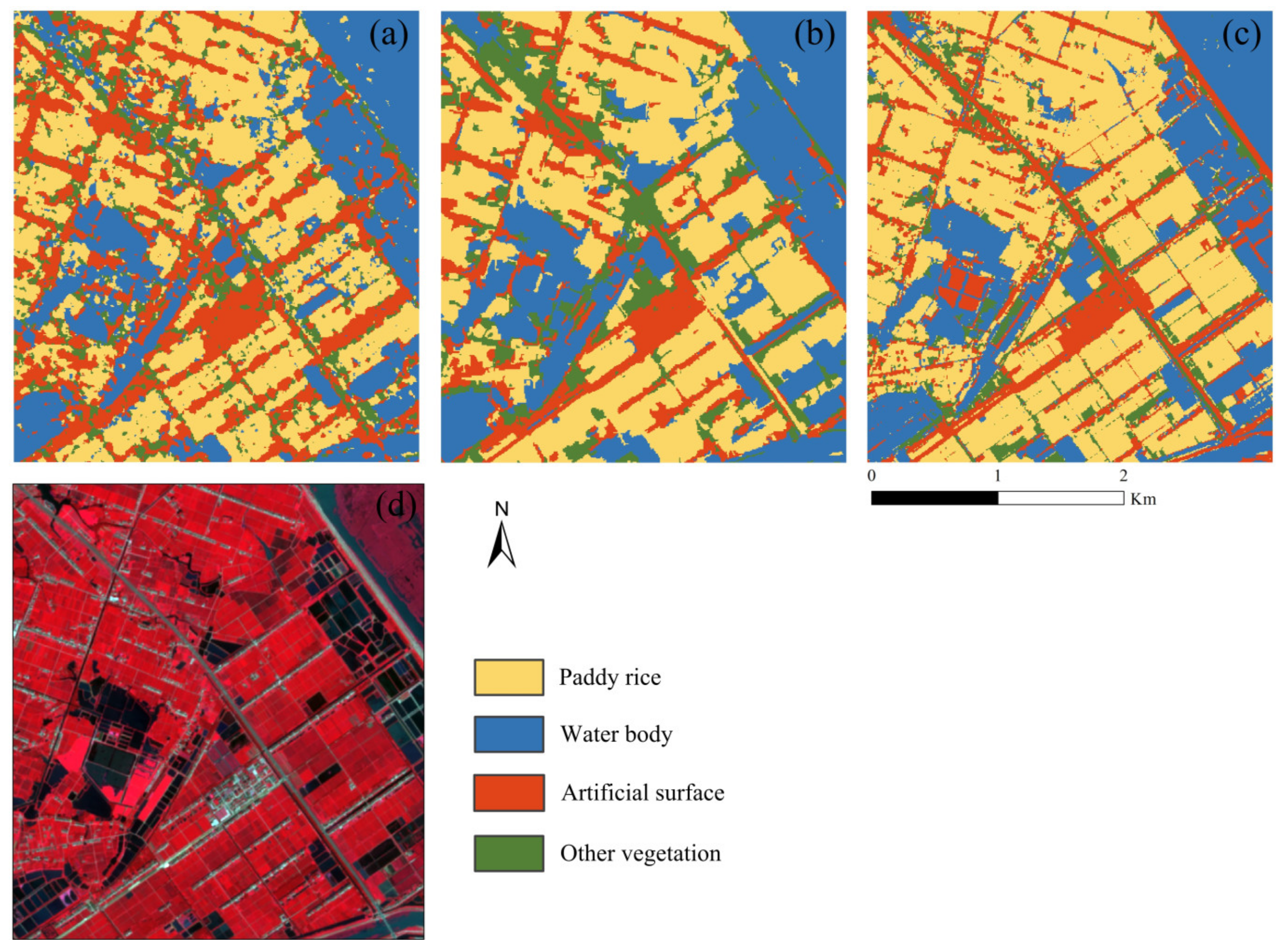

3.3. Evaluation of Rice Mapping Results

4. Discussion

4.1. Role of Optical and SAR Features for Rice Mapping

4.2. Optimization of Multi-Scale Segmentation

4.3. The Effectiveness of the SOI

5. Conclusions

- (1) The proposed SOI method can achieve accurate extraction of rice areas. The method makes full use of the parcel boundary information derived from optical images and of the growth and phenological traits of rice captured by SAR images. The mapping accuracy of SOI is significantly higher than that of PSI and POI.

- (2) An adaptive rice parcel boundary extraction method based on multi-scale segmentation was proposed. A comprehensive segmentation quality index and an orthogonal experiment were used to obtain the optimal segmentation parameters and generate the parcel boundaries for rice mapping.

- (3) Further, the adaptability of SOI in the operational scenario was examined using a “random pick” strategy. With both polarization and texture features, the SOI method showed strong adaptability to uncertainty in the acquisition times of remote sensing images.
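Conclusion (2) pairs a segmentation quality index with an orthogonal experiment. The sketch below illustrates the generic technique under explicit assumptions: three segmentation parameters (named scale, shape and compactness, as in common multiresolution segmentation tools), three hypothetical candidate levels each, the first three columns of the standard L9(3^4) orthogonal array, and a placeholder `toy_quality` function standing in for the paper's comprehensive index, which is not reproduced in this document. Range analysis then picks, for each parameter, the level with the best mean score, using 9 segmentation runs instead of the 27 of a full factorial search.

```python
# Standard L9(3^4) orthogonal array; only its first three columns are used here.
# Levels are coded 0, 1, 2.
L9 = [
    (0, 0, 0), (0, 1, 1), (0, 2, 2),
    (1, 0, 1), (1, 1, 2), (1, 2, 0),
    (2, 0, 2), (2, 1, 0), (2, 2, 1),
]

# Hypothetical candidate values for three segmentation parameters.
LEVELS = {
    "scale": [30, 50, 70],
    "shape": [0.1, 0.3, 0.5],
    "compactness": [0.3, 0.5, 0.7],
}


def range_analysis(quality, levels=LEVELS, design=L9):
    """Choose the best level of each parameter from an orthogonal-array experiment.

    quality: callable(params) -> score, higher is better. It stands in for a
    segmentation quality index computed against reference parcel boundaries.
    Returns ({parameter: best value}, {parameter: range of level means}).
    """
    names = list(levels)
    scores = []
    for run in design:
        params = {name: levels[name][lvl] for name, lvl in zip(names, run)}
        scores.append(quality(params))  # one segmentation + evaluation per run

    best, ranges = {}, {}
    for col, name in enumerate(names):
        # Each level occurs in exactly 3 of the 9 runs, balanced over the other factors.
        level_means = [
            sum(s for run, s in zip(design, scores) if run[col] == lvl) / 3
            for lvl in range(3)
        ]
        best[name] = levels[name][level_means.index(max(level_means))]
        ranges[name] = max(level_means) - min(level_means)  # larger = more influential
    return best, ranges


def toy_quality(p):
    """Illustrative additive score peaking at scale 50, shape 0.3, compactness 0.7."""
    return (-((p["scale"] - 50) / 20) ** 2
            - ((p["shape"] - 0.3) / 0.2) ** 2
            - ((p["compactness"] - 0.7) / 0.2) ** 2)


best, ranges = range_analysis(toy_quality)
print(best)  # {'scale': 50, 'shape': 0.3, 'compactness': 0.7}
print(ranges)
```

Because every pair of columns in L9 contains each level combination exactly once, the level means of one parameter are not biased by the others when effects are additive; strong interactions between parameters would weaken that guarantee.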

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Godfray, H.C.J.; Beddington, J.R.; Crute, I.R.; Haddad, L.; Lawrence, D.; Muir, J.F.; Pretty, J.; Robinson, S.; Thomas, S.M.; Toulmin, C. Food security: The challenge of feeding 9 billion people. Science 2010, 327, 812–818.

- Lobell, D.B.; Thau, D.; Seifert, C.; Engle, E.; Little, B. A scalable satellite-based crop yield mapper. Remote Sens. Environ. 2015, 164, 324–333.

- Peng, D.L.; Huete, A.R.; Huang, J.F.; Wang, F.M.; Sun, H.S. Detection and estimation of mixed paddy rice cropping patterns with MODIS data. Int. J. Appl. Earth Obs. Geoinf. 2011, 13, 13–23.

- Talema, T.; Hailu, B.T. Mapping rice crop using sentinels (1 SAR and 2 MSI) images in tropical area: A case study in Fogera wereda, Ethiopia. Remote Sens. Appl. Soc. Environ. 2020, 18, 100290.

- Ali, A.M.; Savin, I.; Poddubsky, A.; Abouelghar, M.; Saleh, N.; Abutaleb, K.; El-Shirbeny, M.; Dokuin, P. Integrated Method for Rice Cultivation Monitoring Using Sentinel-2 Data and Leaf Area Index. Egypt. J. Remote Sens. Space Sci. 2020, 24, 431–441.

- Dong, J.W.; Xiao, X.M.; Menarguez, M.A.; Zhang, G.L.; Qin, Y.W.; Thau, D.; Biradar, C.; Moore, B., III. Mapping paddy rice planting area in northeastern Asia with Landsat 8 images, phenology-based algorithm and Google Earth Engine. Remote Sens. Environ. 2016, 185, 142–154.

- Phung, H.P.; Nguyen, L.D.; Thong, N.H.; Thuy, L.T.; Apan, A.A. Monitoring rice growth status in the Mekong Delta, Vietnam using multitemporal Sentinel-1 data. J. Appl. Remote Sens. 2020, 14, 1.

- Zhan, P.; Zhu, W.Q.; Li, N. An automated rice mapping method based on flooding signals in synthetic aperture radar time series. Remote Sens. Environ. 2021, 252, 112112.

- Mansaray, L.R.; Wang, F.M.; Huang, J.F.; Yang, L.B.; Kanu, A.S. Accuracies of support vector machine and random forest in rice mapping with Sentinel-1A, Landsat-8 and Sentinel-2A datasets. Geocarto Int. 2020, 35, 1088–1108.

- Xiao, W.; Xu, S.C.; He, T.T. Mapping Paddy Rice with Sentinel-1/2 and Phenology-, Object-Based Algorithm-A Implementation in Hangjiahu Plain in China Using GEE Platform. Remote Sens. 2021, 13, 990.

- Yang, H.J.; Pan, B.; Wu, W.F.; Tai, J.H. Field-based rice classification in Wuhua county through integration of multi-temporal Sentinel-1A and Landsat-8 OLI data. Int. J. Appl. Earth Obs. Geoinf. 2018, 69, 226–236.

- He, Y.L.; Dong, J.W.; Liao, X.Y.; Sun, L.; Wang, Z.P.; You, N.S.; Li, Z.C.; Fu, P. Examining rice distribution and cropping intensity in a mixed single-and double-cropping region in South China using all available Sentinel 1/2 images. Int. J. Appl. Earth Obs. Geoinf. 2021, 101, 102351.

- Yang, L.B.; Wang, L.M.; Abubakar, G.A.; Huang, J.F. High-Resolution Rice Mapping Based on SNIC Segmentation and Multi-Source Remote Sensing Images. Remote Sens. 2021, 13, 1148.

- Yang, L.B.; Mansaray, L.R.; Huang, J.F.; Wang, L.M. Optimal Segmentation Scale Parameter, Feature Subset and Classification Algorithm for Geographic Object-Based Crop Recognition Using Multisource Satellite Imagery. Remote Sens. 2019, 11, 514.

- Thenkabail, P.S.; Lyon, J.G.; Huete, A. Hyperspectral Remote Sensing of Vegetation; CRC Press: Boca Raton, FL, USA, 2011; pp. 336–348.

- Caballero, G.R.; Platzeck, G.; Pezzola, A.; Casella, A.; Winschel, C.; Silva, S.S.; Luduena, E.; Pasqualotto, N.; Delegido, J. Assessment of Multi-Date Sentinel-1 Polarizations and GLCM Texture Features Capacity for Onion and Sunflower Classification in an Irrigated Valley: An Object Level Approach. Agronomy 2020, 10, 845.

- Huete, A.; Didan, K.; Miura, T.; Rodriguez, E.P.; Gao, X.; Ferreira, L.G. Overview of the radiometric and biophysical performance of the MODIS vegetation indices. Remote Sens. Environ. 2002, 83, 195–213.

- Jiang, Z.Y.; Huete, A.R.; Didan, K.; Miura, T. Development of a two-band enhanced vegetation index without a blue band. Remote Sens. Environ. 2008, 112, 3833–3845.

- Jordan, C.F. Derivation of Leaf-Area Index from Quality of Light on the Forest Floor. Ecology 1969, 50, 663–666.

- Rouse, J.W.; Haas, R.H.; Schell, J.A.; Deering, D.W. Monitoring vegetation systems in the great plains with ERTS. NASA Spec. Publ. 1974, 351, 309.

- Huete, A.R. A soil-adjusted vegetation index (SAVI). Remote Sens. Environ. 1988, 25, 295–309.

- Gitelson, A.A. Wide Dynamic Range Vegetation Index for Remote Quantification of Biophysical Characteristics of Vegetation. J. Plant Physiol. 2004, 161, 165–173.

- Xu, H.Q. A Study on Information Extraction of Water Body with the Modified Normalized Difference Water Index (MNDWI). J. Remote Sens. 2005, 9, 7, (In Chinese with English Abstract).

- Gitelson, A.A.; Kaufman, Y.J.; Stark, R.; Rundquist, D. Novel algorithms for remote estimation of vegetation fraction. Remote Sens. Environ. 2002, 80, 76–87.

- Mesner, N.; Ostir, K. Investigating the impact of spatial and spectral resolution of satellite images on segmentation quality. J. Appl. Remote Sens. 2014, 8, 083696.

- Bo, S.K.; Han, X.C.; Ding, L. Automatic Selection of Segmentation Parameters for Object Oriented Image Classification. Geomat. Inf. Sci. Wuhan Univ. 2009, 34, 514–517, (In Chinese with English Abstract).

- Zhu, C.J.; Yang, S.Z.; Cui, S.C. Accuracy evaluating method for object-based segmentation of high resolution remote sensing image. High Power Laser Part. Beams 2015, 27, 27061007, (In Chinese with English Abstract).

- Sui, D.S.; Cui, Z.S. Application of orthogonal experimental design and Tikhonov regularization method for the identification of parameters in the casting solidification process. Acta Metall. Sin. 2009, 22, 9.

- Zhan, Q.M.; Molenaar, M.; Tempfli, K.; Shi, W.Z. Quality assessment for geo-spatial objects derived from remotely sensed data. Int. J. Remote Sens. 2005, 26, 2953–2974.

- Clinton, N.; Holt, A.; Scarborough, J.; Yan, L.; Gong, P. Accuracy assessment measures for object-based image segmentation goodness. Photogramm. Eng. Remote Sens. 2008, 76, 289–299.

- Zhang, J.C.; He, Y.H.; Yuan, L.; Liu, P.; Zhou, X.F.; Huang, Y.B. Machine Learning-Based Spectral Library for Crop Classification and Status Monitoring. Agronomy 2019, 9, 496.

- Rasanen, A.; Rusanen, A.; Kuitunen, M.; Lensu, A. What makes segmentation good? A case study in boreal forest habitat mapping. Int. J. Remote Sens. 2013, 34, 8603–8627.

- Costa, H.; Foody, G.M.; Boyd, D.S. Supervised methods of image segmentation accuracy assessment in land cover mapping. Remote Sens. Environ. 2018, 205, 338–351.

- Mansaray, L.R.; Zhang, D.; Zhou, Z.; Huang, J. Evaluating the potential of temporal Sentinel-1A data for paddy rice discrimination at local scales. Remote Sens. Lett. 2017, 8, 967–976.

- Mandal, D.; Kumar, V.; Bhattacharya, A.; Rao, Y.S.; Siqueira, P.; Bera, S. Sen4Rice: A processing chain for differentiating early and late transplanted rice using time-series Sentinel-1 SAR data with Google Earth engine. IEEE Geosci. Remote Sens. Lett. 2018, 15, 1947–1951.

| Sentinel-1 Indicators | Sentinel-1 Information | Sentinel-2 Band | Wavelength (μm) | Resolution (m) |
|---|---|---|---|---|
| Mode | IW | Band1 | 0.443–0.453 | 60 |
| Polarization | VV, VH | Band2 | 0.458–0.523 | 10 |
| | | Band3 | 0.543–0.578 | 10 |
| Band | C | Band4 | 0.650–0.680 | 10 |
| Resolution | 10 m | Band5 | 0.698–0.713 | 20 |
| | | Band6 | 0.733–0.748 | 20 |
| Centre frequency | 5.4 GHz | Band7 | 0.773–0.793 | 20 |
| | | Band8 | 0.785–0.900 | 10 |
| Product type | Ground Range Detected (GRDH) | Band9 | 0.935–0.955 | 60 |
| | | Band10 | 1.360–1.390 | 60 |
| Pass direction | Ascending | Band11 | 1.565–1.655 | 20 |
| | | Band12 | 2.100–2.280 | 20 |

| Satellites | Category | Features | Reference |
|---|---|---|---|
| Sentinel-2 | Spectral bands | Band2-Blue | / |
| | | Band3-Green | |
| | | Band4-Red | |
| | | Band5-Vegetation Red Edge | |
| | | Band6-Vegetation Red Edge | |
| | | Band7-Vegetation Red Edge | |
| | | Band8-NIR | |
| | | Band9-Water vapor | |
| | | Band11-SWIR | |
| | | Band12-SWIR | |
| | VIs | EVI = 2.5 × (NIR − Red)/(NIR + 6 × Red − 7.5 × Blue + 1) | [17] |
| | | EVI2 = 2.5 × (NIR − Red)/(NIR + 2.4 × Red + 1) | [18] |
| | | SR = NIR/Red | [19] |
| | | NDVI = (NIR − Red)/(NIR + Red) | [20] |
| | | SAVI = (NIR − Red) × (1 + L)/(NIR + Red + L) | [21] |
| | | WDRVI = (0.1 × NIR − Red)/(0.1 × NIR + Red) | [22] |
| | | MNDWI = (Green − SWIR)/(Green + SWIR) | [23] |
| | | VARIred-edge = (Red-edge − Red)/(Red-edge + Red) | [24] |
| Sentinel-1 | Polarization | VV | / |
| | | VH | |
| | Textural features | Mean | [16] |
| | | Variance | |
| | | Homogeneity | |
| | | Contrast | |
| | | Dissimilarity | |
| | | Entropy | |
| | | Second Moment | |
| | | Correlation | |
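The index formulas in the table translate directly into code. The sketch below evaluates them for one pixel or object given surface reflectances. Two inputs are assumptions because the table does not pin them down: the SAVI soil factor is set to L = 0.5 (the value commonly used with Huete's index), and the caller decides which Sentinel-2 bands serve as `swir` (e.g. Band11) and `red_edge` (e.g. Band5). The reflectance values in the usage line are hypothetical.

```python
def vegetation_indices(blue, green, red, red_edge, nir, swir, savi_l=0.5):
    """Compute the optical indices listed in the feature table.

    All band arguments are surface reflectances (0-1). `savi_l` (SAVI's L)
    and the choice of red-edge/SWIR bands are assumptions, not values
    stated in the table.
    """
    return {
        "EVI": 2.5 * (nir - red) / (nir + 6 * red - 7.5 * blue + 1),
        "EVI2": 2.5 * (nir - red) / (nir + 2.4 * red + 1),
        "SR": nir / red,
        "NDVI": (nir - red) / (nir + red),
        "SAVI": (nir - red) * (1 + savi_l) / (nir + red + savi_l),
        "WDRVI": (0.1 * nir - red) / (0.1 * nir + red),
        "MNDWI": (green - swir) / (green + swir),
        "VARIred-edge": (red_edge - red) / (red_edge + red),
    }


# Hypothetical reflectances for a vegetated pixel.
vis = vegetation_indices(blue=0.05, green=0.08, red=0.10,
                         red_edge=0.20, nir=0.40, swir=0.20)
for name, value in vis.items():
    print(f"{name:>13}: {value:.3f}")
```

For these inputs NDVI is 0.6 and SR is 4; MNDWI is negative because the pixel reflects more in the SWIR than in the green band, as expected for vegetation rather than open water.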

| Category | Features | t-Test | Cross-Correlation |
|---|---|---|---|
| Spectral bands | Band2 | | |
| | Band3 | | |
| | Band4 | ▲ | ■ |
| | Band5 | | |
| | Band6 | ▲ | |
| | Band7 | ▲ | |
| | Band8 | ▲ | |
| | Band9 | ▲ | |
| | Band11 | ▲ | ■ |
| | Band12 | ▲ | |
| VIs | EVI | ▲ | ■ |
| | EVI2 | ▲ | |
| | SR | | |
| | NDVI | | |
| | SAVI | ▲ | ■ |
| | WDRVI | | |
| | MNDWI | ▲ | ■ |
| | VARIred-edge | ▲ | |
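The table screens candidate optical features with a t-test and then a cross-correlation check. Reading ▲ as "passes the t-test between rice and non-rice samples" and ■ as "retained after removing highly correlated features" (an interpretation, since no legend is given here), the two-stage screen can be sketched as follows. Welch's form of the t statistic, the thresholds `t_min` and `r_max`, the greedy ordering by |t| and the toy samples A to D are all assumptions for illustration, not the authors' settings.

```python
import math
from statistics import mean, variance


def welch_t(a, b):
    """Welch's t statistic for two independent samples (unequal variances)."""
    return (mean(a) - mean(b)) / math.sqrt(variance(a) / len(a) + variance(b) / len(b))


def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    syy = sum((yi - my) ** 2 for yi in y)
    return sxy / math.sqrt(sxx * syy)


def screen_features(rice, other, t_min=2.0, r_max=0.9):
    """Two-stage screen: keep separable features, then drop redundant ones.

    rice, other: {feature_name: sample values} for rice and non-rice samples.
    t_min, r_max: hypothetical thresholds on |t| and |r|.
    Returns (features passing the t-test, features kept after the correlation check).
    """
    # Stage 1 (t-test): keep features whose class means differ clearly.
    t_scores = {f: abs(welch_t(rice[f], other[f])) for f in rice}
    passed = [f for f in rice if t_scores[f] >= t_min]

    # Stage 2 (cross-correlation): visit features from most to least separable and
    # skip any that is highly correlated (over all samples) with one already kept.
    kept = []
    for f in sorted(passed, key=t_scores.get, reverse=True):
        values = rice[f] + other[f]
        if all(abs(pearson_r(values, rice[k] + other[k])) < r_max for k in kept):
            kept.append(f)
    return passed, kept


# Toy samples: B = 2A + 0.1 (redundant with A); C does not separate the classes.
rice = {"A": [0.80, 0.85, 0.90, 0.82], "B": [1.70, 1.80, 1.90, 1.74],
        "C": [0.50, 0.60, 0.40, 0.55], "D": [10, 4, 6, 8]}
other = {"A": [0.30, 0.35, 0.40, 0.32], "B": [0.70, 0.80, 0.90, 0.74],
         "C": [0.52, 0.58, 0.45, 0.50], "D": [1, 3, 0, 2]}
passed, kept = screen_features(rice, other)
print("passed t-test:", passed)
print("kept after correlation check:", kept)
```

In the toy run, C fails the t-test, and only one of the perfectly correlated pair A/B survives the second stage alongside D.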

| Features | Date (2018) | |||||||

|---|---|---|---|---|---|---|---|---|

| Phase1 (0603) | Phase2 (0615) | Phase3 (0627) | Phase4 (0709) | Phase5 (0721) | Phase6 (0802) | Phase7 (0814) | Phase8 (0826) | |

| VV | ▲ | ▲ | ▲ | |||||

| VV_Mean | ▲ | ▲ | ▲ | |||||

| VV_Variance | ▲ | ▲ | ||||||

| VV_Homogeneity | ||||||||

| VV_Contrast | ||||||||

| VV_Dissimilarity | ▲ | ▲ | ||||||

| VV_Entropy | ▲ | ▲ | ||||||

| VV_Second Moment | ||||||||

| VV_Correlation | ||||||||

| VH | ▲ | ▲ | ||||||

| VH_Mean | ▲ | |||||||

| VH_Variance | ▲ | ▲ | ||||||

| VH_Homogeneity | ▲ | ▲ | ▲ | |||||

| VH_Contrast | ▲ | |||||||

| VH_Dissimilarity | ▲ | ▲ | ▲ | |||||

| VH_Entropy | ▲ | ▲ | ||||||

| VH_Second Moment | ▲ | ▲ | ||||||

| VH_Correlation | ▲ | ▲ | ||||||
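The SAR texture features (Mean, Variance, Homogeneity, Contrast, Dissimilarity, Entropy, Second Moment, Correlation) are grey-level co-occurrence matrix (GLCM) statistics. The sketch below builds a symmetric, normalised GLCM for a single pixel offset and evaluates the eight measures with common Haralick-style definitions, so Mean and Variance here are GLCM statistics rather than raw backscatter statistics. The moving-window size, number of grey levels, offset and quantisation of backscatter are not given in this document, so the defaults (horizontal neighbour, pre-quantised integer input, natural-log entropy) are assumptions; GIS and SAR toolboxes may use slightly different conventions.

```python
import math


def glcm(image, levels, offset=(0, 1)):
    """Symmetric, normalised grey-level co-occurrence matrix.

    image: 2-D list of integers in [0, levels).
    offset: (row, col) displacement to the neighbour pixel; (0, 1) = right.
    """
    dr, dc = offset
    counts = [[0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dr, c + dc
            if 0 <= r2 < rows and 0 <= c2 < cols:
                i, j = image[r][c], image[r2][c2]
                counts[i][j] += 1
                counts[j][i] += 1  # also count the reverse pair (symmetric GLCM)
    total = sum(map(sum, counts))
    return [[v / total for v in row] for row in counts]


def glcm_features(p):
    """Haralick-style texture measures of a normalised GLCM `p`."""
    n = len(p)
    cells = [(i, j, p[i][j]) for i in range(n) for j in range(n)]
    mu_i = sum(i * v for i, _, v in cells)
    mu_j = sum(j * v for _, j, v in cells)
    var_i = sum((i - mu_i) ** 2 * v for i, _, v in cells)
    var_j = sum((j - mu_j) ** 2 * v for _, j, v in cells)
    cov = sum((i - mu_i) * (j - mu_j) * v for i, j, v in cells)
    return {
        "Mean": mu_i,
        "Variance": var_i,
        "Homogeneity": sum(v / (1 + (i - j) ** 2) for i, j, v in cells),
        "Contrast": sum((i - j) ** 2 * v for i, j, v in cells),
        "Dissimilarity": sum(abs(i - j) * v for i, j, v in cells),
        "Entropy": -sum(v * math.log(v) for _, _, v in cells if v > 0),
        "Second Moment": sum(v * v for _, _, v in cells),
        # Convention: a constant patch (zero variance) is treated as fully correlated.
        "Correlation": cov / math.sqrt(var_i * var_j) if var_i and var_j else 1.0,
    }


# Toy 4-level patch (e.g. VH backscatter already quantised to 0..3).
patch = [[0, 0, 1, 1],
         [0, 0, 1, 1],
         [0, 2, 2, 2],
         [2, 2, 3, 3]]
features = glcm_features(glcm(patch, levels=4))
for name, value in features.items():
    print(f"{name:>13}: {value:.4f}")
```

In practice these measures are computed in a sliding window around each pixel (or over each object) and then averaged per parcel, which is how names such as VH_Homogeneity_Phase2 would arise.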

| Combinations | Randomly Selected Features |
|---|---|
| PPF | VH_Phase2, VV_Phase5, VH_Phase7, VV_Phase8 |
| PTF | VV_Mean_Phase1, VV_Dissimilarity_Phase4, VH_Entropy_Phase6, VV_Second Moment_Phase8 |
| PFTF | VH_Homogeneity_Phase2, VV_Phase5, VH_Variance_Phase6, VV_Phase8 |
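The “random pick” strategy can be mimicked by drawing acquisition phases at random and assigning one SAR feature to each, as in the PPF, PTF and PFTF rows above. In this sketch, four picks per combination (matching the rows shown), uniform sampling without replacement over Phase1 to Phase8, and the feature pools (polarization only, texture only, or both, inferred from the row contents) are assumptions; the document does not state how the draws were constrained, for example whether each key growth stage had to be covered.

```python
import random

PHASES = [f"Phase{k}" for k in range(1, 9)]  # Phase1 (0603) ... Phase8 (0826)

# Hypothetical feature pools for each combination type.
POLARIZATION = ["VV", "VH"]
TEXTURE = [f"{pol}_{name}" for pol in POLARIZATION
           for name in ["Mean", "Variance", "Homogeneity", "Contrast",
                        "Dissimilarity", "Entropy", "Second Moment", "Correlation"]]
POOLS = {
    "PPF": POLARIZATION,
    "PTF": TEXTURE,
    "PFTF": POLARIZATION + TEXTURE,
}


def random_pick(pool, n_phases=4, rng=random):
    """Draw `n_phases` distinct phases (in time order) and one feature per phase."""
    phases = sorted(rng.sample(range(len(PHASES)), n_phases))
    return [f"{rng.choice(pool)}_{PHASES[k]}" for k in phases]


rng = random.Random(2018)  # fixed seed so a draw can be reproduced
for name, pool in POOLS.items():
    print(name, random_pick(pool, rng=rng))
```

Repeating the draw with different seeds and classifying each resulting series gives a simple picture of how sensitive the accuracy is to which acquisitions happen to be available.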

| Classifier | Date | OA (%) | OA Average (%) | Kappa | Rice PA (%) | Rice PA Average (%) | Rice UA (%) | Rice UA Average (%) |
|---|---|---|---|---|---|---|---|---|
| CART | 2018 | 90.61 | 91.85 | 0.85 | 87.22 | 85.37 | 88.40 | 87.88 |
| | 2019 | 94.04 | | 0.91 | 84.05 | | 87.23 | |
| | 2020 | 90.89 | | 0.87 | 84.85 | | 88.01 | |
| SVM | 2018 | 92.42 | 93.95 | 0.88 | 91.33 | 92.83 | 95.03 | 90.48 |
| | 2019 | 94.64 | | 0.92 | 92.18 | | 84.03 | |
| | 2020 | 94.80 | | 0.92 | 94.97 | | 92.37 | |
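The "Average" columns are consistent with the arithmetic mean of the three yearly values (2018 to 2020). A quick way to check this, using the CART and SVM numbers from the table:

```python
from statistics import mean

# Per-year accuracies (%) copied from the table, in the order 2018, 2019, 2020.
results = {
    "CART": {"OA": [90.61, 94.04, 90.89], "PA": [87.22, 84.05, 84.85], "UA": [88.40, 87.23, 88.01]},
    "SVM": {"OA": [92.42, 94.64, 94.80], "PA": [91.33, 92.18, 94.97], "UA": [95.03, 84.03, 92.37]},
}

# Mean over the three years, rounded to two decimals as in the table.
averages = {clf: {metric: round(mean(values), 2) for metric, values in metrics.items()}
            for clf, metrics in results.items()}
print(averages)
# {'CART': {'OA': 91.85, 'PA': 85.37, 'UA': 87.88}, 'SVM': {'OA': 93.95, 'PA': 92.83, 'UA': 90.48}}
```

The same check reproduces the averages of the random-pick results table when its PPF, PTF and PFTF rows are entered instead.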

| Classifier | Strategy | OA (%) | Kappa | Rice PA (%) | Rice UA (%) |
|---|---|---|---|---|---|
| CART | POI | 94.02 | 0.91 | 96.66 | 76.05 |
| | SOI | 94.04 | 0.91 | 84.05 | 87.23 |
| | PSI | 82.35 | 0.72 | 71.64 | 65.62 |
| SVM | POI | 97.83 | 0.97 | 97.71 | 95.61 |
| | SOI | 94.64 | 0.92 | 92.18 | 84.03 |
| | PSI | 84.89 | 0.76 | 72.78 | 65.29 |

| Classification / Truth | Paddy Rice | Water Body | Artificial Surface | Other Vegetation | Total |
|---|---|---|---|---|---|
| Paddy rice | 927 | 215 | 12 | 65 | 1219 |
| Water body | 17 | 3598 | 8 | 46 | 3669 |
| Artificial surface | 14 | 2 | 1680 | 31 | 1727 |
| Other vegetation | 1 | 5 | 17 | 607 | 630 |
| Total | 959 | 3820 | 1717 | 749 | 7245 |
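Overall accuracy (OA), Kappa, producer's accuracy (PA) and user's accuracy (UA) all follow from the confusion matrix above, with rows as the classification and columns as the truth. The sketch below applies the standard formulas: OA is the diagonal sum over the total, Kappa corrects OA for chance agreement estimated from the row and column totals, PA divides each diagonal count by its truth (column) total and UA by its classified (row) total. For this matrix it gives OA = 94.02%, Kappa ≈ 0.91 and rice PA/UA = 96.66%/76.05%, which match the CART POI row of the strategy comparison table; that suggests, although the document does not say so explicitly, that the matrix belongs to that run.

```python
CLASSES = ["Paddy rice", "Water body", "Artificial surface", "Other vegetation"]

# Rows: classification; columns: truth (values from the confusion matrix above).
MATRIX = [
    [927, 215, 12, 65],
    [17, 3598, 8, 46],
    [14, 2, 1680, 31],
    [1, 5, 17, 607],
]


def accuracy_metrics(m):
    """Return OA, Cohen's kappa, and per-class PA/UA from a square confusion matrix."""
    k = len(m)
    n = sum(map(sum, m))
    row_totals = [sum(row) for row in m]                             # classified totals
    col_totals = [sum(m[i][j] for i in range(k)) for j in range(k)]  # truth totals
    diagonal = [m[i][i] for i in range(k)]

    oa = sum(diagonal) / n
    # Agreement expected by chance, from the marginal totals.
    pe = sum(r * c for r, c in zip(row_totals, col_totals)) / n ** 2
    kappa = (oa - pe) / (1 - pe)
    pa = [d / c for d, c in zip(diagonal, col_totals)]  # 1 - PA = omission error
    ua = [d / r for d, r in zip(diagonal, row_totals)]  # 1 - UA = commission error
    return oa, kappa, pa, ua


oa, kappa, pa, ua = accuracy_metrics(MATRIX)
print(f"OA = {oa:.2%}, Kappa = {kappa:.2f}")
for name, p, u in zip(CLASSES, pa, ua):
    print(f"{name:>18}: PA = {p:.2%}, UA = {u:.2%}")
```

The low rice UA here comes mainly from the 215 water-body samples labelled as paddy rice, which is plausible for flooded fields at transplanting.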

| Combinations | Date | OA (%) | OA Average (%) | Kappa | Rice PA (%) | Rice PA Average (%) | Rice UA (%) | Rice UA Average (%) |
|---|---|---|---|---|---|---|---|---|
| PPF | 2018 | 88.12 | 90.09 | 0.81 | 79.69 | 85.10 | 85.70 | 87.43 |
| | 2019 | 92.74 | | 0.89 | 90.93 | | 86.94 | |
| | 2020 | 89.41 | | 0.84 | 84.67 | | 89.66 | |
| PTF | 2018 | 84.57 | 85.58 | 0.76 | 76.02 | 72.71 | 58.98 | 60.20 |
| | 2019 | 86.63 | | 0.79 | 70.80 | | 58.08 | |
| | 2020 | 85.55 | | 0.79 | 71.32 | | 63.55 | |
| PFTF | 2018 | 89.62 | 88.81 | 0.84 | 87.12 | 85.60 | 91.63 | 90.02 |
| | 2019 | 86.03 | | 0.78 | 84.88 | | 92.71 | |
| | 2020 | 90.79 | | 0.86 | 84.79 | | 85.71 | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Shen, Y.; Zhang, J.; Yang, L.; Zhou, X.; Li, H.; Zhou, X. A Novel Operational Rice Mapping Method Based on Multi-Source Satellite Images and Object-Oriented Classification. Agronomy 2022, 12, 3010. https://doi.org/10.3390/agronomy12123010