A Model for Identifying Soybean Growth Periods Based on Multi-Source Sensors and Improved Convolutional Neural Network

Abstract

1. Introduction

2. Materials and Methods

2.1. Image Acquisition

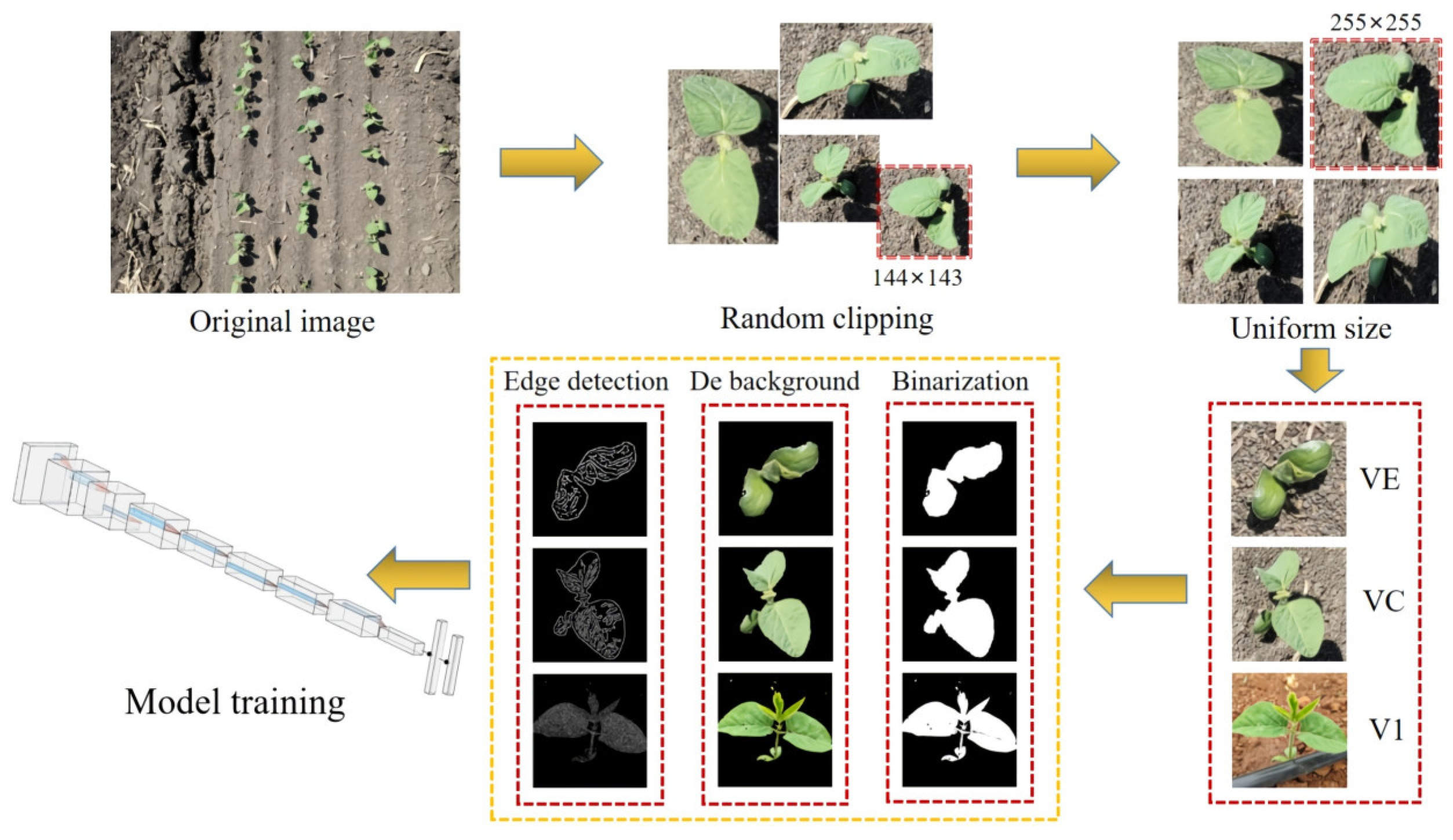

2.2. Dataset Construction

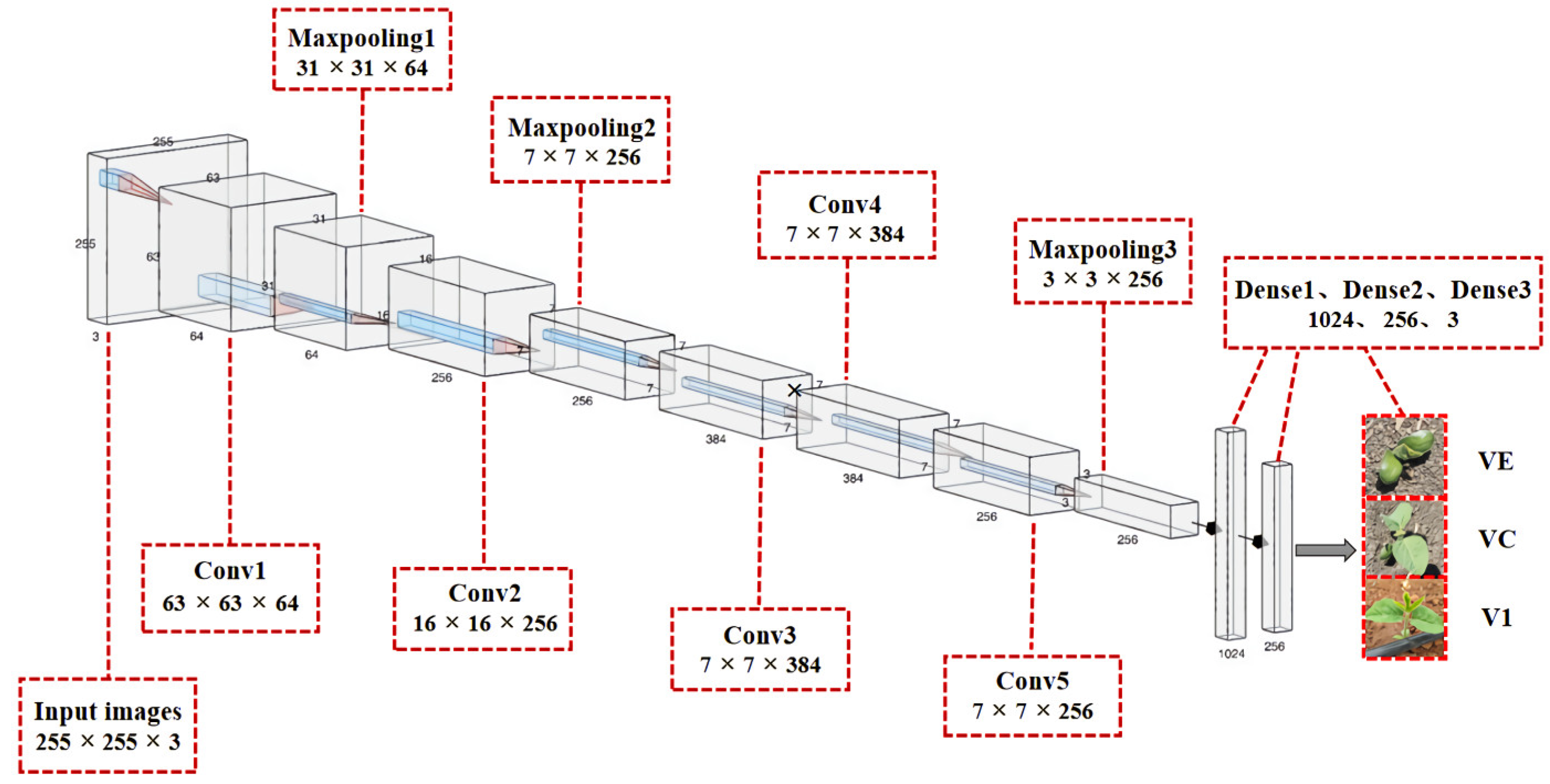

2.3. Model Establishment

2.4. Model Optimization

3. Results

3.1. Evaluating Indicator

3.2. Analysis of Hyperparameters Combination Experiment

3.3. Performance Comparison of Different Image Datasets

3.4. Field Experiment Validation

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Period | Number of Training Set Images | Number of Testing Set Images | Image Label |
|---|---|---|---|
| VE | 2250 | 750 | 0 |
| VC | 2250 | 750 | 1 |
| V1 | 2250 | 750 | 2 |
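
The split implied by the table above can be checked with a few lines of arithmetic. The sketch below only restates the tabulated counts and labels; the variable names are illustrative and do not come from the original work.

```python
# Per-period image counts (training, testing), copied from the table above.
SPLITS = {"VE": (2250, 750), "VC": (2250, 750), "V1": (2250, 750)}

# Integer class labels as listed in the "Image Label" column.
LABELS = {"VE": 0, "VC": 1, "V1": 2}

train_total = sum(train for train, _ in SPLITS.values())
test_total = sum(test for _, test in SPLITS.values())
train_fraction = train_total / (train_total + test_total)

print(f"training images: {train_total}")
print(f"testing images:  {test_total}")
print(f"training share:  {train_fraction:.0%}")
```

With these counts the three periods are balanced, and the training set holds three quarters of the images in each class.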

| Serial Number | Learning Rate | Dropout | Batch Size | Accuracy |
|---|---|---|---|---|
| 1 | 0.001 | 0.5 | 32 | 98.63% |
| 2 | 0.001 | 0.6 | 64 | 98.77% |
| 3 | 0.001 | 0.7 | 128 | 98.39% |
| 4 | 0.001 | 0.8 | 256 | 97.12% |
| 5 | 0.0001 | 0.5 | 64 | 99.47% |
| 6 | 0.0001 | 0.6 | 32 | 99.58% |
| 7 | 0.0001 | 0.7 | 256 | 88.25% |
| 8 | 0.0001 | 0.8 | 128 | 99.14% |
| 9 | 0.005 | 0.5 | 128 | 68.44% |
| 10 | 0.005 | 0.6 | 256 | 40.11% |
| 11 | 0.005 | 0.7 | 32 | 36.52% |
| 12 | 0.005 | 0.8 | 64 | 37.63% |
| 13 | 0.01 | 0.5 | 256 | 66.98% |
| 14 | 0.01 | 0.6 | 128 | 39.08% |
| 15 | 0.01 | 0.7 | 64 | 47.25% |
| 16 | 0.01 | 0.8 | 32 | 43.42% |
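
One common way to read a combination table like the one above is to average the accuracy over every level of each factor and compare the spread of those averages. The sketch below does this for the 16 tabulated runs. It is an illustration only: the table does not state how the results were analysed, and the function and variable names are assumptions introduced here.

```python
from collections import defaultdict

# (serial, learning_rate, dropout, batch_size, accuracy_percent),
# copied from the table above.
RUNS = [
    (1, 0.001, 0.5, 32, 98.63), (2, 0.001, 0.6, 64, 98.77),
    (3, 0.001, 0.7, 128, 98.39), (4, 0.001, 0.8, 256, 97.12),
    (5, 0.0001, 0.5, 64, 99.47), (6, 0.0001, 0.6, 32, 99.58),
    (7, 0.0001, 0.7, 256, 88.25), (8, 0.0001, 0.8, 128, 99.14),
    (9, 0.005, 0.5, 128, 68.44), (10, 0.005, 0.6, 256, 40.11),
    (11, 0.005, 0.7, 32, 36.52), (12, 0.005, 0.8, 64, 37.63),
    (13, 0.01, 0.5, 256, 66.98), (14, 0.01, 0.6, 128, 39.08),
    (15, 0.01, 0.7, 64, 47.25), (16, 0.01, 0.8, 32, 43.42),
]
FACTORS = ("learning_rate", "dropout", "batch_size")


def level_means(runs):
    """Return the mean accuracy for every level of every factor."""
    grouped = {name: defaultdict(list) for name in FACTORS}
    for _, lr, dropout, batch, acc in runs:
        for name, level in zip(FACTORS, (lr, dropout, batch)):
            grouped[name][level].append(acc)
    return {
        name: {level: sum(accs) / len(accs) for level, accs in levels.items()}
        for name, levels in grouped.items()
    }


def factor_ranges(means):
    """Return, per factor, the gap between its best and worst level mean."""
    return {
        name: max(levels.values()) - min(levels.values())
        for name, levels in means.items()
    }


best_run = max(RUNS, key=lambda run: run[4])
means = level_means(RUNS)
ranges = factor_ranges(means)

print("best single run:", best_run)
for name in FACTORS:
    print(name, {lvl: round(m, 2) for lvl, m in sorted(means[name].items())})
    print(f"  range of level means: {ranges[name]:.2f} points")
```

On these numbers the learning rate shows by far the largest spread between level means, while the best single run is serial number 6 (learning rate 0.0001, dropout 0.6, batch size 32).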

| Datasets | Running Time | Average Loss | Average Accuracy |
|---|---|---|---|
| RGB Images | 0.41 s/step | 0.0132 | 99.58% |
| Binary Images | 1.21 s/step | 0.0978 | 94.53% |
| Background-removed Images | 0.54 s/step | 0.0123 | 99.61% |
| Canny Edge Detection Images | 1.13 s/step | 0.0294 | 99.52% |
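
The trade-off between running time and accuracy in the table above can be made explicit by expressing each dataset relative to the RGB images. Treating RGB as the baseline is an assumption made for this sketch, and the names used are illustrative.

```python
# (dataset, seconds_per_step, average_loss, average_accuracy_percent),
# copied from the table above.
RESULTS = [
    ("RGB Images", 0.41, 0.0132, 99.58),
    ("Binary Images", 1.21, 0.0978, 94.53),
    ("Background-removed Images", 0.54, 0.0123, 99.61),
    ("Canny Edge Detection Images", 1.13, 0.0294, 99.52),
]

fastest = min(RESULTS, key=lambda row: row[1])
most_accurate = max(RESULTS, key=lambda row: row[3])

# Assumption: the RGB row (first entry) serves as the reference point.
_, base_time, _, base_acc = RESULTS[0]

for name, step_time, loss, acc in RESULTS:
    print(
        f"{name}: {step_time / base_time:.2f}x step time, "
        f"{acc - base_acc:+.2f} accuracy points vs RGB, loss {loss}"
    )
print("fastest:", fastest[0])
print("most accurate:", most_accurate[0])
```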

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, J.; Li, Q.; Yu, C.; He, Y.; Qi, L.; Shi, W.; Zhang, W. A Model for Identifying Soybean Growth Periods Based on Multi-Source Sensors and Improved Convolutional Neural Network. Agronomy 2022, 12, 2991. https://doi.org/10.3390/agronomy12122991
