Development and Evaluation of a Watermelon-Harvesting Robot Prototype: Vision System and End-Effector

Abstract

1. Introduction

2. Materials and Methods

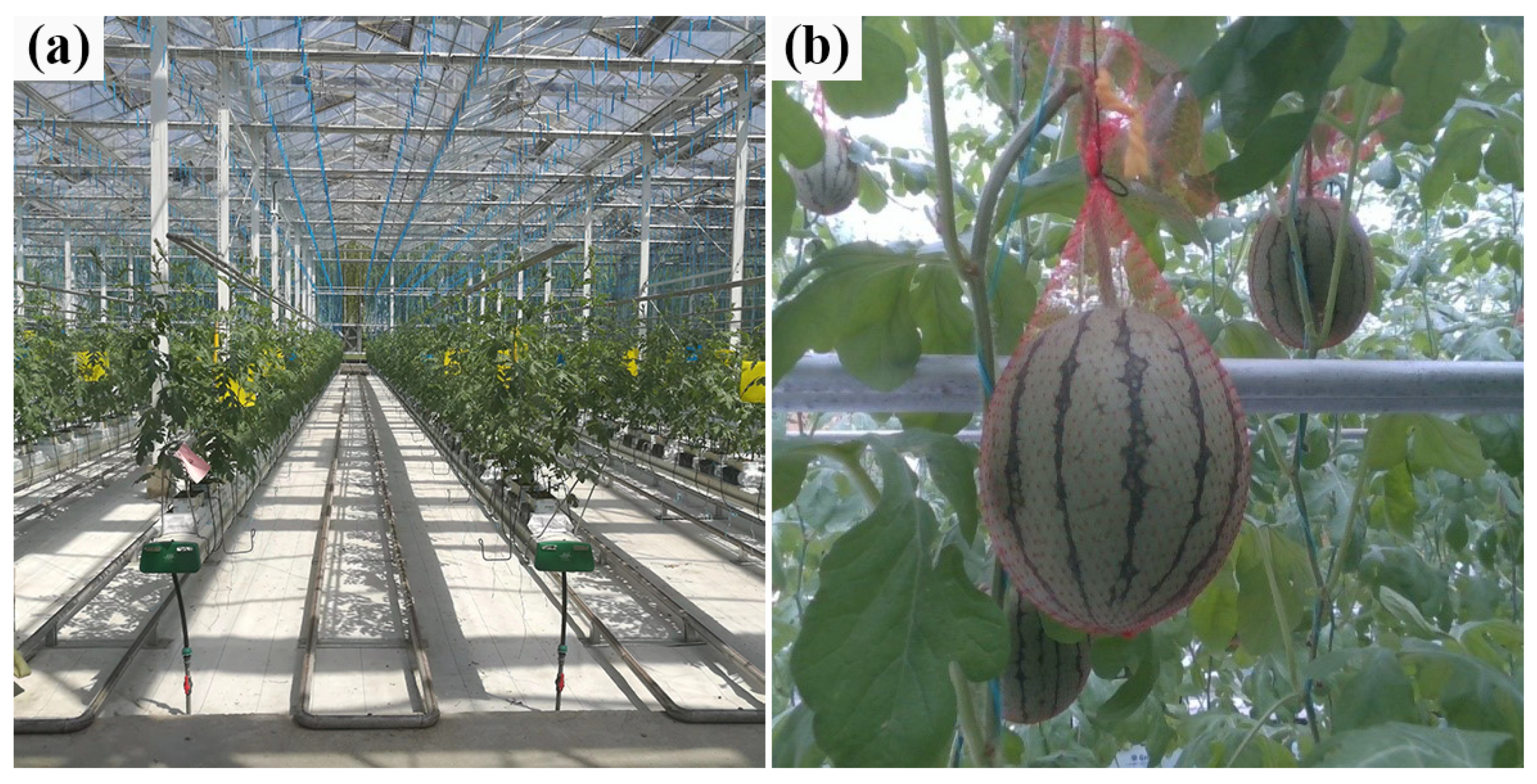

2.1. Greenhouse Environment and Fruit Parameters

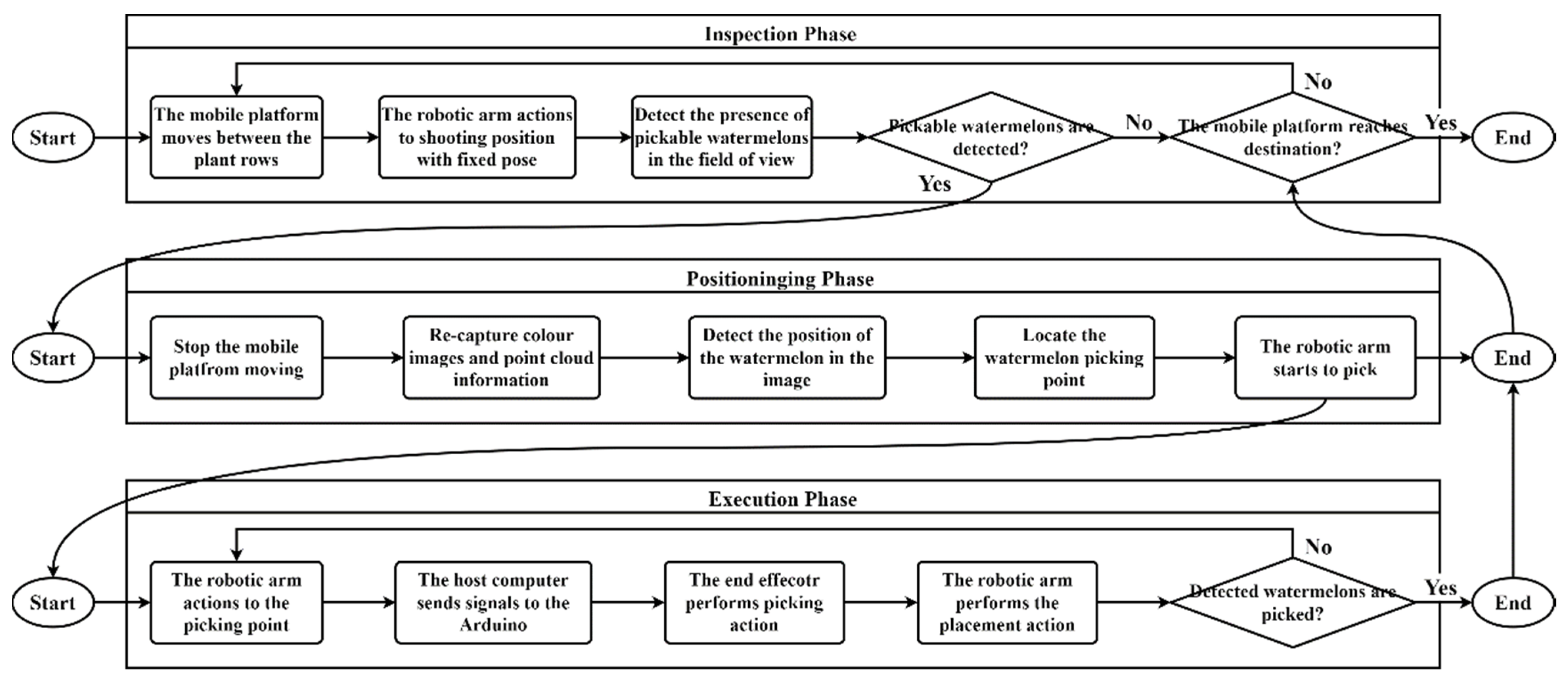

2.2. System Design and Operation

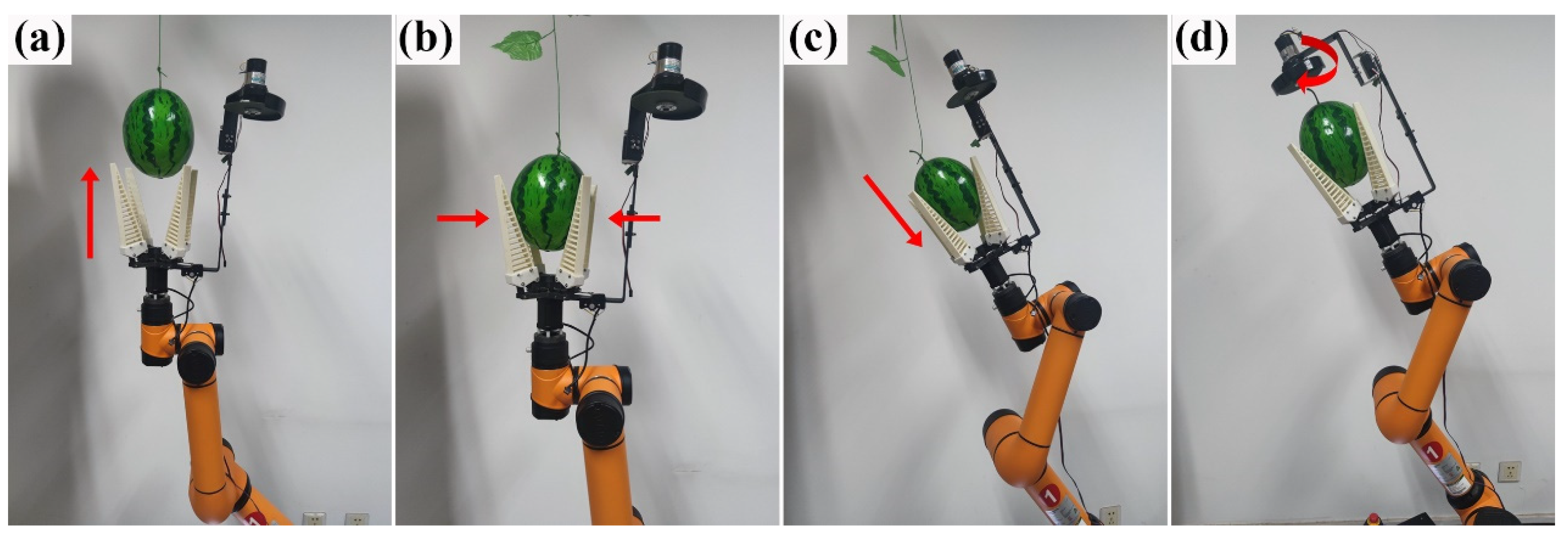

2.3. Design of the Watermelon Picking End-Effector

2.4. Watermelon Detection and Localization

2.4.1. Construction of the Object Detector

2.4.2. Watermelon Grasping Point Localization Method

3. Experiments and Results

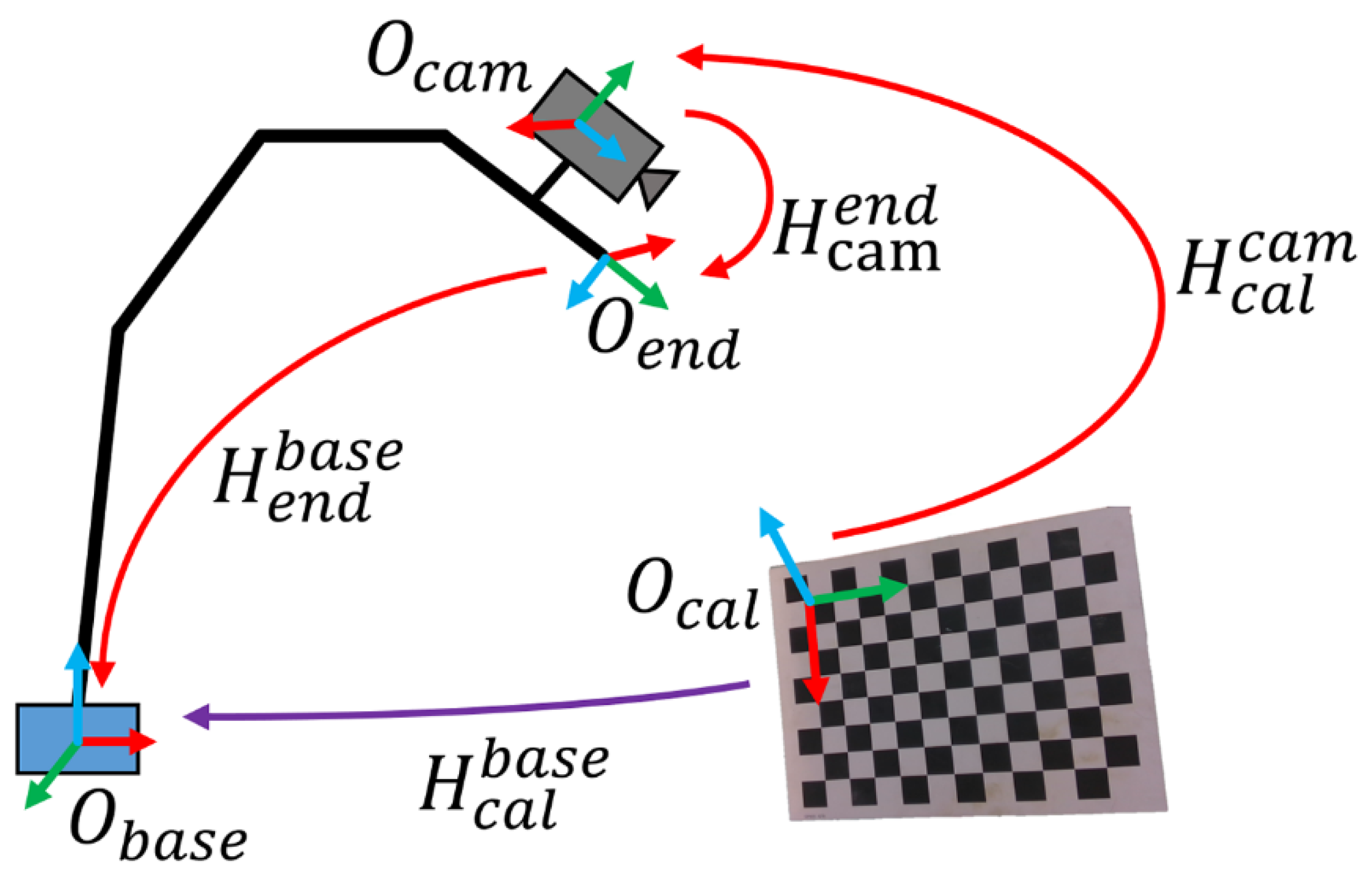

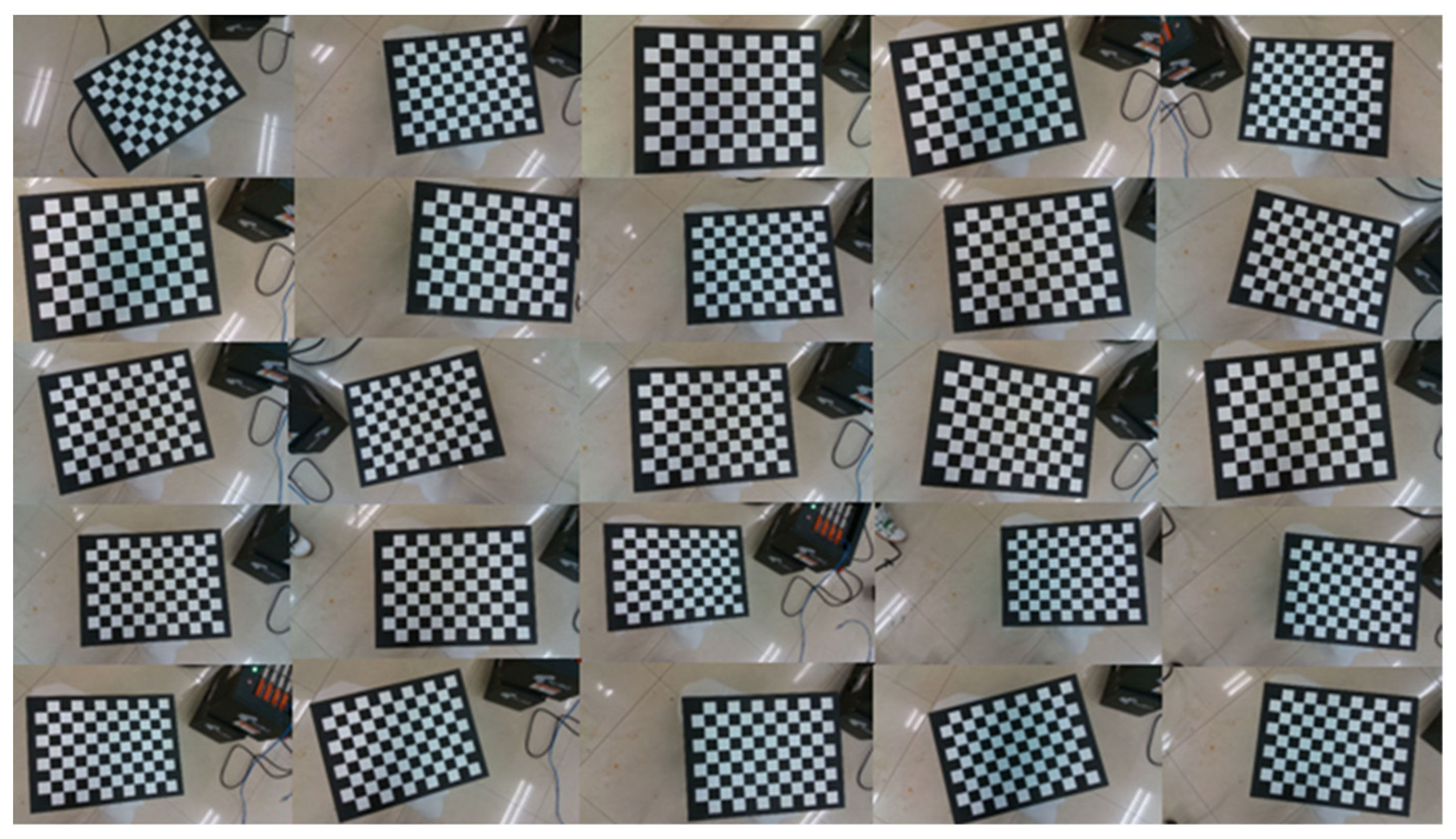

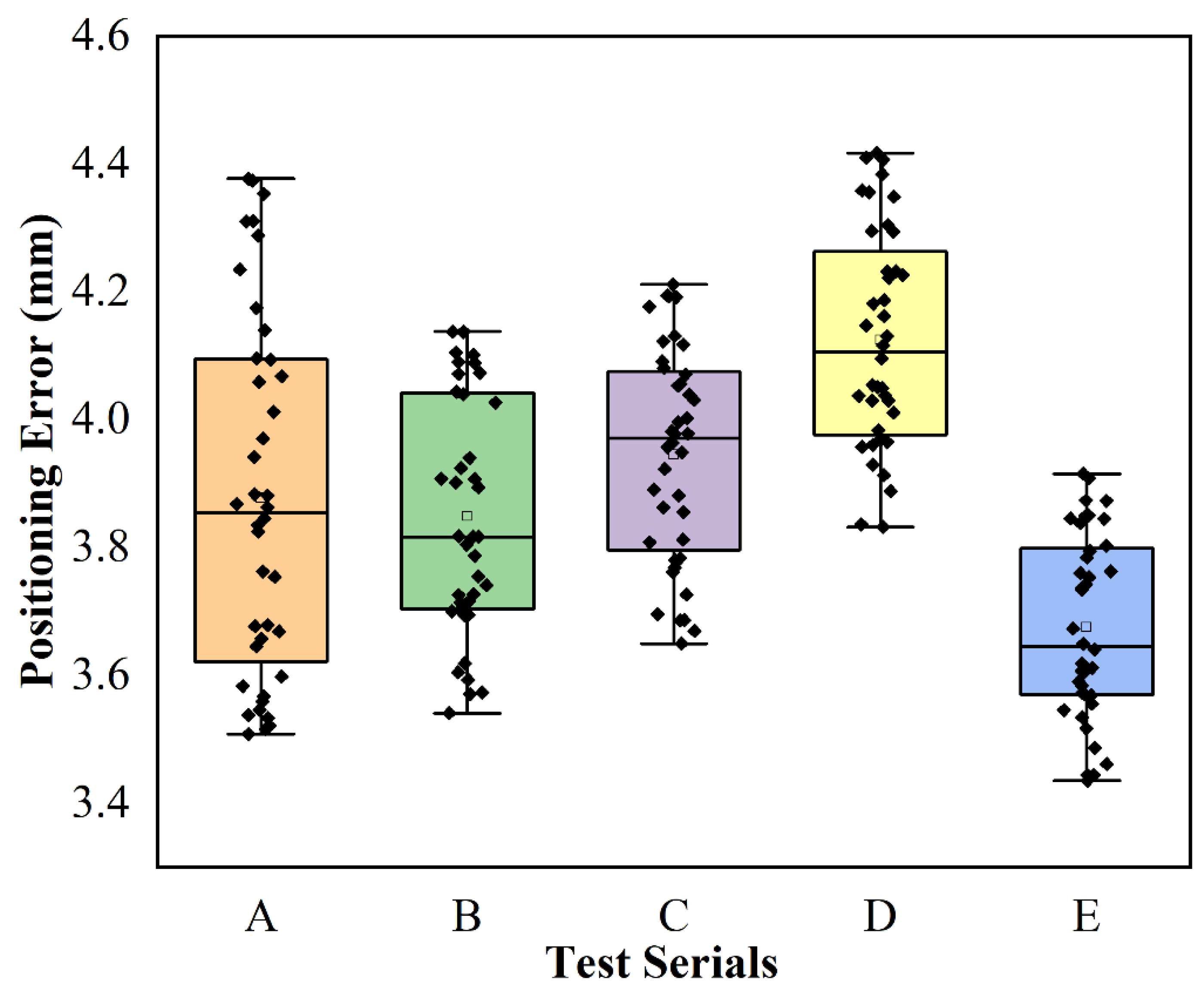

3.1. Hand-Eye Calibration

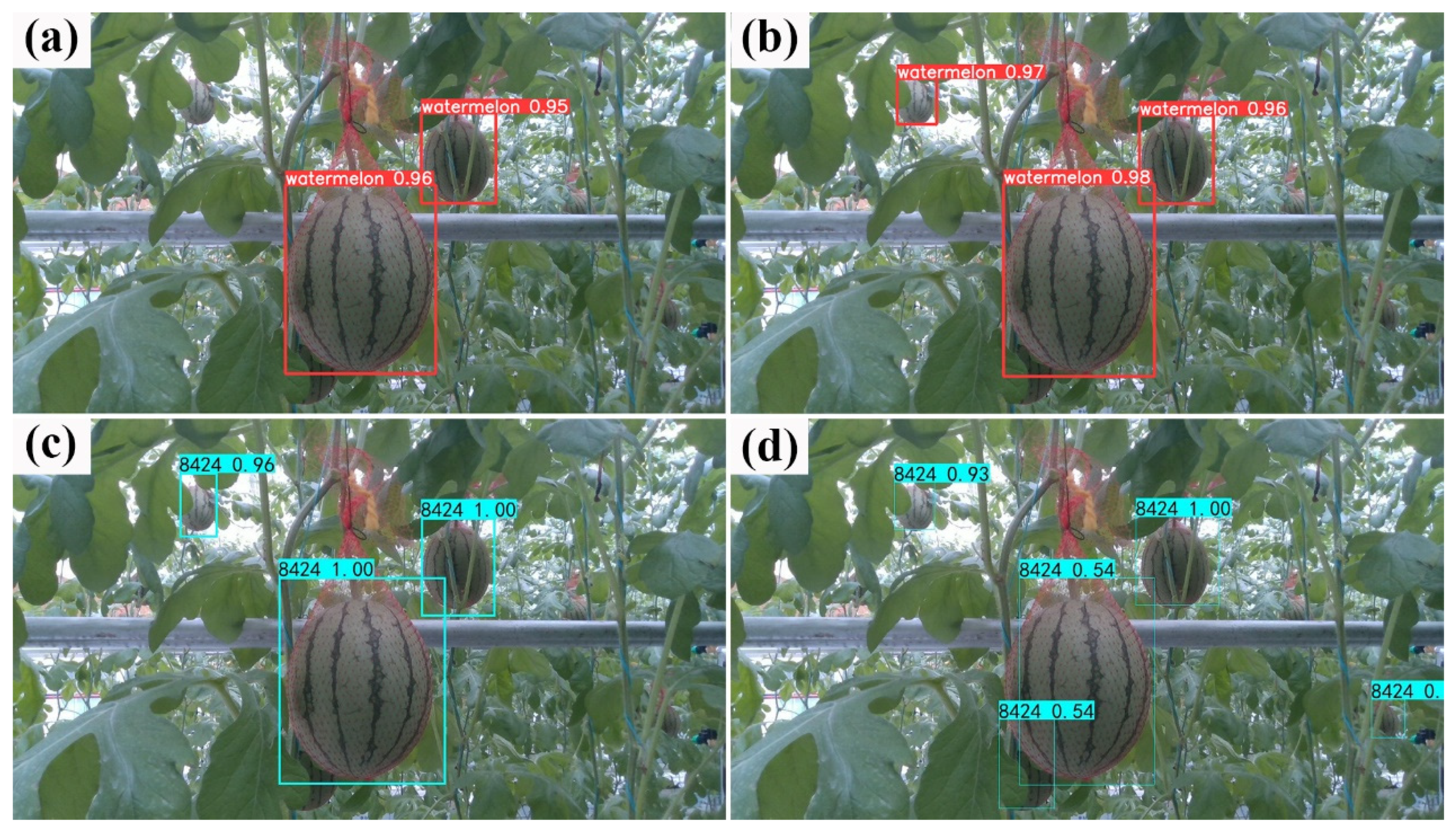

3.2. Evaluation of the Detection and Localization Method

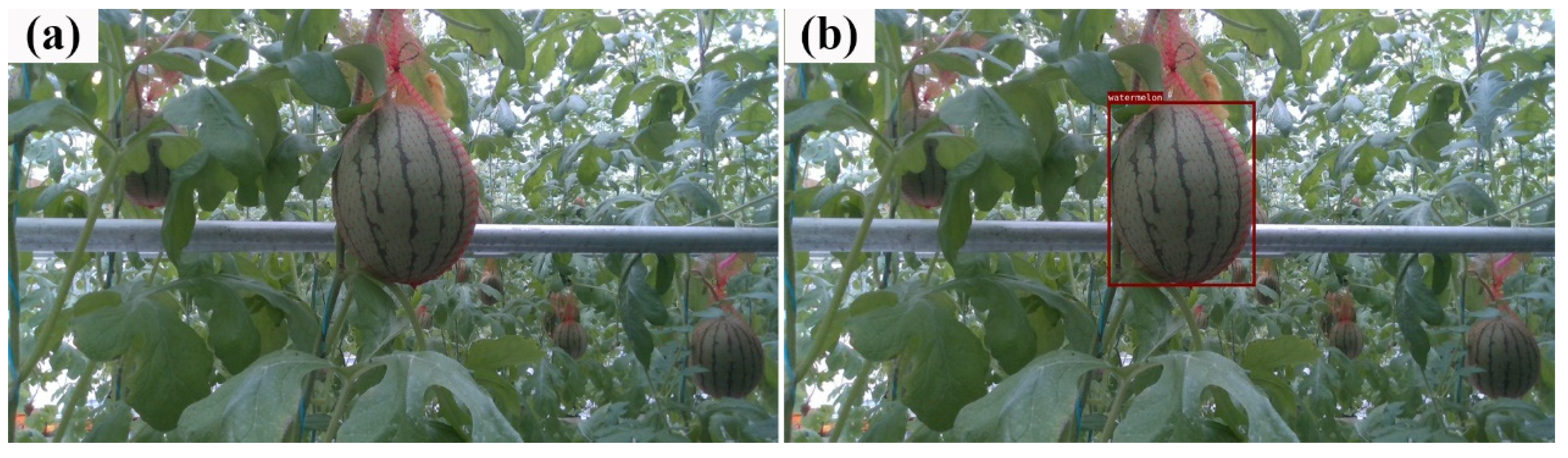

3.2.1. Image Acquisition and Dataset Creation

3.2.2. Model Training and Testing

3.3. Evaluation of the Watermelon-Harvesting Robot Prototype

3.3.1. End-Effector Clamping Performance Verification

3.3.2. Evaluation of Automatic Harvesting

3.4. Discussion

4. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Fruit Variety | Pedicel Diameter (mm) | Pedicel Length (mm) | Fruit Width (mm) | Fruit Length (mm) | Fruit Weight (kg) |
|---|---|---|---|---|---|
| L600 | 5.5 | 98 | 150 | 186 | 1.89 |
| 8424 | 5.6 | 99 | 153 | 167 | 1.97 |

| Model | Size (Pixels) | Speed (ms) | Recall (%) | Precision (%) | mAP (%) |
|---|---|---|---|---|---|
| YOLOv5s | 640 | 8.6 | 94.6 | 87.4 | 94.1 |
| YOLOv5s–CBAM | 640 | 8.9 | 94.9 | 89.8 | 96.3 |
| YOLOv4 | 416 | 40.9 | 95.1 | 89.2 | 95.6 |
| SSD | 512 | 27.5 | 93.0 | 86.8 | 92.2 |
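
As a reading aid for the detector comparison table above, the following minimal sketch derives an F1 score (the harmonic mean of precision and recall) for each model. F1 is not reported in the table; it is computed here purely as an illustrative summary of the listed precision and recall values. The names `RESULTS`, `f1_score`, and `f1_by_model` are hypothetical.

```python
# Precision and recall (in %) copied from the detector comparison table.
# F1 is a derived value, not a figure reported in the source.
RESULTS = {
    "YOLOv5s": {"recall": 94.6, "precision": 87.4},
    "YOLOv5s-CBAM": {"recall": 94.9, "precision": 89.8},
    "YOLOv4": {"recall": 95.1, "precision": 89.2},
    "SSD": {"recall": 93.0, "precision": 86.8},
}


def f1_score(precision: float, recall: float) -> float:
    """Return the harmonic mean of precision and recall (same units as inputs)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def f1_by_model(results: dict) -> dict:
    """Map each model name to its derived F1 score."""
    return {
        name: f1_score(row["precision"], row["recall"])
        for name, row in results.items()
    }


if __name__ == "__main__":
    for name, f1 in f1_by_model(RESULTS).items():
        print(f"{name}: F1 = {f1:.1f}%")
```

On these figures, YOLOv5s–CBAM has the highest derived F1, which agrees with its ranking by mAP in the table.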

| Varieties | Number of Experiments | Successful Clamping | Maximum Shearing Time (s) | Average Shearing Time (s) |
|---|---|---|---|---|
| L600 | 30 | 28 | 5.5 | 5.0 |
| 8424 | 30 | 29 | 5.9 | 5.2 |

| Number of Experiments | Partial Obstruction | Average Positioning Error | Successful Clamping | Successful Shearing |
|---|---|---|---|---|
| 60 | × | 8.7 | 56 | 56 |
| 60 | ✓ | 14.6 | 51 | 51 |
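
The counts in the automatic-harvesting table above can be converted to success rates with simple arithmetic. The sketch below does this for the unobstructed and partially obstructed cases. The rates are derived from the table counts, not quoted from it, and `TRIALS` and `success_rate` are hypothetical names chosen for illustration.

```python
# Counts copied from the automatic-harvesting table; the rates are derived here.
TRIALS = [
    {"obstructed": False, "attempts": 60, "clamped": 56, "sheared": 56},
    {"obstructed": True, "attempts": 60, "clamped": 51, "sheared": 51},
]


def success_rate(successes: int, attempts: int) -> float:
    """Return the success rate as a percentage of attempts."""
    if attempts <= 0:
        raise ValueError("attempts must be positive")
    return 100.0 * successes / attempts


if __name__ == "__main__":
    for trial in TRIALS:
        label = "partially obstructed" if trial["obstructed"] else "unobstructed"
        rate = success_rate(trial["sheared"], trial["attempts"])
        print(f"{label}: {rate:.1f}% of {trial['attempts']} attempts sheared")
```

Keeping the raw counts alongside the derived rate makes it easy to recompute the figures if the number of trials changes.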

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Rong, J.; Fu, J.; Zhang, Z.; Yin, J.; Tan, Y.; Yuan, T.; Wang, P. Development and Evaluation of a Watermelon-Harvesting Robot Prototype: Vision System and End-Effector. Agronomy 2022, 12, 2836. https://doi.org/10.3390/agronomy12112836
