Automatic Estimation of Apple Orchard Blooming Levels Using the Improved YOLOv5

Abstract

1. Introduction

2. Materials and Methods

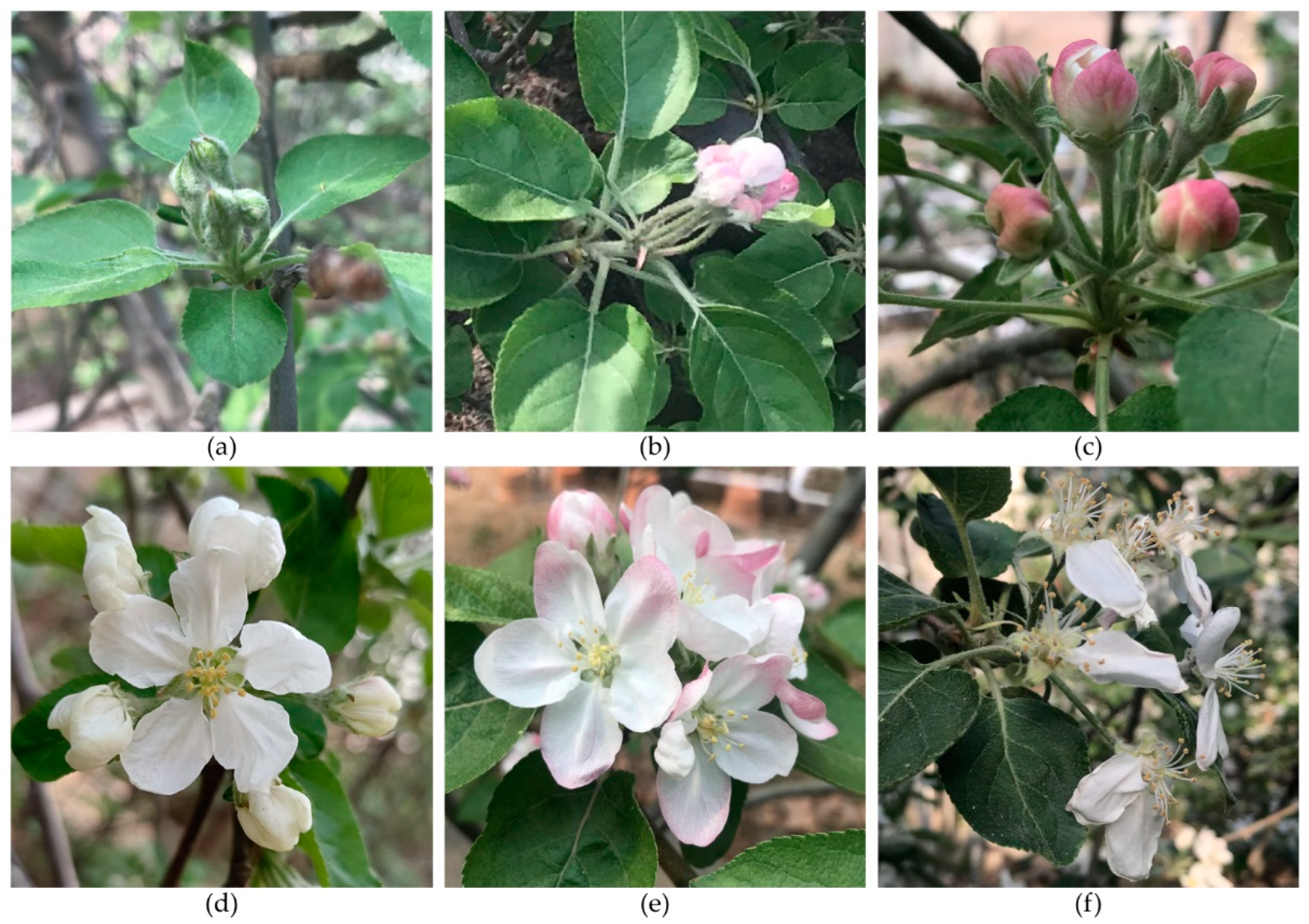

2.1. Definition of Blooming Level

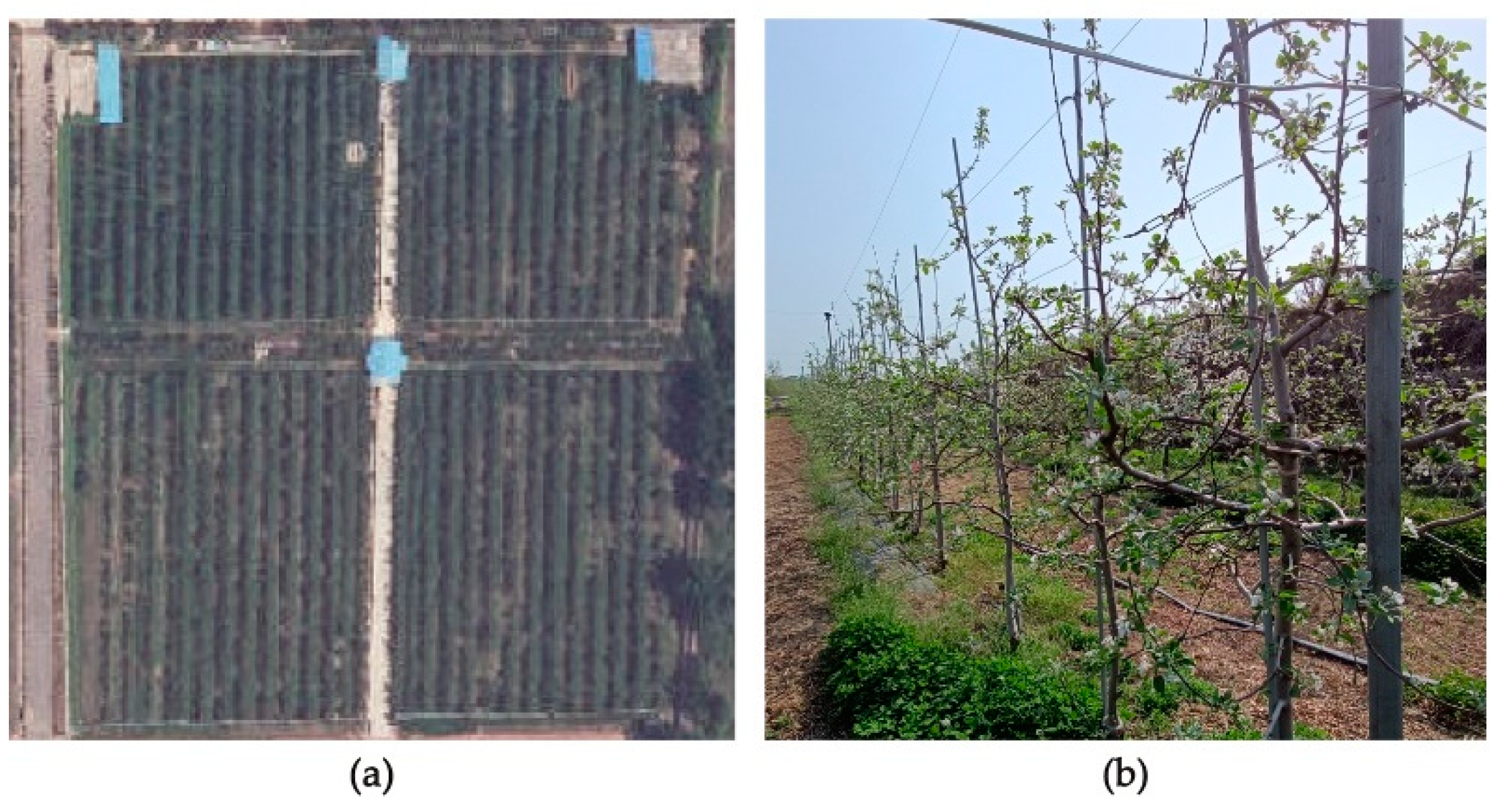

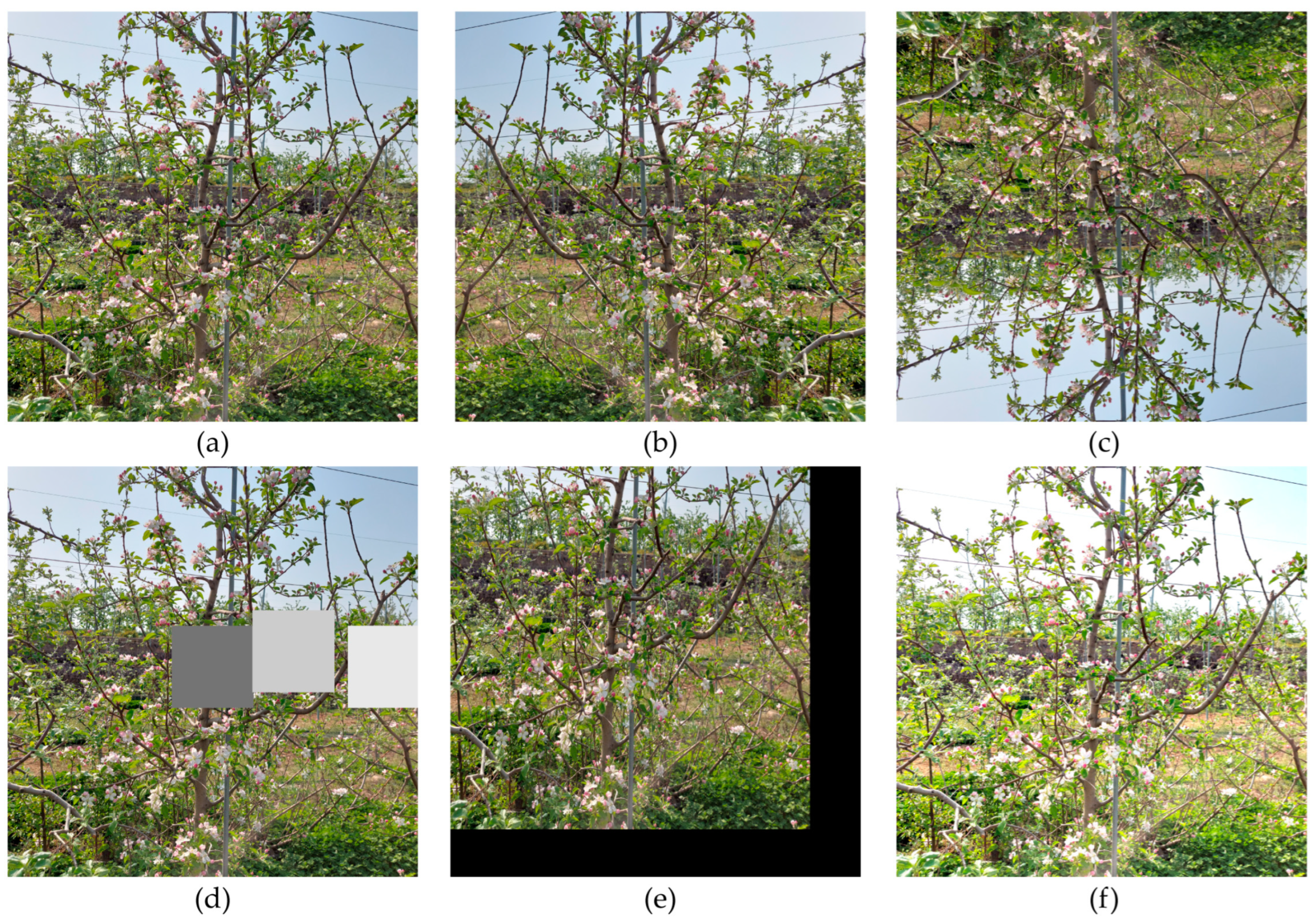

2.2. Image Collection and Dataset Building

2.2.1. Image Collection

2.2.2. Dataset

2.3. Algorithm Process

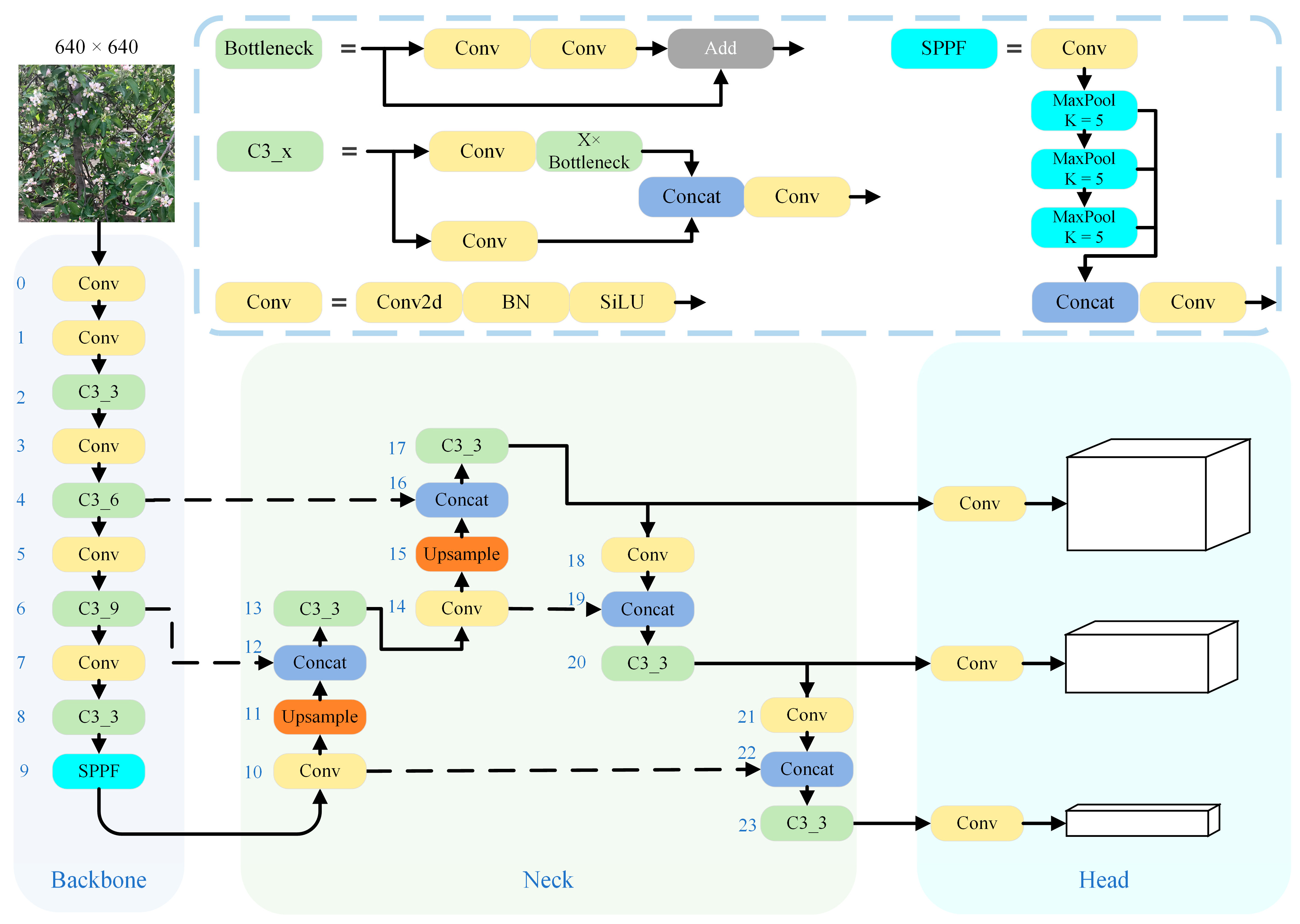

2.4. YOLOv5 Model

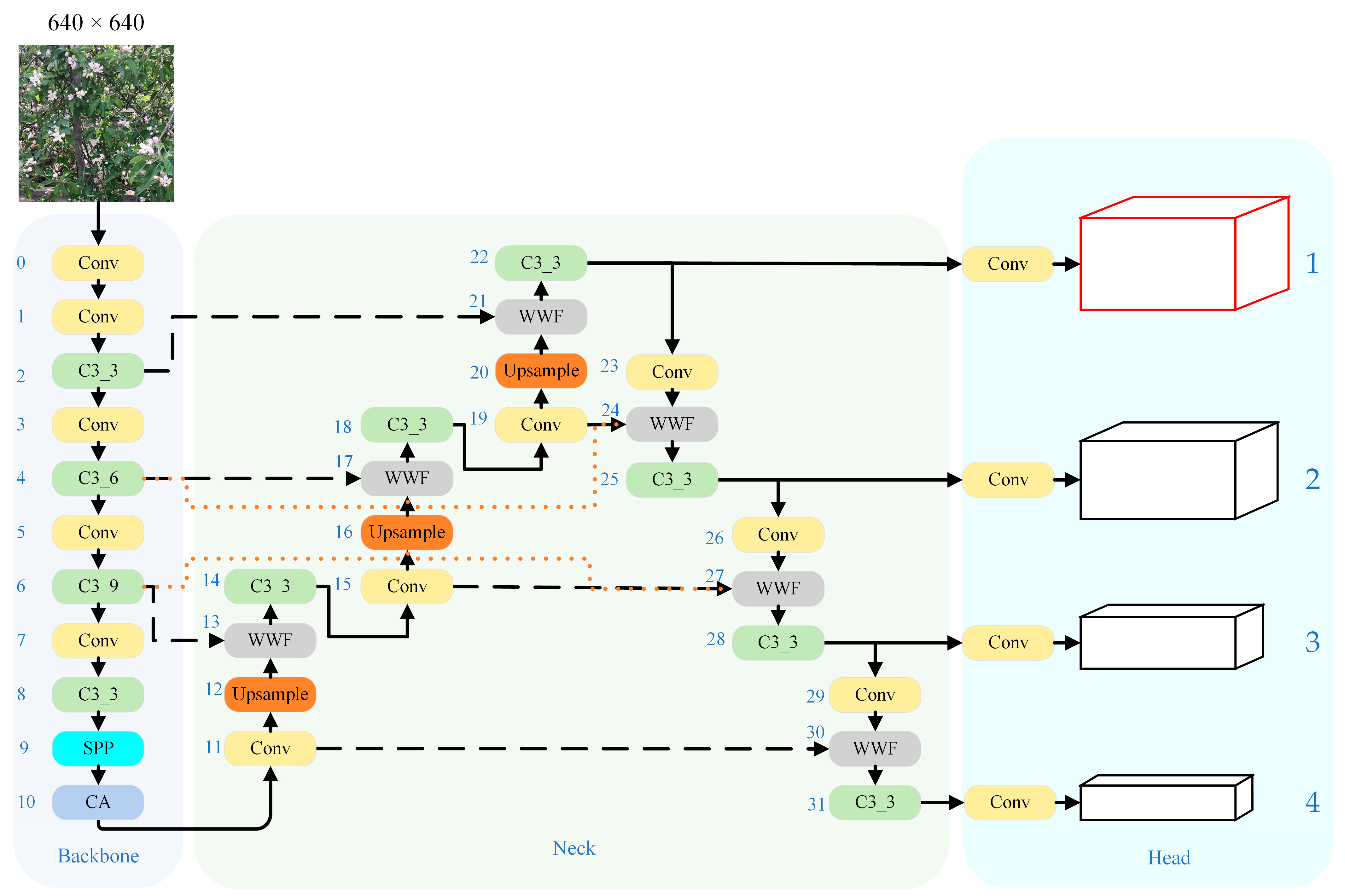

2.5. Apple Flower Detection Model

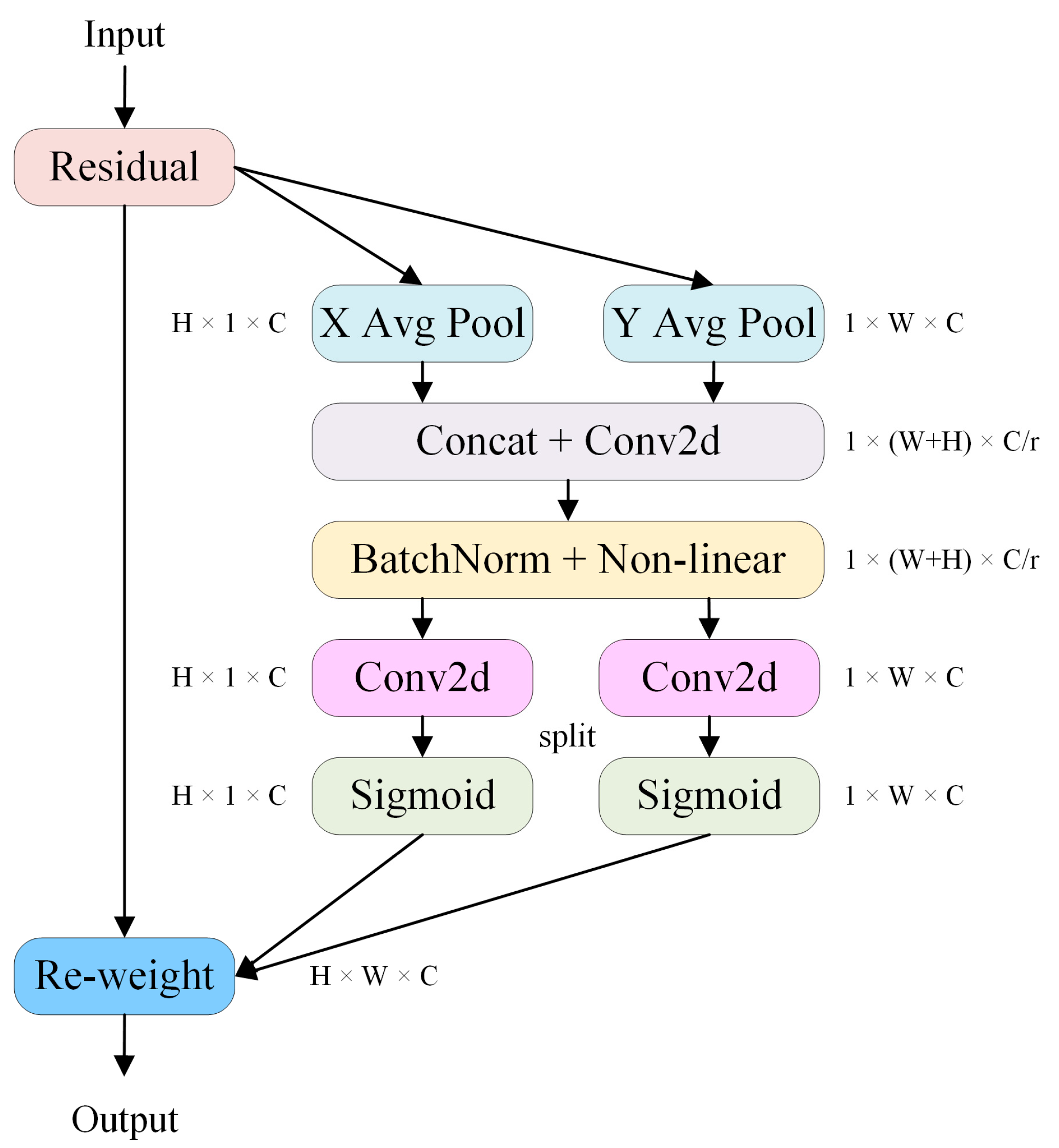

2.5.1. Coordinate Attention Layer

2.5.2. Small Object Detection Head

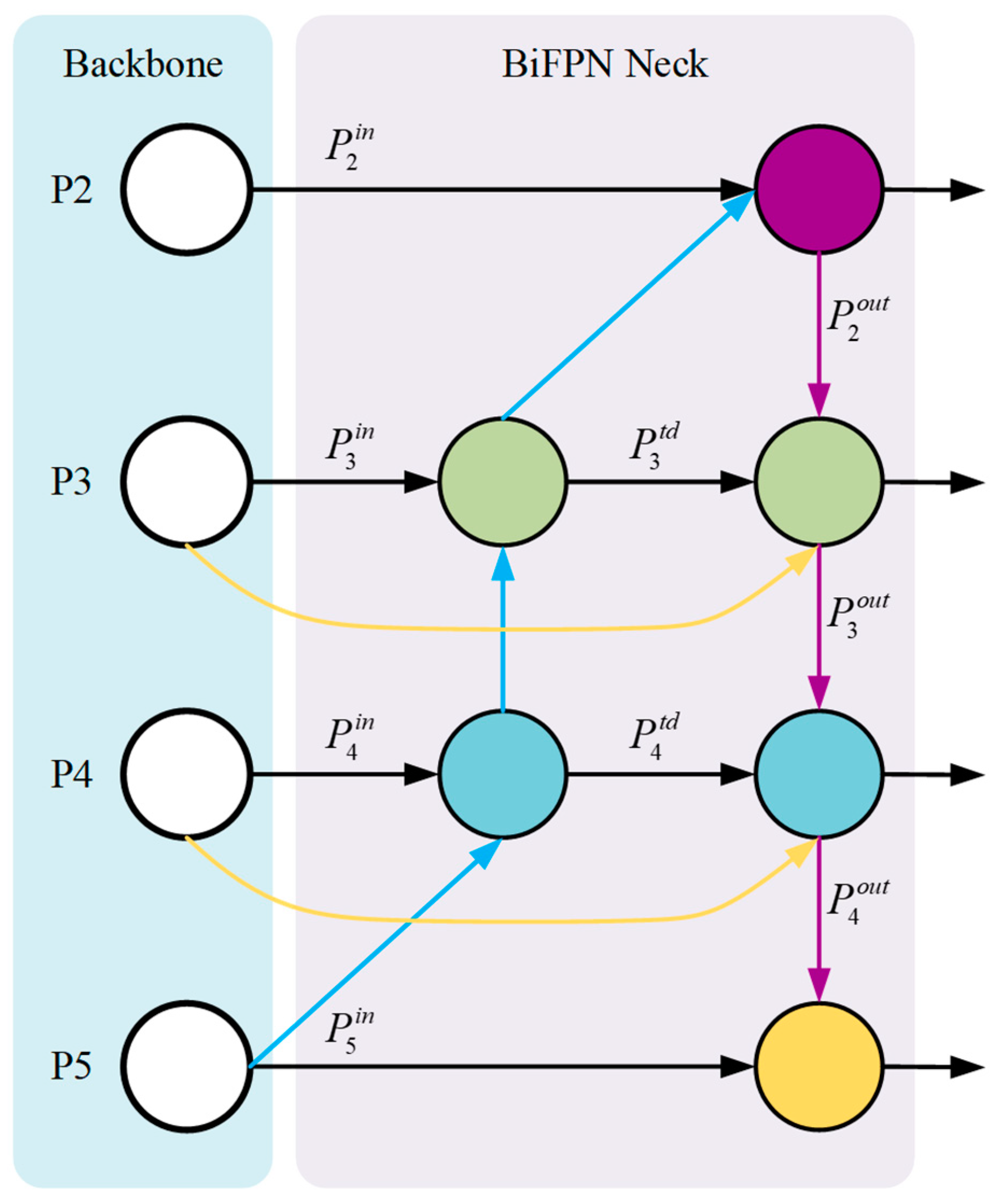

2.5.3. Improved Feature Fusion Structure

3. Results

3.1. Model Training

3.2. Performance Evaluation of Flower Detectors

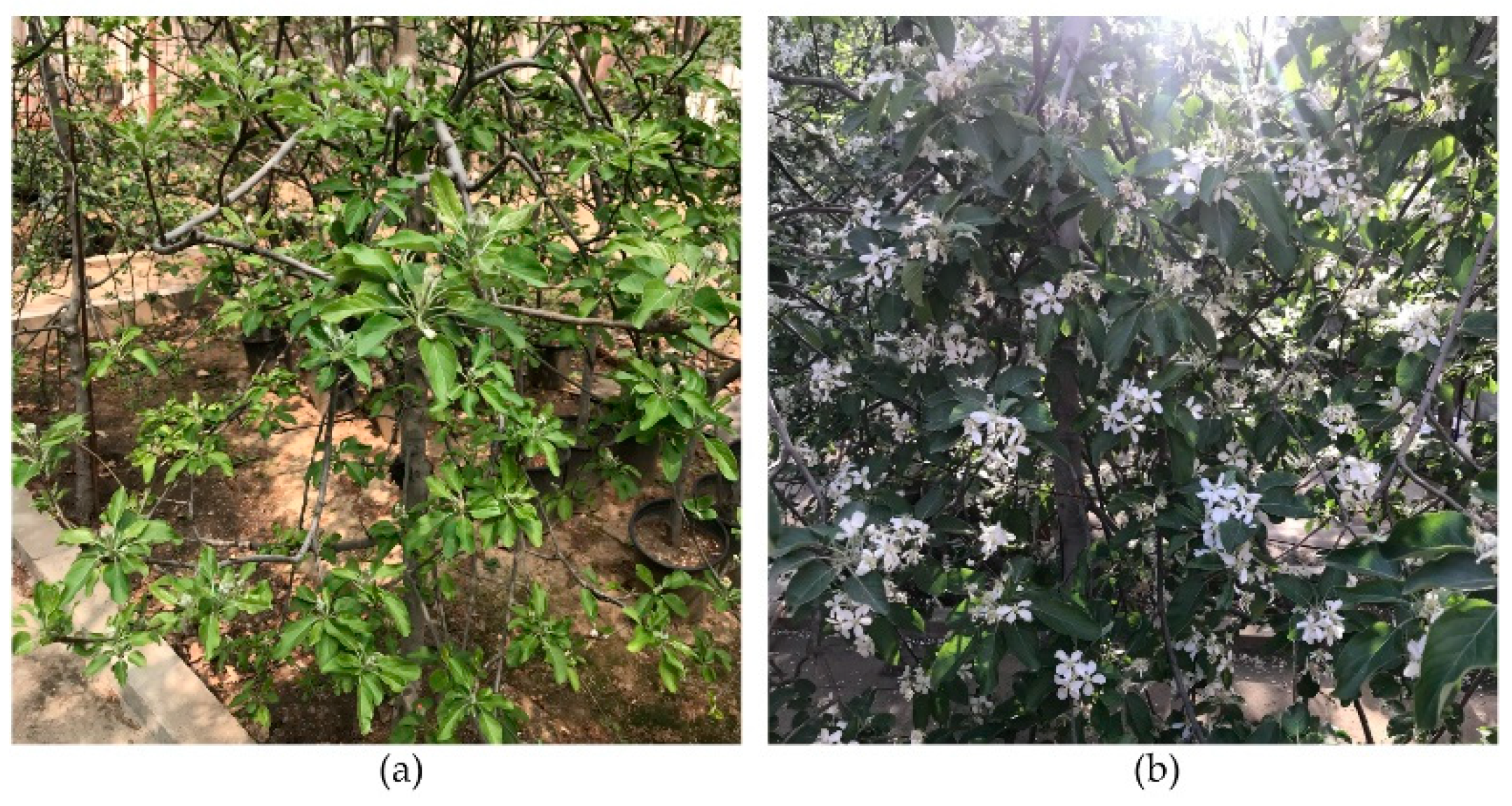

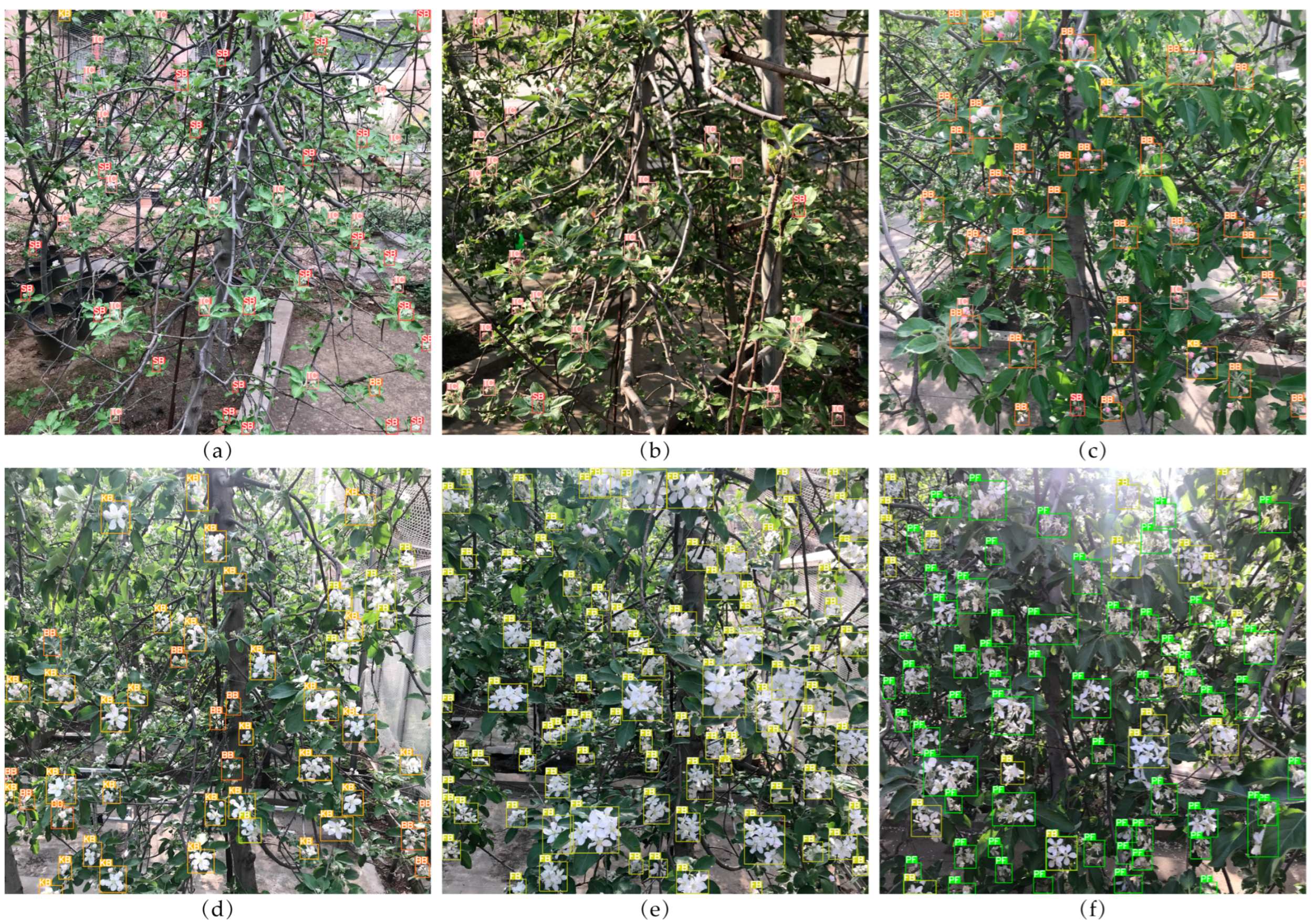

3.3. Apple Flower Detection in Natural Environment

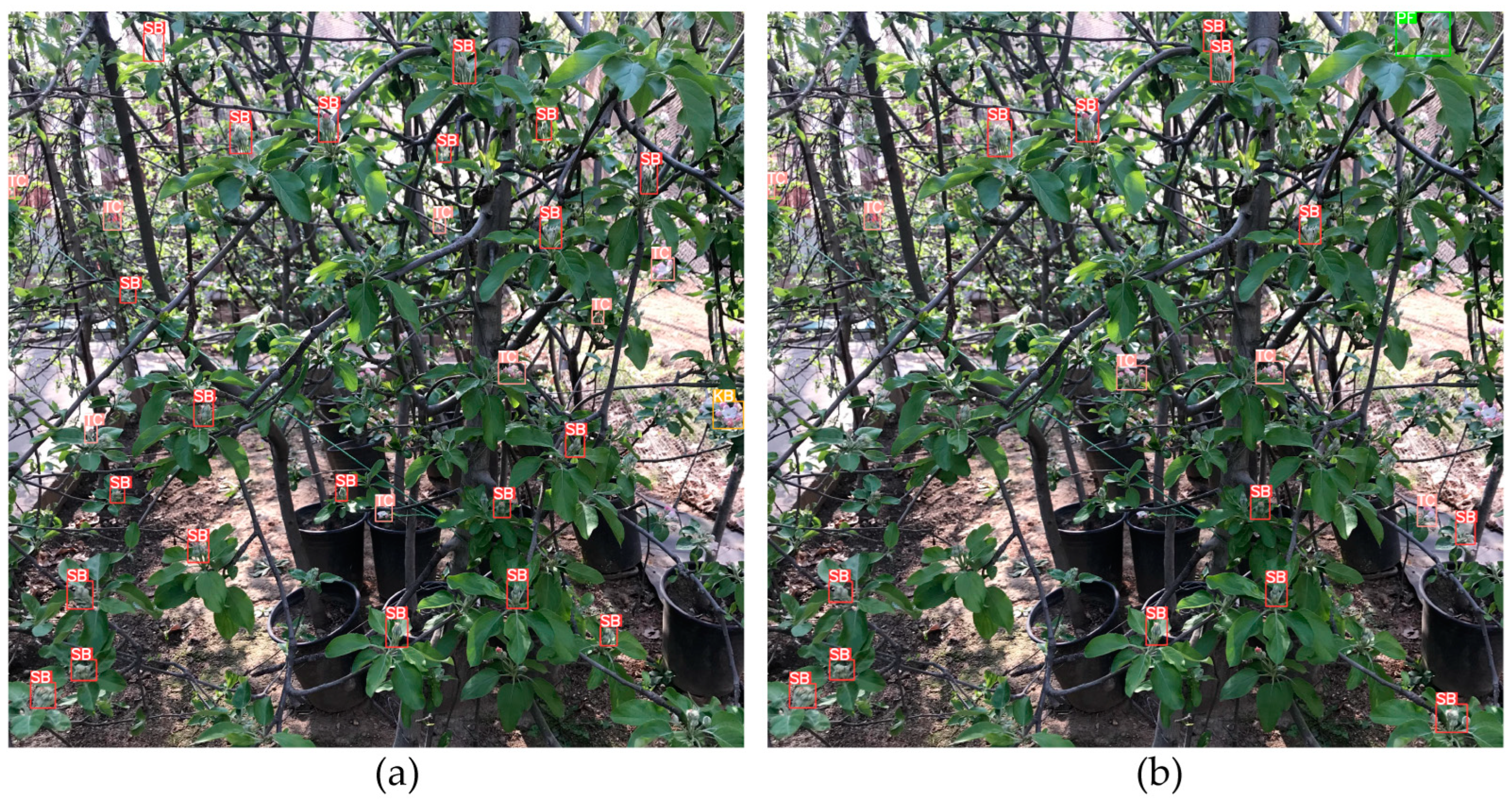

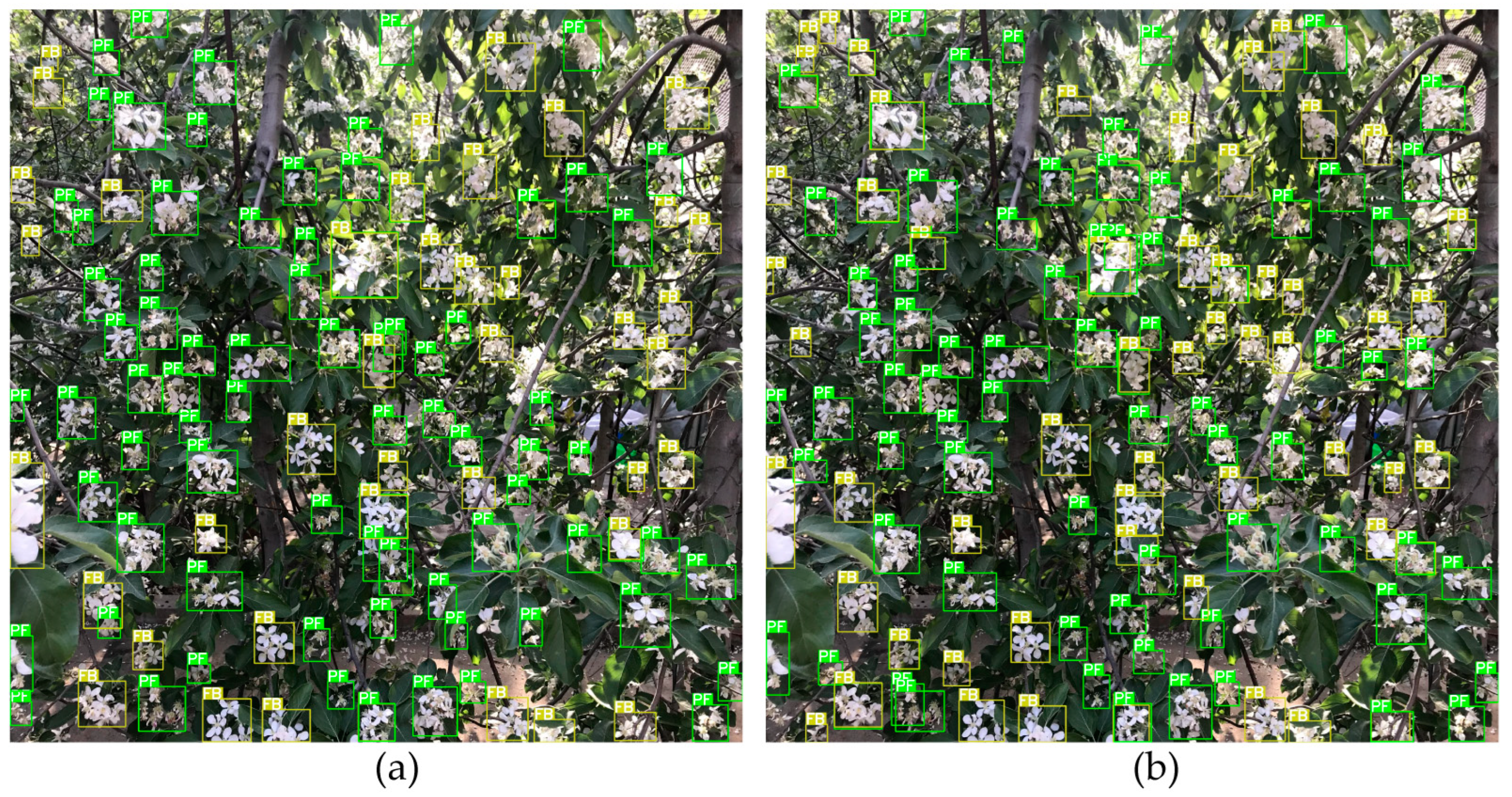

3.4. Inter-Annual Knowledge Transfer

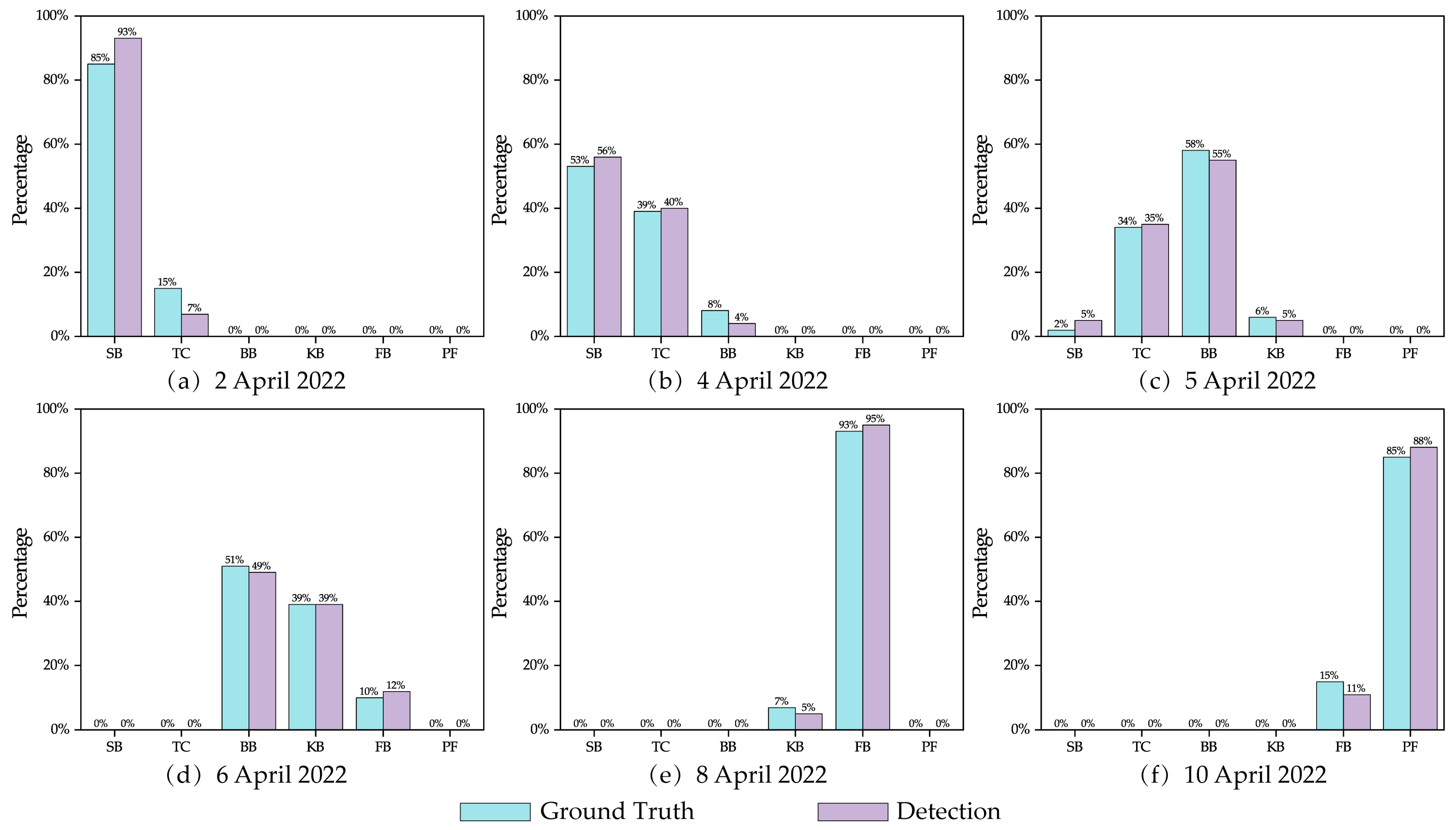

3.5. Blooming Level Estimation

3.6. Blooming Peak Day

4. Discussion

4.1. Comparison of Improved Model with YOLOv5s

4.2. Comparison of Proposed Model with Baseline Model

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Link, H. Significance of flower and fruit thinning on fruit quality. Plant Growth Regul. 2000, 31, 17–26.

- Aggelopoulou, K.D.; Wulfsohn, D.; Fountas, S.; Gemtos, T.A.; Nanos, G.D.; Blackmore, S. Spatial variation in yield and quality in a small apple orchard. Precis. Agric. 2009, 11, 538–556.

- Suo, G.-D.; Xie, Y.-S.; Zhang, Y.; Cai, M.-Y.; Wang, X.-S.; Chuai, J.-F. Crop load management (CLM) for sustainable apple production in China. Sci. Hortic. 2016, 211, 213–219.

- Solomakhin, A.A.; Blanke, M.M. Mechanical flower thinning improves the fruit quality of apples. J. Sci. Food Agric. 2010, 90, 735–741.

- DeLong, C.N.; Yoder, K.S.; Cochran, A.E.; Kilmer, S.W.; Royston, W.S.; Combs, L.D.; Peck, G.M. Apple Disease Control and Bloom-Thinning Effects by Lime Sulfur, Regalia, and JMS Stylet-Oil. Plant Health Prog. 2018, 19, 143–152.

- Greene, D.W. Chemicals, Timing, and Environmental Factors Involved in Thinner Efficacy on Apple. HortScience 2002, 37, 477–481.

- Wang, X.; Tang, J.; Whitty, M. DeepPhenology: Estimation of apple flower phenology distributions based on deep learning. Comput. Electron. Agric. 2021, 185, 106123.

- Mamat, N.; Othman, M.F.; Abdoulghafor, R.; Belhaouari, S.B.; Mamat, N.; Mohd Hussein, S.F. Advanced Technology in Agriculture Industry by Implementing Image Annotation Technique and Deep Learning Approach: A Review. Agriculture 2022, 12, 1033.

- Sozzi, M.; Cantalamessa, S.; Cogato, A.; Kayad, A.; Marinello, F. Automatic bunch detection in white grape varieties using YOLOv3, YOLOv4, and YOLOv5 deep learning algorithms. Agronomy 2022, 12, 319.

- Patrício, D.I.; Rieder, R. Computer vision and artificial intelligence in precision agriculture for grain crops: A systematic review. Comput. Electron. Agric. 2018, 153, 69–81.

- Aggelopoulou, A.D.; Bochtis, D.; Fountas, S.; Swain, K.C.; Gemtos, T.A.; Nanos, G.D. Yield prediction in apple orchards based on image processing. Precis. Agric. 2010, 12, 448–456.

- Hočevar, M.; Širok, B.; Godeša, T.; Stopar, M. Flowering estimation in apple orchards by image analysis. Precis. Agric. 2013, 15, 466–478.

- Comas, A.T.; Valente, J.; Kooistra, L. Automatic Apple Tree Blossom Estimation from UAV RGB Imagery. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, 42, 631–635.

- Piani, M.; Bortolotti, G.; Manfrini, L. Apple orchard flower clusters density mapping by unmanned aerial vehicle RGB acquisitions. In Proceedings of the 2021 IEEE International Workshop on Metrology for Agriculture and Forestry (MetroAgriFor), Perugia, Italy, 3–5 November 2021; pp. 92–96.

- Petrellis, N.; Antonopoulos, C.; Keramidas, G.; Voros, N. Mobile Plant Disease Classifier, Trained with a Small Number of Images by the End User. Agronomy 2022, 12, 1732.

- Wang, F.; Sun, Z.; Chen, Y.; Zheng, H.; Jiang, J. Xiaomila Green Pepper Target Detection Method under Complex Environment Based on Improved YOLOv5s. Agronomy 2022, 12, 1477.

- Peng, Y.; Wang, A.; Liu, J.; Faheem, M. A Comparative Study of Semantic Segmentation Models for Identification of Grape with Different Varieties. Agriculture 2021, 11, 997.

- Tian, Y.; Yang, G.; Wang, Z.; Wang, H.; Li, E.; Liang, Z. Apple detection during different growth stages in orchards using the improved YOLO-V3 model. Comput. Electron. Agric. 2019, 157, 417–426.

- Qi, C.; Nyalala, I.; Chen, K. Detecting the Early Flowering Stage of Tea Chrysanthemum Using the F-YOLO Model. Agronomy 2021, 11, 834.

- Xia, X.; Chai, X.; Zhang, N.; Sun, T. Visual classification of apple bud-types via attention-guided data enrichment network. Comput. Electron. Agric. 2021, 191, 106504.

- Wu, D.; Lv, S.; Jiang, M.; Song, H. Using channel pruning-based YOLO v4 deep learning algorithm for the real-time and accurate detection of apple flowers in natural environments. Comput. Electron. Agric. 2020, 178, 105742.

- Dias, P.A.; Tabb, A.; Medeiros, H. Multispecies Fruit Flower Detection Using a Refined Semantic Segmentation Network. IEEE Robot. Autom. Lett. 2018, 3, 3003–3010.

- Mu, X.; He, L. Mask R-CNN Based King Flowers Identification for Precise Apple Pollination. In Proceedings of the 2021 ASABE Annual International Virtual Meeting, St. Joseph, MI, USA, 12–16 July 2021.

- Sun, K.; Wang, X.; Liu, S.; Liu, C. Apple, peach, and pear flower detection using semantic segmentation network and shape constraint level set. Comput. Electron. Agric. 2021, 185, 106150.

- Dias, P.A.; Tabb, A.; Medeiros, H. Apple flower detection using deep convolutional networks. Comput. Ind. 2018, 99, 17–28.

- Bhattarai, U.; Bhusal, S.; Majeed, Y.; Karkee, M. Automatic blossom detection in apple trees using deep learning. IFAC-Pap. 2020, 53, 15810–15815.

- Wang, X.; Tang, J.; Whitty, M. Side-view apple flower mapping using edge-based fully convolutional networks for variable rate chemical thinning. Comput. Electron. Agric. 2020, 178, 105673.

- Zhang, Y.; He, S.; Wa, S.; Zong, Z.; Liu, Y. Using Generative Module and Pruning Inference for the Fast and Accurate Detection of Apple Flower in Natural Environments. Information 2021, 12, 495.

- Bhattarai, U.; Karkee, M. A weakly-supervised approach for flower/fruit counting in apple orchards. Comput. Ind. 2022, 138, 103635.

- Farjon, G.; Krikeb, O.; Hillel, A.B.; Alchanatis, V. Detection and counting of flowers on apple trees for better chemical thinning decisions. Precis. Agric. 2019, 21, 503–521.

- Tian, Y.; Yang, G.; Wang, Z.; Li, E.; Liang, Z. Instance segmentation of apple flowers using the improved Mask R-CNN model. Biosyst. Eng. 2020, 193, 264–278.

- Dennis, F., Jr. The history of fruit thinning. Plant Growth Regul. 2000, 31, 1–16.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 779–788.

- Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

- Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

- Wang, D.; He, D. Channel pruned YOLO V5s-based deep learning approach for rapid and accurate apple fruitlet detection before fruit thinning. Biosyst. Eng. 2021, 210, 271–281.

- Pei, H.; Sun, Y.; Huang, H.; Zhang, W.; Sheng, J.; Zhang, Z. Weed Detection in Maize Fields by UAV Images Based on Crop Row Preprocessing and Improved YOLOv4. Agriculture 2022, 12, 975.

- Ultralytics. YOLOv5: v6.0. Available online: https://github.com/ultralytics/yolov5 (accessed on 19 May 2022).

- Hou, Q.; Zhou, D.; Feng, J. Coordinate attention for efficient mobile network design. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 13713–13722.

- Zhu, X.; Lyu, S.; Wang, X.; Zhao, Q. TPH-YOLOv5: Improved YOLOv5 based on transformer prediction head for object detection on drone-captured scenarios. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 2778–2788.

- Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10781–10790.

- Losada, J.M.; Herrero, M. Flower strategy and stigma performance in the apple inflorescence. Sci. Hortic. 2013, 150, 283–289.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single shot MultiBox detector. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 21–37.

| Year | Date | Blooming Level | Total Images | Weather |
|---|---|---|---|---|
| 2021 | 11 April 2021 | FB | 203 | Light rain |
| 2021 | 12 April 2021 | FB | 452 | Cloudy |
| 2021 | 13 April 2021 | FB/PF | 382 | Clear |
| 2021 | 14 April 2021 | PF | 462 | Cloudy |
| 2022 | 1 April 2022 | SB | 337 | Clear |
| 2022 | 2 April 2022 | SB/TC | 613 | Clear |
| 2022 | 3 April 2022 | TC | 221 | Clear |
| 2022 | 4 April 2022 | TC/BB | 493 | Clear |
| 2022 | 5 April 2022 | BB/KB | 297 | Clear |
| 2022 | 6 April 2022 | KB/FB | 321 | Clear |
| 2022 | 7 April 2022 | FB | 502 | Clear |
| 2022 | 8 April 2022 | FB | 236 | Clear |
| 2022 | 9 April 2022 | FB | 212 | Clear |
| 2022 | 10 April 2022 | FB/PF | 500 | Cloudy |
| 2022 | 11 April 2022 | PF | 452 | Cloudy |
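
The dataset table can be summarised programmatically. The sketch below is a minimal illustration that transcribes the table rows by hand; the names `ROWS`, `images_per_year`, and `levels_per_year` are hypothetical and not part of the authors' code. It totals the images collected in each season and lists the blooming levels each season covers, which makes visible that the 2021 imagery spans only the FB and PF levels, whereas the 2022 imagery spans all six.

```python
from collections import Counter

# Rows transcribed by hand from the dataset table: (year, date, blooming level label, images).
# A label such as "FB/PF" marks a collection day on which two blooming levels were present.
ROWS = [
    (2021, "11 April 2021", "FB", 203),
    (2021, "12 April 2021", "FB", 452),
    (2021, "13 April 2021", "FB/PF", 382),
    (2021, "14 April 2021", "PF", 462),
    (2022, "1 April 2022", "SB", 337),
    (2022, "2 April 2022", "SB/TC", 613),
    (2022, "3 April 2022", "TC", 221),
    (2022, "4 April 2022", "TC/BB", 493),
    (2022, "5 April 2022", "BB/KB", 297),
    (2022, "6 April 2022", "KB/FB", 321),
    (2022, "7 April 2022", "FB", 502),
    (2022, "8 April 2022", "FB", 236),
    (2022, "9 April 2022", "FB", 212),
    (2022, "10 April 2022", "FB/PF", 500),
    (2022, "11 April 2022", "PF", 452),
]


def images_per_year(rows):
    """Sum the image counts collected in each season."""
    totals = Counter()
    for year, _date, _label, count in rows:
        totals[year] += count
    return dict(totals)


def levels_per_year(rows):
    """Collect the set of blooming levels recorded in each season."""
    levels = {}
    for year, _date, label, _count in rows:
        levels.setdefault(year, set()).update(label.split("/"))
    return levels


print(images_per_year(ROWS))
print({year: sorted(levels) for year, levels in levels_per_year(ROWS).items()})
```

Splitting mixed labels such as "FB/PF" on the slash counts such a day toward both levels; this is an assumption about how the labels should be read, since the table does not give per-level image counts for those days.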

| Training Parameter | Value |
|---|---|
| Input Image Size | 640 × 640 pixels |
| Batch Size | 4 |
| Iterations | 160,000 |
| Momentum | 0.937 |
| Weight Decay Rate | 0.0005 |
| Initial Learning Rate | 0.01 |
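
To show how the momentum, weight decay, and initial learning rate in this table interact, the sketch below applies one plain stochastic gradient descent step to a single scalar weight. It assumes the non-Nesterov, zero-dampening update used by PyTorch's `torch.optim.SGD`, in which the weight decay term is added to the gradient before the momentum buffer is updated. The YOLOv5 trainer may differ in detail (for example Nesterov momentum, learning-rate warm-up and decay, and no decay on bias or normalization parameters), so this is an illustration of the hyperparameters rather than the authors' training loop. The names `TrainConfig` and `sgd_step` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    """Hyperparameters transcribed from the training-parameter table."""
    img_size: int = 640
    batch_size: int = 4
    iterations: int = 160_000  # assumed to mean optimizer steps
    momentum: float = 0.937
    weight_decay: float = 0.0005
    lr0: float = 0.01  # initial learning rate; any decay schedule is ignored here


def sgd_step(w, grad, buf, cfg):
    """One SGD update with momentum and L2 weight decay (no Nesterov, no dampening)."""
    g = grad + cfg.weight_decay * w  # fold the L2 penalty into the gradient
    buf = cfg.momentum * buf + g  # exponentially accumulated momentum buffer
    w = w - cfg.lr0 * buf  # move against the accumulated direction
    return w, buf


cfg = TrainConfig()
w, buf = sgd_step(w=1.0, grad=0.5, buf=0.0, cfg=cfg)
print(w, buf)
print("image samples seen:", cfg.batch_size * cfg.iterations)
```

With a momentum of 0.937, the buffer averages roughly the last 1 / (1 − 0.937) ≈ 16 gradients, which smooths the noise from the small batch size of 4. If "iterations" does denote optimizer steps, the schedule corresponds to 4 × 160,000 = 640,000 image samples.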

| Year | mAP (%) | FB AP (%) | PF AP (%) |
|---|---|---|---|
| 2021 | 86.4 | 87.2 | 85.6 |
| 2022 | 86.6 | 87.5 | 85.8 |
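
The mAP column in this table is consistent with the unweighted mean of the per-class APs, mAP = (AP_FB + AP_PF) / 2. The sketch below recomputes it from the transcribed values. Because every published number is rounded to one decimal, it accepts a discrepancy of up to 0.1 percentage points (at most 0.05 from the rounded per-class values plus 0.05 from the rounded mAP). The names `REPORTED`, `mean_ap`, and `check_map` are hypothetical.

```python
# Per-class AP (%) and reported mAP (%), transcribed from the inter-annual table.
REPORTED = {
    2021: {"mAP": 86.4, "FB": 87.2, "PF": 85.6},
    2022: {"mAP": 86.6, "FB": 87.5, "PF": 85.8},
}

# Rounding budget: per-class values and the reported mAP are each rounded to 0.1.
TOLERANCE = 0.1


def mean_ap(per_class_ap):
    """Mean average precision: the unweighted mean of the per-class APs."""
    return sum(per_class_ap.values()) / len(per_class_ap)


def check_map(row, tol=TOLERANCE):
    """Recompute mAP from the per-class columns and compare it with the reported value."""
    per_class = {name: ap for name, ap in row.items() if name != "mAP"}
    recomputed = mean_ap(per_class)
    return recomputed, abs(recomputed - row["mAP"]) <= tol


for year, row in REPORTED.items():
    recomputed, ok = check_map(row)
    print(year, f"{recomputed:.2f}", "consistent" if ok else "inconsistent")
```

The 2022 row recomputes to 86.65, which the table reports as 86.6; the gap is within the rounding budget. The same check also passes for every row of the two detector-comparison tables.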

| Model | mAP (%) | SB AP (%) | TC AP (%) | BB AP (%) | KB AP (%) | FB AP (%) | PF AP (%) |
|---|---|---|---|---|---|---|---|
| YOLOv5s | 75.8 | 40.0 | 74.9 | 84.6 | 82.2 | 87.2 | 85.7 |
| Ours | 77.5 | 44.8 | 76.7 | 85.8 | 84.1 | 87.5 | 85.8 |
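
The per-level improvement of the proposed model over YOLOv5s can be read off as a column-wise difference. The sketch below computes these differences in percentage points from the transcribed table values; the names `YOLOV5S`, `OURS`, and `ap_gains` are hypothetical. It shows that the largest gain falls on SB, which is also the level with the lowest baseline AP, while the FB and PF levels improve only marginally. The table by itself does not show which architectural change is responsible for each gain.

```python
CLASSES = ["SB", "TC", "BB", "KB", "FB", "PF"]

# AP (%) per blooming level and mAP (%), transcribed from the YOLOv5s comparison table.
YOLOV5S = {"mAP": 75.8, "SB": 40.0, "TC": 74.9, "BB": 84.6, "KB": 82.2, "FB": 87.2, "PF": 85.7}
OURS = {"mAP": 77.5, "SB": 44.8, "TC": 76.7, "BB": 85.8, "KB": 84.1, "FB": 87.5, "PF": 85.8}


def ap_gains(improved, baseline):
    """Percentage-point difference per column, rounded to the table's one-decimal precision."""
    return {key: round(improved[key] - baseline[key], 1) for key in baseline}


gains = ap_gains(OURS, YOLOV5S)
largest_gain = max(CLASSES, key=gains.get)  # level with the biggest improvement
hardest = min(CLASSES, key=YOLOV5S.get)  # level with the lowest baseline AP
print(gains)
print("largest gain:", largest_gain, "| lowest baseline AP:", hardest)
```

Rounding the differences to one decimal keeps floating-point noise (for example 4.799999999999997) out of the comparison and matches the precision of the source table.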

| Model | mAP (%) | SB AP (%) | TC AP (%) | BB AP (%) | KB AP (%) | FB AP (%) | PF AP (%) |
|---|---|---|---|---|---|---|---|
| Faster R-CNN | 73.4 | 37.0 | 71.4 | 83.1 | 79.6 | 84.6 | 84.5 |
| SSD | 73.9 | 39.5 | 70.5 | 83.8 | 80.2 | 85.1 | 84.2 |
| YOLOv3 | 74.6 | 40.4 | 72.9 | 83.6 | 81.3 | 85.0 | 84.7 |
| Ours | 77.5 | 44.8 | 76.7 | 85.8 | 84.1 | 87.5 | 85.8 |
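
A compact way to read the detector comparison is to rank the models by mAP and, for each column, report the best model and its lead over the runner-up. The sketch below does this for the transcribed values; the names `TABLE`, `rank_by`, and `best_and_margin` are hypothetical. On these numbers the proposed model ranks first in every column, with its widest lead on SB (4.4 points over YOLOv3) and its narrowest on PF (1.1 points).

```python
COLUMNS = ["mAP", "SB", "TC", "BB", "KB", "FB", "PF"]

# Rows transcribed from the detector-comparison table (values in %).
TABLE = {
    "Faster R-CNN": [73.4, 37.0, 71.4, 83.1, 79.6, 84.6, 84.5],
    "SSD": [73.9, 39.5, 70.5, 83.8, 80.2, 85.1, 84.2],
    "YOLOv3": [74.6, 40.4, 72.9, 83.6, 81.3, 85.0, 84.7],
    "Ours": [77.5, 44.8, 76.7, 85.8, 84.1, 87.5, 85.8],
}


def rank_by(table, columns, column="mAP"):
    """Model names ordered from best to worst on one column."""
    i = columns.index(column)
    return sorted(table, key=lambda model: table[model][i], reverse=True)


def best_and_margin(table, columns):
    """For each column, the top model and its lead over the runner-up in percentage points."""
    result = {}
    for column in columns:
        top, runner_up = rank_by(table, columns, column)[:2]
        i = columns.index(column)
        result[column] = (top, round(table[top][i] - table[runner_up][i], 1))
    return result


print(rank_by(TABLE, COLUMNS))
for column, (model, lead) in best_and_margin(TABLE, COLUMNS).items():
    print(f"{column}: {model} leads by {lead}")
```

The runner-up differs by column (YOLOv3 on SB, TC, KB, and PF; SSD on BB and FB), which is why the margins are computed per column rather than against a single fixed baseline.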

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, Z.; Su, R.; Wang, Y.; Chen, G.; Wang, Z.; Yin, P.; Wang, J. Automatic Estimation of Apple Orchard Blooming Levels Using the Improved YOLOv5. Agronomy 2022, 12, 2483. https://doi.org/10.3390/agronomy12102483
