Grape Bunch Detection at Different Growth Stages Using Deep Learning Quantized Models

Abstract

1. Introduction

- A publicly available dataset (https://doi.org/10.5281/zenodo.5114142) (accessed on 23 August 2021) containing 1929 images and annotations of grape bunches at different growth stages, captured by different cameras under several illumination conditions;

- A benchmark of Deep Learning (DL) quantized models for grape bunch detection at different growth stages;

- The deployment of the models on a low-cost, low-power embedded hardware device.

2. Related Work

3. Deep-Learning-Based Grape Bunch Detection

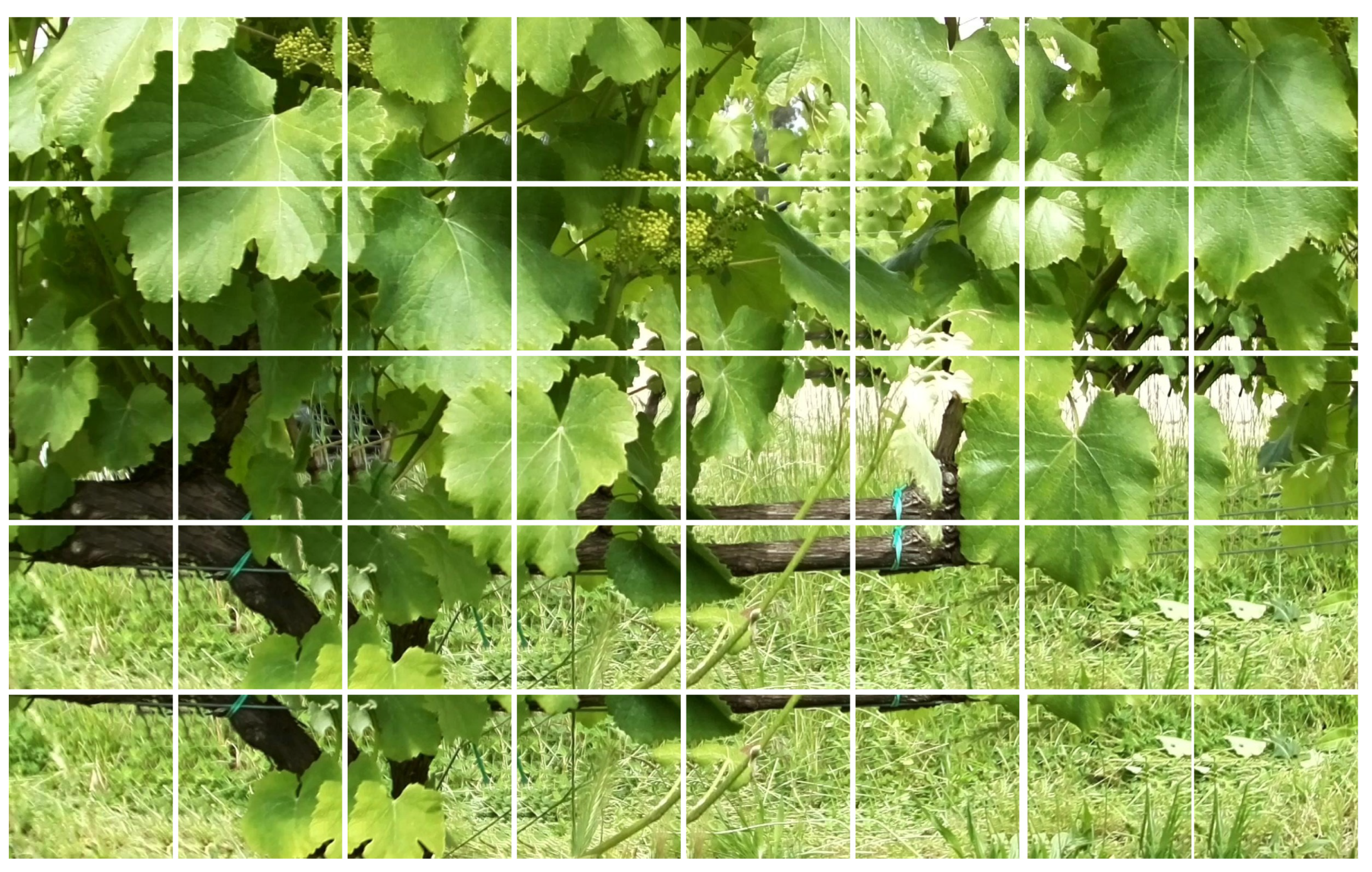

- Data collection: video data recorded by cameras mounted on top of an agricultural robotic platform; extraction of images from the videos and their storage, to build the input dataset;

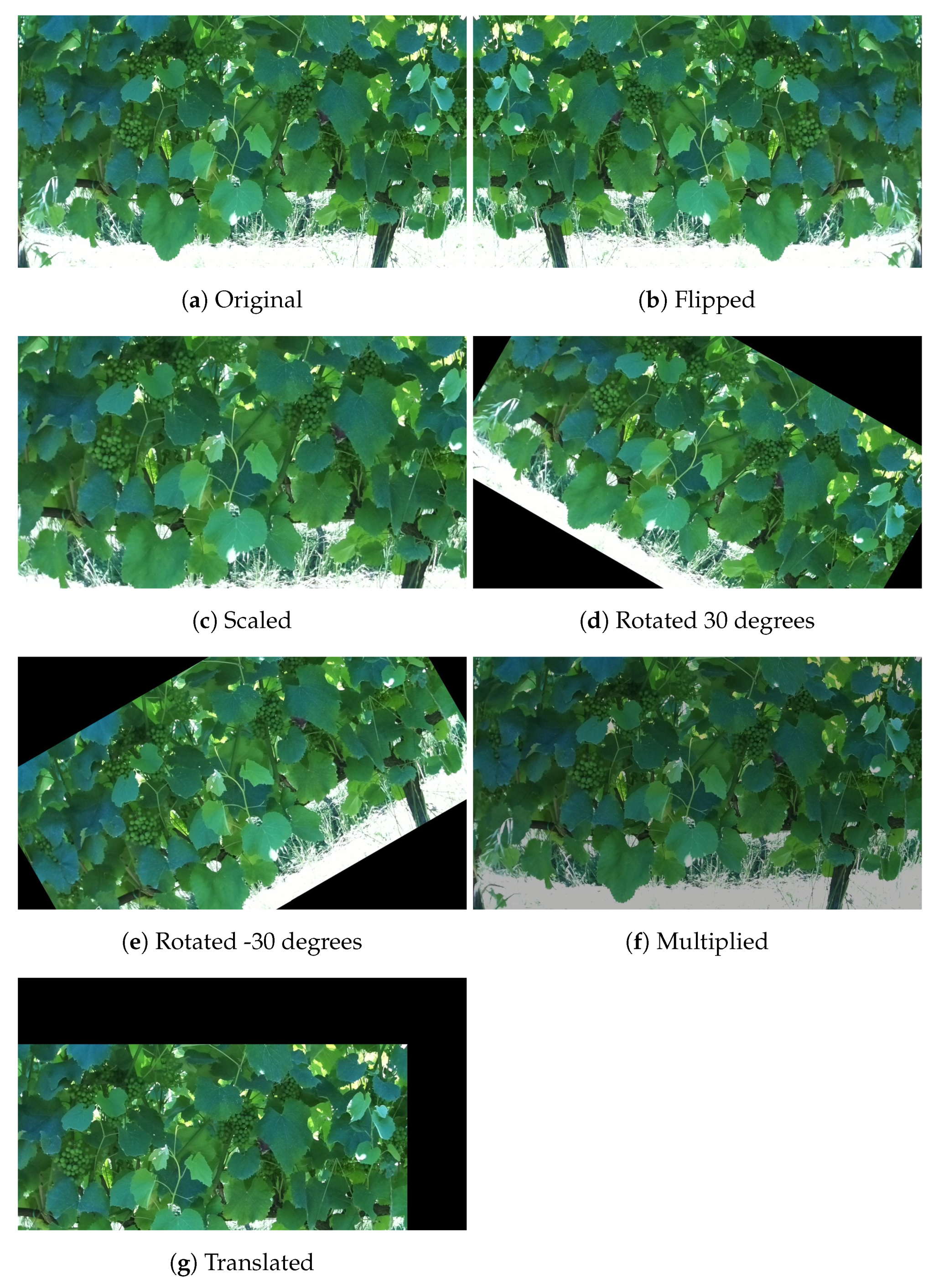

- Dataset generation: image annotation, by drawing bounding boxes around the grape bunches in the images and assigning each box to one of two classes; image augmentation, by applying several operations to the images and annotations to increase the dataset size and avoid overfitting when training the DL models; and image splitting into tiles, to avoid the loss of resolution caused by the models resizing each image to their input size (in this case, 300 × 300 px, with three channels);

- Model training and deployment: training and quantization of the DL models to deploy them in a low-cost and low-power embedded device with the main goal of performing time-effective grape bunch detection in images.
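
The image-splitting step can be illustrated with a minimal sketch. It assumes non-overlapping 300 × 300 px tiles (matching the model input size stated above), bounding boxes stored as (x_min, y_min, x_max, y_max) pixel coordinates, and a hypothetical rule that keeps a cut box only when at least half of its area lies inside the tile. The source does not specify tile overlap or how bunches cut by a tile border are handled, and `tile_origins` and `boxes_in_tile` are illustrative names, not the authors' code.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max) in pixels

TILE = 300  # assumed tile side, equal to the 300 x 300 px model input


def tile_origins(width: int, height: int, tile: int = TILE) -> List[Tuple[int, int]]:
    """Top-left corners of non-overlapping tiles that cover the whole image.

    Tiles on the right and bottom borders are smaller when the image size
    is not a multiple of `tile`.
    """
    return [(x, y) for y in range(0, height, tile) for x in range(0, width, tile)]


def boxes_in_tile(boxes: List[Box], ox: int, oy: int, tile: int = TILE,
                  min_visible: float = 0.5) -> List[Box]:
    """Clip boxes to the tile at (ox, oy) and express them in tile coordinates.

    A box is kept only if at least `min_visible` of its area lies inside the
    tile; this threshold is a hypothetical choice, not taken from the source.
    """
    kept = []
    for x0, y0, x1, y1 in boxes:
        cx0, cy0 = max(x0, ox), max(y0, oy)
        cx1, cy1 = min(x1, ox + tile), min(y1, oy + tile)
        if cx1 <= cx0 or cy1 <= cy0:
            continue  # box does not overlap this tile
        area = (x1 - x0) * (y1 - y0)
        visible = (cx1 - cx0) * (cy1 - cy0)
        if area > 0 and visible / area >= min_visible:
            kept.append((cx0 - ox, cy0 - oy, cx1 - ox, cy1 - oy))
    return kept
```

For a hypothetical 1280 × 720 frame, `tile_origins(1280, 720)` yields 15 origins (5 columns by 3 rows); the pixels of each tile would be cropped with the same origins, e.g. `image[oy:oy + 300, ox:ox + 300]` for a NumPy array.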

3.1. Data Collection

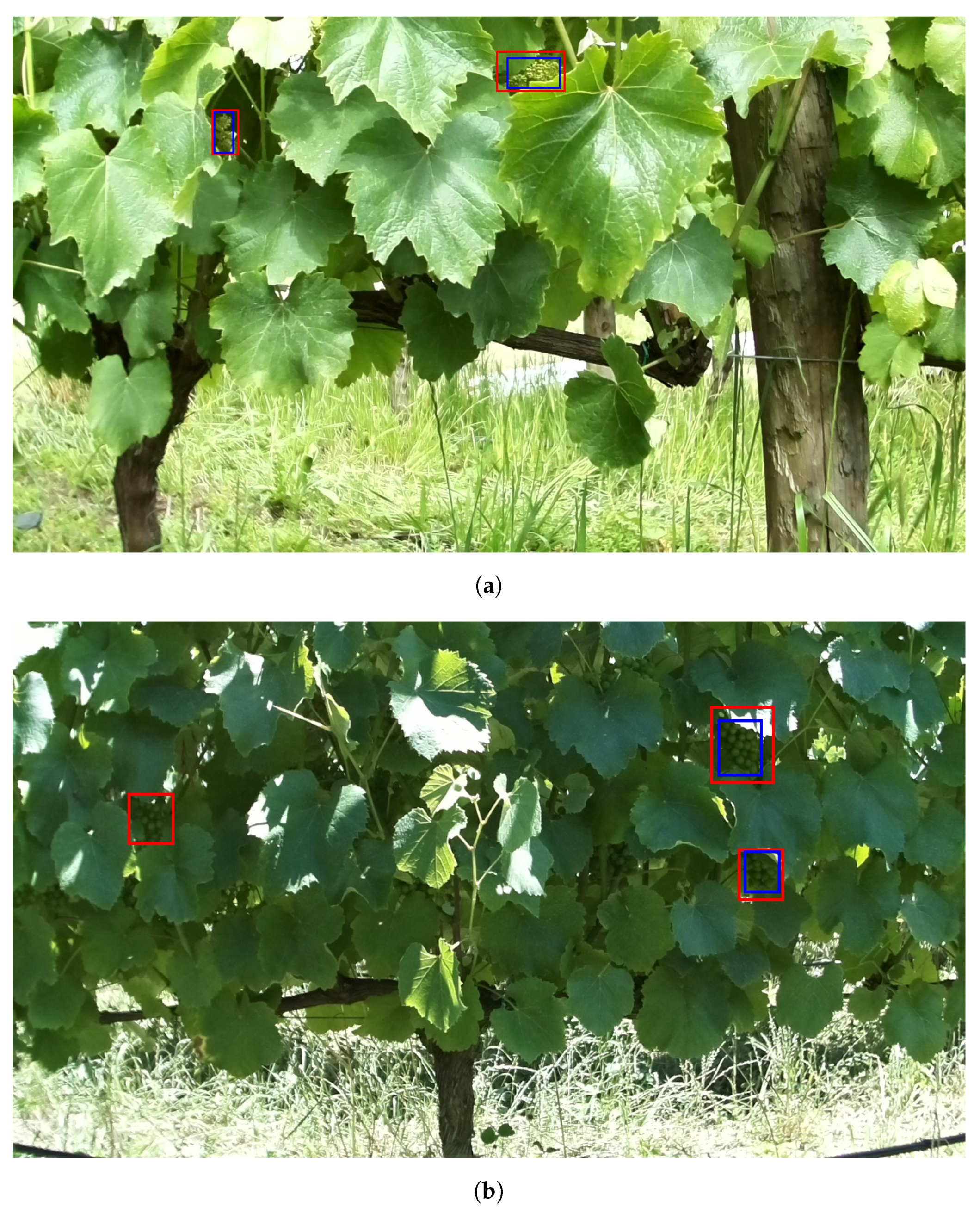

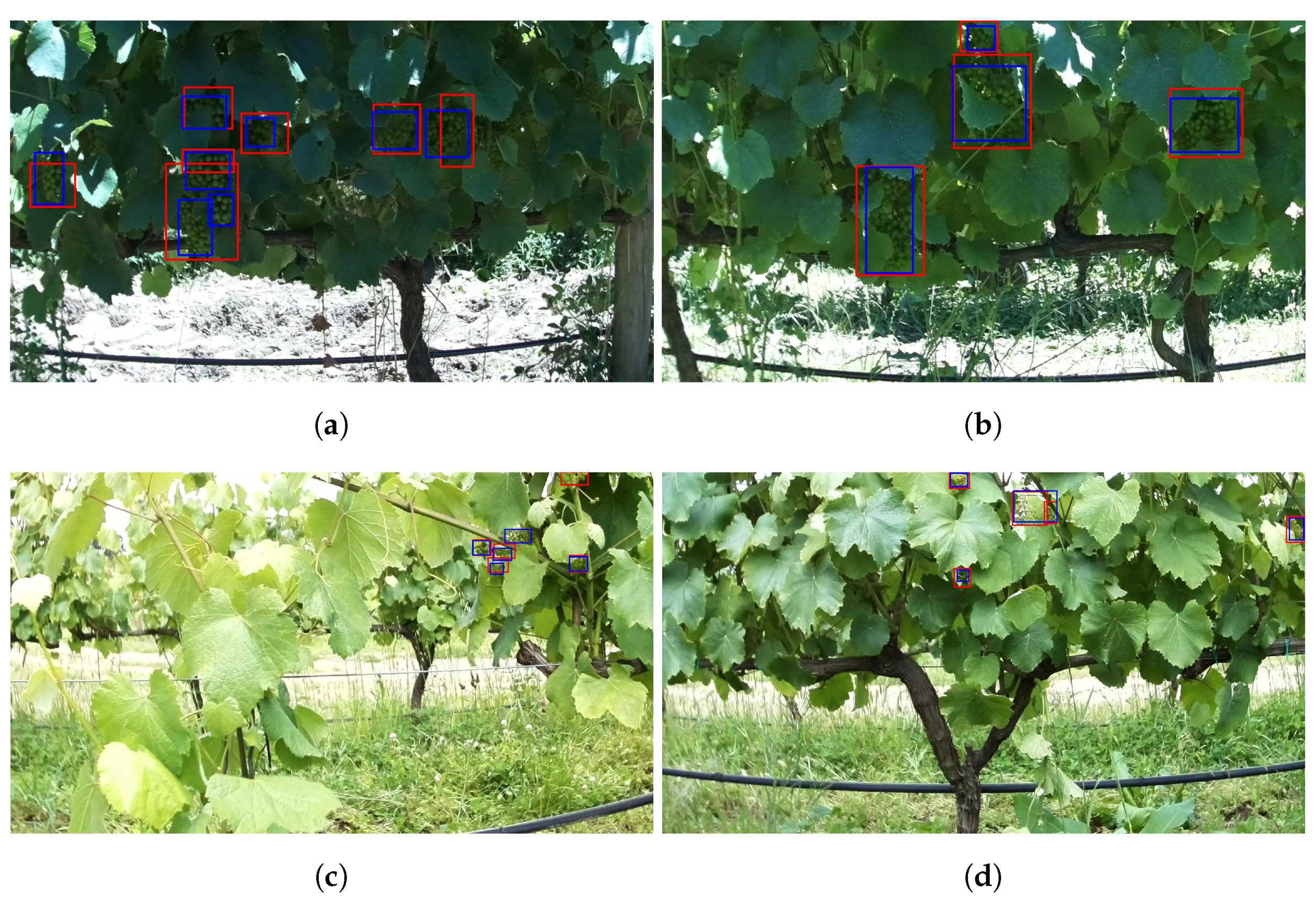

3.2. Dataset Generation

1. Rotation;

2. Translation;

3. Scale;

4. Flipping;

5. Multiplication.
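
The geometric operations in this list (rotation, translation, scale and flipping) move the grape bunches, so each bounding box must be transformed together with the pixels, whereas multiplication only changes pixel intensities. The following pure-Python sketch shows one way to do this, assuming boxes stored as (x_min, y_min, x_max, y_max), transformations about the image centre, and re-boxing of a rotated box as the axis-aligned box enclosing its four corners. The parameter ranges in the comments follow the augmentation table; the function names are hypothetical, and the source does not state which augmentation library was used.

```python
import math
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def _corners(b: Box) -> List[Tuple[float, float]]:
    x0, y0, x1, y1 = b
    return [(x0, y0), (x1, y0), (x1, y1), (x0, y1)]


def _enclose(points: List[Tuple[float, float]]) -> Box:
    """Axis-aligned box enclosing a set of points."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return (min(xs), min(ys), max(xs), max(ys))


def hflip(b: Box, width: float) -> Box:
    """Mirror horizontally: x -> width - x."""
    x0, y0, x1, y1 = b
    return (width - x1, y0, width - x0, y1)


def translate(b: Box, tx: float, ty: float, width: float, height: float) -> Box:
    """Shift by fractions of the image size (tx, ty in [-0.3, 0.3] per the table)."""
    dx, dy = tx * width, ty * height
    x0, y0, x1, y1 = b
    return (x0 + dx, y0 + dy, x1 + dx, y1 + dy)


def scale(b: Box, s: float, width: float, height: float) -> Box:
    """Scale about the image centre by factor s (0.5 to 1.5 per the table)."""
    cx, cy = width / 2, height / 2
    return _enclose([(cx + (x - cx) * s, cy + (y - cy) * s) for x, y in _corners(b)])


def rotate(b: Box, degrees: float, width: float, height: float) -> Box:
    """Rotate about the image centre and re-box the rotated corners.

    With y pointing down, positive angles rotate clockwise on screen.
    """
    cx, cy = width / 2, height / 2
    a = math.radians(degrees)
    c, s = math.cos(a), math.sin(a)
    pts = [(cx + (x - cx) * c - (y - cy) * s, cy + (x - cx) * s + (y - cy) * c)
           for x, y in _corners(b)]
    return _enclose(pts)


def multiply(pixels: List[List[int]], factor: float) -> List[List[int]]:
    """Multiply every 8-bit pixel by one factor and clip to [0, 255]; boxes are unchanged."""
    return [[min(255, max(0, round(p * factor))) for p in row] for row in pixels]
```

Boxes pushed partly or wholly outside the frame by these transformations would still need clipping or removal, which is not shown.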

3.3. Models’ Training and Deployment
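
The quantization step mentioned in the methodology overview converts 32-bit floating-point values into 8-bit integers so that the models can run on the embedded device. As an illustration only, the sketch below implements the affine (scale and zero-point) mapping described in the integer-quantization literature (e.g., Wu et al.); the signed 8-bit range, the min/max range selection and all function names are assumptions, and real deployment toolchains apply such a mapping per tensor or per channel with their own calibration rules rather than with this code.

```python
from typing import List, Tuple

QMIN, QMAX = -128, 127  # signed 8-bit integer range (assumed)


def affine_params(xmin: float, xmax: float) -> Tuple[float, int]:
    """Scale and zero-point that map the real range [xmin, xmax] onto [QMIN, QMAX]."""
    xmin, xmax = min(xmin, 0.0), max(xmax, 0.0)  # include 0 so that 0.0 is exactly representable
    scale = (xmax - xmin) / (QMAX - QMIN)
    if scale == 0.0:
        scale = 1.0  # degenerate all-zero range
    zero_point = round(QMIN - xmin / scale)
    return scale, max(QMIN, min(QMAX, zero_point))


def quantize(xs: List[float], scale: float, zero_point: int) -> List[int]:
    """q = clamp(round(x / scale) + zero_point) to the 8-bit range."""
    return [max(QMIN, min(QMAX, round(x / scale) + zero_point)) for x in xs]


def dequantize(qs: List[int], scale: float, zero_point: int) -> List[float]:
    """Approximate reconstruction x = (q - zero_point) * scale."""
    return [(q - zero_point) * scale for q in qs]
```

For a tensor whose values span [−1, 2], the scale is 3/255 and the zero-point is −43; within that range, the round-trip error of each value is at most half the scale.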

4. Results

4.1. Methodology

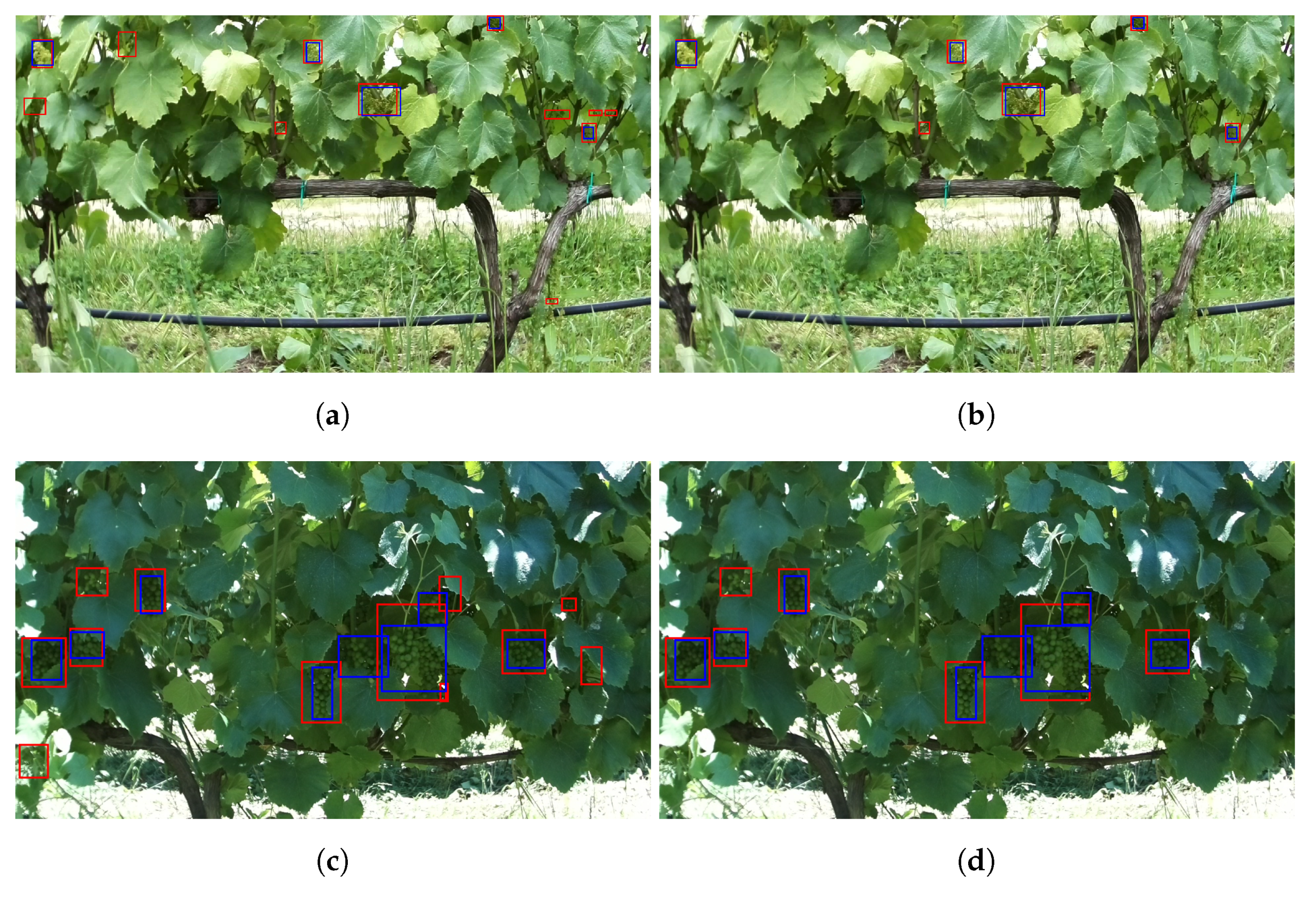

- Intersection over Union (IoU): a measure based on the Jaccard index that quantifies the overlap between a ground truth and a predicted bounding box as the area of their intersection divided by the area of their union;

- True Positive (TP): a valid detection, i.e., IoU ≥ threshold;

- False Positive (FP): an invalid detection, i.e., IoU < threshold;

- False Negative (FN): a ground truth bounding box not detected.

- Precision: defined as the ability of a given model to detect only relevant objects, precision is calculated as the percentage of TP among all detections and is given by:

  $$\text{Precision} = \frac{TP}{TP + FP}$$

- Recall: defined as the ability of a given model to find all the ground truth bounding boxes, recall is calculated as the percentage of TP among all ground truths and is given by:

  $$\text{Recall} = \frac{TP}{TP + FN}$$

- F1 score: defined as the harmonic mean of precision and recall, F1 score is given by:

  $$F_1 = 2 \times \frac{\text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$$
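
The definitions of IoU, TP, FP, FN, precision, recall and F1 score can be made concrete with a short sketch. It assumes boxes stored as (x_min, y_min, x_max, y_max) and a greedy one-to-one matching in which the detections of one class, taken in descending confidence order, are each assigned to the still-unmatched ground truth with the highest IoU. This is one common convention, close to but not identical with the PASCAL VOC protocol; the source does not state which matching rule was used, the default threshold of 0.5 is an assumption, and `iou`, `match` and `prf1` are hypothetical names.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over Union (Jaccard index) of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match(dets: List[Tuple[float, Box]], gts: List[Box],
          thr: float = 0.5) -> Tuple[List[bool], int]:
    """Greedily match (score, box) detections, highest score first, to unused ground truths.

    Returns one TP flag per detection (in descending-score order) and the FN count.
    """
    used = [False] * len(gts)
    flags = []
    for _, box in sorted(dets, key=lambda d: d[0], reverse=True):
        best, best_j = 0.0, -1
        for j, g in enumerate(gts):
            if not used[j]:
                v = iou(box, g)
                if v > best:
                    best, best_j = v, j
        if best_j >= 0 and best >= thr:
            used[best_j] = True
            flags.append(True)   # TP: IoU >= threshold
        else:
            flags.append(False)  # FP: no free ground truth with IoU >= threshold
    return flags, used.count(False)  # unmatched ground truths are FN


def prf1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Precision, recall and F1 score from the TP, FP and FN counts."""
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * p * r / (p + r) if p + r else 0.0
    return p, r, f1
```

For example, two identical detections of a single bunch give one TP and one FP, because the second detection finds its ground truth already matched; duplicate detections therefore lower precision without raising recall.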

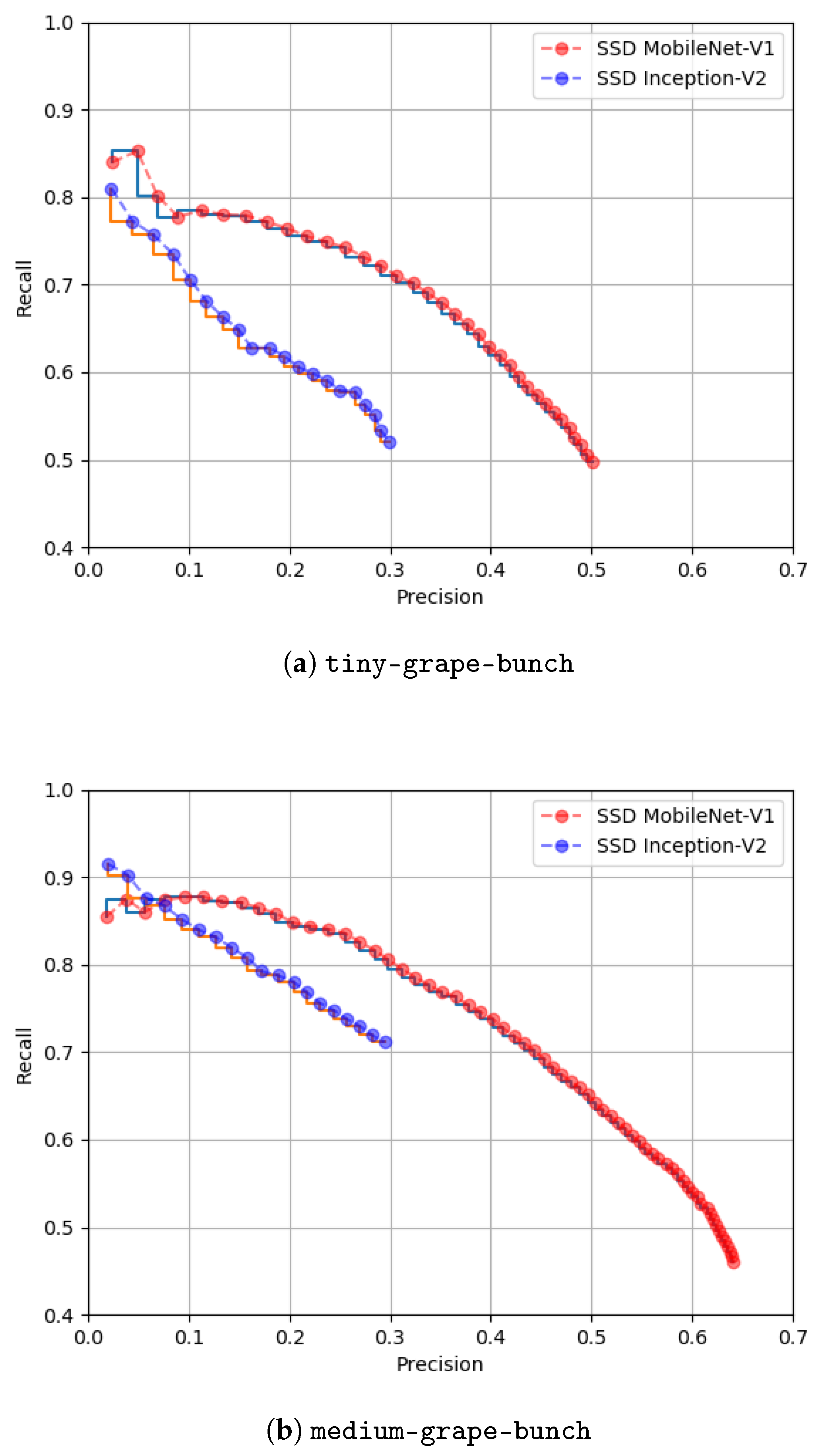

- Precision × recall curve: a curve, plotted for each object class, that shows the trade-off between precision and recall;

- Average Precision (AP): the area under the precision × recall curve. A large area indicates that both precision and recall are high;

- mean Average Precision (mAP): the mean of the AP over all object classes;

- Inference time: defined in this work as the amount of time that a model takes to process a tile or an image, on average.
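
AP and mAP can be computed from the TP/FP decisions once the detections of a class are sorted by descending confidence. The sketch below uses all-point interpolation, in which precision is first made monotonically non-increasing and the area under the resulting step curve is summed; this is the convention used by PASCAL VOC from 2010 onwards, while VOC 2007 used 11-point interpolation. Which variant the authors used is not stated in this section, so the choice is an assumption, and the function names are hypothetical.

```python
from typing import Dict, List


def average_precision(tp_flags: List[bool], n_gt: int) -> float:
    """All-point interpolated AP from TP/FP flags sorted by descending confidence."""
    if n_gt == 0:
        return 0.0
    precisions, recalls = [], []
    tp = fp = 0
    for is_tp in tp_flags:
        tp += is_tp
        fp += not is_tp
        precisions.append(tp / (tp + fp))
        recalls.append(tp / n_gt)
    # Interpolated envelope: precision at each rank is the best precision at any higher recall.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sum the rectangles of the step curve; FP ranks add no recall and hence no area.
    ap, prev_r = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_r) * p
        prev_r = r
    return ap


def mean_ap(ap_per_class: Dict[str, float]) -> float:
    """mAP: the unweighted mean of the per-class AP values."""
    return sum(ap_per_class.values()) / len(ap_per_class)
```

For instance, the ranked sequence TP, FP, TP against two ground truths gives an AP of 0.5 × 1 + 0.5 × 2/3 ≈ 0.83, and averaging the two per-class APs of 40.38% and 49.48% reported for SSD MobileNet-V1 at 30% confidence reproduces its 44.93% mAP.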

4.2. Evaluation

4.3. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Duckett, T.; Pearson, S.; Blackmore, S.; Grieve, B.; Chen, W.H.; Cielniak, G.; Cleaversmith, J.; Dai, J.; Davis, S.; Fox, C.; et al. Agricultural Robotics: The Future of Robotic Agriculture. arXiv 2018, arXiv:cs.RO/1806.06762.

2. Billingsley, J.; Visala, A.; Dunn, M. Robotics in Agriculture and Forestry. In Springer Handbook of Robotics; Siciliano, B., Khatib, O., Eds.; Springer: Berlin/Heidelberg, Germany, 2008; pp. 1065–1077.

3. Andresen, T.; de Aguiar, F.B.; Curado, M.J. The Alto Douro Wine Region greenway. Landsc. Urban Plan. 2004, 68, 289–303.

4. Patrício, D.I.; Rieder, R. Computer vision and artificial intelligence in precision agriculture for grain crops: A systematic review. Comput. Electron. Agric. 2018, 153, 69–81.

5. Bargoti, S.; Underwood, J.P. Image segmentation for fruit detection and yield estimation in apple orchards. J. Field Robot. 2017, 34, 1039–1060.

6. LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

7. Schmidhuber, J. Deep learning in neural networks: An overview. Neural Netw. 2015, 61, 85–117.

8. Santos, L.; Santos, F.N.; Oliveira, P.M.; Shinde, P. Deep Learning Applications in Agriculture: A Short Review. In Proceedings of the Robot 2019: Fourth Iberian Robotics Conference, Porto, Portugal, 20–22 November 2020; Silva, M.F., Luís Lima, J., Reis, L.P., Sanfeliu, A., Tardioli, D., Eds.; Springer: Cham, Switzerland, 2020; pp. 139–151.

9. Kamilaris, A.; Prenafeta-Boldú, F.X. Deep learning in agriculture: A survey. Comput. Electron. Agric. 2018, 147, 70–90.

10. Kamilaris, A.; Prenafeta-Boldú, F.X. A review of the use of convolutional neural networks in agriculture. J. Agric. Sci. 2018, 156, 312–322.

11. Taheri-Garavand, A.; Nasiri, A.; Fanourakis, D.; Fatahi, S.; Omid, M.; Nikoloudakis, N. Automated In Situ Seed Variety Identification via Deep Learning: A Case Study in Chickpea. Plants 2021, 10, 1406.

12. Nasiri, A.; Taheri-Garavand, A.; Fanourakis, D.; Zhang, Y.D.; Nikoloudakis, N. Automated Grapevine Cultivar Identification via Leaf Imaging and Deep Convolutional Neural Networks: A Proof-of-Concept Study Employing Primary Iranian Varieties. Plants 2021, 10, 1628.

13. Wang, X.; Han, Y.; Wang, C.; Zhao, Q.; Chen, X.; Chen, M. In-Edge AI: Intelligentizing Mobile Edge Computing, Caching and Communication by Federated Learning. IEEE Netw. 2019, 33, 156–165.

14. Pinto de Aguiar, A.S.; Neves dos Santos, F.B.; Feliz dos Santos, L.C.; de Jesus Filipe, V.M.; Miranda de Sousa, A.J. Vineyard trunk detection using deep learning—An experimental device benchmark. Comput. Electron. Agric. 2020, 175, 105535.

15. Aguiar, A.S.; Santos, F.N.D.; De Sousa, A.J.M.; Oliveira, P.M.; Santos, L.C. Visual Trunk Detection Using Transfer Learning and a Deep Learning-Based Coprocessor. IEEE Access 2020, 8, 77308–77320.

16. Aguiar, A.S.; Monteiro, N.N.; Santos, F.N.d.; Solteiro Pires, E.J.; Silva, D.; Sousa, A.J.; Boaventura-Cunha, J. Bringing Semantics to the Vineyard: An Approach on Deep Learning-Based Vine Trunk Detection. Agriculture 2021, 11, 131.

17. Magalhães, S.A.; Castro, L.; Moreira, G.; dos Santos, F.N.; Cunha, M.; Dias, J.; Moreira, A.P. Evaluating the Single-Shot MultiBox Detector and YOLO Deep Learning Models for the Detection of Tomatoes in a Greenhouse. Sensors 2021, 21, 3569.

18. Fanourakis, D.; Kazakos, F.; Nektarios, P.A. Allometric Individual Leaf Area Estimation in Chrysanthemum. Agronomy 2021, 11, 795.

19. Taheri-Garavand, A.; Nejad, A.R.; Fanourakis, D.; Fatahi, S.; Majd, M.A. Employment of artificial neural networks for non-invasive estimation of leaf water status using color features: A case study in Spathiphyllum wallisii. Acta Physiol. Plant. 2021, 43, 78.

20. Dias, P.A.; Tabb, A.; Medeiros, H. Apple flower detection using deep convolutional networks. Comput. Ind. 2018, 99, 17–28.

21. Koirala, A.; Walsh, K.B.; Wang, Z.X.; McCarthy, C. Deep learning for real-time fruit detection and orchard fruit load estimation: Benchmarking of ‘MangoYOLO’. Precis. Agric. 2019, 20, 1–29.

22. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016.

23. Zheng, Y.Y.; Kong, J.L.; Jin, X.B.; Wang, X.Y.; Su, T.L.; Zuo, M. CropDeep: The Crop Vision Dataset for Deep-Learning-Based Classification and Detection in Precision Agriculture. Sensors 2019, 19, 1058.

24. Bargoti, S.; Underwood, J. Deep fruit detection in orchards. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 3626–3633.

25. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. arXiv 2015, arXiv:cs.CV/1506.01497.

26. Sa, I.; Ge, Z.; Dayoub, F.; Upcroft, B.; Perez, T.; McCool, C. DeepFruits: A Fruit Detection System Using Deep Neural Networks. Sensors 2016, 16, 1222.

27. Kirk, R.; Cielniak, G.; Mangan, M. L\*a\*b\*Fruits: A Rapid and Robust Outdoor Fruit Detection System Combining Bio-Inspired Features with One-Stage Deep Learning Networks. Sensors 2020, 20, 275.

28. Liu, S.; Li, X.; Wu, H.; Xin, B.; Tang, J.; Petrie, P.R.; Whitty, M. A robust automated flower estimation system for grape vines. Biosyst. Eng. 2018, 172, 110–123.

29. Diago, M.P.; Sanz-Garcia, A.; Millan, B.; Blasco, J.; Tardaguila, J. Assessment of flower number per inflorescence in grapevine by image analysis under field conditions. J. Sci. Food Agric. 2014, 94, 1981–1987.

30. Palacios, F.; Bueno, G.; Salido, J.; Diago, M.P.; Hernández, I.; Tardaguila, J. Automated grapevine flower detection and quantification method based on computer vision and deep learning from on-the-go imaging using a mobile sensing platform under field conditions. Comput. Electron. Agric. 2020, 178, 105796.

31. Pérez-Zavala, R.; Torres-Torriti, M.; Cheein, F.A.; Troni, G. A pattern recognition strategy for visual grape bunch detection in vineyards. Comput. Electron. Agric. 2018, 151, 136–149.

32. Reis, M.; Morais, R.; Peres, E.; Pereira, C.; Contente, O.; Soares, S.; Valente, A.; Baptista, J.; Ferreira, P.; Bulas Cruz, J. Automatic detection of bunches of grapes in natural environment from color images. J. Appl. Log. 2012, 10, 285–290.

33. Liu, S.; Whitty, M. Automatic grape bunch detection in vineyards with an SVM classifier. J. Appl. Log. 2015, 13, 643–653.

34. Cecotti, H.; Rivera, A.; Farhadloo, M.; Pedroza, M.A. Grape detection with convolutional neural networks. Expert Syst. Appl. 2020, 159, 113588.

35. Santos, T.T.; de Souza, L.L.; dos Santos, A.A.; Avila, S. Grape detection, segmentation, and tracking using deep neural networks and three-dimensional association. Comput. Electron. Agric. 2020, 170, 105247.

36. Xiong, J.; Liu, Z.; Lin, R.; Bu, R.; He, Z.; Yang, Z.; Liang, C. Green Grape Detection and Picking-Point Calculation in a Night-Time Natural Environment Using a Charge-Coupled Device (CCD) Vision Sensor with Artificial Illumination. Sensors 2018, 18, 969.

37. Kangune, K.; Kulkarni, V.; Kosamkar, P. Grapes Ripeness Estimation using Convolutional Neural network and Support Vector Machine. In Proceedings of the 2019 Global Conference for Advancement in Technology (GCAT), Bangalore, India, 18–20 October 2019; pp. 1–5.

38. Aquino, A.; Millan, B.; Gutiérrez, S.; Tardáguila, J. Grapevine flower estimation by applying artificial vision techniques on images with uncontrolled scene and multi-model analysis. Comput. Electron. Agric. 2015, 119, 92–104.

39. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

40. Everingham, M.; Van Gool, L.; Williams, C.K.; Winn, J.; Zisserman, A. The PASCAL Visual Object Classes (VOC) Challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

41. Computer Vision Annotation Tool (CVAT). Available online: https://github.com/openvinotoolkit/cvat (accessed on 6 August 2021).

42. Tzutalin. LabelImg. Git Code. 2015. Available online: https://github.com/tzutalin/labelImg (accessed on 6 August 2021).

43. Shorten, C.; Khoshgoftaar, T.M. A survey on Image Data Augmentation for Deep Learning. J. Big Data 2019, 6, 1–48.

44. Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

45. Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. arXiv 2015, arXiv:cs.CV/1512.00567.

46. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 21–37.

47. Lin, T.Y.; Maire, M.; Belongie, S.J.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Proceedings of the ECCV 2014, Zurich, Switzerland, 6–12 September 2014.

48. Abadi, M.; Barham, P.; Chen, J.; Chen, Z.; Davis, A.; Dean, J.; Devin, M.; Ghemawat, S.; Irving, G.; Isard, M.; et al. TensorFlow: A System for Large-Scale Machine Learning. In Proceedings of the 12th USENIX Symposium on Operating Systems Design and Implementation (OSDI 16), Savannah, GA, USA, 2–4 November 2016; pp. 265–283.

49. Tucker, G.; Wu, M.; Sun, M.; Panchapagesan, S.; Fu, G.; Vitaladevuni, S. Model Compression Applied to Small-Footprint Keyword Spotting. In Proceedings of the Interspeech 2016, San Francisco, CA, USA, 8–12 September 2016; pp. 1878–1882.

50. Neubeck, A.; Van Gool, L. Efficient non-maximum suppression. In Proceedings of the 18th International Conference on Pattern Recognition (ICPR’06), Hong Kong, China, 20–24 August 2006; Volume 3, pp. 850–855.

51. Wu, H.; Judd, P.; Zhang, X.; Isaev, M.; Micikevicius, P. Integer quantization for deep learning inference: Principles and empirical evaluation. arXiv 2020, arXiv:2004.09602.

52. Kaarmukilan, S.; Hazarika, A.; Poddar, S.; Rahaman, H. An Accelerated Prototype with Movidius Neural Compute Stick for Real-Time Object Detection. In Proceedings of the 2020 International Symposium on Devices, Circuits and Systems (ISDCS), Howrah, India, 4–6 March 2020; pp. 1–5.

53. Dinelli, G.; Meoni, G.; Rapuano, E.; Benelli, G.; Fanucci, L. An FPGA-Based Hardware Accelerator for CNNs Using On-Chip Memories Only: Design and Benchmarking with Intel Movidius Neural Compute Stick. Int. J. Reconfig. Comput. 2019, 2019, 1–13.

| Reference | Application | Performance |
|---|---|---|
| Liu et al. [28] (2018) | Automated grape flower counting to determine potential yields at early stages. | Accuracy of 84.3% for flower estimation. |
| Diago et al. [29] (2014) | Assessment of flower number per inflorescence in grapevine. | Precision exceeding 90.0%. |
| Palacios et al. [30] (2020) | Estimation of the number of flowers at bloom. | F1 score of 73.0% for individual flower detection. |
| Pérez-Zavala et al. [31] (2018) | Grape bunch detection for automating grapevine growth monitoring, spraying, leaf thinning, and harvesting tasks. | AP of 88.6% and Average Recall (AR) of 80.3%. |
| Reis et al. [32] (2012) | Grape bunch detection to support harvesting procedures. | 97.0% and 91.0% correct classifications for red and white grapes, respectively. |
| Liu and Whitty [33] (2015) | Precise yield estimation in vineyards by detecting bunches of red grapes in images. | Accuracy of 88.0% and recall of 91.6%. |
| Cecotti et al. [34] (2020) | Study of the best CNN architecture to detect grapes in images. | Accuracy of 99.0% for both red and white grapes. |
| Santos et al. [35] (2020) | Inference of the crop state for yield prediction, precision agriculture, and automated harvesting. | F1 score of 91.0% for grape instance segmentation. |
| Xiong et al. [36] (2018) | Development of a technology for night-time fruit picking using artificial illumination. | Accuracy of 91.7% for green grape detection. |
| Kangune et al. [37] (2019) | Grape ripeness estimation. | Classification accuracy of 79.5% between ripened and unripened grapes. |
| Aquino et al. [38] (2015) | Early yield prediction and flower estimation in vineyards. | Precision of 83.4% and recall of 85.0%. |

| Augmentation Operation | Description |
|---|---|
| Rotation | Rotates the image by +30 and −30 degrees. |
| Translation | Translates the image by −30% to +30% along the x- and y-axes. |
| Scale | Scales the image to 50% to 150% of its original size. |
| Flipping | Mirrors the image horizontally. |
| Multiply | Multiplies all pixels in an image by a random value sampled once per image, which can be used to make images lighter or darker. |

| Class | # of Objects | # of Objects in Augmented Images | # of Objects in Split Images |
|---|---|---|---|
| tiny_grape_bunch | 2497 | 13,393 | 25,349 |
| medium_grape_bunch | 4292 | 25,189 | 51,272 |

| Model | Confidence Threshold (%) | Class | Precision (%) | Recall (%) | F1 Score (%) | AP (%) | mAP (%) |
|---|---|---|---|---|---|---|---|
| SSD MobileNet-V1 | 30 | tiny-grape-bunch | 17.38 | 61.72 | 27.12 | 40.38 | 44.93 |
|  |  | medium-grape-bunch | 28.53 | 66.44 | 39.92 | 49.48 |  |
| SSD Inception-V2 | 30 | tiny-grape-bunch | 35.81 | 44.88 | 39.83 | 26.95 | 28.32 |
|  |  | medium-grape-bunch | 64.62 | 37.59 | 47.53 | 29.68 |  |
| SSD MobileNet-V1 | 50 | tiny-grape-bunch | 49.28 | 50.44 | 49.85 | 36.29 | 42.47 |
|  |  | medium-grape-bunch | 45.59 | 64.26 | 53.34 | 48.64 |  |
| SSD Inception-V2 | 50 | tiny-grape-bunch | 51.36 | 30.57 | 38.33 | 20.50 | 22.48 |
|  |  | medium-grape-bunch | 70.86 | 29.90 | 42.06 | 24.45 |  |
| SSD MobileNet-V1 | 70 | tiny-grape-bunch | 78.12 | 11.85 | 20.58 | 9.86 | 22.45 |
|  |  | medium-grape-bunch | 71.95 | 41.99 | 53.03 | 35.04 |  |
| SSD Inception-V2 | 70 | tiny-grape-bunch | 67.17 | 12.05 | 20.44 | 9.30 | 12.19 |
|  |  | medium-grape-bunch | 79.12 | 17.46 | 28.60 | 15.08 |  |
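
Because the F1 score and mAP are derived from the other columns, this table can be sanity-checked by recomputing them. The sketch below transcribes the values from the table, recomputes F1 from precision and recall and mAP as the mean of the two per-class APs, and reports the largest deviation from the published figures; the variable names are illustrative. Both deviations stay below 0.01 percentage points, which is consistent with precision and recall having been rounded to two decimals before publication.

```python
from typing import Dict, List, Tuple

# Per (model, confidence threshold %): (precision, recall, reported F1, AP) in %,
# first for tiny-grape-bunch, then for medium-grape-bunch, transcribed from the table.
ROWS: Dict[Tuple[str, int], List[Tuple[float, float, float, float]]] = {
    ("SSD MobileNet-V1", 30): [(17.38, 61.72, 27.12, 40.38), (28.53, 66.44, 39.92, 49.48)],
    ("SSD Inception-V2", 30): [(35.81, 44.88, 39.83, 26.95), (64.62, 37.59, 47.53, 29.68)],
    ("SSD MobileNet-V1", 50): [(49.28, 50.44, 49.85, 36.29), (45.59, 64.26, 53.34, 48.64)],
    ("SSD Inception-V2", 50): [(51.36, 30.57, 38.33, 20.50), (70.86, 29.90, 42.06, 24.45)],
    ("SSD MobileNet-V1", 70): [(78.12, 11.85, 20.58, 9.86), (71.95, 41.99, 53.03, 35.04)],
    ("SSD Inception-V2", 70): [(67.17, 12.05, 20.44, 9.30), (79.12, 17.46, 28.60, 15.08)],
}
REPORTED_MAP: Dict[Tuple[str, int], float] = {
    ("SSD MobileNet-V1", 30): 44.93, ("SSD Inception-V2", 30): 28.32,
    ("SSD MobileNet-V1", 50): 42.47, ("SSD Inception-V2", 50): 22.48,
    ("SSD MobileNet-V1", 70): 22.45, ("SSD Inception-V2", 70): 12.19,
}


def f1(p: float, r: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r)


# Largest absolute difference (percentage points) between recomputed and reported values.
f1_gap = max(abs(f1(p, r) - reported)
             for rows in ROWS.values() for p, r, reported, _ in rows)
map_gap = max(abs(sum(row[3] for row in rows) / len(rows) - REPORTED_MAP[key])
              for key, rows in ROWS.items())
print(f"largest F1 gap: {f1_gap:.3f} pp, largest mAP gap: {map_gap:.3f} pp")
```

The same check applies unchanged to the IoU-threshold table by swapping in its rows.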

| Model | IoU Threshold (%) | Class | Precision (%) | Recall (%) | F1 Score (%) | AP (%) | mAP (%) |
|---|---|---|---|---|---|---|---|
| SSD MobileNet-V1 | 20 | tiny-grape-bunch | 63.73 | 65.22 | 64.47 | 56.87 | 66.96 |
|  |  | medium-grape-bunch | 61.72 | 87.01 | 72.22 | 77.05 |  |
| SSD Inception-V2 | 20 | tiny-grape-bunch | 71.90 | 42.80 | 53.66 | 36.42 | 37.22 |
|  |  | medium-grape-bunch | 92.57 | 39.06 | 54.94 | 38.01 |  |
| SSD MobileNet-V1 | 40 | tiny-grape-bunch | 57.17 | 58.51 | 57.83 | 47.01 | 55.78 |
|  |  | medium-grape-bunch | 54.96 | 77.47 | 64.30 | 64.55 |  |
| SSD Inception-V2 | 40 | tiny-grape-bunch | 64.25 | 38.24 | 47.95 | 30.50 | 31.98 |
|  |  | medium-grape-bunch | 85.14 | 35.93 | 50.53 | 33.45 |  |
| SSD MobileNet-V1 | 60 | tiny-grape-bunch | 37.54 | 38.41 | 37.97 | 22.39 | 24.79 |
|  |  | medium-grape-bunch | 32.17 | 45.34 | 37.64 | 27.19 |  |
| SSD Inception-V2 | 60 | tiny-grape-bunch | 33.38 | 19.87 | 24.91 | 8.72 | 9.79 |
|  |  | medium-grape-bunch | 45.78 | 19.32 | 27.17 | 10.85 |  |

| Model | Inference Time per Tile (ms) | Inference Time per Image (ms) |
|---|---|---|
| SSD MobileNet-V1 | 6.29 | 93.12 |
| SSD Inception-V2 | 26.07 | 385.69 |
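
The average inference times convert directly into throughput. The short sketch below does this conversion, assuming images are processed one after another and that no overhead beyond the reported times applies; under that assumption, 93.12 ms per image corresponds to roughly 10.7 images per second for SSD MobileNet-V1 and 385.69 ms to roughly 2.6 images per second for SSD Inception-V2. The names are illustrative.

```python
from typing import Dict

# Average inference times in milliseconds, transcribed from the table.
TIMES_MS: Dict[str, Dict[str, float]] = {
    "SSD MobileNet-V1": {"tile": 6.29, "image": 93.12},
    "SSD Inception-V2": {"tile": 26.07, "image": 385.69},
}


def per_second(ms: float) -> float:
    """Convert a per-item latency in milliseconds into items processed per second."""
    return 1000.0 / ms


for model, t in TIMES_MS.items():
    print(f"{model}: {per_second(t['tile']):.1f} tiles/s, "
          f"{per_second(t['image']):.1f} images/s")
```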

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Aguiar, A.S.; Magalhães, S.A.; dos Santos, F.N.; Castro, L.; Pinho, T.; Valente, J.; Martins, R.; Boaventura-Cunha, J. Grape Bunch Detection at Different Growth Stages Using Deep Learning Quantized Models. Agronomy 2021, 11, 1890. https://doi.org/10.3390/agronomy11091890
