Cut-Edge Detection Method for Rice Harvesting Based on Machine Vision

Abstract

1. Introduction

- the irregular cut-edge.

- large variability within relatively small areas caused by rice texture.

- dynamic changes in image brightness and color temperature.

- blurred images and weakened texture features caused by harvester vibration.

- interference in the image.

2. Materials and Methods

2.1. Image Collection

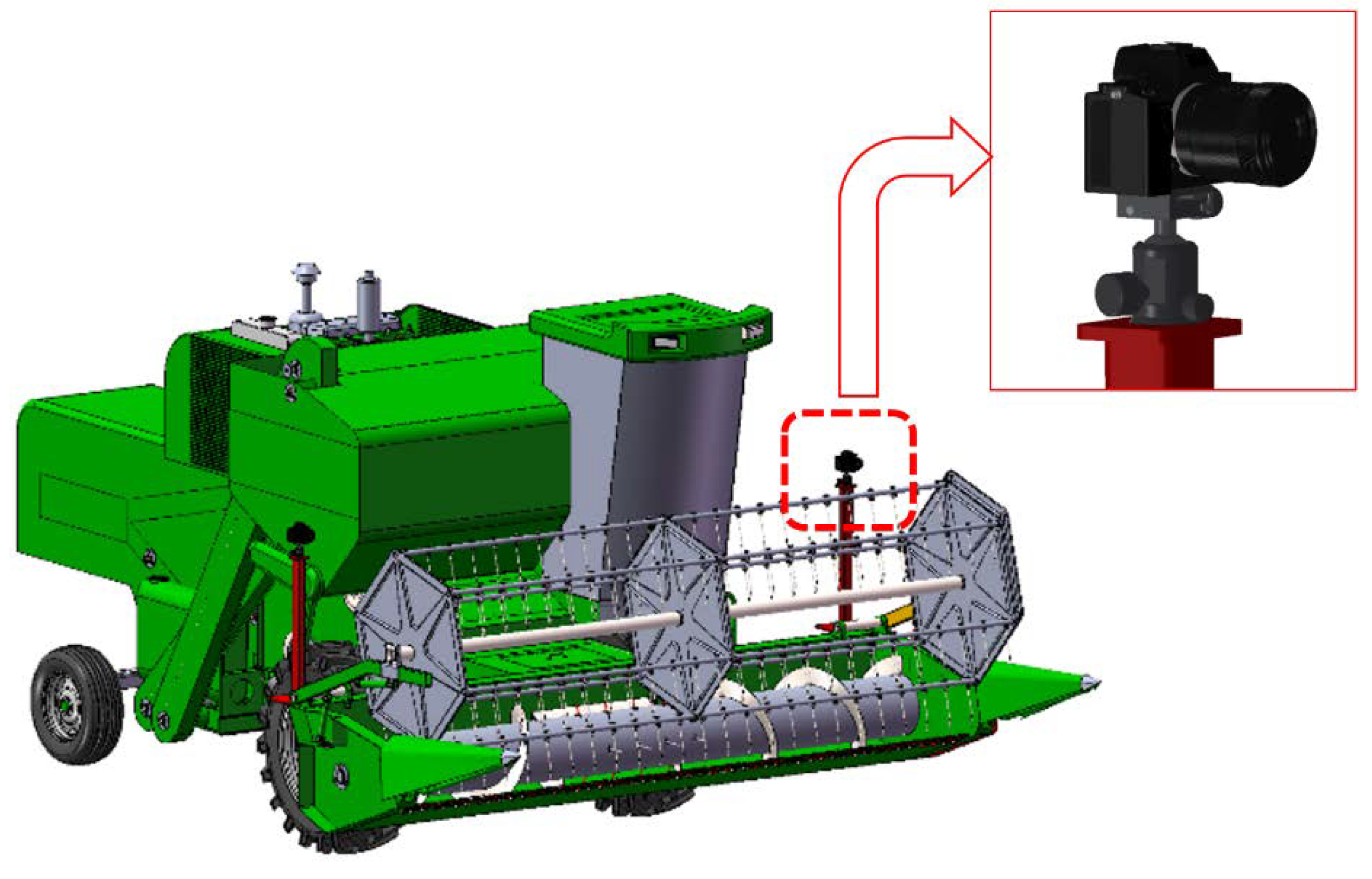

2.1.1. Image Collection System

2.1.2. Prior Conditions for the Picture

- The picture contained one or more cut-edges, but only one cut-edge appeared at the bottom of the picture.

- The target cut-edge started at the bottom of the picture and extended into the distance without turning back.

- The target cut-edge was a single-valued function of the row coordinate; that is, each row of the picture crossed it at no more than one column.

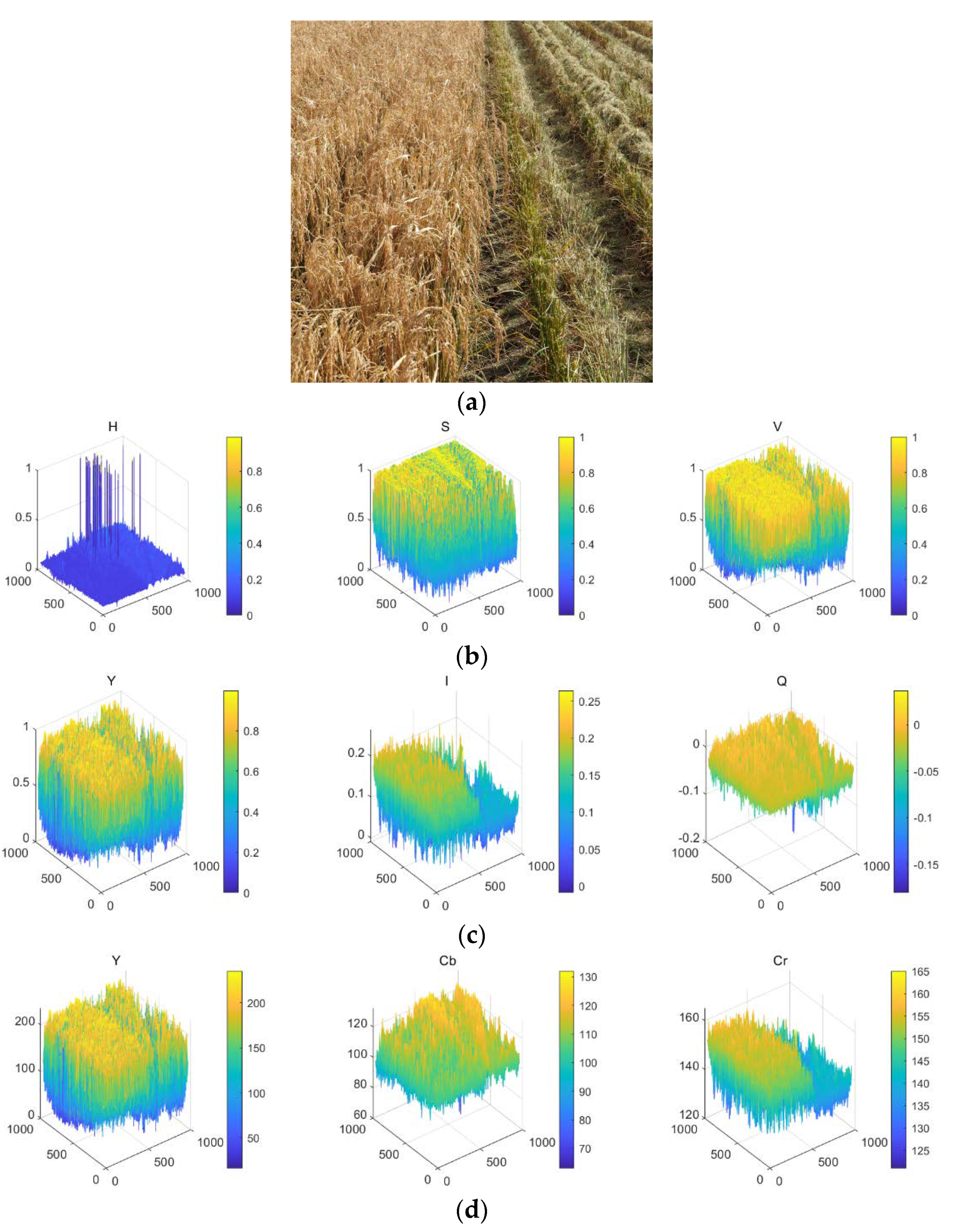

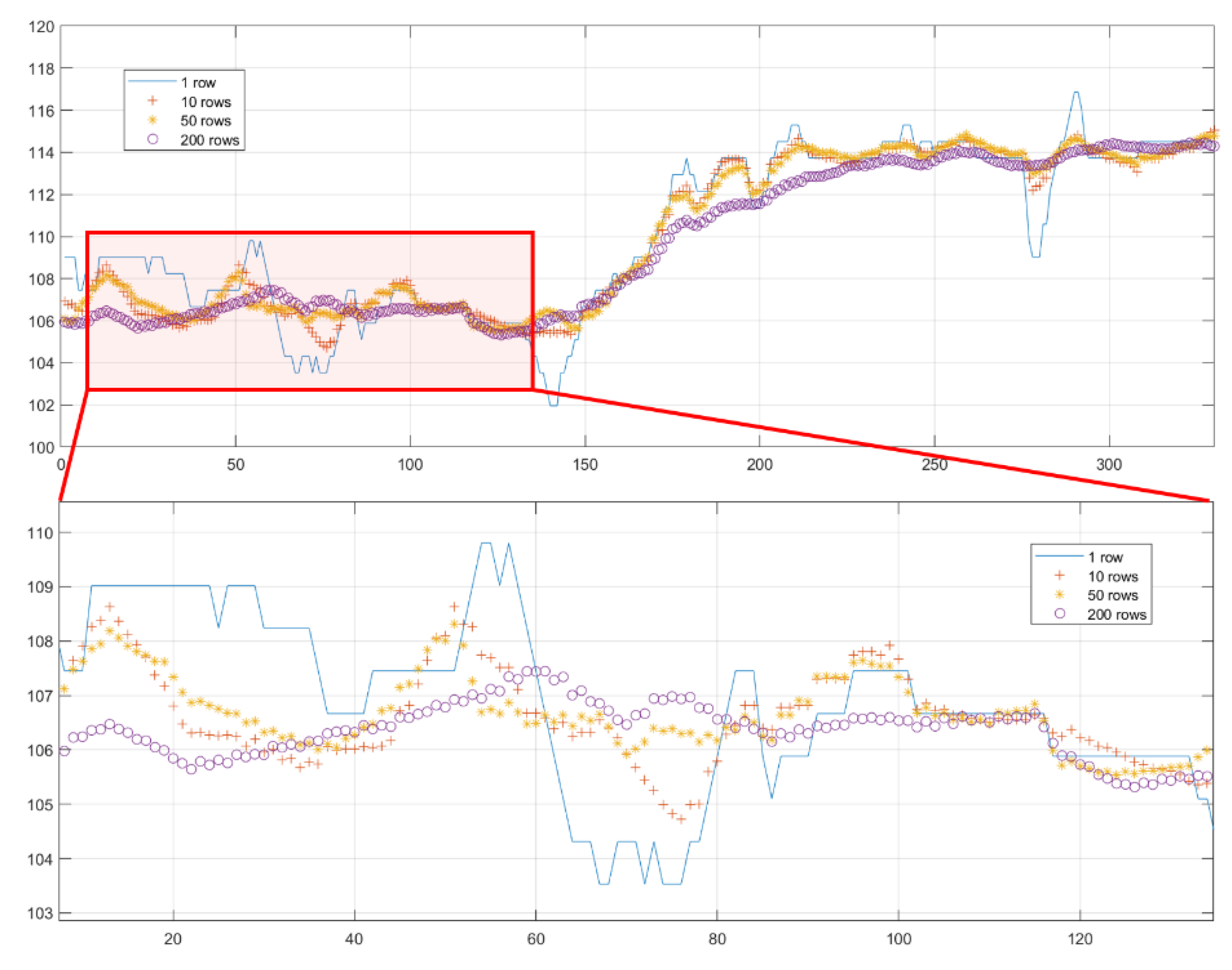

2.2. Grayscale Feature Factor Selection

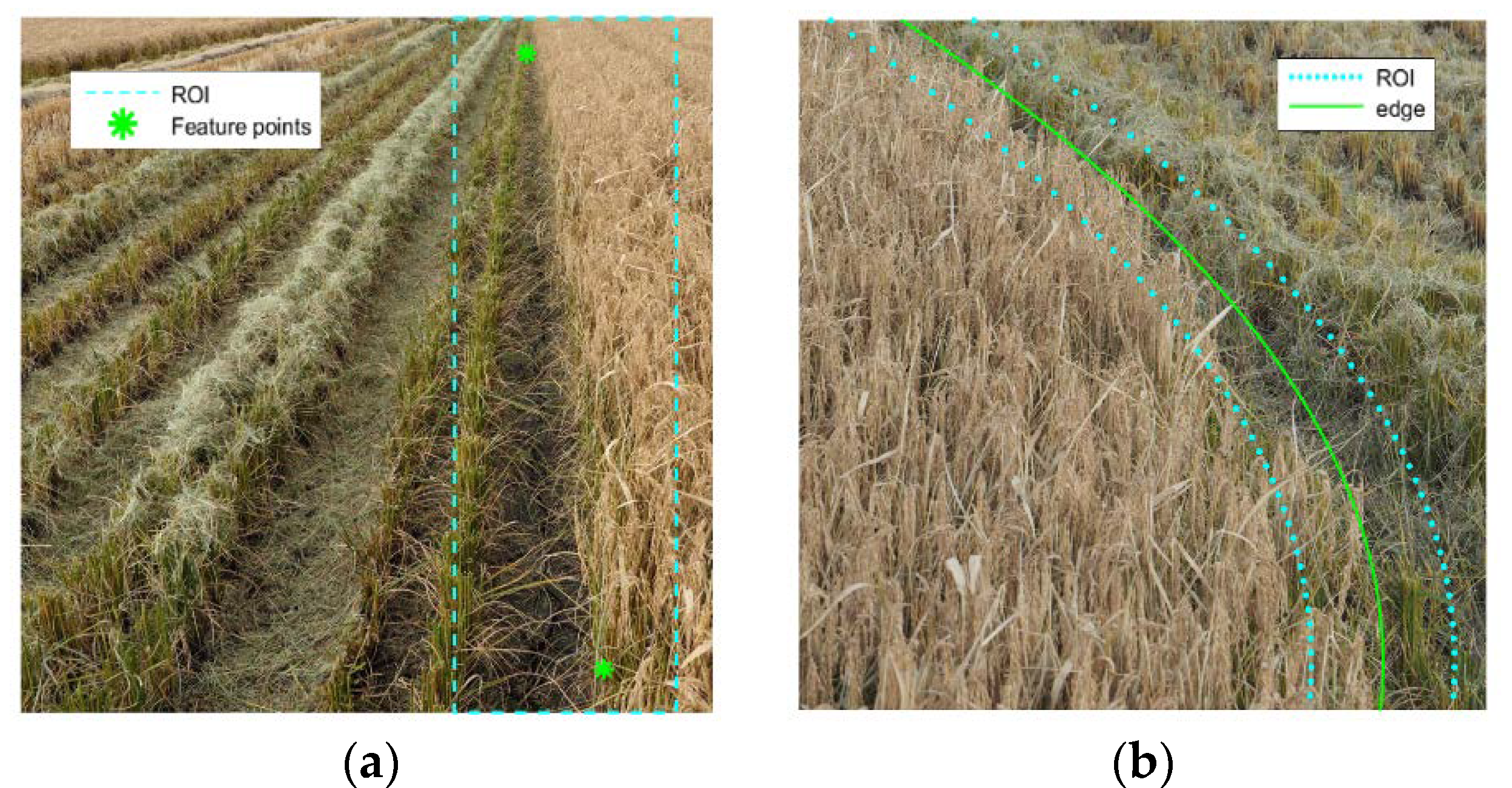
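The grayscale feature factor table lists, for each candidate channel (HSV-H, HSV-S, NTSC-I, NTSC-Q, YCbCr-Cb, YCbCr-Cr), a variation coefficient for the cut area, a variation coefficient for the uncut area, and a ratio of means. As a minimal sketch, assuming the variation coefficient is the usual (population) standard deviation divided by the mean, and assuming the ratio of means is the smaller area mean divided by the larger one, these statistics could be computed from two sample patches of one channel as follows. The function name `feature_factor_stats` and the sample patches are hypothetical.

```python
import numpy as np


def feature_factor_stats(cut, uncut) -> tuple[float, float, float]:
    """Return (CV of cut area, CV of uncut area, ratio of means) for one channel.

    Assumptions: CV = population std / mean; ratio of means = smaller mean / larger mean.
    """
    cut = np.asarray(cut, dtype=np.float64)
    uncut = np.asarray(uncut, dtype=np.float64)
    m_cut, m_uncut = cut.mean(), uncut.mean()
    # Relative spread inside each area: lower means a more homogeneous area.
    cv_cut = cut.std() / m_cut
    cv_uncut = uncut.std() / m_uncut
    # Relative difference between the two areas: lower means better separated.
    ratio = min(m_cut, m_uncut) / max(m_cut, m_uncut)
    return float(cv_cut), float(cv_uncut), float(ratio)


# Hypothetical single-channel samples from a cut patch and an uncut patch.
cut_patch = np.array([[100, 110], [90, 100]])
uncut_patch = np.array([[200, 200], [200, 200]])
print(feature_factor_stats(cut_patch, uncut_patch))
```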

2.3. Region of Interest (ROI) Extraction

- There was only one cut-edge starting from the bottom and extending to the top.

- Only cut and uncut areas were present.

- The region containing the target cut-edge was as small as possible.

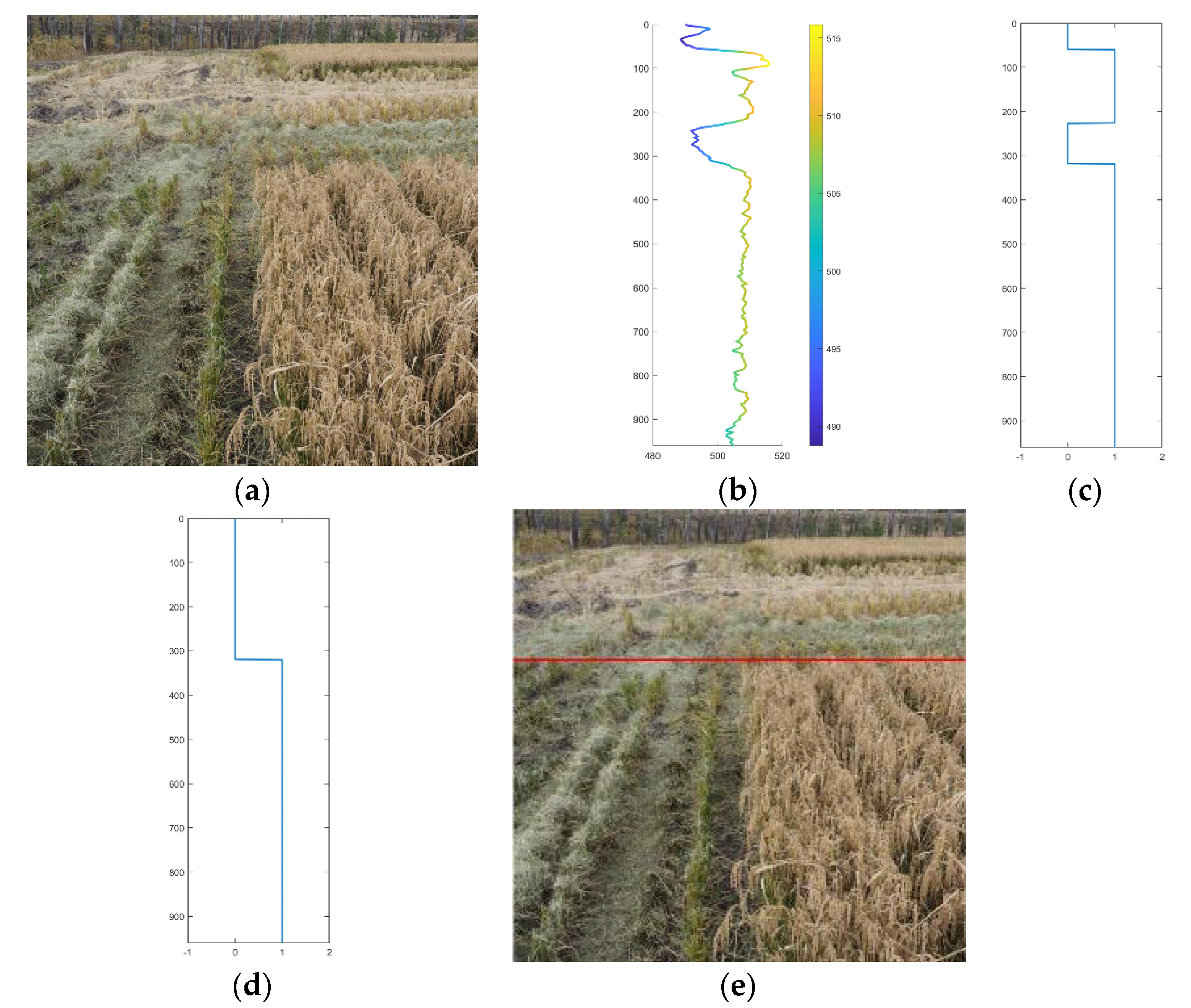

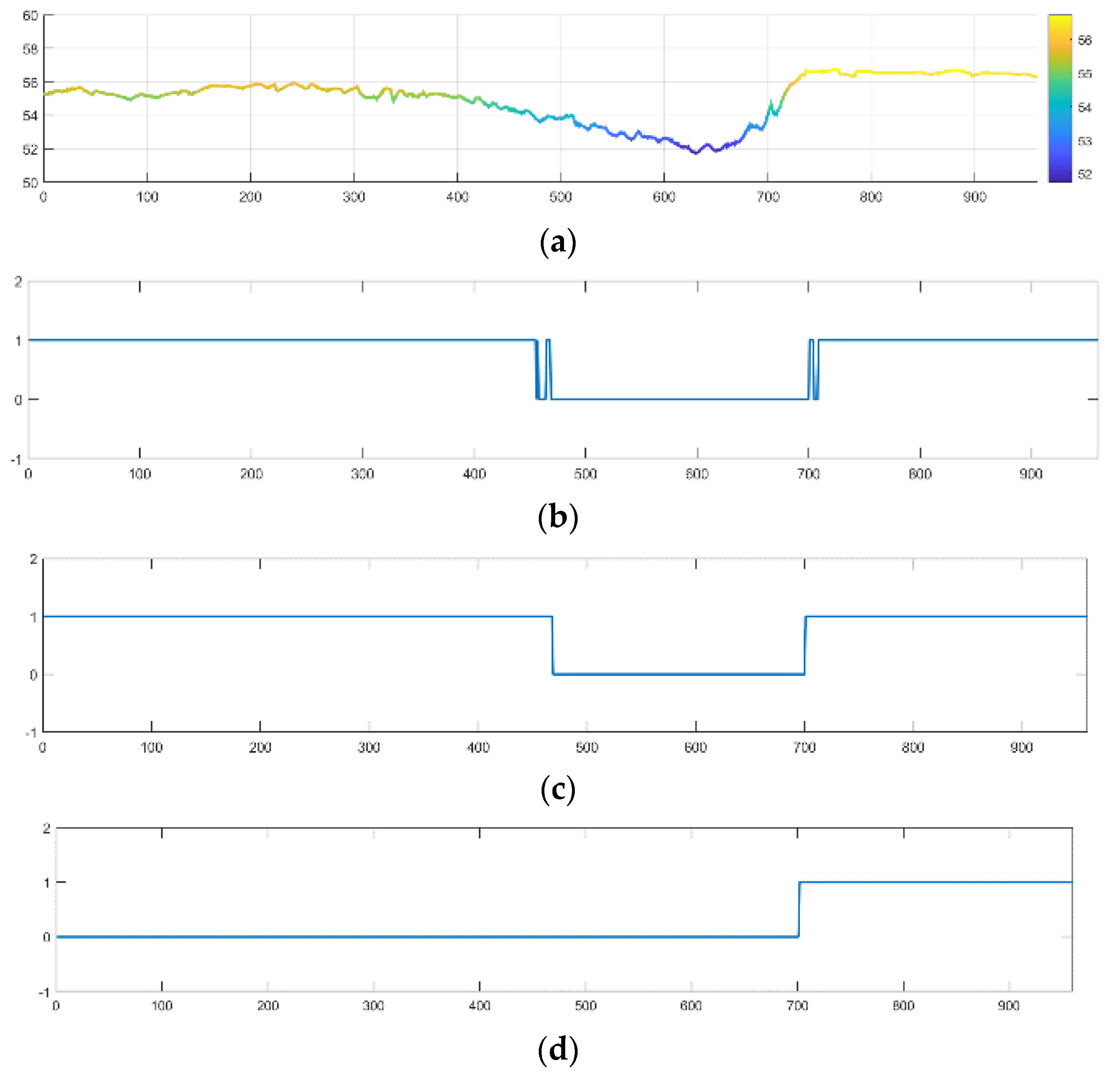

2.3.1. End-of-Row Detection

2.3.2. Target Crop Row Selection

2.3.3. ROI Extraction

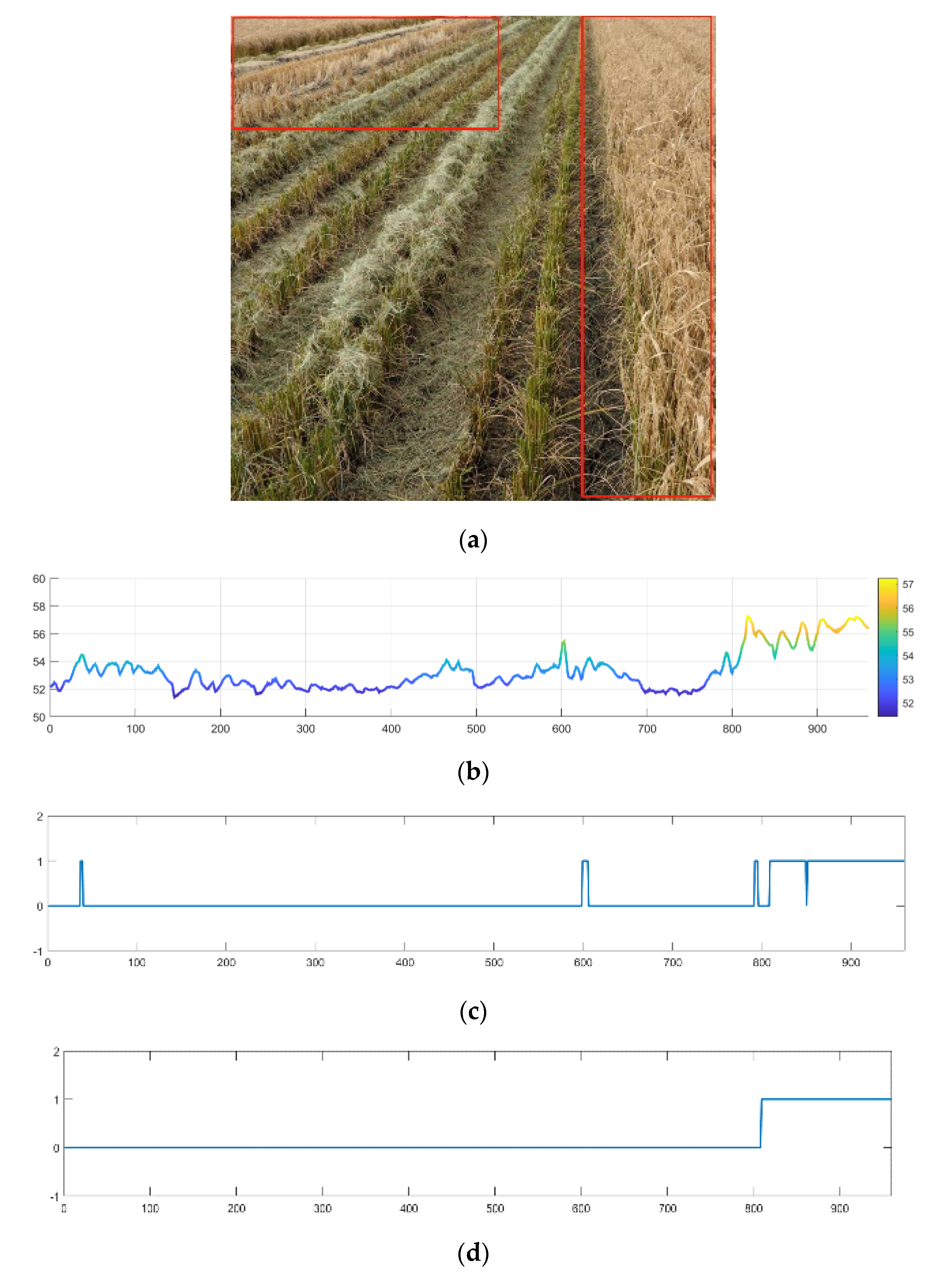

2.4. Dividing Point Extraction

2.4.1. The Vertical Projection
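In general, a vertical projection collapses an image region into a one-dimensional profile by summing or averaging the gray values in each column, so that a boundary running from the bottom of the region toward the top shows up as a step in the profile. A minimal NumPy sketch, assuming a single-channel strip of rows taken from the ROI and using the column mean (a column sum would behave the same up to a constant factor), is shown below; `vertical_projection` and the sample strip are hypothetical, not the authors' code.

```python
import numpy as np


def vertical_projection(strip) -> np.ndarray:
    """Collapse a 2-D grayscale strip (rows x columns) to one mean value per column."""
    strip = np.asarray(strip, dtype=np.float64)
    if strip.ndim != 2:
        raise ValueError("expected a 2-D grayscale array")
    # Averaging down each column (axis 0) keeps the horizontal position,
    # which is what locates a roughly vertical boundary between two areas.
    return strip.mean(axis=0)


# Hypothetical 3x6 strip whose left half is darker than its right half.
strip = np.array([[40, 42, 41, 120, 118, 121],
                  [38, 44, 43, 122, 120, 119],
                  [42, 40, 42, 118, 122, 120]])
profile = vertical_projection(strip)
print(profile)
```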

2.4.2. Dividing Points Extraction
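When a vertical projection profile shows two roughly constant levels, one for each area, a dividing point can be estimated as the column split that best explains the profile. The sketch below uses a least-squares two-segment split, chosen here for illustration and not necessarily the criterion used in this work; it returns the index of the first column of the right-hand segment. `dividing_point` is a hypothetical name.

```python
import numpy as np


def dividing_point(profile) -> int:
    """Return k such that profile[:k] and profile[k:] are best fit by two constant levels.

    Assumption (illustrative): the dividing point minimizes the total sum of squared
    deviations of each side from its own mean.
    """
    p = np.asarray(profile, dtype=np.float64)
    if p.ndim != 1 or p.size < 2:
        raise ValueError("expected a 1-D profile with at least two columns")
    best_k, best_cost = 1, np.inf
    for k in range(1, p.size):
        left, right = p[:k], p[k:]
        # Within-segment squared error; small when each side is nearly constant.
        cost = ((left - left.mean()) ** 2).sum() + ((right - right.mean()) ** 2).sum()
        if cost < best_cost:
            best_k, best_cost = k, cost
    return best_k


# Hypothetical profile: darker columns 0-2, brighter columns 3-5.
print(dividing_point([40, 42, 42, 120, 120, 120]))  # 3
```

If the ROI is processed as a stack of horizontal strips (an assumption here), repeating this for each strip yields one candidate (row, column) edge point per strip.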

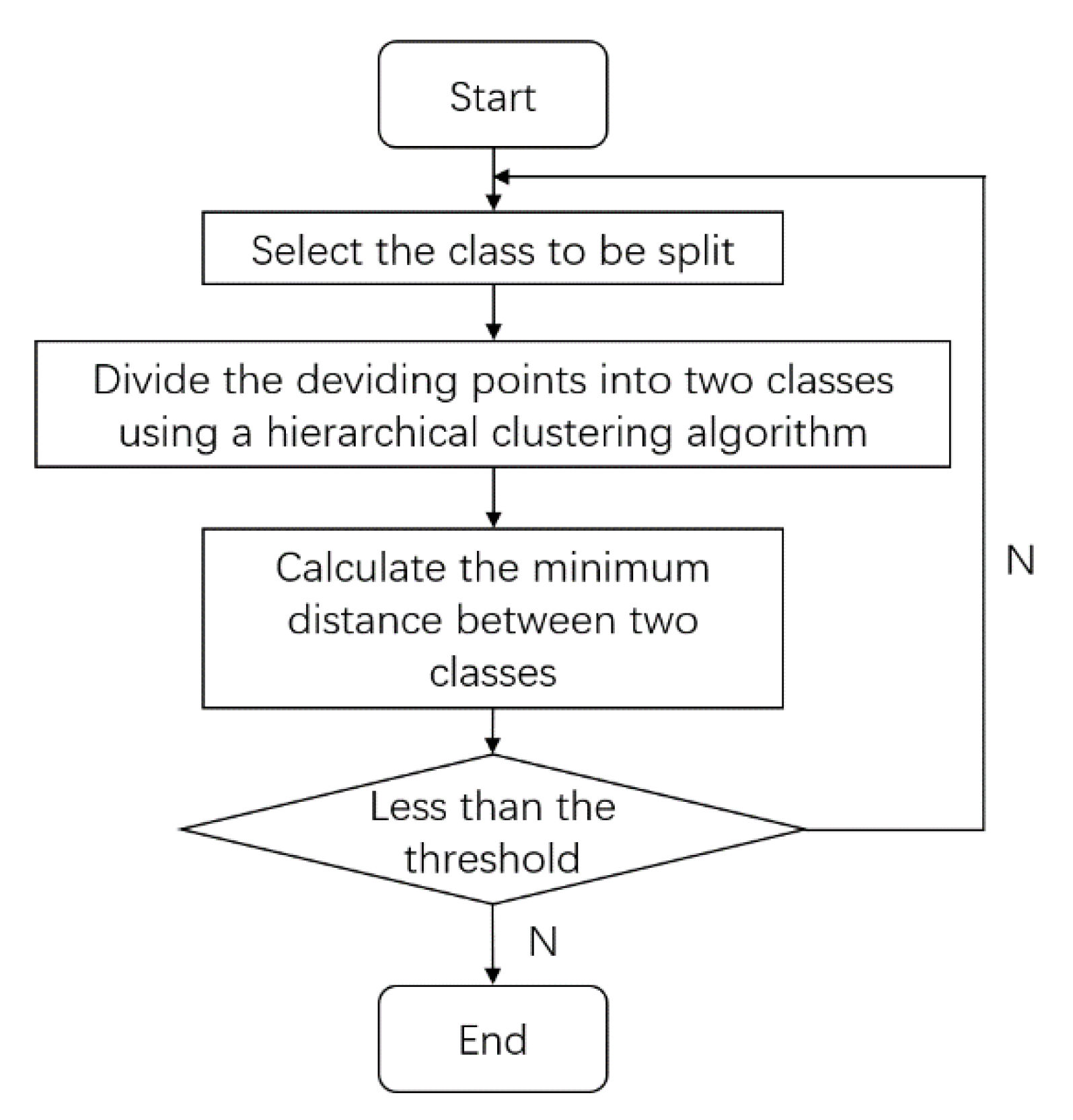

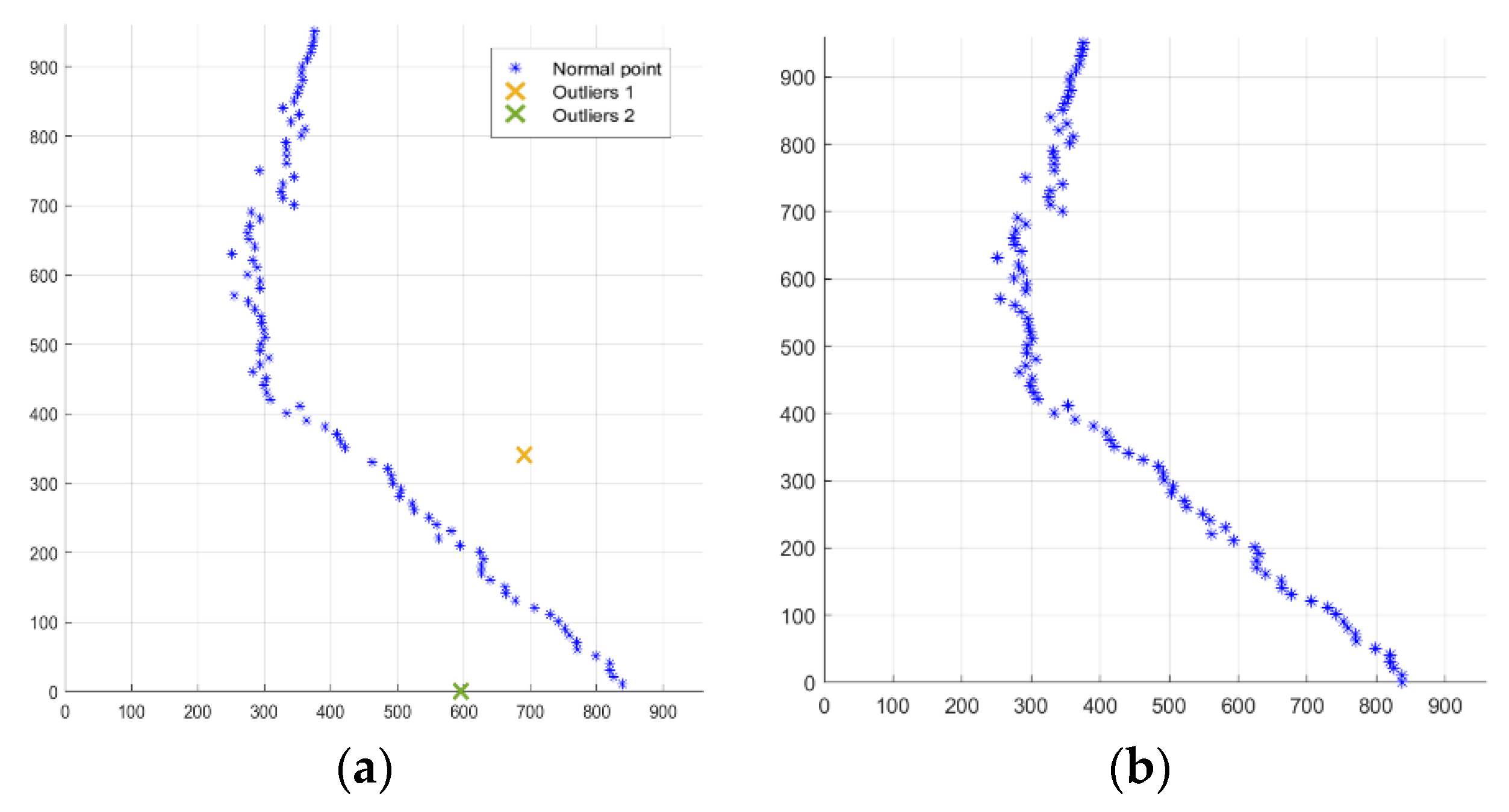

2.5. Outlier Handling
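A minimal sketch of one common way to screen dividing points, assumed here for illustration rather than taken from this work, compares each point's column with a rolling median of its neighbors along the edge and flags large deviations. The function `flag_outliers` and its `window` and `max_dev` (pixels) parameters are hypothetical.

```python
import numpy as np


def flag_outliers(cols, window: int = 5, max_dev: float = 10.0) -> np.ndarray:
    """Flag dividing points whose column deviates from a rolling median of its neighbors.

    `cols` holds one dividing-point column per strip, ordered by row.
    `window` (odd) and `max_dev` (pixels) are hypothetical tuning parameters.
    """
    cols = np.asarray(cols, dtype=np.float64)
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    # Repeat the end values so every point has a full window of neighbors.
    padded = np.pad(cols, half, mode="edge")
    baseline = np.array([np.median(padded[i:i + window]) for i in range(cols.size)])
    return np.abs(cols - baseline) > max_dev


cols = [10, 13, 14, 17, 60, 21, 22, 25]
mask = flag_outliers(cols)
print(mask.nonzero()[0])  # [4]
```

A median is used as the baseline because a single stray point barely moves it, whereas it would drag a mean toward itself.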

2.6. Edge Fitting
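The fit-method table reports linear polynomial fits with R² > 0.95 in 82 cases, quadratic polynomial fits with R² > 0.95 in 15 cases, and quadratic polynomial fits with 0.75 < R² < 0.95 in 3 cases. Reading that as "try a straight line first, and fall back to a quadratic when the line explains too little variance" (an interpretation, not a confirmed description of the procedure), the edge can be fitted as column = f(row), which the single-valued prior condition permits. `fit_cut_edge`, `r_squared`, and the `threshold` default are hypothetical.

```python
import numpy as np


def r_squared(y: np.ndarray, y_hat: np.ndarray) -> float:
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    ss_res = float(((y - y_hat) ** 2).sum())
    ss_tot = float(((y - y.mean()) ** 2).sum())
    # Constant columns (a perfectly vertical edge) are explained exactly by a line.
    return 1.0 - ss_res / ss_tot if ss_tot > 0 else 1.0


def fit_cut_edge(rows, cols, threshold: float = 0.95):
    """Fit col = f(row); keep a line if its R^2 exceeds `threshold`, else use a quadratic.

    Returns (coefficients with highest degree first, degree, R^2).
    """
    rows = np.asarray(rows, dtype=np.float64)
    cols = np.asarray(cols, dtype=np.float64)
    line = np.polyfit(rows, cols, 1)
    r2_line = r_squared(cols, np.polyval(line, rows))
    if r2_line > threshold:
        return line, 1, r2_line
    quad = np.polyfit(rows, cols, 2)
    return quad, 2, r_squared(cols, np.polyval(quad, rows))


rows = np.arange(0, 100, 10)
coeffs, degree, r2 = fit_cut_edge(rows, 0.5 * rows + 120)
print(degree)  # 1
```

Fitting column as a function of row, rather than the reverse, avoids trouble with near-vertical edges, where row as a function of column would have an almost infinite slope.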

3. Results

3.1. Grayscale Feature Factor Comparison

3.2. ROI Extraction

3.3. Dividing Points Extraction

3.4. Outlier Detection

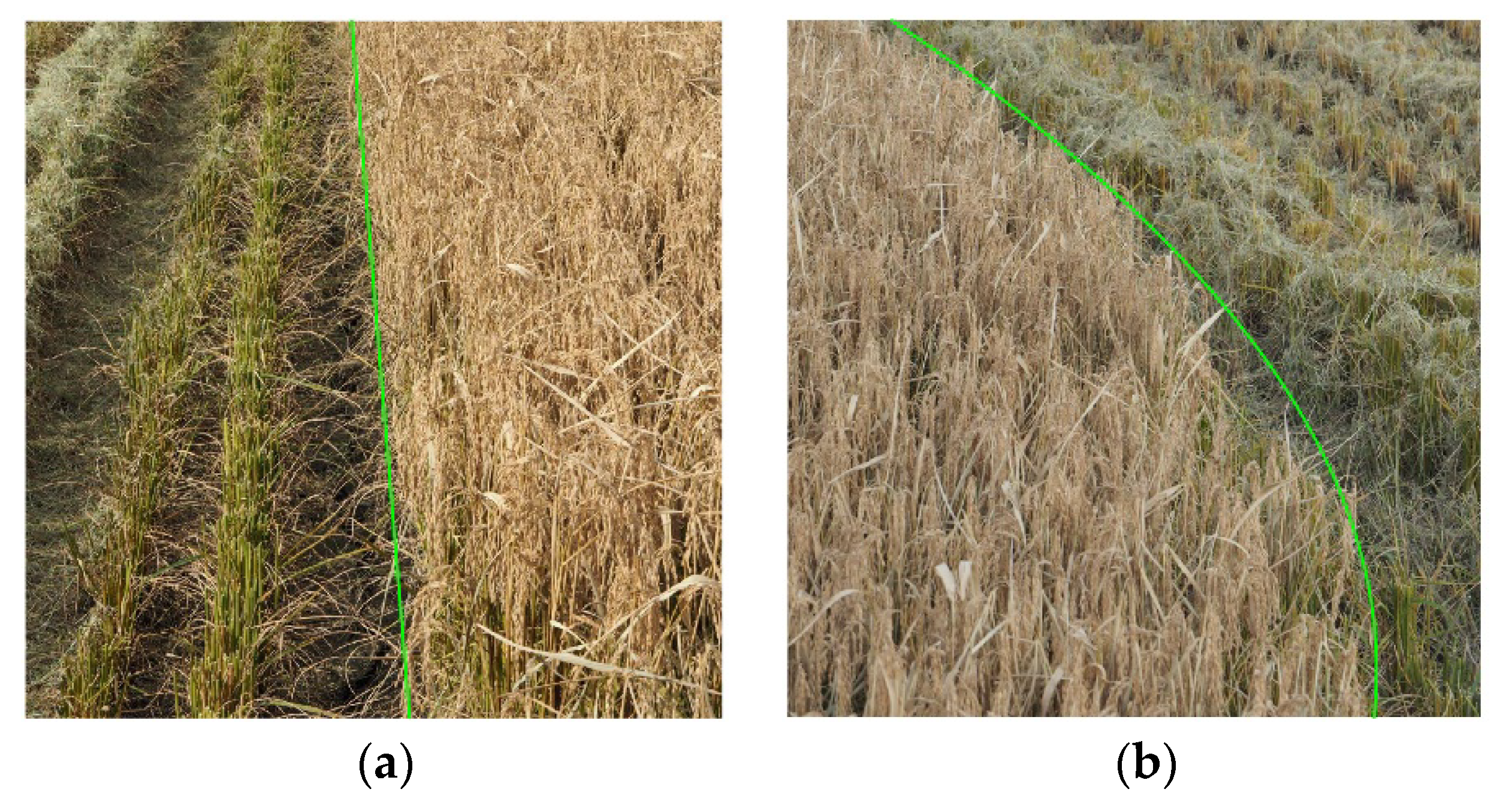

3.5. Cut-Edge Fitting

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Dong, Z.Z.; Duan, J.; Wang, M.L.; Zhao, J.B.; Wang, H. On Agricultural Machinery Operation System of Beidou Navigation System. In Proceedings of the 2018 IEEE 3rd Advanced Information Technology, Electronic and Automation Control Conference, Chongqing, China, 12–14 October 2018; pp. 1748–1751.

2. Bechar, A.; Vigneault, C. Agricultural robots for field operations: Concepts and components. Biosyst. Eng. 2016, 149, 94–111.

3. Chen, J.; Yang, G.J.; Xu, K.; Cai, Y.Y. On Research on Combine Harvester Positioning algorithm and Aided-navigation System. In Proceedings of the International Conference on Advances in Mechanical Engineering and Industrial Informatics, Zhengzhou, China, 11–12 April 2015; pp. 848–853.

4. Long, N.B.; Wang, K.W.; Cheng, R.Q.; Yang, K.L.; Bai, J. Fusion of Millimeter wave Radar and RGB-Depth sensors for assisted navigation of the visually impaired. In Proceedings of the Conference on Millimeter Wave and Terahertz Sensors and Technology XI, Berlin, Germany, 10–11 September 2018.

5. Yayan, U.; Yucel, H.; Yazici, A. A Low Cost Ultrasonic Based Positioning System for the Indoor Navigation of Mobile Robots. J. Intell. Robot. Syst. 2015, 78, 541–552.

6. Zampella, F.; Bahillo, A.; Prieto, J.; Jimenez, A.R.; Seco, F. Pedestrian navigation fusing inertial and RSS/TOF measurements with adaptive movement/measurement models: Experimental evaluation and theoretical limits. Sens. Actuators A Phys. 2013, 203, 249–260.

7. Ball, D.; Upcroft, B.; Wyeth, G.; Corke, P.; English, A.; Ross, P.; Patten, T.; Fitch, R.; Sukkarieh, S.; Bate, A. Vision-based Obstacle Detection and Navigation for an Agricultural Robot. J. Field Robot. 2016, 33, 1107–1130.

8. Hacioglu, A.; Unal, M.F.; Altan, O.; Yorukoglu, M.; Yildiz, M.S. Contribution of GNSS in Precision Agriculture. In Proceedings of the 8th International Conference On Recent Advances in Space Technologies, Istanbul, Turkey, 19–22 June 2017; pp. 513–516.

9. Malavazi, F.B.P.; Guyonneau, R.; Fasquel, J.B.; Lagrange, S.; Mercier, F. LiDAR-only based navigation algorithm for an autonomous agricultural robot. Comput. Electron. Agric. 2018, 154, 71–79.

10. Ollis, M.; Stentz, A. First Results in Vision-Based Crop Line Tracking. In Proceedings of the IEEE International Conference on Robotics and Automation, Minneapolis, MN, USA, 22–28 April 1996; pp. 951–956.

11. Ollis, M.; Stentz, A. Vision-Based Perception for an Automated Harvester. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Grenoble, France, 8–13 September 1997; pp. 1838–1844.

12. Debain, C.; Chateau, T.; Berducat, M.; Martinet, P. A Guidance-Assistance System for Agricultural Vehicles. Comput. Electron. Agric. 2000, 25, 29–51.

13. Benson, E.R.; Reid, J.F.; Zhang, Q. Machine Vision-based Guidance System for Agricultural Grain Harvesters using Cut-edge Detection. Biosyst. Eng. 2003, 86, 389–398.

14. Cornell University Library, eCommons. Available online: https://ecommons.cornell.edu/handle/1813/10608 (accessed on 11 July 2007).

15. Zhang, L.; Wang, S.M.; Chen, B.Q.; Zhang, H.X. Crop-edge Detection Based on Machine Vision. N. Z. J. Agric. Res. 2007, 50, 1367–1374.

16. Iida, M.; Ikemura, Y.; Suguri, M.; Masuda, R. Cut-edge and Stubble Detection for Auto-Steering System of Combine Harvester using Machine Vision. IFAC Proc. Vol. 2010, 43, 145–150.

17. Ding, Y.C.; Chen, D.; Wang, S.M. The Mature Wheat Cut and Uncut Edge Detection Method Based on Wavelet Image Rotation and Projection. Afr. J. Agric. Res. 2011, 6, 2609–2616.

18. Zhang, T.; Xia, J.F.; Wu, G.; Zhai, J.B. Automatic Navigation Path Detection Method for Tillage Machines Working on High Crop Stubble Fields Based on Machine Vision. Int. J. Agric. Biol. Eng. 2014, 7, 29–37.

19. Cho, W.; Iida, M.; Suguri, M.; Masuda, R.; Kurita, H. Using Multiple Sensors to Detect Uncut Crop Edges for Autonomous Guidance Systems of Head-Feeding Combine Harvesters. Eng. Agric. Environ. Food 2014, 7, 115–121.

20. Cornell University Library. Available online: https://arxiv.org/abs/1501.02376 (accessed on 10 January 2015).

21. Kneip, J.; Fleischmann, P.; Berns, K. Crop Edge Detection Based on Stereo Vision. Intell. Auton. Syst. 2018, 123, 639–651.

22. Kise, M.; Zhang, Q.; Rovira Más, F. A Stereovision-based Crop Row Detection Method for Tractor-automated Guidance. Biosyst. Eng. 2005, 90, 357–367.

23. Kebapci, H.; Yanikoglu, B.; Unal, G. Plant Image Retrieval Using Color, Shape and Texture Features. Comput. J. 2011, 54, 1475–1490.

24. Garcia-Santillan, I.; Guerrero, J.M.; Montalvo, M.; Pajares, G. Curved and Straight Crop Row Detection by Accumulation of Green Pixels from Images in Maize Fields. Precis. Agric. 2018, 19, 18–41.

25. Thanki, R.M.; Kothari, A.M. Digital Image Processing Using SCILAB; Springer: Berlin, Germany, 2018; pp. 131–142.

26. Shiva, S.; Dzulkifli, M.; Tanzila, S.; Amjad, R. Recognition of Partially Occluded Objects Based on the Three Different Color Spaces (RGB, YCbCr, HSV). 3D Res. 2015, 6, 22.

| Method | Crop | Author [Ref.], Year |
|---|---|---|
| Color segmentation | Alfalfa hay | M. Ollis [10,11], 1996 |
| Texture segmentation | Grass | C. Debain [12], 2000 |
| Grayscale segmentation | Corn | E.R. Benson [13], 2003 |
| Stereo vision detection | Corn | F. Rovira-Más [14], 2007 |
| Luminance segmentation | Wheat, corn | L. Zhang [15], 2007 |
| Grayscale segmentation | Rice | M. Iida [16], 2010 |
| Wavelet transformation | Wheat | Y. Ding [17], 2011 |
| Color segmentation | Wheat, rice, rapeseed | T. Zhang [18], 2014 |
| Color segmentation | Rice | W. Cho [19], 2014 |
| Color segmentation | Wheat | M.Z. Ahmad [20], 2015 |
| Point cloud segmentation | Wheat, rapeseed | J. Kneip [21], 2020 |

| Grayscale Feature Factor | Variation Coefficient of Cut Area | Variation Coefficient of Uncut Area | Ratio of Means |
|---|---|---|---|
| HSV-H | 0.2263 | 0.0974 | 0.8441 |
| HSV-S | 0.3778 | 0.3011 | 0.9291 |
| NTSC-I | 0.0463 | 0.0358 | 0.924 |
| NTSC-Q | 0.0391 | 0.0172 | 0.9844 |
| YCbCr-Cb | 0.0599 | 0.0346 | 0.9443 |
| YCbCr-Cr | 0.0235 | 0.0189 | 0.9169 |

| Index | Error in Pixels | Error in Centimeters | Standard Deviation |
|---|---|---|---|
| Value | 4.72 | 2.84 | 18.49 |

| Fit Method | Linear Polynomial, R² > 0.95 | Quadratic Polynomial, R² > 0.95 | Quadratic Polynomial, 0.75 < R² < 0.95 |
|---|---|---|---|
| Count | 82 | 15 | 3 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zhang, Z.; Cao, R.; Peng, C.; Liu, R.; Sun, Y.; Zhang, M.; Li, H. Cut-Edge Detection Method for Rice Harvesting Based on Machine Vision. Agronomy 2020, 10, 590. https://doi.org/10.3390/agronomy10040590
