NewsSumm: The World’s Largest Human-Annotated Multi-Document News Summarization Dataset for Indian English

Abstract

1. Introduction

- Introducing NewsSumm, with detailed construction and annotation protocols ensuring reproducibility;

- Providing linguistic, statistical, and comparative analyses positioning NewsSumm relative to global benchmarks;

- Benchmarking state-of-the-art summarization models (BART, PEGASUS, T5) on NewsSumm with aggregate and category-specific evaluation;

- Releasing NewsSumm openly on Zenodo (https://zenodo.org/records/17670865, accessed on 21 November 2025) to support open research and broad reproducibility.

2. Related Work

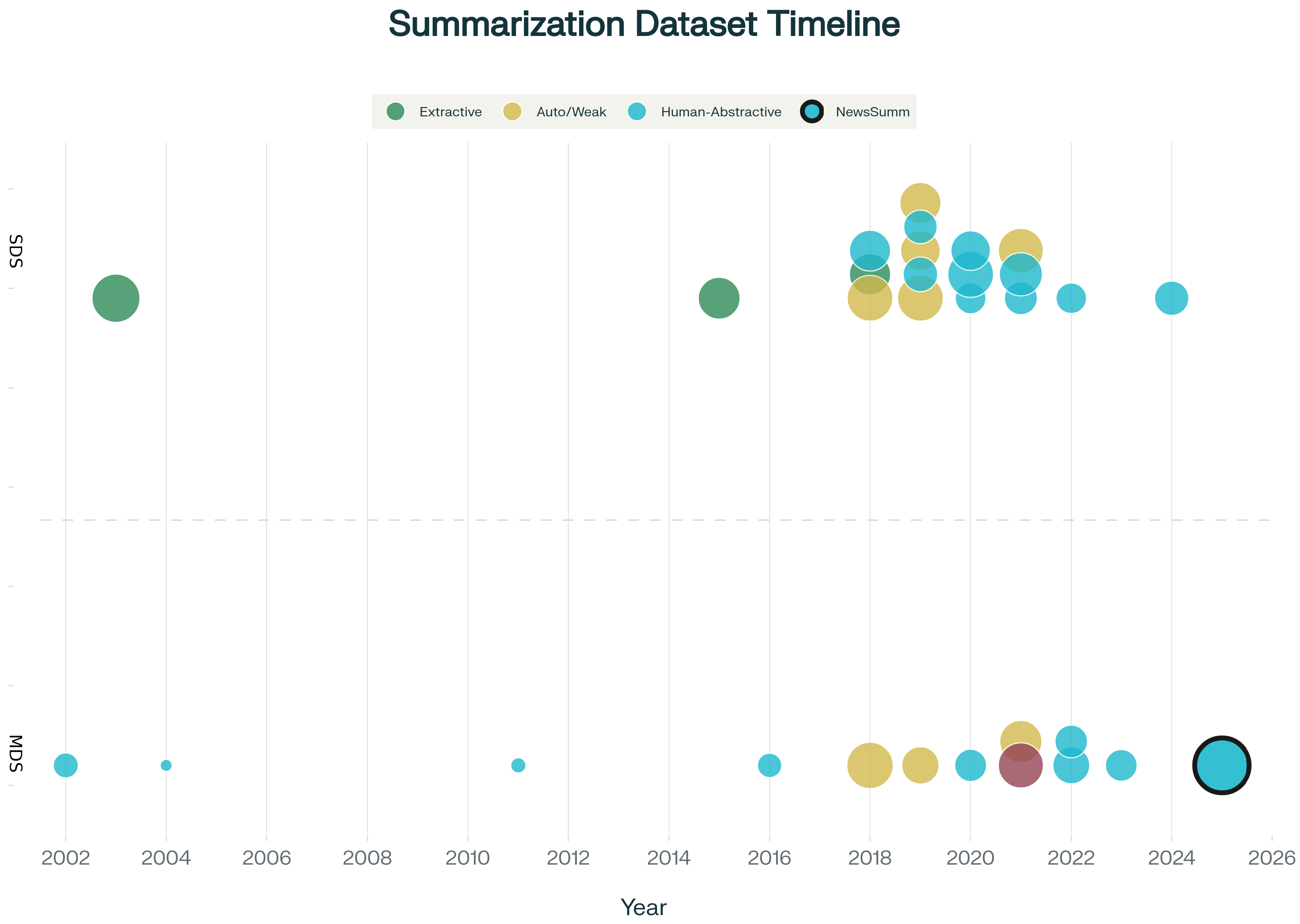

2.1. Multi-Document Summarization Datasets

2.2. Abstractive vs. Extractive Summarization Paradigms

2.3. Datasets for Factual Consistency and Evaluation

3. Dataset Construction and Annotation

3.1. Collection and Annotation Pipeline

3.2. Annotation Guidelines and Quality Assurance

3.3. Data Cleaning

3.4. Alignment with Global Standards

4. Dataset Statistics and Analysis

4.1. Temporal Distribution

4.2. Category Distribution

4.3. Number of Articles per Year from 2000 to 2025

4.4. Compression Ratios

4.5. Example Entry

4.6. Analytical Insights of NewsSumm

5. Comparison with Existing Datasets

5.1. Dataset Landscape

5.2. Linguistic and Cultural Specificity

5.3. Domain, Temporal Breadth, and Event Modeling

- (articles);

- (topical categories);

- (years).

5.4. Annotation Quality and Ethical Construction

6. Benchmarking Experiments

6.1. Experimental Setup

6.2. Results

6.3. Cross-Lingual Generalization of Western-Trained Models on NewsSumm

6.4. Extractive Baseline Comparison

7. Applications

8. Limitations

9. Future Work

10. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| MDS | Multi-Document Summarization |
| NLP | Natural Language Processing |
| AI | Artificial Intelligence |
| ML | Machine Learning |
| LLM | Large Language Model |
| ROUGE | Recall-Oriented Understudy for Gisting Evaluation |
| BLEU | Bilingual Evaluation Understudy |
| BERTScore | Bidirectional Encoder Representations from Transformers Score |
| CNN | Convolutional Neural Network |
| PEGASUS | Pre-training with Extracted Gap-sentences for Abstractive Summarization |
| T5 | Text-To-Text Transfer Transformer |

References

- DeYoung, J.; Martinez, S.C.; Marshall, I.J.; Wallace, B.C. Do Multi-Document Summarization Models Synthesize? Trans. Assoc. Comput. Linguist. 2024, 12, 1043–1062.

- Ahuja, O.; Xu, J.; Gupta, A.; Horecka, K.; Durrett, G. ASPECTNEWS: Aspect-Oriented Summarization of News Documents. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Dublin, Ireland, 22–27 May 2022; pp. 6494–6506.

- Alambo, A.; Lohstroh, C.; Madaus, E.; Padhee, S.; Foster, B.; Banerjee, T.; Thirunarayan, K.; Raymer, M. Topic-Centric Unsupervised Multi-Document Summarization of Scientific and News Articles. In Proceedings of the 2020 IEEE International Conference on Big Data (Big Data), Atlanta, GA, USA, 10–13 December 2020.

- Benedetto, I.; Cagliero, L.; Ferro, M.; Tarasconi, F.; Bernini, C.; Giacalone, G. Leveraging large language models for abstractive summarization of Italian legal news. Artif. Intell. Law 2025.

- See, A.; Liu, P.J.; Manning, C.D. Get To The Point: Summarization with Pointer-Generator Networks. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Vancouver, BC, Canada, 30 July–4 August 2017; pp. 1073–1083.

- Rao, A.; Aithal, S.; Singh, S. Single-Document Abstractive Text Summarization: A Systematic Literature Review. ACM Comput. Surv. 2025, 57, 1–37.

- Narayan, S.; Cohen, S.B.; Lapata, M. Don’t Give Me the Details, Just the Summary! Topic-Aware Convolutional Neural Networks for Extreme Summarization. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 31 October–4 November 2018; pp. 1797–1807.

- Li, H.; Zhang, Y.; Zhang, R.; Chaturvedi, S. Coverage-Based Fairness in Multi-Document Summarization. In Proceedings of the 2025 Conference of the Nations of the Americas Chapter of the Association for Computational Linguistics: Human Language Technologies (Volume 1: Long Papers), Albuquerque, NM, USA, 29 April–4 May 2025.

- Fabbri, A.; Li, I.; She, T.; Li, S.; Radev, D. Multi-News: A Large-Scale Multi-Document Summarization Dataset and Abstractive Hierarchical Model. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019.

- Langston, O.; Ashford, B. Automated Summarization of Multiple Document Abstracts and Contents Using Large Language Models. TechRxiv 2024.

- Wang, M.; Wang, M.; Yu, F.; Yang, Y.; Walker, J.; Mostafa, J. A Systematic Review of Automatic Text Summarization for Biomedical Literature and EHRs. J. Am. Med. Inform. Assoc. 2021, 28, 2287–2297.

- Yu, Z.; Sun, N.; Wu, S.; Wang, Y. Research on Automatic Text Summarization Using Transformer and Pointer-Generator Networks. In Proceedings of the 2025 4th International Symposium on Computer Applications and Information Technology (ISCAIT), Xi’an, China, 21 March 2025; pp. 1601–1604.

- Gliwa, B.; Mochol, I.; Biesek, M.; Wawer, A. SAMSum Corpus: A Human-Annotated Dialogue Dataset for Abstractive Summarization. In Proceedings of the 2nd Workshop on New Frontiers in Summarization, Hong Kong, China, 4 November 2019; pp. 70–79.

- Cao, M.; Dong, Y.; Wu, J.; Cheung, J.C.K. Factual Error Correction for Abstractive Summarization Models. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), Online, 16–20 November 2020; pp. 6251–6258.

- Kryscinski, W.; McCann, B.; Xiong, C.; Socher, R. Evaluating the Factual Consistency of Abstractive Text Summarization. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), Online, 16–20 November 2020; pp. 9332–9346.

- Luo, Z.; Xie, Q.; Ananiadou, S. Factual Consistency Evaluation of Summarization in the Era of Large Language Models. Expert Syst. Appl. 2024, 254, 124456.

- Pagnoni, A.; Balachandran, V.; Tsvetkov, Y. Understanding Factuality in Abstractive Summarization with FRANK: A Benchmark for Factuality Metrics. In Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Online, 6–11 June 2021; pp. 4812–4829.

- Wang, A.; Cho, K.; Lewis, M. Asking and Answering Questions to Evaluate the Factual Consistency of Summaries. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; pp. 5008–5020.

- Tulajiang, P.; Sun, Y.; Zhang, Y.; Le, Y.; Xiao, K.; Lin, H. A Bilingual Legal NER Dataset and Semantics-Aware Cross-Lingual Label Transfer Method for Low-Resource Languages. ACM Trans. Asian Low-Resour. Lang. Inf. Process. 2025, 24, 1–21.

- Mi, C.; Xie, S.; Li, Y.; He, Z. Loanword Identification in Social Media Texts with Extended Code-Switching Datasets. ACM Trans. Asian Low-Resour. Lang. Inf. Process. 2025, 24, 1–19.

- Mohammadalizadeh, P.; Safari, L. A Novel Benchmark for Persian Table-to-Text Generation: A New Dataset and Baseline Experiments. ACM Trans. Asian Low-Resour. Lang. Inf. Process. 2025, 24, 1–17.

- Beltagy, I.; Peters, M.E.; Cohan, A. Longformer: The Long-Document Transformer. arXiv 2020, arXiv:2004.05150.

- Fodor, J.; Deyne, S.D.; Suzuki, S. Compositionality and Sentence Meaning: Comparing Semantic Parsing and Transformers on a Challenging Sentence Similarity Dataset. Comput. Linguist. 2025, 51, 139–190.

- Raffel, C.; Shazeer, N.; Roberts, A.; Lee, K.; Narang, S.; Matena, M.; Zhou, Y.; Li, W.; Liu, P.J. Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer. J. Mach. Learn. Res. 2020, 21, 1–67.

- Zandie, R.; Mahoor, M.H. Topical Language Generation Using Transformers. Nat. Lang. Eng. 2023, 29, 337–359.

- Al-Thubaity, A. A Novel Dataset for Arabic Domain Specific Term Extraction and Comparative Evaluation of BERT-Based Models for Arabic Term Extraction. ACM Trans. Asian Low-Resour. Lang. Inf. Process. 2025, 24, 1–12.

- Kurniawan, K.; Louvan, S. IndoSum: A New Benchmark Dataset for Indonesian Text Summarization. In Proceedings of the 2018 International Conference on Asian Language Processing (IALP), Bandung, Indonesia, 15–17 November 2018.

- Sharma, E.; Li, C.; Wang, L. BIGPATENT: A Large-Scale Dataset for Abstractive and Coherent Summarization. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; pp. 2204–2213.

- Malik, M.; Zhao, Z.; Fonseca, M.; Rao, S.; Cohen, S.B. CivilSum: A Dataset for Abstractive Summarization of Indian Court Decisions. In Proceedings of the 47th International ACM SIGIR Conference on Research and Development in Information Retrieval, Washington, DC, USA, 10 July 2024; pp. 2241–2250.

- Wang, H.; Li, T.; Du, S.; Wei, X. Mixed Information Bottleneck for Location Metonymy Resolution Using Pre-trained Language Models. ACM Trans. Asian Low-Resour. Lang. Inf. Process. 2025.

- Gutiérrez-Hinojosa, S.J.; Calvo, H.; Moreno-Armendáriz, M.A.; Duchanoy, C.A. Sentence Embeddings for Document Sets in DUC 2002 Summarization Task; IEEE Dataport: Piscataway, NJ, USA, 2018.

- Rush, A.M.; Chopra, S.; Weston, J. A Neural Attention Model for Abstractive Sentence Summarization. In Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, Lisbon, Portugal, 17–21 September 2015; pp. 379–389.

- Fatima, W.; Rizvi, S.S.R.; Ghazal, T.M.; Kharma, Q.M.; Ahmad, M.; Abbas, S.; Furqan, M.; Adnan, K.M. Abstractive Text Summarization in Arabic-Like Script Using Multi-Encoder Architecture and Semantic Extraction Techniques. IEEE Access 2025, 13, 104977–104991.

- Grusky, M.; Naaman, M.; Artzi, Y. Newsroom: A Dataset of 1.3 Million Summaries with Diverse Extractive Strategies. In Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long Papers), New Orleans, LA, USA, 1–6 June 2018; pp. 708–719.

- Kim, B.; Kim, H.; Kim, G. Abstractive Summarization of Reddit Posts with Multi-level Memory Networks. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), Minneapolis, MN, USA, 2–7 June 2019; pp. 2519–2531.

- Gupta, V.; Bharti, P.; Nokhiz, P.; Karnick, H. SumPubMed: Summarization Dataset of PubMed Scientific Articles. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing: Student Research, Online, 1–6 August 2021; pp. 292–303.

- Xia, T.C.; Bertini, F.; Montesi, D. Large Language Models Evaluation for PubMed Extractive Summarisation. ACM Trans. Comput. Healthc. 2025, 3766905.

- Cohan, A.; Dernoncourt, F.; Kim, D.S.; Bui, T.; Kim, S.; Chang, W.; Goharian, N. A Discourse-Aware Attention Model for Abstractive Summarization of Long Documents. In Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 2 (Short Papers), New Orleans, LA, USA, 1–6 June 2018; pp. 615–621.

- Koupaee, M.; Wang, W.Y. WikiHow: A Large Scale Text Summarization Dataset. arXiv 2018, arXiv:1810.09305.

- Zhang, Y.; Zhang, N.; Liu, Y.; Fabbri, A.; Liu, J.; Kamoi, R.; Lu, X.; Xiong, C.; Zhao, J.; Radev, D.; et al. Fair Abstractive Summarization of Diverse Perspectives. In Proceedings of the 2024 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (Volume 1: Long Papers), Mexico City, Mexico, 16–21 June 2024.

- Huang, K.-H.; Laban, P.; Fabbri, A.; Choubey, P.K.; Joty, S.; Xiong, C.; Wu, C.-S. Embrace Divergence for Richer Insights: A Multi-Document Summarization Benchmark and a Case Study on Summarizing Diverse Information from News Articles. In Proceedings of the 2024 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (Volume 1: Long Papers), Mexico City, Mexico, 16–21 June 2024; pp. 570–593.

- Datta, D.; Soni, S.; Mukherjee, R.; Ghosh, S. MILDSum: A Novel Benchmark Dataset for Multilingual Summarization of Indian Legal Case Judgments. In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing, Singapore, 6–10 December 2023; pp. 5291–5302.

- Zhao, C.; Zhou, X.; Xie, X.; Zhang, Y. Hierarchical Attention Graph for Scientific Document Summarization in Global and Local Level. arXiv 2024, arXiv:2405.10202.

- To, H.Q.; Liu, M.; Huang, G.; Tran, H.-N.; Greiner-Petter, A.; Beierle, F.; Aizawa, A. SKT5SciSumm—Revisiting Extractive-Generative Approach for Multi-Document Scientific Summarization. arXiv 2024, arXiv:2402.17311.

- Lu, Y.; Dong, Y.; Charlin, L. Multi-XScience: A Large-Scale Dataset for Extreme Multi-Document Summarization of Scientific Articles. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), Online, 16–20 November 2020; pp. 8068–8074.

- Deutsch, D.; Dror, R.; Roth, D. A Statistical Analysis of Summarization Evaluation Metrics Using Resampling Methods. Trans. Assoc. Comput. Linguist. 2021, 9, 1132–1146.

- Jiang, X.; Dreyer, M. CCSum: A Large-Scale and High-Quality Dataset for Abstractive News Summarization. In Proceedings of the 2024 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (Volume 1: Long Papers), Mexico City, Mexico, 16–21 June 2024; pp. 7306–7336.

- Zhu, M.; Zeng, K.; Wang, M.; Xiao, K.; Hou, L.; Huang, H.; Li, J. EventSum: A Large-Scale Event-Centric Summarization Dataset for Chinese Multi-News Documents. Proc. AAAI Conf. Artif. Intell. 2025, 39, 26138–26147.

- Li, M.; Qi, J.; Lau, J.H. PeerSum: A Peer Review Dataset for Abstractive Multi-Document Summarization. arXiv 2022, arXiv:2203.01769.

- Zhong, M.; Yin, D.; Yu, T.; Zaidi, A.; Mutuma, M.; Jha, R.; Awadallah, A.H.; Celikyilmaz, A.; Liu, Y.; Qiu, X.; et al. QMSum: A New Benchmark for Query-Based Multi-Domain Meeting Summarization. In Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Online, 6–11 June 2021; pp. 5905–5921.

- Giarelis, N.; Mastrokostas, C.; Karacapilidis, N. Abstractive vs. Extractive Summarization: An Experimental Review. Appl. Sci. 2023, 13, 7620.

- Shakil, H.; Farooq, A.; Kalita, J. Abstractive Text Summarization: State of the Art, Challenges, and Improvements. Neurocomputing 2024, 603, 128255.

- Farquhar, S.; Kossen, J.; Kuhn, L.; Gal, Y. Detecting Hallucinations in Large Language Models Using Semantic Entropy. Nature 2024, 630, 625–630.

- Chrysostomou, G.; Zhao, Z.; Williams, M.; Aletras, N. Investigating Hallucinations in Pruned Large Language Models for Abstractive Summarization. Trans. Assoc. Comput. Linguist. 2024, 12, 1163–1181.

- Alansari, A.; Luqman, H. Large Language Models Hallucination: A Comprehensive Survey. arXiv 2025, arXiv:2510.06265.

- Gang, L.; Yang, W.; Wang, T.; He, Z. Corpus Fusion and Text Summarization Extraction for Multi-Feature Enhanced Entity Alignment. ACM Trans. Asian Low-Resour. Lang. Inf. Process. 2025, 24, 89.

- Fabbri, A.R.; Kryściński, W.; McCann, B.; Xiong, C.; Socher, R.; Radev, D. SummEval: Re-Evaluating Summarization Evaluation. Trans. Assoc. Comput. Linguist. 2021, 9, 391–409.

- Shi, H.; Xu, Z.; Wang, H.; Qin, W.; Wang, W.; Wang, Y.; Wang, Z.; Ebrahimi, S.; Wang, H. Continual Learning of Large Language Models: A Comprehensive Survey. ACM Comput. Surv. 2025, 58, 120.

- Hindustan Times. Available online: https://www.hindustantimes.com/ (accessed on 5 November 2025).

- Zhang, J.; Zhao, Y.; Saleh, M.; Liu, P.J. PEGASUS: Pre-Training with Extracted Gap-Sentences for Abstractive Summarization. In Proceedings of the International Conference on Machine Learning, Virtual, 13–18 July 2020.

- Lewis, M.; Liu, Y.; Goyal, N.; Ghazvininejad, M.; Mohamed, A.; Levy, O.; Stoyanov, V.; Zettlemoyer, L. BART: Denoising Sequence-to-Sequence Pre-Training for Natural Language Generation, Translation, and Comprehension. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; pp. 7871–7880.

| Model | Training Corpus | ROUGE-L (NewsSumm Test) |
|---|---|---|
| PEGASUS (base) | CNN/DailyMail, XSum | 29.4 |
| PEGASUS (fine-tuned) | NewsSumm | 39.6 |
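
To make the gap in this table concrete, the short sketch below computes the absolute and relative ROUGE-L gain using only the two scores reported above. The function name `rouge_gain` is illustrative and does not come from the paper.

```python
def rouge_gain(baseline: float, finetuned: float) -> tuple[float, float]:
    """Return (absolute gain in ROUGE points, relative gain in percent)."""
    absolute = finetuned - baseline
    relative = 100.0 * absolute / baseline
    return absolute, relative


# ROUGE-L on the NewsSumm test set, copied from the table above.
absolute, relative = rouge_gain(baseline=29.4, finetuned=39.6)
print(f"absolute gain: {absolute:.1f} points")
print(f"relative gain: {relative:.1f}%")
```

On these figures the fine-tuned model gains about 10.2 ROUGE-L points, roughly 35% relative to the Western-trained baseline.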

| Model Type | Model | ROUGE-1 | ROUGE-2 | ROUGE-L |
|---|---|---|---|---|
| Extractive | LexRank | 24.30 | 10.20 | 21.80 |
| Extractive | TextRank | 23.10 | 9.80 | 20.50 |
| Abstractive | PEGASUS | 42.87 | 20.45 | 39.62 |
| Abstractive | BART | 40.12 | 18.67 | 37.30 |
| Abstractive | T5 | 39.05 | 17.54 | 35.98 |
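
For readers unfamiliar with graph-based extractive baselines, the following is a minimal TextRank-style sketch, not the configuration used for the scores above. It assumes a naive regex sentence splitter and Jaccard word overlap as the edge weight (a simplification of the similarity in the original TextRank formulation), and the example text is invented for illustration.

```python
import re
from itertools import combinations


def split_sentences(text: str) -> list[str]:
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def textrank_summary(text: str, k: int = 2, damping: float = 0.85,
                     iterations: int = 50) -> list[str]:
    """Return the k highest-ranked sentences in their original order."""
    sentences = split_sentences(text)
    n = len(sentences)
    if n <= k:
        return sentences

    # Edge weight between two sentences = Jaccard overlap of their word sets.
    words = [set(re.findall(r"\w+", s.lower())) for s in sentences]
    weight = [[0.0] * n for _ in range(n)]
    for i, j in combinations(range(n), 2):
        union = words[i] | words[j]
        if union:
            weight[i][j] = weight[j][i] = len(words[i] & words[j]) / len(union)

    # Weighted PageRank by power iteration.
    out_sum = [sum(row) for row in weight]
    scores = [1.0 / n] * n
    for _ in range(iterations):
        scores = [
            (1 - damping) / n
            + damping * sum(
                weight[j][i] / out_sum[j] * scores[j]
                for j in range(n)
                if out_sum[j] > 0
            )
            for i in range(n)
        ]

    top = sorted(range(n), key=lambda i: scores[i], reverse=True)[:k]
    return [sentences[i] for i in sorted(top)]


# Invented toy text, used only to exercise the function.
toy_text = (
    "The state government announced a new water policy on Monday. "
    "The water policy targets drought-hit districts in the state. "
    "Opposition leaders criticised the policy as inadequate. "
    "A cricket match was held in the evening."
)
for sentence in textrank_summary(toy_text, k=2):
    print(sentence)
```

Because such a ranker can only copy source sentences, it cannot paraphrase or merge information across sentences, which is one plausible reason for the gap between the extractive and abstractive rows.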

| Step | Responsible | Key Criteria | Outcome |
|---|---|---|---|
| Annotator Training | Senior Team | Comprehension, exercises | Eligibility |
| Manual Summarization | Annotator | Abstraction, factuality | Draft summary |
| Peer Review | Peer Annotator | Coherence, adherence | Feedback/Approve |
| Senior Audit | Senior Editor | Protocol adherence | Approve/Revision |
| Feedback & Revision | Annotator | Incorporate feedback | Final/Resubmit |
| Final Check | Senior Team | All criteria met | Accept/Exclude |

| Attribute | Value (% unless noted) |
|---|---|
| University degree | 61 |
| Journalism/writing experience | 34 |
| English C1+ proficiency | 91 |
| Hindi fluency | 80 |
| Geographically diverse | 21 states |

| Metric | Value |
|---|---|
| Total articles | 317,498 |
| Total sentences in articles | 4,652,998 |
| Total words in articles | 106,112,021 |
| Total tokens in articles | 123,674,109 |
| Total sentences in summaries | 1,481,410 |
| Total words in summaries | 30,268,515 |
| Total tokens in summaries | 34,845,065 |
| Average sentences per article | 14.66 |
| Average words per article | 334.21 |
| Average tokens per article | 389.53 |
| Average sentences per summary | 4.67 |
| Average words per summary | 95.45 |
| Average tokens per summary | 109.82 |
| Time span | 2000–2025 |
| Number of unique categories | 5121 |
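
As a worked illustration of how these averages relate to compression, the sketch below divides the average article length by the average summary length for each unit. The definition used here (source length over summary length) is an assumption, since conventions differ, and a ratio of averages is not the same as the mean of per-article ratios.

```python
# Average lengths copied from the statistics table above.
ARTICLE_AVG = {"sentences": 14.66, "words": 334.21, "tokens": 389.53}
SUMMARY_AVG = {"sentences": 4.67, "words": 95.45, "tokens": 109.82}


def compression_ratio(source_len: float, summary_len: float) -> float:
    """Source length divided by summary length (assumed definition)."""
    if summary_len <= 0:
        raise ValueError("summary length must be positive")
    return source_len / summary_len


ratios = {
    unit: compression_ratio(ARTICLE_AVG[unit], SUMMARY_AVG[unit])
    for unit in ARTICLE_AVG
}
for unit, ratio in ratios.items():
    print(f"{unit}: {ratio:.2f}x")
```

Under this definition, the summaries are on average roughly 3.1 to 3.5 times shorter than their source articles, depending on the unit.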

| Field | Article Sample |
|---|---|
| News published in prominent newspapers of India | Yes |
| Newspaper Name | Hindustan Times [59] |
| Published Date | 11 May 2017 |
| URL | Link to Article (https://www.hindustantimes.com/more-lifestyle/the-truth-is-out-there-tales-from-india-s-ufo-investigators/story-9crhwh8tiWKU4LSvwCfrpM.html#google_vignette/ accessed on 10 October 2025) |
| Headline | The truth is out there: Tales from India’s UFO investigators |
| Article Text | At the stroke of the midnight hour, when the world slept, Sunita Yadav awoke to a levitating alien. She watched, petrified, as it hovered a foot above the ground just behind her home. Standing over 4ft tall, with grey skin and big, black eyes, it proceeded from the Yadavs’ backyard toward their front door before—as her son Hitesh remembers—it “just vanished”...... |
| Human Summary | A young tech student in Gurgaon, Hitesh Yadav, has dedicated years to investigating UFO sightings after claiming to witness a levitating alien as a child in Panchkula. Through the Disclosure Team India and UFO Magazine India, he and others collect and assess reports, blending scientific curiosity and skepticism. Fellow enthusiast Ramkrishan Vaishnav, inspired by early scientific pursuits and regional sightings, continues research while probing India’s unique patterns of UFO phenomena, especially in Rajasthan and Ladakh. Both exemplify India’s growing, reasoned approach to ufology. |
| News Category | National News |
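
One way to hold an entry like the one above in code is a small typed record. This is only a sketch: the class name `NewsSummEntry` and its field names are hypothetical and may not match the column names in the released files, and the article text is left truncated exactly as in the table.

```python
from dataclasses import dataclass
from datetime import date, datetime


@dataclass(frozen=True)
class NewsSummEntry:
    """Hypothetical record mirroring the fields of the sample entry."""
    newspaper: str
    published: date
    url: str
    headline: str
    article_text: str
    human_summary: str
    category: str


def parse_published_date(raw: str) -> date:
    """Parse dates written like '11 May 2017' (English month names)."""
    return datetime.strptime(raw, "%d %B %Y").date()


entry = NewsSummEntry(
    newspaper="Hindustan Times",
    published=parse_published_date("11 May 2017"),
    url=(
        "https://www.hindustantimes.com/more-lifestyle/"
        "the-truth-is-out-there-tales-from-india-s-ufo-investigators/"
        "story-9crhwh8tiWKU4LSvwCfrpM.html#google_vignette/"
    ),
    headline="The truth is out there: Tales from India’s UFO investigators",
    article_text="At the stroke of the midnight hour, when the world slept, "
                 "Sunita Yadav awoke to a levitating alien. ...",
    human_summary="A young tech student in Gurgaon, Hitesh Yadav, has dedicated "
                  "years to investigating UFO sightings ...",
    category="National News",
)
print(entry.published.isoformat(), entry.category)
```

Making the record frozen keeps entries immutable once loaded, which helps when the same entry is shared across training and evaluation code.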

| Dataset | Size (Articles/Clusters) | Human-Annotated | Multi-Document | Years | Categories | Domain | Accessibility |
|---|---|---|---|---|---|---|---|
| NewsSumm (Proposed) | 317,498 | Yes | Yes | 2000–2025 | 20+ | Indian English | Open (on request) |
| CNN/DailyMail | 287,227 | Extracted | No | 2007–2015 | 1 | Western News | Open |
| XSum | 226,711 | Yes | No | 2016–2018 | 1 | Western News | Open |
| Multi-News | 56,216 clusters | Auto | Yes | 2013–2018 | 1 | Western News | Open |
| WikiSum | 1.7 M clusters | Auto | Yes | Wiki-wide | Many | Wikipedia | Open |
| CCSum | 1 M clusters | Auto/Human | Yes | 2016–2021 | Many | Multilingual News | Open |
| EventSum | 330,000 clusters | Auto | Yes | 2000–2022 | Events | Chinese News | Open |
| CivilSum | 50,000 cases | Yes | Yes | 1950–2020 | Legal | Indian Courts | Open |
| WikiHow | 230,843 | Extracted | No | — | How-To | Instructional | Open |
| SAMSum | 16,000 dialogues | Yes | No | 2015–2019 | Dialogs | Conversational | Open |

| Feature | NewsSumm (Indian English) | CNN/XSum (Western English) |
|---|---|---|
| Vocabulary | crore, lakh, petrol, prepone, dowry, ration card, RTI, Lok Sabha, Chief Minister | million, gasoline, postpone, welfare, Congress, Governor, Senate |
| Formal Register | Often formal; influenced by British usage (e.g., “thereafter”, “amongst”, “pursuant”) | More casual; influenced by American English (e.g., “after that”, “among”) |
| Code-Switching | Frequent Hindi/Urdu terms or cultural words (e.g., “aam aadmi”, “swachh”) | Rarely present; mostly standard English |
| Naming Conventions | Frequent use of full names and titles (e.g., “Shri Narendra Modi”, “IAS officer”) | Typically shorter names or titles (e.g., “President Biden”) |
| Sentence Structure | Longer, complex, hierarchical clauses; formal style | Shorter, simpler, conversational structure |
| Idiomatic Usage | Region-specific idioms (e.g., “vote bank”, “bandh”, “fast unto death”) | Standardized idioms (e.g., “campaign promise”, “strike”, “protest”) |
| Numerical References | Uses crore, lakh; large-unit numerals | Uses thousand, million, billion |
| Political & Administrative References | Lok Sabha, Rajya Sabha, Panchayat, MLA, Collector, Tehsildar | Congress, Parliament, Senate, Mayor, Governor |
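
The numerical-reference row can be made concrete with a small converter. One lakh is 100,000 and one crore is 10,000,000; the sketch below expands such expressions into plain numerals. The function name and the example sentence are illustrative, and the pattern deliberately handles only simple forms such as "2.5 crore", not comma-grouped figures like "1,200 crore".

```python
import re

# Indian numbering units: 1 lakh = 10^5, 1 crore = 10^7.
INDIAN_UNITS = {"lakh": 100_000, "crore": 10_000_000}

_UNIT_PATTERN = re.compile(r"(\d+(?:\.\d+)?)\s*(lakh|crore)s?\b", re.IGNORECASE)


def expand_indian_numerals(text: str) -> str:
    """Rewrite '<number> lakh/crore' as a plain numeral with separators."""
    def _replace(match: re.Match) -> str:
        value = float(match.group(1)) * INDIAN_UNITS[match.group(2).lower()]
        return f"{value:,.0f}"

    return _UNIT_PATTERN.sub(_replace, text)


print(expand_indian_numerals("Rs 2.5 crore was sanctioned for 3 lakh households."))
# Rs 25,000,000 was sanctioned for 300,000 households.
```

A normalisation step of this kind is one option for comparing model output against Western-style references, though it also removes exactly the regional usage the dataset is meant to capture.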

| Model | ROUGE-1 | ROUGE-2 | ROUGE-L |
|---|---|---|---|
| PEGASUS | 42.87 | 20.45 | 39.62 |
| BART | 40.12 | 18.67 | 37.30 |
| T5 | 39.05 | 17.54 | 35.98 |
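
For reference, ROUGE-L is based on the longest common subsequence (LCS) between a candidate summary and a reference. The sketch below is a simplified sentence-level version that assumes lowercased whitespace tokenisation and no stemming, so its values are not directly comparable to the scores in the table, which were presumably produced with a standard ROUGE package and are reported on a 0 to 100 scale. The two example sentences are invented.

```python
def lcs_length(a: list[str], b: list[str]) -> int:
    """Length of the longest common subsequence, via row-by-row DP."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            curr.append(prev[j - 1] + 1 if x == y else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l(reference: str, candidate: str) -> dict[str, float]:
    """Sentence-level ROUGE-L precision, recall and F1 on a 0-1 scale."""
    ref = reference.lower().split()
    cand = candidate.lower().split()
    lcs = lcs_length(ref, cand)
    if lcs == 0:
        return {"precision": 0.0, "recall": 0.0, "f1": 0.0}
    precision = lcs / len(cand)
    recall = lcs / len(ref)
    f1 = 2 * precision * recall / (precision + recall)
    return {"precision": precision, "recall": recall, "f1": f1}


# Invented example: the LCS is "the court the law" (4 tokens out of 6 each).
scores = rouge_l(
    reference="the court upheld the new law",
    candidate="the court struck down the law",
)
print({name: round(value, 3) for name, value in scores.items()})
```

The example also shows a known weakness of overlap metrics: the candidate states the opposite outcome yet still scores about 0.67, which is why factual-consistency metrics are reported alongside ROUGE.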

| Category | PEGASUS ROUGE-L | PEGASUS FactCC | BART ROUGE-L | BART FactCC | T5 ROUGE-L | T5 FactCC |
|---|---|---|---|---|---|---|
| Politics | 44.1 | 78.2 | 41.3 | 73.8 | 42.5 | 75.1 |
| Business | 47.3 | 81.0 | 44.5 | 76.5 | 45.6 | 77.8 |
| National | 45.9 | 79.6 | 43.0 | 75.2 | 44.2 | 76.5 |
| Sports | 48.7 | 82.5 | 46.2 | 78.9 | 47.4 | 80.3 |
| International | 42.2 | 76.3 | 39.9 | 70.4 | 41.0 | 73.1 |
| Health | 46.1 | 80.1 | 43.7 | 75.0 | 44.8 | 77.0 |
| Technology | 45.0 | 79.5 | 43.3 | 73.9 | 43.9 | 75.4 |
| Education | 44.8 | 77.9 | 41.5 | 72.1 | 43.0 | 74.2 |
| Environment | 43.5 | 76.5 | 40.1 | 71.8 | 42.2 | 73.6 |
| Law | 45.3 | 78.7 | 42.0 | 74.0 | 43.6 | 75.9 |
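
The per-category pattern can be summarised with a few lines of code. The sketch below uses only the ROUGE-L columns of the table above and computes, for each model, the unweighted mean over the ten listed categories together with its weakest and strongest category. The helper names are illustrative, and an unweighted mean over ten categories is not expected to equal the aggregate test-set score.

```python
# ROUGE-L per category as (PEGASUS, BART, T5), copied from the table above.
ROUGE_L = {
    "Politics": (44.1, 41.3, 42.5),
    "Business": (47.3, 44.5, 45.6),
    "National": (45.9, 43.0, 44.2),
    "Sports": (48.7, 46.2, 47.4),
    "International": (42.2, 39.9, 41.0),
    "Health": (46.1, 43.7, 44.8),
    "Technology": (45.0, 43.3, 43.9),
    "Education": (44.8, 41.5, 43.0),
    "Environment": (43.5, 40.1, 42.2),
    "Law": (45.3, 42.0, 43.6),
}
MODELS = ("PEGASUS", "BART", "T5")


def macro_average(scores: dict, model_index: int) -> float:
    """Unweighted mean of one model's scores across categories."""
    return sum(row[model_index] for row in scores.values()) / len(scores)


def extremes(scores: dict, model_index: int) -> tuple[str, str]:
    """Return (lowest-scoring category, highest-scoring category)."""
    ranked = sorted(scores, key=lambda category: scores[category][model_index])
    return ranked[0], ranked[-1]


for index, model in enumerate(MODELS):
    worst, best = extremes(ROUGE_L, index)
    print(f"{model}: mean={macro_average(ROUGE_L, index):.2f}, "
          f"worst={worst}, best={best}")
```

For all three models, Sports is the highest-scoring category and International the lowest on ROUGE-L.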

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Motghare, M.; Agarwal, M.; Agrawal, A. NewsSumm: The World’s Largest Human-Annotated Multi-Document News Summarization Dataset for Indian English. Computers 2025, 14, 508. https://doi.org/10.3390/computers14120508
