Evaluating Deployment of Deep Learning Model for Early Cyberthreat Detection in On-Premise Scenario Using Machine Learning Operations Framework

Abstract

1. Introduction

- comparison of open-source MLOps platforms and selection of the most suitable one for our use case, which represents deployment in a small to mid-size organization that requires a high level of data privacy and security and has limited hardware and personnel capacity;

- evaluation of the suitability of deploying the model on the selected MLOps platform;

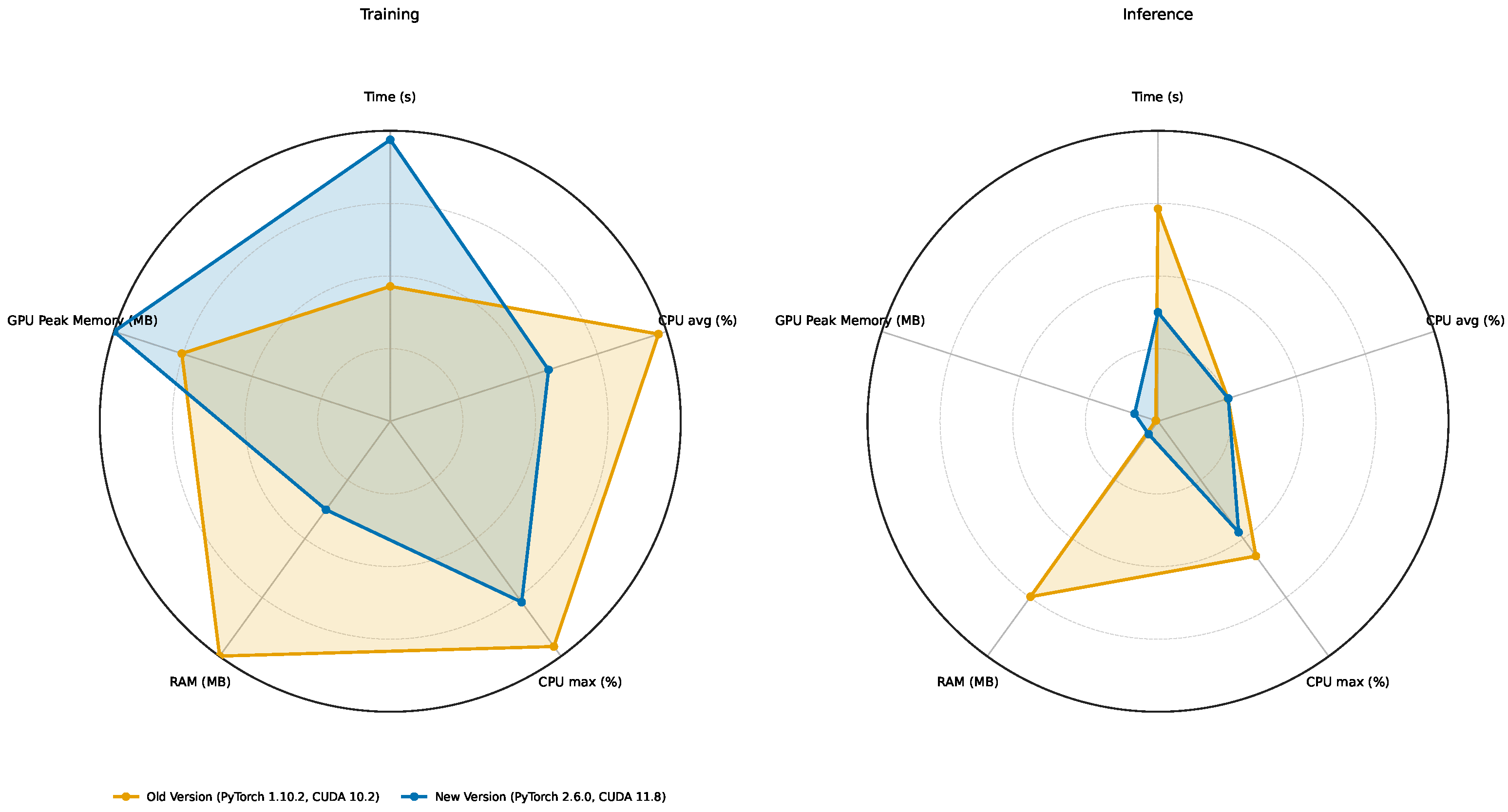

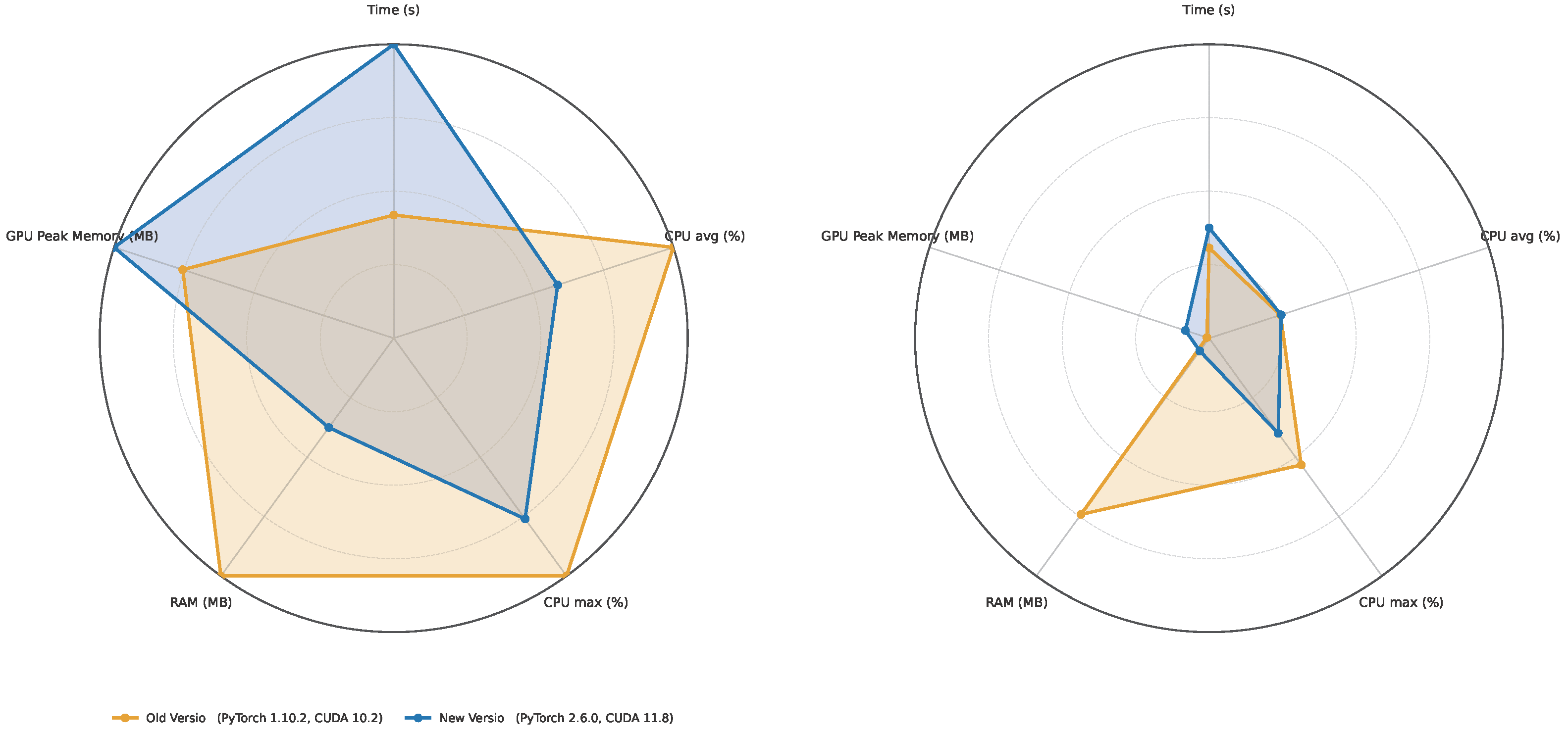

- examination of the influence of various factors, particularly the deployment platform and the versions of software libraries, on the overall performance of the solution, evaluated through metrics such as runtime and CPU, RAM, and GPU utilization.

2. Background

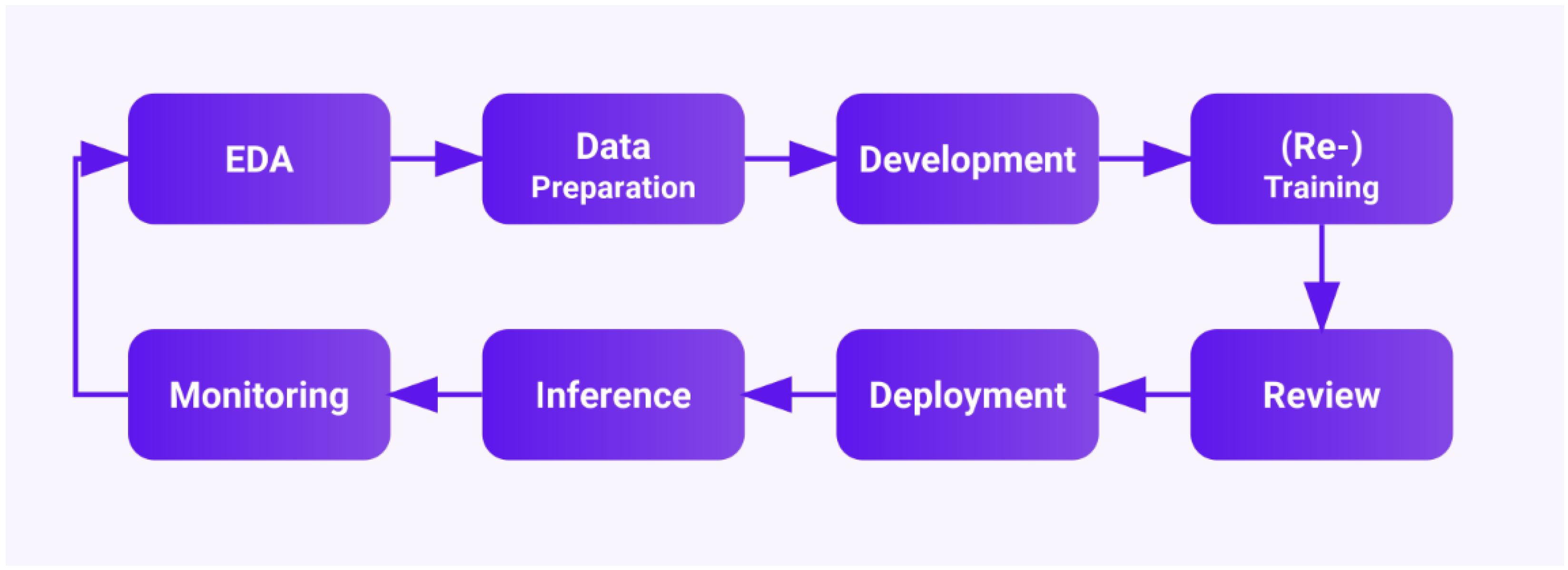

2.1. DevOps Core Principles and MLOps Integration

- CI/CD adapted for ML workflows. In MLOps, CI means not only running unit tests on software but also running model training and validation tests automatically on each code change. Similarly, CD means automatically deploying trained models to production after validation [7]. An ideal MLOps CI/CD pipeline might work as follows:

- code and/or data changes trigger a pipeline run;

- data validation and preprocessing steps are executed;

- the model is evaluated against test datasets;

- if metrics meet criteria, the model is packaged and deployed to a production serving environment;

- the model performance in production is continuously monitored for concept drift or anomalies.

- Additional versioning. Unlike DevOps, which primarily versions source code, MLOps must handle dataset versioning and model versioning in addition to code, since the behavior of a model depends both on code and data [8]. The MLOps practice incorporates tools like data version control (DVC), Pachyderm, or platform-specific solutions (e.g., ClearML Data, MLflow’s model registry) to track dataset versions and model binaries. ClearML, for example, tags each dataset and model with a unique ID and retains lineage (which data and code produced which model), enabling teams to roll back to an earlier model or dataset if needed. This is analogous to Git for code but applied to large binary data. Moreover, model versioning in MLOps includes storing model metadata such as training parameters and evaluation metrics alongside the model file, often in a model registry. This registry concept (e.g., SageMaker Model Registry or MLflow Model Registry) integrates with CI/CD so that a certain model version can be automatically deployed or compared.

- Automated testing and validation. MLOps requires testing of model quality in addition to code functionality, ensuring the model’s predictions are accurate [9], usually by testing against specific metrics. For example, an MLOps pipeline might include a step to compute the model’s accuracy, precision/recall, or false-positive rate on a hold-out security test set.

- Continuous monitoring and feedback loops. Because ML models can degrade over time as data distributions shift, MLOps emphasizes continuous monitoring of model prediction distributions, data drift, and performance metrics [10]. In the context of cyberthreat detection, this means that if model performance drops (for example, the detection rate falls below a defined threshold), the MLOps pipeline can alert engineers or start retraining with the latest data on available threats.
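The validation and monitoring principles above can be sketched as a single quality gate that is applied both before deployment and to metrics collected in production. This is a minimal illustration only: the thresholds `MIN_RECALL` and `MAX_FPR`, the function names, and the toy labels are hypothetical and are not taken from the evaluated system.

```python
from dataclasses import dataclass


@dataclass
class Metrics:
    precision: float
    recall: float
    false_positive_rate: float


def evaluate(y_true, y_pred):
    """Compute precision, recall, and false-positive rate for binary labels (1 = malicious)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    fpr = fp / (fp + tn) if fp + tn else 0.0
    return Metrics(precision, recall, fpr)


# Hypothetical acceptance criteria; real values depend on the deployment.
MIN_RECALL = 0.90
MAX_FPR = 0.05


def passes_gate(m):
    """Return True when the model meets both acceptance criteria."""
    return m.recall >= MIN_RECALL and m.false_positive_rate <= MAX_FPR


def next_action(m):
    """Map monitored metrics to a pipeline action."""
    return "keep_serving" if passes_gate(m) else "alert_and_retrain"


# Toy hold-out labels: 4 malicious and 6 benign samples.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 0, 1]
metrics = evaluate(y_true, y_pred)
print(metrics, next_action(metrics))
```

Using the same gate for pre-deployment validation and for production monitoring keeps the acceptance criteria in one place, so a drop in detection rate triggers the same decision logic that originally admitted the model.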

- DataOps focuses on data engineering operations, addressing challenges in data quality, preparation, and pipeline automation [9].

- ModelOps concentrates on the lifecycle management of analytical models in production environments [9].

- AIOps describes the use of AI systems in IT operations, although in industry MLOps has been the most widely used term since about 2021 [9].
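Returning to the versioning principle listed earlier, the idea of tagging datasets and models with unique IDs and retaining lineage can be illustrated with a small in-memory registry. This is a sketch under stated assumptions: the content-hash IDs, the `register_model` and `latest_for_dataset` helpers, and the metadata fields are hypothetical and do not reproduce the API of ClearML, MLflow, or DVC.

```python
import hashlib


def content_id(payload: bytes) -> str:
    """Return a short, deterministic ID derived from the content itself."""
    return hashlib.sha256(payload).hexdigest()[:12]


def register_model(registry, model_bytes, dataset_bytes, code_version, params, metrics):
    """Store model metadata together with the IDs of the data and code that produced it."""
    entry = {
        "model_id": content_id(model_bytes),
        "dataset_id": content_id(dataset_bytes),
        "code_version": code_version,
        "params": params,
        "metrics": metrics,
    }
    registry.append(entry)
    return entry


def latest_for_dataset(registry, dataset_id):
    """Find the most recently registered model trained on a given dataset version."""
    matches = [e for e in registry if e["dataset_id"] == dataset_id]
    return matches[-1] if matches else None


# Toy byte strings stand in for serialized datasets and model files.
registry = []
data_v1, data_v2 = b"logs-2024", b"logs-2025"
m1 = register_model(registry, b"weights-a", data_v1, "abc123", {"lr": 0.001}, {"recall": 0.91})
m2 = register_model(registry, b"weights-b", data_v2, "abc123", {"lr": 0.001}, {"recall": 0.94})

# Rolling back means looking up the model tied to the earlier dataset version.
rollback = latest_for_dataset(registry, content_id(data_v1))
print(rollback["model_id"], rollback["metrics"])
```

Deriving IDs from content makes identical data map to the same version, while the stored `dataset_id` and `code_version` record which data and code produced which model.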

2.2. MLOps Maturity Models

- Level 0: Manual processes dominate model development and deployment, with limited automation and reproducibility.

- Level 1: Partial automation exists within the ML pipeline, typically focusing on either training or deployment processes.

- Level 2: Full CI/CD pipeline automation integrates all ML components, enabling continuous training, testing, and deployment of models.

- Level 1: No MLOps implementation; processes remain ad hoc and manual.

- Level 2: DevOps practices exist for software development, but they are not extended to ML components.

- Level 3: Automated model training capabilities are established.

- Level 4: Automated model deployment supplements the automated training process.

- Level 5: Complete automation throughout the ML lifecycle incorporates continuous training, deployment, and monitoring with feedback loops.

2.3. Architectural Approaches in MLOps

3. MLOps for Cyberthreat Detection

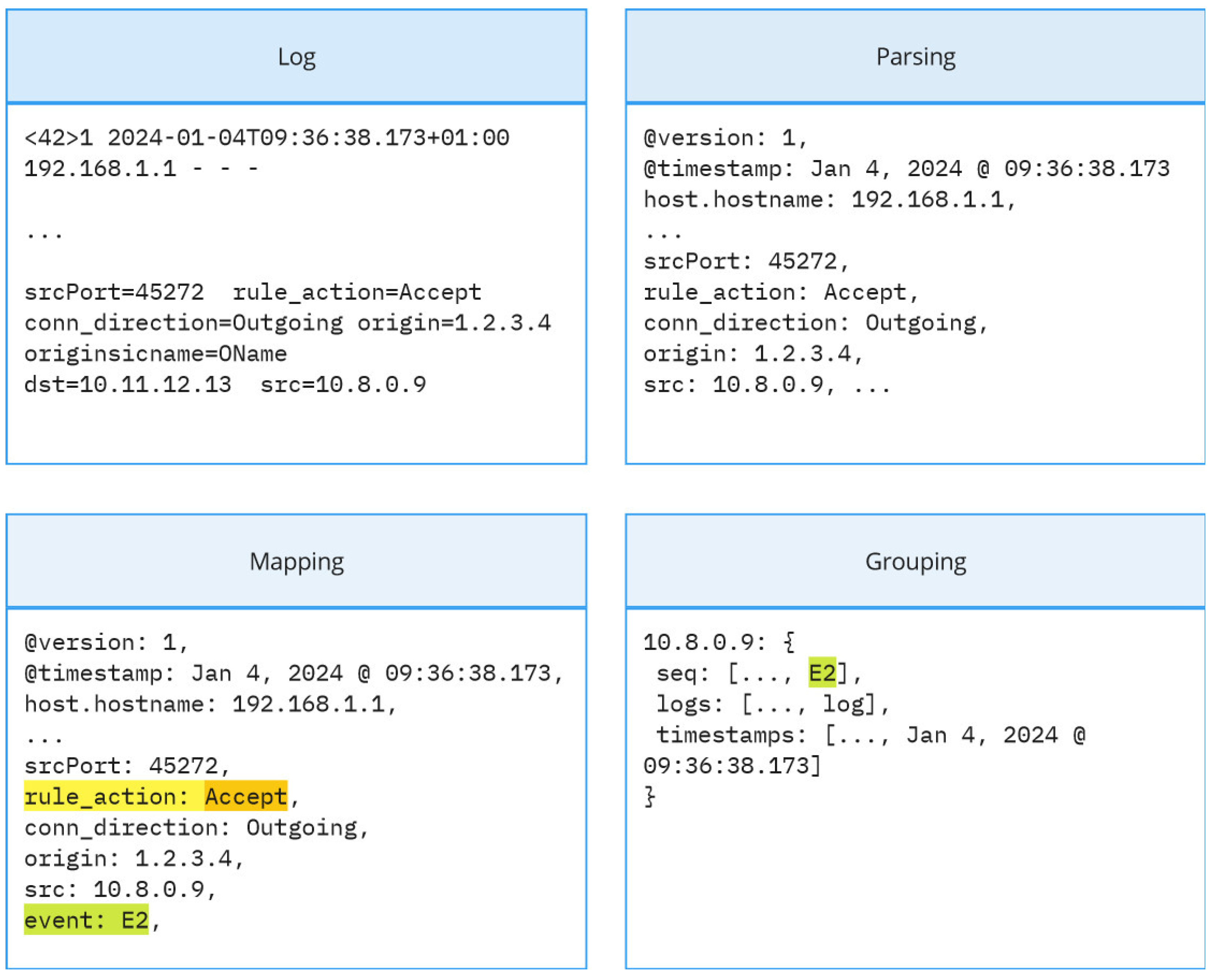

3.1. Challenges in Threat Detection Through Log Analysis

3.2. MLOps Requirements for Threat Detection Systems

3.3. Model for Cyberthreat Detection

- Malicious activity: 4,550,022 logs (26.34% of the dataset)

- Benign activity: 12,722,608 logs (73.66% of the dataset)
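The class proportions follow directly from the two counts, as the short check below shows. The variable names are ours; only the two log counts come from the dataset description.

```python
malicious = 4_550_022
benign = 12_722_608

total = malicious + benign
malicious_share = 100 * malicious / total
benign_share = 100 * benign / total
imbalance_ratio = benign / malicious  # benign logs per malicious log

print(f"total logs:      {total:,}")
print(f"malicious share: {malicious_share:.2f}%")
print(f"benign share:    {benign_share:.2f}%")
print(f"benign:malicious ratio: {imbalance_ratio:.2f}:1")
```

The dataset is therefore moderately imbalanced toward benign activity, which is worth keeping in mind when interpreting accuracy-style metrics.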

- is the early detection rate for the entire dataset.

- is the early detection rate for the i-th true positive instance.

- is the total number of true positive instances.
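The three definitions above are consistent with a dataset-level metric obtained by averaging per-instance values. One plausible reading, stated here as an assumption because the symbols and the formula itself are not shown in this section, is the arithmetic mean over true positive instances, writing $\mathrm{EDR}$ for the dataset-level rate, $\mathrm{EDR}_i$ for the rate of the $i$-th true positive instance, and $N$ for the number of true positive instances:

```latex
\mathrm{EDR} = \frac{1}{N} \sum_{i=1}^{N} \mathrm{EDR}_i
```

Under this reading, every correctly detected malicious sequence contributes equally to the overall score, regardless of its length.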

4. On-Premise Deployment and MLOps Technologies

4.1. Overview of Current MLOps Software Ecosystem

4.2. Comparison of MLOps Platforms for On-Premise Deployment

- Kubeflow offers comprehensive capabilities, but requires substantial infrastructure expertise and Kubernetes proficiency [21];

- ClearML provides simplified deployment procedures while maintaining robust experiment tracking and orchestration capabilities [22];

- MLflow [23] and OpenShift can be integrated to construct complete pipelines.

- MLflow is an open-source platform for managing the ML lifecycle, including experimentation, reproducibility, deployment, and model registry.

- Kubeflow is a Kubernetes-native platform for orchestrating ML workflows with dedicated services for training, pipelines, and notebook management.

- ClearML is a unified platform for the entire ML lifecycle with modular components for experiment tracking, orchestration, data management, and model serving.

- OpenShift is an enterprise Kubernetes platform with MLOps capabilities through integrations with Kubeflow and other ML tools.

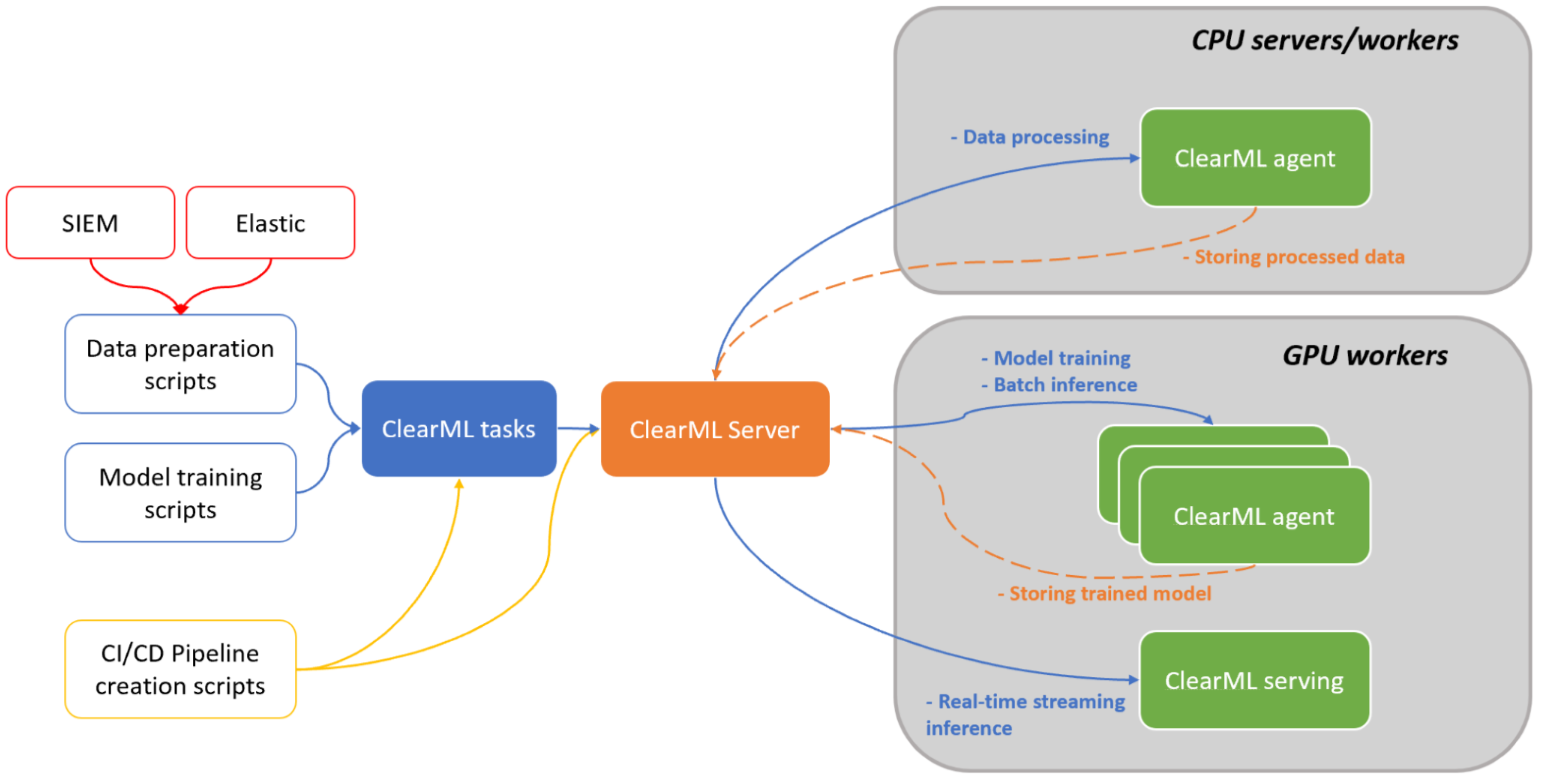

4.3. ClearML and Its Capabilities for On-Premise Deployment

4.3.1. Architecture and Components

4.3.2. Orchestration and Pipelines

4.3.3. Model Serving and Deployment

5. Materials and Methods

5.1. Implementation Framework

5.2. Experimental Setup

- A traditional methodology without MLOps integration, using direct Python script execution and manual artifact management;

- A ClearML-integrated pipeline with orchestration and Agent tasking.

- A version of PyTorch and NVIDIA CUDA for which our model was initially developed—PyTorch 1.10.2/CUDA 10.2;

- The substantially newer version that still supported the original source code—PyTorch 2.6.0/CUDA 11.8.

- Bare metal with the older framework;

- Bare metal with the newer framework;

- Containerized execution with the older framework;

- Containerized execution with the newer framework.
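The four configurations are the cross product of two deployment modes and two framework versions. A small sketch of that experiment matrix is given below; the dictionary keys and the `phases` list are our own naming, with training and inference taken from the phases reported in the results.

```python
from itertools import product

deployments = ["bare metal", "container"]
frameworks = [
    {"pytorch": "1.10.2", "cuda": "10.2"},  # version the model was developed for
    {"pytorch": "2.6.0", "cuda": "11.8"},   # newer version still supporting the code
]
phases = ["training", "inference"]

# Every deployment mode is paired with every framework version.
configurations = [{"deployment": d, **f} for d, f in product(deployments, frameworks)]

# Each configuration is measured once per phase.
runs = [{"phase": p, **c} for p, c in product(phases, configurations)]

for run in runs:
    print(run)
```

Enumerating the matrix explicitly makes it easy to confirm that each factor is varied while the other is held fixed, which is what allows deployment effects to be separated from version effects.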

- Overall time of process execution in seconds;

- Average CPU utilization (%);

- Maximum CPU utilization (%);

- RAM usage (MB);

- Peak GPU Memory Utilization (MB).
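As a rough illustration of how such metrics can be collected around a single run, the sketch below wraps a callable and records wall time, the process CPU share, peak resident memory, and, when PyTorch with CUDA is available, peak GPU memory. It is not the measurement tooling used in this study: the `measure` helper is hypothetical, it relies on the Unix-only `resource` module, and it reports only an average CPU share, since capturing maximum CPU utilization would require periodic sampling.

```python
import resource
import time

try:  # PyTorch is optional here; GPU statistics are skipped without it.
    import torch
except ImportError:
    torch = None


def measure(fn, *args, **kwargs):
    """Run fn once and report time, average CPU share, peak RSS, and peak GPU memory."""
    use_gpu = torch is not None and torch.cuda.is_available()
    if use_gpu:
        torch.cuda.reset_peak_memory_stats()

    wall_start = time.perf_counter()
    cpu_start = time.process_time()
    result = fn(*args, **kwargs)
    wall = time.perf_counter() - wall_start
    cpu = time.process_time() - cpu_start

    # ru_maxrss is reported in kilobytes on Linux (bytes on macOS).
    peak_rss_mb = resource.getrusage(resource.RUSAGE_SELF).ru_maxrss / 1024
    gpu_peak_mb = torch.cuda.max_memory_allocated() / 2**20 if use_gpu else None

    stats = {
        "time_s": wall,
        # CPU time over wall time; can exceed 100 when several cores are busy.
        "cpu_avg_pct": 100 * cpu / wall if wall > 0 else 0.0,
        "ram_peak_mb": peak_rss_mb,
        "gpu_peak_mb": gpu_peak_mb,
    }
    return result, stats


# Toy workload standing in for a training or inference run.
result, stats = measure(sum, range(1_000_000))
print(result, stats)
```

Note that `torch.cuda.max_memory_allocated()` counts memory allocated by PyTorch tensors on the current device, which may differ from the figure reported by driver-level tools.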

6. Results and Discussion

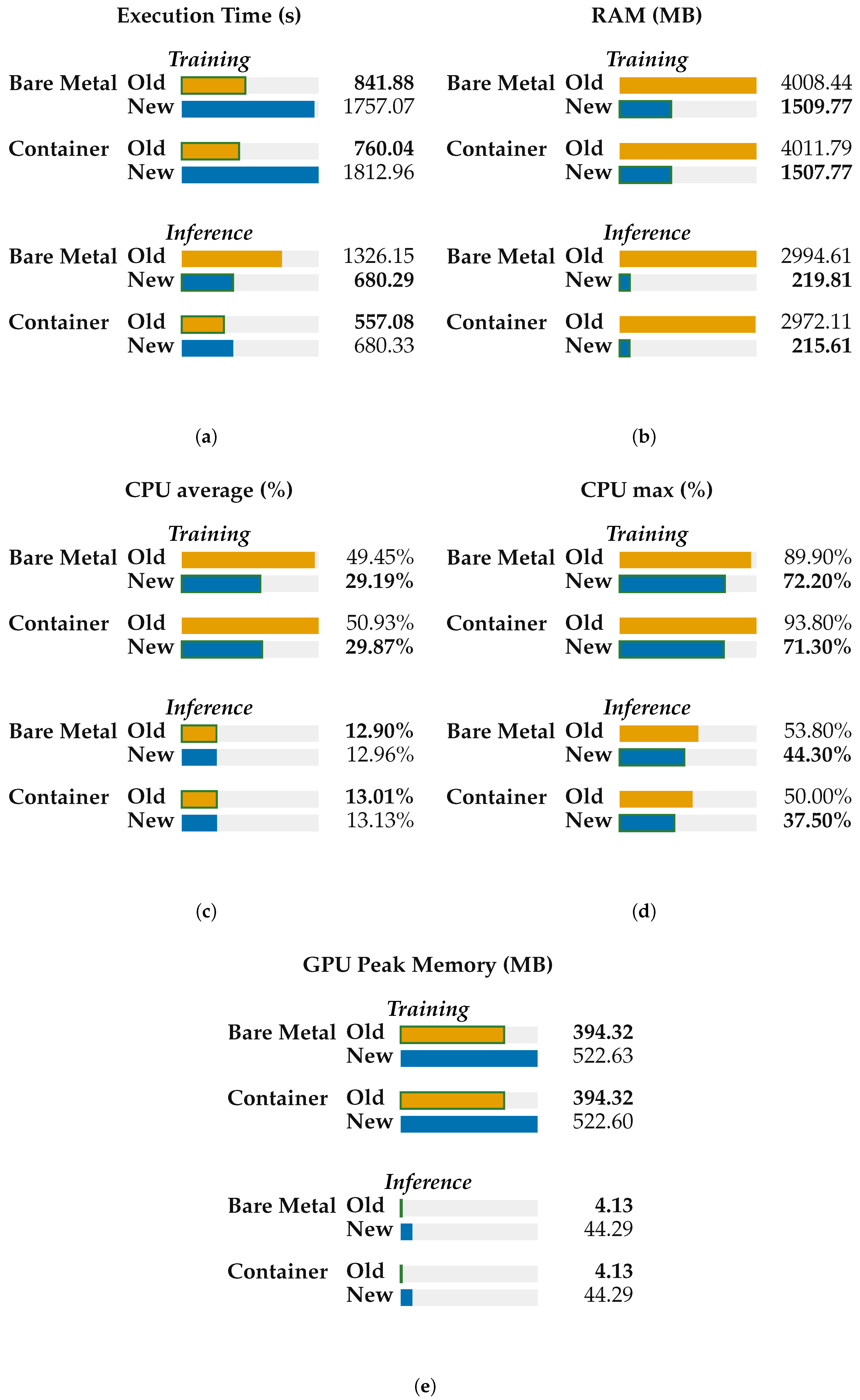

6.1. Comparison of Traditional and ClearML-Integrated Approaches in Hardware Resource Utilization

- Deployment vs. runtime. Containerization does not uniformly outperform bare metal. For training, the container is faster only with PyTorch 1.10.2/CUDA 10.2 (∼9.7% faster); with PyTorch 2.6.0/CUDA 11.8, it is slightly slower (∼3.2%). For inference, the container is much faster with PyTorch 1.10.2 (∼58.0%), while with PyTorch 2.6.0, the container and the bare metal are at parity (∼0% difference). The framework version exerts a larger influence on runtime than the deployment model.

- Resource utilization across deployments. At a fixed framework version, containers and bare metal show near-identical resource profiles.

- CPU: During training, the average CPU utilization falls from ∼50% (1.10.2) to ∼29% (2.6.0) in both deployments (a ∼41% drop); the maximum CPU also decreases (by 20–24%). During inference, the average CPU remains ∼13% for all cases; container CPU maxima are lower than the bare metal by ∼7% (1.10.2) and ∼15% (2.6.0).

- Host RAM: Moving from 1.10.2 to 2.6.0 significantly reduces RAM consumption in both deployments (training: ∼4.0 GB → ∼1.5 GB, ∼62% reduction; inference: ∼3.0 GB → ∼0.22 GB, ∼93% reduction). At a fixed version, the differences between container and bare-metal RAM are within ∼2%.

- GPU peak memory: At a fixed version, container and bare metal are effectively identical. Across versions, 2.6.0 increases peak GPU memory (training: ∼+33%; inference: ∼10.7×), independent of deployment.

- Version dominance. Changing the framework from PyTorch 1.10.2/CUDA 10.2 to PyTorch 2.6.0/CUDA 11.8 has the largest effect: the training time more than doubles (+109–139% depending on deployment). For inference, the upgrade nearly halves the time on bare metal (∼49% faster) but increases the execution time in containers (∼22% slower).

- Container parity and possible advantage. When controlling for version, containers stay within roughly 10% of bare metal for training (−9.7% to +3.2%) and range from parity (2.6.0) to large gains (1.10.2) for inference. There is no systematic container-induced slowdown or resource penalty.

- The fastest vs. leanest configurations. The lowest observed times for both training and inference occur with the container running PyTorch 1.10.2/CUDA 10.2. If minimizing host RAM is the priority, PyTorch 2.6.0 (either deployment) is preferable; under 2.6.0, inference times are at parity between deployments.

- CPU vs. GPU bottlenecks. The marked slowdown in training under 2.6.0 coincides with lower average CPU utilization (down to ∼29%), which points to GPU-side kernel behavior, synchronization, or possibly library-level factors rather than host CPU saturation.
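The runtime percentages quoted in this discussion can be recomputed from the measured execution times. The sketch below does so for both comparisons (container vs. bare metal at a fixed version, and newer vs. older framework at a fixed deployment); the `pct_change` helper and the dictionary layout are ours, while the times in seconds are the reported measurements.

```python
def pct_change(new, old):
    """Relative change of new with respect to old, in percent."""
    return 100 * (new - old) / old


# Execution times in seconds, keyed by (deployment, PyTorch version).
times = {
    "training": {
        ("bare", "1.10.2"): 841.88, ("bare", "2.6.0"): 1757.07,
        ("container", "1.10.2"): 760.04, ("container", "2.6.0"): 1812.96,
    },
    "inference": {
        ("bare", "1.10.2"): 1326.15, ("bare", "2.6.0"): 680.29,
        ("container", "1.10.2"): 557.08, ("container", "2.6.0"): 680.33,
    },
}

container_vs_bare = {}
upgrade_effect = {}
for phase, t in times.items():
    for version in ("1.10.2", "2.6.0"):
        # Negative values mean the container is faster than bare metal.
        container_vs_bare[(phase, version)] = pct_change(
            t[("container", version)], t[("bare", version)]
        )
    for deployment in ("bare", "container"):
        # Positive values mean the newer framework is slower.
        upgrade_effect[(phase, deployment)] = pct_change(
            t[(deployment, "2.6.0")], t[(deployment, "1.10.2")]
        )

for key, value in {**container_vs_bare, **upgrade_effect}.items():
    print(key, f"{value:+.1f}%")
```

Signs follow the convention that a negative change is a speedup, so the container gain for inference under PyTorch 1.10.2 appears as a negative value and the training slowdown after the upgrade as a positive one.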

6.2. Theoretical Scalability

6.3. Limitations of the Study

7. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| SIEM | Security information and event management |
| LSTM | Long short-term memory |
| IDS | Intrusion detection system |
| IPS | Intrusion prevention system |
| CI | Continuous integration |
| CD | Continuous delivery/deployment |
| ML | Machine learning |
| DVC | Data version control |
| EDR | Early detection rate |
| RAM | Random access memory |
| GPU | Graphics processing unit |
| CPU | Central processing unit |
| CUDA | Compute unified device architecture |
| DAG | Directed acyclic graph |
| OS | Operating system |

Appendix A

| Feature | MLflow | Kubeflow | ClearML | Red Hat OpenShift |
|---|---|---|---|---|
| Software Requirements | Python 3.6+, optional database | Kubernetes v1.14+, Docker, Linux | Python 3.6+, Docker (for server deployment), MongoDB, Elasticsearch, Redis (come preinstalled in ClearML Server Docker images) | Kubernetes platform; RHEL/CentOS/Fedora CoreOS; Docker/CRI-O runtime |
| Core Components | Tracking, Model Registry, Projects, Models | Pipelines, Notebooks, Training Operators, KServe, Katib, Metadata | Experiment Manager, MLOps/LLMOps Orchestration, Data Management, Model Serving | Container orchestration, CI/CD, DevOps integration, OpenShift AI |
| Architecture | Modular components with Python APIs and UI interfaces | Kubernetes-based microservice architecture | Three-layer solution with Infrastructure Control, AI Development Center, and GenAI App Engine | Kubernetes-based container platform with enterprise enhancements |

References

1. Ralbovský, A.; Kotuliak, I. Early Detection of Malicious Activity in Log Event Sequences Using Deep Learning. In Proceedings of the 2025 37th Conference of Open Innovations Association (FRUCT), Narvik, Norway, 14–16 May 2025; pp. 246–253.

2. Kreuzberger, D.; Kühl, N.; Hirschl, S. Machine Learning Operations (MLOps): Overview, Definition, and Architecture. IEEE Access 2023, 11, 31866–31879.

3. Khan, A.; Mohamed, A. Optimizing Cybersecurity Education: A Comparative Study of On-Premises and Cloud-Based Lab Environments Using AWS EC2. Computers 2025, 14, 297.

4. Amazon. What Is MLOps? - Machine Learning Operations Explained - AWS. Available online: https://aws.amazon.com/what-is/mlops/ (accessed on 10 March 2025).

5. Sculley, D.; Holt, G.; Golovin, D.; Davydov, E.; Phillips, T.; Ebner, D.; Chaudhary, V.; Young, M.; Dennison, D. Hidden Technical Debt in Machine Learning Systems. Adv. Neural Inf. Process. Syst. 2015, 28, 2494–2502.

6. Horvath, K.; Abid, M.R.; Merino, T.; Zimmerman, R.; Peker, Y.; Khan, S. Cloud-Based Infrastructure and DevOps for Energy Fault Detection in Smart Buildings. Computers 2024, 13, 23.

7. Subramanya, R.; Sierla, S.; Vyatkin, V. From DevOps to MLOps: Overview and Application to Electricity Market Forecasting. Appl. Sci. 2022, 12, 9851.

8. Skogström, H. What Is The Difference Between DevOps And MLOps? Available online: https://valohai.com/blog/difference-between-devops-and-mlops/ (accessed on 10 March 2025).

9. Bayram, F.; Ahmed, B.S. Towards Trustworthy Machine Learning in Production: An Overview of the Robustness in MLOps Approach. ACM Comput. Surv. 2025, 57, 1–35.

10. Key Requirements for an MLOps Foundation. Available online: https://cloud.google.com/blog/products/ai-machine-learning/key-requirements-for-an-mlops-foundation (accessed on 10 March 2025).

11. Symeonidis, G.; Nerantzis, E.; Kazakis, A.; Papakostas, G.A. MLOps - Definitions, Tools and Challenges. In Proceedings of the 2022 IEEE 12th Annual Computing and Communication Workshop and Conference (CCWC), Virtual, 26–29 January 2022; pp. 453–460.

12. Hewage, N.; Meedeniya, D. Machine Learning Operations: A Survey on MLOps Tool Support. arXiv 2022, arXiv:2202.10169.

13. Amou Najafabadi, F.; Bogner, J.; Gerostathopoulos, I.; Lago, P. An Analysis of MLOps Architectures: A Systematic Mapping Study. In Proceedings of the Software Architecture: 18th European Conference, ECSA 2024, Luxembourg, 3–6 September 2024; pp. 69–85.

14. Chen, Z.; Liu, J.; Gu, W.; Su, Y.; Lyu, M.R. Experience Report: Deep Learning-based System Log Analysis for Anomaly Detection. arXiv 2022, arXiv:2107.05908.

15. Partida, D. Integrating Machine Learning Techniques for Real-Time Industrial Threat Detection. Available online: https://gca.isa.org/blog/integrating-machine-learning-techniques-for-real-time-industrial-threat-detection (accessed on 10 March 2025).

16. Togbe, M.U.; Chabchoub, Y.; Boly, A.; Barry, M.; Chiky, R.; Bahri, M. Anomalies Detection Using Isolation in Concept-Drifting Data Streams. Computers 2021, 10, 13.

17. Markevych, M.; Dawson, M. A Review of Enhancing Intrusion Detection Systems for Cybersecurity Using Artificial Intelligence (AI). Int. Conf. Knowl. Based Organ. 2023, 29, 2023.

18. Du, M.; Li, F.; Zheng, G.; Srikumar, V. DeepLog: Anomaly Detection and Diagnosis from System Logs through Deep Learning. In Proceedings of the 2017 ACM SIGSAC Conference on Computer and Communications Security, New York, NY, USA, 30 October–3 November 2017; pp. 1285–1298.

19. Meng, W.; Liu, Y.; Zhu, Y.; Zhang, S.; Pei, D.; Liu, Y.; Chen, Y.; Zhang, R.; Tao, S.; Sun, P.; et al. Loganomaly: Unsupervised detection of sequential and quantitative anomalies in unstructured logs. In Proceedings of the 28th International Joint Conference on Artificial Intelligence, Macao, China, 10–16 August 2019; pp. 4739–4745.

20. Yang, L.; Chen, J.; Wang, Z.; Wang, W.; Jiang, J.; Dong, X.; Zhang, W. Semi-Supervised Log-Based Anomaly Detection via Probabilistic Label Estimation. In Proceedings of the 2021 IEEE/ACM 43rd International Conference on Software Engineering (ICSE), Madrid, Spain, 22–30 May 2021; pp. 1448–1460.

21. Kubeflow Documentation. Available online: https://www.kubeflow.org/docs/ (accessed on 10 March 2025).

22. ClearML Documentation. Available online: https://clear.ml/docs/latest/docs/ (accessed on 10 March 2025).

23. MLflow Documentation. Available online: https://mlflow.org/docs/latest/index.html (accessed on 13 March 2025).

24. Boyang, Y. MLOPS in a multicloud environment: Typical Network Topology. arXiv 2024, arXiv:2407.20494.

25. Scanning AI Production Pipeline - Neural Guard. Available online: https://clear.ml/blog/scanning-ai-production-pipeline-neural-guard (accessed on 10 March 2025).

26. ClearML. ClearML Agent Documentation. Available online: https://github.com/allegroai/clearml-docs/blob/main/docs/clearml_agent.md (accessed on 10 March 2025).

27. ClearML GitHub Repository. Available online: https://github.com/clearml/clearml (accessed on 10 March 2025).

28. ClearML Agents and Queues Documentation. Available online: https://clear.ml/docs/latest/docs/fundamentals/agents_and_queues (accessed on 10 March 2025).

29. Research Computing Services: Parallel Computing - Speedup Limitations. Available online: https://researchcomputingservices.github.io/parallel-computing/02-speedup-limitations/ (accessed on 10 March 2025).

30. Chen, X.; Wu, Y.; Xu, L.; Xue, Y.; Li, J. Para-Snort: A Multi-Thread on Multi-Core IA Platform. In Proceedings of the IASTED International Conference on Parallel and Distributed Computing and Systems (PDCS), Cambridge, MA, USA, 2–4 November 2009.

31. Bak, C.D.; Han, S.J. Efficient Scaling on GPU for Federated Learning in Kubernetes: A Reinforcement Learning Approach. In Proceedings of the 2024 International Technical Conference on Circuits/Systems, Computers, and Communications (ITC-CSCC), Okinawa, Japan, 2–5 July 2024; pp. 1–6.

| Feature | MLflow | Kubeflow | ClearML | Red Hat OpenShift |
|---|---|---|---|---|
| Hardware Requirements | Minimal for local use, scales with workload | Master Node: 4 vCPUs, 16 GB RAM per node; GPUs for GPU workloads | Server: 4 CPUs, 8 GB RAM, 100 GB storage; Workers: depends on workloads (CPU/GPU as needed) | Master Nodes: 4 vCPUs, 16 GB RAM; Worker Nodes: 2 vCPUs, 8 GB RAM per node |
| Experiment Tracking | Comprehensive tracking with visualization UI | Basic support through Kubeflow Metadata | Auto-logging of parameters, metrics, artifacts, code, and environment | Limited native capabilities |
| Model Registry | Centralized model store with versioning, stage transitions, and lineage tracking | Limited native capabilities | Integrated model registry with comparison tools and visualization | Relies on integrated tools |
| Orchestration/Pipelines | Limited orchestration capabilities and basic workflow support | Powerful Kubernetes-based workflow and end-to-end pipeline orchestration | GPU/CPU scheduling with auto-scaling for cloud and on-prem, advanced pipeline automation with dependency management | Strong orchestration and CI/CD through Kubernetes and Tekton |
| Data Management | Limited; relies on external tools | Limited; relies on external tools | Built-in data versioning, lineage tracking, and pipeline automation | Integrates with various data solutions |
| Model Serving | Built-in server with integrations for Seldon Core and KServe | KServe (formerly KFServing) | Integrated model serving with monitoring | Supports various serving frameworks |
| Ease of Use | Simple API but complex self-hosting | Steep learning curve (requires Kubernetes knowledge) | User-friendly interface with minimal setup | Complex enterprise platform |
| Scalability | Limited for large experiment volumes | Highly scalable with Kubernetes | Highly scalable with cloud support | Enterprise-level scalability |
| Community Support | Active open-source community | Strong community backed by Google | Growing community with active engagement | Commercial support from Red Hat |
| Licensing and Cost | Open-source; no licensing cost | Open-source; no licensing cost | Open-source with enterprise option | Open-source core with paid enterprise features |
| Deployment Options | Self-hosted or managed via Databricks | On-premise or cloud Kubernetes | Self-hosted, Kubernetes, cloud, or SaaS | On-premise or cloud deployments |
| Monitoring and Logging | Basic monitoring capabilities | Kubernetes-based monitoring | Detailed experiment and resource monitoring | Advanced enterprise monitoring |
| Limitations | Lacks user management and advanced orchestration | Complex setup and maintenance | Tightly coupled components | Too complex for small teams |

| Component | Orchestration Server (HP ProLiant DL380 Gen9) | GPU Worker (Dell OptiPlex 7071) |
|---|---|---|
| CPU | 2× Intel Xeon E5-2650 v3 @ 2.30 GHz (20 cores/40 threads); scaling: 44% (1200–3000 MHz) | Intel Core i7-9700 @ 3.00 GHz (8 cores/8 threads); scaling: 83% (800–4700 MHz) |
| RAM | 134.25 GB DDR4 | 64 GB DDR4 |
| GPU | Matrox MGA G200EH (rev 01) | Intel UHD Graphics 630; NVIDIA GeForce GTX 1660, 6 GB VRAM |
| Disk | 1.68 TB total | 3.85 TB total |
| OS | Linux Ubuntu 24.04 | Linux Ubuntu 24.04 |

| Training metric | Bare Metal (PyTorch 1.10.2, CUDA 10.2) | Bare Metal (PyTorch 2.6.0, CUDA 11.8) | Container (PyTorch 1.10.2, CUDA 10.2) | Container (PyTorch 2.6.0, CUDA 11.8) |
|---|---|---|---|---|
| Time (s) | 841.88 | 1757.07 | 760.04 | 1812.96 |
| CPU avg (%) | 49.45 | 29.19 | 50.93 | 29.87 |
| CPU max (%) | 89.9 | 72.20 | 93.80 | 71.3 |
| RAM (MB) | 4008.44 | 1509.77 | 4011.79 | 1507.77 |
| GPU Peak Memory (MB) | 394.32 | 522.63 | 394.32 | 522.6 |

| Inference metric | Bare Metal (PyTorch 1.10.2, CUDA 10.2) | Bare Metal (PyTorch 2.6.0, CUDA 11.8) | Container (PyTorch 1.10.2, CUDA 10.2) | Container (PyTorch 2.6.0, CUDA 11.8) |
|---|---|---|---|---|
| Time (s) | 1326.15 | 680.29 | 557.08 | 680.33 |
| CPU avg (%) | 12.90 | 12.96 | 13.01 | 13.13 |
| CPU max (%) | 53.80 | 44.30 | 50.00 | 37.5 |
| RAM (MB) | 2994.61 | 219.81 | 2972.11 | 215.61 |
| GPU Peak Memory (MB) | 4.13 | 44.29 | 4.13 | 44.29 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ralbovský, A.; Kotuliak, I.; Sobolev, D. Evaluating Deployment of Deep Learning Model for Early Cyberthreat Detection in On-Premise Scenario Using Machine Learning Operations Framework. Computers 2025, 14, 506. https://doi.org/10.3390/computers14120506
