6DoF Object Tracking based on 3D Scans for Augmented Reality Remote Live Support

Abstract

1. Introduction

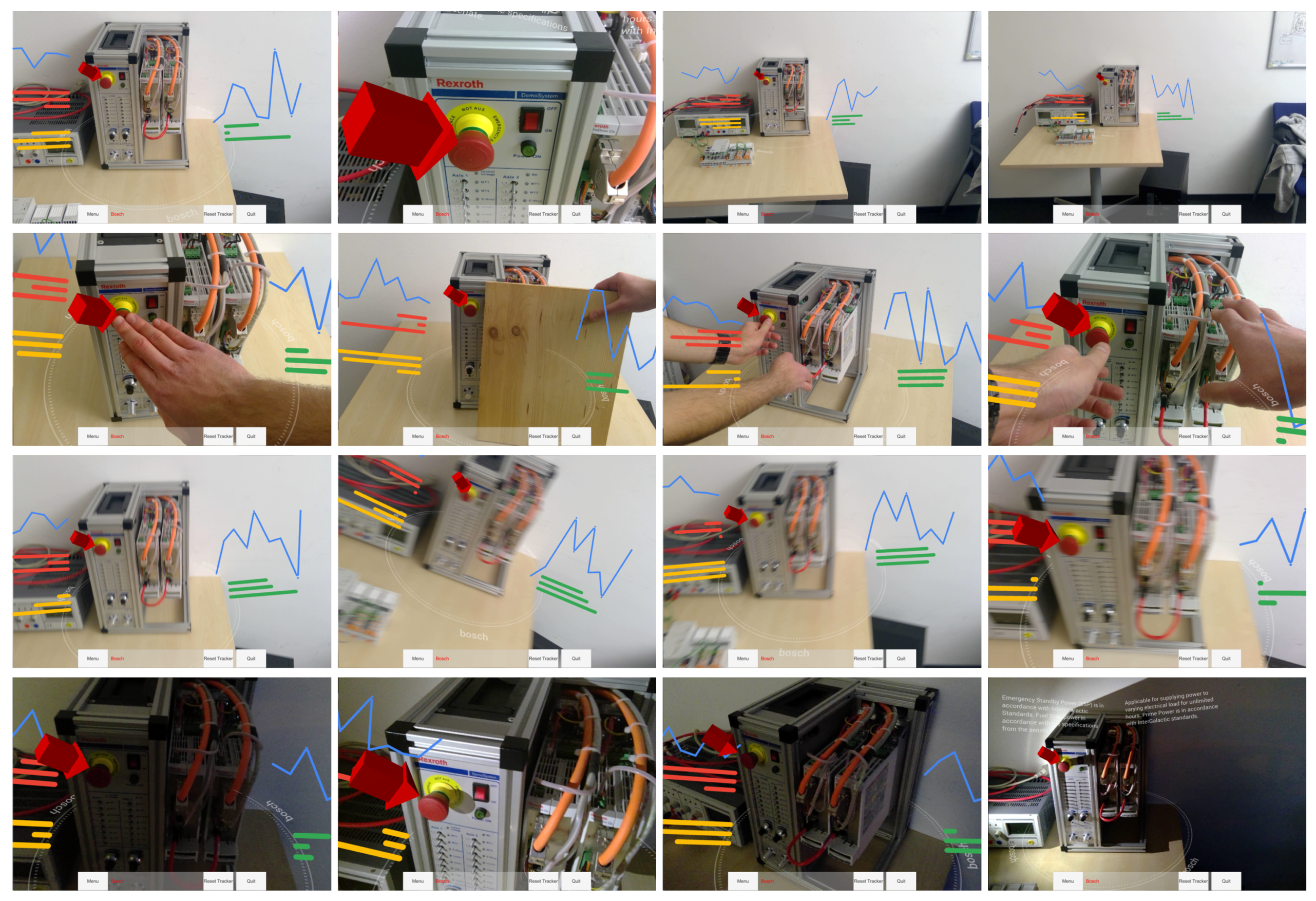

- A robust 3D object tracking framework based on textured 3D scans of the objects

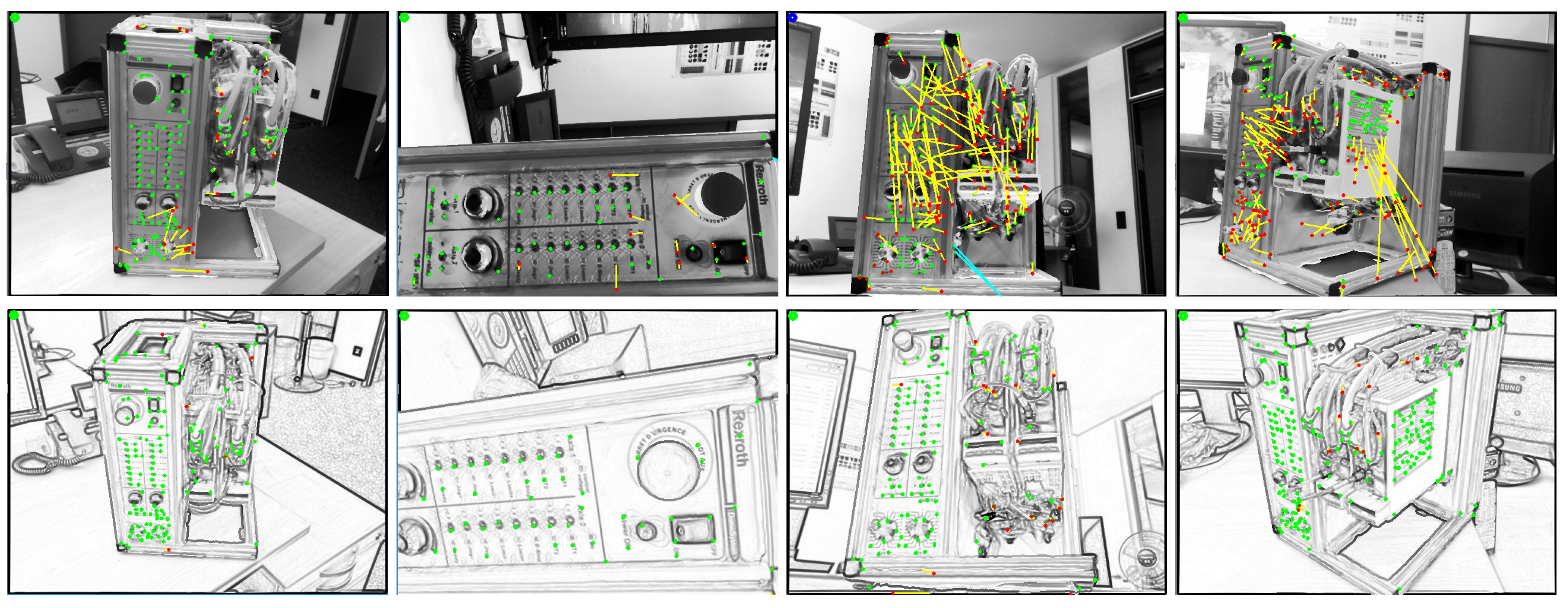

- A fast and robust multi-threaded initialization and reinitialization scheme using ORB features

- Frame-to-frame tracking that combines tracking between real camera images with tracking between rendered and real images for additional robustness

- Use of the pencil filter to improve the illumination invariance of the tracking

- A Remote Live Support architecture with 3D registration of the remote expert's annotations
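The contributions above credit the pencil filter with improving illumination invariance. The exact filter definition is not reproduced here, so the following is only a minimal sketch under an assumed formulation: a pencil-sketch style image obtained by dividing a non-negative grayscale image by a dilated (local-maximum) copy of itself. The helper names `dilate` and `pencil_filter` and the `radius` parameter are hypothetical. The point the sketch illustrates is that a ratio of this kind cancels a global multiplicative brightness change, because grayscale dilation commutes with scaling by a positive constant.

```python
from typing import List

# Hypothetical image type: a row-major grid of non-negative intensities.
Image = List[List[float]]


def dilate(img: Image, radius: int = 1) -> Image:
    """Grayscale dilation: each pixel becomes the max over a (2r+1)x(2r+1) window."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            out[y][x] = max(
                img[yy][xx]
                for yy in range(max(0, y - radius), min(h, y + radius + 1))
                for xx in range(max(0, x - radius), min(w, x + radius + 1))
            )
    return out


def pencil_filter(gray: Image, radius: int = 1) -> Image:
    """Assumed pencil-style filter: divide each pixel by its local maximum.

    Results lie in [0, 1]: 1.0 where a pixel is the brightest in its
    neighbourhood, darker near edges next to brighter pixels. Scaling the
    whole input by a positive factor leaves the result unchanged.
    """
    dil = dilate(gray, radius)
    return [
        # d >= g >= 0, so d == 0 only in an all-black window; treat it as flat (1.0).
        [g / d if d > 0 else 1.0 for g, d in zip(row_g, row_d)]
        for row_g, row_d in zip(gray, dil)
    ]


if __name__ == "__main__":
    img = [[10, 10, 10], [10, 50, 10], [10, 10, 10]]
    brighter = [[2.0 * v for v in row] for row in img]
    print(pencil_filter(img))
    print(pencil_filter(brighter))  # same values: the global gain cancels out
```

In this toy 3x3 example the bright centre pixel maps to 1.0 and every neighbour maps to 10/50 = 0.2, with or without the doubled brightness. Real illumination changes are rarely a pure global gain, so this shows only why a ratio-based filter helps, not how much it helps in practice.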

2. 3D Object Tracking

2.1. Problem Formulation

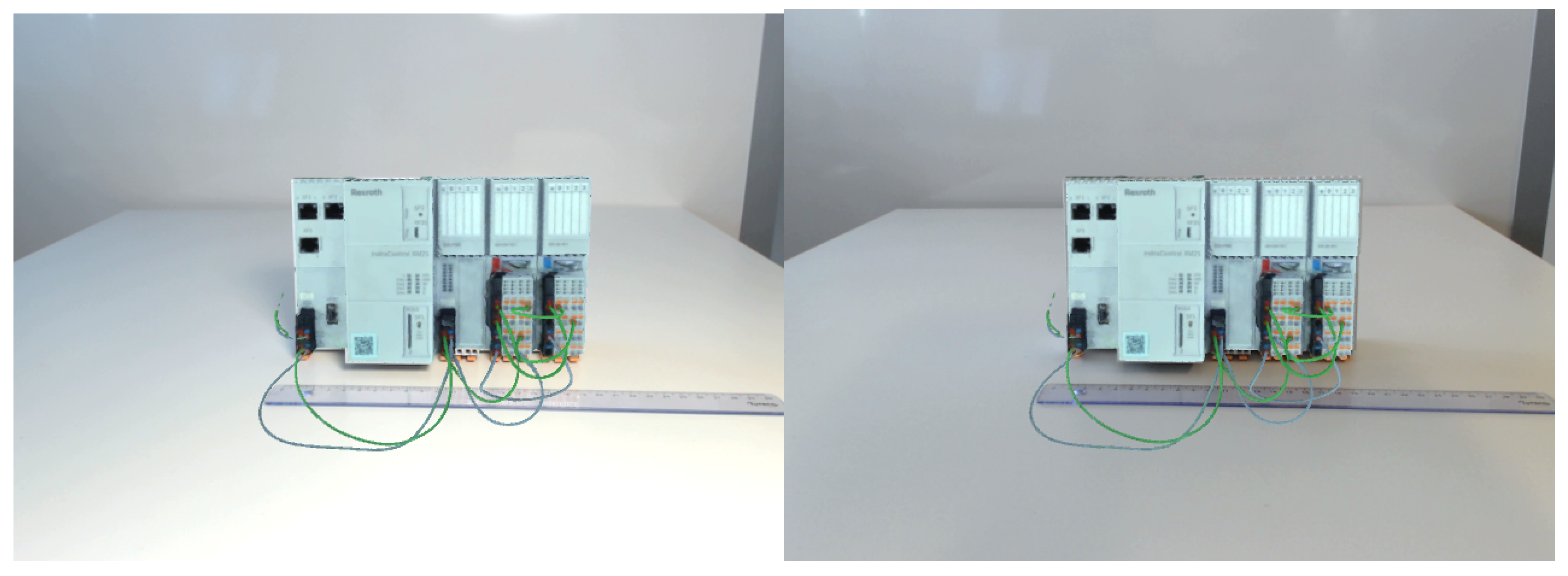

2.2. Object Registration Procedure

2.2.1. 3D Scanning

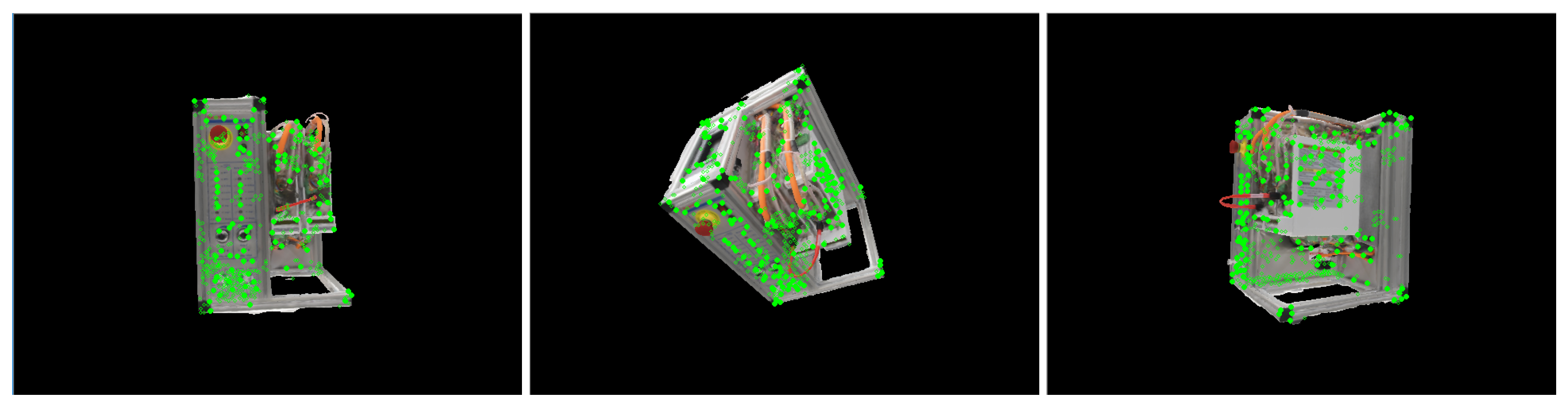

2.2.2. Learning Features for Tracking

2.3. Object Tracking Algorithm

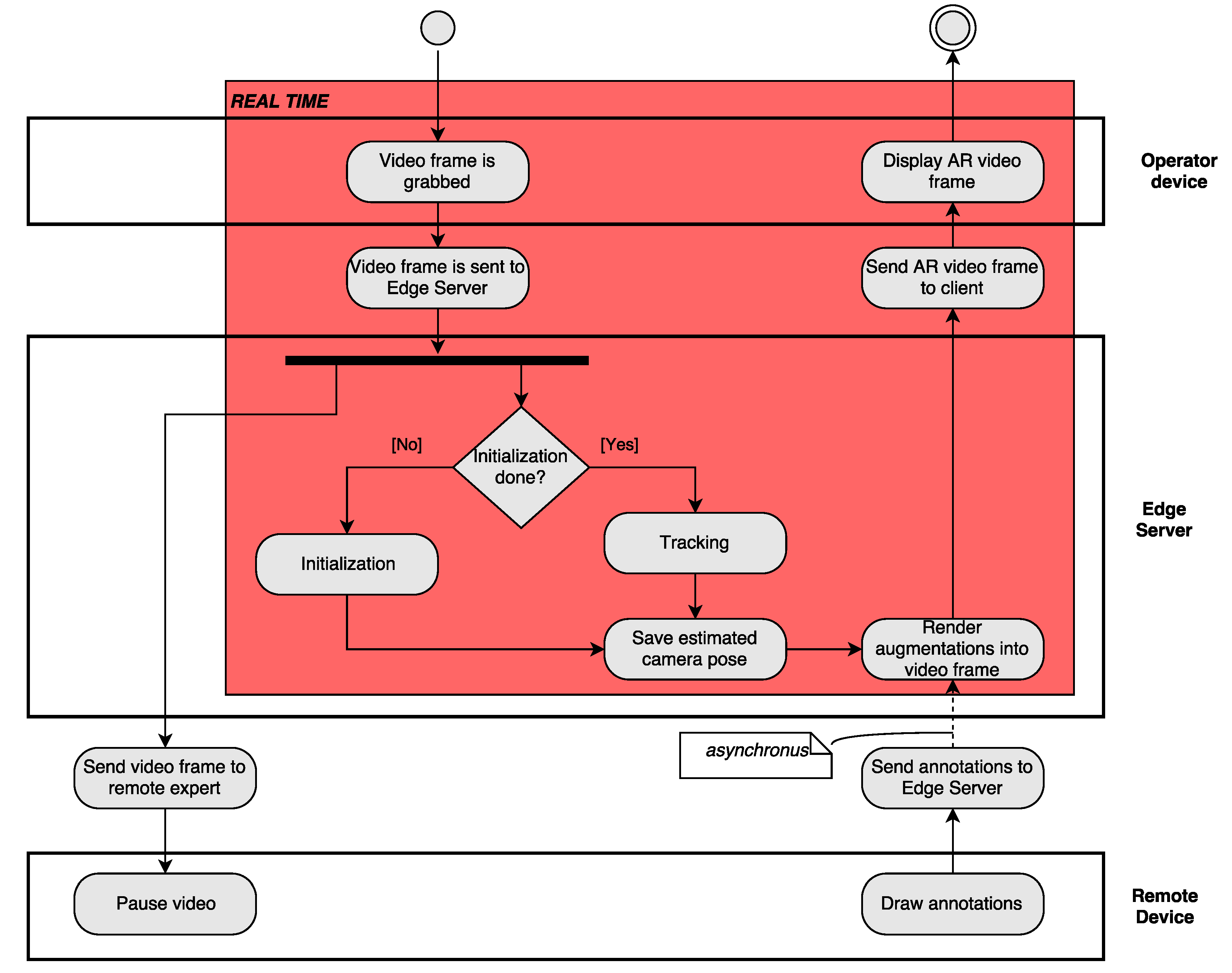

2.3.1. Algorithm Outline

2.3.2. Frame to Frame Tracking

2.3.3. ORB Initializer and Reinitializer

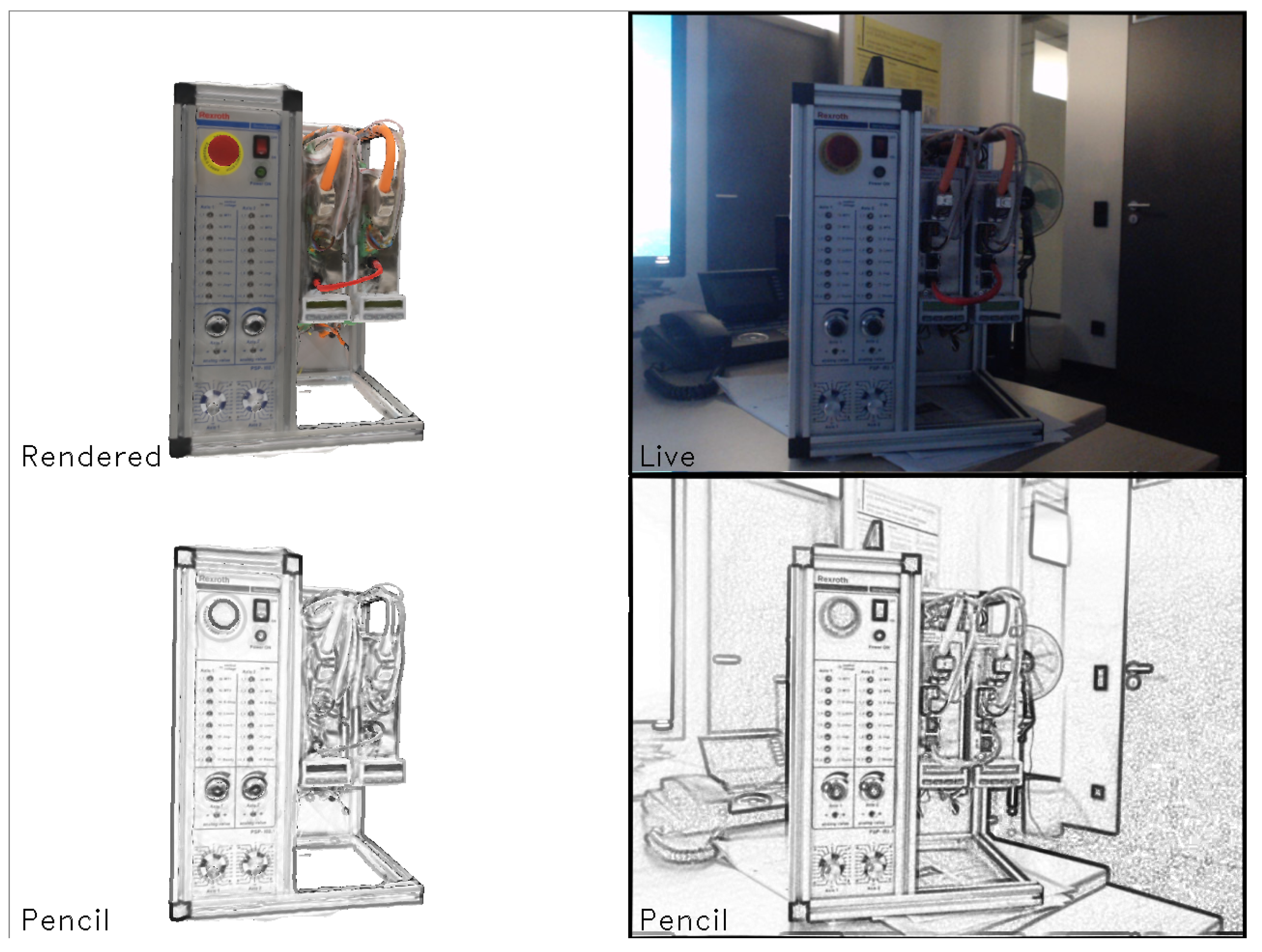

2.3.4. Pencil Filter

3. Remote Live Support Realized with Mobile Edge Computing

3.1. Mobile Edge Computing

3.2. Relevance of MEC for AR

3.3. Remote Live Support System Architecture

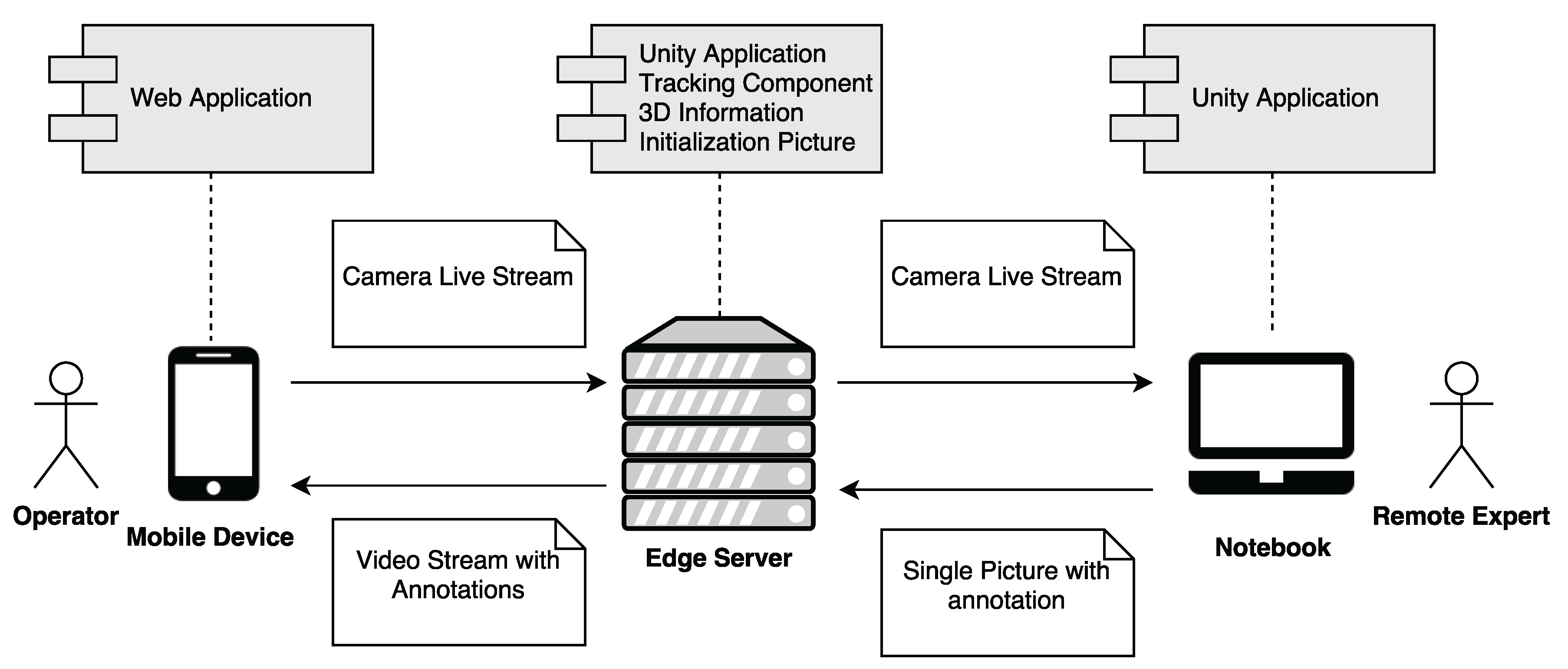

3.3.1. Overview

3.3.2. User Side—Mobile Device

3.3.3. Server Side—Edge Cloud

3.3.4. Remote Expert

3.3.5. 3D Registration of Annotations

4. Evaluation

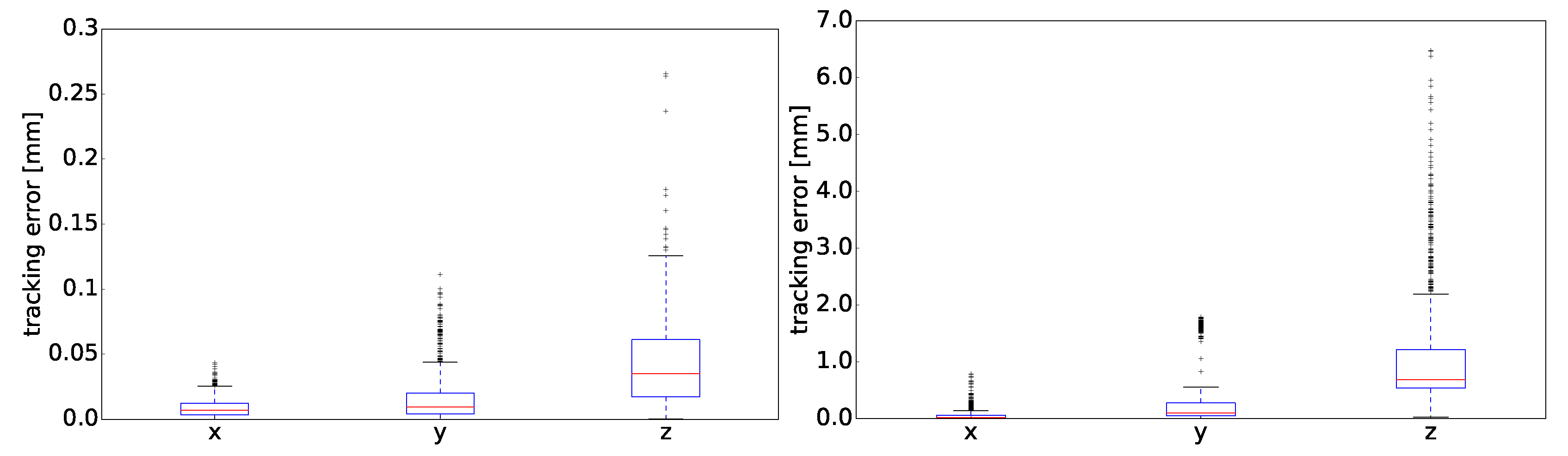

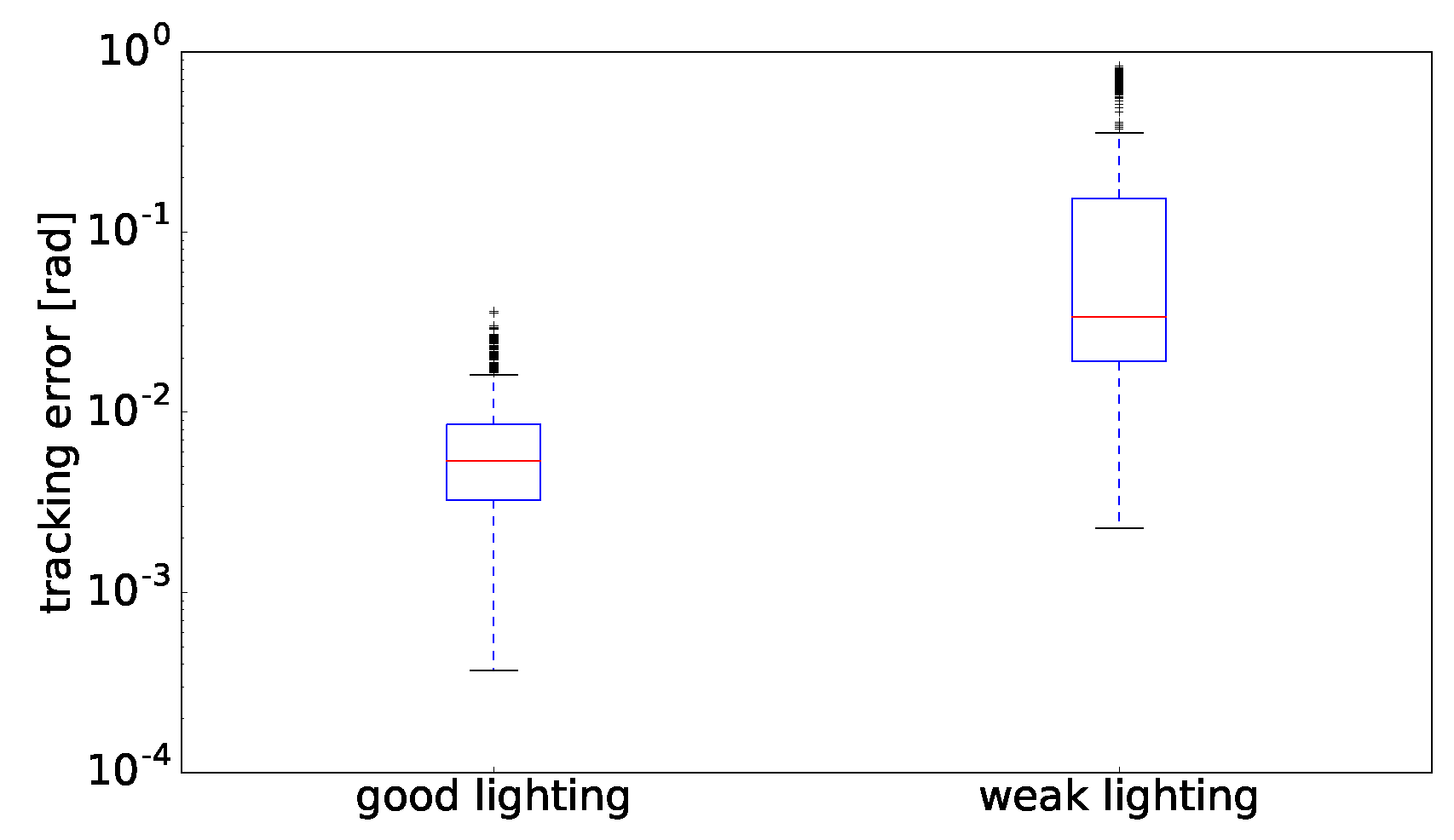

4.1. Tracking Quality

4.2. Runtime Measurements

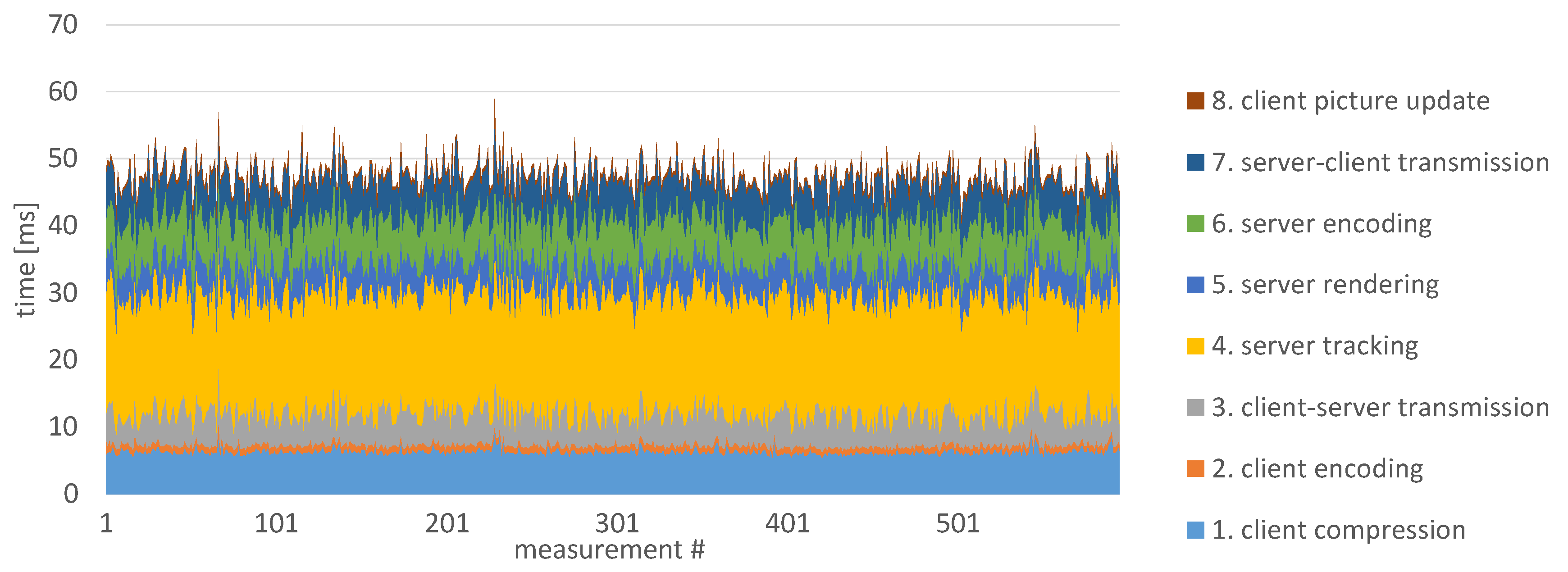

4.2.1. Offloading Delay

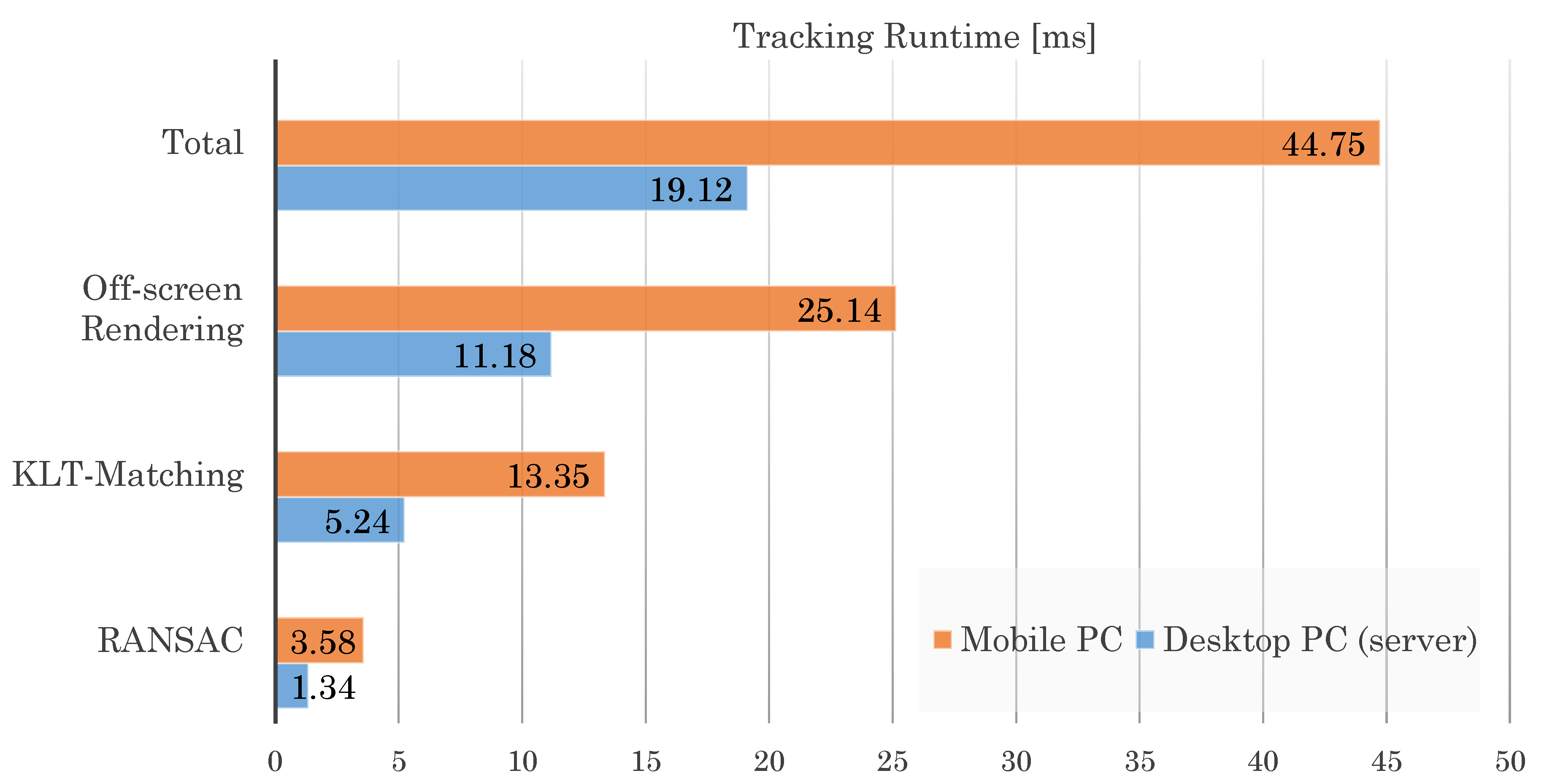

4.2.2. Server vs. Mobile Device Processing Time

5. Discussion

Acknowledgments

Author Contributions

Conflicts of Interest


© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Rambach, J.; Pagani, A.; Schneider, M.; Artemenko, O.; Stricker, D. 6DoF Object Tracking based on 3D Scans for Augmented Reality Remote Live Support. Computers 2018, 7, 6. https://doi.org/10.3390/computers7010006
