A Survey of 2D Face Recognition Techniques

Abstract

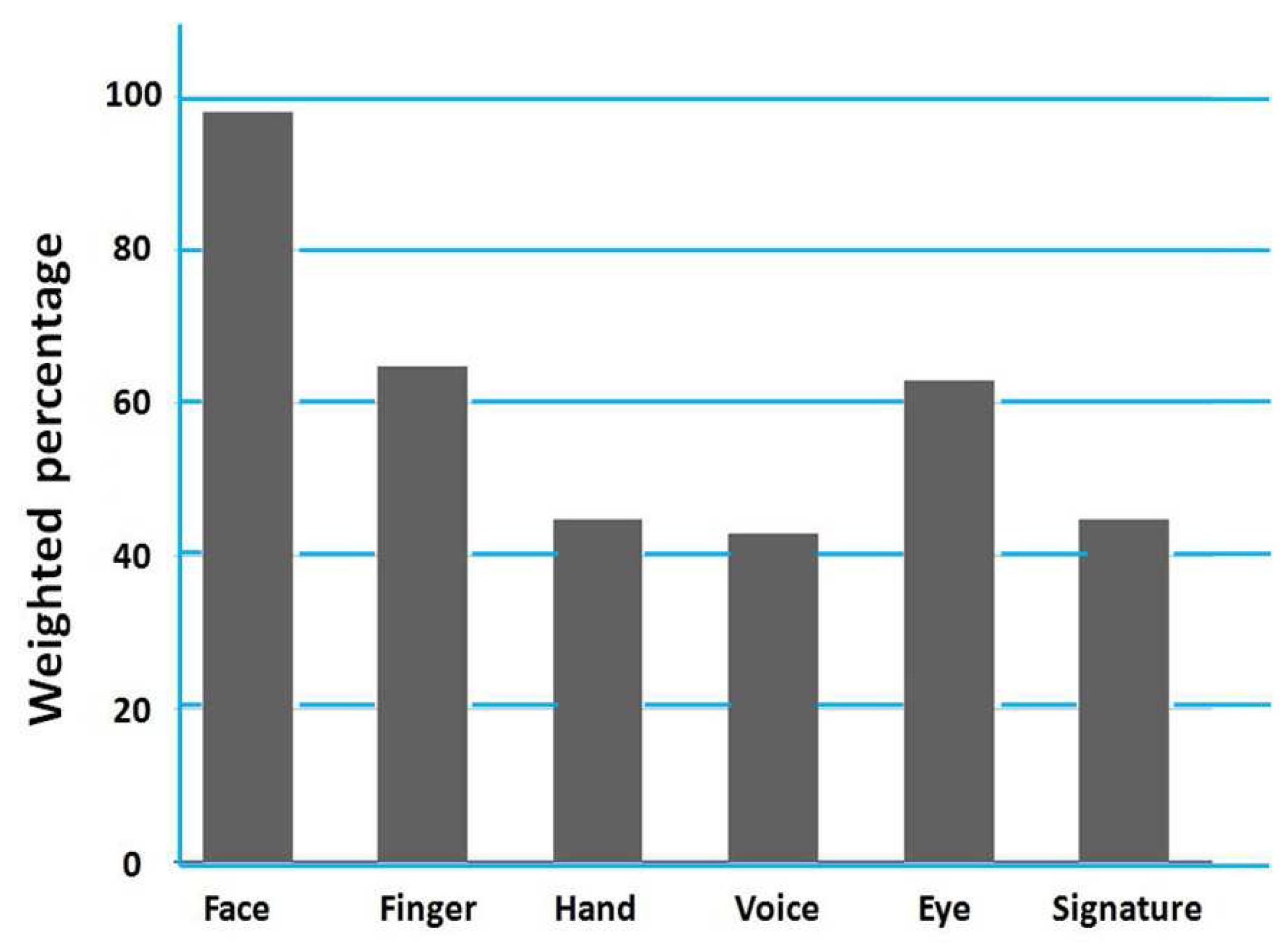

1. Introduction

- Short processing time: Face recognition is one of the fastest biometric modalities. It is suitable for real-time applications because a person only has to go through the biometric system once.

- High security: Consider a company that checks the identity of people at its entrance. Such a biometric system not only lets employees record their attendance, but also allows any visitor to be enrolled in the system. As a result, the system denies access to individuals who are not enrolled.

- Automatic system: This system works automatically without being controlled by a person.

- Easy adaptation: It can be easily used in a company. It only requires the installation of the capturing system (camera).

- High success rate: Face recognition systems have achieved high recognition rates, especially since the emergence of three-dimensional technology, which makes them very difficult to fool. Consequently, users have confidence in the system.

- Acceptance in public places: Because face capture is well accepted in public places, very large databases can be collected, which in turn improves recognition performance.

Face recognition also faces several challenges:

- Lighting variations: Variations due to lighting sometimes cause greater differences between images than a change of identity does. Algorithms should therefore take lighting variations into account.

- Facial expression changes: Facial expressions, such as smiling, anger, or closing the eyes or mouth, modify the geometry and texture of the face and therefore reduce the accuracy of facial recognition. Local recognition methods based on feature histograms have been used successfully to overcome problems related to changes in facial expression.

- Age change: The texture and shape of the human face vary with age. The shape of the skull and the texture of the skin change from childhood to adolescence, which is a problem for face recognition because the images used in passports and identity cards are not updated frequently.

- Change of scale/resolution: Scale change is one of the challenges of facial recognition systems. Take the example of a surveillance system: it should work well at multiple scales because subjects are at different distances from the camera. For instance, a subject located 2 m from the camera undergoes a 10× scale change relative to one located 20 m away. Face recognition algorithms therefore usually use interpolation methods to resize images to a standard scale.

- Pose change: Pose variation mainly refers to out-of-plane rotation. It is a challenge for face recognition systems because out-of-plane rotation changes which parts of the face are visible in the image. The differences between images caused by pose changes are sometimes larger than the differences between persons. In applications such as passport control, images are required to conform to the standards of an existing database. However, in uncontrolled environments, such as non-intrusive surveillance, a subject may look up, down, left or right, causing an out-of-plane rotation. Local approaches, such as elastic bunch graph matching (EBGM) and local binary patterns (LBP), are more robust to pose variations than holistic approaches. However, their tolerance to pose changes is limited to small rotations.

- Presence of occlusions: Accessories (sunglasses, scarves, hats, etc.) that partially cover the face, as well as the individual's own movements, such as a hand passing in front of the face, can create occlusions in which part of the information is lost or replaced. Methods based on local regions have been used successfully in the case of partial occlusion.

- Image falsification: Some facial recognition systems can easily be fooled by photographs of faces. For example, face-based unlocking of a mobile device can be spoofed with a picture of the owner's face, which may be available on the Internet or on social networks.

- Noise: Noise is introduced by the camera sensor during image capture. Given the variety of cameras in use and the quality of their sensors, this noise is unavoidable and degrades face recognition.

- Blur effect: Motion blur and atmospheric blur are the main sources of blur in face images. Blur can be caused either by the movement of people (as in surveillance) or by relative motion between the camera and the captured subject, as is the case in the maritime environment.

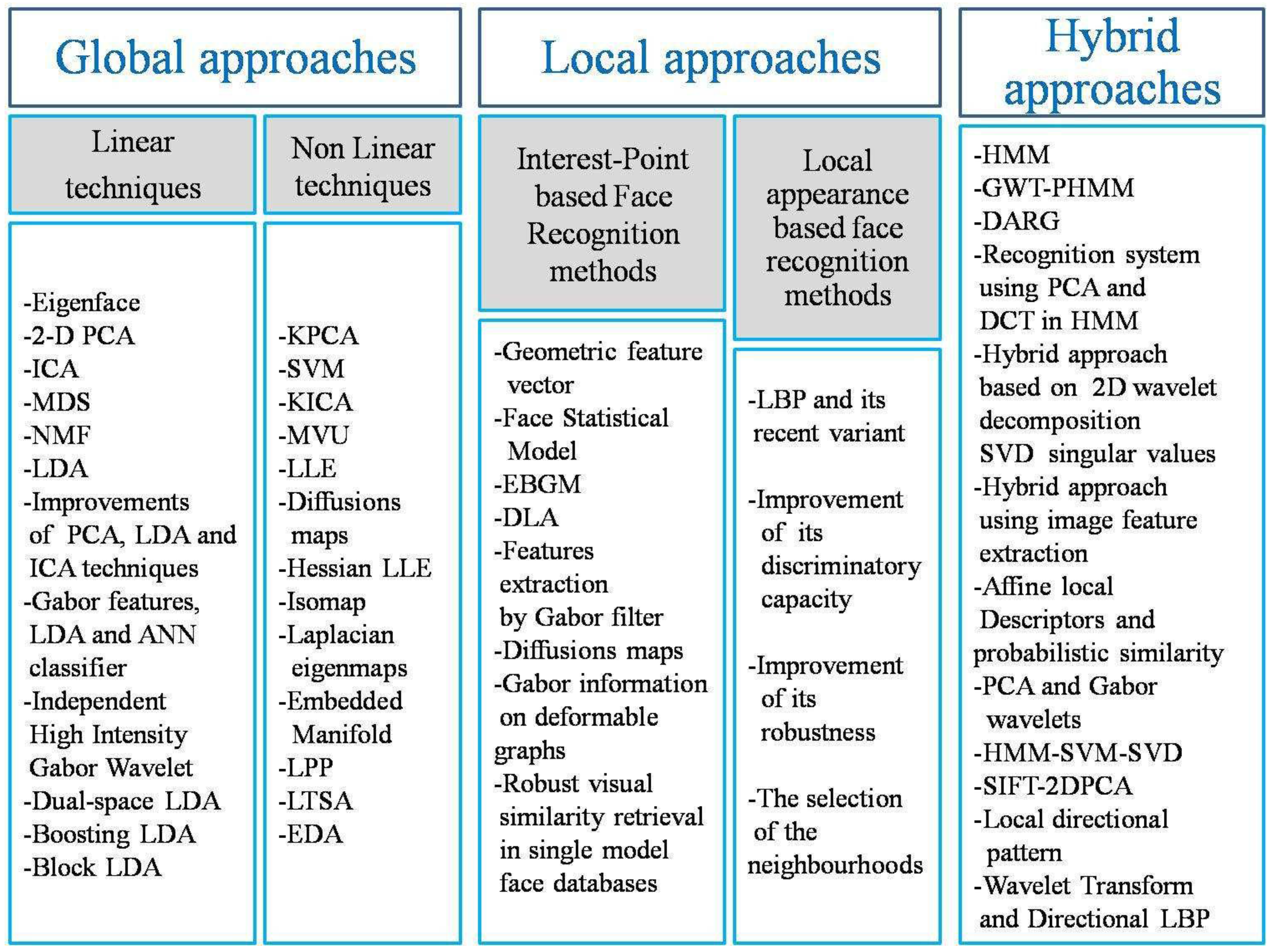

2. 2D Face Recognition Survey

2.1. Global Approaches

2.1.1. Linear Techniques

- Eigenface [11]: This is a very popular approach to face recognition. It is based on principal component analysis (PCA), which derives a set of “eigenfaces” from the training images. Its principle is the following: given a set of sample face images, it aims to find the principal components of these faces, i.e., the eigenvectors of the covariance matrix formed by the set of sample images. Each face is then described by a linear combination of these eigenvectors. Figure 3 shows the eigenfaces constructed from the ORL database.

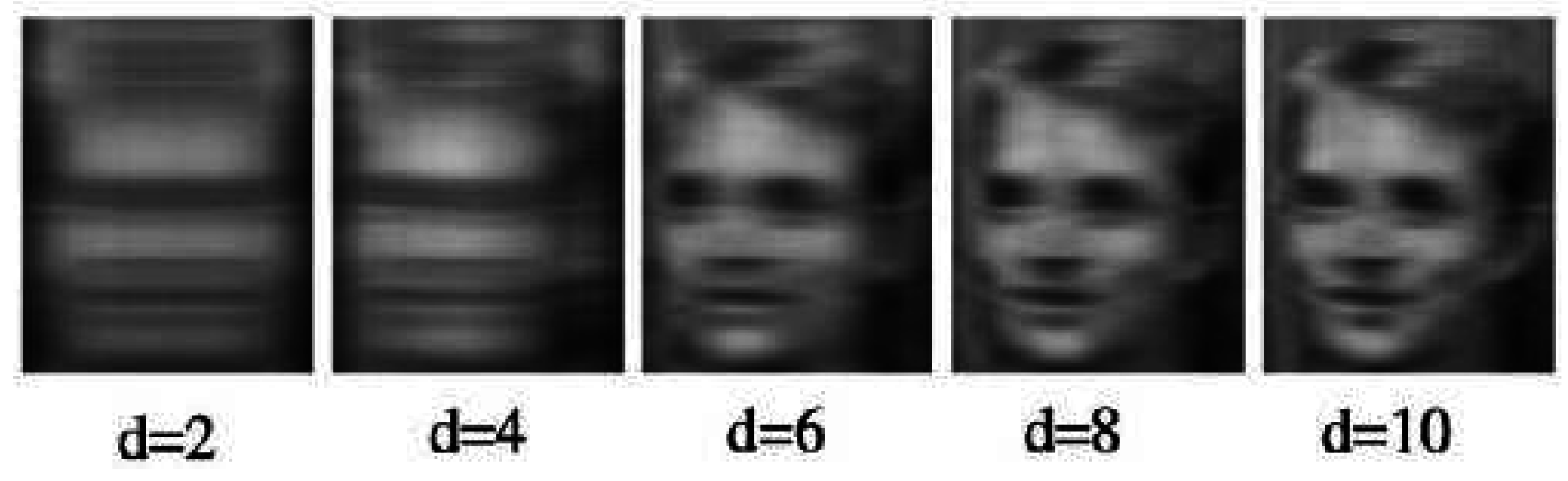

- 2D PCA (two-dimensional PCA) [13]: To avoid losing neighborhood information when an image is transformed into a vector, a two-dimensional PCA method (2D PCA) was proposed. This method works directly on image matrices rather than on vectors. Figure 4 shows five images reconstructed from an image of the ORL database by summing the sub-images obtained with the first d eigenvectors (d = 2, 4, 6, 8, 10).

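- Independent Component Analysis (ICA) [14]: This method was conceived primarily for signal processing. It consists of expressing a set of observed random variables $x_1, \dots, x_M$ as linear combinations of $N$ statistically independent random variables $s_1, \dots, s_N$:

$$x_i = \sum_{j=1}^{N} a_{ij}\, s_j, \qquad i = 1, \dots, M,$$

or, in matrix form:

$$\mathbf{x} = A\,\mathbf{s},$$

where $A = (a_{ij})$ is the mixing matrix.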

- Multidimensional scaling (MDS) [15]: This is another well-known linear dimensionality-reduction technique. Instead of preserving the variance of the data during projection, it strives to preserve the distances between all pairs of examples by seeking a linear transformation that minimizes an energy function. This minimization problem can be solved by eigenvalue decomposition. When the Euclidean distance is used, the outputs of MDS are the same as those of PCA: they are obtained by a rotation followed by a projection.

- Non-negative matrix factorization (NMF) [16]: NMF is another method that represents the face without using the notion of class. Like PCA, NMF treats the face as a linear combination of the basis vectors of the reduced space. The difference is that NMF allows no negative elements, either in the basis vectors or in the combination weights. As a result, some basis vectors obtained by PCA (eigenfaces) resemble distorted versions of the entire face, whereas those obtained by NMF are localized and better reflect parts of the face.

- Linear discriminant analysis (LDA) [17]: Other techniques are also built on linear decomposition, such as linear discriminant analysis (LDA). While PCA builds a subspace that optimally represents “only” the object “face”, LDA constructs a discriminant subspace that optimally distinguishes the faces of different people. LDA, also called “Fisher linear discriminant” analysis, is one of the most widely used approaches for face recognition. It uses a reduction criterion based on the separability of the data per class. In the Fisherfaces approach [17], LDA includes two stages: the original space is first reduced by PCA, and the vectors of the final projection space, called “Fisherfaces”, are then computed from the class-separability criterion in the reduced space. This preliminary reduction is needed because the within-class scatter matrix used by LDA is singular when the number of training images is small compared with the number of pixels. Comparative studies show that LDA-based methods usually give better results than PCA-based ones.

- Improvements of PCA, LDA and ICA techniques: Many efforts have been made to improve linear subspace-analysis techniques for face recognition. For example, the work in [18] improved PCA to deal with pose variation, and a probabilistic subspace was introduced to provide a more meaningful similarity measure in a probabilistic framework. Besides, [19] presented a combination of D-LDA (direct LDA) and F-LDA (fractional LDA), a variant of LDA in which weighting functions are used to avoid misclassifications caused by classes that are too close to each other. Finally, [20] proposed an approach based on the multilinear tensor decomposition of image ensembles to disentangle the several factors that affect the same face recognition system, such as lighting and pose.

- Independent high-intensity Gabor wavelet [21]: To improve face recognition, high-intensity feature vectors are extracted from the Gabor wavelet transform of frontal face images and combined with ICA [14]. Gabor wavelet features are recognized as one of the best representations for face recognition.

- Gabor features, LDA and ANN classifier [22]: This work adopts a methodology to improve the robustness of a face recognition system using two popular statistical modeling methods to represent a face image, PCA and LDA, which extract the discriminative features of a face. Face images are first pre-processed with Gabor wavelets to reduce variations due to pose and lighting. PCA and LDA then extract discriminative, low-dimensional feature vectors, which are used in the classification phase, where a back-propagation neural network (BPNN) serves as the classifier. The system was successfully tested on the ORL face database, which contains 400 frontal images of 40 subjects with varying lighting and facial expressions.

Many other linear techniques have also been used to compute feature vectors, including:

- Regularized discriminant analysis (RDA) [23].

- Regression LDA (RLDA) [24].

- Null-space LDA (NLDA) [25].

- Dual-space LDA [26].

- Generalized singular value decomposition [27].

- Boosting LDA [28].

- Discriminant local feature analysis [29].

- Block LDA [30].

- Enhanced Fisher linear discriminant (FLD) [31].

- Incremental LDA [32].

- Discriminative common vectors (DCV) [33].

- Bilinear discriminant analysis (BDA) [32].
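The eigenface principle described in the list above can be illustrated with a small, self-contained sketch. It assumes each face image has already been flattened into a list of pixel values and, for readability, builds the full covariance matrix and extracts its leading eigenvectors by power iteration with deflation; for real image sizes one would instead use a linear-algebra library, or the smaller n × n matrix trick of Turk and Pentland [11]. The helper names and the toy 4-pixel "images" are hypothetical.

```python
import math
import random


def mean_vector(vectors):
    """Mean face: the average of the flattened training images."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def covariance(vectors, mean):
    """Covariance matrix C = (1/n) * sum_k (x_k - mean)(x_k - mean)^T."""
    n, d = len(vectors), len(mean)
    centered = [[x - m for x, m in zip(v, mean)] for v in vectors]
    return [[sum(c[i] * c[j] for c in centered) / n for j in range(d)] for i in range(d)]


def top_eigenvectors(matrix, k, iterations=500, seed=0):
    """Leading k eigenpairs of a symmetric PSD matrix by power iteration with deflation."""
    rng = random.Random(seed)
    d = len(matrix)
    a = [row[:] for row in matrix]
    values, vectors = [], []
    for _ in range(k):
        v = [rng.random() + 0.1 for _ in range(d)]
        for _ in range(iterations):
            w = [sum(a[i][j] * v[j] for j in range(d)) for i in range(d)]
            norm = math.sqrt(sum(x * x for x in w))
            if norm == 0.0:
                break
            v = [x / norm for x in w]
        lam = sum(v[i] * sum(a[i][j] * v[j] for j in range(d)) for i in range(d))
        values.append(lam)
        vectors.append(v)
        # Remove the component just found so the next pass converges to the next eigenvector.
        a = [[a[i][j] - lam * v[i] * v[j] for j in range(d)] for i in range(d)]
    return values, vectors


def project(image, mean, eigenfaces):
    """Weights of the linear combination of eigenfaces that describes the image."""
    centered = [x - m for x, m in zip(image, mean)]
    return [sum(c * e for c, e in zip(centered, face)) for face in eigenfaces]


def nearest_identity(probe, gallery, mean, eigenfaces):
    """Label of the gallery image closest to the probe in eigenface space."""
    p = project(probe, mean, eigenfaces)

    def distance(item):
        q = project(item[1], mean, eigenfaces)
        return sum((x - y) ** 2 for x, y in zip(p, q))

    return min(gallery, key=distance)[0]


# Toy "images" of 4 pixels each (hypothetical data): two images per person.
gallery = [("A", [10, 10, 0, 0]), ("A", [9, 11, 1, 0]),
           ("B", [0, 1, 10, 9]), ("B", [1, 0, 9, 11])]
train = [img for _, img in gallery]
mean = mean_vector(train)
values, eigenfaces = top_eigenvectors(covariance(train, mean), k=2)
print(nearest_identity([9, 10, 0, 1], gallery, mean, eigenfaces))  # prints A
```

Keeping only the first k eigenvectors is what gives the dimensionality reduction: each face is summarized by k projection weights instead of its full pixel vector.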
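For reference, the main 2D PCA quantities can be written as follows. This is a sketch of the formulation in [13] with notation chosen here: $M$ training images $A_j$ of size $m \times n$, mean image $\bar{A}$, and projection axes $X_k$.

$$G_t = \frac{1}{M} \sum_{j=1}^{M} (A_j - \bar{A})^{\top} (A_j - \bar{A}) \in \mathbb{R}^{n \times n}$$

$$Y_k = A X_k, \quad k = 1, \dots, d, \qquad \tilde{A} = \sum_{k=1}^{d} Y_k X_k^{\top}$$

Here $X_1, \dots, X_d$ are the orthonormal eigenvectors of $G_t$ associated with its $d$ largest eigenvalues. Each term $Y_k X_k^{\top}$ is a reconstructed sub-image of the same size as $A$, so summing the first $d$ of them gives reconstructions of the kind shown in Figure 4. Because $G_t$ is only $n \times n$, it is much smaller than the $mn \times mn$ covariance matrix of vectorized images used by eigenfaces.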
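The non-negativity constraint of NMF, described in the list above, can be illustrated with a minimal sketch of multiplicative updates. It assumes the data matrix `V` stores one flattened non-negative image per column and uses Lee and Seung's multiplicative update rules for the Euclidean cost; the Nature paper [16] uses a divergence-based objective, so this is a swapped-in variant, not necessarily the exact rule of [16]. Because each update only multiplies entries by non-negative ratios, `W` (the basis, or "parts") and `H` (the weights) stay non-negative. The function names and the toy matrix are hypothetical.

```python
import random


def matmul(a, b):
    """Plain matrix product of two lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a):
    return [list(row) for row in zip(*a)]


def squared_error(v, w, h):
    """Squared Frobenius norm ||V - WH||^2."""
    wh = matmul(w, h)
    return sum((v[i][j] - wh[i][j]) ** 2 for i in range(len(v)) for j in range(len(v[0])))


def nmf(v, rank, iterations=200, seed=0, eps=1e-9):
    """Factor V (pixels x images) into non-negative W (pixels x rank) and H (rank x images)."""
    rng = random.Random(seed)
    n, m = len(v), len(v[0])
    w = [[rng.random() + 0.1 for _ in range(rank)] for _ in range(n)]
    h = [[rng.random() + 0.1 for _ in range(m)] for _ in range(rank)]
    for _ in range(iterations):
        # H <- H * (W^T V) / (W^T W H), element-wise; eps avoids division by zero.
        wt = transpose(w)
        num, den = matmul(wt, v), matmul(matmul(wt, w), h)
        h = [[h[a][j] * num[a][j] / (den[a][j] + eps) for j in range(m)] for a in range(rank)]
        # W <- W * (V H^T) / (W H H^T), element-wise.
        ht = transpose(h)
        num, den = matmul(v, ht), matmul(w, matmul(h, ht))
        w = [[w[i][a] * num[i][a] / (den[i][a] + eps) for a in range(rank)] for i in range(n)]
    return w, h


# Hypothetical 4-pixel x 3-image matrix built from two non-negative "parts".
V = [[2, 1, 0], [2, 1, 0], [0, 1, 3], [0, 1, 3]]
W1, H1 = nmf(V, rank=2, iterations=1)
W, H = nmf(V, rank=2)
print(squared_error(V, W1, H1), squared_error(V, W, H))
```

Running more iterations from the same initialization lowers the reconstruction error, while every entry of `W` and `H` remains non-negative.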
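The class-separability criterion of LDA, described in the list above, can be made concrete in the two-class case, where the Fisher direction has the closed form $w = S_W^{-1}(m_a - m_b)$, with $S_W$ the within-class scatter matrix and $m_a, m_b$ the class means. The sketch below is restricted to 2D feature vectors so that $S_W$ can be inverted by hand. The data are hypothetical: they spread mostly along the x-axis while the classes differ along the y-axis, which is the situation where a PCA direction and an LDA direction disagree. Multi-class Fisherfaces [17] instead solve a generalized eigenvalue problem after a PCA step.

```python
def mean(points):
    n = len(points)
    return [sum(p[0] for p in points) / n, sum(p[1] for p in points) / n]


def within_class_scatter(classes):
    """S_W = sum over classes of sum_x (x - m_c)(x - m_c)^T, for 2D points."""
    s = [[0.0, 0.0], [0.0, 0.0]]
    for points in classes:
        m = mean(points)
        for p in points:
            d = (p[0] - m[0], p[1] - m[1])
            for i in range(2):
                for j in range(2):
                    s[i][j] += d[i] * d[j]
    return s


def fisher_direction(class_a, class_b):
    """Two-class Fisher direction w = S_W^{-1} (m_a - m_b); S_W must be invertible."""
    s = within_class_scatter([class_a, class_b])
    det = s[0][0] * s[1][1] - s[0][1] * s[1][0]
    inv = [[s[1][1] / det, -s[0][1] / det], [-s[1][0] / det, s[0][0] / det]]
    ma, mb = mean(class_a), mean(class_b)
    diff = (ma[0] - mb[0], ma[1] - mb[1])
    return [inv[0][0] * diff[0] + inv[0][1] * diff[1],
            inv[1][0] * diff[0] + inv[1][1] * diff[1]]


def make_classifier(class_a, class_b):
    """Label 'a' if the projection exceeds the midpoint of the projected class means."""
    w = fisher_direction(class_a, class_b)

    def proj(p):
        return w[0] * p[0] + w[1] * p[1]

    threshold = (proj(mean(class_a)) + proj(mean(class_b))) / 2

    def classify(p):
        return "a" if proj(p) > threshold else "b"

    return w, classify


# Hypothetical data: large spread along x, class difference along y.
class_a = [(0.0, 1.0), (4.0, 1.4), (8.0, 1.1), (2.0, 0.8)]
class_b = [(1.0, -1.0), (5.0, -0.7), (9.0, -1.2), (3.0, -0.9)]
w, classify = make_classifier(class_a, class_b)
print(w, [classify(p) for p in class_a + class_b])
```

The resulting direction is dominated by its y-component even though most of the variance lies along x, which is exactly why LDA can separate identities that PCA would blur together.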

2.1.2. Non-Linear Techniques

- Kernel principal component analysis, KPCA [34]: This is a nonlinear reformulation of classical linear PCA using kernel functions. KPCA computes the leading eigenvectors of the kernel matrix rather than those of the covariance matrix. This reformulation can be seen as performing PCA in the high-dimensional feature space induced by the kernel function, which allows KPCA to construct nonlinear mappings. First, it computes the kernel matrix $K$ of the points $\mathbf{x}_i$, whose entries are defined by $K_{ij} = \kappa(\mathbf{x}_i, \mathbf{x}_j)$ [35]. Since KPCA is based on kernels, its performance greatly depends on the choice of the kernel function $\kappa$. Typical choices are the linear kernel (which amounts to performing classical PCA), the polynomial kernel and the Gaussian kernel [35]. KPCA has been successfully applied to several problems, such as speech recognition [36] and novelty detection [34]. Its major weakness, however, is that the size of the kernel matrix is the square of the number of training samples, which can quickly become prohibitive.

- Support vector machine (SVM) [37]: This learning technique is effective for pattern recognition because of its high generalization performance, without requiring additional prior knowledge. Intuitively, given a set of points belonging to two classes, SVM finds the hyperplane that leaves the largest possible fraction of points of each class on the same side, while maximizing the distance from the classes to the hyperplane; this hyperplane is called the optimal separating hyperplane (OSH). It thus reduces the risk of misclassification not only for the examples of the training set, but also for the unseen examples of the test set. SVM can also be viewed as a way to train polynomial neural networks or radial basis function classifiers. The learning technique is based on the principle of structural risk minimization (SRM), which states that the best generalization capability is achieved by minimizing a bound on the generalization error. SVMs were subsequently applied to computer vision problems. Years later, the work presented in [38] used SVM with a binary-tree recognition strategy to solve the face recognition problem: features are first extracted, the discriminant function between each pair of classes is then learned by SVM, and the disjoint test sets are finally passed to the recognition system. To recognize the test samples, [39] proposed to construct a binary tree structure.

Other nonlinear techniques have also been used in the context of face recognition:

- KICA (kernel independent component analysis) [40].

- Maximum variance unfolding (MVU) [41].

- Isomap [42].

- Diffusion maps [43].

- Locally linear embedding (LLE) [44].

- Locality preserving projection (LPP) [45].

- Embedded manifold [46].

- Nearest manifold approach [47].

- Discriminant manifold learning [48].

- Hessian LLE [51].

- Local tangent space alignment (LTSA) [52].

- Exponential discriminant analysis (EDA) [55].
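The first KPCA steps described above (kernel matrix, centering in feature space, leading eigenvector) can be sketched as follows. This assumes a Gaussian kernel with a hand-picked width `sigma` and uses power iteration instead of a full eigen-solver; the toy points and function names are hypothetical. The n × n matrix built in `centered_kernel_matrix` is exactly the quadratic memory cost mentioned above.

```python
import math
import random


def gaussian_kernel(x, y, sigma=1.0):
    return math.exp(-sum((a - b) ** 2 for a, b in zip(x, y)) / (2 * sigma ** 2))


def centered_kernel_matrix(points, kernel):
    """K_ij = kernel(x_i, x_j), then centered in feature space:
    Kc = K - 1n K - K 1n + 1n K 1n, where 1n is the n x n matrix filled with 1/n."""
    n = len(points)
    k = [[kernel(p, q) for q in points] for p in points]  # n x n entries
    row_mean = [sum(r) / n for r in k]  # K is symmetric, so column means are the same
    total_mean = sum(row_mean) / n
    return [[k[i][j] - row_mean[i] - row_mean[j] + total_mean for j in range(n)]
            for i in range(n)]


def leading_eigenpair(matrix, iterations=1000, seed=0):
    """Largest eigenvalue and unit eigenvector of a symmetric PSD matrix (power iteration)."""
    rng = random.Random(seed)
    n = len(matrix)
    v = [rng.random() - 0.5 for _ in range(n)]
    for _ in range(iterations):
        w = [sum(matrix[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    mu = sum(v[i] * sum(matrix[i][j] * v[j] for j in range(n)) for i in range(n))
    return mu, v


def first_kernel_component(points, sigma=1.0):
    """Coordinates of the training points on the first kernel principal component."""
    kc = centered_kernel_matrix(points, lambda p, q: gaussian_kernel(p, q, sigma))
    mu, v = leading_eigenpair(kc)
    # With alpha = v / sqrt(mu) (a unit-norm axis in feature space), training point i
    # projects to (Kc alpha)_i = sqrt(mu) * v_i.
    return kc, mu, [math.sqrt(mu) * x for x in v]


# Hypothetical 2D points forming two well-separated clusters.
points = [(0.0, 0.0), (0.2, 0.1), (0.1, -0.1), (3.0, 3.0), (3.1, 2.9), (2.9, 3.2)]
kc, mu, coords = first_kernel_component(points)
print(round(mu, 3), [round(c, 3) for c in coords])
```

On this toy data the first kernel component gives the two clusters opposite signs. Replacing `gaussian_kernel` with a plain dot product would reduce the sketch to classical PCA computed through the Gram matrix.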
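The maximum-margin idea behind SVM, described above, can be sketched with a linear soft-margin SVM. Rather than the quadratic-programming solvers usually used to train SVMs, this sketch assumes plain full-batch sub-gradient descent on the regularized hinge loss $\frac{\lambda}{2}\lVert w\rVert^2 + \frac{1}{n}\sum_i \max(0, 1 - y_i(w \cdot x_i + b))$, which is simpler to read. The parameters `lam`, `lr` and `epochs`, the function names and the toy points are hypothetical choices. A multi-class face recognizer in the spirit of [38] would train one such classifier per pair of identities.

```python
def train_linear_svm(samples, labels, lam=0.01, lr=0.01, epochs=1000):
    """Minimize lam/2 * ||w||^2 + mean(max(0, 1 - y * (w.x + b))) by sub-gradient descent."""
    dim, n = len(samples[0]), len(samples)
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        grad_w = [lam * wi for wi in w]  # gradient of the regularizer (bias is not regularized)
        grad_b = 0.0
        for x, y in zip(samples, labels):
            margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
            if margin < 1:  # inside the margin or misclassified: the hinge term is active
                for i in range(dim):
                    grad_w[i] -= y * x[i] / n
                grad_b -= y / n
        w = [wi - lr * gi for wi, gi in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def predict(w, b, x):
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b >= 0 else -1


# Hypothetical 2D feature vectors for two classes (+1 and -1).
samples = [(2, 2), (3, 3), (2, 3), (3, 2), (-2, -2), (-3, -3), (-2, -3), (-3, -2)]
labels = [1, 1, 1, 1, -1, -1, -1, -1]
w, b = train_linear_svm(samples, labels)
print(w, b, [predict(w, b, x) for x in samples])
```

Only the points whose margin is below 1 contribute to the update, which mirrors the role of the support vectors in defining the optimal separating hyperplane.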

2.2. Local Approaches

Local approaches can be divided into two categories:

- Interest-point-based face recognition methods: the points of interest are first detected, and features localized on these points are then extracted.

- Local appearance-based face recognition methods: the face is divided into small regions (or patches) from which local features are directly extracted.

2.2.1. Interest-Point-Based Face Recognition Methods

- Dynamic link architecture (DLA) [56]: This approach uses a deformable topological graph instead of the fixed topological graph of [57] to provide a face representation model called DLA. The graph can vary in scale and position according to changes in the appearance of the considered face. The graph is a rectangular grid placed on the image, whose nodes are labeled with the responses of Gabor filters at several orientations and spatial frequencies, called “jets”, and whose edges, each connecting two nodes, are labeled with distances. Two face graphs are compared by deforming the graph representing the test image and matching it against each of the graphs representing the reference images.

- Elastic bunch graph matching (EBGM) [58]: This is an extension of DLA in which the nodes of the graphs are located at a number of selected points of the face. EBGM was one of the most efficient algorithms in the 1996 FERET competition. Wiskott et al. [58] used Gabor wavelets to extract features at the detected points because Gabor filters are robust to illumination changes, distortions and scale variations.

- Geometric feature vector [59]: This technique uses a training set to detect the position of the eyes in an image. It first computes, for each point, the correlation coefficients between the test image and the images of the training set, and then searches for the maximum values.

- Face statistical model [60]: This approach uses several detectors of specific features for each part of the face, such as the eyes, nose and mouth. The work presented in [61] proposed to build statistical models of facial shapes. Despite all of these research works, feature-point detection is still not sufficiently reliable and accurate.

- Feature extraction by Gabor filter [62]: This consists of detecting facial features and representing them with Gabor wavelets. For each detected point, two types of information are stored: its position and its features, which are extracted by applying Gabor filters at this point. To model the relationship between the feature points, a topological graph is built for each face.
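The correlation step of the geometric feature vector method [59] can be sketched as a sliding-window search for the position with the highest Pearson correlation coefficient between an image patch and a template. This assumes a single template (for instance an averaged eye patch from the training set) and grayscale images stored as lists of rows; the template, image and function names are hypothetical.

```python
import math


def correlation_coefficient(a, b):
    """Pearson correlation between two equally long lists of pixel values."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    da = [x - ma for x in a]
    db = [y - mb for y in b]
    den = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    return sum(x * y for x, y in zip(da, db)) / den if den > 0 else 0.0


def best_match(image, template):
    """Top-left (row, col) of the window most correlated with the template, and its score."""
    th, tw = len(template), len(template[0])
    flat_t = [v for row in template for v in row]
    best_pos, best_score = (0, 0), -2.0
    for r in range(len(image) - th + 1):
        for c in range(len(image[0]) - tw + 1):
            patch = [image[r + i][c + j] for i in range(th) for j in range(tw)]
            score = correlation_coefficient(patch, flat_t)
            if score > best_score:
                best_pos, best_score = (r, c), score
    return best_pos, best_score


# Hypothetical 6x6 image containing the 2x2 template at row 2, column 3.
template = [[9, 1], [1, 9]]
image = [[0] * 6 for _ in range(6)]
for i in range(2):
    for j in range(2):
        image[2 + i][3 + j] = template[i][j]
print(best_match(image, template))
```

Because the correlation coefficient subtracts the means and normalizes by the standard deviations, the score is unaffected by uniform brightness and contrast changes of the patch.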
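The Gabor “jets” used by DLA, EBGM and Gabor-based feature extraction can be sketched as the set of complex Gabor responses computed at one image point, compared with a normalized dot product of their magnitudes. This sketch assumes the kernel family commonly associated with Wiskott et al. [58] (5 scales with $k_v = 2^{-(v+2)/2}\pi$, 8 orientations $\phi_\mu = \mu\pi/8$, $\sigma = 2\pi$) and truncates each convolution to a small window around the point for speed. These parameters, the window `radius`, the function names and the toy images are assumptions, not the exact settings of the cited works.

```python
import cmath
import math

SIGMA = 2 * math.pi  # assumed kernel width parameter


def gabor_kernel(kx, ky, dx, dy):
    """Complex Gabor wavelet with wave vector (kx, ky), evaluated at offset (dx, dy)."""
    k2 = kx * kx + ky * ky
    envelope = (k2 / SIGMA ** 2) * math.exp(-k2 * (dx * dx + dy * dy) / (2 * SIGMA ** 2))
    # Subtracting exp(-sigma^2 / 2) removes the DC component of the wave, so a constant
    # brightness offset produces (approximately, given truncation) no response.
    return envelope * (cmath.exp(1j * (kx * dx + ky * dy)) - math.exp(-SIGMA ** 2 / 2))


def gabor_jet(image, row, col, scales=5, orientations=8, radius=8):
    """Responses of scales x orientations Gabor kernels at one pixel (truncated window)."""
    jet = []
    for v in range(scales):
        kv = 2 ** (-(v + 2) / 2) * math.pi
        for mu in range(orientations):
            phi = mu * math.pi / orientations
            kx, ky = kv * math.cos(phi), kv * math.sin(phi)
            total = 0j
            for r in range(max(0, row - radius), min(len(image), row + radius + 1)):
                for c in range(max(0, col - radius), min(len(image[0]), col + radius + 1)):
                    total += image[r][c] * gabor_kernel(kx, ky, col - c, row - r)
            jet.append(total)
    return jet


def jet_similarity(jet_a, jet_b):
    """Normalized dot product of the response magnitudes (1.0 for identical jets)."""
    amp_a = [abs(z) for z in jet_a]
    amp_b = [abs(z) for z in jet_b]
    den = math.sqrt(sum(a * a for a in amp_a) * sum(b * b for b in amp_b))
    return sum(a * b for a, b in zip(amp_a, amp_b)) / den if den > 0 else 0.0


# Hypothetical 20x20 test images: vertical and horizontal stripes.
vertical = [[1.0 if (c // 3) % 2 == 0 else 0.0 for c in range(20)] for r in range(20)]
horizontal = [[1.0 if (r // 3) % 2 == 0 else 0.0 for c in range(20)] for r in range(20)]
jet_v, jet_h = gabor_jet(vertical, 10, 10), gabor_jet(horizontal, 10, 10)
print(len(jet_v), jet_similarity(jet_v, jet_v), jet_similarity(jet_v, jet_h))
```

In a graph-matching method, one such jet would be stored per node, and the similarities of corresponding nodes would be combined into a graph similarity.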

2.2.2. Local Appearance-Based Face Recognition Methods

- LBP and its recent variants [73]: The original LBP method labels image pixels with decimal numbers. LBP encodes the local structure around each pixel by comparing it with its eight neighbors in a 3 × 3 neighborhood: the value of the central pixel is subtracted from each neighbor, strictly negative differences are encoded as 0 and the others as 1. For each pixel, a binary number is obtained by concatenating these binary values in clockwise order, starting from its top-left neighbor. The decimal value of this binary number, called the LBP code [74], is then used to label the pixel.
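The LBP coding rule described above can be sketched directly. This sketch assumes grayscale images stored as lists of rows, reads the eight neighbors clockwise starting at the top-left one with the first neighbor as the most significant bit (a common convention, but implementations differ), and skips border pixels that lack a full 3 × 3 neighborhood; the function names and the example patch are hypothetical.

```python
# Neighbor offsets in clockwise order, starting at the top-left neighbor.
CLOCKWISE = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_code(image, r, c):
    """8-bit LBP code of pixel (r, c): bit = 1 if neighbor - center >= 0, else 0."""
    center = image[r][c]
    code = 0
    for dr, dc in CLOCKWISE:
        bit = 1 if image[r + dr][c + dc] - center >= 0 else 0
        code = (code << 1) | bit  # the top-left neighbor ends up as the most significant bit
    return code


def lbp_image(image):
    """LBP codes of all interior pixels."""
    h, w = len(image), len(image[0])
    return [[lbp_code(image, r, c) for c in range(1, w - 1)] for r in range(1, h - 1)]


def lbp_histogram(codes):
    """256-bin histogram of LBP codes, the usual descriptor of a face region."""
    hist = [0] * 256
    for row in codes:
        for value in row:
            hist[value] += 1
    return hist


patch = [[6, 5, 2],
         [7, 6, 1],
         [9, 8, 7]]
print(lbp_code(patch, 1, 1))  # neighbors 6,5,2,1,7,8,9,7 -> bits 10001111 -> 143
```

In face recognition, the image is typically split into sub-regions and the histograms of their LBP codes are concatenated into a single feature vector.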

2.3. Hybrid Approaches and Methods Based on Statistical Models

- Hidden Markov model (HMM) [78]: Hidden Markov models began to be used in 1975 in different fields, especially voice recognition, and were widely adopted in speech recognition from the 1980s. They were then applied to handwritten text recognition, image processing, music and bioinformatics (DNA sequencing, etc.), as well as cardiology (segmentation of the ECG signal). Hidden Markov models, also called Markov sources or “probabilistic functions of Markov chains”, are powerful statistical tools for modeling stochastic signals. They have proven efficient since their introduction by Baum and his colleagues. An HMM is built on a Markov chain, a statistical model composed of “states” and “transitions”. In face images, the significant facial regions (hair, forehead, eyebrows, eyes, nose, mouth and chin) appear in a natural order from top to bottom, even when the image is taken under small rotations. Each of these regions is assigned a state in a left-to-right model. The state structure of the face model and the non-zero transition probabilities are shown in Figure 5.

- Gabor wavelet transform based on the pseudo hidden Markov model (GWT-PHMM) [21]: This approach combines the multi-resolution capability of the Gabor wavelet transform (GWT) with the local interactions of facial structures expressed through the pseudo-hidden Markov model (PHMM). Instead of the traditional “zigzag” scanning used for feature extraction, a continuous scan, called spiral scanning, is proposed for a better selection of features: it proceeds from the top left to the right, then from top to bottom and from right to left, and so on, until the bottom right of the image. Furthermore, unlike a traditional HMM, the PHMM does not rely on the hypothesis that the visible observations are conditionally independent given the states. This is made possible by the concept of local structures introduced by the PHMM, which is used to extract facial bands and automatically select the most informative features of a face image. In addition, using only the most informative pixels rather than the whole image makes the proposed method reasonably fast.

- Recognition system using PCA and discrete cosine transform (DCT) in HMM [79]: First, the face is divided into blocks and the DCT is applied to these blocks. Then, without applying the inverse DCT, PCA is applied directly to the DCT coefficients to reduce their dimension, which makes the system faster.

- HMM-LBP [80]: This hybrid approach classifies a 2D face image using LBP (local binary patterns) for feature extraction. It consists of four steps: the face image is first decomposed into blocks; image features are then extracted with LBP; probabilities are computed; and finally the maximum probability is selected.

- Hybrid approach based on 2D wavelet decomposition and SVD singular values [81]: This approach presents an effective face recognition system that uses the eigenvalues of the wavelet transform as feature vectors and a radial basis function (RBF) neural network as the classifier. Face images are decomposed into two levels with the 2D wavelet transform, and the average of the wavelet coefficients is then computed to find the characteristic centers.

- Multi-task learning-based discriminative Gaussian process latent variable model (DGPLVM) [82]: Rather than learning from a single data source, this approach exploits data from multiple sources/domains to improve performance in the target domain. It uses asymmetric multi-task learning, which focuses only on improving the performance of the target task. A constraint maximizes the mutual information between the distribution of the target-domain data and the data from the multiple sources/domains. In addition, the Gaussian face model is a reformulation based on the Gaussian process (GP), a nonparametric Bayesian kernel method. Therefore, the model can adapt its complexity to complex real-world data distributions without heuristics or manual parameter tuning.

- Discriminant analysis on Riemannian manifold of Gaussian distributions (DARG) [83]: Its objective is to capture the underlying data distribution of each image set in order to make classification easier and more robust. To this end, [83] represents each image set as a Gaussian mixture model (GMM) comprising a number of Gaussian components with prior probabilities, and seeks to discriminate between the Gaussian components of different classes. Given their geometric structure, Gaussian components lie on a specific Riemannian manifold. To correctly encode this manifold, DARG uses several distances between Gaussian components and derives a series of provably positive definite probabilistic kernels. With these kernels, a weighted kernel discriminant analysis is finally developed that treats the Gaussian components of the GMMs as samples and their prior probabilities as sample weights.

- Affine local descriptors and probabilistic similarity [84]: This technique combines affine-invariant SIFT features with a probabilistic similarity to cope with large viewpoint changes. Affine SIFT, an extension of SIFT that detects local invariant descriptors, generates a series of different views using affine transformations. In this context, it compensates for the viewpoint difference between the “gallery” face image and the “probe” face image. However, the human face is not planar: it has significant 3D depth, so this approach is not effective for large pose changes. The probabilistic similarity measures the similarity between the “probe” and “gallery” faces based on the distribution of the sum of squared differences (SSD), learned in an online process.

- PCA and Gabor wavelets [85]: This approach uses a two-step face recognition algorithm based on both global and local features. In the first, coarse recognition step, the algorithm applies principal component analysis (PCA) to identify a test image. Recognition ends at this stage if the confidence level of the result is high enough. Otherwise, the algorithm uses this result to select the top candidate images with a high degree of similarity and passes them to the next recognition step, where Gabor filters are used. Since recognizing a face image with Gabor filters is computationally heavy, the contribution of this work is a more flexible and faster two-stage hybrid face recognition algorithm.

- Manual segmentation-Gabor filter-neural network [86]: This is another feature extraction technique that has achieved a high recognition rate. In this approach, facial topographical features are extracted by manually segmenting the eye, nose and mouth regions. The maximum of the Gabor transform of each region is then extracted to compute the local representation of that region. In the learning phase, this approach uses the nearest-neighbor method to compute the distances between the three feature vectors of these regions and the corresponding stored vectors.

- HMM-SVM-SVD [87]: This is a combination of two classifiers, SVM and HMM. The former uses PCA features, while the latter is a one-dimensional, seven-state model whose features are based on singular value decomposition (SVD). Combination rules are then used to merge the outputs of the SVM and the HMM. This approach achieved a 100% recognition rate on the ORL database.

- Merging of local and global features based on Gabor-contourlet and PCA [88]: This approach combines two types of features: local features, extracted with the Gabor transform, and global features, extracted with the contourlet transform. Recognition is finally performed by the PCA classifier.

- SIFT-2D-PCA [89]: This approach combines SIFT, a local feature extraction method, with 2D-PCA, an improvement of PCA. Since SIFT extracts distinctive features that are invariant to scale, orientation and lighting changes, it is beneficial for recognition even when global features are not available. 2D-PCA is used to extract global features and to reduce dimensionality.

- Multilayer perceptron-PCA-LBP [90]: This recent approach is designed to cope with different changes (lighting, head pose, facial expressions). To this end, it extracts global features with PCA and local features with LBP. These global and local features are then fed to a multilayer perceptron (MLP), and classification is performed by a backpropagation multilayer perceptron (BPMLP).

- Local directional pattern [91]: This method relies on local directional patterns (LDP). The LDP feature at each pixel position is obtained by computing the edge response values of the image in eight different directions. The resulting LDP image is then used as the input of 2D-PCA for feature extraction and representation, and a nearest-neighbor classifier is used for face recognition. Although this method achieves good recognition accuracy under various lighting conditions, it works only with frontal images.

- Wavelet transform and directional LBP [92]: This method begins with a wavelet-transform preprocessing step that produces a series of sub-images at different resolutions, the wavelet decomposition providing components at different scales. A directional wavelet LBP (DW-LBP) histogram is then computed for the different weighted sub-regions of the face image, and the chi-square distance is used to match the histogram sequences. This method reduces computational complexity and improves the recognition rate, but it cannot handle different poses.
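The top-to-bottom, left-to-right HMM structure and the maximum-probability decision (also used by HMM-LBP [80]) can be sketched with a discrete-observation HMM and the forward algorithm. This is a simplified sketch: it assumes each face has already been turned into a sequence of quantized symbols, one per horizontal strip from top to bottom, whereas face HMMs in the literature often model continuous feature vectors (for example with Gaussian densities). The stay probability, the three-state models, their emission tables and the function names are hypothetical.

```python
def left_right_hmm(n_states, stay=0.6):
    """Start in the top state; each state either repeats or moves to the next one down."""
    a = [[0.0] * n_states for _ in range(n_states)]
    for i in range(n_states - 1):
        a[i][i], a[i][i + 1] = stay, 1.0 - stay
    a[-1][-1] = 1.0  # the last (bottom) state is absorbing
    pi = [1.0] + [0.0] * (n_states - 1)
    return pi, a


def forward_likelihood(obs, pi, a, b):
    """P(obs | model) by the forward algorithm; b[state][symbol] = emission probability."""
    n = len(pi)
    alpha = [pi[i] * b[i][obs[0]] for i in range(n)]
    for symbol in obs[1:]:
        alpha = [sum(alpha[i] * a[i][j] for i in range(n)) * b[j][symbol] for j in range(n)]
    return sum(alpha)


def identify(obs, models):
    """Return the enrolled identity whose model gives the highest likelihood."""
    return max(models, key=lambda name: forward_likelihood(obs, *models[name]))


pi, a = left_right_hmm(3)
models = {
    "A": (pi, a, [[0.9, 0.1], [0.2, 0.8], [0.7, 0.3]]),
    "B": (pi, a, [[0.1, 0.9], [0.8, 0.2], [0.3, 0.7]]),
}
print(identify([0, 1, 1, 0], models))  # prints A
```

A real face model would use one state per facial region, as in Figure 5, and would train the emission and transition probabilities from each person's images (for example with the Baum-Welch algorithm) instead of fixing them by hand.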
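The LDP code used in [91] can be sketched with the eight Kirsch compass masks, which is how local directional patterns are usually defined: the edge responses are computed in eight directions, and the bits of the k directions with the strongest absolute responses are set to 1. The choice k = 3, the bit ordering (bit i for mask i, counter-clockwise from east), the function names and the example patch are assumptions of this sketch, not necessarily the settings of [91].

```python
# Border cells of a 3x3 mask in counter-clockwise order, starting from the east cell.
RING = [(1, 2), (0, 2), (0, 1), (0, 0), (1, 0), (2, 0), (2, 1), (2, 2)]


def kirsch_masks():
    """Eight Kirsch compass masks: three adjacent border cells = 5, other border cells = -3."""
    masks = []
    for d in range(8):
        mask = [[-3, -3, -3], [-3, 0, -3], [-3, -3, -3]]
        for offset in (-1, 0, 1):
            r, c = RING[(d + offset) % 8]
            mask[r][c] = 5
        masks.append(mask)
    return masks


MASKS = kirsch_masks()


def ldp_code(patch, k=3):
    """LDP code of the center of a 3x3 patch: set the bits of the k strongest |responses|."""
    responses = [sum(m[r][c] * patch[r][c] for r in range(3) for c in range(3)) for m in MASKS]
    strongest = sorted(range(8), key=lambda i: abs(responses[i]), reverse=True)[:k]
    return sum(1 << i for i in strongest)


edge = [[0, 0, 9],
        [0, 0, 9],
        [0, 0, 9]]  # bright column on the right: a vertical edge
code = ldp_code(edge)
print(format(code, "08b"))
```

Because only the ranking of the responses matters, the code is unchanged when the whole patch is scaled by a positive factor, which is one reason LDP tolerates lighting changes.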
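The histogram-matching step of [92] can be sketched with the chi-square distance, summed over face sub-regions with one weight per region; this weighted form is commonly used with LBP-type histograms. Conventions differ (some definitions include a factor of 1/2), and the function names, histograms and per-region weights in the usage example are hypothetical.

```python
def chi_square(h1, h2):
    """Chi-square distance sum_i (h1_i - h2_i)^2 / (h1_i + h2_i), skipping bins empty in both."""
    return sum((a - b) ** 2 / (a + b) for a, b in zip(h1, h2) if a + b > 0)


def weighted_chi_square(regions1, regions2, weights):
    """Weighted sum of per-region chi-square distances between two faces."""
    return sum(w * chi_square(h1, h2) for h1, h2, w in zip(regions1, regions2, weights))


face1 = [[4, 0, 2], [1, 1, 0]]  # hypothetical histograms of two sub-regions
face2 = [[0, 4, 2], [1, 1, 0]]
print(weighted_chi_square(face1, face2, weights=[2.0, 1.0]))  # prints 16.0
```

A probe face is then assigned to the gallery face with the smallest weighted distance; larger weights let more discriminative regions, such as the eyes, count more.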

3. Comparison between Global, Local and Hybrid Approaches

4. 2D Face Databases

The main criteria used to describe a 2D face database are the following:

- The number of images contained in the database, which is the most important criterion.

- The number of images per individual class: each individual corresponds to a class c, and the number of images in a class is the number of images representing that individual. These images are acquired under different conditions (orientation, facial expression, etc.).

- The size of images.

- Pose and orientations of faces.

- Illumination changes.

- Sex of the subjects.

- The presence of artifacts (glasses, beards, etc.).

- Whether the database contains still images or videos.

- The presence of a uniform background.

- The time interval between acquisition sessions.

5. Results

6. The Emergence of New Promising Research Directions

- 3D face recognition: Despite the high success rates achieved in 2D face recognition, it still has two major unsolved problems: illumination and pose variations. To overcome these two issues, 3D face recognition has emerged to provide more accurate shape information about facial surfaces, and several recent techniques using 3D data have been proposed [143,144,145,146,147,148]. By measuring the rigid geometry of facial features, 3D face recognition is considered to have the potential to achieve better accuracy than 2D recognition.

- Multimodal face recognition: Some recent research works report that fusing 2D and 3D face recognition is more accurate and robust than using a single modality [149] and improves performance compared with single-modal face recognition. These works investigate the potential benefit of fusing 2D and 3D features [150,151].

- Deep learning techniques: Deep learning techniques [152] have established themselves as dominant in machine learning. Deep neural networks (DNNs) have been top performers on a wide variety of tasks, including image classification, speech recognition and face recognition. In particular, convolutional neural networks (CNNs) have recently achieved promising results in face recognition. These techniques are often evaluated on the public LFW (Labeled Faces in the Wild) database.

- Infrared imagery: Among the various approaches proposed to overcome the limitations of face recognition, such as pose, facial expression and illumination changes, as well as facial disguises, all of which can significantly decrease recognition accuracy, infrared (IR) imaging has emerged as a promising new research direction [153,154]. IR imagery has attracted particular attention because of its invariance to illumination changes [155]. Data acquired with IR cameras have many advantages over those from common cameras operating in the visible spectrum. For instance, infrared images of faces can be obtained under any lighting condition, even in complete darkness, and there is some evidence that infrared imaging may achieve greater robustness to facial expression changes [156].

7. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Aoun, N.B.; Mejdoub, M.; Amar, C.B. Graph-based approach for human action recognition using spatio-temporal features. J. Vis. Commun. Image Represent. 2014, 25, 329–338.

- El’Arbi, M.; Amar, C.B.; Nicolas, H. Video watermarking based on neural networks. In Proceedings of the 2006 IEEE International Conference on Multimedia and Expo, Toronto, ON, Canada, 9–12 July 2006; pp. 1577–1580.

- El’Arbi, M.; Koubaa, M.; Charfeddine, M.; Amar, C.B. A dynamic video watermarking algorithm in fast motion areas in the wavelet domain. Multimed. Tools Appl. 2011, 55, 579–600.

- Wali, A.; Aoun, N.B.; Karray, H.; Amar, C.B.; Alimi, A.M. A new system for event detection from video surveillance sequences. In Advanced Concepts for Intelligent Vision Systems, Proceedings of the 12th International Conference, ACIVS 2010, Sydney, Australia, 13–16 December 2010; Blanc-Talon, J., Bone, D., Philips, W., Popescu, D., Scheunders, P., Eds.; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2010; Volume 6475, pp. 110–120.

- Koubaa, M.; Elarbi, M.; Amar, C.B.; Nicolas, H. Collusion, MPEG4 compression and frame dropping resistant video watermarking. Multimed. Tools Appl. 2012, 56, 281–301.

- Mejdoub, M.; Amar, C.B. Classification improvement of local feature vectors over the KNN algorithm. Multimed. Tools Appl. 2013, 64, 197–218.

- Dammak, M.; Mejdoub, M.; Zaied, M.; Amar, C.B. Feature vector approximation based on wavelet network. In Proceedings of the 4th International Conference on Agents and Artificial Intelligence, Vilamoura, Portugal, 6–8 February 2012; pp. 394–399.

- Borgi, M.A.; Labate, D.; El’Arbi, M.; Amar, C.B. Shearlet network-based sparse coding augmented by facial texture features for face recognition. In Image Analysis and Processing–ICIAP 2013, Proceedings of the 17th International Conference, Naples, Italy, 9–13 September 2013; Petrosino, A., Ed.; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2013; Volume 8157, pp. 611–620.

- Hietmeyer, R. Biometric identification promises fast and secure processing of airline passengers. Int. Civ. Aviat. Organ. J. 2000, 17, 10–11.

- Morizet, M. Reconnaissance Biométrique Par Fusion Multimodale du Visage et de l’Iris. Ph.D. Thesis, ParisTech, Paris, France, 2009.

- Turk, M.A.; Pentland, A.P. Face recognition using eigenfaces. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Maui, HI, USA, 3–6 June 1991; pp. 586–591.

- Huang, D. Robust Face Recognition based on Three Dimensional Data. Ph.D. Thesis, Central School of Lyon, Écully, France, 2011.

- Yang, J.; Zhang, D.; Frangi, A.F.; Yang, J.-Y. Two-dimensional PCA: A new approach to appearance-based face representation and recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2004, 26, 131–137.

- Ans, B.; Hérault, J.; Jutten, C. Adaptive neural architectures: Detection of primitives. In Proceedings of the COGNITIVA’85, Paris, France, June 1985; pp. 593–597.

- Torgerson, W.S. Multidimensional scaling. Psychometrika 1952, 17, 401–419.

- Lee, D.D.; Seung, H.S. Learning the parts of objects by non-negative matrix factorization. Nature 1999, 401, 788–791.

- Belhumeur, P.N.; Hespanha, J.P.; Kriegman, D.J. Eigenfaces vs. Fisherfaces: Recognition using class specific linear projection. IEEE Trans. Pattern Anal. Mach. Intell. 1997, 19, 711–720.

- Pentland, A.; Moghaddam, B.; Starner, T. View-based and modular eigenspaces for face recognition. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 21–23 June 1994.

- Lu, J.; Plataniotis, K.N.; Venetsanopoulos, A.N. Face recognition using LDA-based algorithms. IEEE Trans. Neural Netw. 2003, 14, 195–200.

- Vasilescu, M.A.O.; Terzopoulos, D. Multilinear analysis of image ensembles: TensorFaces. In Proceedings of the European Conference on Computer Vision, Copenhagen, Denmark, 28–31 May 2002; pp. 447–460.

- Kar, A.; Bhattacharjee, D.; Nasipuri, M.; Basu, D.K.; Kundu, M. High performance human face recognition using Gabor based pseudo hidden Markov model. Int. J. Appl. Evol. Comput. 2013, 4, 81–102.

- Magesh Kumar, C.; Thiyagarajan, R.; Natarajan, S.P.; Arulselvi, S. Gabor features and LDA based face recognition with ANN classifier. In Proceedings of the 2011 International Conference on IEEE Emerging Trends in Electrical and Computer Technology (ICETECT), Nagercoil, India, 23–24 March 2011.

- Friedman, J.H. Regularized discriminant analysis. J. Am. Stat. Assoc. 1989, 84, 165–175.

- Hastie, T.; Buja, A.; Tibshirani, R. Penalized discriminant analysis. Ann. Stat. 1995, 23, 73–102.

- Liu, W.; Wang, Y.; Li, S.Z.; Tan, T. Null space approach of Fisher discriminant analysis for face recognition. In Biometric Authentication, Proceedings of the ECCV 2004 International Workshop on Biometric Authentication, Prague, Czech Republic, 15 May 2004; Maltoni, D., Jain, A.K., Eds.; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2004; Volume 3087, pp. 32–44.

- Wang, X.; Tang, X. Dual-space linear discriminant analysis for face recognition. In Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition, Washington, DC, USA, 27 June–2 July 2004; pp. 564–569.

- Howland, P.; Park, H. Generalized discriminant analysis using the generalized singular value decomposition. IEEE Trans. Pattern Anal. Mach. Intell. 2004, 26, 995–1006.

- Lu, J.W.; Plataniotis, K.N.; Venetsanopoulos, A.N. Boosting linear discriminant analysis for face recognition. In Proceedings of the IEEE International Conference on Image Processing, Barcelona, Spain, 14–18 September 2003; pp. 657–660.

- Yang, Q.; Ding, X.Q. Discriminant local feature analysis of facial images. In Proceedings of the IEEE International Conference on Image Processing, Barcelona, Spain, 14–18 September 2003; pp. 863–866.

- Nhat, V.D.M.; Lee, S. Block LDA for face recognition. In Computational Intelligence and Bioinspired Systems, Proceedings of the 8th International Work-Conference on Artificial Neural Networks, Barcelona, Spain, 8–10 June 2005; Cabestany, J., Prieto, A., Sandoval, F., Eds.; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2005; Volume 3512, pp. 899–905.

- Zhou, D.; Yang, X. Face recognition using enhanced Fisher linear discriminant model with facial combined feature. In PRICAI 2004: Trends in Artificial Intelligence, Proceedings of the 8th Pacific Rim International Conference on Artificial Intelligence, Auckland, New Zealand, 9–13 August 2004; Zhang, C., Guesgen, H.W., Yeap, W.-K., Eds.; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2004; Volume 3157, pp. 769–777.

- Cevikalp, H.; Neamtu, M.; Wilkes, M.; Barkana, A. Discriminative common vectors for face recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 4–13.

- Visani, M.; Garcia, C.; Jolion, J.M. Normalized radial basis function networks and bilinear discriminant analysis for face recognition. In Proceedings of the IEEE Conference on Advanced Video and Signal Based Surveillance, Como, Italy, 15–16 September 2005; pp. 342–347.

- Hoffmann, H. Kernel PCA for novelty detection. Pattern Recognit. 2007, 40, 863–874.

- Shawe-Taylor, J.; Cristianini, N. Kernel Methods for Pattern Analysis; Cambridge University Press: New York, NY, USA, 2004.

- Maurer, T.; Guigonis, D.; Maslov, I.; Pesenti, B.; Tsaregorodtsev, A.; West, D.; Medioni, G. Performance of Geometrix ActiveID™ 3D Face Recognition Engine on the FRGC Data. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Diego, CA, USA, 21–23 September 2005; p. 154.

- Vapnik, V.N. The Nature of Statistical Learning Theory; Springer: New York, NY, USA, 1995.

- Guo, G.; Li, S.Z.; Chan, K. Face recognition by support vector machines. In Proceedings of the IEEE International Conference on Automatic Face and Gesture Recognition, Grenoble, France, 28–30 March 2000; pp. 196–201.

- Yang, J.; Zhang, D.; Frangi, A.F.; Yang, J.-Y. Two-dimensional PCA: A new approach to appearance-based face representation and recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2004, 26, 131–137.

- Bach, F.; Jordan, M. Kernel independent component analysis. J. Mach. Learn. Res. 2002, 3, 1–48.

- Weinberger, K.Q.; Saul, L.K. Unsupervised learning of image manifolds by semidefinite programming. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Washington, DC, USA, 27 June–2 July 2004; pp. 988–995.

- Yang, M.H. Face recognition using extended Isomap. In Proceedings of the International Conference on Image Processing, Rochester, NY, USA, 22–25 September 2002; pp. 117–120.

- Hagen, G.; Smith, T.; Banasuk, A.; Coifman, R.R.; Mezic, I. Validation of low-dimensional models using diffusion maps and harmonic averaging. In Proceedings of the IEEE Conference on Decision and Control, New Orleans, LA, USA, 12–14 December 2007.

- Socolinsky, D.A.; Selinger, A. Thermal face recognition in an operational scenario. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Washington, DC, USA, 27 June–2 July 2004; pp. 1012–1019.

- He, X.; Yan, S.C.; Hu, Y.X.; Zhang, H.J. Learning a locality preserving subspace for visual recognition. In Proceedings of the 9th IEEE International Conference on Computer Vision, Nice, France, 13–16 October 2003; pp. 385–392.

- Yan, S.C.; Zhang, H.J.; Hu, Y.X.; Zhang, B.Y.; Cheng, Q.S. Discriminant analysis on embedded manifold. In Proceedings of the European Conference on Computer Vision, Prague, Czech Republic, 11–14 May 2004; pp. 121–132.

- Zhang, J.; Li, S.Z.; Wang, J. Nearest manifold approach for face recognition. In Proceedings of the IEEE International Conference on Automatic Face and Gesture Recognition, Seoul, Korea, 17–19 May 2004; pp. 223–228.

- Wu, Y.; Chan, K.L.; Wang, L. Face recognition based on discriminative manifold learning. In Proceedings of the IEEE International Conference on Pattern Recognition, Cambridge, UK, 23–26 August 2004; pp. 171–174.

- He, X.; Yan, S.; Hu, Y.; Niyogi, P.; Zhang, H. Face recognition using Laplacianfaces. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 328–340.

- Raducanu, B.; Dornaika, F. Dynamic facial expression recognition using Laplacian eigenmaps-based manifold learning. In Proceedings of the International Conference on Robotics and Automation, Anchorage, AK, USA, 3–7 May 2010; pp. 156–161.

- Kim, H.; Park, H.; Zhang, H. Distance preserving dimension reduction for manifold learning. In Proceedings of the International Conference on Data Mining, Minneapolis, MN, USA, 26–28 April 2007.

- Wang, Q.; Li, J. Combining local and global information for nonlinear dimensionality reduction. Neurocomputing 2009, 72, 2235–2241.

- Lawrence, S.; Giles, C.L.; Tsoi, A.C.; Back, A.D. Face recognition: A convolutional neural-network approach. IEEE Trans. Neural Netw. 1997, 8, 98–113.

- Duffner, S.; Garcia, C. Face recognition using non-linear image reconstruction. In Proceedings of the IEEE Conference on Advanced Video and Signal Based Surveillance, London, UK, 5–7 September 2007; pp. 459–464.

- Zhang, T.; Fang, B.; Tang, Y.Y.; Shang, Z.W.; Xu, B. Generalized discriminant analysis: A matrix exponential approach. IEEE Trans. Syst. Man Cybern. Part B Cybern. 2010, 40, 186–197.

- Lades, M.; Vorbrüggen, J.C.; Buhmann, J.; Lange, J.; von der Malsburg, C.; Würtz, R.P.; Konen, W. Distortion invariant object recognition in the dynamic link architecture. IEEE Trans. Comput. 1993, 42, 300–311.

- Manjunath, B.S.; Chellappa, R.; von der Malsburg, C. A feature based approach to face recognition. In Proceedings of the 1992 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), Champaign, IL, USA, 15–18 June 1992.

- Wiskott, L.; Fellous, J.M.; Krüger, N.; von der Malsburg, C. Face recognition by elastic bunch graph matching. IEEE Trans. Pattern Anal. Mach. Intell. 1997, 19, 775–779.

- Brunelli, R.; Poggio, T. Face recognition: Features versus templates. IEEE Trans. Pattern Anal. Mach. Intell. 1993, 15, 1042–1052.

- Rowley, H.A.; Baluja, S.; Kanade, T. Neural network-based face detection. IEEE Trans. Pattern Anal. Mach. Intell. 1998, 20, 23–38.

- Lanitis, A. Automatic face identification system using flexible appearance models. Image Vis. Comput. 1995, 13, 393–401.

- Lee, T.S. Image representation using 2-D Gabor wavelets. IEEE Trans. Pattern Anal. Mach. Intell. 1996, 18, 959–971.

- Duc, B.; Fischer, S.; Bigun, J. Face authentication with Gabor information on deformable graphs. IEEE Trans. Image Process. 1999, 8, 504–506.

- Gao, Y.; Qi, Y. Robust visual similarity retrieval in single model face databases. Pattern Recognit. 2005, 38, 1009–1020.

- Ngoc-Son, V. Contributions à La Reconnaissance de Visages à Partir D’une Seule Image et Dans un Contexte Non-Contrôlé. Ph.D. Thesis, University of Grenoble, Grenoble, France, 2010.

- Brunelli, R.; Poggio, T. Face recognition: Features versus templates. IEEE Trans. Pattern Anal. Mach. Intell. 1993, 15, 1042–1052.

- Viola, P.; Jones, M.J. Robust real-time face detection. Int. J. Comput. Vis. 2004, 57, 137–154.

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Ahonen, T.; Hadid, A.; Pietikäinen, M. Face recognition with local binary patterns. In Computer Vision–ECCV 2004; Springer: Berlin/Heidelberg, Germany, 2004; pp. 469–481.

- Ojansivu, V.; Heikkilä, J. Blur insensitive texture classification using local phase quantization. In Proceedings of the International Conference on Image and Signal Processing, Cherbourg-Octeville, France, 1–3 July 2008; pp. 236–243.

- Chen, J.; Shan, S.; He, C.; Zhao, G.; Pietikäinen, M.; Chen, X.; Gao, W. WLD: A robust local image descriptor. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 1705–1720.

- Kannala, J.; Rahtu, E. BSIF: Binarized statistical image features. In Proceedings of the 21st International Conference on Pattern Recognition (ICPR), Tsukuba Science City, Japan, 11–15 November 2012; pp. 1363–1366.

- Huang, D.; Shan, C.; Ardabilian, M.; Wang, Y.; Chen, L. Local binary patterns and its application to facial image analysis: A survey. IEEE Trans. Syst. Man Cybern. Part C Appl. Rev. 2011, 41, 765–781.

- Ojala, T.; Pietikäinen, M.; Mäenpää, T. Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 971–987.

- Jin, H.; Liu, Q.; Lu, H.; Tong, X. Face detection using improved LBP under Bayesian framework. In Proceedings of the Third International Conference on Image and Graphics, Hong Kong, China, 18–20 December 2004; pp. 306–309.

- Tan, X.; Triggs, B. Enhanced local texture feature sets for face recognition under difficult lighting conditions. Anal. Model. Faces Gestures 2007, 4778, 168–182.

- Wolf, L.; Hassner, T.; Taigman, Y. Descriptor based methods in the wild. In Proceedings of the ECCV Workshop on Faces in ’Real-Life’ Images: Detection, Alignment, and Recognition, Marseille, France, 12–18 October 2008.

- Nefian, A.V.; Hayes, M.H., III. Face detection and recognition using hidden Markov models. In Proceedings of the IEEE International Conference on Image Processing, Chicago, IL, USA, 4–7 October 1998.

- Jameel, S. Face recognition system using PCA and DCT in HMM. Int. J. Adv. Res. Comput. Commun. Eng. 2015, 4, 13–18.

- Chihaoui, M.; Bellil, W.; Elkefi, A.; Amar, C.B. Face recognition using HMM-LBP. In Hybrid Intelligent Systems; Springer: Cham, Switzerland, 2015; pp. 249–258.

- Hashemi, V.H.; Gharahbagh, A.A. A novel hybrid method for face recognition based on 2D wavelet and singular value decomposition. Am. J. Netw. Commun. 2015, 4, 90–94.

- Urtasun, R.; Darrell, T. Discriminative Gaussian process latent variable model for classification. In Proceedings of the 24th International Conference on Machine Learning, Corvallis, OR, USA, 20–24 June 2007; pp. 927–934.

- Wang, W.; Wang, R.; Huang, Z.; Shan, S. Discriminant analysis on Riemannian manifold of Gaussian distributions for face recognition with image sets. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 2048–2057.

- Gao, Y.; Lee, H.J. Viewpoint unconstrained face recognition based on affine local descriptors and probabilistic similarity. J. Inf. Process. Syst. 2015, 11, 643–654.

- Cho, H.; Roberts, R.; Jung, B.; Choi, O.; Moon, S. An efficient hybrid face recognition algorithm using PCA and Gabor wavelets. Int. J. Adv. Robot. Syst. 2014, 80.

- Qasim, A.; Prashan, P.; Peter, V. A hybrid feature extraction technique for face recognition. Int. Proc. Comput. Sci. Inf. Technol. 2014, 59, 166–170.

- Nebti, S.; Fadila, B. Combining classifiers for enhanced face recognition. In Advances in Information Science and Computer Engineering; Springer: Dordrecht, The Netherlands, 2015; Volume 82.

- Zhang, J.; Wang, Y.; Zhang, Z.; Xia, C. Comparison of wavelet, Gabor and curvelet transform for face recognition. Opt. Appl. 2011, 41, 183–193.

- Singha, M.; Deb, D.; Roy, S. Hybrid feature extraction method for partial face recognition. Int. J. Emerg. Technol. Adv. Eng. 2014, 4, 308–312.

- Sompura, M.; Gupta, V. An efficient face recognition with ANN using hybrid feature extraction methods. Int. J. Comput. Appl. 2015, 117, 19–23.

- Kim, D.J.; Lee, S.H.; Shon, M.Q. Face recognition via local directional pattern. Int. J. Secur. Appl. 2013, 7, 191–200.

- Wu, F. Face recognition based on wavelet transform and regional directional weighted local binary pattern. J. Multimed. 2014, 9, 1017–1023.

- The AT&T Database of Faces. Available online: http://www.cl.cam.ac.uk/research/dtg/attarchive/facedatabase.html (accessed on 27 September 2016).

- AR Faces Databases. Available online: http://www2.ece.ohio-state.edu/~aleix/ARdatabase.html (accessed on 23 September 2016).

- The Oulu Physics Database. Available online: http://www.ee.oulu.fi/research/imag/color/pbfd.html (accessed on 27 September 2016).

- The Yale Database. Available online: http://vision.ucsd.edu/content/yale-face-database (accessed on 27 September 2016).

- The Yale B Database. Available online: http://vision.ucsd.edu/~leekc/ExtYaleDatabase/Yale (accessed on 27 September 2016).

- The XM2VTS Database. Available online: http://www.ee.surrey.ac.uk/Reseach/VSSP/xm2vtsdb/ (accessed on 23 September 2016).

- The CVL Database. Available online: https://www.caa.tuwien.ac.at/cvl/research/cvl-databases/an-off-line-database-for-writer-retrieval-writer-identification-and-word-spotting/ (accessed on 27 September 2016).

- The Bern University Face Database. Available online: http://www.fki.inf.unibe.ch/databases/iam-faces-database (accessed on 27 September 2016).

- The CMU-PIE Face Database. Available online: http://vasc.ri.cmu.edu/idb/html/face/ (accessed on 27 September 2016).

- The Stirling Online Face Database. Available online: http://pics.stir.ac.uk/ (accessed on 23 September 2016).

- The UMIST Face Database. Available online: http://www.sheffield.ac.uk/eee/research/iel/research/face (accessed on 27 September 2016).

- The JAFFE Face Database. Available online: http://images.ee.umist.ac.uk/danny/database.html (accessed on 27 September 2016).

- The FERET Face Database. Available online: http://www.it1.nist.gov/iad/humanid/feret/ (accessed on 27 September 2016).

- The Kuwait University Face Database. Available online: http://www.sc.kuniv.edu.kw/lessons/9503587/dina.htm (accessed on 27 September 2016).

- The HUMAN SCAN Face Database. Available online: http://web.mit.edu/emeyers/www/face_databases.html#humanscan (accessed on 23 September 2016).

- The LFW Face Database. Available online: http://vis-www.cs.umass.edu/lfw/ (accessed on 23 September 2016).

- The FRAV2D Face Database. Available online: http://www.frav.es/databases/FRAV2d/ (accessed on 23 September 2016).

- The MIT Face Database. Available online: http://cbcl.mit.edu/software-datasets/FaceData2.html (accessed on 27 September 2016).

- The FEI Face Database. Available online: https://data.fei.org/Default.aspx (accessed on 23 September 2016).

- The Extended Yale Face Database. Available online: http://vision.ucsd.edu/leekc/ExtYaleDatabase/ExtYaleB.html (accessed on 23 September 2016).

- Gao, Y.; Leung, M.K.H. Face recognition using line edge map. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 764–779.

- Deniz, O.; Castrillon, M.; Hernandez, M. Face recognition using independent component analysis and support vector machines. Pattern Recognit. Lett. 2003, 24, 2153–2157.

- Samaria, F.; Harter, A.C. Parameterisation of a stochastic model for human face identification. In Proceedings of the Second IEEE Workshop on Applications of Computer Vision, Sarasota, FL, USA, 5–7 December 1994.

- Le, T.H.; Bui, L. Face recognition based on SVM and 2DPCA. Int. J. Signal Process. Image Process. Pattern Recognit. 2011, 4, 85–94.

- Kohir, V.V.; Desai, U.B. Face recognition using a DCT-HMM approach. In Proceedings of the 4th IEEE Workshop on Applications of Computer Vision (WACV ’98), Princeton, NJ, USA, 19–21 October 1998; pp. 226–231.

- Davari, P.; Miar-Naimi, H. A new fast and efficient HMM-based face recognition system using a 7-state HMM along with SVD coefficient. Iran. J. Electr. Electron. Eng. Iran Univ. Sci. Technol. 2008, 4, 46–57.

- Sharif, M.; Shah, J.H.; Mohsin, S.; Razam, M. Sub-holistic hidden Markov model for face recognition research. J. Recent Sci. 2013, 2, 10–14.

- Rabab, R.; Abdelkader, M.; Rehab, F. Face recognition using particle swarm optimization-based selected features. Int. J. Signal Process. Image Process. Pattern Recognit. 2009, 2, 51–65.

- Gan, J.Y.; He, S.B. An improved 2DPCA algorithm for face recognition. In Proceedings of the Eighth International Conference on Machine Learning and Cybernetics, Baoding, China, 12–15 July 2009; pp. 2380–2384.

- Kim, K.I.; Jung, K.; Kim, J. Face recognition using support vector machines with local correlation kernels. Int. J. Pattern Recognit. Artif. Intell. 2002, 16, 97–111.

- Zhao, L.; Yang, C.; Pan, F.; Wang, J. Face recognition based on Gabor with 2DPCA and PCA. In Proceedings of the 24th Chinese Control and Decision Conference, Taiyuan, China, 23–25 May 2012; pp. 2632–2635.

- Wang, S.; Ye, J.; Ying, D. Research of 2DPCA principal component uncertainty in face recognition. In Proceedings of the International Conference on Computer Science & Education, Colombo, Sri Lanka, 26–28 April 2013; pp. 26–28.

- Dandpat, S.K.; Meher, S. Performance improvement for face recognition using PCA and two-dimensional PCA. In Proceedings of the International Conference on Computer Communication and Information, Coimbatore, India, 4–6 January 2013.

- Wang, A.; Jiang, N.A.; Feng, Y. Face recognition based on wavelet transform and improved 2DPCA. In Proceedings of the Fourth International Conference on Instrumentation and Measurement, Computer, Communication and Control, Harbin, China, 18–20 September 2014; pp. 616–619.

- Wiskott, L.; Fellous, J.M.; Krüger, N.; von der Malsburg, C. Face recognition by elastic bunch graph matching. IEEE Trans. Pattern Anal. Mach. Intell. 1997, 19, 775–779.

- Raut, S.; Patil, S.H. A review of maximum confidence hidden Markov models in face recognition. Int. J. Comput. Theory Eng. 2012, 4, 119–126.

- Bicego, M.; Castellani, U.; Murino, V. Using hidden Markov models and wavelets for face recognition. In Proceedings of the 12th International Conference on Image Analysis and Processing, Mantova, Italy, 17–19 September 2003; pp. 52–56.

- Bartlett, M.S.; Movellan, J.R.; Sejnowski, T.J. Face recognition by independent component analysis. IEEE Trans. Neural Netw. 2002, 13, 1450–1464.

- Liu, C.; Wechsler, H. Independent component analysis of Gabor features for face recognition. IEEE Trans. Neural Netw. 2003, 14, 919–928.

- Arca, S.; Campadelli, P.; Lanzarotti, R. A face recognition system based on local feature analysis. In International Conference on Audio- and Video-Based Biometric Person Authentication; Springer: Guildford, UK, 2003; pp. 182–189.

- Hajraoui, A.; Sabri, M.; Fakir, M. Complete architecture of a robust system of face recognition. Int. J. Comput. Appl. 2015, 122, 8975–8887.

- Shyam, R.; Singh, Y.N. A taxonomy of 2D and 3D face recognition methods. In Proceedings of the International Conference on Signal Processing and Integrated Networks, Noida, India, 20–21 February 2014; pp. 749–754.

- Lin, S.H.; Kung, S.Y.; Lin, L.J. Face recognition/detection by probabilistic decision-based neural network. IEEE Trans. Neural Netw. 1997, 8, 114–132.

- Tolba, A.S. A parameter-based combined classifier for invariant face recognition. Cybern. Syst. 2000, 31, 289–302.

- Sanguansat, P.; Asdornwised, W.; Jitapunkul, S.; Marukat, S. Class specific subspace based two dimensional principal component analysis for face recognition. In Proceedings of the 18th International Conference on Pattern Recognition, Hong Kong, China, 20–24 August 2006; pp. 1249–1249.

- Ying, L.; Liang, Y. A human face recognition method by improved modular 2DPCA. In Proceedings of the International Symposium on IT in Medicine and Education, Guangzhou, China, 9–11 December 2011; pp. 7–11.

- Deniz, O.; Castrillon, M.; Hernandez, M. Face recognition using independent component analysis and support vector machines. Pattern Recognit. Lett. 2003, 24, 2153–2157.

- Chihaoui, M.; Elkefi, A.; Bellil, W.; Amar, C.B. A novel face recognition system using HMM-LBP. Int. J. Comput. Sci. Inf. Secur. 2016, 14, 308–316.

- Kepenekci, B. Face Recognition Using Gabor Wavelet Transform. Ph.D. Thesis, The Middle East Technical University, Ankara, Turkey, 2001.

- Han, H.; Jain, A.K. 3D face texture modeling from uncalibrated frontal and profile images. In Proceedings of the Fifth International Conference on Biometrics: Theory, Applications and Systems, Arlington, VA, USA, 23–27 September 2012; pp. 223–230.

- Huang, D.; Sun, J.; Yang, X.; Weng, D.; Wang, Y. 3D face analysis: Advances and perspectives. In Proceedings of the Chinese Conference on Biometric Recognition, Shenyang, China, 7–9 November 2014.

- Drira, H.; Amor, B.B.; Srivastava, A.; Daoudi, M.; Slama, R. 3D face recognition under expressions, occlusions, and pose variations. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 2270–2283.

- Huang, D.; Ardabilian, M.; Wang, Y.; Chen, L. 3-D face recognition using eLBP-based facial description and local feature hybrid matching. IEEE Trans. Inf. Forensics Secur. 2012, 7, 1551–1565.

- Said, S.; Amor, B.B.; Zaied, M.; Amar, C.B.; Daoudi, M. Fast and efficient 3D face recognition using wavelet networks. In Proceedings of the 2009 16th IEEE International Conference on Image Processing (ICIP), Cairo, Egypt, 7–10 November 2009; pp. 4153–4156.

- Borgi, M.A.; El’Arbi, M.; Amar, C.B. Wavelet network and geometric features fusion using belief functions for 3D face recognition. In Computer Analysis of Images and Patterns, Proceedings of the 15th International Conference, CAIP 2013, York, UK, 27–29 August 2013; Wilson, R., Hancock, E., Bors, A., Smith, W., Eds.; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2013; Volume 8048, pp. 307–314.

- Soltana, W.B.; Bellil, W.; Amar, C.B.; Alimi, A.M. Multi library wavelet neural networks for 3D face recognition using 3D facial shape representation. In Proceedings of the 2009 17th European Signal Processing Conference, Glasgow, Scotland, 24–28 August 2009; pp. 55–59.

- Bowyer, K.W.; Chang, K.; Flynn, P. A survey of approaches and challenges in 3D and multimodal 3D+2D face recognition. Comput. Vis. Image Understand. 2006, 101, 1–15.

- Lakshmiprabha, N.S.; Bhattacharya, J.; Majumder, S. Face recognition using multimodal biometric features. In Proceedings of the International Conference on Image Information Processing (ICIIP), Shimla, India, 3–5 November 2011; pp. 1–6.

- Radhey, S.; Narain, S.Y. Identifying individuals using multimodal face recognition techniques. Procedia Comput. Sci. 2015, 48, 666–672.

- Stephen, B. Deep learning and face recognition: The state of the art. Proc. SPIE 2015, 9457.

- Li, S.Z.; Chu, R.; Liao, S.; Zhang, L. Illumination invariant face recognition using near-infrared images. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 29, 627–639.

- Huang, D.; Wang, Y. A robust method for near infrared face recognition based on extended local binary pattern. In Proceedings of the International Symposium on Visual Computing, Lake Tahoe, NV, USA, 26–28 November 2007.

- Friedrich, G.; Yeshurun, Y. Seeing people in the dark: Face recognition in infrared images. In Biologically Motivated Computer Vision; Springer: Berlin/Heidelberg, Germany, 2003.

- Jeni, L.A.; Hashimoto, H.; Kubota, T. Robust facial expression recognition using near infrared cameras. J. Adv. Comput. Intell. Intell. Inform. 2012, 16, 341–348.

- Wang, R.; Liao, S.; Lei, Z.; Li, S.Z. Multimodal biometrics based on near-infrared face. In Biometrics: Theory, Methods, and Applications; Wiley-IEEE Press: Hoboken, NJ, USA, 2009; Volume 9.

- Ngiam, J.; Khosla, A.; Kim, M.; Nam, J.; Lee, H.; Ng, A.Y. Multimodal deep learning. In Proceedings of the 28th International Conference on Machine Learning ICML-11, Bellevue, WA, USA, 28 June–2 July 2011; pp. 689–696.

| Approach | Category | Advantages | Disadvantages |
|---|---|---|---|
| GLOBAL | Linear | | |
| GLOBAL | Non-linear | | |
| LOCAL | Interest-point-based face recognition methods | | |
| LOCAL | Local appearance-based face recognition methods | | |
| HYBRID | | | |

| Database | Color/Gray | Image Size (pixels) | No. of Persons | No. of Images/Person | Variation | Description |
|---|---|---|---|---|---|---|
| ORL [93] | Gray | 92 × 112 | 40 | 10 | i, t | |
| AR database [94] | RGB | 576 × 768 | 126 (70 men and 56 women) | 26 | i, e, o, t | |
| Oulu Physics [95] | Gray | 42 × 56 | 125 | 16 | i | |
| Yale [96] | Gray | 320 × 243; 100 × 80 | 15 (14 men and 1 woman) | 11 | i, e | |
| Yale B [97] | Gray | 640 × 480; 60 × 50 | 10 | 576 | p, i | |
| XM2VTS [98] | RGB | 576 × 720 | 295 | | p | |
| CVL [99] | RGB | 640 × 480 | 114 (108 men and 6 women) | 7 | p, e | |
| Bern University Face Database [100] | Gray | | 30 | 10 | | |
| PIE [101] | RGB | 640 × 486 | 86 | 608 | b, p, i, e | |
| University of Stirling online database [102] | Gray | | 300 (men and women) | 1591 | | |
| UMIST [103] | RGB | 220 × 220 | 20 | 19–36 | p | |
| JAFFE [104] | Gray | 256 × 256 | 10 | 7 | e | |
| FERET [105] | RGB | 256 × 384 | 30,000 | | p, i, e, t | |
| KUFDB [106] | Gray | 24 × 24; 36 × 36; 64 × 64 | 50 | 5 | p, i, e | |
| HUMAN SCAN [107] | Gray | 384 × 286 | 23 | 66 | | |
| LFW [108] | RGB | 150 × 150 | 13,233 | | p, i, e, o, t | |
| FRAV2D [109] | RGB transformed to gray | 92 × 112 | 100 | 11 | i, e | |
| MIT [110] | Gray | 480 × 512; 15 × 16 | 16 | 27 | p, i | |
| FEI database [111] | RGB | | 200 | 14 | | |
| Extended Yale B [112] | RGB | 640 × 480 | 28 | 576 | p, i | |

| Database | Approach | Recognition Rate (%) | Details |
|---|---|---|---|
| | | 55.4 | |
| | | 96.43 | |
| AR Database | | 92.67 | |
| | | 94 | |
| | | 96.1 | |
| | | 87 | |
| | | 75.2 | |
| | | 97.3 | |
| | | 99.5 | |
| | | 99 | |
| | | 99.5 | |
| | | 99.5 | |
| | | 95 | |
| | | 91.21 | |
| | | 84.6 | |
| | | 90 | |
| | | 96 | |
| | | 98.33 | |
| | | 97.9 | |
| ORL | | 97.80 | |
| | | 92.8 | |
| | | 92.0 | |
| | | 95.122 | |
| | | 99.5 | |
| | | 94.29 | |
| | | 100 | |
| | | 100 | |
| | | 100 | |
| | | 97 | |
| | | 85 | |
| | | 100 | |
| | | 100 | |
| | | 80.5 | |
| | | 99 | |
| | | 95.45 | |
| | | 99.87 | |
| ORL | | 96 | |
| | | 100 | |
| | | 100 | |
| Bern | | 100 | |
| | | 83.25 | |
| FRAV2D | | 87.875 | |
| | | 84.24 | |
| | | 99.33 | |
| | | 94.44 | |
| | | 97.78 | |
| | | 86.45 | |
| YALE | | 90.7 | |
| | | 99.39 | |
| | | 88.1 | |
| | | 99.39 | |
| | | 99.5 | |
| | | 92.3 | |
| | | 81.25 | |
| | | 95 | |
| | | 84.375 | |
| FERET | | 90 | |
| | | 91.6 | |
| | | 95.1 | |
| | | 85.2 | |

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Chihaoui, M.; Elkefi, A.; Bellil, W.; Ben Amar, C. A Survey of 2D Face Recognition Techniques. Computers 2016, 5, 21. https://doi.org/10.3390/computers5040021
