Problems from diverse application domains can be modeled as DisCSPs. Candidate problems are those that require finding a consistent combination of agent actions and decisions. Typical examples include distributed scheduling [13], distributed interpretation problems [14], multiagent truth maintenance systems (a distributed version of a truth maintenance system) [15,16] and distributed resource allocation problems in a communication network [17]. Because a large number of distributed artificial intelligence (DAI) problems can be conceptualized as DisCSPs, algorithms for solving DisCSPs play a vital role in the development of DAI infrastructure [18].

2.2. SensorDCSP

Motivated by the real distributed resource allocation problem, Fernandez et al. [11] proposed the distributed sensor-mobile problem (SensorDCSP). It consists of a set of sensors s1, s2, ..., sn and a set of mobiles m1, m2, ..., mq.

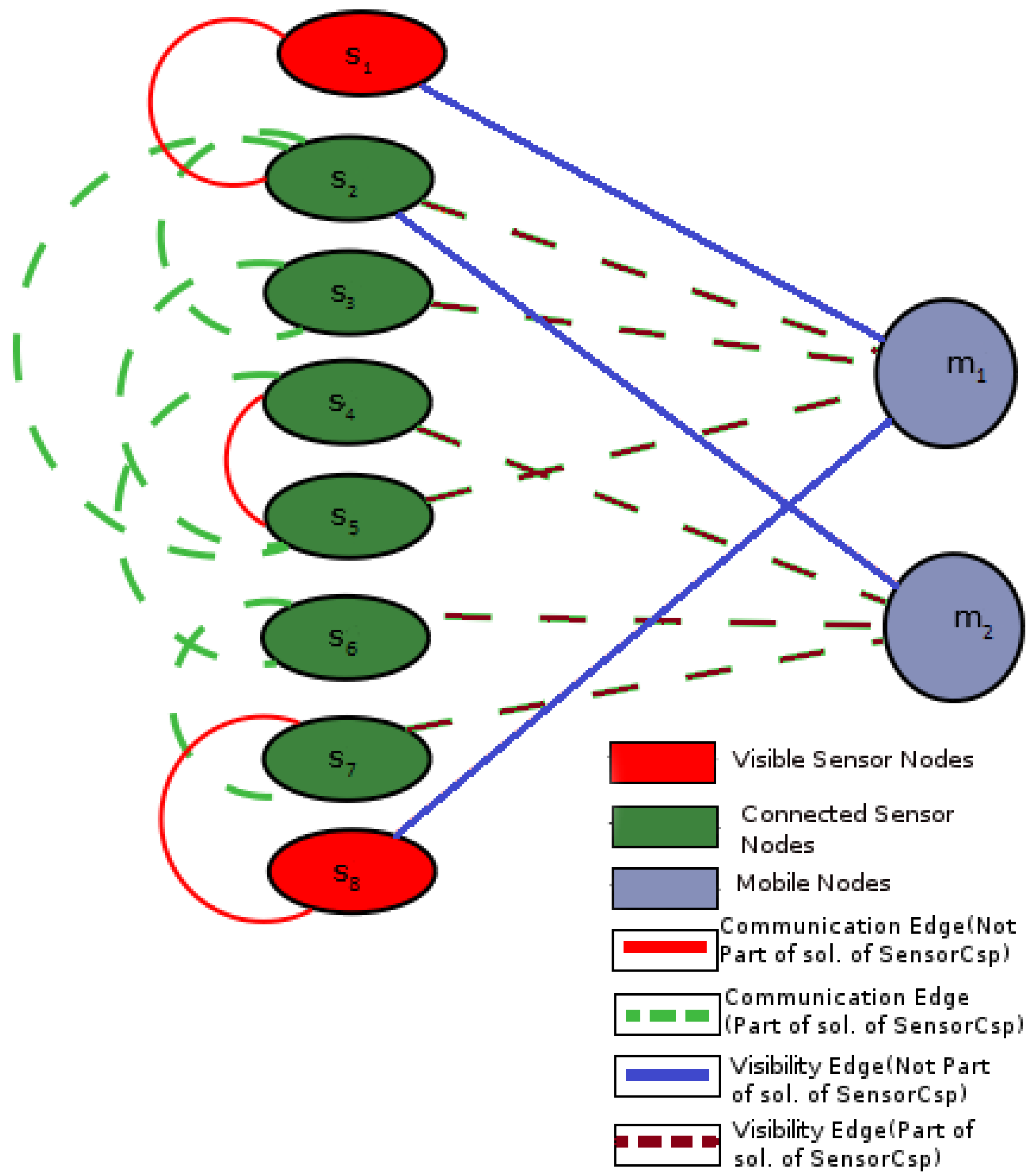

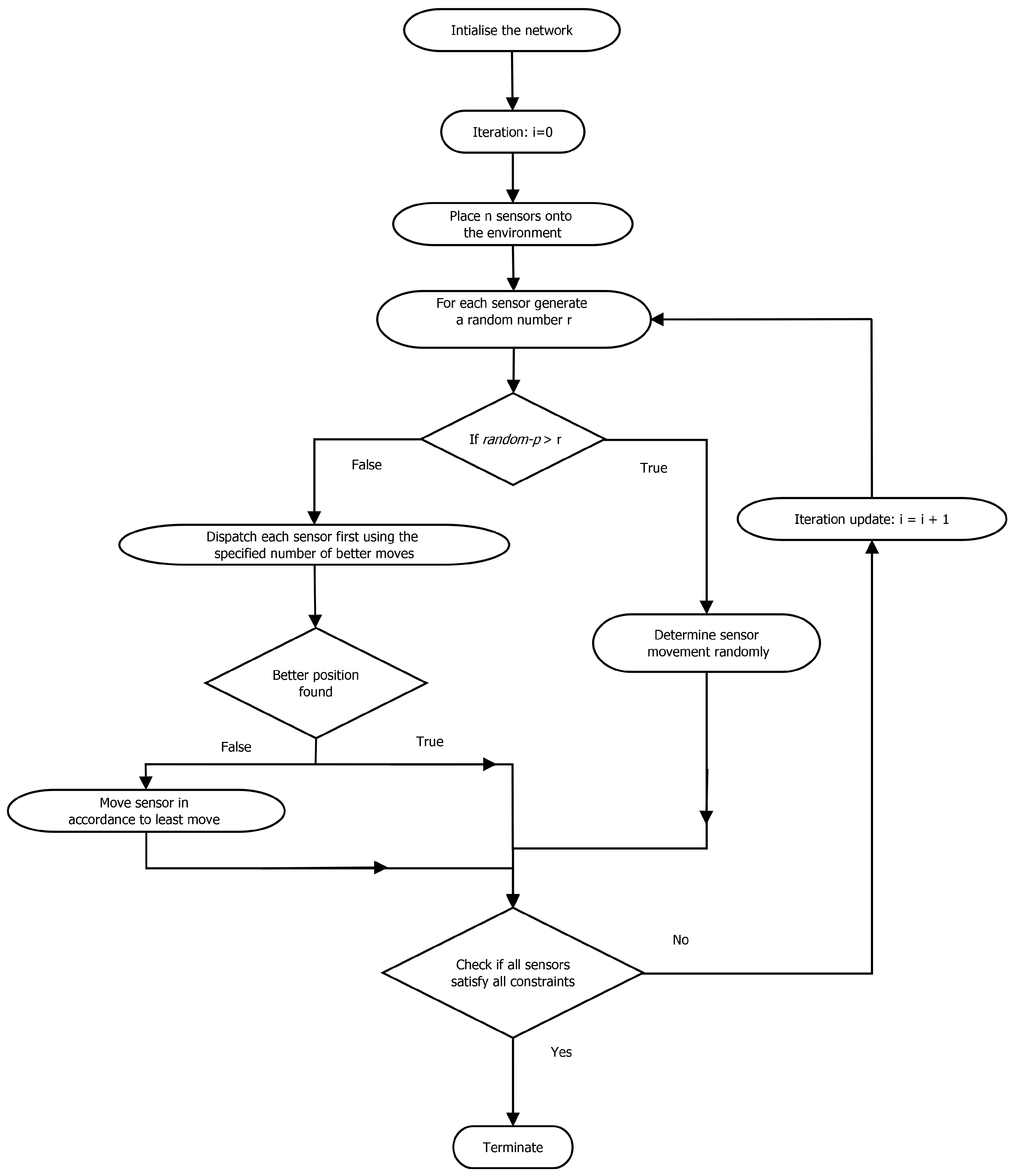

In SensorDCSP, a sensor can monitor at most one mobile, and each mobile must be monitored by three sensors. A valid solution is an assignment of three distinct sensors to each mobile that satisfies two sets of constraints, i.e., visibility and compatibility. An arc between a mobile and a sensor in the visibility graph indicates that the mobile is visible to that sensor. An arc in the compatibility graph between two sensors represents the compatibility between them (Figure 1). In the solution, the three sensors assigned to each mobile form a triangle with the mobile inside it. This sensor-mobile problem is NP-complete [11] and can be easily reduced to the problem of partitioning a graph into cliques of size three, which is a well-known NP-complete problem [21].
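The two constraint sets can be illustrated with a small validity check. This is a minimal sketch, not code from [11]: the function name `is_valid_assignment` and the dictionary and set encodings of the visibility and compatibility graphs are assumptions made for illustration.

```python
from itertools import combinations

def is_valid_assignment(assignment, visible, compatible, k=3):
    """Check a candidate SensorDCSP solution.

    assignment: dict mobile -> iterable of sensors assigned to it
    visible:    dict mobile -> set of sensors that can see the mobile
    compatible: set of frozenset({s, t}) pairs of compatible sensors
    All three encodings are assumptions made for this sketch.
    """
    used = set()
    for mobile, sensors in assignment.items():
        sensors = set(sensors)
        # Exactly k distinct sensors per mobile (k = 3 in SensorDCSP).
        if len(sensors) != k:
            return False
        # Visibility: every assigned sensor must see the mobile.
        if not sensors <= visible.get(mobile, set()):
            return False
        # Compatibility: the assigned sensors must be pairwise compatible.
        if any(frozenset(p) not in compatible for p in combinations(sensors, 2)):
            return False
        # A sensor monitors at most one mobile.
        if used & sensors:
            return False
        used |= sensors
    return True
```

The check rejects an assignment as soon as any one constraint fails, which mirrors the requirement that visibility and compatibility hold simultaneously.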

Figure 1. An example graph G with communication and visibility edges between the sensor and mobile nodes: dashed edges represent a solution to SensorCSP (CSP, constraint satisfaction problem) in this graph (adapted from Bejar et al. [22]).


2.3. Environment, Reactive Rules and Entities Algorithm

The ERE method achieves a notable reduction in communication overhead in comparison to the DisCSP algorithms proposed by Yokoo et al. [3]. This method implements entity-to-entity communication, wherein each entity is notified of the values of other relevant entities by consulting an n × n look-up memory table instead of exchanging values pairwise. The following discussion of the ERE algorithm is based on the pioneering work of Liu et al. [10].

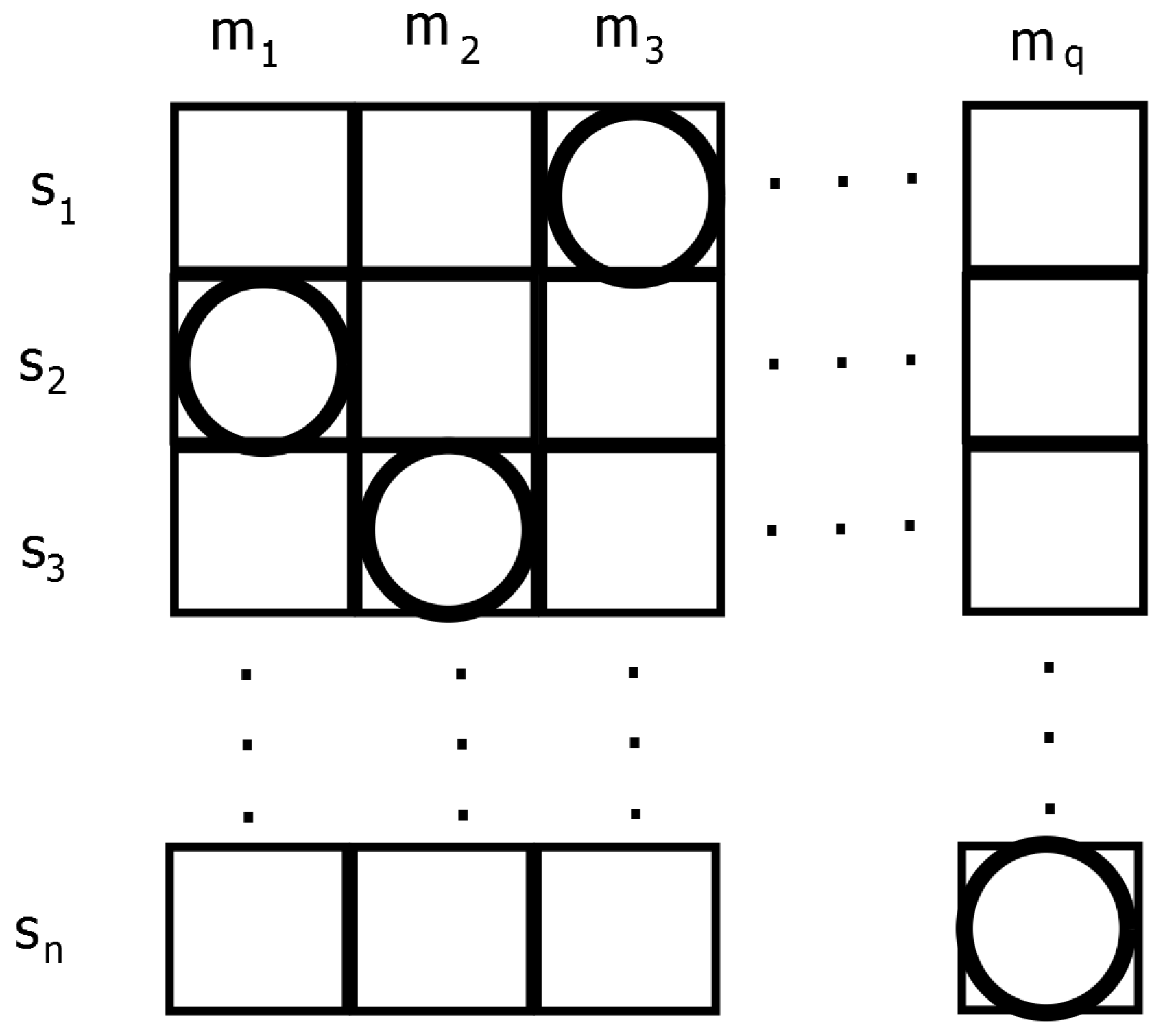

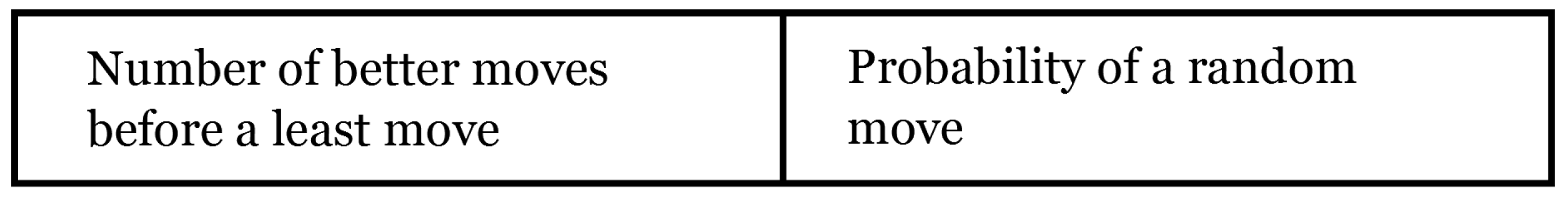

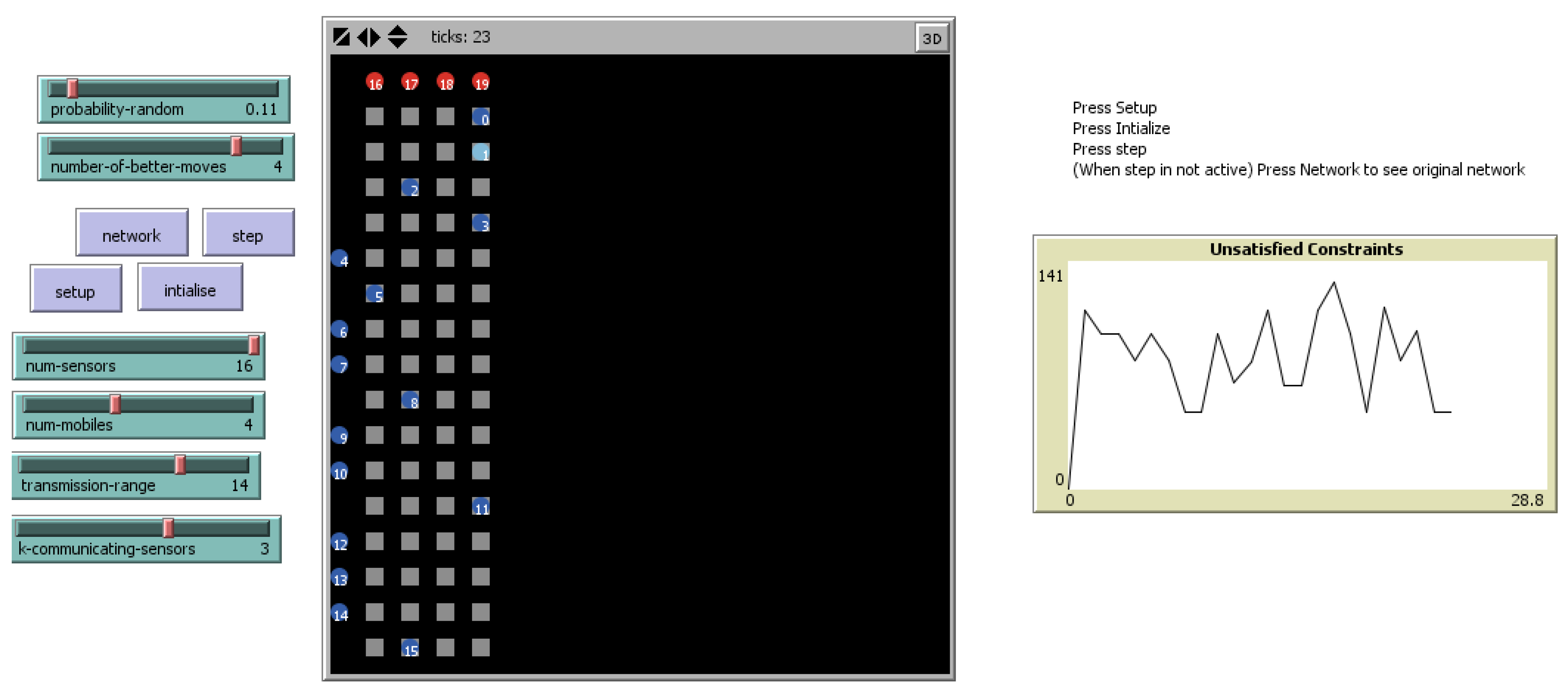

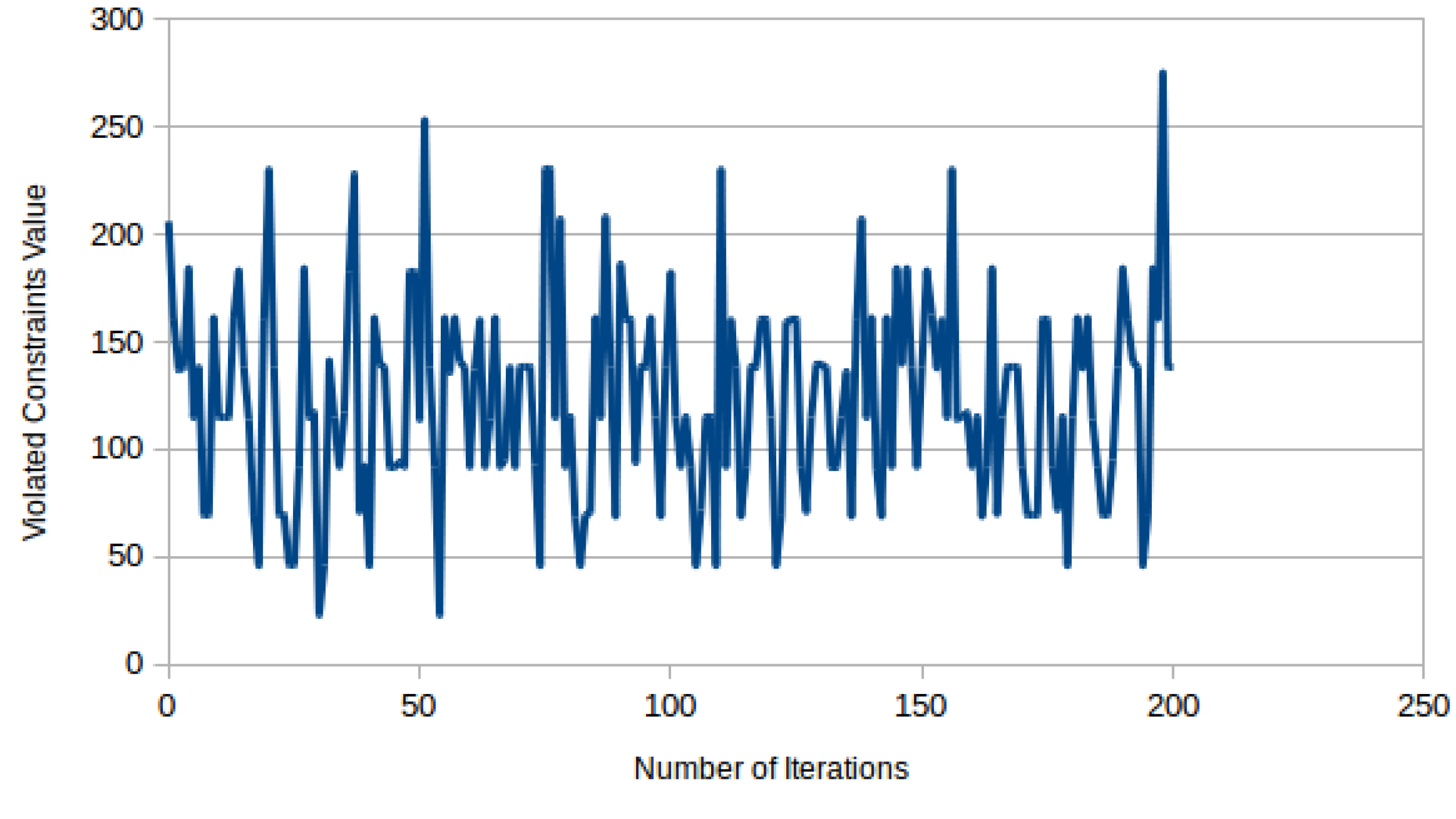

Figure 2 elucidates the movement of entities in the environment. The grid represents the environment, where each row represents the domain of a sensor node, that is, the mobile nodes that it can track, and the length of a row is equal to the total number of mobile nodes in the wireless network. There are n sensors and q mobile nodes, denoted by si and mj, respectively. Each row contains exactly one entity (sensor), and each entity si moves only within its own row. At each step, the positions of the entities provide an assignment to all sensors, whether it is consistent or not. Entities attempt to find better positions that can lead them to a solution state.
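The grid can be represented compactly by storing one column index per row. The following is a minimal sketch under that assumption; the function names are hypothetical, and indices are zero-based rather than starting at one as in the text.

```python
import random

def random_environment_state(n_sensors, q_mobiles, rng=random):
    """One entity per row: positions[i] is the column occupied by sensor s_i."""
    return [rng.randrange(q_mobiles) for _ in range(n_sensors)]

def assignment_from_positions(positions):
    """Read the assignment off the grid: group sensors by the mobile they track."""
    tracked_by = {}
    for sensor, mobile in enumerate(positions):
        tracked_by.setdefault(mobile, []).append(sensor)
    return tracked_by
```

Any list of positions yields an assignment, consistent or not, which is why the entities must keep moving until no constraint is violated.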

Figure 2. An illustration of the environment, reactive rules and entities (ERE) model used (adapted from Liu et al. [10]).


In any state of the ERE system, the positions of all entities indicate an assignment to all sensors (

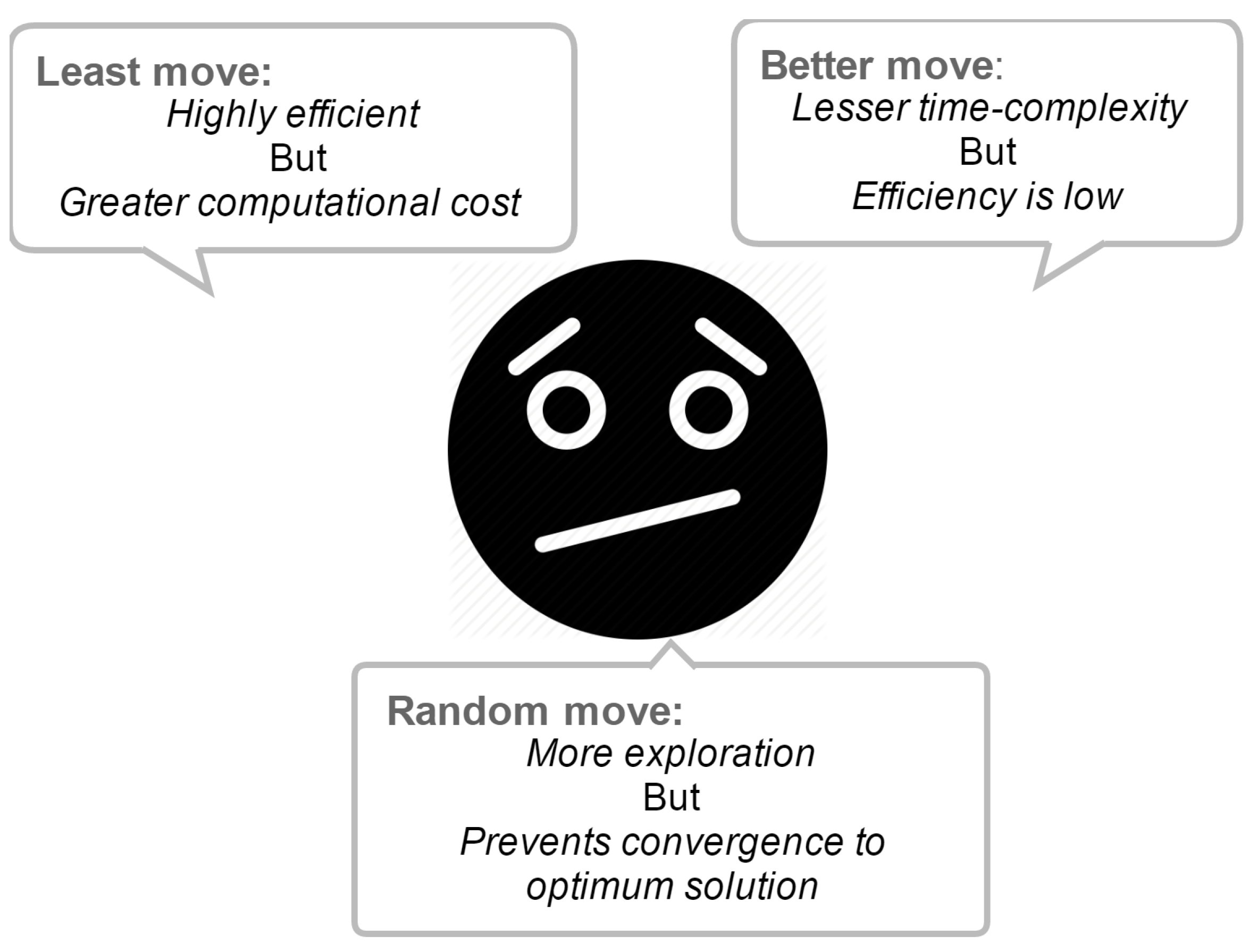

Figure 2). The three rudimentary behaviors exhibited by the ERE algorithm are;

least move,

random move and

better move. It is easier for an entity to find a better position by use of a

least move, since a

least move checks all of the positions within a row in the table, while a

better move randomly selects and checks only one position. This results in the following conundrum:

Many entities cannot find a better position to move at each step, hence if all entities use only

random move and

better move behaviors, the efficiency of the system will be moderate. On the other hand, a

better move has lesser time complexity compared to that of a

least move. Thus, a neutral point between a

least move and a

better move is highly recommended to improve the performance of the system under consideration (

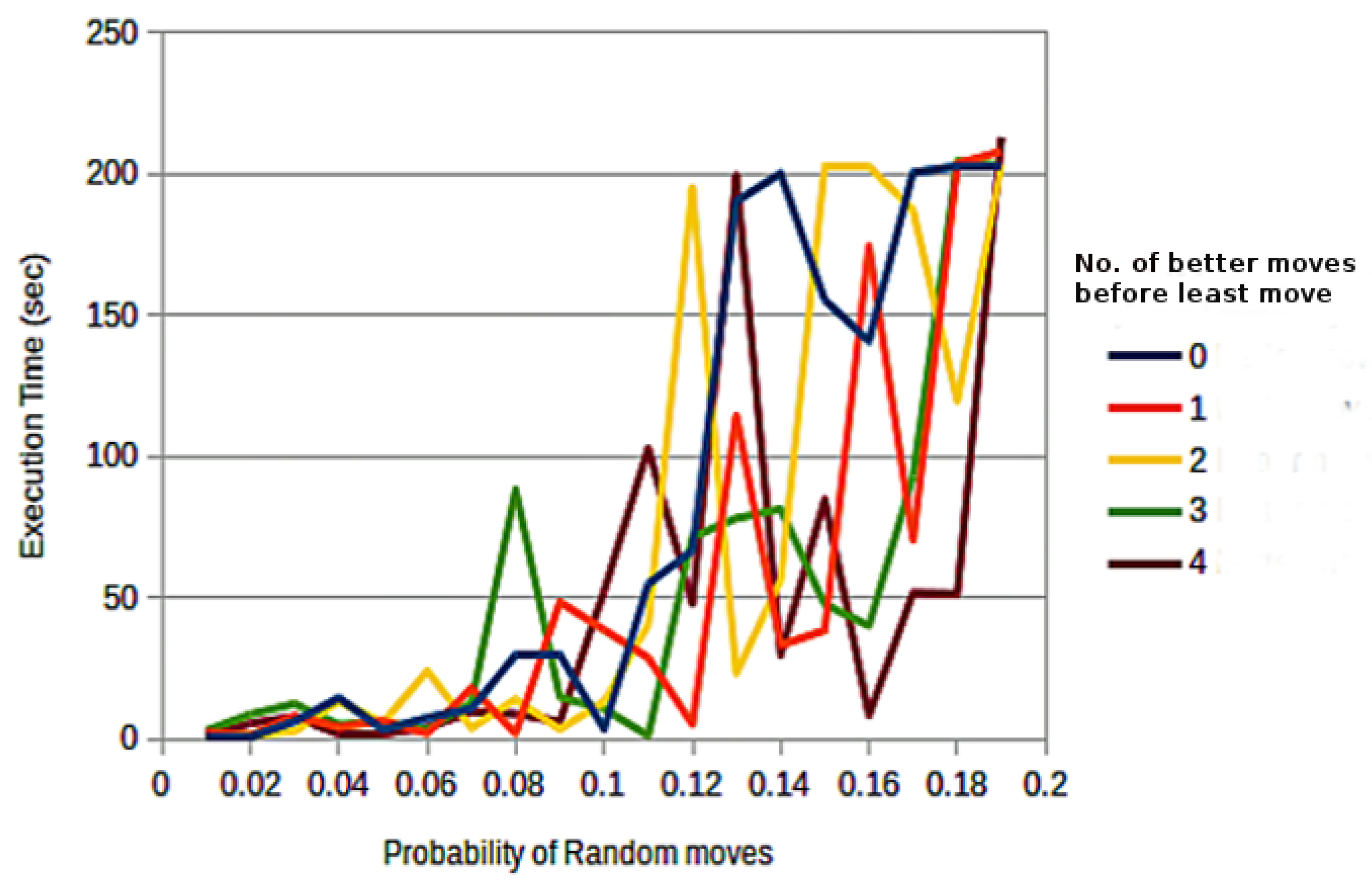

Figure 3).

Figure 3. An illustration of different moves in the ERE model.


To maintain a balance between least moves and better moves, an entity first makes a better move to compute its new position. If it succeeds, the entity moves to the new position. However, if it fails, it continues to perform further better moves until it finds a satisfactory one. If all of its better move attempts fail, it then performs a least move. Too many better moves increase the execution time, whereas too few do not give entities enough chance to find better positions. Therefore, at the beginning of a step, the entity probabilistically selects either a random move or a least move; if it opts for a least move, it is given the chance to attempt a better move before the least move is performed, which maintains the desired system performance [10].
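The balancing strategy described above can be sketched as follows. This is a minimal illustration, not the implementation from [10]: the function name `choose_position`, the parameters `random_p` and `max_better_tries` and their default values are assumptions, and positions are zero-based.

```python
import random

def choose_position(current, violations, random_p=0.05, max_better_tries=2, rng=random):
    """Pick an entity's next position within its row.

    violations: list of violation values, one per cell of the row.
    random_p and max_better_tries are illustrative values, not taken from [10].
    """
    k = len(violations)
    # With a small probability, jump anywhere in the row (random move).
    if rng.random() < random_p:
        return rng.randrange(k)
    # Otherwise try a few cheap better moves: each probes a single random cell.
    for _ in range(max_better_tries):
        r = rng.randrange(k)
        if violations[r] < violations[current]:
            return r
    # All better-move attempts failed: fall back to a full-row least move,
    # taking the leftmost minimum.
    return violations.index(min(violations))
```

Raising `max_better_tries` trades longer steps for a higher chance of avoiding the full-row scan, which is the trade-off the paragraph above describes.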

The following are the three primitive behaviors by which entities accomplish a movement:

Remark 1. Least move: An entity moves to a minimum position, i.e., a position with the lowest violation value in its row, with a probability of least-p. If more than one minimum position exists, the entity chooses the leftmost one in the row.

Remark 2. Better move: An entity moves, with a probability of better-p, to a position that has a smaller violation value than its current position. To achieve this, the entity randomly selects a position, compares its violation value with the violation value of its current position and decides accordingly. ψb defines the better move behavior as follows:

$$\psi_b(x) = \begin{cases} r, & \text{if } v(r) < v(x) \\ x, & \text{otherwise} \end{cases}$$

where x is the entity's current position, v(·) denotes the violation value of a position and r is a random number generated between one and k.

Remark 3. Random move: An entity moves randomly with a probability of random-p, which is smaller than the probabilities of choosing a least move and a better move. Using least moves and better moves alone can leave an entity trapped in a local optimum, preventing it from moving to a new position; the random move allows it to escape.
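The three primitive behaviors can be written as small functions over the list of violation values in an entity's row. This is a sketch under stated assumptions: the function names are hypothetical, the row is a plain Python list, and positions are zero-based, so the random position is drawn from 0 to k − 1 rather than from one to k.

```python
import random

def least_move(violations):
    """Scan the whole row and return the leftmost minimum position."""
    return violations.index(min(violations))

def better_move(current, violations, rng=random):
    """Probe one random cell; move there only if its violation value is smaller."""
    r = rng.randrange(len(violations))
    return r if violations[r] < violations[current] else current

def random_move(violations, rng=random):
    """Jump to any cell of the row, which lets an entity leave a local optimum."""
    return rng.randrange(len(violations))
```

A least move inspects every cell of the row, whereas a better move inspects only one, which is the source of the difference in cost between the two behaviors.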

The ERE method has the following fundamental merits compared to other methodologies prevalent in agent-based systems:

(1) The ERE method does not require any procedure for preserving or distributing consistent partial solutions, unlike the asynchronous weak-commitment search algorithm or the asynchronous backtracking algorithm [3,4]. The latter two algorithms abandon a partial solution when no consistent value exists for a variable.

(2) The ERE method is intended to obtain an approximate solution efficiently, which is useful when the time available for finding an exact solution is limited. On the other hand, the asynchronous weak-commitment search and asynchronous backtracking algorithms are formulated with the aim of completeness.

(3) The functioning of ERE is similar to that of cellular automata [23,24]. The behavior that entities exhibit under the behavioral rules of ERE also resembles the sequential approach followed by local search, another popular method for solving large-scale CSPs [25].

2.4. Model Construction

SensorDCSP can be expressed as an example of DisCSP, wherein each agent represents one mobile and includes three variables, one for each sensor that is required to track the corresponding mobile (Table 1). The domain of a variable is the set of compatible sensors. A binary constraint exists between each pair of variables in the same agent. The intra-agent constraints ensure that different, but compatible, sensors are assigned to a mobile, whereas the inter-agent constraints ensure that every sensor is chosen by at most one agent.

In the model, we simulate sensors as entities (S = s1, s2, s3, ..., sn), where n is the number of sensors, and mobiles (M = m1, m2, m3, ..., mq), where q is the number of mobiles, as variables with the following constraints:

- (1) For each sensor node si, there is a tracked mobile node mj such that mj ∈ zi, where zi denotes the set of mobile nodes within the range of si.

- (2) Let N be the set of all sensor nodes tracking a mobile; then, all nodes in N must be compatible with each other.

- (3) Each mobile must be tracked by exactly k sensor nodes within its range. k is an input parameter to the model and may vary depending on the network.

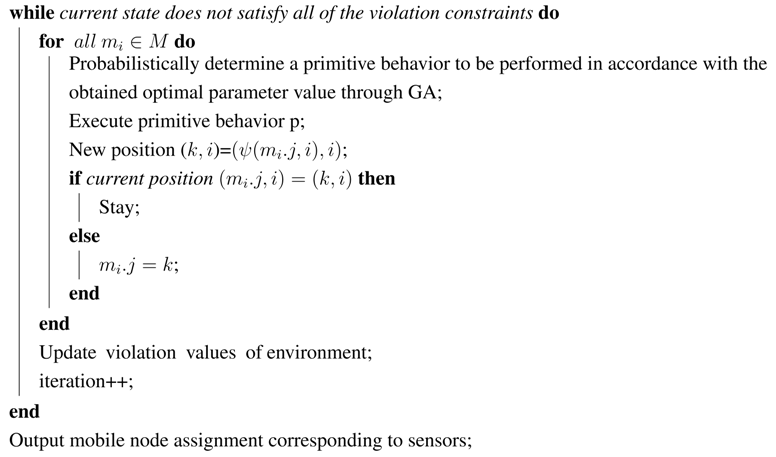

The multi-entity system used is inherently discrete with regard to its space, time and state space. A discrete timer is used in the system to synchronize its operations. At the start, the network is simulated by assigning positions to the sensor and mobile nodes, together with their corresponding ranges. Execution of the ERE algorithm to simulate SensorDCSP begins with the placement of n sensor nodes onto the environment: s1 in row 1, s2 in row 2, ..., sn in row n. The entities are placed in randomly selected areas, thereby supporting the generation of a random network of sensors and mobiles. Subsequently, the developed autonomous system begins execution. At each iteration, all sensors are given an opportunity to determine their movements, i.e., whether or not to move and where to move. In this way, each sensor decides which mobile node to track.
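To decide where to move, a sensor needs a violation value for each cell of its row. The text does not spell out how violations are counted, so the scheme below is purely an assumption for illustration: one violation if the mobile is out of range, one per incompatible sensor already tracking that mobile and one if more than k sensors would track it. The function name `row_violations` and the data encodings are likewise hypothetical, and indices are zero-based.

```python
def row_violations(i, positions, in_range, compatible, q, k=3):
    """Violation value of every cell in row i, with the other sensors held fixed.

    positions:  positions[s] is the mobile currently tracked by sensor s
    in_range:   in_range[s] is the set z_s of mobiles within range of sensor s
    compatible: set of frozenset({s, t}) pairs of compatible sensors
    The counting scheme is an assumption made for this sketch.
    """
    values = []
    for j in range(q):
        others = [s for s, m in enumerate(positions) if m == j and s != i]
        v = 0
        # Constraint (1): the tracked mobile must lie within the sensor's range.
        if j not in in_range[i]:
            v += 1
        # Constraint (2): sensors tracking the same mobile must be compatible.
        v += sum(1 for s in others if frozenset((i, s)) not in compatible)
        # Constraint (3): no more than k sensors may track the same mobile.
        if len(others) + 1 > k:
            v += 1
        values.append(v)
    return values
```

This per-cell count cannot detect a mobile tracked by fewer than k sensors, so a complete check of constraint (3) would need an additional global test.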

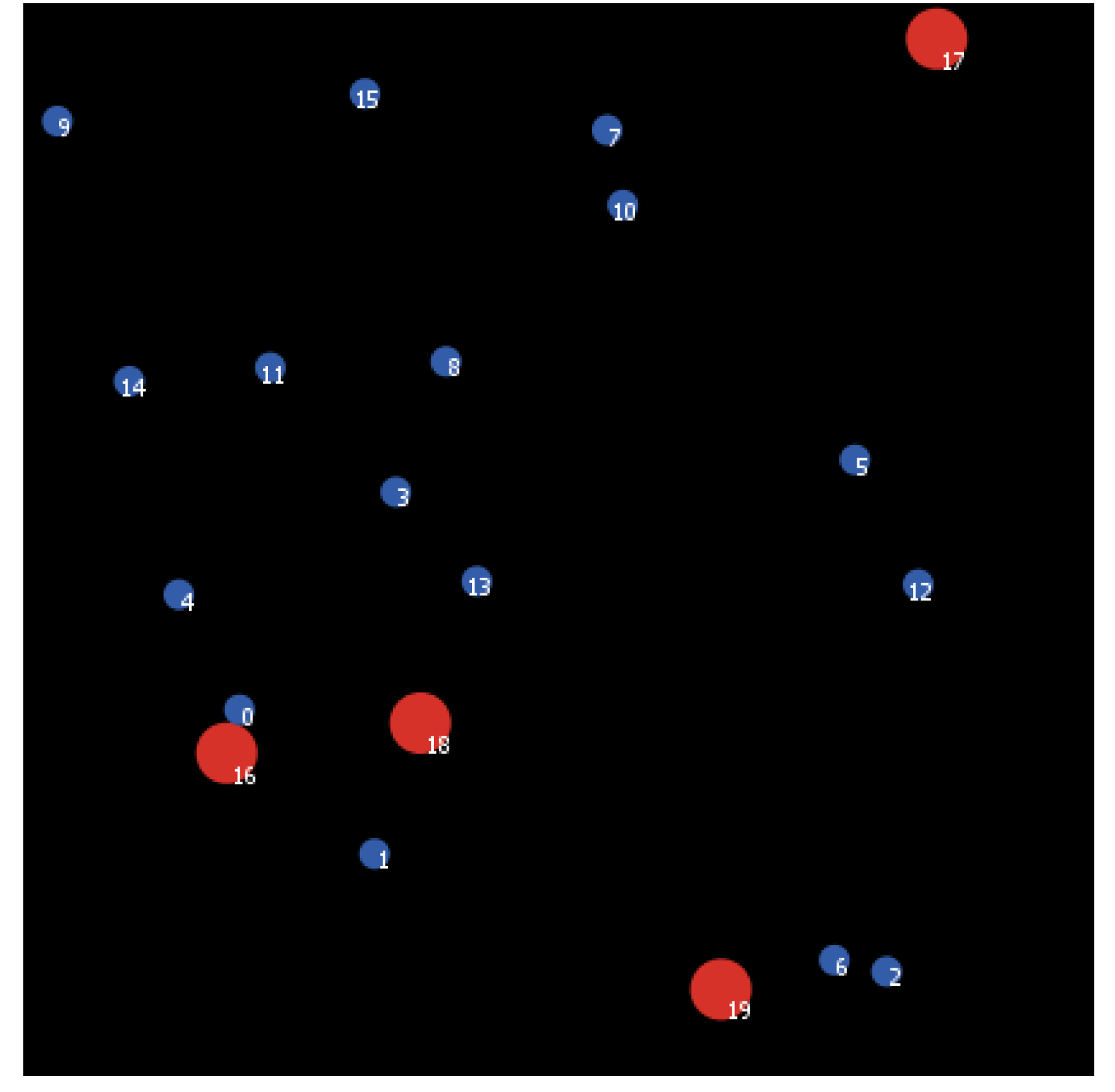

2.5. Termination

After the sensors have moved to decide which mobile node to track, the system checks whether or not all of the sensors are at zero positions, i.e., positions where no constraints are violated. The sensor si can detect the local environment of row i and identify the violation value (the number of unsatisfied constraints) of each cell in row i. When all sensors are at zero positions, there are no unsatisfied constraints, and a solution state has been reached; the algorithm then terminates and outputs the obtained solution. Otherwise, the autonomous system continues to dispatch sensor entities to move in the dispatching order. Figure 4 demonstrates the execution steps of the proposed algorithm.
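The dispatch-and-check cycle can be sketched as a simple loop. This is a minimal illustration and not a transcription of Algorithm 1: the function name `run_ere`, the two callables passed in and the iteration budget `max_iterations` are assumptions.

```python
def run_ere(positions, row_violations, choose_position, max_iterations=1000):
    """Iterate until every sensor sits on a zero-violation cell or the budget runs out.

    row_violations(i, positions) -> list of violation values for row i
    choose_position(current, violations) -> new column for the entity
    Both callables and max_iterations are assumptions of this sketch.
    """
    positions = list(positions)

    def solved():
        # All sensors at zero positions means no constraint is violated.
        return all(row_violations(i, positions)[positions[i]] == 0
                   for i in range(len(positions)))

    for iteration in range(max_iterations):
        if solved():
            return positions, iteration
        # Dispatch the entities one by one in a fixed order.
        for i in range(len(positions)):
            violations = row_violations(i, positions)
            positions[i] = choose_position(positions[i], violations)
    # Final check after the last round of moves.
    return (positions, max_iterations) if solved() else (None, max_iterations)
```

Returning `None` when the budget is exhausted reflects that the method aims at efficiency rather than completeness, so a run may end without a solution.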

| Algorithm 1: Pseudocode of the adopted ERE algorithm [10] |

| iteration=0; |

| Assign initial positions to the entities, namely the sensor and mobile nodes; |

| Assign mobile node positions and violation values pertaining to sensor position and the monitored mobile; |

![Computers 04 00002 i001]() |

Table 1. Mapping of SensorDCSP as DisCSP (distributed CSP).


| DCSP Model | Sensor-Centered Approach |
|---|---|
| Agents | Sensors |
| Variables | Mobiles |
| Intra-agent | Only one mobile per sensor |
| Inter-agent | k communicating sensors per mobile |

Figure 4. Flow diagram of the algorithm.
