1. Introduction

In recent times, researchers have been extensively investigating steganography techniques and their applications in various contexts. With the growing concept of smart cities worldwide, researchers are combining steganography techniques with artificial intelligence to enhance the security of these frameworks [1,2]. Steganography is the practice of hiding information in a cover medium and is sometimes referred to as hiding in plain sight. Instead of watermarking the cover media, steganography embeds and hides data in the actual cover media by modifying the media data bit structure [2,3].

Data security becomes paramount when introducing aspects of IoT, because it involves numerous systems that communicate over a network and share a significant amount of data. IoT devices primarily exchange data over the internet using various network protocols. Unauthorized interceptors can manipulate these network communication protocols and access the data that is being shared between the IoT systems. Some of this data may contain sensitive and private details, such as health records and customer information. As a result, data should be protected as far as possible, especially as IoT adoption increases over time [3].

The rapid expansion of IoT devices has led to a significant increase in data generation across various fields, including smart cities, industrial automation, and healthcare. While this growth is positive, it also presents a substantial challenge in terms of cyber/data security, as well as authenticity [3,4]. Cybercriminals are using artificial intelligence to mimic the operation of IoT systems, which challenges trust in the digital sphere. Furthermore, advances such as Generative AI (GenAI) can be used to create deepfakes that resemble the data generated by smart IoT devices (e.g., image files, videos, and audio). As a result, there is a need for more research on how digital trust can be maintained in today’s era, where IoT and AI models are being rapidly adopted [4,5,6].

In IoT systems, techniques such as image steganography and artificial neural networks (ANNs) can be integrated to enhance digital authenticity and trust [5,6]. These integrations will enable proactive IoT data forensic validation, ensuring that the data shared between IoT devices is authentic and has not been intercepted or generated by AI (e.g., deepfakes) [2]. Furthermore, these measures will ensure that unauthorized interception of IoT data is rapidly detected and mitigated. Use cases include secure real-time intelligent surveillance systems that use image steganography and ANNs for data forensic validation [2,3,4,5].

The use of multi-layered security models in IoT systems introduces redundancy and enhanced protection against unauthorized data interception, rather than relying solely on digital signatures. A multi-layered approach enables more granular security in IoT systems and can integrate with state-of-the-art models for enhanced security and automation. In this context, a two-layer approach enables authenticity validation through both pattern recognition and embedded validation codes. This approach provides higher robustness than digital signature models alone. Multi-layered approaches provide better security; however, they typically require more processing power and time than digital signatures [1,2,3,4,5,6].

This study focuses on the integration of image steganography and ANN to enable IoT data forensic validation and hence counter deepfakes or intercepted media. The data forensic validation method referred to is achieved through a two-layered security framework; the image steganography component embeds and hides data within an image, while the ANN will be used to validate the decrypted embedded data and detect any data inconsistencies. Data forensic validation, as used in this paper, refers to the use of decoded codes/signatures embedded in image pixels through steganography algorithms to validate the authenticity of the image, ensuring that it was captured by an authentic device and not intercepted or created using a deepfake. The interaction of these components enables data security and authenticity; hence, attackers will find it difficult to successfully intercept, manipulate, or use deepfakes to forge data in an undetectable manner [2,4,5,6].

Contribution of this study: Image steganography embeds and hides data using techniques such as least significant bit (LSB) insertion, which alters the least significant bit of pixel values to hide data [1,2]. The discrete cosine transform (DCT) enables embedding data in the frequency domain of the cover media, thereby making it more robust against compression [2]. In addition to the DCT, the discrete wavelet transform (DWT) technique embeds data into different frequency bands, enabling multi-resolution analysis [3]. These embedding techniques will be used to conceal validation codes/metadata within the pixels of the IoT camera images, enabling forensic validation of the pictures and secure data transmission. An ANN model will be trained to recognize patterns and detect anomalies in the IoT device images. The incoming data from the smart camera device is compared against the learned patterns to detect anomalies and alterations. Through the use of the ANN model, only validated data is accepted; therefore, the method enhances the security of IoT camera systems.

This paper is organized into six sections. Section 2 outlines previous similar state-of-the-art works and summarizes the contribution of this study in line with the identified study gaps. Section 3 presents the proposed methodology, which includes the proposed embedding scheme. In Section 4, the system model and assumptions are discussed. Section 5 presents the experimental evaluation results and discussion of findings. Finally, Section 6 concludes the work and outlines ideas for future related work.

2. Related Works

The researchers in [2] introduced the components of privacy and authenticity in IoT networks by using a weighted pixel classification image steganography. The work focused on improving performance variables, including embedding capacity, imperceptibility, and the security of the transmitted information. Although this state-of-the-art study yielded better PSNR values, ensuring imperceptibility, compared to other methods, there remains a risk of data tampering due to the lack of data forensic validation for authenticity. The proposed framework presented in this paper aims to introduce the integration with ANN for data forensic validation, in addition to other performance parameters, as per the findings in [2].

The work presented in [3] focused on addressing the challenges arising from the significant advancement of IoT, specifically in the healthcare sector. Some of the difficulties identified in the study are the security and integrity of medical data in healthcare service applications. The authors in [3] proposed a dual security model for securing diagnostic text data in medical images. The proposed model integrated steganographic algorithms, such as the 2-D discrete wavelet transform at one level (2D-DWT-1L) and the 2-D discrete wavelet transform at two levels (2D-DWT-2L), with encryption schemes. The schemes included the Advanced Encryption Standard and the Rivest, Shamir & Adleman algorithms. While this study demonstrated improved results compared to other state-of-the-art methods, it did not include aspects of integration with ANNs for data forensic validation. Hence, our study proposes a framework that integrates ANNs to enable automated data forensic validation.

Another related previous study is presented in [7]. This study presents a novel steganography method that utilizes the singular value decomposition (SVD) and the tunable Q-factor wavelet transformation (TQWT). It applies the TQWT to decompose the cover signal into discrete frequency sub-bands and uses the SVD to decompose the high-frequency sub-band coefficients into singular values. This novel technique demonstrated improved patient information security and confidentiality, representing a substantial development in steganography studies. Although the state-of-the-art research in [7] presents valuable findings, it focuses more on the healthcare application and does not include aspects of ANNs to counter deepfakes or to perform forensic data validation. Hence, the study presented in this paper aims to extend those contributions.

Ref. [8] examines several steganographic techniques for safe data concealing. Some of the methods included are image steganography masking schemes, as well as the LSB-1, 2, and 3 methods. In study [9], researchers employed encrypted secret messages to develop an advanced steganography algorithm. At the encoding side, the encryption process includes a stego-key before embedding the data. This stego-key ensures that, in the event of unauthorized interception, the attacker will not be able to decrypt the secret messages, thereby preserving the confidentiality of the information. The study in [9] also focused more on imperceptibility aspects and did not include any elements of authenticity or data forensic validation on the receiver side. Neither of the novel studies in [8] and [9] integrated aspects of ANNs into the steganography methods they proposed, nor did they investigate the data forensic validation aspects. Hence, there is a need to explore the identified gap and develop more robust steganography systems.

3. Proposed Scheme and Methodology

This section discusses the methodology of the study and provides an overview of the proposed scheme’s background.

In this study, we propose a discrete cosine transform-least significant bit (DCT-LSB) method with a 2-bit resolution (DCT-LSB-2) for steganography systems. A 2-bit resolution was chosen to build upon our previous work by introducing a two-layered approach, while also conserving the computing resources available to us at the time of experimentation. The forward technique is applied on the sender side of the system to encrypt and embed the secret data bits within the cover image, and a reverse DCT-LSB-2 is used on the receiver side to decrypt and recover the hidden information. The hidden information can be a validation code embedded within the pixels of the image captured by the smart camera device (e.g., an IP camera), and it can be used as a forensic validation criterion on the receiver side. Successful data forensic validation of the code within the pixels of the image results in acceptance. The ANN will be located on the receiver side and will perform the reverse DCT-LSB-2 operation, then validate whether the decrypted data matches the criteria of the initial embedded validation code.

The proposed discrete cosine transform-least significant bit 2 (DCT-LSB-2) method utilizes the ASCII method to analyze the images consecutively captured by the IoT device, converting them into decimal and binary forms [1,2]. Before encryption is initiated, the algorithm verifies the image dimensions and the size of the secret data. The image captured by the IoT camera is partitioned into RGB (Red, Green, Blue) channels, and the two least significant bits of each image pixel are replaced with pairs of the hidden information bits (bit substitution). This method alters coefficients in the transform domain and randomly embeds the private information in the image pixels, thereby reducing the chances of steganalysis, distortion, and cross-detection. The algorithm runs until all the bits of the hidden information are inserted into the image pixels. As a result, the secret data is embedded before the captured image is transmitted from the IoT device (e.g., an IP camera); as previously stated, this data can be a validation code that will be used at the receiver side by the ANN for authenticity acceptance [7,8,9,10,11,12].
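The bit-substitution step described above can be illustrated with a minimal Python sketch. It covers only the 2-bit LSB replacement on a flat list of channel values, written sequentially; the DCT stage and the randomized placement of the proposed scheme are deliberately omitted. The function names and the toy cover data are hypothetical and are not taken from the authors' implementation.

```python
def text_to_bit_pairs(message):
    """Split the UTF-8 bytes of a message into 2-bit values, high pair first."""
    pairs = []
    for byte in message.encode("utf-8"):
        for shift in (6, 4, 2, 0):
            pairs.append((byte >> shift) & 0b11)
    return pairs


def embed_lsb2(channels, message):
    """Return a copy of channels with the message written into the two LSBs."""
    pairs = text_to_bit_pairs(message)
    if len(pairs) > len(channels):
        raise ValueError("cover image too small for this message")
    stego = list(channels)
    for i, pair in enumerate(pairs):
        # Clear the two least significant bits, then write the 2-bit payload.
        stego[i] = (stego[i] & 0b11111100) | pair
    return stego


def extract_lsb2(channels, n_bytes):
    """Rebuild n_bytes from the two LSBs of the first 4 * n_bytes values."""
    data = bytearray()
    for b in range(n_bytes):
        byte = 0
        for value in channels[4 * b: 4 * b + 4]:
            byte = (byte << 2) | (value & 0b11)
        data.append(byte)
    return data.decode("utf-8")


# Toy cover: 64 channel values (hypothetical data, not an IoT camera image).
cover = [(37 * i) % 256 for i in range(64)]
code = "Nkuna@Test!ng"
stego = embed_lsb2(cover, code)
recovered = extract_lsb2(stego, len(code.encode("utf-8")))
max_change = max(abs(a - b) for a, b in zip(cover, stego))
```

Because only the two lowest bits of each value are touched, no channel value changes by more than 3 intensity levels, which is why this kind of substitution tends to remain visually imperceptible.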

The formula for calculating the DCT coefficients matrix for a single 8 × 8 block of image pixels is:

where H

x and H

y are the horizontal and vertical spatial frequencies for the integers 0 ≤ x, y < 8, respectively. And,

After computing the DCT coefficients matrix, each coefficient is divided by the corresponding value in the luminance quantization matrix and rounded to the nearest integer, as per the equation below. The result is then a quantized DCT matrix.

where

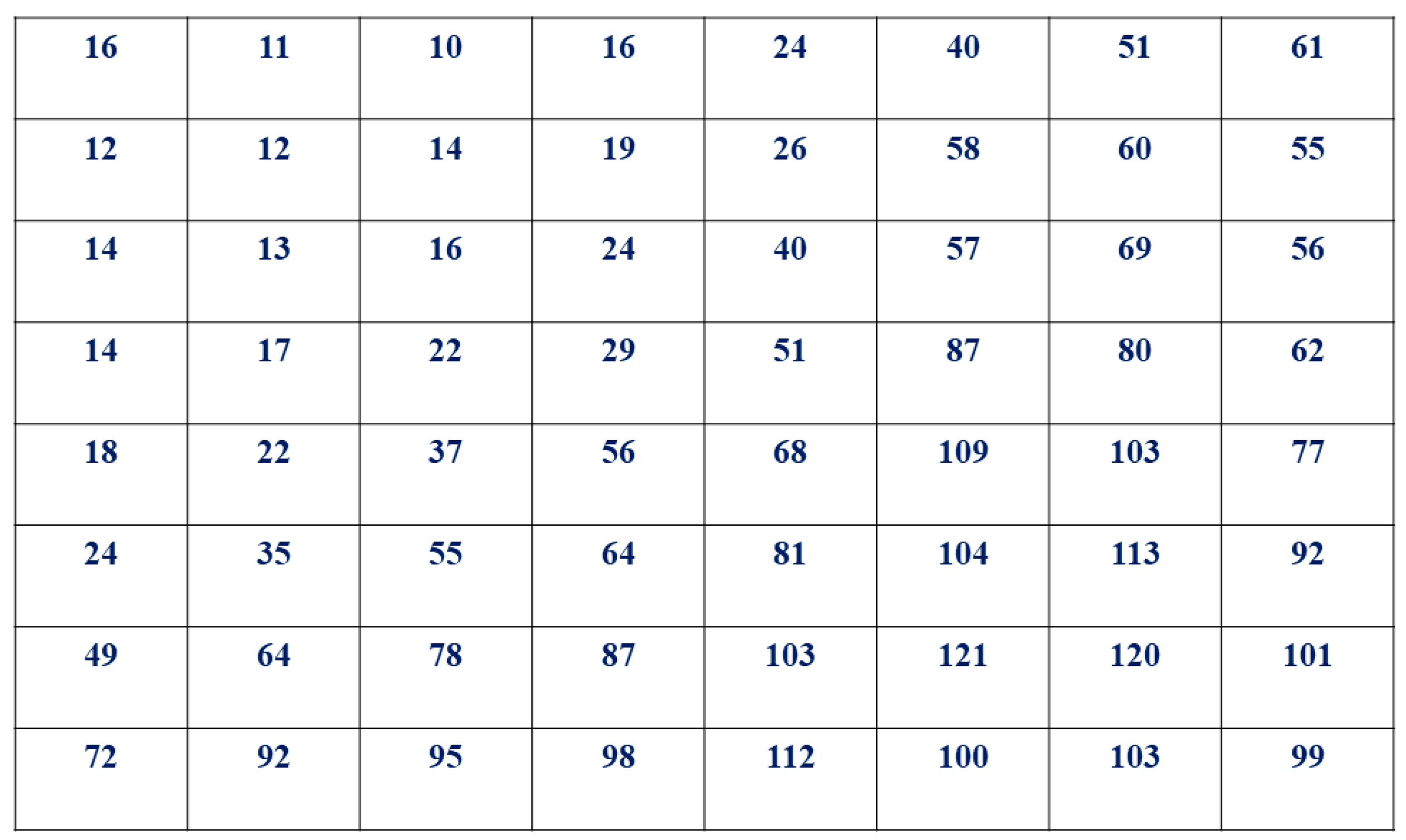

Q

pq is a luminance quantization matrix with 8 × 8 (64) elements, presented in

Figure 1 below.
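As a worked illustration of the two steps just described, the sketch below computes the DCT of one 8 × 8 block and quantizes it. It assumes the standard DCT-II with JPEG normalization and the standard JPEG luminance quantization table; whether Figure 1 contains exactly this table is an assumption, and the names `dct_8x8`, `quantize`, and `Q_LUMA` are hypothetical.

```python
import math

# Standard JPEG luminance quantization table (assumed to match Figure 1).
Q_LUMA = [
    [16, 11, 10, 16, 24, 40, 51, 61],
    [12, 12, 14, 19, 26, 58, 60, 55],
    [14, 13, 16, 24, 40, 57, 69, 56],
    [14, 17, 22, 29, 51, 87, 80, 62],
    [18, 22, 37, 56, 68, 109, 103, 77],
    [24, 35, 55, 64, 81, 104, 113, 92],
    [49, 64, 78, 87, 103, 121, 120, 101],
    [72, 92, 95, 98, 112, 100, 103, 99],
]


def dct_8x8(block):
    """2-D DCT-II of an 8x8 block with the 1/4 * C(u) * C(v) normalization."""
    def c(k):
        return 1 / math.sqrt(2) if k == 0 else 1.0

    out = [[0.0] * 8 for _ in range(8)]
    for u in range(8):
        for v in range(8):
            total = 0.0
            for x in range(8):
                for y in range(8):
                    total += (block[x][y]
                              * math.cos((2 * x + 1) * u * math.pi / 16)
                              * math.cos((2 * y + 1) * v * math.pi / 16))
            out[u][v] = 0.25 * c(u) * c(v) * total
    return out


def quantize(coeffs, table=Q_LUMA):
    """Divide each coefficient by its table entry and round to an integer."""
    return [[round(coeffs[p][q] / table[p][q]) for q in range(8)]
            for p in range(8)]


# A flat block has energy only in the DC coefficient.
flat_block = [[128] * 8 for _ in range(8)]
coeffs = dct_8x8(flat_block)
quantized = quantize(coeffs)
```

For the flat block, the DC coefficient is 8 × 128 = 1024, which quantizes to 64 with a table entry of 16, while every AC coefficient quantizes to zero. Note that Python's `round` resolves exact halves to the nearest even integer, which may differ from other tools in tie cases.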

To evaluate the performance of the proposed method, key parameters such as the peak signal-to-noise ratio (PSNR), mean square error (MSE), and structural similarity index (SSIM) will be utilized. The secret information for experimental purposes will be a static validation code consisting of numerical values, text characters, and special characters. The ANN model will be pre-trained to verify the decoded validation code and confirm that the received image is authentic, has not been tampered with, and is not AI-generated (deepfake).

The structural similarity index is used to quantify the similarity between two images. It considers multiple aspects of the human visual system and quantifies the apparent quality of images. Three key features of the image are compared: structure, luminance, and contrast. The result produces a value between −1 and 1, where a negative value means dissimilarity, 0 means no similarity, and a value of 1 indicates perfect similarity. Hence, higher SSIM values denote greater similarity between images [2,3,4,5].

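The formulae for calculating the SSIM, MSE, Embedding Rate, and PSNR are given below [1,2,3,4,5,6,7,8,9,10]:

$$\mathrm{SSIM}(x, y) = \frac{(2\mu_x\mu_y + C_1)(2\sigma_{xy} + C_2)}{(\mu_x^2 + \mu_y^2 + C_1)(\sigma_x^2 + \sigma_y^2 + C_2)}$$

$$\mathrm{MSE} = \frac{1}{M N}\sum_{i=1}^{M}\sum_{j=1}^{N}\left(x_{ij} - y_{ij}\right)^2$$

$$\mathrm{ER} = \frac{N_b}{H \times W}\ \text{(bpp)}$$

$$\mathrm{PSNR} = 10\log_{10}\left(\frac{255^2}{\mathrm{MSE}}\right)\ \text{(dB)}$$

where:

$\mu_x$ and $\mu_y$ are the average luminance of the cover image and the stego image.

$\sigma_{xy}$ is the covariance of the pixel intensities between the cover image and the stego image.

$C_1$ and $C_2$ are added constants to prevent instability when the denominators are close to zero.

$\sigma_x^2$ and $\sigma_y^2$ are the variances of the pixel intensities of the cover image and the stego image.

$M$ is the number of rows of the cover image.

$N$ is the number of columns of the cover image.

$x_{ij}$ is the pixel value from the cover image.

$y_{ij}$ is the pixel value from the stego image.

$N_b$ is the number of secret hidden data bits.

$H$ is the cover image height.

$W$ is the cover image width.

255 is the maximum pixel value of an 8-bit image channel.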
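The four evaluation metrics can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions: images are plain nested lists of 8-bit grayscale values, SSIM is computed over a single global window rather than the sliding windows used by common toolboxes, and the constants use the conventional choices K1 = 0.01 and K2 = 0.03. All function names are hypothetical.

```python
import math


def mse(cover, stego):
    """Mean square error between two equally sized images."""
    rows, cols = len(cover), len(cover[0])
    total = sum((cover[i][j] - stego[i][j]) ** 2
                for i in range(rows) for j in range(cols))
    return total / (rows * cols)


def psnr(cover, stego, peak=255):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    err = mse(cover, stego)
    return math.inf if err == 0 else 10 * math.log10(peak ** 2 / err)


def ssim_global(cover, stego, peak=255):
    """SSIM computed over the whole image as one window (a simplification)."""
    x = [p for row in cover for p in row]
    y = [p for row in stego for p in row]
    n = len(x)
    mu_x, mu_y = sum(x) / n, sum(y) / n
    var_x = sum((a - mu_x) ** 2 for a in x) / (n - 1)
    var_y = sum((b - mu_y) ** 2 for b in y) / (n - 1)
    cov = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / (n - 1)
    c1, c2 = (0.01 * peak) ** 2, (0.03 * peak) ** 2
    return ((2 * mu_x * mu_y + c1) * (2 * cov + c2)) / (
        (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2))


def embedding_rate(n_bits, height, width):
    """Embedding rate in bits per pixel (bpp)."""
    return n_bits / (height * width)


# Toy 2x2 images differing by one intensity level in one pixel.
cover_img = [[10, 20], [30, 40]]
stego_img = [[11, 20], [30, 40]]
toy_mse = mse(cover_img, stego_img)
toy_psnr = psnr(cover_img, stego_img)
toy_ssim = ssim_global(cover_img, stego_img)
```

A single changed pixel out of four gives an MSE of 0.25, and the SSIM stays just below 1, which mirrors the behavior expected of a stego image that differs from its cover only in low-order bits.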

4. System Model and Assumptions

In this section, the system model and the pre-trained ANN model are presented, with assumptions discussed.

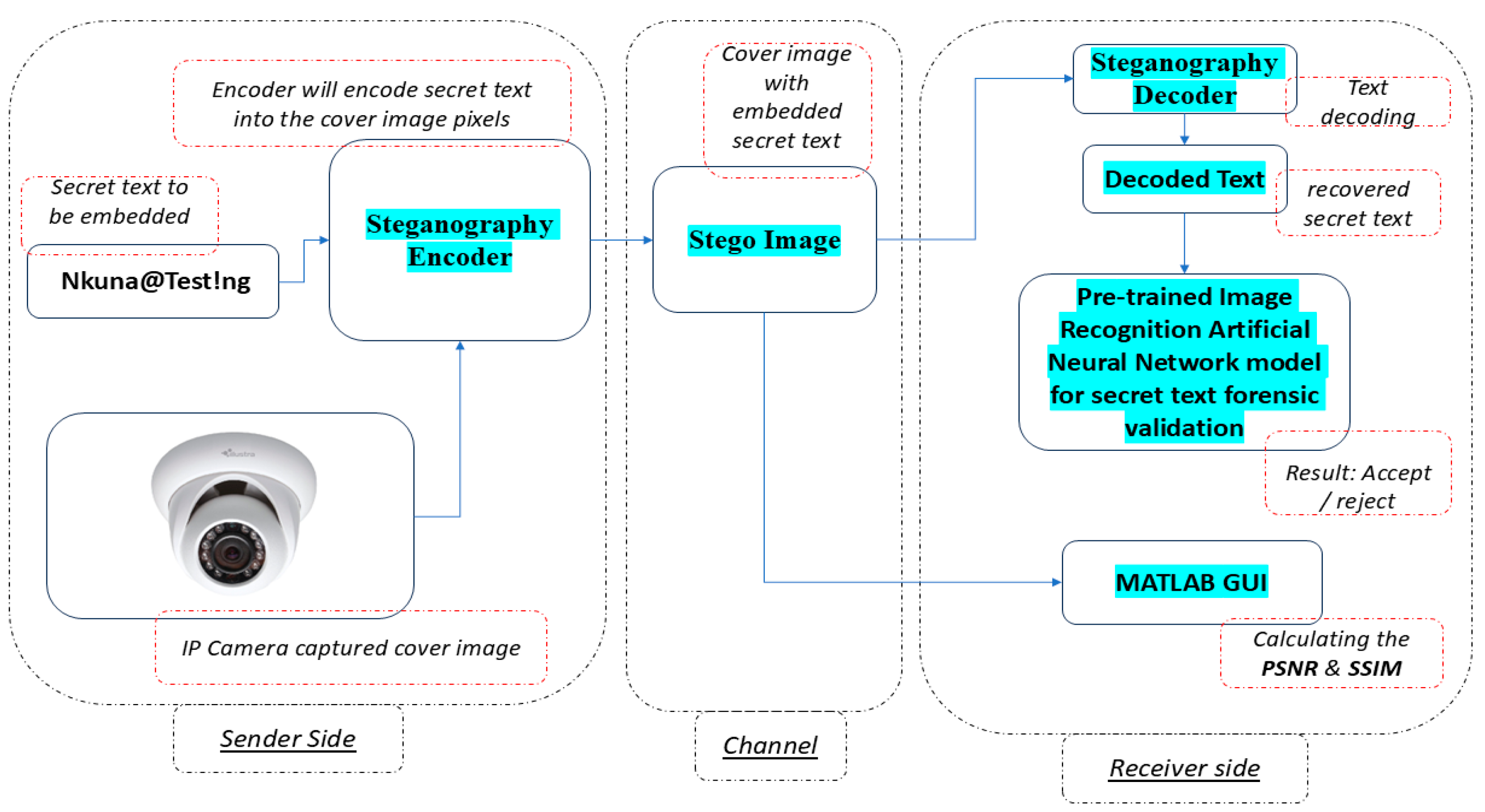

Figure 2 illustrates the proposed system model below.

The IP camera will capture the cover image and pass it to the steganography encoder system (where the embedding of the secret text will occur). The encoding algorithm that will be tested is the proposed DCT-LSB-2 scheme. Once the hidden text is successfully encoded into the cover image pixels, the encoder produces a stego image as output. The stego image will be transmitted through a communication channel to the receiver’s side, where the embedded secret text will be decoded and fed into the pre-trained ANN for validation. The stego decoder system will use a reverse DCT-LSB-2 algorithm to decode/decrypt the secret text. The recovered text will serve as an input to the pre-trained ANN model for data forensic validation. If the text matches the original secret text, the ANN model will return an ‘accept’ message, indicating successful authentication. This means that the image captured by the IP camera has not been tampered with and is therefore valid.

However, if the ANN model detects that the decoded secret text does not match the pre-trained text, it will return a ‘reject’ message, indicating that the received image file is not from an authorized device, has been intercepted, or may be a deepfake image file, which suggests that the IP camera has been compromised. An unsuccessful verification indicates that an unauthorized interception of the images sent from the IP camera has occurred. The ANN can be configured to send notification alerts for any unsuccessful authentication attempt, allowing responsible individuals to remediate the breach.
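The accept/reject decision and the alerting behavior described above can be sketched as follows. Note that this sketch uses an exact, constant-time string comparison as a stand-in for the pre-trained ANN, which is a deliberate simplification; the function names and the alert message are hypothetical.

```python
import hmac


def validate_code(decoded_code, expected_code):
    """Return 'accept' when the decoded validation code matches, else 'reject'.

    An exact comparison stands in here for the pre-trained ANN.
    """
    match = hmac.compare_digest(decoded_code.encode("utf-8"),
                                expected_code.encode("utf-8"))
    return "accept" if match else "reject"


def handle_image(decoded_code, expected_code, alerts):
    """Validate one received image and record an alert on rejection."""
    decision = validate_code(decoded_code, expected_code)
    if decision == "reject":
        alerts.append("validation failed: possible interception or deepfake")
    return decision


alerts = []
first = handle_image("Nkuna@Test!ng", "Nkuna@Test!ng", alerts)
second = handle_image("Nkuna@Test1ng", "Nkuna@Test!ng", alerts)
```

Here the first image is accepted and the second, whose decoded code differs by one character, is rejected and produces one alert entry.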

To assess the robustness of the stego system, MATLAB R2017a (9.2.0.538062) is used to calculate the PSNR and SSIM between the original cover image and the stego image, so that imperceptibility can be maximized. Keeping these parameters high minimizes the risk of steganalysis and unauthorized detection of image alterations, thereby increasing the robustness of the whole system. The PSNR and SSIM results obtained through the proposed method will be compared to those achieved in related previous state-of-the-art schemes from the literature.

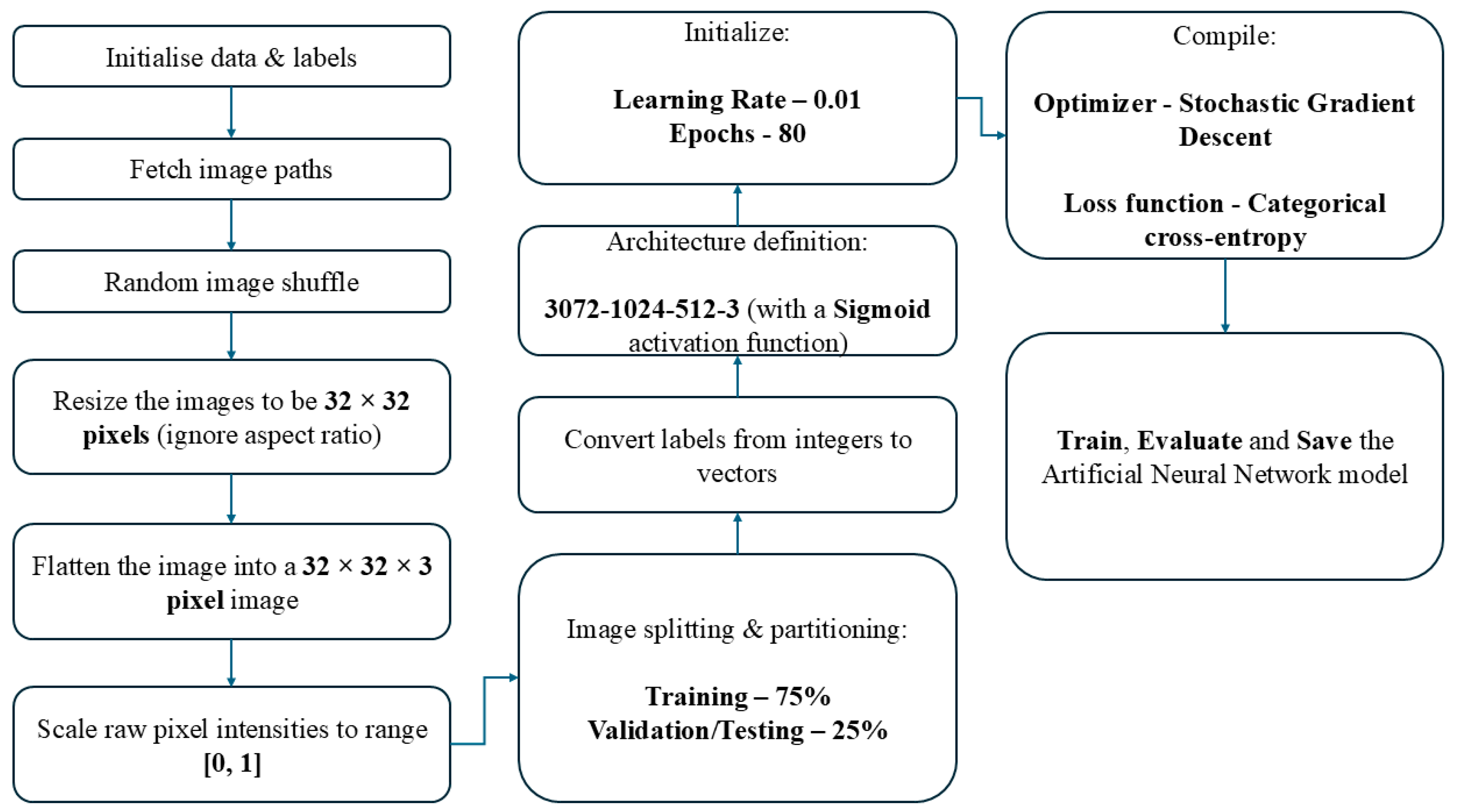

Figure 3 below presents the flow diagram of the trained ANN model.

The data set used to train the ANN model consists of 1000 images of Coke cans. The images were gathered and downloaded from various online sources, and some were captured using a smartphone camera. During the training process, each image from the training set is resized to 32 × 32 pixels. This size is chosen so that the images do not consume a significant amount of computing resources (storage and computing time). Alternative sizes include 64 × 64 and 128 × 128 pixels; however, these require more computing resources than were available at the time of experimentation. Hence, for this experimentation, the 32 × 32 resizing option is chosen.

For the model training, a 75/25 split is chosen for training and testing/validation, respectively. This split follows previous studies, which found that the best results are obtained when 20–30% of the data is used for validation and the remaining 70–80% for training [6]. The Stochastic Gradient Descent (SGD) optimizer and the categorical cross-entropy loss function are used for model training; both are imported from the Keras library (Python 3.7). The model is trained and saved for stego-image classification.
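The 75/25 partition of the 1000-image data set can be sketched with the standard library alone. The file names, the fixed seed, and the `split_dataset` helper are hypothetical; the actual study may have used a different shuffling or splitting utility.

```python
import random


def split_dataset(items, train_fraction=0.75, seed=42):
    """Shuffle items reproducibly and split them into train and test parts."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


# Hypothetical file names standing in for the 1000 collected images.
image_paths = [f"coke_can_{i:04d}.jpg" for i in range(1000)]
train_set, test_set = split_dataset(image_paths)
```

With 1000 images this yields 750 training images and 250 testing/validation images, and fixing the seed keeps the partition reproducible between runs.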

5. Results and Discussion

This section presents the results obtained during the experimentation of the system model indicated in Figure 2.

The results are divided into four subsections: the first one is the data forensic validation portion, which examines the authenticity of the decoded secret text (also referred to as the validation code).

The second, third, and fourth sub-sections focus on the robustness of the proposed encoding scheme by comparing the obtained PSNR, MSE & SSIM results between the proposed framework and previous results obtained from related novel studies.

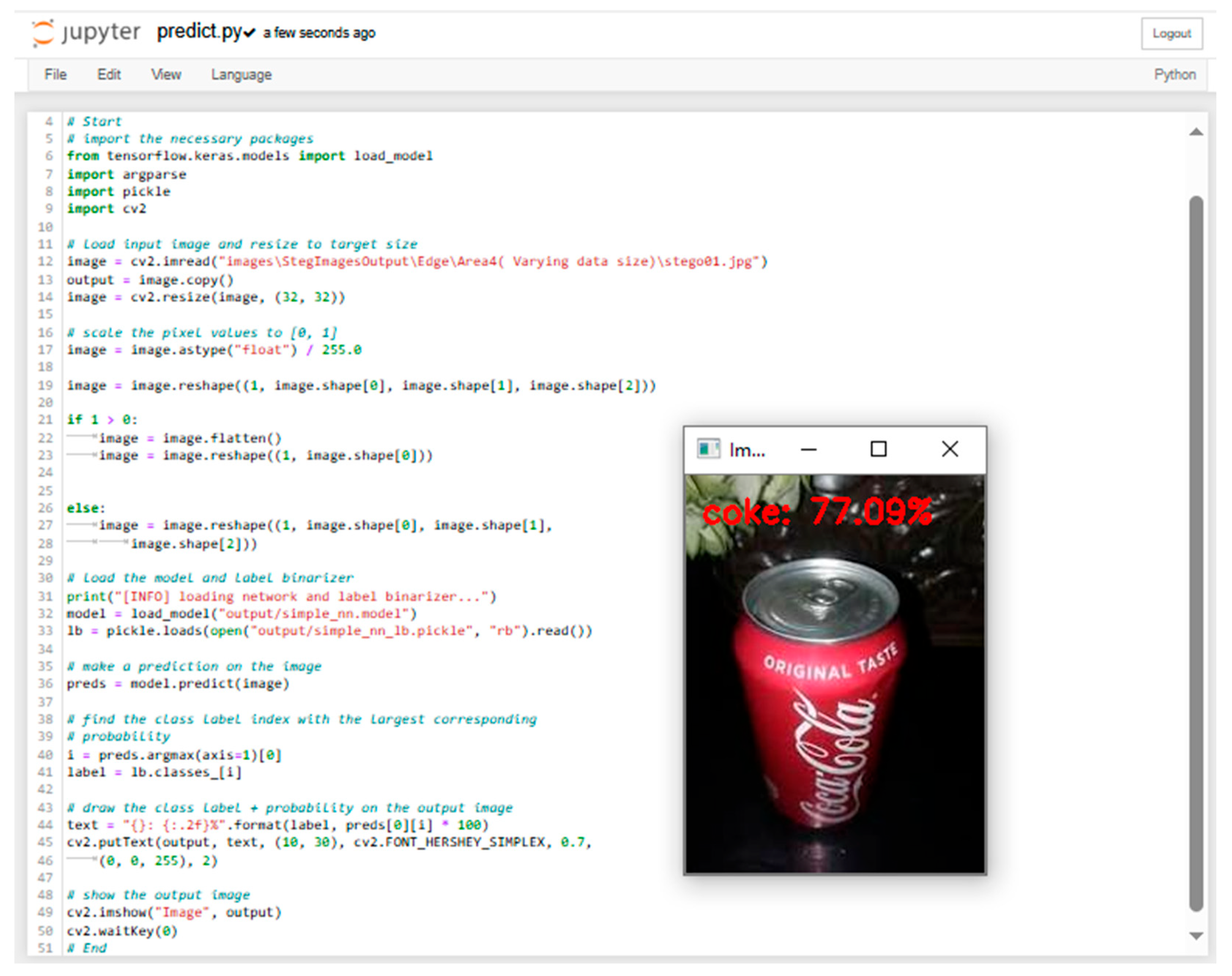

The ANN classifies the nature of the stego image with a specific prediction confidence, as shown in Figure 4 below.

5.1. Determining the Authenticity of the Encoded & Decoded Secret Text for Data Forensic Validation on the Sender and Receiver Side

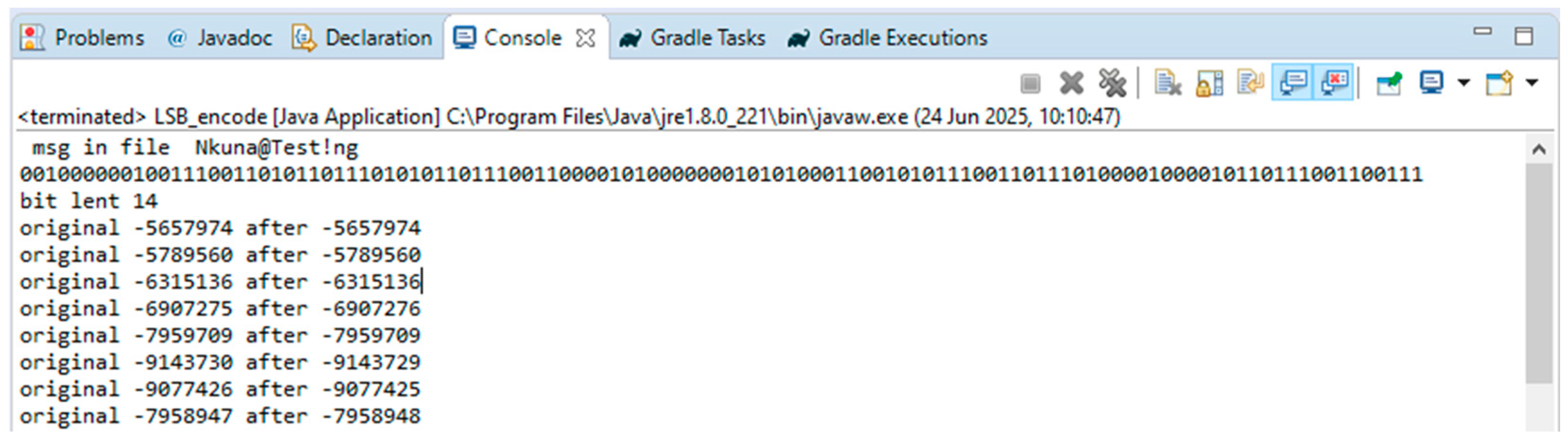

5.1.1. Encoding

The encoding algorithm was executed, and the following Java console output is shown.

The validation code “Nkuna@Test!ng” contains a total of 112 data bits as indicated in Figure 5. Using Formula (9), the byte length of the validation code is calculated to be 14 bytes.

The output in Figure 5 also shows that a total of 4 least significant bits were altered in the corresponding pixel locations of the cover (original) image during the encoding process on the sender’s side, and the validation code was successfully embedded within the cover image.

The graphics below illustrate the ‘before and after’ encoding visuals.

According to the illustrations in Figure 6 and Figure 7, the encoding process preserves the quality of the cover image and limits visible alterations to the human eye.

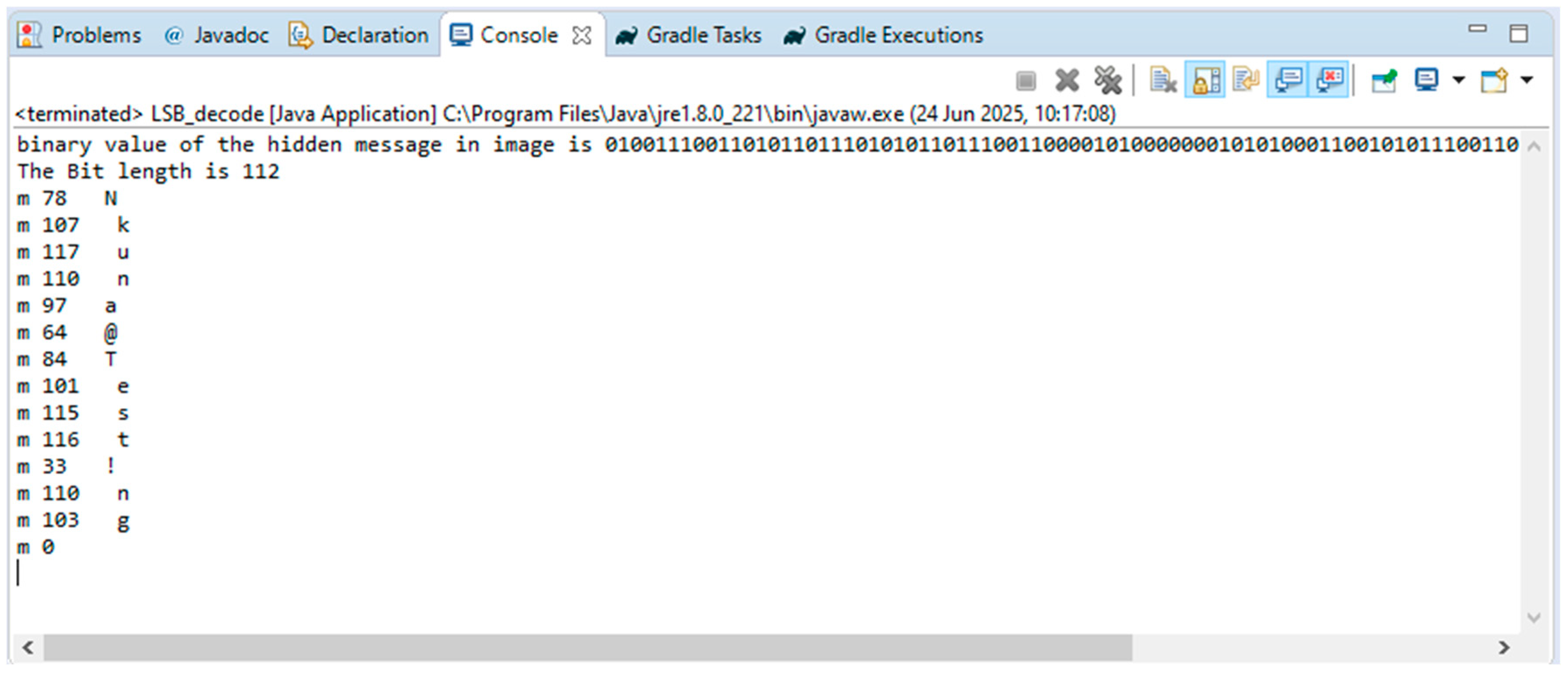

5.1.2. Decoding

The decoding algorithm was also executed on the receiver side, and the following results were obtained from the Java console.

The results presented in Figure 8 above indicate that, according to the system model in Figure 2, the original validation code encoded into the image was successfully decoded on the receiver side.

Hence, this demonstrates that the proposed framework achieves a successful data recovery, and this output (validation code) will then be fed into a pre-trained ANN for automated data forensic validation.

The validation code used for experimentation purposes in this case is a static string, which could potentially increase the chances of steganalysis and interception. Using a dynamic timestamp could further enhance system robustness by employing more complex, multi-character validation codes, which are more challenging for hackers to intercept.
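One possible shape for such a dynamic, timestamp-based validation code is sketched below. The code format (device identifier and Unix timestamp joined by `|`), the 30-second freshness window, and both function names are hypothetical choices made for illustration only; they are not part of the evaluated framework.

```python
def make_validation_code(device_id, unix_time):
    """Build a validation code that changes with every capture time."""
    return f"{device_id}|{unix_time}"


def is_fresh(code, device_id, now, max_age_s=30):
    """Accept a decoded code only if it names the device and is recent."""
    try:
        sender, stamp = code.rsplit("|", 1)
        age = now - int(stamp)
    except ValueError:
        # Missing separator or non-numeric timestamp.
        return False
    return sender == device_id and 0 <= age <= max_age_s


code_at_capture = make_validation_code("cam-01", 1_700_000_000)
fresh = is_fresh(code_at_capture, "cam-01", now=1_700_000_010)
stale = is_fresh(code_at_capture, "cam-01", now=1_700_000_100)
```

Because the embedded code differs for every capture, a stego image replayed after the freshness window would carry an outdated code and fail validation.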

5.2. Experimental Evaluation Results Obtained in Determining PSNR, SSIM, and MSE for the Proposed Framework, with a Cover Image Size of 259 × 194 Pixels

This subsection presents the experimental results obtained from the execution of the proposed model.

From the experimental evaluation and analysis results presented in Table 1, the average PSNR for the proposed framework with a payload of up to 100,000 bits is 42.44 dB. These results align with theoretical knowledge to limit steganalysis and maximize stego image quality [2,3,4,5].

The average SSIM for the system, as per the parameters in Table 1, is 0.9927, which aligns with theoretical expectations to maximize system robustness and resilience.

5.3. Determining the Level of Robustness of the Proposed DCT-LSB-2 Framework and the Previous State-of-the-Art Models, Considering Similar Cover Image Sizes

The proposed framework yields competitive PSNR results compared to the novel work presented in [13,14,15] (see Table 2 below). These results indicate that the system introduces no visible modifications to the stego image, thereby increasing the robustness of the stegosystem.

The novel work presented in [13,15] did not indicate the corresponding payload associated with the PSNR values presented.

The results in Table 3 indicate that the proposed framework achieves competitive and, at times, improved PSNR performance compared to the state-of-the-art results presented in [2].

The work in [2] is limited to embedding rates between 0.05 and 0.9 bpp, whereas the proposed framework is evaluated for an embedding rate of up to 1.869 bpp (as shown in Table 1).

The proposed framework exhibits stable PSNR values across varying embedding rates, demonstrating system stability and resilience compared to [2].

According to the results in Table 4, the proposed framework demonstrates highly competitive SSIM performance compared to the state-of-the-art work presented in [2].

The results in [2] were obtained using image sizes of 512 × 512 pixels. In contrast, the proposed framework was evaluated using a smaller image size of 259 × 194 pixels (hence the slight difference in the SSIM values).

The proposed model can be further assessed using the same image size as in [2], which may increase the SSIM values further and thereby enhance imperceptibility, resilience, and robustness.

5.4. Comparison of the PSNR and SSIM Parameters Between the Proposed Framework and the Work Presented in [16]

The results in Table 5 indicate an improved PSNR performance of the proposed framework compared to the state-of-the-art PVD_Red method presented in [16]. The proposed DCT-LSB-2 scheme also demonstrates competitive SSIM performance compared to results in [16], indicating a strong imperceptibility property of the stegosystem.

6. Conclusions and Recommendations

This paper proposes a two-layered secure steganography–ANN system that combines an image steganography framework with an artificial neural network for robust and resilient IoT systems. The proposed DCT-LSB-2 method demonstrated improved and competitive performance compared to previous state-of-the-art methods, indicating successful evaluation and potential for further investigation to enhance application and performance using higher pixel size cover images. The DCT-LSB-2 method achieved an average SSIM of 0.9927 across the entire range of embedding capacity values, from 0.00 bpp (minimum) to 1.988 bpp (maximum), as specified by the evaluation parameters presented in Table 1. The proposed framework demonstrated stable PSNR performance throughout the evaluation with different payloads (in kB), indicating a resilient system with varying secret/validation code sizes. The proposed system further demonstrates complete and robust encoding and decoding capabilities, enabling the full recovery of validation codes for data forensic validation and thus satisfying the objectives and scope of this work.

Future work will involve evaluating the model using higher pixel size images of various color gradients and color distributions, as well as a real-time IoT camera stream with dynamic validation code/timestamp generation, to further enhance the scheme’s resilience and robustness. This future work will also test the model against steganalysis attacks. Another aspect is the application of convolutional neural networks (CNNs) rather than the current artificial neural networks (ANNs) in evaluating the proposed framework and examining how it responds to this approach. CNNs may offer more powerful image filtering and feature extraction due to their convolutional and pooling layers, potentially making them more efficient than the current ANN used in this work [17,18,19,20,21,22]. Furthermore, the model will be expanded in the future to distinguish dynamically between intercepted images and deepfakes.

Author Contributions

Conceptualization, M.C.N.; methodology, M.C.N.; software, M.C.N.; validation, M.C.N., A.A. and E.E.; formal analysis, M.C.N., A.A. and E.E.; investigation, M.C.N.; resources, A.A. and E.E.; data curation, A.A. and E.E.; writing—original draft preparation, M.C.N.; writing—review and editing, A.A. and E.E.; visualization, M.C.N.; supervision, A.A. and E.E.; project administration, M.C.N.; funding acquisition, A.A. and E.E. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Research Fund (NRF) of South Africa, grant number PMDS23041994813. The APC was funded by the University of Johannesburg’s Department of Electrical and Electronic Engineering Technology and the University of South Africa’s Center for Artificial Intelligence and Multidisciplinary Innovations, College of Research and Graduate Studies.

Data Availability Statement

All data have been presented in the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Singh, W.G.; Shikhar, M.; Kunwar, S.; Kapil, S. Robust stego-key directed LSB substitution scheme based upon cuckoo search and chaotic map. Optik 2018, 170, 106–124.

2. Alarood, A.; Ababneh, N.; Al-Khasawneh, M.; Rawashdeh, M.; Al-Omari, M. IoTSteg: Ensuring privacy and authenticity in internet of things networks using weighted pixels classification based image steganography. Clust. Comput. 2022, 25, 1607–1618.

3. Elhoseny, M.; Ramirez-Gonzalez, G.; Abu-Elnasr, O.M.; Shawkat, S.A.; Arunkumar, N.; Farouk, A. Secure Medical Data Transmission Model for IoT-Based Healthcare Systems. IEEE Access 2018, 6, 20596–20608.

4. Li, L.; Hossain, M.S.; El-Latif, A.A.; Alhamid, M.F. Distortion less secret image sharing scheme for Internet of Things system. Clust. Comput. 2019, 22, 2293–2307.

5. Pannu, A. Artificial Intelligence and its Application in Different Areas. Artif. Intell. 2015, 4, 79–84. Available online: https://api.semanticscholar.org/CorpusID:113451322 (accessed on 24 November 2025).

6. Ebiaredoh-Mienye, S.A.; Esenogho, E.; Swart, T.G. Artificial neural network technique for improving prediction of credit card default: A stacked sparse autoencoder approach. Int. J. Electr. Comput. Eng. 2021, 11, 4392–4402.

7. Mathivanan, P.; Ganesh, A.B. ECG steganography based on tunable Q-factor wavelet transform and singular value decomposition. Int. J. Imaging Syst. Technol. 2020, 31, 270–287.

8. Umamaheshwari, M.U.; Sivasubramanian, S.; Pandiarajan, S. Analysis of Different Steganographic Algorithm for Secured Data Hiding. IJCSNS Int. J. Comput. Sci. Netw. Secur. 2010, 10, 154–160. Available online: http://paper.ijcsns.org/07_book/201008/20100825.pdf (accessed on 26 November 2025).

9. Nath, J.; Nath, A. Advanced Steganography Algorithm Using Encrypted Secret Message. Int. J. Adv. Comput. Sci. Appl. 2011, 2, 19–24.

10. Houssein, E.H.; Ali, M.A.; Hassanien, A.E. An Image Steganography Algorithm using Haar Discrete Wavelet Transform with Advanced Encryption Algorithm. In Proceedings of the Federated Conference on Computer Science and Information Systems (FedCSIS), Gdansk, Poland, 11–14 September 2016.

11. Zhang, Y.; Jiang, J.; Zha, Y.; Zhang, H.; Zhao, S. Research on Embedding Capacity and Efficiency of Information Hiding Based on Digital Images. Int. J. Intell. Sci. 2013, 3, 77–85.

12. Driss, M.; Berriche, L.; Atitallah, S.B.; Rekik, S. Steganography in IoT: A Comprehensive Survey on Approaches, Challenges, and Future Directions. IEEE Access 2025, 13, 74844–74875.

13. Susanto, A.; Sinaga, D.; Mulyono, I.U. PSNR and SSIM Performance Analysis of Schur Decomposition for Imperceptible Steganography. Sci. J. Inform. 2024, 11, 803–810.

14. Suguna, T.; Padma, C.; Rani, M.J.; Priya, G.P. Hybrid Cryptography and Steganography-Based Security System for IoT Networks. Int. J. Recent Innov. Trends Comput. Commun. 2023, 11, 415–421.

15. Alsamaraee, S.; Ali, A.S. A crypto-steganography scheme for IoT applications based on bit interchange and crypto-system. Bull. Electr. Eng. Inform. 2022, 11, 3539–3550.

16. Setiadi, D.I. PSNR vs SSIM: Imperceptibility quality assessment for image steganography. Multimed. Tools Appl. 2021, 80, 8423–8444.

17. Helmy, M.; Torkey, H. Secured Audio Framework Based on Chaotic-Steganography Algorithm for Internet of Things Systems. Computers 2025, 14, 207.

18. Nkuna, M.C.; Esenogho, E.; Heymann, R.; Matlotse, E. Using Artificial Neural Network to Test Image Covert Communication Effect. J. Adv. Inf. Technol. 2023, 14, 741–748.

19. Daiyrbayeva, E.; Yerimbetova, A.; Merzlyakova, E.; Sadyk, U.; Sarina, A.; Taichik, Z.; Ismailova, I.; Iztleuov, Y.; Nurmangaliyev, A. An Adaptive Steganographic Method for Reversible Information Embedding in X-Ray Images. Computers 2025, 14, 386.

20. Yin, J.H.J.; Fen, G.M.; Mughal, F.; Iranmanesh, V. Internet of Things: Securing Data Using Image Steganography. In Proceedings of the 3rd International Conference on Artificial Intelligence, Modelling and Simulation (AIMS), Kota Kinabalu, Malaysia, 2–4 December 2015.

21. Kallapu, B.; Janardhan, A.N.; Hejamadi, R.M.; Shrinivas, K.R.N.; Saritha Ramesh, R.K.; Gabralla, L.A. Multi-Layered Security Framework Combining Steganography and DNA Coding. Systems 2025, 13, 341.

22. Nkuna, M.C.; Ali, A.; Esenogho, E. Integrating Image Steganography Techniques into an Existing Efficient Image Recognition Artificial Neural Network for Optimal Performance. In Proceedings of the IEEE PES/IAS Power Africa Conference, Johannesburg, South Africa, 7–11 October 2024.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.