Explainable Deep Kernel Learning for Interpretable Automatic Modulation Classification

Abstract

1. Introduction

- An enhanced convolutional random Fourier features (RFF) mechanism that approximates kernel functions to extract salient features from I/Q signals;

- A threshold denoising stage based on the Residual Shrinkage Building Unit (RSBU) architecture to improve signal fidelity;

- A compact time-domain feature extraction module, which combines a CNN with a Gated Recurrent Unit (GRU) before a final dense neural network classification layer;

- A Class Activation Map (CAM)-based approach to reveal discriminative input features learned by the model across varying SNR levels.

2. Materials and Methods

2.1. Automatic Modulation Classification (AMC)

2.2. Enhanced Signal Representation via Convolutional Random Fourier Features

- Randomized Filter Basis: The layer’s convolutional filters, denoted as $W$, are initialized by drawing samples from the spectral distribution of the chosen kernel (e.g., a Gaussian distribution for an RBF kernel). These filters can either remain fixed, serving as a static random basis, or be fine-tuned via backpropagation.

- Convolutional Projection and Sine–Cosine Mapping: The projection of an input tensor $X$ onto the random frequency basis is performed by the convolution $Z = X \ast W$. In direct correspondence with the formulation in Equation (6), the layer computes both sine and cosine transformations of the projected output, and these two sets of feature maps are concatenated along the channel axis, $\Phi(X) = \left[\sin(Z/s),\ \cos(Z/s)\right]$, where $s$ is the scaling hyperparameter ‘kernel_scale’, which normalizes the projected values before the trigonometric mapping.

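- Stochastic Approximation Normalization: A final scaling factor of $1/\sqrt{D}$, where $D$ is the number of output filters (‘output_dim’), is applied to the concatenated feature maps. For two inputs $x$ and $y$, the inner product of the normalized features then equals $\frac{1}{D}\sum_{d=1}^{D}\cos\left(w_d^\top (x - y)/s\right)$, where $w_d$ is the $d$-th filter of $W$, i.e., the sample mean of $D$ Monte Carlo terms. This normalization is therefore a theoretical requirement of the Monte Carlo formulation, ensuring that the inner product of the output features remains a consistent estimator of the target kernel.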
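
The three steps above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' CRFFSinCos layer: the helper name `crff_sincos` is hypothetical, the I/Q frame is treated as a length-`L` sequence with two channels and processed with a 1D valid sliding window (cross-correlation, as in deep-learning frameworks) instead of the 2D convolution used in the paper, and the filters are drawn from a standard Gaussian, which corresponds to an RBF kernel. The final lines compare the feature inner product with the exact RBF value for a single window.

```python
import numpy as np


def crff_sincos(x, w, kernel_scale=1.0):
    """Hypothetical convolutional RFF sine-cosine map (illustrative sketch).

    x: input frame of shape (L, C), e.g. C = 2 for I/Q samples.
    w: random filters of shape (K, C, D) drawn from the kernel's spectral density.
    Returns features of shape (L - K + 1, 2 * D).
    """
    k, c, d = w.shape
    # Valid sliding windows over time: shape (L - K + 1, K, C).
    windows = np.lib.stride_tricks.sliding_window_view(x, (k, c))[:, 0]
    # Convolutional projection Z = X * W: one response per window and filter.
    z = np.einsum("tkc,kcd->td", windows, w)
    # Divide by kernel_scale, map through sin and cos, concatenate along channels.
    z = z / kernel_scale
    features = np.concatenate([np.sin(z), np.cos(z)], axis=-1)
    # Monte Carlo normalization by 1/sqrt(D).
    return features / np.sqrt(d)


rng = np.random.default_rng(0)
K, C, D, s = 8, 2, 4096, 1.0
w = rng.standard_normal((K, C, D))  # Gaussian frequencies -> RBF kernel

# Two short I/Q windows; with L == K each yields a single feature vector.
x = 0.3 * rng.standard_normal((K, C))
y = 0.3 * rng.standard_normal((K, C))
phi_x = crff_sincos(x, w, s)[0]
phi_y = crff_sincos(y, w, s)[0]

approx = phi_x @ phi_y
exact = np.exp(-np.sum((x - y) ** 2) / (2 * s**2))
print(f"RFF estimate: {approx:.4f}  exact RBF: {exact:.4f}")
```

Because $\sin^2 + \cos^2 = 1$, each normalized feature vector has unit norm, matching $k(x, x) = 1$ for the RBF kernel. Treating `w` as a trainable parameter in a deep-learning framework corresponds to the fine-tuned option described above; keeping it constant gives the static random basis.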

2.3. Class Activation Mapping-Based Model Interpretability

3. Experimental Setup

3.1. Dataset Description

3.2. Architecture Details

3.3. Ablation Study Design

- CRFFDT-Net (trainable_rff): The complete proposed architecture, in which the randomly initialized Fourier filters are fine-tuned during training.

- fixed_rff: The same architecture as the full model, except that the randomly sampled Fourier filters of the CRFFSinCos layer are kept fixed and are not updated during backpropagation.

- no_rff: The CRFFSinCos layer is replaced by a standard 2D convolutional layer with the same input/output dimensions and number of filters.

- None: An identity function, where no denoising is applied.

- Soft Thresholding (soft-std): A standard soft thresholding function is applied using a universal, per-channel threshold $\tau_c = k\,\hat{\sigma}_c\sqrt{2\ln(HW)}$, where $\hat{\sigma}_c$ is the estimated standard deviation of the noise in channel $c$, $H$ and $W$ are the feature map dimensions, and $k = 1$.

- Hard Thresholding (hard-std): A hard thresholding function (setting values whose magnitude is below $\tau_c$ to zero) is applied using the same universal threshold.

- Garrote Thresholding (garrote-std): A non-negative garrote thresholding function is applied, again using the same universal threshold.

- RSBU (Adaptive): Our proposed block, which estimates an adaptive, per-channel threshold $\tau_c = \alpha_c \cdot \operatorname{mean}(|x_c|)$, where the coefficient $\alpha_c \in (0, 1)$ is derived from the input features via a BN-ReLU-MLP gate. The module then applies soft thresholding with $\tau_c$.
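
The denoising variants compared above can be sketched in NumPy as follows. This is an illustrative sketch under stated assumptions rather than the authors' code: all function names are hypothetical, the noise level $\hat{\sigma}_c$ is taken as the plain per-channel standard deviation (the estimator is not stated here), and the RSBU gate follows the common deep residual shrinkage formulation $\tau_c = \alpha_c \cdot \operatorname{mean}(|x_c|)$, with batch normalization omitted and random placeholder weights for the two gate layers.

```python
import numpy as np


def soft(x, t):
    # Soft thresholding: shrink magnitudes by t, zero anything below t.
    return np.sign(x) * np.maximum(np.abs(x) - t, 0.0)


def hard(x, t):
    # Hard thresholding: keep values with |x| > t unchanged, zero the rest.
    return np.where(np.abs(x) > t, x, 0.0)


def garrote(x, t):
    # Non-negative garrote: x - t^2 / x where |x| > t, zero otherwise.
    keep = np.abs(x) > t
    safe_x = np.where(keep, x, 1.0)  # avoid dividing by zero where x is dropped
    return np.where(keep, x - t**2 / safe_x, 0.0)


def universal_threshold(fmap, k=1.0):
    # fmap: (C, H, W). tau_c = k * sigma_c * sqrt(2 ln(H * W)),
    # with sigma_c ASSUMED to be the channel's standard deviation.
    c, h, w = fmap.shape
    sigma = fmap.reshape(c, -1).std(axis=1)
    return k * sigma * np.sqrt(2.0 * np.log(h * w))


def rsbu_soft(fmap, w1, w2):
    # Hypothetical RSBU-style gate (batch normalization omitted):
    # tau_c = alpha_c * mean(|x_c|), alpha_c = sigmoid(MLP(mean(|x|))).
    c = fmap.shape[0]
    gap = np.abs(fmap).reshape(c, -1).mean(axis=1)  # (C,) mean |x| per channel
    hidden = np.maximum(w1 @ gap, 0.0)              # FC + ReLU
    alpha = 1.0 / (1.0 + np.exp(-(w2 @ hidden)))    # sigmoid, alpha in (0, 1)
    tau = alpha * gap
    return soft(fmap, tau[:, None, None]), tau


x = np.array([-3.0, -0.5, 0.0, 0.5, 3.0])
print(soft(x, 1.0))  # shrinks -3 and 3 to -2 and 2, zeroes the rest
print(hard(x, 1.0))
print(garrote(x, 1.0))

rng = np.random.default_rng(0)
fmap = rng.standard_normal((4, 8, 8))
tau_univ = universal_threshold(fmap, k=1.0)
denoised = soft(fmap, tau_univ[:, None, None])
w1 = rng.standard_normal((4, 4))
w2 = rng.standard_normal((4, 4))
rsbu_out, tau_rsbu = rsbu_soft(fmap, w1, w2)
```

The fixed variants share one data-independent threshold rule, while the RSBU sketch lets a small gate pick a fraction $\alpha_c$ of each channel's mean magnitude, so the threshold adapts to the input.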

3.4. Quantitative Interpretability Evaluation

3.5. Training and Validation Strategy

4. Results and Discussion

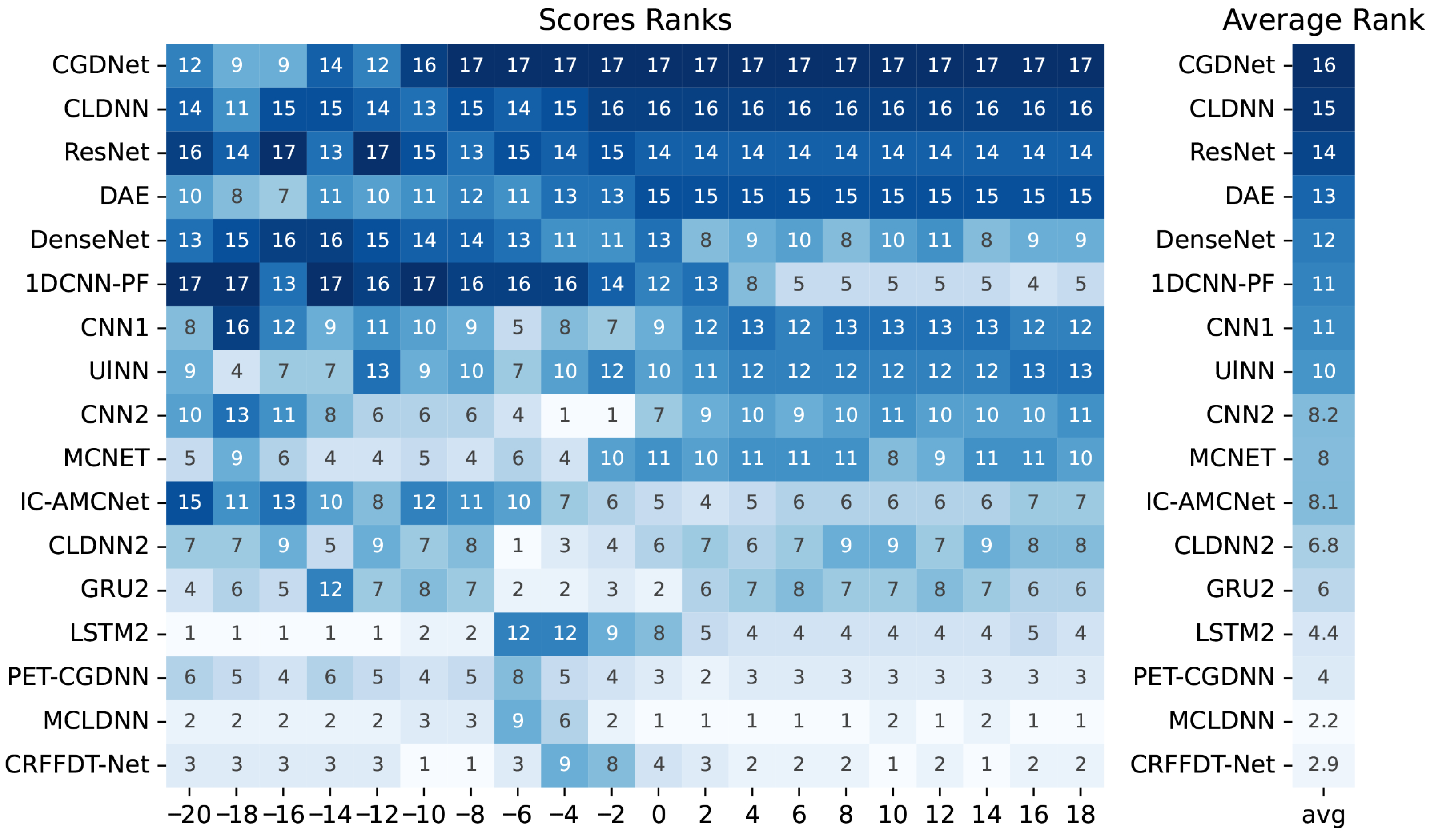

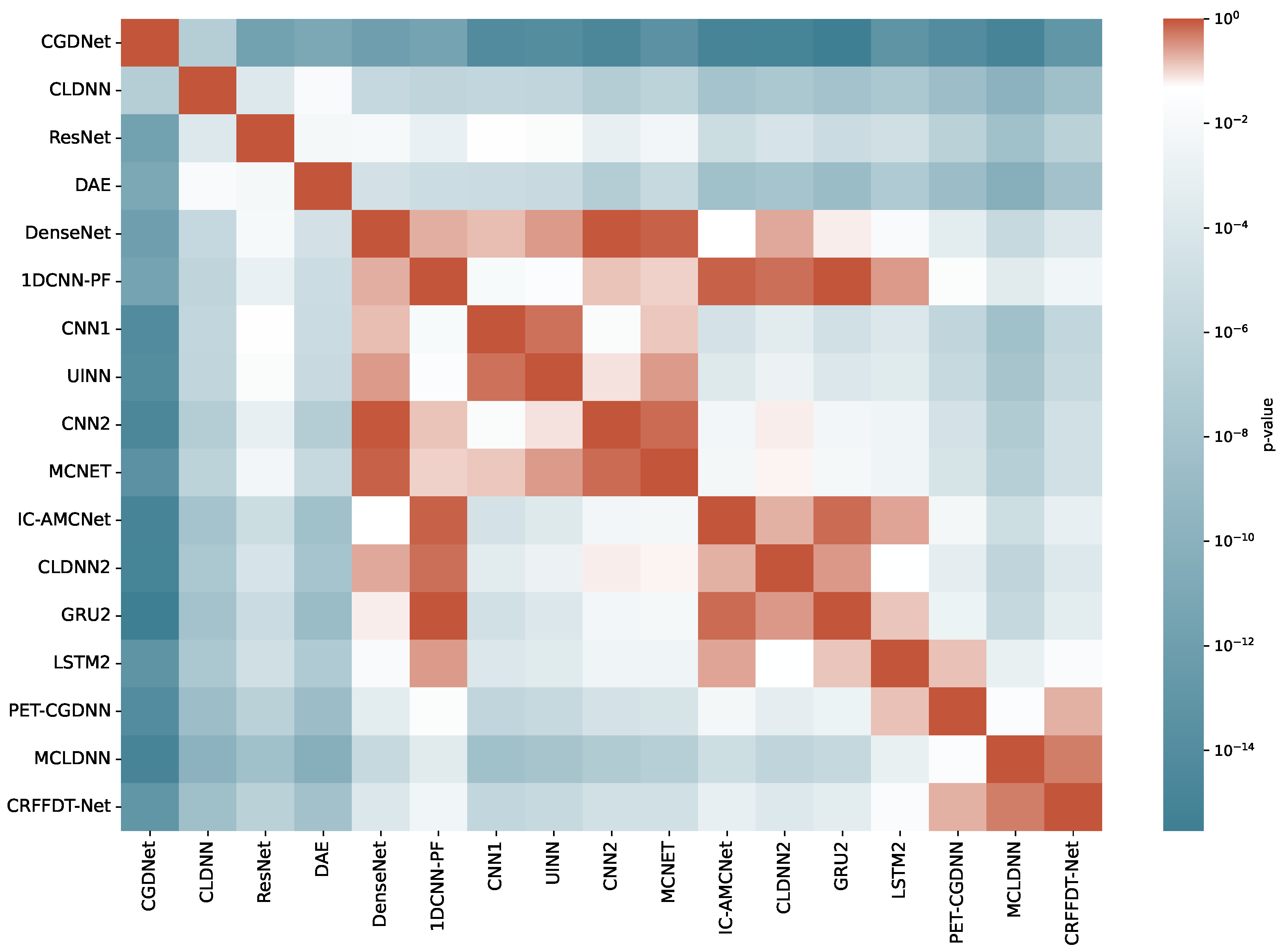

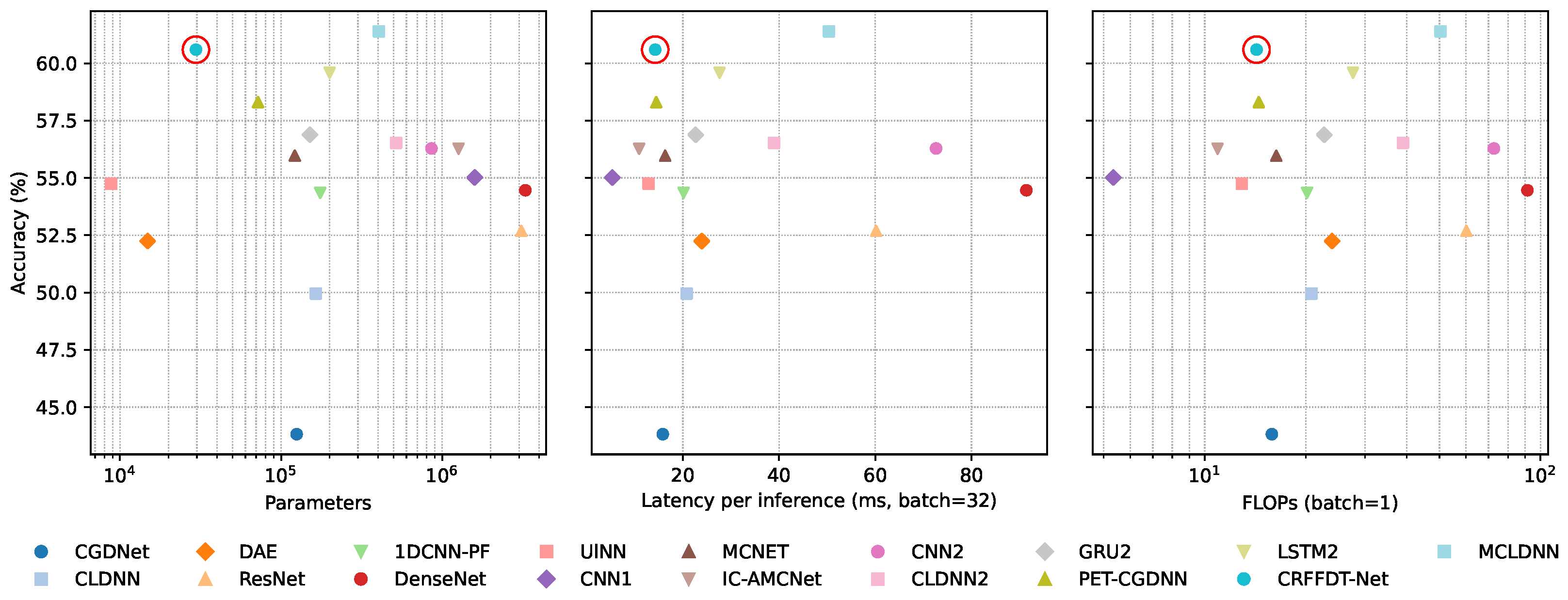

4.1. Classification Performance

4.2. Model Complexity Analysis

4.3. Ablation Study Results

4.4. CAM-Based Model Interpretability

4.5. Achieved Interpretability Evaluation Results

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Model | Accuracy | Precision | Recall | F1 |
|---|---|---|---|---|
| CGDNet | 0.438 | 0.244 | 0.168 | 0.182 |
| CLDNN | 0.500 | 0.230 | 0.171 | 0.182 |
| ResNet | 0.527 | 0.230 | 0.168 | 0.181 |
| DAE | 0.522 | 0.269 | 0.204 | 0.217 |
| DenseNet | 0.545 | 0.272 | 0.208 | 0.219 |
| 1DCNN-PF | 0.544 | 0.349 | 0.288 | 0.299 |
| CNN1 | 0.550 | 0.247 | 0.188 | 0.200 |
| UINN | 0.547 | 0.239 | 0.178 | 0.192 |
| CNN2 | 0.563 | 0.273 | 0.214 | 0.223 |
| MCNET | 0.560 | 0.252 | 0.190 | 0.203 |
| IC-AMCNet | 0.563 | 0.274 | 0.215 | 0.227 |
| CLDNN2 | 0.565 | 0.263 | 0.203 | 0.216 |
| GRU2 | 0.569 | 0.261 | 0.200 | 0.217 |
| LSTM2 | 0.596 | 0.277 | 0.222 | 0.233 |
| PET-CGDNN | 0.583 | 0.286 | 0.231 | 0.241 |
| MCLDNN | 0.614 | 0.300 | 0.250 | 0.261 |
| CRFFDT-Net | 0.609 | 0.277 | 0.219 | 0.216 |

| Model Variant | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| Ablation of RFF Layer | | | | |
| CRFFDT-Net (trainable_rff) | 0.8948 | 0.4065 | 0.3735 | 0.3873 |
| no_rff (Standard CNN) | 0.8966 | 0.4305 | 0.3975 | 0.4114 |
| fixed_rff | 0.8917 | 0.3820 | 0.3498 | 0.3627 |
| Ablation of Denoising Module | | | | |
| RSBU (Adaptive) | 0.8948 | 0.4065 | 0.3735 | 0.3873 |
| none (No Denoising) | 0.8889 | 0.3772 | 0.3437 | 0.3575 |
| soft-std, k = 1, univ | 0.8767 | 0.3975 | 0.3642 | 0.3770 |
| hard-std, k = 1, univ | 0.8749 | 0.3793 | 0.3414 | 0.3562 |
| garrote-std, k = 1 | 0.8653 | 0.3795 | 0.3394 | 0.3550 |

| Model | AUC (deletion) | AUC (insertion) |
|---|---|---|
| CRFFDT-Net | 0.3498 | 0.3966 |
| MCLDNN | 0.3585 | 0.3898 |
| PET-CGDNN | 0.3277 | 0.3466 |
| fixed_rff | 0.3604 | 0.3573 |
| no_rff | 0.3790 | 0.3700 |
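
The deletion and insertion scores in the table above are area-under-curve metrics for saliency maps. As a hedged illustration of the common formulation (deletion and insertion curves as popularized by RISE, Petsiuk et al.), and not necessarily the exact protocol of Section 3.4, the sketch below ranks input elements by a saliency map, progressively removes them from the input (deletion) or adds them to an empty baseline (insertion), and integrates the model score with the trapezoidal rule. The helper name, the all-zeros baseline, and the toy linear scorer are assumptions for illustration. Under this convention, a lower deletion AUC and a higher insertion AUC indicate a more faithful map.

```python
import numpy as np


def deletion_insertion_auc(score_fn, x, saliency, steps=10):
    """Hypothetical helper returning (AUC_del, AUC_ins) for one input.

    score_fn: maps an array shaped like x to a scalar class score.
    saliency: array shaped like x; larger values mean more important.
    The baseline for removed or missing elements is ASSUMED to be zero.
    """
    flat_x = x.ravel()
    order = np.argsort(saliency.ravel())[::-1]  # most salient first
    n = flat_x.size
    fractions = np.linspace(0.0, 1.0, steps + 1)
    del_scores, ins_scores = [], []
    for f in fractions:
        idx = order[: int(round(f * n))]
        x_del = flat_x.copy()
        x_del[idx] = 0.0                 # delete the top-ranked elements
        x_ins = np.zeros_like(flat_x)
        x_ins[idx] = flat_x[idx]         # insert the top-ranked elements
        del_scores.append(score_fn(x_del.reshape(x.shape)))
        ins_scores.append(score_fn(x_ins.reshape(x.shape)))

    def trapezoid(y):
        # Trapezoidal rule over the fraction axis in [0, 1].
        y = np.asarray(y)
        return float(np.sum((y[1:] + y[:-1]) / 2.0 * np.diff(fractions)))

    return trapezoid(del_scores), trapezoid(ins_scores)


# Toy check: a linear "model" whose score is a weighted sum of its inputs.
weights = np.arange(10, dtype=float) / 45.0  # weights sum to 1


def score(z):
    return float(np.sum(z * weights))


x = np.ones(10)
auc_del, auc_ins = deletion_insertion_auc(score, x, saliency=weights)
print(f"AUC_del={auc_del:.3f}  AUC_ins={auc_ins:.3f}")
```

In this toy case the saliency map is exact, so deleting the most important inputs first drops the score quickly (small deletion AUC) while inserting them first recovers it quickly (large insertion AUC).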

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Mosquera-Trujillo, C.E.; Lugo-Rojas, J.C.; Collazos-Huertas, D.F.; Álvarez-Meza, A.M.; Castellanos-Dominguez, G. Explainable Deep Kernel Learning for Interpretable Automatic Modulation Classification. Computers 2025, 14, 372. https://doi.org/10.3390/computers14090372

Mosquera-Trujillo CE, Lugo-Rojas JC, Collazos-Huertas DF, Álvarez-Meza AM, Castellanos-Dominguez G. Explainable Deep Kernel Learning for Interpretable Automatic Modulation Classification. Computers. 2025; 14(9):372. https://doi.org/10.3390/computers14090372

Chicago/Turabian Style: Mosquera-Trujillo, Carlos Enrique, Juan Camilo Lugo-Rojas, Diego Fabian Collazos-Huertas, Andrés Marino Álvarez-Meza, and German Castellanos-Dominguez. 2025. "Explainable Deep Kernel Learning for Interpretable Automatic Modulation Classification" Computers 14, no. 9: 372. https://doi.org/10.3390/computers14090372

APA Style: Mosquera-Trujillo, C. E., Lugo-Rojas, J. C., Collazos-Huertas, D. F., Álvarez-Meza, A. M., & Castellanos-Dominguez, G. (2025). Explainable Deep Kernel Learning for Interpretable Automatic Modulation Classification. Computers, 14(9), 372. https://doi.org/10.3390/computers14090372