ChatGPT’s Expanding Horizons and Transformative Impact Across Domains: A Critical Review of Capabilities, Challenges, and Future Directions

Abstract

1. Introduction: The ChatGPT Inflection Point in AI and Its Applications

2. Advancements in Natural Language Understanding with ChatGPT: Capabilities, Innovations, and Critical Frontiers

2.1. Core NLU Architecture and Functionalities

2.2. Innovative NLU Techniques and Their Impact

- LLMs for Data Annotation and Cleansing: LLMs are increasingly used to automate or assist in data annotation, a traditionally labor-intensive task. For example, the Multi-News+ dataset was enhanced by using LLMs with chain-of-thought and majority voting to cleanse and classify documents, improving dataset quality for multi-document summarization tasks.

- Factual Inconsistency Detection: Given LLMs’ propensity for hallucination, techniques to detect factual inconsistencies are crucial. Methods like FIZZ, which employ fine-grained atomic fact decomposition and alignment with source documents, offer more interpretable ways to identify inaccuracies in abstractive summaries.

- Multimodal NLU Enhancement: Research is exploring the integration of other modalities, such as acoustic speech information, into LLM frameworks for tasks like depression detection, indicating a move toward more holistic NLU that mirrors human multimodal [22].

- “Evil Twin” Prompts: The discovery of “evil twin” prompts, obfuscated and uninterpretable inputs that can elicit desired outputs and transfer between models, opens new avenues for understanding LLM vulnerabilities and their internal representations, posing both security risks and research opportunities. For NLU, these prompts expose the often-superficial nature of model comprehension, revealing instances where models respond to statistical patterns rather than genuine semantic understanding. thereby challenging the very definition of “understanding” in AI systems [7,23,24,25,26,27,28,29], pushing theoretical frontiers to delineate between mere linguistic mimicry and robust, systematic generalization akin to human cognition.

2.3. Evolution and Critical Assessment of GPT Models in NLU

- Misinformation and Hallucinations: A persistent issue is the generation of plausible-sounding but incorrect or nonsensical information, often termed “hallucinations” [1]. This undermines reliability, especially in critical applications.

2.3.1. Analysis of the GPT-3.5 Series: The Catalyst for Widespread Generative AI

Architectural Profile and Training Paradigm

Core Competencies and Limitations

The GLUE Score and Domain-Specific Tests

2.3.2. Analysis of the GPT-4 Series: A Leap in Reasoning and Multimodality

Architectural Enhancements and Expanded Context

Advanced Capabilities in Complex Reasoning and Nuanced Generation

Mitigating Knowledge Gaps: The Critical Role of Retrieval-Augmented Generation (RAG)

- Indexing: External data from sources like document repositories, databases, or APIs is converted into numerical representations (embeddings) and stored in a specialized vector database. This creates an accessible knowledge library for the model [18].

- Retrieval: When a user submits a query, the system converts the query into an embedding and performs a similarity search against the vector database to find and retrieve the most relevant chunks of information [18].

- Generation: The retrieved information is then appended to the original user prompt and sent to the LLM, which uses this augmented context to generate a more accurate, relevant, and grounded response [18].

2.3.3. Analysis of the GPT-4o Series: The Pursuit of Omni-Modal, Efficient Intelligence

The “Omni” Architecture: Unifying Modalities and Advancing Tokenization

Strengths in Interactive and Real-Time Content Generation

The MMLU Score

2.3.4. Analysis of the o1-Series: Specialization in Advanced STEM Reasoning

A New Paradigm: “System 2” Thinking and Chain-of-Thought

Dominance in Scientific and Mathematical Domains

- o1-preview: The initial release, designed to tackle complex problems that require a combination of reasoning and broad general knowledge [45].

- o1-mini: A faster and more cost-effective version that is highly optimized for STEM-related tasks like math and coding. It has less broad world knowledge than the preview model but excels at pure reasoning [45].

- o1 (full): The most capable version, integrating the highest levels of reasoning and multimodality [45].

The AIME and Code Generation Tasks

2.3.5. Synthesis and Future Outlook: Trajectories in LLM Development

Comparative Synthesis Across Model Generations

Key Trends and Implications for Application

2.4. Advancing Method and Theory in NLU Through ChatGPT

3. The New Epoch of Content Generation: Diverse Applications, Quality Assurance, and Ethical Imperatives

3.1. ChatGPT’s Role in Diverse Content Generation and Task Automation

- Technical and Scientific Content: In engineering, ChatGPT assists in drafting reports, generating software documentation, and producing code snippets [69,70]. Multivocal literature reviews indicate error rates for engineering tasks average around 20–30% for GPT-4 [37]. Conversely, in creative writing, while generating novel ideas and structures, outputs can sometimes lack the unique voice or subtle emotional depth characteristic of human authorship, necessitating significant human refinement [37]. In medicine, it is used for generating patient reports and drafting discharge summaries [57], though error rates can range from 8% to 83% [37].

- Marketing and SEO Content: Marketers leverage ChatGPT for creating blog posts, ad copy, social media updates, and personalized email campaigns. It also aids in SEO by generating topic ideas and crafting meta descriptions [71].

- Legal Content: Law firms utilize ChatGPT for drafting client correspondence, creating legal blog content, and developing marketing materials to increase efficiency [71].

- Automated Task Execution with AI Agents: The next frontier lies in agentic AI, where LLMs are empowered to act as autonomous agents. These agents move beyond generating content to performing complex, multi-step tasks. For example, an agent might not just write code but also autonomously execute test suites, debug it, and integrate it into a larger system; or it might not just draft a marketing email but also execute the entire campaign by analyzing performance data, adjusting targeting, and optimizing content strategy in real time [4,16]. This represents a profound shift from a content creator to a full autonomous task Automator.

3.2. Methodologies for Quality Control, Coherence, and Accuracy

- Human Oversight and Human-in-the-Loop: This remains the most critical control measure. Expert review is essential for content where errors have severe consequences [2,32,36]. For agentic AI, this evolves into a “human-in-the-loop” model, where humans supervise, intervene, and approve agent actions before execution to prevent errors and ensure safety [81]. However, scaling human oversight presents its own challenges, including cognitive load on reviewers and the difficulty of verifying complex, multi-step agentic reasoning processes, necessitating the development of intelligent human–AI teaming interfaces and real-time anomaly detection systems [82].

- Process and Tool-Use Validation: For AI agents, quality control must extend beyond the final output to validate the entire process. This includes verifying that the agent’s reasoning is sound and that it uses its tools (e.g., web browsers, APIs) correctly and safely [17].

- Error Rate Analysis: Systematic analysis of error rates provides insights into reliability and highlights areas needing improvement [37].

3.3. Ethical Considerations in Content and Task Automation

- Bias: A critical challenge is inherent bias. LLMs are trained on vast corpora of text and code from the internet, data that inevitably reflects the full spectrum of human societal biases [30]. Consequently, models can learn, reproduce, and even amplify harmful stereotypes related to race, gender, religion, age, and other social categories [30]. This can manifest as discriminatory outputs in sensitive applications like job recruitment, loan approval decisions, and medical diagnostics [30]. Research has shown that even models that have undergone “alignment”—a fine-tuning process to make them appear unbiased on explicit tests—can still harbor and act upon implicit biases in more subtle ways [93]. Studies also indicate that while some sources of bias can be identified and “pruned” from a model’s neural network, the context-dependent nature of bias makes a universal fix nearly impossible. This suggests that accountability for biased outputs may need to shift from the model developers to the organizations that deploy these models in specific, real-world contexts [30]. Generated content and agentic actions can reflect and amplify societal biases from training data [14,30,57].

- Accountability and Autonomous Action: Agents capable of autonomous action raise profound ethical questions about accountability. Determining responsibility when an autonomous agent causes financial, social, or physical harm is a complex challenge for which legal and ethical frameworks are still nascent [35]. Addressing this requires interdisciplinary efforts to develop clear accountability models—perhaps through “responsible-by-design” principles or legal concepts adapted from product liability and human-machine interaction—that allocate responsibility in human–agent systems.

- Copyright and Authorship: The generation of content raises complex questions about intellectual property rights, originality, authorship attribution, and plagiarism, especially when outputs closely resemble training data or are presented as original work [98,102,103,104,105,106]. Legal frameworks are still evolving to address these issues [2].

3.4. Advancing Method and Theory for Responsible Content Generation and Task Automation

4. ChatGPT as a Catalyst for Knowledge Discovery: Methodologies, Scientific Inquiry, and Future Paradigms

4.1. Methodologies for Knowledge Extraction from Unstructured Data

- Qualitative Data Analysis Assistance: Researchers are exploring ChatGPT for assisting in qualitative analysis, such as generating initial codes or identifying potential themes [21,28,74,90,91]. However, careful prompting and validation are required, as LLMs can generate nonsensical data if not properly guided [21,28,74,90,91]. This guidance often requires expert domain knowledge to formulate precise prompts, iterative refinement of generated codes or themes, and a deep understanding of the LLM’s limitations to prevent the introduction of artificial patterns or spurious insights into the analytical process.

- LLMs Combined with Knowledge Graphs (KGs): A promising methodology involves integrating LLMs with KGs. The GoAI method, for instance, uses an LLM to build and explore a KG of scientific literature to generate novel research ideas, providing a more structured approach than relying on the LLM alone [87,112].

- Autonomous Knowledge Discovery with AI Agents: The next methodological leap involves deploying agentic AI to create automated knowledge discovery pipelines. These agents can be tasked with a high-level goal and then autonomously plan and execute a sequence of actions—such as searching databases, retrieving papers, extracting data, and synthesizing findings—to deliver structured knowledge with minimal human intervention [113].

- Prompt Injection Vulnerabilities: Research into prompt injection techniques highlights how the knowledge extraction process can be manipulated, underscoring security vulnerabilities that must be addressed for reliable knowledge discovery, especially in autonomous systems [114].

4.2. Applications in Scientific Research

- Hypothesis Generation: Models like GPT-4 can generate plausible and original scientific hypotheses, sometimes outperforming human graduate students in specific contexts [44,45,110]. For instance, models could suggest novel drug targets by identifying non-obvious correlations across vast biomedical literature or propose new material compositions based on synthesized property databases.

- Experimental Design Support: ChatGPT can assist in outlining experimental procedures but may require expert refinement to address oversimplifications or “loose ends” [26,37,40,45,121]. These “loose ends” often involve the critical details of experimental controls, statistical rigor, feasibility assessments for real-world implementation, and adherence to ethical guidelines, all of which require human scientific judgment beyond current LLM capabilities.

- Automating Research with Scientific Agents: The culmination of these capabilities is the creation of scientific agents. These are autonomous systems designed to conduct research by integrating multiple steps. For instance, a scientific agent could be tasked with a high-level research question and then autonomously search literature, formulate a hypothesis, design and execute code for a simulated experiment, analyze the results, and draft a preliminary report, dramatically accelerating the pace of discovery [131]. OpenAI ChatGPT’s and Google Gemini’s Deep Research language models are good examples.

4.3. Critical Assessment of ChatGPT’s Role in Advancing Research

- Accuracy and Reliability Concerns: The propensity for hallucinations and bias is a major concern that necessitates rigorous validation of all AI-generated outputs [13,30,130]. This risk is magnified for autonomous agents, where acting on a single hallucinated fact could derail an entire research workflow. Mitigating this requires not only robust human-in-the-loop validation points but also the integration of autonomous self-correction loops and continuous cross-validation against established scientific databases or external tools within the agent’s workflow to ensure end-to-end integrity.

- The Indispensable Role of Human Expertise: Human expertise remains crucial for critical evaluation, contextual understanding, and ensuring methodological soundness [26,39,40,85,110,121,130,131,132,133,134,135]. As research becomes more automated, the human role shifts from task execution to high-level strategic direction and critical supervision of the AI’s process and outputs.

4.4. Advancing Method and Theory in AI-Augmented Knowledge Discovery

- Frameworks like GoAI [112] exemplify a move toward structured methodologies that combine LLMs with KGs for more transparent idea generation.

- The concept of LLMs “simulating abductive reasoning” [125] suggests a new theoretical lens for understanding how these models contribute to scientific insight, moving beyond pattern matching toward computational reasoning.

5. Revolutionizing Education and Training: ChatGPT’s Global Impact on Pedagogy, Assessment, and Equity

5.1. Applications in Education

- Personalized Learning: A primary application is facilitating personalized learning experiences. ChatGPT can adapt content, offer real-time feedback, and function as a virtual tutor available 24/7 [137,138]. For instance, ChatGPT can dynamically adjust the complexity of explanations based on a student’s prior responses, offer alternative examples tailored to their learning style, or provide targeted practice problems identified from their specific areas of difficulty.

- Teaching Aids and Interactive Tools: The technology can be harnessed to develop engaging teaching aids, virtual instructors, and interactive simulations [137].

- Support for Diverse Learners: ChatGPT enhances accessibility for students with disabilities and multilingual learners through translation and simplification [140].

- Autonomous Learning Companions and Agents: The next evolutionary step is the deployment of AI agents as personalized learning companions. These agents go beyond tutoring by autonomously managing a student’s long-term learning journey. They can co-design study plans, curate resources from vast digital libraries, schedule tasks, and proactively adapt strategies based on performance, transforming the learning process into a continuous, interactive dialogue [141,142]. This transformation stems from their capacity for dynamic pedagogical intervention, offering personalized feedback loops, adapting content difficulty in real time based on student mastery, and even guiding students in meta-cognitive reflection to enhance self-regulated learning.

5.2. Impact on Critical Thinking, Academic Integrity, and Ethics

- Critical Thinking: A dichotomy exists where AI can either be used to generate thought-provoking prompts that foster analysis or, through over-reliance, erode students’ ability to think deeply [5,143]. Concerns persist that students may become cognitively passive [144]. The introduction of AI agents deepens this concern, as they could automate not just the answers but the entire process of inquiry and discovery, potentially deskilling students in research and problem-solving [145]. To counter this, educators must integrate “AI literacy” and advanced “prompt engineering” into curricula, empowering students to critically engage with AI tools as intellectual collaborators, thereby fostering higher-order skills rather than merely outsourcing tasks.

- Academic Integrity: The risk of plagiarism with AI-generated text is a primary concern [5,143]. With agents, this evolves from verifying authorship of text to verifying authorship of complex, multi-step actions and outcomes. Strategies to uphold integrity must, therefore, shift toward assessments that are inherently human-centric, such as project-based work, oral examinations, and evaluations of the process of inquiry and problem-solving, rather than solely the final product [143]. This necessitates designing assignments that are resistant to full automation, compelling students to demonstrate unique human cognitive contributions. Consequently, the paradigm shift in assessment towards authorship of these complex, multi-step actions and outcomes have potential to foster and develop in students critical thinking, metacognition and self-regulation of learning for quality AI literacy competencies and essential human-AI collaboration skills essential for future workforce preparedness [137,146]. However, for unbiased evaluation of these processes and outcomes of inquiry and problem-based learning to mitigate academic dishonesty in an AI-mediated learning environment and age, generic and context-specific assessment frameworks should be developed for clarity, consistency and reliability. Thus, academic integrity can be upheld if the processes are properly scrutinized and the outcomes are rigorously evaluated within well-defined assessment frameworks.

- Ethical Challenges: Broader ethical issues include data privacy, equity, and potential biases in AI content [5]. Agentic AI introduces new dilemmas regarding student autonomy and data sovereignty. An agent managing a student’s learning collects vast amounts of sensitive performance and behavioral data, raising critical questions about consent, surveillance, and how that data is used to shape a student’s educational future [147].

5.3. Global Perspectives and Educational Equity

- Democratization vs. Digital Divide: ChatGPT has the potential to democratize education by providing widespread access to high-quality learning resources [138]. However, it also risks exacerbating the digital divide if access to technology, internet, and AI literacy are inequitably distributed [140]. The advent of powerful, resource-intensive learning agents could create a new, more profound equity gap between students who have access to personalized autonomous tutors and those who do not [149]. Addressing this necessitates proactive policy development to ensure universal access to foundational AI tools, alongside culturally sensitive AI design and curriculum integration efforts that leverage AI to amplify diverse voices and knowledge systems.

5.4. Advancing Educational Research, Theories, and Pedagogical Models

- Revisiting Learning Theories: ChatGPT’s capabilities challenge and offer new lenses through which to view learning theories such as constructivism (where students actively construct knowledge, potentially aided by AI tools) (Li et al., 2025) and self-determination theory (exploring AI’s impact on student autonomy, competence, and relatedness) [144].

- Transforming Assessment Paradigms: Traditional assessment methods are being questioned. There is a call for innovative assessment strategies that emphasize higher-order thinking, creativity, and authentic application of knowledge, rather than tasks easily outsourced to AI [5]. This includes exploring personalized, adaptive assessments leveraging GenAI [144].

- Methodological Rigor in AI-in-Education Research: There is a critical need for methodological rigor in studying AI’s impact on education. Researchers must carefully define experimental treatments, establish appropriate control groups, and use valid outcome measures that genuinely reflect learning, avoiding pitfalls of earlier “media/methods” debates where technology effects were often confounded with instructional design [150].

- Developing New Pedagogical Models: The situation calls for the development of new pedagogical models that constructively integrate AI. This involves training educators and students in AI literacy, prompt engineering skills, and the critical evaluation of AI-generated outputs, and designing learning experiences that leverage AI as a tool for enhancing human intellect and creativity, rather than replacing it [151,152].

6. Engineering New Frontiers with ChatGPT: Advancing Design, Optimization, and Methodological Frameworks

6.1. Applications in Engineering Disciplines

- Geotechnical Engineering: ChatGPT can generate finite element analysis (FEA) code for modeling complex processes, though its effectiveness varies based on the programming library used, underscoring its role as an assistant [46].

- Control Systems Engineering: Studies show ChatGPT can pass undergraduate control systems courses but struggles with open-ended projects requiring deep synthesis and practical judgment [158].

- Automated Design and Analysis with Engineering Agents: The next frontier is the deployment of engineering agents. These are autonomous systems that can manage complex, multi-step engineering workflows. For example, an agent could be tasked with a high-level goal, such as designing a mechanical part, and then autonomously generate design options, use software tools to run simulations (e.g., finite element analysis (FEA)—a computational method that enables engineers to predict how structures or components will respond to physical forces such as stress, heat, and vibration), interpret the results, and iterate on the design until specifications are met [120]. The criticality of human oversight and validation in these applications scales with the potential impact of errors; while minor inaccuracies in ideation might be tolerable, those in safety-critical FEA code generation demand absolute precision and rigorous validation protocols.

6.2. Theoretical Constructs and Novel Engineering Methodologies

- Prompt Engineering for Optimization: Effective problem formulation using ChatGPT relies heavily on sophisticated prompt engineering and sequential learning approaches [157].

- Human–LLM Design Practices: Comparative studies are yielding insights into LLM strengths (e.g., breadth of ideation) and weaknesses (e.g., design fixation), leading to recommendations for structured design processes with human oversight [159]. This design fixation stems from their training on vast datasets of existing designs, leading them to synthesize rather than truly innovate beyond established paradigms, thus highlighting the continued indispensability of human creativity for disruptive engineering solutions. This also means that while LLMs excel at optimizing within defined constraints, they currently struggle to generate entirely novel paradigms or break free from conventional design wisdom.

- Cognitive Impact on Design Thinking: Research is exploring how AI influences designers’ cognitive processes, such as fostering thinking divergence and fluency [156].

- LLMs in Systems Engineering (SE): While LLMs can generate SE artifacts, there are significant risks, including tendencies toward “premature requirements definition” and “unsubstantiated numerical estimates” [160]. These risks are magnified in autonomous agentic systems where flawed assumptions could propagate through an entire automated workflow.

- Methodologies for Agentic Workflows: The rise of engineering agents necessitates new methodologies for managing human–agent and agent–agent collaboration. This includes designing frameworks for task decomposition, tool selection, and process validation to ensure the reliability and safety of autonomous engineering systems [7].

6.3. Impact on Engineer Productivity and Future Practice

- Productivity Gains: Studies report significant productivity increases from using LLMs for tasks like code generation and drafting [155]. The shift toward agentic AI promises to extend these gains from task assistance to end-to-end workflow automation [154]. This automation particularly targets repetitive, data-intensive, or computationally heavy tasks such as iterative design optimization, extensive code refactoring, or multi-criteria material selection, thereby freeing engineers to focus on higher-level problem definition, innovative conceptualization, and critical validation.

- Preparing Future Engineers: Engineering curricula must adapt to prepare students for workplaces where GenAI tools are prevalent. This includes teaching AI literacy, prompt engineering, and the critical evaluation of AI outputs to ensure they can effectively supervise and collaborate with AI systems [3].

6.4. Advancing Engineering Methodologies and Theoretical Frameworks

- Agent-Assisted Engineering Frameworks: There is an opportunity to develop structured frameworks that explicitly integrate AI agents at various stages of the engineering design process. These frameworks would define roles, responsibilities, and interaction protocols for human engineers and their agentic counterparts.

- Theories of AI-Robustness in Design: The identification of LLM failure modes [160] can inform new theories around “AI-robustness” to predict and mitigate risks associated with using AI in critical applications. Such theories would need to encompass not only the prediction of explicit failure modes but also the development of systems resilient to adversarial inputs, capable of graceful degradation, and equipped with uncertainty quantification for decisions in safety-critical contexts.

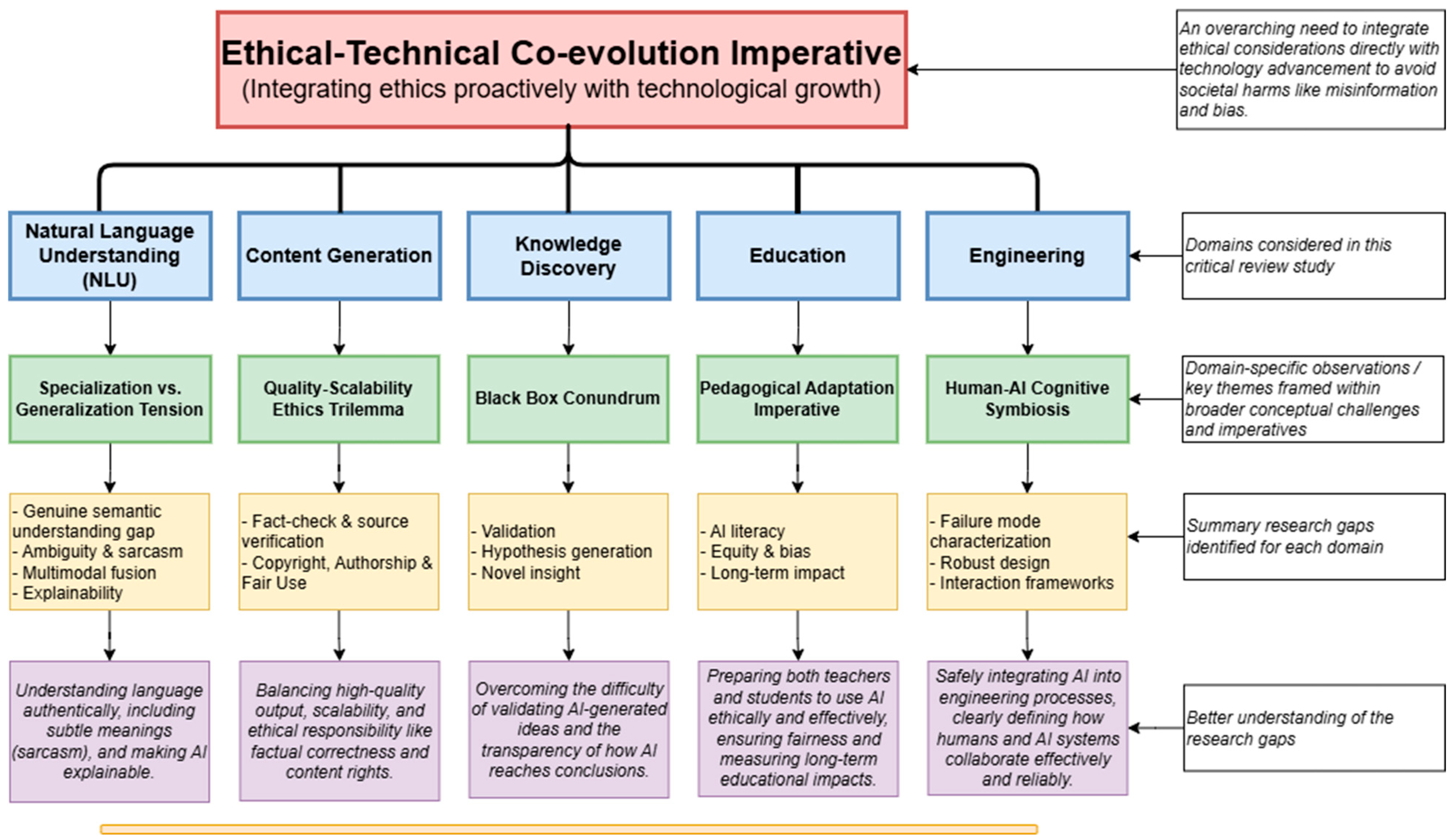

7. Navigating the AI Revolution: Themes, Tensions, Critical Gaps, and Future Directions

7.1. Common Themes Across Domains

7.2. Synthesis of Themes and Identification of Critical Research Gaps

- Natural Language Understanding (NLU): The “Specialization vs. Generalization Tension” persists. A fundamental gap lies in discerning genuine semantic understanding versus sophisticated pattern matching [24,25,27,56]. Future research should employ rigorous experimental designs, including controlled studies on compositional generalization and systematicity, to precisely delineate the nature of LLM comprehension beyond statistical co-occurrence [156,157,158]. This gap becomes a critical safety concern for agentic systems that must act reliably based on their understanding of commands and environmental cues. The lack of explainability hinders trust and theoretical advancement, a problem that becomes acute when an agent’s reasoning cannot be audited [7,23,28,158].

- Content Generation: The “Quality–Scalability–Ethics Trilemma” is a core challenge [5,102]. With the rise of agentic AI, this trilemma intensifies, as the potential for autonomous systems to act unethically at scale poses a far greater risk than generating harmful text alone. New technical solutions, legal and practical frameworks are urgently needed to govern the processes and actions of these agents [162,163].

- Education: The “Pedagogical Adaptation Imperative” demands a shift in focus to skills that complement AI. A critical gap is the lack of research on how to educate students to collaborate with and critically supervise learning agents without sacrificing their own cognitive autonomy [150,151,164]. Ensuring equitable access to powerful learning agents is crucial to prevent a widening of educational disparities [165].

7.3. Proposal of a Forward-Looking Research Agenda

7.3.1. Methodological Advancements

- NLU: Develop benchmarks that assess “deep understanding” and robust reasoning, critical for safe agentic behavior.

- Content and Action Generation: Design adaptive quality and ethical control frameworks that are integrated directly into an agent’s decision-making loop.

- Knowledge Discovery: Develop and validate rigorous protocols for human supervision of AI-assisted hypothesis generation and experimentation.

- Education: Conduct longitudinal studies on the impact of learning agents on cognitive development. Design and test AI literacy curricula focused on human–agent collaboration.

- Engineering: Formulate comprehensive testing and validation protocols for agents used in safety-critical design tasks and implement robust human-in-the-loop control frameworks.

- Cross-Domain Methodologies for Agentic Systems: A crucial priority is to develop standardized safety protocols, robust and intuitive human-in-the-loop control mechanisms, and secure “sandboxing” environments for testing the behavior of autonomous agents before deployment in real-world settings.

7.3.2. Theoretical Advancements

- NLU: Formulate theories of “Explainable Generative NLU” to make agent reasoning transparent.

- Content and Action Generation: Develop “Ethical AI Agency Frameworks” that provide a theoretical basis for guiding the responsible actions of autonomous systems.

- Knowledge Discovery: Propose “Computational Creativity Theories” to explain how AI agents contribute to novel discovery. These theories should model processes such as divergent idea generation, conceptual blending, and the computational mechanisms underlying scientific insight, moving beyond mere hypothesis generation to explain the emergence of truly novel scientific paradigms.

- Education: Build “AI-Augmented Learning Theories” that model how students learn effectively in partnership with AI agents, exploring frameworks like “Cyborg Pedagogy.”

- Engineering: Conceptualize “Human–Agent Symbiotic Engineering Theories” that define principles for shared cognition and distributed responsibility in human–agent teams.

- Theories of Trustworthy Autonomy and Governance: An overarching theoretical challenge is to develop robust theories of human–agent teaming, create computational models for agent accountability, and design governance frameworks for multi-agent ecosystems where agents interact with each other and with society [4].

7.4. Practical Implications for Method, Theory, and Practice

- Method: The identified limitations necessitate new methodological approaches. This includes developing robust validation protocols for both generated content and agentic actions, advancing techniques like Retrieval-Augmented Generation (RAG) to ground agent knowledge [18], and establishing prompt engineering as a core skill for effective human–agent interaction. Crucially, new methods are needed for designing, testing, and ensuring the safety and reliability of complex, multi-step agentic workflows [7].

- Theory: The challenges and emergent interactions demand new theoretical frameworks. These include theories for Explainable NLU, Responsible Generative Efficiency, and AI-assisted Abductive Reasoning. In education and engineering, this means developing AI-Augmented Learning Theories and Human–Agent Symbiotic Engineering Theories. These frameworks are the essential theoretical underpinnings for building trustworthy and beneficial AI agents. Overarching this is the need for Co-evolutionary AI Development Frameworks that model the interplay between technical and ethical progress, which is paramount for guiding agentic systems [167].

- Practice: The practical implications are vast, requiring significant adaptation. This includes revising educational pedagogy to focus on skills like critical thinking and AI literacy, training professionals in human–agent teaming (HAT) [82], implementing rigorous quality assurance for AI outputs through the development and deployment of governance frameworks, and prioritizing ethical design and bias mitigation. Successfully enacting these changes will also demand robust institutional support, significant investment in AI infrastructure and continuous professional development, and overcoming inherent organizational inertia to embrace these transformative paradigms. The shift in practice is from using AI as a tool to leveraging it as a cognitive partner; this partnership is evolving into one where humans provide strategic oversight and ethical judgment for increasingly autonomous AI agents [81].

8. Limitation of This Critical Review Study

9. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Akhtarshenas, A.; Dini, A.; Ayoobi, N. ChatGPT or A Silent Everywhere Helper: A Survey of Large Language Models. arXiv 2025, arXiv:2503.17403. [Google Scholar]

- Infosys Limited. A Perspective on ChatGPT, Its Impact and Limitations. 2023. Available online: https://www.infosys.com/techcompass/documents/perspective-chatgpt-impact-limitations.pdf (accessed on 22 May 2025).

- Murray, M.; Maclachlan, R.; Flockhart, G.M.; Adams, R.; Magueijo, V.; Goodfellow, M.; Liaskos, K.; Hasty, W.; Lauro, V. A ‘snapshot’of engineering practitioners views of ChatGPT-informing pedagogy in higher education. Eur. J. Eng. Educ. 2025, 1–26. [Google Scholar] [CrossRef]

- Xi, Z.; Chen, W.; Guo, X.; He, H.; Ding, Y.; Hong, B.; Zhang, M.; Wang, J.; Jin, S.; Zhou, E.; et al. The rise and potential of large language model based agents: A survey. arXiv 2023, arXiv:2309.07864. [Google Scholar] [CrossRef]

- Dempere, J.; Modugu, K.; Hesham, A.; Ramasamy, L.K. The impact of ChatGPT on higher education. Front. Educ. 2023, 8, 1206936. [Google Scholar] [CrossRef]

- Al Naqbi, H.; Bahroun, Z.; Ahmed, V. Enhancing work productivity through generative artificial intelligence: A comprehensive literature review. Sustainability 2024, 16, 1166. [Google Scholar] [CrossRef]

- Sapkota, R.; Raza, S.; Karkee, M. Comprehensive analysis of transparency and accessibility of chatgpt, deepseek, and other sota large language models. arXiv 2025, arXiv:2502.18505. [Google Scholar]

- Jurafsky, D.; Martin, J.H. Speech and Language Processing, 3rd ed.; Prentice Hall: Hoboken, NJ, USA, 2000. [Google Scholar]

- Chowdhery, A.; Narang, S.; Devlin, J.; Bosma, M.; Mishra, G.; Roberts, A.; Barham, P.; Chung, H.W.; Sutton, C.; Gehrmann, S.; et al. PaLM: Scaling language modeling with pathways. J. Mach. Learn. Res. 2023, 24, 1–13. [Google Scholar]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Advances in Neural Information Processing Systems 30; Curran Associates, Inc.: Red Hook, NY, USA, 2017; pp. 5998–6008. Available online: https://proceedings.neurips.cc/paper/2017/file/3f5ee243547dee91fbd053c1c4a845aa-Paper.pdf (accessed on 20 December 2024).

- OpenAI. GPT-4 Technical Report. 2023. Available online: https://openai.com/index/gpt-4-research/ (accessed on 22 May 2025).

- Sennrich, R.; Haddow, B.; Birch, A. Neural Machine Translation of Rare Words with Subword Units. In Volume 1: Long Papers, Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics, Berlin, Germany, 7–12 August 2016; Association for Computational Linguistics: Berlin, Germany, 2016. [Google Scholar]

- OpenAI. ChatGPT FAQ. 2024. Available online: https://help.openai.com/en/collections/3742473-chatgpt (accessed on 22 May 2025).

- OpenAI. Hello GPT-4o. 2024. Available online: https://openai.com/index/hello-gpt-4o/ (accessed on 22 May 2025).

- Hariri, W. Unlocking the potential of ChatGPT: A comprehensive exploration of its applications, advantages, limitations, and future directions in natural language processing. arXiv 2023, arXiv:2304.02017. [Google Scholar]

- Park, J.S.; O’Brien, J.C.; Cai, C.J.; Morris, M.R.; Liang, P.; Bernstein, M.S. Generative agents: Interactive simulacra of human behavior. In Proceedings of the 36th Annual ACM Symposium on User Interface Software and Technology; San Francisco, CA, USA, 29 October–1 November 2023, Association for Computing Machinery: New York, NY, USA, 2023; pp. 1–22. [Google Scholar] [CrossRef]

- Shinn, N.; Cassano, F.; Gopinath, A.; Narasimhan, K.; Yao, S. Reflexion: Language agents with verbal reinforcement learning. arXiv 2023, arXiv:2303.11366. [Google Scholar] [CrossRef]

- Lewis, P.; Perez, E.; Piktus, A.; Petroni, F.; Karpukhin, V.; Goyal, N.; Küttler, H.; Lewis, M.; Yih, W.T.; Rocktäschel, T.; et al. Retrieval-augmented generation for knowledge-intensive nlp tasks. Adv. Neural Inf. Process. Syst. 2020, 33, 9459–9474. [Google Scholar]

- Yu, Y.; Kim, S.; Lee, W.; Koo, B. Evaluating ChatGPT on Korea’s BIM Expertise Exam and improving its performance through RAG. J. Comput. Des. Eng. 2025, 12, 94–120. [Google Scholar] [CrossRef]

- Gao, Y.; Xiong, Y.; Gao, X.; Jia, K.; Pan, J.; Bi, Y.; Dai, Y.; Sun, J.; Wang, H. Retrieval-augmented generation for large language models: A survey. arXiv 2023, arXiv:2312.10997. [Google Scholar]

- Empirical Methods in Natural Language Processing. The 2024 Conference on Empirical Methods in Natural Language Processing. 2024. Available online: https://aclanthology.org/events/emnlp-2024/ (accessed on 11 June 2025).

- Wu, J.; Gan, W.; Chen, Z.; Wan, S.; and Yu, P.S. Multimodal large language models: A survey. In Proceedings of the 2023 IEEE International Conference on Big Data (BigData): Sorrento, Naples, Italy, 15–18 December 2023; pp. 2247–2256. [Google Scholar]

- OpenAI; Achiam, J.; Adler, S.; Agarwal, S.; Ahmad, L.; Akkaya, I.; Aleman, F.L.; Almeida, D.; Altenschmidt, J.; Altman, S.; et al. Gpt-4 technical report. arXiv 2023, arXiv:2303.08774. [Google Scholar] [CrossRef]

- Baroni, M. Linguistic generalization and compositionality in modern artificial neural networks. Philos. Trans. R. Soc. B 2020, 375, 20190307. [Google Scholar] [CrossRef] [PubMed]

- Katzir, R. Why Large Language Models Are Poor Theories of Human Linguistic Cognition. A Reply to Piantadosi (2023); Tel Aviv University: Tel Aviv, Israel, 2023; Available online: https://lingbuzz.net/lingbuzz/007190 (accessed on 22 May 2025).

- Lake, B.M. Compositional generalization through meta sequence-to-sequence learning. Adv. Neural Inf. Process. Syst. 2019, 32. [Google Scholar]

- Shormani, M.Q. Non-native speakers of English or ChatGPT: Who thinks better? arXiv 2024, arXiv:2412.00457. [Google Scholar]

- Liu, Y.; Yao, Y.; Ton, J.F.; Zhang, X.; Guo, R.; Cheng, H.; Klochkov, Y.; Taufiq, M.F.; Li, H. Trustworthy llms: A survey and guideline for evaluating large language models’ alignment. arXiv 2023, arXiv:2308.05374. [Google Scholar]

- Liu, Y.; Deng, G.; Xu, Z.; Li, Y.; Zheng, Y.; Zhang, Y.; Zhao, L.; Zhang, T.; Wang, K.; Liu, Y. Jailbreaking chatgpt via prompt engineering: An empirical study. arXiv 2023, arXiv:2305.13860. [Google Scholar]

- Bender, E.M.; Gebru, T.; McMillan-Major, A.; Shmitchell, S. On the dangers of stochastic parrots: Can language models be too bi? In Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency, New York, NY, USA, 3–10 March 2021; pp. 610–623. [Google Scholar]

- OpenAI. GPT-3.5 Turbo. 2023. Available online: https://openai.com/index/gpt-3-5-turbo-fine-tuning-and-api-updates/ (accessed on 22 May 2025).

- Wolf, T.; Debut, L.; Sanh, V.; Chaumond, J.; Delangue, C.; Moi ARush, A.M. Huggingface’s transformers: State-of-the-art natural language processing. arXiv 2019, arXiv:1910.03771. Available online: https://arxiv.org/abs/1910.03771 (accessed on 20 July 2025).

- Mavrepis, P.; Makridis, G.; Fatouros, G.; Koukos, V.; Separdani, M.M.; Kyriazis, D. XAI for all: Can large language models simplify explainable AI? arXiv 2024, arXiv:2401.13110. [Google Scholar] [CrossRef]

- Zhao, H.; Chen, H.; Yang, F.; Liu, N.; Deng, H.; Cai, H.; Du, M. Explainability for large language models: A survey. ACM Trans. Intell. Syst. Technol. 2024, 15, 1–38. [Google Scholar] [CrossRef]

- Weidinger, L.; Mellor, J.; Rauh, M.; Griffin, C.; Uesato, J.; Huang, P.-S.; Cheng, M.; Glaese, M.; Balle, B.; Kasirzadeh, A.; et al. An overarching risk analysis and management framework for frontier AI. arXiv 2024, arXiv:2405.02111. [Google Scholar] [CrossRef]

- Susskind, R.; Susskind, D. The Future of the Professions: How Technology Will Transform the Work of Human Experts; Oxford University Press: Oxford, UK, 2022. [Google Scholar]

- Ray, P.P. ChatGPT: A comprehensive review on background, applications, key challenges, bias, ethics, limitations and future scope. Internet Things Cyber-Phys. Syst. 2023, 3, 121–154. [Google Scholar] [CrossRef]

- Shah, N.; Jain, S.; Lauth, J.; Mou, Y.; Bartsch, M.; Wang, Y.; Luo, Y. Can large language models reason about medical conversation? arXiv 2023, arXiv:2305.00412. [Google Scholar]

- Rice, S.; Crouse, S.R.; Winter, S.R.; Rice, C. The advantages and limitations of using ChatGPT to enhance technological research. Technol. Soc. 2024, 76, 102426. [Google Scholar] [CrossRef]

- Nguyen, M.N.; Nguyen Thanh, B.; Vo, D.T.H.; Pham Thi Thu, T.; Thai, H.; Ha Xuan, S. Evaluating the Efficacy of Generative Artificial Intelligence in Grading: Insights from Authentic Assessments in Economics. SSRN Electron. J. 2024. [Google Scholar] [CrossRef]

- Thelwall, M. Evaluating research quality with large language models: An analysis of ChatGPT’s effectiveness with different settings and inputs. J. Data Inf. Sci. 2025, 10, 7–25. [Google Scholar] [CrossRef]

- RedBlink. Llama 4 vs ChatGPT: Comprehensive AI Models Comparison 2025. Available online: https://redblink.com/llama-4-vs-chatgpt/ (accessed on 22 May 2025).

- OpenAI. Introducing o1: Our Next Step in AI research. 2024. Available online: https://openai.com/o1/ (accessed on 22 May 2025).

- OpenAI Help Center. What is the ChatGPT Model Selector? Available online: https://help.openai.com/en/articles/7864572-what-is-the-chatgpt-model-selector (accessed on 11 June 2025).

- OpenAI. o1-mini: Our Best Performing Model on AIME. 2024. Available online: https://openai.com/index/openai-o1-mini-advancing-cost-efficient-reasoning/ (accessed on 4 August 2025).

- Kim, T.; Yun, T.S.; Suh, H.S. Can ChatGPT implement finite element models for geotechnical engineering applications? Int. J. Numer. Anal. Methods Geomech. 2025, 49, 1747–1766. [Google Scholar] [CrossRef]

- Wang, A.; Singh, A.; Michael, J.; Hill, F.; Levy, O.; Bowman, S.R. GLUE: A Multi-Task Benchmark and Analysis Platform for Natural Language Understanding. In Proceedings of the 2018 EMNLP Workshop BlackboxNLP: Analyzing and Interpreting Neural Networks for NLP; Association for Computational Linguistics: Brussels, Belgium, 2018. [Google Scholar]

- Chalkidis, I.; Jana, A.; Hartung, D.; Bommarito, M.; Androutsopoulos, I.; Katz, D.M.; Aletras, N. LexGLUE: A benchmark dataset for legal language understanding in English. In Long Papers, Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics, Dublin, Ireland, 22–27 May 2022; Association for Computational Linguistics: Dublin, Ireland, 2022; Volume 1. [Google Scholar]

- Liu, Y.; Ott, M.; Goyal, N.; Du, J.; Joshi, M.; Chen, D.; Levy, O.; Lewis, M.; Zettlemoyer, L.; Stoyanov, V. RoBERTa: A Robustly Optimized BERT Pretraining Approach. arXiv 2019, arXiv:1907.11692. [Google Scholar]

- Eastman, C.; Teicholz, P.; Sacks, R.; Liston, K. BIM Handbook: A Guide to Building Information Modeling for Owners, Managers, Designers, Engineers and Contractors; John Wiley & Sons: Hoboken, NJ, USA, 2011. [Google Scholar]

- Nori, H.; King, N.; McKinney, S.M.; Carignan, D.; Horvitz, E. The unreasonable effectiveness of GPT-4 in medicine. arXiv 2023, arXiv:2303.12039. [Google Scholar]

- Adams, L.C.; Truhn, D.; Busch, F.; Bressem, K.K. Harnessing the power of retrieval-augmented generation for radiology reporting. arXiv 2023, arXiv:2306.02731. [Google Scholar]

- Hendrycks, D.; Burns, C.; Basart, S.; Zou, A.; Mazeika, M.; Song, D.; Steinhardt, J. Measuring Massive Multitask Language Understanding. In Proceedings of the International Conference on Learning Representations, Vienna, Austria, 3–7 May 2021. [Google Scholar]

- Kahneman, D. Thinking, Fast and Slow; Farrar, Straus and Giroux: New York, NY, USA, 2011. [Google Scholar]

- Mathematical Association of America (MAA). American Invitational Mathematics Examination. 2025. Available online: https://www.maa.org/math-competitions/aime (accessed on 4 August 2025).

- Lake, B.M.; Baroni, M. Human-like systematic generalization through a meta-learning neural network. Nature 2023, 623, 115–121. [Google Scholar] [CrossRef]

- Hu, Y.; Lu, Y. Rag and rau: A survey on retrieval-augmented language model in natural language processing. arXiv 2024, arXiv:2404.19543. [Google Scholar] [CrossRef]

- Wu, Y.; Zhao, Y.; Hu, B.; Minervini, P.; Stenetorp, P.; Riedel, S. An efficient memory-augmented transformer for knowledge-intensive nlp tasks. arXiv 2022, arXiv:2210.16773. [Google Scholar]

- Yu, W. Retrieval-augmented generation across heterogeneous knowledge. In Proceedings of the 2022 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Seattle, WA, USA, 10–15 July 2022; Student Research Workshop: Seattle, WA, USA, 2022; pp. 52–58. [Google Scholar]

- Melamed, R.; McCabe, L.H.; Wakhare, T.; Kim, Y.; Huang, H.H.; Boix-Adsera, E. Prompts have evil twins. arXiv 2023, arXiv:2311.07064. [Google Scholar]

- Mozes, M.A.J. Understanding and Guarding Against Natural Language Adversarial Examples. Ph.D. Thesis, University College London, London, UK, 2024. [Google Scholar]

- Mozes, M.; He, X.; Kleinberg, B.; Griffin, L.D. Use of llms for illicit purposes: Threats, prevention measures, and vulnerabilities. arXiv 2023, arXiv:2308.12833. [Google Scholar] [CrossRef]

- Oremus, W. The Clever Trick That Turns ChatGPT Into Its Evil Twin. The Washington Post. 2023. Available online: https://www.washingtonpost.com/technology/2023/02/14/chatgpt-dan-jailbreak/ (accessed on 22 May 2025).

- Perez, F.; Ribeiro, I. Ignore previous prompt: Attack techniques for language models. arXiv 2022, arXiv:2211.09527. [Google Scholar] [CrossRef]

- Xue, J.; Zheng, M.; Hua, T.; Shen, Y.; Liu, Y.; Bölöni, L.; Lou, Q. Trojllm: A black-box trojan prompt attack on large language models. Adv. Neural Inf. Process. Syst. 2023, 36, 65665–65677. [Google Scholar]

- Hodge, S.D., Jr. Revolutionizing Justice: Unleashing the Power of Artificial Intelligence. SMU Sci. Technol. Law Rev. 2023, 26, 217. [Google Scholar] [CrossRef]

- Perlman, A. The implications of ChatGPT for legal services and society. Mich. Technol. Law Rev. 2023, 30, 1. [Google Scholar]

- Surden, H. ChatGPT, AI large language models, and law. Fordham Law Rev. 2023, 92, 1941. [Google Scholar]

- Naveed, J. Optimized Code Generation in BIM with Retrieval-Augmented LLMs. Master’s Thesis, Aalto University School of Science, Otaniemi, Finland, 2025. [Google Scholar]

- Neveditsin, N.; Lingras, P.; Mago, V. Clinical insights: A comprehensive review of language models in medicine. PLoS Digit. Health 2025, 4, e0000800. [Google Scholar] [CrossRef]

- Fisher, J. ChatGPT for Legal Marketing: 6 Ways to Unlock the Power of AI. AI-CASEpeer. May 2025. Available online: https://www.casepeer.com/blog/chatgpt-for-legal-marketing/ (accessed on 22 May 2025).

- Elkatmis, M. ChatGPT and Creative Writing: Experiences of Master’s Students in Enhancing. Int. J. Contemp. Educ. Res. 2024, 11, 321–336. [Google Scholar] [CrossRef]

- Niloy, A.C.; Akter, S.; Sultana, N.; Sultana, J.; Rahman, S.I.U. Is Chatgpt a menace for creative writing ability? An experiment. J. Comput. Assist. Learn. 2024, 40, 919–930. [Google Scholar] [CrossRef]

- Zhu, S.; Wang, Z.; Zhuang, Y.; Jiang, Y.; Guo, M.; Zhang, X.; Gao, Z. Exploring the impact of ChatGPT on art creation and collaboration: Benefits, challenges and ethical implications. Telemat. Inform. Rep. 2024, 14, 100138. [Google Scholar] [CrossRef]

- Alasadi, E.; Baiz, A.A. ChatGPT: A systematic review of published research in medical education. medRxiv 2023. [Google Scholar]

- Dwivedi, Y.K.; Kshetri, N.; Hughes, L.; Slade, E.L.; Jeyaraj, A.; Kar, A.K.; Baabdullah, A.M.; Koohang, A.; Raghavan, V.; Ahuja, M.; et al. Opinion Paper: “So what if ChatGPT wrote it?” Multidisciplinary perspectives on opportunities, challenges and implications of generative conversational AI for research, practice and policy. Int. J. Inf. Manag. 2023, 71, 102642. [Google Scholar] [CrossRef]

- Isiaku, L.; Muhammad, A.S.; Kefas, H.I.; Ukaegbu, F.C. Enhancing technological sustainability in academia: Leveraging ChatGPT for teaching, learning and evaluation. Qual. Educ. All 2024, 1, 385–416. [Google Scholar] [CrossRef]

- Michel-Villarreal, R.; Vilalta-Perdomo, E.; Salinas-Navarro, D.E.; Thierry-Aguilera, R.; Gerardou, F.S. Challenges and opportunities of generative AI for higher education as explained by ChatGPT. Educ. Sci. 2023, 13, 856. [Google Scholar] [CrossRef]

- Preiksaitis, C.; Rose, C. Opportunities, challenges, and future directions of generative artificial intelligence in medical education: Scoping review. JMIR Med. Educ. 2023, 9, e48785. [Google Scholar] [CrossRef]

- Wu, T.Y.; He, S.Z.; Liu, J.P.; Sun, S.Q.; Liu, K.; Han, Q.L.; Tang, Y. A brief overview of ChatGPT: The history, status quo and potential future development. IEEE/CAA J. Autom. Sinica 2023, 10, 1122–1136. [Google Scholar] [CrossRef]

- Shneiderman, B. Human-Centered AI; Oxford University Press: Oxford, UK, 2022. [Google Scholar]

- Seeber, I.; Bittner, E.; Briggs, R.O.; de Vreede, T.; de Vreede, G.-J.; Elkins, A.; Maier, R.; Merz, A.B.; Oeste-Reiß, S.; Randrup, N.; et al. Machines as teammates: A research agenda on AI in team collaboration. Inf. Manag. 2020, 57, 103174. [Google Scholar] [CrossRef]

- Arvidsson, S.; Axell, J. Prompt Engineering Guidelines for LLMs in Requirements Engineering. Ph.D. Thesis, University of Technology, Gothenburg, Sweden, 2023. Available online: https://gupea.ub.gu.se/bitstream/handle/2077/77967/CSE%2023-20%20SA%20JA.pdf?sequence=1&isAllowed=y (accessed on 20 July 2025).

- Marvin, G.; Hellen, N.; Jjingo, D.; Nakatumba-Nabende, J. Prompt engineering in large language models. In International Conference on Data Intelligence and Cognitive Informatics; Springer Nature: Singapore, 2023; pp. 387–402. [Google Scholar]

- Velásquez-Henao, J.D.; Franco-Cardona, C.J.; Cadavid-Higuita, L. Prompt Engineering: A methodology for optimizing interactions with AI-Language Models in the field of engineering. Dyna 2023, 90, 9–17. [Google Scholar] [CrossRef]

- Zhou, Y.; Muresanu, A.I.; Han, Z.; Paster, K.; Pitis, S.; Chan, H.; Ba, J. Large language models are human-level prompt engineers. In Proceedings of the 11th International Conference on Learning Representations, Kigali, Rwanda, 1–5 May 2023. [Google Scholar]

- Pan, S.; Luo, L.; Wang, Y.; Chen, C.; Wang, J.; Wu, X. Unifying large language models and knowledge graphs: A survey. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2024, 14, e1518. [Google Scholar] [CrossRef]

- Kaushik, A.; Yadav, S.; Browne, A.; Lillis, D.; Williams, D.; Donnell, J.M.; Grant, P.; Kernan, S.C.; Sharma, S.; Mansi, A. Exploring the Impact of Generative Artificial Intelligence in Education: A Thematic Analysis. arXiv 2025, arXiv:2501.10134. [Google Scholar]

- Hadi, M.U.; Qureshi, R.; Shah, A.; Irfan, M.; Zafar, A.; Shaikh, M.B.; Akhtar, N.; Hassan, S.Z.; Shoman, M.; Wu, J.; et al. Large language models: A comprehensive survey of its applications, challenges, limitations, and future prospects. Authorea Prepr. 2023, 1, 1–26. [Google Scholar]

- Chen, B.; Zhang, Z.; Langrené, N.; Zhu, S. Unleashing the potential of prompt engineering for large language models. Patterns 2025, 6, 101260. [Google Scholar] [CrossRef]

- Sivarajkumar, S.; Kelley, M.; Samolyk-Mazzanti, A.; Visweswaran, S.; Wang, Y. An empirical evaluation of prompting strategies for large language models in zero-shot clinical natural language processing: Algorithm development and validation study. JMIR Med. Inform. 2024, 12, e55318. [Google Scholar] [CrossRef]

- Naseem, U.; Dunn, A.G.; Khushi, M.; Kim, J. Benchmarking for biomedical natural language processing tasks with a domain specific ALBERT. BMC Bioinform. 2022, 23, 144. [Google Scholar] [CrossRef] [PubMed]

- Perez, E.; Huang, S.; Song, F.; Cai, T.; Ring, R.; Aslanides, J.; Glaese, A.; McAleese, N.; Irving, G. Red teaming language models with language models. arXiv 2022, arXiv:2202.03286. [Google Scholar] [CrossRef]

- Garousi, V. Why you shouldn’t fully trust ChatGPT: A synthesis of this AI tool’s error rates across disciplines and the software engineering lifecycle. arXiv 2023, arXiv:2504.18858. [Google Scholar]

- Schiller, C.A. The human factor in detecting errors of large language models: A systematic literature review and future research directions. arXiv 2024, arXiv:2403.09743. [Google Scholar] [CrossRef]

- Lee, H. The rise of ChatGPT: Exploring its potential in medical education. Anat. Sci. Educ. 2024, 17, 926–931. [Google Scholar] [CrossRef]

- Johnson, S.; Acemoglu, D. Power and Progress: Our Thousand-Year Struggle over Technology and Prosperity; Hachette UK: London, UK, 2023. [Google Scholar]

- OpenAI. Safety & Alignment. 2023. Available online: https://openai.com/safety/ (accessed on 22 May 2025).

- Veisi, O.; Bahrami, S.; Englert, R.; Müller, C. AI Ethics and Social Norms: Exploring ChatGPT’s Capabilities from What to How. arXiv 2025, arXiv:2504.18044. [Google Scholar]

- Daun, M.; Brings, J. How ChatGPT will change software engineering education. In Proceedings of the 2023 Conference on Innovation and Technology in Computer Science Education V. 1, Turku, Finland, 7–12 July 2023; pp. 110–116. [Google Scholar]

- Marques, N.; Silva, R.R.; Bernardino, J. Using chatgpt in software requirements engineering: A comprehensive review. Future Internet 2024, 16, 180. [Google Scholar] [CrossRef]

- Gamage, K.A.; Dehideniya, S.C.; Xu, Z.; Tang, X. ChatGPT and higher education assessments: More opportunities than concerns? J. Appl. Learn. Teach. 2023, 6, 358–369. [Google Scholar] [CrossRef]

- Gao, R.; Yu, D.; Gao, B.; Hua, H.; Hui, Z.; Gao, J.; Yin, C. Legal regulation of AI-assisted academic writing: Challenges, frameworks, and pathways. Front. Artif. Intell. 2025, 8, 1546064. [Google Scholar] [CrossRef]

- Hannigan, T.R.; McCarthy, I.P.; Spicer, A. Beware of botshit: How to manage the epistemic risks of generative chatbots. Bus. Horiz. 2024, 67, 471–486. [Google Scholar] [CrossRef]

- Jiang, Y.; Hao, J.; Fauss, M.; Li, C. Detecting ChatGPT-generated essays in a large-scale writing assessment: Is there a bias against non-native English speakers? Comput. Educ. 2024, 217, 105070. [Google Scholar] [CrossRef]

- Susnjak, T.; McIntosh, J. Academic integrity in the age of ChatGPT. Change Mag. High. Learn. 2024, 56, 21–27. [Google Scholar]

- Levitt, G.; Grubaugh, S. Artificial intelligence and the paradigm shift: Reshaping education to equip students for future careers. Int. J. Soc. Sci. Humanit. Invent. 2023, 10, 7931–7941. [Google Scholar] [CrossRef]

- U.S. Department of Education, Office of Educational Technology. Artificial Intelligence and the Future of Teaching and Learning: Insights and Recommendations. 2023. Available online: https://www.ed.gov/sites/ed/files/documents/ai-report/ai-report.pdf (accessed on 22 May 2025).

- Dagdelen, J.; Dunn, A.; Lee, S.; Walker, N.; Rosen, A.S.; Ceder, G.; Persson, K.A.; Jain, A. Structured information extraction from scientific text with large language models. Nat. Commun. 2024, 15, 1418. [Google Scholar] [CrossRef]

- Mitra, M.; de Vos, M.G.; Cortinovis, N.; Ometto, D. Generative AI for Research Data Processing: Lessons Learnt From Three Use Cases. In Proceedings of the 2024 IEEE 20th International Conference on e-Science (e-Science), Osaka, Japan, 16–20 September 2024; pp. 1–10. [Google Scholar]

- Yang, X.; Chen, A.; PourNejatian, N.; Shin, H.C.; Smith, K.E.; Parisien, C.; Compas, C.; Martin, C.; Flores, M.G.; Zhang, Y.; et al. Gatortron: A large clinical language model to unlock patient information from unstructured electronic health records. arXiv 2022, arXiv:2203.03540. [Google Scholar] [CrossRef]

- Gao, X.; Zhang, Z.; Xie, M.; Liu, T.; Fu, Y. Graph of AI Ideas: Leveraging Knowledge Graphs and LLMs for AI Research Idea Generation. arXiv 2025, arXiv:2503.08549. [Google Scholar]

- Bran, A.; Cox, S.R.; Schilter, P. ChemCrow: Augmenting large-language models with a tool-set for chemistry. arXiv 2024. [Google Scholar] [CrossRef]

- Chang, X.; Dai, G.; Di, H.; Ye, H. Breaking the Prompt Wall (I): A Real-World Case Study of Attacking ChatGPT via Lightweight Prompt Injection. arXiv 2025, arXiv:2504.16125. [Google Scholar]

- Albadarin, Y.; Saqr, M.; Pope, N.; Tukiainen, M. A systematic literature review of empirical research on ChatGPT in education. Discov. Educ. 2024, 3, 60. [Google Scholar] [CrossRef]

- Gabashvili, I.S. The impact and applications of ChatGPT: A systematic review of literature reviews. arXiv 2023, arXiv:2305.18086. [Google Scholar] [CrossRef]

- Haman, M.; Školník, M. Using ChatGPT for scientific literature review: A case study. IASL 2024, 1, 1–13. [Google Scholar]

- Imran, M.; Almusharraf, N. Analyzing the role of ChatGPT as a writing assistant at higher education level: A systematic review of the literature. Cont. Edu. Tech. 2023, 15, ep464. [Google Scholar] [CrossRef]

- Mostafapour, M.; Asoodar, M.; Asoodar, M. Advantages and disadvantages of using ChatGPT for academic literature review. Cogent Eng. 2024, 11, 2315147. [Google Scholar]

- Wang, G.; Xie, Y.; Jiang, Y.; Mandlekar, A.; Xiao, C.; Zhu, Y.; Fan, L.; Anandkumar, A. Voyager: An open-ended embodied agent with large language models. arXiv 2023, arXiv:2305.16291. [Google Scholar] [CrossRef]

- Dai, W.; Lin, J.; Jin, H.; Li, T.; Tsai, Y.S.; Gašević, D.; Chen, G. Can large language models provide feedback to students? A case study on ChatGPT. In Proceedings of the 2023 IEEE International Conference on Advanced Learning Technologies (ICALT), Orem, UT, USA, 10–13 July 2023; IEEE: New York, NY, USA, 2023; pp. 323–325. [Google Scholar]

- Haltaufderheide, J.; Ranisch, R. ChatGPT and the future of academic publishing: A perspective. Am. J. Bioeth. 2024, 24, 4–11. [Google Scholar]

- Garg, R.K.; Urs, V.L.; Agarwal, A.A.; Chaudhary, S.K.; Paliwal, V.; Kar, S.K. Exploring the role of ChatGPT in patient care (diagnosis and treatment) and medical research: A systematic review. Health Promot. Perspect. 2023, 13, 183. [Google Scholar] [CrossRef]

- Sallam, M. ChatGPT utility in healthcare education, research, and practice: Systematic review on the promising perspectives and valid concerns. Healthcare 2023, 11, 887. [Google Scholar] [CrossRef]

- Glickman, M.; Zhang, Y. AI and generative AI for research discovery and summarization. arXiv 2024, arXiv:2401.06795. [Google Scholar] [CrossRef]

- Huang, J.; Chang, K.C.C. Towards reasoning in large language models: A survey. arXiv 2022, arXiv:2212.10403. [Google Scholar]

- Bhagavatula, C.; Bras, R.L.; Malaviya, C.; Sakaguchi, K.; Holtzman, A.; Rashkin, H.; Downey, D.; Yih, S.W.-t.; Choi, Y. Abductive commonsense reasoning. arXiv 2019, arXiv:1908.05739. [Google Scholar]

- Garbuio, M.; Lin, N. Innovative idea generation in problem finding: Abductive reasoning, cognitive impediments, and the promise of artificial intelligence. J. Prod. Innov. Manag. 2021, 38, 701–725. [Google Scholar] [CrossRef]

- Magnani, L.; Arfini, S. Model-based abductive cognition: What thought experiments teach us. Log. J. IGPL 2024, jzae096. [Google Scholar] [CrossRef]

- Pareschi, R. Abductive reasoning with the GPT-4 language model: Case studies from criminal investigation, medical practice, scientific research. Sist. Intelligenti 2023, 35, 435–444. [Google Scholar]

- Boiko, D.A.; MacKnight, R.; Gomes, G. Emergent autonomous scientific research capabilities of large language models. arXiv 2023, arXiv:2304.05332. [Google Scholar] [CrossRef]

- Noy, S.; Zhang, W. Experimental evidence on the productivity effects of generative artificial intelligence. Science 2023, 381, 187–192. [Google Scholar] [CrossRef]

- Eymann, V.; Lachmann, T.; Czernochowski, D. When ChatGPT Writes Your Research Proposal: Scientific Creativity in the Age of Generative AI. J. Intell. 2025, 13, 55. [Google Scholar] [CrossRef]

- Fill, H.G.; Fettke, P.; Köpke, J. Conceptual modeling and large language models: Impressions from first experiments with ChatGPT. Enterp. Model. Inf. Syst. Archit. 2023, 18, 1–15. [Google Scholar]

- Li, R.; Liang, P.; Wang, Y.; Cai, Y.; Sun, W.; Li, Z. Unveiling the Role of ChatGPT in Software Development: Insights from Developer-ChatGPT Interactions on GitHub. arXiv 2025, arXiv:2505.03901. [Google Scholar]

- Dovesi, D.; Malandri, L.; Mercorio, F.; Mezzanzanica, M. A survey on explainable AI for Big Data. J. Big Data 2024, 11, 6. [Google Scholar] [CrossRef]

- Davar, N.F.; Dewan, M.A.A.; Zhang, X. AI chatbots in education: Challenges and opportunities. Information 2025, 16, 235. [Google Scholar] [CrossRef]

- Li, M. The impact of ChatGPT on teaching and learning in higher education: Challenges, opportunities, and future scope. In Encyclopedia of Information Science and Technology, 6th ed.; IGI Global Scientific Publishing: Hershey, PA, USA, 2025; pp. 1–20. [Google Scholar]

- Arslan, B.; Lehman, B.; Tenison, C.; Sparks, J.R.; López, A.A.; Gu, L.; Zapata-Rivera, D. Opportunities and challenges of using generative AI to personalize educational assessment. Front. Artif. Intell. 2024, 7, 1460651. [Google Scholar] [CrossRef]

- Lin, X.; Chan, R.Y.; Sharma, S.; Bista, K. (Eds.) ChatGPT and Global Higher Education: Using Artificial Intelligence in Teaching and Learning; STAR Scholars Press: Baltimore, MD, USA, 2024. [Google Scholar]

- Molenaar, I. Human-AI co-regulation: A new focal point for the science of learning. npj Sci. Learn. 2024, 9, 29. [Google Scholar]

- Salesforce. AI Agents in Education: Benefits & Use Cases. Salesforce. 23 June 2025. Available online: https://www.salesforce.com/education/artificial-intelligence/ai-agents-in-education/ (accessed on 22 May 2025).

- Mohammed, A. Navigating the AI Revolution: Safeguarding Academic Integrity and Ethical Considerations in the Age of Innovation. BERA. March 2025. Available online: https://www.bera.ac.uk/blog/navigating-the-ai-revolution-safeguarding-academic-integrity-and-ethical-considerations-in-the-age-of-innovation (accessed on 22 May 2025).

- Alghazo, R.; Fatima, G.; Malik, M.; Abdelhamid, S.E.; Jahanzaib, M.; Raza, A. Exploring ChatGPT’s Role in Higher Education: Perspectives from Pakistani University Students on Academic Integrity and Ethical Challenges. Educ. Sci. 2025, 15, 158. [Google Scholar] [CrossRef]

- Zawacki-Richter, O.; Marín, V.I.; Bond, M.; Gouverneur, F. Systematic review of research on artificial intelligence applications in higher education—Where are the educators? Int. J. Educ. Technol. High. Educ. 2019, 16, 39. [Google Scholar] [CrossRef]

- Atchley, P.; Pannell, H.; Wofford, K.; Hopkins, M.; Atchley, R.A. Human and AI collaboration in the higher education environment: Opportunities and concerns. Cogn. Res. Princ. Implic. 2024, 9, 20. [Google Scholar] [CrossRef]

- Prinsloo, P. Data frontiers and frontiers of power in higher education: A view of the an/archaeology of data. Teach. High. Educ. 2020, 25, 394–412. [Google Scholar] [CrossRef]

- Adiyono, A.; Al Matari, A.S.; Dalimarta, F.F. Analysis of Student Perceptions of the Use of ChatGPT as a Learning Media: A Case Study in Higher Education in the Era of AI-Based Education. J. Educ. Teach. 2025, 6, 306–324. [Google Scholar] [CrossRef]

- UNESCO. Guidance for Generative AI in Education and Research. UNESCO. 2023. Available online: https://unesdoc.unesco.org/ark:/48223/pf0000386693 (accessed on 22 May 2025).

- Weidlich, J.; Gašević, D. ChatGPT in education: An effect in search of a cause. PsyArXiv 2025. preprints. [Google Scholar] [CrossRef]

- Kasneci, E.; Seßler, K.; Küchemann, S.; Bannert, M.; Dementieva, D.; Fischer, F.; Gasser, U.; Groh, G.; Günnemann, S.; Hüllermeier, E.; et al. ChatGPT for good? On opportunities and challenges of large language models for education. Learn. Individ. Differ. 2023, 103, 102274. [Google Scholar] [CrossRef]

- Zhai, X. ChatGPT for next generation science learning. ACM Mag. Stud. 2023, 29, 42–46. [Google Scholar] [CrossRef]

- Belzner, L.; Gabor, T.; Wirsing, M. Large language model assisted software engineering: Prospects, challenges, and a case study. In International Conference on Bridging the Gap Between AI and Reality; Springer Nature: Cham, Switzerland, 2023; pp. 355–374. [Google Scholar]

- Rawat, A.S.; Fazzini, M.; George, T.; Gokulan, R.; Maddila, C.; Arrieta, A. A new era of software development: A survey on the impact of large language models. ACM Comput. Surv. 2024, 57, 1–40. [Google Scholar]

- Yadav, S.; Qureshi, A.M.; Kaushik, A.; Sharma, S.; Loughran, R.; Kazhuparambil, S.; Shaw, A.; Sabry, M.; St John Lynch, N.; Singh, N.; et al. From Idea to Implementation: Evaluating the Influence of Large Language Models in Software Development--An Opinion Paper. arXiv 2025, arXiv:2503.07450. [Google Scholar]

- Jiang, C.; Huang, R.; Shen, T. Generative AI-Enabled Conceptualization: Charting ChatGPT’s Impacts on Sustainable Service Design Thinking with Network-Based Cognitive Maps. J. Comput. Inf. Sci. Eng. 2025, 25, 021006. [Google Scholar] [CrossRef]

- Vu, N.G.H.; Wang, K.; Wang, G.G. Effective prompting with ChatGPT for problem formulation in engineering optimization. Eng. Optim. 2025, 1–18. [Google Scholar] [CrossRef]

- Puthumanaillam, G.; Ornik, M. The Lazy Student’s Dream: ChatGPT Passing an Engineering Course on Its Own. arXiv 2025, arXiv:2503.05760. [Google Scholar]

- Ege, D.N.; Øvrebø, H.H.; Stubberud, V.; Berg, M.F.; Elverum, C.; Steinert, M.; Vestad, H. ChatGPT as an inventor: Eliciting the strengths and weaknesses of current large language models against humans in engineering design. Artif. Intell. Eng. Des. Anal. Manuf. 2025, 39, e6. [Google Scholar] [CrossRef]

- Topcu, T.G.; Husain, M.; Ofsa, M.; Wach, P. Trust at Your Own Peril: A Mixed Methods Exploration of the Ability of Large Language Models to Generate Expert-Like Systems Engineering Artifacts and a Characterization of Failure Modes. Syst. Eng. 2025, 1–41. [Google Scholar] [CrossRef]

- Hagendorff, T. A virtue ethics-based framework for the corporate ethics of AI. AI Ethics 2024, 4, 653–666. [Google Scholar]

- Ballardini, R.M.; He, K.; Roos, T. AI-generated content: Authorship and inventorship in the age of artificial intelligence. In Online Distribution of Content in the EU; Edward Elgar Publishing: Cheltenham, UK, 2019; pp. 117–135. [Google Scholar]

- Craig, C.J. The AI-copyright challenge: Tech-neutrality, authorship, and the public interest. In Research Handbook on Intellectual Property and Artificial Intelligence; Edward Elgar Publishing: Cheltenham, UK, 2022; pp. 134–155. [Google Scholar]

- Reich, J. Failure to Disrupt: Why Technology Alone Can’t Transform Education; Harvard University Press: Cambridge, MA, USA, 2020. [Google Scholar]

- Sabzalieva, E.; Valentini, A. ChatGPT and Artificial Intelligence in Higher Education: Quick Start Guide; UNESCO: Quito, Ecuador, 2023; pp. 1–14. [Google Scholar]

- Miller, D. Exploring the impact of artificial intelligence language model ChatGPT on the user experience. Int. J. Technol. Innov. Manag. 2023, 3, 1–8. [Google Scholar]

- Floridi, L.; Nobre, C. Artificial intelligence, and the new challenges of anticipatory governance. Ethics Inf. Technol. 2024, 26, 24. [Google Scholar]

- Dimeli, M.; Kostas, A. The Role of ChatGPT in Education: Applications, Challenges: Insights From a Systematic Review. J. Inf. Technol. Educ. Res. 2025, 24, 2. [Google Scholar] [CrossRef] [PubMed]

| Model Version | Key Architectural Features/Training Data Cutoff | Notable NLU Capabilities | Content Generation Strengths | Known Limitations | Key Benchmark Performance (Example) |

|---|---|---|---|---|---|

| ChatGPT-3.5/3.5-Turbo | Based on GPT-3.5, Text/Code (pre-2021/2023) [2,31,36] | Basic text tasks, translation, conversational AI, faster responses [2,31,36] | Dialogue, boilerplate tasks, initial drafts, summaries [2,31,36] | Accuracy issues, bias, limited by training data cutoff, struggles with highly specialized tasks [1,31] | GLUE average score ~78.7% (comparable to BERT-base, lags RoBERTa-large) [27]. Passed Korea’s BIM Expertise Exam with 65% average [19]. Error rates in healthcare can be high [37]. |

| ChatGPT-4 | Based on GPT-4, Text/Code (pre-2023) [2,38,39] | Multimodal (text), high precision, improved reasoning, expanded context window [11,13,15,23] | More coherent, contextually relevant text, complex conversations, nuanced topics [11,13,15,23] | Still prone to hallucinations, bias; costlier; specific weaknesses in areas like local guidelines without RAG [11,23,30,37,40] | Passed Korea’s BIM Expertise Exam with 85% average (improved to 88.6% with RAG for specific categories) [19]. Lower error rates in business/economics (~15–20%) compared to 3.5 [37]. |

| GPT-4o/GPT-4o mini | Text/Code (pre-2024) [14,15] | Multimodal (text/image/audio/video), improved contextual awareness, advanced tokenization, cost-efficiency (mini) [14,15] | Richer, more interactive responses, real-time collaboration support [14,15] | Newer models, long-term limitations still under study, but likely share core LLM challenges | GPT-4o slightly better than 3.5-turbo and 4o-mini on research quality score estimates (correlation 0.67 with human scores using title/abstract) [41]. GPT-4o mini outperforms GPT-3.5 Turbo on MMLU (82% vs. 69.8%) [42]. |

| o1-series (o1-preview, o1-mini, o1) | STEM-focused data, some general data (pre-2024/2025) [7,43] | System 2 thinking, PhD-level STEM reasoning (o1-preview), fast reasoning (o1-mini), full o1 reasoning and multimodality (o1) [7,43] | Analytical rigor, hypothesis generation/evaluation (biology, math, engineering) [44,45] | Specialized for STEM, general capabilities relative to GPT-4o may vary | o1-mini is best performing benchmarked model on AIME 2024 and 2025 [44,45]. Used for generating finite element code in geotechnical engineering [45,46]. |

| Feature | BERT | RoBERTa |

|---|---|---|

| Training Task | Masked Language Model (MLM) and Next Sentence Prediction (NSP) | MLM only (NSP task removed) |

| Training Data Size | ~16 GB (BookCorpus and Wikipedia) | ~160 GB (BookCorpus, Wikipedia, CC-News, etc.) |

| Masking Strategy | Static (masking pattern fixed during pre-processing) | Dynamic (masking pattern changes across training epochs) |

| Batch Size | Smaller (e.g., 256) | Significantly Larger (e.g., 8000) |

| Model Version | Context Window Size (Tokens) | Approximate Page Equivalent |

|---|---|---|

| GPT-4 (Initial Release) | 8192 | ~20 pages |

| GPT-4-32k | 32,768 | ~80 pages |

| GPT-4 Turbo/GPT-4o | 128,000 | ~300 pages |

| GPT-4.1 Series | 1,000,000 | ~2500 pages |

| Benchmark | Model | Score | Interpretation |

|---|---|---|---|

| MMLU (General Knowledge) | GPT-3.5 Turbo | ~70% | Foundational general knowledge. |

| GPT-4o mini | 82% | Strong general knowledge and reasoning. | |

| o1-mini | 85.2% | Very strong general knowledge, but not its primary strength. | |

| AIME (Advanced Math Reasoning) | GPT-4o | ~13% | Lacks specialized, multi-step reasoning ability. |

| o1-mini | 70% | Elite mathematical reasoning, competitive with top human talent. |

| Application Area | Specific Use Cases | Documented Benefits | Key Challenges | Novel Methodological/Theoretical Implications |

|---|---|---|---|---|

| Education | Personalized learning, virtual tutoring [137] | Tailored content, adaptive pacing, 24/7 support, increased engagement [137] | Over-reliance, reduced critical thinking, accuracy of information, data privacy, equity of access | Development of “AI-Integrated Pedagogy”; re-evaluation of constructivist and self-determination learning theories in AI contexts. |

| Curriculum/Lesson Planning [138] | Efficiency for educators, idea generation, diverse material creation [138] | Quality of AI suggestions, maintaining teacher creativity, potential for generic content [138] | Frameworks for AI-assisted curriculum design that balance efficiency with pedagogical soundness and teacher agency. | |

| Student Assessment [140] | Generation of diverse quiz/exam questions, formative feedback, personalized assessment [138] | Academic integrity (plagiarism), difficulty assessing true understanding, fairness of AI-generated assessments [143] | New assessment paradigms focusing on higher-order skills, process over product; ethical guidelines for AI in assessment. | |

| Engineering | Software Engineering (Code generation, debugging, QA) [155] | Increased developer productivity, reduced coding time, improved code quality [155] | Accuracy of generated code, over-dependence, skill degradation, security risks, bias in code [155] | “Human–LLM Cognitive Symbiosis” models for software development; AI-collaboration literacy for engineers. |

| BIM/Architecture/Civil Engineering (Info retrieval, design visualization) [19] | Enhanced understanding of domain-specific knowledge (with RAG), task planning support [19] | Reliance on quality of RAG documents, need for domain expertise in prompt/RAG setup [19] | Methodologies for integrating LLMs with domain-specific knowledge bases (e.g., RAG) for specialized engineering tasks. | |

| Mechanical/Industrial Design (Ideation, prototyping, optimization) [67] | Accelerated idea generation, exploration of diverse concepts, assistance in optimization problem formulation [67] | Design fixation, unnecessary complexity, misinterpretation of feedback, unsubstantiated estimates [34] | “AI-Augmented Engineering Design” frameworks; theories of “AI-robustness” in design; understanding LLM impact on cognitive design processes. | |

| Geotechnical Engineering (finite element analysis code generation) [46] | Assistance in implementing numerical models, especially with high-level libraries [46] | Extensive human intervention needed for low-level programming or complex problems; requires user expertise [46] | Frameworks for human–AI collaboration in complex numerical modeling and simulation. |

| Domain | Specific Identified Research Gap | Proposed Novel Research Question(s) | Potential Methodological Advancement | Potential Theoretical Advancement |

|---|---|---|---|---|

| NLU | True semantic understanding vs. mimicry; robustness to ambiguity; explainability [7] | How can NLU models be designed to exhibit verifiable deep understanding and provide transparent reasoning for their interpretations? | Development of “Deep Understanding Benchmarks”; new XAI techniques for generative NLU. | Theories of “Explainable Generative NLU”; models of computational semantics beyond statistical co-occurrence, drawing from linguistics and cognitive science. |

| Content Generation | Ensuring factual accuracy; dynamic quality control; IP and copyright [5] | What adaptive mechanisms can ensure real-time quality and ethical compliance in AI content generation across diverse contexts? | Adaptive, context-aware QA frameworks; blockchain or other technologies for provenance tracking. | “Ethical AI Content Frameworks” (informed by law and media ethics); theories of “Responsible Generative Efficiency.” |

| Knowledge Discovery | Validating AI-generated hypotheses; moving from info extraction to insight; ethical AI in science [39] | How can LLMs be integrated into the scientific method to reliably generate and validate novel, theoretically grounded hypotheses? | Rigorous validation protocols for AI-discovered knowledge; hybrid LLM-KG-Experimental methodologies. | “Computational Creativity Theories” for scientific discovery (integrating cognitive psychology and philosophy of science); models of AI-assisted abductive reasoning. |

| Education | Longitudinal impact on learning and critical thinking; AI literacy curricula; equity and bias in EdAI [5]; K-12 and special education gaps [168] | What pedagogical frameworks optimize human–AI collaboration for deep learning and critical skill development across diverse learners and contexts? | Longitudinal mixed-methods studies; co-design of AI literacy programs with educators and students; comparative studies in underrepresented educational settings. | “AI-Augmented Learning Theories” (linking to established learning sciences and cognitive psychology); frameworks for “Cyborg Pedagogy”; theories of ethical AI integration in diverse educational systems. |

| Engineering | LLMs in safety-critical tasks; understanding LLM failure modes in complex design [160]; human–LLM collaboration frameworks [21]; NL to code/design beyond software [155] | How can engineering design and optimization processes be re-theorized to effectively and safely incorporate LLM cognitive capabilities? | Protocols for LLM validation in complex simulations; frameworks for human-in-the-loop control for safety-critical engineering AI. | “Human–AI Symbiotic Engineering Design Theories” (grounded in Human-Computer Interaction [HCI] and cognitive engineering); theories of “AI-Robustness” in engineering systems. |