EEG-Based Biometric Identification and Emotion Recognition: An Overview

Abstract

1. Introduction

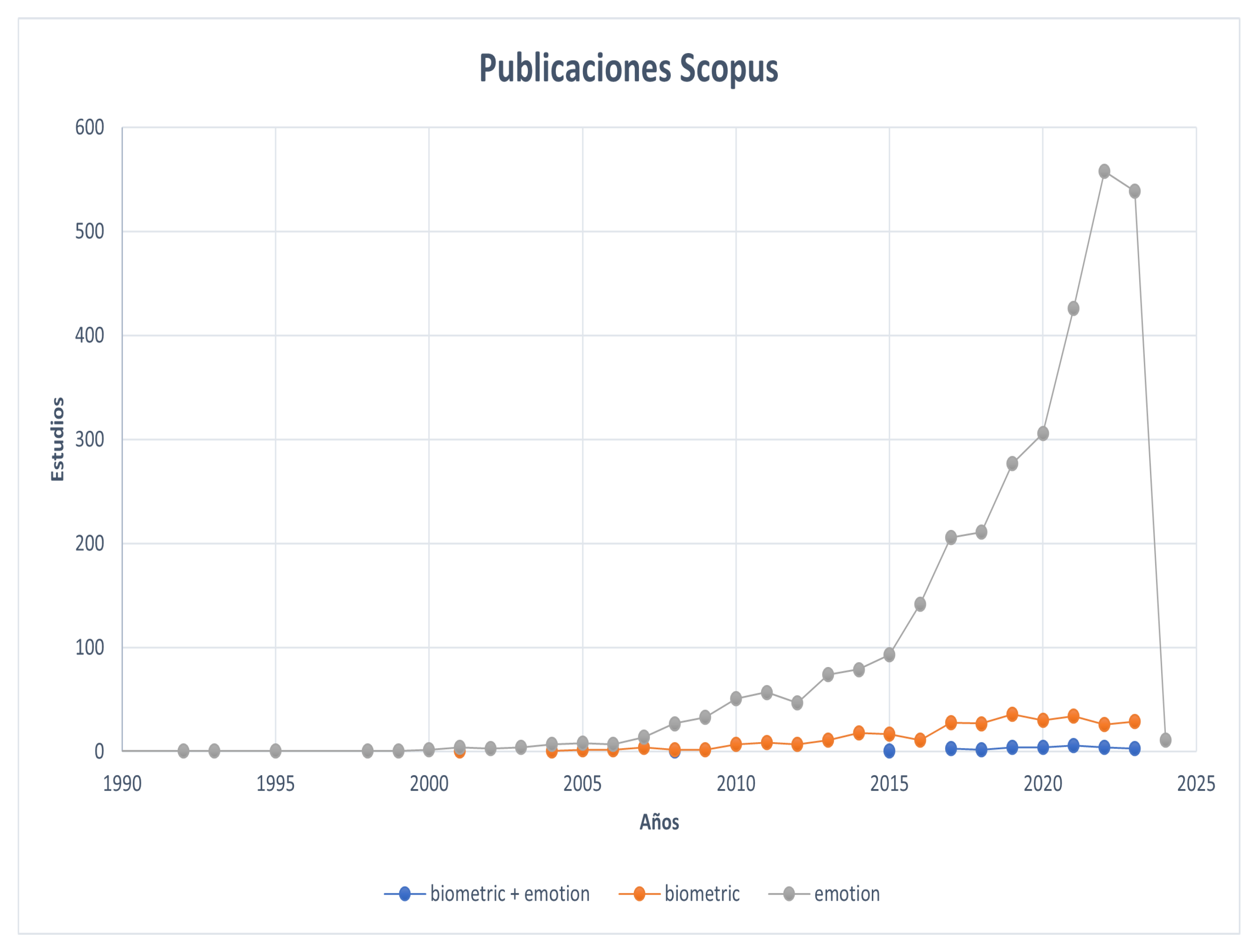

2. Literature Review Process

3. Electroencephalography (EEG): Foundations and Applications

3.1. Brain Anatomy Relevant to EEG

3.2. EEG Signals and Their Properties

3.3. Feature Extraction from EEG Signals

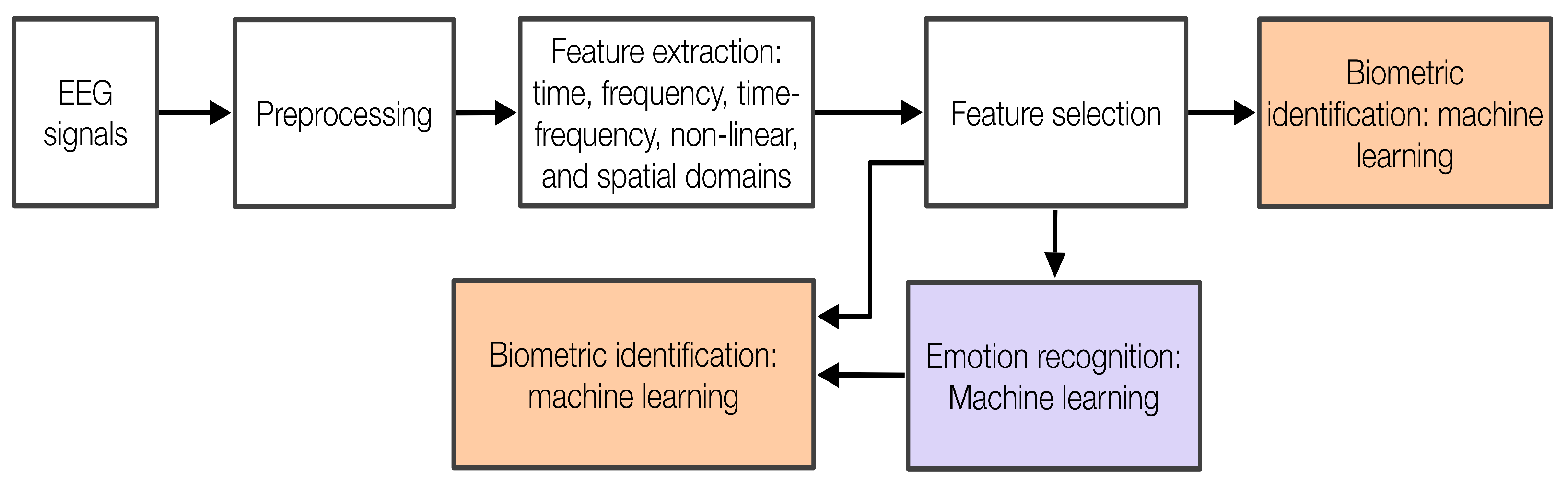

4. Emotion Recognition and Biometric Identification Using EEG

4.1. Biometrics from EEG Signals

4.2. Emotion Recognition from EEG Signals

4.3. Emotion-Aware Biometric Identification

4.4. Practical Limitations and Real-World Considerations in Emotion-Aware EEG Biometric Systems

4.5. Ethical Considerations

5. Conclusions and Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Zapata, J.C.; Duque, C.M.; Gonzalez, M.E.; Becerra, M.A. Data Fusion Applied to Biometric Identification—A Review. Adv. Comput. Data Sci. 2017, 721, 721–733.

2. Zhong, W.; An, X.; Di, Y.; Zhang, L.; Ming, D. Review on identity feature extraction methods based on electroencephalogram signals. J. Biomed. Eng. 2021, 38, 1203–1210.

3. Apicella, A.; Arpaia, P.; Frosolone, M.; Improta, G.; Moccaldi, N.; Pollastro, A. EEG-based measurement system for monitoring student engagement in learning 4.0. Sci. Rep. 2022, 12, 5857.

4. Aslan, M.; Baykara, M.; Alakuş, T.B. LSTMNCP: Lie detection from EEG signals with novel hybrid deep learning method. Multimed. Tools Appl. 2023, 83, 31655–31671.

5. Galli, G.; Angelucci, D.; Bode, S.; Giorgi, C.D.; Sio, L.D.; Paparo, A.; Lorenzo, G.D.; Betti, V. Early EEG responses to pre-electoral survey items reflect political attitudes and predict voting behavior. Sci. Rep. 2021, 11, 18692.

6. Belhadj, F. Biometric System for Identification and Authentication. Doctoral Thesis, École Nationale Supérieure d’informatique, Algeria, 2017. HAL. Available online: https://hal.science/tel-01456829v1/location (accessed on 15 July 2025).

7. Moreno-Revelo, M.; Ortega-Adarme, M.; Peluffo-Ordoñez, D.H.; Alvarez-Uribe, K.C.; Becerra, M.A. Comparison Among Physiological Signals for Biometric Identification. In Intelligent Data Engineering and Automated Learning—IDEAL 2017; Yin, H., Gao, Y., Chen, S., Wen, Y., Cai, G., Gu, T., Du, J., Tallón-Ballesteros, A.J., Zhang, M., Eds.; Springer International Publishing: Berlin/Heidelberg, Germany, 2017; pp. 436–443.

8. Balci, F. DM-EEGID: EEG-Based Biometric Authentication System Using Hybrid Attention-Based LSTM and MLP Algorithm. Trait. Du Signal 2023, 40, 1–14.

9. Alyasseri, Z.A.A.; Alomari, O.A.; Makhadmeh, S.N.; Mirjalili, S.; Al-Betar, M.A.; Abdullah, S.; Ali, N.S.; Papa, J.P.; Rodrigues, D.; Abasi, A.K. EEG Channel Selection for Person Identification Using Binary Grey Wolf Optimizer. IEEE Access 2022, 10, 10500–10513.

10. Abdi Alkareem Alyasseri, Z.; Alomari, O.A.; Al-Betar, M.A.; Awadallah, M.A.; Hameed Abdulkareem, K.; Abed Mohammed, M.; Kadry, S.; Rajinikanth, V.; Rho, S. EEG Channel Selection Using Multiobjective Cuckoo Search for Person Identification as Protection System in Healthcare Applications. Comput. Intell. Neurosci. 2022, 2022, 5974634.

11. Lai, C.Q.; Ibrahim, H.; Abdullah, M.Z.; Suandi, S.A. EEG-Based Biometric Close-Set Identification Using CNN-ECOC-SVM. In Artificial Intelligence in Data and Big Data Processing; Springer Nature: Berlin, Germany, 2022; pp. 723–732.

12. Sun, Y.; Lo, P.-W.; Lo, B.L. EEG-based user identification system using 1D-convolutional long short-term memory neural networks. Expert Syst. Appl. 2019, 125, 259–267.

13. Radwan, S.H.; El-Telbany, M.; Arafa, W.; Ali, R.A. Deep Learning Approaches for Personal Identification Based on EGG Signals. In Proceedings of the International Conference on Advanced Intelligent Systems and Informatics 2021, Cairo, Egypt, 11–13 December; Lecture Notes on Data Engineering and Communications Technologies. Springer Nature: Berlin, Germany, 2022; Volume 100, pp. 30–39.

14. Hendrawan, M.A.; Saputra, P.Y.; Rahmad, C. Identification of optimum segment in single channel EEG biometric system. Indones. J. Electr. Eng. Comput. Sci. 2021, 23, 1847–1854.

15. Kulkarni, D.; Dixit, V.V. Hybrid classification model for emotion detection using electroencephalogram signal with improved feature set. Biomed. Signal Process. Control 2025, 100, 106893.

16. Wang, C.; Li, Y.; Liu, S.; Yang, S. TVRP-based constructing complex network for EEG emotional feature analysis and recognition. Biomed. Signal Process. Control 2024, 96, 106606.

17. Kumar, A.; Kumar, A. DEEPHER: Human Emotion Recognition Using an EEG-Based DEEP Learning Network Model. Eng. Proc. 2021, 10, 32.

18. Sakalle, A.; Tomar, P.; Bhardwaj, H.; Acharya, D.; Bhardwaj, A. A LSTM based deep learning network for recognizing emotions using wireless brainwave driven system. Expert Syst. Appl. 2021, 173, 114516.

19. Galvão, F.; Alarcão, S.M.; Fonseca, M.J. Predicting exact valence and arousal values from EEG. Sensors 2021, 21, 3414.

20. Özerdem, M.S.; Polat, H. Emotion recognition based on EEG features in movie clips with channel selection. Brain Inform. 2017, 4, 241–252.

21. Maiorana, E. Deep learning for EEG-based biometric recognition. Neurocomputing 2020, 410, 374–386.

22. Prabowo, D.W.; Nugroho, H.A.; Setiawan, N.A.; Debayle, J. A systematic literature review of emotion recognition using EEG signals. Cogn. Syst. Res. 2023, 82, 101152.

23. Wang, Q.; Wang, M.; Yang, Y.; Zhang, X. Multi-modal emotion recognition using EEG and speech signals. Comput. Biol. Med. 2022, 149, 105907.

24. Kouka, N.; Fourati, R.; Fdhila, R.; Siarry, P.; Alimi, A.M. EEG channel selection-based binary particle swarm optimization with recurrent convolutional autoencoder for emotion recognition. Biomed. Signal Process. Control 2023, 84, 104783.

25. Huang, G.; Hu, Z.; Chen, W.; Zhang, S.; Liang, Z.; Li, L.; Zhang, L.; Zhang, Z. M3CV: A multi-subject, multi-session, and multi-task database for EEG-based biometrics challenge. NeuroImage 2022, 264, 119666.

26. Ortega-Rodríguez, J.; Gómez-González, J.F.; Pereda, E. Selection of the Minimum Number of EEG Sensors to Guarantee Biometric Identification of Individuals. Sensors 2023, 23, 4239.

27. Benomar, M.; Cao, S.; Vishwanath, M.; Vo, K.; Cao, H. Investigation of EEG-Based Biometric Identification Using State-of-the-Art Neural Architectures on a Real-Time Raspberry Pi-Based System. Sensors 2022, 22, 9547.

28. Tian, W.; Li, M.; Hu, D. Multi-band Functional Connectivity Features Fusion Using Multi-stream GCN for EEG Biometric Identification. In Proceedings of the 2022 International Conference on Autonomous Unmanned Systems (ICAUS 2022), Xi’an, China, 23–25 September; Springer Nature: Berlin, Germany, 2023; pp. 3196–3203.

29. Kralikova, I.; Babusiak, B.; Smondrk, M. EEG-Based Person Identification during Escalating Cognitive Load. Sensors 2022, 22, 7154.

30. Wibawa, A.D.; Mohammad, B.S.Y.; Fata, M.A.K.; Nuraini, F.A.; Prasetyo, A.; Pamungkas, Y. Comparison of EEG-Based Biometrics System Using Naive Bayes, Neural Network, and Support Vector Machine. In Proceedings of the 2022 International Conference on Electrical and Information Technology (IEIT), Malang, Indonesia, 15–16 September 2022; pp. 408–413.

31. Hendrawan, M.A.; Rosiani, U.D.; Sumari, A.D.W. Single Channel Electroencephalogram (EEG) Based Biometric System. In Proceedings of the 2022 IEEE 8th Information Technology International Seminar (ITIS), Surabaya, Indonesia, 19–21 October 2022; pp. 307–311.

32. Jijomon, C.M.; Vinod, A.P. EEG-based Biometric Identification using Frequently Occurring Maximum Power Spectral Features. In Proceedings of the 2018 IEEE Applied Signal Processing Conference (ASPCON), Kolkata, India, 7–9 December 2018; pp. 249–252.

33. Waili, T.; Johar, M.G.M.; Sidek, K.A.; Nor, N.S.H.M.; Yaacob, H.; Othman, M. EEG Based Biometric Identification Using Correlation and MLPNN Models. Int. J. Online Biomed. Eng. (iJOE) 2019, 15, 77–90.

34. Monsy, J.C.; Vinod, A.P. EEG-based biometric identification using frequency-weighted power feature. Inst. Eng. Technol. 2020, 9, 251–258.

35. Alsumari, W.; Hussain, M.; Alshehri, L.; Aboalsamh, H.A. EEG-Based Person Identification and Authentication Using Deep Convolutional Neural Network. Axioms 2023, 12, 74.

36. TajDini, M.; Sokolov, V.; Kuzminykh, I.; Ghita, B. Brainwave-based authentication using features fusion. Comput. Secur. 2023, 129, 103198.

37. Oikonomou, V.P. Human Recognition Using Deep Neural Networks and Spatial Patterns of SSVEP Signals. Sensors 2023, 23, 2425.

38. Bak, S.J.; Jeong, J. User Biometric Identification Methodology via EEG-Based Motor Imagery Signals. IEEE Access 2023, 11, 41303–41314.

39. Ortega-Rodríguez, J.; Martín-Chinea, K.; Gómez-González, J.F.; Pereda, E. Brainprint based on functional connectivity and asymmetry indices of brain regions: A case study of biometric person identification with non-expensive electroencephalogram headsets. IET Biom. 2023, 12, 129–145.

40. Cui, G.; Li, X.; Touyama, H. Emotion recognition based on group phase locking value using convolutional neural network. Sci. Rep. 2023, 13, 3769.

41. Khubani, J.; Kulkarni, S. Inventive deep convolutional neural network classifier for emotion identification in accordance with EEG signals. Soc. Netw. Anal. Min. 2023, 13, 34.

42. Zali-Vargahan, B.; Charmin, A.; Kalbkhani, H.; Barghandan, S. Deep time-frequency features and semi-supervised dimension reduction for subject-independent emotion recognition from multi-channel EEG signals. Biomed. Signal Process. Control 2023, 85, 104806.

43. Vahid, A.; Arbabi, E. Human identification with EEG signals in different emotional states. In Proceedings of the 2016 23rd Iranian Conference on Biomedical Engineering and 2016 1st International Iranian Conference on Biomedical Engineering, ICBME 2016, Tehran, Iran, 24–25 November 2016; pp. 242–246.

44. Arnau-González, P.; Arevalillo-Herráez, M.; Katsigiannis, S.; Ramzan, N. On the Influence of Affect in EEG-Based Subject Identification. IEEE Trans. Affect. Comput. 2021, 12, 391–401.

45. Kaur, B.; Kumar, P.; Roy, P.P.; Singh, D. Impact of Ageing on EEG Based Biometric Systems. In Proceedings of the 2017 4th IAPR Asian Conference on Pattern Recognition (ACPR), Nanjing, China, 26–29 November 2017; pp. 459–464.

46. Diamond, M.C.; Scheibel, A.B.; Elson, L.M. El Cerebro Humano: Libro de Trabajo; Editorial Ariel: Barcelona, Spain, 2014. Available online: https://books.google.co.th/books/about/El_cerebro_humano_libro_de_trabajo.html?id=n25VCwAAQBAJ&redir_esc=y (accessed on 15 July 2025).

47. Romeo Urrea, H. El Dominio de los Hemisferios Cerebrales. Cienc. Unemi 2015, 3, 8–15.

48. Buzsáki, G.; Draguhn, A. Neuronal Oscillations in Cortical Networks. Science 2004, 304, 1926–1929.

49. Hestermann, E.; Schreve, K.; Vandenheever, D. Enhancing Deep Sleep Induction Through a Wireless In-Ear EEG Device Delivering Binaural Beats and ASMR: A Proof-of-Concept Study. Sensors 2024, 24, 7471.

50. Candela-Leal, M.O.; Alanis-Espinosa, M.; Murrieta-González, J.; de J. Lozoya-Santos, J.; Ramírez-Moreno, M.A. Neural signatures of STEM learning and interest in youth. Acta Psychol. 2025, 255, 104949.

51. Chen, S.; Tan, Z.; Xia, W.; Gomes, C.A.; Zhang, X.; Zhou, W.; Liang, S.; Axmacher, N.; Wang, L. Theta oscillations synchronize human medial prefrontal cortex and amygdala during fear learning. Sci. Adv. 2021, 7, abf4198.

52. Mikicin, M.; Kowalczyk, M. Audio-Visual and Autogenic Relaxation Alter Amplitude of Alpha EEG Band, Causing Improvements in Mental Work Performance in Athletes. Appl. Psychophysiol. Biofeedback 2015, 40, 219–227.

53. Lundqvist, M.; Herman, P.; Warden, M.R.; Brincat, S.L.; Miller, E.K. Gamma and beta bursts during working memory readout suggest roles in its volitional control. Nat. Commun. 2018, 9, 394.

54. Hsu, H.H.; Yang, Y.R.; Chou, L.W.; Huang, Y.C.; Wang, R.Y. The Brain Waves During Reaching Tasks in People With Subacute Low Back Pain: A Cross-Sectional Study. IEEE Trans. Neural Syst. Rehabil. Eng. 2025, 33, 183–190.

55. Subha, D.P.; Joseph, P.K.; U, R.A.; Lim, C. EEG Signal Analysis: A Survey. J. Med. Syst. 2010, 34, 195–212.

56. Acharya, D.; Lende, M.; Lathia, K.; Shirgurkar, S.; Kumar, N.; Madrecha, S.; Bhardwaj, A. Comparative Analysis of Feature Extraction Technique on EEG-Based Dataset. In Soft Computing for Problem Solving; Springer Nature: Berlin, Germany, 2020; pp. 405–416.

57. Zhang, H.; Zhou, Q.Q.; Chen, H.; Hu, X.Q.; Li, W.G.; Bai, Y.; Han, J.X.; Wang, Y.; Liang, Z.H.; Chen, D.; et al. The applied principles of EEG analysis methods in neuroscience and clinical neurology. Mil. Med. Res. 2023, 10, 67.

58. Colominas, M.A.; Schlotthauer, G.; Torres, M.E. Improved complete ensemble EMD: A suitable tool for biomedical signal processing. Biomed. Signal Process. Control 2014, 14, 19–29.

59. Jaipriya, D.; Sriharipriya, K.C. Brain Computer Interface-Based Signal Processing Techniques for Feature Extraction and Classification of Motor Imagery Using EEG: A Literature Review. Biomed. Mater. Devices 2024, 2, 601–613.

60. Oikonomou, V.P. A Sparse Representation Classification Framework for Person Identification and Verification Using Neurophysiological Signals. Electronics 2025, 14, 1108.

61. Egorova, L.; Kazakovtsev, L.; Vaitekunene, E. Nonlinear Features and Hybrid Optimization Algorithm for Automated Electroencephalogram Signal Analysis. In Mathematical Modeling in Physical Sciences; Springer Nature: Berlin, Germany, 2024; pp. 233–243.

62. Jain, A.K.; Ross, A.; Nandakumar, K. An introduction to biometrics. In Proceedings of the International Conference on Pattern Recognition, Tampa, FL, USA, 8–11 December 2008; p. 1.

63. Kaliraman, B.; Nain, S.; Verma, R.; Dhankhar, Y.; Hari, P.B. Pre-processing of EEG signal using Independent Component Analysis. In Proceedings of the 2022 10th International Conference on Reliability, Infocom Technologies and Optimization (Trends and Future Directions) (ICRITO), Noida, India, 13–14 October 2022; pp. 1–5.

64. Yamashita, M.; Nakazawa, M.; Nishikawa, Y.; Abe, N. Examination and It’s Evaluation of Preprocessing Method for Individual Identification in EEG. J. Inf. Process. 2020, 28, 239–246.

65. Bhawna, K.; Priyanka; Duhan, M. Electroencephalogram Based Biometric System: A Review. Lect. Notes Electr. Eng. 2021, 668, 57–77.

66. Mishra, A.; Bhateja, V.; Gupta, A.; Mishra, A.; Satapathy, S.C. Feature Fusion and Classification of EEG/EOG Signals. In Soft Computing and Signal Processing; Springer Nature: Berlin, Germany, 2019; pp. 793–799.

67. Barra, S.; Casanova, A.; Fraschini, M.; Nappi, M. EEG/ECG Signal Fusion Aimed at Biometric Recognition. In New Trends in Image Analysis and Processing—ICIAP 2015 Workshops; Springer Nature: Berlin, Germany, 2015; pp. 35–42.

68. Zhang, X.; Yao, L.; Huang, C.; Gu, T.; Yang, Z.; Liu, Y. DeepKey. ACM Trans. Intell. Syst. Technol. 2020, 11, 1–24.

69. Moreno-Rodriguez, J.C.; Ramirez-Cortes, J.M.; Atenco-Vazquez, J.C.; Arechiga-Martinez, R. EEG and voice bimodal biometric authentication scheme with fusion at signal level. In Proceedings of the 2021 IEEE Mexican Humanitarian Technology Conference (MHTC), Puebla, Mexico, 21–22 April 2021; pp. 52–58.

70. Du, Y.; Xu, Y.; Wang, X.; Liu, L.; Ma, P. EEG temporal–spatial transformer for person identification. Sci. Rep. 2022, 12, 14378.

71. Lin, L.; Zhao, Y.; Meng, J.; Zhao, Q. A Federated Attention-Based Multimodal Biometric Recognition Approach in IoT. Sensors 2023, 23, 6006.

72. Delvigne, V.; Wannous, H.; Vandeborre, J.P.; Ris, L.; Dutoit, T. Spatio-Temporal Analysis of Transformer based Architecture for Attention Estimation from EEG. In Proceedings of the 2022 26th International Conference on Pattern Recognition (ICPR), Montreal, QC, Canada, 21–25 August 2022; pp. 1076–1082.

73. Fidas, C.A.; Lyras, D. A Review of EEG-Based User Authentication: Trends and Future Research Directions. IEEE Access 2023, 11, 22917–22934.

74. Moctezuma, L.A.; Molinas, M. Towards a minimal EEG channel array for a biometric system using resting-state and a genetic algorithm for channel selection. Sci. Rep. 2020, 10, 14917.

75. Carla, F.; Yanina, W.; Daniel Gustavo, P. ¿Cuántas Son Las Emociones Básicas? Anu. De Investig. 2017, 26, 253–257.

76. LeDoux, J.E. Emotion Circuits in the Brain. Annu. Rev. Neurosci. 2000, 23, 155–184.

77. Bradley, M.M. Natural selective attention: Orienting and emotion. Psychophysiology 2009, 46, 1–11.

78. Carlos Gantiva, K.C. Caracteristicas de la respuesta emocional generada por las palabras: Un estudio experimental desde la emoción y la motivación. Psychol. Av. Discip. 2016, 10, 55–62.

79. Koelstra, S.; Muhl, C.; Soleymani, M.; Lee, J.S.; Yazdani, A.; Ebrahimi, T.; Pun, T.; Nijholt, A.; Patras, I. DEAP: A Database for Emotion Analysis; Using Physiological Signals. IEEE Trans. Affect. Comput. 2012, 3, 18–31.

80. Soleymani, M.; Lichtenauer, J.; Pun, T.; Pantic, M. A Multimodal Database for Affect Recognition and Implicit Tagging. IEEE Trans. Affect. Comput. 2012, 3, 42–55.

81. Zheng, W.L.; Guo, H.T.; Lu, B.L. Revealing critical channels and frequency bands for emotion recognition from EEG with deep belief network. In Proceedings of the 2015 7th International IEEE/EMBS Conference on Neural Engineering (NER), Montpellier, France, 22–24 April 2015; pp. 154–157.

82. Ekmekcioglu, E.; Cimtay, Y. Loughborough University Multimodal Emotion Dataset-2; Loughborough University: Loughborough, UK, 2020.

83. Li, J.W.; Lin, D.; Che, Y.; Lv, J.J.; Chen, R.J.; Wang, L.J.; Zeng, X.X.; Ren, J.C.; Zhao, H.M.; Lu, X. An innovative EEG-based emotion recognition using a single channel-specific feature from the brain rhythm code method. Front. Neurosci. 2023, 17, 1221512.

84. Murugappan, M.; Ramachandran, N.; Sazali, Y. Classification of human emotion from EEG using discrete wavelet transform. J. Biomed. Sci. Eng. 2010, 3, 390–396.

85. Lee, Y.Y.; Hsieh, S. Classifying Different Emotional States by Means of EEG-Based Functional Connectivity Patterns. PLoS ONE 2014, 9, e95415.

86. Iacoviello, D.; Petracca, A.; Spezialetti, M.; Placidi, G. A real-time classification algorithm for EEG-based BCI driven by self-induced emotions. Comput. Methods Programs Biomed. 2015, 122, 293–303.

87. Kumar, N.; Khaund, K.; Hazarika, S.M. Bispectral Analysis of EEG for Emotion Recognition. Procedia Comput. Sci. 2016, 84, 31–35.

88. Zhang, Y.; Zhang, S.; Ji, X. EEG-based classification of emotions using empirical mode decomposition and autoregressive model. Multimed. Tools Appl. 2018, 77, 26697–26710.

89. Daşdemir, Y.; Yıldırım, E.; Yıldırım, S. Emotion Analysis using Different Stimuli with EEG Signals in Emotional Space. Nat. Eng. Sci. 2017, 2, 1–10.

90. Singh, M.I.; Singh, M. Development of low-cost event marker for EEG-based emotion recognition. Trans. Inst. Meas. Control 2017, 39, 642–652.

91. Nakisa, B.; Rastgoo, M.N.; Rakotonirainy, A.; Maire, F.; Chandran, V. Long Short Term Memory Hyperparameter Optimization for a Neural Network Based Emotion Recognition Framework. IEEE Access 2018, 6, 49325–49338.

92. Sovatzidi, G.; Iakovidis, D.K. Interpretable EEG-Based Emotion Recognition Using Fuzzy Cognitive Maps. In Caring is Sharing – Exploiting the Value in Data for Health and Innovation; Studies in Health Technology and Informatics, Volume 302; IOS Press: Amsterdam, The Netherlands, 2023.

93. Ong, Z.Y.; Saidatul, A.; Vijean, V.; Ibrahim, Z. Non Linear Features Analysis between Imaginary and Non-imaginary Tasks for Human EEG-based Biometric Identification. IOP Conf. Ser. Mater. Sci. Eng. 2019, 557, 012033.

94. Brás, S.; Ferreira, J.H.T.; Soares, S.C.; Pinho, A.J. Biometric and Emotion Identification: An ECG Compression Based Method. Front. Psychol. 2018, 9, 467.

95. Wang, Y.; Wu, Q.; Wang, C.; Ruan, Q. DE-CNN: An Improved Identity Recognition Algorithm Based on the Emotional Electroencephalography. Comput. Math. Methods Med. 2020, 2020, 7574531.

96. Chen, J.X.; Mao, Z.J.; Yao, W.X.; Huang, Y.F. EEG-based biometric identification with convolutional neural network. Multimed. Tools Appl. 2020, 79, 10655–10675.

97. Pandharipande, M.; Chakraborty, R.; Kopparapu, S.K. Modeling of Olfactory Brainwaves for Odour Independent Biometric Identification. In Proceedings of the 2023 31st European Signal Processing Conference (EUSIPCO), Helsinki, Finland, 4–8 September 2023; pp. 1140–1144.

98. Becerra, M.A.; Londoño-Delgado, E.; Pelaez-Becerra, S.M.; Serna-Guarín, L.; Castro-Ospina, A.E.; Marin-Castrillón, D.; Peluffo-Ordóñez, D.H. Odor Pleasantness Classification from Electroencephalographic Signals and Emotional States. In Advances in Computing; Springer: Cham, Switzerland, 2018; pp. 128–138.

99. Becerra, M.A.; Londoño-Delgado, E.; Pelaez-Becerra, S.M.; Castro-Ospina, A.E.; Mejia-Arboleda, C.; Durango, J.; Peluffo-Ordóñez, D.H. Electroencephalographic Signals and Emotional States for Tactile Pleasantness Classification. In Progress in Artificial Intelligence and Pattern Recognition; Springer Nature: Berlin, Germany, 2018; pp. 309–316.

100. Duque-Mejía, C.; Castro, A.; Duque, E.; Serna-Guarín, L.; Lorente-Leyva, L.L.; Peluffo-Ordóñez, D.; Becerra, M.A. Methodology for biometric identification based on EEG signals in multiple emotional states [Metodología para la identificación biométrica a partir de señales EEG en múltiples estados emocionales]. RISTI—Rev. Iber. Sist. E Tecnol. Inf. 2023, 2023, 281–288.

101. Zhang, D.; Yao, L.; Zhang, X.; Wang, S.; Chen, W.; Boots, R. Cascade and Parallel Convolutional Recurrent Neural Networks on EEG-based Intention Recognition for Brain Computer Interface. arXiv 2021, arXiv:1708.06578.

102. Niso, G.; Romero, E.; Moreau, J.T.; Araujo, A.; Krol, L.R. Wireless EEG: A survey of systems and studies. NeuroImage 2023, 269, 119774.

103. Lopez-Gordo, M.; Sanchez-Morillo, D.; Valle, F. Dry EEG Electrodes. Sensors 2014, 14, 12847–12870.

104. Alotaiby, T.; El-Samie, F.E.A.; Alshebeili, S.A.; Ahmad, I. A review of channel selection algorithms for EEG signal processing. EURASIP J. Adv. Signal Process. 2015, 2015, 66.

105. Lopez, C.A.F.; Li, G.; Zhang, D. Beyond Technologies of Electroencephalography-Based Brain-Computer Interfaces: A Systematic Review From Commercial and Ethical Aspects. Front. Neurosci. 2020, 14, 611130.

106. Antunes, R.S.; da Costa, C.A.; Küderle, A.; Yari, I.A.; Eskofier, B. Federated Learning for Healthcare: Systematic Review and Architecture Proposal. ACM Trans. Intell. Syst. Technol. 2022, 13, 54.

107. McCall, I.C.; Wexler, A. Peering into the mind? The ethics of consumer neuromonitoring devices. In Developments in Neuroethics and Bioethics; Elsevier B.V.: Amsterdam, The Netherlands, 2020; pp. 1–22.

108. Kiran, A.; Ahmed, A.B.G.E.; Khan, M.; Babu, J.C.; Kumar, B.P.S. An efficient method for privacy protection in big data analytics using oppositional fruit fly algorithm. Indones. J. Electr. Eng. Comput. Sci. 2025, 37, 670.

109. Green, D.J.; Barnes, T.A.; Klein, N.D. Emotion regulation in response to discrimination: Exploring the role of self-control and impression management emotion-regulation goals. Sci. Rep. 2024, 14, 26632.

110. Jalaly Bidgoly, A.; Jalaly Bidgoly, H.; Arezoumand, Z. A survey on methods and challenges in EEG based authentication. Comput. Secur. 2020, 93, 101788.

111. Su, J.; Zhu, J.; Song, T.; Chang, H. Subject-Independent EEG Emotion Recognition Based on Genetically Optimized Projection Dictionary Pair Learning. Brain Sci. 2023, 13, 977.

112. Yuvaraj, R.; Baranwal, A.; Prince, A.A.; Murugappan, M.; Mohammed, J.S. Emotion Recognition from Spatio-Temporal Representation of EEG Signals via 3D-CNN with Ensemble Learning Techniques. Emerg. Trends Biomed. Signal Process. Intell. Emot. Recognit. 2023, 13, 685.

113. Si, X.; Huang, D.; Sun, Y.; Huang, S.; Huang, H.; Ming, D. Transformer-based ensemble deep learning model for EEG-based emotion recognition. Brain Sci. Adv. 2023, 9, 210–223.

114. Ji, Y.; Dong, S.Y. Deep learning-based self-induced emotion recognition using EEG. Front. Neurosci. 2022, 16, 985709.

115. Deng, X.; Lv, X.; Yang, P.; Liu, K.; Sun, K. Emotion Recognition Method Based on EEG in Few Channels. In Proceedings of the 2022 IEEE 11th Data Driven Control and Learning Systems Conference (DDCLS), Chengdu, China, 3–5 August 2022; pp. 1291–1296.

| Ref. | Database | Feature Extraction | Classification Method | Performance | Year |
|---|---|---|---|---|---|
| [26] | Own database (13 subjects) and PhysioNet BCI (109 subjects) | PCA, Wilcoxon test, fast Fourier transform (FFT), power spectrum (PS), asymmetry index | RBF-SVM; k-fold cross-validation | 99.9 ± 1.39% | 2023 |
| [27] | BED (Biometric EEG Dataset), 21 subjects | PCA, Wilcoxon test, optimal spatial filtering | Deep learning (DL) | 86.74% | 2022 |
| [28] | PhysioNet EEG Motor Movement/Imagery Dataset (1000 subjects) | Functional connectivity (FC) | Multi-stream GCN (MSGCN) | 98.05% | 2023 |
| [29] | Own database (21 subjects) | 1D-CNN | LDA, SVM, k-NN, DL; 5-fold cross-validation | 99% | 2022 |
| [30] | Own database (43 subjects) | Power spectral density (PSD) | Naive Bayes, neural network, SVM | 97.7% | 2022 |
| [31] | Own database (8 subjects) | PSD of the delta (0.5–4 Hz), theta (4–8 Hz), alpha (8–14 Hz), beta (14–30 Hz), and gamma (30–50 Hz) bands; LDA | k-NN, SVM | 80% | 2022 |
| [11] | PhysioBank database (109 subjects) | CNN | CNN-ECOC-SVM | 98.49% | 2022 |
| [32] | PhysioNet database (109 subjects) | Power spectrum, PSD | Mean correlation coefficient (MCC) | Equal error rate (EER) of 0.014 | 2018 |
| [33] | Own database (6 subjects) | Daubechies (db8) wavelet, PSD | Multilayer perceptron neural network (MLPNN) | 75.8% | 2019 |
| [34] | PhysioNet database (16 subjects) | Frequency-weighted power (FWP) | Method proposed by the authors | EER of 0.0039 | 2020 |
| [35] | PhysioNet dataset (109 subjects) | Only two EEG channels, using signals from a short 5 s window | CNN | 99% identification accuracy; authentication error rate (ERR) of 0.187% | 2023 |
| [36] | Own data collected from 50 volunteers | (1) Spectral information, (2) coherence, (3) mutual correlation coefficient, and (4) mutual information | SVM | 99.06% classification rate; ERR of 0.52% | 2023 |
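Several studies in the table above ([30]–[32]) build their features from the power spectral density. The sketch below shows one common way to turn a multichannel recording into band-power features with Welch's method. It assumes NumPy and SciPy, an array of shape (channels, samples), 2 s Welch segments, and rectangle-rule integration of the PSD; these are illustrative choices, not settings taken from the cited studies. The band edges follow those listed for [31].

```python
import numpy as np
from scipy.signal import welch

# Band edges in Hz, as listed for [31]; other studies use slightly different limits.
BANDS = {
    "delta": (0.5, 4.0),
    "theta": (4.0, 8.0),
    "alpha": (8.0, 14.0),
    "beta": (14.0, 30.0),
    "gamma": (30.0, 50.0),
}


def band_powers(eeg: np.ndarray, fs: float) -> np.ndarray:
    """Absolute band power per channel.

    eeg: array of shape (n_channels, n_samples); fs: sampling rate in Hz.
    Returns an array of shape (n_channels, len(BANDS)).
    """
    # Assumed choice: 2 s Welch segments (shorter if the recording is shorter)
    nperseg = min(eeg.shape[-1], int(2 * fs))
    freqs, psd = welch(eeg, fs=fs, nperseg=nperseg, axis=-1)
    df = freqs[1] - freqs[0]
    powers = []
    for lo, hi in BANDS.values():
        in_band = (freqs >= lo) & (freqs < hi)
        # Rectangle-rule integration of the PSD over the band
        powers.append(psd[..., in_band].sum(axis=-1) * df)
    return np.stack(powers, axis=-1)


if __name__ == "__main__":
    fs = 256.0
    t = np.arange(int(10 * fs)) / fs
    rng = np.random.default_rng(0)
    # One synthetic channel: a 10 Hz rhythm plus weak noise
    x = np.sin(2 * np.pi * 10 * t) + 0.1 * rng.standard_normal(t.size)
    feats = band_powers(x[np.newaxis, :], fs)
    print(feats.shape)                          # (1, 5)
    print(list(BANDS)[int(feats[0].argmax())])  # alpha
```

Flattening the returned matrix gives one feature vector per recording, which could then be passed to classifiers such as the SVM or k-NN models listed in the table.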

| Ref. | Database | Feature Extraction | Classification Method | Performance | Year |
|---|---|---|---|---|---|
| [37] | Two SSVEP datasets for person identification (PI): the speller dataset and the EPOC dataset | Autoregressive (AR) modeling, power spectral density (PSD) energy of EEG channels, wavelet packet decomposition (WPD), and phase locking values (PLV) | Common spatial patterns combined with specialized deep neural networks | Recognition rate of 99% | 2023 |
| [8] | PhysioNet (109 subjects) | Random Forest-based binary feature selection to filter out uninformative channels and find the number of channels that gives the highest accuracy | Hybrid attention-based LSTM-MLP | 99.96% (eyes closed) and 99.70% (eyes open) | 2023 |
| [38] | 'Big Data of 2-classes MI' dataset and Dataset IVa | CSP, ERD/S, AR, and FFT applied to segmented data; TDP was excluded because it suits motor execution rather than motor imagery | SVM, GNB | SVM: CSP 98.97%, ERD/S 98.94%, AR 98.93%, FFT 97.92%; GNB: CSP 97.47%, ERD/S 94.58%, FFT 53.80%, AR 50.24% | 2023 |
| [39] | Dataset I: self-collected with a low-cost EEG device (primary dataset); Dataset II: PhysioNet BCI, used to evaluate the method on a larger number of subjects | FieldTrip toolbox for MATLAB: baseline correction relative to the mean voltage, then a 5–40 Hz finite impulse response (FIR) band-pass filter for noise reduction | Support vector machines (SVM), neural networks (NN), and discriminant analysis (DA) | Identification accuracy of up to 100% with a low-cost EEG device | 2023 |
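The preprocessing described for [39] (baseline correction relative to the mean voltage, then a 5–40 Hz FIR band-pass filter) was carried out in FieldTrip for MATLAB. The sketch below approximates the same two steps in Python with SciPy as a substitute toolchain. The filter length (251 taps), the Hamming window implied by `firwin`'s default, and the zero-phase `filtfilt` application are illustrative assumptions, not the settings of the cited study.

```python
import numpy as np
from scipy.signal import firwin, filtfilt


def preprocess(eeg: np.ndarray, fs: float, band=(5.0, 40.0), numtaps: int = 251) -> np.ndarray:
    """Baseline correction followed by a zero-phase FIR band-pass filter.

    eeg: array of shape (n_channels, n_samples). numtaps (odd) is an assumed
    filter length; filtfilt needs more than 3 * numtaps samples per channel.
    """
    # Baseline correction: subtract each channel's mean voltage
    centered = eeg - eeg.mean(axis=-1, keepdims=True)
    # Linear-phase band-pass FIR design (firwin uses a Hamming window by default)
    taps = firwin(numtaps, band, pass_zero=False, fs=fs)
    # Forward-backward filtering cancels the FIR group delay
    return filtfilt(taps, [1.0], centered, axis=-1)


if __name__ == "__main__":
    fs = 250.0
    t = np.arange(int(8 * fs)) / fs
    # Synthetic channel: DC offset + 2 Hz drift (out of band) + 20 Hz rhythm (in band)
    raw = 50.0 + np.sin(2 * np.pi * 2 * t) + np.sin(2 * np.pi * 20 * t)
    clean = preprocess(raw[np.newaxis, :], fs)
    print(clean.shape)  # (1, 2000)
```

Zero-phase filtering suits offline analysis; a real-time system would apply the filter causally and accept the FIR group delay instead.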

| Ref. | Database | Feature Extraction | Classification Method | Accuracy | Year |
|---|---|---|---|---|---|
| [40] | DEAP | Phase locking value (PLV) | CNN | 85% | 2023 |
| [41] | SEED and DEAP | Frequency features combined with an Inventive brain optimization algorithm to improve detection accuracy | Optimized deep convolutional neural network (DCNN); K-Nearest Neighbor (KNN), Support Vector Machine (SVM), Random Forest (RF), and Deep Belief Network (DBN) | DCNN: 97.12% with 90% of the data used for training; 96.83% under k-fold cross-validation | 2023 |
| [42] | SEED | Time-frequency representation of each channel via the modified Stockwell transform; deep features extracted from each channel's time-frequency content with a deep convolutional neural network; reduced features of all channels fused into the final feature vector; semi-supervised dimensionality reduction | Inception-V3 CNN and support vector machine (SVM) classifier | ... | 2023 |
| [43] | DEAP | Sequential floating forward selection (SFFS) of the best features; 10-fold cross-validation in all experiments and scenarios | Support Vector Machine (SVM) with radial basis function (RBF) kernel | Correct classification rate (CCR) of 88–99%, compared with an Equal Error Rate (EER) of 15–35% reported in previous research using SVM | 2017 |
| [44] | DEAP, MAHNOB-HCI, and SEED | Time-domain and frequency-domain features | SVM, Random Forest (RF), and k-Nearest Neighbors (k-NN) | ... | 2021 |
| [45] | Own dataset of 60 users recorded with the Emotiv Epoc+ | Savitzky–Golay filtering to attenuate short-term variations in the signals | Hidden Markov Model (HMM) and Support Vector Machine (SVM) | User identification: 97.50% (HMM) and 93.83% (SVM) | 2017 |
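
Reference [40] uses the phase locking value (PLV) between channel pairs as input to a CNN. PLV measures how stable the phase difference between two signals stays over time, $\mathrm{PLV}_{ij} = \left|\frac{1}{T}\sum_{t=1}^{T} e^{\,i(\phi_i(t)-\phi_j(t))}\right|$, where $\phi$ is the instantaneous phase of the analytic (Hilbert) signal. The NumPy sketch below computes the full channel-by-channel PLV matrix, assuming the input has already been band-pass filtered to the band of interest; the helper names `analytic_signal` and `plv_matrix` are hypothetical, and this is not the authors' implementation.

```python
import numpy as np


def analytic_signal(x: np.ndarray) -> np.ndarray:
    """FFT-based analytic signal along the last axis (the Hilbert-transform construction)."""
    n = x.shape[-1]
    spectrum = np.fft.fft(x, axis=-1)
    weights = np.zeros(n)
    weights[0] = 1.0                      # keep DC
    if n % 2 == 0:
        weights[n // 2] = 1.0             # keep Nyquist
        weights[1:n // 2] = 2.0           # double positive frequencies
    else:
        weights[1:(n + 1) // 2] = 2.0
    # Negative frequencies stay at zero weight.
    return np.fft.ifft(spectrum * weights, axis=-1)


def plv_matrix(x: np.ndarray) -> np.ndarray:
    """Pairwise phase locking values for x of shape (channels, samples)."""
    phase = np.angle(analytic_signal(x))
    phasors = np.exp(1j * phase)          # unit-length phasors e^{i phi(t)}
    # Entry (i, j) is |sum_t e^{i(phi_i(t) - phi_j(t))}| / T.
    return np.abs(phasors @ phasors.conj().T) / x.shape[-1]
```

Two channels with a constant phase offset give a PLV close to 1, whereas channels whose phase difference drifts uniformly give a PLV close to 0. The resulting symmetric matrix is one plausible image-like input for a CNN, although how [40] arranges it is not specified here.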

| Ref. | Year | Preprocessing | Feature Extraction and Selection | Emotion Classification |
|---|---|---|---|---|
| [84] | 2010 | Surface Laplacian filter | Wavelet transform, Fuzzy C-Means (FCM) and Fuzzy K-Means (FKM) | Linear Discriminant Analysis (LDA) and K-Nearest Neighbor (K-NN) |
| [85] | 2014 | FFT, EEGLAB | Correlation, coherence, and phase synchronization | Quadratic discriminant analysis |
| [86] | 2015 | Wavelet filter | PCA | SVM |
| [87] | 2015 | Blind source separation, 4.0–45.0 Hz band-pass filter | Higher-order spectral analysis (HOSA) | LS-SVM, Artificial Neural Networks (ANN) |
| [87] | 2016 | Butterworth filter | Bispectral analysis with HOSA | SVM |
| [88] | 2016 | Independent Component Analysis (ICA)-based algorithm | Sample entropy, quadratic entropy, distribution entropy | SVM |
| [89] | 2017 | MARA, AAR | Phase locking value (PLV), with ANOVA to assess statistical significance | SVM |
| [90] | 2017 | Surface Laplacian filter | Wavelet transform | Polynomial-kernel SVM |
| [91] | 2018 | Butterworth notch filter | ACA, SA, GA, and SPO algorithms | SVM |
| [83] | 2023 | DWT, EMD | Reassigned smoothed pseudo-Wigner–Ville distribution (RSPWVD) | K-NN, SVM, LDA, and LR |
| [92] | 2023 | Finite impulse response (FIR) filter, Artifact Subspace Reconstruction (ASR) | Power spectral density (PSD) | Naïve Bayes (NB), K-NN, SVM, Fuzzy Cognitive Map (FCM) |
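
Power spectral density features such as those in [92] are commonly summarized as band powers per channel before classification. The sketch below estimates a one-sided Welch PSD in plain NumPy (Hann window, 50% overlap) and integrates it over frequency bands. The band edges, the 256-sample segment length, and the helper names `welch_psd` and `band_powers` are assumptions for illustration; `scipy.signal.welch` provides a similar estimator, and this is not the pipeline used in [92].

```python
import numpy as np

# Conventional EEG band edges in Hz; these exact limits are an assumption.
BANDS = {"theta": (4.0, 8.0), "alpha": (8.0, 13.0), "beta": (13.0, 30.0), "gamma": (30.0, 45.0)}


def welch_psd(x: np.ndarray, fs: float, nperseg: int = 256):
    """One-sided Welch PSD (Hann window, 50% overlap, per-segment mean removal) along the last axis."""
    if x.shape[-1] < nperseg:
        raise ValueError("signal shorter than one segment")
    step = nperseg // 2
    window = np.hanning(nperseg)
    scale = 1.0 / (fs * np.sum(window ** 2))          # density scaling (power per Hz)
    spectra = []
    for start in range(0, x.shape[-1] - nperseg + 1, step):
        seg = x[..., start:start + nperseg]
        seg = seg - seg.mean(axis=-1, keepdims=True)  # remove the constant offset
        spectra.append(np.abs(np.fft.rfft(seg * window, axis=-1)) ** 2)
    psd = np.mean(spectra, axis=0) * scale
    psd[..., 1:] *= 2.0                               # fold negative frequencies in
    if nperseg % 2 == 0:
        psd[..., -1] /= 2.0                           # Nyquist bin has no negative twin
    return np.fft.rfftfreq(nperseg, d=1.0 / fs), psd


def band_powers(x: np.ndarray, fs: float, nperseg: int = 256) -> np.ndarray:
    """Integrate the PSD over each band; returns an array of shape (channels, len(BANDS))."""
    freqs, psd = welch_psd(x, fs, nperseg)
    df = freqs[1] - freqs[0]
    powers = [psd[..., (freqs >= lo) & (freqs < hi)].sum(axis=-1) * df for lo, hi in BANDS.values()]
    return np.stack(powers, axis=-1)
```

With density scaling, integrating the PSD recovers the signal's mean power, so a sinusoid of amplitude 2 at 10 Hz contributes about 2 (that is, $2^2/2$) to the alpha band. Each row of the returned matrix can be flattened into a feature vector for classifiers such as NB, K-NN, or SVM.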

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Becerra, M.A.; Duque-Mejia, C.; Castro-Ospina, A.; Serna-Guarín, L.; Mejía, C.; Duque-Grisales, E. EEG-Based Biometric Identification and Emotion Recognition: An Overview. Computers 2025, 14, 299. https://doi.org/10.3390/computers14080299
