Abstract

The current study examines the determinants of preparatory year (PY) students’ intentions to adopt AI-powered natural language processing (NLP) models, such as Copilot, ChatGPT, and Gemini, and how these intentions shape their conceptions of digital sustainability. An extended unified theory of acceptance and use of technology (UTAUT) framework was integrated with several educational constructs: content availability (CA), learning engagement (LE), learning motivation (LM), learner involvement (LI), and AI satisfaction (AS). Responses from 274 PY students at a Saudi university were analysed using partial least squares structural equation modelling (PLS-SEM) to evaluate both the measurement and structural models. The findings indicated that CA (β = 0.25), LE (β = 0.22), LM (β = 0.20), and LI (β = 0.18) significantly predicted user intention (UI), together explaining 52.2% of its variance (R² = 0.522). In turn, UI significantly predicted students’ digital sustainability conceptions (DSC) (β = 0.35, R² = 0.451). However, AS did not exhibit a moderating effect, possibly because satisfaction levels were uniformly high among students. The study concludes that AI-powered NLP models are being adopted as learning assistant technologies and also serve as important catalysts in promoting sustainable digital conceptions. It contributes both theoretically and practically by conceptualising digital sustainability as a learner-driven construct and linking educational technology adoption to its advancement, in line with global frameworks such as Sustainable Development Goals (SDGs) 4 and 9. By examining how UI influences DSC among preparatory year students in Saudi Arabia, the study highlights AI’s transformative potential in higher education.
Given the study’s single-institution and predominantly female sample, further research is recommended, particularly longitudinal studies that track changes over time across genders, academic specialisations, and cultural contexts.

1. Introduction

In 2015, the United Nations launched the 17 Sustainable Development Goals (SDGs) as a global call to action to eradicate poverty, protect the planet, and ensure prosperity for all by 2030 [1]. Within this framework, digital sustainability (DS) plays a pivotal role by promoting responsible technology use that minimises ecological harm while maximising efficiency, accessibility, and ethical data practices [2]. Key applications of digital sustainability include accelerating clean energy solutions (SDG 7) through smart grids and energy-efficient systems [3]; expanding access to quality education (SDG 4) via e-learning platforms and digital resources [4]; supporting sustainable infrastructure (SDG 9) through environmentally friendly digital systems [5]; and advancing climate action (SDG 13) by leveraging AI and big data for environmental monitoring and predictive analytics [6].

Digital technologies are also a critical enabler of ecological sustainability, offering transformative solutions that reduce pollution, optimise resource use, curb dependence on finite natural resources, and mitigate the impacts of climate change [7]. Across sectors, innovative applications are reshaping approaches to ecological preservation while advancing the Sustainable Development Goals [8]. However, the digital ecosystem itself carries an environmental cost: although the energy required for a single internet search or e-mail is minimal, its cumulative impact is substantial [9]. Approximately 5.56 billion people used the internet at the start of 2025, representing 67.9% of the global population [10], and the number of internet users increased by 136 million (+2.5%) during 2024 [11]. Even though 2.63 billion people remained offline at the beginning of 2025 [10], the small energy demands of each online activity, together with the associated greenhouse gas emissions, add up across billions of users. According to some estimates, digital devices, the internet, and their supporting systems account for about 4.0% of global greenhouse gas emissions [12].

In higher education, diverse technological models, especially artificial intelligence models (AIMs), catalyse inclusive, equitable, and efficient learning [13]. These models can enrich the learning experience through key factors such as content availability, learning engagement, motivation, and learner involvement, all of which contribute to greater user satisfaction and support the delivery of interactive and adaptive content [14,15]. AIMs also expand access to global knowledge and enable personalised learning experiences tailored to diverse learner needs [16]. In addition, they foster collaboration between educators and students, streamline academic and administrative processes, and prepare learners for a technology-driven future [17].

In recent years, several studies, particularly within Saudi Arabia, have reported notable progress in integrating the SDGs into higher education, primarily through the adoption of information technology frameworks [18,19,20,21]. However, a critical gap remains between theoretical alignment and practical application, particularly in linking these frameworks to measurable digital sustainability conceptions. To bridge this gap, the current study empirically investigates the relationship between students’ intention to use technologies, particularly artificial intelligence (AI) models, in learning practices and their awareness of digital sustainability conceptions (DSC), introduced as a novel outcome variable. This relationship is explored in the context of the educational application of AI-powered natural language processing (AI-NLP) models, and the investigation is guided by an extended unified theory of acceptance and use of technology (UTAUT) framework. Accordingly, the study addresses the following research questions:

RQ1: To what extent do content availability (CA), learning engagement (LE), learning motivation (LM), and learner involvement (LI) influence user intention (UI) to adopt AI-NLP models in higher education?

RQ2: Does AI satisfaction (AS) moderate the relationships between each cognitive–affective factor (CA, LE, LM, and LI) and UI?

RQ3: What is the effect of UI on students’ DSC?

2. Theoretical Review and Research Hypothesis

2.1. Education with AI-Powered NLP Models

AI-powered natural language processing (AI-NLP) models, such as ChatGPT (GPT-4, OpenAI, San Francisco, CA, USA), Gemini (Gemini 1.5, Google, Mountain View, CA, USA), Copilot (GitHub Copilot, powered by OpenAI Codex, GitHub, San Francisco, CA, USA), BioGPT (v1.0, Microsoft, Redmond, WA, USA), and PubMedBERT (v1.0, Microsoft, Redmond, WA, USA), produce human-like language outputs [22]. These models are trained on large-scale datasets and use transformer-based architectures (e.g., GPT-4) to generate contextually relevant and coherent responses [23]. Recent studies have shown that AI-NLP models such as ChatGPT and Copilot perform well in general conversational AI tasks, including tutoring, summarising texts, and explaining complex ideas [15,24,25,26].

However, despite their promise, AI-NLP models require learners in particular to have a foundational awareness of their limitations, such as the non-verifiability of their outputs [15,26]. Awareness thus becomes a critical variable: informed users can use these tools to enhance cognitive performance, whereas uninformed use may lead to misuse of, or over-reliance on, them [27,28]. AI awareness may therefore moderate learners’ behavioural intention [29]. AI-NLP models are also increasingly integrated into cognitive learning processes, supporting tasks such as critical reasoning, diagnostic assistance, case-based learning, and exam preparation [29,30]. They can enhance knowledge retention, promote metacognitive reflection, and support personalised learning in both self-directed and classroom environments [31,32].

However, to the best of the author’s knowledge, only a limited number of studies in Saudi Arabia have examined the use of AI-NLP models in higher education, particularly concerning learners’ behavioural intentions and the factors influencing their adoption. Few investigations have explored the direct effects of CA, LE, LM, and LI on students’ intention to adopt these models. Moreover, critical evaluations of the potential and limitations of AI-NLP models in determining their educational effectiveness remain scarce. This gap is especially significant within the context of DSC (see Table 1 for construct definitions).

Table 1.

Construct definitions.

A lack of awareness or understanding of AI-generated content can lead to misinterpretations, resulting in inaccurate conclusions, diminished learning outcomes, and an increased potential for digital misinformation [13]. Consequently, this disconnect undermines the alignment between learners’ intentions and their perceptions of core DSC.

To address these gaps, the current study introduces a model that integrates AS as a moderating variable on the relationships between the cognitive–affective predictors (CA, LE, LM, and LI) and UI, to inform the adoption of AI-NLP models in higher education. Understanding these dynamics is critical to ensuring that learners’ intentions are not only shaped by technological advancement but also meaningfully aligned with sustainability-oriented educational goals.

2.2. Determinants of User Intentions to Adopt AI-Powered NLP Models in Education

The study aims to examine students’ intentions and their conceptions of digital sustainability in an AI-integrated learning environment. To this end, relevant research was reviewed, including established theories of technology acceptance [33,43,44,45]. Numerous articles have examined technology acceptance models such as the theory of planned behaviour (TPB) [46], the value-based adoption model (VAM) [47], the technology acceptance model (TAM) [48], and the unified theory of acceptance and use of technology (UTAUT) [49]. Among these, UTAUT has gained widespread recognition for its effectiveness in analysing individual behaviour toward emerging technologies across various domains [49,50,51].

In educational contexts, the UTAUT model, either in its original form or with theoretical extensions, has been applied in numerous studies to understand how users interact with AI-driven systems [49,52,53,54]. Related applications include studies of learners’ use of AI assistants [55], AI-NLP tools [24], AI large language models (LLMs) [56], AI-powered customer relationship management (CRM) systems [57], and AI-based medical devices [58]. Numerous studies have also addressed learner intention toward AI adoption in educational contexts, covering awareness [58], motivation [59], assessment [60], and digital learning practices [61].

However, to the best of the author’s knowledge, few articles within Saudi Arabia have examined the UTAUT model specifically in the context of AI adoption in medical education [19,62,63]. Moreover, most recent studies have focused on general AI tools, primarily analysing user intention through the core UTAUT constructs. By contrast, few investigations have explored the direct effects of CA, LE, LM, and LI on students’ intention to adopt AI-NLP models in educational settings.

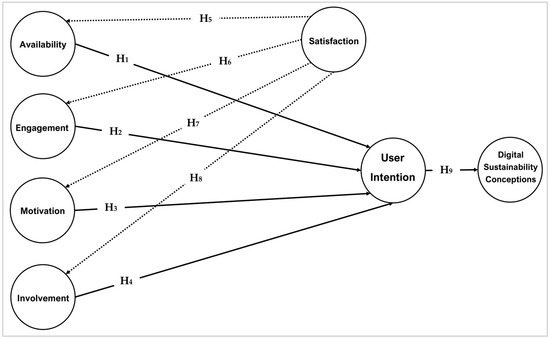

Hence, to address this limitation, the present study examines these factors within the context of AI-NLP models, leading to the formulation of the following direct effect hypotheses:

H1.

CA has a positive effect on UI to adopt AI-NLP models.

H2.

LE has a positive impact on UI.

H3.

LM has a positive impact on UI.

H4.

LI has a positive impact on UI.

Despite the growing relevance of satisfaction in technology acceptance research, AS remains underexplored as a moderating variable, particularly in understanding how users respond differently to AI-NLP models. Furthermore, empirical studies specifically investigating the adoption of AI-NLP models, such as ChatGPT and Copilot, within higher education contexts remain limited. To address these gaps, the current study incorporates AS as a moderator and empirically examines how CA, LE, LM, and LI influence UI to adopt AI-NLP models for higher education purposes. The extended conceptual framework thus yields the following moderation hypotheses, which examine the moderating role of AS as a distinct construct beyond the traditional cognitive–affective predictors:

H5.

AS moderates the relationship between CA and UI.

H6.

AS moderates the relationship between LE and UI.

H7.

AS moderates the relationship between LM and UI.

H8.

AS moderates the relationship between LI and UI.

2.3. Digital Sustainability in Education: Implications and Conceptual Framework

Digital sustainability leverages the tools of digital transformation, such as enhanced connectivity, artificial intelligence (AI), and the Internet of Things (IoT), to improve environmental outcomes and support sustainable institutional and operational practices [64]. In higher education, digital sustainability encompasses how institutions use technology not only to enhance learning but also to contribute to long-term societal and ecological well-being [65].

Despite the growing body of research on learners’ perceptions of, and willingness to integrate, AI-NLP models into education [18,24,25,26,31,66], a notable gap persists in studies focused on higher education institutions in Saudi Arabia, particularly those examining learners’ intentions, their perceived satisfaction with AI-NLP models, and their willingness to engage with such technologies within the evolving framework of digital sustainability. Within this context, the current study examines learners’ DSC, offering insights into how UI can be translated into systematic support for the responsible and sustainable integration of educational technologies. Accordingly, the study proposes the following hypothesis:

H9.

UI has a positive effect on DSC.

Figure 1 presents the conceptual model guiding this research, explaining the relationships among the study variables.

Figure 1.

Conceptual modelling.

3. Methods and Materials

3.1. Population and Sample

This study was conducted among students enrolled in the preparatory year (PY) program at King Faisal University (KFU) in Saudi Arabia, using a random sample of 274 undergraduate students. PY is a foundational academic stage designed to equip students with essential educational and language skills before progressing into competitive academic tracks such as Medicine, Pharmacy, Engineering, and Computer Science [67] (See Table 2).

Table 2.

Population and sample.

The sample was predominantly female (over 60%), with an average age of 20. This gender imbalance reflects broader enrolment patterns at KFU from 2022 to 2024, during which female students outnumbered male students across most disciplines [68].

The appropriateness of the sample size was established through multiple criteria:

Hill’s Rule-of-Thumb: A minimum of five observations per measured variable is recommended. With 28 observed indicators in this study, a sample size of at least 140 was required. The final sample of 274 exceeded this threshold [69].

Structural Equation Modelling (PLS-SEM): According to Muthén [70], a minimum of 150 observations is typically sufficient for PLS-SEM, which this study satisfies.

The 10-Times Rule: In PLS-SEM, the sample should be at least 10 times the maximum number of paths leading to any latent variable. The most complex endogenous construct, UI, is predicted by four latent constructs (CA, LE, LM, and LI), implying a minimum of 40 observations [71]. When AS and its four interaction terms with these predictors are added in the moderation model, UI receives nine paths, raising the minimum to 90 observations. Both values are well below the actual sample size.

A Priori Power Analysis: Using G*Power 3.1, a power analysis for linear multiple regression with 4 predictors (f² = 0.15, α = 0.05, power = 0.80) yielded a minimum required sample of 85 [72].

R²-Based Sample Justification: Assuming a conservative R² of 0.25 for UI, a minimum of n = 37 participants would be needed to detect effects at the 5% level with adequate power [73].
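The arithmetic behind the Hill and 10-times criteria can be reproduced in a few lines. This is a minimal sketch: the indicator count (7 constructs x 4 items) and the number of predictors of UI come from the text, while the helper names are hypothetical. It also assumes that the moderation model adds AS and its four interaction terms as extra predictors of UI, which is how interaction terms are commonly specified in PLS-SEM.

```python
"""Minimum sample sizes implied by two rules of thumb used in Section 3.1.

Illustrative sketch only; hill_minimum and ten_times_minimum are hypothetical
helpers, not functions from SmartPLS or any other package.
"""


def hill_minimum(n_indicators: int, obs_per_indicator: int = 5) -> int:
    """Hill's rule of thumb: a fixed number of observations per indicator."""
    return n_indicators * obs_per_indicator


def ten_times_minimum(max_incoming_paths: int) -> int:
    """10-times rule: 10 x the largest number of paths into any construct."""
    return 10 * max_incoming_paths


N_ACTUAL = 274
N_INDICATORS = 7 * 4  # seven constructs, four items each

# Direct-effects model: UI receives paths from CA, LE, LM and LI.
direct_paths_into_ui = 4
# Assumed moderation model: AS plus four interaction terms (CA x AS, ..., LI x AS).
moderation_paths_into_ui = direct_paths_into_ui + 1 + 4

requirements = {
    "Hill (5 per indicator)": hill_minimum(N_INDICATORS),
    "10-times, direct model": ten_times_minimum(direct_paths_into_ui),
    "10-times, moderation model": ten_times_minimum(moderation_paths_into_ui),
}

for rule, minimum in requirements.items():
    status = "met" if N_ACTUAL >= minimum else "NOT met"
    print(f"{rule}: minimum {minimum}, actual {N_ACTUAL} -> {status}")
```

Counting the moderation paths explicitly shows why the 10-times minimum depends on which model is being estimated, and that both versions remain comfortably below 274.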

Therefore, the sample size of 274 provides suitable statistical power to detect medium-sized effects. Although the sample is demographically specific and predominantly female, it offers valuable insights into the adoption of AI-NLP models and DSC within the context of Saudi Arabian higher education. This case-specific focus also lays the groundwork for broader comparative or cross-cultural studies in future research.
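For readers without G*Power, the a priori calculation for the overall F test in multiple regression can be approximated in pure Python. This is a sketch, not G*Power itself: it assumes the "R² deviation from zero" setting with noncentrality λ = f²·N and error degrees of freedom N − u − 1, and it evaluates the noncentral F distribution as a Poisson mixture of regularised incomplete beta functions (continued-fraction evaluation in the style of Numerical Recipes). Under these assumptions the search should land at or very close to the reported minimum of 85; all function names are hypothetical.

```python
import math


def _betacf(a, b, x, max_iter=300, eps=3e-14):
    """Continued fraction for the incomplete beta function (modified Lentz)."""
    tiny = 1e-300
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        # Each iteration applies one even and one odd continued-fraction step.
        even = m * (b - m) * x / ((qam + m2) * (a + m2))
        odd = -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))
        for aa in (even, odd):
            d = 1.0 + aa * d
            d = 1.0 / (d if abs(d) > tiny else tiny)
            c = 1.0 + aa / c
            c = c if abs(c) > tiny else tiny
            h *= d * c
        if abs(d * c - 1.0) < eps:
            break
    return h


def betainc(a, b, x):
    """Regularised incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    log_front = (math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                 + a * math.log(x) + b * math.log1p(-x))
    front = math.exp(log_front)
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _betacf(a, b, x) / a
    return 1.0 - front * _betacf(b, a, 1.0 - x) / b


def f_cdf(f, d1, d2, nc=0.0, terms=200):
    """CDF of the central (nc = 0) or noncentral F distribution."""
    if f <= 0.0:
        return 0.0
    x = d1 * f / (d1 * f + d2)
    if nc == 0.0:
        return betainc(d1 / 2.0, d2 / 2.0, x)
    half = nc / 2.0
    total = 0.0
    for j in range(terms):  # Poisson(nc / 2) mixture of beta CDFs
        weight = math.exp(-half + j * math.log(half) - math.lgamma(j + 1))
        total += weight * betainc(d1 / 2.0 + j, d2 / 2.0, x)
    return total


def f_ppf(p, d1, d2):
    """Quantile of the central F distribution, found by bisection."""
    lo, hi = 0.0, 1.0
    while f_cdf(hi, d1, d2) < p:
        hi *= 2.0
    for _ in range(100):
        mid = (lo + hi) / 2.0
        if f_cdf(mid, d1, d2) < p:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2.0


def regression_power(n, n_predictors, f2, alpha=0.05):
    """Power of the overall F test of R^2 = 0 with sample size n."""
    d1, d2 = n_predictors, n - n_predictors - 1
    critical = f_ppf(1.0 - alpha, d1, d2)
    return 1.0 - f_cdf(critical, d1, d2, nc=f2 * n)


def minimum_n(n_predictors, f2=0.15, alpha=0.05, target=0.80):
    """Smallest n whose power reaches the target."""
    n = n_predictors + 2  # smallest n with positive error degrees of freedom
    while regression_power(n, n_predictors, f2, alpha) < target:
        n += 1
    return n


N_MIN = minimum_n(n_predictors=4, f2=0.15, alpha=0.05, target=0.80)
print(N_MIN, round(regression_power(N_MIN, 4, 0.15), 4))
```

The same functions can be reused for other effect sizes (for example, by converting an assumed R² to f² = R²/(1 − R²)), although the results would then need to be reconciled with the criteria reported above.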

3.2. Instrument and Data Collection

The study employed an anonymous survey, so no sensitive or identifying information was collected. Participants were informed that the questionnaire was anonymous and that their participation was entirely voluntary. To maximise participation, the survey link was disseminated via email, and PY educators at KFU in Saudi Arabia encouraged students to take part by emphasising the study’s relevance to the future of higher education.

The survey was divided into three main parts: (1) a consent statement explaining the study’s purpose and the voluntary nature of participation, (2) a demographic section asking for respondents’ gender and age, and (3) a set of structured questions designed to evaluate the constructs of the study’s theoretical model. All items in the seven construct sections were measured on a 5-point Likert scale ranging from 1 (Strongly Disagree) to 5 (Strongly Agree). Data collection was conducted in January 2025 (see the full questionnaire at: https://forms.cloud.microsoft/r/DYJsDAUsKR, accessed on 5 July 2025).

Furthermore, the instrument was developed using validated measurement items adapted from established prior studies, as follows:

CA was measured using four items (CA1–CA4), adapted from [4,12], which assessed learners’ perceptions of the accessibility, quality, and user-friendly formatting of AI-powered content.

LE was measured using four items (LE1–LE4), based on [13,15], which assessed cognitive, emotional, and behavioural engagement in AI-powered learning activities.

LM was assessed using four items (LM1–LM4), adapted from [4,17], which evaluated both internal and external motivators for learning with AI tools.

LI was measured using four items (LI1–LI4), adapted from [13,15], which assessed learners’ perceived participation and responsibility in AI-powered learning processes.

AS was assessed using four adapted items (AS1–AS4), inspired by [74,75], which measured satisfaction with the support AI tools provide for academic tasks and outcomes.

UI was measured using four items (UI1–UI4), based on [57], which examined continued usage intentions for AI tools in future learning.

DSC was measured using four items (DSC1–DSC4), based on [64,65], which assessed perceptions of AI’s role in sustainable education, aligned with the UN SDGs.

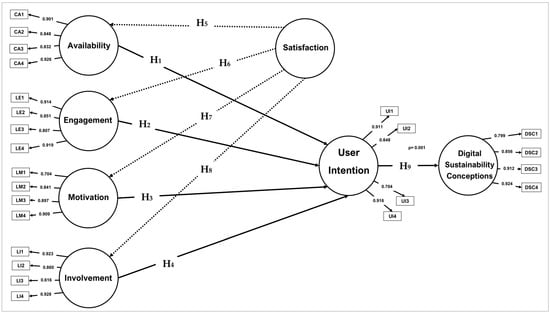

All constructs were modelled as reflective latent variables and analysed using PLS-SEM (see Figure 2) to explore the hypothesised associations among them [73]. This approach was deemed appropriate given the study’s focus on latent variables measured indirectly through observed indicators [76].

Figure 2.

A statistical model.

Additionally, all analyses were performed using SmartPLS 4.0 (developed by SmartPLS GmbH, Bönningstedt, Germany), a statistical software designed explicitly for PLS-SEM. The software facilitated the assessment of measurement models, structural path analysis, and moderation effects. Moreover, SmartPLS was selected for its efficiency in handling latent variable modelling with reflective constructs and its suitability for both exploratory and confirmatory research designs [77].

3.3. Hypothesis Examining Approach

The study employed a sequential analytical approach to examine the hypothesised relationships. First, the direct effects of the four predictor constructs in the extended UTAUT framework (CA, LE, LM, and LI) on UI were assessed, along with the effect of UI on DSC (H1–H4 and H9) [73]. Next, the moderation hypotheses (H5–H8) were tested by evaluating the statistical significance of the interaction terms based on their path coefficients and p-values [78].

Following methodological best practice [73,76], the moderating variable, AS, was excluded from the initial model to ensure a clear and unbiased interpretation of the direct effects. This approach minimises potential confounding that may occur when main and interaction effects are examined simultaneously [78].
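The interaction-term logic behind H5–H8 can be illustrated with ordinary least squares on composite scores. This is a simplified sketch under stated assumptions: it mean-centres a predictor and the moderator and adds their product as an extra regressor, whereas SmartPLS estimates interaction terms on latent variable scores with its own procedures (such as a two-stage approach). The simulated data and the names `solve`, `fit_ols`, and `moderation_model` are hypothetical.

```python
import random


def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_ols(rows, y):
    """OLS coefficients from the normal equations (X'X) b = X'y."""
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(k)]
    return solve(xtx, xty)


def moderation_model(x, m, y):
    """Regress y on centred x, centred m and their product (interaction)."""
    mx, mm = sum(x) / len(x), sum(m) / len(m)
    rows = [[1.0, xi - mx, mi - mm, (xi - mx) * (mi - mm)] for xi, mi in zip(x, m)]
    intercept, b_x, b_m, b_xm = fit_ols(rows, y)
    return {"intercept": intercept, "x": b_x, "moderator": b_m, "interaction": b_xm}


# Hypothetical simulated data in which the effect of x on y grows with m.
rng = random.Random(42)
n = 274
x = [rng.gauss(0, 1) for _ in range(n)]
m = [rng.gauss(0, 1) for _ in range(n)]
y = [0.4 * xi + 0.2 * mi + 0.3 * xi * mi + rng.gauss(0, 1) for xi, mi in zip(x, m)]
print(moderation_model(x, m, y))
```

Estimating the main effects first and then adding the product term mirrors the sequence described above; a significant interaction coefficient would indicate that the moderator changes the strength of the predictor's effect.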

Subsequently, the measurement model was rigorously evaluated to ensure its psychometric soundness, following established PLS-SEM guidelines [73,76,78,79]:

To enhance clarity and accessibility, the following evaluation metrics are briefly defined and linked to their corresponding results, as presented in Section 4 “Results”:

Indicator loadings: These measure the strength of association between each item and its respective latent construct. Loadings of 0.70 or higher are considered acceptable, indicating that an item reliably reflects the construct it is intended to measure. As shown in Table 3, all item loadings met this threshold except LI3 (0.616), which was retained because the LI construct as a whole remained psychometrically sound (see Section 4).

Table 3.

Standards for the conceptual model.

Average variance extracted (AVE): The mean of the squared standardised loadings of a construct’s indicators, reflecting the proportion of indicator variance captured by the construct relative to measurement error. Values above 0.50 indicate adequate convergent validity. As shown in Table 3, all constructs in this study satisfied this criterion.

Composite reliability (CR) and Cronbach’s alpha (α): Assess the internal consistency of items within each construct. A threshold value greater than 0.70 is generally considered acceptable. As reported in Table 3, all constructs demonstrated strong internal consistency, exceeding this benchmark for both CR and α.
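The three measurement-model statistics defined above follow standard formulas: AVE is the mean squared standardised loading, CR (ρc) is (Σλ)² / ((Σλ)² + Σ(1 − λ²)), and Cronbach's α is k/(k − 1) x (1 − Σ item variances / variance of the total score). The sketch below computes them in plain Python; the loadings and Likert responses are hypothetical and are not the values behind Table 3, which were produced by SmartPLS.

```python
import statistics


def ave(loadings):
    """Average variance extracted: mean of squared standardised loadings."""
    return sum(l ** 2 for l in loadings) / len(loadings)


def composite_reliability(loadings):
    """CR (rho_c) = (sum of loadings)^2 / ((sum)^2 + sum of error variances)."""
    s = sum(loadings)
    error = sum(1 - l ** 2 for l in loadings)  # indicator error variances
    return s ** 2 / (s ** 2 + error)


def cronbach_alpha(items):
    """Cronbach's alpha from raw item scores (one list of responses per item)."""
    k = len(items)
    item_var = sum(statistics.variance(scores) for scores in items)
    totals = [sum(resp) for resp in zip(*items)]  # each respondent's total score
    return k / (k - 1) * (1 - item_var / statistics.variance(totals))


# Hypothetical inputs for one four-item construct (not the study's data).
loadings = [0.85, 0.88, 0.90, 0.83]
responses = [[4, 5, 3, 4, 5], [4, 4, 3, 5, 5], [5, 5, 2, 4, 4], [4, 5, 3, 4, 4]]

print(f"AVE = {ave(loadings):.3f}, CR = {composite_reliability(loadings):.3f}")
print(f"alpha = {cronbach_alpha(responses):.3f}")
```

Because AVE averages squared loadings, a single weak item (such as a loading near 0.6) lowers AVE noticeably, but the construct can still clear the 0.50 threshold if the remaining items load strongly.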

Cross-loadings analysis: This assesses item-level discriminant validity by comparing each item’s loading on its designated construct with its loadings on other constructs. An item is considered valid if it loads more strongly on its associated construct than on any other, ideally with a primary loading ≥0.70. As shown in Table 4, all items loaded most strongly on their designated constructs; LI3 (0.616) was the only item with a primary loading below 0.70.

Table 4.

Factor cross-loadings.
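The cross-loading rule can be expressed as a simple check over a loading matrix. In this sketch the LI3 primary loading (0.616) is taken from the text, while every other value, including the deliberately problematic item UI2, is hypothetical and chosen only to show both kinds of violation; the function name is also hypothetical.

```python
def cross_loading_violations(loadings, assignment, min_primary=0.70):
    """Flag items that load higher elsewhere or fall below the primary cut-off.

    loadings:   {item: {construct: loading}}
    assignment: {item: designated construct}
    """
    issues = []
    for item, row in loadings.items():
        own = assignment[item]
        primary = row[own]
        strongest_other = max(v for c, v in row.items() if c != own)
        if primary <= strongest_other:
            issues.append((item, "loads higher on another construct"))
        elif primary < min_primary:
            issues.append((item, f"primary loading {primary:.3f} < {min_primary}"))
    return issues


# Hypothetical cross-loadings; only the LI3 primary loading comes from the text.
loadings = {
    "LI1": {"LI": 0.90, "UI": 0.55},
    "LI3": {"LI": 0.616, "UI": 0.45},
    "UI1": {"LI": 0.52, "UI": 0.88},
    "UI2": {"LI": 0.60, "UI": 0.58},  # deliberately bad hypothetical item
}
assignment = {"LI1": "LI", "LI3": "LI", "UI1": "UI", "UI2": "UI"}

issues = cross_loading_violations(loadings, assignment)
for item, reason in issues:
    print(item, "->", reason)
```

An item like LI3 passes the discriminant part of the rule (it still loads highest on its own construct) and is flagged only for its weaker primary loading, which is the situation described for the present data.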

Discriminant validity: Discriminant validity was evaluated using two methods. First, the Fornell–Larcker criterion requires that the square root of a construct’s AVE exceed its highest correlation with any other construct (see Table 5). Second, the heterotrait–monotrait ratio (HTMT) divides the average correlation between the indicators of two different constructs by the geometric mean of the average correlations among the indicators within each construct. HTMT values below 0.85 (or below 0.90 under a more lenient criterion) indicate that constructs are empirically distinct. As shown in Table 6, all HTMT values met these thresholds.

Table 5.

Fornell–Larcker discriminant validity.

Table 6.

HTMT criterion—discriminant validity confirmation.
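The HTMT ratio can be computed directly from an item correlation matrix. The sketch below follows the ratio described above (mean heterotrait correlation divided by the geometric mean of the two mean monotrait correlations); it assumes positively coded items and at least two items per construct, and the 4 x 4 correlation matrix is hypothetical rather than taken from this study.

```python
import math
import statistics
from itertools import combinations


def htmt(corr, items_a, items_b):
    """HTMT ratio for two constructs, given indicator correlations.

    corr: square item correlation matrix (list of lists).
    items_a, items_b: indices of each construct's items (two or more each).
    """
    hetero = [corr[i][j] for i in items_a for j in items_b]
    mono_a = [corr[i][j] for i, j in combinations(items_a, 2)]
    mono_b = [corr[i][j] for i, j in combinations(items_b, 2)]
    return statistics.fmean(hetero) / math.sqrt(
        statistics.fmean(mono_a) * statistics.fmean(mono_b)
    )


# Hypothetical item correlations: items 0-1 measure construct A, items 2-3 construct B.
corr = [
    [1.00, 0.64, 0.36, 0.36],
    [0.64, 1.00, 0.36, 0.36],
    [0.36, 0.36, 1.00, 0.81],
    [0.36, 0.36, 0.81, 1.00],
]

value = htmt(corr, [0, 1], [2, 3])
print(f"HTMT = {value:.3f}; below 0.85: {value < 0.85}")
```

Here the between-construct correlations (0.36) are small relative to the within-construct correlations (0.64 and 0.81), so the ratio is 0.36 / 0.72 = 0.5, well below the 0.85 cut-off.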

Path coefficients, t-values, and p-values: These metrics were used to evaluate the hypothesised structural relationships, as presented in Table 7. Path coefficients (β) represent the strength and direction of relationships between constructs, while t-values and p-values assess their statistical significance. A p-value below 0.05 was considered statistically significant, indicating support for the corresponding hypothesis.

Table 7.

Path coefficients, t, and p.

Additionally, to evaluate the significance of the hypothesised relationships, all p-values were assessed against a predefined significance threshold of α = 0.05. Notably, the symbol α is used in this study in two distinct contexts: (a) as Cronbach’s alpha, which measures internal consistency and reliability of the constructs (see Table 3), and (b) as the significance level for hypothesis testing (α = 0.05) (see Table 7).
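The link between a path coefficient, its t-value, and its p-value can be illustrated with a simple bootstrap, in which t is the estimate divided by the standard deviation of its bootstrap distribution. This is a simplified sketch on hypothetical simulated data for a single standardised path (where the coefficient equals Pearson's r); SmartPLS re-estimates the whole PLS path model in every bootstrap subsample, and the two-tailed p-value here uses a normal approximation. The names `pearson` and `bootstrap_path` are hypothetical.

```python
import math
import random
import statistics


def pearson(x, y):
    """Pearson correlation (the standardised slope for a single predictor)."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def bootstrap_path(x, y, n_boot=1000, seed=7):
    """Return beta, bootstrap SE, t-value and two-tailed p-value."""
    rng = random.Random(seed)
    n = len(x)
    beta = pearson(x, y)
    boot = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]  # resample cases with replacement
        boot.append(pearson([x[i] for i in idx], [y[i] for i in idx]))
    se = statistics.stdev(boot)
    t = beta / se
    p = math.erfc(abs(t) / math.sqrt(2))  # equals 2 * (1 - Phi(|t|))
    return beta, se, t, p


# Hypothetical data with a moderate positive path (not the study's data).
data_rng = random.Random(2025)
n = 274
x = [data_rng.gauss(0, 1) for _ in range(n)]
y = [0.35 * xi + data_rng.gauss(0, 0.94) for xi in x]

result = bootstrap_path(x, y)
beta, se, t, p = result
print(f"beta = {beta:.3f}, SE = {se:.3f}, t = {t:.2f}, p = {p:.4f}, p < 0.05: {p < 0.05}")
```

Comparing p with 0.05 here is the hypothesis-testing use of α, distinct from Cronbach's α reported for reliability.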

These analyses confirmed that all constructs met the required thresholds for reliability, convergent validity, and discriminant validity, thereby supporting their inclusion in the structural model analysis [77,80].

3.4. Ethical Approvals and Informed Consent for Participation

Prior to data collection, formal institutional approval was obtained from the Institutional Review Board at King Faisal University (Ethics Reference: KFU-2025-ETHICS3435). This approval confirmed that the methods employed were consistent with institutional criteria and with the ethical principles outlined in the Declaration of Helsinki [81].

Several safeguards were implemented to protect participants’ rights: participation was entirely voluntary and free from pressure; written informed consent was obtained from each participant; respondents could withdraw at any time without giving a reason; and all data received were anonymised to protect participants’ identities.

4. Results

Table 3 outlines the measurement standards for the conceptual model, which comprises seven constructs: CA, LE, LM, LI, AS, UI, and DSC. However, the moderating effect of AS was excluded from the final analysis because it did not show statistically significant moderating effects.

Table 3 and Figure 2 indicate that the constructs in the conceptual model exhibit strong reliability, with all item loadings except LI3 (0.616) exceeding the conventional threshold of 0.70. This indicates that the observed variables reliably measure their corresponding latent constructs, supporting the robustness of the model’s psychometric properties. The CA construct showed strong reliability and convergent validity, with item loadings ranging from 0.832 to 0.926.

These results were further supported by excellent measurement quality indicators, including an average variance extracted (AVE) of 0.776, composite reliability (CR) of 0.937, and Cronbach’s α of 0.919. Together, these metrics indicate highly reliable indicators, strong inter-item relationships, and excellent internal consistency. Similarly, LE showed high loadings (0.807–0.919) with strong supporting metrics (AVE = 0.761, CR = 0.929, Cronbach’s α = 0.904), confirming its effectiveness in measuring engagement with AI-NLP models.

LM also met all reliability standards, with loadings ranging from 0.704 to 0.909 and robust validity metrics (AVE = 0.728, CR = 0.911, Cronbach’s α = 0.879), supporting its inclusion as a predictor of UI in the structural model. The LI construct showed more varied loadings (0.616–0.928), although its construct-level metrics remained within acceptable ranges (AVE = 0.714, CR = 0.907, Cronbach’s α = 0.870).

Although one item (LI3 = 0.616) fell below the 0.70 threshold, it was retained because the LI construct’s AVE and CR remained above their respective thresholds (0.50 and 0.70), so the construct maintained overall psychometric soundness; in the structural model, LI also showed a significant influence on UI. The UI results were particularly robust, with loadings ranging from 0.704 to 0.916 and strong measurement metrics (AVE = 0.752, CR = 0.920, Cronbach’s α = 0.891), supporting its role as the construct linking the cognitive–affective predictors to DSC.

The DSC construct also demonstrated strong measurement properties, with loadings ranging from 0.799 to 0.924 and strong validity indicators (AVE = 0.775, CR = 0.936, Cronbach’s α = 0.918), indicating that it effectively captures students’ evolving understanding of sustainability in AI-enhanced learning environments.

However, AI satisfaction (AS) showed no significant moderating effects on the relationships between UI and content availability, engagement, motivation, and involvement. This may reflect uniformly high satisfaction levels across the sample, which would reduce the variance in AS and consequently weaken its interaction effects.

Table 4 presents the factor cross-loadings used to assess discriminant validity within the PLS-SEM framework. This analysis examines whether each indicator is most strongly associated with its theoretically assigned construct relative to all other constructs. Consistent with established methodological standards, discriminant validity is confirmed when each indicator’s loading is substantially higher on its corresponding latent construct than on any other construct in the analysis [73].

Table 4 provides clear evidence of discriminant validity across all constructs included in the study: CA, LE, LM, LI, UI, and DSC. Each item loads strongly on its corresponding latent construct and more weakly on all other constructs, confirming the distinctiveness of each factor in the structural model.

For CA, all items (CA1–CA4) load highly on the CA construct (0.832 to 0.926) and exhibit notably lower cross-loadings on LE, LM, LI, UI, and DSC, confirming CA’s conceptual independence and empirical distinction from the other constructs.

The LE items (LE1–LE4) exhibit their highest loadings on their intended factor (0.807–0.919), with lower loadings on other constructs, validating LE as a distinct cognitive–affective construct. Similarly, the LM indicators (LM1–LM4) load strongly on their designated construct (0.704–0.909) and substantially lower on all others, supporting the distinct conceptual role of motivation in driving intention.

Likewise, the LI items demonstrate the same pattern of discriminant separation. Although LI3 exhibits a lower factor loading (0.616), the remaining items (LI1, LI2, LI4) range from 0.860 to 0.928 on LI, with consistently smaller loadings on the rest, thus preserving overall construct distinction.

All UI items (UI1–UI4) exhibit high loadings on their construct (0.704–0.916) and lower values on other factors, reinforcing UI’s central role in the model as the link between the cognitive–affective dimensions and digital sustainability.

Finally, the DSC items (DSC1–DSC4) load strongly on their construct (0.799–0.924), with much smaller loadings on the preceding constructs, confirming that DSC is a well-characterised outcome variable that captures students’ understanding of digital sustainability concepts in AI-integrated education. To further verify discriminant validity, the results were confirmed using the Fornell–Larcker criterion and the HTMT ratio, as shown in Table 5 and Table 6.

According to Table 5, the Fornell–Larcker criterion results report confirms discriminant validity for all constructs included in the measurement model. According to this criterion, the square root of AVE for each construct (shown on the diagonal in bold) should be greater than its correlation with any other construct in the model (off-diagonal values).

According to the results, the CA showed a square root of AVE of 0.881, which is stronger than its correlations with LE (0.652), LM (0.613), LI (0.590), UI (0.645), and DSC (0.580). Thus, CA’s distinct role in predicting user intention to adopt AI NLP tools is confirmed. Additionally, the LE has a square root of AVE of 0.872, exceeding its highest correlation with UI (0.665).

Hence, this supports the discriminant validity of LE, validating its unique contribution to user intention. Similarly, the LM demonstrates a square root of AVE of 0.853, surpassing its highest inter-construct correlation of 0.633 with LE.

LM is therefore statistically distinct from the other constructs in the model. Likewise, LI has a square root of AVE of 0.845, which is greater than its correlations with LE (0.610) and UI (0.603), confirming its distinctiveness as a construct.

Furthermore, UI, the central mediator in the model, has a square root of AVE of 0.867, clearly higher than its correlations with CA (0.645), LE (0.665), LM (0.626), LI (0.603), and DSC (0.602). UI therefore retains its discriminant validity, which is a precondition for interpreting it as a mediator between the learning variables and digital sustainability conceptions.

Finally, DSC, the primary outcome variable, has a square root of AVE of 0.880, clearly exceeding its highest correlation (0.602, with UI), which affirms its empirical distinctiveness as the outcome construct of the model.
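The pairwise comparisons above can be reproduced mechanically. This minimal sketch uses only the square roots of AVE and the inter-construct correlations quoted in the text; the full matrix in Table 5 is not reproduced, so unquoted pairs are omitted. The function name and dictionary layout are illustrative assumptions.

```python
# Fornell-Larcker check using only the values quoted in the text.
# A pair passes when |r| is below the square root of AVE of BOTH constructs.

sqrt_ave = {"CA": 0.881, "LE": 0.872, "LM": 0.853, "LI": 0.845, "UI": 0.867, "DSC": 0.880}

correlations = {
    ("CA", "LE"): 0.652, ("CA", "LM"): 0.613, ("CA", "LI"): 0.590,
    ("CA", "UI"): 0.645, ("CA", "DSC"): 0.580, ("LE", "LM"): 0.633,
    ("LE", "LI"): 0.610, ("LE", "UI"): 0.665, ("LM", "UI"): 0.626,
    ("LI", "UI"): 0.603, ("UI", "DSC"): 0.602,
}


def fornell_larcker_violations(sqrt_ave, correlations):
    """Return (a, b, r) for every pair whose |r| is not below both sqrt(AVE) values."""
    return [
        (a, b, r)
        for (a, b), r in correlations.items()
        if abs(r) >= min(sqrt_ave[a], sqrt_ave[b])
    ]


print(fornell_larcker_violations(sqrt_ave, correlations))  # []
```

Every quoted pair passes; the narrowest margin is between LE and UI (0.867 - 0.665, roughly 0.20).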

To assess discriminant validity beyond the cross-loadings and the Fornell–Larcker criterion, the heterotrait–monotrait ratio (HTMT), an established method for assessing discriminant validity in structural equation modelling [80], was also computed; Table 6 presents the results. All HTMT values were below the conservative threshold of 0.85, and therefore also below the more lenient threshold of 0.90, indicating acceptable discriminant validity for every construct pair.
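The HTMT ratio divides the average correlation between indicators of two different constructs by the geometric mean of the average correlations among indicators within each construct, as proposed by Henseler, Ringle and Sarstedt (2015). A minimal Python sketch follows. The indicator correlations are hypothetical, and the dictionary-of-frozensets input format is an assumption chosen for brevity; PLS software computes the same quantity from the full indicator correlation matrix.

```python
from itertools import combinations, product
from math import sqrt
from statistics import mean


def htmt(corr, items_a, items_b):
    """Heterotrait-monotrait ratio for two constructs.

    corr maps frozenset({item_i, item_j}) to the correlation between two
    indicators (a hypothetical input format chosen for this sketch).
    """
    # Average correlation between indicators of *different* constructs.
    hetero = mean(corr[frozenset((i, j))] for i, j in product(items_a, items_b))
    # Average correlation among indicators of the *same* construct.
    mono_a = mean(corr[frozenset(pair)] for pair in combinations(items_a, 2))
    mono_b = mean(corr[frozenset(pair)] for pair in combinations(items_b, 2))
    return hetero / sqrt(mono_a * mono_b)


# Hypothetical indicator correlations for two two-item constructs.
corr = {
    frozenset(("x1", "x2")): 0.64,
    frozenset(("y1", "y2")): 0.81,
    frozenset(("x1", "y1")): 0.36,
    frozenset(("x1", "y2")): 0.36,
    frozenset(("x2", "y1")): 0.36,
    frozenset(("x2", "y2")): 0.36,
}
value = htmt(corr, ["x1", "x2"], ["y1", "y2"])
print(round(value, 3), value < 0.85)  # 0.5 True
```

Here 0.36 / sqrt(0.64 × 0.81) = 0.36 / 0.72 = 0.5, well below both the 0.85 and 0.90 thresholds.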

Notably, Table 6 shows that the HTMT values for key pairs among the predictors and the mediator remain comfortably below the 0.85 threshold: CA ⟷ LE (0.741), LE ⟷ LM (0.726), LM ⟷ UI (0.721), and LI ⟷ UI (0.695).

Furthermore, pairs involving the outcome variable, DSC, also remain below the threshold, such as UI ⟷ DSC (0.694), LE ⟷ DSC (0.688), and LM ⟷ DSC (0.657). The strongest discriminant separation, that is, the lowest HTMT value, was observed between LI and DSC (HTMT = 0.616).

Across the entire model, HTMT values ranged from 0.616 to 0.763, providing robust evidence for the distinctiveness of each latent construct in the measurement model.

Table 7 presents the structural model results, including path coefficients (β), t-values, p-values, and R² values. The exogenous predictors are CA, LE, LM, and LI, with AS specified as a moderator of their effects on UI, while the endogenous outcomes are UI and DSC. Of the nine hypothesised relationships, five (RH1 to RH4 and RH9) were supported, although RH4 only marginally (p = 0.072), whereas the remaining four (RH5 to RH8) were not supported.
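For readers who prefer the hypothesised structure in equation form, the paths tested in Table 7 can be written as follows. This is a sketch under stated assumptions: standardised latent scores, AS entering as a main effect alongside the four product terms (a common way of specifying moderation in PLS-SEM, assumed here rather than taken from the study), and only the hypothesised RH9 path shown for DSC.

```latex
\begin{aligned}
\mathrm{UI} &= \beta_1\,\mathrm{CA} + \beta_2\,\mathrm{LE} + \beta_3\,\mathrm{LM} + \beta_4\,\mathrm{LI} + \beta_{\mathrm{AS}}\,\mathrm{AS} \\
&\quad + \beta_5\,(\mathrm{CA}\times\mathrm{AS}) + \beta_6\,(\mathrm{LE}\times\mathrm{AS}) + \beta_7\,(\mathrm{LM}\times\mathrm{AS}) + \beta_8\,(\mathrm{LI}\times\mathrm{AS}) + \zeta_{\mathrm{UI}} \\
\mathrm{DSC} &= \beta_9\,\mathrm{UI} + \zeta_{\mathrm{DSC}}
\end{aligned}
```

Here β₁ to β₄ correspond to RH1 to RH4, β₅ to β₈ to the interaction hypotheses RH5 to RH8, and β₉ to RH9; ζ denotes the structural error terms.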

Notably, CA significantly predicts UI (β = 0.25, t = 2.50, p = 0.012), suggesting that students are more likely to adopt AI-NLP models when they perceive them as readily accessible. Similarly, LE shows a significant positive effect on UI (β = 0.22, t = 2.20, p = 0.028), highlighting the importance of active interaction with AI-NLP models.

In addition, LM has a significant influence on UI (β = 0.20, t = 2.00, p = 0.046), confirming the role of motivational factors in technology adoption. LI shows a marginally significant relationship with UI (β = 0.18, t = 1.80, p = 0.072), indicating a modest contribution that falls short of the conventional 0.05 level. Together, these four predictors explain 52.2% of the variance in UI (R² = 0.522), indicating moderate predictive power.

UI, in turn, significantly predicts DSC (β = 0.35, t = 3.50, p < 0.001), accounting for 45.1% of its variance (R² = 0.451). This indicates that stronger intentions to adopt AI-NLP models are associated with greater awareness of digital sustainability. By contrast, the hypotheses proposing that AS moderates the relationships between the cognitive–affective variables (CA, LE, LM, LI) and UI (RH5 to RH8) were not supported. All interaction effects were non-significant: CA × AS (β = 0.05, p > 0.6), LE × AS (β = 0.04, p > 0.6), LM × AS (β = 0.03, p > 0.6), and LI × AS (β = 0.02, p > 0.6). One plausible explanation is that uniformly high satisfaction among PY students created a ceiling effect, limiting the moderator’s discriminative power.
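As a consistency check on the reported statistics, the two-tailed p-values can be recovered from the t-values. This sketch assumes a standard normal reference distribution, which closely approximates the bootstrap t-statistics that PLS software reports when many resamples are drawn; the exact distribution used by the software is not stated in the text.

```python
from math import erfc, sqrt


def two_tailed_p(t):
    """Two-tailed p-value for a t statistic, using a standard normal
    reference distribution (large-sample approximation):
    p = 2 * (1 - Phi(|t|)) = erfc(|t| / sqrt(2))."""
    return erfc(abs(t) / sqrt(2))


# Path estimates as reported in the text: (beta, t)
paths = {
    "CA -> UI": (0.25, 2.50),
    "LE -> UI": (0.22, 2.20),
    "LM -> UI": (0.20, 2.00),
    "LI -> UI": (0.18, 1.80),
    "UI -> DSC": (0.35, 3.50),
}

for name, (beta, t) in paths.items():
    p = two_tailed_p(t)
    verdict = "significant" if p < 0.05 else "not significant"
    print(f"{name}: beta={beta:.2f}, t={t:.2f}, p={p:.4f} ({verdict} at alpha = 0.05)")
```

Under this approximation, t = 3.50 gives p of roughly 0.0005, consistent with reporting the UI to DSC path as p < 0.001, while t = 1.80 gives p of roughly 0.072, matching the marginal LI result.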

5. Discussion

The current study highlights the role of key learning variables (CA, LE, LM, and, more tentatively, LI) in predicting students’ intention to adopt AI-NLP models such as ChatGPT, Gemini, and Copilot. Among these, CA (β = 0.25) and LE (β = 0.22) had the strongest effects on UI, consistent with recent studies [82,83,84,85].

Additionally, the results indicate that students are more likely to integrate AI-NLP models into their learning when they perceive them as accessible and engaging. AI-NLP models can support learning by analysing student responses, adjusting content difficulty, and providing personalised feedback. Such a process may improve academic performance and, through experiential learning, raise awareness of digital sustainability. These interpretations are consistent with prior research [21,86,87,88], and such practices are widely endorsed by global frameworks such as the SDGs and UNESCO’s digital education objectives [89].

The significant influence of LM (β = 0.20) further suggests that students with strong intrinsic motivation are more receptive to AI-enhanced learning environments. Prior work also reports that students using AI-NLP models show greater engagement than those in traditional learning settings: they ask more questions, interact more with peers and instructors, and give more feedback, behaviours linked to deeper learning [90,91].

Although the LI path (β = 0.18, p = 0.072) did not meet the conventional threshold for statistical significance (α = 0.05), it is close enough to the cutoff to be considered marginally significant. In line with statistical norms, this path is interpreted as suggestive rather than confirmatory, that is, as a possible trend that requires further investigation. The data tentatively suggest that students who are actively involved in their learning processes may be more inclined to adopt AI-NLP models.

Involvement of this kind, such as asking questions, participating in discussions, and offering feedback, is well documented as an indicator of successful learning experiences [74,92]. Importantly, UI significantly predicted students’ DSC (β = 0.35, p < 0.001). This finding suggests that a stronger intention to adopt AI tools is linked to greater awareness of sustainable digital practices, including green computing and digital inclusion [86,88].

On the other hand, the moderation hypotheses (RH5–RH8), which proposed AS as a moderating factor, were not supported. This may reflect uniformly high satisfaction among PY students, which could have introduced a ceiling effect: responses cluster near the upper limit of the scale, reducing variability and limiting the statistical power to detect interactions. This interpretation is consistent with previous research on technology acceptance models, where ceiling effects in satisfaction or perceived usefulness scores have similarly obscured moderation effects or weakened path estimates [93,94,95,96].
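The ceiling-effect explanation can be illustrated with a small simulation. All parameters below (a latent mean of 4.6 and SD of 0.8 on a 5-point scale, n = 274 to mirror the sample size, and the random seed) are hypothetical assumptions, not estimates from this study. The point is only that truncating responses at the top of the scale shrinks the observed spread of AS.

```python
import random
from statistics import pstdev


def simulate_ceiling(n=274, true_mean=4.6, true_sd=0.8, scale_max=5.0, seed=1):
    """Draw latent satisfaction scores and clip them at the scale maximum.

    All parameters are hypothetical; they are not estimates from this study.
    Only the upper bound of the scale is modelled.
    Returns (sd_before_clipping, sd_after_clipping, share_at_ceiling).
    """
    rng = random.Random(seed)
    latent = [rng.gauss(true_mean, true_sd) for _ in range(n)]
    # Respondents cannot report more than the top scale point.
    observed = [min(x, scale_max) for x in latent]
    share_at_max = sum(x == scale_max for x in observed) / n
    return pstdev(latent), pstdev(observed), share_at_max


sd_latent, sd_observed, share = simulate_ceiling()
print(f"SD latent={sd_latent:.2f}, SD observed={sd_observed:.2f}, at ceiling={share:.0%}")
```

All else being equal, a product term such as CA × AS inherits the restricted variance of AS, so interaction effects become harder to detect. This is the mechanism on which the ceiling-effect interpretation relies.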

Overall, the model demonstrated moderate explanatory power, accounting for 52.2% of the variance in UI (R² = 0.522) and 45.1% of the variance in DSC (R² = 0.451). AI-NLP models may facilitate a learning-by-doing approach that enables students to engage actively with educational content. Such experiential learning could reinforce key sustainability principles relevant to digital education, including energy efficiency, e-waste management, ethical data usage, sustainable digital design, and the circular economy [14,19,97,98].

However, it remains unclear whether elevated DSC scores reflect a deep, applied understanding of sustainability domains or merely a positive perception of AI-NLP models. Future research should incorporate qualitative methods, such as interviews and open-ended surveys, to evaluate students’ actual knowledge of digital sustainability. These insights could then guide targeted curriculum interventions, ensuring that experiential AI engagement is complemented by explicit instruction in ethical data practices, digital environmental impact, and sustainable technology use.

Furthermore, the study highlights AI’s transformative potential in higher education by examining how UI influences DSC among PY students in Saudi Arabia. Given the demographic focus of the study, further research is recommended, particularly longitudinal studies, to track changes over time across diverse genders, academic specialisations, and cultural contexts.

6. Conclusions and Contributions

The current study offers empirical evidence on how AI-NLP models shape PY students’ intentions and conceptions of digital sustainability. It identifies content availability, engagement, and motivation, and to a lesser extent involvement, as predictors of the adoption of educational AI-NLP models, whereas AI satisfaction did not moderate these relationships. By reconceptualising digital sustainability as a learner-driven construct within AI-enhanced education, the research bridges cognitive–affective engagement with responsible technology use, expanding theoretical discussions on the integration of AI-NLP models.

Furthermore, this study extends UTAUT by integrating learning constructs (content availability, engagement, motivation, involvement, and AI satisfaction) and examining their direct, mediating, and moderating roles in shaping user intention and digital sustainability conceptions in higher education institutions. Practically, the findings can help higher education policymakers align institutional priorities with global sustainability agendas, especially SDG 4 (Quality Education) and SDG 9 (Industry, Innovation, and Infrastructure).

7. Future Study Opportunities and Limitations

First, this study focused exclusively on preparatory year (PY) students at a Saudi Arabian university, with a predominantly female sample (over 60%). To enhance generalisability, future research should examine these dynamics across more diverse populations, incorporating balanced gender representation, varied academic disciplines, and broader cultural contexts.

Second, institutional and sociocultural factors specific to this setting may also have shaped participants’ perceptions. Accordingly, future studies should adopt more inclusive sampling strategies that reflect a wider range of academic levels, gender identities, cultural backgrounds, and institutional types across multiple national contexts.

Third, combining cross-sectional survey designs with longitudinal and qualitative methodologies could yield richer insights into how students’ attitudes and behaviours toward AI tools evolve over time and across varied educational environments.

Finally, although this study found that students expressed positive intentions to adopt AI-powered NLP tools, associated with greater motivation, engagement, and awareness of digital sustainability (the “bridging” side), it did not investigate potential negative implications (the “burning” side), such as ethical dilemmas, resistance, or adoption failures. Future research should address these underexplored areas to provide a more comprehensive and balanced understanding of AI integration in educational contexts.

Funding

This research was funded by the Deanship of Scientific Research at King Faisal University, Saudi Arabia, grant number KFU252492.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Conflicts of Interest

The author declares no conflicts of interest.

References

- Fund, S. Sustainable Development Goals. 2015. Available online: https://www.un.org/sustainabledevelopment/inequality (accessed on 5 May 2025).

- Ryan, M.; Antoniou, J.; Brooks, L.; Jiya, T.; Macnish, K.; Stahl, B. The ethical balance of using smart information systems for promoting the United Nations’ Sustainable Development Goals. Sustainability 2020, 12, 4826.

- Jayachandran, M.; Gatla, R.K.; Rao, K.P.; Rao, G.S.; Mohammed, S.; Milyani, A.H.; Azhari, A.A.; Kalaiarasy, C.; Geetha, S. Challenges in achieving sustainable development goal 7: Affordable and clean energy in light of nascent technologies. Sustain. Energy Technol. Assess. 2022, 53, 102692.

- Ghanem, S. E-learning in higher education to achieve SDG 4: Benefits and challenges. In Proceedings of the 2020 Second International Sustainability and Resilience Conference: Technology and Innovation in Building Designs (51154), Sakheer, Bahrain, 11–12 November 2020; pp. 1–6.

- Pigola, A.; Da Costa, P.R.; Carvalho, L.C.; Silva, L.F.d.; Kniess, C.T.; Maccari, E.A. Artificial intelligence-driven digital technologies to the implementation of the sustainable development goals: A perspective from Brazil and Portugal. Sustainability 2021, 13, 13669.

- Nedungadi, P.; Surendran, S.; Tang, K.-Y.; Raman, R. Big Data and AI Algorithms for Sustainable Development Goals: A Topic Modeling Analysis. IEEE Access 2024, 12, 188519–188541.

- Ezeigweneme, C.A.; Daraojimba, C.; Tula, O.A.; Adegbite, A.O.; Gidiagba, J.O. A review of technological innovations and environmental impact mitigation. World J. Adv. Res. Rev. 2024, 21, 075–082.

- Salem, M.A.; Zakaria, O.M.; Aldoughan, E.A.; Khalil, Z.A.; Zakaria, H.M. Bridging the AI Gap in Medical Education: A Study of Competency, Readiness, and Ethical Perspectives in Developing Nations. Computers 2025, 14, 238.

- Attaran, M.; Woods, J. Cloud computing technology: Improving small business performance using the Internet. J. Small Bus. Entrep. 2019, 31, 495–519.

- DataReportal. Digital 2025: Global Overview Report. 2025. Available online: https://datareportal.com/reports/digital-2025-global-overview-report (accessed on 5 May 2025).

- Muflih, S.; Al-Azzam, S.I.; Alzoubi, K.H.; Karasneh, R.; Hawamdeh, S.; Sweileh, W.M. A bibliometric analysis of global trends in internet addiction publications from 1996 to 2022. Inform. Med. Unlocked 2024, 47, 101484.

- Bonab, S.R.; Haseli, G.; Ghoushchi, S.J. Digital technology and information and communication technology on the carbon footprint. In Decision Support Systems for Sustainable Computing; Elsevier: Amsterdam, The Netherlands, 2024; pp. 101–122.

- Bulathwela, S.; Pérez-Ortiz, M.; Holloway, C.; Cukurova, M.; Shawe-Taylor, J. Artificial intelligence alone will not democratise education: On educational inequality, techno-solutionism and inclusive tools. Sustainability 2024, 16, 781.

- Wongmahesak, K.; Karim, F.; Wongchestha, N. Artificial Intelligence: A Catalyst for Sustainable Effectiveness in Compulsory Education Management. Asian Educ. Learn. Rev. 2025, 3, 4.

- Salem, M.A.; Alshebami, A.S. Exploring the Impact of Mobile Exams on Saudi Arabian Students: Unveiling Anxiety and Behavioural Changes across Majors and Gender. Sustainability 2023, 15, 12868.

- Huang, Q.; Lv, C.; Lu, L.; Tu, S. Evaluating the Quality of AI-Generated Digital Educational Resources for University Teaching and Learning. Systems 2025, 13, 174.

- Yeganeh, L.N.; Fenty, N.S.; Chen, Y.; Simpson, A.; Hatami, M. The Future of Education: A Multi-Layered Metaverse Classroom Model for Immersive and Inclusive Learning. Future Internet 2025, 17, 63.

- Moursy, N.A.; Hamsho, K.; Gaber, A.M.; Ikram, M.F.; Sajid, M.R. A systematic review of progress test as longitudinal assessment in Saudi Arabia. BMC Med. Educ. 2025, 25, 100.

- Akhtar, S.; Alfuraydan, M.M.; Mughal, Y.H.; Nair, K.S. Adoption of Massive Open Online Courses (MOOCs) for Health Informatics and Administration Sustainability Education in Saudi Arabia. Sustainability 2025, 17, 3795.

- Elshaer, I.A.; AlNajdi, S.M.; Salem, M.A. Sustainable AI Solutions for Empowering Visually Impaired Students: The Role of Assistive Technologies in Academic Success. Sustainability 2025, 17, 5609.

- Salem, M.A.; Sobaih, A.E.E. A Quadruple “E” Approach for Effective Cyber-Hygiene Behaviour and Attitude toward Online Learning among Higher-Education Students in Saudi Arabia amid COVID-19 Pandemic. Electronics 2023, 12, 2268.

- Lane, H.; Dyshel, M. Natural Language Processing in Action; Simon and Schuster: New York, NY, USA, 2025.

- Raiaan, M.A.K.; Mukta, M.S.H.; Fatema, K.; Fahad, N.M.; Sakib, S.; Mim, M.M.J.; Ahmad, J.; Ali, M.E.; Azam, S. A review on large language models: Architectures, applications, taxonomies, open issues and challenges. IEEE Access 2024, 12, 26839–26874.

- Küper, A.; Krämer, N.C. Psychological traits and appropriate reliance: Factors shaping trust in AI. Int. J. Hum. Comput. Interact. 2025, 41, 4115–4131.

- Khan, W.H.; Khan, M.S.; Khan, N.; Ahmad, A.; Siddiqui, Z.I.; Singh, R.B.; Malik, M.Z. Artificial intelligence, machine learning and deep learning in biomedical fields: A prospect in improvising medical healthcare systems. In Artificial Intelligence in Biomedical and Modern Healthcare Informatics; Elsevier: Amsterdam, The Netherlands, 2025; pp. 55–68.

- Verkooijen, M.H.; van Tuijl, A.A.; Calsbeek, H.; Fluit, C.R.M.G.; van Gurp, P. How to evaluate lifelong learning skills of healthcare professionals: A systematic review on content and quality of instruments for measuring lifelong learning. BMC Med. Educ. 2024, 24, 1423.

- Ohalete, N.C.; Ayo-Farai, O.; Olorunsogo, T.O.; Maduka, P.; Olorunsogo, T.J. AI-driven environmental health disease modeling: A review of techniques and their impact on public health in the USA and African contexts. Int. Med. Sci. Res. J. 2024, 4, 51–73.

- Vardhani, A.; Findyartini, A.; Wahid, M. Needs Analysis for Competence of Information and Communication Technology for Medical Graduates. Educ. Med. J. 2024, 16, 119–136.

- Bhuiyan, M.R.I.; Husain, T.; Islam, S.; Amin, A. Exploring the prospective influence of artificial intelligence on the health sector in Bangladesh: A study on awareness, perception and adoption. Health Educ. 2025, 125, 279–297.

- Bai, S.; Zhang, X.; Yu, D.; Yao, J. Assist me or replace me? Uncovering the influence of AI awareness on employees’ counterproductive work behaviors. Front. Public Health 2024, 12, 1449561.

- Moldt, J.-A.; Festl-Wietek, T.; Fuhl, W.; Zabel, S.; Claassen, M.; Wagner, S.; Nieselt, K.; Herrmann-Werner, A. Assessing Artificial Intelligence Awareness and Identifying Essential Competencies: Insights from Key Stakeholders in Integrating AI into Medical Education. 2024. Available online: https://ssrn.com/abstract=4713042 (accessed on 5 May 2025).

- Pawar, S.; Park, J.; Jin, J.; Arora, A.; Myung, J.; Yadav, S.; Haznitrama, F.G.; Song, I.; Oh, A.; Augenstein, I. Survey of cultural awareness in language models: Text and beyond. arXiv 2024, arXiv:2411.00860.

- Salem, M.A.; Elshaer, I.A. Educators’ Utilizing One-Stop Mobile Learning Approach amid Global Health Emergencies: Do Technology Acceptance Determinants Matter? Electronics 2023, 12, 441.

- Dias, A.; Bidarra, J. Designing e-content: A challenge for Open Educational Resources. In Proceedings of the EADTU 2007. Annual Conference of the European Association of Distance Teaching Universities, Lisbon, Portugal, 8–9 November 2007.

- Barkley, E.F.; Major, C.H. Student Engagement Techniques: A Handbook for College Faculty; John Wiley & Sons: Hoboken, NJ, USA, 2020.

- VanDeWeghe, R. Engaged Learning; Corwin Press: Thousand Oaks, CA, USA, 2009.

- Cook, D.A.; Artino, A.R., Jr. Motivation to learn: An overview of contemporary theories. Med. Educ. 2016, 50, 997–1014.

- Hoffman, B. Motivation for Learning and Performance; Academic Press: Cambridge, MA, USA, 2015.

- Himmele, P.; Himmele, W. Total Participation Techniques: Making Every Student an Active Learner; ASCD: Alexandria, VA, USA, 2017.

- Salem, M.A.; Sobaih, A.E.E. ADIDAS: An Examined Approach for Enhancing Cognitive Load and Attitudes towards Synchronous Digital Learning Amid and Post COVID-19 Pandemic. Int. J. Environ. Res. Public Health 2022, 19, 16972.

- Makransky, G.; Petersen, G.B. The cognitive affective model of immersive learning (CAMIL): A theoretical research-based model of learning in immersive virtual reality. Educ. Psychol. Rev. 2021, 33, 937–958.

- Sparviero, S.; Ragnedda, M. Towards digital sustainability: The long journey to the sustainable development goals 2030. Digit. Policy Regul. Gov. 2021, 23, 216–228.

- Granić, A. Technology acceptance and adoption in education. In Handbook of Open, Distance and Digital Education; Springer: Berlin/Heidelberg, Germany, 2023; pp. 183–197.

- Kaushik, M.K.; Verma, D. Determinants of digital learning acceptance behavior: A systematic review of applied theories and implications for higher education. J. Appl. Res. High. Educ. 2020, 12, 659–672.

- Kemp, A.; Palmer, E.; Strelan, P. A taxonomy of factors affecting attitudes towards educational technologies for use with technology acceptance models. Br. J. Educ. Technol. 2019, 50, 2394–2413.

- Bornschlegl, M.; Townshend, K.; Caltabiano, N.J. Application of the theory of planned behavior to identify variables related to academic help seeking in higher education. Front. Educ. 2021, 6, 738790.

- Wong, C.T.; Tan, C.L.; Mahmud, I. Value-based adoption model: A systematic literature review from 2007 to 2021. Int. J. Bus. Inf. Syst. 2025, 48, 304–331.

- Chahal, J.; Rani, N. Exploring the acceptance for e-learning among higher education students in India: Combining technology acceptance model with external variables. J. Comput. High. Educ. 2022, 34, 844–867.

- Rana, M.M.; Siddiqee, M.S.; Sakib, M.N.; Ahamed, M.R. Assessing AI adoption in developing country academia: A trust and privacy-augmented UTAUT framework. Heliyon 2024, 10, e37569.

- Dwivedi, Y.K.; Rana, N.P.; Chen, H.; Williams, M.D. A Meta-analysis of the Unified Theory of Acceptance and Use of Technology (UTAUT). In Proceedings of the Governance and Sustainability in Information Systems. Managing the Transfer and Diffusion of IT: IFIP WG 8.6 International Working Conference, Hamburg, Germany, 22–24 September 2011; Springer: Berlin/Heidelberg, Germany, 2011.

- Al-Saedi, K.; Al-Emran, M.; Ramayah, T.; Abusham, E. Developing a general extended UTAUT model for M-payment adoption. Technol. Soc. 2020, 62, 101293.

- Jain, R.; Garg, N.; Khera, S.N. Adoption of AI-Enabled Tools in Social Development Organizations in India: An Extension of UTAUT Model. Front. Psychol. 2022, 13, 893691.

- Arbulú Ballesteros, M.A.; Acosta Enríquez, B.G.; Ramos Farroñán, E.V.; García Juárez, H.D.; Cruz Salinas, L.E.; Blas Sánchez, J.E.; Arbulú Castillo, J.C.; Licapa-Redolfo, G.S.; Farfán Chilicaus, G.C. The Sustainable Integration of AI in Higher Education: Analyzing ChatGPT Acceptance Factors Through an Extended UTAUT2 Framework in Peruvian Universities. Sustainability 2024, 16, 10707.

- Tang, X.; Yuan, Z.; Qu, S. Factors Influencing University Students’ Behavioural Intention to Use Generative Artificial Intelligence for Educational Purposes Based on a Revised UTAUT2 Model. J. Comput. Assist. Learn. 2025, 41, e13105.

- Xiong, Y.; Shi, Y.; Pu, Q.; Liu, N. More trust or more risk? User acceptance of artificial intelligence virtual assistant. Hum. Factors Ergon. Manuf. Serv. Ind. 2024, 34, 190–205.

- Nakhaie Ahooie, N. Enhancing Access to Medical Literature Through an LLM-Based Browser Extension. 2024. Available online: https://urn.fi/URN:NBN:fi:oulu-202406144582 (accessed on 5 May 2025).

- Tanantong, T.; Wongras, P. A UTAUT-based framework for analyzing users’ intention to adopt artificial intelligence in human resource recruitment: A case study of Thailand. Systems 2024, 12, 28.

- Su, J.; Wang, Y.; Liu, H.; Zhang, Z.; Wang, Z.; Li, Z. Investigating the factors influencing users’ adoption of artificial intelligence health assistants based on an extended UTAUT model. Sci. Rep. 2025, 15, 18215.

- Chai, C.S.; Wang, X.; Xu, C. An extended theory of planned behavior for the modelling of Chinese secondary school students’ intention to learn artificial intelligence. Mathematics 2020, 8, 2089.

- Li, X.; Jiang, M.Y.-c.; Jong, M.S.-y.; Zhang, X.; Chai, C.-s. Understanding medical students’ perceptions of and behavioral intentions toward learning artificial intelligence: A survey study. Int. J. Environ. Res. Public Health 2022, 19, 8733.

- Naseri, R.N.N.; Syahrivar, J.; Saari, I.S.; Yahya, W.K.; Muthusamy, G. The Development of Instruments to Measure Students’ Behavioural Intention Towards Adopting Artificial Intelligence (AI) Technologies in Educational Settings. In Proceedings of the 2024 5th International Conference on Artificial Intelligence and Data Sciences (AiDAS), Bangkok, Thailand, 3–4 September 2024.

- Salem, M.A.; Alsyed, W.H.; Elshaer, I.A. Before and Amid COVID-19 Pandemic, Self-Perception of Digital Skills in Saudi Arabia Higher Education: A Longitudinal Study. Int. J. Environ. Res. Public Health 2022, 19, 9886.

- Sobaih, A.E.E.; Elshaer, I.A.; Hasanein, A.M. Examining students’ acceptance and use of ChatGPT in Saudi Arabian higher education. Eur. J. Investig. Health Psychol. Educ. 2024, 14, 709–721.

- Portillo, J.; Garay, U.; Tejada, E.; Bilbao, N. Self-perception of the digital competence of educators during the COVID-19 pandemic: A cross-analysis of different educational stages. Sustainability 2020, 12, 10128.

- Alrefai, A.; ElBanna, R.; Al Ghaddaf, C.; Abu-AlSondos, I.A.; Chehaimi, E.M.; Alnajjar, I.A. The Role of IoT in Sustainable Digital Transformation: Applications and Challenges. In Proceedings of the 2024 2nd International Conference on Cyber Resilience (ICCR), Dubai, United Arab Emirates, 26–28 February 2024.

- Meinhold, R.; Wagner, C.; Dhar, B.K. Digital sustainability and eco-environmental sustainability: A review of emerging technologies, resource challenges, and policy implications. Sustain. Dev. 2025, 33, 2323–2338.

- Abou Hashish, E.A.; Alnajjar, H. Digital proficiency: Assessing knowledge, attitudes, and skills in digital transformation, health literacy, and artificial intelligence among university nursing students. BMC Med. Educ. 2024, 24, 508.

- King Faisal University. Preparatory Year Guide 2021–2022; King Faisal University: Al Hofuf, Saudi Arabia, 2021.

- Ministry of Education. Higher Education Statistics. 2025. Available online: https://moe.gov.sa/ar/knowledgecenter/dataandstats/edustatdata/Pages/HigherEduStat.aspx (accessed on 17 May 2025).

- Hill, R. What sample size is “enough” in internet survey research. Interpers. Comput. Technol. Electron. J. 21st Century 1998, 6, 1–12.

- Muthén, B.; Asparouhov, T. Recent methods for the study of measurement invariance with many groups: Alignment and random effects. Sociol. Methods Res. 2018, 47, 637–664.

- Hair Jr, J.F.; Hult, G.T.M.; Ringle, C.M.; Sarstedt, M.; Danks, N.P.; Ray, S. Partial Least Squares Structural Equation Modeling (PLS-SEM) Using R: A Workbook; Springer: Berlin/Heidelberg, Germany, 2021.

- Faul, F.; Erdfelder, E.; Buchner, A.; Lang, A.-G. Statistical power analyses using G*Power 3.1: Tests for correlation and regression analyses. Behav. Res. Methods 2009, 41, 1149–1160.

- Leguina, A. A Primer on Partial Least Squares Structural Equation Modeling (PLS-SEM); Taylor & Francis: Abingdon, UK, 2015.

- Chun, J.; Kim, J.; Kim, H.; Lee, G.; Cho, S.; Kim, C.; Chung, Y.; Heo, S. A Comparative Analysis of On-Device AI-Driven, Self-Regulated Learning and Traditional Pedagogy in University Health Sciences Education. Appl. Sci. 2025, 15, 1815.

- Duan, W.; McNeese, N.; Li, L. Gender Stereotypes toward Non-gendered Generative AI: The Role of Gendered Expertise and Gendered Linguistic Cues. Proc. ACM Hum.-Comput. Interact. 2025, 9, 1–35.

- Alshebami, A.S.; Seraj, A.H.A.; Elshaer, I.A.; Al Shammre, A.S.; Al Marri, S.H.; Lutfi, A.; Salem, M.A.; Zaher, A.M.N. Improving Social Performance through Innovative Small Green Businesses: Knowledge Sharing and Green Entrepreneurial Intention as Antecedents. Sustainability 2023, 15, 8232.

- Hair, J.; Alamer, A. Partial Least Squares Structural Equation Modeling (PLS-SEM) in second language and education research: Guidelines using an applied example. Res. Methods Appl. Linguist. 2022, 1, 100027.

- Becker, J.-M.; Cheah, J.-H.; Gholamzade, R.; Ringle, C.M.; Sarstedt, M. PLS-SEM’s most wanted guidance. Int. J. Contemp. Hosp. Manag. 2022, 35, 321–346.

- Dowdy, S.; Wearden, S.; Chilko, D. Statistics for Research; John Wiley & Sons: Hoboken, NJ, USA, 2011.

- Henseler, J.; Ringle, C.M.; Sarstedt, M. A new criterion for assessing discriminant validity in variance-based structural equation modeling. J. Acad. Mark. Sci. 2015, 43, 115–135.

- World Medical Association. Declaration of Helsinki: World Medical Association. Ethical Princ. Med. Res. Hum. Beings 2004, 64, 2013.

- Alabbas, A.; Alomar, K. Tayseer: A novel ai-powered arabic chatbot framework for technical and vocational student helpdesk services and enhancing student interactions. Appl. Sci. 2024, 14, 2547. [Google Scholar] [CrossRef]

- Zhang, Y. A lesson study on a MOOC-based and AI-powered flipped teaching and assessment of EFL writing model: Teachers’ and students’ growth. Int. J. Lesson Learn. Stud. 2024, 13, 28–40. [Google Scholar] [CrossRef]

- Hassan, B.; Raza, M.O.; Siddiqi, Y.; Wasiq, M.F.; Siddiqui, R.A. CONNECT: An AI-Powered Solution for Student Authentication and Engagement in Cross-Cultural Digital Learning Environments. Computers 2025, 14, 77. [Google Scholar] [CrossRef]

- Wen, Y.; Chiu, M.; Guo, X.; Wang, Z. AI-powered vocabulary learning for lower primary school students. Br. J. Educ. Technol. 2025, 56, 734–754. [Google Scholar] [CrossRef]

- Aramburuzabala, P.; Culcasi, I.; Cerrillo, R. Service-learning and digital empowerment: The potential for the digital education transition in higher education. Sustainability 2024, 16, 2448. [Google Scholar] [CrossRef]

- Komarova, S. The Adoption of Artificial Intelligence and Machine Learning Technologies in Enterprises. 2024. Available online: https://urn.fi/URN:NBN:fi:amk-2024121134719 (accessed on 17 May 2025).

- Chergui, A.; Kafi, I.; Elkhalili, M. Human Face Expression Recognition Using Deep Learning Model (YOLO-V9). Master’s Thesis, University Kasdi Merbah Ouargla, Ouargla, Algeria, 2024. [Google Scholar]

- Tawil, S.; Miao, F. Steering the Digital Transformation of Education: UNESCO’s Human-Centered Approach. Front. Digit. Educ. 2024, 1, 51–58.

- Khan, S.; Mazhar, T.; Shahzad, T.; Khan, M.A.; Rehman, A.U.; Saeed, M.M.; Hamam, H. Harnessing AI for sustainable higher education: Ethical considerations, operational efficiency, and future directions. Discov. Sustain. 2025, 6, 23.

- Strielkowski, W.; Grebennikova, V.; Lisovskiy, A.; Rakhimova, G.; Vasileva, T. AI-driven adaptive learning for sustainable educational transformation. Sustain. Dev. 2025, 33, 1921–1947.

- Anderson, J.E.; Nguyen, C.A.; Moreira, G. Generative AI-driven personalization of the Community of Inquiry model: Enhancing individualized learning experiences in digital classrooms. Int. J. Inf. Learn. Technol. 2025, 42, 296–310.

- Edwards, J.R.; Cable, D.M.; Williamson, I.O.; Lambert, L.S.; Shipp, A.J. The phenomenology of fit: Linking the person and environment to the subjective experience of person-environment fit. J. Appl. Psychol. 2006, 91, 802–827.

- Smith, D.C.; Park, C.W. The effects of brand extensions on market share and advertising efficiency. J. Mark. Res. 1992, 29, 296–313.

- Cataldo, A.; Bravo-Adasme, N.; Riquelme, J.; Vásquez, A.; Rojas, S.; Arias-Oliva, M. Multidimensional Poverty as a Determinant of Techno-Distress in Online Education: Evidence from the Post-Pandemic Era. Int. J. Environ. Res. Public Health 2025, 22, 986.

- Núñez-Regueiro, F.; Juhel, J.; Wang, M.-T. Does need satisfaction reflect positive needs-supplies fit or misfit? A new look at autonomy-supportive contexts using cubic response surface analysis. Soc. Psychol. Educ. 2025, 28, 43.

- Hermundsdottir, F.; Aspelund, A. Sustainability innovations and firm competitiveness: A review. J. Clean. Prod. 2021, 280, 124715.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).