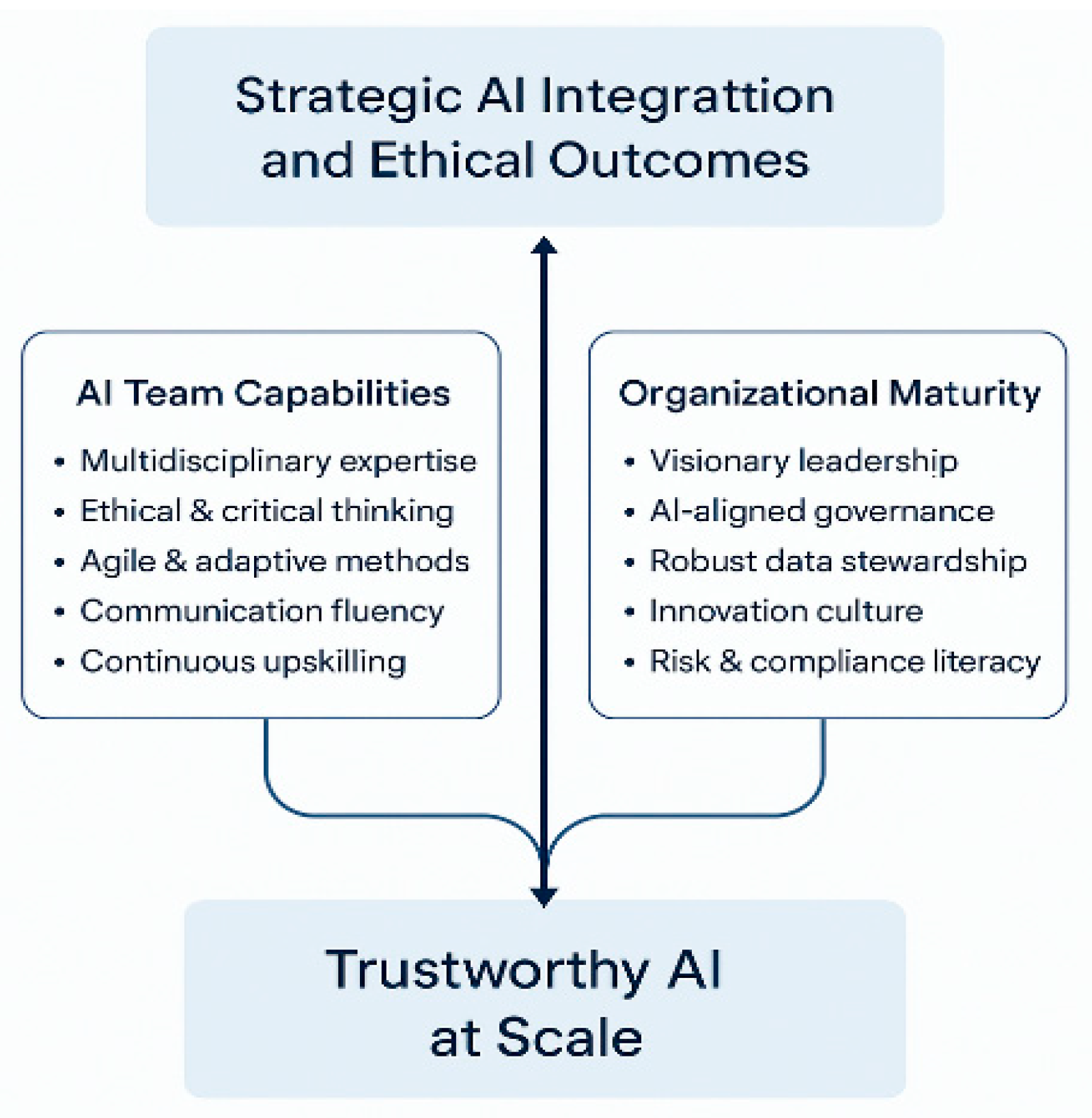

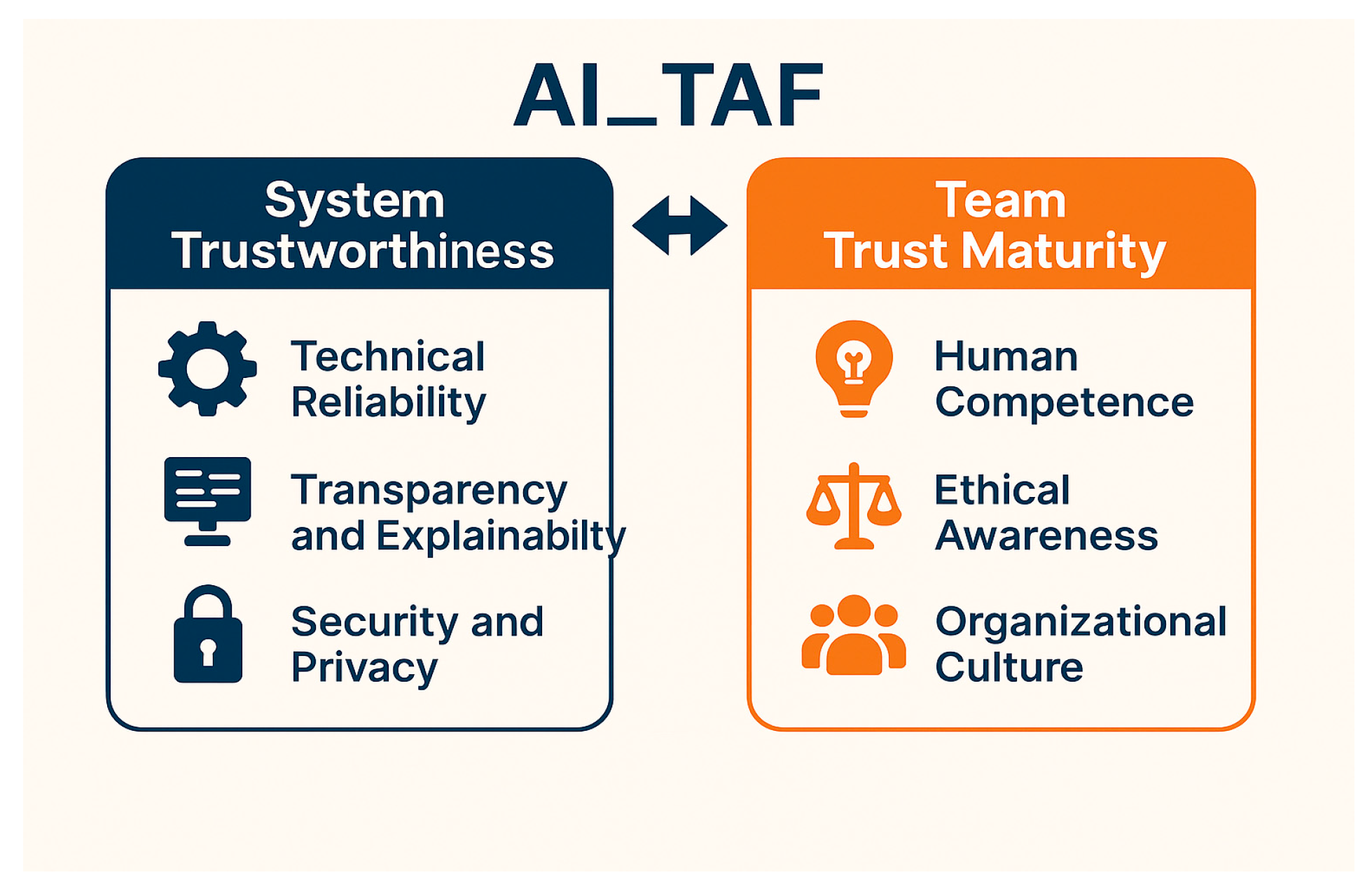

Unlike existing frameworks that often treat trustworthiness as a property of the system or data, AI_TAF reconceptualises trust as a joint property of the AI system and its human stewards. By systematically quantifying the Trust Maturity of AI teams (via tAIP) and incorporating these scores into the risk model, AI_TAF not only acknowledges but also operationalises the human dimension of trust. This goes beyond “human oversight” checklists by embedding team readiness, ethics, and cross-functional collaboration into the risk equation, making the framework genuinely human-centric in its design and application.

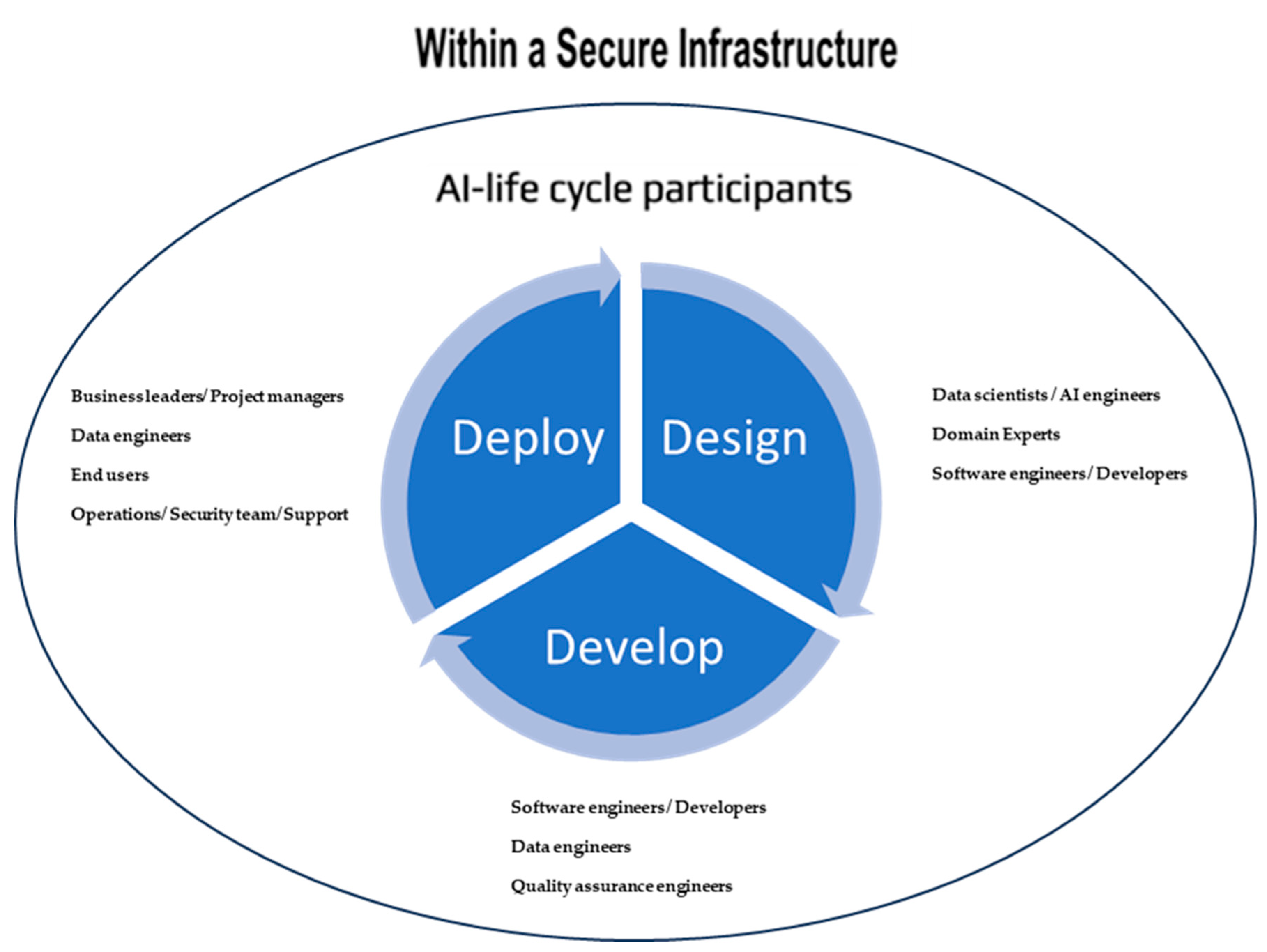

AI_TAF approaches trustworthiness as a dynamic attribute, not something achieved at launch but maintained through continuous assessment. AI_TAF can be applied to each stage of the AI lifecycle. Each assessment incorporates indicators for these attributes (stage of the lifecycle, AI assets involved, AI team “owners” of each AI asset, and trustworthiness dimensions relevant to the stage), linking them to specific threats and mitigations. Hence, the AI_TAF will be applied iteratively to evaluate threats and estimate risks for all assets of the AI system throughout its entire lifecycle, considering sector-specific characteristics, the intended use of the AI system, the trustworthiness of the teams involved, and (optionally) the sophistication level of the potential AI adversaries.

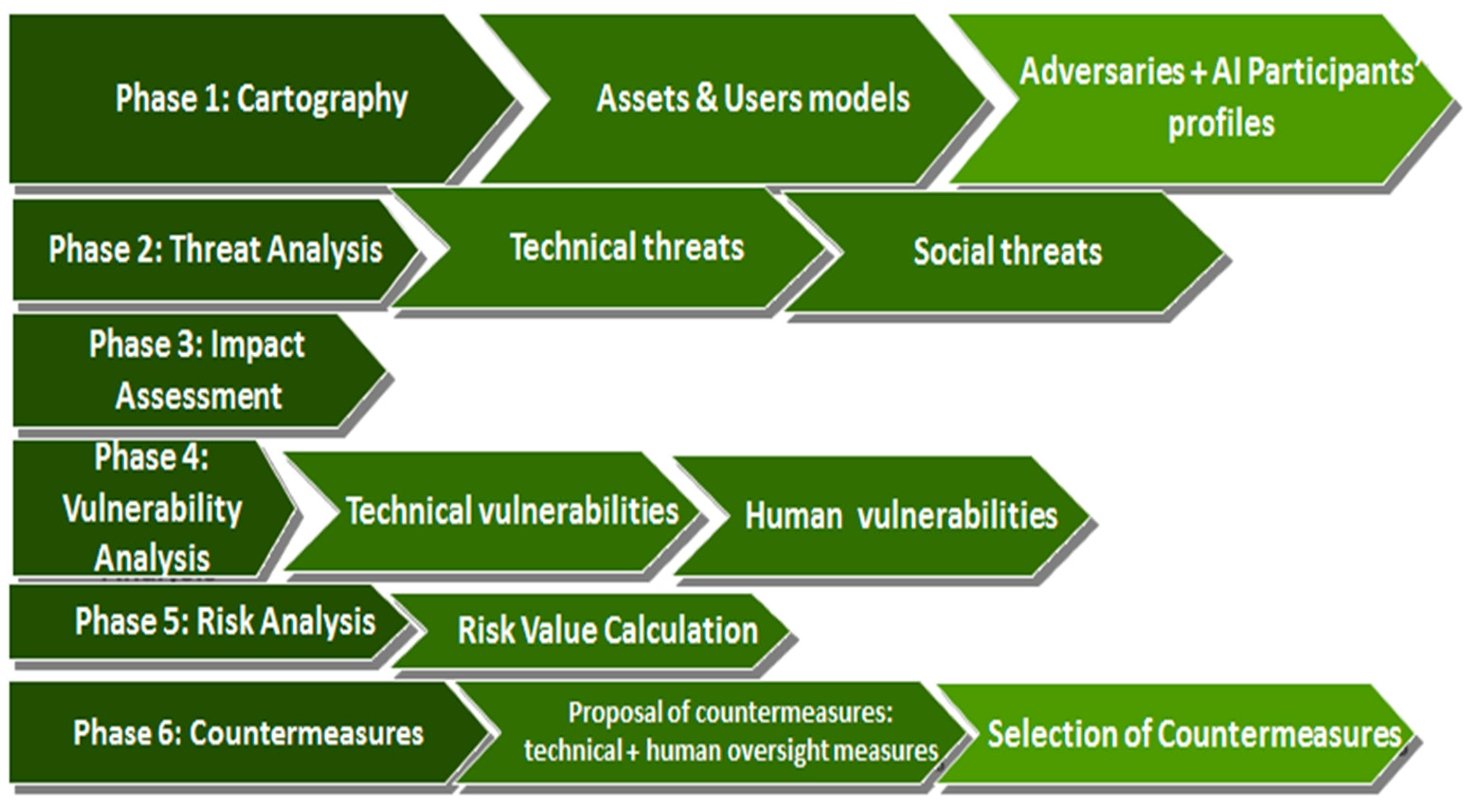

4.2. AI_TAF: A Six-Phase Approach to AI Risk Management

The AI_TAF presents a well-organised six-phase framework (based on ISO27005) for managing AI-related risks (Figure 6); it is iterative, supporting the assessment of AI systems at each stage of their lifecycle.

4.2.1. Phase 1: Cartography-Initialisation

This phase begins by establishing the scope of the assessment and setting the initialisation attributes. This involves identifying the AI system and the lifecycle stage under assessment, clarifying the system’s intended purpose, mapping out the AI team involved in that stage, and cataloguing the AI system’s assets to be assessed together with the controls that have already been implemented to ensure its trustworthiness.

The criticality of an AI system, as proposed in this paper (Table 4), is determined by several key factors that indicate the overall importance and regulatory sensitivity of the system, guiding the depth and focus of the trustworthiness and risk assessment.

The criticality level of an AI system imposes the same criticality level on all its assets under assessment.

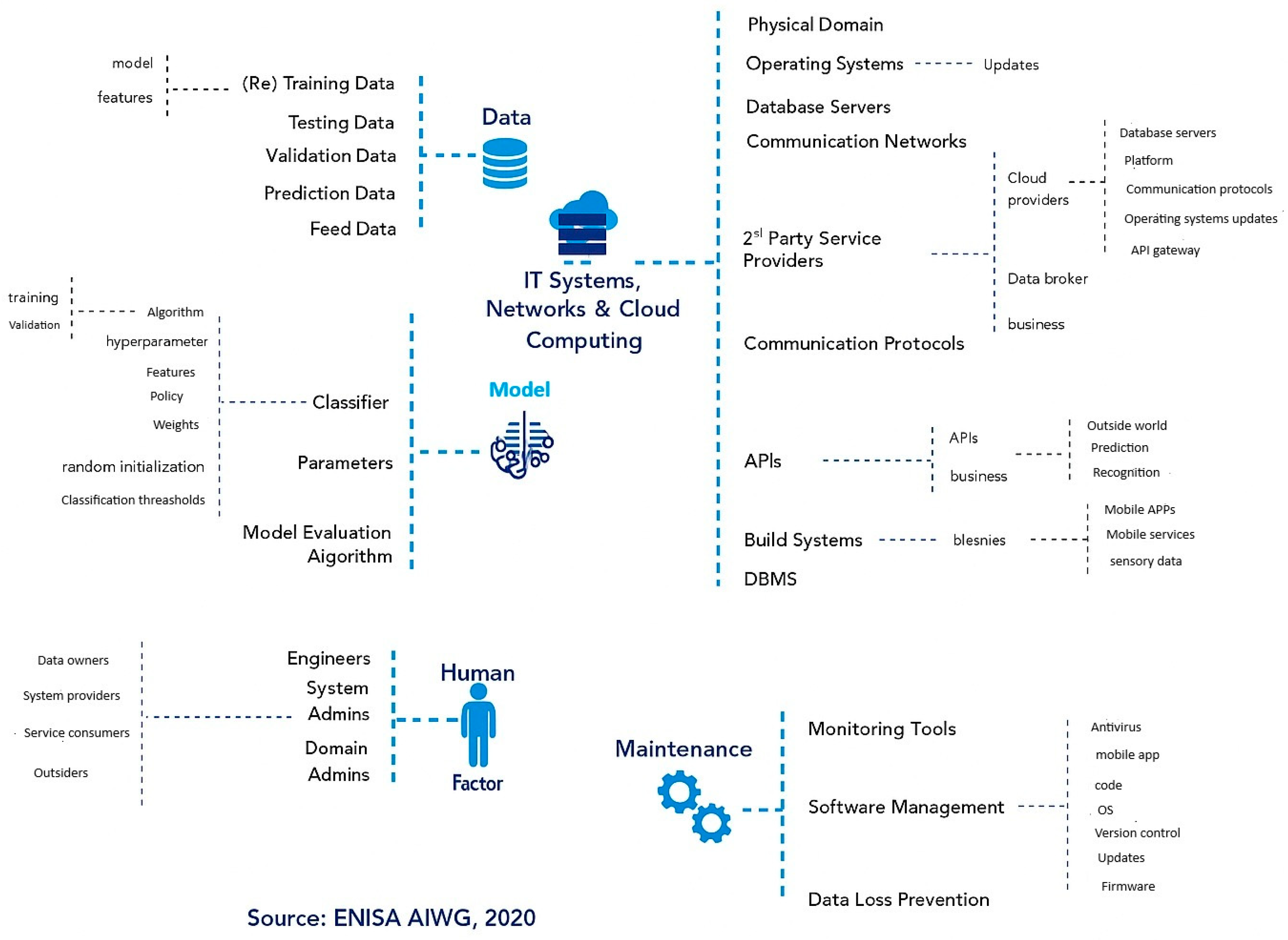

To support this process, an asset model is created, offering a structured representation of the assets of the AI system in this phase of the lifecycle. This model (e.g., Figure 7) highlights the relationships and dependencies among assets, such as training models, algorithms, datasets, workflows, and human actors.

By visualising these interconnections, the asset model not only enhances understanding of the system’s structure but also helps pinpoint which elements are most critical to evaluate. Ultimately, this facilitates a more focused and effective prioritisation of trustworthiness concerns across the AI lifecycle.

All implemented controls (technical, procedural, and business) to protect the AI assets under assessment against AI threats must be listed.

A user model will be created, outlining the AI teams involved in this phase of the lifecycle, which are the owners of the AI assets under assessment.

The assessor will conduct workshops to evaluate the maturity level of all owners towards trustworthiness and provide tAIP scores. Optionally (depending on the available threat intelligence capabilities), the sophistication scores of potential adversaries (tA) are also provided.

Phase 1 Output:

All attributes for the initialisation of AI_TAF will be provided, namely: the criticality of the AI system; the lifecycle stage considered in this assessment; the AI asset model; the implemented controls; the AI user model; the maturity scores of the teams (tAIP); and, optionally, the sophistication scores of the adversaries (tA).
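As an illustration, the Phase 1 output can be held in a single record that later phases read from. The following is a minimal sketch, not part of AI_TAF itself: the class name `Initialisation`, its field names, the five-label scale, and the sample values are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Optional

LEVELS = ("VL", "L", "M", "H", "VH")  # assumed five-level ordinal scale


@dataclass
class Initialisation:
    """Phase 1 output: the attributes that initialise one assessment."""
    criticality: str                   # criticality of the AI system
    lifecycle_stage: str               # stage covered by this assessment
    asset_model: dict[str, list[str]]  # asset -> assets it depends on
    implemented_controls: list[str]
    user_model: dict[str, str]         # asset -> owning AI team
    taip: dict[str, str]               # team -> maturity level (tAIP)
    ta: Optional[str] = None           # adversary sophistication (tA), optional

    def __post_init__(self) -> None:
        levels = [self.criticality, *self.taip.values()]
        if self.ta is not None:
            levels.append(self.ta)
        for level in levels:
            if level not in LEVELS:
                raise ValueError(f"unknown level: {level!r}")
        # Every asset needs an owner whose maturity has been scored.
        for asset in self.asset_model:
            team = self.user_model.get(asset)
            if team is None or team not in self.taip:
                raise ValueError(f"asset {asset!r} has no scored owner")

    def asset_criticality(self, asset: str) -> str:
        """The system's criticality level is imposed on each of its assets."""
        if asset not in self.asset_model:
            raise KeyError(asset)
        return self.criticality


# Illustrative values only.
init = Initialisation(
    criticality="L",
    lifecycle_stage="operation",
    asset_model={"chatbot": []},
    implemented_controls=["data validation", "API security"],
    user_model={"chatbot": "administrator"},
    taip={"administrator": "H"},
    ta="VH",
)
print(init.asset_criticality("chatbot"))
```

Validating the record at construction time is a design choice of this sketch; it surfaces an asset without a scored owner before any threat is assessed.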

4.2.2. Phase 2: Threat Assessment

Threat assessment involves a thorough evaluation of potential threats to all AI assets in this phase of the AI lifecycle that could compromise system trustworthiness. This includes technical threats such as malware and data poisoning, as well as social threats like phishing and social engineering, which may vary depending on the AI maturity level of the organisation’s teams. The assessment identifies threats related to data quality, AI model performance, and operational deployment issues. The OWASP AI Exchange [21] and ENISA can be used to identify such threats.

During this phase, we also estimate the likelihood of each AI threat occurring for each AI asset under assessment (Table 5), based on occurrences recorded by administrators or in logs. Factors influencing threat occurrence include:

Historical Data: Past occurrences of similar threats help forecast future risks;

Environmental Factors: Conditions in the sector or location where the AI system operates, such as natural disasters or political/economic stability;

Stability and Trends: Geopolitical events (e.g., economic crises, wars, and pandemics) and technological advancements (e.g., AI-driven attack systems) may signal an increase in threats.

The proposed scale in Table 5 can be adjusted based on the criticality of the AI system and the organisation’s “risk appetite”. For example, for AI systems with a Very High criticality level, the threat level may be categorised as Very High if the threat has occurred twice within the last 10 years.

Phase 2 Output:

All technical and social threats have been identified, and the likelihood of each threat occurring to the AI components of the system under evaluation has been assessed.

4.2.3. Phase 3: Impact Assessment

The goal is to assess the potential impact of each threat on the trustworthiness dimensions relevant to the asset and the lifecycle phase under assessment. This phase involves a thorough evaluation of how the identified threats could affect each of these dimensions. By referencing repositories like OWASP and ENISA, we can pinpoint the overlapping consequences of threats. For instance, threats like data loss and model poisoning can negatively impact multiple dimensions of trustworthiness, such as accuracy, fairness, and cybersecurity. Another dimension explicitly considered in AI_TAF is explainability. Threats that affect transparency (such as model opacity, biased training logic, or black-box decision-making) can compromise the ability of human actors to interpret system behaviour. This is particularly relevant in high-stakes environments where decisions must be justified or, at times, overridden. As part of the impact evaluation, explainability is assessed as a distinct dimension whenever it is applicable to the AI asset under evaluation.

Table 6 will be adjusted to reflect the specific dimensions of trustworthiness pertinent to the system being assessed. The impact on each dimension may differ, and an average will be calculated. Additionally, the impact of each threat can be further evaluated based on the environment in which the AI system operates. In this case, the business, technological, legal, financial, and other consequences that organisations may encounter if the affected trustworthiness dimensions are compromised need to be evaluated.
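The averaging step can be illustrated with a short sketch. It assumes a five-level scale encoded as 1 to 5 and rounds the mean to the nearest level, with ties rounded up; neither the encoding nor the rounding rule is specified in the text, and the function name `average_impact` and the sample values are hypothetical.

```python
from statistics import mean

SCALE = {"VL": 1, "L": 2, "M": 3, "H": 4, "VH": 5}  # assumed 1-5 encoding
LABELS = {value: label for label, value in SCALE.items()}


def average_impact(per_dimension: dict[str, str]) -> tuple[float, str]:
    """Average one threat's impact over the relevant trustworthiness dimensions.

    Returns the raw mean and the nearest scale label. Ties round up, an
    assumption made here to stay on the cautious side.
    """
    if not per_dimension:
        raise ValueError("at least one trustworthiness dimension is required")
    raw = mean(SCALE[level] for level in per_dimension.values())
    nearest = int(raw + 0.5)  # round half up; raw is always within 1..5
    return raw, LABELS[nearest]


# Illustrative values only: impact of one threat on two dimensions.
raw, label = average_impact({"robustness": "H", "fairness_bias": "M"})
print(raw, label)
```

Returning the raw mean alongside the label keeps the per-dimension information visible, since averaging ordinal levels is itself a simplification.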

Output of Phase 3:

The consequences of each threat in relation to the trustworthiness dimensions will be evaluated. These assessments will include numerical scores, and, where necessary, qualitative reports will be provided to offer detailed insights into the assessment findings, such as the nature of the threats. Business impact assessment reports reveal the various consequences (e.g., legal, business, financial, technological, societal, and reputation) that the affected trustworthiness dimensions bring to the organisation.

4.2.4. Phase 4: Vulnerability Assessment

This phase focuses on identifying potential weaknesses in the AI system, including technical vulnerabilities (such as software flaws, inadequate data governance, and network weaknesses) that could be exploited by attackers. In this phase, AI_TAF estimates the vulnerability level of each AI asset to each of its threats based on the missing controls; the proposed scale is based on the percentage of controls in place versus the total available controls (Table 7).

Available AI controls can be found in various knowledge databases (DB), e.g., OWASP AI [45], ENISA [42], and the NIST AI Risk Management Framework (AI RMF 1.0) [2], or by using AI assessment tools (see Table 1). These databases offer catalogues of AI-related threats, vulnerabilities, and controls that assessors can use as references. Technical vulnerabilities can also be identified using AI assessment tools, penetration testing, vulnerability scans, and social engineering evaluations.

The vulnerability level can be adjusted according to the criticality of the AI system and the organisation’s “risk appetite”. For example, for AI systems with a Very High criticality level, the vulnerability level could be Very High even when a significant majority of the controls are implemented, if that share is below 80–90%.
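A minimal sketch of the coverage-based estimate follows. The cut-offs in `DEFAULT_BANDS` and `STRICT_BANDS` are placeholders chosen for the example (the actual bands are those of Table 7), and the control names in the available set are hypothetical.

```python
# Hypothetical cut-offs: (minimum share of controls in place, level).
# Table 7 defines the real bands; these numbers are placeholders.
DEFAULT_BANDS = [(0.8, "VL"), (0.6, "L"), (0.4, "M"), (0.2, "H"), (0.0, "VH")]
# Stricter placeholder bands for a system with Very High criticality.
STRICT_BANDS = [(0.98, "VL"), (0.95, "L"), (0.92, "M"), (0.9, "H"), (0.0, "VH")]


def control_coverage(implemented: set[str], available: set[str]) -> float:
    """Share of the available controls for a threat that are in place."""
    if not available:
        raise ValueError("no available controls listed for this threat")
    return len(implemented & available) / len(available)


def vulnerability_level(coverage: float, bands=DEFAULT_BANDS) -> str:
    """Map coverage to a vulnerability level: fewer controls, higher level."""
    if not 0.0 <= coverage <= 1.0:
        raise ValueError("coverage must be between 0 and 1")
    for minimum, level in bands:  # bands run from high to low coverage
        if coverage >= minimum:
            return level
    raise ValueError("bands must include a 0.0 lower bound")


# Illustrative values only: controls available against one threat.
available = {"data validation", "data policy", "anomaly detection", "access control"}
implemented = {"data validation", "data policy", "API security", "encryption"}
coverage = control_coverage(implemented, available)
print(coverage, vulnerability_level(coverage))
print(vulnerability_level(0.8, STRICT_BANDS))
```

Passing the bands as a parameter reflects the adjustment described above: the same coverage can map to a higher vulnerability level for a more critical system or a lower risk appetite.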

Output of Phase 4:

Vulnerability levels are recorded for each AI asset and each of its AI threats in the lifecycle stage under assessment.

4.2.5. Phase 5: Risk Assessment

Risk assessment in AI_TAF synthesises the findings of the previous phases. It involves assigning a risk level (R) to each AI asset and threat under assessment by combining the corresponding threat (T), vulnerability (V), and impact (I) levels, using the scales in the following table (Table 8):

It is important to note that the resulting risk value is a composite ordinal index used for prioritisation and relative severity comparison. The score does not imply a probabilistic or metric interpretation (e.g., “Risk = 80” does not imply 80% likelihood). Rather, it provides a structured way to order AI assets by their estimated trustworthiness threat profile based on lifecycle phase and contextual attributes.

Although different combinations of threat, impact, and vulnerability can produce the same composite score, the AI_TAF framework retains the value of each component during reporting to preserve transparency. For example, an asset with (T = 5, V = 4, I = 1) reflects a high likelihood but minimal consequence, whereas (T = 1, V = 4, I = 5) reflects a high consequence but low likelihood. Risk owners are advised to interpret the results in conjunction with the individual component levels before mitigation.
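The point about identical composite scores can be made concrete with a small sketch. It assumes, purely for illustration, that the composite index is the product of the three levels; the class name `RiskEntry`, the function `prioritise`, and the asset and threat labels are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RiskEntry:
    asset: str
    threat: str
    t: int  # threat level, 1-5
    v: int  # vulnerability level, 1-5
    i: int  # impact level, 1-5

    @property
    def composite(self) -> int:
        """ASSUMPTION: multiplicative index, used only for relative ordering."""
        return self.t * self.v * self.i


def prioritise(entries):
    """Order entries by composite index, highest first."""
    return sorted(entries, key=lambda entry: entry.composite, reverse=True)


entries = [
    RiskEntry("asset A", "threat X", t=5, v=4, i=1),  # likely, minor consequence
    RiskEntry("asset B", "threat Y", t=1, v=4, i=5),  # unlikely, severe consequence
    RiskEntry("asset C", "threat Z", t=2, v=2, i=2),
]
for entry in prioritise(entries):
    # Report the components next to the index so equal scores stay distinguishable.
    print(entry.asset, entry.composite, (entry.t, entry.v, entry.i))
```

Under this assumption the first two entries receive the same index, which is why the report keeps (T, V, I) alongside the score rather than the score alone.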

This Risk level (R) will be refined if the estimated AI maturity level (tAIP) of the asset owner team is considered. The refined risk level (fR) is derived by adjusting the initial risk value (R), which is calculated for each AI asset in relation to each identified threat.

If the AI maturity level (tAIP) of the team responsible for a specific AI asset has been determined, fR can be recalculated as follows:

[where 1 = one level of the scale, for example, from very high to high or from low to medium]

The use of a “−1 level” adjustment to the risk index based on the AI team’s tAIP score is implemented as a heuristic correction. We acknowledge that Likert scales are ordinal and that the distances between levels (e.g., from ‘high’ to ‘moderate’) are not guaranteed to be equidistant. Therefore, subtraction should not be interpreted as a mathematical operation but as a proportional reduction in relative risk—sufficient for comparative prioritisation rather than absolute scoring.

Furthermore, if the sophistication level of the potential adversary (tA) is also determined, the proposed calculation can be further refined by incorporating this factor into the final risk level (FR) as follows:

[where 1 = one level of the scale, for example, from very high to high or from low to medium].
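The one-level adjustment can be sketched as a clamped shift along the ordinal scale. This sketch deliberately covers only the shift itself: the conditions on tAIP and tA that decide whether and in which direction a shift applies are those of the framework’s equations and are not encoded here. The five-label scale and the name `shift_level` are assumptions.

```python
LEVELS = ["VL", "L", "M", "H", "VH"]  # assumed five-level ordinal scale


def shift_level(level: str, steps: int) -> str:
    """Move `steps` positions along the scale, clamped at both ends.

    This is a move between ordered labels, not arithmetic on scores.
    """
    position = LEVELS.index(level) + steps
    return LEVELS[max(0, min(position, len(LEVELS) - 1))]


print(shift_level("VH", -1))  # one level down: very high to high
print(shift_level("L", +1))   # one level up: low to medium
print(shift_level("VL", -1))  # already at the bottom of the scale
```

Working on label positions rather than numeric scores matches the heuristic reading given above: the adjustment moves a risk between ordered categories and makes no claim about distances between them.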

4.2.6. Phase 6: Risk Management

The final phase addresses how to respond to the identified risks. AI_TAF offers a layered approach:

Social controls to enhance the maturity level of AI teams are proposed, selected from Table 2 according to the team’s maturity level.

Technical controls (complementing those already implemented) are selected from the existing OWASP and ENISA databases or from those proposed by the AI assessment tools in Table 1.

Additionally, the framework supports dynamic feedback loops, allowing teams to revisit earlier phases as systems evolve and new threats emerge. AI_TAF encourages the documentation of lessons learned and integrates them into organisational memory, enhancing maturity over time.

Phase 6 Output:

A documented overview of the proposed controls.

4.2.7. Example of Applying AI_TAF

AI system under assessment: a simple AI-based chatbot designed to provide customer support for an e-commerce platform hosted by a small e-shop. This chatbot responds to customer queries, processes orders, and provides troubleshooting instructions.

Phase 1: Initialisation: The e-commerce platform is hosted and operated by a small shop and is not a critical AI system; according to Table 4, the criticality level is low (L). There is no need for an asset model since there is only one AI asset, i.e., the chatbot.

The trustworthiness dimensions that are relevant and interesting to the owners of this AI system are:

D1. Robustness—Ensuring that the chatbot functions correctly under different conditions.

D2. Fairness & Bias—Avoiding discrimination in responses.

The assessment takes place during the operation phase of the AI system lifecycle. The chatbot is operated by a single administrator (the owner of the asset), whose trustworthiness maturity level has been reported as tAIP = H. Criminal investigations into previous incidents found that the identified attackers had a sophistication level of tA = VH.

The four controls that have already been implemented are data validation procedures, data policy in place, API security, and encryption of sensitive data.

The risk appetite decided by the management of the shop is as follows: they will fully treat only those risks with level FR = VH; they will postpone the treatment of risks with level FR = H until next year; and they will absorb all other risks.
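This risk appetite amounts to a simple decision rule over the final risk level, sketched below. The names `Treatment`, `APPETITE`, and `decide`, and the sample FR values per threat, are hypothetical and do not reproduce the results of the assessment.

```python
from enum import Enum

LEVELS = {"VL", "L", "M", "H", "VH"}  # assumed five-level ordinal scale


class Treatment(Enum):
    TREAT = "treat fully"
    POSTPONE = "postpone until next year"
    ABSORB = "absorb"


# The shop's stated risk appetite; any level not listed here is absorbed.
APPETITE = {"VH": Treatment.TREAT, "H": Treatment.POSTPONE}


def decide(final_risk: str) -> Treatment:
    """Map a final risk level (FR) to the management's treatment decision."""
    if final_risk not in LEVELS:
        raise ValueError(f"unknown risk level: {final_risk!r}")
    return APPETITE.get(final_risk, Treatment.ABSORB)


# Hypothetical FR values, for illustration only.
sample = {"data poisoning": "VH", "model extraction": "H", "model inversion": "M"}
for threat, fr in sample.items():
    print(threat, fr, decide(fr).value)
```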

Phase 2: Threat Assessment: Using the OWASP AI Exchange [21], the threats related to robustness and to fairness and bias, together with the controls appropriate to mitigate them, were identified as follows (Table 9):

The four controls that have already been implemented (as reported in the initialisation phase) are shown in bold: data validation, data policy in place, and API security, together with encryption of sensitive data, which address the threats of data poisoning, model extraction, and model inversion. Since the AI system is not critical, the risk assessor used Table 5 and provided the following threat assessment for the threats to the chatbot under assessment (Table 10):

Phase 3: Impact Assessment: The threats relevant to the chatbot impact the two trustworthiness dimensions (D1 and D2) as follows (Table 11):

Phase 4: Vulnerability Assessment: The four controls that have already been implemented (as reported in the initialisation phase) partially mitigate data poisoning, model extraction, and model inversion threats.

Based on the OWASP controls identified in Phase 2, the assessor used Table 7 and reported the vulnerability levels of the chatbot to the identified threats as follows (Table 12):

Phase 5: Risk Assessment: Using Table 8 and the conditional equations for the refined (fR) and final (FR) risk levels, we conclude (Table 13):

The table above shows how the risk calculation depends on whether the human element is taken into account.