A Systematic Literature Review on Load-Balancing Techniques in Fog Computing: Architectures, Strategies, and Emerging Trends

Abstract

1. Introduction

- To analyze and categorize fog computing architectures and their implications for load balancing.

- To classify load-balancing algorithms and compare their strengths and weaknesses.

- To identify the most frequently used performance metrics and evaluation tools.

- To highlight emerging trends, ongoing challenges, and research gaps.

2. Related Works

- A detailed classification of fog computing architectures and their scalability, security, and application domains.

- A comprehensive taxonomy of load-balancing algorithms, including heuristic, meta-heuristic, ML/RL, and hybrid strategies.

- An in-depth evaluation of performance metrics, workload types, and assessment tools.

- A synthesis of current challenges, emerging trends, and research opportunities, including AI-driven, blockchain-based, and privacy-aware approaches.

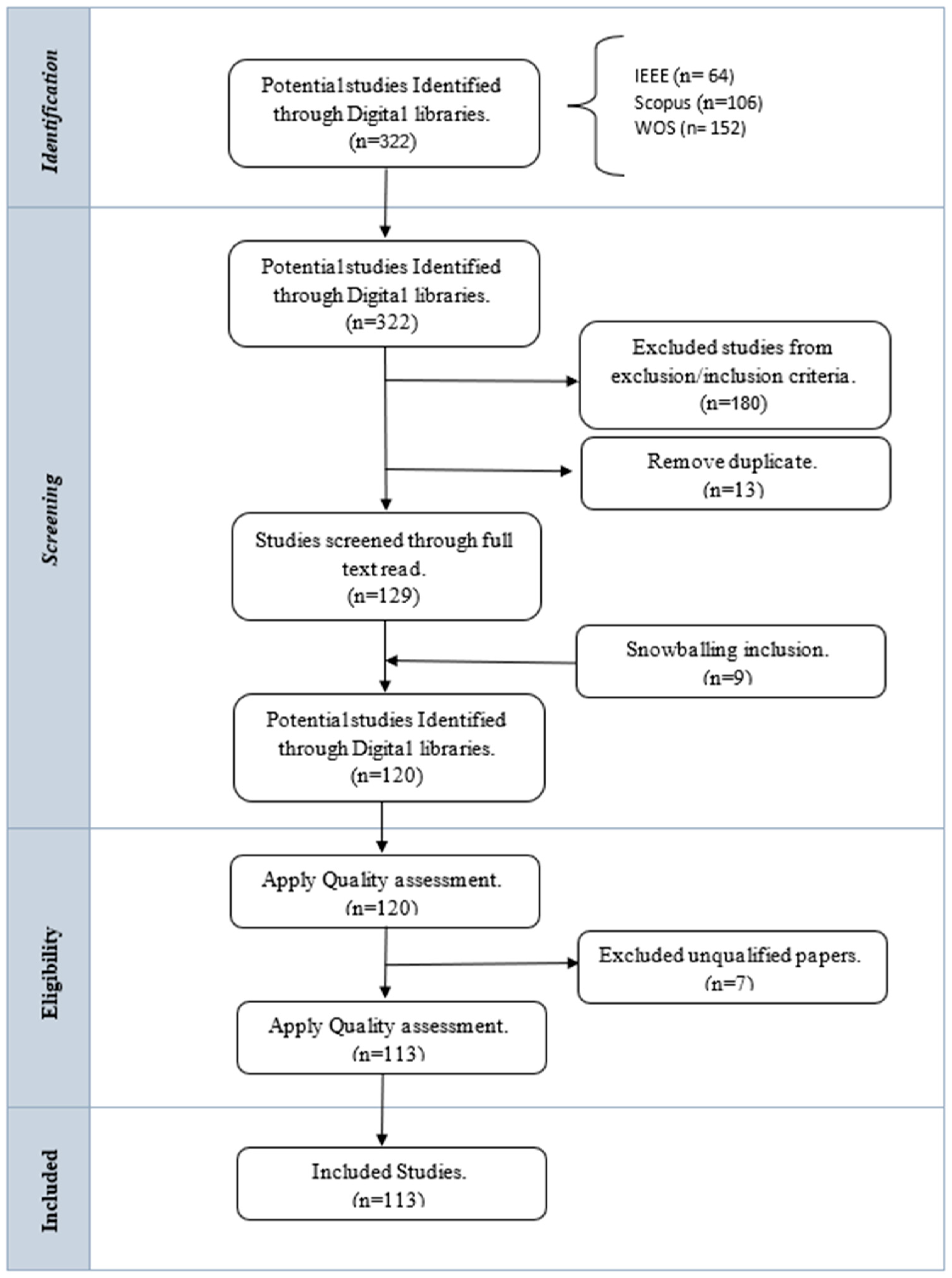

3. Methodology

3.1. Research Questions

- RQ1: What are the various architectures of fog computing, and how do they differ in terms of functionality, scalability, and application?

- RQ2: What types of load-balancing strategies or algorithms are applied in fog computing environments?

- RQ2.1: What are the advantages and disadvantages of these strategies?

- RQ3: What performance metrics are most commonly used to evaluate load-balancing algorithms in fog computing?

- RQ4: What workload types are frequently used to evaluate load balancing in fog computing (e.g., static vs. dynamic)?

- RQ5: What evaluation tools are commonly employed for assessing load-balancing algorithms in fog computing?

- RQ6: What methods are used to assess load-balancing effectiveness in fog computing environments?

- RQ7: What are the key challenges, emerging trends, and unresolved issues related to load balancing in fog computing?

3.2. Study Selection Criteria

3.3. Search Strategy Design

3.4. Quality Assessment

3.5. Data Extraction

- Architecture type, layers, and node configurations (RQ1);

- Load-balancing strategy, classification, and brief description (RQ2);

- Advantages and disadvantages of algorithms (RQ2.1);

- Performance metrics and evaluation tools (RQ3, RQ5);

- Workload types (RQ4);

- Assessment methods (RQ6);

- Challenges, trends, and open issues (RQ7).
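The extraction fields listed above can be stored as one record per primary study. The sketch below is a hypothetical Python schema, not the extraction form used in this review. The class name `ExtractionRecord`, its field names, and the `rq_coverage` helper are illustrative assumptions that mirror the RQ mapping in the list.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ExtractionRecord:
    """Hypothetical per-study extraction record; fields mirror the RQ mapping."""
    study_id: int
    architecture_layers: Optional[str] = None   # RQ1: layer count/description
    node_configuration: Optional[str] = None    # RQ1: nodes and clusters
    strategy_category: Optional[str] = None     # RQ2: e.g., "Heuristic Approaches"
    advantages: List[str] = field(default_factory=list)     # RQ2.1
    disadvantages: List[str] = field(default_factory=list)  # RQ2.1
    metrics: List[str] = field(default_factory=list)        # RQ3
    workload_type: Optional[str] = None         # RQ4: "Dynamic", "Static", ...
    tools: List[str] = field(default_factory=list)          # RQ5
    assessment_method: Optional[str] = None     # RQ6
    open_issues: List[str] = field(default_factory=list)    # RQ7

    def rq_coverage(self) -> List[str]:
        """Return the RQs for which this record holds at least one non-empty value."""
        checks = {
            "RQ1": self.architecture_layers or self.node_configuration,
            "RQ2": self.strategy_category,
            "RQ2.1": self.advantages or self.disadvantages,
            "RQ3": self.metrics,
            "RQ4": self.workload_type,
            "RQ5": self.tools,
            "RQ6": self.assessment_method,
            "RQ7": self.open_issues,
        }
        return [rq for rq, value in checks.items() if value]
```

For example, a partially filled record for study [10], populated only with its architecture from Appendix A and its dynamic workload type, reports coverage of RQ1 and RQ4. This makes gaps in the extraction easy to spot before synthesis.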

3.6. Data Synthesis

3.7. Threats to Validity

- Selection bias: To mitigate this, we used multiple databases and rigorous inclusion/exclusion criteria.

- Publication bias: Only Q1/Q2 journal articles were selected, which may exclude relevant gray literature.

- Reviewer bias: Dual review and majority voting were employed to reduce subjectivity during selection and extraction.

- Tool limitations: Some studies lacked transparency in tool usage, making cross-comparison more difficult.

4. Results and Discussion

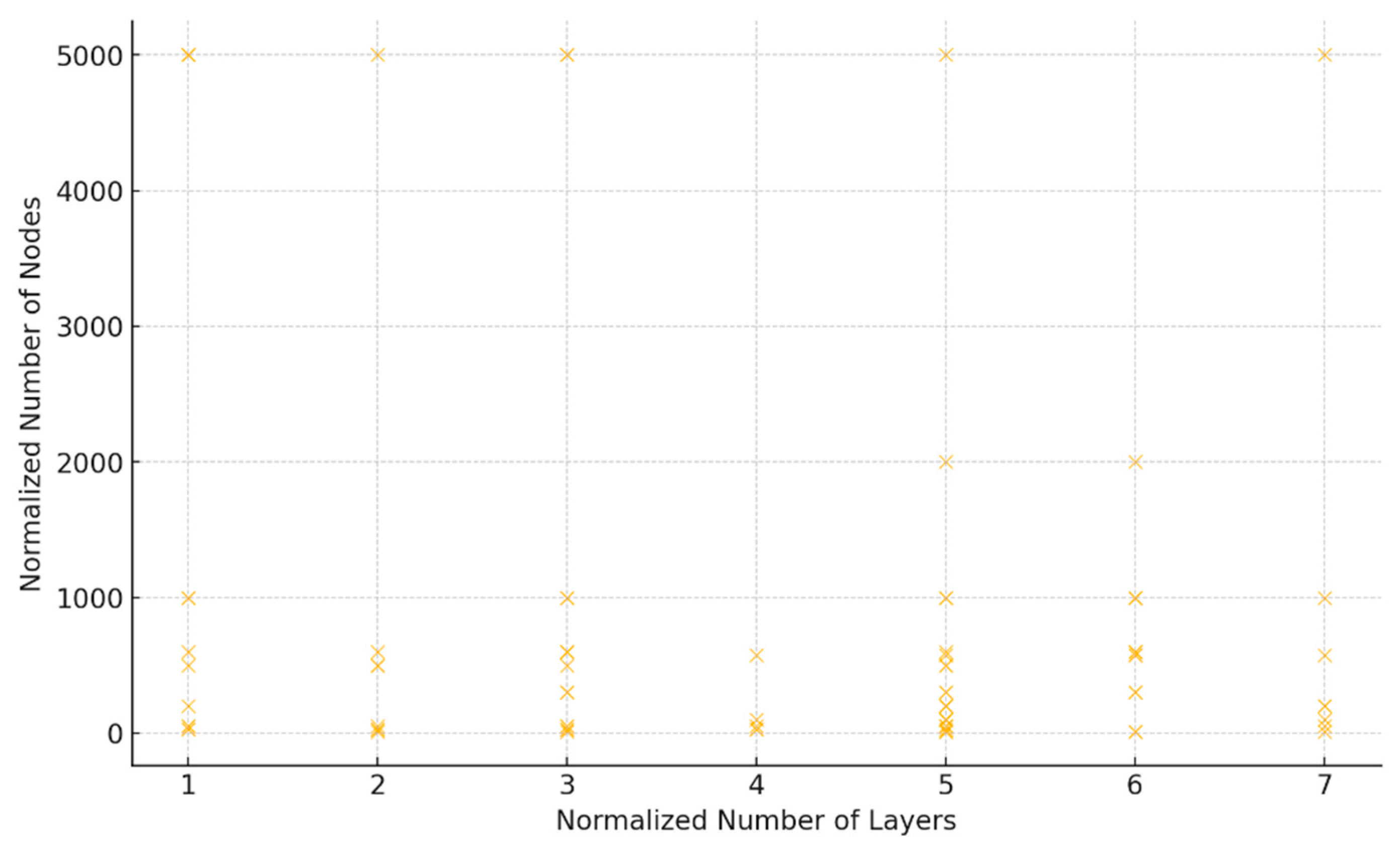

4.1. RQ1: Fog Computing Architectures

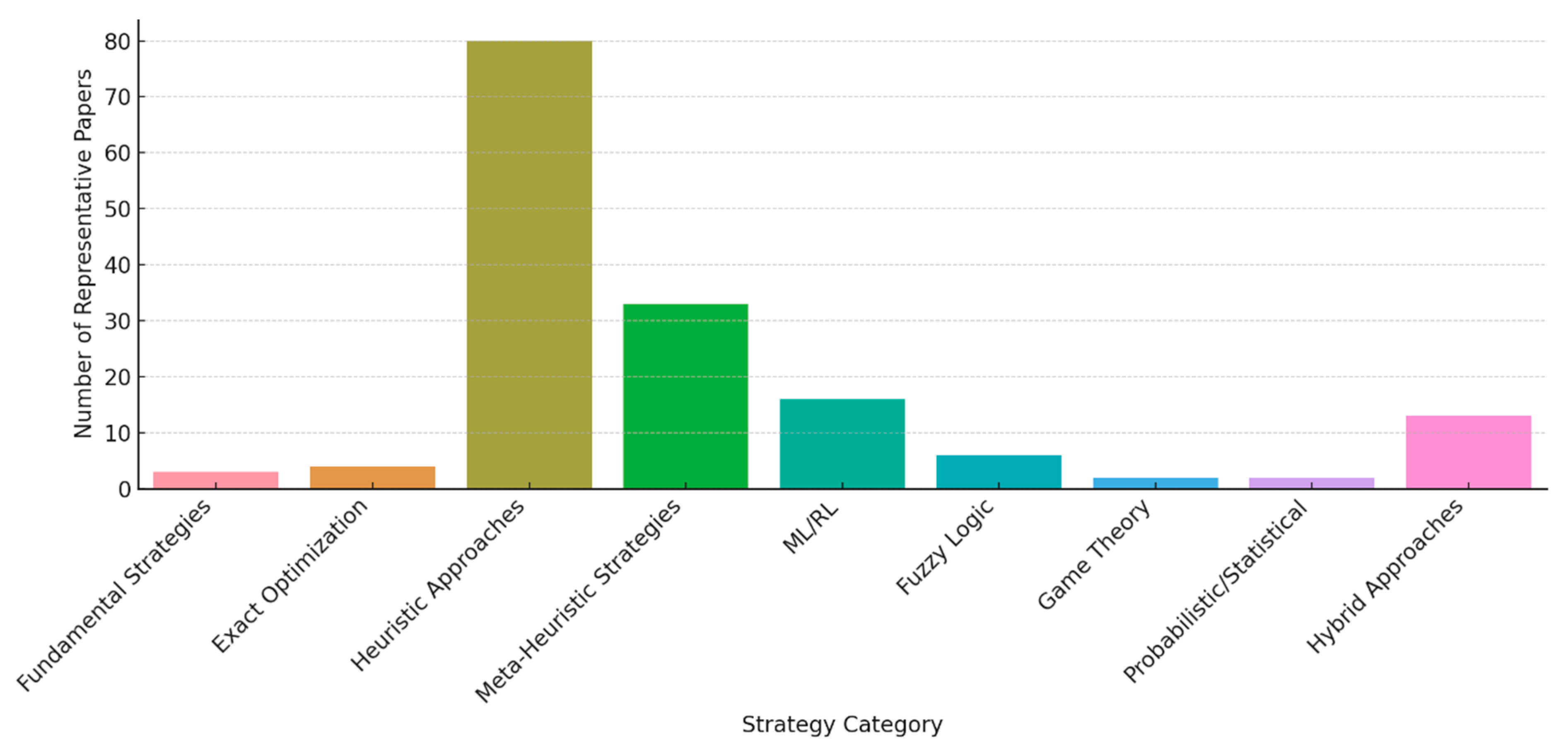

4.2. RQ2: Load-Balancing Strategies and Algorithms

4.3. RQ2.1: Advantages and Disadvantages of Load-Balancing Strategies

4.4. RQ3: Performance Metrics

4.5. RQ4: Workload Types

4.6. RQ5: Evaluation Tools

4.7. RQ6: Assessment Methods

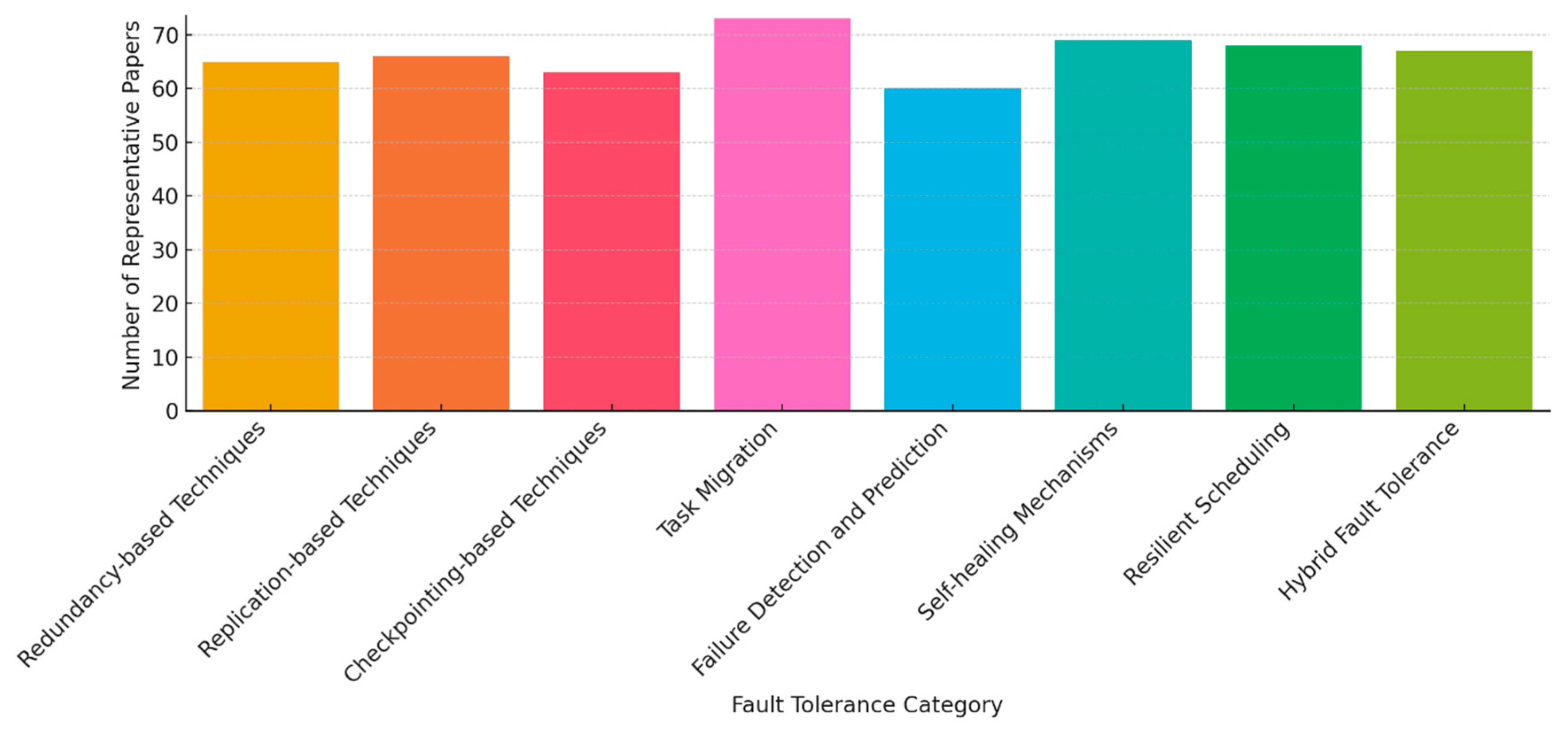

4.8. RQ7: Challenges, Trends, and Future Directions

5. Implications

6. Conclusions and Future Work

6.1. Conclusions

6.2. Future Work

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Study | Number of Layers | Architecture Description | Number of Nodes | Number of Clusters |
|---|---|---|---|---|
| [10] | 3 | Cloud layer, fog layer, terminal nodes layer | 50–300 | 6–14 |
| [23] | Multiple | Multiple layers, including cloud, fog nodes, and end devices | NA | NA |
| [7] | 3 | IoT devices, fog nodes, cloud/central server | 50 | NA |
| [22] | 3 | End-device layer, fog layer, cloud layer | 2–30 fog nodes | NA |
| [15] | Flat | Non-hierarchical (flat) structure rather than multiple layers | NA | NA |
| [13] | 3 | Local fog layer, adjacent fog nodes, cloud layer | 4–16 | NA |
| [11] | 3 | IoT devices (edge), fog layer, cloud layer | NA | NA |
| [8] | 3 | Device, fog, control layers | 100–600 IoT devices, 40 fog nodes, 3 cloud nodes | Clusters for task management |
| [35] | 3 | IoT devices, fog nodes, cloud | 75 fog nodes | 5 clusters |
| [12] | 3 | Cloud computing layer, fog computing layer, user devices | 11 nodes | NA |
| [9] | 3 | IoT layer, fog layer, cloud layer | 12 IoT devices, 4 access points, 4 gateways, 6 fog nodes, 1 cloud data center | 4 clusters |
| [25] | 3 | Devices layer, fog layer, cloud layer | 236–578 vehicles; mobile and dedicated fog nodes | Dynamically created clusters |
| [54] | 3 | Edge devices, fog nodes, cloud infrastructure | 22 IoT nodes, 3 fog nodes, 1 cloud node | NA |
| [52] | 3 | IoT devices, fog computing, cloud services | 5–25 fog nodes | NA |
| [57] | Multiple | Distributed layers, including sensors, fog nodes, and cloud layers | NA | NA |
| [59] | 3 | IoT devices, fog computing resources, cloud data centers | 50 IoT devices, 4 fog providers (50 VMs each) | 4 clusters |
| [53] | 3 | User devices, fog nodes, cloud systems | 5, 15, 30, 50 VRs | NA |
| [66] | Multiple | Multi-layer architecture integrating edge, fog, and cloud environments | 10–25 IoT devices, 10 fog nodes | NA |
| [31] | 4 | Production equipment, fog computing, cloud computing, user layers | 10 fog nodes | NA |
| [67] | 3 | IoT devices, fog layer, central cloud layer | 3 fog servers | NA |
| [68] | Variable | Varies per deployment scenario | NA | NA |
| [69] | 3 | NA | 5 fog nodes | 4 clusters |
| [75] | Variable | Various architectures, including sequential, tree-based, and DAG-based | NA | NA |
| [74] | 7 | Multiple fog nodes and a centralized cloud environment | 1073 fog nodes distributed across 7 layers | Puddles based on geographic proximity |
| [29] | 3 | Infrastructure tier, fog tier, global management tier | 1000 OBUs, 50 RSUs | RSUs grouped by LSDNC |
| [45] | 2 | Fog layer, cloud layer | NA | NA |
| [16] | 3 | Dew layer, fog layer, cloud layer | NA | NA |
| [61] | 2 | Fog layer, cloud layer | 5 cloud nodes, 3 fog nodes | NA |
| [70] | Multiple | Multi-layered fog computing paradigm extending from IoT sensors to cloud | Varies; heterogeneous fog nodes | Clusters of IoT devices |
| [71] | 3 | Cloud layer, fog layer, IoT layer | 100–500 fog nodes | NA |
| [99] | 3 | IoT devices, fog layer, cloud services | Multiple fog nodes and cloud nodes | NA |
| [96] | 3 | End devices, fog nodes, cloud layer | 16–64 fog nodes | NA |
| [63] | 3 | End-user devices, fog layer, cloud services | 40–100 VMs, 50 physical machines | NA |
| [30] | 4 | Acquisition layer, image layer, computing layer, robot layer | 5 fog nodes | NA |
| [72] | 3 | IoT layer, fog layer, cloud layer | 10–25 fog nodes | NA |
| [39] | 3 | IoT layer, fog layer, cloud layer | 1 master node, multiple SBC devices | Homogeneous or heterogeneous clusters |
| [32] | 4 | IoT gateways, fog broker, fog cluster, applications layer | 5 fog nodes | 1 cluster |
| [52] | 4 | Cloud layer, proxy server layer, gateway layer, edge device layer | Varies based on simulation | NA |
| [34] | 3 | Edge layer, fog layer, cloud layer | 500–2000 fog nodes | NA |
| [42] | 3 | Cloud layer, fog layer, end-user layer | 6 fog nodes | NA |
| [14] | Multiple | Multi-layered approach involving edge, fog, and cloud layers | 2 Raspberry Pis | Single Kubernetes cluster |
| [21] | 4 | Cloud computing layer, SDN control layer, fog computing layer, user layer | 10 fog nodes, 2 cloud nodes | SDN control allows for dynamic clustering |
| [40] | 3 | Edge layer, fog layer, cloud layer | 29 fog nodes | Dynamic clusters |
| [80] | 3 | Edge layer, fog layer, cloud layer | 4–5 fog nodes | Dynamic clusters |
| [76] | 3 | Sensing layer, fog layer, cloud layer | Multiple fog nodes | Dynamic clusters |
| [89] | 3 | Cloud layer, fog layer, end-user layer | 4 fog nodes, 1 cloud node | NA |
| [77] | 3 | End-user layer, fog layer, cloud layer | 2 fog nodes, 1 cloud node | NA |
| [36] | 3 | Lightweight nodes layer, access points layer, dedicated computing servers’ layer | 400 lightweight nodes, 10 access points | Dynamic logical groupings |
| [62] | 3 | Edge/IoT layer, fog layer, cloud layer | 50 fog nodes, 1 cloud node | Dynamic groupings |
| [37] | 3 | IoT device layer, fog layer, cloud layer | 20 fog nodes, 40 volunteer devices, 1 cloud data center | Logical grouping based on proximity |
| [100] | 2 | IoT layer, fog layer | 10 fog nodes | NA |
| [78] | 2 | Vehicular layer, RSU layer | 6 RSUs, 1000–3000 vehicles | Dynamically created clusters |
| [101] | 3 | Edge layer, fog layer, cloud layer | 100 edge devices, 20 fog devices, 5 cloud servers | NA |
| [38] | 3 | IoT layer, fog layer, edge server layer | 1 edge server, multiple fog nodes | Dynamic clusters based on workload |
| [24] | 2 | Fog layer, cloud layer | 3 fog servers, 1 cloud server | Single fog cluster |
| [26] | 3 | IoT layer, fog layer, cloud layer | Dynamic, based on parked vehicles | Dynamically formed clusters |
| [81] | 3 | Vehicular fog layer, fog server layer, cloud layer | Vehicular fog nodes, RSUs, cloud nodes | Dynamically created clusters |
| [20] | 4 | Perception layer, blockchain layer, SDN and fog layer, cloud layer | Distributed RSUs, 500 vehicles | Dynamically created clusters |
| [82] | 3 | Vehicular layer, fog layer, cloud layer | UAVs and RSUs, dynamic | Dynamic swarms |
| [46] | 2 | Fog layer, IoT device layer | 4 fog nodes, 50 IoT devices | 4 clusters |
| [64] | 3 | Fog layer, edge layer, cloud layer | 5 UEs per edge server, 5 edge servers, 1 cloud server | 5 clusters |
| [55] | 3 | IoT device layer, fog layer, cloud layer | 2 fog servers, 1 cloud server | 2 clusters |
| [18] | 3 | IoT layer, fog layer, cloud layer | Multiple fog nodes, dynamic environment | Dynamic clustering |
| [56] | 2 | Fog layer, cloud layer | 15 fog nodes, varying IoT devices | Dynamic clusters |
| [90] | 3 | IoT layer, fog layer, cloud layer | Varies based on demand | Dynamic clustering |
| [83] | 2 | Fog layer, cloud layer | Distributed fog nodes, cloud nodes | Dynamic clusters |
| [91] | 3 | Microgrid layer, fog layer, cloud layer | 3 microgrids, fog nodes | 3 clusters |
| [27] | 3 | IoT layer, fog layer, cloud layer | 9 edge servers, dynamic IoT devices | Dynamic clusters |
| [84] | 3 | Edge layer, fog layer, cloud layer | Multiple appliances, smart sensors | Dynamic clusters based on HEMS |
| [17] | 4 | IoT layer, edge layer, fog layer, cloud layer | Multiple edge, fog, and cloud nodes | Dynamic clusters |
| [85] | 3 | Containerized workload layer, fog layer, cloud layer | 6 nodes, Kubernetes | Dynamic clusters |
| [28] | 3 | Edge, fog, cloud | 100 fog nodes, 10–20 IoT devices | Task-based clusters |
| [86] | 3 | IoT devices layer, fog landscape layer, cloud layer | Multiple fog cells | Fog colonies as clusters |
| [97] | 2 | Fog layer, cloud layer | NA | NA |
| [98] | 3 | Sensor layer, fog layer, cloud layer | Multiple fog nodes | Dynamic clusters |
| [19] | 4 | Sensor layer, fog devices, proxy servers, cloud data centers | 30 microdata centers | Hierarchical MDCs |
| [102] | 3 | IoT layer, fog layer, cloud layer | NA | NA |
| [87] | 3 | IoT layer, fog layer, cloud layer | 50 fog nodes | NA |
| [88] | 3 | IoT layer, fog layer, cloud layer | NA | NA |
| [60] | 3 | IoMT layer, fog layer, cloud layer | 100–500 fog devices | NA |
| [103] | 4 | Fog devices, cloud data center | 5 devices at each tier | NA |
| [65] | Multiple | NA | 10–70 heterogeneous fog nodes | 3 clusters |
| [92] | Multiple | Multiple layers, including fog nodes and cloud | 500 fog nodes, 200 fog–cloud interfaces | NA |
| [104] | 4 | Edge layer, base station layer, fog layer, cloud layer | 20 heterogeneous virtual machines in the fog layer | NA |
| [41] | 3 | Edge, fog, cloud | 100 fog nodes, 3 data centers | NA |
| [50] | 3 | IoT layer, fog layer, cloud layer | 5–20 fog nodes in experiments | NA |
| [105] | 3 | EDC, fog nodes, cloud data centers | Distributed fog nodes between data sources and the cloud | NA |
| [33] | 4 | Perception layer, fog layer, cloud layer, communication layer | Dynamic, based on vehicles and lanes | NA |
| [106] | 3 | End users, fog nodes, cloud layer | 100 fog nodes, centralized cloud servers | NA |
| [47] | 3 | IoT layer, fog layer, cloud layer | Varies with the application; fog servers categorized into overloaded, balanced, and underloaded | NA |
| [107] | 3 | IoT devices layer, fog layer, cloud layer | 20 fog nodes, 6 cloud nodes | NA |
| [108] | 3 | IoT layer, fog layer, cloud layer | Dynamic; fog nodes per region, cloud nodes | NA |
| [93] | 3 | IoT device layer, fog layer, cloud layer | 1–5 fog nodes as gateways | 10 clusters |
| [51] | 3 | End-user layer, fog layer, cloud layer | 2–200 fog nodes, dynamically grouped into clusters | 10 clusters (20 nodes each) |
| [48] | 3 | IoT layer, fog layer, cloud layer | 200 fog servers, dynamic categorization | NA |
| [109] | 4 | WGL, FCL, CCL, RAL | Dynamic, based on the number of IoT devices, fog nodes, and cloud servers | NA |
| [94] | 3 | Infrastructure layer, fog layer, cloud layer | 10 fog nodes, 1 cloud node | NA |
| [44] | 3 | IoT layer, fog layer, cloud layer | 15 IoT devices, 8 fog nodes, 30–180 VMs, 1 cloud data center | NA |
| [95] | 3 | IoT layer, fog layer, cloud layer | 5–20 fog nodes | NA |
| [110] | 3 | IoT layer, fog layer, cloud layer | 20 fog nodes, 30 cloud nodes | NA |
| [111] | 2 | Fog layer, consumer layer | 5 fog nodes in residential areas | NA |
| [112] | 3 | IoT layer, fog layer, cloud layer | Varies dynamically based on IoT devices, fog nodes, and cloud servers | NA |
| [113] | 3 | IoT tier, fog tier, data-center tier | 100 fog nodes, 10–20 IoT devices, 3 cloud data centers | NA |
| [49] | 3 | IoT layer, fog layer, cloud layer | 30 fog nodes, multiple cloud nodes | NA |
| [2] | 3 | IoT layer, fog layer, cloud layer | 5–20 fog nodes with VMs, 1 cloud node | NA |
| [58] | 3 | IoT layer, fog layer, cloud layer | Multiple fog nodes (small servers, routers, gateways) | NA |
| [43] | 3 | IoT devices, fog nodes, cloud data centers | 15 fog nodes | NA |
| [114] | 2 | Load balancer at the first stage and virtual machines/web servers at the second stage | Single load balancer, multiple virtual machines/web servers | NA |
| [115] | 3 | Mobile devices, fog computing layer, cloud layer | 10 fog nodes, 2 cloud nodes | NA |
| [116] | 3 | Edge, fog, cloud | 1 edge node per consumer, 1 fog node per load point, centralized cloud data center | NA |
| [117] | 3 | IoT devices, fog layer, cloud layer | Mobile IoT devices, fog gateways, brokers, and processing servers | NA |

| Category | Advantages | Disadvantages | Representative Papers |
|---|---|---|---|
| Fundamental Strategies | Simple and predictable behavior in static environments; low computational overhead, ideal for systems with minimal complexity. | Lack of adaptability to dynamic workloads; inefficient resource utilization in heterogeneous systems. | [57,88] |
| Exact Optimization | Guarantees optimal solutions for latency and resource utilization; ideal for theoretical modeling and small-scale systems. | High computational demands; poor scalability, unsuitable for real-time and large-scale fog scenarios. | [19,62] |
| Heuristic Approaches | Simple and flexible with moderate computational efficiency; adaptable to system constraints and suitable for dynamic environments. | Often yield suboptimal solutions; highly dependent on parameter tuning and system-specific configurations; limited exploration capabilities. | [20,21,74] |
| Meta-Heuristic Strategies | Excel at exploring complex solution spaces and at multi-objective optimization; well suited to dynamic environments, balancing global exploration and local convergence. | Computationally intensive; require extensive tuning of fitness functions; risk of convergence to local optima without proper hybridization or enhancements. | [2,49,95,110,111,112] |
| ML/RL | Offer intelligent automation, adapting to changing workloads in real time; optimize across multiple objectives, suitable for dynamic and complex fog systems. | Require large training datasets; high implementation complexity; vulnerable to overfitting and poor performance in underrepresented scenarios. | [7,33,41] |
| Fuzzy Logic | Handle uncertainty and imprecision effectively; low resource consumption and easily adaptable to diverse scenarios with clear fuzzy rules. | Dependence on expert-defined rules limits scalability; struggle in highly dynamic environments. | [18,44,45] |
| Game Theory | Ensures fairness and efficiency in distributed and competitive environments; well suited to resource allocation in multi-agent systems. | Computationally complex to achieve equilibrium; dependent on accurate real-time data, limiting scalability in dynamic systems. | [38,46] |
| Probabilistic/Statistical | Simple and efficient for tasks involving statistical variability; effective in handling uncertainty within fog environments. | Oversimplify system dynamics, limiting applicability in deterministic or complex scenarios; limited adaptability can lead to suboptimal performance. | [64,69] |
| Hybrid Approaches | Combine the strengths of multiple methods, such as meta-heuristics, ML/RL, or exact methods; effective for multi-objective optimization in dynamic and complex environments. | High complexity in implementation and parameter tuning; often require specialized hardware for deployment. | [2,10,20,26,31] |
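The contrast between the fundamental and heuristic rows of the preceding table can be illustrated with a toy example. The sketch below assumes round-robin as a representative fundamental strategy and a greedy earliest-finish (least-loaded) rule as a representative heuristic. Neither is attributed to any reviewed study, and the task sizes and node capacities are hypothetical. Round-robin costs O(1) per decision but ignores node heterogeneity. The greedy rule inspects every node (O(n) per decision) and adapts to capacity.

```python
from typing import List


def round_robin(tasks: List[float], capacities: List[float]) -> List[float]:
    """Fundamental strategy: assign tasks cyclically, ignoring node capacity.

    Returns the finish time of each node (work assigned / node capacity)."""
    finish = [0.0] * len(capacities)
    for i, size in enumerate(tasks):
        node = i % len(capacities)
        finish[node] += size / capacities[node]
    return finish


def least_loaded(tasks: List[float], capacities: List[float]) -> List[float]:
    """Greedy heuristic: send each task to the node that would finish it earliest."""
    finish = [0.0] * len(capacities)
    for size in tasks:
        node = min(range(len(capacities)),
                   key=lambda n: finish[n] + size / capacities[n])
        finish[node] += size / capacities[node]
    return finish


if __name__ == "__main__":
    tasks = [4.0] * 8        # eight equal tasks (hypothetical units of work)
    capacities = [4.0, 1.0]  # one fast and one slow fog node (hypothetical)
    print("round-robin makespan:", max(round_robin(tasks, capacities)))
    print("least-loaded makespan:", max(least_loaded(tasks, capacities)))
```

With these inputs, round-robin leaves the slow node finishing at 16.0 time units, while the greedy rule reaches a makespan of 7.0 by sending most tasks to the fast node. This is the adaptability gap that the table attributes to fundamental strategies in heterogeneous systems.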

| Category | Description | Representative Papers |
|---|---|---|
| Fundamental Strategies | Basic approaches with predictable behavior, suitable for simple static environments. | [57,58,88] |
| Exact Optimization | Optimization techniques that guarantee theoretically optimal outcomes; ideal for small-scale scenarios. | [19,62,111,115] |
| Heuristic Approaches | Flexible and efficient algorithms suited to dynamic environments with moderate complexity. | [8,14,20,21,23,29,32,34,40,42,45,52,54,61,63,68,69,70,71,74,76,77,78,89,96,99,100,101] |
| Meta-Heuristic Strategies | Advanced strategies that explore complex solution spaces, balancing exploration and exploitation. | [2,22,35,47,49,106] |
| ML/RL | Machine learning and reinforcement learning models for real-time adaptive optimization. | [17,18,33,41,55] |
| Fuzzy Logic | Techniques that manage uncertainty using fuzzy rules; applicable to scenarios with imprecise data. | [8,11,18,22,45] |
| Game Theory | Game-theoretic methods that ensure fairness and efficiency in distributed systems. | [38,46] |
| Probabilistic/Statistical | Probabilistic models that handle variability; effective in uncertain fog environments. | [64,69] |
| Hybrid Approaches | Integrated methods that combine the strengths of multiple approaches for multi-objective optimization. | [2,10,20,26,31] |
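Fuzzy-logic strategies handle imprecise load readings by giving a node partial membership in linguistic classes rather than a hard label. The following is a minimal sketch under stated assumptions. It uses triangular and shoulder membership functions over CPU utilization for three classes: underloaded, balanced, and overloaded. This three-way categorization also appears in Appendix A for study [47], which is not claimed here to use fuzzy logic. The breakpoints 0.3, 0.5, and 0.7 and the rule outputs are arbitrary illustrative values. Defuzzification uses a zero-order Sugeno-style weighted average.

```python
from typing import Dict


def triangular(x: float, a: float, b: float, c: float) -> float:
    """Triangular membership: 0 at a and c, rising to 1 at the peak b."""
    if x <= a or x >= c:
        return 0.0
    return (x - a) / (b - a) if x <= b else (c - x) / (c - b)


def classify_load(utilization: float) -> Dict[str, float]:
    """Fuzzify a CPU utilization in [0, 1] into three hypothetical load classes."""
    u = min(max(utilization, 0.0), 1.0)
    return {
        # Left shoulder: fully underloaded up to 0.3, fading out by 0.5.
        "underloaded": 1.0 if u <= 0.3 else max(0.0, (0.5 - u) / 0.2),
        "balanced": triangular(u, 0.3, 0.5, 0.7),
        # Right shoulder: fading in from 0.5, fully overloaded from 0.7.
        "overloaded": 1.0 if u >= 0.7 else max(0.0, (u - 0.5) / 0.2),
    }


def migration_priority(utilization: float) -> float:
    """Toy rule base with singleton outputs: underloaded -> accept work (0.0),
    balanced -> keep (0.5), overloaded -> migrate tasks away (1.0).
    The crisp output is the membership-weighted average of the rule outputs."""
    memberships = classify_load(utilization)
    outputs = {"underloaded": 0.0, "balanced": 0.5, "overloaded": 1.0}
    total = sum(memberships.values())
    if total == 0.0:
        return 0.0
    return sum(memberships[k] * outputs[k] for k in memberships) / total
```

A node at 60% utilization is treated as half balanced and half overloaded, giving a migration priority of about 0.75. A node at 90% gets 1.0. This graded response is the property the table credits to fuzzy logic. The dependence on hand-chosen breakpoints also illustrates the listed scalability weakness.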

| Primary Category | Subcategories | Percentage |
|---|---|---|
| 1. Latency and Time Metrics | Latency (ms); Task Completion Time (sec); Response Time; Communication Delay; Waiting Time in Queue; Turnaround Time (TAT); Service Delay Time; Temporal Delay; Processing Time; Mean Service Time (MST) | 16% |
| 2. Resource Utilization Metrics | Resource Utilization (CPU%); Memory Usage; Number of Computing Resources; Network Utilization; Number of Used Devices; Bandwidth Utilization | 7% |
| 3. Energy Efficiency Metrics | Energy Consumption (W); Brown Energy Consumption | 4% |
| 4. Reliability and Fault Metrics | Fault Tolerance (Yes/No); Failure Rate; Access Level Violations; Deadline Violations; Number of Deadlines Missed | 9% |
| 5. Cost Metrics | Communication Cost; Execution Cost; Service Cost; Cost of Data Transmission | 7% |
| 6. Load-Balancing and Distribution Metrics | Load-Balancing Level (LBL); Workload Distribution; Queue Length; Task Delivery; Workflows | 10% |
| 7. Network and Scalability Metrics | Network Lifetime; Network Bandwidth; Jitter; Congestion; Scalability | 8% |
| 8. Prediction and Accuracy Metrics | Prediction Interval Coverage (PIC); Prediction Accuracy (AAPE); Prediction Efficiency; Accuracy; Accuracy of IRS Classification | 5% |
| 9. Quality of Service (QoS) Metrics | QoS Satisfaction Rate; Blocking Probability (bp); Success Ratio (SR); Fairness Index; Throughput (Tasks/sec); Efficiency; Scalability; Prediction Interval Coverage (PIC); Prediction Accuracy (AAPE); Average Processing Time (APT); Task Delivery | 18% |
| 10. Security Metrics | Encryption Time; Decryption Time; Handover Served Ratio (HSR); Onboard Unit Served Ratio (OSR) | 2% |
| 11. Specialized Metrics | Solution Convergence; Queue Management; Loss Rate; Consensus Time; Social Welfare; Offloading Success; Task Rejection Rate; Node Selection Success Rates | 14% |
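Two of the distribution-oriented metrics above lend themselves to a short worked example. The sketch assumes that "Fairness Index" refers to Jain's fairness index, J = (Σx)² / (n · Σx²). This index is widely used, but the review does not confirm it for every study. The sketch also uses relative imbalance, (max - mean) / mean, as an illustrative stand-in for the Load-Balancing Level (LBL). Definitions of LBL differ across papers, so this formula is an assumption rather than the reviewed studies' definition.

```python
from typing import Sequence


def jain_fairness(loads: Sequence[float]) -> float:
    """Jain's fairness index: 1.0 for perfectly equal loads, 1/n when one node takes everything."""
    n = len(loads)
    square_of_sum = sum(loads) ** 2
    sum_of_squares = sum(x * x for x in loads)
    # All-zero loads are trivially fair; avoid dividing by zero.
    return square_of_sum / (n * sum_of_squares) if sum_of_squares else 1.0


def imbalance(loads: Sequence[float]) -> float:
    """Relative imbalance (max - mean) / mean; 0.0 means a perfectly even distribution."""
    mean = sum(loads) / len(loads)
    return (max(loads) - mean) / mean if mean else 0.0
```

A perfectly even load vector such as [5, 5, 5, 5] scores J = 1.0 and an imbalance of 0.0. The vector [20, 0, 0, 0] scores J = 0.25 (that is, 1/n) and an imbalance of 3.0. Because the two measures react differently to outliers, reporting both is more informative than reporting either alone.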

| Workload Type | Paper IDs | Description | Frequency | Percentage |
|---|---|---|---|---|
| Dynamic | [7,8,9,10,15,20,21,22,31,35,74,118] | Workload is dynamically generated based on real-time conditions, reflecting the variability and unpredictability of IoT systems. | 108 | 95.6% |
| Static | [23,56] | Fixed workloads without runtime changes, often used for benchmarking or theoretical analysis. | 2 | 1.8% |
| Dynamic and Data Intensive | [13] | Combines dynamic behavior with high data volume or computational intensity. | 1 | 0.9% |
| Static/Dynamic | [57,58] | Incorporates both static and dynamic workloads to evaluate hybrid or flexible algorithms. | 2 | 1.8% |
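The percentages in the workload table follow directly from its frequency column, which sums to 108 + 2 + 1 + 2 = 113. The short check below recomputes them with rounding to one decimal place. Because of that rounding, the four shares add up to 100.1% rather than exactly 100%.

```python
# Frequencies copied from the workload-type table above.
frequencies = {
    "Dynamic": 108,
    "Static": 2,
    "Dynamic and Data Intensive": 1,
    "Static/Dynamic": 2,
}

total = sum(frequencies.values())  # 113 classified studies
shares = {kind: round(100 * count / total, 1) for kind, count in frequencies.items()}

for kind, pct in shares.items():
    print(f"{kind:<28} {frequencies[kind]:>4} {pct:>6.1f}%")
print(f"{'Total':<28} {total:>4} {sum(shares.values()):>6.1f}%")
```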

| Study | Tool Name |
|---|---|
| [11,23,24,69,99] | Custom Simulator |
| [7] | COSCO Simulator |
| [15,54] | Discrete-event Simulator (YAFS) |
| [40,71] | Python (SimPy) |
| [32] | Python (SciPy) |
| [83,91,102] | Python (Custom) |
| [65] | Java (Custom) |
| [57,66] | C (Custom) |
| [8] | IoTSim-Osmosis |
| [22,31,35] | MATLAB |
| [79,95,111,114,117] | iFogSim |
| [68,85] | Kubernetes and Istio |
| [37,58] | OMNeT++ |
| [74] | PFogSim |
| [27] | EdgeCloudSim |
| [20,81] | SUMO Simulator |
| [90,115] | Mininet-WiFi |
| [43,49,58,113] | CloudSim |
| [14] | Truffle Suite and Ganache |
| [72,116] | Docker |
| [28,84] | Real-World Testbeds |
| [82] | AnyLogic |
| [2,87] | FogSim |
| [30] | PySpark |
| [39] | CloudAnalyst |
| [93] | Apache Karaf |

| Tool Name | Classification | Definition |
|---|---|---|
| iFogSim | Widely Recognized Simulator | A simulator designed for fog and IoT environments, providing capabilities for modeling latency, energy consumption, and network parameters. |
| CloudSim | Widely Recognized Simulator | A simulation platform for modeling cloud computing environments, extended for fog computing. |
| PFogSim | Widely Recognized Simulator | An extension of CloudSim designed for large-scale, heterogeneous fog environments. |
| FogSim | Widely Recognized Simulator | A modular simulator tailored for fog and cloud computing environments. |
| YAFS (Yet Another Fog Simulator) | Widely Recognized Simulator | A simulator focusing on fog-specific features and performance metrics. |
| OMNeT++ | Widely Recognized Simulator | A discrete-event network simulation framework, used here to model task distribution and communication. |
| MATLAB | Widely Recognized Simulator | A computational environment supporting algorithm implementation and simulation, often used for fuzzy logic and deep learning. |
| Mininet/Mininet-WiFi | Network and IoT-Specific Tool | A network emulator for testing SDN and IoT architectures. |
| SUMO | Network and IoT-Specific Tool | A traffic simulation tool used for vehicular mobility and task-offloading evaluations. |
| IoTSim-Osmosis | Network and IoT-Specific Tool | A Java-based simulator built on the CloudSim framework for IoT and fog computing. |
| Truffle Suite | Blockchain and SDN Tool | A development framework for blockchain applications, used for writing and testing smart contracts. |
| Ganache | Blockchain and SDN Tool | A personal Ethereum blockchain for locally testing blockchain-based implementations. |
| Docker | Custom Simulator Framework | A containerization platform used for deploying custom simulation environments. |
| SimPy | Python-Based Framework | A process-based discrete-event simulation framework in Python. |
| SciPy | Python-Based Framework | A Python library for scientific computing whose optimization routines are used within custom simulation environments. |
| Custom Simulators | Custom Simulator Framework | Tailored simulation environments designed for specific research scenarios. |
| Real-World Testbeds | Real-World Testbed | Physical setups using devices such as Raspberry Pi for practical evaluations. |
| AnyLogic | Widely Recognized Simulator | A multi-method simulation modeling tool for evaluating complex systems. |
| EdgeCloudSim | Widely Recognized Simulator | A simulation environment for edge–fog–cloud computing scenarios. |
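Several of the tools above (e.g., CloudSim and its fog extensions, YAFS, OMNeT++, SimPy) are discrete-event simulators. In these tools, simulated time jumps from one event to the next rather than advancing in fixed steps. The standard-library sketch below shows that core loop for a single first-in, first-out (FIFO) fog node. It does not use the API of any listed tool. The function name and the arrival and service times are hypothetical. It reports each task's response time (queue waiting plus processing), one of the latency metrics used in the reviewed studies.

```python
import heapq
from collections import deque
from typing import Deque, List, Tuple


def simulate_fifo_node(arrivals: List[float], services: List[float]) -> List[float]:
    """Event-driven simulation of one FIFO fog node; returns each task's response time."""
    # Event entries: (time, order, kind, task_id); `order` breaks time ties deterministically.
    events: List[Tuple[float, int, str, int]] = []
    for task_id, t in enumerate(arrivals):
        heapq.heappush(events, (t, len(events), "arrival", task_id))

    waiting: Deque[int] = deque()   # tasks queued for the single processor
    busy = False
    order = len(events)
    response = [0.0] * len(arrivals)

    while events:
        now, _, kind, task_id = heapq.heappop(events)  # jump straight to the next event
        if kind == "arrival":
            waiting.append(task_id)
        else:  # "departure": the task finished processing
            response[task_id] = now - arrivals[task_id]
            busy = False
        # If the processor is idle, start the oldest waiting task.
        if not busy and waiting:
            nxt = waiting.popleft()
            busy = True
            heapq.heappush(events, (now + services[nxt], order, "departure", nxt))
            order += 1
    return response


if __name__ == "__main__":
    times = simulate_fifo_node([0.0, 1.0, 2.0], [2.0, 2.0, 2.0])
    print("response times:", times, "mean:", sum(times) / len(times))
```

With three tasks arriving one time unit apart, each needing two units of service, queueing delay accumulates and the response times are 2.0, 3.0, and 4.0. Full simulators add network topology, multiple nodes, and placement policies on top of this same event loop.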

| Emerging Trend | Key Technologies/Methodologies | Relevant Studies |
|---|---|---|
| AI and Machine Learning | Predictive load balancing, intelligent resource management | [7,33,41] |
| Edge Computing Integration | Enhancing processing capabilities for IoT | [10,78] |
| Reinforcement Learning (RL) | Privacy-aware load balancing, adaptive resource allocation | [15,61,66,75] |
| Intelligent Scheduling | Real-time data processing, decision-making adaptations | [28,42,85,109] |
| Multi-Layer Scheduling Methods | Complex scheduling for large-scale applications | [13,52,74] |
| Clustering Integration | Improving load balancing in vehicular networks | [24] |
| AI-Driven Task Allocation | Real-time prioritization for IoT systems | [11,32,92] |
| Software-Defined Networking (SDN) | Flexibility in network management | [20,21,77] |
| Serverless Computing | Function as a Service (FaaS) for IoT applications | [12] |
| Adaptive Offloading Techniques | Machine learning for resource management in vehicular fog | [25] |
| Fault Tolerance Mechanisms | Hybrid approaches incorporating RL | [54] |
| Scalability and Resilience | Managing large-scale systems and dynamic environments | [52,66,101] |
| Energy Management | Balancing energy efficiency with computational demands | [42,93,95] |
| Security and Privacy Concerns | Addressing security and privacy challenges in IoT data | [19,64,76] |
| Interoperability Between Fog and Cloud | Seamless task distribution across resources | [37,66,106] |
| Hybrid Optimization Approaches | Combining meta-heuristic algorithms for better performance | [2,44,104] |
| Game-Theoretic Frameworks | Workload distribution strategies | [38] |
| Dynamic Resource Allocation | Adapting systems to real-time changes in workload | [34,80] |
| Blockchain Integration | Secure data handling and load balancing | [14,33,76] |
| Integration of Renewable Energy Sources | Energy-efficient fog devices | [70,108] |
| Community-Based Placement | Distributing workloads effectively across nodes | [94] |
| Hybrid Algorithms | Combining multiple methodologies for enhanced performance | [43,50] |
| Dynamic Offloading | Real-time task-offloading strategies | [24,80,89,119] |
| Fuzzy Logic Integration | Intelligent scheduling strategies | [117] |
| Nature-Inspired Algorithms | Resource optimization strategies | [105,114] |
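Several trends in the preceding table, including AI and machine learning, reinforcement learning, and dynamic offloading, rest on a learn-from-feedback loop. As a deliberately simplified illustration, the sketch below treats fog-node selection as a multi-armed bandit solved with epsilon-greedy action selection. This is a swapped-in toy, not the method of any reviewed study. The node names, mean latencies, noise model, and epsilon value are hypothetical. RL schedulers in the literature usually condition on richer state, such as queue lengths or link quality, and often use deep function approximation.

```python
import random
from typing import Dict


def epsilon_greedy_selection(latency_ms: Dict[str, float], steps: int = 1000,
                             epsilon: float = 0.1, seed: int = 0) -> Dict[str, int]:
    """Learn which fog node to offload to, using reward = -observed latency.

    Returns how many times each node was chosen."""
    rng = random.Random(seed)
    nodes = list(latency_ms)
    estimates = {n: 0.0 for n in nodes}   # running mean reward per node
    counts = {n: 0 for n in nodes}
    for step in range(steps):
        if step < len(nodes):
            node = nodes[step]                    # try every node once first
        elif rng.random() < epsilon:
            node = rng.choice(nodes)              # explore a random node
        else:
            node = max(nodes, key=estimates.get)  # exploit the best estimate so far
        # Hypothetical noisy observation: Gaussian noise with 10% of the mean as std. dev.
        observed = latency_ms[node] + rng.gauss(0.0, 0.1 * latency_ms[node])
        reward = -observed
        counts[node] += 1
        estimates[node] += (reward - estimates[node]) / counts[node]  # incremental mean
    return counts


if __name__ == "__main__":
    print(epsilon_greedy_selection({"fog-a": 40.0, "fog-b": 15.0, "fog-c": 60.0}))
```

With these hypothetical latencies, the learner sends the large majority of tasks to the fastest node, "fog-b". The epsilon fraction of exploratory choices keeps the estimates for the other nodes current in case their performance changes.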

References

- Kashani, M.H.; Mahdipour, E. Load balancing algorithms in fog computing. IEEE Trans. Serv. Comput. 2022, 16, 1505–1521.

- Kaur, M.; Aron, R. A systematic study of load balancing approaches in the fog computing environment. J. Supercomput. 2021, 77, 9202–9247.

- Sadashiv, N. Load balancing in fog computing: A detailed survey. Int. J. Comput. Digit. Syst. 2023, 13, 729–750.

- Shakeel, H.; Alam, M. Load balancing approaches in cloud and fog computing environments: A framework, classification, and systematic review. Int. J. Cloud Appl. Comput. (IJCAC) 2022, 12, 1–24.

- Ebneyousef, S.; Shirmarz, A. A taxonomy of load balancing algorithms and approaches in fog computing: A survey. Clust. Comput. 2023, 26, 3187–3208.

- Kitchenham, B.; Charters, S. Guidelines for Performing Systematic Literature Reviews in Software Engineering; Software Engineering Group, School of Computer Science and Mathematics, Keele University: Keele, UK; Department of Computer Science, University of Durham: Durham, UK, 2007; Volume 5.

- Singh, J.; Singh, P.; Amhoud, E.M.; Hedabou, M. Energy-efficient and secure load balancing technique for SDN-enabled fog computing. Sustainability 2022, 14, 12951.

- Javanmardi, S.; Shojafar, M.; Mohammadi, R.; Persico, V.; Pescapè, A. S-FoS: A secure workflow scheduling approach for performance optimization in SDN-based IoT-Fog networks. J. Inf. Secur. Appl. 2023, 72, 103404.

- Gazori, P.; Rahbari, D.; Nickray, M. Saving time and cost on the scheduling of fog-based IoT applications using deep reinforcement learning approach. Future Gener. Comput. Syst. 2020, 110, 1098–1115.

- Liu, W.; Li, C.; Zheng, A.; Zheng, Z.; Zhang, Z.; Xiao, Y. Fog Computing Resource-Scheduling Strategy in IoT Based on Artificial Bee Colony Algorithm. Electronics 2023, 12, 1511.

- Chaudhry, R.; Rishiwal, V. An Efficient Task Allocation with Fuzzy Reptile Search Algorithm for Disaster Management in urban and rural area. Sustain. Comput. Inform. Syst. 2023, 39, 100893.

- Khansari, M.E.; Sharifian, S. A scalable modified deep reinforcement learning algorithm for serverless IoT microservice composition infrastructure in fog layer. Future Gener. Comput. Syst. 2024, 153, 206–221.

- Kazemi, S.M.; Ghanbari, S.; Kazemi, M.; Othman, M. Optimum scheduling in fog computing using the Divisible Load Theory (DLT) with linear and nonlinear loads. Comput. Netw. 2023, 220, 109483.

- Forestiero, A.; Gentile, A.F.; Macri, D. A blockchain based approach for Fog infrastructure management leveraging on Non-Fungible Tokens. In Proceedings of the 2022 IEEE International Conference on Dependable, Autonomic and Secure Computing, International Conference on Pervasive Intelligence and Computing, International Conference on Cloud and Big Data Computing, International Conference on Cyber Science and Technology Congress (DASC/PiCom/CBDCom/CyberSciTech), Falerna, Italy, 12–15 September 2022; pp. 1–7.

- Ebrahim, M.; Hafid, A. Privacy-aware load balancing in fog networks: A reinforcement learning approach. Comput. Netw. 2023, 237, 110095.

- Sarker, S.; Arafat, M.T.; Lameesa, A.; Afrin, M.; Mahmud, R.; Razzaque, M.A.; Iqbal, T. FOLD: Fog-dew infrastructure-aided optimal workload distribution for cloud robotic operations. Internet Things 2024, 26, 101185.

- Menouer, T.; Cérin, C.; Darmon, P. KOptim: Kubernetes Optimization Framework. In Proceedings of the 2024 IEEE International Parallel and Distributed Processing Symposium Workshops (IPDPSW), San Francisco, CA, USA, 27–31 May 2024; pp. 900–908.

- Mattia, G.P.; Pietrabissa, A.; Beraldi, R. A Load Balancing Algorithm for Equalising Latency Across Fog or Edge Computing Nodes. IEEE Trans. Serv. Comput. 2023, 16, 3129–3140.

- Ameena, B.; Ramasamy, L. Drawer Cosine optimization enabled task offloading in fog computing. Expert Syst. Appl. 2025, 259, 125212.

- Alotaibi, J.; Alazzawi, L. SaFIoV: A Secure and Fast Communication in Fog-based Internet-of-Vehicles using SDN and Blockchain. In Proceedings of the 2021 IEEE International Midwest Symposium on Circuits and Systems (MWSCAS), Lansing, MI, USA, 9–11 August 2021; pp. 334–339.

- Xia, B.; Kong, F.; Zhou, J.; Tang, X.; Gong, H. A Delay-Tolerant Data Transmission Scheme for Internet of Vehicles Based on Software Defined Cloud-Fog Networks. IEEE Access 2020, 8, 65911–65922.

- Singh, S.P. Effective load balancing strategy using fuzzy golden eagle optimization in fog computing environment. Sustain. Comput. Inform. Syst. 2022, 35, 100766.

- Ibrahim, A.H.; Fayed, Z.T.; Faheem, H.M. Fog-Based CDN Framework for Minimizing Latency of Web Services Using Fog-Based HTTP Browser. Future Internet 2021, 13, 320.

- Hameed, A.R.; Islam, S.u.; Ahmad, I.; Munir, K. Energy- and performance-aware load-balancing in vehicular fog computing. Sustain. Comput. Inform. Syst. 2021, 30, 100454.

- Sethi, V.; Pal, S. FedDOVe: A Federated Deep Q-learning-based Offloading for Vehicular fog computing. Future Gener. Comput. Syst. 2023, 141, 96–105.

- Liu, Z.; Dai, P.; Xing, H.; Yu, Z.; Zhang, W. A Distributed Algorithm for Task Offloading in Vehicular Networks With Hybrid Fog/Cloud Computing. IEEE Trans. Syst. Man Cybern. Syst. 2022, 52, 4388–4401.

- Veloso, A.F.D.S.; De Moura, M.C.L.; Mendes, D.L.D.S.; Junior, J.V.R.; Rabelo, R.A.L.; Rodrigues, J.J.P.C. Towards Sustainability using an Edge-Fog-Cloud Architecture for Demand-Side Management. In Proceedings of the IEEE International Conference on Systems, Man and Cybernetics, Melbourne, Australia, 17–20 October 2021; Institute of Electrical and Electronics Engineers Inc.: Piscataway Township, NJ, USA, 2021; pp. 1731–1736.

- Nazeri, M.; Soltanaghaei, M.; Khorsand, R. A predictive energy-aware scheduling strategy for scientific workflows in fog computing. Expert Syst. Appl. 2024, 247, 123192. [Google Scholar] [CrossRef]

- Marwein, P.S.; Sur, S.N.; Kandar, D. Efficient load distribution in heterogeneous vehicular networks using hierarchical controllers. Comput. Netw. 2024, 254, 110805. [Google Scholar] [CrossRef]

- Wang, J.; Huang, D. Visual Servo Image Real-time Processing System Based on Fog Computing. Hum.-Centric Comput. Inf. Sci. 2022, 13, 1–14. [Google Scholar] [CrossRef]

- Liu, W.; Huang, G.; Zheng, A.; Liu, J. Research on the optimization of IIoT data processing latency. Comput. Commun. 2020, 151, 290–298. [Google Scholar] [CrossRef]

- Badidi, E.; Ragmani, A. An architecture for QoS-aware fog service provisioning. Procedia Comput. Sci. 2020, 170, 411–418.

- Das, D.; Sengupta, S.; Satapathy, S.M.; Saini, D. HOGWO: A fog inspired optimized load balancing approach using hybridized grey wolf algorithm. Cluster Comput. 2024, 27, 13273–13294.

- Rehman, A.U.; Ahmad, Z.; Jehangiri, A.I.; Ala’Anzy, M.A.; Othman, M.; Umar, A.I.; Ahmad, J. Dynamic Energy Efficient Resource Allocation Strategy for Load Balancing in Fog Environment. IEEE Access 2020, 8, 199829–199839.

- Wu, B.; Lv, X.; Deyah Shamsi, W.; Gholami Dizicheh, E. Optimal deploying IoT services on the fog computing: A metaheuristic-based multi-objective approach. J. King Saud Univ. Comput. Inf. Sci. 2022, 34, 10010–10027.

- Wu, Y.; Wang, Y.; Wei, Y.; Leng, S. Intelligent deployment of dedicated servers: Rebalancing the computing resource in IoT. In Proceedings of the 2020 IEEE Wireless Communications and Networking Conference Workshops (WCNCW), Seoul, Republic of Korea, 6–9 April 2020; pp. 1–6.

- Hossain, M.T.; De Grande, R.E. Cloudlet Dwell Time Model and Resource Availability for Vehicular Fog Computing. In Proceedings of the 2021 IEEE/ACM 25th International Symposium on Distributed Simulation and Real Time Applications (DSRT), Valencia, Spain, 27–29 September 2021; Institute of Electrical and Electronics Engineers Inc.: Piscataway Township, NJ, USA, 2021.

- Cheng, Y.; Vijayaraj, A.; Sree Pokkuluri, K.; Salehnia, T.; Montazerolghaem, A.; Rateb, R. Vehicular Fog Resource Allocation Approach for VANETs Based on Deep Adaptive Reinforcement Learning Combined With Heuristic Information. IEEE Access 2024, 12, 139056–139075.

- Fayos-Jordan, R.; Felici-Castell, S.; Segura-Garcia, J.; Lopez-Ballester, J.; Cobos, M. Performance comparison of container orchestration platforms with low cost devices in the fog, assisting Internet of Things applications. J. Netw. Comput. Appl. 2020, 169, 102788.

- Huber, S.; Pfandzelter, T.; Bermbach, D. Identifying Nearest Fog Nodes With Network Coordinate Systems. In Proceedings of the 2023 IEEE International Conference on Cloud Engineering (IC2E), Boston, MA, USA, 25–29 September 2023; Institute of Electrical and Electronics Engineers Inc.: Piscataway Township, NJ, USA, 2023; pp. 222–223.

- Kashyap, V.; Ahuja, R.; Kumar, A. A hybrid approach for fault-tolerance aware load balancing in fog computing. Cluster Comput. 2023, 27, 5217–5233.

- Mattia, G.P.; Beraldi, R. On real-time scheduling in Fog computing: A Reinforcement Learning algorithm with application to smart cities. In Proceedings of the 2022 IEEE International Conference on Pervasive Computing and Communications Workshops and other Affiliated Events, PerCom Workshops 2022, Pisa, Italy, 21–25 May 2022; Institute of Electrical and Electronics Engineers Inc.: Piscataway Township, NJ, USA, 2022; pp. 187–193.

- Aldağ, M.; Kırsal, Y.; Ülker, S. An analytical modelling and QoS evaluation of fault-tolerant load balancer and web servers in fog computing. J. Supercomput. 2022, 78, 12136–12158.

- Bhatia, M.; Sood, S.K.; Kaur, S. Quantumized approach of load scheduling in fog computing environment for IoT applications. Computing 2020, 102, 1097–1115.

- Bukhari, A.A.; Hussain, F.K. Fuzzy logic trust-based fog node selection. Internet Things 2024, 27, 101293.

- Hwang, R.H.; Lai, Y.-C.; Lin, Y.D. Queue-Length-Based Offloading for Delay Sensitive Applications in Federated Cloud-Edge-Fog Systems. In Proceedings of the IEEE Consumer Communications and Networking Conference, CCNC, Las Vegas, NV, USA, 6–9 January 2024; Institute of Electrical and Electronics Engineers Inc.: Piscataway Township, NJ, USA, 2024; pp. 406–411.

- Najafizadeh, A.; Salajegheh, A.; Rahmani, A.M.; Sahafi, A. Multi-objective Task Scheduling in cloud-fog computing using goal programming approach. Cluster Comput. 2021, 25, 141–165.

- Shamsa, Z.; Rezaee, A.; Adabi, S.; Rahmani, A.M. A decentralized prediction-based workflow load balancing architecture for cloud/fog/IoT environments. Computing 2023, 106, 201–239.

- Yin, C.; Fang, Q.; Li, H.; Peng, Y.; Xu, X.; Tang, D. An optimized resource scheduling algorithm based on GA and ACO algorithm in fog computing. J. Supercomput. 2024, 80, 4248–4285.

- Premkumar, N.; Santhosh, R. Pelican optimization algorithm with blockchain for secure load balancing in fog computing. Multimed. Tools Appl. 2023, 83, 53417–53439.

- Talaat, F.M.; Ali, H.A.; Saraya, M.S.; Saleh, A.I. Effective scheduling algorithm for load balancing in fog environment using CNN and MPSO. Knowl. Inf. Syst. 2022, 64, 773–797.

- Azizi, S.; Shojafar, M.; Farzin, P.; Dogani, J. DCSP: A delay and cost-aware service placement and load distribution algorithm for IoT-based fog networks. Comput. Commun. 2024, 215, 9–20.

- Baniata, H.; Anaqreh, A.; Kertesz, A. PF-BTS: A Privacy-Aware Fog-enhanced Blockchain-assisted task scheduling. Inf. Process. Manag. 2021, 58, 102393.

- Ebrahim, M.; Hafid, A. Resilience and load balancing in Fog networks: A Multi-Criteria Decision Analysis approach. Microprocess. Microsyst. 2023, 101, 104893.

- Abdulazeez, D.H.; Askar, S.K. A Novel Offloading Mechanism Leveraging Fuzzy Logic and Deep Reinforcement Learning to Improve IoT Application Performance in a Three-Layer Architecture Within the Fog-Cloud Environment. IEEE Access 2024, 12, 39936–39952.

- Al Maruf, M.; Singh, A.; Azim, A.; Auluck, N. Faster Fog Computing Based Over-the-Air Vehicular Updates: A Transfer Learning Approach. IEEE Trans. Serv. Comput. 2022, 15, 3245–3259.

- Stavrinides, G.L.; Karatza, H.D. Multicriteria scheduling of linear workflows with dynamically varying structure on distributed platforms. Simul. Model. Pract. Theory 2021, 112, 102369.

- Johri, P.; Balu, V.; Jayaprakash, B.; Jain, A.; Thacker, C.; Kumari, A. Quality of service-based machine learning in fog computing networks for e-healthcare services with data storage system. Soft Comput. 2023.

- Javaheri, D.; Gorgin, S.; Lee, J.-A.; Masdari, M. An improved discrete harris hawk optimization algorithm for efficient workflow scheduling in multi-fog computing. Sustain. Comput. Inform. Syst. 2022, 36, 100787.

- Kaur, A.; Auluck, N. Scheduling algorithms for truly heterogeneous hierarchical fog networks. Softw. Pract. Exp. 2022, 52, 2411–2438.

- Peralta, G.; Garrido, P.; Bilbao, J.; Agüero, R.; Crespo, P.M. Fog to cloud and network coded based architecture: Minimizing data download time for smart mobility. Simul. Model. Pract. Theory 2020, 101.

- Qayyum, T.; Trabelsi, Z.; Malik, A.W.; Hayawi, K. Multi-Level Resource Sharing Framework Using Collaborative Fog Environment for Smart Cities. IEEE Access 2021, 9, 21859–21869.

- Singh, S.; Mishra, A.K.; Arjaria, S.K.; Bhatt, C.; Pandey, D.S.; Yadav, R.K. Improved deep network-based load predictor and optimal load balancing in cloud-fog services. Concurr. Comput. 2024, 36, e8275.

- Pourkiani, M.; Abedi, M. Machine learning based task distribution in heterogeneous fog-cloud environments. In Proceedings of the 2020 International Conference on Software, Telecommunications and Computer Networks (SoftCOM), Hvar, Croatia, 17–19 September 2020.

- Dehury, C.K.; Veeravalli, B.; Srirama, S.N. HeRAFC: Heuristic resource allocation and optimization in MultiFog-cloud environment. J. Parallel Distrib. Comput. 2024, 183, 104760.

- Singh, S.; Sham, E.E.; Vidyarthi, D.P. Optimizing workload distribution in Fog-Cloud ecosystem: A JAYA based meta-heuristic for energy-efficient applications. Appl. Soft Comput. 2024, 154, 111391.

- Gowri, V.; Baranidharan, B. An energy efficient and secure model using chaotic levy flight deep Q-learning in healthcare system. Sustain. Comput. Inform. Syst. 2023, 39, 100894.

- Pallewatta, S.; Kostakos, V.; Buyya, R. MicroFog: A framework for scalable placement of microservices-based IoT applications in federated Fog environments. J. Syst. Softw. 2024, 209, 111910.

- Zeng, D.; Gu, L.; Yao, H. Towards energy efficient service composition in green energy powered Cyber–Physical Fog Systems. Future Gener. Comput. Syst. 2020, 105, 757–765.

- Beraldi, R.; Canali, C.; Lancellotti, R.; Mattia, G.P. Distributed load balancing for heterogeneous fog computing infrastructures in smart cities. Pervasive Mob. Comput. 2020, 67, 101221.

- Khan, S.; Ali Shah, I.; Tairan, N.; Shah, H.; Faisal Nadeem, M. Optimal Resource Allocation in Fog Computing for Healthcare Applications. Comput. Mater. Contin. 2022, 71, 6147–6163.

- Lone, K.; Sofi, S.A. e-TOALB: An efficient task offloading in IoT-fog networks. Concurr. Comput. 2024, 36, e7951.

- Abbas, N.; Zhang, Y.; Taherkordi, A.; Skeie, T. Mobile edge computing: A survey. IEEE Internet Things J. 2017, 5, 450–465.

- Shaik, S.; Baskiyar, S. Distributed service placement in hierarchical fog environments. Sustain. Comput. Inform. Syst. 2022, 34, 100744.

- Boudieb, W.; Malki, A.; Malki, M.; Badawy, A.; Barhamgi, M. Microservice instances selection and load balancing in fog computing using deep reinforcement learning approach. Future Gener. Comput. Syst. 2024, 156, 77–94.

- Fugkeaw, S.; Prasad Gupta, R.; Worapaluk, K. Secure and Fine-Grained Access Control With Optimized Revocation for Outsourced IoT EHRs With Adaptive Load-Sharing in Fog-Assisted Cloud Environment. IEEE Access 2024, 12, 82753–82768.

- Stypsanelli, I.; Brun, O.; Prabhu, B.J. Performance Evaluation of Some Adaptive Task Allocation Algorithms for Fog Networks. In Proceedings of the 2021 IEEE 5th International Conference on Fog and Edge Computing (ICFEC2021), Melbourne, Australia, 10–13 May 2021; Institute of Electrical and Electronics Engineers Inc.: Piscataway Township, NJ, USA, 2021; pp. 84–88.

- Liu, H.; Long, S.; Li, Z.; Fu, Y.; Zuo, Y.; Zhang, X. Revenue Maximizing Online Service Function Chain Deployment in Multi-Tier Computing Network. IEEE Trans. Parallel Distrib. Syst. 2023, 34, 781–796.

- Bala, M.I.; Chishti, M.A. Offloading in Cloud and Fog Hybrid Infrastructure Using iFogSim. In Proceedings of the 10th International Conference on Cloud Computing, Data Science & Engineering, Noida, India, 29–31 January 2020; pp. 421–426.

- Tran-Dang, H.; Kim, D.-S. Dynamic Task Offloading Approach for Task Delay Reduction in the IoT-enabled Fog Computing Systems. In Proceedings of the 2022 IEEE 20th International Conference on Industrial Informatics (INDIN), Perth, Australia, 25–28 July 2022; Institute of Electrical and Electronics Engineers Inc.: Piscataway Township, NJ, USA, 2022; pp. 61–66.

- Hayawi, K.; Anwar, Z.; Malik, A.W.; Trabelsi, Z. Airborne Computing: A Toolkit for UAV-Assisted Federated Computing for Sustainable Smart Cities. IEEE Internet Things J. 2023, 10, 18941–18950.

- Huang, X.; Cui, Y.; Chen, Q.; Zhang, J. Joint Task Offloading and QoS-Aware Resource Allocation in Fog-Enabled Internet-of-Things Networks. IEEE Internet Things J. 2020, 7, 7194–7206.

- Zhang, T.; Yue, D.; Yu, L.; Dou, C.; Xie, X. Joint Energy and Workload Scheduling for Fog-Assisted Multimicrogrid Systems: A Deep Reinforcement Learning Approach. IEEE Syst. J. 2023, 17, 164–175.

- Firouzi, F.; Farahani, B.; Panahi, E.; Barzegari, M. Task Offloading for Edge-Fog-Cloud Interplay in the Healthcare Internet of Things (IoT). In Proceedings of the 2021 IEEE International Conference on Omni-Layer Intelligent Systems (COINS) 2021, Barcelona, Spain, 23–26 August 2021; Institute of Electrical and Electronics Engineers Inc.: Piscataway Township, NJ, USA, 2021; pp. 1–8.

- Keshri, R.; Vidyarthi, D.P. An ML-based task clustering and placement using hybrid Jaya-gray wolf optimization in fog-cloud ecosystem. Concurr. Comput. 2024, 36, e8109.

- Nethaji, S.V.; Chidambaram, M.; Forestiero, A. Differential Grey Wolf Load-Balanced Stochastic Bellman Deep Reinforced Resource Allocation in Fog Environment. Appl. Comput. Intell. Soft Comput. 2022, 2022, 1–13.

- Singh, S.P.; Kumar, R.; Sharma, A.; Nayyar, A. Leveraging energy-efficient load balancing algorithms in fog computing. Concurr. Comput. 2022, 34, e5913.

- Khan, S.; Shah, I.A.; Nadeem, M.F.; Jan, S.; Whangbo, T.; Ahmad, S. Optimal Resource Allocation and Task Scheduling in Fog Computing for Internet of Medical Things Applications. Hum.-Centric Comput. Inf. Sci. 2023, 13.

- Bala, M.I.; Chishti, M.A. Optimizing the Computational Offloading Decision in Cloud-Fog Environment. In Proceedings of the 2020 International Conference on Innovative Trends in Information Technology (ICITIIT), Kottayam, India, 13–14 February 2020; pp. 1–5.

- Tajalli, S.Z.; Kavousi-Fard, A.; Mardaneh, M.; Khosravi, A.; Razavi-Far, R. Uncertainty-Aware Management of Smart Grids Using Cloud-Based LSTM-Prediction Interval. IEEE Trans. Cybern. 2022, 52, 9964–9977.

- Casadei, R.; Fortino, G.; Pianini, D.; Placuzzi, A.; Savaglio, C.; Viroli, M. A Methodology and Simulation-Based Toolchain for Estimating Deployment Performance of Smart Collective Services at the Edge. IEEE Internet Things J. 2022, 9, 20136–20148.

- Yakubu, I.Z.; Murali, M. An efficient meta-heuristic resource allocation with load balancing in IoT-Fog-cloud computing environment. J. Ambient. Intell. Humaniz. Comput. 2023, 14, 2981–2992.

- Kaur, M.; Aron, R. FOCALB: Fog Computing Architecture of Load Balancing for Scientific Workflow Applications. J. Grid Comput. 2021, 19, 40.

- Singh, S.P.; Kumar, R.; Sharma, A.; Abawajy, J.H.; Kaur, R. Energy efficient load balancing hybrid priority assigned laxity algorithm in fog computing. Cluster Comput. 2022, 25, 3325–3342.

- Dubey, K.; Sharma, S.C.; Kumar, M. A Secure IoT Applications Allocation Framework for Integrated Fog-Cloud Environment. J. Grid Comput. 2022, 20, 5.

- Ibrahim AlShathri, S.; Hassan, D.S.M.; Allaoua Chelloug, S. Latency-Aware Dynamic Second Offloading Service in SDN-Based Fog Architecture. Comput. Mater. Contin. 2023, 75, 1501–1526.

- Hassan, S.R.; Rehman, A.U.; Alsharabi, N.; Arain, S.; Quddus, A.; Hamam, H. Design of load-aware resource allocation for heterogeneous fog computing systems. PeerJ Comput. Sci. 2024, 10, e1986.

- Singh, A.; Auluck, N. Load balancing aware scheduling algorithms for fog networks. Softw. Pract. Exp. 2020, 50, 2012–2030.

- Tran-Dang, H.; Kim, D.-S. Dynamic collaborative task offloading for delay minimization in the heterogeneous fog computing systems. J. Commun. Netw. 2023, 25, 244–252.

- Huang, X.; Fan, W.; Chen, Q.; Zhang, J. Energy-Efficient Resource Allocation in Fog Computing Networks With the Candidate Mechanism. IEEE Internet Things J. 2020, 7, 8502–8512.

- Yi, C.; Cai, J.; Zhu, K.; Wang, R. A Queueing Game Based Management Framework for Fog Computing With Strategic Computing Speed Control. IEEE Trans. Mob. Comput. 2022, 21, 1537–1551.

- Potu, N.; Bhukya, S.; Jatoth, C.; Parvataneni, P. Quality-aware energy efficient scheduling model for fog computing comprised IoT network. Comput. Electr. Eng. 2022, 97, 107603.

- Sabireen, H.; Venkataraman, N. A Hybrid and Light Weight Metaheuristic Approach with Clustering for Multi-Objective Resource Scheduling and Application Placement in Fog Environment. Expert Syst. Appl. 2023, 223, 119895.

- Ramezani Shahidani, F.; Ghasemi, A.; Toroghi Haghighat, A.; Keshavarzi, A. Task scheduling in edge-fog-cloud architecture: A multi-objective load balancing approach using reinforcement learning algorithm. Computing 2023, 105, 1337–1359.

- Sahil; Sood, S.K.; Chang, V. Fog-Cloud-IoT centric collaborative framework for machine learning-based situation-aware traffic management in urban spaces. Computing 2022, 106, 1193–1225.

- Talaat, F.M.; Saraya, M.S.; Saleh, A.I.; Ali, H.A.; Ali, S.H. A load balancing and optimization strategy (LBOS) using reinforcement learning in fog computing environment. J. Ambient. Intell. Humaniz. Comput. 2020, 11, 4951–4966.

- Abbasi, M.; Yaghoobikia, M.; Rafiee, M.; Khosravi, M.R.; Menon, V.G. Optimal Distribution of Workloads in Cloud-Fog Architecture in Intelligent Vehicular Networks. IEEE Trans. Intell. Transp. Syst. 2021, 22, 4706–4715.

- Mota, E.; Barbosa, J.; Figueiredo, G.B.; Peixoto, M.; Prazeres, C. A self-configuration framework for balancing services in the fog of things. Internet Things Cyber-Phys. Syst. 2024, 4, 318–332.

- Yang, J. Low-latency cloud-fog network architecture and its load balancing strategy for medical big data. J. Ambient. Intell. Humaniz. Comput. 2020.

- Jain, A.; Jatoth, C.; Gangadharan, G.R. Bi-level optimization of resource allocation and appliance scheduling in residential areas using a Fog of Things (FOT) framework. Cluster Comput. 2024, 27, 219–229.

- Daghayeghi, A.; Nickray, M. Delay-Aware and Energy-Efficient Task Scheduling Using Strength Pareto Evolutionary Algorithm II in Fog-Cloud Computing Paradigm. Wirel. Pers. Commun. 2024, 138, 409–457.

- Alqahtani, F.; Amoon, M.; Nasr, A.A. Reliable scheduling and load balancing for requests in cloud-fog computing. Peer-to-Peer Netw. Appl. 2021, 14, 1905–1916.

- Deng, W.; Zhu, L.; Shen, Y.; Zhou, C.; Guo, J.; Cheng, Y. A novel multi-objective optimized DAG task scheduling strategy for fog computing based on container migration mechanism. Wirel. Netw. 2024, 31, 1005–1019.

- Chuang, Y.-T.; Hsiang, C.-S. A popularity-aware and energy-efficient offloading mechanism in fog computing. J. Supercomput. 2022, 78, 19435–19458.

- Oprea, S.-V.; Bâra, A. An Edge-Fog-Cloud computing architecture for IoT and smart metering data. Peer-to-Peer Netw. Appl. 2023, 16, 818–845.

- Ali, H.S.; Sridevi, R. Mobility and Security Aware Real-Time Task Scheduling in Fog-Cloud Computing for IoT Devices: A Fuzzy-Logic Approach. Comput. J. 2024, 67, 782–805.

- Verma, R.; Chandra, S. HBI-LB: A Dependable Fault-Tolerant Load Balancing Approach for Fog based Internet-of-Things Environment. J. Supercomput. 2023, 79, 3731–3749.

- Chiang, M.; Zhang, T. Fog and IoT: An overview of research opportunities. IEEE Internet Things J. 2016, 3, 854–864.

- Mahapatra, A.; Majhi, S.K.; Mishra, K.; Pradhan, R.; Rao, D.C.; Panda, S.K. An energy-aware task offloading and load balancing for latency-sensitive IoT applications in the Fog-Cloud continuum. IEEE Access 2024, 12, 14334–14349.

| Criteria | Inclusion | Exclusion |
|---|---|---|
| Literature | Peer-reviewed journal articles (Q1/Q2) | Non-indexed journals, retracted papers, conference proceedings |
| Publication Date | 2020–2024 | Prior to 2020 |
| Language | English | Non-English |
| Focus | Fog computing with emphasis on load balancing | Studies on edge computing or unrelated topics |

| Criterion ID | Description |
|---|---|
| C1 | Are the research objectives clearly defined? |
| C2 | Are the methods well described and appropriate? |
| C3 | Is the experimental design justifiable? |
| C4 | Are the performance results measured and reported in detail? |
| C5 | Are limitations acknowledged and conclusions supported by results? |

| Challenge | Paper(s) |
|---|---|
| Resource Heterogeneity | [8,9,10,12,13,15,22,25,31,35,54,57,59,66,67,68,69] |
| Dynamic Workloads | [8,9,10,12,13,15,16,22,29,30,34,39,42,63,70,71,72,73] |
| Latency Sensitivity | [8,10,12,13,15,22,52,54] |
| Energy Management and Efficiency | [8,10,13,15,20,21,22,25,30,34,35,36,37,38,39,53,54,55,57,59,66,69,70,71,74,75,76,77,78] |
| Security and Privacy | [8,10,14,15,19,21,35,38,40,41,45,46,54,55,56,57,59,62,63,64,65,66,69,70,71,72,74,76,79,80,81,82,83,84,85,86,87,88] |
| Scalability | [8,12,13,14,15,20,22,27,35,36,37,39,41,44,48,52,55,63,66,77,80,88,89,90,91,92,93,94,95] |
| Mobility and Environmental Changes | [32,39,63,72,96] |

| Category | Best With | Example Use Case |
|---|---|---|
| Heuristic | Real-time, moderate complexity | Smart traffic coordination |
| Meta-Heuristic | Complex optimization problems | Vehicle routing and resource migration |
| ML/RL | Adaptive/predictive control | Energy-aware scheduling in fog systems |
| Fuzzy Logic | Input uncertainty or vagueness | Health monitoring with fuzzy sensor data |
| Hybrid Approaches | Multi-objective, large-scale, or mobile systems | Smart cities with dynamic resource allocation |
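
The heuristic category favours simple, fast placement rules. As an illustration only, the sketch below implements a greedy earliest-completion-time heuristic (a generic list-scheduling rule, not an algorithm taken from any reviewed study): tasks are sorted by size, and each is placed on the fog node that would finish it soonest given the work already queued there. The `FogNode` class, the MIPS/MI workload model, and all names are hypothetical assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class FogNode:
    """Hypothetical fog node: speed in MIPS and queued work in millions of instructions (MI)."""
    name: str
    mips: float
    queued_mi: float = 0.0
    assigned: list = field(default_factory=list)

    def completion_time(self, task_mi: float) -> float:
        # Seconds needed to drain the current queue plus the new task on this node.
        return (self.queued_mi + task_mi) / self.mips


def greedy_assign(tasks: dict[str, float], nodes: list[FogNode]) -> dict[str, str]:
    """Place tasks (largest first) on the node with the earliest estimated completion time."""
    placement = {}
    for task_id, size in sorted(tasks.items(), key=lambda kv: kv[1], reverse=True):
        best = min(nodes, key=lambda n: n.completion_time(size))
        best.queued_mi += size          # the chosen node's queue grows by the task size
        best.assigned.append(task_id)
        placement[task_id] = best.name
    return placement


nodes = [FogNode("fog-a", 1000), FogNode("fog-b", 500)]
placement = greedy_assign({"t1": 400, "t2": 300, "t3": 200, "t4": 100}, nodes)
```

With a 1000-MIPS and a 500-MIPS node and tasks of 400, 300, 200, and 100 MI, the faster node receives t1, t3, and t4 and the slower node receives t2, so the nodes finish at 0.7 s and 0.6 s. A meta-heuristic would instead search over many complete placements, trading run time for potentially better solutions.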

| Category | Best When | Example Use Case |
|---|---|---|
| Resource-aware | Fog resources such as CPU or bandwidth are limited | Workload balancing in industrial IoT systems |
| Deadline-aware | Tasks must be completed within strict time constraints | Real-time video analytics or emergency response |
| Energy-aware | Reducing power usage is a high priority | Battery-constrained sensor networks or mobile fog nodes |
| Latency-aware | The application requires real-time responsiveness | Autonomous vehicles, remote surgery, AR/VR streaming |
| Security-aware | Sensitive data or user privacy is involved | Healthcare data processing or financial transactions |
| Cost-aware | Cloud offloading or communication costs must be minimized | Fog–cloud collaboration in smart cities |
| Context-aware | Scheduling decisions must adapt to environmental or user context | Location-based task delegation or user mobility prediction |
| Hybrid scheduling | Multiple conflicting objectives must be balanced | Smart cities managing energy, latency, and cost together |
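
For deadline-aware scheduling, a common baseline is earliest-deadline-first (EDF) ordering. The sketch below assumes a single fog node, non-preemptive execution, known execution times, and all tasks released at time 0; under those assumptions, serving tasks in deadline order minimizes the maximum lateness (Jackson's rule). `Task` and `edf_schedule` are illustrative names, not drawn from the reviewed studies.

```python
import heapq
from typing import NamedTuple


class Task(NamedTuple):
    task_id: str
    exec_time: float  # processing time on this node in seconds (assumed known)
    deadline: float   # absolute deadline in seconds, measured from time 0


def edf_schedule(tasks: list[Task]) -> tuple[list[tuple[str, float]], list[str]]:
    """Run tasks non-preemptively in earliest-deadline-first order on one node.

    Returns (task id and finish time in execution order, ids of tasks that missed their deadline).
    """
    heap = [(t.deadline, t.task_id, t) for t in tasks]  # task_id breaks deadline ties
    heapq.heapify(heap)
    clock = 0.0
    finished, missed = [], []
    while heap:
        _, _, task = heapq.heappop(heap)
        clock += task.exec_time
        finished.append((task.task_id, clock))
        if clock > task.deadline:
            missed.append(task.task_id)
    return finished, missed


finished, missed = edf_schedule(
    [Task("video", 2.0, 3.0), Task("alarm", 0.5, 1.0), Task("log", 4.0, 5.0)]
)
```

Here the alarm and video tasks meet their deadlines, while the log task finishes at 6.5 s and misses its 5 s deadline; a deadline-aware load balancer could use exactly this signal to move such a task to another node.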

| Category | Best When | Example Use Case |
|---|---|---|
| Full Offloading | End device is severely resource-constrained, or idle task tolerance is high | Wearable health sensors offloading all processing to fog/cloud |
| Partial Offloading | Some processing can run on the device to save bandwidth or preserve privacy | Edge preprocessing for smart surveillance before cloud analytics |
| Dynamic Offloading | Network or device status changes rapidly; decisions must be adaptive | Mobile users in vehicular fog systems or smart retail scenarios |
| Application-Specific Offloading | Offloading depends on application logic or requirements | Augmented reality or IoT applications with tailored offloading models |
| Resource-Aware Offloading | Fog/cloud resources are heterogeneous, and availability varies | Load balancing in multi-tier fog infrastructure |
| Latency-Aware Offloading | Real-time response is critical | Tactile internet or remote healthcare diagnostics |
| Energy-Aware Offloading | Energy savings are more important than performance | Battery-powered IoT devices or sensor networks |
| Context-Aware Offloading | Offloading needs to adapt to user behavior or environmental data | Location-based task assignment in smart cities |
| Hybrid Offloading | Multiple objectives such as latency, energy, and context must be optimized together | Smart transportation systems with high mobility and QoS constraints |
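
Latency-aware offloading can be made concrete with a simple cost model, assumed here purely for illustration: local latency is the task's CPU cycles divided by the device's clock rate, while remote latency adds uplink transmission time (input bits over uplink rate) and a round-trip network delay to the remote computation time. Result download and queueing at the remote node are ignored. The `Target` class, its fields, and all numbers below are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Target:
    """Hypothetical execution target: the device itself or a fog/cloud node."""
    name: str
    cpu_hz: float                       # CPU cycles per second available to the task
    uplink_bps: Optional[float] = None  # None means local execution (no data transfer)
    rtt_s: float = 0.0                  # round-trip network delay to reach the target


def estimated_latency(cycles: float, input_bits: float, target: Target) -> float:
    """Estimated completion time in seconds under the simple model described above."""
    compute_s = cycles / target.cpu_hz
    if target.uplink_bps is None:
        return compute_s
    transmit_s = input_bits / target.uplink_bps
    return transmit_s + target.rtt_s + compute_s


def choose_target(cycles: float, input_bits: float, targets: list[Target]) -> Target:
    """Latency-aware decision: pick the target with the lowest estimated latency."""
    return min(targets, key=lambda t: estimated_latency(cycles, input_bits, t))


targets = [
    Target("device", 1e9),
    Target("fog", 1e10, uplink_bps=50e6, rtt_s=0.01),
    Target("cloud", 5e10, uplink_bps=20e6, rtt_s=0.1),
]
```

For a 2-gigacycle task with 1 MB (8e6 bits) of input, the fog node wins (about 0.37 s, versus 2 s locally and 0.54 s in the cloud); for a 10-megacycle task with the same input, transfer cost dominates and local execution wins. An energy-aware or hybrid policy would replace or extend the `key` function with an energy or weighted multi-objective cost.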

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Aldossary, D.; Aldahasi, E.; Balharith, T.; Helmy, T. A Systematic Literature Review on Load-Balancing Techniques in Fog Computing: Architectures, Strategies, and Emerging Trends. Computers 2025, 14, 217. https://doi.org/10.3390/computers14060217
