Abstract

This study investigated how undergraduate students collaborated when working with ChatGPT and what teamwork approaches they used, focusing on students’ preferences, conflict resolution, reliance on AI-generated content, and perceived learning outcomes. In a course on the Applications of Information Systems, 153 undergraduate students were organized into teams of three. Team members worked together to create a report and a presentation on a specific data mining technique, using ChatGPT, internet resources, and class materials. The findings revealed no strong preference for a single collaborative mode, though Modes #2, #4, and #5 were marginally favored due to clearer structures, role clarity, or increased individual autonomy. Students encountered a moderate level of initial disagreement (30.44% on average), which was eventually resolved, indicating constructive debates that can improve critical thinking. Data also showed that students moderately modified ChatGPT’s responses (50% on average) and based nearly half (44%) of their overall output on AI-generated content, suggesting a balanced yet varied level of reliance on AI. Notably, a statistically significant relationship emerged between students’ perceived learning and actual performance, implying that self-assessment can complement objective academic measures. Students also employed a diverse mix of communication tools, from synchronous (phone calls) to asynchronous (Instagram) and collaborative platforms (Google Drive), valuing their ease of use but facing scheduling, technical, and engagement issues. Overall, these results point to the need for flexible collaborative patterns, more supportive AI use policies, and versatile communication methods so that educators can apply collaborative learning effectively and maintain academic integrity.

1. Introduction

Recently, generative artificial intelligence (GenAI) tools have gained increasing popularity. They are based on large language models (LLMs) trained on vast amounts of data and can generate human-like content (e.g., text, images, video) in response to prompts (e.g., [1]). They perform various language-related tasks, such as text generation [1,2], question answering [3], and translation, while comprehending the context to produce responses that resemble human language [4]. Currently, the most popular GenAI tool is ChatGPT [5]. It is an LLM based on the generative pre-trained transformer (GPT) architecture, which is designed for text generation and language understanding (e.g., [6]). In February 2025, there were 3.9 billion visits to chatgpt.com [7].

Education has adopted such GenAI tools because they have the potential to facilitate adaptive and personalized learning [2,3,6,8]; increase student learning [1] and engagement [2,3]; enable the understanding of complex concepts [8,9]; provide personalized feedback [2,6,8,9,10]; aid in developing innovative assessments [6]; and support research and data analysis [2,4,6]. However, there are concerns with respect to academic integrity, plagiarism, and cheating [1,3,10,11]; potential biases and falsified information [1,2,6]; copyright [2]; privacy [9,11,12]; and the possible reduction in human interaction [13].

An effective instructional method is collaborative learning [14]. Collaborative learning refers to learners collaborating with other learners to co-construct knowledge through social interaction and is based on the theoretical framework of social constructivism learning theory and group or social cognition [14,15]. It involves two or more students working together to achieve a common goal or reach a common objective, typically through peer-directed interactions [16,17]. It is well known [18] that students who learn in small groups typically exhibit higher academic achievement, more positive attitudes toward learning, and more persistence in science, mathematics, engineering, and technology (SMET) courses or programs than their peers who learn individually (not collaboratively). Also, collaboration has significant positive effects on students’ knowledge gain, skill acquisition, and perceptions in computer-based learning settings, while computer use has positive effects on knowledge gain, skill acquisition, student perceptions, group task performance, and social interaction in collaborative learning settings [14]. Although various digital technologies have been used in collaborative learning, previous studies pointed out that there is a need for empirical research regarding the use of AI and ChatGPT in collaborative learning [12,19,20].

2. Theoretical Background

Many previous studies argued that artificial intelligence (AI) can facilitate collaborative learning [10,12,21,22]. More specifically, AI can support group creation [12,23], group discussions [2,8,22], collaborative writing [2,24], group outcomes’ evaluation [12], and group management [22].

Recent literature reviews found that AI tools support collaborative learning by providing peer learning opportunities [25], enabling personalized learning through feedback and group work [26], and enhancing students’ cognitive engagement [27]. Most previous studies used AI-driven learning analytics to analyze students’ communicative discourse, behavioral, and evaluation data to display visualizations of students’ outcomes [27]. Also, ref. [28] found that using AI to analyze discussion data helps to understand the online collaborative learning process, leading to increased student learning and higher-order presence. Additionally, ref. [29] observed that ChatGPT-supported collaborative argumentation significantly enhanced English as a Foreign Language (EFL) students’ argumentative speaking performance, critical thinking awareness, and collaboration tendency. Furthermore, ref. [30] found that AI-empowered recommendations could significantly improve collaborative knowledge-building, co-regulated behaviors, and group performance. On the other hand, a few studies noticed that the overuse of ChatGPT may decrease students’ creative and collaborative abilities [31]. For example, when working on group projects, students preferred using ChatGPT on their own rather than brainstorming and working with peers.

Many researchers [12,19,20] recognized the lack of empirical studies on AI in collaborative learning and stressed the need for further research on how AI can be used in collaborative learning. Although several previous studies used AI to support the collaborative learning process, there is limited research on how students actually collaborate and communicate among themselves when they use AI tools. Knowing how students prefer to work together when prompting ChatGPT is important because their comfort with a specific collaborative approach may influence their motivation, engagement, and overall learning outcomes. Also, understanding how teammates like to collaborate when working with ChatGPT enables instructors to structure collaborative activities that align with students’ preferences, maximizing participation and knowledge sharing. In a previous study [32], thirty graduate students reported their preferences regarding different ways that they communicated and collaborated when working with GenAI tools. The current study extends that research by examining a larger sample of undergraduate students who collaboratively designed prompts for ChatGPT. It also analyzes the students’ experiences using both quantitative analysis and qualitative analysis (content analysis reporting the frequencies of themes). Furthermore, it examines the initial disagreements in teams, the teams’ reliance on AI-generated content, and the students’ perceived learning. Thus, this study aims to investigate the following research questions:

RQ1: Which collaborative mode do team members prefer when they interact with ChatGPT? What are the pros and cons of each collaborative mode?

RQ2: To what extent do students modify ChatGPT’s responses? To what extent do students use ChatGPT’s responses in their final output?

RQ3: To what extent do students learn during their collaborative activities?

RQ4: (i) Which communication channel do team members use to collaborate? (ii) What aspects of the communication methods do they like? (iii) What challenges do they face in communication? (iv) What suggestions do they have for improving communication?

3. Methodology

3.1. Triangulation and Mixed-Methods Research Design

Triangulation was employed to enhance the analysis and interpretation of the collected data by utilizing multiple data sources, researchers, and research methods [33,34]. This approach enhances trust in the data, reduces analyst bias, and provides diverse perspectives, leading to a more comprehensive understanding of the investigated issue. It also reveals insights that might remain hidden when relying on a single method. This study implements between-method triangulation [35], integrating data triangulation (multiple sources) and methodological triangulation (varied methods).

Additionally, this study adopts a mixed-methods research approach [36]. Student responses were analyzed both quantitatively and qualitatively. Descriptive and inferential statistics were used for the quantitative analysis of students’ responses to closed-ended questions, while content analysis [37] was applied for the qualitative analysis of students’ responses to open-ended questions, as well as data from observations of teams’ presentations, focus group discussions, and teams’ reports.

3.2. Data Collection Methods

The data collection methods included students’ participation in online questionnaires and focus group discussions, as well as educators’ direct observations of teams’ presentations and grading of teams’ reports. Thus, the data included students’ (i) answers to the closed- and open-ended questions of a questionnaire, (ii) perspectives during focus group discussions, (iii) presentations, (iv) reports, and (v) grades.

3.3. Questionnaire

To gather data, a questionnaire was developed using Google Forms. The questionnaire included 19 questions (items) on students’ demographics, evaluation of collaborative modes (described in Section 3.8), extent of utilizing ChatGPT-generated content, evaluation of students’ learning, and open-ended questions. The questionnaire was initially reviewed by three researchers of technology-enhanced learning and three students from the University of Macedonia, Thessaloniki, Greece. The questionnaire items were reviewed based on whether they covered the issue to be measured, whether they made sense, and whether the language was correct, clear, and appropriate. Also, the questionnaire was pilot-tested for the clarity of instructions, length, and usability. Reviewers’ suggestions were incorporated into the questionnaire. In this way, content validity and face validity were supported. Participants were fully informed about the purpose of this study, voluntarily gave their consent, and answered the questionnaire. This study was conducted in accordance with ethical guidelines, having received approval from the University of Macedonia Ethical Committee.

3.4. Focus Group Discussions

During week #j (j = 1 to 5), there was a 20-min focus group discussion with the students who followed Collaborative Mode #j, described in Section 3.8, when interacting with ChatGPT. At the end of each class in week #j, the educators initiated discussions with students about their perspectives, the advantages and disadvantages of using Mode #j, and their roles and the role of ChatGPT in their interactions.

3.5. Content Analysis

Data from students’ responses to open-ended questions, observations of teams’ presentations, focus group discussions, and teams’ reports were analyzed using inductive content analysis [37]. Inductive content analysis is a qualitative research method aimed at the subjective interpretation of text data through systematic coding and theme identification. Two researchers repeatedly read the data to achieve immersion, followed by word-by-word analysis to derive initial codes from significant phrases [38]. The researchers made notes on their impressions, leading to the emergence of a coding scheme that reflected key concepts. Then, they grouped the codes into categories based on their relationships, forming meaningful clusters. Finally, they calculated the code frequency counts for each identified category. The inter-rater reliability was over 90%. In the case of disagreement, they discussed the issues and reached a consensus.
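The study reports an inter-rater reliability above 90% but does not state which statistic was used. As an illustration only, the sketch below assumes simple percent agreement between two coders; the function name and the code labels are hypothetical and are not taken from the study’s coding scheme.

```python
from typing import Sequence


def percent_agreement(codes_a: Sequence[str], codes_b: Sequence[str]) -> float:
    """Share (in %) of text segments to which two raters gave the same code."""
    if len(codes_a) != len(codes_b):
        raise ValueError("Both raters must code the same number of segments.")
    if not codes_a:
        raise ValueError("At least one coded segment is required.")
    matches = sum(a == b for a, b in zip(codes_a, codes_b))
    return 100.0 * matches / len(codes_a)


# Hypothetical codes assigned by two raters to the same ten segments.
rater_1 = ["speed", "clarity", "autonomy", "speed", "conflict",
           "clarity", "speed", "autonomy", "clarity", "speed"]
rater_2 = ["speed", "clarity", "autonomy", "speed", "conflict",
           "clarity", "speed", "autonomy", "speed", "speed"]

# The raters differ on one segment out of ten.
agreement = percent_agreement(rater_1, rater_2)
```

Percent agreement does not correct for chance agreement; a chance-corrected statistic such as Cohen’s kappa would be a stricter alternative if the raw codes were available.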

3.6. Context and Participants

A total of 256 first-year undergraduate students took an elective course on the Applications of Information Systems in the department of Economics at the University of Macedonia, Thessaloniki, Greece, during the spring semester of 2024. This was a first-year undergraduate course on businesses’ digital infrastructures, data management and business intelligence, telecommunications and networking, and information systems security and applications in businesses. The requirements of the course included the development of a team (small-group) project that accounts for 25% of the student’s final grade. One of the course topics in business intelligence included an overview of data mining techniques (e.g., classification, clustering, associations, sequences, anomaly detection, regression, prediction, forecasting). So, a short overview and the definitions of various data mining techniques were presented during one lecture in March 2024. Then, each team selected a specific data mining technique (among six data mining techniques). The goal of each team was to study and collaboratively develop a report on the specific data mining technique chosen during March and April 2024 and present their teamwork in class during May and June 2024.

Initially, students had to freely form teams of three peers and choose to collaboratively investigate a specific data mining technique. In other words, students self-selected their teammates without any instructor intervention in team formation. The teams’ final product was a report and a 20–30-slide presentation on their specific data mining technique, presenting it in class. Each team should study and present an overview of their selected data mining technique. The overview should include the definition, input and output, popular algorithms and available software, potential challenges and limitations, common applications in business, examples and real cases, emerging trends, and references. The students could find relevant information in the course’s resources (on the university’s learning management system), the educator’s presentations, books in the university’s library, and the internet. Students were also required to use the free version of ChatGPT (version GPT-3.5 in spring 2024) and other generative AI tools. They were advised to follow a simple and compact form (adapted to the students’ level and work) of the Collaborative Preparation, Implementation, Revision (CoPIR) Methodology [32] (presented in Section 3.7). Then, the teams were randomly assigned to five (5) collaborative modes. These collaborative modes are presented in Section 3.8. The members of a particular team were required to follow the specific collaborative mode assigned to them. The team had to include in their presentation only the final results that they obtained after their conversation with ChatGPT and the discussions among the team members. However, in the appendix of their report, the team had to report all the prompts that they gave to ChatGPT, the answers received, the revised prompts, and so on.

Each team had to assign a Creative Commons license to their report and presentation. The references in the team’s presentation had to follow APA style. All materials (e.g., text, graphs, tables, images, video, responses of ChatGPT) had to be cited wherever they appeared in the presentation and also be listed in the references at the end of the presentation and the report.

During a five (5)-week period in May and June 2024, teams (with a random sequence) presented their work in class. In week #j (j = 1 to 5), there were presentations in class by teams that followed Collaborative Mode #j. At the end of each class, there was also a focus group discussion with all students who followed the particular Collaborative Mode #j. Finally, students were expected to answer an online questionnaire regarding their experience using ChatGPT and collaborating with their team members. The online questionnaire was active on Google Forms for two weeks during June 2024.

3.7. Collaborative Preparation, Implementation, Revision (CoPIR) Methodology

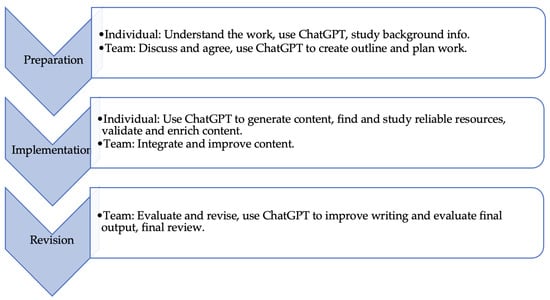

All teams were advised to follow a simplified, condensed version of the Collaborative Preparation, Implementation, Revision (CoPIR) methodology [32], adjusted to the team’s work. The CoPIR methodology has the following three phases (Figure 1, Table 1):

Figure 1. The flow of the Collaborative Preparation, Implementation, Revision (CoPIR) methodology.

Table 1. Collaborative Preparation, Implementation, Revision (CoPIR) methodology for accomplishing work (e.g., assignment, activity, project) with the help of ChatGPT.

- (1) Preparation: Focus on understanding the work (e.g., assignment, project), creating a plan, and assigning roles.

- (2) Implementation: Generate and validate content individually, then integrate and improve it as a team.

- (3) Revision: Review, refine, and finalize the output, ensuring quality and academic integrity.

Note that work can correspond to any of the following: assignment, report, presentation, simulation, experiment, portfolio, project, etc.

3.8. Collaborative Prompting When Using GenAI Tools

Team members were required to collaborate with one another following one specific collaborative mode among the five collaborative modes [32]. The teams were randomly divided into five clusters, and a specific collaborative mode was assigned to each cluster. So, teams in a specific cluster were instructed to employ the assigned collaborative mode.

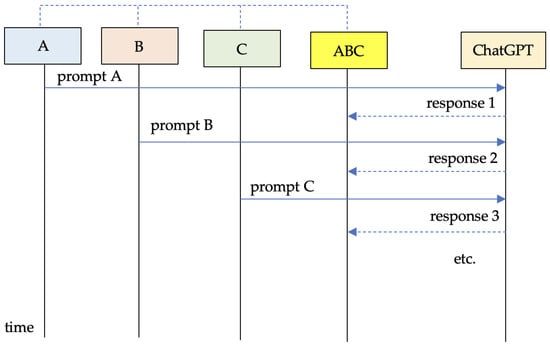

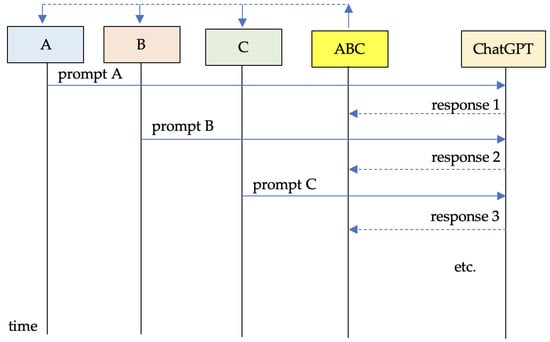

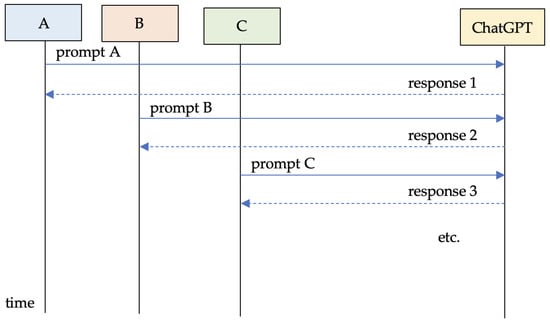

(1) Collaborative Mode #1 (one in a row): Each team member takes turns refining a prompt for ChatGPT (Figure 2). The first member provides an initial prompt, and all members see ChatGPT’s response. The second member revises the prompt based on the response and submits a new one. After seeing the new response, the third member submits their revised prompt. Finally, the team discusses ChatGPT’s latest response and agrees on the final result. In summary, each member revises the prompt based on ChatGPT’s response to another member’s prompt. At the end of an iteration cycle, the team discusses the final response to reach a conclusion.

Figure 2. Collaborative Mode #1 (one in a row) sequence diagram of team ABC (members: A, B, and C) when interacting with ChatGPT.
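The turn-taking in Collaborative Mode #1 can be sketched as a short simulation. This is a minimal illustration, not the study’s procedure in code: `ask` is a hypothetical stand-in for one exchange with ChatGPT (no real API is called), and `revise` is a hypothetical stand-in for how a member rewrites the prompt after reading the latest response.

```python
from typing import Callable, List, Sequence, Tuple

# (member, prompt submitted, response received)
Turn = Tuple[str, str, str]


def mode1_one_in_a_row(
    members: Sequence[str],
    initial_prompt: str,
    revise: Callable[[str, str, str], str],
    ask: Callable[[str], str],
) -> List[Turn]:
    """Each member submits in turn; later members revise the previous prompt."""
    transcript: List[Turn] = []
    prompt, response = initial_prompt, ""
    for position, member in enumerate(members):
        if position > 0:
            # The member sees the previous response before revising the prompt.
            prompt = revise(member, prompt, response)
        response = ask(prompt)
        transcript.append((member, prompt, response))
    return transcript


# Hypothetical stubs used only to make the sketch runnable.
def fake_chatgpt(prompt: str) -> str:
    return f"answer to: {prompt}"


def append_refinement(member: str, prompt: str, response: str) -> str:
    return f"{prompt} + {member}'s refinement"


transcript = mode1_one_in_a_row(
    ["A", "B", "C"], "Explain clustering", append_refinement, fake_chatgpt
)
```

The final team discussion of the last response happens outside the loop and is not modeled here; the transcript mirrors the record of prompts and responses that teams attached to their reports.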

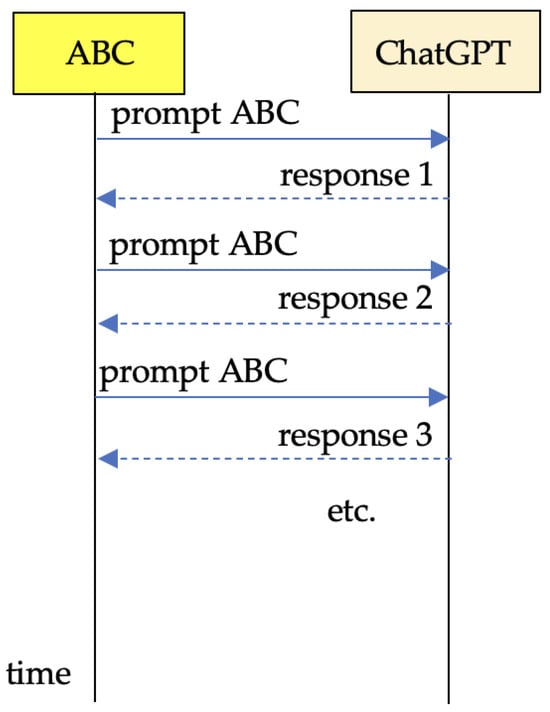

(2) Collaborative Mode #2 (team-oriented): The team collaborates to create and refine prompts for ChatGPT (Figure 3). They start by agreeing on an initial prompt, which the team leader submits. After seeing ChatGPT’s response, they discuss and agree on a revised prompt, which the leader submits again. This process repeats until the team agrees on a final result. This is a simple and collaborative approach where the team works together throughout. In summary, the team always works together to create and refine prompts until they reach a final result.

Figure 3. Collaborative Mode #2 (team-oriented) sequence diagram of team ABC (members: A, B, and C) when interacting with ChatGPT.

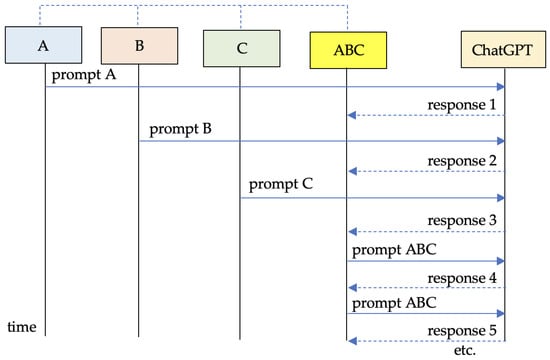

(3) Collaborative Mode #3 (member then team): Each team member starts by independently submitting their own prompt to ChatGPT (Figure 4). After reviewing all responses, the team discusses and agrees on a joint revised prompt, which the leader submits. This process is repeated: the team discusses ChatGPT’s response, agrees on a new revised prompt, and the leader submits it. Finally, the team reviews the last response and decides on the final result. This approach allows members to explore different ideas independently before collaborating to refine the prompts and reach a conclusion. In summary, there is an independent start where each member submits their own prompt to explore different perspectives. Then, there is a collaborative iterative refinement where the team discusses responses and agrees on revised prompts until they reach a final result.

Figure 4. Collaborative Mode #3 (member then team) sequence diagram of team ABC (members: A, B, and C) when interacting with ChatGPT.
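The two phases of Collaborative Mode #3 (independent start, then team refinement through the leader) can be sketched as follows. This is an illustrative simulation under stated assumptions: `ask` is a hypothetical stand-in for one exchange with ChatGPT, `agree_on_prompt` is a hypothetical stand-in for the team discussion, the first member is assumed to be the leader, and two team rounds are assumed because the description mentions one joint prompt followed by one repetition.

```python
from typing import Callable, Dict, List, Sequence, Tuple

# (submitter, prompt submitted, response received)
Turn = Tuple[str, str, str]


def mode3_member_then_team(
    members: Sequence[str],
    member_prompts: Dict[str, str],
    agree_on_prompt: Callable[[List[str]], str],
    ask: Callable[[str], str],
    team_rounds: int = 2,
) -> List[Turn]:
    """Phase 1: every member prompts alone. Phase 2: the leader submits joint prompts."""
    leader = members[0]  # assumption: the first listed member acts as leader
    transcript: List[Turn] = []

    # Phase 1: independent start, one prompt per member.
    responses: List[str] = []
    for member in members:
        response = ask(member_prompts[member])
        transcript.append((member, member_prompts[member], response))
        responses.append(response)

    # Phase 2: the team agrees on a joint prompt, and the leader submits it.
    for _ in range(team_rounds):
        joint_prompt = agree_on_prompt(responses)
        response = ask(joint_prompt)
        transcript.append((leader, joint_prompt, response))
        responses = [response]  # the next discussion looks at the latest response
    return transcript


# Hypothetical stubs used only to make the sketch runnable.
def fake_chatgpt(prompt: str) -> str:
    return f"answer to: {prompt}"


def fake_discussion(responses: List[str]) -> str:
    return f"joint prompt from {len(responses)} response(s)"


prompts = {"A": "Define clustering", "B": "List clustering algorithms",
           "C": "Give business uses of clustering"}
transcript = mode3_member_then_team(["A", "B", "C"], prompts, fake_discussion, fake_chatgpt)
```

In this sketch, three independent exchanges are followed by two leader submissions, which matches the order of events in the description of the mode.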

(4) Collaborative Mode #4 (team to member): Each team member starts by independently submitting their own prompt to ChatGPT (Figure 5). After reviewing all responses, the team discusses and suggests revised prompts to each member. Each member then independently decides on their revised prompt and submits it. This process is repeated: the team discusses the new responses and suggests further revisions, and each member independently submits their updated prompt. Finally, the team reviews all responses and agrees on the final result.

Figure 5. Collaborative Mode #4 (team to member) sequence diagram of team ABC (members: A, B, and C) when interacting with ChatGPT.

(5) Collaborative Mode #5 (independently, cooperatively): The work is split into three parts. Each team member works independently on their assigned part, using ChatGPT alone (Figure 6). Once all parts are completed, the team discusses ChatGPT’s responses and agrees on the final result. This is the simplest form of collaboration, where members work alone on their part and only come together at the end to combine their individual contributions.

Figure 6. Collaborative Mode #5 (independently) sequence diagram of team ABC (members: A, B, and C) when interacting with ChatGPT.

4. Quantitative Analysis and Results

This section presents the results of the quantitative analysis of the answers to the closed-ended questions of the questionnaire and the grades of the students’ outputs. The statistical analysis of the data included descriptive statistics, Spearman’s Rho tests, and Pearson correlations.

4.1. Students Profiles

Out of 256 students who took the course, 51 teams of 3 students (153 students) delivered a report and a presentation. The rest of the students chose not to complete this work and forfeited the 25% of their final grade allotted to it. The teams chose to explore the following data mining techniques: classification (16 teams), clustering (15 teams), forecasting (10 teams), sequences (6 teams), associations (3 teams), and anomaly detection (1 team). Then, 10 or 11 teams were randomly assigned to each collaborative mode.
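The study does not describe the mechanics of the random assignment. One simple way to obtain clusters of 10 or 11 teams from 51 teams is to shuffle the teams and deal them out in turn, as sketched below; the function name, the team labels, and the seed are hypothetical.

```python
import random
from typing import Dict, List, Optional, Sequence


def assign_teams_to_modes(
    team_ids: Sequence[str], n_modes: int = 5, seed: Optional[int] = None
) -> Dict[int, List[str]]:
    """Shuffle the teams, then deal them round-robin to modes 1..n_modes."""
    rng = random.Random(seed)
    shuffled = list(team_ids)
    rng.shuffle(shuffled)
    assignment: Dict[int, List[str]] = {mode: [] for mode in range(1, n_modes + 1)}
    for index, team in enumerate(shuffled):
        assignment[index % n_modes + 1].append(team)
    return assignment


teams = [f"T{i:02d}" for i in range(1, 52)]  # 51 hypothetical team labels
assignment = assign_teams_to_modes(teams, seed=2024)
sizes = sorted(len(cluster) for cluster in assignment.values())
```

Dealing 51 teams across five modes always yields one cluster of 11 and four clusters of 10, regardless of the seed, which is consistent with the reported cluster sizes.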

Out of 153 students who delivered a report and a presentation, 136 students answered the questionnaire. Most students (97.1%) were under 22 years old. Almost half of them used the internet for 4–6 h daily (47.8%), a third of them used it for 2–4 h (32.4%), and about a tenth of them used it for 6–8 h daily (11.8%). The remaining 8% used it for less than 2 h or more than 8 h daily.

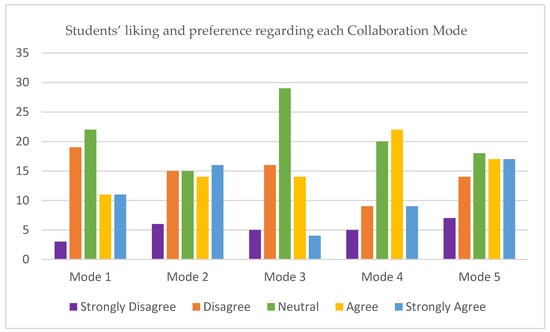

4.2. Students’ Preferences Regarding Collaborative Modes

Students possess diverse skills and preferences, with some preferring structured collaborative settings and others favoring flexibility and independence. By experimenting with different collaboration structures, they can develop essential skills such as communication, teamwork, conflict resolution, and leadership. Investigating various collaborative patterns helps educators create effective learning environments that promote inclusivity and engagement. In this study, students did not have strong preferences regarding a specific Collaboration Mode (Table 2, Figure 7). On average, their responses regarding their liking and preference of each Collaboration Mode were close to neutral (M = 3). They marginally liked Mode #1 (M = 3.12, SD = 1.14), Mode #2 (M = 3.29, SD = 1.31), Mode #4 (M = 3.32, SD = 1.12), and Mode #5 (M = 3.15, SD = 1.29). On the other hand, they marginally did not like Mode #3 (M = 2.94, SD = 0.99).

Table 2. Students’ liking and preference for each Collaboration Mode when interacting with ChatGPT.

Figure 7. Students’ liking and preference for each Collaboration Mode when interacting with ChatGPT (n = 136).

More specifically, the percentages of students who agreed or strongly agreed that they liked each mode were as follows: Mode #1 (16.2%), Mode #2 (22.1%), Mode #3 (13.2%), Mode #4 (22.8%), and Mode #5 (25%). So, it seems that they had a slight preference for Mode #2, Mode #4, and Mode #5.

The moderate preferences of students for some collaborative modes imply that students are flexible but potentially uncertain about how collaboration structures affect their learning, indicating a need for clearer scaffolding. Thus, there is a need for adaptability and flexible AI-supported pedagogies, grounded in differentiated instruction in digital education [39].

The slight preference for Modes #2 and #5 reflects the contrast between tight collaboration (emphasizing team coherence) and independent work (emphasizing autonomy), as discussed in the collaborative learning literature [16]. The findings show that technology integration into group work requires collaborative structures that support both individual agency and collective knowledge construction [40].

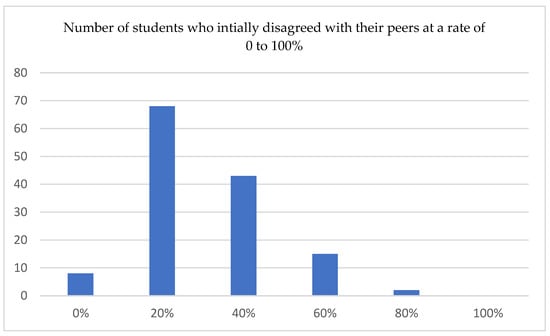

4.3. Initial Disagreements Among Team Members

During the implementation of their work, teams faced several critical decisions that required thoughtful discussion and deliberation. The team members discussed each issue, presenting their arguments and perspectives, which encouraged critical thinking and collaboration. They worked together to reach consensus and develop their final output. On average, they initially disagreed 30.44% of the time but eventually reached an agreement. More specifically, half of the students (50%) initially disagreed on 20% of the issues, 43 students (31.6%) initially disagreed on 40% of the issues, and 15 students (11%) initially disagreed on 60% of the issues (Figure 8).

Figure 8. The number of students who initially disagreed with their peers at a rate of 0 to 100% (n = 136).

The initial disagreements and eventual consensus among teammates are related to social constructivism [41], where knowledge is co-constructed through interaction. Note that socio-constructivist learning theory emphasizes the importance of dialogue and negotiation in knowledge construction. The initial disagreements followed by consensus-building are also related to the socio-cognitive conflict process [42], where productive tensions in collaborative learning lead to deeper understanding. The reported disagreements (30.44% initially) align with conflict theory in collaborative learning, where cognitive debate can drive deeper engagement [43].

Also, on average, students input about six prompts into ChatGPT (M = 5.74, SD = 2.81), and only 19.9% of students input more than 10 prompts. This relatively low use of ChatGPT suggests that students may not fully exploit AI’s potential, raising concerns about superficial engagement [44].

4.4. Extent of Modifying ChatGPT’s Responses

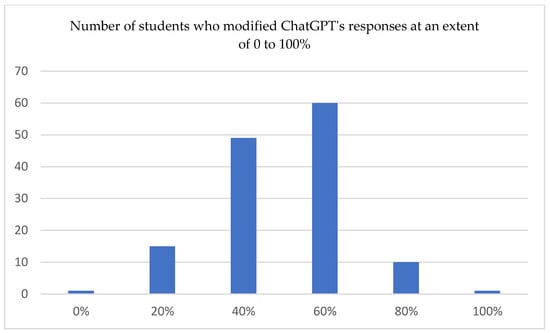

Knowing the extent to which students modify ChatGPT’s responses helps educators understand how effectively students engage with AI-generated content. It can inform educators whether students use ChatGPT to comprehend the educational subject or whether they just copy its responses. On average, students modified the responses of ChatGPT at a rate of 50% (SD = 0.17). The largest group of students (44.1%, n = 60) reported that they modified ChatGPT’s responses at a rate of 60% (Figure 9). Fewer students (36%, n = 49) reported that they modified ChatGPT’s responses at a rate of 40%. Similar, smaller fractions of students (11%, n = 15; and 7.4%, n = 10) reported that they modified ChatGPT’s responses at a rate of 20% and 80%, respectively.

Figure 9. The number of students who modified ChatGPT’s responses to an extent of 0% to 100% (n = 136).

The substantial modification rate (50% average) of ChatGPT’s responses aligns with Bloom’s revised taxonomy [45], implying that students are engaging in higher-order thinking, including analysis, evaluation, and creation, rather than mere knowledge consumption. This finding is supported by constructionist theory [46], where learners actively construct knowledge through manipulating external objects—in this case, AI-generated text.

The fact that students often modified ChatGPT’s responses shows their active engagement with AI and supports cognitive apprenticeship models [47], where students refine AI-generated output through their own judgment. This means that students are not passive consumers of AI content but rather active participants in constructing knowledge—supporting the use of AI as a cognitive partner [48].

4.5. Extent of Students’ Final Output Based on ChatGPT’s Responses

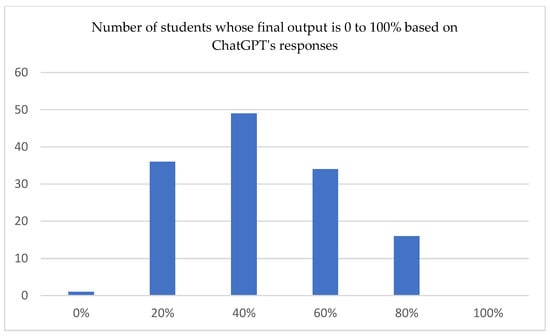

Understanding the percentage of ChatGPT’s responses used by students in their final output is essential for assessing academic integrity and the development of critical thinking skills. Determining whether students simply copy responses or significantly modify them reveals their engagement with the material. This finding can inform educators about the effectiveness of AI tools in promoting genuine learning versus dependency on AI-generated material. On average, 44% (SD = 0.20) of students’ final output was based on ChatGPT’s responses. The largest group of students (36%, n = 49) reported that their final output was 40% based on ChatGPT’s responses (Figure 10). Similar fractions of students (26.5%, n = 36; and 25%, n = 34) reported that their final output was 20% and 60% based on ChatGPT’s responses, respectively. Fewer students (11.8%, n = 16) reported that their final output was 80% based on ChatGPT’s responses.

Figure 10. Number of students whose final output is 0 to 100% based on ChatGPT’s responses (n = 136).
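The reported mean of 44% can be checked against the reported response counts. The text gives counts for 135 of the 136 respondents (at the 20%, 40%, 60%, and 80% levels), so the sketch below computes a lower and an upper bound for the mean, assuming the one unreported respondent answered somewhere between 0% and 100%. The function name is hypothetical.

```python
from typing import Dict, Tuple


def mean_bounds(
    reported: Dict[int, int], n_total: int, low: int = 0, high: int = 100
) -> Tuple[float, float]:
    """Bounds on the mean when some respondents' answers are not reported.

    `reported` maps a response level (in %) to the number of respondents.
    Unreported respondents are assumed to lie between `low` and `high`.
    """
    n_reported = sum(reported.values())
    if n_reported > n_total:
        raise ValueError("More reported respondents than the total sample.")
    missing = n_total - n_reported
    total = sum(level * count for level, count in reported.items())
    return (total + missing * low) / n_total, (total + missing * high) / n_total


# Counts reported in the text for the share of final output based on ChatGPT.
reported_output_share = {20: 36, 40: 49, 60: 34, 80: 16}
lower, upper = mean_bounds(reported_output_share, n_total=136)
```

Both bounds round to roughly 44 to 45%, so the reported counts are consistent with the reported average.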

This finding is related to concerns in AI ethics and academic integrity, questioning the role of AI as a co-author [10]. It shows the contrast between augmentation and overreliance on AI, where educational outcomes depend on students’ critical engagement rather than passive acceptance of AI outputs. From a learning perspective, the moderate reliance on AI-generated output shows the importance of improving meta-cognitive skills so that students evaluate and refine AI suggestions rather than overdepend on them [44,49].

The distribution of reliance on AI across different percentages (20–80%) is related to the distributed intelligence framework [50], where cognition is shared between humans and technological artifacts in complementary ways. In addition, the distribution indicates the divergent student approaches of using AI as a cognitive tool that supports and extends but does not replace human cognition and critical thinking in learning environments [51].

4.6. Student Learning During Collaboration

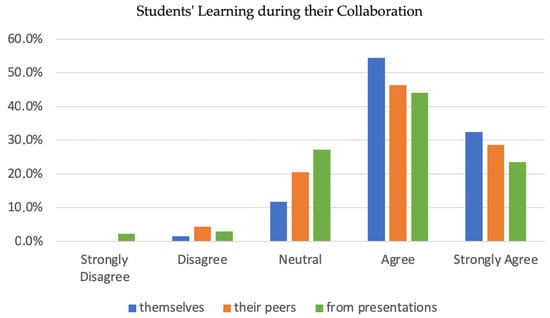

Students can learn significantly during collaborative activities as they engage in discussions, share diverse perspectives, and solve problems together. Students agreed that they learned a lot about their specific data mining technique during their work with their team (M = 4.18, SD = 0.69) (Table 3). Slightly fewer students agreed that their team partners learned a lot about their specific data mining technique during their work with their team (M = 4.00, SD = 0.82). Even fewer students agreed that they learned a lot about other data mining techniques from presentations on the other topics (M = 3.84, SD = 0.90).

Table 3. Student learning during collaboration.

More specifically, 74 students (54.4%) agreed and 44 students (32.4%) strongly agreed that they learned a lot about their specific data mining technique during their team’s work (Figure 11). Slightly fewer students, 63 (46.3%), agreed and 39 students (28.7%) strongly agreed that their team partners gained a lot of knowledge about their specific data mining technique during their work. In other words, students believed that they learned slightly more than their team partners. Spearman’s rho tests showed a statistically significant association between the grades of the teams’ final outputs and students’ perceptions of their own learning (rs = 0.40393, p (two-tailed) < 0.001). The association between the grades of the teams’ final outputs and students’ perceptions of their team members’ learning was also statistically significant (rs = 0.3358, p (two-tailed) = 0.00011).

Figure 11.

Student perceptions about their own learning and their team members’ learning while developing their work and during other teams’ presentations (n = 136).

In addition, the association between the students’ perceptions that they learned a lot and that their team members learned a lot was statistically significant (Spearman’s rho: rs = 0.77404, two-tailed p < 0.001; Pearson correlation coefficient: r(133) = 0.6954, p = 0.00001).
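The rank correlations reported above can be illustrated with a minimal sketch. It assumes that Spearman’s rho is computed as the Pearson correlation of average ranks (the usual treatment of ties) and that significance is judged through the t approximation with n − 2 degrees of freedom; the paper does not state which software or tie handling was used. The function names and the small `grades` and `own_learning` lists are hypothetical illustrations, not study data, and n = 136 is an assumption taken from the Figure 11 caption.

```python
from math import sqrt


def average_ranks(values):
    """Return 1-based ranks; tied values receive the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with the value at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions i..j are 0-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def spearman_rho(x, y):
    """Spearman's rho as the Pearson correlation of the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


def t_statistic(r, n):
    """t approximation for testing r = 0, with n - 2 degrees of freedom."""
    return r * sqrt((n - 2) / (1 - r * r))


# Hypothetical example: team grades and 5-point Likert ratings of own learning.
grades = [6.5, 7.0, 7.0, 8.0, 8.5, 9.0, 9.5, 10.0]
own_learning = [3, 4, 3, 4, 4, 5, 4, 5]
rho = spearman_rho(grades, own_learning)
print(f"rho on the hypothetical data: {rho:.3f}")

# t statistic implied by the reported rs = 0.40393, assuming n = 136.
t_reported = t_statistic(0.40393, 136)
print(f"t for the reported rs: {t_reported:.2f}")
```

Under these assumptions, the reported rs = 0.40393 corresponds to a t value of about 5.11 with 134 degrees of freedom, which implies a two-tailed p-value well below 0.001.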

Finally, their agreement is lower with regard to the extent of their learning about other data mining techniques from the presentations by other teams. A total of 60 students (44.1%) agreed and 32 students (23.5%) strongly agreed that they learned a lot from the presentations of the other teams.
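The percentages quoted for the three learning items follow directly from the response counts. As a quick check, the sketch below recomputes them, assuming that all three items share the n = 136 respondents given in the Figure 11 caption. The dictionary keys, the `percent` helper, and the combined agree-plus-strongly-agree shares are illustrative additions, not values reported in the paper.

```python
N_RESPONDENTS = 136  # assumption: taken from the Figure 11 caption

# (agree, strongly agree) counts as reported in the text.
counts = {
    "own learning": (74, 44),
    "teammates' learning": (63, 39),
    "learning from other teams' presentations": (60, 32),
}


def percent(count, total=N_RESPONDENTS):
    """Share of respondents as a percentage, rounded to one decimal place."""
    return round(100 * count / total, 1)


# Per-item percentages for "agree" and "strongly agree".
shares = {
    item: (percent(agree), percent(strongly))
    for item, (agree, strongly) in counts.items()
}

# Derived (not reported in the paper): combined share of positive responses.
combined = {
    item: percent(agree + strongly)
    for item, (agree, strongly) in counts.items()
}

for item in counts:
    agree_pct, strongly_pct = shares[item]
    print(f"{item}: agree {agree_pct}%, strongly agree {strongly_pct}%, "
          f"combined {combined[item]}%")
```

The recomputed values match the reported percentages, which supports reading all three items against the same 136 respondents.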

The high self-reported learning (M = 4.18) aligns with social constructivism [41], social learning theory [52], the community of inquiry framework [40], and the conversational framework [53], which emphasize that collaboration can enhance subject understanding and knowledge construction.

5. Qualitative Analysis and Results

This section presents the results of the content analysis [37,38] of the students’ answers to the open-ended questions of the questionnaire, focus group discussions, and the teams’ reports and presentations (153 students).

5.1. Student Perspectives on Collaborative Mode Advantages and Limitations

For all collaborative modes, there were more positive than negative comments (Table 4). All collaborative modes were praised for the quick generation of results. Collaborative Mode #2 supported smooth and continuous collaborative processes that enable consistent and coherent final results. On the other hand, Collaborative Mode #5 emphasized individual work, supporting individual responsibility and flexibility.

Table 4.

Student perspectives on the advantages and limitations of the collaborative modes.

Each collaborative mode had its own challenges. In Collaborative Mode #1, it was difficult to revise prompts, and there was a risk of prompt repetition. Collaborative Mode #2 was not ideal for independent thinkers since it required a common mindset among team members. In Collaborative Mode #3, there was a risk of confusion due to similar prompts. In Collaborative Mode #4, there was a redundancy in ChatGPT’s responses since students were receiving similar responses. In Collaborative Mode #5, there were difficulties in integrating students’ work due to inconsistent formatting.

Overall, each method had its strengths and weaknesses. Collaborative Mode #2 appears to be the most balanced approach, allowing for individual contributions while maintaining team coherence. Collaborative Mode #5 offered the most individual freedom.

The diversity of students’ feedback shows that collaboration is complex, and different students may prefer different approaches based on their working styles and personalities and the specific task at hand. These findings align with collaborative learning theory [16] and adaptive learning design [54], showing that student experiences depend on personal preferences, team dynamics, and the structure of collaboration. These findings also align with the community of inquiry framework [40] which describes how different collaborative structures influence social and cognitive presence in technology-enhanced learning environments.

Modes supporting individual autonomy (e.g., Mode #5) align with constructivist and connectivist principles, while those enabling tight group work (e.g., Mode #2) align with collaborative learning strategies.

The diversity of students’ views illustrates that no single AI collaborative mode fits all learners, emphasizing the need for adaptive pedagogy. Multiple options for collaboration in AI-supported higher education should be available to students.

5.2. Student Perspectives on Communication Tools

Students used a variety of communication methods and tools to communicate and collaborate during the development of their team output. They most frequently mentioned the use of phone calls, Instagram, and Google Drive (Table 5). It seems that they combined direct synchronous communication by phone with asynchronous messaging (e.g., Instagram) and file sharing (e.g., Google Drive).

Table 5.

Communication tools used by students.

Students mainly valued the ease of use of their preferred communication method (Table 6). They liked communications that provide immediate, real-time, and direct interaction, as well as simultaneously enabling document editing and file sharing.

Table 6.

Positive aspects of communication methods.

Regarding communication, students mentioned fewer negative issues than positive aspects (Tables 6 and 7). Students mainly faced scheduling difficulties, since conflicting individual schedules made it hard to find common free time (Table 7). They also encountered technical issues such as internet connectivity problems, signal variations, and platform limitations. Finally, the lack of direct, in-person communication led to less engagement and more misunderstandings.

Table 7.

Challenges faced in communication.

Finally, students mainly suggested increasing face-to-face collaboration (Table 8) since it enables engagement and mutual understanding. They also suggested resolving technical issues and facilitating scheduling coordination.

Table 8.

Suggestions for improvement.

Tables 5–8 summarize students’ communication experiences: the tools used, their most appreciated characteristics, the challenges encountered, and suggestions for improvement. Students adapted readily to various communication platforms and valued user-friendliness, flexibility, and immediacy while also recognizing the limitations of remote communication. It would be useful for such communication and collaboration tools to also provide scheduling features and efficient integration with other tools. While digital platforms offer convenience, most students still value in-person interactions: many expressed a preference for face-to-face meetings, which they considered more effective for understanding, interaction, and team dynamics.

These findings align with “media richness” theory [55] and the “media multiplexity” concept [56], which emphasize the importance of multiple communication channels in digital learning. The preference for hybrid (synchronous/asynchronous) tools relates to the theory of “transactional distance” [57], where different communication tools help overcome spatial and temporal separation in technology-mediated learning.

The desire for in-person interaction indicates the importance of face-to-face communication in digital learning settings [58]. Challenges like scheduling conflicts indicate systemic barriers in blended learning [59]. The challenges with scheduling and technical issues highlight what [60] termed the “digital disconnect” in educational technology implementation.

In summary, successful team communication and collaboration require a balanced approach, adopting both digital tools and personal interaction.

6. Discussion and Implications

This study investigated how undergraduate students prefer to collaborate and communicate with their teammates to develop their teamwork skills when they prompt ChatGPT. It also examined the initial disagreements in teams, the teams’ reliance on AI-generated content, and the students’ perceived learning. The findings revealed that the students did not show a strong preference for any one collaborative mode. This implies that students may not have distinct expectations regarding collaboration, possibly because they were not familiar with structured teamwork or they did not understand how different modes influence their learning experience. Modes #2, #4, and #5 received marginally higher preference ratings, possibly because they offered well-defined structures and role clarity. Similar preferences were observed in graduate students’ collaboration [32]. Specifically, Mode #2 facilitated continuous teamwork, while Mode #5 promoted individual autonomy. Conversely, Mode #3 received lower preference ratings, possibly because students were uncomfortable with transitions between individual and collective work phases. These preference variations indicate the necessity of providing diverse collaborative structures to accommodate varied student profiles and optimize engagement. These findings are important since they show the need for educators to design collaborative assignments to gradually familiarize students with structured collaboration strategies. Universities and instructional designers can use these findings to create flexible collaborative learning environments that accommodate diverse student needs and preferences. Organizations can also use these findings to enhance team dynamics and optimize project-based work.

Each collaborative mode showed distinct strengths and limitations, indicating the complexity of teamwork and diverse student preferences. Mode #2, which emphasized cohesive team-centered collaboration, received higher evaluations, possibly due to its clear structure and coherent teamwork. Mode #5, which supported individual accountability and responsibility, received positive evaluations, possibly due to its clear structure and autonomy. Conversely, Mode #1 presented challenges in prompt refinement and redundancy, Mode #2 required a unified mindset that restrained independent thinking, and Mode #5 encountered difficulties in combining individually developed work. These differences reflect the trade-offs between teamwork and individual autonomy, suggesting that no single mode suits all students or tasks. The findings demonstrate the importance of aligning collaborative approaches with learning objectives and student preferences. Educators can use these findings to develop tailored collaborative activities that accommodate diverse learning preferences. For instance, Mode #2 could be used for tasks requiring cohesion, while Mode #5 may better serve learning objectives that emphasize individual accountability and specialized experience.

Initial disagreements among team members were reasonably common (30.44% average), possibly due to differences in perspectives and critical thinking processes. Similarly, [32] found that graduate students demonstrated a slightly lower initial disagreement rate (24%). These disagreements may originate from differing student profiles, viewpoints, knowledge, and problem-solving approaches or even varying types of work to be conducted. In other words, students were actively engaged in natural deliberations, analytical thinking, and the evaluation of differing viewpoints, thus increasing their collaborative skills. The final resolution of conflicts demonstrates that students are capable of communicating effectively and building consensus. Initial disagreements do not necessarily lead to negative outcomes; rather, they can serve as a critical starting point for building a shared understanding and trust within a team [61].

Students modified ChatGPT responses to a fair degree (50% average), showing a moderate degree of critical engagement with AI-generated content. A similar modification rate of ChatGPT’s responses (48%) was found by [62]. This intermediate modification rate shows a balance between reliance on ChatGPT and independent critical thinking, possibly due to differences in students’ skills, confidence, or willingness to rely on AI assistance. Educators can use these findings to design activities that encourage deeper analysis, originality, and the responsible use of AI, supporting independent thought and creativity.

Students based nearly half (44%) of their final output on ChatGPT’s responses, showing moderate reliance on AI-generated content. A similar rate of students’ work being based on ChatGPT responses (42%) was found by [62]. However, the wide variability in usage (20–80%) reveals that students use AI tools differently, possibly due to differences in students’ confidence, conceptual understanding, or commitment to originality. These findings are important for educators, showing the need to guide students toward responsible AI usage, improve students’ authentic learning, and maintain academic integrity. Institutions can apply these findings to inform policies that promote critical engagement with AI while discouraging overdependence on AI-generated content.

Students reported a high degree of learning from their own team’s work during collaboration (M = 4.18), a slightly lower degree of learning for their teammates (M = 4.00), and a still lower degree of learning from other teams’ presentations (M = 3.84). This result is in line with [32], who found a similar pattern in terms of graduate students’ own learning (M = 4.6) and their teammates’ learning (M = 4.4) from their team’s work, as well as in terms of their own learning from other teams’ presentations (M = 3.97). This finding indicates that the degree of learning via active engagement and collaboration is higher than that of learning from watching others’ presentations.

In addition, students’ perceptions of their own learning and of their teammates’ learning were significantly associated with each other. This result is in line with [32], who also found a statistically significant association between graduate students’ perceptions that they learned a lot and that their team members learned a lot from their team’s work. Therefore, students believed that both they and their teammates benefited from their teamwork.

Furthermore, the statistically significant correlations between teams’ grades and their perceived learning indicate that subjective assessments of knowledge acquisition align meaningfully with objective academic performance metrics. In contrast, ref. [32] found that graduate students’ grades and their learning perceptions were not correlated. Thus, further research is needed on this issue. Educators can build on these findings by creating structured collaborative opportunities that enhance both individual and collective learning within teams and between teams.

Students utilized a diverse mix of communication methods and tools, including synchronous channels (phone calls), asynchronous messaging (Instagram), and collaborative workspaces (Google Drive), to coordinate team activities. This technological diversity highlights the complexity of modern collaboration, where students use a combination of different communication media [63]. Students valued ease of use, immediate real-time interaction, and simultaneous document editing features, but they had concerns related to scheduling conflicts, technical issues (e.g., poor connectivity), and the limitations of remote communication (e.g., reduced engagement and misunderstandings). Similarly, ref. [64] found that substantial variation in team virtuality can negatively impact team performance. Educators can use these findings to recommend tools that combine real-time interaction with advanced file-sharing capabilities while also encouraging periodic face-to-face meetings when feasible. Additionally, institutions could provide training on effective remote collaboration and ensure reliable technical infrastructure to minimize disruptions.

7. Conclusions, Limitations, and Future Research

This study offers empirical evidence on how undergraduate students collaboratively prompt ChatGPT. It examined five distinct collaborative modes in which teams of three students interacted with ChatGPT to facilitate their teamwork. There were 51 teams randomly assigned to these five collaborative modes. This research explored the advantages and disadvantages of each mode, the communication methods and tools employed, the extent of ChatGPT-generated content incorporated into their final output, and the students’ degree of learning. Knowing such information may help educators to appropriately design collaborative assignments, identify and mitigate potential conflicts or inefficiencies within teams, and provide personalized feedback to each student and each team.

Regarding RQ1, students demonstrated no strong preference for specific collaborative modes, suggesting flexibility in their approach to teamwork. However, there was a slight preference for Mode #2 (cohesive team-oriented collaboration) and Mode #5 (individual responsibility with final integration), which provided a clearer structure and defined roles. This indicates that students value both unified teamwork and individual autonomy depending on the context. Initial disagreements among team members were common (approximately 30%), implying healthy diversity in perspectives and critical thinking. These disagreements were generally resolved through communication and consensus-building, demonstrating students’ developing collaboration skills.

Regarding RQ2, students modified ChatGPT’s responses by approximately 50% and based about 44% of their final outputs on ChatGPT’s responses. This balance suggests a thoughtful integration of AI assistance rather than uncritical acceptance or complete rejection. The wide variability (20–80%) in AI reliance indicates diverse attitudes toward AI tools among students.

Regarding RQ3, students’ ratings were highest for their own learning during teamwork (M = 4.18), with lower ratings for their teammates’ learning (M = 4.00) and for their own learning from other teams’ presentations (M = 3.84). The significant correlation between perceived learning and academic performance supports students’ self-assessment as a meaningful indicator of educational outcomes.

Regarding RQ4, communication methods varied, combining synchronous conversation (phone calls), asynchronous messaging (Instagram), and collaborative platforms (Google Drive). Students valued ease of use, real-time interaction, and simultaneous editing capabilities, though they encountered challenges with scheduling conflicts, technical issues, and remote communication limitations.

This study makes several important contributions to understanding AI-supported collaborative learning:

- Framework for AI-Enhanced Collaboration: This study provides students’ perspectives on different collaborative modes when working with AI tools, offering educators an adaptable framework for various learning contexts.

- Flexible Collaborative Structures: By demonstrating that students do not exhibit a strong preference for any single collaborative mode—and that each mode offers distinct advantages and limitations—these findings highlight the need for educational designs that accommodate diverse students’ profiles and tasks.

- Moderate but Varying AI Reliance: Students’ partial dependence on ChatGPT indicates a balanced yet diverse level of reliance. This emphasizes the importance of guiding students toward the responsible, critical use of AI rather than simple adoption or rejection.

- Linking Perceived Learning to Performance: The correlation between students’ perceived learning and actual grades implies that subjective assessments can align with objective measures.

- Communication Strategies for Collaboration: A mix of synchronous and asynchronous tools was common; however, scheduling conflicts, technical limitations, and reduced face-to-face interaction remain concerns. Institutions can optimize technology infrastructure, provide relevant training, and encourage in-person meetings when feasible to enhance teamwork quality.

Despite offering important results, this study has several limitations. First, the relatively small and demographically narrow sample restricts the broader applicability of the results. Larger samples (e.g., thousands of students) with more diverse profiles (e.g., educational levels, educational subjects, backgrounds, countries) could improve the understanding of the impact of ChatGPT-supported collaboration across different contexts. Second, the reliance on self-reported data introduces the risk of social desirability or recall biases. Third, this study was conducted during a single semester, and it did not investigate possible changes in students’ behavior and performance over long time periods.

Since this study investigated a new topic, there are many opportunities for future research. Future studies could examine the impact of different collaborative modes on teams’ and students’ learning outcomes, skills, engagement, motivation, satisfaction, and performance. Also, future research could employ longitudinal designs to examine how students’ (or even workers’) collaborative preferences, AI utilization, and teamwork skills evolve over time. In parallel, future studies may investigate the long-term effects of using AI on learning and wellbeing. Researchers might also conduct discipline-specific analyses to explore how different fields or course requirements affect AI-supported teamwork. Additionally, exploring instructor perspectives could provide complementary insights into the effective integration of AI tools in collaborative tasks. Cross-cultural comparisons could show how diverse cultural contexts influence both AI usage and teamwork preferences. Furthermore, designing innovative assessments that differentiate individual contributions from collective outcomes is an attractive direction for further enhancing our understanding of AI’s pedagogical potential. Finally, future research may compare the proposed collaborative modes with respect to team dynamics and outputs, so that appropriate collaborative modes could be suggested for different team and student profiles in different educational contexts.

Author Contributions

The authors contributed equally to this work. Conceptualization, M.P. and A.A.E.; methodology, M.P. and A.A.E.; software, M.P. and A.A.E.; validation, M.P. and A.A.E.; formal analysis, M.P. and A.A.E.; investigation, M.P. and A.A.E.; resources, M.P. and A.A.E.; data curation, M.P. and A.A.E.; writing—original draft preparation, M.P. and A.A.E.; writing—review and editing, M.P. and A.A.E.; visualization, M.P. and A.A.E.; supervision, M.P. and A.A.E.; project administration, M.P. and A.A.E. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

This study was conducted in accordance with the Declaration of Helsinki and approved by the Research Ethics Committee of the University of Macedonia (5/20-12-2023; 2023-12-20).

Informed Consent Statement

Informed consent was obtained from all subjects involved in this study.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Acknowledgments

The authors would like to thank the anonymous reviewers for their constructive suggestions.

Conflicts of Interest

The authors have no conflicts of interest to disclose. The authors declare that they have no known competing financial interests or personal relationships that could appear to have influenced the work reported in this paper.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| AI | Artificial intelligence |
| CoPIR | Collaborative Preparation, Implementation, Revision |
| GenAI | Generative artificial intelligence |
| GPT | Generative pre-trained transformer |
| LLM | Large language model |
| M | Mean |
| SD | Standard deviation |

References

- Lim, W.M.; Gunasekara, A.; Pallant, J.L.; Pallant, J.I.; Pechenkina, E. Generative AI and the future of education: Ragnarök or reformation? A paradoxical perspective from management educators. Int. J. Manag. Educ. 2023, 21, 100790.

- Kasneci, E.; Seßler, K.; Küchemann, S.; Bannert, M.; Dementieva, D.; Fischer, F.; Gasser, U.; Groh, G.; Günnemann, S.; Hüllermeier, E.; et al. ChatGPT for good? On opportunities and challenges of large language models for education. Learn. Individ. Differ. 2023, 103, 102274.

- Rejeb, A.; Rejeb, K.; Appolloni, A.; Treiblmaier, H.; Iranmanesh, M. Exploring the impact of ChatGPT on education: A web mining and machine learning approach. Int. J. Manag. Educ. 2024, 22, 100932. [Google Scholar] [CrossRef]

- Lund, B.D.; Wang, T.; Mannuru, N.R.; Nie, B.; Shimray, S.; Wang, Z. ChatGPT and a new academic reality: Artificial Intelligence-written research papers and the ethics of the large language models in scholarly publishing. J. Assoc. Inf. Sci. Technol. 2023, 74, 570–581. [Google Scholar] [CrossRef]

- OpenAI. ChatGPT [Large Language Model]. 2025. Available online: https://chat.openai.com/chat (accessed on 10 April 2024).

- Rasul, T.; Nair, S.; Kalendra, D.; Robin, M.; de Oliveira Santini, F.; Ladeira, W.J.; Sun, M.; Day, I.; Rather, R.A.; Heathcote, L. The role of ChatGPT in higher education: Benefits, challenges, and future research directions. J. Appl. Learn. Teach. 2023, 6, 41–56. [Google Scholar] [CrossRef]

- SimilarWeb. Chatgpt.com Website Analysis for February 2025. 2025. Available online: https://www.similarweb.com/website/chatgpt.com/#overview (accessed on 10 March 2025).

- Montenegro-Rueda, M.; Fernández-Cerero, J.; Fernández-Batanero, J.M.; López-Meneses, E. Impact of the implementation of ChatGPT in education: A systematic review. Computers 2023, 12, 153. [Google Scholar] [CrossRef]

- Crompton, H.; Burke, D. The educational affordances and challenges of ChatGPT: State of the field. TechTrends 2024, 68, 380–392. [Google Scholar] [CrossRef]

- Cotton, D.R.E.; Cotton, P.A.; Shipway, J.R. Chatting and cheating: Ensuring academic integrity in the era of ChatGPT. Innov. Educ. Teach. Int. 2024, 61, 228–239. [Google Scholar] [CrossRef]

- Tlili, A.; Shehata, B.; Adarkwah, M.A.; Bozkurt, A.; Hickey, D.T.; Huang, R.; Agyemang, B. What if the devil is my guardian angel: ChatGPT as a case study of using chatbots in education. Smart Learn. Environ. 2023, 10, 15. [Google Scholar] [CrossRef]

- Tan, S.C.; Lee, A.V.Y.; Lee, M. A systematic review of artificial intelligence techniques for collaborative learning over the past two decades. Comput. Educ. Artif. Intell. 2022, 3, 100097. [Google Scholar] [CrossRef]

- Thorp, H.H. ChatGPT is fun, but not an author. Science 2023, 379, 313. [Google Scholar] [CrossRef]

- Chen, J.; Wang, M.; Kirschner, P.A.; Tsai, C.C. The role of collaboration, computer use, learning environments, and supporting strategies in CSCL: A meta-analysis. Rev. Educ. Res. 2018, 88, 799–843. [Google Scholar] [CrossRef]

- Salomon, G.; Perkins, D.N. Individual and social aspects of learning. Rev. Res. Educ. 1998, 23, 1–24. [Google Scholar] [CrossRef]

- Dillenbourg, P. What do you mean by collaborative learning. In Collaborative-Learning: Cognitive and Computational Approaches; Dillenbourg, P., Ed.; Elsevier: Oxford, UK, 1999; pp. 1–19. [Google Scholar]

- Prince, M. Does active learning work? A review of the research. J. Eng. Educ. 2004, 93, 223–231. [Google Scholar] [CrossRef]

- Springer, L.; Stanne, M.E.; Donovan, S.S. Effects of small-group learning on undergraduates in science, mathematics, engineering, and technology: A meta-analysis. Rev. Educ. Res. 1999, 69, 21–51. Available online: http://www.jstor.org/stable/1170643 (accessed on 10 April 2024). [CrossRef]

- Gilson, A.; Safranek, C.W.; Huang, T.; Socrates, V.; Chi, L.; Taylor, R.A.; Chartash, D. How does ChatGPT perform on the United States Medical Licensing Examination (USMLE)? The implications of large language models for medical education and knowledge assessment. JMIR Med. Educ. 2023, 9, e45312. [Google Scholar] [CrossRef]

- Mena-Guacas, A.F.; Urueña Rodríguez, J.A.; Santana Trujillo, D.M.; Gómez-Galán, J.; López-Meneses, E. Collaborative learning and skill development for educational growth of artificial intelligence: A systematic review. Contemp. Educ. Technol. 2023, 15, ep428. [Google Scholar] [CrossRef]

- Kumar, J.A. Educational chatbots for project-based learning: Investigating learning outcomes for a team-based design course. Int. J. Educ. Technol. High. Educ. 2021, 18, 65. [Google Scholar] [CrossRef]

- Sikström, P.; Valentini, C.; Sivunen, A.; Kärkkäinen, T. How pedagogical agents communicate with students: A two-phase systematic review. Comput. Educ. 2022, 188, 104564. [Google Scholar] [CrossRef]

- Haq, I.U.; Anwar, A.; Rehman, I.U.; Asif, W.; Sobnath, D.; Sherazi, H.H.R. Dynamic group formation with intelligent tutor collaborative learning: A novel approach for next generation collaboration. IEEE Access 2021, 9, 143406–143422. [Google Scholar] [CrossRef]

- Wiboolyasarin, W.; Wiboolyasarin, K.; Suwanwihok, K.; Jinowat, N.; Muenjanchoey, R. Synergizing collaborative writing and AI feedback: An investigation into enhancing L2 writing proficiency in wiki-based environments. Comput. Educ. Artif. Intell. 2024, 6, 100228. [Google Scholar] [CrossRef]

- Msambwa, M.M.; Wen, Z.; Daniel, K. The impact of AI on the personal and collaborative learning environments in higher education. Eur. J. Educ. 2025, 60, e12909. [Google Scholar] [CrossRef]

- Kovari, A. A systematic review of AI-powered collaborative learning in higher education: Trends and outcomes from the last decade. Soc. Sci. Humanit. Open 2025, 11, 101335. [Google Scholar] [CrossRef]

- Ouyang, F.; Zhang, L. AI-driven learning analytics applications and tools in computer-supported collaborative learning: A systematic review. Educ. Res. Rev. 2024, 44, 100616. [Google Scholar] [CrossRef]

- Kong, X.; Liu, Z.; Chen, C.; Liu, S.; Xu, Z.; Tang, Q. Exploratory study of an AI-supported discussion representational tool for online collaborative learning in a Chinese university. Internet High. Educ. 2025, 64, 100973. [Google Scholar] [CrossRef]

- Darmawansah, D.; Rachman, D.; Febiyani, F.; Hwang, G.J. ChatGPT-supported collaborative argumentation: Integrating collaboration script and argument mapping to enhance EFL students’ argumentation skills. Educ. Inf. Technol. 2025, 30, 3803–3827. [Google Scholar] [CrossRef]

- Zheng, L.; Fan, Y.; Gao, L.; Huang, Z.; Chen, B.; Long, M. Using AI-empowered assessments and personalized recommendations to promote online collaborative learning performance. J. Res. Technol. Educ. 2024, 1–27. [Google Scholar] [CrossRef]

- Albadarin, Y.; Saqr, M.; Pope, N.; Tukiainen, M. A systematic literature review of empirical research on ChatGPT in education. Discov. Educ. 2024, 3, 60. [Google Scholar] [CrossRef]

- Perifanou, M.; Economides, A.A. Collaborative uses of GenAI tools in project-based learning. Educ. Sci. 2025, 15, 354. [Google Scholar] [CrossRef]

- Fusch, P.; Fusch, G.E.; Ness, L.R. Denzin’s paradigm shift: Revisiting triangulation in qualitative research. J. Sustain. Soc. Change 2018, 10, 19–32. [Google Scholar] [CrossRef]

- Thurmond, V.A. The point of triangulation. J. Nurs. Scholarsh. 2001, 33, 253–258. [Google Scholar] [CrossRef]

- Carter, N. The use of triangulation in qualitative research. Oncol. Nurs. Forum 2014, 41, 545–547. [Google Scholar] [CrossRef]

- Johnson, R.B.; Onwuegbuzie, A.J.; Turner, L.A. Toward a definition of mixed methods research. J. Mix. Methods Res. 2007, 1, 112–133. [Google Scholar] [CrossRef]

- Hsieh, H.F.; Shannon, S.E. Three approaches to qualitative content analysis. Qual. Health Res. 2005, 15, 1277–1288.

- Neuendorf, K.A. Content analysis and thematic analysis. In Advanced Research Methods for Applied Psychology; Routledge: London, UK, 2018; pp. 211–223.

- Ziernwald, L.; Hillmayr, D.; Holzberger, D. Promoting high-achieving students through differentiated instruction in mixed-ability classrooms—A systematic review. J. Adv. Acad. 2022, 33, 540–573.

- Garrison, D.R. E-learning in the 21st Century: A Community of Inquiry Framework for Research and Practice, 3rd ed.; Routledge: London, UK, 2016.

- Vygotsky, L.S. Mind in Society: The Development of Higher Psychological Processes; Harvard University Press: Cambridge, MA, USA, 1978; Volume 86.

- Doise, W.; Mugny, G. The Social Development of the Intellect; Oxford University Press: Oxford, UK, 1984.

- Jehn, K.A.; Mannix, E.A. The dynamic nature of conflict: A longitudinal study of intragroup conflict and group performance. Acad. Manag. J. 2001, 44, 238–251.

- Zawacki-Richter, O.; Marín, V.I.; Bond, M.; Gouverneur, F. Systematic review of research on artificial intelligence applications in higher education–where are the educators? Int. J. Educ. Technol. High. Educ. 2019, 16, 39.

- Krathwohl, D.R. A revision of Bloom’s taxonomy: An overview. Theory Into Pract. 2002, 41, 212–218.

- Papert, S.A. Mindstorms: Children, Computers, and Powerful Ideas; Basic Books: New York, NY, USA, 2020.

- Collins, A.; Brown, J.S.; Newman, S.E. Cognitive apprenticeship: Teaching the crafts of reading, writing, and mathematics. In Knowing, Learning, and Instruction; Routledge: London, UK, 1989; pp. 453–494.

- Pea, R.D. The social and technological dimensions of scaffolding and related theoretical concepts for learning, education, and human activity. J. Learn. Sci. 2004, 13, 423–451.

- Wijaya, T.T.; Yu, Q.; Cao, Y.; He, Y.; Leung, F.K. Latent Profile Analysis of AI Literacy and Trust in Mathematics Teachers and Their Relations with AI Dependency and 21st-Century Skills. Behav. Sci. 2024, 14, 1008.

- Pea, R.D. Practices of distributed intelligence and designs for education. Distrib. Cogn. Psychol. Educ. Consid. 1993, 11, 47–87.

- Jonassen, D.H. Computers as Mindtools for Schools: Engaging Critical Thinking, 2nd ed.; Prentice Hall: Saddle River, NJ, USA, 2000. Available online: https://lccn.loc.gov/99032758 (accessed on 31 March 2025).

- Bandura, A.; Walters, R.H. Social Learning Theory; Prentice Hall: Englewood Cliffs, NJ, USA, 1977; Volume 1, pp. 141–154.

- Laurillard, D. Rethinking University Teaching: A Conversational Framework for the Effective Use of Learning Technologies, 2nd ed.; Routledge: London, UK, 2013.

- Economides, A.A. Adaptive context-aware pervasive and ubiquitous learning. Int. J. Technol. Enhanc. Learn. 2009, 1, 169–192.

- Daft, R.L.; Lengel, R.H. Organizational information requirements, media richness and structural design. Manag. Sci. 1986, 32, 554–571.

- Haythornthwaite, C. Social networks and Internet connectivity effects. Inf. Community Soc. 2005, 8, 125–147.

- Garrison, R. Theoretical challenges for distance education in the 21st century: A shift from structural to transactional issues. Int. Rev. Res. Open Distrib. Learn. 2000, 1, 1–17.

- Bali, S.; Liu, M.C. Students’ perceptions toward online learning and face-to-face learning courses. J. Phys. Conf. Ser. 2018, 1108, 012094.

- Graham, C.R. Emerging practice and research in blended learning. In Handbook of Distance Education; Moore, M.G., Ed.; Routledge: London, UK, 2013; pp. 333–350.

- Selwyn, N. Exploring the ‘digital disconnect’ between net-savvy students and their schools. Learn. Media Technol. 2006, 31, 5–17.

- Gevers, J.; Li, J.; Rutte, C.G.; van Eerde, W. How dynamics in perceptual shared cognition and team potency predict team performance. J. Occup. Organ. Psychol. 2019, 93, 134–157.

- Economides, A.A.; Perifanou, M. Higher education students using GenAI tools to design websites. In Proceedings of the Innovating Higher Education Conference (I-HE2024), Limassol, Cyprus, 23–25 October 2024.

- Kaneko, A. Team communication in the workplace: Interplay of communication channels and performance. Bus. Prof. Commun. Q. 2023, 57, 370–400.

- Hincapie, M.X.; Hill, N.S. The impact of team virtuality on the performance of on-campus student teams. Acad. Manag. Learn. Educ. 2024, 23, 158–175.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).