Abstract

Computer-driven assessment has revolutionized the way educational and professional assessments are conducted. By using artificial intelligence for data analytics, computer-based assessment improves the efficiency and accuracy of assessment and supports the optimization of learning across disciplines. Optimizing e-learning requires a structured approach to analyzing learners’ progress and adjusting instruction accordingly. Although learning effectiveness is influenced by numerous parameters, competency-based assessment provides a structured and measurable way to evaluate learners’ achievements. This study explores the application of artificial intelligence algorithms to optimize e-learners’ study within a generalized e-course framework. A competency-based assessment model was developed using weighted parameters derived from Bloom’s taxonomy. The key contribution of this work is an innovative method for calculating competency scores using weighted attributes and a dynamic assessment parameter, making the optimization process applicable to both learners and instructors. The results indicate that using the weighted attribute method with a dynamic assessment parameter can improve the structuring of e-courses, increase learner engagement, and provide instructors with a clearer understanding of learners’ progress. The proposed approach supports data-driven decision making in e-learning, enabling a personalized learning experience and improving overall learning outcomes.

1. Introduction

In an era of rapid technological advancement, computer-driven assessment (CDA) has become a transformative tool in education, certification, and process optimization. Traditional assessment methods that rely on human evaluation are often time-consuming, prone to bias and inaccuracy, and limited in scalability. CDA addresses these issues by providing automated, data-driven assessment methods that improve both efficiency and accuracy.

The integration of Artificial Intelligence (AI) into learning analytics is transforming traditional teaching and assessment methods. AI-driven assessment offers advantages such as objectivity, adaptability, and scalability, allowing for a more precise evaluation of students’ learning progress. These technologies enable the personalization of learning resources, real-time feedback, and data-driven optimization of learning pathways [1]. However, their implementation raises concerns about data privacy, transparency, and interpretability [2].

Competency-based assessment plays a crucial role in modern education, shaping learning outcomes and instructional strategies. International frameworks, such as the European Qualifications Framework [3] and the OECD Learning Compass 2030 [4], define competency as a combination of knowledge, skills, and autonomy essential for lifelong learning. However, traditional competency assessments often fail to dynamically adjust to students’ learning progress, necessitating more adaptive and AI-assisted approaches.

To structure competency assessment more effectively, weighted attributes provide a quantifiable framework for evaluating different aspects of student learning. Business intelligence maturity models [5] have been widely used in higher education institutions (HEIs) to optimize decision-making processes, but they often lack mechanisms for real-time adaptation of competency-weighted attributes. Similarly, textual analysis and automation techniques [6] have been proposed to extract key competency attributes, while depth-first search algorithms have been applied to recommend personalized learning paths [7]. However, these models do not dynamically adjust competency weightings based on real-time student performance.

The role of weighted attributes in e-learning optimization has been explored in various computational and AI-driven models. Competency-based education (CBE) emphasizes learner-centered, skill-transferable education models [8,9] (Malhotra et al., 2023; Kennedy, 2006), but its implementation remains challenging without structured weighting systems. AI-driven systems, including Item Response Theory (IRT) models [10] and machine learning-based optimization methods [11], have been proposed to automate competency evaluation. Furthermore, crowdsensing models [12] have demonstrated how data-driven approaches can optimize engagement and participation, providing insights into real-time learning analytics. However, challenges such as data privacy, transparency, and interpretability persist in AI-driven assessment models [2]. With the increasing role of AI in e-learning, the integration of heterogeneous data sources has become essential for optimizing education [13]. Heterogeneous Internet of Things (HetIoT) architectures can process large-scale student data in real time, enabling adaptive learning systems to optimize learning trajectories based on weighted attributes.

While existing models, such as IRT and crowdsensing, have made significant strides in automating competency evaluation and optimizing engagement, they often lack dynamic adaptability to real-time student performance. The proposed approach addresses this gap by introducing a dynamic weighting system that adjusts competency assessments based on real-time student behavior and performance trends. Unlike traditional models that treat competencies as static entities, our method integrates weighted attributes and a dynamic assessment parameter, allowing for continuous optimization of learning pathways. This approach personalizes the assessment process, offering learners and instructors actionable insights into progress and areas needing improvement.

This study builds on weighted attributes as a structured method for dynamic competency assessment. By integrating AI-driven competency indicators with weighted assessment parameters and 3D visualization, this approach seeks to bridge the gap between static competency frameworks and adaptive learning path optimization.

2. Materials and Methods

This study focuses on a universal e-course structure built on basic competencies, in which indicators of educational resources are used to generate recommendations and optimize the student’s learning path. To select a universal course structure based on competencies, four subjects taught to technical specialties at TTK University of Applied Sciences (TTK UAS), Estonia, were reviewed and analyzed. The selected subjects are mandatory for first-year students: of the 778 first-year students in the 2023/2024 academic year, 614 (78.9%) were enrolled in them.

Since these subjects form a core part of the technical curriculum, they provide a strong basis for assessing competency-based learning approaches across different disciplines. The selection of these subjects ensures that the proposed e-learning structure is both broadly applicable and adaptable to diverse technical fields.

An overview of the technical subjects is presented in Table 1.

Table 1.

Learning subjects’ general data.

Given the high enrollment in these subjects and their significance for first-year engineering students, a universal course structure would help incorporate key learning outcome parameters and competencies.

2.1. Competencies Mapping

Competency mapping is a structured approach used to define, assess, and optimize learning paths based on students’ knowledge, skills, and abilities. In modern e-learning environments, it plays a crucial role in tracking learning progress and ensuring that students develop essential competencies. However, traditional competency mapping models often treat all competencies equally, without considering their relative importance in different learning contexts.

This study introduces an AI-driven competency mapping approach where competencies are treated as one element of a broader set of weighted attributes. By integrating weighted attributes, we enhance the accuracy of assessments and optimize learning pathways based on dynamic, real-time student progress.

Competencies can be categorized into three primary groups:

- Knowledge-based competencies—Theoretical understanding of concepts.

- Skill-based competencies—Practical application and problem-solving abilities.

- Application-based competencies—Ability to integrate and transfer knowledge into real-world scenarios.

In traditional frameworks, these competencies are assessed independently. However, in this model, they are assigned weights based on their significance in a given learning module. This ensures that more critical competencies influence the learning path more strongly than less essential ones.

While competencies are a central component of assessment, they are only one of several weighted attributes used to evaluate learning effectiveness. Other attributes include:

- Engagement levels—Time spent on tasks, participation in discussions.

- Adaptability—Ability to improve performance based on feedback.

- Learning trajectory—Rate of progress across different modules.

By applying dynamic weighting, the system can adjust competency assessments based on student behavior, performance trends, and AI-driven analytics. This prevents rigid assessment structures and allows for a more adaptive learning experience.

Each competency is structured hierarchically, meaning that subsequent competencies build upon previous ones. To ensure a logical progression of learning, the relationship between competencies can be established using weighted attributes. The weight distribution of competencies plays a crucial role in structuring the learning path, as it determines the emphasis placed on each competency within the course.

To establish a structured approach to competency assessment, a comparative analysis of international knowledge assessment frameworks was conducted, including PISA 2018 [14], the revised Bloom’s taxonomy [15], and the SOLO taxonomy [16]. These studies highlight the importance of defining competencies in a clear, organized manner to enhance learning outcomes [17]. Based on this analysis, a generalized competency structure was developed, which categorizes competencies into three progressive levels:

- Understands—The ability to grasp basic concepts, definitions, and terminology, forming the foundation of knowledge acquisition.

- Evaluates—The ability to compare, analyze, and assess different tools, resources, or approaches in a given context, demonstrating critical thinking.

- Implements—The ability to apply acquired knowledge independently in practical or problem-solving scenarios, ensuring competency in real-world applications.

This three-level model aligns with the principles of Bloom’s taxonomy by incorporating sublevels that refine the complexity of acquired skills. By structuring competencies in this manner, the course material can be more effectively sequenced, supporting students in gradual skill development while fostering deeper engagement in learning.

The percentage distribution of competencies is typically determined by the teacher designing the course structure, allowing for flexibility in defining which competencies should be prioritized. Table 2 presents two approaches to competency distribution:

Table 2.

Competencies distribution.

- A uniform distribution, where all competencies are weighted equally.

- A teacher-defined distribution, which assigns different weights based on the importance and complexity of each competency in the learning process.

By integrating computer-driven assessment, this model allows for dynamic adjustments to competency weightings, ensuring that students receive personalized and adaptive learning experiences based on their progress.
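To illustrate how the choice between a uniform and a teacher-defined distribution (Table 2) shifts the emphasis of an assessment, the minimal Python sketch below aggregates a learner’s per-competency mastery into one weighted score. The mastery values are hypothetical, the helper name is invented for illustration, and the plain weighted-average form is an assumption rather than the study’s formula; the teacher-defined weights reuse the 38/28/34 split derived later in this section.

```python
def weighted_competency_score(mastery: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted average of per-competency mastery (hypothetical helper).

    Weights need not sum to 1; they are normalized by their total.
    """
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must have a positive sum")
    return sum(weights[c] * mastery[c] for c in weights) / total_weight


# Hypothetical learner: strong on theory, weaker on application (0..1 scale).
mastery = {"Understands": 0.9, "Evaluates": 0.6, "Implements": 0.4}

uniform = {c: 1.0 for c in mastery}                               # equal weights
teacher = {"Understands": 38, "Evaluates": 28, "Implements": 34}  # teacher-defined, in %

print(round(weighted_competency_score(mastery, uniform), 3))  # 0.633
print(round(weighted_competency_score(mastery, teacher), 3))  # 0.646
```

With equal weights the weak application score pulls the result down more than under the teacher-defined split, which shifts weight toward foundational knowledge.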

One ECTS credit corresponds to 25 to 30 h of work. Since workload is an estimate of the average time students need to achieve the expected learning outcomes, the actual time spent by an individual student may differ from this estimate [18]. In Estonia, in accordance with the University Act, 1 ECTS equals 26 h; a subject with a workload of 3 ECTS therefore comprises 78 academic hours (ac. h.), including 32 ac. h. of classroom work and 46 ac. h. of independent work. The relevant parts of the program descriptions were extracted from Tahvel, the Estonian educational information system used at TTK UAS, and processed as follows:

- Predefined inputs—The program name, learning outcomes (LOs), ECTS, and the percentage assigned to each competency (Understands, Evaluates, Implements);

- Computation—Total hours per subject are calculated as ECTS × 26. Each competency’s percentage is applied to the total to obtain its hours, which are then converted back to ECTS portions;

- Outputs—Competencies were mapped to the learning outcomes, and the main cognitive competencies were summarized. The planned time for acquiring each competency was calculated from its percentage share. The computation output is shown in Table 3 and Table 4.
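The computation step above can be sketched in a few lines of Python. This is a minimal illustration that assumes only the 26 h per ECTS rule stated in the text; the function name and return layout are hypothetical and are not taken from the study’s code [19].

```python
HOURS_PER_ECTS = 26  # Estonian rule stated in the text: 1 ECTS = 26 h


def allocate_competency_time(ects: float, shares: dict[str, float]) -> dict[str, tuple[float, float]]:
    """Split a subject's workload among competency types.

    shares maps competency name -> percentage (must sum to 100).
    Returns {name: (hours, ects_portion)}.
    """
    if abs(sum(shares.values()) - 100) > 1e-9:
        raise ValueError("competency percentages must sum to 100")
    total_hours = ects * HOURS_PER_ECTS  # e.g. 3 ECTS -> 78 h
    result = {}
    for name, pct in shares.items():
        hours = total_hours * pct / 100        # hours for this competency
        result[name] = (hours, hours / HOURS_PER_ECTS)  # converted back to ECTS
    return result


split = allocate_competency_time(3, {"Understands": 38, "Evaluates": 28, "Implements": 34})
for name, (hours, portion) in split.items():
    print(f"{name}: {hours:.2f} h = {portion:.2f} ECTS")
```

For a 3 ECTS subject (78 h) with a 38/28/34 split, this gives 29.64 h, 21.84 h, and 26.52 h, that is, 1.14, 0.84, and 1.02 ECTS. A uniform distribution would pass roughly 33.33% for each competency instead.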

Table 3. Learning subjects’ learning outcomes and competencies.

Table 4. E-course resource competence indication.

Averaging the hours of each competence type across the analyzed subjects gave the following values:

- Understands/knows—Covers foundational knowledge required to progress further and to establish a basis for subsequent learning; Σ(1st competence type hours)/12 (1st competence type count) = 28.47 h, or 38%;

- Evaluates—Builds on foundational knowledge with intermediate reasoning, focusing on analytical skills and bridging understanding and application; Σ(2nd competence type hours)/14 (2nd competence type count) = 21.06 h, or 28%;

- Implements—Focuses on practical implementation and skill mastery and carries slightly more weight than Evaluates because of its real-world relevance; Σ(3rd competence type hours)/12 (3rd competence type count) = 24.96 h, or 34%.

In total, this gives 74.5 h, which corresponds to the planned volume of hours for acquiring the competencies of a basic 3 ECTS subject with an accuracy of 95.54%. Therefore, for further analysis, it is advisable to use data from 3 ECTS courses with a competency type distribution of 38%, 28%, and 34%.
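The percentages above follow from dividing each average by the total of the three averages. The short sketch below reproduces that step from the averages reported in the text; the variable names are illustrative.

```python
# Average hours per competence type, as reported in the text.
avg_hours = {"Understands": 28.47, "Evaluates": 21.06, "Implements": 24.96}

total_hours = sum(avg_hours.values())  # about 74.5 h
shares = {name: round(100 * hours / total_hours) for name, hours in avg_hours.items()}

print(f"total = {total_hours:.2f} h")  # total = 74.49 h
print(shares)                          # {'Understands': 38, 'Evaluates': 28, 'Implements': 34}
```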

2.2. Competency Indicator Calculation

To ensure the quality of ECTS, clear information about their level and assessment, as well as the realistic workload, must be available.

The time to achieve a certain level of competence depends on the number of resources used in the e-course.

To check the course structure, and whether the sequence of educational resources proposed by the teacher is compatible with acquiring the necessary competencies, an indicator is needed that not only supports analysis of the course structure but also helps optimize the student’s educational trajectory. This indicator is called the resource-competence indicator. Its calculation uses resource features that are available when developing the course structure and determining the hierarchical order of resources within it: the level and sublevel of the resource in Bloom’s taxonomy, which determine its complexity; the order of the resource in the hierarchical structure of the course; and the planned time for studying the resource, derived from the number of credits of the entire course and distributed equally among the course’s educational resources.

The formula for calculating the educational resource competence indicator is based on the fundamental principles of multi-criteria decision making and weighted assessment and combines the factors of Bloom’s level weight, educational resource weight, and the credit portion of the educational resource into a single structure, reflecting the balance between complexity, resource weight, and planned time in accordance with their assigned importance (Table 3).

$$C_n = W_{iD} + W_{nT} + W_{nECTS},$$

where

$n$ is the learning resource (tool) contribution (Table 4, columns 6, 7);

$C_n$ is the competence indicator of the $n$-th tool (Table 4, columns 18, 19);

$W_{iD}$ is the $i$-th difficulty weight, with $\sum W_{iD} = 1$, where $i$ is the Bloom level (Table 4, columns 4, 5) and

$$W_{iD} = \frac{i}{\sum i} = \frac{i}{100};$$

$W_{nT}$ is the weight of the $n$-th learning resource (tool) (Table 4, columns 14, 15), with $\sum W_{nT} = 1$, where

$$W_{nT} = \frac{1}{\sum T} \times n;$$

$W_{nECTS}$ is the competence ECTS weight of the $n$-th tool (Table 4, columns 16, 17), with $\sum W_{nECTS} = 1$, where (see Table 4, columns 10, 11)

$$W_{nECTS} = \frac{3 \times p}{100} \times \sum k_p, \qquad p \in \{38, 28, 34\};$$

$p$ is the percentage share of the tool’s competence type, and $k_{38}$, $k_{28}$, and $k_{34}$ are the numbers of competences of the 1st, 2nd, and 3rd types, respectively.

This distribution assigns the largest share, 38%, to basic knowledge and splits the remaining 62% between the comparison (28%) and application (34%) levels.

If the teacher considers that the percentage distribution across levels should differ from the proposed one, the coefficients in the formula must be changed accordingly.
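A minimal pandas sketch of $C_n = W_{iD} + W_{nT} + W_{nECTS}$ follows. It uses hypothetical column names and data, and it normalizes each component by its column total so that each weight sums to 1, as the definitions above require. For $W_{nECTS}$ it normalizes each resource’s planned ECTS portion rather than reproducing the exact coefficient expression, so it should be read as an approximation of the study’s Excel calculation, not a copy of it.

```python
import pandas as pd


def resource_competence_indicator(df: pd.DataFrame) -> pd.DataFrame:
    """Compute C_n = W_iD + W_nT + W_nECTS for every resource (row).

    Hypothetical input columns:
      bloom_level  - Bloom level/sublevel score i of the resource
      contribution - tool contribution n (position in the course)
      planned_ects - planned ECTS portion of the resource
    """
    out = df.copy()
    out["W_iD"] = out["bloom_level"] / out["bloom_level"].sum()       # i / sum(i)
    out["W_nT"] = out["contribution"] / out["contribution"].sum()     # n / sum(T)
    out["W_nECTS"] = out["planned_ects"] / out["planned_ects"].sum()  # share of course ECTS
    out["C_n"] = out["W_iD"] + out["W_nT"] + out["W_nECTS"]
    return out


# Hypothetical 3 ECTS course with five resources in teacher-defined order.
# Bloom scores sum to 100, so W_iD = i / 100 as in the text; planned ECTS split
# 3 x 38% over two resources, 3 x 28% over two, and 3 x 34% over one.
course = pd.DataFrame({
    "bloom_level": [10, 15, 20, 25, 30],
    "contribution": [1, 2, 3, 4, 5],
    "planned_ects": [0.57, 0.57, 0.42, 0.42, 1.02],
})
result = resource_competence_indicator(course)
print(result[["W_iD", "W_nT", "W_nECTS", "C_n"]].round(3))
```

Because each of the three components sums to 1, the indicators of all resources sum to 3; in this example they also rise along the learning path.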

To apply the formula and obtain a more generalized result, the competencies of the educational materials of two e-courses, “CAD Design” (131, 3 ECTS) and “Engineering Graphics” (116, 3 ECTS), were determined. Each competence was assigned a weight based on its relative importance, competence type (Table 3, column 4), and level of complexity, in accordance with the order of study of the educational material set by the teacher (Table 3, columns 12, 13). Table 4 summarizes the data for both subjects after the formulas were applied in Excel.

The statistical metrics used in the study are as follows:

- Mean: The average value of the competency indicator across all observations, providing a central tendency measure of student performance.

- Variance: A measure of the spread of the competency indicator values, indicating how much the individual scores deviate from the mean. A high variance suggests a wide range of performance levels among students.

The analysis of these statistical metrics of Table 4 data—changes in mean and variance at different weights, hypothesis testing, and confidence intervals—was performed for subject 131 and showed the following results [19] (Analyze_statistics, 1) (Table 5).

Table 5.

E-course 131 resource statistical metrics.

Results explanation:

- Tool’s competence indication (Cni_131) fluctuates significantly, meaning that different students or instruments have a large range of competence scores.

- Tool’s competence ECTS (WnECTS_131) has a larger effect than WnT_131 but is also more variable.

- Tool’s weight (WnT_131) is more stable—it makes a smaller contribution but in a more predictable way.

There is no clear statistical difference between WnECTS_131 and WnT_131, meaning that they may work in a similar way.
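The descriptive metrics used above can be reproduced with Python’s standard library. The sketch computes the mean, the sample and population variance, and a confidence interval for the mean. It uses a normal approximation for the interval, which is an assumption of this sketch (a t-based interval, which statistical packages typically use for small samples, would be somewhat wider), and the input values are placeholders, not Table 5 data.

```python
import math
from statistics import NormalDist, mean, pvariance, variance


def describe(values: list[float], confidence: float = 0.95) -> dict:
    """Mean, variances, and a normal-approximation confidence interval for the mean."""
    if len(values) < 2:
        raise ValueError("need at least two observations")
    m = mean(values)
    s2 = variance(values)                           # sample variance (n - 1 denominator)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    half_width = z * math.sqrt(s2 / len(values))    # z times the standard error of the mean
    return {
        "mean": m,
        "sample_variance": s2,
        "population_variance": pvariance(values),   # n denominator
        "ci": (m - half_width, m + half_width),
    }


# Placeholder competence-indicator values for a handful of resources.
stats = describe([0.36, 0.47, 0.54, 0.66, 0.97])
print(stats)
```

In pandas, `Series.var()` also defaults to the sample variance (`ddof=1`), so the two approaches agree.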

When the teacher develops the structure of a course, the tabular data can be used to visualize the proposed learning path. The heat map shows the increasing difficulty of the course (Figure 1).

Figure 1.

E-course CAD design (131, 3 ECTS) structure visualization with a learning path.

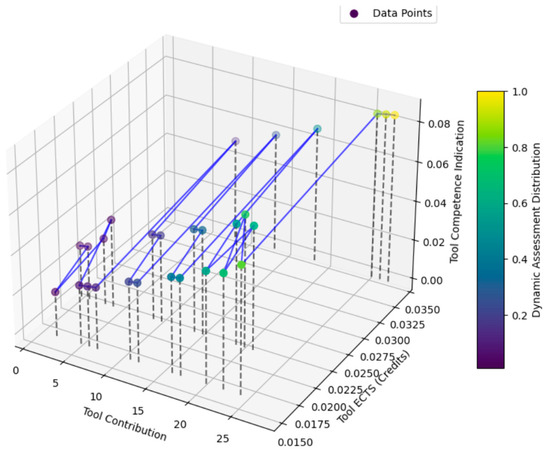

To produce a 3D visualization from the Excel table, pandas is used to manipulate the data, matplotlib to create the visualization, and mpl_toolkits.mplot3d to plot the 3D graphs [19] (code_1, code_2).

The components of the methodology are as follows:

- Data processing with pandas. The pandas library is used to load, manipulate, and organize the data stored in the Excel file into a DataFrame. The Tool_contribution, Tool_ECTS, and Tool_competence_indication columns are extracted as the basis for the visualization.

- Visualization with matplotlib. Matplotlib, a widely used Python library for creating static, interactive, and animated visualizations (run here under Python 3.11), is used to create a figure with predefined dimensions. A subplot configured with 3D plotting capabilities is added to this figure to support the spatial representation of the data.

- Three-dimensional plots with mpl_toolkits.mplot3d. This module represents data points in a 3D coordinate system and improves the interpretation of spatial relationships between them. A 3D scatter plot shows the individual data points. Plot options include the coordinates of each point, marker color and size, vertical and horizontal support lines, and lines connecting successive points to emphasize their relationships.

This visualization method effectively represents the relationships between the Tool_contribution, Tool_ECTS, and Tool_competence_indication parameters. The approach supports the identification of trends, patterns, and outliers, offering a spatial perspective that improves interpretability.
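A minimal sketch of this pipeline follows: a pandas DataFrame, a matplotlib figure with a 3D subplot, and an mplot3d scatter with connecting and vertical support lines. It is not the study’s code_1/code_2 [19]. The data are hypothetical, the non-interactive Agg backend is chosen so the script runs without a display, and in practice the DataFrame would be loaded with `pd.read_excel` from the course table.

```python
import os
import tempfile

import matplotlib
matplotlib.use("Agg")  # render without a display
import matplotlib.pyplot as plt
from mpl_toolkits.mplot3d import Axes3D  # noqa: F401  (registers the 3D projection on older matplotlib)
import pandas as pd


def plot_learning_path(df: pd.DataFrame, path: str) -> str:
    """Save a 3D scatter of the learning path and return the file path."""
    x = df["Tool_contribution"]
    y = df["Tool_ECTS"]
    z = df["Tool_competence_indication"]

    fig = plt.figure(figsize=(10, 7))
    ax = fig.add_subplot(111, projection="3d")
    ax.scatter(x, y, z, c=z, cmap="viridis", s=50)  # one marker per resource, colored by indicator
    ax.plot(x, y, z, color="gray", linewidth=1)     # connect successive resources
    for xi, yi, zi in zip(x, y, z):
        # vertical support line from the base plane up to each point
        ax.plot([xi, xi], [yi, yi], [0, zi], color="lightgray", linestyle=":")
    ax.set_xlabel("Tool_contribution")
    ax.set_ylabel("Tool_ECTS")
    ax.set_zlabel("Tool_competence_indication")
    fig.savefig(path)
    plt.close(fig)
    return path


# Hypothetical course data (normally: pd.read_excel(<course table>)).
data = pd.DataFrame({
    "Tool_contribution": [1, 2, 3, 4, 5],
    "Tool_ECTS": [0.57, 0.57, 0.42, 0.42, 1.02],
    "Tool_competence_indication": [0.36, 0.47, 0.54, 0.66, 0.97],
})
out = plot_learning_path(data, os.path.join(tempfile.gettempdir(), "learning_path_3d.png"))
print(out)
```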

2.3. Competence Indicator Calculation with Dynamic Assessment

Final qualifications should reflect assessments, not just credits.

Therefore, the competency indicator formula must be refined to account for dynamic changes in assessment. For this purpose, the formula was extended with an additional dynamic assessment attribute:

$$C_A = C_n + D_A,$$

where $C_A$ is the competence indicator with assessment, $C_n$ is the competence indicator of the $n$-th tool, and $D_A$ is the dynamic component assessment:

$$D_A = W_A \times f_A,$$

where $W_A$ is the assessment weight (0.1 to 1.0) and $f_A = A^2$ is the assessment function, which translates the assessment score $A$ into a competency contribution through a nonlinear (quadratic) relation.
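For a single resource, the two formulas above translate directly into code. The sketch assumes the assessment score $A$ is normalized to the range 0 to 1 (the text does not fix its scale); the function names are hypothetical.

```python
def dynamic_assessment(w_a: float, score: float) -> float:
    """D_A = W_A * f_A, with the quadratic assessment function f_A = A**2."""
    if not 0.1 <= w_a <= 1.0:
        raise ValueError("assessment weight W_A must lie in [0.1, 1.0]")
    return w_a * score ** 2


def competence_with_assessment(c_n: float, w_a: float, score: float) -> float:
    """C_A = C_n + D_A."""
    return c_n + dynamic_assessment(w_a, score)


# Hypothetical resource: C_n = 0.1, W_A = 0.5, A = 0.5 (assumed 0..1 scale).
print(competence_with_assessment(0.1, 0.5, 0.5))
```

Because $f_A$ is quadratic, doubling the score quadruples $D_A$ at a fixed weight, so high scores dominate the dynamic component.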

To apply the dynamic competency formula and process the obtained data [19] (dataset_1), pandas was used for data processing and openpyxl for reading and writing the Excel files [19] (code_3). The dynamic formula includes factors such as Bloom taxonomy level weights, resource weights, and scores. The updated data are then saved to a new Excel file. Subsequently, the data from several tables, organized by assessment rating from 0.1 to 1, are combined into one comprehensive table [19] (dataset_2). The extracted data are shown in Table 6.
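The per-rating processing and merging step might look roughly like the following in pandas. The sketch treats each rating from 0.1 to 1.0 as the assessment weight $W_A$, which is an interpretation of the text rather than a confirmed detail. It also builds the tables in memory, and the column names and data are hypothetical.

```python
import pandas as pd


def add_dynamic_assessment(df: pd.DataFrame, w_a: float, score_col: str = "A") -> pd.DataFrame:
    """Append W_A, D_A = W_A * A**2, and C_A = C_n + D_A to a copy of df."""
    out = df.copy()
    out["W_A"] = w_a
    out["D_A"] = w_a * out[score_col] ** 2
    out["C_A"] = out["C_n"] + out["D_A"]
    return out


# Hypothetical base table: competence indicator C_n and assessment score A per resource.
base = pd.DataFrame({"C_n": [0.30, 0.45, 0.60], "A": [0.4, 0.7, 0.9]})

ratings = [round(0.1 * k, 1) for k in range(1, 11)]  # 0.1, 0.2, ..., 1.0
combined = pd.concat(
    [add_dynamic_assessment(base, r) for r in ratings],
    ignore_index=True,  # one continuous index for the merged table
)
# In the described workflow, each input table would be read with pd.read_excel(...)
# and the merged table written with combined.to_excel(path, index=False, engine="openpyxl").
print(combined.head())
```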

Table 6.

E-course resource competence indication with assessment.

The analysis of statistical metrics of competence indication, weight, and ECTS (European Credit Transfer and Accumulation System) (Table 6)—including changes in mean and variance at different weights, hypothesis testing, and confidence intervals—performed for subject 131 showed the following results [19] (Analyze_statistics, 2) (Table 7).

Table 7.

E-course 131 resource updated statistical metrics.

The means and variances are small, indicating limited variation in Tool_competence_indication, Tool_weight, and Tool_ECTS and, therefore, consistency in the data.

The next step involves testing the developed formula to verify its validity for use in further data processing.

2.4. Testing the Reliability of the Formula

To test the reliability of a formula in the context of educational or competency assessment, a systematic approach involving verification, testing, and analysis was taken.

A higher complexity weight yields a higher competency indicator. This confirms the consistency of the competency indicator formula, which uses the weight parameters of competency attributes, learning resources, and credits (planned time of resource use).

The formula was cross-checked for correctness against the Excel spreadsheet data, using the pandas library to calculate the competency score with the provided formula [19] (code_4); the results were recorded in computed_results.xlsx [19] (dataset_3). The method is implemented in Python to ensure reproducibility and to handle different input configurations, such as different Bloom levels or instrument contributions (Table 8).
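The cross-check amounts to recomputing $C_n$ from its components and comparing it with the stored value. A plain-Python sketch with hypothetical field names and tolerance is shown below; with pandas, the same comparison would be a vectorized operation on columns.

```python
import math


def cross_check(rows: list[dict], rel_tol: float = 1e-6) -> list[dict]:
    """Recompute C_n = W_iD + W_nT + W_nECTS and flag rows that disagree with the stored value."""
    report = []
    for index, row in enumerate(rows):
        computed = row["W_iD"] + row["W_nT"] + row["W_nECTS"]
        report.append({
            "row": index,
            "input": row["C_n_input"],
            "computed": computed,
            "match": math.isclose(computed, row["C_n_input"], rel_tol=rel_tol),
        })
    return report


rows = [
    {"W_iD": 0.10, "W_nT": 0.20, "W_nECTS": 0.30, "C_n_input": 0.60},  # consistent row
    {"W_iD": 0.10, "W_nT": 0.20, "W_nECTS": 0.30, "C_n_input": 0.90},  # mismatching row
]
for entry in cross_check(rows):
    print(entry["row"], entry["match"])
```

A relative tolerance avoids false mismatches caused by floating-point rounding in sums such as 0.1 + 0.2 + 0.3.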

Table 8.

Cross-validation results comparison.

Comparison results explanation:

The input Tool Competence Indication (0.121572) does not match the computed Cn (0.044132642) because the two values were obtained by different calculation methods that use different scaling. To align the computed Cn with the input value, normalization [20] with adjustable coefficients must be applied. These coefficients would account for the different approaches teachers use in assigning weighting parameters.

2.5. Distinction Between Existing and Proposed Methods

Traditional competency-based assessment models, such as Item Response Theory (IRT) [10] and machine learning-based optimization methods [11], have been widely used to automate competency evaluation. These models rely on static weighting systems and predefined parameters, which lack the ability to adapt to real-time student performance. Crowdsensing models [12] have also been employed to optimize engagement and participation, but they often fail to provide personalized feedback or dynamically adjust to individual learning trajectories. While these methods have improved the efficiency of competency assessment, they face challenges in terms of adaptability, personalization, and real-time data integration.

In contrast, the present approach introduces a dynamic weighting system that adjusts competency assessments based on real-time student behavior and performance trends. Unlike traditional models, which treat competencies as static entities, our method integrates weighted attributes and a dynamic assessment parameter. This allows for continuous optimization of learning pathways, ensuring that the assessment process is both adaptive and personalized. Specifically, the proposed method:

- Utilizes weighted attributes to prioritize competencies based on their relevance and complexity.

- Incorporates a dynamic assessment parameter that adjusts in real time based on student performance, providing actionable insights for both learners and instructors.

- Employs 3D visualization to represent competency progression, enabling a clearer understanding of learning trajectories and areas for improvement.

By bridging the gap between static competency frameworks and adaptive learning path optimization, our approach addresses the limitations of existing methods and provides a more effective solution for e-learning optimization.

3. Results

To verify the formula’s reliability, an error analysis was performed [19] (code_4). Using the pandas library, the CA competence indicator was calculated for each row of the Excel data, and the errors were analyzed. When testing the formula, the Bloom level weights were normalized by their sum, the instrument weights were normalized by their sum, the credit weights were normalized and scaled, and the competency indicator was then calculated as the sum of these elements. The dynamic assessment and the competency indicator with dynamic assessment were calculated without normalization or scaling (Formulas (5) and (6)). The results were saved with the calculated columns in a new Excel file [19] (dataset_3) (Table 9).

Table 9.

Error analysis results.

Observation and results explanation:

Mismatch in CA: The calculated CA (5.098247021) is significantly higher than the expected CA (0.01). This indicates a potential scaling issue or misalignment in the expected values.

Dynamic Assessment Dominance: The DA component (0.098247021) is disproportionately large compared to the Cn value (0.121572299). This heavily influences CA.

Scaling Issue in CA: The scaled competency indicator, CA(0.01) scaled = 0.01, does not appear to align with the calculated CA, suggesting a scaling mismatch.

3.1. Component Weight Normalization

To refine the formula, the weights of all components were normalized, the results were recalculated, and the data were analyzed again; the results are given in Table 10.

Table 10.

Error analysis results for updated data.

The Error column (Table 8) shows small negative values, indicating that the estimated CA (after scaling) is lower than the expected CA. This suggests that the formula parameters are sound but can be adjusted to bring the predictions closer to the expected values and improve accuracy. Normalization coefficients NW for the weighted parameters, reflecting the importance the course developer assigns to each parameter, were determined and used to recalculate the data:

$N_{WD} = 0.5$, $N_{WT} = 0.3$, and $N_{WECTS} = 0.2$, where $\sum N_W = 1$.

These normalization coefficients (0.5, 0.3, and 0.2) reflect the relative importance of the weight parameters in this generalized course and can be changed by the course developer depending on the content of a particular course.

The scaling factor applied to the results is approximately 5.256 and is consistent across all rows apart from minor rounding differences [21]. It brings the raw CA values to a smaller scale so that they are expressed in the same range as Expected_CA. Without scaling, the differing ranges would produce misleading error values, since the raw CA values may naturally lie in a larger or smaller range. Here, CA was divided by approximately 5.256 to obtain CA scaled [19] (dataset_4).
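The normalization coefficients and the scaling factor can be combined into a row-wise error computation, sketched below. Applying NW as multipliers on the three weight components before summing them is an assumption about how the coefficients enter the formula. The values NW = 0.5/0.3/0.2 and the factor 5.256 come from the text, while the input values are hypothetical. The sign convention Error = CA_scaled − Expected_CA matches the observation that negative errors mean the scaled CA falls below the expected CA.

```python
NW = {"D": 0.5, "T": 0.3, "ECTS": 0.2}  # normalization coefficients from the text (sum to 1)
SCALE = 5.256                           # approximate scaling factor from the text


def scaled_error(w_id, w_nt, w_nects, d_a, expected_ca, nw=NW, scale=SCALE):
    """Return (CA_scaled, Error), where Error = CA_scaled - Expected_CA.

    Assumes the coefficients weight the components:
    C_n = NWD * W_iD + NWT * W_nT + NWECTS * W_nECTS.
    """
    c_n = nw["D"] * w_id + nw["T"] * w_nt + nw["ECTS"] * w_nects
    ca = c_n + d_a            # C_A = C_n + D_A
    ca_scaled = ca / scale    # bring raw C_A into the range of Expected_CA
    return ca_scaled, ca_scaled - expected_ca


ca_scaled, error = scaled_error(w_id=0.2, w_nt=0.1, w_nects=0.3, d_a=0.1, expected_ca=0.06)
print(f"CA_scaled = {ca_scaled:.4f}, Error = {error:.4f}")  # a negative Error means below expectation
```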

3.2. The Proposed Solution Validation

The proposed method was tested on data from the e-course Applied Mathematics (123, 6 ECTS), the subject taken by the most first-year technical students (Table 1).

Testing with machine learning algorithms [19] (code_5) at ratings of 0.1 and 0.5 confirmed the validity of the formula. The data are presented in Table 11, and the full results table is available in [19] (dataset_5).

Table 11.

Error analysis results for e-course Applied Mathematics 123, 6 ECTS data.

The Error column again shows small negative values, meaning that the scaled CA is below the expected CA. The normalization factors N for the weighted parameters, set according to the importance of the parameters chosen by the course developer, were also used to rescale the data:

$N_{WD} = 0.5$, $N_{WT} = 0.3$, and $N_{WECTS} = 0.2$, where $\sum N_W = 1$.

The scaling factor used for the results is approximately 5.256 and was applied to bring the raw CA values to a smaller scale for comparison with Expected_CA.

4. Discussion and Future Directions

The developed method, verified through computer-driven assessment, allows flexible use of competency distribution coefficients when developing the e-course structure. The weighted component formula with a dynamic evaluation parameter is also promising for visualizing the student’s trajectory. Such visualization enables real-time feedback: teachers can adjust their teaching strategies, and students can adjust their learning methods while tracking how these changes affect their competency scores.

Using information about course learning resources improves the analysis of their use, learning paths, and assessment of student competence. Competency indicators are organized into graduated sub-levels, and students are expected to have developed certain skills at a previous stage of learning. The purpose of competency indicators is to help understand student development. Competency indicators record an identified stage of learning at one of three levels:

- Beginning—Understanding: The student has mastered the skill but only occasionally applies their understanding.

- Developing—Comparing: The student is beginning to apply their understanding.

- Advanced—Using: The student continually applies and develops knowledge and skills.

E-learning comes with various uncertainties that can impact both the learning process and learning outcomes. The main factors contributing to these uncertainties are:

- Technological Issues: Access to reliable technology is fundamental to e-learning. Students often face issues such as limited internet connectivity, lack of suitable devices, and technical problems that can hinder their learning. These issues can lead to decreased motivation and engagement [22].

- Learner Motivation and Self-Discipline: The flexibility of e-learning requires learners to be self-motivated and disciplined. However, some students find it difficult to manage their time and stay motivated without the structure of a traditional classroom. This lack of self-regulation can lead to procrastination and failure to complete assignments [23].

- Social Interaction and Engagement: E-learning environments often lack face-to-face interaction, which can lead to feelings of isolation among students. A lack of immediate tutor feedback and of collaboration between students can negatively impact learning outcomes and reduce satisfaction with the learning experience [24].

- Assessment and Feedback: Providing timely and constructive feedback in an e-learning environment can be challenging. Delays or lack of personalized feedback can prevent students from understanding their progress and areas for improvement [22].

- Course Design and Content Delivery: Poorly designed courses that do not utilize interactive elements can lead to disengagement. Effective e-learning requires thoughtful integration of multimedia, interactive activities, and clear instructions to promote active learning [23].

To address these uncertainties and further enhance the effectiveness of the developed method, the following strategies are proposed:

- Improving Technology Infrastructure: Ensuring reliable access to technology to reduce barriers and increase engagement.

- Incorporating Interactive Elements: Adding discussion forums, group projects, and collaborative activities to foster social interaction and reduce isolation.

- Diversifying Assessment Strategies: Using a variety of assessment methods and providing timely, personalized feedback to help students understand their progress.

- Designing Engaging Courses: Integrating multimedia and interactive elements to cater to diverse learning styles and promote active engagement.

Additionally, applying and refining the testing methodology and the computational formulas used in AI algorithms across a wider range of courses will help evaluate the effectiveness of course development.

The computer analysis and assessment used to evaluate competence is currently semi-automatic, which makes data processing less convenient and somewhat reduces the accuracy of the results. Further automation of data processing is therefore expected to increase accuracy and reduce the time spent on research.

Visualization could be integrated into existing learning management systems (LMS) so that competency assessments and tools update automatically as learning activity is recorded and the student’s knowledge portfolio grows. This would allow real-time tracking and feedback. Such visualization supports a holistic approach to real-time learning optimization, leading to continuous improvement and deeper understanding for both students and instructors.

Developing additional attributes such as time spent on a learning resource and resource difficulty ratings will provide a more complete picture of learning progress and tool effectiveness.
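Adding such attributes would also require revising the normalization factors so that they still sum to 1. One simple option, assumed here and not proposed in the paper, is to give the new attribute a share of the total and scale the existing factors by the remainder. The function `add_attribute` and the attribute name `TIME` are hypothetical.

```python
def add_attribute(factors: dict[str, float], name: str,
                  share: float) -> dict[str, float]:
    """Return new normalization factors that include an extra attribute.

    Assumption: the new attribute receives `share` of the total, and the
    existing factors are multiplied by (1 - share) so the sum stays 1.
    """
    if not 0.0 < share < 1.0:
        raise ValueError("share must be between 0 and 1 (exclusive)")
    if name in factors:
        raise ValueError(f"attribute {name!r} already exists")
    updated = {key: value * (1.0 - share) for key, value in factors.items()}
    updated[name] = share
    return updated


base = {"WD": 0.5, "WT": 0.3, "WECTS": 0.2}
# "TIME" is a hypothetical attribute for time spent on a learning resource.
extended = add_attribute(base, "TIME", 0.1)
print({key: round(value, 3) for key, value in extended.items()})
```

Proportional scaling keeps the relative importance of the original parameters unchanged (0.5 : 0.3 : 0.2). A course developer could instead set all factors manually.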

Statistical data often exhibit specific distribution characteristics that can significantly affect data analysis. Accounting for these characteristics requires specialized techniques, including weight optimization and regularization methods. For example, the study by [25] presents a nonparametric regularization approach to improve the accuracy of demodulating and separating amplitude-modulated (AM) and frequency-modulated (FM) signals. Because this regularization does not assume a specific parametric form for the underlying model, it offers flexibility in capturing complex signal structures. With this approach, the study achieves improved demodulation accuracy, demonstrating the effectiveness of such techniques in handling complex data characteristics.

5. Conclusions

The aim of this study was to develop and validate a weighted attribute method for optimizing e-learning using computer-aided evaluation of the generalized e-course structure.

The generalized e-course structure was determined based on four e-courses used continuously in the period 2021–2024 at TTK UAS, Estonia, for technical specialties: two 6 ECTS e-courses and two 3 ECTS e-courses.

The course structure was developed from the competencies expected when studying the educational material. It takes into account not only Bloom’s competency levels but also their sublevels. These sublevels are defined by groups of basic verbs describing competencies (understands/knows, evaluates/compares, implements/applies), combined with the necessary subject terminology.

The study applied the developed weighted attribute method in the formula for calculating the competency indicator with a dynamic assessment parameter, using normalization and scaling coefficients of the weighted parameters to rescale the data. Validation of the method showed only minor errors and confirmed the accuracy and precision of the calculations.

These calculations enable 3D data visualization, which supports assessment and decision-making. Visualizing the calculated results transforms complex data into an understandable, practically applicable format. Students can better focus on areas for improvement and plan their learning paths independently, while teachers can personalize learning and improve the curriculum. Together, they can continuously optimize the learning path to maximize competency development. By using this visualization iteratively, students and teachers can help ensure that learning is effective, targeted, and adaptive.

Author Contributions

Conceptualization, O.O.; methodology, O.O.; software, O.O.; validation, O.O. and E.S.; formal analysis, O.O.; resources, O.O. and E.S.; data curation, O.O.; writing—original draft preparation, O.O.; writing—review and editing, E.S.; visualization, O.O.; supervision, O.O.; project administration, O.O.; funding acquisition, O.O. and E.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

All materials used in this article are openly available. The processed data have been uploaded to figshare, and the code is provided as an interactive Colab Notebook hosted on GitHub. Both are available via the links in the References section.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| OECD | Organization for Economic Co-operation and Development |
| HEI | Higher Education Institutions |
| CBE | Competency-Based Education |
| IRT | Item Response Theory |
| HetIoT | Heterogeneous Internet of Things |
| TTK UAS | TTK University of Applied Sciences |
| ECTS | European Credit Transfer and Accumulation System |
| LMS | Learning Management Systems |
| LO | Learning Outcomes |
| AM | Amplitude-Modulated |
| FM | Frequency-Modulated |

References

1. Ovtšarenko, O. Opportunities of Machine Learning Algorithms for Education. Discov. Educ. 2024, 3, 209.

2. Hansen, C.T. Applications of Artificial Intelligence in Educational Assessment: Opportunities, Challenges, and Future Directions; Summit Institute: Auckland, New Zealand, 2024.

3. European Parliament and Council of the European Union. Recommendations on the European Qualifications Framework for Lifelong Learning (2008/C 111/01). Off. J. Eur. Union 2008, C111, 1–7.

4. OECD. OECD Future of Education and Skills 2030: OECD Learning Compass 2030; OECD Publishing: Paris, France, 2019; Available online: https://www.oecd.org/education/2030-project/ (accessed on 15 January 2025).

5. Stewart, C.L.; Dewan, M.A. A Systemic Mapping Study of Business Intelligence Maturity Models for Higher Education Institutions. Computers 2022, 11, 153.

6. Margienė, A.; Ramanauskaitė, S.; Nugaras, J.; Stefanovič, P.; Čenys, A. Competency-based e-learning systems: Automated integration of user competency portfolio. Sustainability 2022, 14, 16544.

7. Nabizadeh, A.H.; Gonçalves, D.; Gama, S.; Jorge, J.; Rafsanjani, H. Adaptive learning path recommender approach using auxiliary learning objects. Comput. Educ. 2020, 147, 103777.

8. Malhotra, R.; Massoudi, M.; Jindal, R. Shifting from traditional engineering education towards competency-based approach: The most recommended approach-review. Educ. Inf. Technol. 2023, 28, 9081–9111.

9. Kennedy, D. Writing and Using Learning Outcomes: A Practical Guide; University College Cork: Cork, Ireland, 2006.

10. Imamah, Y.; Djunaidy, A.; Purnomo, M.H. Enhancing students’ performance through dynamic personalized learning path using ant colony and item response theory (ACOIRT). Comput. Educ. Artif. Intell. 2024, 7, 100280, ISSN 2666-920X.

11. Child, S.F.; Shaw, S.D. A purpose-led approach towards the development of competency frameworks. J. Furth. High. Educ. 2020, 44, 1143–1156.

12. Cardone, G.; Foschini, L.; Bellavista, P.; Corradi, A.; Borcea, C.; Talasila, M.; Curtmola, R. Fostering participation in smart cities: A geosocial crowdsensing platform. IEEE Commun. Mag. 2013, 51, 112–119.

13. Qiu, T.; Chen, N.; Li, K.; Atiquzzaman, M.; Zhao, W. How Can Heterogeneous Internet of Things Build Our Future: A Survey. IEEE Commun. Surv. Tutor. 2018, 20, 2011–2027.

14. Lewis, S. PISA, Policy and the OECD; Springer: Singapore, 2020.

15. Widiana, I.W.; Triyono, S.; Sudirtha, I.G.; Adijaya, M.A.; Wulandari, I.G.A.A.M. Bloom’s revised taxonomy-oriented learning activity to improve reading interest and creative thinking skills. Cogent Educ. 2023, 10.

16. Biggs, J.B.; Collis, F.K. Evaluating the quality of learning: The SOLO taxonomy (Structure of the Observed Learning Outcome); Academic Press: Cambridge, MA, USA, 2014.

17. Wong, S.-C. Competency Definitions, Development and Assessment: A Brief Review. Int. J. Acad. Res. Progress. Educ. Dev. 2020, 9, 95–114.

18. European Commission: Directorate-General for Education, Youth, Sport and Culture. ECTS Users’ Guide; Publications Office: 2009. Available online: https://data.europa.eu/doi/10.2766/88064 (accessed on 15 January 2025).

19. Ovtsarenko, O. Python Algorithms and Datasets. Figshare Collect. 2024. Available online: https://figshare.com/account/home#/collections/7590089 (accessed on 23 January 2025).

20. Apicella, A.; Isgrò, F.; Pollastro, A.; Prevete, R. On the effects of data normalization for domain adaptation on EEG data. Eng. Appl. Artif. Intell. 2023, 123 Pt A, 106205.

21. Sharma, V. A Study on Data Scaling Methods for Machine Learning. Int. J. Glob. Acad. Sci. Res. 2022, 1, 31–42.

22. Romi Ismail, M. E-Learning Success: Requirements, Opportunities, and Challenges. In Reimagining Education-The Role of E-Learning, Creativity, and Technology in the Post-Pandemic Era; BoD–Books on Demand: Hamburg, Germany, 2023.

23. Higley, M. e-Learning: Challenges and Solutions. e-Learn. Ind. 2023. Available online: https://elearningindustry.com/e-learning-challenges-and-solutions (accessed on 15 January 2025).

24. Regmi, K.; Jones, L. A systematic review of the factors–enablers and barriers–affecting e-learning in health sciences education. BMC Med. Educ. 2020, 20, 91.

25. Hu, X.; Peng, S.; Guo, B.; Xu, P. Accurate AM-FM signal demodulation and separation using nonparametric regularization method. Signal Process. 2021, 186, 108131.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).