1. Introduction

Speech is the basic form of communication that humans use every day. Because of this, the possibility of communicating with machines through speech has been of interest for many decades. Over the past few years, language and speech models have acquired significant importance in society, mainly due to the recent advances in Natural Language Processing (NLP) and deep neural networks (DNNs). Current models, like Whisper [

1] and Wav2Vec 2.0 [

2], allow users to have fluent communication with conversational bots, either over text or speech, in real time. The improvements in speech processing have also been reflected in many other system features, for instance, the automatic captioning of speech generation for artificial text readers.

Despite these improvements, there are still many scenarios where speech processing needs improvement [

3,

4]. Automatic Speech Recognition (ASR) has achieved high scores in performance for common languages, but for low-resource languages, the performance of ASR models is still far from acceptable. In the case of the Arabic language, the word error rate is around 25% or even higher in some cases [

5,

6]. As demonstrated in previous studies, to improve their performance, ASR models can be trained applying pre-processing techniques such as segmentation, word modelling, or the detection of different environmental or speaker variations [

7,

8]. These tasks are modelled as speech classification tasks and can be easy to address when sufficient labelled data are available. However, for many languages, it is hard to find enough transcribed and labelled data to train a robust ASR model [

9].

In this work, we propose a pre-processing speech model that can perform three tasks at once: prosodic boundary detection (PBD), speaker change detection (SCD), and accent classification (AC). Our hypothesis is two-fold: (i) that a Wav2Vec 2.0 base model, as a feature extractor block, can be fine-tuned to extract the features from the audio that are needed to perform the three classification tasks correctly, and that (ii) this transformer block can generate context representations that share all these features. The most distinctive feature of our model is, precisely, that it is trained only on audio data. We assume that this can help to improve speech recognition performance in terms of precision and resource optimisation.

The rest of the text is organised as follows.

Section 2 presents a brief overview about past and recent methods for speech processing.

Section 3 describes the methodology and materials used in our experiments.

Section 4 reports on the experimental results, which are then discussed in

Section 5. Finally,

Section 6 provides the main conclusions and directions for future work.

2. A Brief Overview

In computer science, speech processing is defined as the study of speech signals and their processing methods. It encompasses a range of tasks, including classification, recognition, transformation, and the generation of speech data, among others. Speech processing tasks have traditionally been based on signal processing [

10,

11] and linguistic features [

12,

13,

14] until the growth of Deep Learning (DL) [

15,

16,

17,

18]. In this section, we focus on DL-based techniques applied to three tasks which are particularly relevant to our research: phrase boundary detection (PBD), speaker change detection (SCD), and accent classification (AC). As a convenient theoretical framework, a short description of the techniques applied to these tasks before and after the popularity of DNNs will be presented in order to locate this study in context.

Before Deep Learning, Hidden Markov Models (HMM) [

11,

19] were being applied for speech recognition (ASR) and became the dominant paradigm until DNNs started to outperform them [

20,

21]. Other speech tasks, such as voice activity detection (VAD), speaker recognition and diarisation, prosody detection and segmentation, among others, play an important role as speech pre-processing tasks to ensure optimal performance in ASR and speech generation (text-to-speech, TTS). Regarding these tasks, numerous alternative methods have been employed prior to the emergence of DNNs. Signal processing algorithms and linguistic feature methods have demonstrated a notable performance for VAD [

10], speaker diarisation [

22], classification problems [

23], and prosody-related tasks [

13].

Even though some of the former methods and algorithms are still in use [

24,

25], DNNs are currently the most popular method for speech pre-processing, as well as for ASR, to the point where most of the new methods for these tasks are based on or derived from DNNs (see [

8] for a comprehensive review).

2.1. Speech Processing Methods and Techniques

As DNNs became the standard for ASR tasks, the encoder-decoder architecture obtained the most promising performance. In this approach, the encoder extracts features from the input speech, while the decoder transforms those features into the desired output, namely a transcription [

26]. In this manner, the encoder learns to generate representations or embeddings that represent the extracted features. Different methods of representation are employed in the literature for speech processing tasks. For instance, i-vectors [

27], d-vectors [

28], and x-vectors [

29] are embeddings based on convolutional NNs (CNNs) and recurrent NNs (RNNs) that have resulted in improved performance in tasks such as speaker diarisation or VAD. However, transformer NNs [

30] have surpassed RNNs and CNNs in the context of speech processing tasks. The utilisation of self- and cross-attention transformer NNs allows parallelisation and performance improvements that explain the success of them in Natural Language Processing (NLP) tasks [

31].

2.2. Automatic Speech Segmentation

Despite the mentioned computational progress, automatic speech segmentation is still one of the most difficult challenges in speech processing. The variation in speaker characteristics, speaking style, and environment make the learning process of prosodic boundaries hard for machines [

32]. However, detecting and processing prosodic phrasing is relevant for many speech processing tasks as it can help to improve naturalness in speech synthesis and to enhance audio data for training [

33]. In the case of speech synthesis, the common scenario for the detection of prosodic boundaries is performed by relying solely on text [

34], while other systems rely solely on acoustic information [

35], or on a combination of both lexical and acoustic information [

24]. For the purpose of this study, we opted for the detection of prosodic boundaries based solely on acoustic information (cf.

Section 3. ‘Materials and Methods’).

Speaker change detection (SCD) is another speech segmentation task used to determine the boundaries between speakers in a conversation. In simpler words, SCD is the action to detect the moments when a speaker stops talking and another speaker starts to talk, regardless of the identity of the speakers. SCD is part of speaker diarisation systems (who talks and when) [

36], but it is also useful for speaker tracking in video data [

37] and for transcribing audio with multiple speakers [

38]. There are many approaches to performing this task based on DNNs, such as the previously mentioned x-vectors [

39] or methods based on self-attention mechanisms [

40,

41]. Overlapped segments (i.e., several speakers talking at the same time) are a common, recurrent problem in SCD. Many of the existing methods opt for the omission of the overlapped segments. However, similar DNN-based approaches are being applied specifically to overlapped speech detection [

42,

43,

44]. In addition, adding extra information during training through multi-tasking techniques has shown that multi-task models can achieve better results than models trained for only one task [

45].

2.3. Accent Identification

Before DNNs, studies in accent identification focused on leveraging linguistic features in statistical approaches. For instance, some of the features that have been combined with statistical analysis are prosodic parameters, syllable structure, and speaker characteristics [

23,

46,

47]. Recent studies are centred on DNNs for spoken language identification [

48], the generation of representations around features related to accent identification [

49,

50,

51] or other DL approaches for accent classification [

52,

53,

54]. Other recent approaches have explored the possibilities of combining accent identification and ASR as a viable way to improve the performance of ASR models [

55]. It is notable to mention that some methods, like the one presented by Ghorbani and Hansen [

56], have been able to reach better scores than human-perceived scores.

2.4. Joint and Multi-Task Learning in Speech Processing

Joint learning and multi-task learning are both approaches used in machine learning to leverage shared information across tasks. In speech processing, this approach was demonstrated to be beneficial and to achieve better performance in many tasks [

57]. The method presented in [

58] shows that joint training could help the encoder of the NN to learn transferable, robust, and problem-agnostic features that carry relevant information from the speech signal, which contributes to discovering general representations. For speech segmentation, joint and multi-task frameworks have been applied to prosodic boundary detection and SCD with successful results [

35,

45,

59]. With regard to joint learning methods involving accent classification, recent studies have shown high accent classification performance by adding this task to speech recognition models [

60,

61,

62,

63].

3. Material and Methods

The main goal of the research presented in this paper is to develop an innovative multi-task training approach for PBD, SCD, and accent classification by relying only on acoustic information. To address this multi-task problem, we fine-tuned a Wav2Vec 2.0 model for audio frame classification with three classification layers on top of the model, one layer for each task. For this experiment, we used an American English oral corpus as a base dataset for training and validation of the models. This corpus was pre-processed using different methods for each task, which led to the generation of new datasets adapted for the purpose of the experiment. The following sections provide detailed information on the base dataset selected and the transformations applied to generate the final datasets, as well as the methods applied for the training, evaluation, and validation of the models.

3.1. Speech Corpus Used in the Experiment

The selected corpus for the experiment is the Santa Barbara Corpus of Spoken American English (SBCSAE) (the SBCSAE corpus can be accessed and downloaded here:

https://www.linguistics.ucsb.edu/research/santa-barbara-corpus (accessed on 15 January 2025)) [

64]. This is a prosodically annotated speech corpus (also called a spoken corpus), which encompasses a total of 60 transcribed and annotated conversations, including the timestamps at the level of individual intonation units. The corpus is based on a large body of recordings of naturally occurring spoken interaction from all over the United States, representing a wide variety of people of different regional origins, ages, occupations, genders, and ethnic and social backgrounds. The predominant form of language use represented is face-to-face conversation, but the corpus also documents many other ways people use language in their everyday lives: telephone conversations, card games, food preparation, on-the-job talk, classroom lectures, sermons, storytelling, town hall meetings, tour-guide spiels, and more. The transcriptions are formatted in one utterance per line, together with the initial and final timestamps, the name of the speaker, and the intonation information of the utterance. In addition, the metadata of each conversation includes a short description and the location where it was recorded. All this information makes the SBCSAE a useful dataset for many supervised learning tasks in speech processing. Given the purpose of this work, we are specifically interested in the timestamps for SCD and PB, and the location information for the accent detection.

3.2. Data Preparation

This section covers all the automatic pre-processing and annotation tasks applied to the dataset used for the experiments. The first two subsections explain the design of the annotation process for each task, while the last one describes how all the processes were applied automatically.

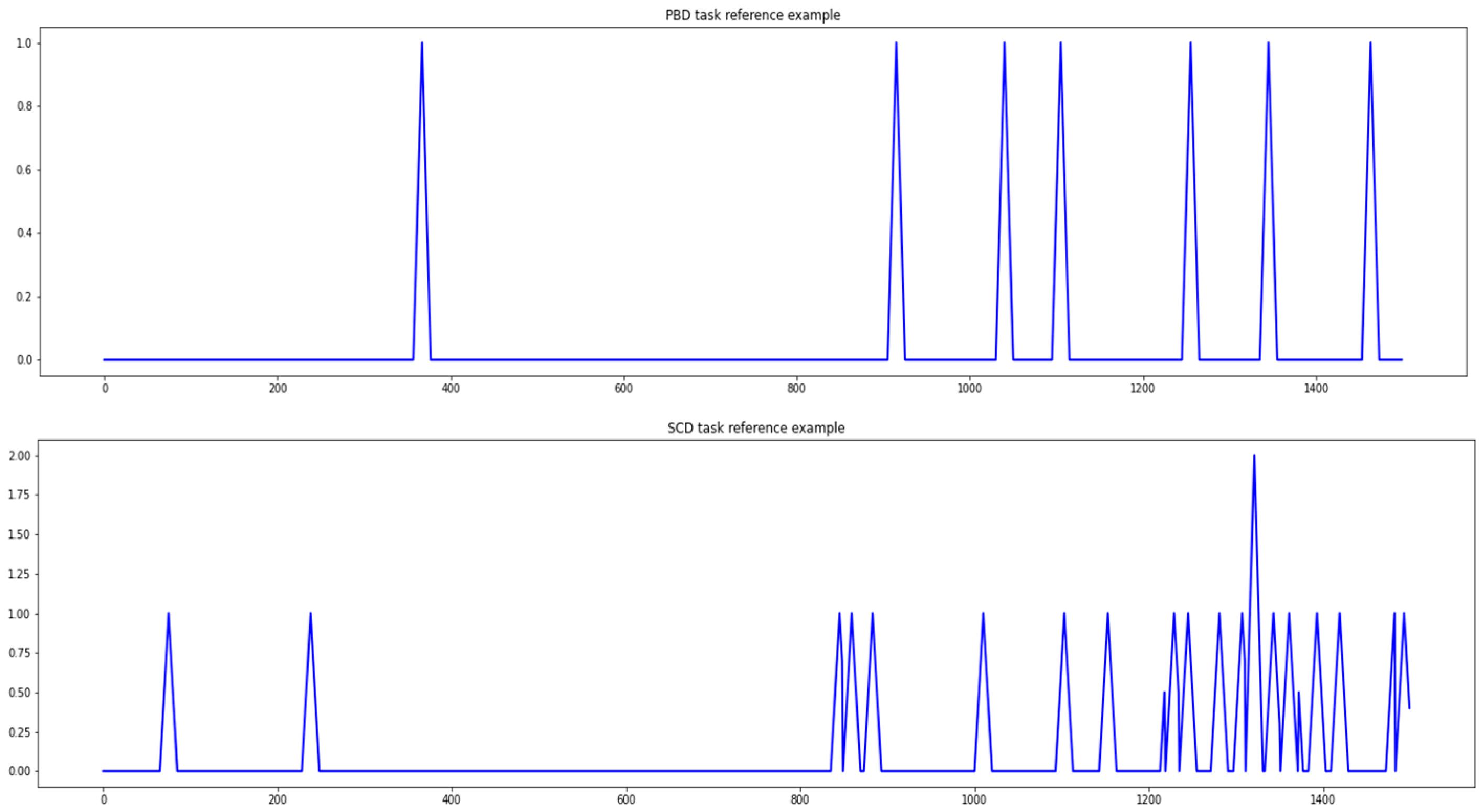

3.2.1. Audio Frame Labels

Audio segmentation can be seen as the act of slicing the audio into pieces following a specific criterion. Our approach aims at detecting the specific frames in which the speaker finishes an utterance or ends their intervention. For this reason, we needed to define the frames and assign specific labels for each frame. We followed the methodology presented in [

65], where the authors trained a prosodic boundary detection (PBD) model for the Czech language. In their work, the authors assigned a label every 20 ms of audio, giving a 1 if the frame contains a boundary, or a 0 if not. In addition, to avoid delays from the manual annotation, the frames around each prosodic boundary (PB) were given a label between 0 or 1, thus creating a linearly increasing and decreasing interval. For the PBD task, we followed this exact same method applied to the SBCSAE.

The SBCSAE includes the name of the speaker for each utterance in the transcript file. We used this information to create the reference data for the SCD task in a similar manner to previously mentioned PB references: we gave the label ‘1’ to the frames when a new speaker starts to talk and ‘0’ for later utterances of the same speaker. This method for label setting has one problem: it does not consider whether two or more speakers are talking at the same time. To address this issue, the SBCSAE corpus includes the labels “BOTH” and “MANY” when more than one person is speaking. This annotation was adapted to our labelling method by assigning the label ‘2’ to the frames that correspond to these utterances. This is also known as Overlapping Speech Detection (OSD). In addition, the corpus also includes specific labels for timestamps where a sound or environmental noise occurs. Following our labelling process, we assigned the label ‘3’ to these timestamps. In this way, the label ‘3’ indicates frames without voice activity. Although our dataset is focused on SCD, these annotations enable the model to also perform OSD and VAD.

3.2.2. Accent Classification Annotation

The labelling process for accent detection differs considerably from the process applied to SCD and PBD (see above). The SBCSAE includes the city and state from the United States corresponding to each of the conversations as part of the metadata of the audio. For this reason, it was necessary to label the whole audio with its accent rather than splitting the audio into frames. This poses another challenge, that is, to merge the audio classification task with two audio frame classification tasks. To solve this, we assigned the corresponding accent label to every frame of the audio and then reduced the set of frame labels to only one for the whole audio.

To establish the number of classes for this task, instead of assigning the labels based on the states, we applied the classification of the major regional dialects of American English as demarcated by Labov et al. [

66]. This classification divides the map of the United States into six dialect regions.

Table 1 shows the six dialects, the states that belong to each region and the labels that we assigned for each dialect to prepare the data for this task.

3.2.3. Automatic Annotation Process

Once the labelling process is completed, the annotation files created from the SBCSAE are converted to the desired format (see

Section 3.2.1. ‘Audio Frame Labels’ and

Section 3.2.2. ‘Accent Classification Annotation’). In order to automate the process, various Python (v3.8.12) scripts were designed to streamline a variety of sequential tasks. The first task consists of splitting the audios into chunks of 30 s with a 15 s overlap between one audio and the next, to avoid context missing. Then, reference labels for PBD and SCD tasks are generated from the timestamps in the original annotations, and the speaker labels. The result is a set of 1500 labels for each task.

Figure 1 shows example representations of the set of labels for PBD and SCD. It depicts the graphical representation of the reference labels for 30 s audio. Given that one label is assigned every 20 ms, the Y axis represents the number of the frame (1500 frames for the 30 s audio) while the X axis shows the value assigned to each frame. The last script assigns the labels corresponding to the dialects according to the name of the state which appears in the metadata. The part of the scripts that perform the extraction of the key information were coded using regular expressions. The extracted data were saved as JSON files.

Once all the conversion and annotation processes described had been performed, the target dataset was created through the Datasets package from HuggingFace (

https://huggingface.co/docs/datasets/index (accessed on 18 January 2025)). This package allows the organisation of the dataset in three splits: train, test, and validation. The entries inside each one of the splits contains the attributes “path” and “label”. The “path” is the absolute path to the audio file and the “label” includes the three sets of reference labels, one set for each task. After the generation of the dataset format, the entries are randomly divided into the three splits as follows: 80% of the dataset for training, 10% for testing, and another 10% for validation.

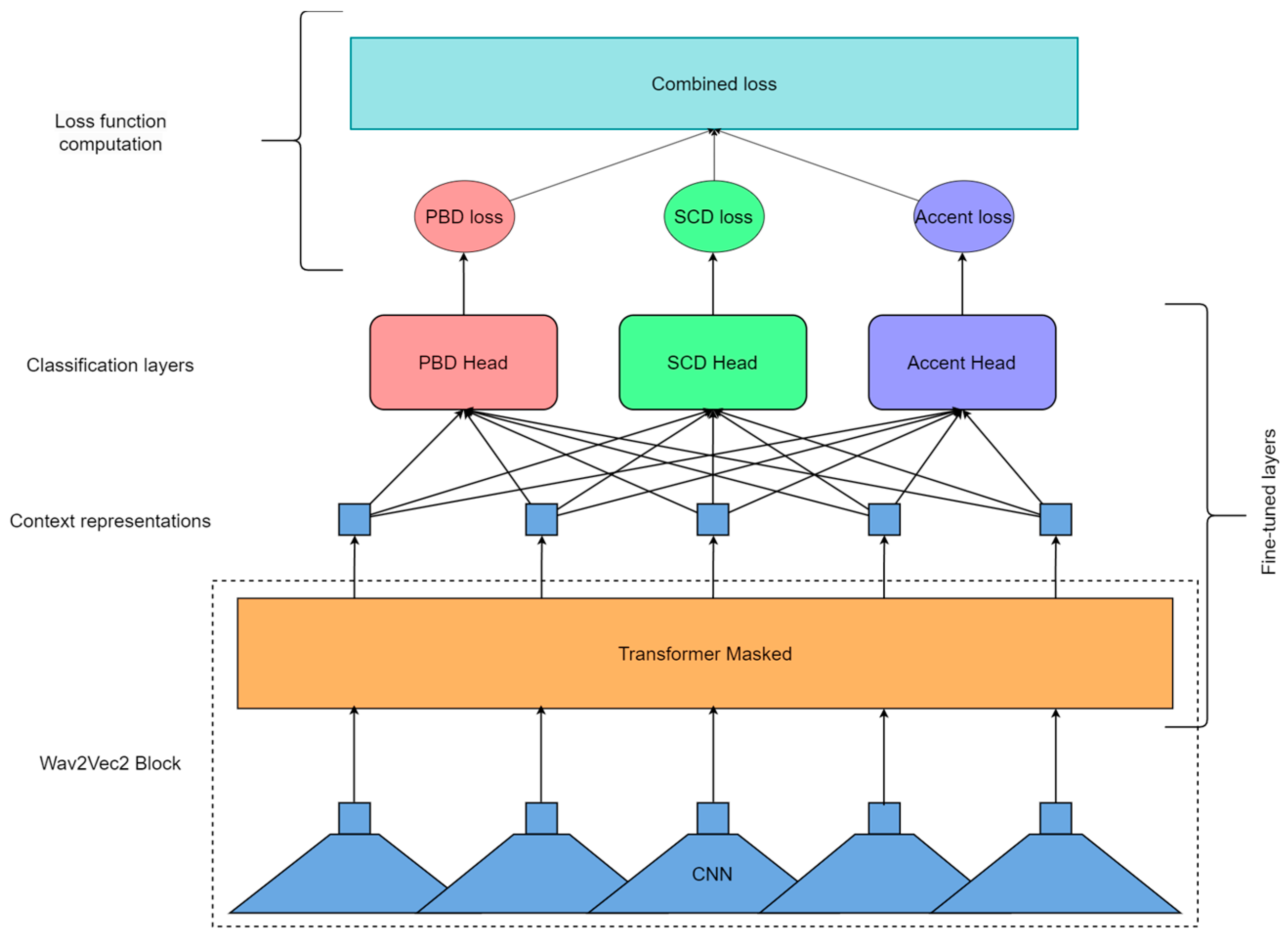

3.3. Model Architecture and Experimental Design

Wav2Vec 2.0 (hereafter referred to as “wav2vec2”) is a pretrained speech model whose architecture is mainly based on transformers [

2]. The model has a structure of three main parts: the feature encoder, the context network, and the quantisation module. The encoder consists of several blocks of temporal convolutions that normalise the speech input and outline the number of time-steps of the speech which, in turn, serve as input to the context network. The context network is a transformer NN which learns contextualised representations from the output of the encoder. The quantisation module discretises the output of the feature encoder via product quantisation for self-supervised training. The wav2vec model is able to learn representations from speech audio in the same way language models learn linguistic representations from text, achieving better results in ASR than previous state-of-the-art models [

2].

In this study, we leveraged the language representations generated by wav2vec2 to perform a supervised learning experiment. This experiment consisted of fine-tuning and validating a wav2vec2 model for multi-task audio frame classification. To implement the desired architecture of the model, we used the transformers (

https://huggingface.co/docs/transformers/index (accessed on 18 January 2025)) package by HuggingFace, which already includes an implementation of an audio frame classification architecture using a wav2vec2 encoder and a classification head on top. Given that we need three classification heads instead of only one, we built our own architecture for audio frame classification based on the existing implementation in the transformers package. Each one of the classification layers performs its own task: one of them for PBD, another for SCD, and the last one for accent classification. During training, the context representations generated by the wav2vec2 block are given to each one of the classification heads and they produce their own results. This process is illustrated in

Figure 2.

Our architecture is composed of a wav2vec2 layer [

2] as a feature extractor and three classification heads, one for each task. During training, the loss function is computed through a two steps process: first, the loss for each classification layer is calculated separately, and then given to a combined loss function as weighted parameters. This process is further detailed in the next section (cf.

Section 3.4).

3.4. Training, Testing, and Validation Processes

Following previous studies (cf.

Section 2.4 ´Joint and Multi-Task Learning in Speech Processing´), we focused on multi-task training approaches as a key part of the training methodology. To train our model, we used the wav2vec2 base model which can be obtained from the HuggingFace models repository (

https://huggingface.co/facebook/wav2vec2-base (accessed on 20 January 2025)). This is the wav2vec2 block of our architecture. As shown in

Figure 2, only the transformer layer of this block is fine-tuned during training. On the other hand, the three classification layers are randomly initiated and fully trained. The training is a supervised learning process, as the dataset includes the audio as inputs and the reference labels to adjust the loss function. For each step of the training, the context representations generated by the wav2vec2 block are given to the classification layers as the hidden states, and each one of the classification layers produces their corresponding label outputs. Following the starting hypothesis (cf.

Section 1. ‘Introduction’), the Transformer layer of the wav2vec2 block was fine-tuned to extract the necessary features to perform the three classification tasks and generate the context embeddings that represent those features.

To achieve our goal, we designed a composed loss function which is the combination of the loss from each of the classification layers. For PBD and SCD we applied mean squared error (MSE) as the loss function, and cross-entropy (CE) was the loss function applied for accent classification (AC). The reason for this difference in loss calculation is that for AC the labels are the same for every frame; therefore, the output of the layer is converted into a probability distribution instead of a set of labels. The composed loss is calculated as the weighted average of the three individual functions as follows:

The challenge of this loss function was to determine the optimal weights (

) applied to every task. To address this challenge, we ran a set of training experiments, using different parameters and weights. This process helped to obtain the best context representations by fine-tuning the transformer layer. The post-training results are presented below (cf.

Section 4. ‘Results’).

Next, the fine-tuned models were evaluated through test and validation; for this task, the corresponding subsets from the dataset were used. Testing was carried out during fine-tuning, after each epoch. To this end, a composed metric was used, similarly to what we carried out in the case of the loss function. However, we used accuracy as a metric for accent classification instead of CE, and the MSE values from PBD and SCD were inverted (1—MSE). After training, the best checkpoint was selected based on the results of the test phases.

The best weights for the loss function were established on the basis of the results of the validation phase, starting with the same value for each task (0.33). With this value, the AC task was the one with the highest accuracy, while SCD reported the lowest accuracy. Finally, the selected weights were obtained from the training in which the penalisation in accuracy for the AC task was not found to be significant, while the accuracy rates for SCD and PBD were the highest.

4. Results

Once the model was fine-tuned, the validation was performed for each task separately by applying post-processing and different metrics. Post-processing consists of the reverse conversion of the label set of SCD and PBD classifiers by turning the labels into timestamps. After the reverse conversion, the F1 metric is applied to measure the performance of SCD and PBD tasks. In the case of the accent classifier, its performance is measured using the accuracy metric (ACC).

4.1. Post-Processing Approach and Validation Measures

To convert the output labels given by the model back to timestamps, we applied different criteria to the PBD outputs and the SCD outputs. For the first task, the frames labelled with ‘1’ are the PBs detected, although the model is not entirely accurate when assigning the labels. For that reason, we established a threshold of 0.5. Every label bigger than this threshold is considered as a PB. In the case of SCD, the approach is similar, every frame with a label above 0.5 is considered an SC timestamp. We also established thresholds for the labels corresponding to more than one person talking (1.5) and the labels for environmental sound or noises (2.5). For AC, no post-processing was needed.

After the post-processing was applied, we obtained two lists of detected timestamps for PBD and SCD, respectively, and the corresponding reference timestamps. To evaluate the performance of each task, we applied several metrics, namely Precision, Recall, and F1. In the case of the AC output, we measured the performance using ACC by comparing the reference set of accent labels and the output given by the accent classifier.

4.2. Parameter Evaluation and Model Validation

The results discussed in the section are intended to fulfil a two-fold purpose. One is to validate the methodology and the trained models. The other is to find the optimal weights for the loss function.

Table 2,

Table 3 and

Table 4 show the key results of each training, and their corresponding parameters, in terms of the applied loss weights and the number of epochs. For PBD and SCD task results,

Table 2 (PBD) and

Table 3 (SCD) contain the Precision, Recall, and F1 values. In addition,

Table 3 contains the accuracy results for the accent classification task.

In the three tables, each entry corresponds to the results from the model trained using the indicated weights for the loss function and the number of epochs. Entries are ordered by the number of epochs, since we observed that this parameter has a higher impact on the results. This can be seen in the results from models 1 and 5. Model 5 achieved a higher punctuation in all three tasks, even though both models were trained with the same weights. No improvements can be observed in learning for more than 50 epochs, which justifies the maximum number of epochs (50) presented in the tables.

The results obtained during the fine-tuning of the applied weights indicate that the task that presents the most problems for the models to predict is SCD. This is not unexpected, as the references from the SCD task present higher variations at labels per frame than the other two (see

Section 3.2 ´Data Preparation´). On the contrary, the high accuracy levels achieved at the accent classification task show that this is the task that presents fewer difficulties. Since the model only needs to predict one label for the AC task, the high difference in the results between this task and the other two is not surprising. This explains why the weight for accent classification showed the highest decrease, whereas the weight for SCD increased in each training by the highest factor (see

Section 3.2 ´Data preparation´).

Finally, our findings suggest that model 8 is the one that exhibits the best performance. Even if the results for accent classification and PBD are affected negatively by the weights of this model, it shows an appropriate balance in its performance for the three tasks.

5. Discussion

From the perspective of previous studies, this is not the first time that wav2vec2 has been leveraged to perform PBD in a single task environment or leveraged to perform multi-task speech processing. In [

65], the authors present a methodology to fine-tune a wav2vec2 model to detect PB on Czech speech data, relying solely on acoustic information. An extended approach based on the previous one was also applied for multi-task speech processing on English data in [

59], where the trained model performed SCD, OSD, and VAD as different tasks. Regarding other NN architectures, a few studies have introduced multi-task or joint learning approaches for SCD, PBD, and AC [

35,

45,

60]. In [

32], fine-tuned Whisper models for PBD on the SBCSAE presented satisfactory results, although their acoustic model performances were worse than those of their lexical models. Referring to accent classification, the authors of [

67] present a particular Multi-Kernel Extreme Learning Machine (MKELM) architecture which achieved similar scores to other DNNs architectures.

Given the possibilities of transformer models for speech processing, the objective of this work was to fine-tune a wav2vec2 model with three classification layers to perform PBD, SCD, and AC by relying only on acoustic information. We tested our method on spoken English by using the SBCSAE corpus, like most of the models considered in this study. To the best of our knowledge, this is the first experiment that applies one single model to perform the three target tasks at the same time. The results obtained during the fine-tuning experiments show that our model can achieve a performance score close to previous methods or even higher.

A comparison between our model and the most relevant previously proposed models is highlighted in

Table 5. This comparison shows that our model achieved slightly lower performance scores for SCD and PBD than previous methods, but its performance on AC is the best. The results of the proposed model are highlighted in bold (

Table 5).

Our model exhibits lower performance for PBD than the models presented in [

35,

65], but higher than the model presented in [

32]. In [

65], the described method is applied to the Czech language, and the model presented in [

35] is trained on text information, not only on acoustics/audio data. This explains the difference in performance compared to our model. On the other hand, the approach in ref. [

32] is the only one that used the same corpus (SBCSAE). The performance of this model, which relies solely on acoustic information, is slightly lower than our model’s performance. Regarding the SCD task, our model has the lowest performance score on the table, although the three models were trained on the English corpora following multi-task approaches. However, the architecture and the tasks of our model are more complex than the previous models, which can explain the lower results. Finally, for AC, our model shows better performance than previous works. The main differences in this case are that the model in [

67] is not a transformer model and that the approach applied in [

60] combines ASR and AC.

Model sizes and architectures could be major factors responsible for the differences between our results and those from other previous studies. For example, refs. [

59,

65] similarly use a wav2vec2 model, but it is not the same base model applied in our experiments. The other studies applied other models and architectures, which could be instrumental for the lower performances of PBD and SCD. The evaluation data can equally impact the performance. The differences in dataset size, language, and possible biases could also explain the differences in performance for the three tasks.

In addition to the mentioned differences between our work and previous studies, it is important to note that, except for the model described in [

59], this is the only model that performs three speech processing tasks. In terms of model size, adding a new classification layer does not have a significant impact, keeping the model size almost the same. This means that our model requires one-third of the memory compared to single-task models that perform the same three tasks, allowing for the more efficient and cost-effective use of resources.

6. Conclusions and Future Work

Following our initial hypothesis, we assume that speech representations from the wav2vec2 framework could be leveraged in multi-task speech processing. In this context, the main aim of this work was to demonstrate that a single wav2vec2 acoustic model can be fine-tuned, using joint learning techniques, for PBD, SCD, and accent classification. Our findings confirm our hypothesis: our model (trained and validated on the SBCSAE) is the best performing one, as it achieved an F1 score of 0.74 for PBD and SCD and a 0.93 Accuracy score for accent classification.

To the best of our knowledge, ours is the first fine-tuned speech model which is able to perform all three tasks, namely PBD, SCD, and accent classification, by relying solely on acoustic information. This can be particularly useful in situations where no transcriptions or other language data are available. These tasks can be applied to speech pre-processing and segmentation, which can help to improve speech recognition performance in terms of precision and resource optimisation. This is the main contribution of this study. Another contribution is the data preparation process applied to the SBCSAE, which was fully automated. This makes the process reproducible, scalable, and transferable to other timestamp-annotated corpora (with some minor adjustments, if needed). Finally, the proposed model architecture further advances the state-of-the-art fine-tuning methods, as the classifier layers can be trained to perform any audio frame classification task, which means that our model can be easily adapted to fine-tune models on other corpora.

The scope of this study was limited in terms of metrics and the relatively small data sample. It would be worth considering further complementary metrics, such as coverage and measure training, as well as the inference times of the models. But the most important limitation is related to the data used for training and validation. Our findings suggest that better results could be achieved by expanding the training and validation dataset with more speech corpora. Despite the significant contributions of our study, the issue of data size is an intriguing one which could be usefully explored in further research. To this end, we intend to repeat the experiments using more English datasets, in addition to the SBCSAE, to train and validate our model. Another future solution to address data limitations could be the application of different transfer learning techniques. One of the advantages of our model is that the pre-trained base model can be altered without major changes. Given this, we intend to test transfer learning from larger wav2vec2 or other models and larger datasets. In addition, several issues related to the limitations of our study still remain unsolved. For instance, we must apply more metrics to expand the evaluation of the methods analysed. Finally, a natural progression for this work would be to test our model on other languages and on multilingual data (e.g., parallel corpora). In addition, our methodology could be applied to test other speech models and compare the results. Another possible study could involve applying our model to cascaded or multi-model speech systems, for instance, in a complete speech recognition system. This would be a fruitful area for further work.

Author Contributions

Conceptualisation, F.J.L.F. and G.C.P.; methodology, F.J.L.F. and G.C.P.; software, F.J.L.F.; validation, F.J.L.F.; formal analysis, F.J.L.F.; investigation, F.J.L.F. and G.C.P.; resources, G.C.P.; data curation, F.J.L.F.; writing—original draft preparation, F.J.L.F. and G.C.P.; writing—review and editing, G.C.P.; visualisation, F.J.L.F.; supervision, G.C.P.; project administration, G.C.P.; funding acquisition, G.C.P. All authors have read and agreed to the published version of the manuscript.

Funding

This paper was carried out in the framework of various research projects on multilingual language technologies (VIP II, ref. no. PID2020-112818GB-I00/AEI/10.13039/501100011033), RECOVER, ref. no. ProyExcel_00540, Projects of Excellence, Andalusian Regional Government (Junta de Andalucía), PAIDI 2020; DIFARMA, ref. no. HUM106-G-FEDER, European Regional Development Fund (ERDF), within the Framework of the II own Research, Transfer and Dissemination Plan of the University of Malaga; and DÍGAME, ref. no. JA.A1.3-06, European Regional Development Fund (ERDF), within the Framework of the II own Research, Transfer and Dissemination Plan of the University of Malaga. The research reported in this study was funded by MCINAEI/10.13039/501100011033 (grant ref. no. PRE2021-098899).

Data Availability Statement

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Radford, A.; Kim, J.W.; Xu, T.; Brockman, G.; Mcleavey, C.; Sutskever, I. Robust Speech Recognition via Large-Scale Weak Supervision. In Proceedings of the 40th International Conference on Machine Learning, PMLR, Honolulu, HI, USA, 23–29 July 2023; pp. 28492–28518. [Google Scholar]

- Baevski, A.; Zhou, H.; Mohamed, A.; Auli, M. Wav2vec 2.0: A Framework for Self-Supervised Learning of Speech Representations. Adv. Neural Inf. Process. Syst. 2020, 33, 12449–12460. [Google Scholar]

- Fu, B.; Fan, K.; Liao, M.; Chen, Y.; Shi, X.; Huang, Z. Wav2vec-S: Adapting Pre-Trained Speech Models for Streaming. In Proceedings of the Findings of the Association for Computational Linguistics ACL 2024, Bangkok, Thailand, 11–16 August 2024; Association for Computational Linguistics: Stroudsburg, PA, USA, 2024; pp. 11465–11480. [Google Scholar]

- Arriaga, C.; Pozo, A.; Conde, J.; Alonso, A. Evaluation of Real-Time Transcriptions Using End-to-End ASR Models. arXiv 2024, arXiv:2409.05674. [Google Scholar]

- Besdouri, F.Z.; Zribi, I.; Belguith, L.H. Arabic Automatic Speech Recognition: Challenges and Progress. Speech Commun. 2024, 163, 103110. [Google Scholar] [CrossRef]

- Hsu, M.-H.; Huang, K.P.; Lee, H. Meta-Whisper: Speech-Based Meta-ICL for ASR on Low-Resource Languages. arXiv 2024, arXiv:2409.10429. [Google Scholar]

- Synnaeve, G.; Xu, Q.; Kahn, J.; Likhomanenko, T.; Grave, E.; Pratap, V.; Sriram, A.; Liptchinsky, V.; Collobert, R. End-to-End ASR: From Supervised to Semi-Supervised Learning with Modern Architectures. In Proceedings of the ICML 2020 Workshop on Self-Supervision in Audio and Speech, Virtual, 17 July 2020. [Google Scholar]

- Mehrish, A.; Majumder, N.; Bharadwaj, R.; Mihalcea, R.; Poria, S. A Review of Deep Learning Techniques for Speech Processing. Inf. Fusion 2023, 99, 101869. [Google Scholar] [CrossRef]

- Aldarmaki, H.; Ullah, A.; Ram, S.; Zaki, N. Unsupervised Automatic Speech Recognition: A Review. Speech Commun. 2022, 139, 76–91. [Google Scholar] [CrossRef]

- Ramírez, J.; Górriz, J.M.; Segura, J.C. Voice Activity Detection. Fundamentals and Speech Recognition System Robustness. In Robust Speech Recognition and Understanding; Grimm, M., Kroschel, K., Eds.; InTech: Rijeka, Croatia, 2007; pp. 1–22. [Google Scholar]

- Levinson, S.E.; Rabiner, L.R.; Sondhi, M.M. An Introduction to the Application of the Theory of Probabilistic Functions of a Markov Process to Automatic Speech Recognition. Bell Syst. Tech. J. 1983, 62, 1035–1074. [Google Scholar] [CrossRef]

- Allen, J.; Hunnicutt, S.; Carlson, R.; Granstrom, B. MITalk-79: The 1979 MIT Text-to-Speech System. J. Acoust. Soc. Am. 1979, 65, S130. [Google Scholar] [CrossRef]

- Taylor, P.; Black, A.W. Assigning Phrase Breaks from Part-of-Speech Sequences. Comput. Speech Lang. 1998, 12, 99–117. [Google Scholar] [CrossRef]

- Torres, H.M.; Gurlekian, J.A.; Mixdorff, H.; Pfitzinger, H. Linguistically Motivated Parameter Estimation Methods for a Superpositional Intonation Model. EURASIP J. Audio Speech Music Process. 2014, 2014, 28. [Google Scholar] [CrossRef]

- Zen, H.; Senior, A.; Schuster, M. Statistical Parametric Speech Synthesis Using Deep Neural Networks. In Proceedings of the 2013 IEEE International Conference on Acoustics, Speech and Signal Processing, Vancouver, BC, Canada, 26–31 May 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 7962–7966. [Google Scholar]

- Graves, A.; Mohamed, A.; Hinton, G. Speech Recognition with Deep Recurrent Neural Networks. In Proceedings of the 2013 IEEE International Conference on Acoustics, Speech and Signal Processing, Vancouver, BC, Canada, 26–31 May 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 6645–6649. [Google Scholar]

- Roger, V.; Farinas, J.; Pinquier, J. Deep Neural Networks for Automatic Speech Processing: A Survey from Large Corpora to Limited Data. EURASIP J. Audio Speech Music Process. 2022, 2022, 19. [Google Scholar] [CrossRef]

- Ren, Y.; Ruan, Y.; Tan, X.; Qin, T.; Zhao, S.; Zhao, Z.; Liu, T.-Y. FastSpeech: Fast, Robust and Controllable Text to Speech. In Advances in Neural Information Processing Systems 32 (NeurIPS 2019); The MIT Press: Cambridge, MA, USA, 2019. [Google Scholar]

- Juang, B.H.; Rabiner, L.R. Hidden Markov Models for Speech Recognition. Technometrics 1991, 33, 251–272. [Google Scholar] [CrossRef]

- Hinton, G.; Deng, L.; Yu, D.; Dahl, G.; Mohamed, A.; Jaitly, N.; Senior, A.; Vanhoucke, V.; Nguyen, P.; Sainath, T.; et al. Deep Neural Networks for Acoustic Modeling in Speech Recognition: The Shared Views of Four Research Groups. IEEE Signal Process. Mag. 2012, 29, 82–97. [Google Scholar] [CrossRef]

- Yu, D.; Deng, L. Automatic Speech Recognition; Springer: London, UK, 2015; ISBN 978-1-4471-5778-6. [Google Scholar]

- Ning, H.; Liu, M.; Tang, H.; Huang, T. A Spectral Clustering Approach to Speaker Diarization. In Proceedings of the INTERSPEECH 2006 and 9th International Conference on Spoken Language Processing, INTERSPEECH 2006—ICSLP, Pittsburgh, PA, USA, 17–21 September 2006; International Speech Communication Association: Antwerp, Belgium, 2006; pp. 2178–2181. [Google Scholar]

- Piat, M.; Fohr, D.; Illina, I. Foreign Accent Identification Based on Prosodic Parameters. In Proceedings of the Interspeech 2008, Brisbane, Australia, 22–26 September 2008; ISCA: Winona, MN, USA; pp. 759–762. [Google Scholar]

- Kocharov, D.; Kachkovskaia, T.; Skrelin, P. Prosodic Boundary Detection Using Syntactic and Acoustic Information. Comput. Speech Lang. 2019, 53, 231–241. [Google Scholar] [CrossRef]

- Hogg, A.O.T.; Evers, C.; Naylor, P.A. Speaker Change Detection Using Fundamental Frequency with Application to Multi-Talker Segmentation. In Proceedings of the ICASSP 2019—2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 5826–5830. [Google Scholar]

- Chorowski, J.; Bahdanau, D.; Cho, K.; Bengio, Y. End-to-End Continuous Speech Recognition Using Attention-Based Recurrent Nn: First Results. In Proceedings of the NIPS 2014 Workshop on Deep Learning, Montreal, QC, Canada, 12–13 December 2014. [Google Scholar]

- Sell, G.; Garcia-Romero, D. Speaker Diarization with Plda I-Vector Scoring and Unsupervised Calibration. In Proceedings of the 2014 IEEE Spoken Language Technology Workshop (SLT), South Lake Tahoe, NV, USA, 7–10 December 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 413–417. [Google Scholar]

- Wan, L.; Wang, Q.; Papir, A.; Moreno, I.L. Generalized End-to-End Loss for Speaker Verification. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 4879–4883. [Google Scholar]

- Snyder, D.; Garcia-Romero, D.; Sell, G.; Povey, D.; Khudanpur, S. X-Vectors: Robust DNN Embeddings for Speaker Recognition. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 5329–5333. [Google Scholar]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. In Advances in Neural Information Processing Systems; The MIT Press: Cambridge, MA, USA, 2017. [Google Scholar]

- Tay, Y.; Dehghani, M.; Bahri, D.; Metzler, D. Efficient Transformers: A Survey. ACM Comput. Surv. 2023, 55, 1–28. [Google Scholar] [CrossRef]

- Roll, N.; Graham, C.; Todd, S. PSST! Prosodic Speech Segmentation with Transformers. arXiv 2023. [Google Scholar] [CrossRef]

- Taylor, P. Text-to-Speech Synthesis, 1st ed.; Cambridge University Press: New York, NY, USA, 2009. [Google Scholar]

- Volín, J.; Řezáčková, M.; Matouřek, J. Human and Transformer-Based Prosodic Phrasing in Two Speech Genres. In Proceedings of the Speech and Computer. SPECOM 2021; Karpov, A., Potapova, R., Eds.; Springer: Cham, Switzerland, 2021; pp. 761–772. [Google Scholar]

- Lin, B.; Wang, L.; Feng, X.; Zhang, J. Joint Detection of Sentence Stress and Phrase Boundary for Prosody. In Proceedings of the Annual Conference of the International Speech Communication Association, INTERSPEECH, Shanghai, China, 25–29 October 2020; International Speech Communication Association: Winona, MN, USA, 2020; Volume 2020, pp. 4392–4396. [Google Scholar]

- Hruz, M.; Zajic, Z. Convolutional Neural Network for Speaker Change Detection in Telephone Speaker Diarization System. In Proceedings of the 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 4945–4949. [Google Scholar]

- Kwon, S.; Narayanan, S.S. Speaker Change Detection Using a New Weighted Distance Measure. In Proceedings of the 7th International Conference on Spoken Language Processing (ICSLP 2002), Denver, CO, USA, 16–20 September 2002; ISCA: Winona, MN, USA, 2002; pp. 2537–2540. [Google Scholar]

- Aronowitz, H.; Zhu, W. Context and Uncertainty Modeling for Online Speaker Change Detection. In Proceedings of the ICASSP 2020—2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 8379–8383. [Google Scholar]

- Snyder, D.; Garcia-Romero, D.; Sell, G.; McCree, A.; Povey, D.; Khudanpur, S. Speaker Recognition for Multi-Speaker Conversations Using X-Vectors. In Proceedings of the ICASSP 2019—2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 5796–5800. [Google Scholar]

- Fujita, Y.; Kanda, N.; Horiguchi, S.; Xue, Y.; Nagamatsu, K.; Watanabe, S. End-to-End Neural Speaker Diarization with Self-Attention. In Proceedings of the 2019 IEEE Automatic Speech Recognition and Understanding Workshop (ASRU), Singapore, 14–18 December 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 296–303. [Google Scholar]

- Anidjar, O.H.; Estève, Y.; Hajaj, C.; Dvir, A.; Lapidot, I. Speech and Multilingual Natural Language Framework for Speaker Change Detection and Diarization. Expert Syst. Appl. 2023, 213, 119238. [Google Scholar] [CrossRef]

- Mateju, L.; Kynych, F.; Cerva, P.; Malek, J.; Zdansky, J. Overlapped Speech Detection in Broadcast Streams Using X-Vectors. In Proceedings of the Interspeech 2022, Incheon, Republic of Korea, 18–22 September 2022; ISCA: Winona, MN, USA, 2022; pp. 4606–4610. [Google Scholar]

- Bullock, L.; Bredin, H.; Garcia-Perera, L.P. Overlap-Aware Diarization: Resegmentation Using Neural End-to-End Overlapped Speech Detection. In Proceedings of the ICASSP 2020—2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 23–27 May 2022; IEEE: Piscataway, NJ, USA, 2020; pp. 7114–7118. [Google Scholar]

- Du, Z.; Zhang, S.; Zheng, S.; Yan, Z. Speaker Overlap-Aware Neural Diarization for Multi-Party Meeting Analysis. arXiv 2022, arXiv:2211.10243. [Google Scholar]

- Su, H.; Zhao, D.; Dang, L.; Li, M.; Wu, X.; Liu, X.; Meng, H. A Multitask Learning Framework for Speaker Change Detection with Content Information from Unsupervised Speech Decomposition. In Proceedings of the ICASSP 2022—2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 23–27 May 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 8087–8091. [Google Scholar]

- Berkling, K.; Zissman, M.A.; Vonwiller, J.; Cleirigh, C. Improving Accent Identification through Knowledge of English Syllable Structure. In Proceedings of the 5th International Conference on Spoken Language Processing (ICSLP 1998), Sydney, Australia, 30 November–4 December 1998; paper 0394. ISCA: Winona, MN, USA, 1998. [Google Scholar]

- Chen, T.; Huang, C.; Chang, E.; Wang, J. Automatic Accent Identification Using Gaussian Mixture Models. In Proceedings of the IEEE Workshop on Automatic Speech Recognition and Understanding, Madonna di Campiglio, Italy, 9–13 December 2001; ASRU’01. IEEE: Piscataway, NJ, USA, 2001; pp. 343–346. [Google Scholar]

- O’Shaughnessy, D. Spoken Language Identification: An Overview of Past and Present Research Trends. Speech Commun. 2025, 167, 103167. [Google Scholar] [CrossRef]

- Watanabe, C.; Kameoka, H. GE2E-AC: Generalized End-to-End Loss Training for Accent Classification. In Proceedings of the 2024 Asia Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), Macau, China, 3–6 December 2024. [Google Scholar]

- Huang, H.; Xiang, X.; Yang, Y.; Ma, R.; Qian, Y. AISpeech-SJTU Accent Identification System for the Accented English Speech Recognition Challenge. In Proceedings of the ICASSP 2021—2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 6254–6258. [Google Scholar]

- Lesnichaia, M.; Mikhailava, V.; Bogach, N.; Lezhenin, I.; Blake, J.; Pyshkin, E. Classification of Accented English Using CNN Model Trained on Amplitude Mel-Spectrograms. In Proceedings of the Interspeech 2022, Incheon, Republic of Korea, 18–22 September 2022; ISCA: Winona, MN, USA, 2022; pp. 3669–3673. [Google Scholar]

- Matos, A.; Araújo, G.; Junior, A.C.; Ponti, M. Accent Classification Is Challenging but Pre-Training Helps: A Case Study with Novel Brazilian Portuguese Datasets. In Proceedings of the 16th International Conference on Computational Processing of Portuguese, Galicia, Spain, 14–15 March 2024; pp. 364–373. [Google Scholar]

- Subhash, D.; Premjith, B.; Ravi, V. A Robust Accent Classification System Based on Variational Mode Decomposition. Eng. Appl. Artif. Intell. 2025, 139, 109512. [Google Scholar] [CrossRef]

- Song, T.; Nguyen, L.T.H.; Ta, T.V. MPSA-DenseNet: A Novel Deep Learning Model for English Accent Classification. Comput. Speech Lang. 2025, 89, 101676. [Google Scholar] [CrossRef]

- Viglino, T.; Motlicek, P.; Cernak, M. End-to-End Accented Speech Recognition. In Proceedings of the Interspeech 2019, Graz, Austria, 15–19 September 2019; ISCA: Winona, MN, USA, 2019; pp. 2140–2144. [Google Scholar]

- Ghorbani, S.; Hansen, J.H.L. Advanced Accent/Dialect Identification and Accentedness Assessment with Multi-Embedding Models and Automatic Speech Recognition. J. Acoust. Soc. Am. 2024, 155, 3848–3860. [Google Scholar] [CrossRef]

- Ravanelli, M.; Zhong, J.; Pascual, S.; Swietojanski, P.; Monteiro, J.; Trmal, J.; Bengio, Y. Multi-Task Self-Supervised Learning for Robust Speech Recognition. In Proceedings of the ICASSP 2020—2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 6989–6993. [Google Scholar]

- Pascual, S.; Ravanelli, M.; Serrà, J.; Bonafonte, A.; Bengio, Y. Learning Problem-Agnostic Speech Representations from Multiple Self-Supervised Tasks. arXiv 2019, arXiv:1904.03416. [Google Scholar]

- Kunešová, M.; Zajíc, Z. Multitask Detection of Speaker Changes, Overlapping Speech and Voice Activity Using Wav2vec 2.0. In Proceedings of the ICASSP 2023—2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–10 June 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1–5. [Google Scholar]

- Zhang, J.; Peng, Y.; Van Tung, P.; Xu, H.; Huang, H.; Chng, E.S. E2E-Based Multi-Task Learning Approach to Joint Speech and Accent Recognition. arXiv 2021, arXiv:2106.08211. [Google Scholar]

- Yolwas, N.; Meng, W. JSUM: A Multitask Learning Speech Recognition Model for Jointly Supervised and Unsupervised Learning. Appl. Sci. 2023, 13, 5239. [Google Scholar] [CrossRef]

- Wang, R.; Sun, K. TIMIT Speaker Profiling: A Comparison of Multi-Task Learning and Single-Task Learning Approaches. arXiv 2024, arXiv:2404.12077. [Google Scholar]

- Shah, S.M.; Moinuddin, M.; Khan, R.A. A Robust Approach for Speaker Identification Using Dialect Information. Appl. Comput. Intell. Soft Comput. 2022, 2022, 4980920. [Google Scholar] [CrossRef]

- Du Bois, J.W.; Chafe, W.L.; Meyer, C.; Thompson, S.A.; Martey, N. Santa Barbara Corpus of Spoken American English. In CD-ROM; Linguistic Data Consortium: Philadelphia, PA, USA, 2000. [Google Scholar]

- Kunešová, M.; Řezáčková, M. Detection of Prosodic Boundaries in Speech Using Wav2Vec 2.0. In Proceedings of the Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer Science and Business Media Deutschland GmbH: Berlin/Heidelberg, Germany, 2022; Volume 13502, pp. 377–388. [Google Scholar]

- Labov, W.; Ash, S.; Boberg, C. The Atlas of North American English; Mouton de Gruyter: Berlin, Germany, 2006; ISBN 978-3-11-016746-7. [Google Scholar]

- Kashif, K.; Alwan, A.; Wu, Y.; De Nardis, L.; Di Benedetto, M.G. MKELM Based Multi-Classification Model for Foreign Accent Identification. Heliyon 2024, 10, e36460. [Google Scholar] [CrossRef]

| Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).