Deep Learning-Based Citrus Canker and Huanglongbing Disease Detection Using Leaf Images

Abstract

1. Introduction

2. Related Work

3. Materials and Methods

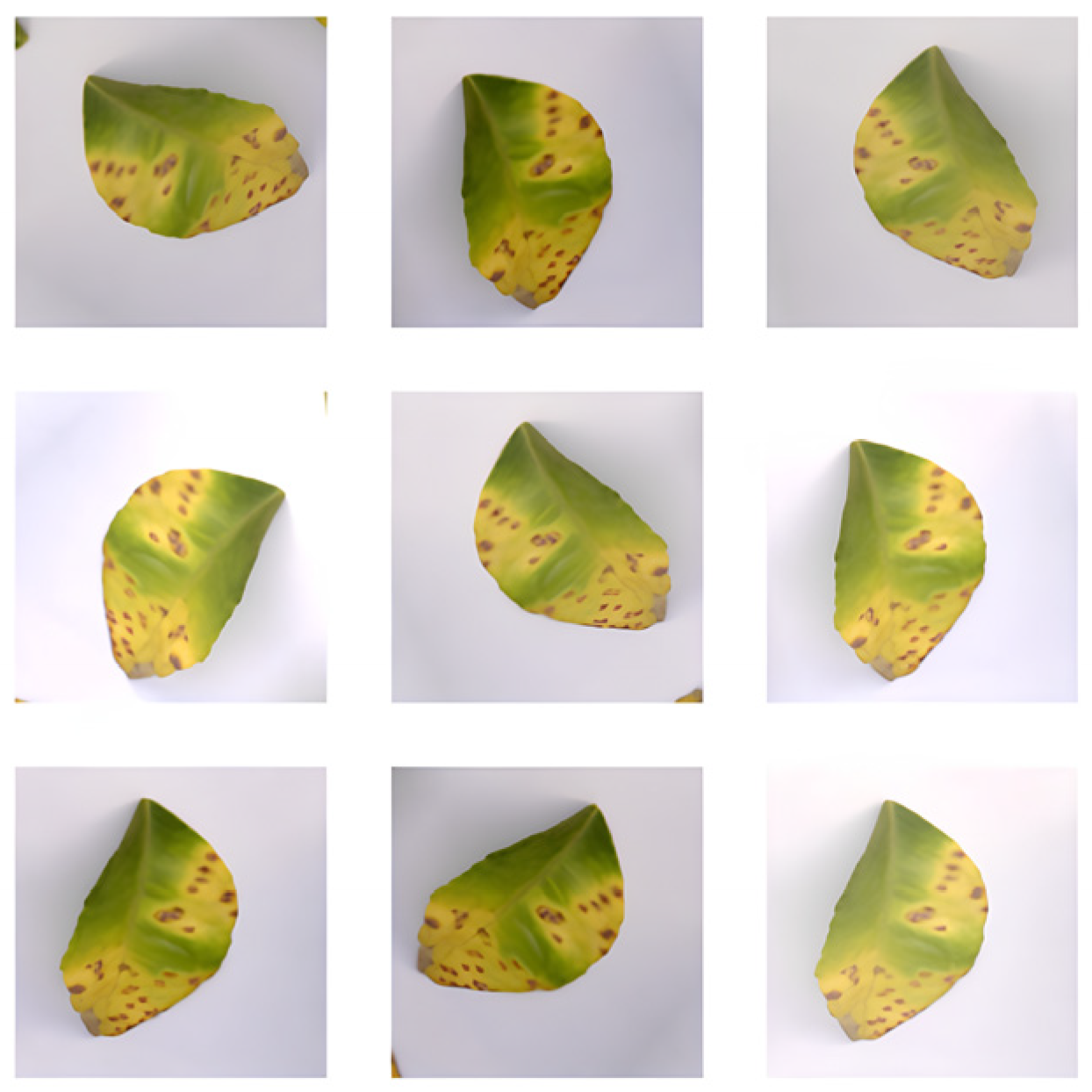

3.1. Dataset

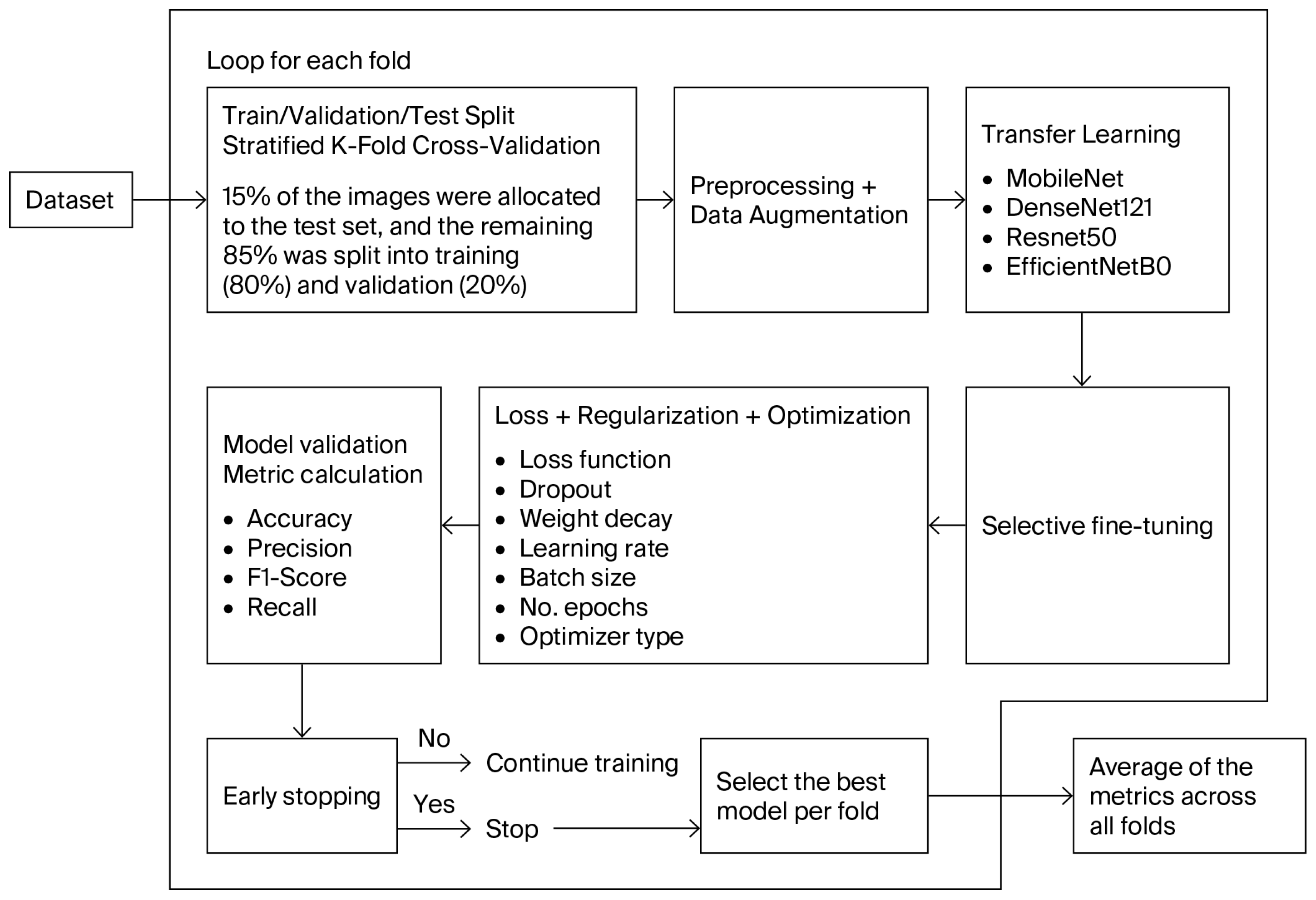

3.2. Stratified K-Fold Cross-Validation

3.3. Data Augmentation

3.4. Transfer Learning

3.5. Proposed Approach

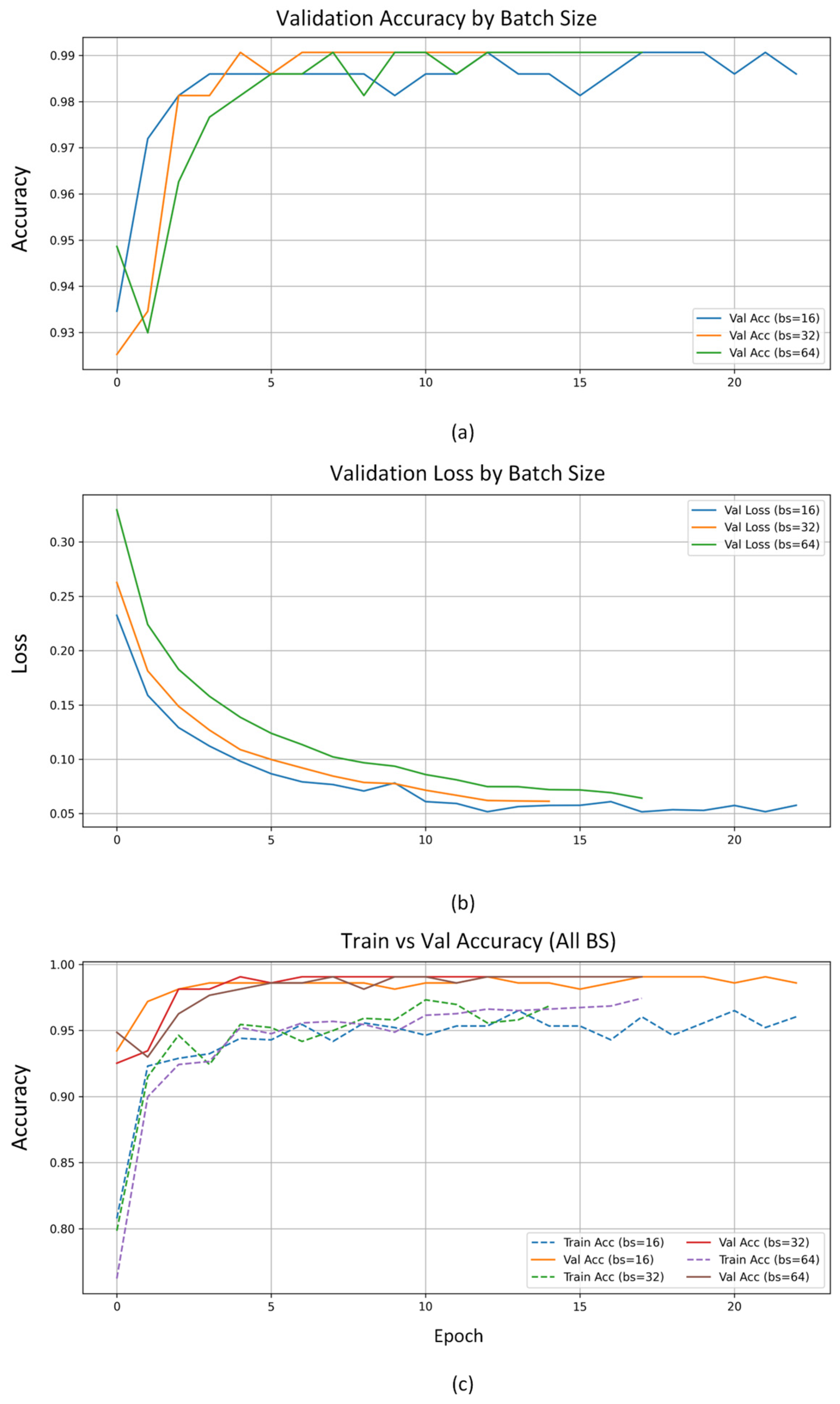

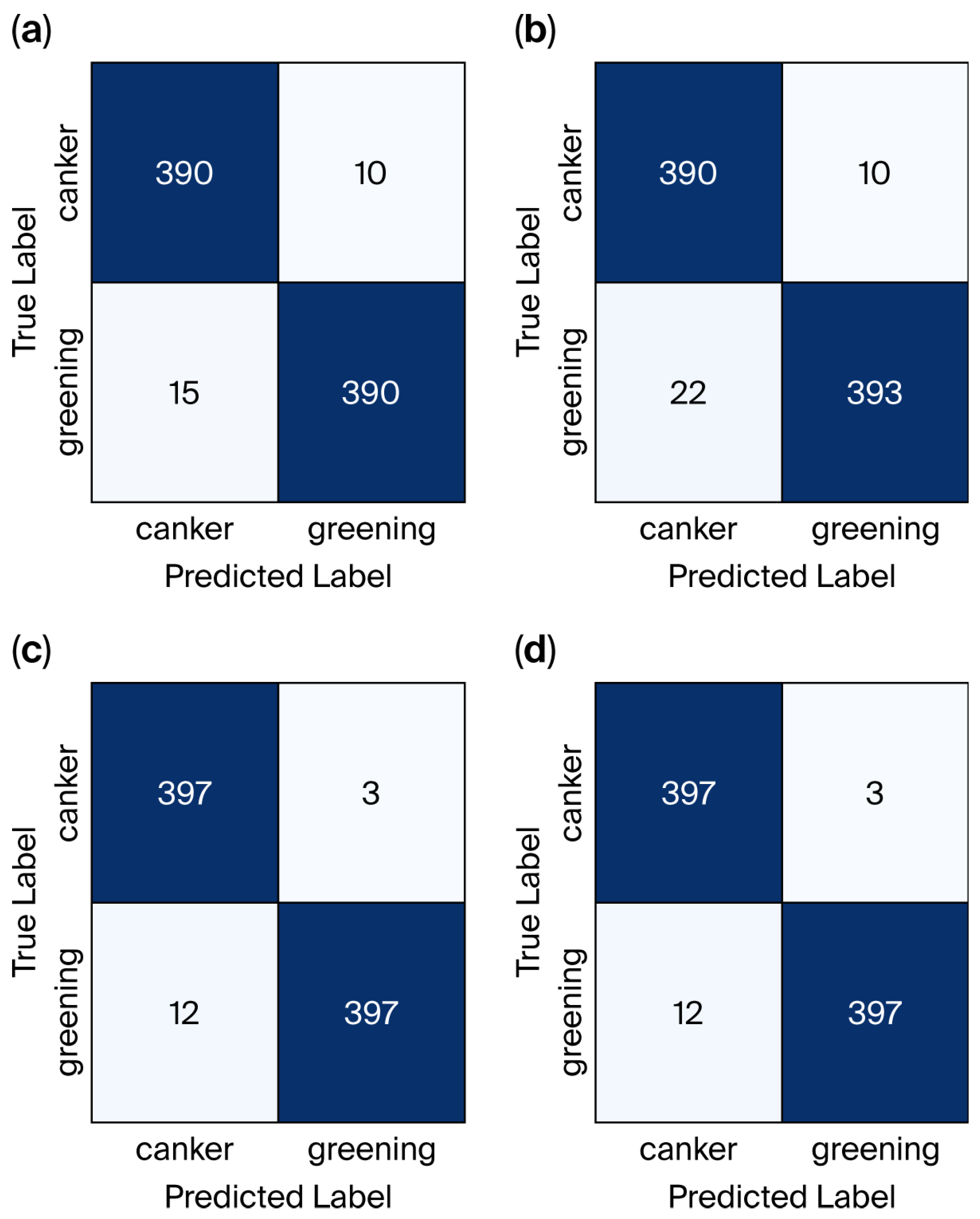

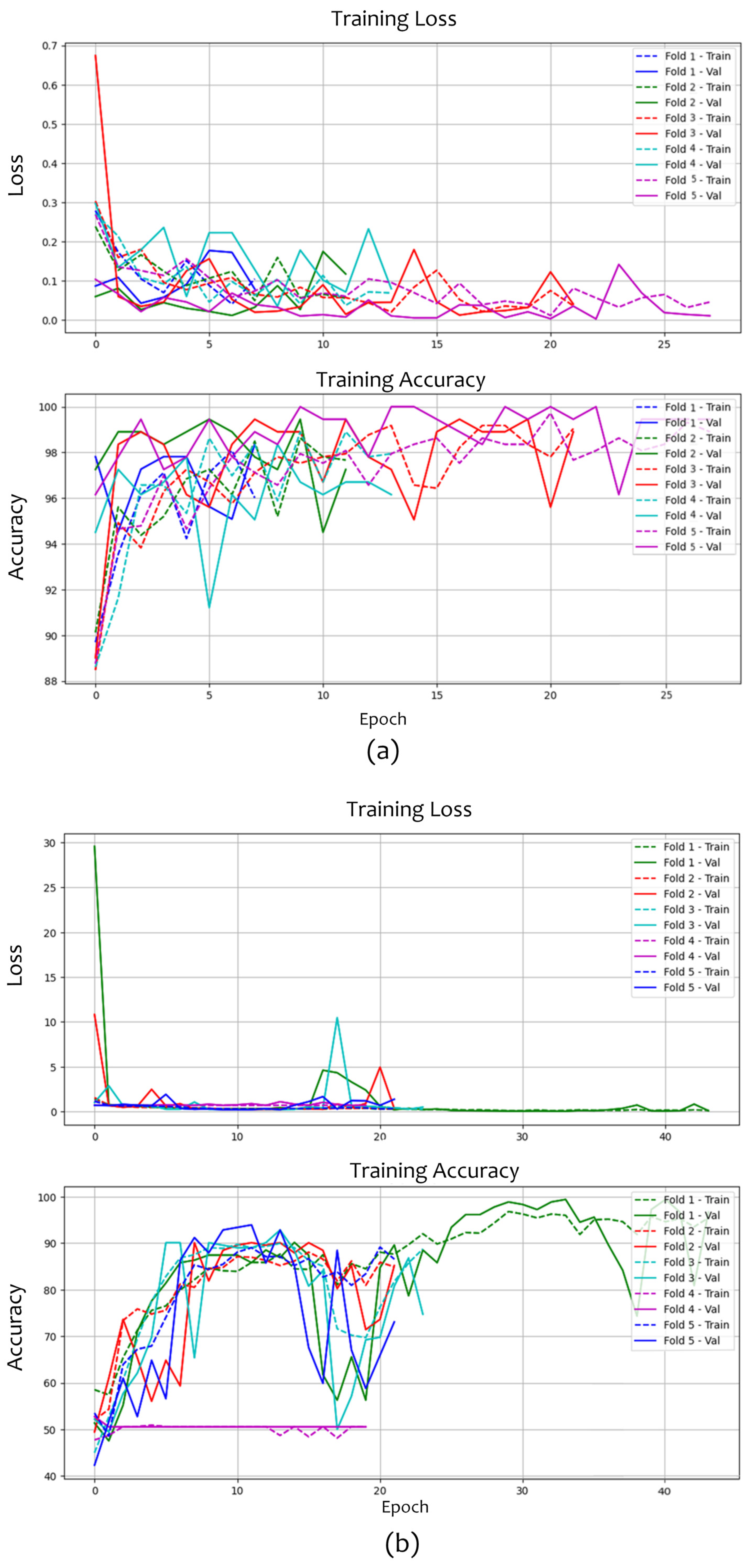

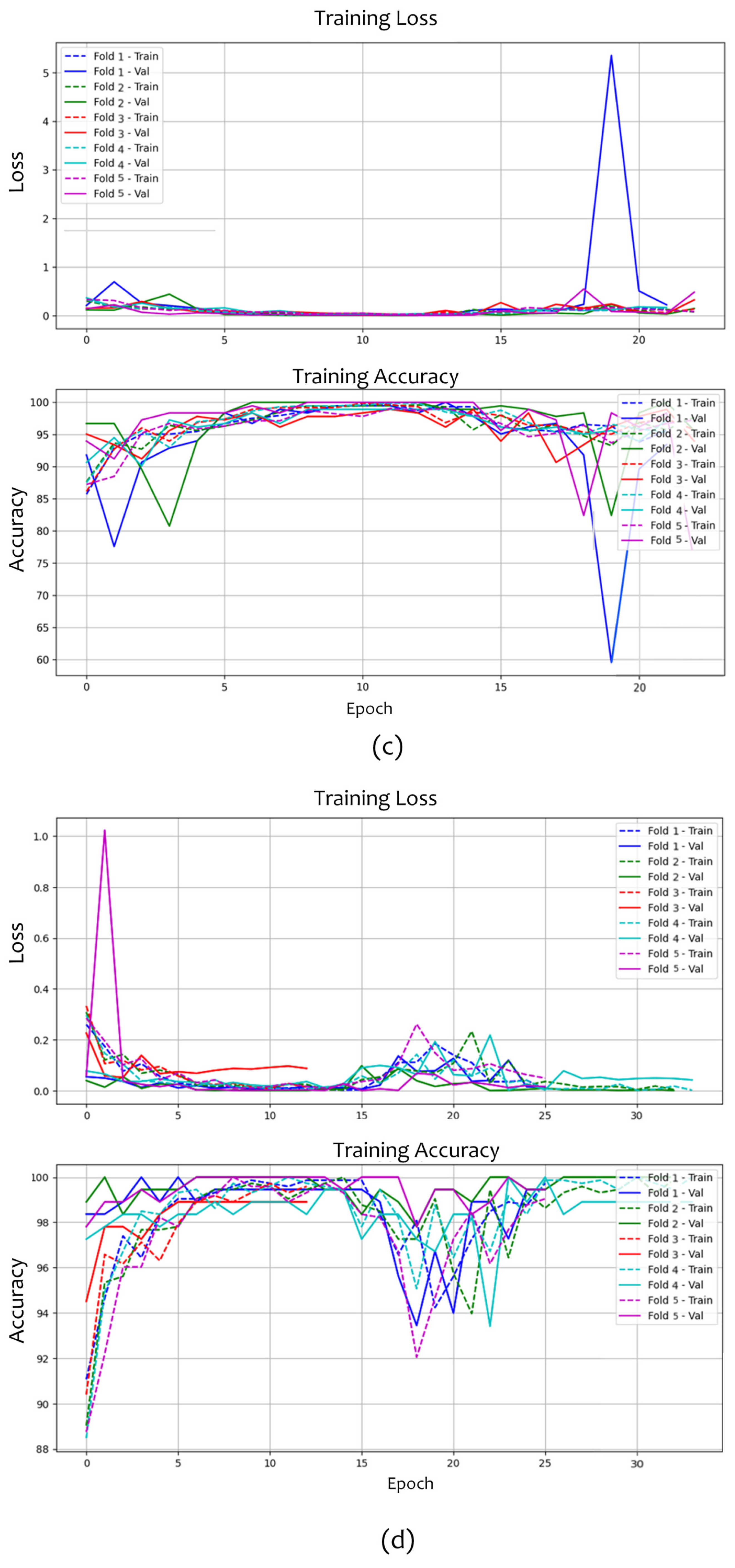

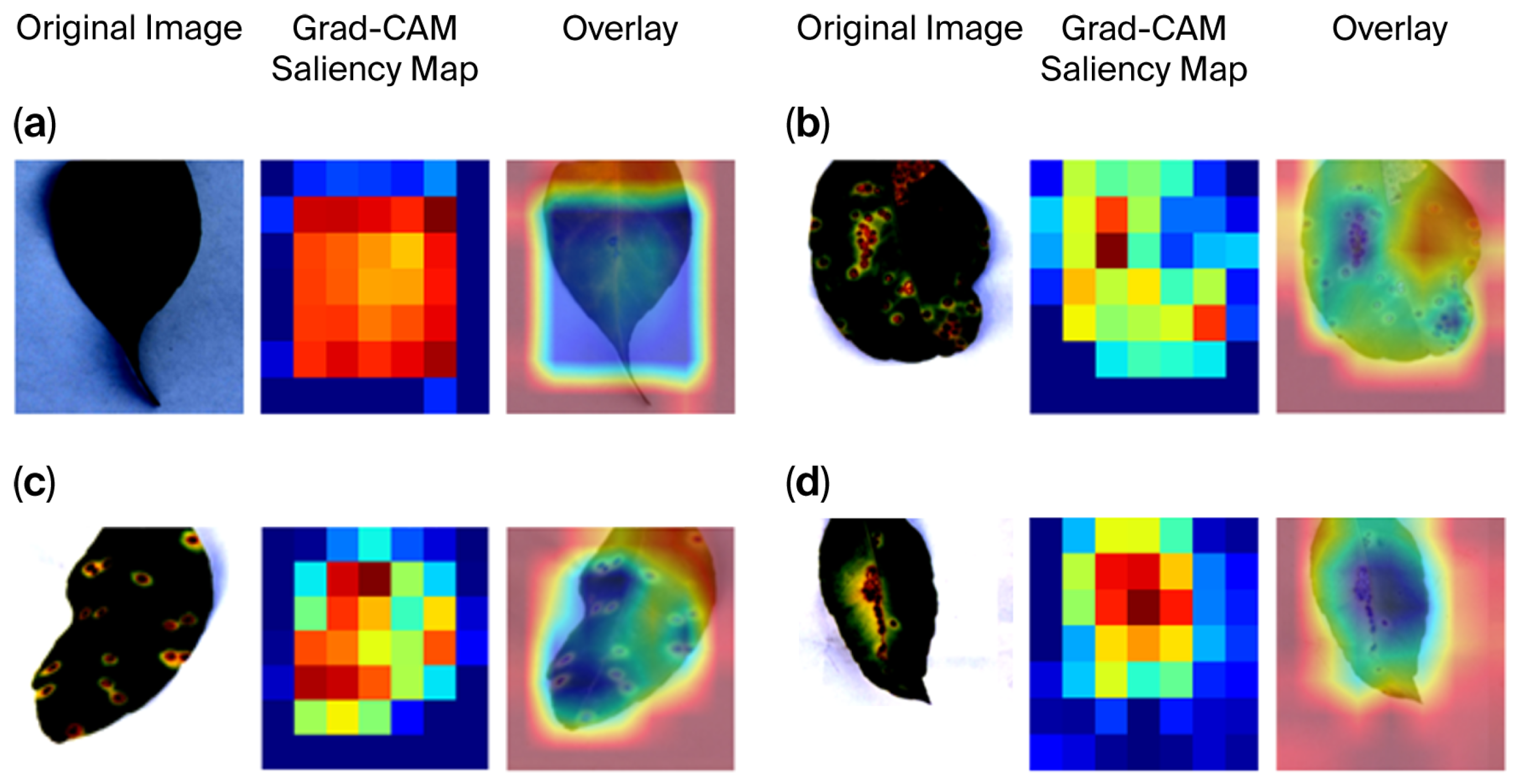

4. Experiments and Results

5. Limitations and Future Work

6. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Wu, H.; Li, Z.; Deng, X.; Zhao, Z. Enhancing agricultural sustainability: Optimizing crop planting structures and spatial layouts within the water-land-energy-economy-environment-food nexus. Geogr. Sustain. 2025, 6, 100258.

2. Lo Vetere, M.; Iobbi, V.; Lanteri, A.P.; Minuto, A.; Minuto, G.; De Tommasi, N.; Bisio, A. The biological activities of Citrus species in crop protection. J. Agric. Food Res. 2025, 22, 102139.

3. Kato-Noguchi, H.; Kato, M. Pesticidal Activity of Citrus Fruits for the Development of Sustainable Fruit-Processing Waste Management and Agricultural Production. Plants 2025, 14, 754.

4. Zhang, M.; Meng, Q. Automatic citrus canker detection from leaf images captured in field. Pattern Recognit. Lett. 2011, 32, 2036–2046.

5. Matshwene, M.; Loyiso, M. Identification of Citrus Canker on Citrus Leaves and Fruit Surfaces in the Grove Using Deep Learning Neural Networks. J. Agric. Sci. Technol. 2020, 10, 49–53.

6. Deng, X.; Zhu, Z.; Yang, J.; Zheng, Z.; Xianbo, H.; Shujin Wei, Y.; Lan, Y. Detection of Citrus Huanglongbing Based on Multi-Input Neural Network Model of UAV Hyperspectral Remote Sensing. Remote Sens. 2020, 12, 2678.

7. Gómez-Flores, W.; Garza-Saldaña, J.J.; Varela-Fuentes, S.E. Detection of Huanglongbing disease based on intensity-invariant texture analysis of images in the visible spectrum. Comput. Electron. Agric. 2019, 162, 825–835.

8. Omaye, J.D.; Ogbuju, E.; Ataguba, G.; Jaiyeoba, O.; Aneke, J.; Oladipo, F. Cross-comparative review of Machine learning for plant disease detection: Apple, cassava, cotton and potato plants. Artif. Intell. Agric. 2024, 12, 127–151.

9. Harakannanavar, S.; Rudagi, J.; Puranikmath, V.I.; Siddiqua, A.; Pramodhini, R. Plant leaf disease detection using computer vision and machine learning algorithms. Glob. Transit. Proc. 2022, 3, 305–310.

10. Vinay, K.; Vempalli, S.; Thushar, S.; Tripty, S.; Apurvanand, S. A Deep Learning Framework for Early Detection and Diagnosis of Plant Diseases. Procedia Comput. Sci. 2025, 258, 1435–1445.

11. Andrew, J.; Eunice, J.; Elena Popescu, D.; Kalpana Chowdary, M.; Hemanth, J. Deep Learning-Based Leaf Disease Detection in Crops Using Images for Agricultural Applications. Agronomy 2022, 12, 2395.

12. Konstantinos, D.; Dimitrios, T.; Dimitrios, T.; Lykourgos, M.; Lazaros, I.; Panayotis, K. Pandemic Analytics by Advanced Machine Learning for Improved Decision Making of COVID-19 Crisis. Processes 2021, 9, 1267.

13. Ching-Nam, H.; Yi-Zhen, T.; Pei-Duo, Y.; Jiasi, C.; Chee-Wei, T. Privacy-Enhancing Digital Contact Tracing with Machine Learning for Pandemic Response: A Comprehensive Review. Big Data Cogn. Comput. 2023, 7, 108.

14. Sharif, M.; Attique Khan, M.; Iqbal, Z.; Faisal Azam, M.; Ikram, M.; Lali, U.; Younus Javed, M. Detection and classification of citrus diseases in agriculture based on optimized weighted segmentation and feature selection. Comput. Electron. Agric. 2018, 150, 220–234.

15. Wetterich, C.B.; Oliveira Neves, R.F.; Belasque, J.; Marcassa, L.G. Detection of citrus canker and Huanglongbing using fluorescence imaging spectroscopy and support vector machine technique. Appl. Opt. 2016, 55, 400–407.

16. Abdulridha, J.; Batuman, O.; Ampatzidis, Y. UAV-Based Remote Sensing Technique to Detect Citrus Canker Disease Utilizing Hyperspectral Imaging and Machine Learning. Remote Sens. 2019, 11, 1373.

17. Qin, J.; Burks, T.F.; Ritenour, M.A.; Bonn, W.G. Detection of citrus canker using hyperspectral reflectance imaging with spectral information divergence. J. Food Eng. 2009, 93, 183–191.

18. Narayanan, K.B.; Krishna Sai, D.; Akhil Chowdary, K.; Reddy, S. Applied Deep Learning approaches on canker effected leaves to enhance the detection of the disease using Image Embedding and Machine Learning Techniques. EAI Endorsed Trans. Internet Things 2024, 10.

19. Yan, K.; Fang, X.; Yang, W.; Xu, X.; Lin, S.; Zhang, Y.; Lan, Y. Multiple light sources excited fluorescence image-based non-destructive method for citrus Huanglongbing disease detection. Comput. Electron. Agric. 2025, 237, 110549.

20. Yan, K.; Song, X.; Yang, J.; Xiao, J.; Xu, X.; Guo, J.; Zhu, H.; Lan, Y.; Zhang, Y. Citrus huanglongbing detection: A hyperspectral data-driven model integrating feature band selection with machine learning algorithms. Crop Prot. 2025, 188, 107008.

21. Kong, L.; Liu, T.; Qiu, H.; Yu, X.; Wang, X.; Huang, Z.; Huang, M. Early diagnosis of citrus Huanglongbing by Raman spectroscopy and machine learning. Laser Phys. Lett. 2024, 21, 015701.

22. Kent, M.G.; Schiavon, S. Predicting Window View Preferences Using the Environmental Information Criteria. LEUKOS 2022, 19, 190–209.

23. Goyal, A.; Lakhwani, K. Integrating advanced deep learning techniques for enhanced detection and classification of citrus leaf and fruit diseases. Sci. Rep. 2025, 15, 12659.

24. Faisal, S.; Javed, K.; Ali, S.; Alasiry, A.; Marzougui, M.; Attique Khan, M.; Cha, J.H. Deep Transfer Learning Based Detection and Classification of Citrus Plant Diseases. Comput. Mater. Contin. 2023, 76, 895–914.

25. Sharma, P.; Abrol, P. Multi-component image analysis for citrus disease detection using convolutional neural networks. Crop Prot. 2025, 193, 107181.

26. Butt, N.; Munwar Iqbal, M.; Ramzan, S.; Raza, A.; Abualigah, L.; Latif Fitriyani, M.; Gu, Y.; Syafrudin, M. Citrus diseases detection using innovative deep learning approach and Hybrid Meta-Heuristic. PLoS ONE 2025, 20, e0316081.

27. Syed-Ab-Rahman, S.F.; Hesam Hesamian, M.; Prasad, M. Citrus disease detection and classification using end-to-end anchor-based deep learning model. Appl. Intell. 2022, 52, 927–938.

28. Zhang, X.; Xun, Y.; Chen, Y. Automated identification of citrus diseases in orchards using deep learning. Biosyst. Eng. 2022, 223, 249–258.

29. Arthi, A.; Sharmili, N.; Althubiti, S.A.; Laxmi Lydia, E.; Alharbi, M.; Alkhayyat, A.; Gupta, D. Duck optimization with enhanced capsule network based citrus disease detection for sustainable crop management. Sustain. Energy Technol. Assess. 2023, 58, 103355.

30. Dhiman, P.; Kaur, A.; Hamid, Y.; Alabdulkreem, E.; Elmannai, H.; Ababneh, N. Smart Disease Detection System for Citrus Fruits Using Deep Learning with Edge Computing. Sustainability 2023, 15, 4576.

31. Mahesh, T.R.; Kumar, V.; Kumar, D.; Geman, O.; Margala, M.; Guduri, M. The stratified K-folds cross-validation and class-balancing methods with high-performance ensemble classifiers for breast cancer classification. Healthc. Anal. 2023, 4, 100247.

32. Dtrilsbeek. Citrus Leaves Prepared; [Dataset]. Kaggle. Available online: https://www.kaggle.com/datasets/dtrilsbeek/citrus-leaves-prepared (accessed on 30 October 2025).

33. Kaku321. Citrus Plant Disease; [Dataset]. Kaggle. Available online: https://www.kaggle.com/datasets/kaku321/citrus-plant-disease (accessed on 30 October 2025).

34. Huang, D.; Xiao, K.; Luo, H.; Yang, B.; Lan, S.; Jiang, Y.; Li, Y.; Ye, D.; Sun, D.; Weng, H. Implementing transfer learning for citrus Huanglongbing disease detection across different datasets using neural network. Comput. Electron. Agric. 2025, 238, 110886.

35. Li, S.; Zhao, P.; Zhang, H.; Sun, X.; Wu, H.; Jiao, D.; Wang, W.; Liu, C.; Fang, Z.; Xue, J. Surge phenomenon in optimal learning rate and batch size scaling. In Proceedings of the 38th International Conference on Neural Information Processing Systems (NIPS ’24), Vancouver, BC, Canada, 10–15 December 2024; Curran Associates Inc.: Red Hook, NY, USA, 2025; Volume 37, pp. 132722–132746.

36. Kobir Siam, F.; Bishshash, P.; Asraful Sharker Nirob, M.D.; Bin Mamun, S.; Assaduzzaman, M.; Rashed Haider Noori, S. A comprehensive image dataset for the identification of lemon leaf diseases and computer vision applications. Data Brief 2025, 58, 111244.

37. Zhang, F.; Jin, X.; Lin, G.; Jiang, J.; Wang, M.; Junhua Hu, S.; Lyu, Q. Hybrid attention network for citrus disease identification. Comput. Electron. Agric. 2024, 220, 108907.

38. Lawal Rukuna, A.; Zambuk, F.U.; Gital, A.Y.; Muhammad Bello, U. Citrus diseases detection and classification based on efficientnet-B5. Syst. Soft Comput. 2025, 7, 200199.

39. Sujatha, R.; Moy Chatterjee, J.; Jhanjhi, N.Z.; Nawaz Brohi, S. Performance of deep learning vs machine learning in plant leaf disease detection. Microprocess. Microsyst. 2021, 80, 103615.

40. Qin, J.; Burks, T.F.; Zhao, X.; Niphadkar, N.; Ritenour, M.A. Development of a two-band spectral imaging system for real-time citrus canker detection. J. Food Eng. 2012, 108, 87–93.

41. Dinesh, A.; Balakannan, S.P.; Maragatharaja, M. A novel method for predicting plant leaf disease based on machine learning and deep learning techniques. Eng. Appl. Artif. Intell. 2025, 155, 111071.

42. Yang, B.; Yang, Z.; Xu, Y.; Cheng, W.; Zhong, F.; Ye, D.; Weng, H. A 1D-CNN model for the early detection of citrus Huanglongbing disease in the sieve plate of phloem tissue using micro-FTIR. Chemom. Intell. Lab. Syst. 2024, 252, 105202.

43. Cao, L.; Xiao, W.; Hu, Z.; Li, X.; Wu, Z. Detection of Citrus Huanglongbing in Natural Field Conditions Using an Enhanced YOLO11 Framework. Mathematics 2025, 13, 2223.

44. Qiu, R.-Z.; Chen, S.-P.; Chi, M.-X.; Wang, R.-B.; Huang, T.; Fan, G.-C.; Zhao, J.; Weng, Q.-Y. An automatic identification system for citrus greening disease (Huanglongbing) using a YOLO convolutional neural network. Front. Plant Sci. 2022, 13, 1002606.

| Disease Type | Training (n) | Test (n) |
|---|---|---|
| Canker | 451 | 79 |
| HLB | 461 | 81 |
| Total | 912 | 160 |
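The per-class counts in the table (530 canker and 542 HLB images in total) are consistent with holding out about 15% of each class. As a minimal sketch, assuming a 15% stratified hold-out and k = 5 folds (neither value is stated here, so both are illustrative assumptions), the split and a stratified k-fold partition of the training portion could look like this in plain Python. The function names `stratified_holdout` and `stratified_kfold` are hypothetical.

```python
import random
from collections import defaultdict


def stratified_holdout(labels, test_percent=15, seed=0):
    """Split indices into train/test, holding out the same share of each class."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    train, test = [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        # Integer arithmetic: floor of test_percent % of this class.
        n_test = len(indices) * test_percent // 100
        test.extend(indices[:n_test])
        train.extend(indices[n_test:])
    return sorted(train), sorted(test)


def stratified_kfold(labels, indices, k=5, seed=0):
    """Yield (train_idx, val_idx) pairs whose class ratios mirror `indices`."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx in indices:
        by_class[labels[idx]].append(idx)
    folds = [[] for _ in range(k)]
    for members in by_class.values():
        rng.shuffle(members)
        # Deal each class round-robin so every fold gets an equal share.
        for pos, idx in enumerate(members):
            folds[pos % k].append(idx)
    for i in range(k):
        val = sorted(folds[i])
        train = sorted(idx for j, fold in enumerate(folds) if j != i for idx in fold)
        yield train, val


# Assumed class totals: training + test counts from the table.
labels = ["canker"] * 530 + ["hlb"] * 542
train_idx, test_idx = stratified_holdout(labels)
print(len(train_idx), len(test_idx))  # 912 160
```

Dealing each class round-robin keeps the canker-to-HLB ratio nearly identical in every validation fold, which is the property stratification is meant to guarantee.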

| Hyperparameter | Range |
|---|---|
| Learning rate | 1 × 10⁻⁶ to 0.1 |
| Batch size | 16, 32, 64 |
| No. of epochs | 10–100 |
| Optimizer type | SGD, Adam, AdamW |
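One common way to explore ranges like these is random search, drawing the learning rate on a logarithmic scale because the range spans five orders of magnitude. The table does not say which search strategy was used, so the following is only an illustrative sketch under that assumption; `SEARCH_SPACE` and `sample_config` are hypothetical names.

```python
import math
import random

# Search space transcribed from the table above.
SEARCH_SPACE = {
    "learning_rate": (1e-6, 0.1),           # continuous range
    "batch_size": (16, 32, 64),             # discrete choices
    "epochs": (10, 100),                    # inclusive integer range
    "optimizer": ("SGD", "Adam", "AdamW"),  # discrete choices
}


def sample_config(rng):
    """Draw one hyperparameter configuration (random search, an assumption)."""
    lo, hi = SEARCH_SPACE["learning_rate"]
    # Sample the exponent uniformly so each decade is equally likely.
    exponent = rng.uniform(math.log10(lo), math.log10(hi))
    return {
        "learning_rate": 10 ** exponent,
        "batch_size": rng.choice(SEARCH_SPACE["batch_size"]),
        "epochs": rng.randint(*SEARCH_SPACE["epochs"]),
        "optimizer": rng.choice(SEARCH_SPACE["optimizer"]),
    }


rng = random.Random(42)
configs = [sample_config(rng) for _ in range(5)]
for config in configs:
    print(config)
```

Sampling the learning rate uniformly on a linear scale would place about 90% of the draws between 0.01 and 0.1, which is why the exponent is sampled instead.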

| Model | Accuracy | Loss | Recall | F1-Score |
|---|---|---|---|---|
| MobileNetV2 | 0.9652 | 0.0230 | 0.9629 | 0.9690 |
| DenseNet121 | 0.8422 | 0.0771 | 0.7852 | 0.8952 |
| ResNet50 | 0.9867 | 0.0391 | 0.9863 | 0.9863 |
| EfficientNetB0 | 0.9988 | 0.0058 | 0.9989 | 0.9988 |

| Method | Accuracy (%) |
|---|---|
| Current work: data augmentation + transfer learning + selective fine-tuning + optimization + EfficientNetB0 | 99.88 |
| GA + multi-feature fusion of vegetation index + SAE [6] | 99.72 |
| Intensity-invariant texture analysis + Ranklet transform + Random Forest [7] | 95 |
| Optimized weighted segmentation method + hybrid feature selection method + M-SVM [14] | 97.00 |
| FIS + SVM [15] | 97.8 |
| SID [17] | 96.2 |
| EEMs + FCR1-FCR4 + Random Forest [19] | 87.5 |
| SPA-STD-SVM [20] | 97.46 |
| PCA-SVM [21] | 95.56 |
| Data augmentation + InceptionV3 [23] | 99.12 |
| Transfer learning + EfficientNetB3 [24] | 99.58 |
| RPN + Faster RCNN [27] | 97.2 |
| YOLOv4 + EfficientNet [28] | 89.00 |
| DOECN-CDDCM [29] | 98.40 |
| CNN-LSTM [30] | 98.25 |
| DenseNet121 [36], with augmentation | 98.56 |
| DenseNet121 [36], without augmentation | 96.19 |
| FdaNet + HaNet50 [37] | 98.83 |
| SMOTE + EfficientNet-B5 [38] | 99.22 |
| VGG-16 [39] | 89.5 |
| Two-band ratio approach (R830/R730) [40] | 95.3 |
| M-D-C-A-S-ASVM [41] | 99 |
| 1D-CNN + PLSR + LS-SVR [42] | 98.65 |
| DCH-YOLO11 [43] | 91.6 |
| YOLOv5l-HLB2 [44] | 85.19 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Devora-Guadarrama, M.; Luna-Benoso, B.; Alarcón-Paredes, A.; Martínez-Perales, J.C.; Morales-Rodríguez, Ú.S. Deep Learning-Based Citrus Canker and Huanglongbing Disease Detection Using Leaf Images. Computers 2025, 14, 500. https://doi.org/10.3390/computers14110500
