1. Introduction

Museums play a crucial role in preserving and communicating cultural heritage, providing visitors with opportunities to engage with history, art, and science in tangible form. However, traditional exhibitions typically rely on static displays and written explanations, which may constrain both accessibility and engagement. Recent developments in digital technologies, particularly augmented reality (AR), offer museums innovative ways to design interactive and immersive experiences that enhance learning and sustain visitor attention [1,2].

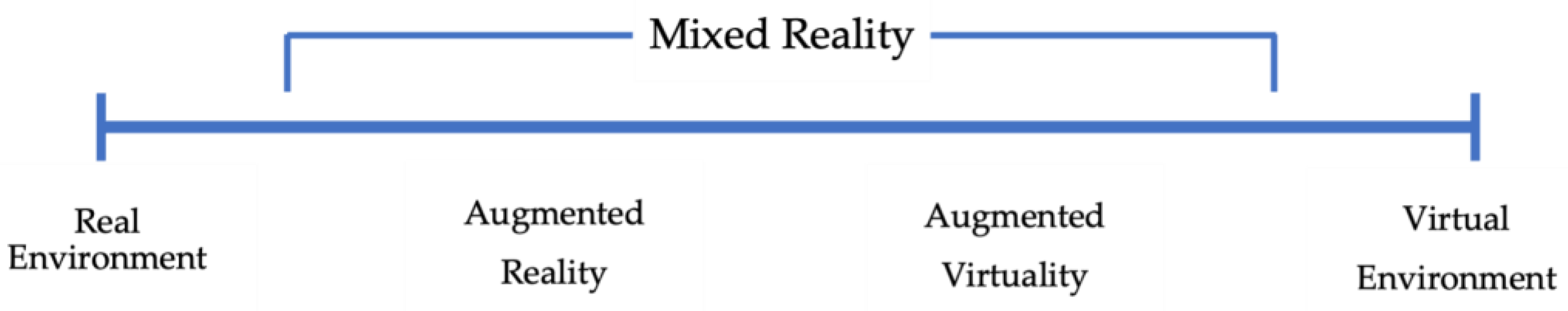

AR, as defined in Milgram and Kishino’s Reality-Virtuality Continuum (Figure 1) [3], occupies a position close to the real environment, overlaying digital content onto physical objects and creating hybrid spaces that merge physical and virtual elements. By doing so, AR bridges the gap between traditional museum displays and contemporary expectations of interactivity, allowing audiences to explore cultural artifacts through a combination of visual augmentation and contextual information [4].

Beyond the conceptual placement of AR on the Reality-Virtuality Continuum, recent systems research shows how AR-driven “digital museums” reshape interaction design and data stewardship. Hu et al. demonstrate that coupling AR with networked sensing (IoT) and secure content pipelines (e.g., blockchain-backed asset records) increases perceived interactivity across age groups and reduces time–place constraints of access, while keeping physical collections central to interpretation [5].

Empirical studies confirm that AR strengthens engagement and facilitates learning by transforming passive observation into active exploration. In educational contexts, AR-based tools have demonstrated significant improvements in conceptual understanding and learner motivation [6,7]. When applied to museums, similar mechanisms operate: visitors become co-creators of meaning rather than mere observers, interacting dynamically with virtual reconstructions, 3D models, and multimedia narratives that enrich artifact interpretation [8,9]. These applications not only enhance visitor enjoyment but also democratize access to knowledge, supporting diverse learning styles and fostering inclusive participation.

Complementing these general findings, controlled deployments in university and heritage museums report that AR layers (3D reconstructions, animations, contextual media) improve on-site didactics and preservation by explaining function without handling fragile artifacts, while VR serves as an off-site complement that widens access rather than replacing the physical visit [10]. Evaluations of an object-focused AR prototype likewise show higher visitor engagement, meaningful experience, and learning compared with text-only presentation, supporting the shift from passive viewing to active exploration [11].

Research has also shown that AR technologies in museums can expand the sensory and cognitive dimensions of visitor experience. For instance, Wang and Zhu [8] demonstrated that the integration of interactive visualizations and audio layers stimulates multisensory perception, deepening visitors’ understanding of exhibits. Likewise, studies such as Nguyen [12] reveal that AR supports mixed media storytelling, enabling users to connect emotional, historical, and spatial contexts within a unified narrative structure. Such immersive engagement transforms the museum visit into a personalized, memorable experience that combines education and entertainment.

Nevertheless, while numerous studies celebrate the advantages of AR, critical analyses have identified persistent challenges. Fernandes and Casteleiro-Pitrez [13] emphasize that the successful implementation of AR requires close collaboration among developers, curators, and educators to ensure that digital augmentation complements rather than replaces authentic artifacts. Other scholars note issues of usability, technological barriers, and cognitive overload, which can diminish the intended benefits for less technologically adept visitors [14,15]. These limitations underline the need for thoughtful design, emphasizing simplicity, clarity, and inclusivity in AR interfaces [16].

Beyond usability concerns, several studies have emphasized the creative and educational potential of AR in museum environments. Camps-Ortueta et al. [17] argue that overlaying digital content directly onto real artifacts fosters richer learning experiences and strengthens the emotional connection between visitors and cultural heritage. Likewise, Ossmann et al. [18] describe AR as a “creative playground” that sustains visitor attention through interactive and visually engaging content. In a similar vein, Nofal [19] shows that AR supports informal learning and participatory exploration, transforming visitors from passive observers into active co-creators of meaning.

At the same time, AR has been recognized for its capacity to foster collaboration and social interaction among visitors. Ahmad et al. [20] report that shared AR experiences promote collective learning and inclusive participation by enabling users to exchange interpretations of cultural objects in real time. Similarly, Chen and Lai [21] propose asynchronous collaboration interfaces that allow remote users to jointly explore exhibits, expanding access beyond physical boundaries. Such findings indicate that AR’s role extends beyond visualization: it reshapes the social dynamics of museum visits.

Importantly, AR can also advance accessibility and inclusivity within cultural spaces. Sheehy et al. [22] demonstrated that adaptive AR content enhances engagement for visitors with disabilities by providing alternative modalities for interaction. At the same time, reliance on mobile interfaces can marginalize older adults or visitors with limited digital literacy, underscoring the need for universal-usability principles and low-friction interaction design [23,24]. Recent work on the “future museum” further argues for layering AR with virtual text and AI-enhanced information systems to personalize pacing, depth, and modalities (captioning, audio description), thereby strengthening accessibility without displacing object-centeredness [25]. Parallel VR exhibition pipelines, built on high-fidelity 3D digitization and semantic curation, show how online and immersive access can extend participation beyond physical constraints while preserving scholarly rigor [26]; in education-oriented contexts, VR/AI scenarios are also being used to scaffold inclusive, dialogic learning around folk and material culture [27].

Overall, the growing body of research affirms that AR mobile applications hold transformative potential for museums. They enhance engagement, encourage active learning, and foster deeper emotional connections between audiences and artifacts [28,29,30]. Yet, these benefits are balanced by constraints: technical complexity, financial limitations, and the need for sustained content development. As Marques and Costello [14] argue, the true challenge lies not in deploying AR but in integrating it meaningfully into curatorial practice. Addressing these issues requires not only technological advancement but also interdisciplinary collaboration to ensure that AR strengthens the museum’s educational mission rather than distracting from it.

Despite recent advances, current research still shows clear gaps. Most studies focus on descriptive prototypes rather than empirical evaluations of user acceptance, leaving the mechanisms of how visitors perceive and adopt AR insufficiently explored. Limited reporting on software architecture, datasets, and performance also reduces reproducibility and scalability. Moreover, the assumption that digital augmentation automatically improves engagement remains largely untested: few studies analyze whether AR’s impact stems from novelty, interactivity, or genuine educational value. Thus, systematic, theory-driven evaluation frameworks are needed to assess both functional and emotional outcomes in museum contexts.

To address these issues, this study applies the extended Technology Acceptance Model (TAM) to evaluate an AR-based mobile application developed for the Abylkhan Kasteyev State Museum of Arts in Almaty, Kazakhstan. The system recognizes exhibits through machine learning (YOLO) and overlays 3D reconstructions and multimedia content, enriching the physical environment without separating visitors from it [3]. Unlike QR-based guides, the app offers interactive, adaptive experiences aligned with users’ field of view, fostering engagement, learning, and immersion.

Using the TAM framework allows for an integrated assessment of usability, usefulness, enjoyment, and immersion, linking objective performance with subjective perception. This approach bridges technical evaluation and human-centered design, clarifying how digital augmentation influences visitor experience. The project also broadens the geographic scope of AR research by providing insights from a Central Asian context and demonstrating that high-performance, inclusive AR systems can be developed for resource-constrained environments.

In summary, this study combines technological innovation with an empirical evaluation of user acceptance. By developing and testing a custom AR mobile application for the Abylkhan Kasteyev State Museum of Arts, it contributes both practical strategies for cultural institutions and theoretical insights into technology adoption in immersive heritage environments.

Accordingly, this study addresses two primary research questions:

How does the developed AR-based mobile application influence visitor engagement, enjoyment, and intention to reuse the technology in a museum context?

What is the level of recognition accuracy and usability achieved through the integration of machine learning and AR visualization?

These questions are examined using an extended TAM framework to evaluate perceived usefulness, ease of use, enjoyment, and immersion, together with empirical testing of recognition performance.

The structure of this paper is organized as follows:

Section 2 outlines the dataset and research methodology;

Section 3 presents the machine learning model;

Section 4 describes the development of the mobile application;

Section 5 reports and analyzes the experimental results; and

Section 6 concludes the paper by summarizing the key findings and proposing directions for future research.

2. Materials and Methods

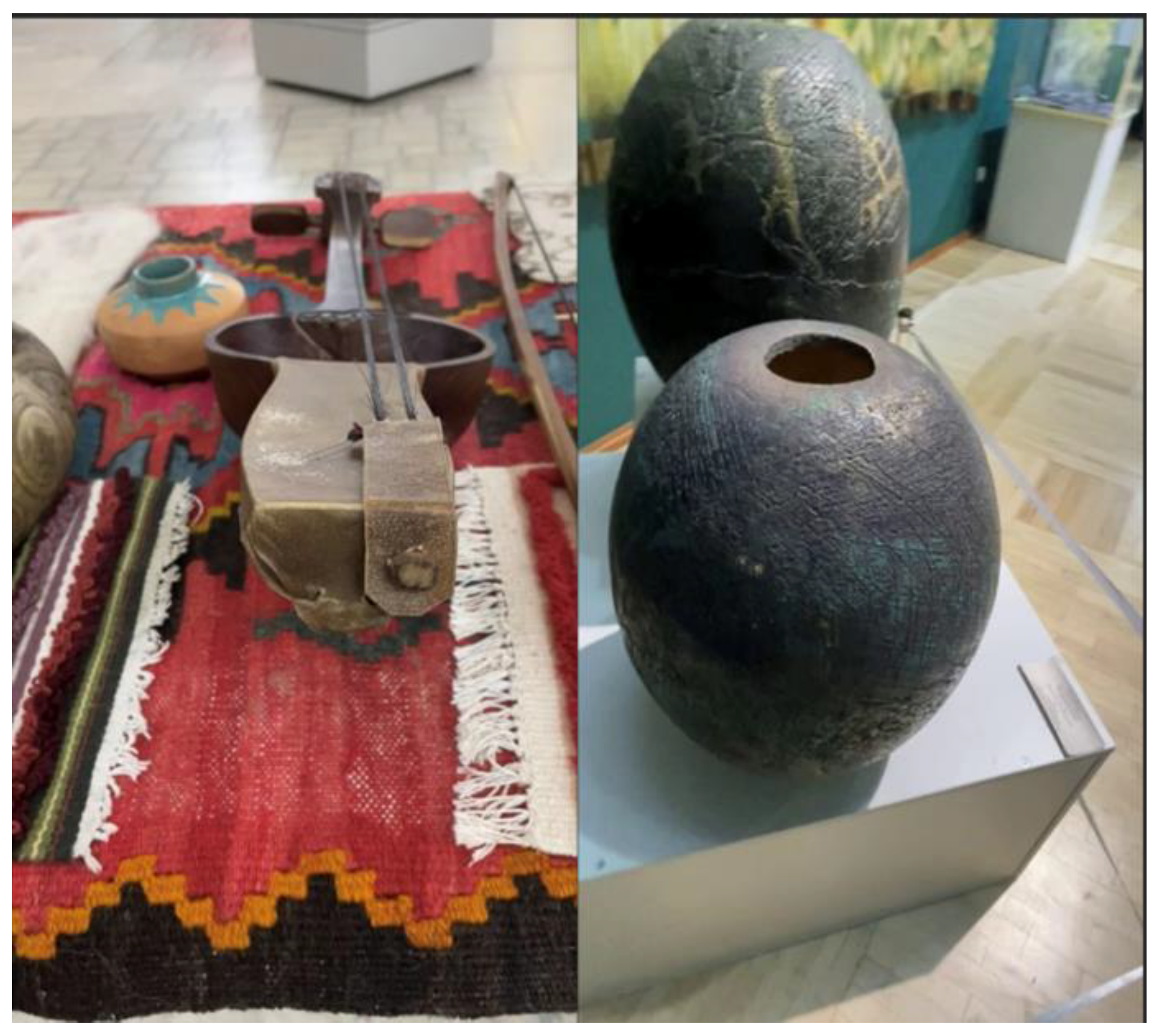

All visual materials used in this study were provided by the Abylkhan Kasteyev State Museum of Arts, and the entire work was conducted using exhibits from its collection (Figure 2). The dataset was developed based on real museum artifacts, ensuring that the training data reflected authentic lighting, composition, and environmental conditions typical of the museum setting.

The initial dataset consisted of 51 exhibits representing various categories of art, including painting, sculpture, and weaving, with an average of 60 images per class. Video recordings were captured directly within the museum environment using a standard video camera, without any special preparation, under conditions replicating those of ordinary visitors. From these recordings, two to three frames per second were extracted to maximize image diversity and informativeness for training. Each extracted frame was manually verified and assigned to a specific exhibit class based on the artifact depicted. The tagging was performed using metadata and descriptions provided by the museum.
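The frame-extraction step can be illustrated with a minimal sketch. The native frame rate of the recordings is not reported, so the 30 fps figure in the usage note is an assumption, as are the function name and the 2.5 fps target picked from the stated two-to-three range. Video decoding is omitted; the sketch only selects which frame indices to keep.

```python
def frames_to_keep(total_frames: int, video_fps: float, target_fps: float) -> list[int]:
    """Return the indices of frames to extract so that roughly
    `target_fps` frames are kept per second of video.

    Hypothetical helper for illustration; not the authors' extraction script.
    """
    if total_frames < 0 or video_fps <= 0 or target_fps <= 0:
        raise ValueError("frame count must be non-negative and rates positive")

    # Distance (in source frames) between two kept frames.
    # Clamped to 1 so that a target above the native rate keeps every frame.
    step = max(video_fps / target_fps, 1.0)

    indices = []
    i = 0
    while int(i * step) < total_frames:
        indices.append(int(i * step))
        i += 1
    return indices
```

For a two-second clip recorded at an assumed 30 fps, `frames_to_keep(60, 30.0, 2.5)` keeps every twelfth frame, which yields five frames for manual verification and labeling.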

However, the volume of the initial dataset proved insufficient: during testing in the actual museum environment, the model successfully recognized only about one-third of the objects. Attempts to fine-tune the model on this dataset did not produce meaningful improvements, indicating the need for a revised data preparation strategy. Consequently, it was decided to increase the number of images per class while maintaining the original exhibit categories and annotation structure.

In the second stage of dataset preparation, the average number of images per class was doubled to 120. The same methodology (video recording, frame extraction, classification, and annotation) was retained to ensure methodological consistency. The resulting expanded dataset, comprising a total of 6128 images, enabled the model to learn more distinctive and stable object features, leading to a significant improvement in recognition accuracy, which reached up to 97% during machine learning model validation. These findings suggest that dataset expansion and balanced representation across categories are critical factors for improving model performance in real-world museum recognition tasks.

Furthermore, the effectiveness of the developed AR application was assessed using the extended TAM proposed by Davis [31] and further expanded in subsequent studies on immersive and interactive technologies [32,33]. TAM has been widely used to explain user acceptance of digital systems, including mobile learning, virtual reality, and AR applications. The model posits that users’ behavioral intention (BI) to adopt a system is primarily influenced by their perceived usefulness (PU) and perceived ease of use (PEOU).

This framework was selected because it provides a theoretically grounded and empirically validated approach to evaluating how users perceive and accept interactive technologies. Unlike general usability or satisfaction scales, TAM focuses on the determinants of technology adoption, making it especially suitable for assessing AR systems that combine functional performance with experiential engagement. In digital and immersive environments, where subjective experience and enjoyment play a central role, TAM has been successfully extended by incorporating additional constructs such as perceived enjoyment (PE) and immersion (IM) to capture emotional and experiential dimensions of user interaction.

These extensions are particularly relevant in museum contexts, where the success of AR applications depends not only on technical usability but also on their capacity to engage, educate, and emotionally involve visitors. Therefore, TAM and its extensions were considered the most appropriate framework for this study, as they enable the systematic evaluation of both the practical effectiveness and affective appeal of the AR mobile application developed for the Abylkhan Kasteyev State Museum of Arts.

Accordingly, this study employs an extended TAM framework to evaluate user perceptions of the AR mobile application developed for the Abylkhan Kasteyev State Museum of Arts. This theoretical grounding ensures both conceptual rigor and comparability with previous AR acceptance research.

The framework incorporates five constructs that together explain behavioral intention to use the application:

Perceived Usefulness (PU): the extent to which the application enhances the value of the museum visit;

Perceived Ease of Use (PEOU): the degree to which the application is intuitive and user-friendly;

Perceived Enjoyment (PE): the extent to which the application is enjoyable regardless of its instrumental purpose;

Immersion (IM): the level of emotional and cognitive involvement experienced during use;

Behavioral Intention (BI): the likelihood of continued use of the application in the future.

Based on these constructs, the following hypotheses were formulated:

H1: PEOU positively influences PU—This means that the easier and more intuitive the AR application is to use, the more useful it is perceived to be by users in enhancing their museum experience.

H2: PEOU positively influences PE—This indicates that when users find the application simple and effortless to operate, they also experience greater enjoyment during interaction.

H3: PU positively influences BI—This suggests that users who perceive the application as beneficial and valuable for their visit are more likely to continue using it in the future.

H4: PE positively influences BI—This implies that the more enjoyable the interaction with the AR application, the stronger the users’ intention to reuse it becomes.

H5: IM positively influences BI—This means that a higher sense of immersion and engagement while using the AR application leads to a stronger behavioral intention to adopt the technology.

3. Machine Learning Model

The novelty of this study lies in the adaptation and optimization of machine learning models for mobile devices, ensuring both high accuracy and efficient processing of local exhibit data.

For model training, the YOLOv8 architecture was selected, specifically the nano and small configurations. These models demonstrated strong performance in object recognition, with the small model achieving a mAP50 of 99.5% and mAP50-95 of 96.5% after 150 epochs. Training and testing were carried out on the Google Colab platform using a Tesla T4 GPU, which significantly reduced processing time. The key hyperparameters used in the training process, including batch size, image resolution, learning rate, and regularization settings, are summarized in Table 1.

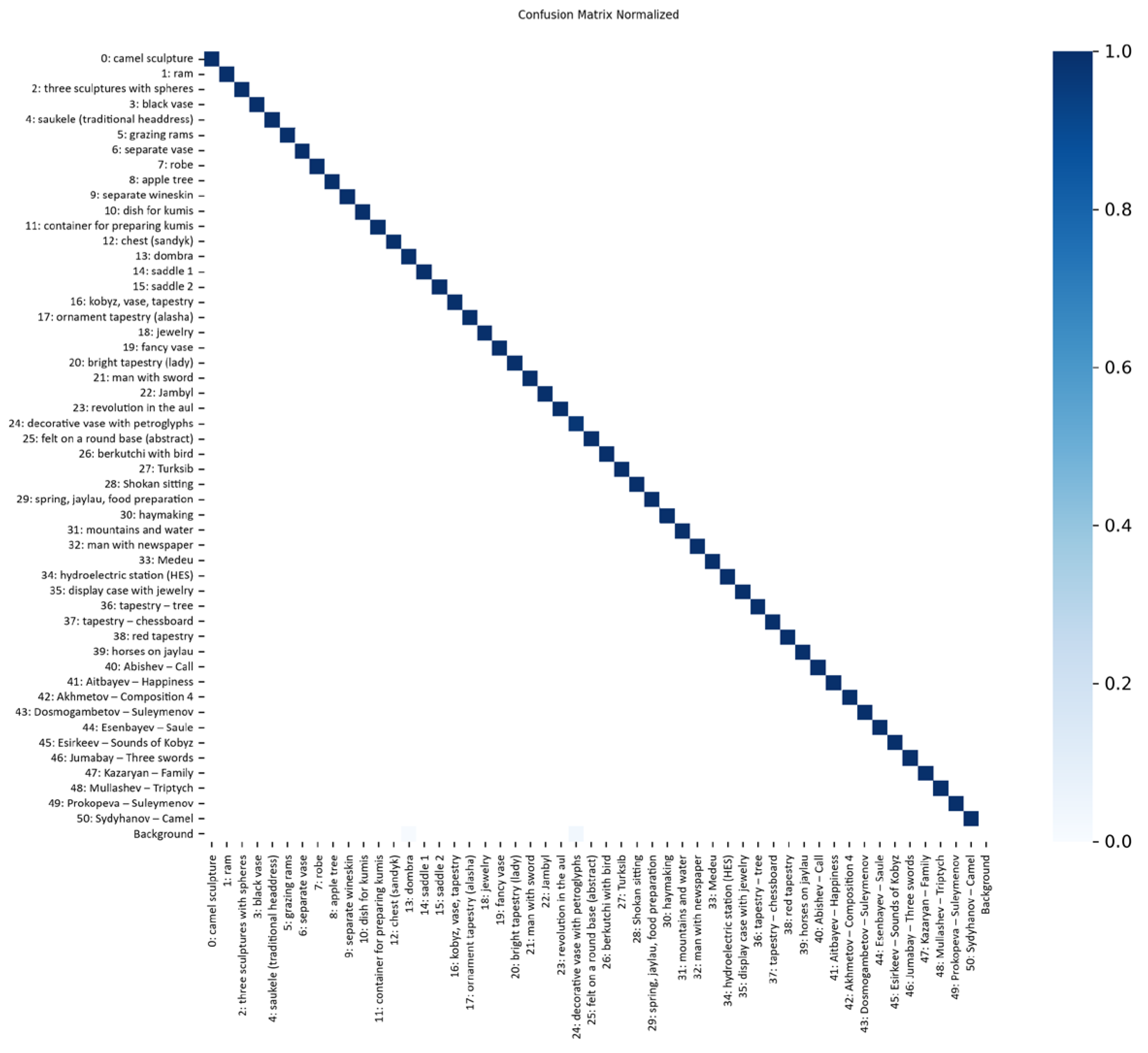

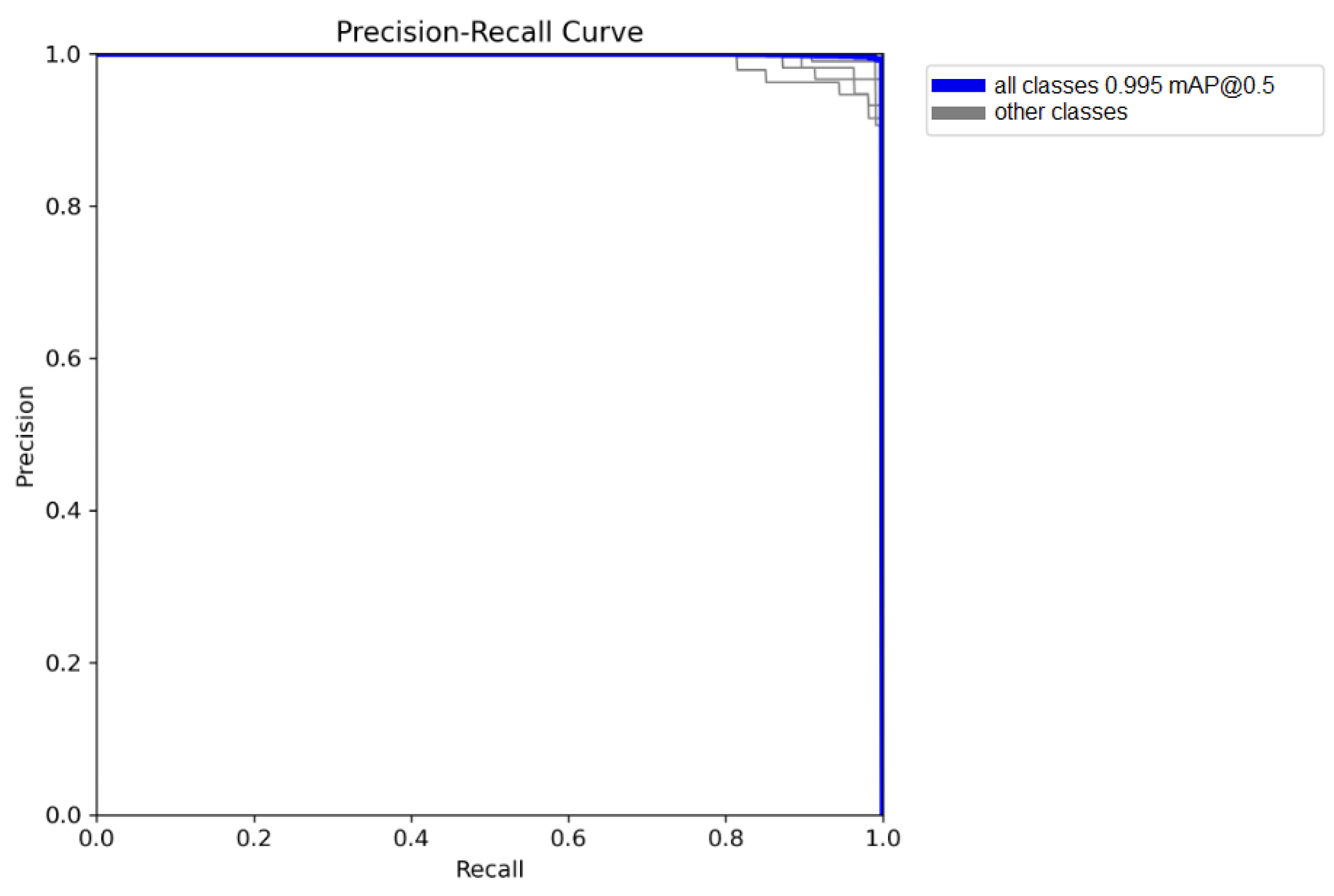

Figure 3 presents the normalized confusion matrix illustrating the classification performance across 51 exhibit categories and the background class. The strong diagonal dominance indicates that the model achieved nearly perfect recognition accuracy, with minimal misclassifications between similar exhibit types.

Figure 4 shows the Precision-Recall curve, where the model reached a mean average precision at an IoU threshold of 0.5 (mAP50) of 0.995, confirming its high precision and recall consistency across all classes.
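For readers unfamiliar with the metric, mAP50 counts a detection as correct when its predicted box overlaps the ground-truth box with an intersection over union (IoU) of at least 0.5, whereas mAP50-95 averages the result over IoU thresholds from 0.5 to 0.95. The sketch below shows only this matching test. The `(x1, y1, x2, y2)` box format and both function names are assumptions for illustration, not the evaluation code used in the study.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Width and height of the overlap; zero when the boxes do not intersect.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    intersection = inter_w * inter_h

    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - intersection
    return intersection / union if union > 0 else 0.0


def is_true_positive(pred_box, pred_label, gt_box, gt_label, threshold=0.5):
    """A prediction matches when the class agrees and IoU reaches the threshold."""
    return pred_label == gt_label and iou(pred_box, gt_box) >= threshold
```

Raising `threshold` toward 0.95 demands tighter localization, which is why the reported mAP50-95 is lower than mAP50.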

The trained machine learning model was integrated into the first beta version of the application, which was then tested in the real conditions of the museum. During the testing process, the application sent frames to the model for object recognition. When the model identified a match, it displayed the name of the recognized object. The recognition results, including the video stream and logs, were saved for further analysis.

After analyzing the data, it was found that the average recognition accuracy across all exhibits was about 97%, and the results were relatively consistent across most objects. However, two misclassifications were observed in the images (Figure 5): the black vase and the horizontally placed kobyz. The misclassification of the kobyz is likely due to the fact that it is the only exhibit in the dataset placed horizontally, which does not match the standard orientation in the dataset. In the case of the black vase, the error was attributed to the complex structure of the exhibit, which makes it difficult to distinguish, even though the model successfully recognized other objects with simpler shapes.

The work successfully demonstrates the application of machine learning in the field of exhibit recognition, with a high accuracy achieved in recognizing various objects in the museum. However, certain challenges were identified during the testing phase, specifically with the recognition of exhibits placed in a horizontal orientation, such as the kobyz, and the black vase, whose complex structure posed difficulties for accurate identification. Future work in machine learning will focus on addressing these challenges by expanding the dataset to include more diverse exhibit orientations and improving the model’s ability to recognize complex structures.

4. Mobile Application

The concept for the mobile application was developed in collaboration with representatives of the Abylkhan Kasteyev State Museum of Arts. At the initial stage, the core functionality was defined, consisting of the following key components:

Integration of an exhibit recognition module powered by machine learning algorithms. This feature enables interactive engagement with museum exhibits, thereby enriching the visitor experience.

Manipulation of 3D models shown in an AR display. Users can interact with digital replicas of artifacts and explore them from multiple perspectives, an opportunity often restricted in traditional exhibition settings.

A trilingual user interface in Kazakh, Russian, and English. Kazakh, as the official state language of Kazakhstan, ensures cultural authenticity; Russian, widely used as the language of interethnic communication, reflects the country’s historical and geographical context; and English addresses the growing number of international tourists seeking access to Kazakhstan’s cultural and historical heritage. This trilingual approach is intended to meet the needs of a diverse audience and maximize accessibility for all museum visitors.

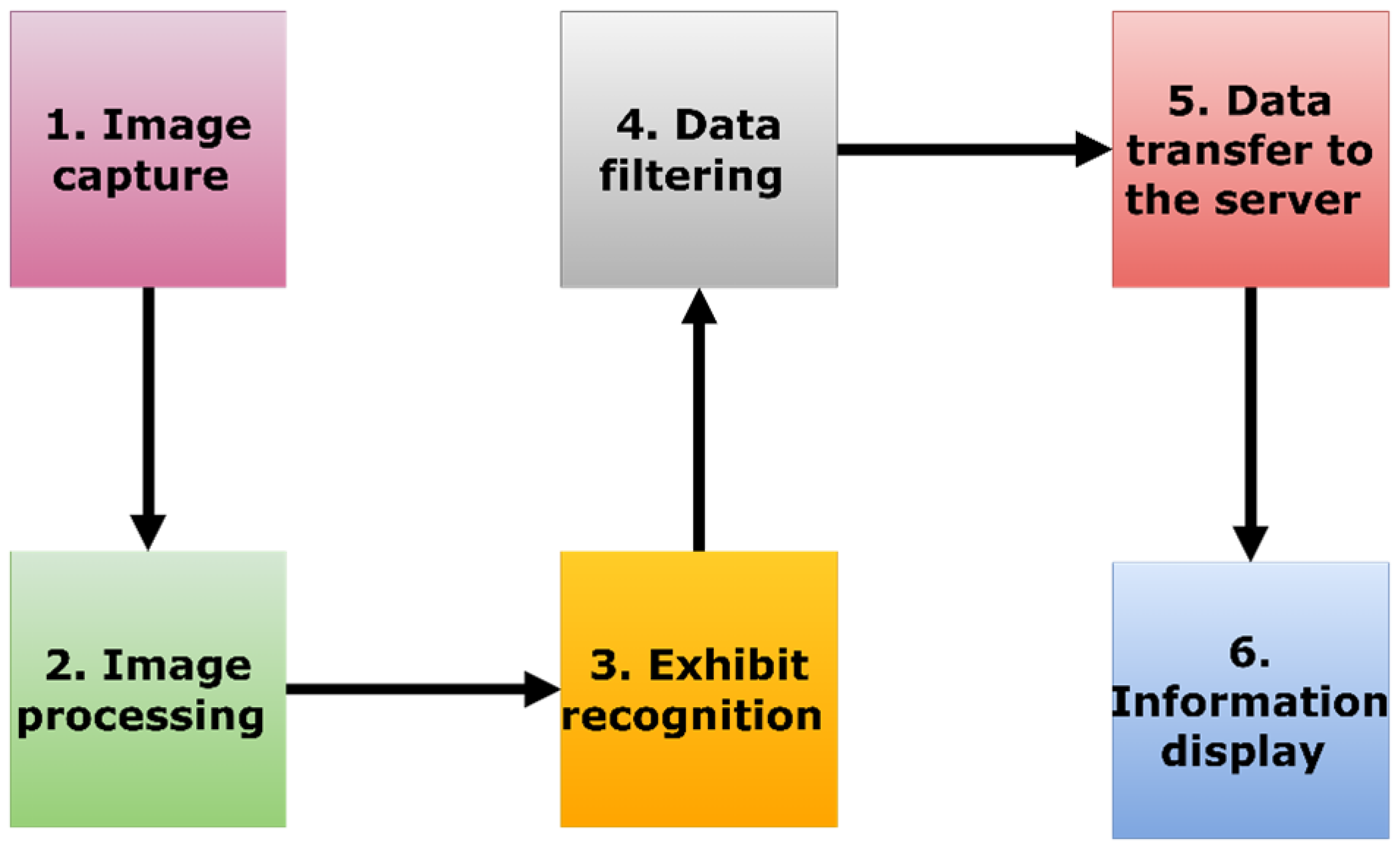

The operating principle of the application follows a sequence of steps, illustrated in Figure 6:

Image capture—the device’s camera records the current frame.

Image processing—the captured frame is passed to the YOLO model, which, through a dedicated library, analyzes the objects within the image.

Exhibit recognition—the model identifies objects and assigns their unique identifiers.

Data filtering—duplicate detections are eliminated by suspending repeated analysis of the same objects.

Data transfer to the server—the identifiers of recognized exhibits are sent to the server for further processing.

Information display—the server returns the corresponding information, which is then presented to the user.
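The data-filtering step in the sequence above can be sketched as follows. The app itself is written in Dart; Python is used here only for brevity. The class name, the confidence threshold, and the five-second suppression window are hypothetical, since the source does not state how long repeated analysis of the same object is suspended.

```python
import time


class DetectionFilter:
    """Suppress repeated detections of the same exhibit for a short period.

    Illustrative sketch only; all parameter values are assumptions.
    """

    def __init__(self, min_confidence=0.5, suppress_seconds=5.0, clock=time.monotonic):
        self.min_confidence = min_confidence
        self.suppress_seconds = suppress_seconds
        self._clock = clock          # injectable so the logic can be tested
        self._last_sent = {}         # exhibit_id -> time it was last forwarded

    def new_exhibits(self, detections):
        """Return exhibit ids worth sending to the server.

        `detections` is an iterable of (exhibit_id, confidence) pairs
        produced by the recognition model for one camera frame.
        """
        now = self._clock()
        fresh = []
        for exhibit_id, confidence in detections:
            if confidence < self.min_confidence:
                continue  # weak detection, ignore
            last = self._last_sent.get(exhibit_id)
            if last is not None and now - last < self.suppress_seconds:
                continue  # same exhibit seen recently, skip the duplicate
            self._last_sent[exhibit_id] = now
            fresh.append(exhibit_id)
        return fresh
```

Only the identifiers returned by `new_exhibits` would proceed to the data-transfer step, so a visitor holding the camera on one exhibit does not trigger a request for every frame.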

Figure 6. Application operating principle scheme.


Modern mobile development technologies make it possible to create high-performance cross-platform applications. In this project, the mobile application for exhibit recognition was implemented using the Flutter framework, the Dart programming language, and the flutter_vision library, which integrates a specially trained YOLOv8 model for recognizing cultural heritage objects.

Building on this foundation, the next stage involved designing the overall system architecture to integrate the mobile client with the server components and ensure stable data exchange. On the server side, Python 3.11 was selected as the primary programming language, with Django serving as the web framework and PostgreSQL as the database management system. The Django REST Framework (DRF) was used to implement RESTful APIs that facilitate structured communication between the backend and the mobile client.

The backend subsystem is responsible for processing recognition results, managing exhibit information, and delivering multilingual content. All components of the backend, including the web server and the database, are containerized using Docker, ensuring consistent behavior across development and production environments. The server-side components were deployed on a dedicated virtual server equipped with 8 CPU cores and 16 GB of RAM, providing sufficient computational capacity for database operations and real-time communication. An Nginx server functions as a reverse proxy, forwarding HTTP requests to the Django application and serving static files. This configuration increases reliability, scalability, and ease of maintenance while ensuring secure communication between the client and the server.

The overall application architecture (Figure 7) follows a multi-layered design that integrates the backend and frontend through the HTTP protocol. On the client side, communication with the server occurs via the REST API. Combined with the application of SOLID principles and the Bloc state management pattern, this design provides a clear separation between user interface and business logic, resulting in improved code readability, maintainability, and performance.

The mobile client is structured into several functional layers that communicate by exchanging data as class instances and JSON objects. The presentation layer, built with Flutter, includes a native camera preview component that captures the image stream. The captured frame is passed to the recognition layer, where an embedded machine learning model (stored as a .tflite file) performs exhibit detection and returns an array of recognized objects with their coordinates on the frame.

The data layer handles communication with the server and manages the recognition results. Once an exhibit is identified, its label is sent to the backend to retrieve detailed information. To minimize network requests and reduce backend load, the client application uses a local cache with a two-minute data lifetime. Before sending a new request, the app checks whether the corresponding exhibit data is already stored and up to date.
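The caching behavior described here can be sketched as a small time-to-live cache. As before, Python stands in for the app's Dart code. The two-minute lifetime comes from the text; the class name and the `fetch` callback, a stand-in for the REST request to the backend, are hypothetical.

```python
import time


class ExhibitCache:
    """Cache exhibit details per label and refetch once an entry expires."""

    def __init__(self, ttl_seconds=120.0, clock=time.monotonic):
        self._ttl = ttl_seconds      # two-minute data lifetime, as in the text
        self._clock = clock          # injectable so expiry can be tested
        self._entries = {}           # label -> (stored_at, data)

    def get_or_fetch(self, label, fetch):
        """Return cached data for `label`, calling `fetch(label)` only
        when nothing is stored or the stored entry is older than the TTL."""
        now = self._clock()
        entry = self._entries.get(label)
        if entry is not None and now - entry[0] < self._ttl:
            return entry[1]          # still fresh: no network request
        data = fetch(label)          # hypothetical call to the backend API
        self._entries[label] = (now, data)
        return data
```

A short lifetime of this kind trades a small risk of stale content for fewer requests while a visitor lingers at one exhibit.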

After retrieving the exhibit details, the application overlays the recognized object on the Flutter interface with interactive information panels and an option to view the exhibit’s 3D model in AR.

This multi-layered architecture not only supports efficient data exchange and model-based recognition but also enables the creation of a seamless and visually engaging user interface. Building upon this foundation, the design of the user interface focused on accessibility, clarity, and visual immersion.

The REST API enables standardized communication between client and server over the HTTP protocol, with data exchanged in JSON format. This design increases fault tolerance, simplifies data integration, and facilitates interoperability with other services.

The choice of Django and Python for the server side was motivated by their convenience, flexibility, and extensive ecosystem of ready-made solutions, which considerably accelerate development. The key advantages include:

Multilingual support—dedicated Django libraries enable the implementation of multilingual functionality, including the storage of data in three language versions.

Object-relational mapping (ORM)—Django’s ORM facilitates efficient interaction with the database, allowing developers to manipulate data through Python objects rather than raw SQL queries.

Administration panel—Django provides a built-in administrative interface for data management, eliminating the need to develop such functionality from scratch.

API infrastructure—the Django REST Framework streamlines the creation of RESTful APIs, automatically generating endpoints to support communication between the mobile client and the server.

Together, this technology stack reduces development time, enhances code maintainability, and ensures a robust and scalable project architecture.

Figure 8 presents the database model designed for the application. The model supports multilingual content, nested categories, thematic exhibitions, relationships between works and authors, and a mechanism for screen customization. It is composed of the following entities:

Author—stores information about the creator of the work.

Exhibition—groups works into thematic collections.

Category—classifies exhibits with support for hierarchical nesting.

Work—represents identifiable objects with unique search codes.

Screen—defines the configuration of displayed data.
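The relationships among these entities can be sketched with plain Python dataclasses. This is an illustration only, not the project's Django models: every field name below (for example `search_code`, `parent`, and `titles`) is an assumption inferred from the descriptions above, the language codes are assumed, and the Screen entity is omitted because its configuration fields are not described.

```python
from dataclasses import dataclass, field
from typing import Optional

# Text fields are stored per language code; "kk", "ru", "en" are assumed codes
# for Kazakh, Russian, and English.


@dataclass
class Author:
    names: dict[str, str]


@dataclass
class Category:
    titles: dict[str, str]
    parent: Optional["Category"] = None   # enables hierarchical nesting

    def path(self, lang: str) -> list[str]:
        """Titles from the root category down to this one."""
        node, parts = self, []
        while node is not None:
            parts.append(node.titles[lang])
            node = node.parent
        return list(reversed(parts))


@dataclass
class Exhibition:
    titles: dict[str, str]


@dataclass
class Work:
    search_code: str                      # label returned by the recognizer
    titles: dict[str, str]
    author: Author
    category: Category
    exhibitions: list[Exhibition] = field(default_factory=list)

    def title(self, lang: str, fallback: str = "en") -> str:
        """Localized title, falling back when a translation is missing."""
        return self.titles.get(lang) or self.titles[fallback]
```

In this sketch a work belongs to one category and one author but may appear in several exhibitions, which is one plausible reading of the entity list rather than a confirmed schema.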

Figure 8. UML diagram of entities in the application.


This structure ensures flexibility in organizing exhibits by both exhibitions and categories. Multilingual support (Kazakh, Russian, and English) further broadens accessibility, making the application valuable not only for local visitors but also for international audiences. Since each entity manages a distinct part of the dataset, the system is easier to scale and maintain. Overall, the model provides reliable information storage and efficient management of digital exhibit collections.

Building upon this data structure, the AR component of the application was developed to extend the user’s interaction beyond textual and visual information. The AR content consisted of interactive 3D models of museum exhibits, allowing visitors to engage with artifacts in a more immersive way. These models were provided directly by the Abylkhan Kasteyev State Museum of Arts and were obtained through 3D scanning of the actual artifacts conducted by the museum’s specialists. Each scanned model was processed, optimized in the .glb format, and embedded into the mobile application for offline use. After recognition, the corresponding 3D model appeared in the user’s real environment, anchored to a flat surface detected by the device’s camera. Users could freely rotate, scale, and examine the object from different perspectives. The inclusion of interactive manipulation and contextual information helped visitors better understand the exhibit’s form, craftsmanship, and cultural significance.

From a technical perspective, the current AR implementation employs the open-source model_viewer_plus library, which supports both Android and iOS platforms, ensuring cross-platform compatibility. After an exhibit is recognized and its information panel appears on the screen, users can tap the AR button next to the recognition card to display the corresponding 3D model in AR. The model can be rotated, zoomed in, and explored from various angles, enhancing the sense of immersion. The spatial 3D models of exhibits are stored as .glb files within the mobile application itself, allowing for offline access and ensuring rapid loading without the need for a network connection.

For displaying 3D models of exhibits in AR mode, integration with the flutter_3d_controller package is planned. This library supports real-time manipulation of 3D objects, including zoom, rotation, and translation, with compatibility for .obj and .fbx models and optimizations for mobile rendering. To anchor volumetric models within real-world space, the ar_flutter_plugin will be employed. Future work will focus on tighter integration between flutter_3d_controller and ar_flutter_plugin to enhance real-time rendering and user interaction. Planned improvements include dynamic lighting effects, physics-based interactions, and multi-user collaboration, enabling multiple visitors to simultaneously engage with AR content.

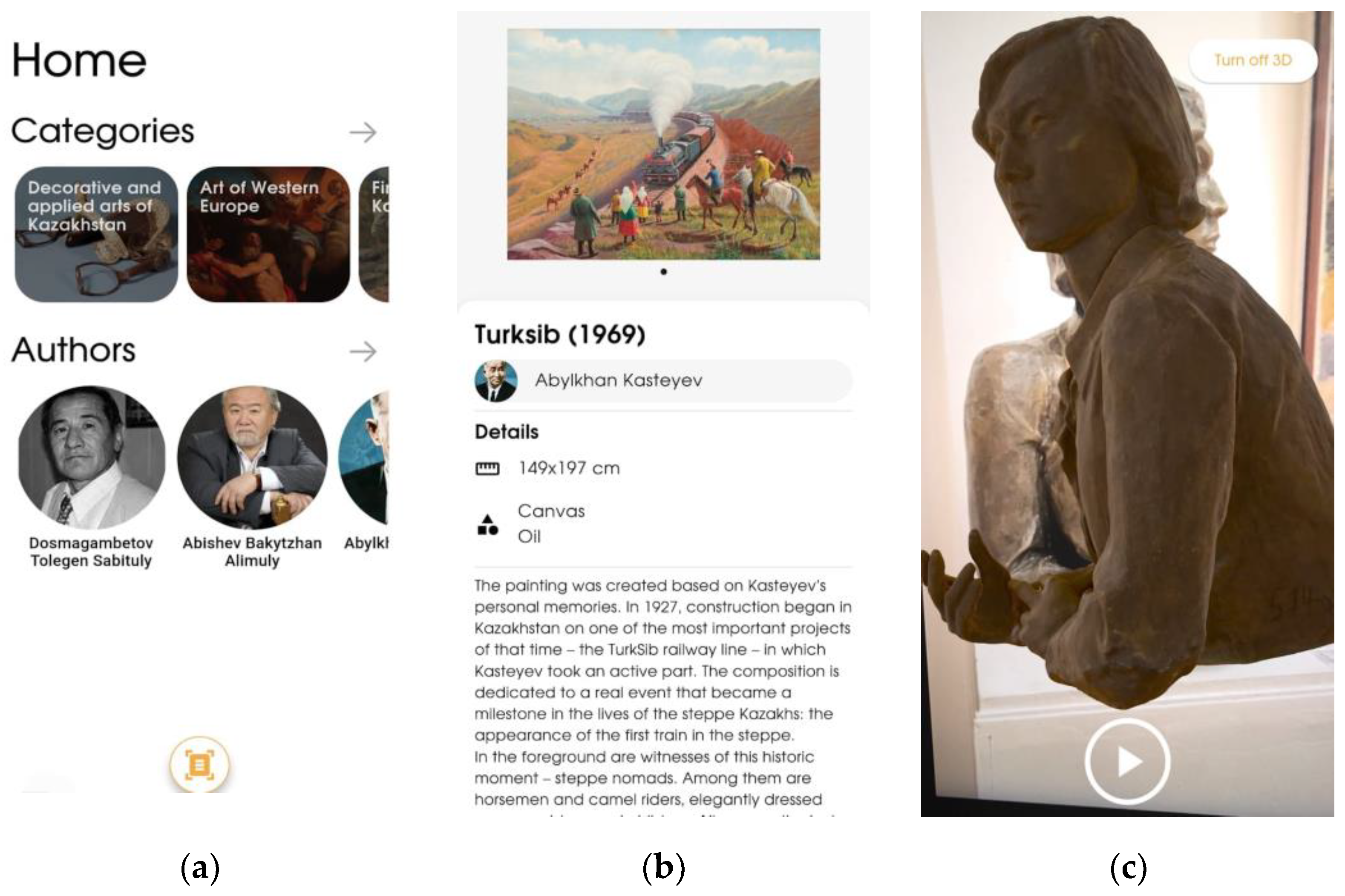

The user interface (Figure 9) was designed in a minimalist and intuitive style with a strong emphasis on visual content: images of authors and categories are placed at the center of the experience. The main screen (Figure 9a) serves as the central navigation point of the application. It is clearly structured and contains key interactive elements that provide quick access to the core functionality. The “Scan and get information” block is an active component: tapping it launches the camera for exhibit recognition. The “Categories” section presents a horizontally scrollable list of exhibit groups, each represented by a card containing an image and a short title. Selecting a card redirects the user to a detailed catalog of exhibits in that category. The “Authors” section provides a gallery of personalities related to the exhibits (artists, creators, historical figures). Each person is displayed on a card with a portrait and a name; tapping a card opens a personal page with a biography and a list of works.

The detailed exhibit page (Figure 9b) focuses on the visual representation of the object. The upper part of the screen is occupied by the artwork image, followed by essential metadata such as title, author, creation date, dimensions, and technique. A text block provides a concise historical background and interpretation of the work. Another dedicated screen (Figure 9c) is used for 3D visualization: it highlights an interactive model of the exhibit, such as a sculpture, which users can rotate, scale, and explore from different angles. This functionality enhances the perception of cultural artifacts and creates an immersive effect of presence.

In addition to improvements achieved through dataset expansion and model refinement, the final version of the application was evaluated in a real museum setting (

Figure 10). Testing demonstrated an average recognition accuracy of approximately 97%, with the system consistently identifying a wide range of exhibits under varying lighting conditions and viewing angles. This accuracy was evaluated through the analysis of internal recognition logs, as was also done in the beta version of the application. User feedback was predominantly positive, emphasizing the intuitive interface, seamless integration of AR, and the added value of interactive 3D models and historical narratives.
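The log-based accuracy estimate described above can be illustrated with a minimal Python sketch. The log layout (pairs of true and predicted exhibit labels) and the exhibit names are assumptions made for illustration; the actual format of the application's recognition logs is not specified here.

```python
from collections import defaultdict

# Hypothetical log records: (true exhibit label, label predicted by the app).
# Both the record layout and the labels are illustrative assumptions.
SAMPLE_LOG = [
    ("portrait_01", "portrait_01"),
    ("portrait_01", "portrait_01"),
    ("vase_02", "vase_02"),
    ("vase_02", "sculpture_03"),  # a misclassification
    ("sculpture_03", "sculpture_03"),
]


def recognition_accuracy(records):
    """Return (overall accuracy, per-exhibit accuracy) for (true, predicted) pairs."""
    if not records:
        raise ValueError("no log records to evaluate")
    hits = defaultdict(int)
    totals = defaultdict(int)
    for true_label, predicted_label in records:
        totals[true_label] += 1
        if true_label == predicted_label:
            hits[true_label] += 1
    overall = sum(hits.values()) / len(records)
    per_exhibit = {label: hits[label] / totals[label] for label in totals}
    return overall, per_exhibit


overall, per_exhibit = recognition_accuracy(SAMPLE_LOG)
print(f"overall accuracy: {overall:.2%}")
for label, accuracy in sorted(per_exhibit.items()):
    print(f"{label}: {accuracy:.2%}")
```

Reporting per-exhibit accuracy alongside the overall figure makes it easier to spot individual objects that are recognized less reliably than the average suggests.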

Some limitations were also observed, including occasional misclassification of exhibits. These errors were primarily attributed to factors such as insufficient lighting, background noise, and shadows—issues commonly encountered in computer vision research [

34] rather than being specific to the application domain. Future work will therefore focus on further optimizing processing to improve responsiveness and reduce misclassification rates.

5. Results

The effectiveness of the developed AR application was evaluated using the extended TAM framework described in

Section 2. The proposed hypotheses (H1–H5) were tested through correlation analysis to examine relationships among the five constructs.

To operationalize the constructs, a structured questionnaire was developed and adapted from validated instruments used in previous AR and mobile learning research [

31,

32,

33], ensuring conceptual alignment with established TAM dimensions. The items were translated into Russian and Kazakh and refined to reflect the museum context.

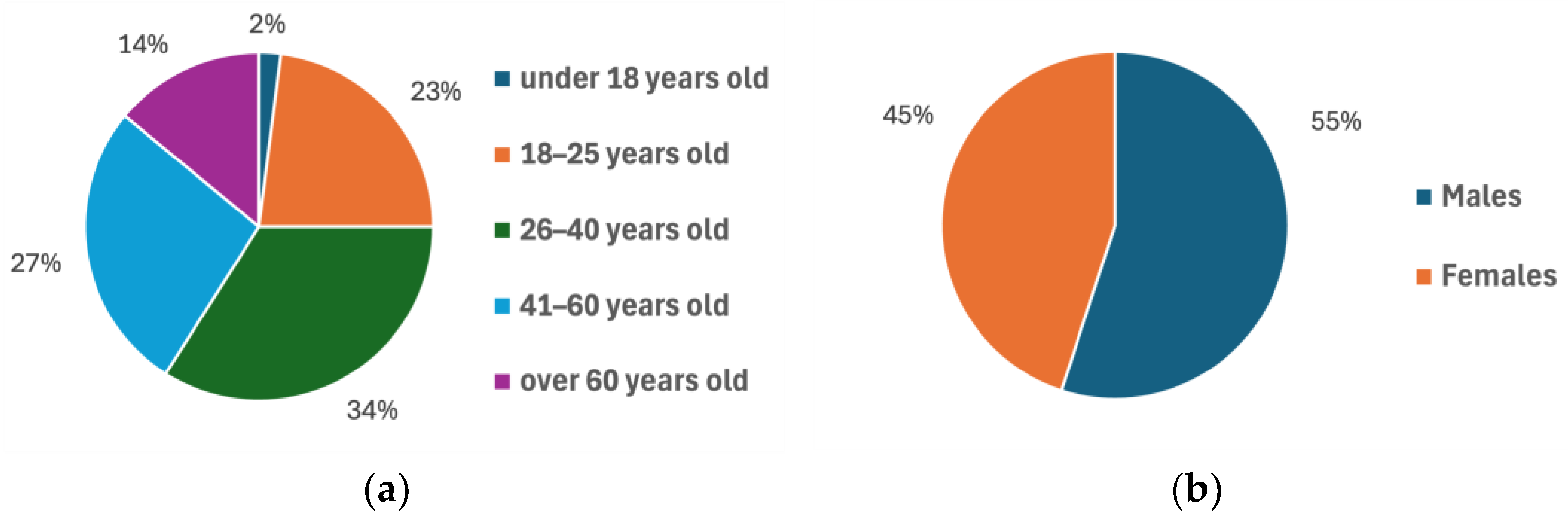

To assess the effectiveness of the implemented AR solutions, a field test was conducted under real-world conditions in collaboration with the Abylkhan Kasteyev State Museum of Arts. The survey was administered on-site over several days among visitors who voluntarily agreed to participate, so the respondents form a voluntary (convenience) sample of museum attendees rather than a random one. Ethical review and approval were waived for this study because the research involved anonymous survey responses without the collection of sensitive data. In total, 44 respondents aged 16 to 78 took part in the study, representing diverse levels of digital literacy and prior AR experience. All participants provided informed consent prior to participation.

The demographic composition of the sample was as follows: the largest group of participants was aged between 26 and 40 years (34%), followed by those aged 41–60 years (27%), 18–25 years (23%), over 60 years (14%), and one participant under 18 years (2%), as shown in

Figure 11a. The gender distribution included 20 females (45%) and 24 males (55%), as illustrated in

Figure 11b.

Reliability of the instrument was assessed using Cronbach’s alpha, with all constructs showing values above 0.85 (

Table 2), confirming high internal consistency and reliability.
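For reference, Cronbach's alpha for a construct with k items is k/(k − 1) multiplied by one minus the ratio of the summed item variances to the variance of the respondents' total scores. The Python sketch below implements this standard formula; the response matrix is invented for illustration and does not reproduce the study data.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for rows of item scores (one row per respondent)."""
    n_items = len(responses[0])
    if n_items < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two respondents")
    # Sample variance of each item across respondents.
    item_variances = [
        variance([row[i] for row in responses]) for i in range(n_items)
    ]
    # Sample variance of each respondent's summed score.
    total_variance = variance([sum(row) for row in responses])
    if total_variance == 0:
        raise ValueError("total scores have zero variance")
    return n_items / (n_items - 1) * (1 - sum(item_variances) / total_variance)


# Invented 5-point Likert answers for one four-item construct (illustrative only).
demo_responses = [
    [4, 5, 4, 5],
    [3, 3, 4, 3],
    [5, 5, 5, 5],
    [4, 4, 3, 4],
    [2, 3, 2, 3],
]
alpha_demo = cronbach_alpha(demo_responses)
print(f"alpha = {alpha_demo:.3f}")
```

The function would be applied separately to the item block of each construct, which yields one coefficient per construct.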

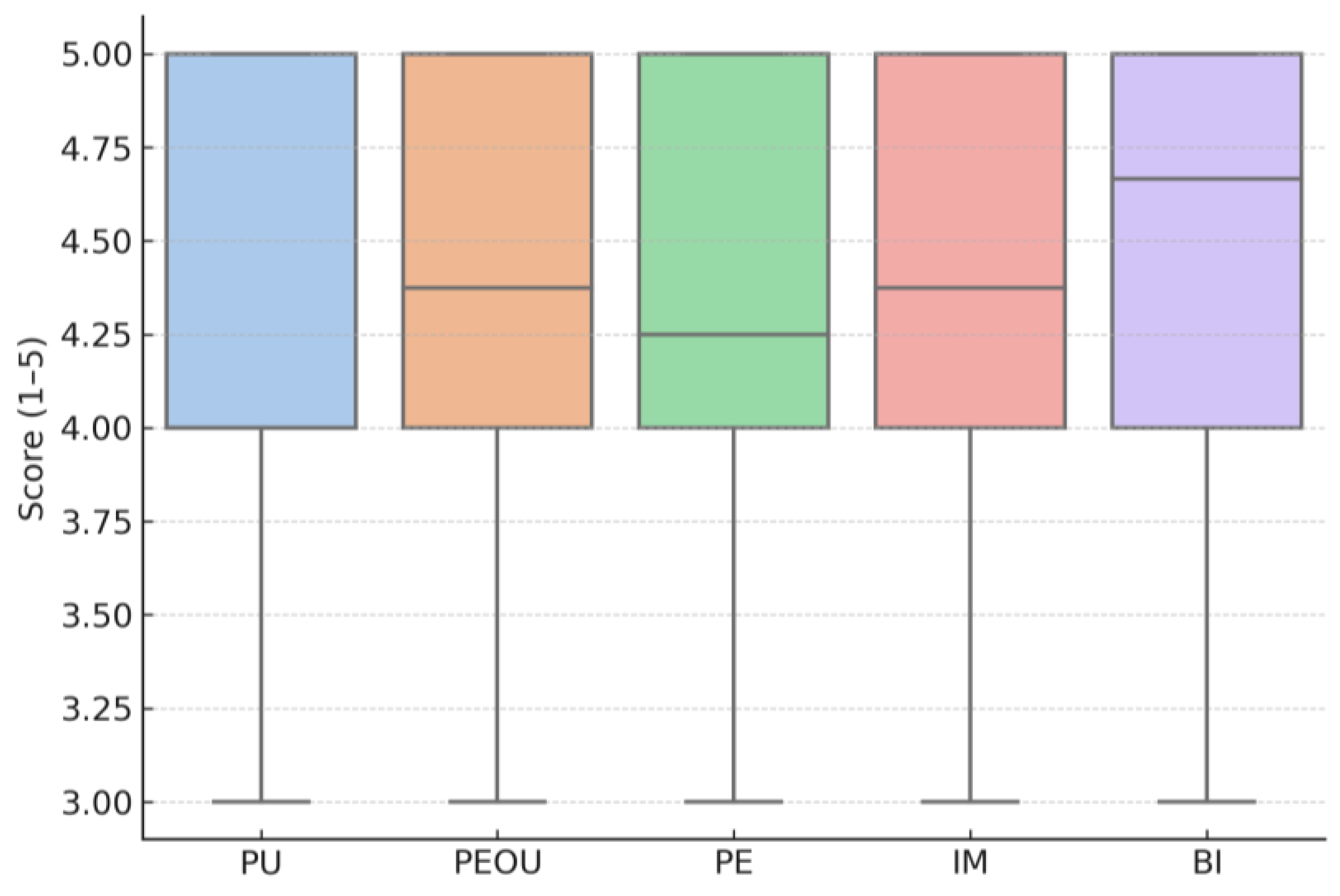

The questionnaire consisted of 19 items distributed across the five constructs: PU (4 items), PEOU (4 items), PE (4 items), IM (4 items), and BI (3 items). Each item was measured on a 5-point Likert scale ranging from 1 = strongly disagree to 5 = strongly agree.
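One common way to turn such a questionnaire into construct-level scores is to average each respondent's ratings within a construct. The sketch below assumes this averaging approach and uses hypothetical item codes such as `PU1`; neither the codes nor the aggregation rule are stated in the text above.

```python
from statistics import mean

# Item counts per construct as reported in the text (19 items in total).
CONSTRUCT_ITEMS = {"PU": 4, "PEOU": 4, "PE": 4, "IM": 4, "BI": 3}


def construct_scores(answers):
    """Average one respondent's 1-5 Likert ratings within each construct.

    `answers` maps hypothetical item codes such as 'PU1' to ratings.
    """
    scores = {}
    for construct, n_items in CONSTRUCT_ITEMS.items():
        ratings = [answers[f"{construct}{i}"] for i in range(1, n_items + 1)]
        if any(rating not in range(1, 6) for rating in ratings):
            raise ValueError(f"{construct}: ratings must be integers from 1 to 5")
        scores[construct] = mean(ratings)
    return scores


# Invented answers of a single respondent (illustrative only).
example_answers = {
    "PU1": 4, "PU2": 5, "PU3": 4, "PU4": 5,
    "PEOU1": 4, "PEOU2": 4, "PEOU3": 4, "PEOU4": 4,
    "PE1": 5, "PE2": 5, "PE3": 4, "PE4": 4,
    "IM1": 3, "IM2": 4, "IM3": 4, "IM4": 5,
    "BI1": 5, "BI2": 5, "BI3": 5,
}
example_scores = construct_scores(example_answers)
print(example_scores)
```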

To validate the hypotheses, a correlation analysis was conducted. The results are shown in

Table 3.

Table 4 summarizes the descriptive statistics of the five constructs in the extended TAM. All constructs exhibited high mean values (M = 4.20–4.49 on a 5-point scale), indicating a generally positive evaluation of the AR application. The lowest variability was observed for Perceived Ease of Use (SD = 0.61), suggesting consistent agreement among participants regarding the app’s usability. Behavioral Intention (M = 4.49) achieved the highest mean score, followed by Perceived Ease of Use (M = 4.36) and Perceived Enjoyment (M = 4.34), demonstrating that both functional and affective aspects contributed to positive user acceptance.

All correlation coefficients were statistically significant at p < 0.001, confirming strong positive relationships between the examined variables. Perceived Ease of Use (PEOU) was strongly correlated with both Perceived Usefulness (PU) (r = 0.677) and Perceived Enjoyment (PE) (r = 0.775), supporting hypotheses H1 and H2. This suggests that the more intuitive and user-friendly the AR application is, the greater the value and enjoyment users perceive during interaction. Similarly, Perceived Usefulness (PU) showed a strong positive correlation with Behavioral Intention (BI) (r = 0.700), indicating that users who found the application beneficial were more inclined to continue using it (H3).

The strongest correlation was observed between Perceived Enjoyment (PE) and Behavioral Intention (BI) (r = 0.825), followed closely by Immersion (IM) and Behavioral Intention (BI) (r = 0.771), confirming H4 and H5. These results highlight the critical role of emotional engagement and immersive experience in shaping user acceptance of AR technologies in museum contexts. In particular, enjoyment and immersion appear to be more influential predictors of behavioral intention than purely utilitarian factors, emphasizing the importance of affective design elements in interactive cultural applications.
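As a rough plausibility check, the reported coefficients can be related to the sample size of 44 respondents. The sketch below assumes that the coefficients are Pearson correlations computed on all 44 respondents and uses Fisher's z-transformation, which gives only an approximate two-sided p-value; it is not necessarily the test used in the study.

```python
import math

N_RESPONDENTS = 44  # sample size reported in the study

# Correlation coefficients as reported for the five hypotheses.
REPORTED_R = {
    "H1 (PEOU, PU)": 0.677,
    "H2 (PEOU, PE)": 0.775,
    "H3 (PU, BI)": 0.700,
    "H4 (PE, BI)": 0.825,
    "H5 (IM, BI)": 0.771,
}


def fisher_p_value(r, n):
    """Approximate two-sided p-value for H0: rho = 0 via Fisher's z-transformation."""
    if not -1.0 < r < 1.0:
        raise ValueError("r must lie strictly between -1 and 1")
    if n <= 3:
        raise ValueError("n must be greater than 3")
    # atanh(r) is approximately normal with standard error 1 / sqrt(n - 3).
    z = math.atanh(r) * math.sqrt(n - 3)
    # Two-sided tail probability of the standard normal distribution.
    return math.erfc(abs(z) / math.sqrt(2))


p_values = {name: fisher_p_value(r, N_RESPONDENTS) for name, r in REPORTED_R.items()}
for name, p in p_values.items():
    print(f"{name}: r = {REPORTED_R[name]:.3f}, approx. p = {p:.2e}")
```

Under these assumptions, each of the five coefficients corresponds to an approximate p-value below 0.001, in line with the reported significance level.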

Figure 12 displays the correlation matrix among the TAM constructs. All correlations were positive and strong (r = 0.62–0.83), confirming close interrelationships between usability, enjoyment, immersion, and intention to use. The strongest correlation was observed between Perceived Enjoyment (PE) and Behavioral Intention (BI) (r = 0.82), followed by Immersion (IM) and Behavioral Intention (BI) (r = 0.77). This finding highlights that emotional engagement and immersion are stronger predictors of technology adoption than purely utilitarian factors.

Figure 13 illustrates the distribution of responses for each construct using box plots. The narrow interquartile ranges for PEOU and PU reflect a high degree of agreement among participants regarding usability and usefulness, while slightly greater variance for IM indicates individual differences in perceived immersion. For the PU construct, the median coincides with the upper quartile, reflecting high consistency among participant responses and a strong consensus on the perceived usefulness of the AR application. This pattern confirms the reliability of user evaluations and demonstrates a generally positive attitude toward the AR mobile application.
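A median that coincides with the upper quartile typically arises when a large share of responses sits at the same high value. The sketch below reproduces this pattern with invented scores; the data are not taken from the study, and the "inclusive" quartile method is an assumption, since plotting libraries may use slightly different quartile conventions.

```python
from statistics import quantiles


def five_number_summary(scores):
    """Return min, quartiles, and max as used to draw a box plot."""
    ordered = sorted(scores)
    q1, median, q3 = quantiles(ordered, n=4, method="inclusive")
    return {
        "min": ordered[0],
        "q1": q1,
        "median": median,
        "q3": q3,
        "max": ordered[-1],
    }


# Invented construct scores with many responses at the scale maximum.
pu_like_scores = [3, 4, 4, 5, 5, 5, 5, 5, 5]
summary = five_number_summary(pu_like_scores)
print(summary)
```

In this example, the median and the upper quartile both fall on the scale maximum, so the upper half of the box collapses into a single line.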

Together, these visual analyses provide clear statistical evidence supporting the robustness of the extended TAM framework in the museum context, emphasizing that both functional ease and affective engagement are essential for promoting user acceptance of AR technologies.

Overall, the correlation analysis confirms the validity of the extended TAM framework in the context of museum-based AR applications. The results demonstrate that emotional and experiential factors—particularly enjoyment and immersion—play a decisive role in shaping visitors’ behavioral intention to adopt AR technology. These findings provide a strong empirical foundation for the subsequent discussion on the implications of AR integration in cultural heritage environments.

6. Discussion and Conclusions

The findings of this study confirm the significant potential of AR mobile applications to enhance museum experiences by increasing visitor engagement, enjoyment, and intention to reuse the technology. Pilot testing at the Abylkhan Kasteyev State Museum of Arts demonstrated that participants evaluated the AR application highly in terms of usability, immersion, and overall satisfaction. The survey data revealed that perceived enjoyment emerged as the strongest predictor of behavioral intention to continue using the application, highlighting that emotional engagement plays a more decisive role than purely utilitarian benefits. These outcomes align with prior research emphasizing the importance of affective factors in the adoption of immersive technologies in museums [

8,

12,

18].

The extended TAM framework used in this research provided a robust lens for understanding user perceptions of the developed system. All five hypotheses (H1–H5) were supported by statistically significant correlations (p < 0.001). Perceived ease of use showed a strong positive association with both perceived usefulness and perceived enjoyment (supporting H1 and H2), suggesting that intuitive interaction not only facilitates functional access but also fosters pleasure during use. Furthermore, perceived enjoyment and immersion demonstrated the highest correlations with behavioral intention (supporting H4 and H5), showing that affective engagement and a sense of presence are essential for sustaining continued interaction with AR technology. The association between perceived usefulness and behavioral intention (H3) further suggests that visitors are willing to reuse AR tools when they clearly see their added educational value. Together, these results reaffirm that the success of AR in cultural settings depends on achieving both cognitive efficiency and emotional resonance.

From a usability perspective, the intuitive interface, trilingual design, and smooth integration of 3D models contributed to user satisfaction and accessibility. However, certain demographic differences were observed: older visitors and individuals with limited digital literacy faced difficulties navigating AR features, echoing inclusivity challenges noted in earlier studies [

29,

30]. Addressing these concerns may require the introduction of guided tutorials, adaptive font scaling, or voice-assisted navigation to make the interface accessible to a wider audience. Another promising direction is the implementation of collaborative and social features, as discussed in [

28], enabling shared exploration among multiple users and encouraging intergenerational learning.

From a technical standpoint, the machine learning model achieved a recognition accuracy of approximately 97%, yet some artifacts with complex textures or reflective surfaces, such as the black vase and the horizontally placed kobyz, remained challenging. These limitations stem from lighting variability, occlusions, and limited dataset diversity, which are typical of real-world computer vision systems. Future development should expand the dataset to include more varied orientations, apply image normalization techniques, and explore hybrid cloud-based inference to improve recognition under non-ideal museum conditions.

Despite encouraging results, several constraints must be acknowledged. First, the participant sample (44 respondents from a single museum) limits the generalizability of findings to broader visitor populations or international contexts. Second, environmental factors such as lighting conditions and background clutter influence detection reliability. Finally, while the TAM framework effectively captured user perceptions, qualitative methods such as interviews or observations could provide deeper insights into user experience. Addressing these limitations in future research will strengthen both the theoretical and practical contributions of AR-based museum systems.

Overall, the development and deployment of the AR-based mobile application for the Abylkhan Kasteyev State Museum of Arts demonstrated considerable potential to enhance visitor engagement, learning, and accessibility. Through the integration of machine learning and computer vision, the application successfully recognized and visualized exhibits, offering users an interactive and educational experience. To further advance this approach, future work should focus on expanding the database of recognized artifacts, refining recognition algorithms to minimize errors, and enriching interactivity through features such as gamification, multi-user collaboration, and remote access. Particular attention will also be given to accessibility, with plans to extend multilingual support and improve usability for visitors with disabilities.

In conclusion, the study highlights the transformative role of AR technologies in reimagining traditional museum experiences. By making cultural heritage more accessible, inclusive, and engaging, AR-based applications hold strong potential to modernize museums, strengthen learning outcomes, and foster deeper emotional and cognitive connections between audiences and exhibits.