SACW: Semi-Asynchronous Federated Learning with Client Selection and Adaptive Weighting

Abstract

1. Introduction

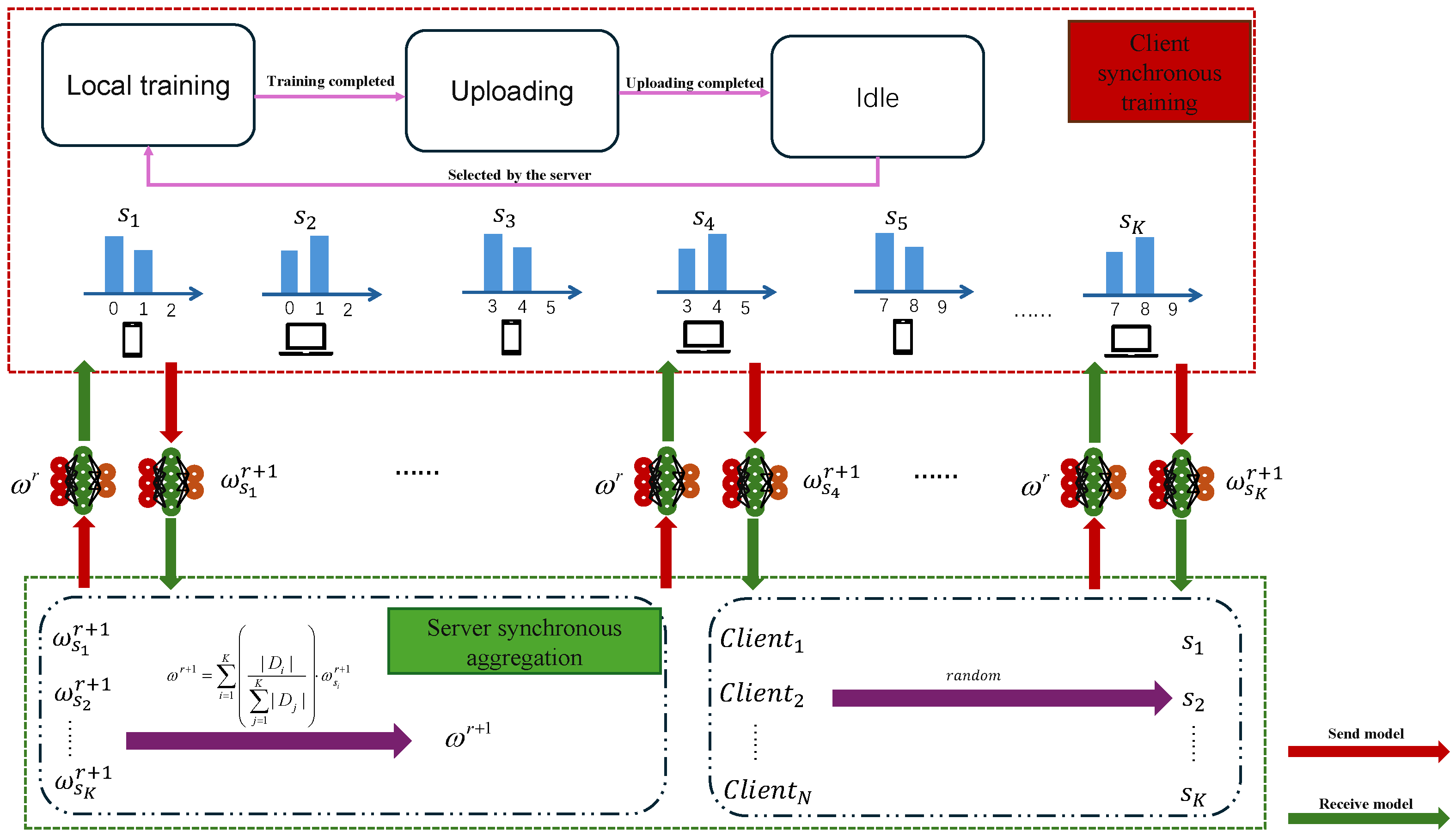

- We propose the SACW framework, which adopts a semi-asynchronous strategy of “asynchronous client training–synchronous server aggregation.” This design balances training efficiency with model quality. In addition, an adaptive weighting algorithm based on model staleness and data volume improves system resource utilization and effectively mitigates system heterogeneity.

- We present a DBSCAN-based client selection strategy that automatically identifies clients with similar data distributions through density clustering, without requiring predefined cluster numbers. This approach forms a grouping structure with “inter-cluster heterogeneity and intra-cluster homogeneity.” Representative clients are then selected from each cluster for aggregation, which alleviates data heterogeneity. To further reduce the network load introduced by semi-asynchronous communication, we introduce a lattice-based quantization scheme for model compression. This method significantly decreases the transmission overhead between clients and the server.

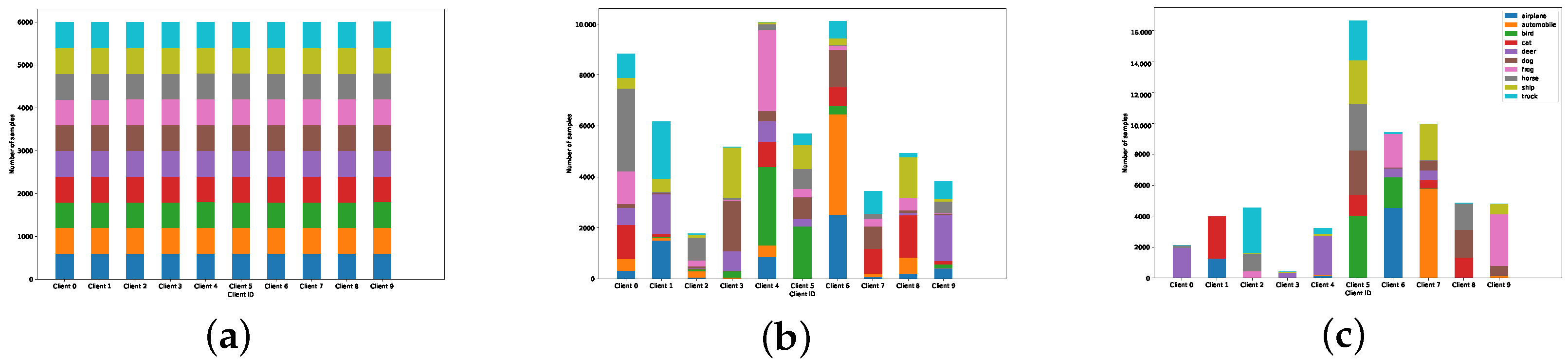

- To evaluate the practicality of our method, we construct a realistic heterogeneous training environment. We simulate fast and slow clients by assigning different computing resources and generate Non-IID data distributions through non-uniform dataset partitioning. Experiments on three benchmark datasets show that SACW achieves high model accuracy while delivering faster convergence and better communication efficiency.

2. Related Work

3. Preliminary

3.1. Federated Learning

- Initialization: The central server broadcasts initialization parameters to all clients. Each client performs local initialization after receiving the broadcast information from the server.

- Client Selection: The server randomly selects a subset of all available clients, forming a candidate set, and sends the global model to the selected clients.

- Local Client Training: Each selected client trains the model on its local dataset, starting from the received global model, obtains updated parameters, and then uploads them to the server. Upon completing training, the client stops local training and waits for subsequent selection by the server. The client state transitions as follows: .

- Server Aggregation: After collecting all updated parameters, the server performs a weighted aggregation of the local models based on each client’s data volume to obtain a new global model.

- Model Evaluation: The system evaluates whether the global model’s accuracy meets the target threshold or whether the total training rounds reach R. If yes, the federated learning task terminates. Otherwise, the next training round begins immediately, repeating steps 2–5.
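The server aggregation step above can be sketched as a data-volume-weighted average. This is a minimal illustration rather than the authors' implementation: models are assumed to be flat lists of floats, and the function name `fedavg_aggregate` and the three example clients are hypothetical.

```python
from typing import List, Sequence


def fedavg_aggregate(client_params: Sequence[Sequence[float]],
                     data_sizes: Sequence[int]) -> List[float]:
    """Average flat parameter vectors, weighting each client by its data volume."""
    if not client_params or len(client_params) != len(data_sizes):
        raise ValueError("need exactly one data size per client model")
    total = sum(data_sizes)
    dim = len(client_params[0])
    global_params = [0.0] * dim
    for params, n in zip(client_params, data_sizes):
        weight = n / total  # this client's share of all training samples
        for j in range(dim):
            global_params[j] += weight * params[j]
    return global_params


# Three hypothetical clients holding 100, 300 and 600 samples.
updates = [[1.0, 0.0], [2.0, 1.0], [3.0, 2.0]]
sizes = [100, 300, 600]
new_global = fedavg_aggregate(updates, sizes)
```

With these sizes the clients contribute with weights 0.1, 0.3 and 0.6, so the client with the most data dominates the new global model.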

3.2. DBSCAN

- Parameter Initialization: Predefine the neighborhood radius ε (Eps) and the minimum density threshold MinPts to characterize the density conditions for core points.

- Point Access and Core Point Determination: Randomly select a point f from the dataset and mark it as visited. If the ε-neighborhood of f contains at least MinPts objects, f is identified as a core point, a new cluster C is created, and all objects within the neighborhood of f are added to the candidate set F. Otherwise, f is temporarily marked as noise.

- Cluster Expansion: While the candidate set F is non-empty, repeat the following operations: Extract any object d from F that has not been assigned to another cluster. If d has not been visited, mark it as visited and examine its ε-neighborhood. If d also satisfies the core point condition, append all objects in its neighborhood not yet assigned to the current cluster into F. Then, assign d to cluster C. This process continues until F is empty, at which point cluster C is fully constructed.

- Iteration: Randomly select another unvisited point and repeat steps 2 and 3 until all objects in the dataset have been visited.

- Output: Finally, all points successfully assigned to a cluster C form the clustering result, while points never incorporated into any cluster are treated as noise.
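The five steps above can be sketched in plain Python. This is a minimal illustration under stated assumptions: points are visited in index order rather than randomly, distances are Euclidean, neighborhoods are found by brute force, and the example points are made up. The function name `dbscan` and the label convention (-1 for noise) are choices made here, not taken from the paper.

```python
from math import dist
from typing import List, Optional, Sequence

NOISE = -1


def dbscan(points: Sequence[Sequence[float]], eps: float, min_pts: int) -> List[int]:
    """Return one label per point: 0, 1, ... for clusters and -1 for noise."""
    n = len(points)
    labels: List[Optional[int]] = [None] * n  # None means "not visited yet"

    def neighbors(i: int) -> List[int]:
        # Eps-neighborhood of point i, including i itself.
        return [j for j in range(n) if dist(points[i], points[j]) <= eps]

    cluster_id = -1
    for i in range(n):
        if labels[i] is not None:
            continue  # already visited
        neigh = neighbors(i)
        if len(neigh) < min_pts:
            labels[i] = NOISE  # provisional: may later become a border point
            continue
        cluster_id += 1  # point i is a core point, so it starts a new cluster C
        labels[i] = cluster_id
        candidates = list(neigh)  # candidate set F
        while candidates:
            d = candidates.pop()
            if labels[d] == NOISE:
                labels[d] = cluster_id  # border point: joins C but is not expanded
                continue
            if labels[d] is not None:
                continue  # already assigned to a cluster
            labels[d] = cluster_id
            d_neigh = neighbors(d)
            if len(d_neigh) >= min_pts:  # d is also a core point: expand through it
                candidates.extend(j for j in d_neigh
                                  if labels[j] is None or labels[j] == NOISE)
    return labels


# Two dense groups and one isolated point (hypothetical data).
pts = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10), (5, 5)]
cluster_labels = dbscan(pts, eps=1.5, min_pts=3)
```

The number of clusters is never passed in: it falls out of Eps and MinPts, which is the property the client selection strategy relies on.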

4. Proposed Method: SACW

4.1. Problem Description

- The client set remains fixed during training, with no dynamic addition of new clients;

- Each client’s local dataset remains unchanged throughout the training process;

- The central server is honest and coordinates all clients for global training.

4.2. Algorithm Description

- Initialization: All clients receive the initial model broadcast by the server, together with the learning rate and the maximum number of local training steps L. They subsequently transmit their local data distributions to the server.

- Client Clustering and Selection: The server clusters the clients based on their local data distributions and, periodically (with interval T), randomly selects clients from each cluster to receive the latest global model. R denotes the total number of global training rounds.

- Local Client Training: Each local client operates under one of three conditions:

  - (a) When selected by the server, the client immediately uploads its compressed local model (full or partial) along with the model version number, then initiates local training based on the latest global model. State transition: .

  - (b) When not selected by the server but with local training steps below the maximum threshold, the client continues local model training. State transition: .

  - (c) When not selected and having reached the maximum training steps, the client remains idle until scheduled by the server. State transition: Persists in .

- Server Aggregation: Upon receiving all selected clients’ models and version numbers, the server decompresses the models, calculates their staleness, and computes adaptive weighting factors based on both model staleness and training data volume. It then performs weighted aggregation to update the global model.

- Model Evaluation: The server evaluates whether the global model’s accuracy meets the target threshold or whether the training duration has expired. If yes, the federated learning task terminates; otherwise, it repeats steps 2–5 after waiting for period T.
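The three client-side conditions (a) to (c) in the local training step can be sketched as a small decision function. This is an illustrative sketch only: the class `ClientState`, the function `client_tick`, and the action labels `"upload_and_restart"`, `"train"` and `"idle"` are hypothetical names, and one call stands for one scheduling decision rather than a real training step.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class ClientState:
    base_version: int = 0  # version of the global model the local model started from
    local_steps: int = 0   # local training steps done since that model arrived


def client_tick(state: ClientState, selected: bool,
                latest_version: int, max_steps: int) -> Tuple[str, Optional[int]]:
    """Apply one scheduling decision; return (action, uploaded model version)."""
    if selected:
        # (a) upload the current local model with its version number,
        #     then restart local training from the latest global model
        uploaded_version = state.base_version
        state.base_version = latest_version
        state.local_steps = 0
        return "upload_and_restart", uploaded_version
    if state.local_steps < max_steps:
        # (b) not selected and below the step limit L: keep training locally
        state.local_steps += 1
        return "train", None
    # (c) not selected and step limit reached: wait for the server
    return "idle", None


state = ClientState()
history = [client_tick(state, selected=False, latest_version=0, max_steps=2)
           for _ in range(3)]
history.append(client_tick(state, selected=True, latest_version=3, max_steps=2))
```

In this run the client trains twice, idles once, and then uploads a model that still carries version 0 while the server is at version 3. The server can read the difference as that model's staleness.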

4.3. Method Description

4.3.1. Client Selection

Algorithm 1: Pseudocode of SACW

4.3.2. The Semi-Asynchronous Framework with Adaptive Weighting

- Problem 1: In each training round, the central server selects only a subset of clients to participate in global training (for instance, 200 out of 1000 clients). As communication rounds accumulate, some clients may remain unselected for an extended period, so their local models become excessively stale relative to the latest global model. Directly averaging these highly stale local models into the global model can severely impede convergence. Therefore, in SACW, a local model is assigned higher utility, and consequently a larger weight in server aggregation, when its host client holds a larger dataset and exhibits lower staleness. Conversely, clients with smaller datasets and higher staleness receive smaller weights. The weighting rule itself is described in Section 4.3.3, and its effect on convergence is evaluated experimentally in Section 5.

- Problem 2: The server must communicate cyclically with all selected clients, transmitting the global model and receiving their local updates in every round. As both the number of communication rounds and the number of participating clients grow, this imposes substantial communication overhead on the server. To mitigate this pressure, SACW incorporates model compression techniques, detailed in Section 4.3.4.
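The idea behind Problem 1 can be sketched as follows. The sketch makes two assumptions that are not taken from the paper: staleness is the gap between the current global version and the version a local model started from, and the raw weight is the dataset size multiplied by a polynomial discount `(1 + staleness) ** (-decay)`. The discount form, the `decay` parameter and the function name `adaptive_weights` are illustrative, not the SACW rule.

```python
from typing import List, Sequence


def adaptive_weights(data_sizes: Sequence[int], versions: Sequence[int],
                     current_version: int, decay: float = 0.5) -> List[float]:
    """Normalized aggregation weights that grow with data volume and shrink with staleness."""
    raw = []
    for n, v in zip(data_sizes, versions):
        staleness = current_version - v  # 0 for a model trained on the latest global model
        raw.append(n * (1.0 + staleness) ** (-decay))  # assumed discount, for illustration
    total = sum(raw)
    return [w / total for w in raw]


# Hypothetical clients: two fresh models (100 and 400 samples)
# and one model that is four versions behind (100 samples).
weights = adaptive_weights(data_sizes=[100, 100, 400],
                           versions=[10, 6, 10],
                           current_version=10)
```

The stale 100-sample client ends up with less weight than the fresh 100-sample client, and the fresh 400-sample client receives the largest weight.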

4.3.3. Adaptive Weighting

4.3.4. Lattice-Based Model Quantization
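To make the role of the quantization bit-width b concrete, the following sketch uses plain uniform quantization with stochastic rounding as a simpler stand-in. It is not the lattice-based scheme used by SACW; it only shows how a parameter vector can be sent as b-bit integer codes plus two floats and then reconstructed with bounded error. The function names `quantize` and `dequantize` are hypothetical.

```python
import random
from typing import List, Sequence, Tuple


def quantize(values: Sequence[float], bits: int,
             rng: random.Random) -> Tuple[List[int], float, float]:
    """Map floats to integer codes in [0, 2**bits - 1] using stochastic rounding."""
    lo, hi = min(values), max(values)
    levels = (1 << bits) - 1
    scale = (hi - lo) / levels if hi > lo else 1.0
    codes = []
    for x in values:
        pos = (x - lo) / scale
        floor = int(pos)
        # round up with probability equal to the fractional part
        code = floor + (1 if rng.random() < pos - floor else 0)
        codes.append(min(code, levels))  # guard against floating-point overshoot
    return codes, lo, scale


def dequantize(codes: Sequence[int], lo: float, scale: float) -> List[float]:
    """Reconstruct approximate floats from integer codes."""
    return [lo + c * scale for c in codes]


rng = random.Random(0)
params = [-1.0, -0.25, 0.0, 0.3, 1.0]
codes, lo, scale = quantize(params, bits=4, rng=rng)
restored = dequantize(codes, lo, scale)
max_error = max(abs(a - b) for a, b in zip(params, restored))
```

Each parameter is transmitted as a 4-bit code instead of a 32-bit float, and the reconstruction error per parameter stays within one quantization step `scale`.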

5. Experimental Description

5.1. Dataset and Model

5.2. Experimental Setup

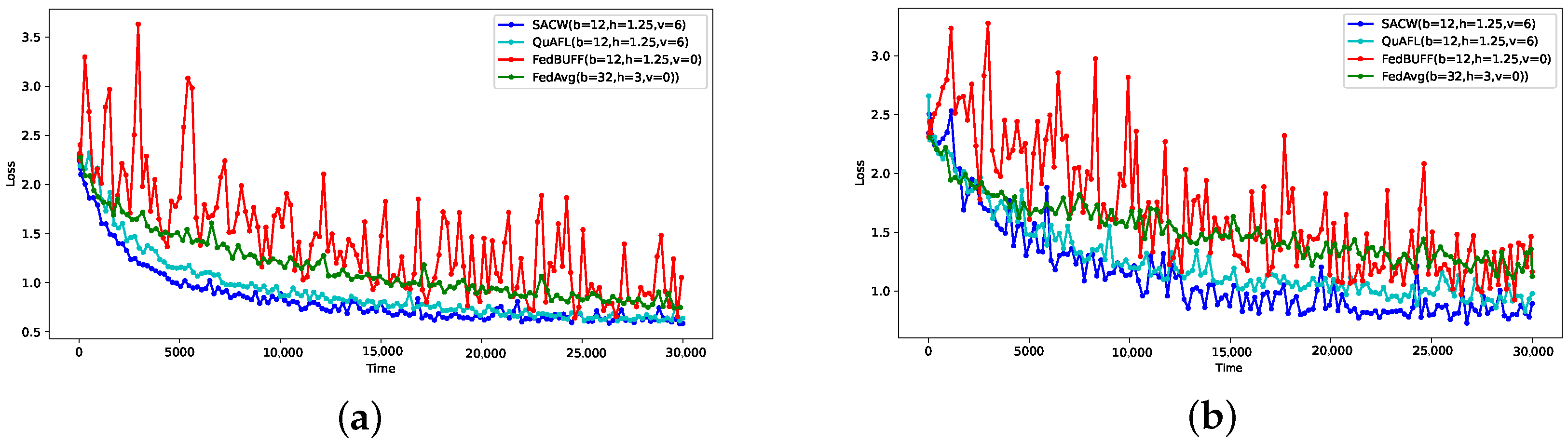

5.3. Comparative Experimental Results and Analysis

5.4. Hyperparameter Analysis

5.4.1. Analysis of Core Hyperparameters (b, v, )

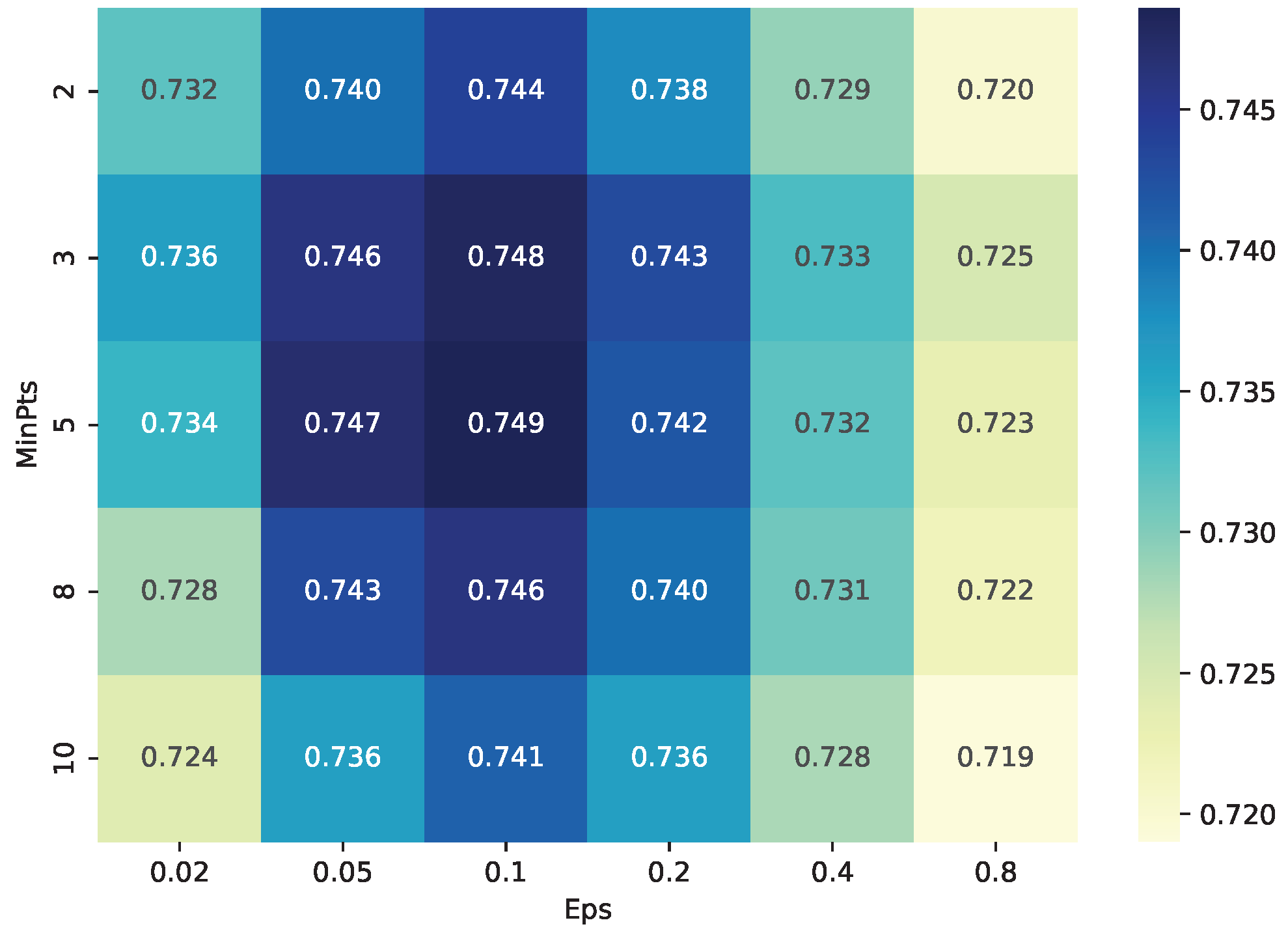

5.4.2. Sensitivity Analysis of DBSCAN Clustering Parameters (Eps, MinPts)

- Eps is swept over a set of values covering a wide spectrum, from very tight to loose clustering thresholds.

- MinPts is swept over a set of increasing values, representing progressively stricter density constraints.

5.5. Ablation Analysis

- Default: Neither DBCS nor ALMW; employs random client selection and data-volume-based weighting.

- Default + DBCS: Replaces the client selection strategy with DBCS.

- Default + ALMW: Replaces the server-side weighting strategy with ALMW.

- SACW: The complete framework that combines both modules.

6. Discussion

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Appendix A.1. Evaluation Metrics

Appendix A.2. Performance Comparison

| Dataset | Method | Pre | Rec | F1 | Pre | Rec | F1 |
|---|---|---|---|---|---|---|---|
| MNIST | FedAvg | 0.8930 | 0.8912 | 0.8921 | 0.8815 | 0.8715 | 0.8765 |
| MNIST | FedBUFF | 0.9179 | 0.9092 | 0.9135 | 0.9109 | 0.9010 | 0.9059 |
| MNIST | QuAFL | 0.9238 | 0.9227 | 0.9232 | 0.8883 | 0.8805 | 0.8844 |
| MNIST | SACW | 0.9396 | 0.9400 | 0.9398 | 0.9272 | 0.9261 | 0.9266 |
| Fashion-MNIST | FedAvg | 0.7063 | 0.6984 | 0.7023 | 0.6742 | 0.6391 | 0.6562 |
| Fashion-MNIST | FedBUFF | 0.7879 | 0.7929 | 0.7904 | 0.7513 | 0.7576 | 0.7544 |
| Fashion-MNIST | QuAFL | 0.8767 | 0.8830 | 0.8848 | 0.8655 | 0.8540 | 0.8597 |
| Fashion-MNIST | SACW | 0.9008 | 0.9018 | 0.9013 | 0.8915 | 0.8878 | 0.8896 |
| CIFAR-10 | FedAvg | 0.7452 | 0.7389 | 0.7420 | 0.6378 | 0.5899 | 0.6129 |
| CIFAR-10 | FedBUFF | 0.7869 | 0.7643 | 0.7754 | 0.6836 | 0.6649 | 0.6741 |
| CIFAR-10 | QuAFL | 0.8021 | 0.7912 | 0.7966 | 0.7337 | 0.7172 | 0.7253 |
| CIFAR-10 | SACW | 0.8164 | 0.8105 | 0.8134 | 0.7839 | 0.7489 | 0.7660 |
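The Pre, Rec and F1 columns can be computed as in the sketch below. It assumes macro averaging over classes and an F1 score taken as the harmonic mean of the averaged precision and recall. The averaging mode is an assumption, although the harmonic-mean relation matches reported rows such as SACW on MNIST (0.9396, 0.9400, 0.9398). The function name and the toy labels are hypothetical.

```python
from typing import Hashable, Sequence, Tuple


def precision_recall_f1(y_true: Sequence[Hashable],
                        y_pred: Sequence[Hashable]) -> Tuple[float, float, float]:
    """Macro-averaged precision and recall, and their harmonic mean as F1."""
    classes = sorted(set(y_true) | set(y_pred))
    precisions, recalls = [], []
    for c in classes:
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != c and p == c)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == c and p != c)
        precisions.append(tp / (tp + fp) if tp + fp else 0.0)
        recalls.append(tp / (tp + fn) if tp + fn else 0.0)
    pre = sum(precisions) / len(classes)
    rec = sum(recalls) / len(classes)
    f1 = 2 * pre * rec / (pre + rec) if pre + rec else 0.0
    return pre, rec, f1


# Toy three-class example (made-up labels).
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]
pre, rec, f1 = precision_recall_f1(y_true, y_pred)
```

For the toy labels, the per-class precisions are 1/2, 2/3 and 1, and the per-class recalls are 1/2, 1 and 1/2.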


| Symbol | Description |
|---|---|
| N | total number of clients |
| R | number of global model aggregation rounds |
| r | current round number |
| T | total training time of the global model |
| L | maximum number of local training steps |
| K | number of clusters for clustering all clients |
| | client |
| | adaptive weight of client |
| | cluster |
| S | set of selected clients |
| | selected representative client of |
| | dataset of |
| | size of dataset |
| | loss function of |
| | weight factor of |
| | parameters of the Dirichlet probability distribution |
| | model version number of |
| | local model of after local training |
| | global model in the round |
| b | quantization bit-width |
| v | server visiting time |
| h | server handling time |
| | actual local training steps of |
| | local label distribution of |
| | local gradient computation function of |

| Experiment Environment | Configuration |
|---|---|
| CPU | Intel(R) Core(TM) i9-12900K (Intel Corporation, Santa Clara, CA, USA) |
| GPU | NVIDIA RTX A5000 (NVIDIA Corporation, Santa Clara, CA, USA) |
| RAM (random access memory) | 64 GB |
| Programming language | Python 3.8 |
| PyTorch version | 1.8.2 |
| CUDA toolkit | 11.6 |

| DBCS | ALMW | MNIST (= 0.1) | MNIST (= 0.5) | Fashion-MNIST (= 0.1) | Fashion-MNIST (= 0.5) | CIFAR-10 (= 0.1) | CIFAR-10 (= 0.5) |
|---|---|---|---|---|---|---|---|
| × | × | 0.8928 | 0.9255 | 0.8548 | 0.8843 | 0.6541 | 0.7860 |
| ✓ | × | 0.9105 | 0.9391 | 0.8689 | 0.8923 | 0.6705 | 0.7968 |
| × | ✓ | 0.9072 | 0.9324 | 0.8750 | 0.8913 | 0.6758 | 0.8009 |
| ✓ | ✓ | 0.9276 | 0.9477 | 0.8902 | 0.9016 | 0.7486 | 0.8164 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, S.; Shan, F.; Mao, S.; Lu, Y.; Miao, F.; Chen, Z. SACW: Semi-Asynchronous Federated Learning with Client Selection and Adaptive Weighting. Computers 2025, 14, 464. https://doi.org/10.3390/computers14110464
